Abstract

Deep-sea biological detection is a pivotal technology for the exploration and conservation of marine resources. Nonetheless, the complexity of the deep-sea environment, the scarcity of deep-sea organism samples, and the strong refraction and scattering of light under water pose formidable challenges to current detection algorithms. To address these issues, we propose a deep-sea organism recognition framework based on an enhanced YOLOv8n architecture, termed PSVG-YOLOv8n. Specifically, our model inserts an efficient pyramid compression attention (PSA) module immediately before the SPPF layer in the backbone, enabling finer, localized feature extraction for deep-sea organisms. In the neck network, a Slim-Neck module (GSConv + VoVGSCSP) is incorporated to reduce the parameter count and model size while improving detection performance. Moreover, a squeeze–excitation residual module (C2f_SENetV2), which uses multi-branch fully connected layers, strengthens the network's global representation capacity. Finally, an improved detection head fuses the outputs of all the modules, further raising the overall accuracy. Experiments on a dataset of deep-sea images acquired by the Jiaolong manned submersible show that PSVG-YOLOv8n achieves a precision of 79.9%, an mAP50 of 67.2%, and an mAP50-95 of 50.9%, improvements of 1.2, 2.3, and 1.1 percentage points, respectively, over the baseline YOLOv8n model. These results indicate that the proposed modifications effectively address the challenges of deep-sea organism detection and provide a robust framework for accurate deep-sea biological identification.

1. Introduction

Deep-sea organisms play a crucial role in marine ecosystems, directly influencing global climate regulation and the biogeochemical cycling of marine elements [1]. As a key technology for studying and protecting deep-sea biodiversity, underwater target detection faces many technical challenges. The characteristics of the deep-sea environment, including low light, light attenuation, color bias, and low contrast, seriously degrade the quality of underwater images and thereby weaken the accuracy and robustness of existing detection algorithms [2]. In addition, deep-sea image data are scarce and costly to acquire and annotate, which further limits the generalization ability of the models. Moreover, many existing detection models gain accuracy at the cost of higher computational complexity, which makes them difficult to deploy in resource-constrained deep-sea detection systems [3]. Improving detection accuracy while keeping computational complexity low, so that models remain real-time and efficient in deep-sea detection tasks, has therefore become a key problem in this field.

In recent years, researchers worldwide have carried out extensive research on underwater bio-detection algorithms. Traditional machine learning-based underwater detection methods are not robust to the complex deep-sea environment and struggle to meet the identification needs of the various types of marine macro-organisms [4]. The excellent performance of AlexNet in image recognition led to the wide application of deep learning in computer vision [5,6]. Convolutional neural networks (CNNs) stand out in image feature extraction [7] and are widely used in computer vision tasks based on underwater images. Sun et al. [8] proposed a convolutional neural network that deblurs degraded underwater images, correcting them to achieve image enhancement. Currently, deep learning algorithms for underwater biological detection are generally divided into two categories. The first category consists of two-stage algorithms, such as R-CNN (Region-CNN) [9] and Fast R-CNN (Fast Region-CNN) [10]. These algorithms operate in two stages: first, candidate regions are generated from the image; second, fine classification and bounding box regression are performed within these candidate regions. With the continued progress of deep learning, attention mechanisms have also become a focus of model improvement. Tan et al. [11] combined MSRCR image preprocessing with median filtering and added an attention mechanism to the neck network to count fish with high accuracy; this method achieves high detection accuracy but a slow detection speed. The second category consists of one-stage algorithms, such as YOLO [12], which treats localization and classification in target detection as a single regression problem and therefore detects faster. Wageeh et al. [13] enhanced the quality of underwater images with a multiscale Retinex algorithm and combined the YOLO algorithm with the optical flow method to identify and track fish. Liu et al. [14] proposed a deep learning method with multiscale feature fusion for underwater target detection, which significantly improved detection performance. Wang et al. [15] combined the PSA module with YOLOv8 for underwater target detection; this improved some accuracy metrics but significantly increased the model's complexity without a correspondingly noticeable gain in performance. Li et al. [16] optimized the YOLOv8 architecture with Slim-Neck, but the design still falls short in computational efficiency and environmental adaptability, making it unsuitable for recognizing underwater organisms. Zhu et al. [17] proposed a hyperspectral image classification model based on the squeeze–excitation network and deep learning, which achieves high accuracy in image classification; however, its precision in image recognition applications is relatively low.

Despite notable advances in deep learning-based underwater bio-detection, significant challenges remain. Images acquired by the Jiaolong manned submersible in the Western Pacific suffer from pronounced color bias, low contrast, and inconsistent exposure, all of which substantially impede the accurate recognition of deep-sea organisms. Furthermore, the high cost of deep-sea image acquisition yields limited datasets, constraining the training and generalization of current models. To overcome these obstacles, we designed an improved deep-sea biological recognition model, PSVG-YOLOv8n (P: efficient pyramid compression attention module; S: squeeze–excitation residual module; V: VoVGSCSP; G: GSConv; YOLOv8n: You Only Look Once 8n), with the following main contributions:

- (1) By integrating the PSA module into the backbone and the C2f_SENetV2 module into the neck network, we mitigate the effects of color bias, low contrast, overexposure, and underexposure, significantly improving the accuracy of deep-sea organism recognition;

- (2) By incorporating the improved Slim-Neck module into the neck network, we improve the model's accuracy in recognizing deep-sea organisms while also reducing model complexity;

- (3) We propose a new detection head output scheme that makes it easier to combine the individual modules and improves recognition accuracy;

- (4) The model supports identification and tracking tasks for the Jiaolong manned submersible, which is designed to operate at depths of up to 7000 m, helping it handle the objects involved in deep-sea work.

The structure of this paper is organized into four main sections. Section 1 introduces the background and significance of the study, along with an overview of the current state of research both domestically and internationally. Section 2 describes the proposed method and outlines the overall architecture of the model. Section 3 details the construction of the dataset, the experimental setup, and the performance evaluation. Finally, Section 4 concludes the paper with a comprehensive summary of the research findings.

2. Method

2.1. YOLOv8n Network Architecture

The YOLO series is known for its efficiency and accuracy in object detection. The YOLOv8n model [18], building on YOLOv5n, introduces significant improvements. Specifically, it replaces the C3 module with the C2f module, which refines residual learning and improves gradient propagation through an optimized bottleneck design. Moreover, the model incorporates an image segmentation algorithm that combines deep learning with an adaptive threshold function [19], resulting in a lightweight framework that effectively captures gradient flow information [20]. The input image is processed sequentially by multiple convolutional layers and C2f modules to extract feature maps at different scales, which are refined by an SPPF module before being passed on to the neck and detection head. The detection head combines anchor-free and decoupled-head strategies, while the loss function [21] uses binary cross-entropy for classification and CIoU and DFL (distribution focal loss) for box regression. Additionally, label assignment uses the Task-Aligned Assigner, further improving detection accuracy.
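As an illustration of the CIoU regression term mentioned above, the following is a minimal pure-Python sketch of CIoU for two axis-aligned boxes given as (x1, y1, x2, y2), using the common definition CIoU = IoU − ρ²/c² − αv, where ρ is the distance between box centres, c the diagonal of the smallest enclosing box, and v an aspect-ratio consistency term. The function names `ciou` and `ciou_loss` are hypothetical; this is not the Ultralytics implementation, which operates on batched tensors and adds the DFL and BCE terms.

```python
import math


def ciou(box, gt, eps=1e-7):
    """Complete IoU (CIoU) between two boxes given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU: intersection area over union area
    iw = max(0.0, min(x2, gx2) - max(x1, gx1))
    ih = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = iw * ih
    union = w * h + gw * gh - inter + eps
    iou = inter / union

    # Squared centre distance (rho^2) over squared diagonal (c^2) of the enclosing box
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((x1 + x2 - gx1 - gx2) ** 2 + (y1 + y2 - gy1 - gy2) ** 2) / 4

    # Aspect-ratio consistency term v and its trade-off weight alpha
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / (v - iou + 1 + eps)
    return iou - (rho2 / c2 + alpha * v)


def ciou_loss(box, gt):
    """Box regression loss based on CIoU: 1 - CIoU."""
    return 1.0 - ciou(box, gt)


print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes: loss close to 0
print(ciou((1, 0, 3, 2), (0, 0, 2, 2)))       # shifted box: IoU 1/3 minus distance penalty 1/13
```

Unlike plain IoU, the distance term keeps the loss informative for non-overlapping boxes, where IoU alone is zero.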

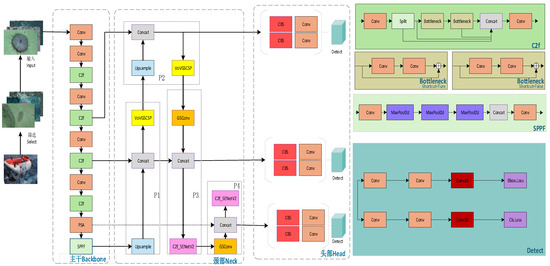

2.2. Improved YOLOv8n Model (PSVG-YOLOv8n Model)

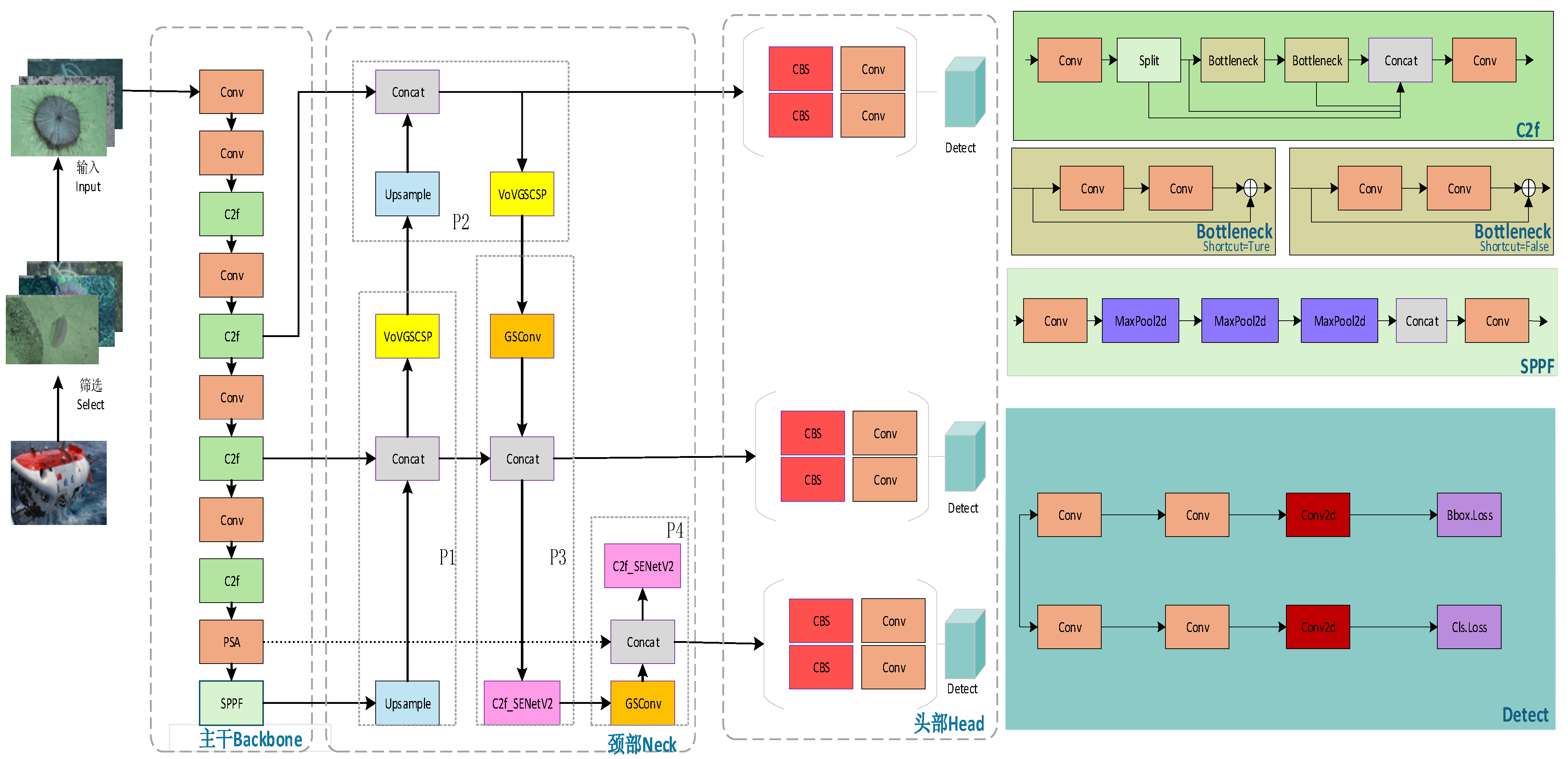

The overall architecture of the proposed model is shown in Figure 1. First, the PSA efficient pyramid compression attention module is inserted immediately before the SPPF module in the backbone; through its split attention mechanism [22], it dynamically allocates weights in the spatial and channel dimensions, which enhances the feature representation of the backbone while improving the efficiency and accuracy of the model. In the neck, the lightweight GSConv convolution replaces standard convolution for processing the feature maps coming from the backbone, which improves the generalization ability of the model. The C2f modules in the P1 and P2 stages of the neck are replaced with the lightweight VoVGSCSP module, which combines spatial context propagation, semantic propagation, multiscale feature fusion, and edge enhancement. This improves the accuracy of detail recognition and reduces noise interference along rough edges, and it also optimizes the model's performance in edge regions through an adaptive loss function, improving both the precision and robustness of edge detection while keeping computation lightweight. The C2f_SENetV2 module replaces the C2f modules in the P3 and P4 stages of the neck, further refining the feature representation and the integration of global information through its multi-branch architecture. The detection head is redesigned so that it takes its inputs from the Concat modules, and the final results are produced by the detection module.

Figure 1.

Overall structure diagram and basic modules of PSVG-YOLOv8n.

2.3. Efficient Pyramid Compression Attention Module (PSA)

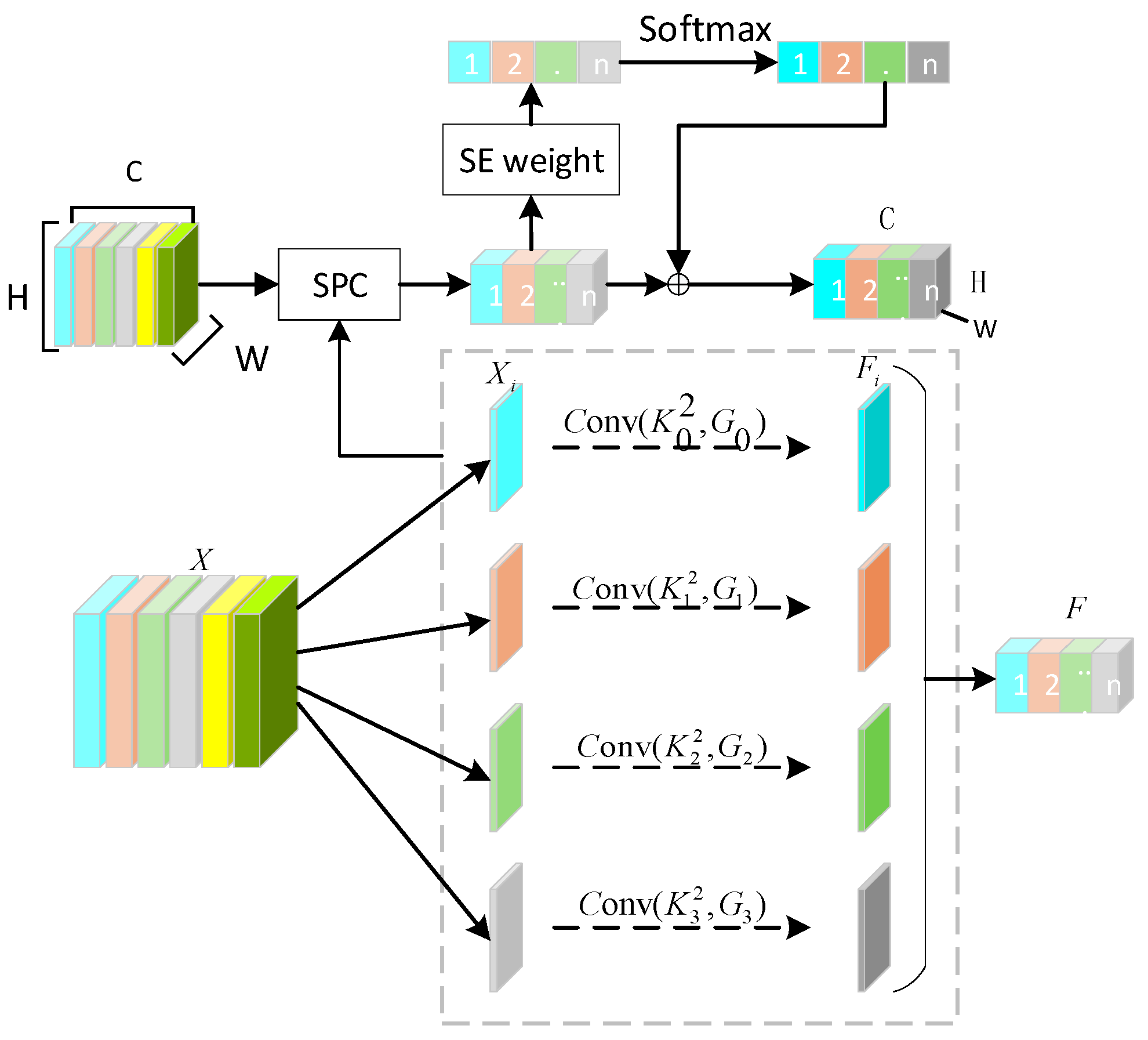

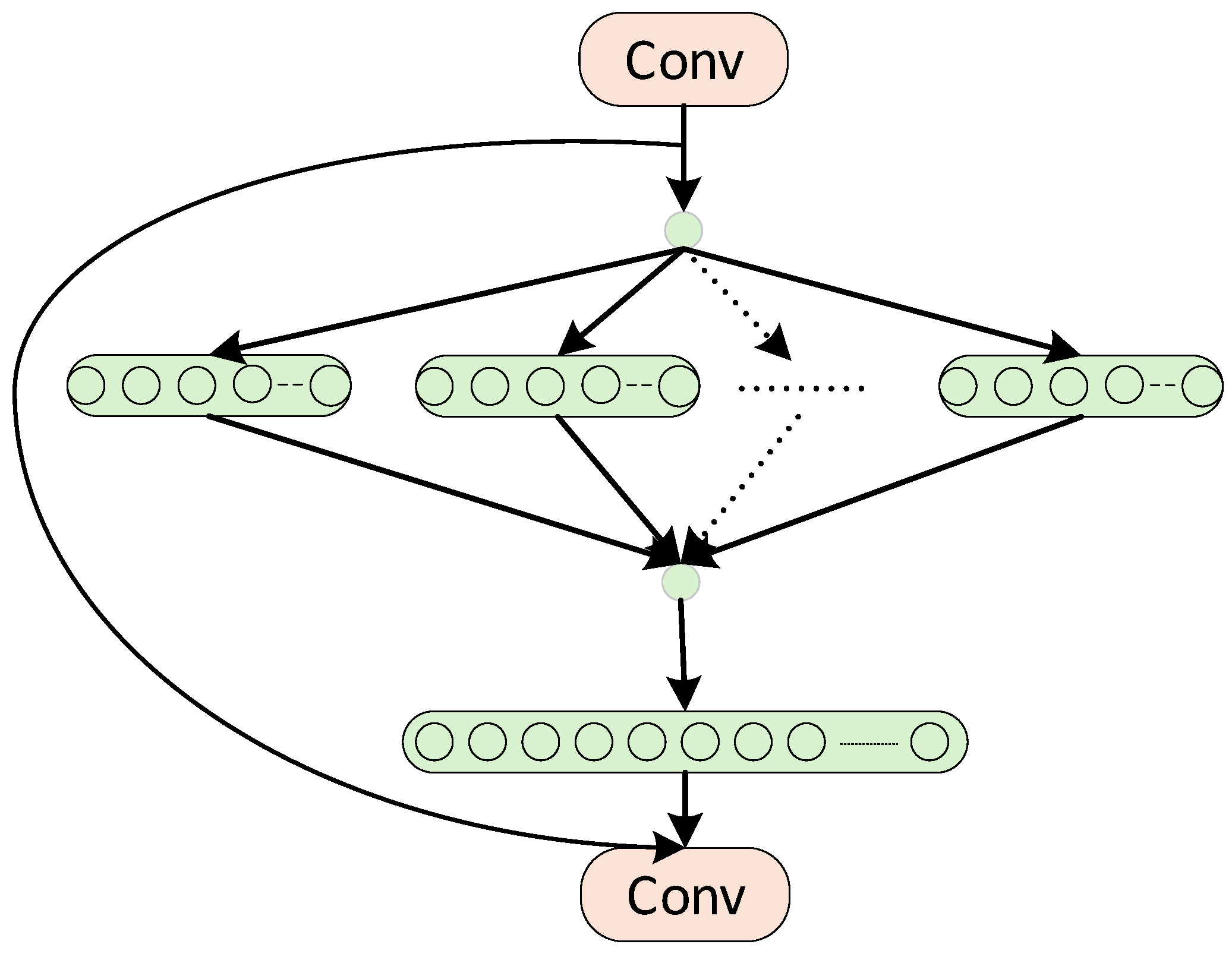

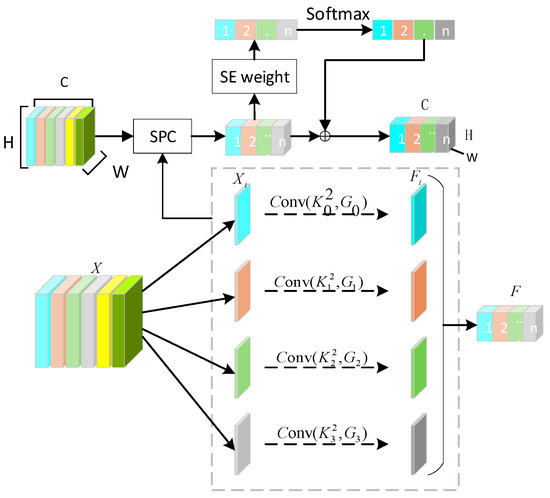

The efficient pyramid compression attention module (PSA) [23] is an attention mechanism designed to improve the feature extraction capability of neural networks in complex environments. Deep-sea images are often contaminated by heterogeneous and cluttered backgrounds. To address this challenge, the PSA module dynamically recalibrates the weights assigned to each spatial location according to the saliency of the corresponding features. By selectively amplifying critical regions, the module highlights deep-sea target areas while suppressing irrelevant background interference (e.g., obstacles, color distortion, lighting changes, and reduced visibility). The processing workflow of the PSA module is illustrated in Figure 2.

Figure 2.

Efficient pyramid compression attention module. C denotes the number of channels; SPC refers to the Split and ConCat module; SE represents the weighting module; Softmax is the attention function; $\mathrm{Conv}(K_i \times K_i, G_i)$ indicates the grouped convolution operation on $X_i$.

Let X denote the input feature map of the PSA module, with C channels; a new feature map is obtained after the SPC module [24]. The C channels are split into n groups $[X_0, X_1, \ldots, X_{n-1}]$, each with $C' = C/n$ channels. When each group is processed by grouped convolution, the kernel size and the number of subgroups are related as follows:

$$K_i = 2 \times (i+1) + 1, \qquad G_i = 2^{\frac{K_i - 1}{2}}, \qquad i = 0, 1, \ldots, n-1$$

where $K_i$ is the convolution kernel size of the $i$th group and $G_i$ is the number of subgroups (convolution groups).
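To make the relation between kernel size and number of subgroups concrete (K_i = 2(i+1)+1 and G_i = 2^((K_i−1)/2)), the short pure-Python sketch below evaluates both for each branch and compares the weight count of each grouped convolution (mapping C′ to C′ channels, bias omitted) with an ungrouped convolution of the same kernel size. The function name `spc_group_config` and the example values C = 256 and n = 4 are illustrative assumptions, not settings taken from this paper.

```python
def spc_group_config(channels, n):
    """Kernel size K_i, group number G_i and weight counts for each SPC branch."""
    if channels % n:
        raise ValueError("C must be divisible by n")
    c_group = channels // n                      # C' = C / n channels per branch
    rows = []
    for i in range(n):
        k = 2 * (i + 1) + 1                      # K_i = 2(i + 1) + 1 -> 3, 5, 7, 9, ...
        g = 2 ** ((k - 1) // 2)                  # G_i = 2^((K_i - 1) / 2)
        if c_group % g:
            raise ValueError(f"C' = {c_group} is not divisible by G_{i} = {g}")
        grouped = k * k * (c_group // g) * c_group   # grouped conv, C' -> C', no bias
        dense = k * k * c_group * c_group            # ungrouped conv, same kernel
        rows.append((k, g, grouped, dense))
    return rows


for k, g, grouped, dense in spc_group_config(channels=256, n=4):
    print(f"K={k}  G={g}  grouped={grouped}  ungrouped={dense}  ratio={grouped / dense:.4f}")
```

Each grouped convolution needs 1/G_i of the weights of its ungrouped counterpart, so the larger kernels on the later branches stay affordable.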

After grouped convolution, the grouped multiscale feature $F$ is expressed as follows:

$$F_i = \mathrm{Conv}(K_i \times K_i, G_i)(X_i), \qquad F = \mathrm{Cat}([F_0, F_1, \ldots, F_{n-1}])$$

where $\mathrm{Conv}(K_i \times K_i, G_i)(\cdot)$ denotes the grouped convolution applied to $X_i$; $F_i$ denotes the multiscale features obtained from the $i$th group; and $\mathrm{Cat}(\cdot)$ denotes the concatenation of the n groups.

The channel attention of each group is then extracted by the squeeze–excitation (SE) weighting module:

$$Z_i = \mathrm{SEWeight}(F_i), \qquad i = 0, 1, \ldots, n-1$$

The total attention weight vector $Z$ is the concatenation of the group attention vectors:

$$Z = Z_0 \oplus Z_1 \oplus \cdots \oplus Z_{n-1}$$

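Multiscale interaction information is also needed so that the channel attention vectors of the different scales interact with one another. The weights are therefore recalibrated with Softmax across the n groups to obtain the new attention weight vectors $\mathrm{att}_i$ and $A$:

$$\mathrm{att}_i = \mathrm{Softmax}(Z_i) = \frac{\exp(Z_i)}{\sum_{j=0}^{n-1} \exp(Z_j)}, \qquad A = \mathrm{att}_0 \oplus \mathrm{att}_1 \oplus \cdots \oplus \mathrm{att}_{n-1}$$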

Element-wise multiplication of $A$ and $F$ yields a more informative multiscale feature map:

$$Y_i = F_i \odot \mathrm{att}_i, \qquad \mathrm{Out} = \mathrm{Cat}([Y_0, Y_1, \ldots, Y_{n-1}]) = F \odot A$$
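The Softmax recalibration and reweighting steps can be sketched in pure Python as follows, with each feature map stored as nested lists (group, channel, flattened H×W values) rather than tensors. The function name `psa_reweight` and this data layout are hypothetical choices for illustration. The Softmax is taken across the n groups for each channel index, so the weights of one channel sum to 1 over the groups, and each group's features are then scaled channel by channel before concatenation.

```python
import math


def psa_reweight(features, z):
    """
    features: n groups -> C' channels -> flat list of H*W values (the F_i)
    z:        n groups -> C' SE weights (the Z_i)
    Returns (out, att): out is Cat([F_i * att_i]) as n*C' channels, att the Softmax weights.
    """
    n, c = len(z), len(z[0])
    att = [[0.0] * c for _ in range(n)]
    for j in range(c):                                   # Softmax across the n groups, per channel j
        m = max(z[i][j] for i in range(n))               # subtract the max for numerical stability
        exps = [math.exp(z[i][j] - m) for i in range(n)]
        total = sum(exps)
        for i in range(n):
            att[i][j] = exps[i] / total
    out = []
    for i in range(n):                                   # Y_i = F_i * att_i, then concatenate groups
        for j in range(c):
            out.append([v * att[i][j] for v in features[i][j]])
    return out, att


# Two groups, one channel each, 2 pixels per channel; equal SE weights -> att = 0.5 for both
out, att = psa_reweight(features=[[[2.0, 4.0]], [[6.0, 8.0]]], z=[[0.0], [0.0]])
print(out)  # [[1.0, 2.0], [3.0, 4.0]]
```

Because the weights compete across scales, a branch can only gain emphasis for a channel at the expense of the other branches, which is what lets the multiscale features interact.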

As Figure 2 and the formulas above show, the efficient pyramid compression attention module leaves the size of the feature map unchanged while processing the features of the deep-sea image in channel groups. Applying multiscale convolution to the grouped feature maps captures spatial information at multiple scales while reducing the number of parameters in the convolution.

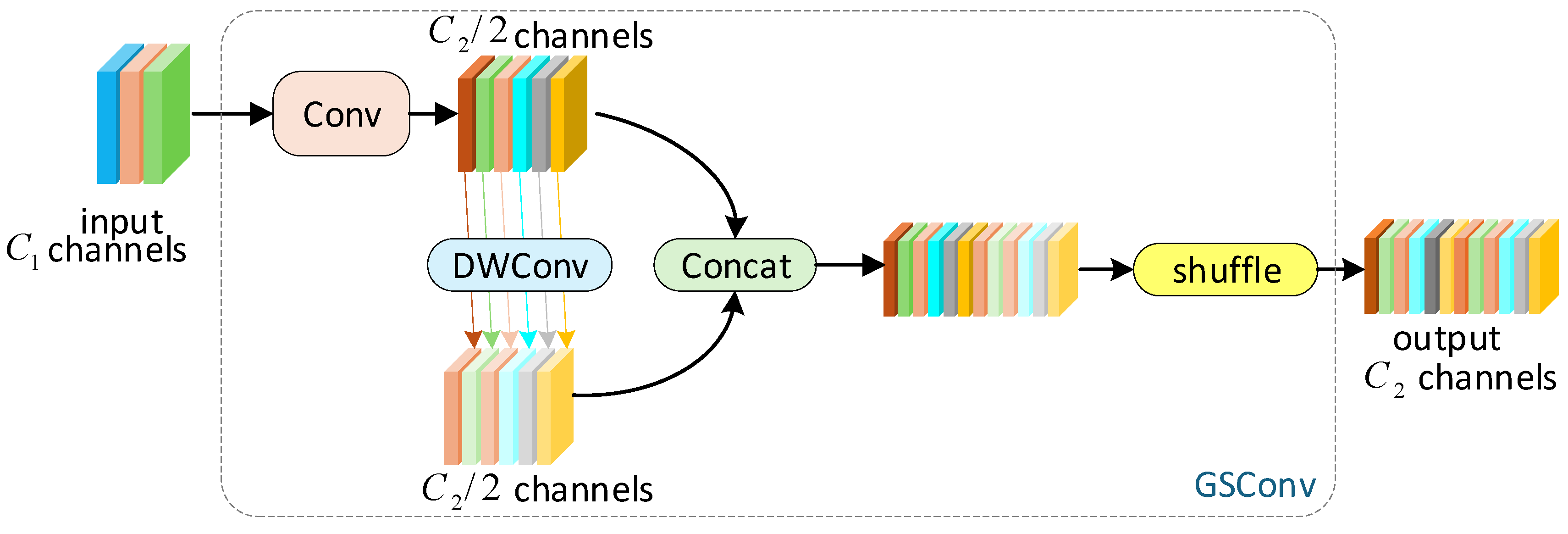

2.4. Slim-Neck Module (GSconv + VoVGSCSP)

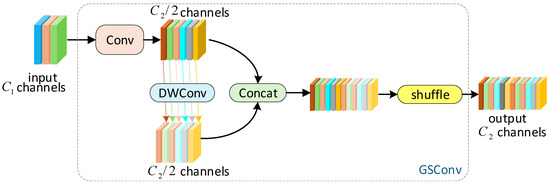

The GSConv module can be applied anywhere in the model. However, using it in the backbone network would deepen the network, which increases resistance to data flow and lengthens inference time, so GSConv is better suited to the neck. As shown in Figure 3, GSConv first applies a standard convolution that produces half of the output channels and then applies a depthwise separable convolution (DWConv) to that result; the two outputs are concatenated and their channels are shuffled so that the information produced by the standard convolution is mixed into the DWConv features. This design is well suited to feature extraction in a multiscale feature pyramid, with a computational cost of about 60% to 70% of that of standard convolution, and it substantially reduces redundant and repeated information.

Figure 3.

Structure of GSConv module. Conv is a standard convolutional layer with $C_2/2$ output channels, where $C_2$ is the number of output channels of GSConv; DWConv is a depthwise separable convolution; shuffle rearranges the feature channels.
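The concatenate-then-shuffle step of GSConv can be illustrated with channel labels instead of tensors. The pure-Python sketch below uses a ShuffleNet-style shuffle with two groups (view the channels as a 2 × C/2 grid, transpose, flatten), which interleaves the channels of the standard convolution with those of the DWConv. The helper names are hypothetical, and the exact permutation used in the official GSConv code may differ from this one.

```python
def channel_shuffle(channels, groups=2):
    """ShuffleNet-style shuffle: view as (groups, C/groups), transpose, flatten."""
    if len(channels) % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = len(channels) // groups
    return [channels[g * per_group + k] for k in range(per_group) for g in range(groups)]


def gsconv_channel_order(c_out):
    """Channel order after GSConv's concat + shuffle, using labels instead of tensors."""
    half = c_out // 2
    dense = [f"conv{k}" for k in range(half)]      # standard Conv branch: C2/2 channels
    depthwise = [f"dw{k}" for k in range(half)]    # DWConv applied to the Conv output
    return channel_shuffle(dense + depthwise, groups=2)


print(gsconv_channel_order(6))  # ['conv0', 'dw0', 'conv1', 'dw1', 'conv2', 'dw2']
```

Without the shuffle, a following grouped or depthwise layer would see the dense and depthwise halves separately; interleaving them spreads the dense-convolution information across all channel positions.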

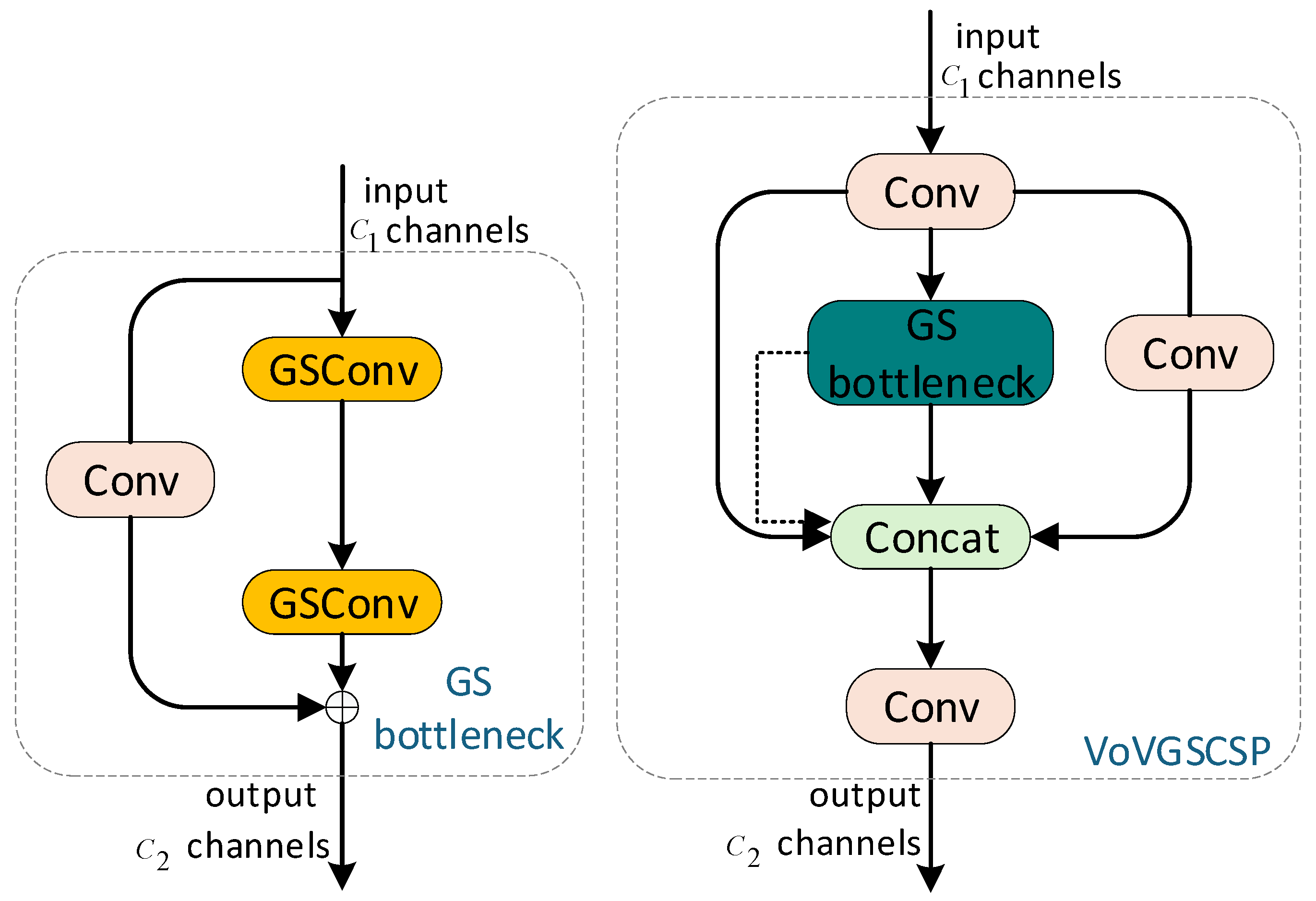

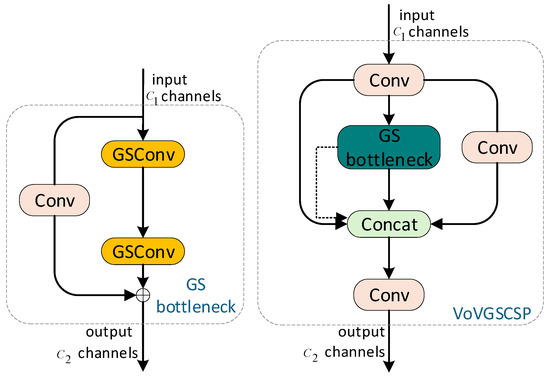

Although GSConv significantly reduces the redundant information in the feature maps of deep-sea organisms, the accuracy of the feature map information remains a challenge. In this paper, we therefore build the GS bottleneck and the VoVGSCSP module on GSConv, as shown in Figure 4. When the C2f modules in neck layers 1 and 2 are replaced with VoVGSCSP, the module uses two GSConv convolutions in the up-sampling and down-sampling paths, which effectively promotes the propagation of strong semantic features.

Figure 4.

Structure of GS bottleneck module with VoVGSCSP module. GSConv is a global convolution module; GS bottleneck refers to a Global Skip Bottleneck module.

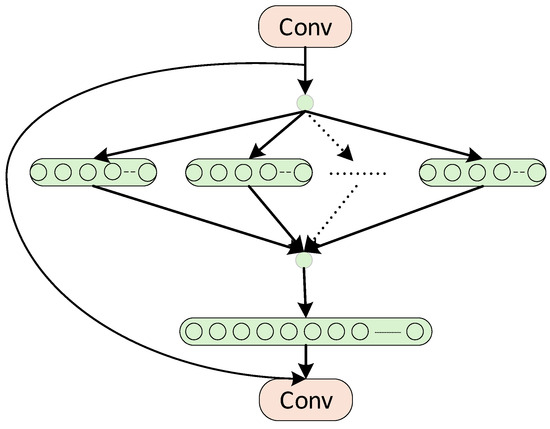

2.5. Squeeze–Excitation Residual Module (C2f_SENetV2)

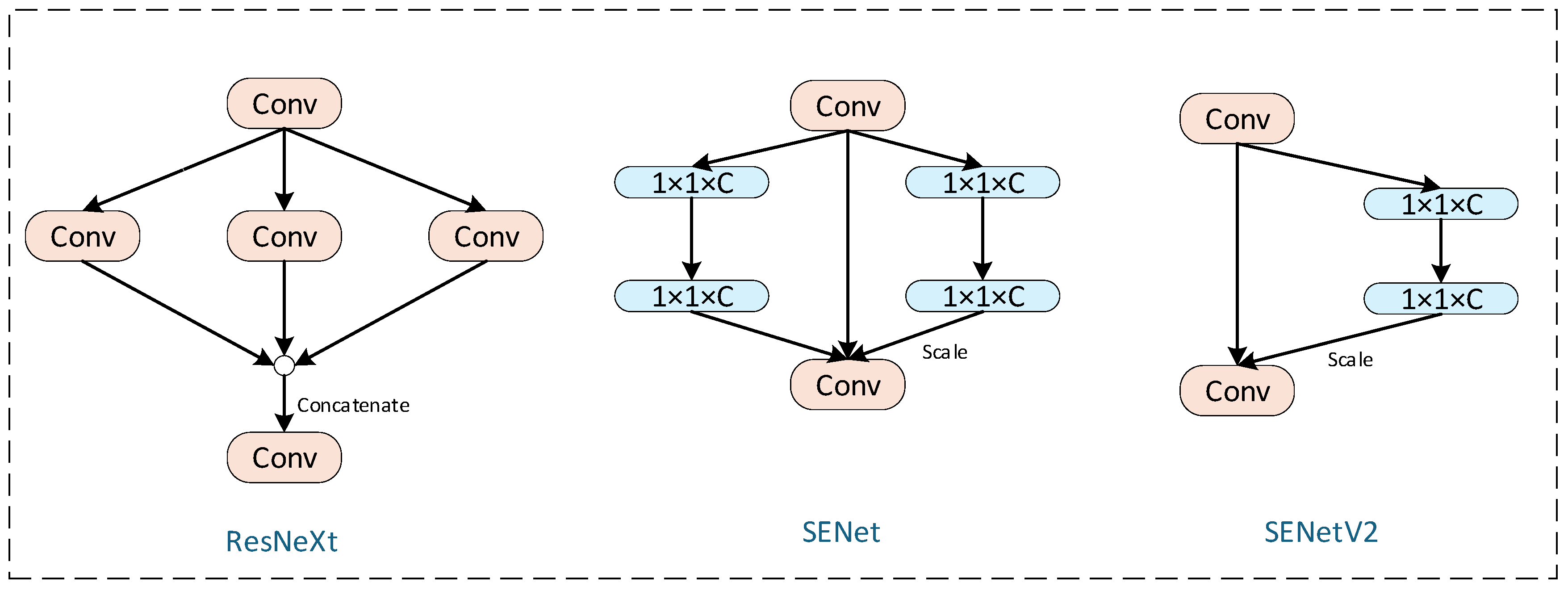

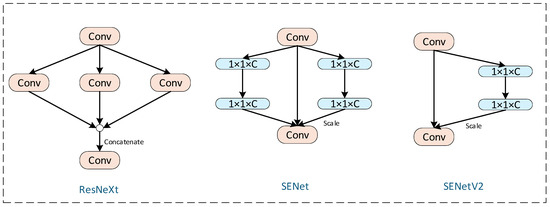

Recognizing organisms in complex deep-sea environments requires more contextual information. The C2f_SENetV2 module [25] improves the detection of deep-sea organisms by strengthening the global feature representation and capturing richer and more accurate contextual information. It applies an improved SENet architecture [26] that introduces a module called squeeze aggregated excitation (SaE) [27] to further enhance the expressive power of the network. SaE combines the squeeze and excitation operations of SENetV1 with a multi-branch fully connected (FC) layer to strengthen global representation learning. As illustrated in Figure 5, SaE is the main improvement of SENetV2 over SENetV1: the squeezed output is fed into multiple FC branches whose outputs are concatenated, and an excitation phase then restores the original channel dimension.

Figure 5.

Squeeze–excitation residual module.

The C2f_SENetV2 module combines features of the SENet and ResNeXt modules. It borrows the core idea of SENet, using the squeeze and excitation operations to enhance the representation of the model channels, and follows the aggregated-layer design of ResNeXt, using multiple FC-layer branches to learn the global feature representation. The outputs of these branches are then concatenated to obtain a richer and more accurate feature map. Figure 6 shows the structures of the SENet, ResNeXt, and SENetV2 modules.

Figure 6.

Structures of SENet module, ResNeXt module, and SENetV2 module.

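The squeeze–excitation operation of the residual module can be expressed as follows:

$$\mathrm{SENetV2}(x) = x + F\left(x \cdot \mathrm{Ex}\left(\sum \mathrm{Sq}(x)\right)\right)$$

where $x$ is the input; $\mathrm{Sq}(\cdot)$ denotes the squeeze operation, which contains an FC layer, and $\sum$ denotes the aggregation (concatenation) of the parallel squeeze branches; $\mathrm{Ex}(\cdot)$ denotes the excitation operation; and $F(\cdot)$ denotes the transformation of the residual branch.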
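The squeeze, multi-branch FC, concatenation, and excitation steps can be sketched in pure Python. This is a simplified illustration under stated assumptions: the weights are supplied by hand, ReLU follows each branch FC and a sigmoid follows the excitation FC, and the residual-branch transform is taken as the identity, so the block returns x + x · gate. The helper names are hypothetical and do not correspond to the C2f_SENetV2 code used in this study.

```python
import math


def linear(vec, weights, bias):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def sae_gates(x, branches, excite):
    """
    x:        C channels, each a flat list of H*W activations
    branches: list of (weights, bias) for the parallel squeeze FC branches (C -> C/r each)
    excite:   (weights, bias) of the excitation FC (len(branches) * C/r -> C)
    Returns one gate in (0, 1) per channel.
    """
    s = [sum(ch) / len(ch) for ch in x]                   # squeeze: global average pooling
    agg = []
    for weights, bias in branches:                        # multi-branch FC + ReLU
        agg.extend(max(0.0, u) for u in linear(s, weights, bias))  # concatenate branch outputs
    z = linear(agg, *excite)                              # excitation FC back to C channels
    return [1.0 / (1.0 + math.exp(-u)) for u in z]        # sigmoid gates


def sae_block(x, branches, excite):
    """Residual form x + x * gate, with the residual transform F taken as identity."""
    gates = sae_gates(x, branches, excite)
    return [[v + v * g for v in ch] for ch, g in zip(x, gates)]


# C = 2 channels of 2 pixels, two branches with C/r = 1 unit each, all weights zero
x = [[1.0, 3.0], [2.0, 2.0]]
branches = [([[0.0, 0.0]], [0.0]), ([[0.0, 0.0]], [0.0])]
excite = ([[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])
print(sae_block(x, branches, excite))  # [[1.5, 4.5], [3.0, 3.0]]
```

With all weights and biases set to zero, every gate equals sigmoid(0) = 0.5, so the block returns 1.5·x; trained weights instead give each channel its own gate.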

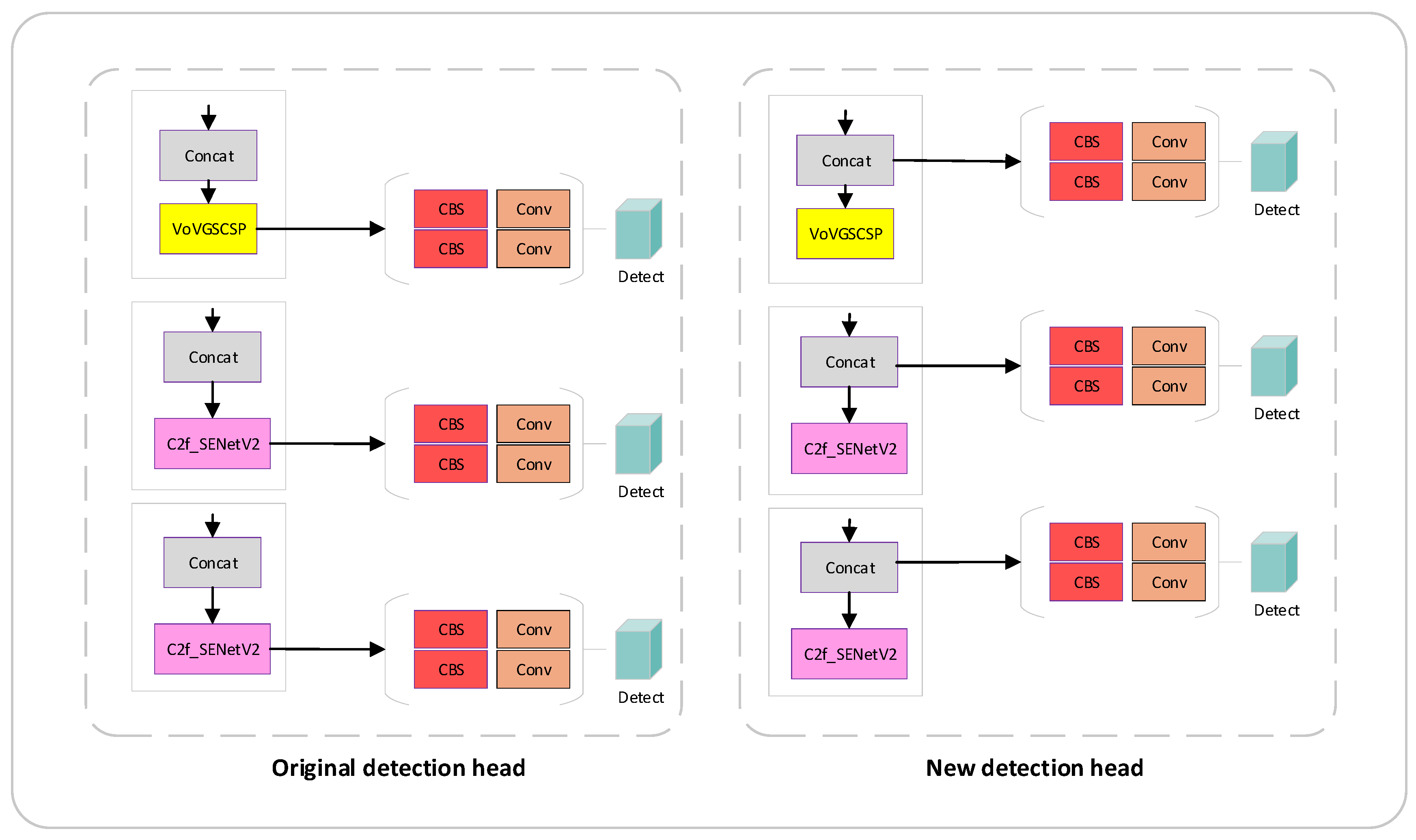

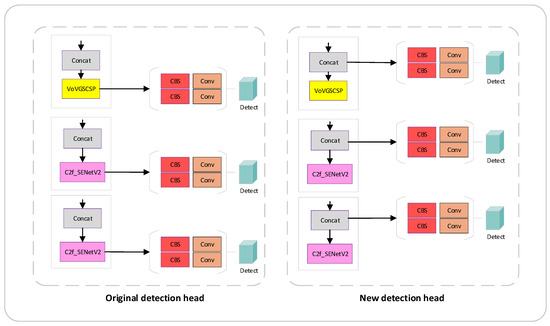

2.6. Detection Head Improvements

The detection head is a key component of the target detection model. It converts and further processes the feature information extracted by the backbone and neck networks, from which key details, such as the location, category, and confidence of the deep-sea biological targets, are extracted to determine the specific characteristics of the target organisms in the deep-sea image [28].

In this paper, we modify the inputs of the detection head. The conventional detection head takes its inputs from the C2f modules of the neck, whereas the improved head takes them from the Concat modules. This departs from the conventional composition of the detection head and improves the precision, the mean average precision at IoU = 0.5, and the mean average precision at IoU = 0.5–0.95, allowing the model to recognize deep-sea organisms more accurately. Figure 7 shows the improved detection head.

Figure 7.

Schematic diagram of detection head.

3. Experiments and Results

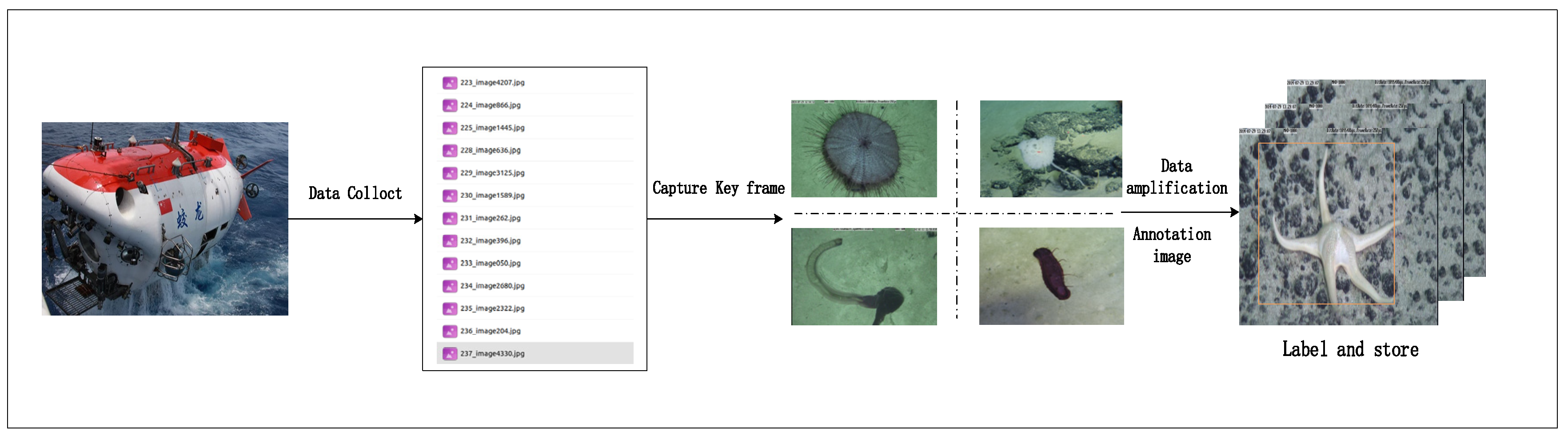

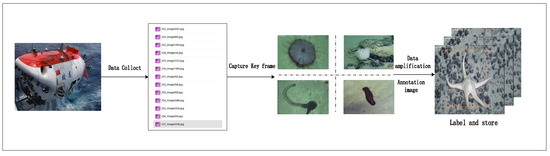

3.1. Dataset Creation and Processing

The experimental dataset was derived from real video captured in the western Pacific Ocean by Jiaolong [29], a 7000 m class manned submersible, and the deep-sea biological dataset was constructed with the process shown in Figure 8. First, key frames were extracted from the videos to obtain images of deep-sea organisms; the images were then expanded with data augmentation methods such as rotation, flipping, and noise addition [30]; finally, the organisms were annotated with a conventional labeling tool, yielding 5002 images and the corresponding labels.
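When images are rotated or flipped, their bounding-box labels must be transformed with them. The pure-Python sketch below assumes YOLO-format labels (class, x_center, y_center, width, height, all normalized to [0, 1]) and handles only horizontal flips, vertical flips, and 90° clockwise rotations; the label format, the function names, and the restriction to 90° rotations are assumptions for illustration, since the exact augmentation parameters are not stated here. Additive noise changes only pixel values, so it leaves the labels unchanged.

```python
def hflip(label):
    """Horizontal flip: mirror the x centre."""
    cls, xc, yc, w, h = label
    return (cls, 1.0 - xc, yc, w, h)


def vflip(label):
    """Vertical flip: mirror the y centre."""
    cls, xc, yc, w, h = label
    return (cls, xc, 1.0 - yc, w, h)


def rot90_cw(label):
    """90-degree clockwise rotation: (x, y) -> (1 - y, x); width and height swap."""
    cls, xc, yc, w, h = label
    return (cls, 1.0 - yc, xc, h, w)


label = (3, 0.25, 0.40, 0.10, 0.20)   # hypothetical box: class 3, normalized YOLO format
print(hflip(label))
print(rot90_cw(label))
```

Rotations by arbitrary angles would require recomputing the enclosing box of the rotated corners, which this sketch does not attempt.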

Figure 8.

Flowchart of dataset production.

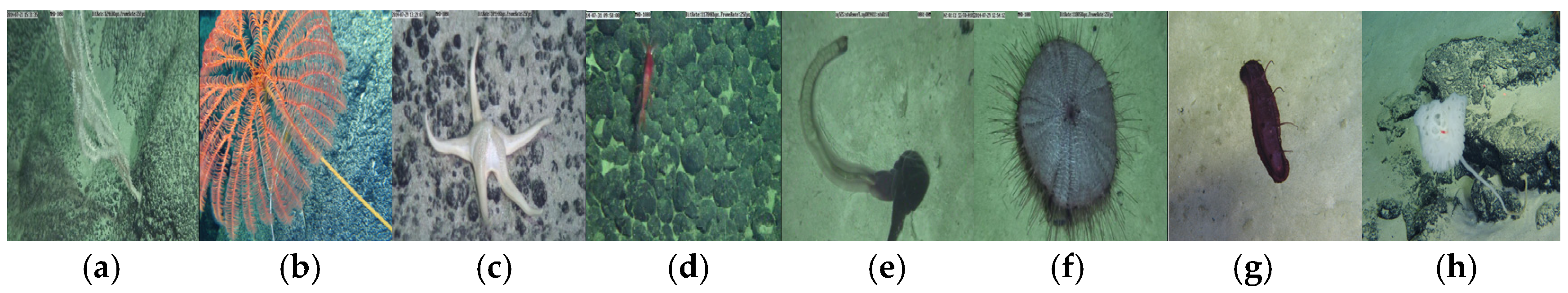

During annotation, the organisms were divided into eight taxonomic categories, as illustrated in Figure 9: Coral Polyp, Crinoid, Starfish, Crustacean, Ray-Finned Fish, Sea Urchin, Sea Cucumber, and Hexactinellida.

Figure 9.

The Jiaolong dataset contains eight categories of organisms: (a) Coral Polyp; (b) Crinoid; (c) Starfish; (d) Crustacean; (e) Ray-Finned Fish; (f) Sea Urchin; (g) Sea Cucumber; (h) Hexactinellida.

3.2. Experimental Environment and Parameter Configuration

The experiments used Ubuntu 20.04 as the operating system and PyTorch as the deep learning framework, with Python 3.8.19, PyTorch 2.0.1, and CUDA 11.8. The GPU was an NVIDIA GeForce RTX 4090 with 24 GB of memory (NVIDIA Corporation, Santa Clara, CA, USA). The detailed hyperparameters of the experiment are shown in Table 1.

Table 1.

Detailed hyperparameters of the experiment.

3.3. Evaluation Criteria

In this study, the performance of the improved YOLOv8n model was evaluated using precision [31] and mean average precision (mAP) as the evaluation metrics. The formulas are as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_0^1 P(R)\, dR, \qquad mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where P is the precision of the model, i.e., the proportion of instances predicted as positive that are actually positive, and R is the recall, i.e., the ratio of correctly identified deep-sea organism instances to all annotated deep-sea organism instances. TP denotes the number of correct detections of deep-sea organisms made by the network, FP the number of incorrect detections, and FN the number of annotated organisms that were not detected. AP is the average precision of one category, i.e., the area under its precision–recall curve, and AP50 is the average precision of that category when the IoU threshold is 0.5. mAP is the AP averaged over all categories; the higher the value, the better the model maintains high precision at high recall. mAP50-95 is the mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05. N is the number of categories.
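For readers implementing these metrics, the following pure-Python sketch computes AP for one class with all-point interpolation (a precision envelope integrated over recall), then averages AP over classes and over the ten IoU thresholds from 0.50 to 0.95. It assumes that each detection has already been matched to the ground truth and marked as a true or false positive at a given IoU threshold. The function names are hypothetical, and the evaluation code used to report the results may use a different interpolation (for example, 101-point sampling), so values can differ slightly.

```python
def average_precision(scores, is_tp, num_gt):
    """AP for one class at one IoU threshold, using all-point interpolation."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda k: -scores[k])  # highest confidence first
    tp = fp = 0
    recall, precision = [0.0], [1.0]
    for k in order:
        if is_tp[k]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    # Area under the step-shaped P(R) curve
    return sum((recall[i] - recall[i - 1]) * precision[i] for i in range(1, len(recall)))


def mean_ap(ap_per_class):
    """mAP: average of the per-class AP values (N classes)."""
    return sum(ap_per_class) / len(ap_per_class)


IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]  # 0.50, 0.55, ..., 0.95


def map50_95(ap_table):
    """ap_table[t][c] is the AP of class c at IOU_THRESHOLDS[t]; average over thresholds."""
    return sum(mean_ap(row) for row in ap_table) / len(ap_table)


ap = average_precision(scores=[0.9, 0.8, 0.7], is_tp=[True, False, True], num_gt=2)
print(ap)
```

Because mAP50-95 also rewards tight localization at strict thresholds such as 0.95, it is usually lower than mAP50, as in the results reported here.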

In model evaluation metrics, the precision is a crucial indicator for assessing the accuracy of the model recognition, while the mean average precision (mAP) serves as a comprehensive performance metric that aggregates multiple precision values across different recall rates. As a key evaluation criterion, the mAP holds even greater significance. It not only reflects the model’s precision in recognizing positive samples but also provides a comprehensive evaluation of all the object detections. The mAP plays a pivotal role in assessing the model effectiveness and selecting the optimal model.

3.4. Experimental Results and Analysis

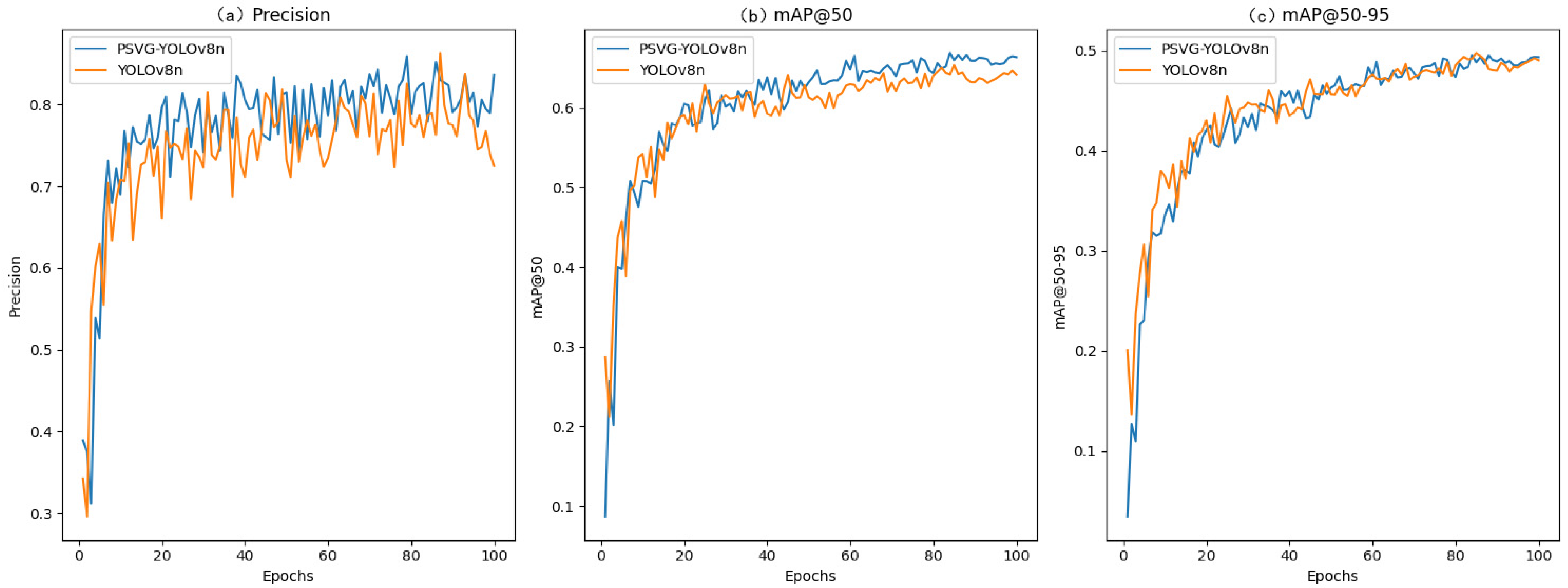

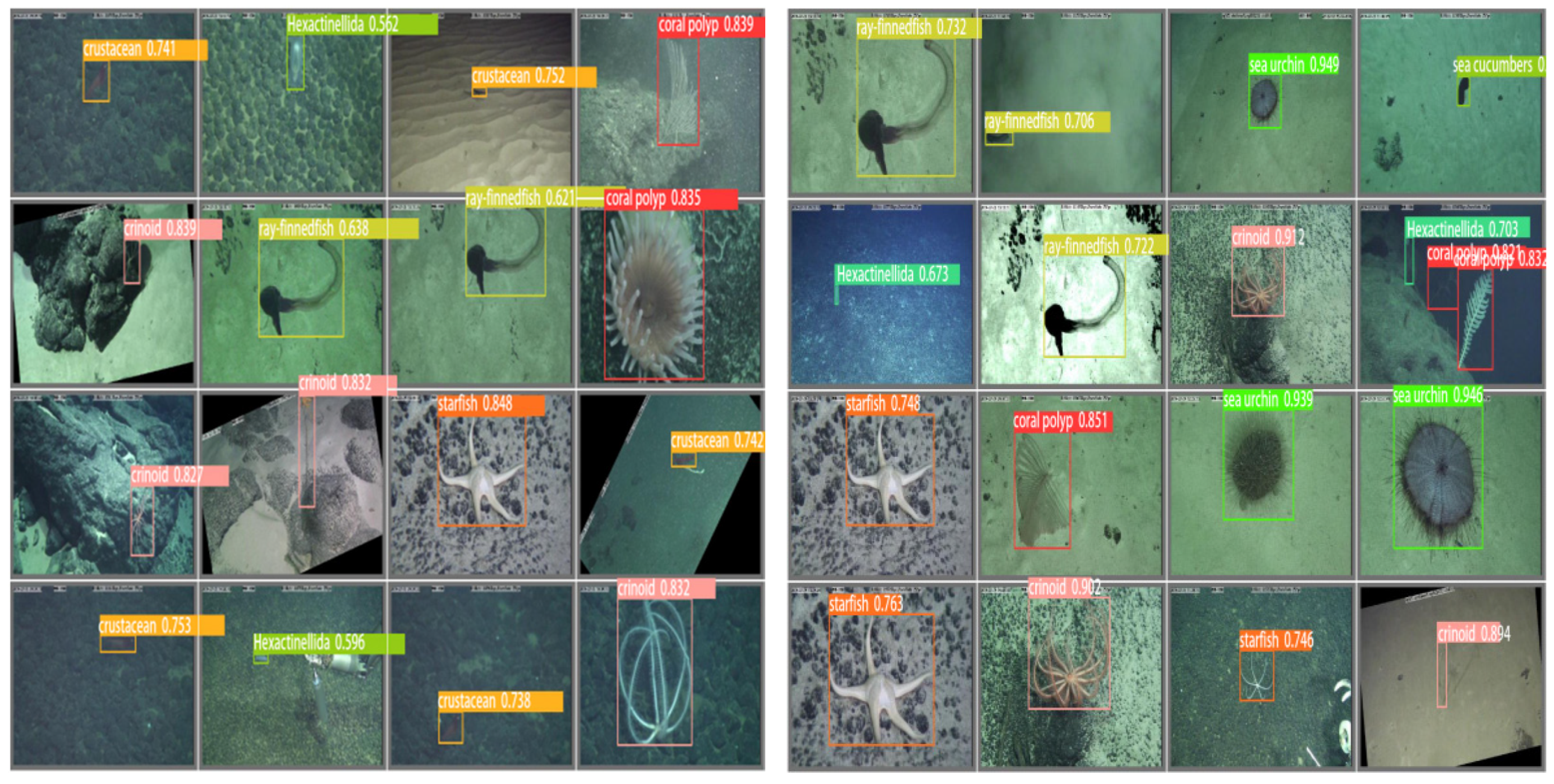

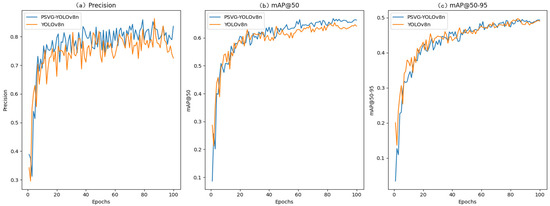

3.4.1. Experimental Comparison Before and After Model Improvement

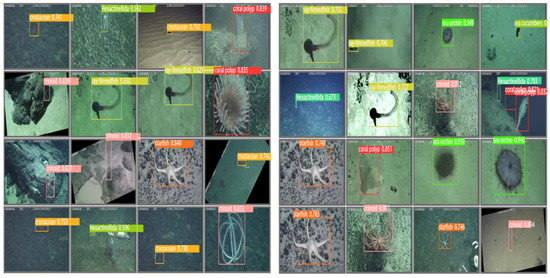

Figure 10 shows the precision, the mean average precision at IoU = 0.5, and the mean average precision at IoU = 0.5–0.95 of the original YOLOv8n model and the PSVG-YOLOv8n model over 100 training epochs. The PSVG-YOLOv8n model performed better when trained on the deep-sea organism dataset: on mAP50 and mAP50-95 it consistently led from about epoch 45 onward, with a particularly clear advantage on mAP50. This indicates that PSVG-YOLOv8n not only improves detection accuracy but is also more robust across IoU thresholds. To visually compare YOLOv8n and PSVG-YOLOv8n on samples that were not used in training, Figure 11 shows detection results for a range of deep-sea organisms. Compared with YOLOv8n, the proposed PSVG-YOLOv8n model achieved higher accuracy and fewer recognition errors, and in complex deep-sea scenes it produced higher confidence scores and more stable detections. Although YOLOv8n can also recognize deep-sea organisms accurately, PSVG-YOLOv8n is more robust across deep-sea backgrounds and less affected by interference from the complex environment.

Figure 10.

Comparison of indicators before and after model improvement.

Figure 11.

YOLOv8n (left) vs. PSVG-YOLOv8n (right) detection results for different organisms.

3.4.2. Ablation Experiments

To evaluate the effectiveness of the improvements, ablation experiments were conducted. With the environment and training parameters kept consistent, we analyzed the effect of adding different modules, or combinations of modules, to YOLOv8n on the detection of deep-sea organisms. The results are shown in Table 2. Adding the GSConv + VoVGSCSP combination module [32] to YOLOv8n enhanced feature fusion across scales and improved the model's ability to capture target details: precision, mAP50, and mAP50-95 improved by 2.4, 1.2, and 0.3 percentage points, respectively. Similarly, introducing the C2f_SENetV2 module enhanced the global feature representation of the image, improving precision, mAP50, and mAP50-95 by 3.9, 0.9, and 0.5 percentage points, respectively, compared with the original model. Finally, adding the PSA module before the backbone SPPF enhanced the feature representation in specific spatial regions: precision and mAP50 improved by 2.5 and 1.8 percentage points, respectively, while mAP50-95 remained unchanged. Each of the three improvements therefore raised the accuracy of the baseline model in this experiment. When the three modules were simply combined, however, precision improved by only 0.8 percentage points and mAP50-95 decreased by 0.7 percentage points, which is less than the improvement obtained with the individual modules. By then integrating the improved detection head with the combined modules, the final model's precision, mAP50, and mAP50-95 improved by 1.2, 2.3, and 1.1 percentage points, respectively, demonstrating the effectiveness of the final combined model for deep-sea biological image detection.
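Because the final metrics (79.9%, 67.2%, and 50.9%) and their gains over YOLOv8n (1.2, 2.3, and 1.1 percentage points) are both reported, the baseline values and the absolute values of the single-module variants can be recovered by simple arithmetic. The sketch below does this in Python, assuming that all the gains in this section are absolute percentage-point differences from the same YOLOv8n baseline; the derived numbers are only implied by the reported figures and should be checked against Table 2.

```python
FINAL = {"P": 79.9, "mAP50": 67.2, "mAP50-95": 50.9}   # PSVG-YOLOv8n (%)
FINAL_GAIN = {"P": 1.2, "mAP50": 2.3, "mAP50-95": 1.1}  # gain over YOLOv8n (percentage points)

# Single-module gains over YOLOv8n reported in this section (percentage points)
SINGLE_GAINS = {
    "GSConv + VoVGSCSP": {"P": 2.4, "mAP50": 1.2, "mAP50-95": 0.3},
    "C2f_SENetV2": {"P": 3.9, "mAP50": 0.9, "mAP50-95": 0.5},
    "PSA": {"P": 2.5, "mAP50": 1.8, "mAP50-95": 0.0},
}

# Implied YOLOv8n baseline = final value - final gain (rounded to one decimal)
baseline = {m: round(FINAL[m] - FINAL_GAIN[m], 1) for m in FINAL}

# Implied absolute metrics of each single-module variant = baseline + gain
variants = {
    name: {m: round(baseline[m] + g[m], 1) for m in baseline}
    for name, g in SINGLE_GAINS.items()
}

print("YOLOv8n (implied):", baseline)
for name, metrics in variants.items():
    print(f"{name} (implied):", metrics)
```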

Table 2.

Ablation experiments.

3.4.3. Comparative Tests of Different Models

To fully evaluate the performance of the PSVG-YOLOv8n model, it was compared on the same dataset with a series of object detection models: SSD, YOLOv3, YOLOv5, YOLOv7, YOLOv8n, and YOLOv11. The results are shown in Table 3. PSVG-YOLOv8n achieved the best results on all the performance metrics, reaching 79.9%, 67.2%, and 50.9% in precision, mAP50, and mAP50-95, respectively, which demonstrates its strong ability to accurately identify and localize targets.

Table 3.

Comparison experiments with other models.

4. Conclusions

Owing to the complex deep-sea environment, the Jiaolong manned submersible's dataset of macrofauna exhibits poor image quality, a limited number of images, and significant light refraction and scattering. Traditional manual identification methods are inefficient and costly and cannot meet the requirements of deep-sea technology for efficient and accurate detection. In this paper, we propose the PSVG-YOLOv8n model, which incorporates the PSA attention mechanism before the SPPF module in the backbone network. We also introduce the GSConv + VoVGSCSP combination module and the C2f_SENetV2 module into the neck network. Finally, we integrate these three modules with an improved detection head to enhance the model's recognition accuracy. Compared with YOLOv8n, PSVG-YOLOv8n achieved clear improvements in precision, mAP50, and mAP50-95, reaching 79.9%, 67.2%, and 50.9%, respectively. Compared with SSD, YOLOv3, YOLOv5, YOLOv7, and YOLOv11, PSVG-YOLOv8n also shows a distinct advantage in detection accuracy. The method presented in this paper provides technical support for the investigation and assessment of deep-sea biodiversity and for the conservation of marine ecological environments. By improving the precision and efficiency of deep-sea biological detection, this study helps scientists gain a more comprehensive understanding of deep-sea ecosystems and offers reliable data support for the sustainable use of marine resources and the protection of ecological environments.

Author Contributions

Conceptualization, D.C. and X.S.; Methodology, D.C.; Software, D.C.; Data curation, D.C.; Writing—original draft, D.C., X.S., J.Y., X.G. and Y.R.; Writing—review & editing, D.C., X.S. and Y.R.; Supervision, X.S., J.Y., X.G. and Y.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was supported and sponsored by the National Key Project of Research and Development Program (2024YFC2814400), the National Natural Science Foundation of China (U22A2044), and the Taishan Scholars Program (tsqn202408291).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Danovaro, R.; Gambi, C.; Dell’Anno, A.; Corinaldesi, C. Deep-sea biodiversity informs the design of marine protected areas. Nature 2008, 456, 201–204. [Google Scholar]

- Zhang, L.; Li, Q.; Li, Y. Transferable Deep Learning Model for the Identification of Fish Species in Various Fishing Grounds. J. Mar. Sci. Eng. 2023, 12, 415. [Google Scholar]

- Sun, H.; Zhang, Q.; Li, Z. Enhancing underwater image quality for object detection using generative adversarial networks. Ocean Eng. 2024, 260, 111234. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS 2012), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105. [Google Scholar]

- Mu, R.; Zeng, X. A review of deep learning research. KSII Trans. Internet Inf. Syst. (TIIS) 2019, 13, 1738–1764. [Google Scholar]

- Abouhalima, M.; das Neves, L.; Taveira-Pinto, F.; Rosa-Santos, P. Machine Learning in Coastal Engineering: Applications, Challenges, and Prospects. J. Mar. Sci. Eng. 2024, 12, 638. [Google Scholar] [CrossRef]

- Yang, Y.; Ye, Z.; Su, Y.; Zhao, Q.; Li, X.; Ouyang, D. Deep learning for in vitro prediction of pharmaceutical formulations. Acta Pharm. Sin. B 2019, 9, 177–185. [Google Scholar] [CrossRef] [PubMed]

- Sun, B.Y.; Mei, Y.P.; Yan, N.; Chen, Y. UMGAN: Underwater Image Enhancement Network for Unpaired Image-to-Image Translation. J. Mar. Sci. Eng. 2023, 11, 447. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Zhang, M.; Li, X. Improved YOLOv8-Based Underwater Target Detection Algorithm. J. Mar. Sci. Eng. 2023, 11, 753.

- Tan, H.; Li, Y.; Zhu, M.; Deng, Y.; Tong, M. Detection of Overlapping Fish Counts through Image Enhancement and Improved Faster R-CNN Network. Trans. Chin. Soc. Agric. Eng. 2022, 38, 167–176.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Wageeh, Y.; Mohamed HE, D.; Fadl, A.; Anas, O.; ElMasry, N.; Nabil, A.; Atia, A. YOLO fish detection with Euclidean tracking in fish farms. J. Ambient Intell. Humaniz. Comput. 2021, 12, 5–12.

- Liu, D.; Feng, G.; Wang, H. Multi-scale feature fusion for underwater object detection based on deep learning. Ocean Eng. 2025, 270, 111567.

- Wang, L.; Zhang, Y.; Liu, H. Underwater Object Detection Using YOLOv8 with Polarized Self-Attention Mechanism. Int. J. Comput. Vis. Pattern Recognit. 2023, 45, 245–260.

- Li, H.; Zhang, Y. Optimizing YOLOv8 Architecture with Slim-neck and GSConv for Autonomous Vehicle Detection. Int. J. Image Process. 2023, 29, 405–420.

- Zhu, X.; Li, L.; Li, J. Hyperspectral Image Classification Model Using Squeeze and Excitation Network with Deep Learning. J. Imaging 2022, 8, 243.

- Guo, A.; Sun, K.; Zhang, Z. A lightweight YOLOv8 integrating FasterNet for real-time underwater object detection. J. Real-Time Image Process. 2024, 21, 49.

- Guo, A.; Wang, Y.; Guo, L.; Zhang, R.; Yu, Y.; Gao, S. An adaptive position-guided gravitational search algorithm for function optimization and image threshold segmentation. Eng. Appl. Artif. Intell. 2023, 121, 106040.

- Shi, X.; Wang, H. Lightweight underwater object detection network based on improved YOLOv4. J. Harbin Eng. Univ. 2023, 44, 154–160.

- Hu, D.; Yu, M.; Wu, X.; Hu, J.; Sheng, Y.; Jiang, Y.; Zheng, Y. DGW-YOLOv8: A small insulator target detection algorithm based on deformable attention backbone and WIoU loss function. IET Image Process. 2024, 18, 1096–1108.

- Chu, X.; Tian, Z.; Wang, Y.; Zhang, B.; Ren, H.; Wei, X.; Xia, H.; Shen, C. Twins: Revisiting the design of spatial attention in vision transformers. In Proceedings of the Advances in Neural Information Processing Systems 34 (NeurIPS 2021), Virtual, 6–14 December 2021; pp. 9355–9366.

- Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An efficient pyramid squeeze attention block on convolutional neural network. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1161–1177.

- Zhao, Z.; Wang, L.; Xu, D. Segmentation and Detection via Split and Concatenate Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Li, J.; Chen, S.; Yang, Z. Frequency-based Feature Extraction for Few-Shot Learning. Pattern Recognit. 2020, 104, 107331.

- Shen, J.; Wu, T. Learning Spatially-Adaptive Squeeze-Excitation Networks for Image Synthesis and Image Recognition. arXiv 2021, arXiv:2112.14804.

- Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic head: Unifying object detection heads with attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7373–7382.

- Shi, X.; Ren, Y.; Tang, J.; Fu, W.; Liu, B. Working Tools Study for JiaoLong Manned Submersible. Mar. Technol. Soc. J. 2019, 53, 56–64.

- Feng, X.; Shen, Y.; Wang, D. A Review of the Development Status of Image-based Data Augmentation Methods. Comput. Sci. Appl. 2021, 11, 370.

- Hestness, J.; Ardalani, N.; Diamos, G. Beyond human-level accuracy: Computational challenges in deep learning. In Proceedings of the 24th Symposium on Principles and Practice of Parallel Programming, Washington, DC, USA, 16–20 February 2019; pp. 1–14.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A lightweight-design for real-time detector architectures. J. Real-Time Image Process. 2024, 21, 62.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).