Underwater Target Tracking Method Based on Forward-Looking Sonar Data

Abstract

1. Introduction

- (1)

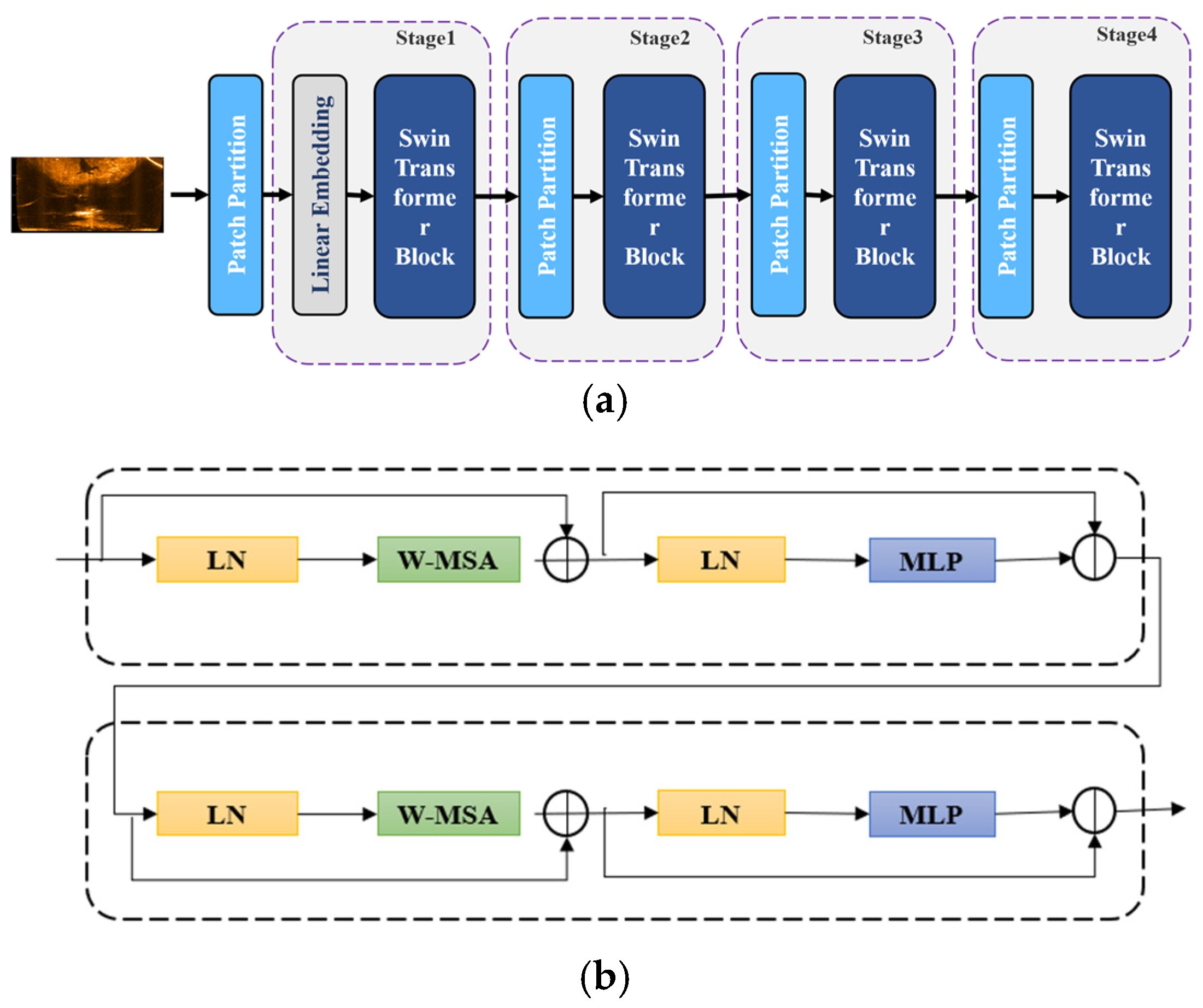

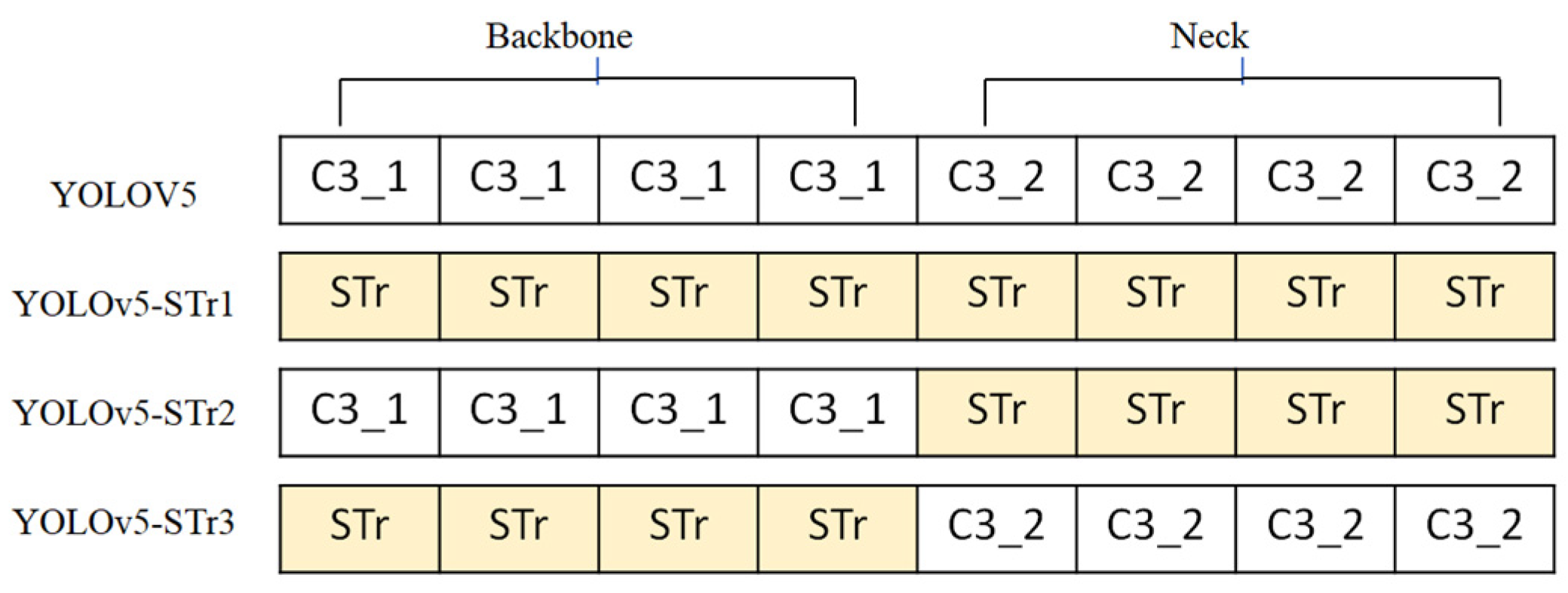

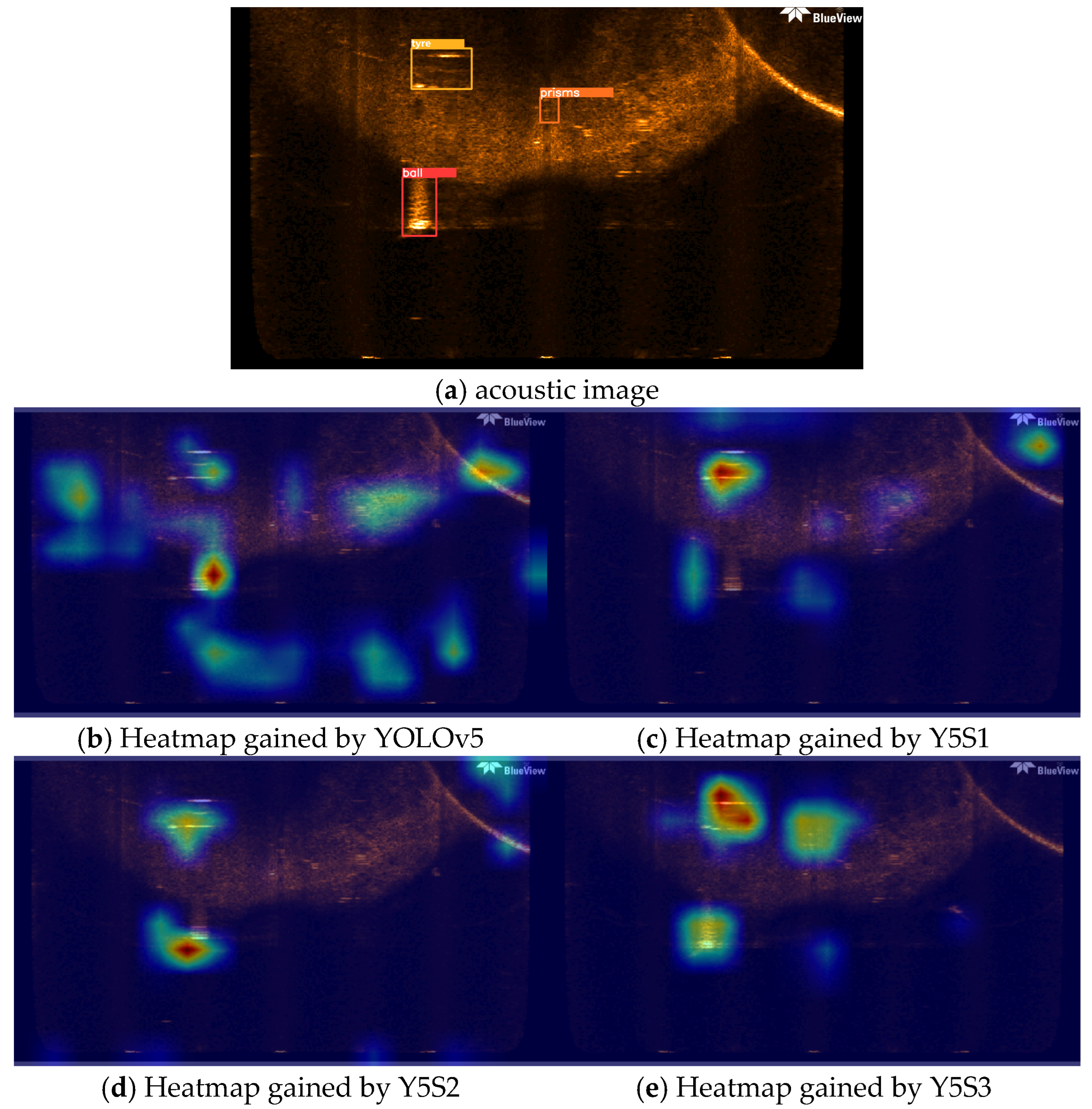

- In view of the problems of low contrast, high noise levels in underwater acoustic images, and the insufficient global image information fusion capability in the CNN structure of the YOLOv5 network, the C3 structure in the network was replaced with an STR block to improve the detection accuracy of objects in the sonar images.

- (2)

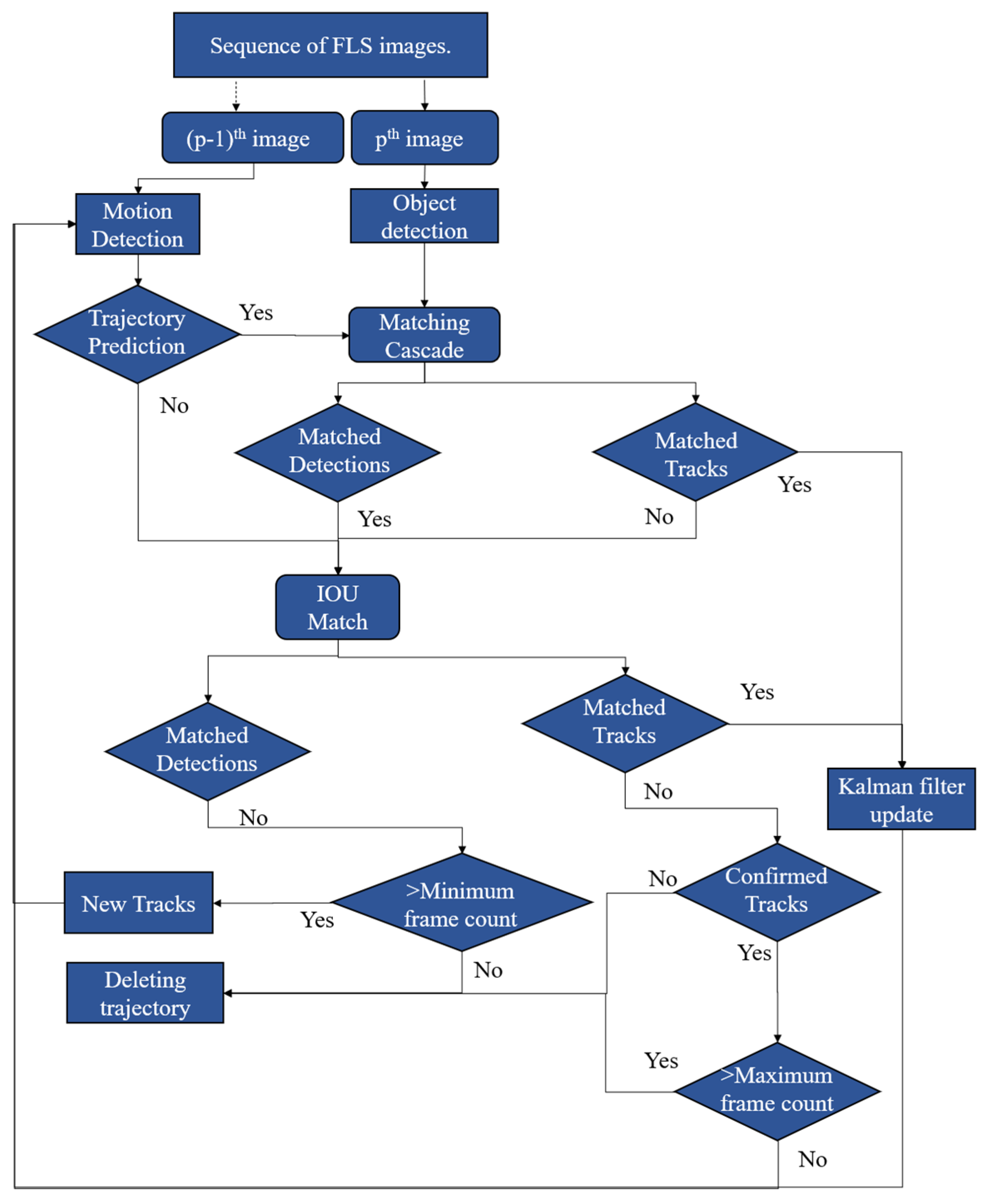

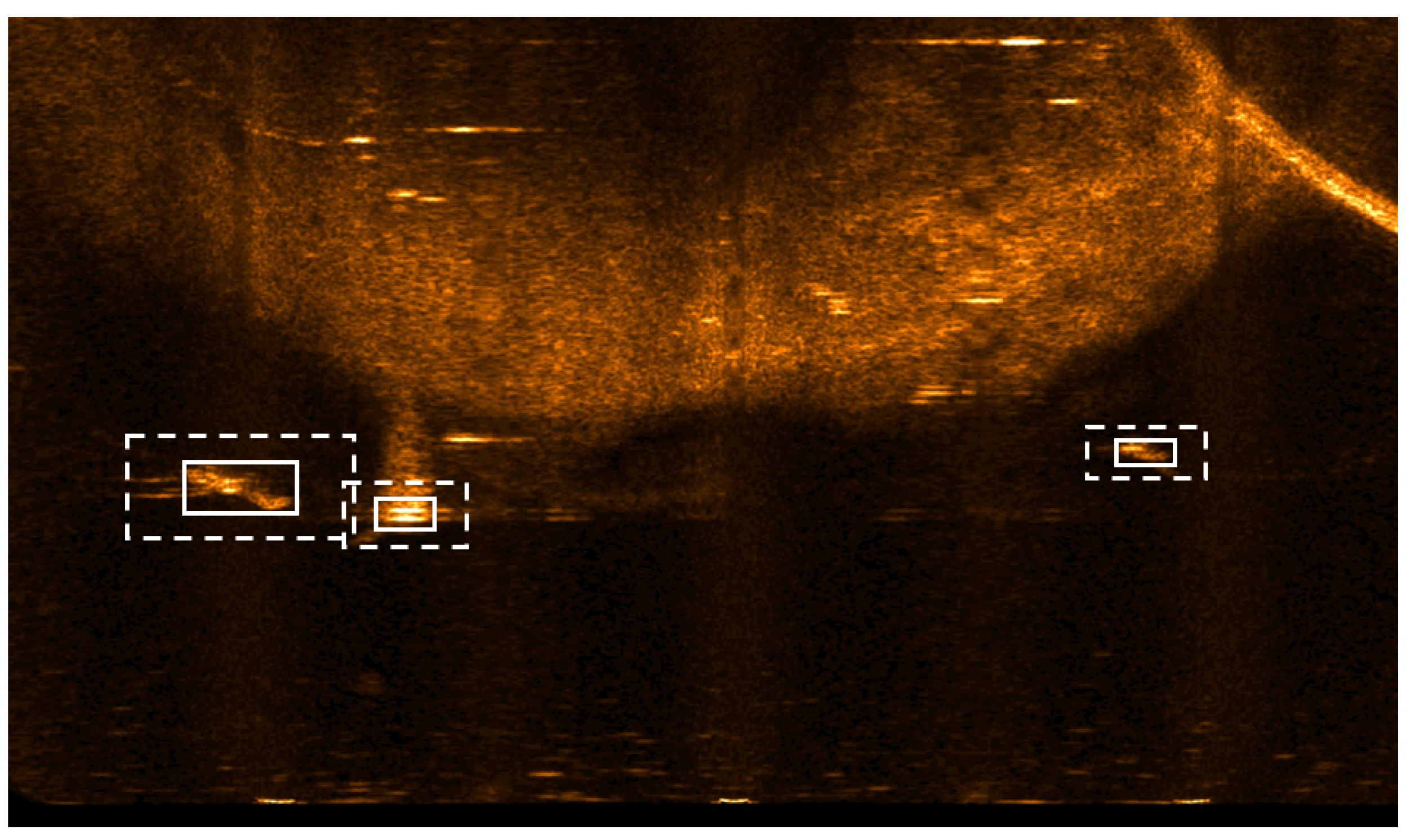

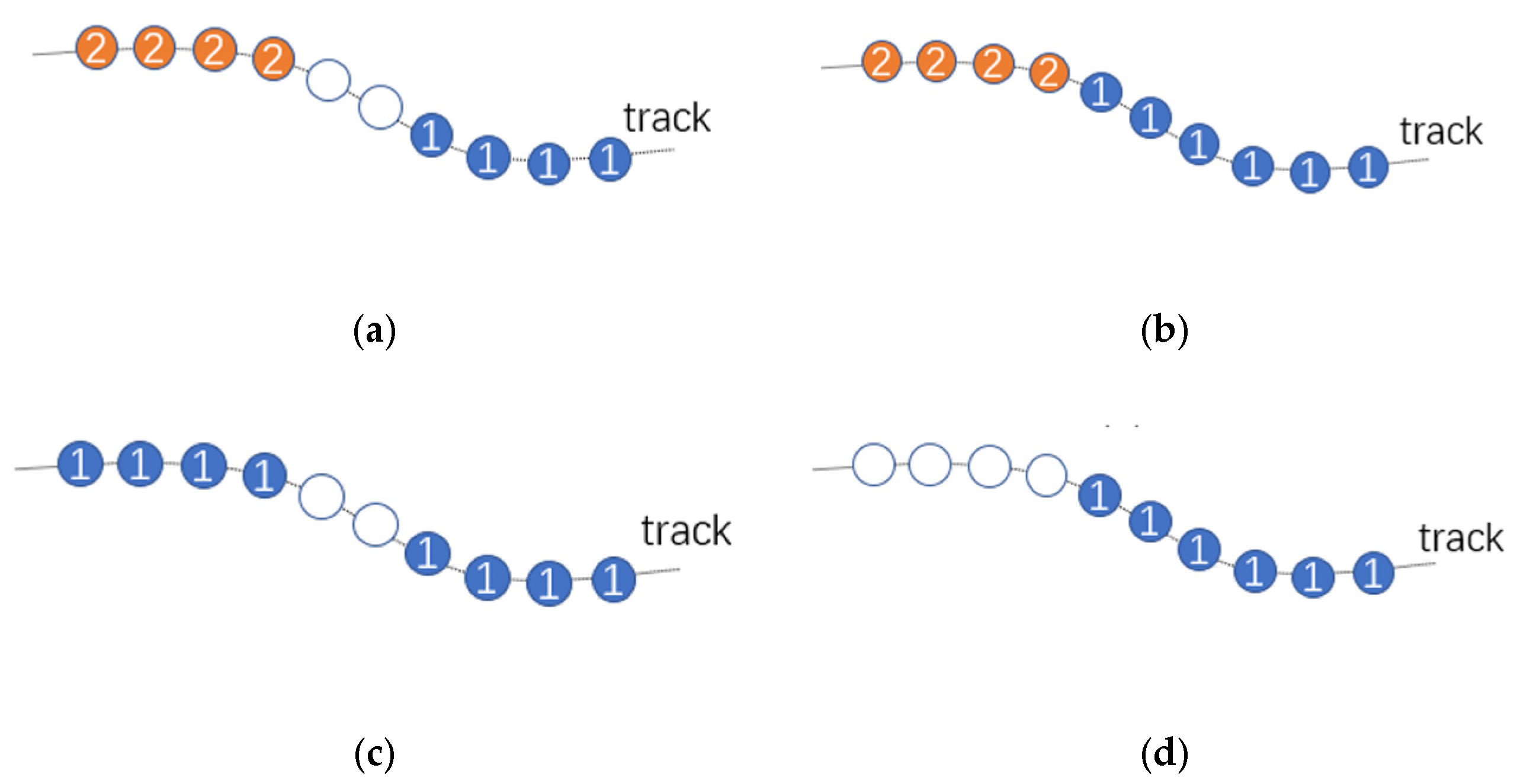

- A DeepSORT tracking improvement framework based on extended bounding boxes was designed. For issues such as trajectory interruption and ID switch in the field of underwater target tracking, an extension strategy for detecting bounding boxes was proposed, and the features of the scattered noise around the target were extracted to compensate for the sparsity of the target’s own features. Experimental comparison results show that the proposed method can effectively suppress trajectory interruption and ID switch issues during the tracking process, and improves the stability of the tracking network.
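The bounding-box extension in contribution (2) can be illustrated with a minimal sketch. The function name `extend_box`, the symmetric growth about the box centre, and the default `ratio` are assumptions made for illustration; the extension rule and extension size actually used by the authors are not stated in this part of the text.

```python
from typing import Tuple

# (x1, y1, x2, y2) in pixel coordinates, with x2 > x1 and y2 > y1
Box = Tuple[float, float, float, float]


def extend_box(box: Box, img_w: int, img_h: int, ratio: float = 0.2) -> Box:
    """Grow a detection box about its centre and clip it to the image.

    `ratio` is a hypothetical parameter: 0.2 makes the box 20% wider and
    20% taller, so the crop also covers the scattered noise around the target.
    """
    x1, y1, x2, y2 = box
    dw = (x2 - x1) * ratio / 2.0  # extra width added on each side
    dh = (y2 - y1) * ratio / 2.0  # extra height added on each side
    return (
        max(0.0, x1 - dw),
        max(0.0, y1 - dh),
        min(float(img_w), x2 + dw),
        min(float(img_h), y2 + dh),
    )
```

In a DeepSORT-style pipeline, the extended box would be used only to crop the patch passed to the appearance feature extractor, while the original detection box would still drive the motion model; that split is likewise an assumption of this sketch.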

2. Related Work

2.1. Traditional Target Tracking Methods of Acoustic Images

2.2. Deep Learning-Based Target Tracking Methods of Acoustic Images

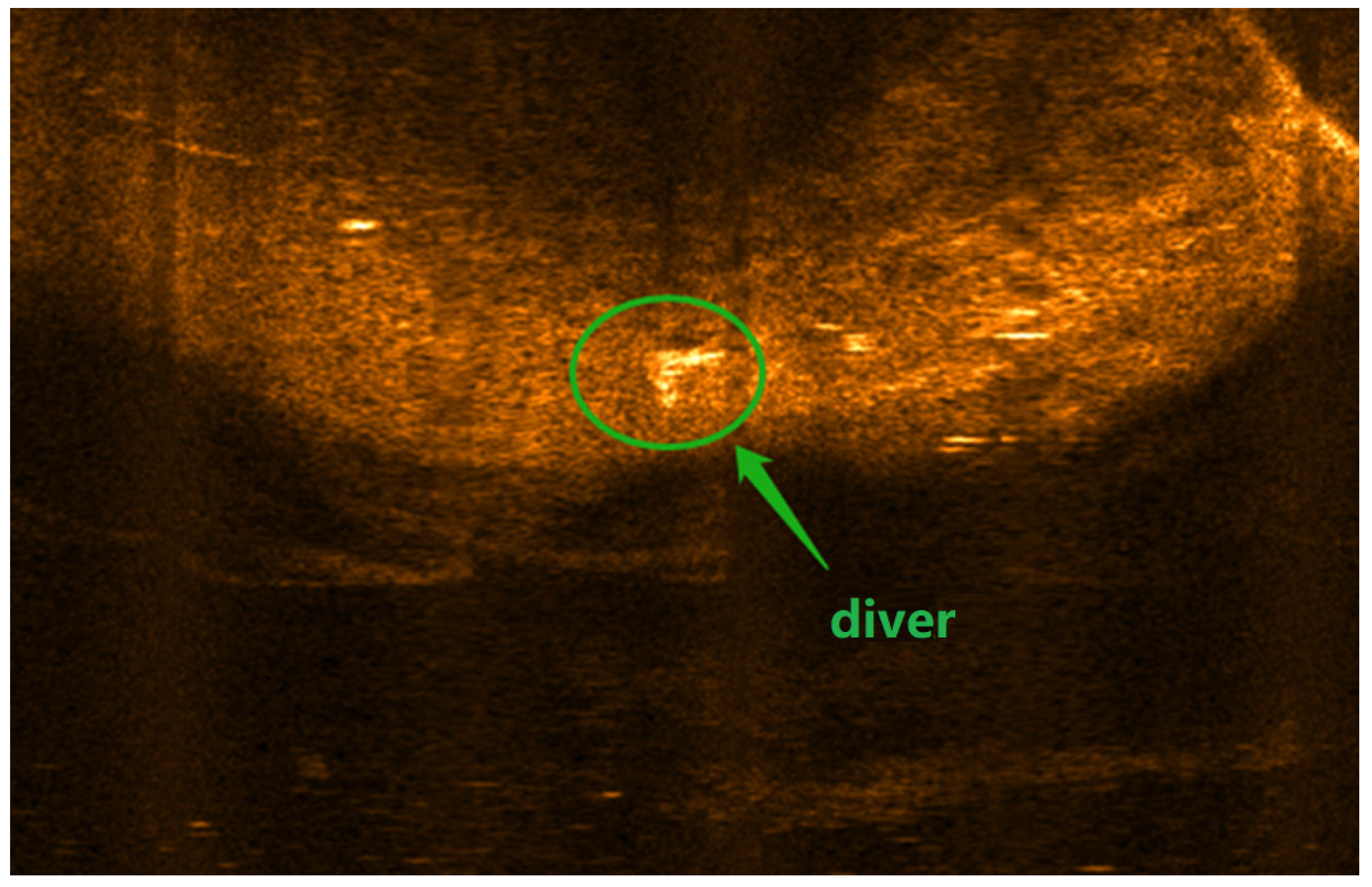

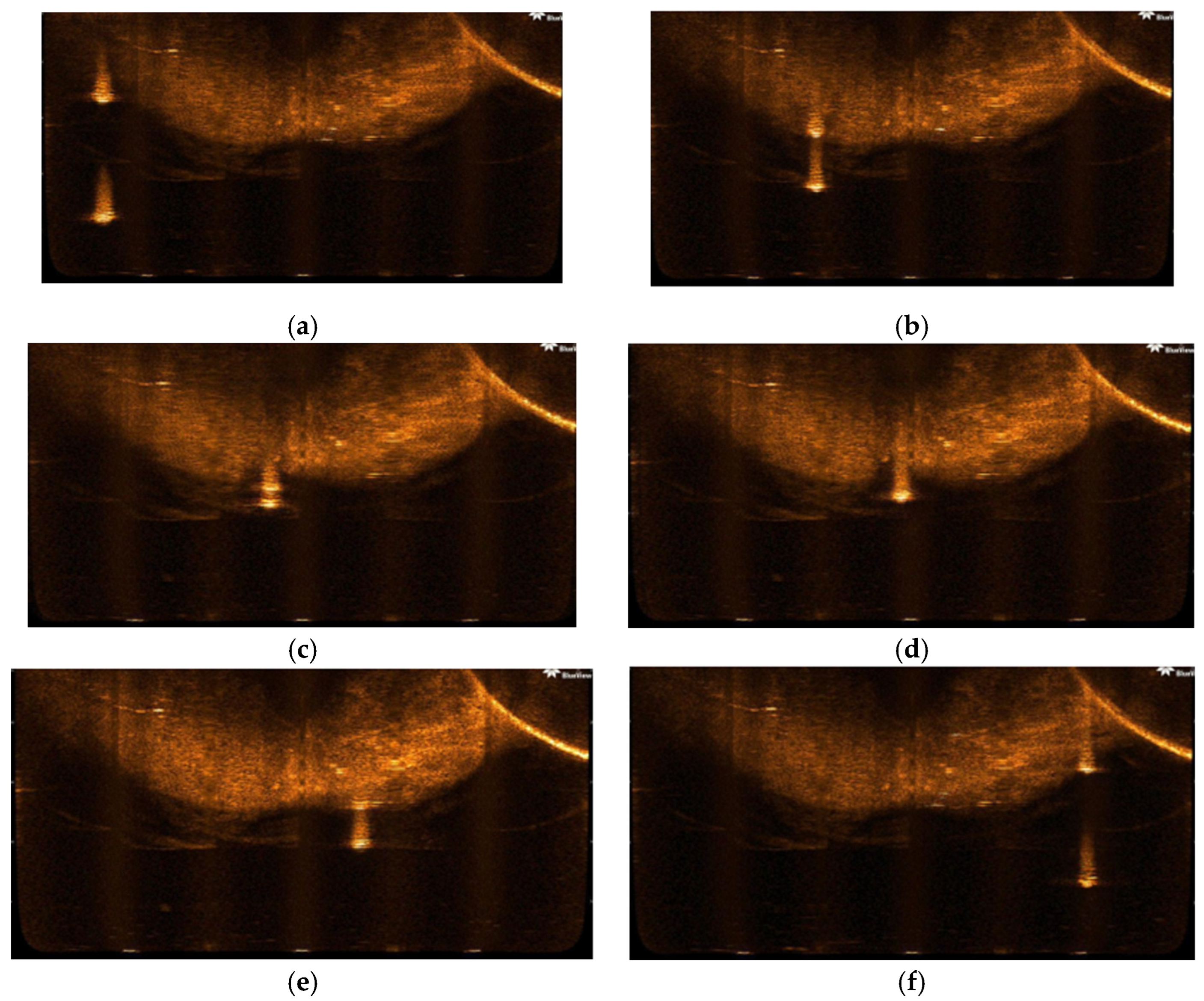

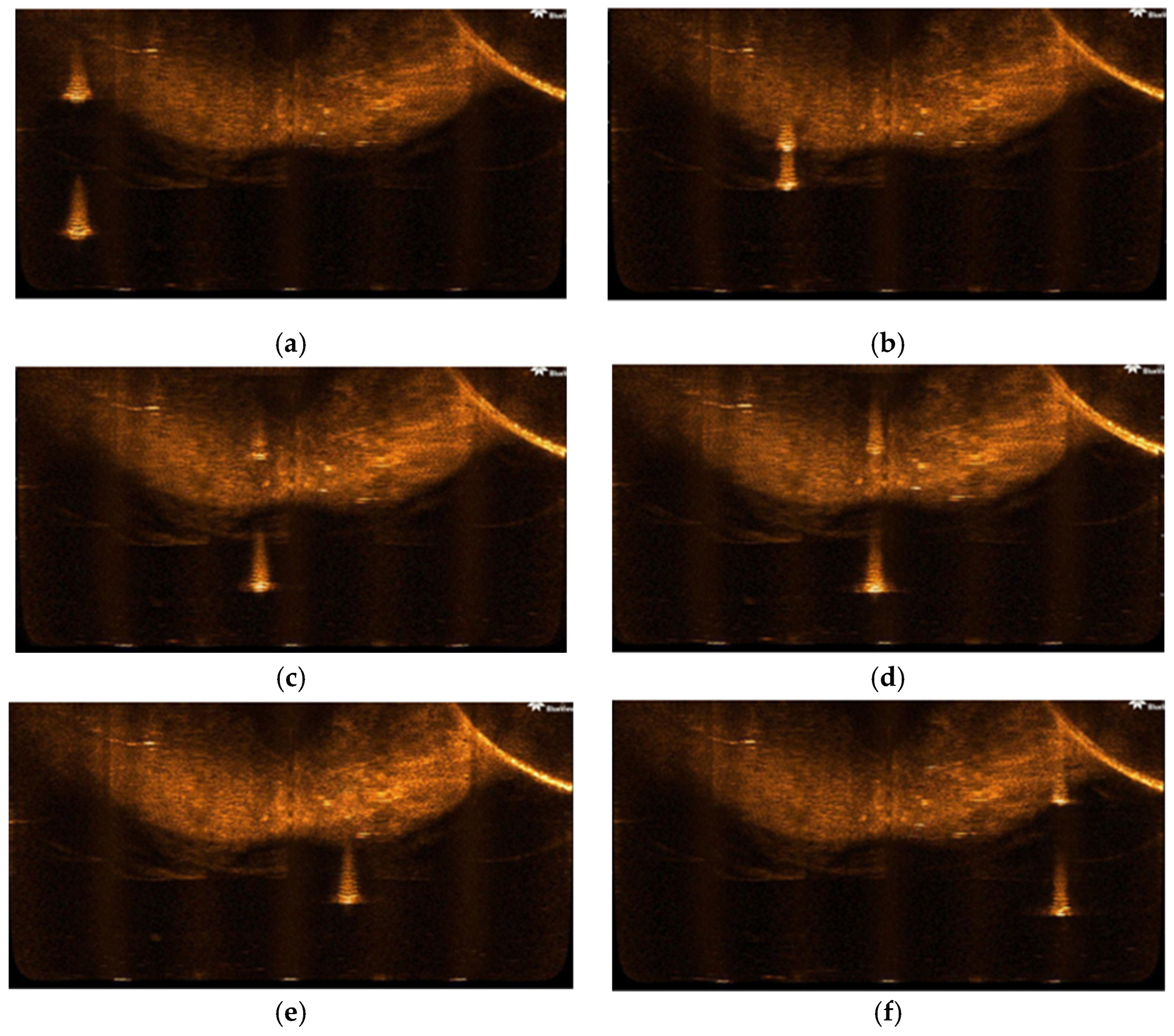

3. FLS Overview

- (1) The physical size of the transducers limits how many can be incorporated into an array. As a result, the images produced by the FLS have low resolution and a weak grayscale representation of the target area, which makes it difficult to discern fine details of the target.

- (2) The scattering properties of different regions of the target surface vary with shape, material composition, and the spatial relationship between the target and the sonar. In addition, the angle at which acoustic waves strike the target may change as the target moves, so the same target can appear as several distinct regions in the acoustic image. These regions frequently appear as disjointed segments.

- (3) Multipath propagation is a significant phenomenon in acoustic imaging: multipath reflections may carry more energy than the echoes reflected from obstacles. This can lead to inaccurate or incomplete detection of targets and complicates the processing of acoustic images.

4. Design of YOLOv5 Network Model for Acoustic Object Tracking

4.1. Swin Transformer Basic Principle

4.2. Improvement in YOLOv5 Based on Swin Transformer

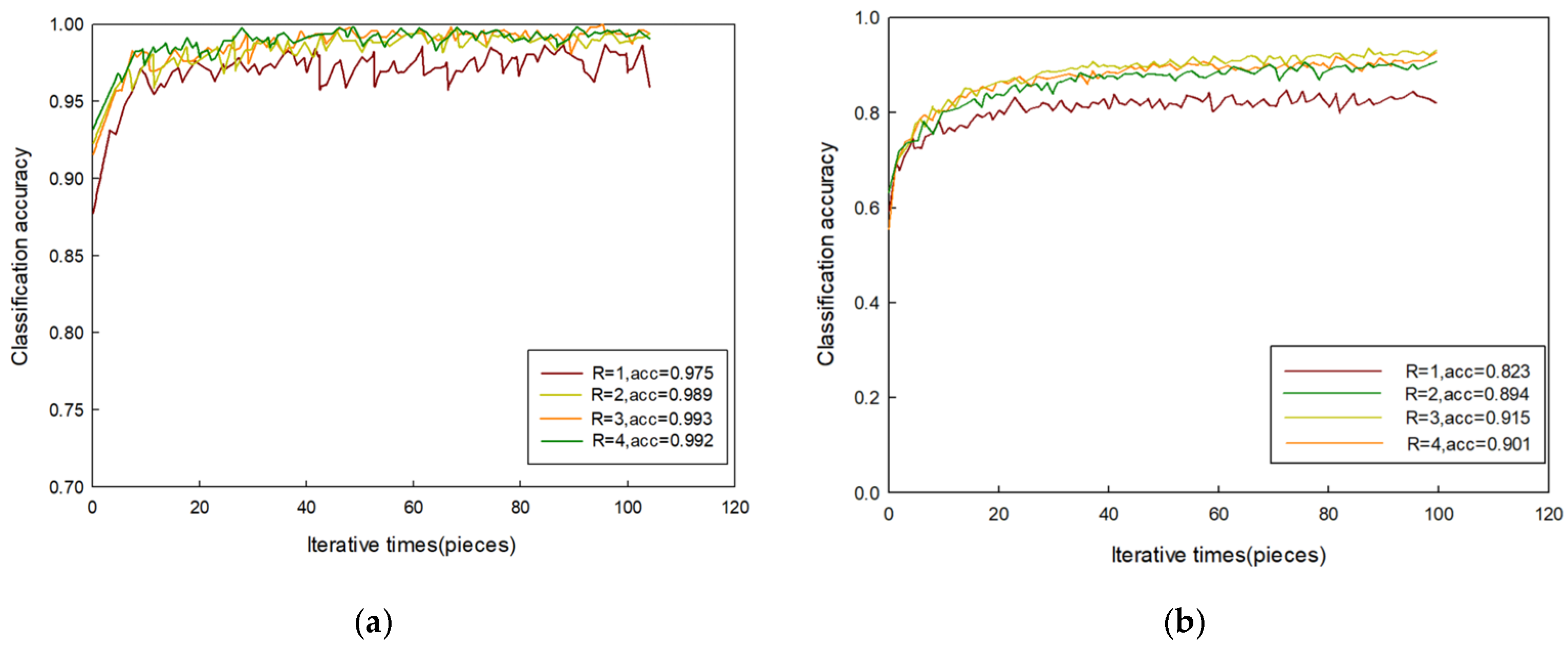

4.3. Experiment Results and Analysis

5. Tracker Design Based on DeepSORT

5.1. DeepSORT Basic Principle

5.2. Improvement in DeepSORT

6. Experimental Test

6.1. Constructing the Experimental Datasets

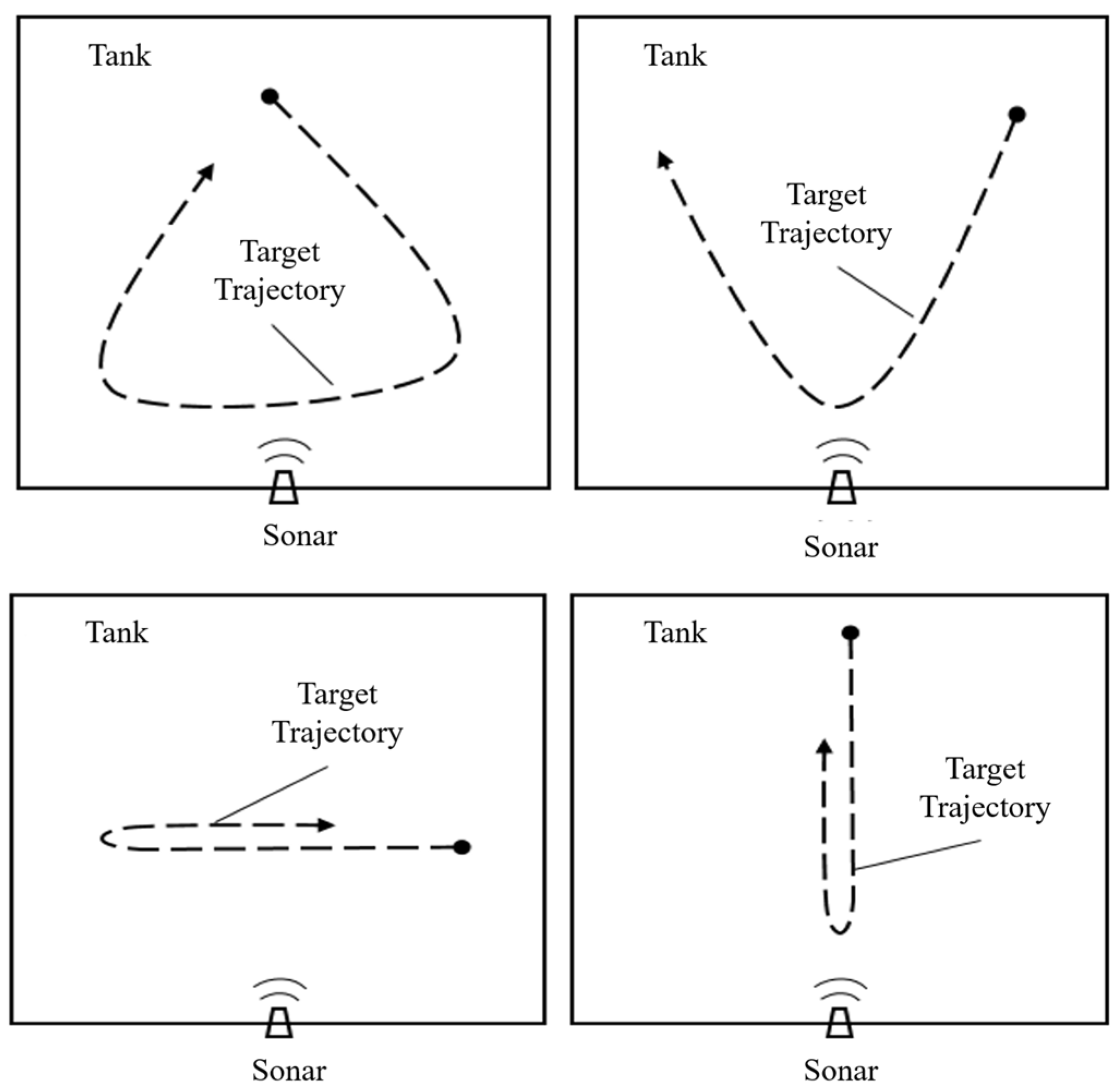

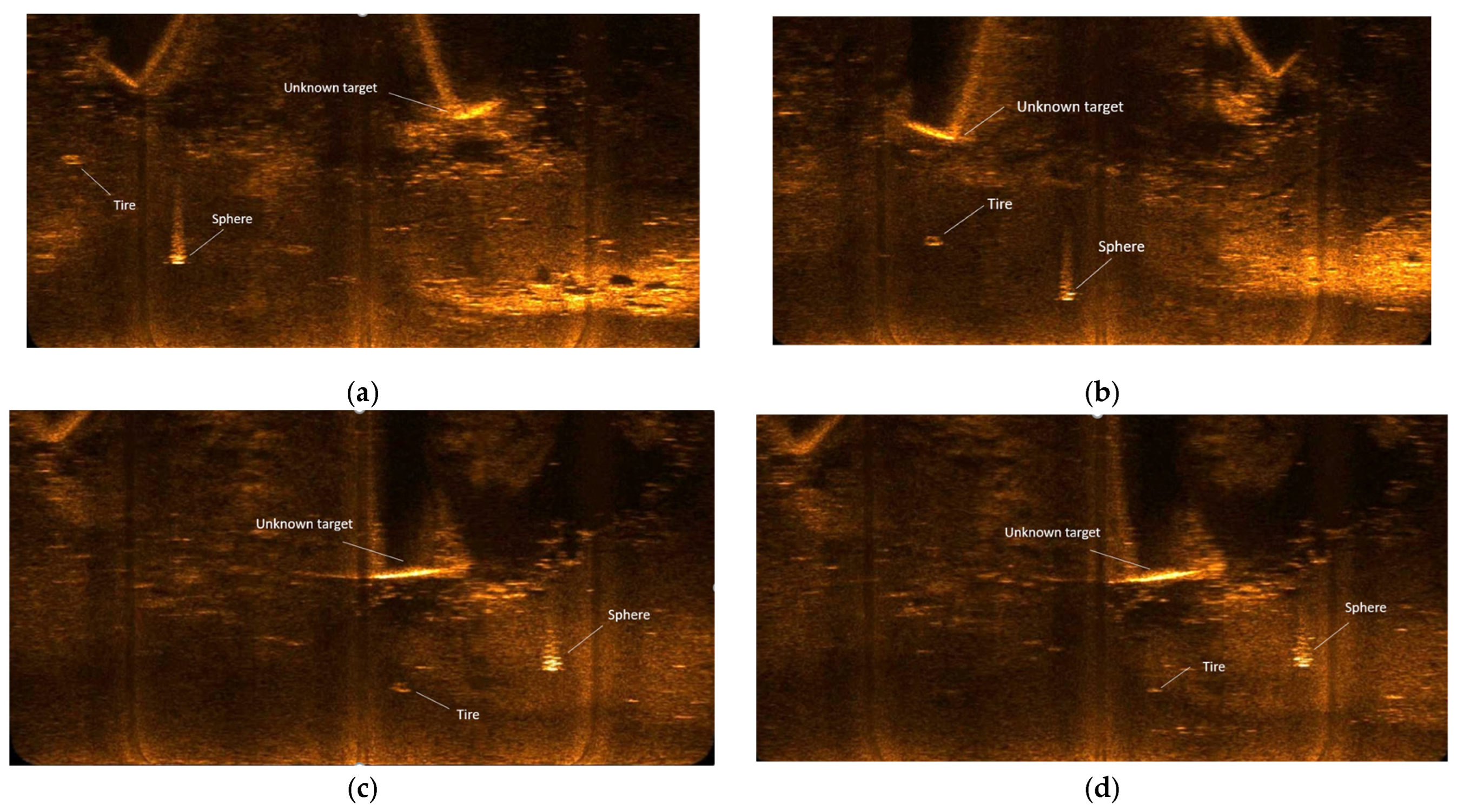

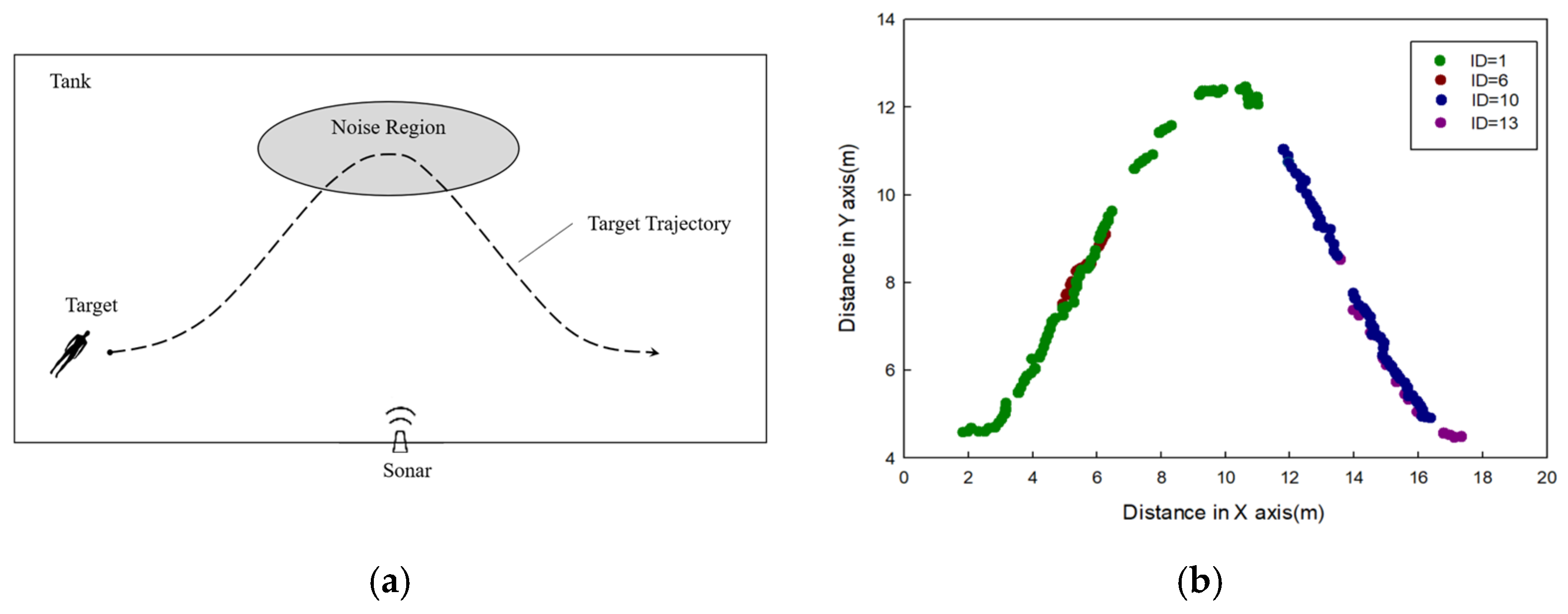

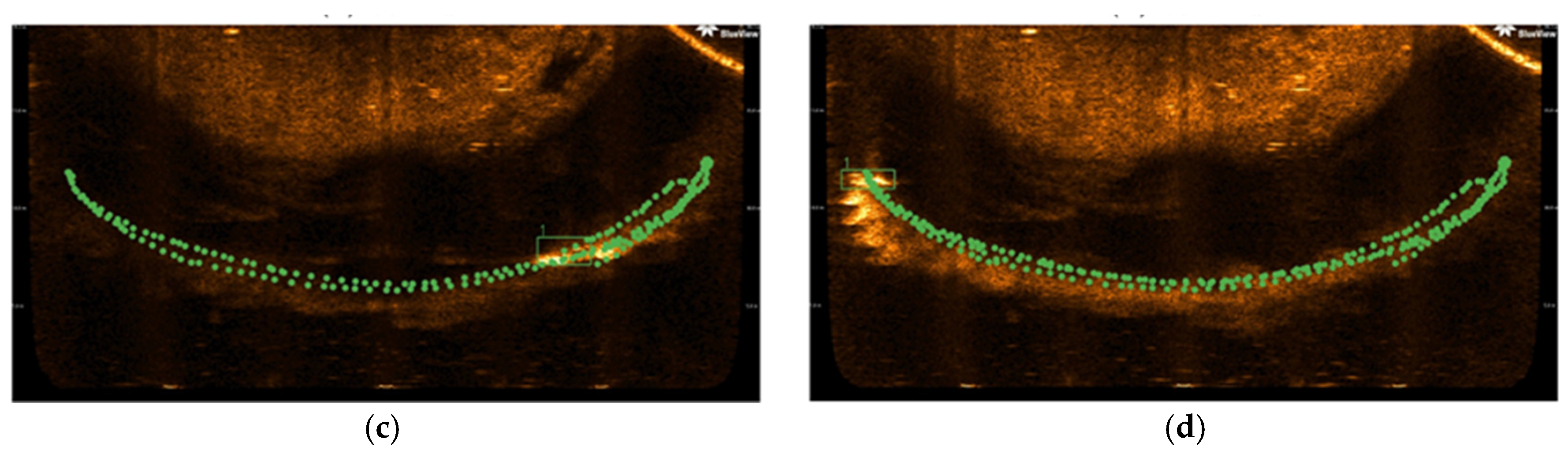

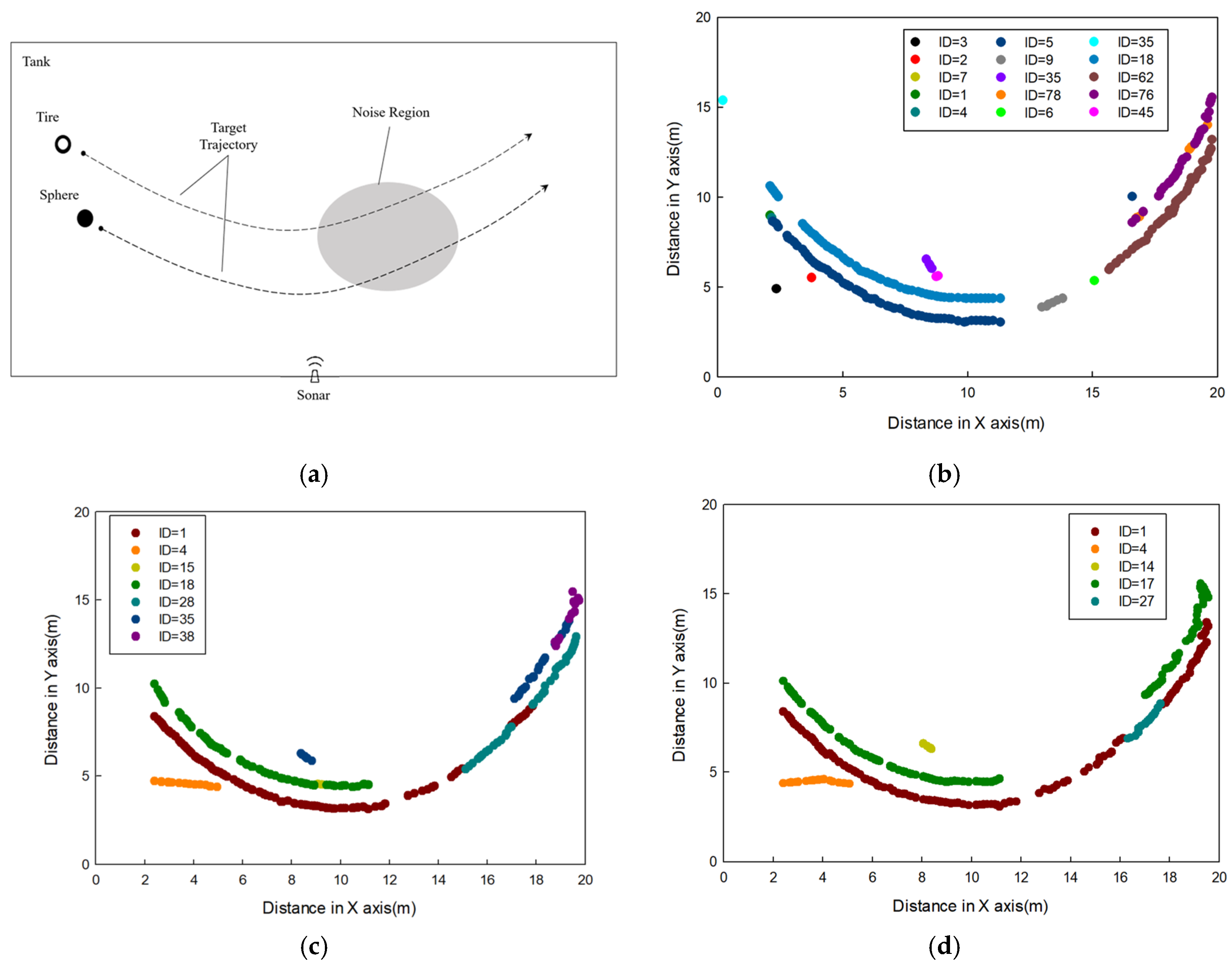

6.1.1. Acquisition of Acoustic Images in Tank

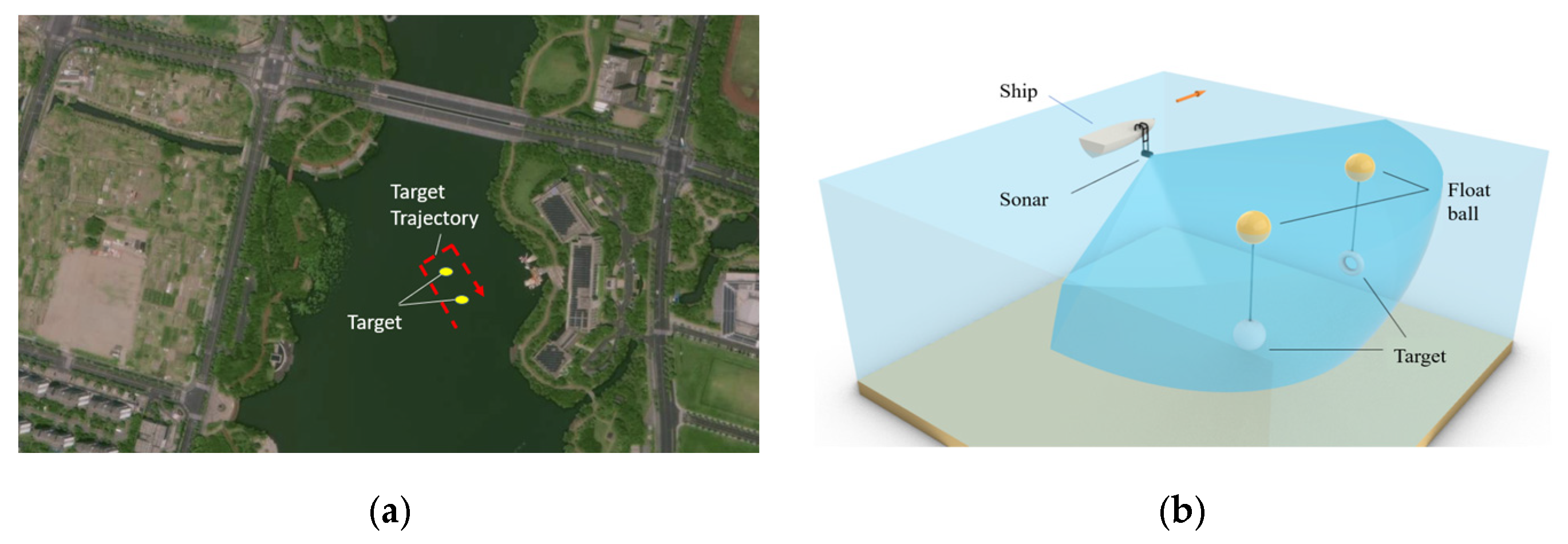

6.1.2. Acquisition of Acoustic Images in Lake

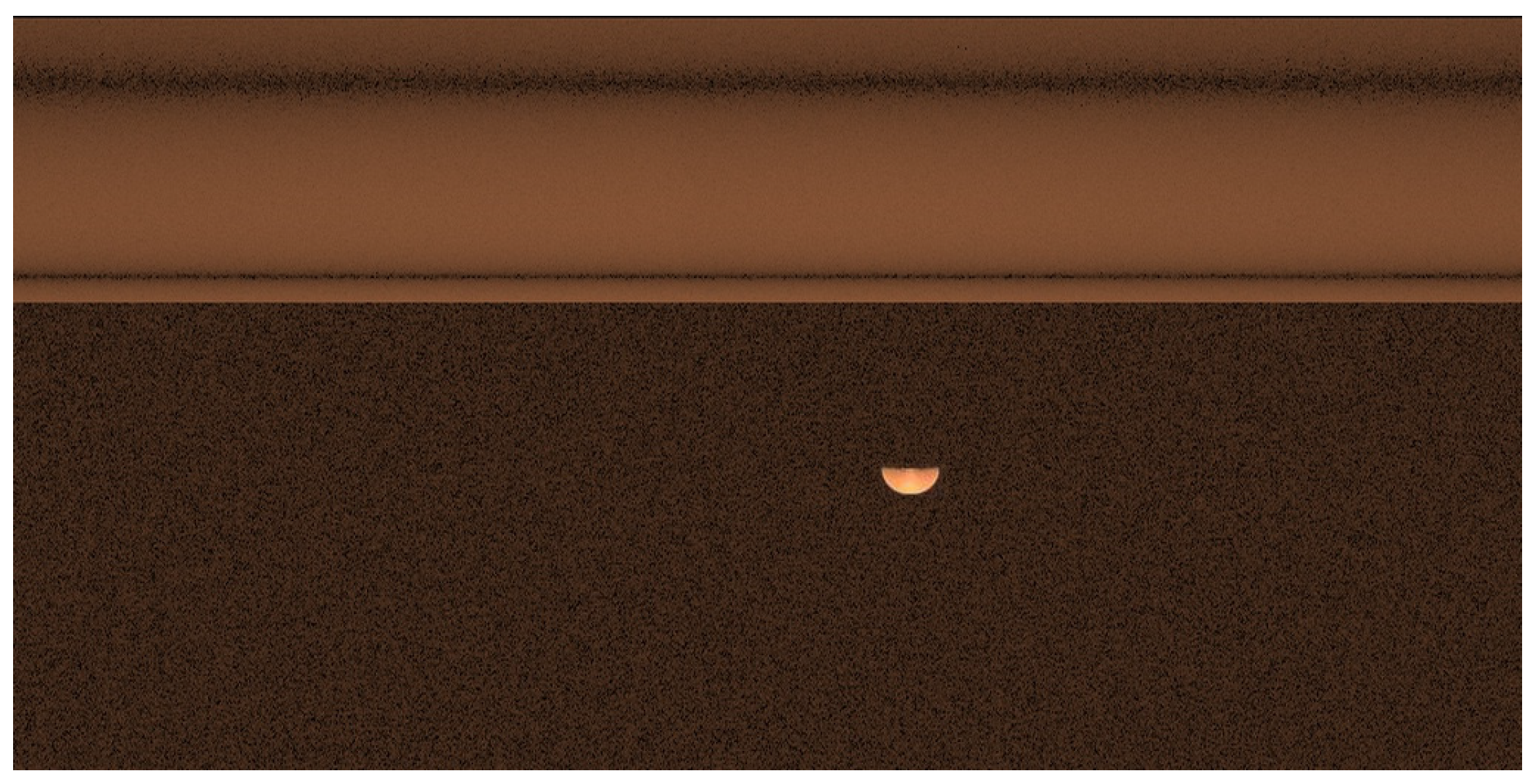

6.1.3. Acoustic Image Dataset Extension Based on Pix2PixHD

6.1.4. Ablation Experiment

6.2. Underwater Target Tracking Evaluation Criteria

- (1) ID Switch: a change in the ID number assigned to a target along a single trajectory.

- (2) Frag Ratio: a trajectory interruption occurs when the target has no assigned ID in a frame of a single trajectory. The number of frames with trajectory interruptions, expressed as a ratio of the total number of frames in the trajectory, is the trajectory interruption proportion (Frag Ratio), as detailed in the following.

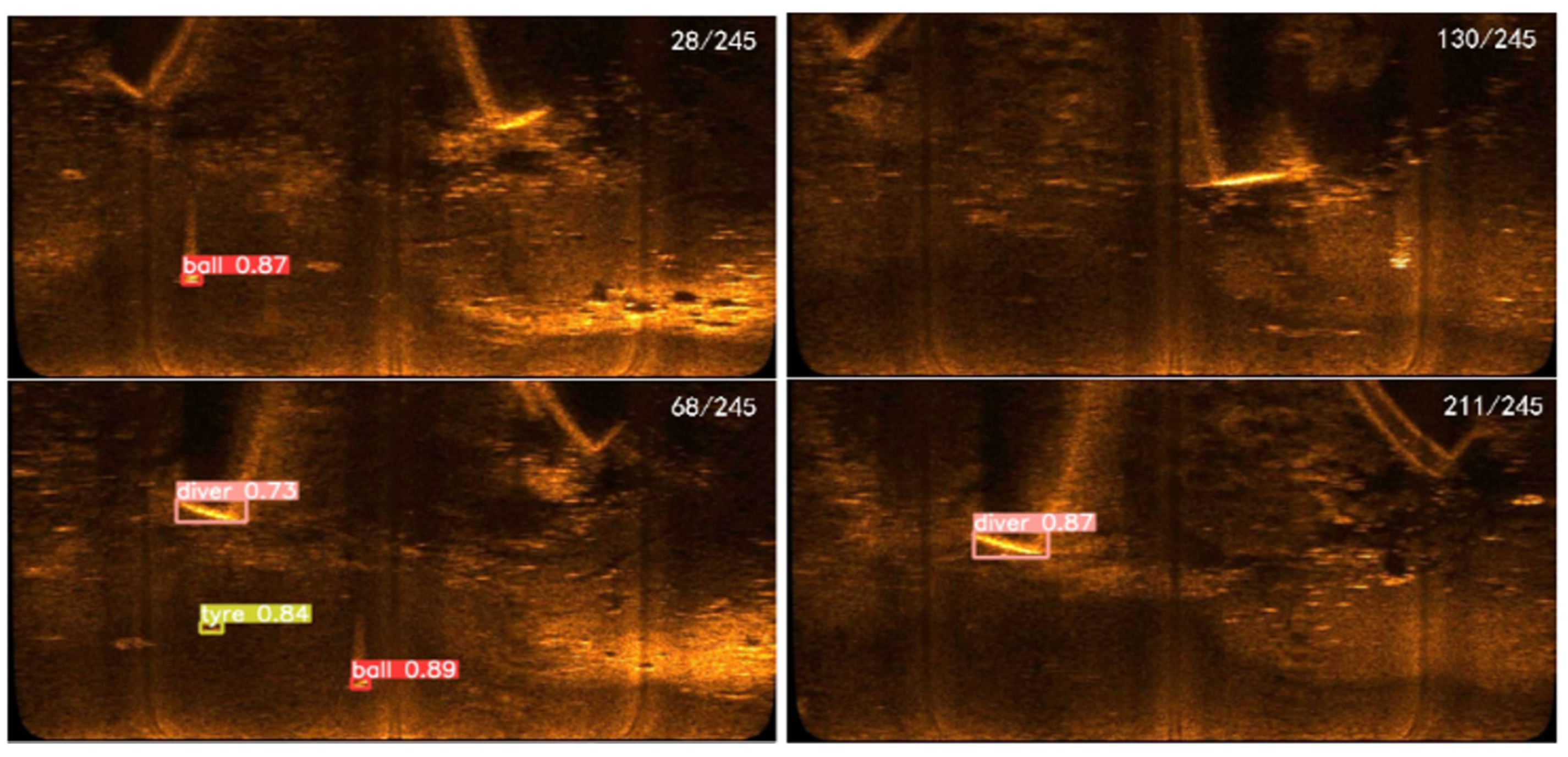
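The two criteria can be sketched in code as follows. This is a minimal illustration under stated assumptions, not the authors' evaluation script: the function name `track_metrics` and the input format (one tracker ID per frame, `None` when no ID is assigned) are hypothetical, and an ID switch is counted by comparing each assigned ID with the most recent assigned ID, including across interrupted frames.

```python
from typing import Optional, Sequence, Tuple


def track_metrics(ids: Sequence[Optional[int]]) -> Tuple[int, float]:
    """Return (id_switches, frag_ratio) for one ground-truth trajectory.

    ids[k] is the tracker ID given to the target in frame k, or None when
    the target has no assigned ID in that frame (a trajectory interruption).
    """
    switches = 0
    last_id: Optional[int] = None
    for cur in ids:
        if cur is None:
            continue  # interrupted frame: counted in frag_ratio, not as a switch
        if last_id is not None and cur != last_id:
            switches += 1  # the ID changed along the same trajectory
        last_id = cur

    missing = sum(1 for cur in ids if cur is None)
    frag_ratio = missing / len(ids) if ids else 0.0
    return switches, frag_ratio
```

For example, the sequence `[1, 1, None, 1, 2, 2, None, None, 3, 3]` contains two ID changes (1 to 2, then 2 to 3) and three interrupted frames out of ten.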

6.3. Underwater Target Tracking Experiment Based on Extension Datasets

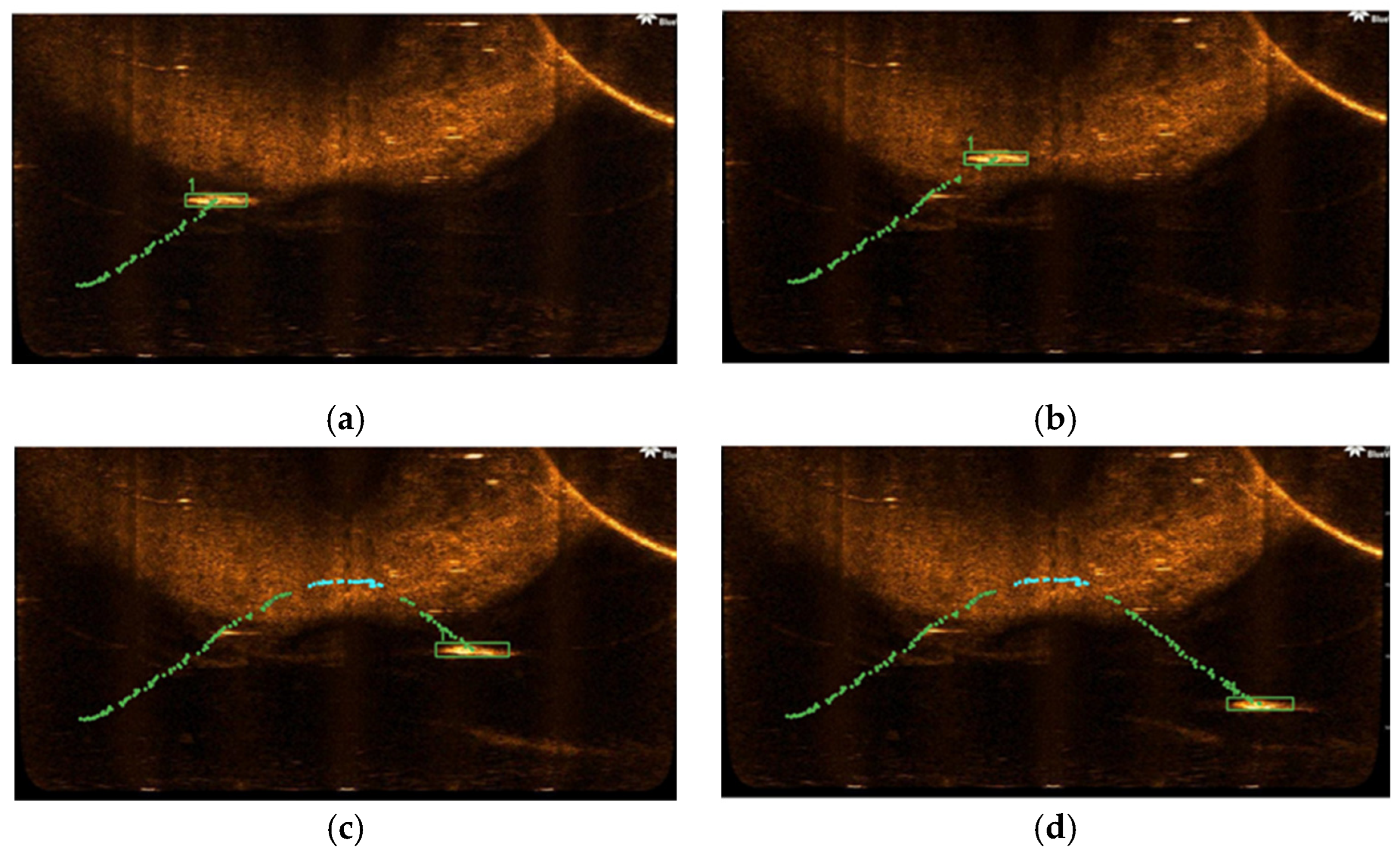

6.3.1. Single-Target Tracking Experiment

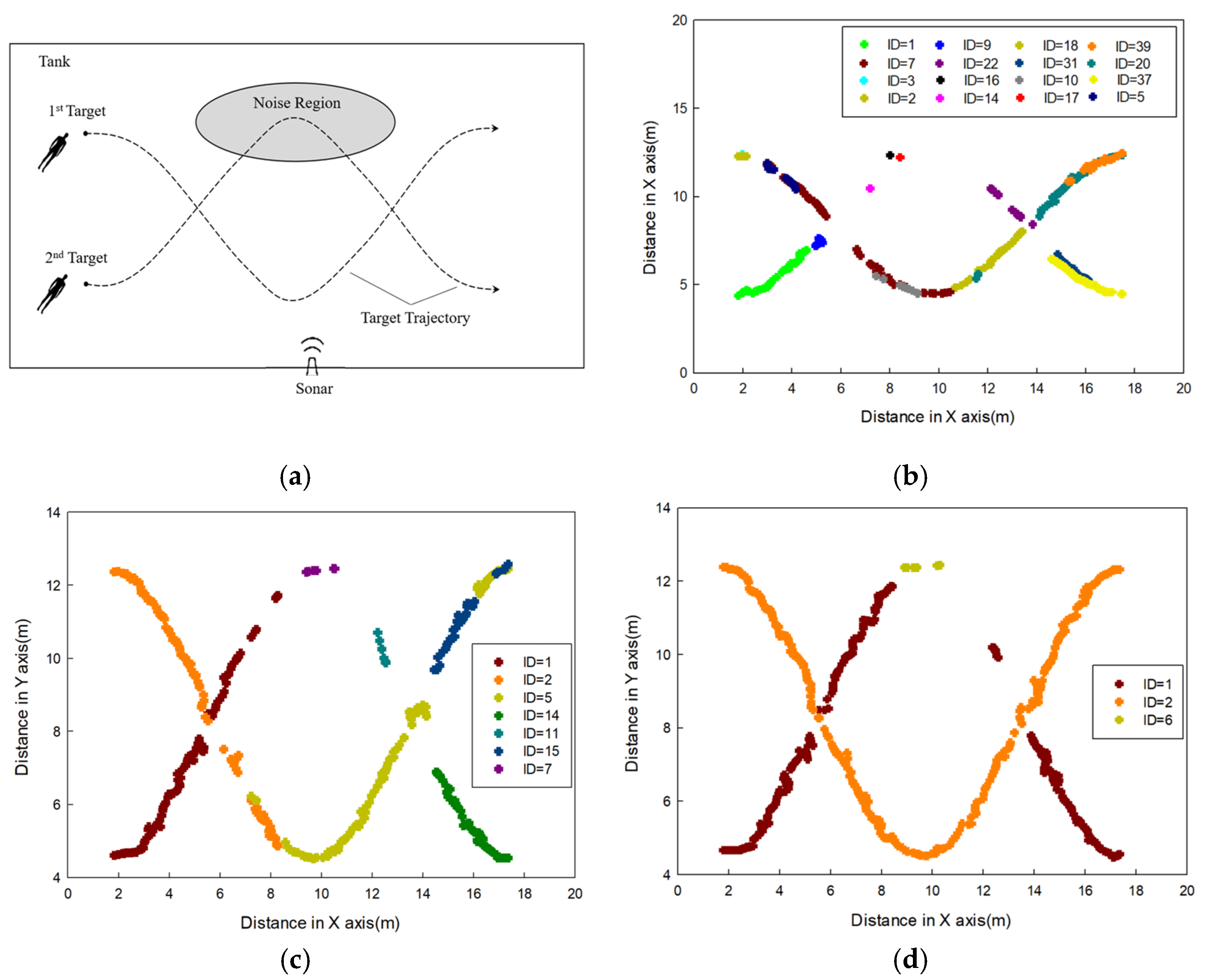

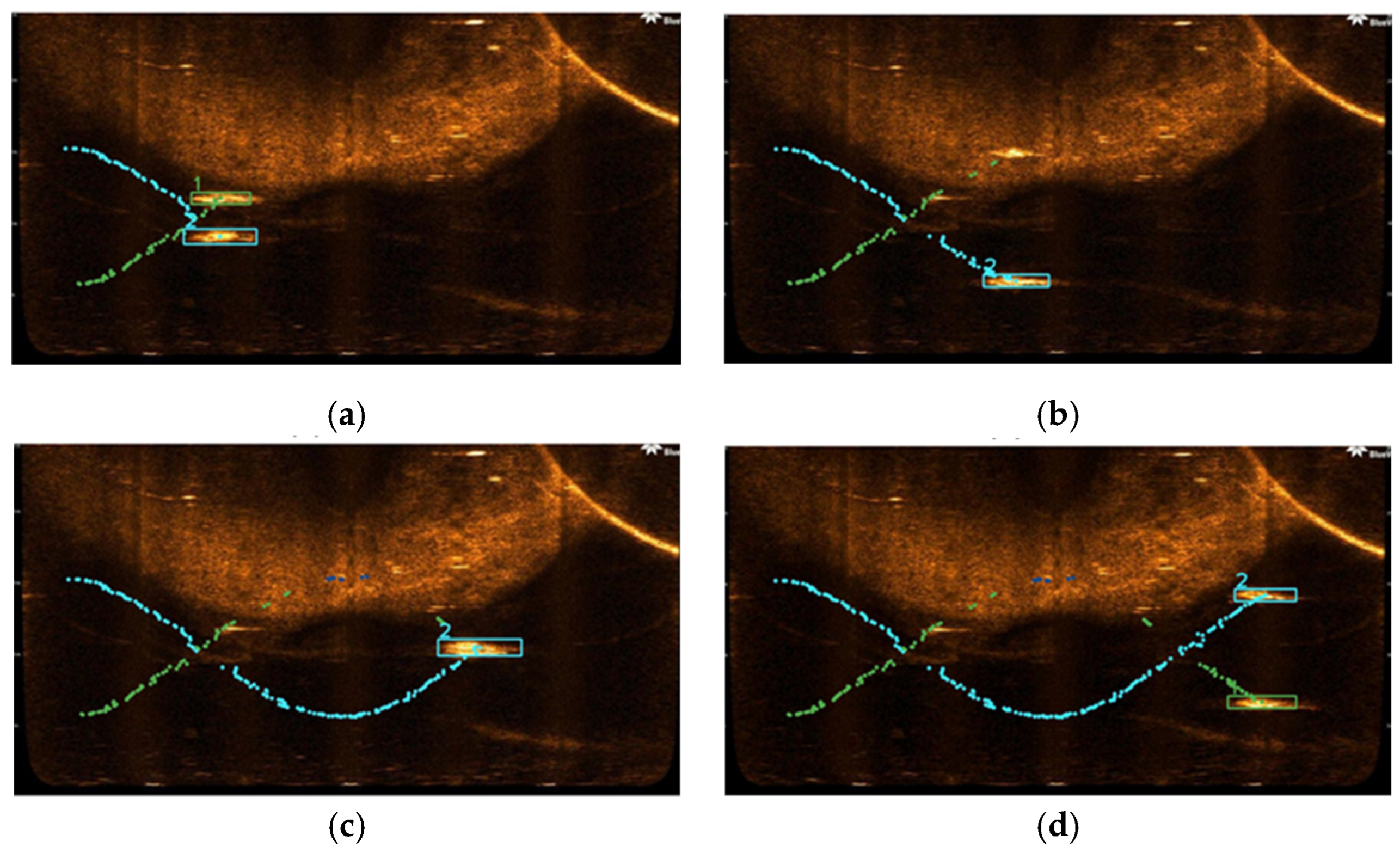

6.3.2. Double-Target Tracking Experiment

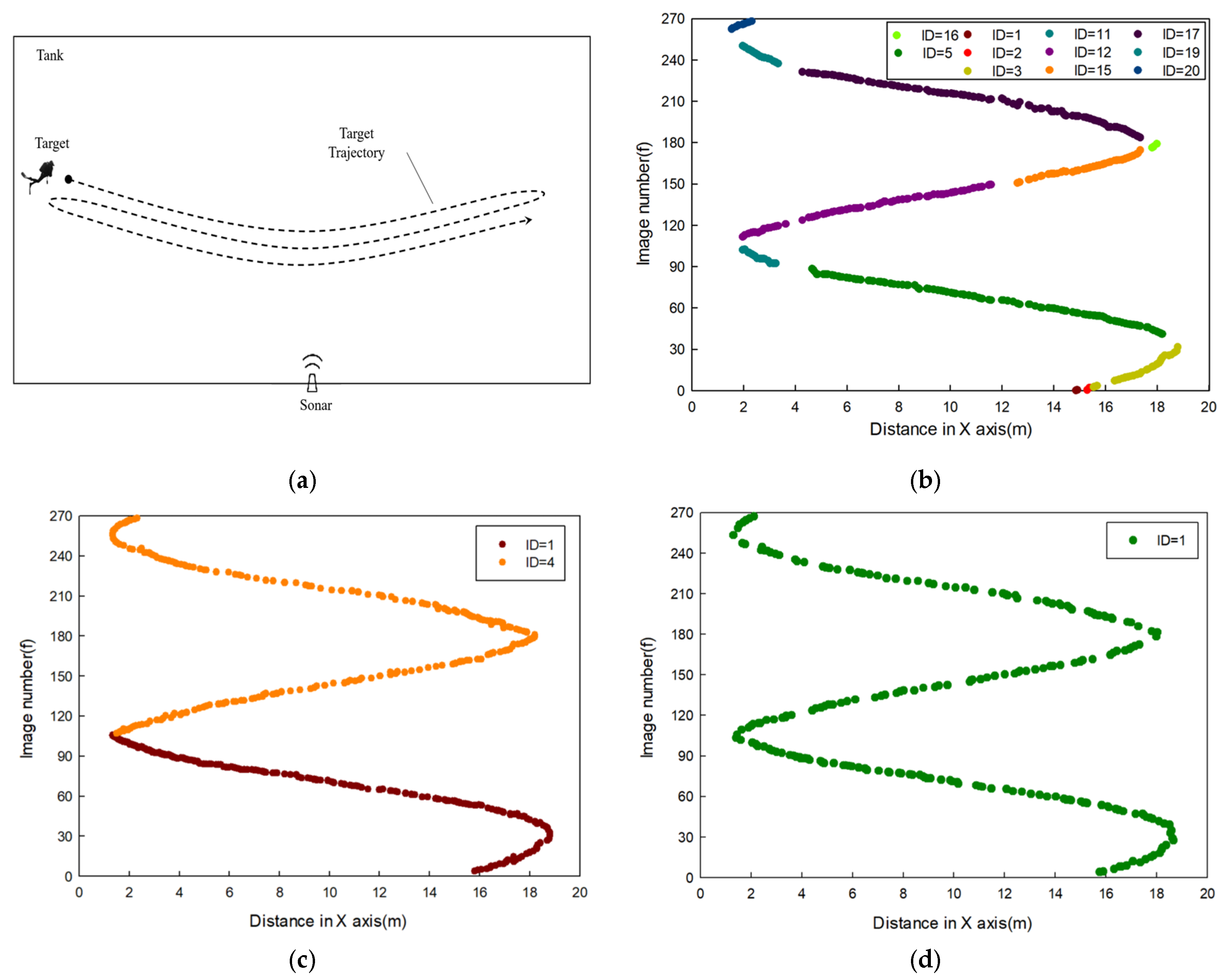

6.3.3. Underwater Target Tracking Trial Based on Pond Test Data

6.4. Underwater Target Tracking Experiment Based on Sea Trial Data

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Operating Frequency | Horizontal Beam Width | Vertical Beam Width | Maximum Range | Range Resolution | Field of View | Weight |
|---|---|---|---|---|---|---|
| 900 kHz | 1° | 12° | 100 m | 1.3 cm | 130° | 2.5 kg in air, 1.2 kg in water |

| Network Architecture | mAP (IoU = 0.5) | Re (IoU = 0.65) | FPS |
|---|---|---|---|
| YOLOv5n (Y5n) | 92.6% | 91.1% | 30.6 |
| YOLOv5s (Y5s) | 93.5% | 93.6% | 29.8 |
| YOLOv5m (Y5m) | 93.3% | 93.1% | 29.8 |
| YOLOv5l (Y5l) | 92.3% | 93.9% | 29.4 |
| YOLOv5x (Y5x) | 91.9% | 93.9% | 20.1 |
| YOLOv5-STr1 (Y5S1) | 91.4% | 89.4% | 27.7 |
| YOLOv5-STr2 (Y5S2) | 93.3% | 93.7% | 28.2 |
| YOLOv5-STr3 (Y5S3) | 94.4% | 93.6% | 28.0 |
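The detection metrics above are reported at fixed IoU (intersection over union) thresholds. As a reminder of what that threshold measures, here is a minimal sketch of the standard IoU computation for two axis-aligned boxes; the function name `iou` and the `(x1, y1, x2, y2)` box format are choices made for this illustration, not taken from the authors' code.

```python
from typing import Tuple

# (x1, y1, x2, y2) in pixel coordinates
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Width and height of the overlap rectangle (zero if the boxes are disjoint)
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0
```

A predicted box is usually counted as a correct detection when its IoU with a ground-truth box of the same class reaches the stated threshold, for example 0.5 for the mAP column.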

| Data Origination | Trajectory Classification | Number of Sequences | Total Frames |
|---|---|---|---|
| Data Expansion | One Object | 20 | 4000 |
| Data Expansion | Two Objects | 10 | 2000 |
| Tank Experiment | One Object | 16 | 1710 |
| Lake Experiment | Two Objects | 1 | 245 |

| Parameter | Unit | Value |
|---|---|---|
| Distance between sonar and the seabed | m | 9 |
| Inclination angle of sonar | ° | 10 |
| Field of view | ° | 130 |
| Range resolution | m | 0.02 |
| Transmission frequency | kHz | 900 |

| No. | Real Data | Extension Data | mAP (IoU = 0.5) | Re (IoU = 0.5) |
|---|---|---|---|---|
| 1 | ✓ | - | 69.88% | 46.59% |
| 2 | ✓ | ✓ | 87.46% | 52.73% |

| Category | Item | Configuration |
|---|---|---|
| Hardware | GPU | NVIDIA GeForce RTX2080 Ti (Harbin Ship Electronics Market, Harbin, China) |
| Hardware | CPU | Intel(R) Core(TM) i9-9900K (Harbin Ship Electronics Market, Harbin, China) |
| Parameters | Operating System | Windows 11 |
| Parameters | CUDA | 12.2.79 |
| Parameters | cuDNN | 7.6.5 |
| Parameters | Python | 3.8.5 |
| Parameters | PyTorch | 1.10.0 |

| Tracking Framework | Frag Ratio | ID Switch | FPS |
|---|---|---|---|
| SORT | 22.69% | 83 | 13.68 |
| DeepSORT | 9.50% | 31 | 7.35 |
| ExDeepSORT | 9.09% | 12 | 6.98 |

| Target | SORT | DeepSORT | ExDeepSORT |
|---|---|---|---|
| Sphere | 15.8% | 8.9% | 8.1% |
| Diver | 9.5% | 5.3% | 4.9% |
| Prop person | 9.5% | 3.3% | 3.4% |
| Single Cylinder | 43.3% | 12.4% | 11.8% |
| Tire | 22.3% | 13.5% | 13.1% |

| Target | SORT | DeepSORT | ExDeepSORT |
|---|---|---|---|
| Sphere | 5 | 1 | 1 |
| Diver | 3 | 1 | 0 |
| Prop person | 6 | 4 | 2 |
| Single Cylinder | 36 | 21 | 8 |
| Tire | 36 | 9 | 1 |

| Tracking Framework | Frag Ratio | ID Switch |
|---|---|---|
| SORT | 30.44% | 109 |
| DeepSORT | 16.41% | 59 |
| ExDeepSORT | 14.97% | 28 |

| Target | SORT | DeepSORT | ExDeepSORT |
|---|---|---|---|
| Sphere | 36.4% | 22.6% | 19.1% |
| Diver | 21.8% | 13.5% | 11.5% |
| Dummy model | 27.4% | 18.3% | 15.7% |
| Single cylinder | 59.6% | 29.5% | 28.5% |
| Tire | 34.6% | 23.8% | 23.1% |

| Target | SORT | DeepSORT | ExDeepSORT |
|---|---|---|---|
| Sphere | 30 | 21 | 7 |
| Diver | 18 | 13 | 4 |
| Dummy model | 37 | 48 | 27 |
| Single cylinder | 68 | 47 | 33 |
| Tire | 77 | 27 | 26 |

| Tracking Framework | Frag Ratio | ID Switch |
|---|---|---|
| SORT | 37.21% | 69 |
| DeepSORT | 17.16% | 33 |
| ExDeepSORT | 10.30% | 19 |

| Target | SORT | DeepSORT | ExDeepSORT |
|---|---|---|---|
| Sphere | 9.2% | 4.6% | 3.9% |
| Diver | 20.8% | 4.0% | 0.4% |
| Dummy model | 23.3% | 7.4% | 6.1% |
| Single cylinder | 61.9% | 36.4% | 20.1% |
| Tire | 32.5% | 16.8% | 12.1% |

| Target | SORT | DeepSORT | ExDeepSORT |
|---|---|---|---|
| Sphere | 7 | 2 | 2 |
| Diver | 16 | 10 | 0 |
| Dummy model | 19 | 1 | 3 |
| Single cylinder | 16 | 13 | 10 |
| Tire | 13 | 8 | 4 |

| Tracking Framework | Frag Ratio | ID Switch |
|---|---|---|
| SORT | 33.67% | 12 |
| DeepSORT | 21.22% | 8 |
| ExDeepSORT | 20.00% | 2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeng, W.; Li, R.; Zhou, H.; Zhang, T. Underwater Target Tracking Method Based on Forward-Looking Sonar Data. J. Mar. Sci. Eng. 2025, 13, 430. https://doi.org/10.3390/jmse13030430
