1. Introduction

Vessel collision incidents have long been a major challenge in maritime safety, with catastrophic consequences that extend far beyond direct economic losses (as reported by the International Maritime Organization, a single major collision can result in average losses exceeding USD 30 million). More critically, these incidents pose severe threats to human life (with approximately 2000 fatalities annually worldwide due to maritime accidents) and to the marine environment (collisions account for about 12% of marine oil pollution). Studies [1,2,3] have revealed that approximately 75% to 85% of maritime accidents can be attributed to human error, including misinterpretation of the Convention on the International Regulations for Preventing Collisions at Sea (COLREGs), delayed situational awareness under complex conditions, and inappropriate emergency maneuvers. These realities have spurred an urgent demand for intelligent ship collision avoidance (SCA) systems capable of autonomously generating optimal, regulation-compliant decisions in dynamic and uncertain environments.

Traditional SCA approaches primarily follow three technical paradigms: (1) Geometric algorithms. Methods such as the Velocity Obstacle (VO) approach [4] and its variants (e.g., Reciprocal VO (RVO) [5] and Hybrid RVO (HRVO) [6]) forecast collision risks by constructing feasible velocity regions. These methods are computationally efficient but incapable of modeling nonlinear vessel dynamics. (2) Rule-based expert systems. These systems, exemplified by fuzzy logic [7], operate based on predefined rule bases for decision-making. However, their generalizability is constrained by the completeness of the rule set, limiting their applicability in diverse scenarios. (3) Optimal control theory. Methods in this class, notably Model Predictive Control (MPC) [8], generate collision-free trajectories by solving constrained optimal control problems online. Yet MPC performance depends heavily on precise hydrodynamic modeling (such as added mass and damping coefficients), and the method lacks robustness to abrupt disturbances (e.g., sudden gusts or current shifts).
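
To make the geometric paradigm concrete, the following minimal sketch tests whether the own vessel's current velocity lies inside the velocity obstacle induced by a single target vessel. It assumes planar motion, constant velocities, and a circular safety region; the function name `in_velocity_obstacle` and the `radius` parameter are illustrative choices, not notation taken from [4].

```python
import math


def in_velocity_obstacle(p_own, v_own, p_tgt, v_tgt, radius):
    """Return True if keeping v_own leads to a violation of the safety circle.

    Positions and velocities are (x, y) tuples; radius is the combined
    safety radius of both vessels (an assumed, simplified ship domain).
    """
    # Relative position of the target and velocity of own ship relative to it.
    dx, dy = p_tgt[0] - p_own[0], p_tgt[1] - p_own[1]
    rvx, rvy = v_own[0] - v_tgt[0], v_own[1] - v_tgt[1]

    dist = math.hypot(dx, dy)
    if dist <= radius:
        return True  # already inside the safety circle
    rel_speed = math.hypot(rvx, rvy)
    if rel_speed == 0.0:
        return False  # no relative motion, so the range never changes

    # Angle between the relative velocity and the line of sight to the target.
    cos_angle = (dx * rvx + dy * rvy) / (dist * rel_speed)
    angle = math.acos(max(-1.0, min(1.0, cos_angle)))

    # The collision cone has half-angle asin(radius / dist) around the line of sight.
    return angle <= math.asin(radius / dist)
```

The test relies purely on constant-velocity kinematics, which illustrates why such methods cannot represent nonlinear vessel dynamics.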

In recent years, reinforcement learning (RL) [9], as a promising model-free sequential decision-making framework, has been extensively studied to overcome the limitations of traditional SCA methods. Rooted in Markov Decision Process (MDP) [10] theory, RL enables an agent to interact with the environment, observe its state (e.g., own-vessel position, velocity vector, relative bearing of obstacles), take actions (e.g., rudder angle, engine throttle commands), and receive rewards (e.g., collision penalties, safety distance rewards). The agent ultimately learns a policy that maximizes the expected cumulative reward, in which future rewards are weighted by a discount factor. Compared to traditional methods, RL offers three key advantages: (1) Environmental adaptability. End-to-end learning enables direct feature extraction from raw sensor data (AIS, radar point clouds, visual images) without explicit environment modeling. (2) Autonomous decision-making. Policy networks can learn complex, high-dimensional mappings, allowing for sophisticated maneuvers beyond human-defined rules (such as emergent evasive strategies in emergencies). (3) Online optimization. Techniques such as experience replay enable continuous policy improvement, enhancing robustness in uncertain scenarios.
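
As a hedged illustration of this objective, the sketch below computes the discounted return of a single episode, that is, the quantity whose expectation the learned policy maximizes. The reward values and the discount factor `gamma` are illustrative assumptions, not values drawn from the reviewed studies.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards r_0 + gamma * r_1 + gamma^2 * r_2 + ... for one episode."""
    g = 0.0
    # Accumulate backwards so each step applies one more factor of gamma.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Hypothetical episode: two neutral steps followed by a collision penalty.
episode_rewards = [0.0, 0.0, -10.0]
print(discounted_return(episode_rewards, gamma=0.9))
```

A smaller `gamma` makes the agent weigh a distant collision penalty less heavily relative to immediate rewards.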

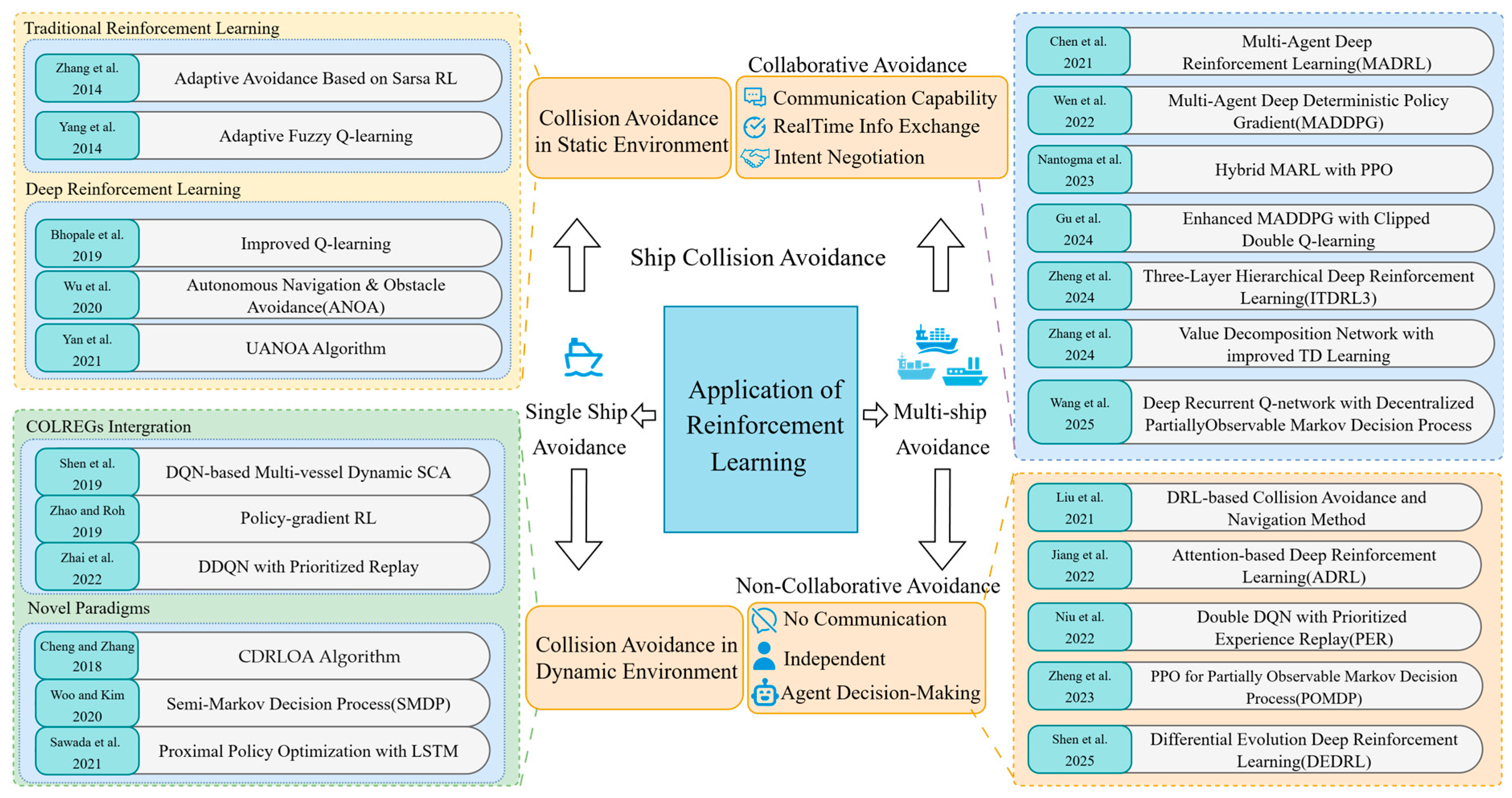

As illustrated in Figure 1, the evolution of RL applications in SCA can be delineated into three distinct stages. (1) The initial stage (beginning around 2014) was characterized by the adoption of classical RL algorithms, such as Q-learning [11], for relatively simple scenarios involving single-vessel navigation and static obstacle avoidance, establishing a foundational methodological framework for subsequent advancements. (2) The second stage (from 2018 onward) marked a transition to more complex and realistic settings, with research efforts increasingly focusing on multi-vessel dynamic interaction scenarios. During this period, Deep Reinforcement Learning (DRL) methods, including Deep Q-Network (DQN) [12], Deep Deterministic Policy Gradient (DDPG) [13], and Proximal Policy Optimization (PPO) [14], were extensively explored to address the higher dimensionality and uncertainty inherent in dynamic maritime environments. Studies in this stage [15] also placed a growing emphasis on modeling vessel-to-vessel interactions and ensuring compliance with COLREGs to enhance the generalizability and robustness of RL algorithms. (3) The latest stage (emerging since 2021) has been defined by a paradigm shift toward large-scale, multi-agent, and fully autonomous maritime scenarios. In this stage, driven by significant advances in computing power and the Multi-Agent Reinforcement Learning (MARL) framework [16], many studies [17] have begun to focus on achieving multi-vessel collaborative SCA, developing integrated perception and decision-making architectures, and achieving end-to-end autonomous navigation, opening up new avenues for improving decision-making capabilities and safety performance in highly complex and uncertain marine environments.

Despite the growing body of research in this field, a systematic literature review and bibliometric analysis specifically focused on RL-based SCA remains conspicuously lacking, as evidenced by comprehensive searches in the Web of Science database. This notable gap highlights the pressing need for a rigorous and structured synthesis of the existing literature to elucidate current trends, critically assess recent advancements, and inform future research directions. In response, this review aims to provide a comprehensive and systematic overview of the state-of-the-art in RL-driven SCA, examining prevailing trends, unresolved challenges, and emerging opportunities, and ultimately offering valuable insights to guide future innovation in this rapidly evolving domain.

2. Methods of the Current Study

While foundational reviews on SCA exist, a significant gap remains for a systematic analysis encompassing the rapid advancements in RL since 2018, particularly the pivotal shift toward MARL paradigms post-2021. Furthermore, previous syntheses have not explicitly deconstructed the problem according to the fundamental dichotomy of SCA scenarios: single-agent versus multi-agent, and collaborative versus non-collaborative environments. Therefore, the purpose of this study is twofold: first, to provide an up-to-date review of RL-based SCA from 2018 to the present, capturing the latest developments in deep RL and MARL; and second, to introduce a novel analytical framework that systematically categorizes and evaluates methods according to this scenario splitting, thereby offering a more structured and granular understanding of the field’s evolution and current state of the art.

2.1. Search Strategy

To ensure comprehensive coverage, a search was performed across three major interdisciplinary databases: Web of Science (WOS), IEEE Xplore, and Scopus. The search query combined terms related to the technology (“reinforcement learning,” “deep reinforcement learning,” “multi-agent reinforcement learning”) with terms related to the application (“ship collision avoidance,” “maritime autonomous navigation,” “unmanned surface vehicle,” “COLREGs”).
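
The exact Boolean syntax used in each database is not reported here, so the following is only a sketch of one plausible way to compose the query: synonyms are joined with OR within each group, and the two groups are joined with AND. The operator layout and the helper name `build_query` are assumptions, and each database applies its own field tags on top of such a string.

```python
# Search terms as listed in the text.
TECHNOLOGY_TERMS = [
    "reinforcement learning",
    "deep reinforcement learning",
    "multi-agent reinforcement learning",
]
APPLICATION_TERMS = [
    "ship collision avoidance",
    "maritime autonomous navigation",
    "unmanned surface vehicle",
    "COLREGs",
]


def build_query(technology_terms, application_terms):
    """Join each group with OR and combine both groups with AND (assumed layout)."""

    def group(terms):
        return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"

    return f"{group(technology_terms)} AND {group(application_terms)}"


print(build_query(TECHNOLOGY_TERMS, APPLICATION_TERMS))
```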

2.2. Inclusion and Exclusion Criteria

This study established specific criteria for article selection to maintain a focus on recent and technically substantive contributions:

Inclusion Criteria:

- Publication date between 31 October 2014 and 17 March 2025.
- Primary focus on RL or Deep RL algorithms for SCA.
- Empirical validation through simulation or real-world experiments.
- Peer-reviewed journal articles or conference proceedings published in English.

Exclusion Criteria:

- Studies published prior to 2018 or focusing solely on traditional non-RL methods.
- Applications in non-maritime domains (e.g., aerial or ground robots).
- Fully theoretical papers without algorithmic implementation or validation.

2.3. Selection Process

The literature selection followed a structured multi-stage process. Initial database searches yielded 346 records. After removing 189 articles that did not focus on RL for SCA, 157 articles were screened for relevance. The remaining full-text articles were assessed for eligibility based on the predefined criteria. This rigorous evaluation led to the exclusion of 132 articles, with common reasons being non-maritime application scope or lack of empirical RL focus. Ultimately, 25 studies met all inclusion criteria and formed the core corpus for this systematic review. The screening was conducted independently by two reviewers, with disagreements resolved through consensus or consultation with a third reviewer. Figure 2 visually presents the overall structure and key points of the review.

3. Single-Agent Collision Avoidance

As the foundation of autonomous maritime navigation, single-agent SCA primarily focuses on enabling an individual vessel to independently perceive its surroundings and safely maneuver through complex environments. The main objective is to dynamically adjust the heading and speed of the ego vessel to minimize the risk of collisions with both static and dynamic obstacles, including other vessels. Early research in this area leveraged traditional reinforcement learning algorithms to address the limitations of rule-based and optimization-based methods, laying an essential technological groundwork for subsequent developments. With the advent of deep reinforcement learning, the field has seen significant progress in handling more realistic scenarios involving moving obstacles and regulatory compliance. This section provides a comprehensive review of single-agent SCA methods, covering both static and dynamic environments, and analyzes the strengths and limitations of representative approaches.

3.1. Collision Avoidance in Static Environment

Early research on single-agent SCA centered on applying traditional RL algorithms to vessel navigation in environments with only static obstacles, aiming to overcome the limitations of conventional methods in addressing complex maritime environments with multiple influencing factors. These foundational studies established an important technological basis for subsequent developments in the field.

For example, Zhang et al. [18] addressed the challenge of static SCA under environmental disturbances, such as wind and currents, by proposing an Adaptive obstacle Avoidance algorithm Based on Sarsa on-policy RL (AABSRL) for Unmanned Surface Vehicles (USVs) in complex static environments. Specifically, AABSRL includes a Local Obstacle Avoidance Module, which uses an improved heading window algorithm to generate feasible headings and speeds while excluding directions that intersect with static obstacles. Concurrently, an adaptive learning module leverages the Sarsa RL algorithm [19] to adjust for heading deviations caused by environmental disturbances. Field trials demonstrated that the algorithm achieved a 42.3% reduction in average course deviation compared to traditional methods, facilitating safe navigation at speeds of up to 20 knots in clustered static obstacle fields. However, its dependence on complete prior knowledge of obstacles and the necessity for pre-training in disturbance compensation limit its adaptability, particularly in partially unknown or highly cluttered environments. To overcome the necessity for pre-training of disturbance compensation, Yang et al. [20] proposed a single-agent collision avoidance method based on fuzzy Q-learning [21]. This method simplifies environmental modeling by fuzzifying wind states, systematically traverses discrete compensation angles using chaotic logistic mapping during the exploration phase, and introduces “similar states” to accelerate Q-table updates, thereby improving learning efficiency and solving the problem of wind-induced deviation in static obstacle avoidance. Simulations and field tests revealed a 37.5% reduction in heading error compared to vanilla methods. Nonetheless, this approach is constrained by the discrete nature of compensation actions, assumptions regarding wind continuity, and the exclusion of wave-induced disturbances, which can hinder performance in environments characterized by abrupt changes or high-accuracy requirements.
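
The two learning ingredients mentioned in this paragraph can be sketched as follows. The first function is the standard tabular Sarsa update, which bootstraps from the action actually taken in the next state (on-policy). The second is the logistic map, shown under the assumption that it is the chaotic mapping used to sweep the discrete compensation angles. State and action labels, the step size `alpha`, the discount `gamma`, and the map parameter `mu` are all hypothetical; neither function reproduces the implementations in [18] or [20].

```python
from collections import defaultdict


def sarsa_update(q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.9):
    """One on-policy Sarsa step: Q(s,a) += alpha * (r + gamma*Q(s',a') - Q(s,a))."""
    td_target = r + gamma * q[(s_next, a_next)]
    q[(s, a)] += alpha * (td_target - q[(s, a)])
    return q[(s, a)]


def logistic_map(x, mu=4.0):
    """One iteration of the logistic map; for mu = 4 the sequence on (0, 1) is chaotic."""
    return mu * x * (1.0 - x)


def chaotic_action_index(x, n_actions):
    """Map a chaotic value in [0, 1] to one of n_actions discrete compensation angles."""
    return min(int(x * n_actions), n_actions - 1)


# Hypothetical usage: heading-error states and compensation-angle actions.
q_table = defaultdict(float)
sarsa_update(q_table, "err_small", "comp_+2deg", r=1.0,
             s_next="err_zero", a_next="comp_0deg")
```

Replacing `q[(s_next, a_next)]` with the maximum over next actions would turn the update into Q-learning, which is the off-policy counterpart used in [20].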

More recently, to enhance the applicability of SCA models in partially unknown or highly cluttered environments, Bhopale et al. [22] proposed an improved Q-learning algorithm for SCA in unknown environments with static obstacles. Their method introduces a “danger zone” around detected obstacles, enforcing a pure exploitation strategy within these zones and applying Q-value penalties to discourage unsafe actions. Additionally, a neural network function approximator is used to manage continuous state spaces. However, this method requires a large number of iterations for the algorithm and neural network to converge, and retraining is required when the obstacle layout or environmental conditions change, resulting in high deployment costs and inefficiency. Wu et al. [23] addressed real-time static obstacle avoidance for USVs using the Autonomous Navigation and Obstacle Avoidance (ANOA) method based on a Dueling DQN. ANOA defines a custom state space using environmental perception images and a discrete action space, while a multi-objective reward function rewards reaching the target and penalizes collisions and boundary violations. Experiments revealed improvements in exploration efficiency and convergence over conventional DQN, but the method’s applicability is limited to simple grid-based obstacles, and its performance degrades under partial observability or real-time requirements. To further address the challenge of partial observability, Yan et al. [24] proposed the Autonomous Navigation and Obstacle Avoidance in USVs (UANOA) algorithm, which employs a double Q-network for end-to-end static obstacle avoidance in USVs. Raw sensor data (position and LiDAR) are processed to output discrete rudder commands. The reward function integrates components for SCA, target proximity, and penalizing ineffective navigation. While effective in simulation, the method’s reliance on fixed throttle settings constrains maneuverability and adaptability in complex environments.
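
For readers unfamiliar with the dueling architecture mentioned above, the sketch below shows its standard aggregation step, in which a scalar state value and per-action advantages are combined into Q-values. It is a generic illustration with made-up numbers, not the network used in [23]; in practice both inputs are produced by two heads of a neural network.

```python
def dueling_q_values(state_value, advantages):
    """Combine V(s) and A(s, a) as Q(s, a) = V(s) + A(s, a) - mean_a A(s, a)."""
    mean_advantage = sum(advantages) / len(advantages)
    # Subtracting the mean makes the split between V and A identifiable.
    return [state_value + adv - mean_advantage for adv in advantages]


# Hypothetical outputs for three discrete rudder commands (port, ahead, starboard).
q_values = dueling_q_values(state_value=2.0, advantages=[1.0, 0.0, -1.0])
best_action = max(range(len(q_values)), key=q_values.__getitem__)
```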

Although the early attempts of RL methods for SCA in static environments have brought significant performance improvements, these approaches remain limited by their dependence on complete or predefined knowledge of obstacle distributions, reliance on discrete compensation strategies, and simplified environmental assumptions. These limitations notably restrict their adaptability to complex, partially known, and rapidly changing real-world ocean scenarios.

3.2. Collision Avoidance in Dynamic Environment

With the advent of DRL architectures, research on SCA has progressively focused on addressing the demands of real-world navigation, particularly dynamic SCA in complex maritime environments with moving obstacles, other vessels, and regulatory requirements.

For instance, to overcome the limitations of traditional static methods, Cheng and Zhang [25] proposed a Concise DRL Obstacle Avoidance (CDRLOA) algorithm for underactuated unmanned marine vessels (UMVs) operating under unknown disturbances. In CDRLOA, obstacle avoidance, target approach, speed adjustment, and attitude correction are integrated into a unified reward function, with control strategies learned directly from environmental interactions using a DQN. While effective in simulation, CDRLOA is limited to single-agent scenarios, does not account for COLREGs, and employs a fixed detection radius, which restricts its applicability in real-world maritime traffic. To better align SCA strategies with COLREGs in multi-vessel encounter scenarios, Shen et al. [26] integrated COLREGs into the reward function of their DQN-based multi-vessel dynamic SCA framework. By also modeling multi-vessel dynamic interactions through concepts such as the vessel bumper, vessel domain, and predicted area of danger, their framework demonstrated significant performance improvements in complex maritime environments with a variable number of dynamic targets. However, the need for manual parameter adjustment and the discrete action space limit its adaptability in highly congested waters such as inland waterways. Focusing on dynamic SCA in high-density multi-vessel environments, Zhao and Roh [27] proposed a policy-gradient RL approach that classifies dynamic targets into encounter regions as defined by COLREGs. By considering only the nearest vessel in each region, the input space remains consistent regardless of the total number of obstacles, which enables simultaneous path following and SCA in multi-vessel scenarios. However, it assumes homogeneous vessel characteristics and does not account for communication delays. Zhai et al. [28] introduced an intelligent SCA algorithm based on Double DQN with prioritized experience replay, explicitly incorporating COLREGs and expert knowledge into the reward function. As summarized in Table 1, their composite reward employs a condition-triggered, multi-dimensional evaluation scheme that closely links the legitimacy and effectiveness of actions to regulatory compliance. It consists mainly of basic rewards (e.g., +10 for successful avoidance, −10 for collisions, −1 for potential danger, and 0 for neutral states) and additional weighted scores across five assessment dimensions. This finely tuned reward mechanism enables the agent to make decisions more closely aligned with human-like, rule-compliant SCA. Nevertheless, the discrete nature of the action space and the assumption of constant speed continue to constrain the algorithm’s adaptability in complex scenarios involving variable speeds.
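
The structure of such a composite reward can be sketched as a base term plus a weighted sum of assessment scores. The four base values below are those quoted in the text; the outcome labels, the five example scores, and their weights are hypothetical placeholders, since the individual assessment dimensions are not detailed here.

```python
# Base rewards quoted in the text; the dictionary keys are illustrative labels.
BASE_REWARD = {
    "avoided": 10.0,    # successful avoidance
    "collision": -10.0,
    "danger": -1.0,     # potential danger
    "neutral": 0.0,
}


def composite_reward(outcome, scores=None, weights=None):
    """Base reward for the triggered condition plus weighted assessment scores."""
    reward = BASE_REWARD[outcome]
    if scores is not None and weights is not None:
        if len(scores) != len(weights):
            raise ValueError("scores and weights must have the same length")
        reward += sum(w * s for w, s in zip(weights, scores))
    return reward


# Hypothetical scores on five assessment dimensions with assumed weights.
r = composite_reward("avoided",
                     scores=[1.0, 0.0, 0.0, 0.0, 0.0],
                     weights=[0.5, 0.1, 0.1, 0.1, 0.1])
```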

More recently, to overcome the limitations of previous methods that relied heavily on idealized assumptions and thus lacked applicability in real-world scenarios, several studies have begun exploring novel paradigms that combine deep learning with the DRL framework. Woo and Kim [29] proposed a method based on visual state representation using Convolutional Neural Networks (CNN) and a semi-Markov decision process (SMDP). Unlike earlier approaches that relied on kinematic parameters as input, they designed a three-layer grid map to encode the target route, dynamic obstacles, and static obstacles, leveraging the visual feature extraction capabilities of CNN to better analyze complex and ambiguous situations commonly encountered at sea. This approach addresses the shortcomings of conventional DRL frameworks under non-ideal conditions to a certain extent. However, its performance is constrained by the fixed grid resolution and relies on the accuracy of visual detection, which may limit its effectiveness in low-visibility conditions such as fog or heavy rain. Additionally, to address the limitations of existing DRL methods that rely on discrete action spaces, Sawada et al. [30] proposed a continuous-action DRL framework based on PPO and Long Short-Term Memory (LSTM) networks, referred to as Inside OZT. By expanding the obstacle risk zones and employing LSTM units to process sequential data, Inside OZT can effectively capture information on near-field vessels and enhance SCA safety margins. However, Inside OZT incurs high computational complexity and depends on fixed risk zone parameters. In addition, the introduction of a continuous action space results in notable instability in heading control, which may lead to unpredictable risks.
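
A three-layer grid state of the kind described for [29] can be sketched as a stack of binary occupancy grids, one each for the target route, dynamic obstacles, and static obstacles. The grid size, the cell coordinates, and the function name `build_grid_state` are assumptions made for illustration; the original work may encode the layers differently.

```python
def build_grid_state(size, route_cells, dynamic_cells, static_cells):
    """Return a 3 x size x size nested list with 1.0 marking occupied cells.

    Layer 0: target route, layer 1: dynamic obstacles, layer 2: static obstacles.
    Cells are (row, col) pairs; cells outside the grid are ignored.
    """
    layers = [[[0.0] * size for _ in range(size)] for _ in range(3)]
    for layer, cells in enumerate((route_cells, dynamic_cells, static_cells)):
        for row, col in cells:
            if 0 <= row < size and 0 <= col < size:
                layers[layer][row][col] = 1.0
    return layers


# Hypothetical 4 x 4 local map centred on the own vessel.
state = build_grid_state(
    size=4,
    route_cells=[(0, 0), (1, 1)],
    dynamic_cells=[(2, 3)],
    static_cells=[(3, 3), (9, 9)],  # (9, 9) lies outside the map and is dropped
)
```

The fixed `size` makes the limitation noted above explicit: any obstacle smaller than one cell, or outside the map, is invisible to the CNN.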

Despite the considerable progress that the DRL framework has brought to dynamic SCA in handling moving obstacles and regulatory constraints, these methods remain constrained by several fundamental limitations. Most existing methods still rely on idealized assumptions such as homogeneous vessel characteristics, fixed action or observation spaces, and perfect perception, which reduce their robustness and generalizability in highly dynamic and uncertain maritime environments. Moreover, the inherent complexity of multi-vessel interactions and the unpredictability of non-ego vessel behaviors in high-traffic scenarios often lead DRL agents to suboptimal decisions or reduced safety margins. These challenges underscore the urgent need for more scalable and adaptive solutions, thereby motivating the exploration of collaborative SCA methods based on multi-agent reinforcement learning paradigms.

4. Multi-Agent Collision Avoidance

With the increasing complexity of maritime traffic and the growing presence of autonomous vessels, single-agent collision avoidance approaches are often insufficient to ensure safety and efficiency in dense or highly interactive scenarios. Consequently, in recent years, research attention has been gradually shifting towards multi-agent SCA paradigms, which explicitly consider the interactions, cooperation, or competition among multiple autonomous vessels. The core objective is to develop scalable and adaptive strategies that account for the collective dynamics and decentralized decision-making inherent in real-world maritime environments. This section reviews recent advancements in multi-agent collision avoidance, focusing on both collaborative and non-collaborative settings and highlighting the unique challenges and opportunities introduced by multi-agent interactions.

4.1. Collision Avoidance in Collaborative Environment

Research on multi-agent collision avoidance in collaborative environments emphasizes the development of strategies that enable multiple vessels to share information, coordinate actions, and jointly optimize navigation decisions. The primary objective is to enhance overall safety and efficiency by leveraging inter-vessel communication and cooperation, thereby addressing the challenges posed by dense traffic, complex interactions, and dynamic maritime conditions. These approaches lay the groundwork for intelligent and scalable maritime navigation systems capable of adaptive and collective decision-making.

As one of the early explorations of MARL for SCA, Chen et al. [31] pioneered a collaborative approach where each vessel was modeled as an independent agent controlled by the DQN algorithm. To enhance practicability, they integrated the Mathematical Model Group (MMG) vessel motion model and introduced cooperation coefficients to distinguish between three types of collaborative relationships, demonstrating effectiveness in COLREGs-compliant scenarios. However, their work was constrained to simple two-vessel encounters, did not consider static obstacles, wind, or current disturbances, and utilized a limited action space without speed adjustments. Furthermore, the training complexity increased sharply when scaling to more than two vessels. To overcome some of these limitations, Wen et al. [32] extended multi-agent cooperation to the joint optimization of dynamic navigation and area assignment for multiple USVs, leveraging a Multi-Agent Deep Deterministic Policy Gradient algorithm. This framework broke the conventional separation of trajectory optimization, obstacle avoidance, and task coordination, enabling integrated multi-objective optimization. However, their study did not deeply embed COLREGs, relied solely on the Gym simulation platform [33] without real-world validation, and exhibited insufficient robustness when facing dynamic obstacles.

Recently, building on this earlier work, some researchers have begun to explore hybrid architectures that combine traditional methods with the MARL framework. Nantogma et al. [34] addressed multi-USV dynamic navigation and target capture in complex environments by proposing a hybrid MARL framework with heuristic guidance. They generated navigation subgoals using expert knowledge and utilized an immune network-based model for subgoal selection, followed by actor-critic PPO for policy learning. While this approach improved the interpretability of group coordination, it assumed independent subgoals and only handled single intruders with regularized escape strategies, limiting its adaptability to more complex interactions. Concurrently, Gu et al. [35] introduced a virtual leader to calibrate USV formation, enhanced Multi-agent DDPG with clipped double Q-learning and target policy smoothing to mitigate overestimation, and incorporated an artificial potential field into the reward function for better SCA awareness. Nevertheless, their work covered only formation-switching scenarios with a limited number of vessels, lacked analysis of communication delays, and required faster SCA responses in dense obstacle environments.
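
The two stabilizing techniques named for [35] can be sketched in their generic form, as popularized by the TD3 algorithm. Target policy smoothing perturbs the target action with clipped Gaussian noise, and clipped double Q-learning bootstraps from the smaller of two critic estimates to curb overestimation. Scalar actions, the noise parameters, and the action bounds are simplifying assumptions; this is not the authors' multi-agent implementation.

```python
import random


def smoothed_target_action(target_action, noise_std=0.2, noise_clip=0.5,
                           low=-1.0, high=1.0, rng=random):
    """Target policy smoothing: add clipped Gaussian noise, then clip to action bounds."""
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    return max(low, min(high, target_action + noise))


def clipped_double_q_target(reward, done, q1_next, q2_next, gamma=0.99):
    """Clipped double Q-learning: bootstrap from the minimum of two target critics."""
    not_done = 0.0 if done else 1.0
    return reward + gamma * not_done * min(q1_next, q2_next)


# Hypothetical critic estimates for the smoothed next action.
y = clipped_double_q_target(reward=1.0, done=False, q1_next=5.0, q2_next=3.0, gamma=0.5)
```

Taking the minimum biases the target downward, which is the intended trade-off: a slightly pessimistic value estimate is preferred over an overestimated one.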

More recently, with the continuous progress of MARL algorithms, many studies have begun to focus on specific pain points such as local minima and partial observability in complex obstacle environments. Zheng et al. [36] presented a three-layer hierarchical deep reinforcement learning method (ITDRL3) to tackle multi-agent collaborative navigation in U-shaped obstacle scenarios. By decoupling target selection, right-turn strategies, and close-range avoidance, ITDRL3 reduced both the action space and training time. However, it was optimized primarily for U-shaped obstacles, exhibited poor adaptability to other complex scenarios, and saw diminished training efficiency with more than five agents. To address the challenge of task complexity and learning efficiency, Zhang et al. [37] decomposed multi-USV planning into task allocation and autonomous SCA using a value decomposition network and improved temporal difference learning. They also introduced hierarchical and regional division mechanisms to limit the exploration space. Yet this method presupposed complete information sharing, did not account for incomplete-information scenarios, and insufficiently modeled dynamic environmental disturbances. To address the inherent partial observability challenges of dynamic environments, Wang et al. [38] combined the Deep Recurrent Q-network (DRQN) with the Decentralized Partially Observable Markov Decision Process (Dec-POMDP). They improved the DRQN update mechanism and adopted a centralized training and decentralized execution architecture, validating their approach in real-world waters and demonstrating superiority over DQN, DDQN, and APF baselines. Nonetheless, computational complexity increased substantially with more than four agents, the maneuverability differences between USVs of various tonnages were neglected, and further refinement of collaborative rules was needed for complex scenarios.
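
The value decomposition idea used in [37] can be illustrated in its simplest additive form: the joint action value is the sum of per-agent values, so each vessel can act greedily on its own values at execution time while training uses the summed value. The per-agent Q-values below are made-up numbers, and the function names are illustrative.

```python
def vdn_joint_q(per_agent_q, joint_action):
    """Additive value decomposition: Q_tot = sum_i Q_i(o_i, a_i)."""
    return sum(q[a] for q, a in zip(per_agent_q, joint_action))


def greedy_joint_action(per_agent_q):
    """Each agent picks its own argmax; under additivity this maximizes Q_tot."""
    return [max(range(len(q)), key=q.__getitem__) for q in per_agent_q]


# Hypothetical Q-values for two USVs with two and three discrete actions.
agent_qs = [[0.0, 1.0], [0.5, 0.25, 0.0]]
joint = greedy_joint_action(agent_qs)
total = vdn_joint_q(agent_qs, joint)
```

This decentralized argmax is what makes the centralized training and decentralized execution scheme practical, although the additive form cannot represent every kind of interaction between vessels.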

Despite significant progress, current collaborative multi-agent collision avoidance methods remain constrained by limited scalability, insufficient robustness to environmental uncertainties, and inadequate handling of partial observability and heterogeneous vessel dynamics. Future research should focus on developing more generalizable and adaptive frameworks that can effectively address these challenges, thereby enabling safe and efficient deployment of autonomous vessels in complex, real-world maritime environments.

4.2. Collision Avoidance in Non-Collaborative Environment

Unlike collaborative SCA, non-collaborative multi-agent collision avoidance focuses on scenarios where vessels navigate independently, often without explicit communication or coordination. The core challenge lies in designing robust strategies to cope with the unpredictable behavior of other agents and the inherent uncertainty in decentralized decision-making. Research in this area aims to improve the safety and adaptability of autonomous vessels operating in competitive, adversarial, or information-constrained maritime environments.

In their early foundational work, Liu et al. [39] pioneered the application of DRL to multi-vessel non-collaborative SCA, constructing simulated environments for both open waters and narrow waterways and integrating vessel motion characteristics to design a hierarchical state space, discrete action space, and scenario-dependent reward function. While their approach demonstrated effectiveness for dynamic SCA in two- and three-vessel scenarios, several limitations were evident: decision confusion arose in multi-vessel encounters due to the lack of prioritized avoidance responsibility, managing curved narrow waterways proved difficult owing to the challenge of balancing boundary and dynamic vessel constraints, and the absence of COLREGs integration led to non-compliant maneuvers. Building on these insights, Jiang et al. [40] addressed the insufficient risk assessment accuracy in multi-vessel environments by introducing an attention-based deep reinforcement learning (ADRL) algorithm. This method decomposed SCA into two modules: a risk assessment module, which simulated the attention distribution of human officers via an 8 × 8 local map to generate real-time attention weights, and a motion planning module, which combined supervised and reinforcement learning to accelerate exploration using historical avoidance data. This approach improved risk differentiation and learning efficiency, but the action space remained limited to heading adjustments without speed control, and the unpredictable behaviors of target vessels were not modeled, thus reducing robustness in dynamic coastal waters. Recognizing the need for enhanced COLREGs integration and scenario specificity, Guan et al. [41] proposed a Generalized Behavior Decision-Making (GBDM) model based on Q-learning. They introduced the Obstacle Zone by Target (OZT) to quantify collision risk, employed a 20 × 8 grid sensor to vectorize environmental data, and developed a navigation situation judgment mechanism to distinguish stand-on from give-way vessels, ensuring that only give-way vessels performed avoidance and rewarding starboard turns to comply with COLREGs. While trained across a wide range of multi-vessel scenarios, this model did not account for vessel maneuverability differences and relied on fixed grid parameters, limiting its applicability in long-range open-water situations.
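
A navigation situation judgment of this kind can be sketched, in heavily simplified form, as a lookup on the relative bearing of the target vessel. The version below is not the mechanism of [41]: it uses bearing only, assumes a risk of collision already exists, and ignores relative course, so it cannot detect the case where the own vessel is the one overtaking. The 112.5° boundary follows the COLREGs definition of overtaking (more than 22.5° abaft the beam), while the 5° head-on half-width is an assumed threshold.

```python
def own_ship_role(relative_bearing_deg, head_on_half_width=5.0):
    """Classify the encounter and own-ship role from the target's relative bearing.

    relative_bearing_deg is measured clockwise from the own vessel's bow.
    Returns (encounter_type, role), where role is "give-way" or "stand-on".
    """
    bearing = relative_bearing_deg % 360.0
    if bearing <= head_on_half_width or bearing >= 360.0 - head_on_half_width:
        return "head-on", "give-way"          # both vessels alter course to starboard
    if bearing < 112.5:
        return "crossing-starboard", "give-way"  # target on own starboard side
    if bearing <= 247.5:
        return "being-overtaken", "stand-on"  # target approaches from the stern sector
    return "crossing-port", "stand-on"        # target on own port side


# Hypothetical target bearing 45 degrees on the starboard bow.
encounter, role = own_ship_role(45.0)
```

In a learning setup, the returned role could gate whether avoidance actions are enabled, and a give-way role could trigger the extra reward for starboard turns.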

Recently, focusing on specialized COLREGs scenarios, Li et al. [42] targeted the risk of a reduced closest point of approach (CPA) in starboard-to-starboard head-on encounters. Leveraging DQN, the study defined state spaces by inter-vessel distance, speeds, and heading, and designed a discrete action space of turning angles, integrating CPA metrics into the reward function. Although this improved the timing and angle of avoidance in specific scenarios, the solution was restricted to two-vessel encounters and failed to consider environmental disturbances, leading to idealized assumptions and limited generalizability. To address algorithmic shortcomings such as Q-value overestimation, Niu et al. [43] proposed an improved approach combining Double DQN and Prioritized Experience Replay (PER). By constructing a multi-agent system with up to 12 vessels and simplifying the state space via a grid-based quantification of danger areas, the model achieved more stable learning and balanced safety and economy through a multi-metric reward function. Nevertheless, the approach still relied on simplified vessel models and PER prioritization based solely on temporal difference error. Addressing the challenge of incomplete sensor information in real maritime environments, Zheng et al. [44] introduced the PPO for Partially Observable Markov Decision Process (POMDP) with guidelines under dense reward. Using high-resolution local images as state input and a dense reward function to accelerate training, combined with a route guidance mechanism to preserve original courses, this method enhanced adaptability in mixed-obstacle, multi-vessel scenarios. However, dependence on visual sensors and risks of local optimality persisted.
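
The CPA metrics referred to above are usually the distance at CPA (DCPA) and the time to CPA (TCPA). The sketch below computes both under the assumption that both vessels keep constant course and speed in a planar frame; the function name and the example coordinates are illustrative and do not reproduce the reward design of [42].

```python
import math


def cpa(p_own, v_own, p_tgt, v_tgt):
    """Return (dcpa, tcpa) for two vessels moving with constant velocity.

    Positions and velocities are (x, y) tuples in consistent units.
    A negative tcpa means the closest point of approach has already passed.
    """
    # Position and velocity of the target relative to the own vessel.
    rx, ry = p_tgt[0] - p_own[0], p_tgt[1] - p_own[1]
    vx, vy = v_tgt[0] - v_own[0], v_tgt[1] - v_own[1]

    rel_speed_sq = vx * vx + vy * vy
    if rel_speed_sq == 0.0:
        # No relative motion: the range stays constant.
        return math.hypot(rx, ry), 0.0

    tcpa = -(rx * vx + ry * vy) / rel_speed_sq
    dcpa = math.hypot(rx + vx * tcpa, ry + vy * tcpa)
    return dcpa, tcpa


# Hypothetical head-on encounter offset by 3 units to starboard.
dcpa, tcpa = cpa(p_own=(0.0, 0.0), v_own=(0.0, 1.0),
                 p_tgt=(3.0, 10.0), v_tgt=(0.0, -1.0))
```

A reward term can then penalize small positive TCPA combined with small DCPA, which is one common way to express collision risk numerically.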

Most recently, in response to the path redundancy and local planning inefficiencies of traditional algorithms, Shen et al. [45] proposed the Differential Evolution Deep Reinforcement Learning (DEDRL) algorithm, integrating Differential Evolution (DE) with DQN within a two-layer optimization framework. Global path planning utilized DQN for collision-free path search and DE for node optimization, while local planning applied a course-punishing reward mechanism with a quaternion vessel domain and COLREGs compliance. Although this approach improved global-local coordination and regulatory adherence, DE-induced computational latency and reliance on global path direction for local turning angles reduced real-time responsiveness in rapidly changing multi-vessel encounters.

Despite notable progress, non-collaborative multi-agent collision avoidance methods remain fundamentally constrained by the lack of explicit communication and coordination, which often leads to conservative or conflicting maneuvers, increased path redundancy, and suboptimal navigation efficiency in dense traffic. Additionally, these methods struggle to accurately predict and respond to the highly dynamic and sometimes adversarial behaviors of other vessels, especially under partial observability and heterogeneous decision-making strategies. Future research should prioritize the development of adaptive algorithms that can better infer intent, dynamically adjust to unpredictable interactions, and robustly balance safety with efficiency in complex, large-scale maritime environments.

5. Challenges and Future Directions

5.1. Limitations and Open Challenges of Present RL Solutions

To date, reinforcement learning–based SCA has achieved encouraging progress in both single-agent and multi-agent frameworks. Nevertheless, existing approaches remain fundamentally limited in scalability, adaptability, and robustness when facing the complexities of real-world maritime environments. Their performance still depends heavily on simplified assumptions, idealized sensing conditions, and static interaction models, which restricts their practical deployment in dynamic, uncertain, and heterogeneous multi-vessel scenarios. The main limitations and open challenges can be summarized as follows:

- Scalability and Generalization Gap. Single-agent RL methods achieve good performance in simplified settings but fail to generalize to dynamic, large-scale maritime environments. Their reliance on ideal assumptions, such as perfect perception or homogeneous vessel models, limits scalability and adaptability across diverse operational scenarios.

- Real-Time Decision Constraints. Because sensing data are noisy and heterogeneous and DRL and MARL are computationally expensive, real-time safety-critical decision-making at sea is difficult to achieve.

- Insufficient Environmental Adaptation. Most RL approaches overlook real-world disturbances such as wind, current, and sensor noise, while regulatory rules are often simplified into static rewards. Such abstraction reduces robustness and interpretability in complex, uncertain maritime conditions.

- Deficient Collaboration in MARL. MARL enables distributed cooperation, but most methods are designed under unrealistic communication conditions. Without reasoning ability, vessel agents cannot infer the intentions of non-collaborative vessels. These weaknesses frequently result in conservative or conflicting maneuvers in real-world dense traffic scenarios.

Taken together, these limitations suggest that existing RL-based approaches are reaching their capacity limits in handling the cognitive complexity of real-world maritime decision-making. Overcoming these issues requires a new paradigm that can integrate perception, reasoning, and regulation in a unified, interpretable, and adaptive manner.

5.2. Future Directions for SCA

Recent advances in RL have driven increasingly sophisticated applications of autonomous agents in the maritime domain, characterized by enhanced collaborative perception and distributed decision-making capabilities. Despite these achievements, a fundamental limitation persists: the absence of a unified, robust, and scalable architecture capable of integrating perception, adaptive interaction modeling, and regulatory compliance in highly dynamic and uncertain maritime environments.

To address these challenges, current research has predominantly focused on the design of hierarchical decision-making architectures for multi-agent cooperative SCA.

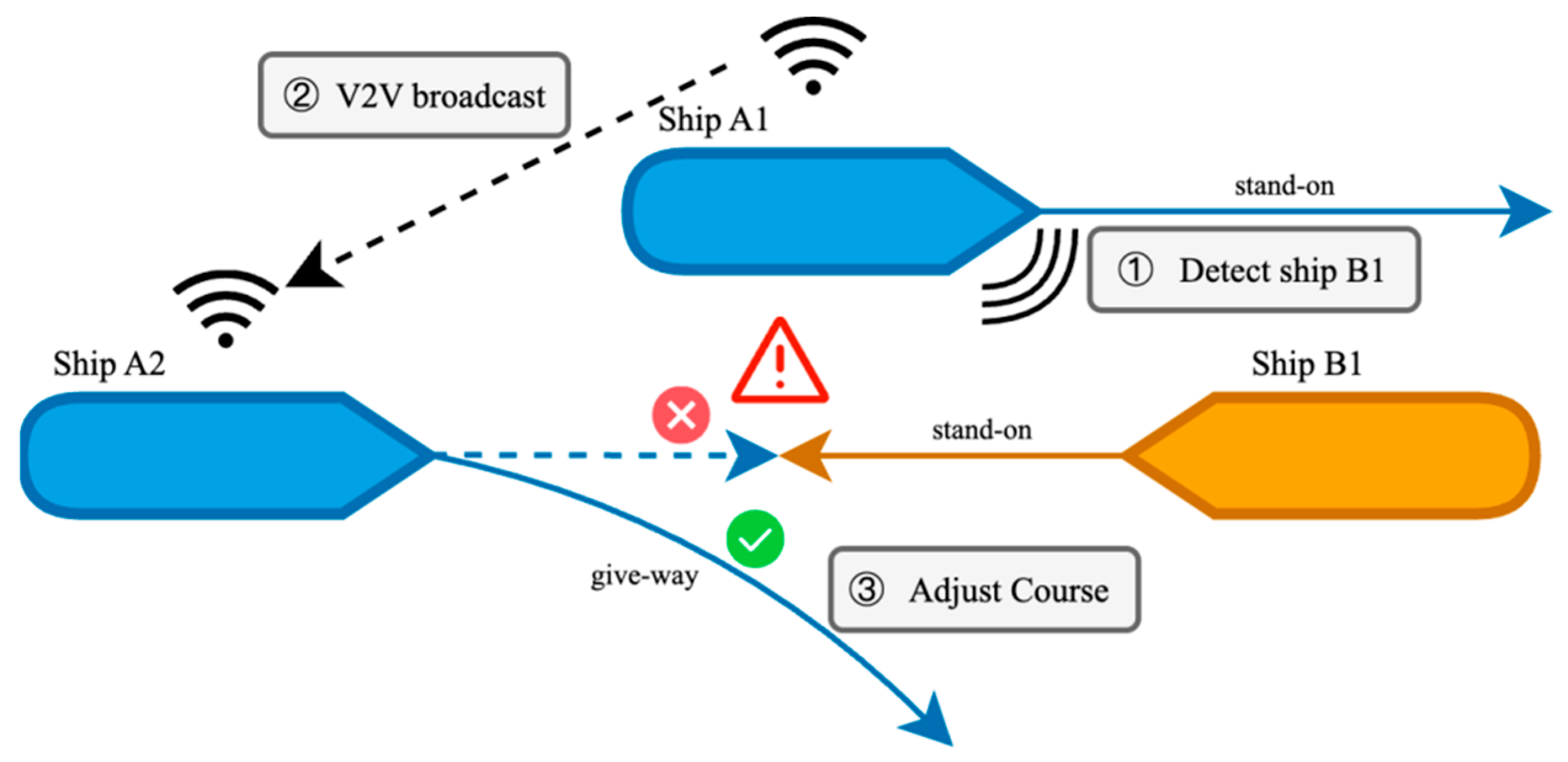

As illustrated in Figure 3, a typical multi-agent collision avoidance process begins when vessel agent A1 detects an approaching vessel B1 through perception. Agent A1 then shares this risk information with agent A2 via vessel-to-vessel communication. Upon receiving the information, A2 integrates it with its own trajectory planning and the COLREGs rules to assess the potential collision risk and, if necessary, executes a give-way maneuver, while A1 and B1 maintain stand-on status. This procedure highlights the potential of multi-agent collaboration for achieving joint situational awareness and coordinated decision-making. However, existing agents still face significant limitations in scalability, robustness against real-world uncertainties, and the cognitive complexity required for adaptive interaction modeling and interpretable regulatory compliance in dynamic, multi-vessel scenarios.

Recently, the exploration of Large Language Models (LLMs) in the maritime domain has emerged as a promising new research direction. Although the area is still at an early stage with only a limited number of studies, initial findings have demonstrated the considerable potential of LLMs in processing heterogeneous maritime data, interpreting regulatory knowledge for real-world decision-making, and improving the explainability of autonomous navigation systems. For example, Park et al. [46] introduced AIS-LLM, a unified end-to-end framework that integrates AIS time-series data with LLM-based semantic reasoning to support trajectory prediction, anomaly detection, and collision risk assessment, while generating human-understandable explanations. Agyei et al. [47] developed an LLM-based control framework for autonomous surface vehicles and validated the model’s capability to interpret COLREGs and generate compliant decisions through extensive experiments in 22 standardized simulated encounter scenarios. Ku et al. [48] further examined the performance of LLMs under realistic operational constraints, such as limited onboard computational resources and offline communication environments, and demonstrated that lightweight LLMs can achieve high-accuracy reasoning for VHF communication tasks. Collectively, these studies confirm the feasibility of applying LLMs in highly dynamic and uncertain maritime environments, offering new opportunities for enhancing the autonomy, interpretability, and rule compliance of agents in maritime decision-making. Additionally, it is worth mentioning that Hu et al. [49] proposed LLM-Coach, the first attempt to integrate LLMs with RL for multi-agent cooperative maritime navigation. LLM-Coach adopts a dual-agent architecture in which LLM-derived commonsense and regulatory knowledge is used to enhance feature abstraction and reward shaping. This work highlights a compelling synergy: RL provides the capability for optimal sequential decision-making, while LLMs contribute powerful contextual reasoning, regulatory interpretation, and explainability.

In conclusion, existing studies indicate that integrating RL with LLMs has emerged as an important development direction for future intelligent SCA systems. The two are complementary. LLMs enhance RL in scene comprehension, risk identification, and rule compliance, enabling policies that better align with the maritime context. RL, in turn, compensates for the limitations of LLMs in action generation, numerical stability, and dynamic adaptability through environmental interaction and feedback-driven policy optimization, so that semantic reasoning can be translated into stable and reliable SCA behaviors. Therefore, developing integrated agents that combine semantic understanding with policy optimization will become a core direction for next-generation intelligent SCA technologies and lay a solid foundation for safer, more robust, and more interpretable autonomous navigation systems in complex maritime environments.