1. Introduction

Ship detection is a critical task in maritime affairs. As a key enabling technology, it supports the development of national marine economies, safeguards maritime rights and interests, and facilitates the intelligent transformation of the shipping industry. Its applications include ensuring shipping safety, maintaining maritime traffic order, supporting marine environmental protection, providing rescue services, and strengthening national maritime security control [1,2,3,4].

In the field of ship detection, high-frequency surface wave radars (HFSWRs) [5,6,7] have long served as a widely used ground-based sensing technology. They offer real-time monitoring, rich motion information, and relatively low operational costs. However, their limited spatial resolution prevents the extraction of detailed image features, their positioning accuracy is modest, and their detection range does not extend to deep-sea targets. These limitations significantly constrain their applicability across broader maritime scenarios. Traditional satellite-based ship detection methods have relied on optical, infrared, and microwave remote sensing. Optical approaches dominated initially, using visible or infrared imagery to exploit color, shape, and texture cues [8,9,10]. Although interpretable, these techniques are inherently vulnerable to atmospheric conditions, and their performance declines markedly in bad weather. Moreover, optical sensors cannot provide uninterrupted, all-weather surveillance. Continuous advances in synthetic aperture radar (SAR) technology have made SAR the modality of choice for ship detection [11,12]. Unlike optical data, SAR is largely insensitive to weather and illumination: its microwaves penetrate clouds and fog, enabling persistent, round-the-clock monitoring. In addition, ships, especially those built from metal, exhibit distinctive radiometric signatures in microwave remote sensing, typically with strong backscattering. These properties overcome the main limitations of optical imaging systems.

Early detection methods relied on hand-crafted features, such as the Histogram of Oriented Gradients (HOG) [13] and the Deformable Part Model (DPM) [14], which generalized poorly and adapted badly to complex scenes. Object detection was subsequently advanced by two-stage frameworks such as Region-based Convolutional Neural Networks (R-CNN) [15], Fast Region-based Convolutional Network (Fast R-CNN) [16], and Faster Region-based Convolutional Network (Faster R-CNN) [17]. Although these models brought significant gains, they are relatively slow and involve large numbers of parameters. In addition, their reliance on fixed anchor boxes limits positioning accuracy and makes small targets difficult to detect. The You Only Look Once (YOLO) v1–v3 series [18,19,20] marked a shift toward one-stage algorithms and substantially increased computational speed over earlier models. YOLOv5–YOLOv7 [21,22,23] subsequently incorporated dynamic anchor boxes, improving the boundary accuracy of detections. The latest iterations, YOLOv8–YOLOv12 [24,25,26,27,28], adopt an anchor-free design that further improves the detection of small targets. Building on these YOLO models, dedicated small-target detectors have emerged, including the feature enhancement, fusion and context aware YOLO (FFCA-YOLO) [29] and the faster and better for real-time YOLO (FBRT-YOLO) [30]. In recent years, alongside the YOLO series, transformer-based models [31] using global attention, such as the real-time detection transformer (RT-DETR) [32], and models such as efficient object detection V2 (EfficientDet-V2) [33], which offer self-supervised pre-training, have been developed. Some researchers have combined pruning with knowledge distillation to obtain both a compact model and a low computational cost [34]. These models achieve high accuracy and offer advanced features. Despite these strengths, however, they have large parameter counts and high computational demands. Their ability to capture small targets also remains limited, making them less suitable for processing the vast amounts of data generated by maritime satellite monitoring.

Therefore, this study leverages SAR imagery to develop a lightweight, all-weather ship detection framework. The framework prioritizes computational efficiency over mere parameter reduction, since 1M–3M parameters pose a minimal burden on satellite edge devices. We designed the Multi-Feature Channel Convolution (MFC-Conv) module to build an efficient backbone. The module supports multi-scale feature propagation, uses a lightweight residual approximation to improve gradient flow, and can be reparameterized into a branch-free two-layer convolution for seamless edge deployment. The Multi-Feature Attention (MFA) module was incorporated to improve localization and classification at low overhead. Exploiting the limited scale variation of vessel targets in SAR imagery, we optimized the decoder by eliminating redundant detection heads, which reduces computation and suppresses background noise. The main contributions are as follows:

We propose MFC-Conv, a module that reduces both parameters and FLOPs to improve operational efficiency and that can be reparameterized into two convolutions for edge-device compatibility.

An efficient emulation of residual blocks is introduced, balancing a lightweight design with improved training dynamics.

A novel multi-feature attention (MFA) module is proposed, which strengthens the model’s localization and recognition capabilities and improves overall detection accuracy.

Exploiting SAR-specific traits, the decoder is streamlined, reducing parameters and computation while suppressing clutter and sharpening target discrimination.

The remainder of this paper proceeds as follows:

Section 2 presents the proposed method, elaborating on the structure of the key modules and the loss function used for training;

Section 3 describes the experimental environment and parameter settings and compares the proposed method with other recent methods on three datasets;

Section 4 analyzes the effectiveness of each proposed module and examines the impact of different parameter settings on the results; and finally,

Section 5 summarizes the above results and presents the final conclusion.

2. Methodology

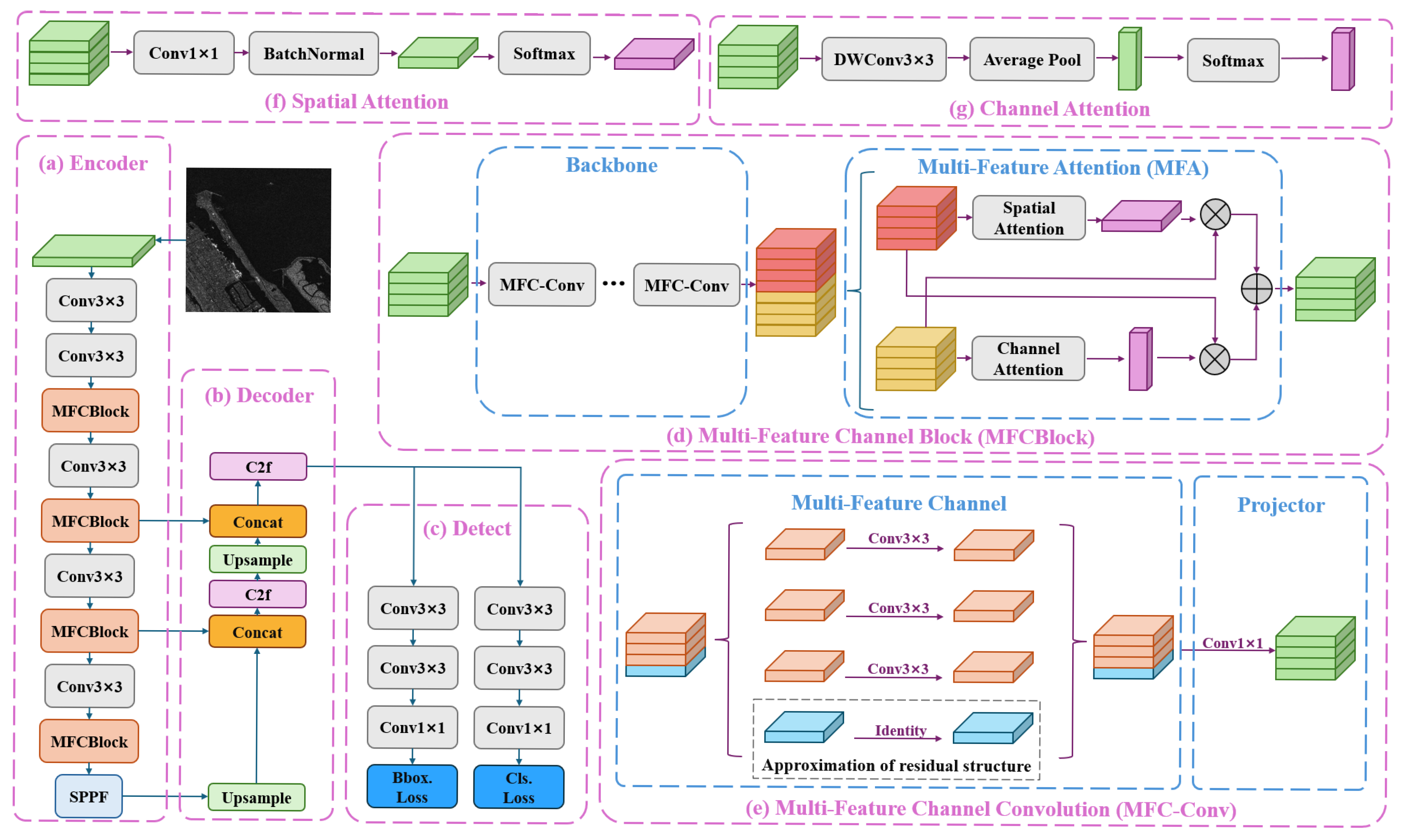

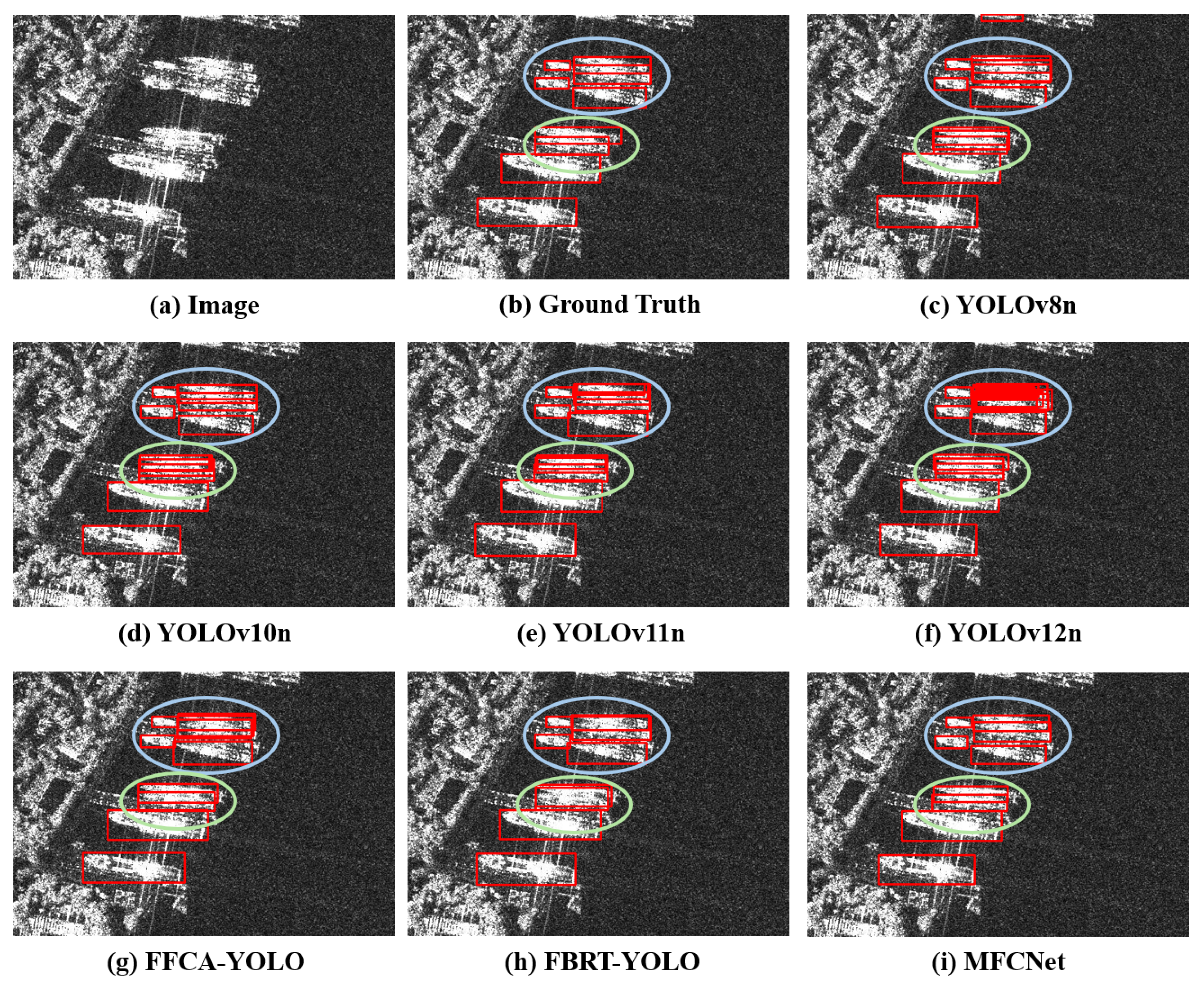

Our framework, MFCNet, whose overall architecture is shown in Figure 1, builds on the YOLOv8n baseline with several key enhancements tailored to satellite SAR imagery. First, we modified the decoder to better accommodate the characteristics of satellite SAR images, in which ship targets are typically small and may comprise only a few dozen pixels. Such targets are classified as small objects in detection tasks, so the detect modules responsible for targets of other sizes can be pruned. Removing these unnecessary detect modules reduces the model’s computational cost and lets it focus on learning the features of small ship targets. Second, we introduce MFC-Conv to construct a new backbone, which further reduces the computational cost with only a slight trade-off in accuracy. Finally, we integrate the MFA module on top of MFC-Conv. By leveraging the diverse feature information provided by MFC-Conv, MFA enhances both spatial and channel representations, significantly improving the model’s ability to recognize and localize targets.

In the encoder (Figure 1a), every 3 × 3 convolution in MFCNet is followed by a batch normalization layer and the SiLU activation function [35]. All standalone 3 × 3 convolutions in the encoder use a stride of two. This halves the spatial resolution of the feature maps, which lowers the subsequent computational cost and enlarges the receptive field of the deeper layers. In the decoder (Figure 1b), the upsampling module doubles the spatial resolution so that the feature maps match the spatial scale of the subsequent concatenation layer. In the detect section (Figure 1c), the 3 × 3 convolutions in the two branches are configured like those in the main pathway, with their outputs normalized by batch normalization and activated by SiLU. The final 1 × 1 convolution layer is followed by no further processing.
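The resolution arithmetic behind these design choices can be sketched in a few lines of Python. This is an illustration, not the authors’ code. The 640 × 640 input (the usual YOLOv8 default), a padding of one, and the 8-pixel example target are assumptions. Each stride-2 3 × 3 convolution halves the side length and each upsampling step doubles it. The last helper (a hypothetical name) counts how many grid cells per side a small target spans at a given output stride, which illustrates why coarse detect modules contribute little for ships of only a few dozen pixels.

```python
def conv_out_size(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Output side length of a convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def downsample_chain(size: int, num_convs: int) -> list[int]:
    """Side lengths after each successive stride-2, padding-1 3x3 convolution."""
    sizes = []
    for _ in range(num_convs):
        size = conv_out_size(size)
        sizes.append(size)
    return sizes


def upsample(size: int, factor: int = 2) -> int:
    """An upsampling module with factor 2 doubles the side length."""
    return size * factor


def cells_spanned(target_px: float, stride: int) -> float:
    """Grid cells per side covered by a target of target_px pixels (hypothetical helper)."""
    return target_px / stride


if __name__ == "__main__":
    sizes = downsample_chain(640, 5)   # assumed 640 x 640 input
    print(sizes)                       # [320, 160, 80, 40, 20]
    print(upsample(sizes[-1]))         # 40
    for stride in (8, 16, 32):         # assumed 8-pixel ship
        print(stride, cells_spanned(8, stride))
```

At stride 32, the assumed 8-pixel ship covers only a quarter of a cell per side, whereas at stride 8 it fills one cell.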

2.1. Multi-Feature Channel Convolution

As shown in Figure 1e, the MFC-Conv module contains multiple feature channels, so several sets of feature information can be processed simultaneously within a single layer. In each feature channel, a 3 × 3 convolution extracts features with high parameter and computational efficiency. This design preserves the overall computational efficiency of the model and remains compatible with convolution acceleration algorithms. MFC-Conv also includes an approximation of the residual structure, which lets the model retain essential shallow features while extracting deeper ones. As with a standard residual structure, this approximation also allows gradients to propagate efficiently during training, which improves the training of deep models. Finally, after feature extraction, a linear projection along the channel dimension selects the information that requires further extraction and preserves the necessary shallow features. The module is expressed as follows:

$$X_1, X_2, X_3, X_4 = \mathrm{Split}(X) \tag{1}$$

$$Y_i = \mathrm{SiLU}\left(\mathrm{BN}\left(W_i * X_i + b_i\right)\right), \quad i = 1, 2, 3 \tag{2}$$

$$Y = \mathrm{SiLU}\left(\mathrm{BN}\left(W_p * \mathrm{Concat}(Y_1, Y_2, Y_3, X_4) + b_p\right)\right) \tag{3}$$

where $X$ represents the module’s input. The split operation divides the input evenly into four parts, $X_1$ to $X_4$, along the channel dimension. The first three parts are processed according to Equation (2), where the subscript $i$ denotes the index of each input branch. $W_i$ and $b_i$ represent the weights and biases of the corresponding 3 × 3 convolutional layers, and $*$ denotes convolution. BN denotes the batch normalization layer, and SiLU is the activation function. Finally, in Equation (3), the outputs $Y_1$ to $Y_3$ obtained from Equation (2), together with $X_4$ from Equation (1), are concatenated along the channel dimension for fusion. The merged result is then processed by a 1 × 1 convolutional layer with weights $W_p$ and bias $b_p$, followed by batch normalization and the SiLU activation function, to produce the final output $Y$ of the module.
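The following dependency-free Python sketch runs a toy MFC-Conv forward pass on nested lists of shape [channels][height][width] to illustrate Equations (1)–(3). It is not the authors’ implementation. Batch normalization is assumed to be folded into the convolution weights and biases, as is usual at inference time, and the input channel count is assumed to be divisible by four. All helper names (`conv3x3`, `conv1x1`, `mfc_conv`, `rand_kernel`) are hypothetical.

```python
import math
import random


def silu(v: float) -> float:
    """SiLU activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def conv3x3(x, w, b):
    """Stride-1, zero-padded 3x3 convolution (cross-correlation).
    x: [C_in][H][W]; w: [C_out][C_in][3][3]; b: [C_out]."""
    h, wd = len(x[0]), len(x[0][0])
    out = []
    for o in range(len(w)):
        fmap = [[b[o] for _ in range(wd)] for _ in range(h)]
        for i in range(len(x)):
            for r in range(h):
                for c in range(wd):
                    for dr in range(3):
                        for dc in range(3):
                            rr, cc = r + dr - 1, c + dc - 1
                            if 0 <= rr < h and 0 <= cc < wd:
                                fmap[r][c] += w[o][i][dr][dc] * x[i][rr][cc]
        out.append(fmap)
    return out


def conv1x1(x, w, b):
    """Pointwise (1x1) convolution. w: [C_out][C_in]; b: [C_out]."""
    h, wd = len(x[0]), len(x[0][0])
    return [[[b[o] + sum(w[o][i] * x[i][r][c] for i in range(len(x)))
              for c in range(wd)] for r in range(h)] for o in range(len(w))]


def act(x):
    """Apply SiLU element-wise to a [C][H][W] tensor."""
    return [[[silu(v) for v in row] for row in ch] for ch in x]


def mfc_conv(x, branches, w_p, b_p):
    """Forward pass following Eqs. (1)-(3); BN assumed folded into each conv."""
    q = len(x) // 4
    parts = [x[k * q:(k + 1) * q] for k in range(4)]      # Eq. (1): X1..X4
    ys = []
    for k in range(3):                                      # Eq. (2): Y1..Y3
        w_k, b_k = branches[k]
        ys += act(conv3x3(parts[k], w_k, b_k))
    merged = ys + parts[3]                                  # Concat(Y1, Y2, Y3, X4)
    return act(conv1x1(merged, w_p, b_p))                   # Eq. (3)


def rand_kernel(c_out, c_in, rng):
    """Random [c_out][c_in][3][3] kernel for the toy example."""
    return [[[[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(3)]
             for _ in range(c_in)] for _ in range(c_out)]


if __name__ == "__main__":
    rng = random.Random(0)
    C, H, W = 8, 4, 4                                       # toy sizes, q = 2
    x = [[[rng.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
    branches = [(rand_kernel(2, 2, rng), [0.0, 0.0]) for _ in range(3)]
    w_p = [[1.0 if o == i else 0.0 for i in range(C)] for o in range(C)]  # identity 1x1
    y = mfc_conv(x, branches, w_p, [0.0] * C)
    print(len(y), len(y[0]), len(y[0][0]))                  # 8 4 4
```

With an identity 1 × 1 projection, the last quarter of the output equals SiLU applied to the untouched $X_4$ slice, which makes the residual-like pass-through path easy to see.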

The MFC-Conv module improves parameter utilization by introducing multiple feature channels. This mitigates feature interference and reduces both the number of parameters and the computational cost while maintaining model performance. Its approximation of the residual structure facilitates the forward transmission of shallow features and the backward propagation of error gradients during training. Because it avoids complex branching structures, the design lowers the computational cost during inference and also minimizes memory consumption.
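To give a rough sense of scale for the parameter savings, the sketch below counts convolution weights for one MFC-Conv block and compares them with a plain 3 × 3 convolution of the same width. The comparison baseline is hypothetical, chosen only for scale. The sketch assumes that (groups − 1) slices each pass through a 3 × 3 convolution with equal input and output channels, that the last slice passes through unchanged, and that the 1 × 1 projection maps C channels to C channels. Biases are ignored because batch normalization follows each convolution. At stride 1 and a fixed resolution, multiply–accumulate counts scale in the same proportion as these weight counts.

```python
def standard_conv3x3_params(c: int) -> int:
    """Weights of a plain 3x3 convolution with c input and c output channels."""
    return 9 * c * c


def mfc_conv_params(c: int, groups: int = 4) -> int:
    """Weights of an MFC-Conv block under the stated assumptions:
    (groups - 1) slices of c/groups channels each get a 3x3 conv,
    the last slice is passed through, and a 1x1 conv maps c -> c."""
    if c % groups:
        raise ValueError("channel count must be divisible by groups")
    q = c // groups
    branch_weights = (groups - 1) * 9 * q * q
    projection_weights = c * c
    return branch_weights + projection_weights


if __name__ == "__main__":
    for c in (32, 64, 128):
        std, mfc = standard_conv3x3_params(c), mfc_conv_params(c)
        print(f"C={c}: 3x3 conv {std}, MFC-Conv {mfc}, ratio {mfc / std:.3f}")
```

Under these assumptions, the ratio is 43/144 (about 0.299) for any width, so an MFC-Conv block uses roughly 30% of the weights of the hypothetical plain 3 × 3 convolution.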

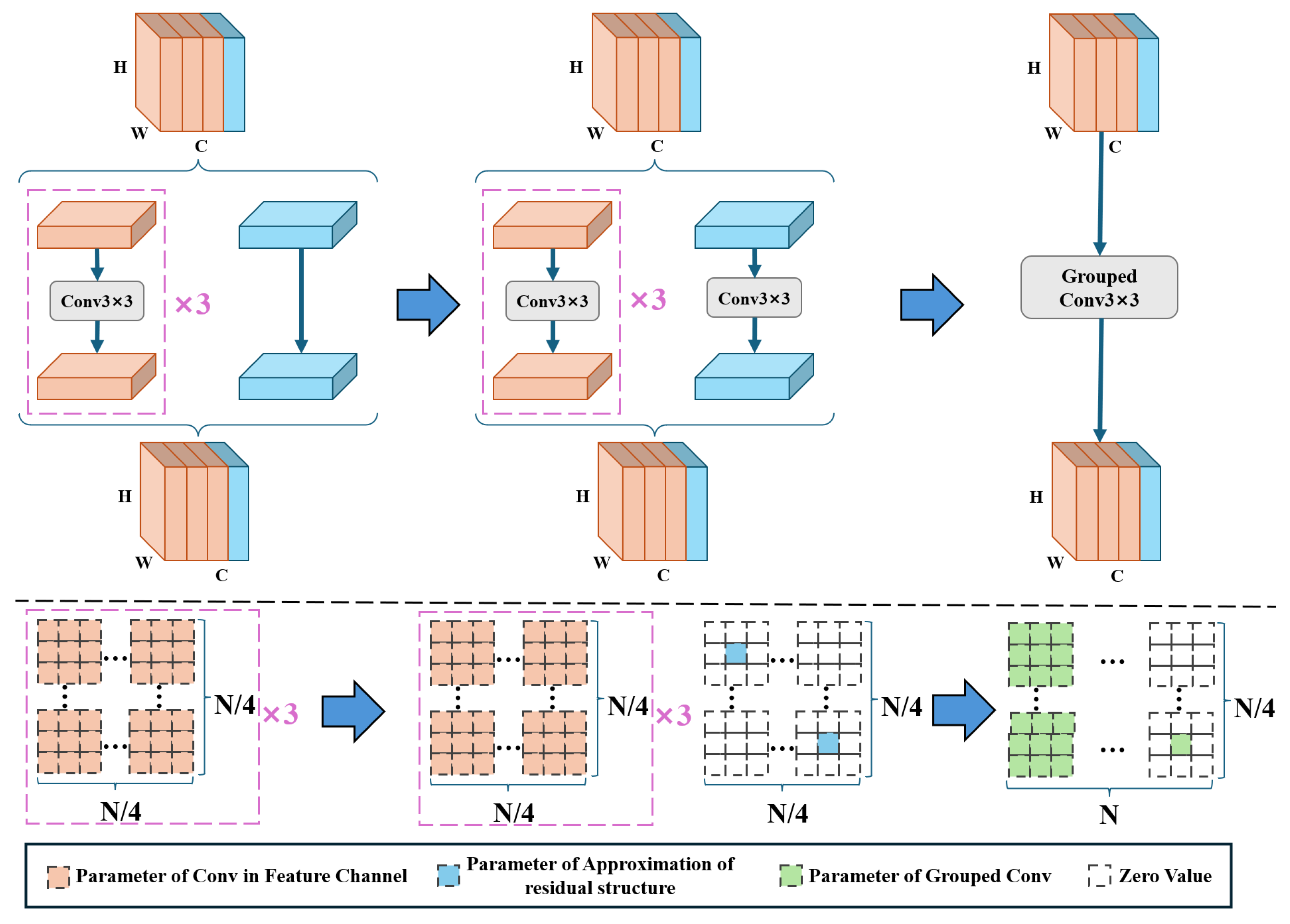

Furthermore, some edge computing devices provide only limited support for the split operation. To overcome this limitation, the first three convolutional branches of the MFC-Conv module can be merged into a single grouped convolution. The approximation of the residual structure can also be transformed into an equivalent standard convolution by introducing a set of manually designed fixed parameters. As a result, the MFC-Conv module can be reconfigured, when necessary, into a simplified structure consisting of a 3 × 3 grouped convolution and a 1 × 1 standard convolution. This reconfiguration greatly improves deployment efficiency on resource-constrained edge devices. The reconfiguration process is illustrated in Figure 2.

The upper part of Figure 2 illustrates the structural evolution of the MFC-Conv module during parameter reconstruction, while the lower part shows the corresponding changes in the parameter structure. In this figure, the orange branch represents the multi-feature channel branch, and its parameters are depicted as orange squares. The blue branch denotes the approximation of the residual structure, with the reconstructed parameters represented by blue squares, each assigned a value of one. The symbol N refers to the channel dimension of the input and output feature matrices. In the final step, the parameters of the four branches are integrated into a grouped convolution with four groups, which adapts well to various types of deep learning edge computing devices.
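The reparameterization can be checked numerically with the following sketch. It builds the fixed identity kernels (a one at the centre tap of the matching channel and zeros elsewhere, corresponding to the blue squares). It then places the three branch kernels and the identity kernel on the diagonal of a single dense kernel, which is exactly what a four-group convolution computes, and compares the result with the split form. All helper names are hypothetical. The sketch verifies only the linear convolution step. In Equation (2) each branch is also followed by BN and SiLU while $X_4$ is not, and the text does not specify how the merged structure treats the activation for the identity group.

```python
import random


def conv3x3(x, w):
    """Bias-free, stride-1, zero-padded 3x3 convolution (cross-correlation).
    x: [C_in][H][W]; w: [C_out][C_in][3][3]."""
    h, wd = len(x[0]), len(x[0][0])
    out = []
    for o in range(len(w)):
        fmap = [[0.0] * wd for _ in range(h)]
        for i in range(len(x)):
            for r in range(h):
                for c in range(wd):
                    for dr in range(3):
                        for dc in range(3):
                            rr, cc = r + dr - 1, c + dc - 1
                            if 0 <= rr < h and 0 <= cc < wd:
                                fmap[r][c] += w[o][i][dr][dc] * x[i][rr][cc]
        out.append(fmap)
    return out


def random_kernels(c_out, c_in, rng):
    """Random [c_out][c_in][3][3] kernels for the toy example."""
    return [[[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
             for _ in range(c_in)] for _ in range(c_out)]


def identity_kernel(q):
    """Fixed kernels that copy q channels unchanged: 1 at the centre tap of
    the matching input channel, 0 everywhere else."""
    return [[[[1.0 if (o == i and dr == 1 and dc == 1) else 0.0
               for dc in range(3)] for dr in range(3)]
             for i in range(q)] for o in range(q)]


def block_diagonal(group_kernels):
    """Place per-group kernels on the diagonal of one dense C x C kernel,
    which is equivalent to a grouped convolution with len(group_kernels) groups."""
    q = len(group_kernels[0])
    c = q * len(group_kernels)
    dense = [[[[0.0] * 3 for _ in range(3)] for _ in range(c)] for _ in range(c)]
    for g, kernels in enumerate(group_kernels):
        for o in range(q):
            for i in range(q):
                dense[g * q + o][g * q + i] = [row[:] for row in kernels[o][i]]
    return dense


def split_path(x, branch_kernels):
    """Linear part of Eqs. (1)-(2): conv on X1..X3, X4 passed through."""
    q = len(x) // 4
    parts = [x[k * q:(k + 1) * q] for k in range(4)]
    out = []
    for k in range(3):
        out += conv3x3(parts[k], branch_kernels[k])
    return out + parts[3]


def max_abs_diff(a, b):
    """Largest element-wise difference between two [C][H][W] tensors."""
    return max(abs(u - v) for ca, cb in zip(a, b)
               for ra, rb in zip(ca, cb) for u, v in zip(ra, rb))


if __name__ == "__main__":
    rng = random.Random(0)
    C, H, W = 8, 5, 5
    q = C // 4
    x = [[[rng.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
    branch_kernels = [random_kernels(q, q, rng) for _ in range(3)]
    merged = block_diagonal(branch_kernels + [identity_kernel(q)])
    print(max_abs_diff(conv3x3(x, merged), split_path(x, branch_kernels)))
```

The printed difference should be zero up to floating-point rounding. This confirms that the split branches plus the fixed identity kernels collapse into one grouped 3 × 3 convolution.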

2.2. Multi-Feature Attention

To enhance the model’s spatial localization and feature recognition capabilities, this study designs the MFA module on top of the backbone built from the proposed MFC-Conv, leveraging that backbone’s ability to transmit multi-level feature information. As illustrated in Figure 1d, the MFA module uses the diverse feature channels propagated through the backbone to generate both spatial and channel attention weights. These weights are then cross-fused, each complementing the other set of features, which lacks the corresponding information. The resulting features are aggregated and fused into an enhanced output that strengthens both spatial and channel representations, improving the overall performance of the model. The corresponding equations are as follows:

$$X_s, X_c = \mathrm{Split}(X) \tag{4}$$

$$A_s = \mathrm{Softmax}\left(\mathrm{BN}\left(W_s * X_s + b_s\right)\right) \tag{5}$$

$$F_c = W_d \circledast X_c + b_d \tag{6}$$

$$A_c = \mathrm{Softmax}\left(\mathrm{AvgPool}(F_c)\right) \tag{7}$$

$$Y = \mathrm{Fuse}\left(A_c \odot X_s + A_s \odot X_c\right) \tag{8}$$

The final 1 × 1 projection layer of each MFC-Conv in the backbone maps its output to twice the required number of channels. As shown in Equation (4), the input feature $X$ is divided into two independent data blocks, $X_s$ and $X_c$, which are used to extract spatial and channel attention weights, respectively. This design spares any single set of features from having to carry spatial, channel, and object-level information simultaneously. It provides dedicated, independent transmission pathways for spatial and channel features and thereby reduces the learning difficulty of the model. The spatial weights are computed by Equation (5), where $W_s$ and $b_s$ denote the weights and bias of a 1 × 1 convolution. The output is processed by a batch normalization (BN) layer and a Softmax function to obtain the spatial attention weights $A_s$. The channel weights are computed by Equations (6) and (7), where $W_d$ and $b_d$ represent the weights and bias of a 3 × 3 depthwise convolution, denoted by $\circledast$. The depthwise convolution extracts the features of each channel independently. Channel-wise average pooling and Softmax normalization then produce the channel attention weights $A_c$. Finally, the output of the MFA module is computed by Equation (8), where $\odot$ denotes element-wise multiplication with broadcasting and $\mathrm{Fuse}(\cdot)$ denotes the final fusion step. The channel weights are applied to the data block $X_s$ to refine its spatial features, while the spatial weights are applied to $X_c$ to enhance its channel features. The two results are combined by element-wise addition and then fused to generate the final output. This cross-attention mechanism compensates for the missing spatial or channel information in each feature subset, ultimately producing outputs with precise spatial localization and salient feature representations.
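The cross-attention of the MFA module can be sketched in plain Python as follows. This toy version is built under explicit assumptions and does not reproduce the authors’ code. The spatial branch is assumed to use a 1 × 1 convolution with a single output channel, followed by a Softmax over all H × W positions. BN is assumed to be folded into the convolutions. The channel branch applies a 3 × 3 depthwise convolution, global average pooling per channel, and a Softmax over channels. The unspecified final fusion step is omitted, so the function returns the element-wise sum directly. The function names are hypothetical.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a flat list."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    s = sum(exps)
    return [e / s for e in exps]


def depthwise3x3(x, w, b):
    """Per-channel, stride-1, zero-padded 3x3 conv. x: [C][H][W]; w: [C][3][3]; b: [C]."""
    h, wd = len(x[0]), len(x[0][0])
    out = []
    for ch in range(len(x)):
        fmap = [[b[ch]] * wd for _ in range(h)]
        for r in range(h):
            for c in range(wd):
                for dr in range(3):
                    for dc in range(3):
                        rr, cc = r + dr - 1, c + dc - 1
                        if 0 <= rr < h and 0 <= cc < wd:
                            fmap[r][c] += w[ch][dr][dc] * x[ch][rr][cc]
        out.append(fmap)
    return out


def mfa(x, w_s, b_s, w_d, b_d):
    """Toy MFA: x has 2C channels; returns (output, spatial weights, channel weights)."""
    c = len(x) // 2
    x_s, x_c = x[:c], x[c:]                                   # Eq. (4)
    h, wd = len(x[0]), len(x[0][0])
    # Eq. (5): assumed single-channel 1x1 conv, softmax over all H*W positions
    logits = [b_s + sum(w_s[k] * x_s[k][r][col] for k in range(c))
              for r in range(h) for col in range(wd)]
    flat = softmax(logits)
    a_s = [flat[r * wd:(r + 1) * wd] for r in range(h)]
    # Eqs. (6)-(7): depthwise conv, per-channel average pooling, softmax over channels
    f_c = depthwise3x3(x_c, w_d, b_d)
    pooled = [sum(sum(row) for row in ch) / (h * wd) for ch in f_c]
    a_c = softmax(pooled)
    # Eq. (8) without the unspecified fusion step: A_c * X_s + A_s * X_c
    y = [[[a_c[k] * x_s[k][r][col] + a_s[r][col] * x_c[k][r][col]
           for col in range(wd)] for r in range(h)] for k in range(c)]
    return y, a_s, a_c


if __name__ == "__main__":
    rng = random.Random(0)
    C, H, W = 2, 3, 3
    x = [[[rng.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(2 * C)]
    w_s = [rng.uniform(-1, 1) for _ in range(C)]
    w_d = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)] for _ in range(C)]
    y, a_s, a_c = mfa(x, w_s, 0.0, w_d, [0.0] * C)
    print(round(sum(map(sum, a_s)), 6), round(sum(a_c), 6))  # 1.0 1.0
```

Both attention maps are normalized distributions. The channel weights rescale whole channels of $X_s$, while the spatial weights rescale every channel of $X_c$ at each position.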

2.3. Loss Functions

In this paper, three loss functions [19] are employed to achieve accurate ship target detection. To determine whether a predicted bounding box exists, the binary cross-entropy loss, referred to as the classification loss (Cls loss), is used:

$$L_{cls} = -\frac{1}{N}\sum_{j=1}^{N}\left[t_j \log \sigma(z_j) + (1 - t_j)\log\left(1 - \sigma(z_j)\right)\right]$$

where $t_j$ represents the one-hot encoded ground-truth value, $z_j$ is the category logit output by the classification branch of the detect module, $\sigma$ is the sigmoid function, and $N$ represents the number of candidate regions. The remaining two losses are the box loss and the DFL loss. Together, they are referred to as the bounding box loss (Bbox loss) and are computed from the output of the other branch of the detect module. The box loss increases the overlap between the predicted box and the ground-truth box, while the DFL loss optimizes the prediction of boundary positions and improves the robustness of the model. They are defined as follows:

$$L_{box} = 1 - \mathrm{IoU}(B_p, B_{gt}), \qquad \mathrm{IoU}(B_p, B_{gt}) = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|}$$

$$L_{DFL} = -\left[(y_r - y)\log S_l + (y - y_l)\log S_r\right], \qquad y_l = \lfloor y \rfloor, \quad y_r = y_l + 1$$

The box loss is based on intersection over union (IoU), where $B_p$ represents the predicted box and $B_{gt}$ represents the ground-truth box. For the DFL loss, $y$ is the distance between one of the four boundaries and the center point of the ground-truth bounding box, scaled to the resolution of the output feature map. $S_l$ and $S_r$ are the probabilities the model predicts for the two adjacent integers $y_l$ and $y_r$ that enclose $y$. The overall loss is

$$L = \lambda_{box} L_{box} + \lambda_{dfl} L_{DFL} + \lambda_{cls} L_{cls}$$

In this paper, the weighting parameters $\lambda_{box}$, $\lambda_{dfl}$, and $\lambda_{cls}$ are set to 7.5, 1.5, and 0.5, respectively.
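The three loss terms and their weighted sum can be written as a short Python sketch. It uses the plain 1 − IoU box loss and a single-side DFL term. Production YOLO implementations add refinements, such as CIoU and score-based weighting, that are not shown here. The weight assignment (7.5 for the box loss, 1.5 for the DFL loss, and 0.5 for the classification loss) is an assumption that matches the YOLOv8 default hyperparameters. The function names are hypothetical.

```python
import math


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy over N candidates, computed from raw logits
    in the numerically stable form max(x, 0) - x*y + log(1 + exp(-|x|))."""
    total = 0.0
    for x, y in zip(logits, targets):
        total += max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))
    return total / len(logits)


def iou(box_p, box_gt):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_p[0], box_gt[0]), max(box_p[1], box_gt[1])
    ix2, iy2 = min(box_p[2], box_gt[2]), min(box_p[3], box_gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_p = (box_p[2] - box_p[0]) * (box_p[3] - box_p[1])
    area_gt = (box_gt[2] - box_gt[0]) * (box_gt[3] - box_gt[1])
    return inter / (area_p + area_gt - inter)


def box_loss(box_p, box_gt):
    """Plain IoU loss: 1 - IoU."""
    return 1.0 - iou(box_p, box_gt)


def dfl_loss(y, probs):
    """Distribution focal loss for one box side. probs[k] is the predicted
    probability of integer bin k; requires 0 <= y < len(probs) - 1."""
    left = math.floor(y)
    right = left + 1
    return -((right - y) * math.log(probs[left]) + (y - left) * math.log(probs[right]))


def total_loss(l_box, l_dfl, l_cls, lam_box=7.5, lam_dfl=1.5, lam_cls=0.5):
    """Weighted sum; default weights assume the YOLOv8-style assignment."""
    return lam_box * l_box + lam_dfl * l_dfl + lam_cls * l_cls


if __name__ == "__main__":
    l_cls = bce_with_logits([2.0, -1.0], [1.0, 0.0])
    l_box = box_loss((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
    l_dfl = dfl_loss(2.4, [0.1, 0.2, 0.4, 0.3])
    print(total_loss(l_box, l_dfl, l_cls))
```

The DFL term pushes probability mass onto the two bins that bracket the continuous target $y$, weighting each bin by its proximity to $y$.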

4. Discussion

4.1. Ablation Experiment

To assess the contribution of each module, we conducted ablation experiments on the decoder, the MFC-Conv backbone, and the MFA attention module in each block, and verified them on the three datasets used in this paper. The parameter counts and computational costs under the different configurations are listed in Table 7.

Improving the decoder and introducing the proposed MFC-Conv module significantly reduce the number of parameters and the computational cost of the model. The subsequently added MFA module increases the parameter count and computation only slightly. Compared with the baseline, the final model reduces the parameter count by 57.8% and the computational cost by 42.7%. Table 8 presents the ablation results on the three datasets.

The ablation results on the three datasets show that each module behaves as expected. Simplifying the decoder according to the resolution characteristics of satellite SAR data reduces the computational load without degrading the results. The backbone built from MFC-Conv avoids branch structures and further reduces the computational load, at the cost of only a slight decline in the results. Finally, the MFA module strengthens localization and recognition, improving the overall performance of the model at a small additional computational cost.

4.2. Efficiency of MFA

To verify the effectiveness of the MFA module, we compared it with several other lightweight attention modules, including channel attention, spatial attention, and CBAM [39]. The comparison results on the three datasets are shown in Table 9.

These results show that the proposed MFA module makes more effective use of the information transmitted by the MFC-Conv backbone, improving both the localization accuracy and the feature representation of targets, and thereby the overall accuracy of the model. Overall, MFA outperforms the other lightweight attention modules in the comparison.

4.3. Feature Channels Experiment

The proposed MFC-Conv module serves as the backbone of the model, and the number of its internal feature channels substantially influences both the model’s overall performance and its computational cost. This subsection therefore compares the model’s performance and computational cost under different channel configurations of the MFC-Conv module. The results on the three datasets are summarized in Table 10.

These data show that the model achieves higher overall performance when the number of feature channels is relatively small. As the number of channels increases, each channel contains less information, which becomes insufficient for effective feature transmission and degrades performance. For the backbone of a lightweight model, however, the variation in computational cost must be considered alongside the final performance.

Table 11 presents the number of parameters and computational load of the model under different feature channel configurations.

As the table shows, when the number of feature channels is set to two, four, or eight, the differences in computational cost between adjacent configurations are approximately 5.9% (two vs. four) and 2% (four vs. eight). The efficiency gains therefore diminish as the number of feature channels increases. Moreover, between two and four feature channels, the variations in model accuracy remain within 1% across all evaluation metrics. Considering the trade-off between accuracy and computational efficiency, four feature channels are selected as the standard setting for the MFC-Conv module.

4.4. The Current Shortcomings and Future Tasks

In the current implementation of the proposed model, all MFC-Conv modules use the same number of feature channels. However, the amount and complexity of feature information vary across different network depths. To optimize feature transmission and further reduce computational cost, it would be beneficial to assign different channel configurations to MFC-Conv modules at different depths. Future work will focus on a more comprehensive investigation of this aspect, analyzing the effects of various channel allocation strategies on ship target recognition performance in the SAR data. The goal is to achieve higher detection accuracy while maintaining an optimal balance between model efficiency and computational cost.

Regarding the influence of weather on model accuracy, the physical properties of the microwave signals used by satellite SAR mean that fog and cloud cover cause virtually no interference, so their impact on detection performance is negligible. However, heavy precipitation can scatter the microwave signal, weakening the returned echoes and slightly reducing recognition accuracy. In addition, high wind speeds can produce strong sea-surface clutter, which may obscure the signatures of small vessels and make them difficult to detect.