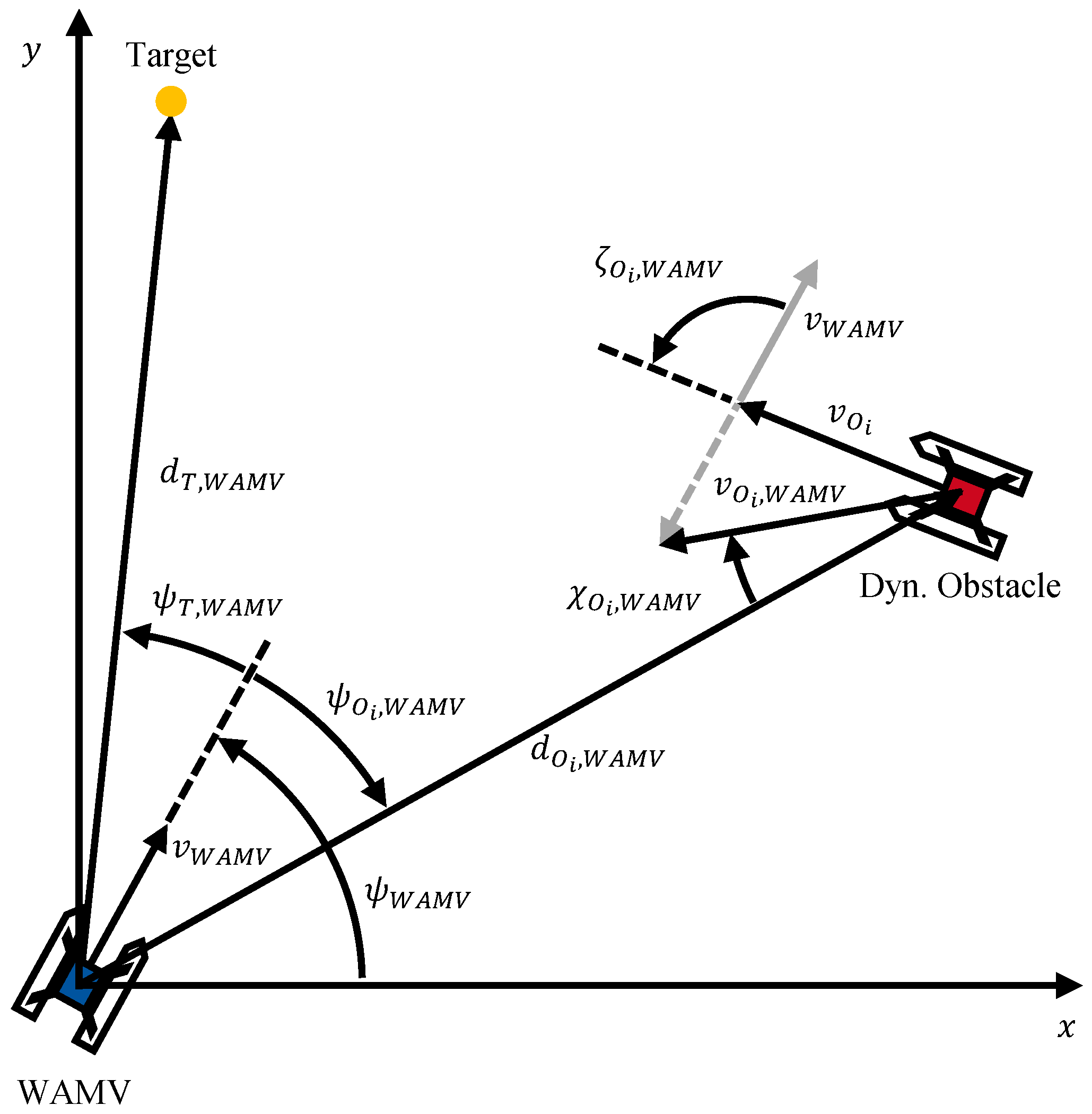

This section presents the training results for the scenarios presented, together with their respective RL formulations. For validation, each agent is run for 200 episodes in the same scenario in which it was trained, with the same step limit per episode as in training. However, the evaluation uses different variations of the scenario, so that, for example, obstacle speed or disturbance variables such as wind and waves may differ from those seen in training. To ensure comparability of the evaluation results across all scenarios and agents, the same set of 200 evaluation variations is used for all agents of one scenario.

To analyze the impact of reward shaping on the agent’s behavior, multiple variations of the reward weights (obstacle avoidance) and (target course) are considered. While these parameters are varied, the remaining parameters are held constant throughout all experiments: , , (goal-reaching reward), and (failure penalty). Because of the computational effort involved, and because this article focuses on the trade-off between safety and efficiency, a full factorial study of all parameters was not performed. In a multi-term reward function, the agent’s emergent policy is governed by the relative ratios between the competing reward components, not by their absolute magnitudes. Therefore, the most direct way to analyze the high-level trade-off between safety and efficiency is to fix the function shapes (, ) and systematically vary the principal weights of the competing terms, and .
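A shared evaluation set of this kind could, for example, be drawn from a seeded random generator so that every agent of a scenario sees identical variations. The following is only a minimal sketch under stated assumptions: the `Variation` fields, their value ranges, and the function name `make_evaluation_set` are hypothetical and are not taken from the article.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Variation:
    """One evaluation variation (all fields and ranges are hypothetical)."""
    obstacle_speed: float  # m/s
    wind_speed: float      # m/s
    wave_height: float     # m


def make_evaluation_set(scenario: str, n: int = 200, seed: int = 0) -> list[Variation]:
    """Return the same n variations every time for a given scenario and seed."""
    # A string seed makes the sequence reproducible across runs and agents.
    rng = random.Random(f"{scenario}:{seed}")
    return [
        Variation(
            obstacle_speed=rng.uniform(0.5, 2.0),
            wind_speed=rng.uniform(0.0, 5.0),
            wave_height=rng.uniform(0.0, 0.5),
        )
        for _ in range(n)
    ]
```

Evaluating every agent of a scenario on the same returned list means that differences in the metrics stem from the agents rather than from the sampled variations.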

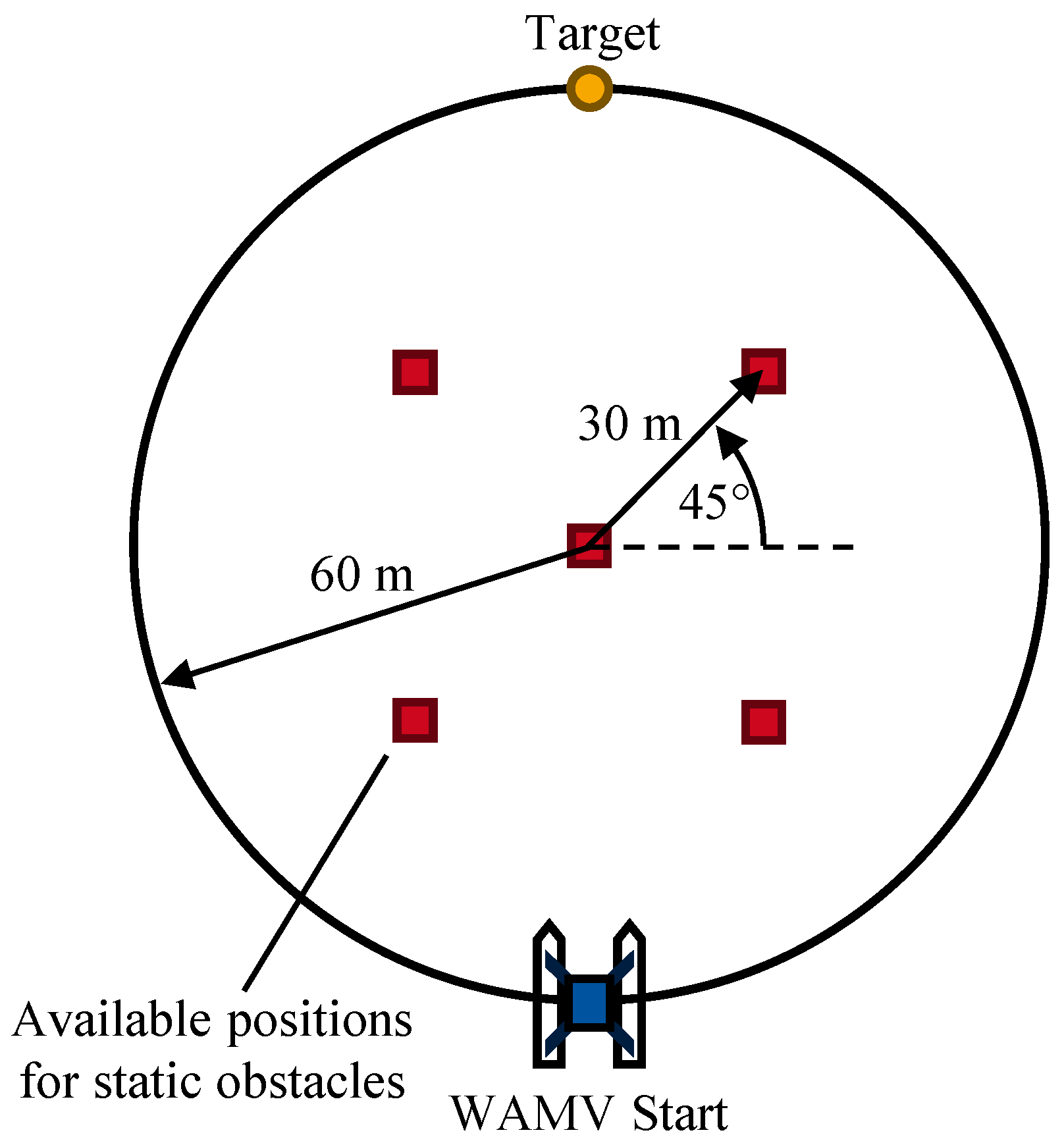

3.1. Scenario CA1—Static Obstacle Avoidance

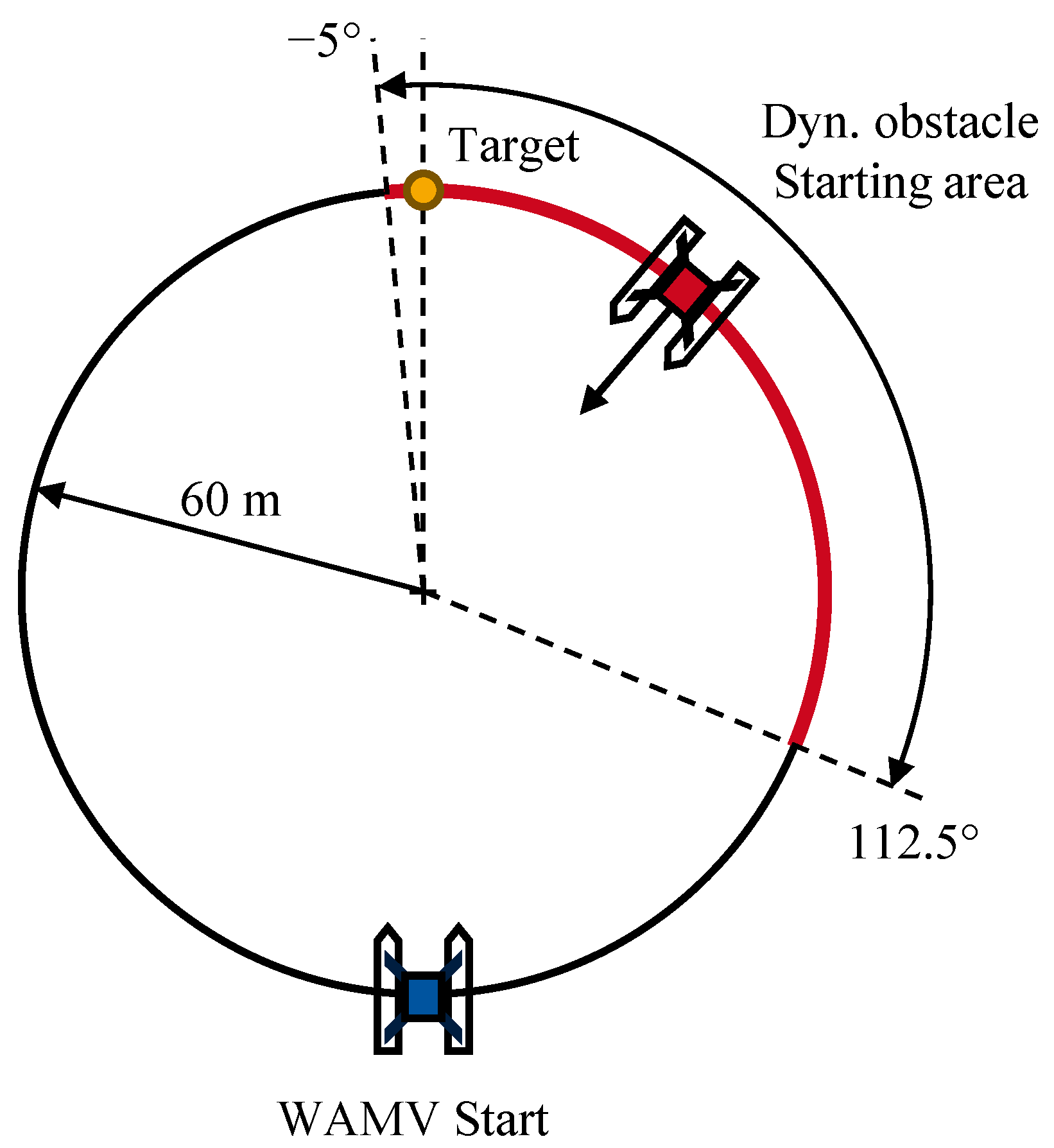

In the CA1 scenario, the agent is evaluated in a static obstacle avoidance task, as detailed in Section 2.2.4.

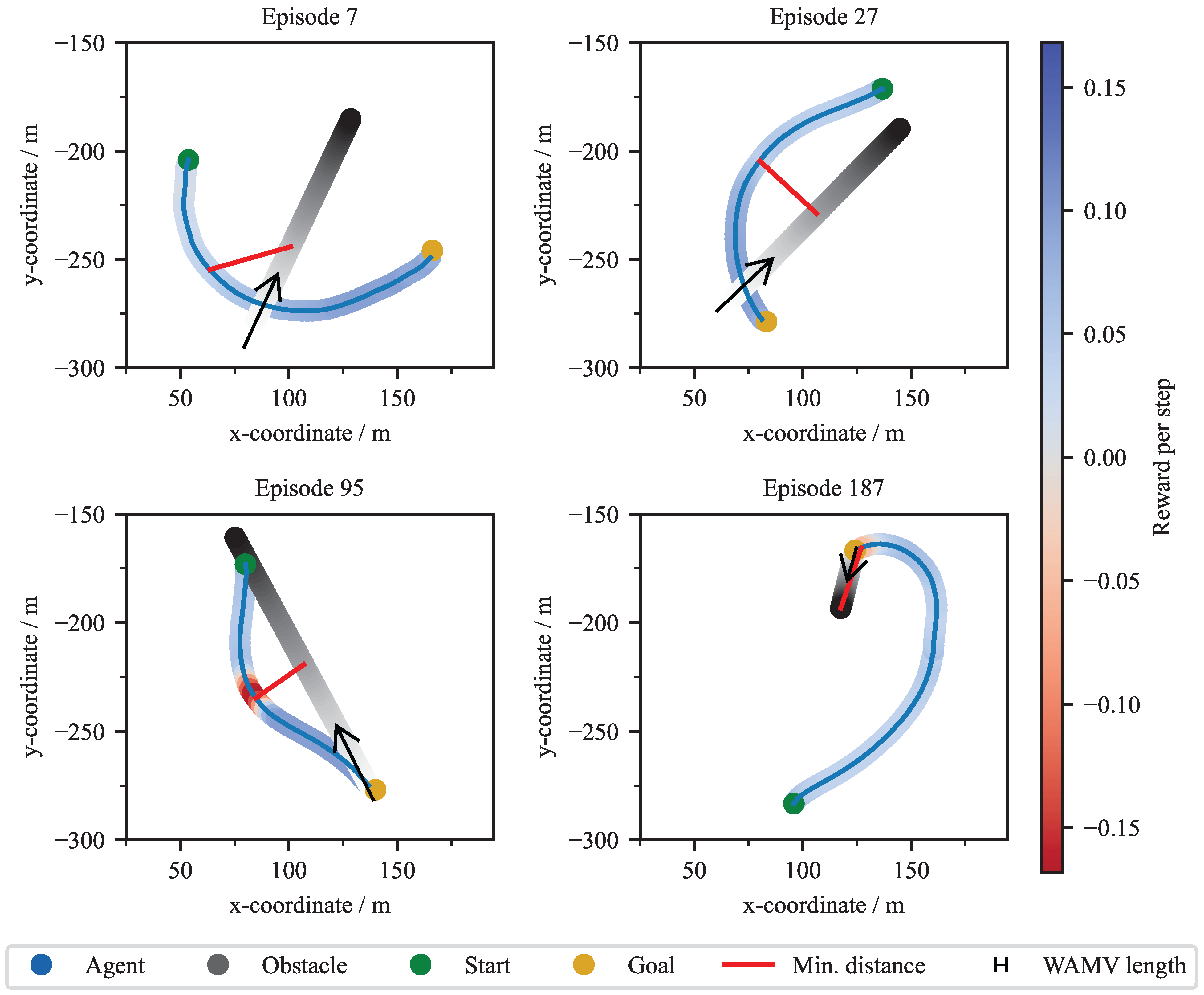

Figure 7 shows four exemplary trajectories from the validation simulations of one agent (C_1_3). Each plot illustrates the agent’s path from a start to a goal position in environments with one to three static obstacles. The trajectories demonstrate that the agent successfully learned to maneuver around the obstacles. Among the episodes shown, a collision can nevertheless be observed because, unlike other agents, this agent does not manage to remain collision-free. Notably, even when a gap exists, the agent often chooses a wider path around a group of obstacles rather than navigating between them. The trajectory is color-coded to represent the reward at each timestep: blue segments signify positive rewards for efficient progress toward the target, while red segments indicate penalties incurred for close proximity to obstacles. When the agent passes close to the static obstacles, the trajectory is colored red accordingly, but this seems to be a reasonable compromise for quickly reaching the target position.

Statistical validation results for the CA1 scenario are summarized in Table 2. Each agent was evaluated based on four key metrics: percentage of successful target reaching, percentage of episodes terminated due to step limit, percentage of collisions with a static obstacle, and percentage of episodes in which the agent exited the defined workspace. It can be observed that certain parameter configurations lead to significantly lower target-reaching rates. Specifically, combinations with a relatively high obstacle avoidance reward compared to the target course reward tend to result in reduced performance (e.g., C_3_1 and C_3_2 agents). The primary cause of unsuccessful episodes is typically that the step limit is exceeded, indicating that the agent fails to reach the target within the allowed time. Notably, the configuration C_3_2 also frequently exits the workspace, indicating that the agent performs excessive evasive maneuvers.
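The four validation metrics are simple shares of episode outcomes. As a minimal sketch, assuming each episode ends with exactly one of four outcome labels (the label strings and the function name `outcome_rates` are hypothetical), the percentages could be tallied as follows:

```python
from collections import Counter

# Hypothetical labels for the four mutually exclusive episode outcomes.
OUTCOMES = ("target_reached", "step_limit", "collision", "workspace_exit")


def outcome_rates(outcomes: list[str]) -> dict[str, float]:
    """Return the percentage of episodes that ended with each outcome."""
    if not outcomes:
        raise ValueError("at least one episode is required")
    counts = Counter(outcomes)
    unknown = set(counts) - set(OUTCOMES)
    if unknown:
        raise ValueError(f"unknown outcome labels: {sorted(unknown)}")
    total = len(outcomes)
    return {name: 100.0 * counts[name] / total for name in OUTCOMES}
```

With 200 validation episodes, a single episode corresponds to 0.5 percentage points, which sets the resolution of the reported rates.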

To further evaluate the robustness of the agent, the parameter configuration C_2_1 was validated under disturbance conditions (denoted C_2_1_D1). It still shows high target achievement rates, even though it was not previously trained with wind and waves. This indicates that the agent has learned to control the system dynamics sufficiently well to ensure safe navigation of the WAMV, even under previously unseen disturbances. However, the target was not reached in 1.5% of the evaluation episodes because the agent left the workspace. In contrast, agent C_2_1_D2 received additional training under disturbances: after 800 training episodes without disturbances, this configuration was trained for a further 400 episodes with disturbances. It shows a 100% target achievement rate. This suggests that gradually increasing the complexity of the scenario, and thus performing curriculum learning, is beneficial for the agent’s training success, although in this scenario the gains in success rate are rather small. These findings highlight the importance of a balanced reward formulation to ensure both efficient navigation and reliable target reaching in static obstacle environments.
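The two-phase training of C_2_1_D2 can be read as a simple curriculum schedule. The sketch below only illustrates that schedule, using the episode counts stated above (800 without disturbances, then 400 with disturbances); the function name `disturbances_enabled` and the zero-based episode index are assumptions, and the actual training loop is not shown in the article.

```python
def disturbances_enabled(episode: int, n_calm: int = 800, n_disturbed: int = 400) -> bool:
    """Return True if wind and waves are active in the given training episode.

    Episodes are indexed from 0. The first n_calm episodes run without
    disturbances, the following n_disturbed episodes run with disturbances.
    """
    if not 0 <= episode < n_calm + n_disturbed:
        raise ValueError("episode index outside the training schedule")
    return episode >= n_calm
```

A training loop would query this flag when resetting the environment for each episode.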

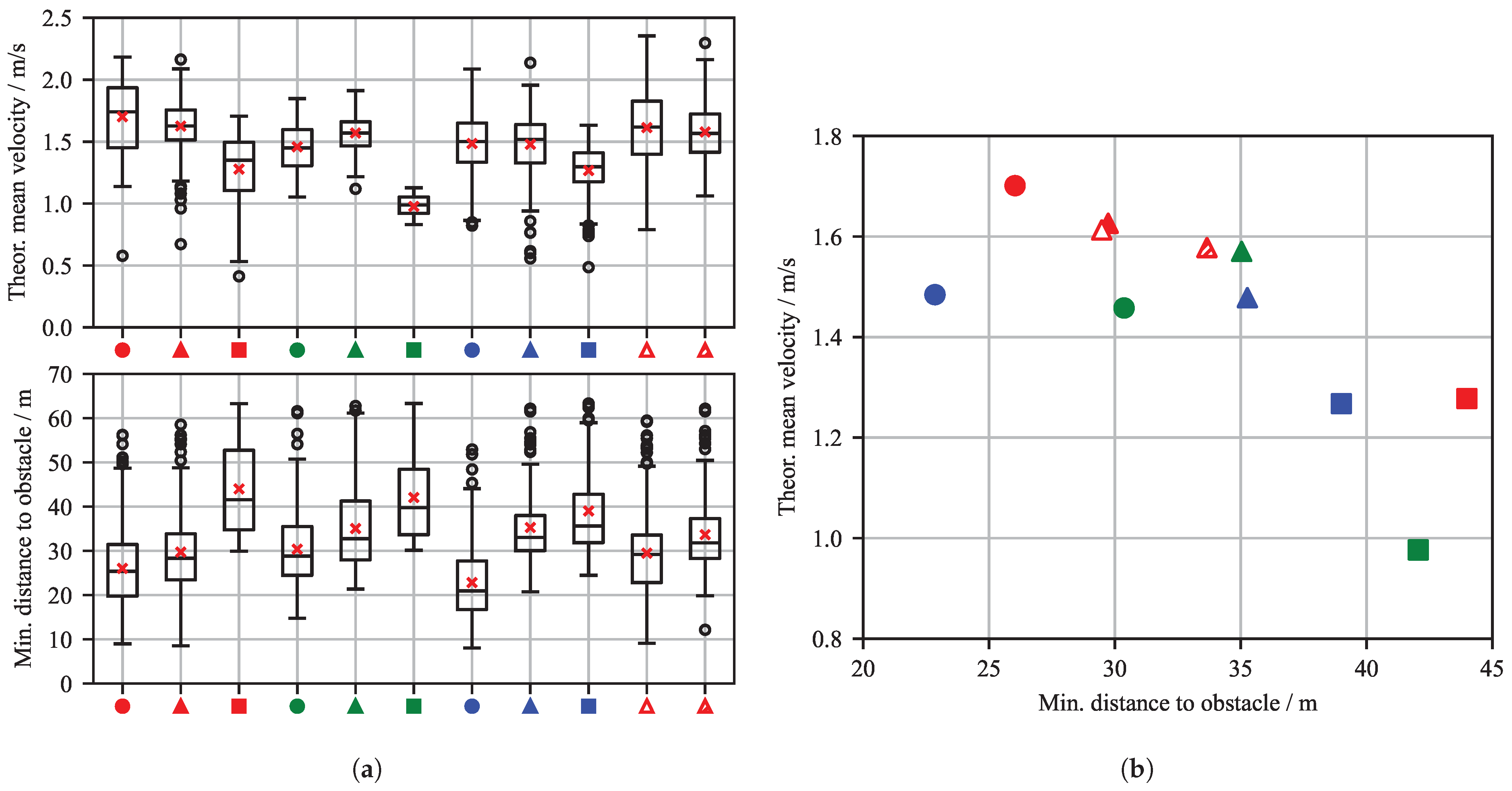

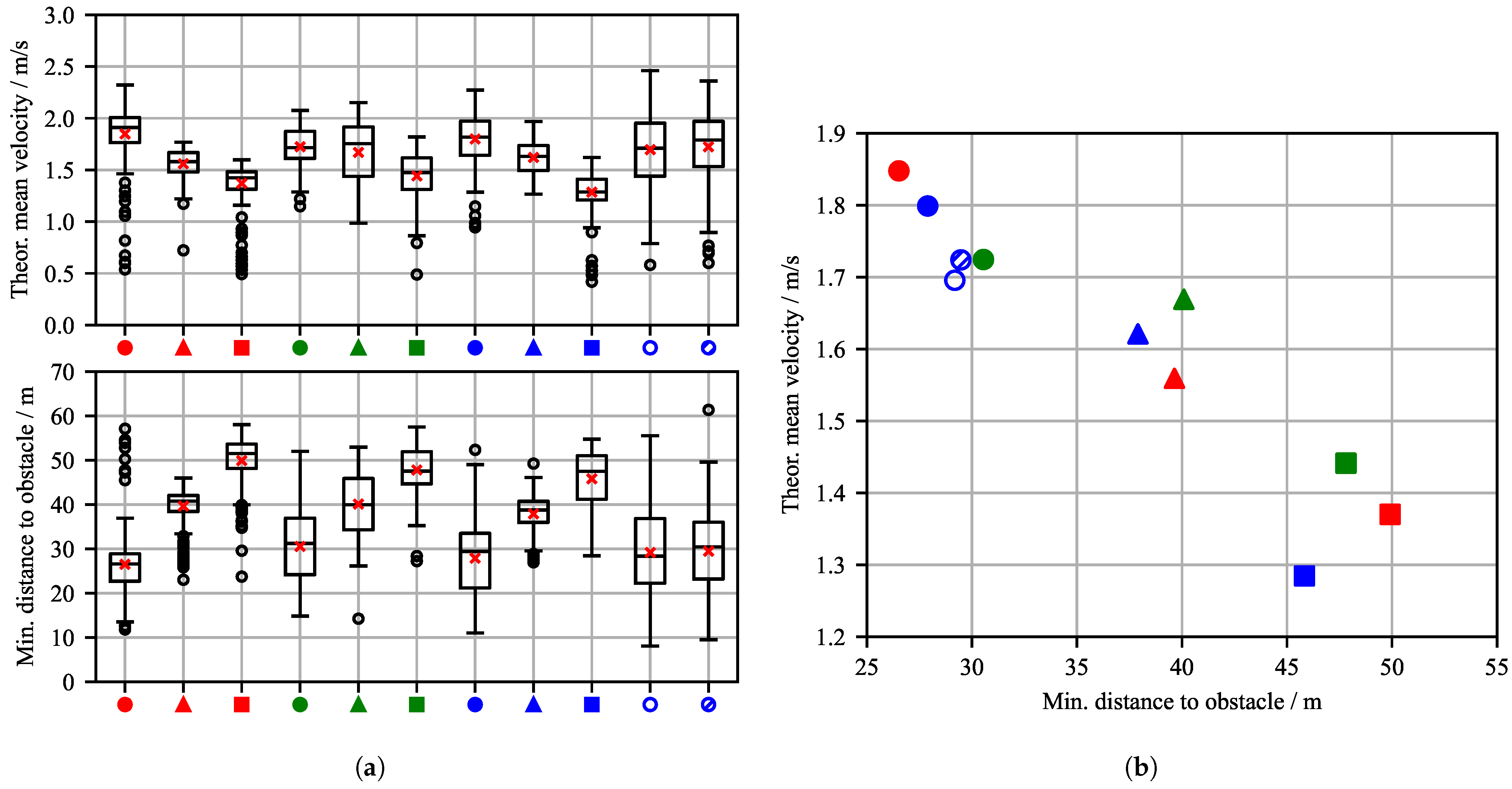

Figure 8a shows box plots with statistical data for the theoretical mean velocity and minimum distance to obstacles in the validation episodes in which the target was reached. For each successful episode, two metrics are evaluated: the minimum distance to the obstacle and the theoretical mean velocity of the WAMV. The minimum distance to obstacles refers to the closest point between the WAMV and the obstacle during the episode. The theoretical mean velocity is calculated as the straight-line distance between the start and target positions divided by the time the agent takes to reach the target position. The mean value across all validation episodes is marked with a red cross. The variation in CA1 configurations generally shows that agents with a higher parameter value for obstacle avoidance maintain a greater distance from obstacles. This matches the intent behind the parameter weighting. Furthermore, the theoretical mean velocity decreases noticeably under these configurations. There is also a clear spread in the evaluation metrics across the evaluation episodes, i.e., the agent does not always keep exactly the same distance from the varying number of static obstacles, but rather makes compromises in order to reach its goal quickly.
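The two per-episode metrics follow directly from their definitions. The sketch below is a simplified illustration: positions are treated as 2D points, so the hull dimensions of the WAMV and the obstacle extents are ignored, and the function names are hypothetical.

```python
import math

Point = tuple[float, float]


def theoretical_mean_velocity(start: Point, target: Point, duration_s: float) -> float:
    """Straight-line start-to-target distance divided by the time to reach the target."""
    if duration_s <= 0.0:
        raise ValueError("duration must be positive")
    return math.dist(start, target) / duration_s


def minimum_obstacle_distance(trajectory: list[Point], obstacles: list[Point]) -> float:
    """Smallest point-to-point distance between any trajectory sample and any obstacle."""
    return min(math.dist(p, o) for p in trajectory for o in obstacles)
```

Because the numerator is the straight-line distance rather than the path actually travelled, a wide detour lowers the theoretical mean velocity even if the vessel's actual speed is unchanged.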

This is also consistent with the observations in the trajectories presented above (see Figure 7), where the agent maintains different distances to the obstacles. It also becomes apparent that the configuration that was subjected to disturbances in the evaluation without having been trained with them (C_2_1_D1) exhibits increased variance in velocity and minimum obstacle distance despite having good target-reaching rates. In comparison to C_2_1, the standard deviation in the velocity of C_2_1_D1 increased from to , and the standard deviation in the minimum obstacle distance increased from to . In contrast, agent C_2_1_D2 shows significantly less dispersion, indicating that the additional training with 400 disturbance episodes was effective. The standard deviation in its velocity is while the standard deviation in its minimum obstacle distance is . The mean values and standard deviations of all agents are listed in Table A3 in Appendix A.

Figure 8b presents a scatter plot illustrating the theoretical mean velocity of the agents plotted against the minimum distance to the nearest static obstacle, across the considered reward and disturbance configurations. The plot reveals a clear trade-off between navigation speed and obstacle proximity: higher velocities tend to correlate with reduced minimum distances, necessitating a compromise between short target reaching time and safety. This compromise matches natural expectations regarding the influence of the parameters, and the plot helps to quantify it. Given the approximate length of the WAMV platform ( ), all observed minimum distances are sufficiently large to avoid collisions, indicating that the agents maintain safe margins even at higher speeds.

Figure 8b also highlights the influence of reward shaping on agent behavior: Configurations with stronger emphasis on obstacle avoidance tend to maintain larger safety distances but may sacrifice speed, whereas those prioritizing target course alignment may achieve faster trajectories at the cost of reduced clearance. These insights are critical for tuning reward functions in real-world applications where both safety and efficiency are paramount.

3.2. Scenario CA2—Dynamic Obstacle Avoidance

In the CA2 scenario, the agent is trained and evaluated in a dynamic collision avoidance task involving a single moving obstacle. The scenario is described in detail in Section 2.2.4. To investigate the influence of reward design on agent behavior, the same variations in the reward weights (obstacle avoidance) and (target course) as in CA1 are considered; see Table 1. Furthermore, multiple disturbance configurations are evaluated to assess the robustness of the learned policy. Compared with the trajectory plots already presented in Figure 7, Figure 9 additionally shows the trajectory of the obstacle ship and marks the minimum distance to the dynamic obstacle in red. The gray curve represents the trajectory of the dynamic obstacle, where lighter segments denote earlier positions in time. The direction of the black arrow corresponds to the direction of movement of the obstacle. The agent with the configuration C_1_3 managed to avoid a collision in all four test episodes.

Notably, in all shown cases, the WAMV consistently performs the avoidance maneuver to the right. This can be explained by the scenario setup, as the dynamic obstacle always comes head-on or from the right. In this case, the agent has learned that it can prevent most collisions by slightly evading to the right.

Table 3 summarizes the statistical validation results of the trained agents in the CA2 scenario. The same evaluation criteria are used across all test episodes: percentage of successful target reaching, percentage of episodes terminated due to step limit, percentage of collisions with the dynamic obstacle, and percentage of episodes in which the agent exited the defined workspace. Across all parameterizations, the agents demonstrate high reliability, with most achieving target-reaching rates above 98% without disturbances. Notably, the agent C_1_3 achieved a 100% target-reaching rate, without any collisions, step limit violations, or workspace exits. Minor deviations are observed in agents such as C_1_1 and C_3_2, which exhibit slightly reduced target-reaching rates and isolated cases of workspace exits or collisions. However, no trend can be identified that would allow conclusions to be drawn about the parameter configuration. Overall, the agents learned to avoid dynamic obstacles more effectively than static ones. This can probably be explained by the fact that, in CA1, obstacles usually block the path to the goal, necessitating evasive trajectories from the beginning. In contrast, CA2 has scenario variations in which agents can reach the goal without evading any obstacles.

To evaluate the robustness of the C_1_3 configuration, it was also tested under environmental disturbances (C_1_3_D1), conditions it had not encountered during initial training. Under these disturbed conditions, the agent’s target achievement rate decreased. The collision rate increased to , and the agent exited the workspace in of the episodes. To improve performance, the agent was retrained for an additional 400 episodes with these disturbances present (C_1_3_D2). This retrained configuration achieved a target-reaching rate of , a notable improvement. This result suggests that curriculum learning, i.e., a gradual increase in scenario complexity, enhances the agent’s performance. However, collisions still occurred in of cases. For comparison, we also trained agents with disturbances present from the beginning of the training. This approach yielded substantially poorer performance and was therefore not pursued further.

Figure 10a presents box plots of the theoretical mean velocity and minimum obstacle distance from the CA2 evaluation episodes. Similar to the CA1 scenario, these results reveal a clear dependency on the reward function parameters. Increasing the obstacle avoidance weight, (indicated by symbol shape), results in more cautious agent behavior. Consequently, the agent maintains a greater distance from the obstacle, which reduces the theoretical mean velocity at which it reaches the target position. In contrast, increasing the target course weight, (indicated by color), does not significantly influence the mean values for velocity or distance. However, a larger parameter value generally correlates with a greater spread in both metrics. Both agents tested under environmental disturbance conditions, C_1_3_D1 and C_1_3_D2, show a marginally higher data dispersion in their results without a statistically significant difference between them.

Theoretical mean velocity and minimum distance to obstacles are plotted against each other in a scatter diagram (see Figure 10b). The results reveal a trade-off between minimum obstacle distance and theoretical mean speed: the closer the WAMV passes by the obstacle, the faster it moves to the goal. Considering the approximate length of the WAMV, which is about , all depicted minimum distances can be considered as non-critical. The mean minimum distances to the dynamic obstacle vary between and .

To further analyze the safety performance of the trained agents in dynamic encounters, Figure 11 presents a scatter plot of the minimum TTC against the PET for three distinct agent configurations. These metrics are critical for quantifying the risk associated with each encounter, where lower values indicate a higher collision risk.
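To make the TTC metric concrete, the following sketch computes a time to collision under a common simplifying assumption that is not confirmed by the article: both vessels keep their current velocity, and a collision occurs when their centers come within a combined safety radius. The function names and the `safety_radius` parameter are hypothetical, and PET is not covered here because it depends on how the conflict area is defined.

```python
import math

Vec = tuple[float, float]


def time_to_collision(p_agent: Vec, v_agent: Vec, p_obs: Vec, v_obs: Vec,
                      safety_radius: float) -> float:
    """Time until the center distance drops to safety_radius (inf if it never does)."""
    # Relative position and velocity of the obstacle with respect to the agent.
    rx, ry = p_obs[0] - p_agent[0], p_obs[1] - p_agent[1]
    vx, vy = v_obs[0] - v_agent[0], v_obs[1] - v_agent[1]
    # Solve |r + v t|^2 = R^2, i.e. a t^2 + b t + c = 0.
    a = vx * vx + vy * vy
    b = 2.0 * (rx * vx + ry * vy)
    c = rx * rx + ry * ry - safety_radius ** 2
    if c <= 0.0:
        return 0.0  # already inside the safety radius
    if a == 0.0:
        return math.inf  # no relative motion
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return math.inf  # paths never come within the safety radius
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    return t if t >= 0.0 else math.inf  # negative root: vessels are moving apart


def minimum_ttc(states: list[tuple[Vec, Vec, Vec, Vec]], safety_radius: float) -> float:
    """Smallest TTC over all recorded (p_agent, v_agent, p_obs, v_obs) samples."""
    return min(time_to_collision(*s, safety_radius) for s in states)
```

Taking the minimum over all timesteps of an episode yields one value per encounter, which corresponds to one point on the TTC axis of such a scatter plot.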

Agents C_1_3 and C_2_1, which were neither trained nor validated with environmental disturbances, demonstrate robustly safe behavior. Their encounters predominantly populate regions of higher TTC and PET values, and neither agent registered a collision during the 200 validation episodes. A subtle difference is observable between them, as C_1_3 generally operates with lower TTC values compared to C_2_1. In contrast, the agent C_1_3_D2, which underwent additional training with disturbances and was validated under the same conditions, exhibits a notable shift in its operational characteristics. Its data points are more densely clustered in the lower-left quadrant of the plot, with a visible shift towards lower PET values, indicating that the agent struggles to maintain large safety margins when subjected to environmental disturbances. This performance degradation is further evidenced by the three collisions this agent experienced during validation. The results highlight a clear challenge in achieving robustness; while the agent learns to operate in disturbed conditions, it does so with a reduced safety envelope, revealing a difficult trade-off between operational capability and consistent collision avoidance.

In summary, the proposed formulation for the reinforcement learning agents enables effective collision avoidance not only with static obstacles but also in scenarios involving dynamically moving obstacles. It should be noted, however, that in some cases avoiding collisions with dynamic obstacles may be easier than with static ones. In static scenarios, there is almost always at least one obstacle directly in the path of the vessel. In contrast, the defined dynamic obstacle avoidance setup includes scenario variations in which evasive maneuvers are not strictly necessary to prevent a collision. This is also reflected in the training outcomes: success rates in dynamic obstacle avoidance are consistently high, and preliminary experiments indicate that pre-training without obstacles would not have been required to achieve reliable performance. Next, combined scenarios involving both static and dynamic obstacles are considered. These represent a fusion of CA1 and CA2 and are designed to closely approximate real-world conditions.

3.3. Scenario CA3—Multi-Hindrance Scenario

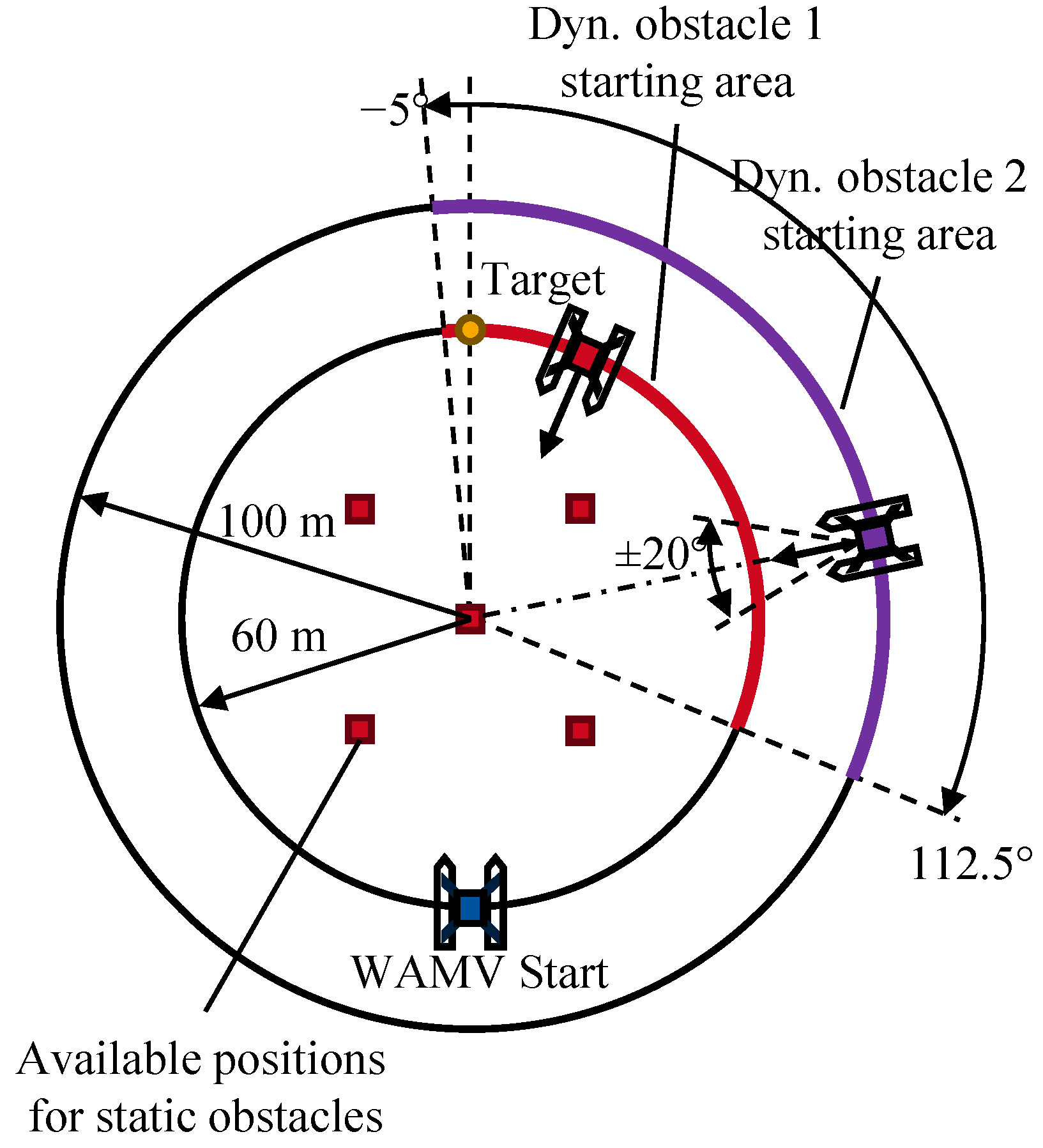

The final scenario, CA3, assesses the agent’s performance in a complex multi-hindrance environment combining both static and dynamic obstacles, as described in

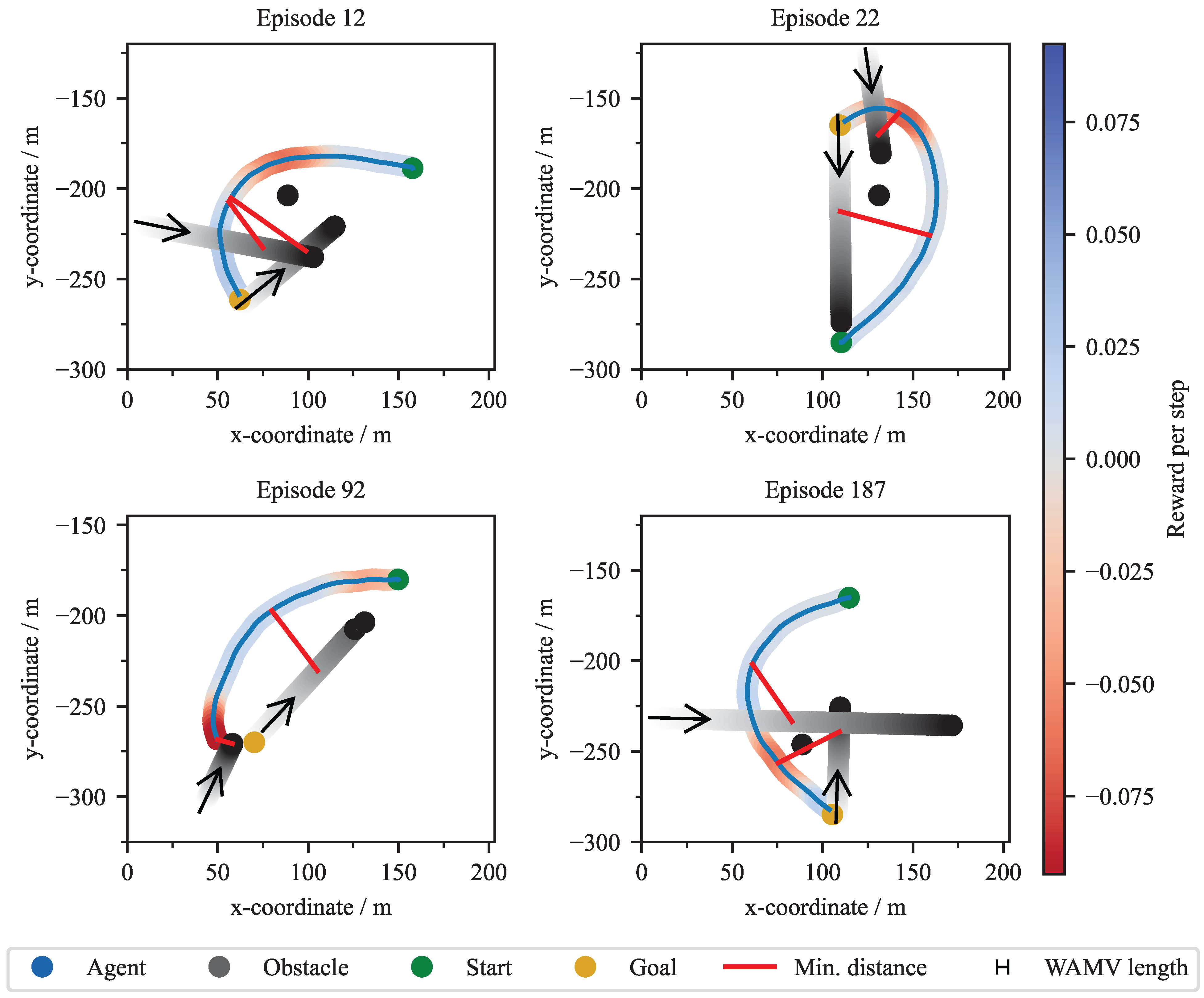

Section 2.2.4. Exemplary validation trajectories for the

C_1_1 agent are depicted in

Figure 12. These plots illustrate the agent’s capacity to navigate complex encounters. The agent successfully develops avoidance strategies, such as crossing behind the path of dynamic obstacles (Episode 12 and 92) or navigating between them (Episode 22). The red connecting lines mark the minimum distance between the WAMV and the respective dynamic objects. The direction of the black arrow corresponds to the direction of movement of the obstacle. This scenario’s difficulty is also highlighted by Episode 187, which terminates in a collision with a dynamic obstacle.

A statistical summary of the performance across all reward configurations is provided in Table 4. Due to the increased scenario complexity, overall success rates are slightly lower and more variable than in the single-obstacle scenarios. Target-reaching rates for agents trained without disturbances remained high, generally between (C_2_3) and (C_2_2). Unlike the static-only CA1 scenario, failure was not dominated by workspace violations or time-outs, which were minimal across all configurations. Instead, the primary failure mode was direct collision, with rates ranging from (C_3_1) to (C_2_3).

The impact of environmental disturbances is significant. The C_2_1_D1 agent, which was validated under disturbances without prior training, experienced a substantial performance drop: its success rate fell from to , while its collision rate increased from to . In contrast, the C_2_1_D2 agent, which underwent an additional 400 episodes of curriculum learning with disturbances, demonstrated robustness. This agent restored its target-reaching success rate to and reduced its collision rate to , performing comparably to the original agent in ideal conditions. This result supports the curriculum learning approach, in which scenario complexity is gradually increased to achieve robust policy generalization.

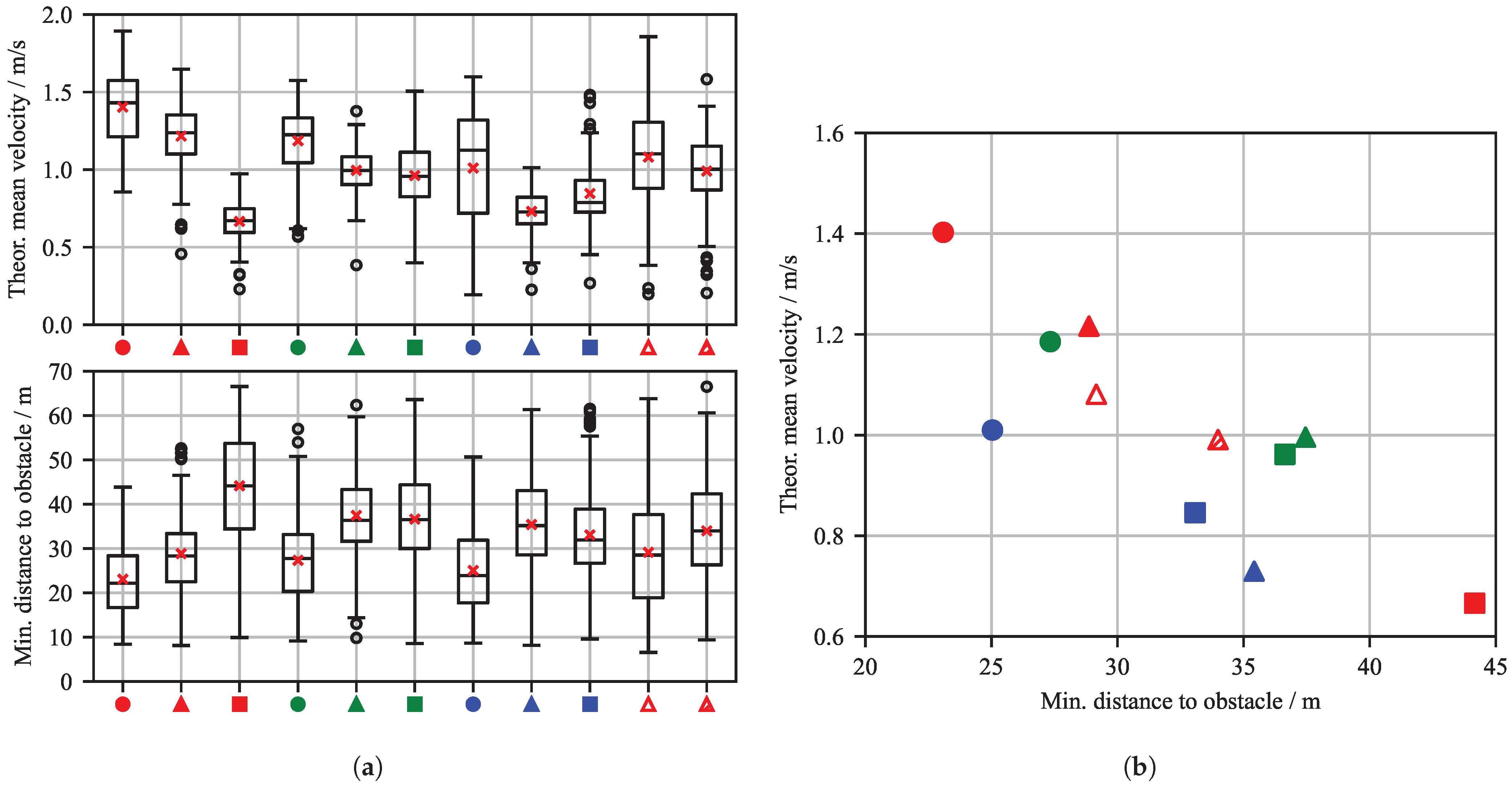

The trade-off between mission efficiency and safety margins is quantitatively mapped in Figure 13. The box plots in Figure 13a confirm the influence of the reward shaping parameters. Agents with a higher obstacle avoidance weight (e.g., C_3_1, C_3_3) consistently maintain a larger minimum distance to obstacles, adopting a more cautious policy. The scatter plot in Figure 13b visualizes this compromise directly. An inverse relationship exists between navigation speed and safety clearance. For instance, the C_1_1 agent achieves a high mean velocity of but maintains a tighter average distance of approximately . Conversely, the highly cautious C_3_1 agent achieves a large minimum distance at the cost of a significantly reduced mean velocity of only .

Figure 13a also reinforces the disturbance training findings: the C_2_1_D1 agent (unfilled triangle) exhibits high variance in both velocity, with a standard deviation of , and distance, with a standard deviation of . In contrast, the retrained C_2_1_D2 agent (hatched triangle) shows a much more consistent and stable performance. Its standard deviations are for velocity and for minimum obstacle distance. This is similar to the baseline C_2_1, which shows standard deviations of and , respectively.

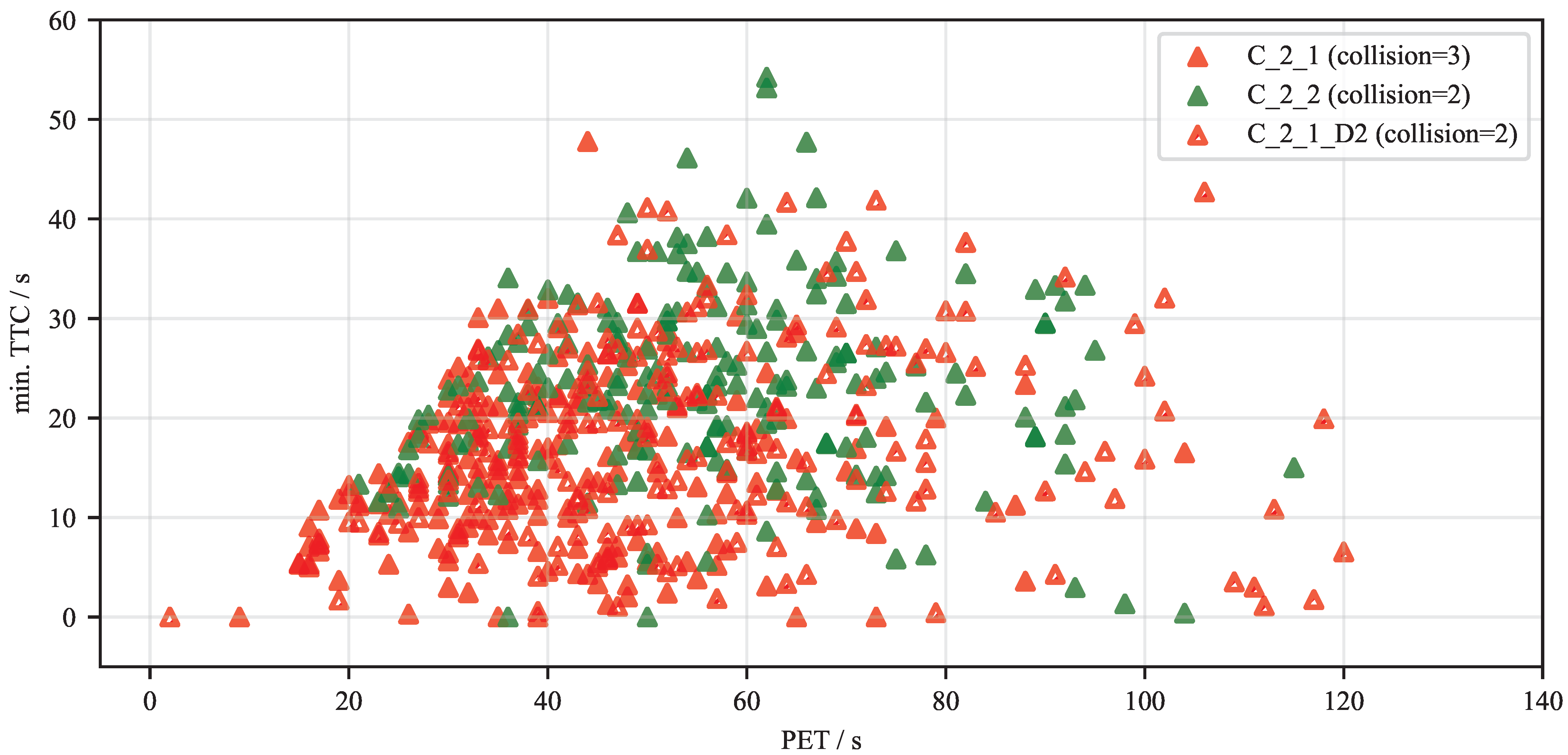

To further analyze the safety characteristics of the agents in the multi-hindrance scenario, Figure 14 plots the minimum TTC against the PET for three configurations. This analysis reveals nuanced differences in their avoidance behaviors. The C_2_2 agent, for instance, generally exhibits higher PET values compared to the other agents, indicating that its policy favors maneuvers that create greater temporal separation from obstacles, even at the cost of accepting a wide range of TTCs.

In contrast, the agent trained with disturbances, C_2_1_D2, displays a significantly higher variance in both its TTC and PET values. This increased scatter suggests that while the agent can still operate effectively, the environmental disturbances introduce a degree of unpredictability, making it more difficult to maintain consistent safety margins. The most critical encounters are represented by data points where the TTC approaches zero. These instances correspond directly to the recorded collisions—three for C_2_1, two for C_2_2, and two for C_2_1_D2—as well as other high-risk, near-miss situations, underscoring the residual risk present even in well-trained policies when faced with complex, dynamic scenarios.