1. Introduction

Hydraulic structures (e.g., dams, gates, and pipelines) are critical facilities for hydraulic safety and exploration [1]. Underwater structures are susceptible to the initiation and accelerated growth of defects, particularly cracks, holes, and spalling, owing to erosion induced by high-velocity flow, severe stress concentrations, and harsh service conditions. If unaddressed, these defects pose serious safety risks and can lead to substantial economic losses and significant environmental damage. Automatic detection of multiple underwater structural defects has therefore emerged as a crucial technology for inspecting hydraulic structures [2]. However, underwater defects differ markedly from terrestrial objects. In particular, common structural defects (cracks, holes, and spalling) show pronounced intra-class variability in surface appearance, in-plane (planform) morphology, and subsurface (through-thickness) extent. Representing this variety of defects comprehensively is therefore a central challenge for underwater detection, and the low visibility of complex underwater environments exacerbates it further.

To motivate the sensing modality adopted in this study, we first situate sonar within the broader landscape of non-destructive testing (NDT) methods for hydraulic structures. Common NDT techniques include ultrasonic testing and impact-echo, which provide material-level diagnostics but typically require contact or careful coupling and are difficult to scale to large underwater areas. Magnetic particle/dye-penetrant methods are limited to specific materials and surface-breaking defects on prepared surfaces. Optical/laser imaging can deliver high spatial detail in clear water but suffers in turbidity and low illumination. In contrast, sonar imaging is an active, stand-off modality that remains effective in turbid water, offers longer imaging ranges suitable for AUV/ROV surveys, and is intrinsically sensitive to geometric discontinuities (e.g., edges, cavities, delamination) that characterize cracks, holes, and spalling. Sonar’s limitations—speckle noise, clutter, and coarser spatial resolution—motivate the method proposed in this work, which strengthens morphological representation, enforces cross-scale consistency, and selectively emphasizes salient defect cues in noisy acoustic backgrounds. Guided by this rationale, we next detail the forward-looking sonar (FLS) acquisition setting and its operational constraints, which directly inform the design choices of our sonar-based structural-defect object detector.

Recently, autonomous underwater vehicles (AUVs) equipped with an FLS imaging system have become a crucial instrument for inspecting hydraulic structures [3], because acoustic imaging largely avoids the problems caused by light attenuation and scattering. Despite its long imaging range, FLS still faces challenges for underwater defect detection [4,5,6,7]. First, the low spatial resolution of sonar imaging makes small and weak defects difficult to identify; for example, holes and fine cracks are often low-contrast and poorly resolved in sonar imagery. Second, sonar acquisitions are contaminated by speckle and reverberation, which lower the signal-to-noise ratio (SNR) and impair the fidelity and separability of defect signatures. These limitations of sonar imaging, together with the inherent variability of underwater structural defects, make underwater defect detection highly challenging. To address these challenges, considerable effort has been devoted to enhancing the feature representation of multiple defect types. Because sonar images are monochromatic and dominated by texture, previous methods [8,9,10,11,12,13] largely rely on handcrafted low-level features, e.g., edges and textures. Although these features are semantically interpretable, their limited generalization prevents them from reaching high detection accuracy. Deep learning mitigates this issue by learning, from data, features that adapt to complex underwater scenes. Nonetheless, with limited data, any high-capacity model (not only a deep network) can overfit, biasing the detector toward a narrow range of defect appearances. Moreover, supervised deep learning depends on sufficient labeled data; in hydraulic inspections, where annotations are scarce, model generalization remains constrained unless more training data are curated.

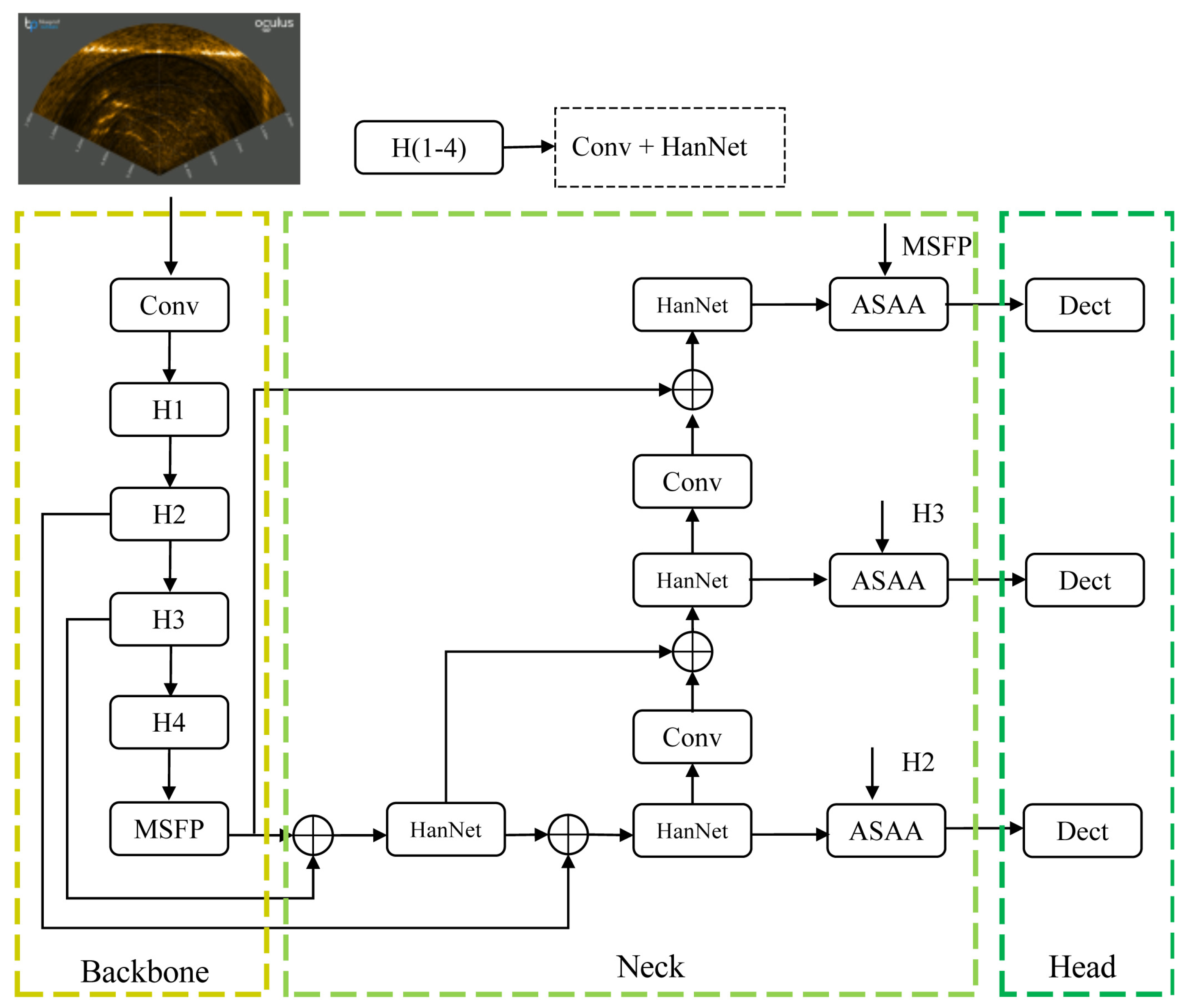

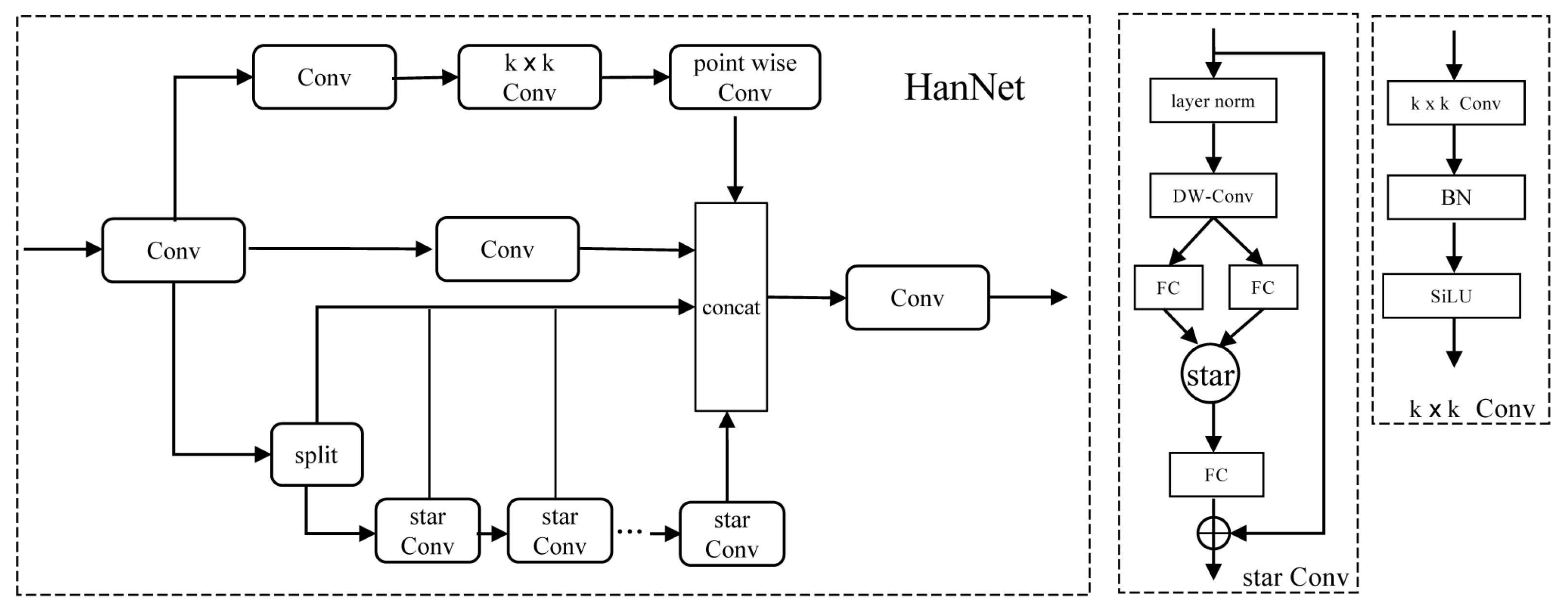

Focusing on three typical underwater structural defects, i.e., cracks, holes, and spalling, this paper addresses the above issues from two perspectives. First, we conducted underwater inspection tests to increase the number of sonar images of multiple defect types, providing a comprehensive dataset for training defect detection models. Second, we propose a novel hybrid neural network that represents typical underwater structural defects more completely by adaptively fusing multiple types of feature representation. The main contributions of this work are summarized as follows:

- (1) An underwater structural defect dataset of 2500 sonar images is built from underwater inspection tests in a real-world water body, providing enough samples to train most deep learning detection models.

- (2) A hybrid neural network is proposed that integrates multi-scale, multi-type convolutions to enrich the variety of extracted features, enhancing feature representation for different types of underwater structural defects.

- (3) An adaptive spatial-aware attention (ASAA) module is introduced, which dynamically adjusts the receptive field to selectively fuse discriminative features for downstream defect detection.
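Contribution (3) describes attention that adjusts the receptive field dynamically. The internals of ASAA are not given in this section, so as a hedged illustration of one established way to choose between receptive fields, the NumPy sketch below implements Selective Kernel (SKNet-style) fusion: branch outputs are summed, globally pooled, squeezed, and turned into per-channel softmax weights over branches. It is not the ASAA module; the branch count, reduction size `d`, and random weights are hypothetical.

```python
import numpy as np


def selective_kernel_fusion(branches, w_reduce, w_select):
    """Fuse K branch feature maps with per-channel softmax weights (SKNet-style).

    branches: (K, C, H, W), e.g. outputs of a 3x3 conv and a dilated 3x3 conv.
    w_reduce: (d, C) matrix squeezing the pooled descriptor to d dimensions.
    w_select: (K, C, d) matrices producing one logit per branch and channel.
    """
    u = branches.sum(axis=0)                      # element-wise sum of branches: (C, H, W)
    s = u.mean(axis=(1, 2))                       # global average pooling: (C,)
    z = np.maximum(w_reduce @ s, 0.0)             # compact descriptor with ReLU: (d,)
    logits = np.einsum("kcd,d->kc", w_select, z)  # branch logits per channel: (K, C)
    logits -= logits.max(axis=0, keepdims=True)   # numerical stability for softmax
    attn = np.exp(logits)
    attn /= attn.sum(axis=0, keepdims=True)       # softmax across branches: (K, C)
    fused = (attn[:, :, None, None] * branches).sum(axis=0)
    return fused, attn


rng = np.random.default_rng(0)
K, C, H, W, d = 2, 8, 16, 16, 4                   # hypothetical sizes
branches = rng.standard_normal((K, C, H, W))
fused, attn = selective_kernel_fusion(
    branches, rng.standard_normal((d, C)), rng.standard_normal((K, C, d)))
print(fused.shape, np.allclose(attn.sum(axis=0), 1.0))
```

Because the branch weights sum to one per channel, each output channel is a convex combination of the branches, so the effective receptive field can shift toward the larger kernel where it helps.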

The remainder of this paper is organized as follows. Section 2 reviews related work on underwater structural defect detection and underwater sonar image object detection. Section 3 introduces the project background and the underwater sonar defect dataset (USDD). Section 4 presents the novel hybrid neural network in detail. Section 5 reports experimental results along with a comprehensive analysis. Finally, Section 6 concludes this paper.

2. Related Work

This study addresses object detection of structural defects (crack, hole, spalling) in sonar imagery. Accordingly, we organize this section into two parts: (i) multimodal object detection for structural defects, which surveys methods across optical and sonar modalities; and (ii) sonar object detection for underwater structures, which focuses specifically on acoustic imaging methods and sonar-specific challenges. Both subsections review object detection; the distinction is breadth across modalities vs. sonar-specific depth.

2.1. Multimodal Object Detection for Structural Defects

Underwater structural defects have become a major source of risk in hydraulic engineering, and underwater imaging is the most efficient means of detecting them rapidly. Optical and sonar imaging are the two representative modalities in this domain. Owing to their high spatial resolution and acquisition efficiency, optical systems have been widely adopted for inspecting hydraulic structures. However, underwater optical imaging suffers from severe light absorption and scattering, which attenuate and distort image information and thereby markedly reduce defect detection accuracy. To solve this problem, previous methods commonly employ mathematical techniques to enhance optical image quality. Underwater image enhancement techniques generally fall into three categories: contrast compensation, color correction, and hybrid methods. For contrast compensation, simple histogram equalization proved highly effective in the early stage, and more complex methods, e.g., empirical mode decomposition and biological vision-inspired methods, were proposed later. These methods have been evaluated for underwater defect detection and have proven effective at suppressing background clutter. Color-correction approaches, in particular, show promising performance in enhancing imagery of underwater concrete structures and improving the visibility of defects. Because contrast compensation and color correction are complementary, many hybrid methods have been proposed to enhance underwater image quality comprehensively [14,15]. Other traditional techniques, such as mathematical morphology [16], have also been used in underwater defect detectors. These mathematical models are lightweight, but they struggle to adapt to complex hydraulic engineering environments, particularly turbid waters and high-flow-rate areas. In addition, these conventional methods have low detection efficiency and are often impractical for real-world tasks. More recently, deep learning-based underwater image enhancement models have been used in underwater structural defect detection and have outperformed traditional mathematical models [17,18]. The main advantage of these learning-based methods is their applicability to a wide range of underwater tasks, and they have attracted significant attention, primarily for underwater image enhancement and object detection. Typical deep learning models, e.g., convolutional neural networks (CNNs) and generative adversarial networks (GANs), have been used to enhance the quality of underwater defect images: they learn a mapping from degraded underwater images to high-quality counterparts, correcting issues such as blurriness, uneven illumination, and color distortion. Deep learning models have also been applied to build defect detectors [19]. Typical deep learning-based detectors, e.g., Faster R-CNN, YOLO, and SSD, have been evaluated for underwater defect detection, localizing structural defects from learned image features. For example, Zhuang et al. [20] demonstrated that domain-tailored deep learning models improve the recognition of structural damage levels after earthquakes.
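Histogram equalization, cited above as an early contrast-compensation method, remaps each gray level through the normalized cumulative histogram so that intensities spread over the full range. A minimal NumPy sketch for 8-bit grayscale images follows; the synthetic low-contrast test image is hypothetical.

```python
import numpy as np


def equalize_histogram(img):
    """Global histogram equalization for an 8-bit grayscale image (2-D uint8 array)."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]                     # first non-zero CDF value
    denom = cdf[-1] - cdf_min
    if denom == 0:                                # constant image: nothing to stretch
        return img.copy()
    # Look-up table mapping each input level to its equalized level.
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
    return lut[img]


# Synthetic low-contrast image whose intensities occupy only [100, 119].
low_contrast = (np.arange(400).reshape(20, 20) % 20 + 100).astype(np.uint8)
out = equalize_histogram(low_contrast)
print(low_contrast.min(), low_contrast.max(), out.min(), out.max())  # 100 119 0 255
```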

In underwater defect detection, sonar is a promising tool because it overcomes the problems caused by light attenuation and scattering. Compared with underwater optical imaging, sonar imaging offers a longer imaging range and a better representation of geometric shape. Sonar-based detection generally requires speckle-noise removal but does not depend on computationally complex image enhancement. Traditional sonar-based underwater defect detection relies on visual inspection and operators' experience with structural defect types. However, sonar images differ from human perceptual intuition, which makes defect features considerably difficult to interpret accurately. To address these difficulties, deep learning models have been investigated for underwater defect detection. Deep learning-based sonar image detection extracts and learns defect features autonomously, eliminating the need for handcrafted feature design. Methodologically, the deep learning architectures used for sonar images are similar to those used for underwater optical images [21]. For example, the aforementioned CNN and YOLO models have been evaluated on sonar imagery and have shown promising performance in underwater structural defect detection.
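Speckle-noise removal is mentioned above without a specific filter. As an illustration only, the NumPy sketch below applies one common baseline, a 3×3 median filter, to a synthetic image corrupted by multiplicative exponential noise (assumed here as a simple stand-in for fully developed speckle). The median reduces variance but also shifts mean intensity downward under this noise model, which is one reason adaptive speckle filters such as Lee or Frost are often preferred.

```python
import numpy as np


def median_filter(img, size=3):
    """Median filter with edge padding, a simple baseline for speckle suppression."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    # Stack every shifted view of the window, then take the per-pixel median.
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(size) for dx in range(size)]
    return np.median(np.stack(windows), axis=0)


rng = np.random.default_rng(0)
clean = np.full((32, 32), 0.5)
speckled = clean * rng.exponential(1.0, clean.shape)   # multiplicative, speckle-like noise
filtered = median_filter(speckled)
print(np.std(filtered) < np.std(speckled))
```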

In conclusion, deep learning shows promising performance in underwater structural defect detection, yet its practical application is currently hindered by the small size of underwater defect datasets. Moreover, hydraulic structure inspection is commonly conducted in the field, where the computational resources required by deep learning models can hardly be deployed.

2.2. Sonar Object Detection for Underwater Structures

This subsection details sonar image detection models for general underwater objects. YOLO-family detectors, e.g., PP-YOLOv2 [22] and YOLOv3 [23], have been widely adopted in sonar image processing owing to their real-time capability. Building on the YOLO baseline, numerous attention mechanisms and denoising modules have been introduced to strengthen feature representation, achieving a favorable accuracy–efficiency balance. In addition, hybrid architectures [24] have been proposed that combine YOLO with Transformer architectures [25]. These hybrid architectures have been deployed on remotely operated vehicles (ROVs) [26], demonstrating their practical performance. To better capture global context, Transformer architectures have also been applied to sonar image processing; studies such as [27] have reported performance improvements using Vision Transformers (ViT-B/16) and Swin Transformers (Swin-T).

Complementary to these detector-centric advances, Tan et al. [28] presented a deep learning-assisted high-resolution sonar pipeline, together with a task-specific dataset, that effectively detects local damage in underwater structures, highlighting the importance of tailored data and feature design for acoustic imaging. From a deployment perspective, Zheng et al. [29] developed a real-time sonar-based crack detector for underwater concrete, emphasizing the latency and resource constraints typical of field operations. To reduce model complexity, pruning techniques, e.g., Batch Normalization-based channel pruning [30] and DepGraph [31], have been applied to underwater sonar image detection.
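Pruning based on Batch Normalization usually refers to network-slimming-style channel pruning, which ranks channels by the magnitude of their BN scale factors (gamma). The sketch below shows only the channel-selection step with a single global threshold; the layer names, gamma values, and pruning ratio are hypothetical, and the fine-tuning that normally follows pruning is omitted.

```python
def select_channels_by_bn_gamma(gammas_per_layer, prune_ratio=0.5):
    """Network-slimming-style channel selection from BatchNorm scale factors.

    gammas_per_layer: dict layer_name -> list of BN gamma values (one per channel).
    One global threshold is chosen so that roughly `prune_ratio` of all channels
    (those with the smallest |gamma|) are marked for removal.
    Returns dict layer_name -> indices of channels to keep.
    """
    all_abs = sorted(abs(g) for gs in gammas_per_layer.values() for g in gs)
    k = int(len(all_abs) * prune_ratio)
    threshold = all_abs[k] if k < len(all_abs) else float("inf")
    keep = {}
    for name, gs in gammas_per_layer.items():
        idx = [i for i, g in enumerate(gs) if abs(g) >= threshold]
        if not idx:  # never remove every channel of a layer
            idx = [max(range(len(gs)), key=lambda i: abs(gs[i]))]
        keep[name] = idx
    return keep


gammas = {"conv1": [0.9, 0.01, 0.5, 0.02], "conv2": [0.03, 0.7, 0.04, 0.8]}
print(select_channels_by_bn_gamma(gammas))  # {'conv1': [0, 2], 'conv2': [1, 3]}
```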

In conclusion, sonar image-based underwater object detection has broad room for development, yet relatively little work has been devoted to it, largely because few datasets are available. Existing underwater detection techniques mainly adapt object detectors developed for terrestrial imagery, and this strategy is problematic for three reasons. First, mature object detection models are designed primarily for color optical images and are therefore ill-suited to the sonar modality. Second, these models are developed on terrestrial images and do not account for the degradations introduced by complex underwater imaging environments. Third, the morphological differences between underwater and terrestrial objects degrade detection performance. These gaps call for detectors designed natively for acoustic imagery rather than direct reuse of color-image heuristics. Motivated by this, we develop a sonar-oriented architecture that emphasizes morphological fidelity, parameter-efficient multi-scale fusion, and robustness to speckle and clutter. Unlike prior detectors that rely on a single convolutional morphology, scale-independent pyramids, or generic attention, our pipeline explicitly targets three sonar-specific challenges (morphological diversity, scale variation, and speckle/clutter) through HanNet (multi-morphology aggregation), MSFP (a parameter-shared pyramid), and ASAA (sonar-aware spatial attention), respectively. Together with USDD (2,500 images) and a unified evaluation protocol, this design clarifies where the gains originate and supports reproducible comparisons.

3. Underwater Structural Defect Dataset

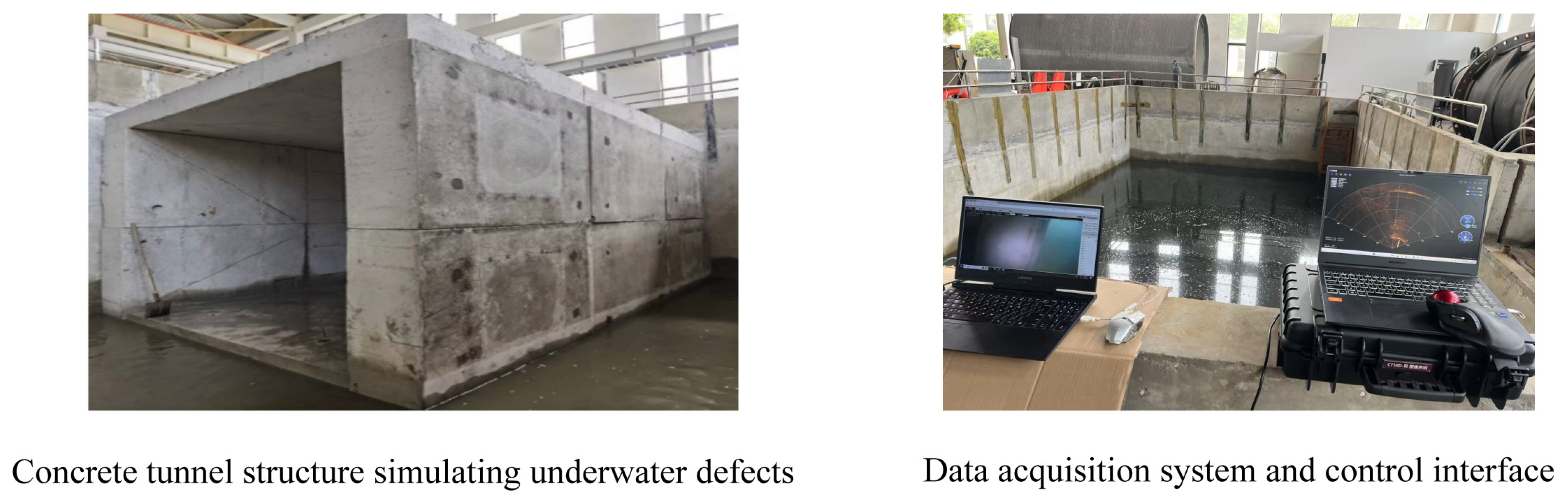

As mentioned above, most existing intelligent detection technologies rely heavily on data-driven training. To address this, we developed a comprehensive underwater structural defect dataset specifically designed for model fine-tuning and parameter optimization. Unlike previous datasets that focus on a single defect type, the proposed dataset incorporates multiple defect types, providing sufficient samples of typical defects, i.e., cracks, holes, and spalling, so that it can accommodate diverse hydraulic inspection tasks. To this end, an engineering simulation scenario was established at the Dangtu Scientific Research and Technology Development Base of Nanjing Hydraulic Research Institute. The experimental water was collected from the main stream of the Huai River to ensure natural hydrological characteristics. The experimental site comprises a reinforced test pool measuring 11.0 m × 5.9 m × 4.2 m (length × width × depth), of which 3.4 m lies below ground level, topped by a 0.8 m high surrounding wall reinforced with carbon fiber cloth. Simulated hydraulic structures, including tunnels and dams, were constructed of C30 concrete. The underwater tunnel measures 6.0 m × 4.0 m × 3.3 m (length × width × height), with one side attached to the pool wall and the tunnel end located 1.3 m from the boundary. A 5.9 m × 5.0 m water space was reserved as the deployment area for the underwater sonar imaging platform. Typical defects, i.e., cracks, holes, and spalling, are embedded in the interior walls, ceiling, and floor. Removing the ceiling panel converts the tunnel scene into a dam inspection scenario. The tunnel provides a clear internal width of 3.0 m and height of 2.08 m, offering sufficient operational space for the platform and its manipulator arm. The experimental platform and the data collection process are presented in Figure 1. This facility offers a standardized and controlled environment for dataset acquisition.
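The reported dimensions can be cross-checked with simple arithmetic. The sketch below rests on two assumptions that are our reading rather than statements in the text: the 5.0 m side of the deployment area equals the pool length minus the tunnel length, and the tunnel side walls have equal thickness.

```python
# Cross-check of the reported facility dimensions (all values in metres).
pool_length, pool_width = 11.0, 5.9
below_ground, wall_height = 3.4, 0.8
tunnel_length = 6.0
tunnel_outer_width, tunnel_outer_height = 4.0, 3.3
tunnel_clear_width, tunnel_clear_height = 3.0, 2.08

pool_depth = below_ground + wall_height        # 3.4 + 0.8 = 4.2 m, the stated depth
free_length = pool_length - tunnel_length      # 11.0 - 6.0 = 5.0 m (assumed reading)
deployment_area = pool_width * free_length     # 5.9 m x 5.0 m = 29.5 m^2
# Assumes equal side walls; floor and ceiling are only recoverable as a sum.
side_wall = (tunnel_outer_width - tunnel_clear_width) / 2    # 0.5 m per side
slabs_total = tunnel_outer_height - tunnel_clear_height      # 1.22 m (floor + ceiling)

print(f"depth {pool_depth:.1f} m, free length {free_length:.1f} m, "
      f"deployment area {deployment_area:.1f} m^2, side wall {side_wall:.2f} m, "
      f"slabs {slabs_total:.2f} m")
```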

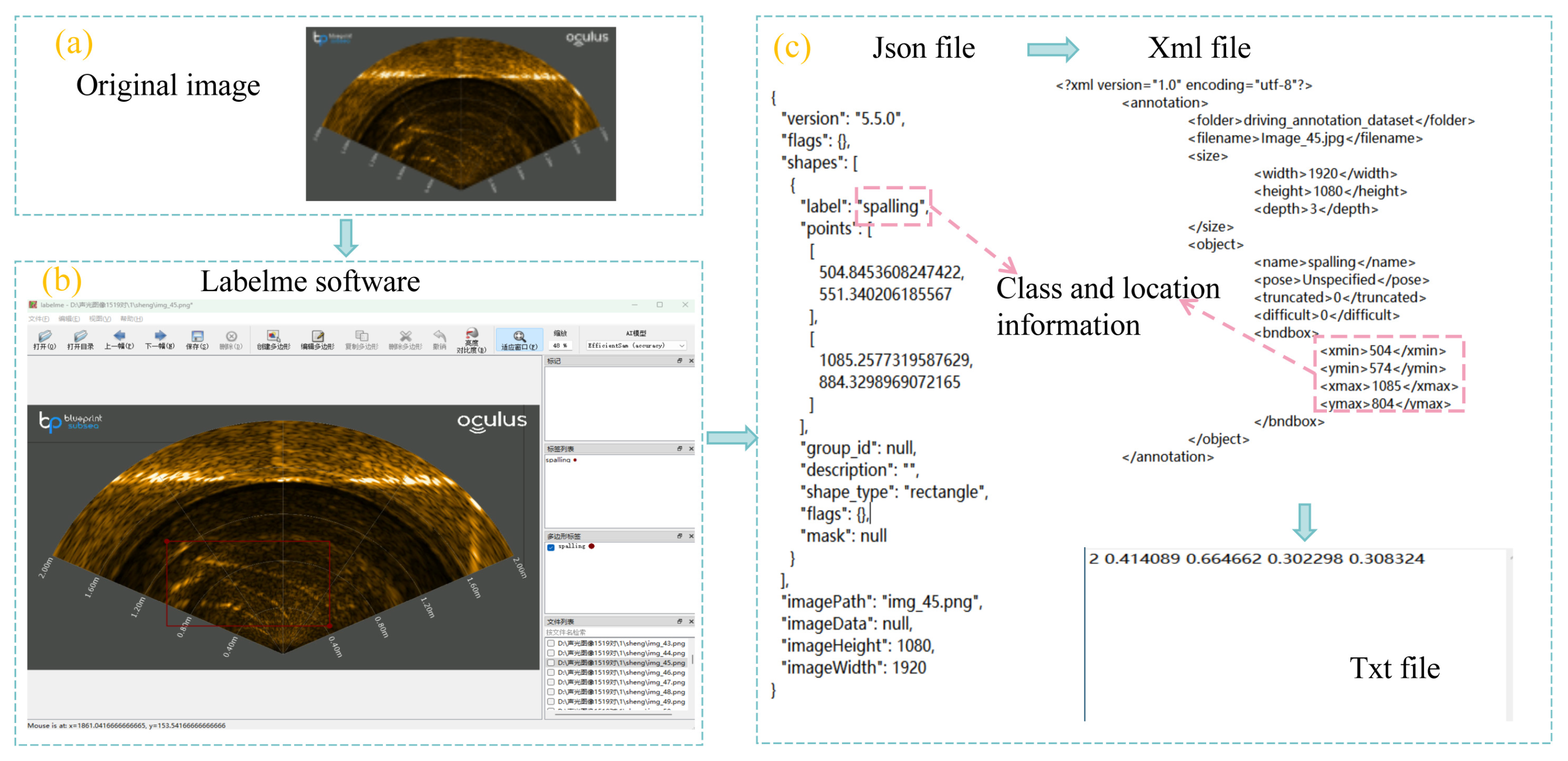

To enrich the underwater data available for model training, an underwater sonar defect dataset (USDD) was constructed. The raw data used in this study were extracted from underwater inspection videos recorded at the hydraulic engineering testing facility. Video frames were sampled to generate static images for further processing; specifically, frames were collected at a rate of 0.1 frames per image to reduce redundancy and improve dataset diversity. In total, 2500 images were obtained, each with a fixed resolution of 1920 × 1080 pixels. The images were manually annotated with LabelImg v1.8.6, enclosing the full extent of each structural defect in a horizontal (axis-aligned) bounding box. The annotations were saved as XML files following the PASCAL VOC standard, and every image contains at least one defect label. After annotation, all images were normalized using the computed mean and standard deviation. The XML annotation files were then converted to TXT format for compatibility with object detection training pipelines. The data labeling process is illustrated in Figure 2.
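The XML-to-TXT conversion is not specified further. Assuming the common YOLO text format (one line per box: class index, then box center and size normalized by image width and height), a stdlib-only sketch looks like this; the class-index mapping is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical class order; the index mapping actually used for USDD is not stated.
CLASSES = ["crack", "hole", "spalling"]


def voc_to_yolo(xml_text, classes=CLASSES):
    """Convert one PASCAL VOC annotation into YOLO-style text lines.

    Each line is: class_id x_center y_center width height,
    with coordinates normalized by image width and height.
    """
    root = ET.fromstring(xml_text)
    img_w = float(root.find("size/width").text)
    img_h = float(root.find("size/height").text)
    lines = []
    for obj in root.iter("object"):
        cls_id = classes.index(obj.find("name").text.strip().lower())
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(k).text)
                                  for k in ("xmin", "ymin", "xmax", "ymax"))
        xc = (xmin + xmax) / 2 / img_w
        yc = (ymin + ymax) / 2 / img_h
        w = (xmax - xmin) / img_w
        h = (ymax - ymin) / img_h
        lines.append(f"{cls_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}")
    return "\n".join(lines)


sample = """<annotation>
  <size><width>1920</width><height>1080</height><depth>3</depth></size>
  <object><name>crack</name>
    <bndbox><xmin>960</xmin><ymin>540</ymin><xmax>1152</xmax><ymax>648</ymax></bndbox>
  </object>
</annotation>"""
print(voc_to_yolo(sample))  # 0 0.550000 0.550000 0.100000 0.100000
```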

5. Experiments and Analysis

An ablation study was conducted on the intermediate outputs of the proposed hybrid neural network to examine the layer-wise contribution of each module to defect detection. Moreover, our model was compared with other state-of-the-art methods using both quantitative metrics and qualitative visual comparisons. Together, these experiments validate the effectiveness and efficiency of the proposed network.

5.1. Experimental Environment

The experimental environment is as follows: an Intel Core i7-14700KF CPU, an NVIDIA GeForce RTX 4070 Ti GPU, and 32 GB of DDR4-3200 MHz RAM. We used the PyTorch 1.10.2 deep learning framework with CUDA 11.3 and cuDNN 8.0, within the PyCharm 2021.3 integrated development environment.

5.2. Training Strategy

The dataset is partitioned into training/validation/test using a stratified 7:2:1 split at the image level to preserve per-class proportions (crack, hole, spalling). Unless otherwise noted, all methods are trained under a unified protocol: input ; Adam (, ), weight decay ; batch size 4; 200 epochs; cosine annealing of the learning rate () with a 10-epoch warm-up (). We fix the random seed (2024) for reproducibility and, where stated, report results as mean±std over repeated runs. To make the sampling rationale explicit and reproducible, we next summarize the class statistics of USDD and quantify label skew for crack, hole, and spalling. These summaries motivate our use of multi-label stratified sampling and provide the basis for the 7:2:1 split.
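The protocol above combines a 10-epoch warm-up with cosine annealing over 200 epochs. Because the base and minimum learning rates are not given here, the sketch uses hypothetical values (1e-3 and 1e-5) and assumes a linear warm-up, which is one common choice rather than a confirmed detail.

```python
import math


def lr_at_epoch(epoch, base_lr, min_lr=0.0, warmup_epochs=10, total_epochs=200):
    """Learning rate for a 0-indexed epoch: linear warm-up, then cosine annealing."""
    if epoch < warmup_epochs:
        # Ramp linearly from base_lr / warmup_epochs up to base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    # Cosine decay from base_lr (first post-warm-up epoch) to min_lr (last epoch).
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs - 1)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


# Hypothetical learning rates: the actual values are not stated in the text.
schedule = [lr_at_epoch(e, base_lr=1e-3, min_lr=1e-5) for e in range(200)]
```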

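We report the numbers of annotated instances for crack, hole, and spalling in Table 1 and quantify label skew using two complementary metrics:

$$
\mathrm{IR} = \frac{n_{\max}}{n_{\min}}, \qquad n_{\max} = \max_{i \in \mathcal{C}} n_i, \qquad n_{\min} = \min_{i \in \mathcal{C}} n_i,
$$

$$
H_{\mathrm{norm}} = -\frac{1}{\log |\mathcal{C}|} \sum_{i \in \mathcal{C}} p_i \log p_i, \qquad p_i = \frac{n_i}{\sum_{j \in \mathcal{C}} n_j},
$$

where $\mathrm{IR}$ denotes the Imbalance Ratio; $\mathcal{C}$ is the set of classes, $\mathcal{C} = \{\text{crack}, \text{hole}, \text{spalling}\}$, hence $|\mathcal{C}| = 3$; $i, j$ are class indices that iterate over the elements of $\mathcal{C}$; $n_i$ is the count for class $i$; $n_{\max}$ and $n_{\min}$ are the maximum and minimum class counts across $\mathcal{C}$; $p_i$ is the relative proportion of class $i$; and $H_{\mathrm{norm}}$ is the normalized entropy of the class distribution, taking values in $[0, 1]$, with $H_{\mathrm{norm}} = 1$ when all classes are equally represented ($p_i = 1/|\mathcal{C}|$ for all $i$) and $H_{\mathrm{norm}} = 0$ when all samples belong to a single class (using the convention $0 \log 0 = 0$).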
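Both skew metrics follow directly from per-class instance counts. The counts in this sketch are hypothetical placeholders, not the Table 1 statistics.

```python
import math


def imbalance_ratio(counts):
    """IR = n_max / n_min over per-class instance counts (all counts must be > 0)."""
    return max(counts.values()) / min(counts.values())


def normalized_entropy(counts):
    """H_norm = -(1 / log|C|) * sum_i p_i log p_i, in [0, 1]."""
    total = sum(counts.values())
    probs = [n / total for n in counts.values() if n > 0]  # convention: 0 * log 0 = 0
    h = -sum(p * math.log(p) for p in probs)
    return h / math.log(len(counts))


# Hypothetical counts for illustration only (not the USDD statistics in Table 1).
counts = {"crack": 100, "hole": 50, "spalling": 50}
print(imbalance_ratio(counts), round(normalized_entropy(counts), 4))  # 2.0 0.9464
```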

We adopt stratified sampling to preserve per-class proportions across subsets, with a fixed seed of 2024. We produce five independent splits and report mean ± std across runs. With 2500 images in total, the final partition sizes are 1750/500/250 for train/val/test, respectively. Our sonar images are class-imbalanced and some contain multiple defect types; random splitting can under-represent minority classes in val/test and inflate metric variance. Stratified splitting consistently reduces the standard deviation of mAP and per-class recall across the five runs (detailed numbers in Table 2). This practice is consistent with prior work that applies stratified sampling to imbalanced operational datasets [32].
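The exact multi-label stratification procedure is not described. The sketch below uses a simplified heuristic, an assumption rather than the authors' method: each image is assigned to a stratum keyed by its rarest defect class, and each stratum is shuffled and cut 7:2:1. The labels and the per-split seed scheme (2024 + k) are hypothetical; with these toy labels every split has 1750/500/250 images, matching the partition sizes above.

```python
import random
from collections import Counter, defaultdict


def stratified_split(image_labels, ratios=(0.7, 0.2, 0.1), seed=2024):
    """Split image ids into train/val/test, roughly preserving class proportions.

    image_labels: dict image_id -> non-empty set of defect classes in that image.
    Simplified multi-label stratification (hypothetical): each image is keyed
    by its rarest class, and every key group is shuffled and cut by `ratios`.
    """
    freq = Counter(c for labels in image_labels.values() for c in labels)
    groups = defaultdict(list)
    for img, labels in sorted(image_labels.items()):
        rarest = min(labels, key=lambda c: (freq[c], c))
        groups[rarest].append(img)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for key in sorted(groups):
        ids = groups[key]
        rng.shuffle(ids)
        n_train = round(len(ids) * ratios[0])
        n_val = round(len(ids) * ratios[1])
        train += ids[:n_train]
        val += ids[n_train:n_train + n_val]
        test += ids[n_train + n_val:]
    return train, val, test


# Hypothetical labels: 1200 crack-only, 800 hole-only, 500 crack+spalling images.
labels = {i: {"crack"} for i in range(1200)}
labels.update({i: {"hole"} for i in range(1200, 2000)})
labels.update({i: {"crack", "spalling"} for i in range(2000, 2500)})
# Five splits; deriving the seeds as 2024 + k is an assumption.
splits = [stratified_split(labels, seed=2024 + k) for k in range(5)]
print([len(part) for part in splits[0]])  # [1750, 500, 250]
```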

Totals across subsets (instances): Crack/Hole/Spalling , overall . Per-subset instance totals preserve global proportions by design.

5.3. Ablation Experiment

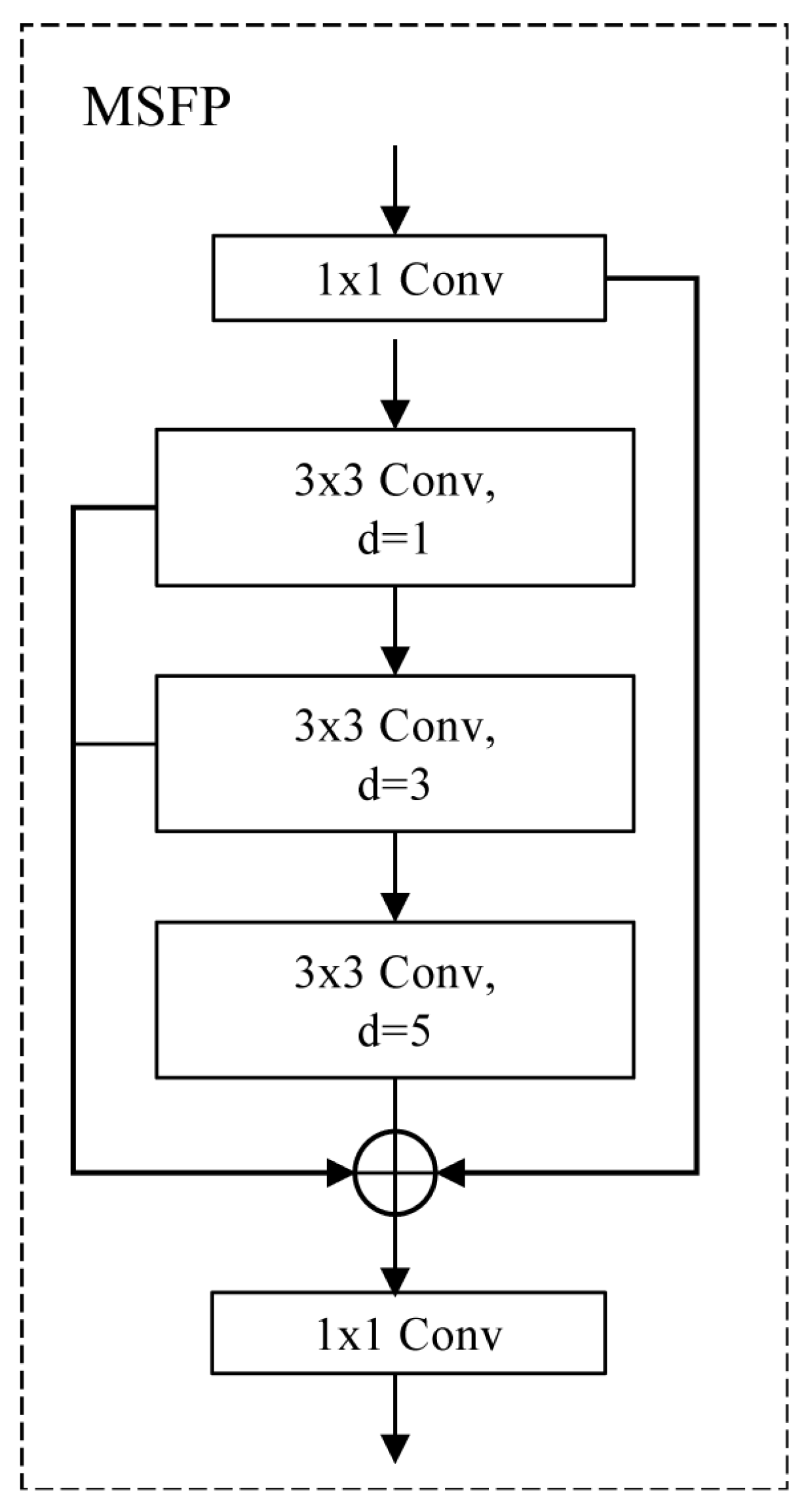

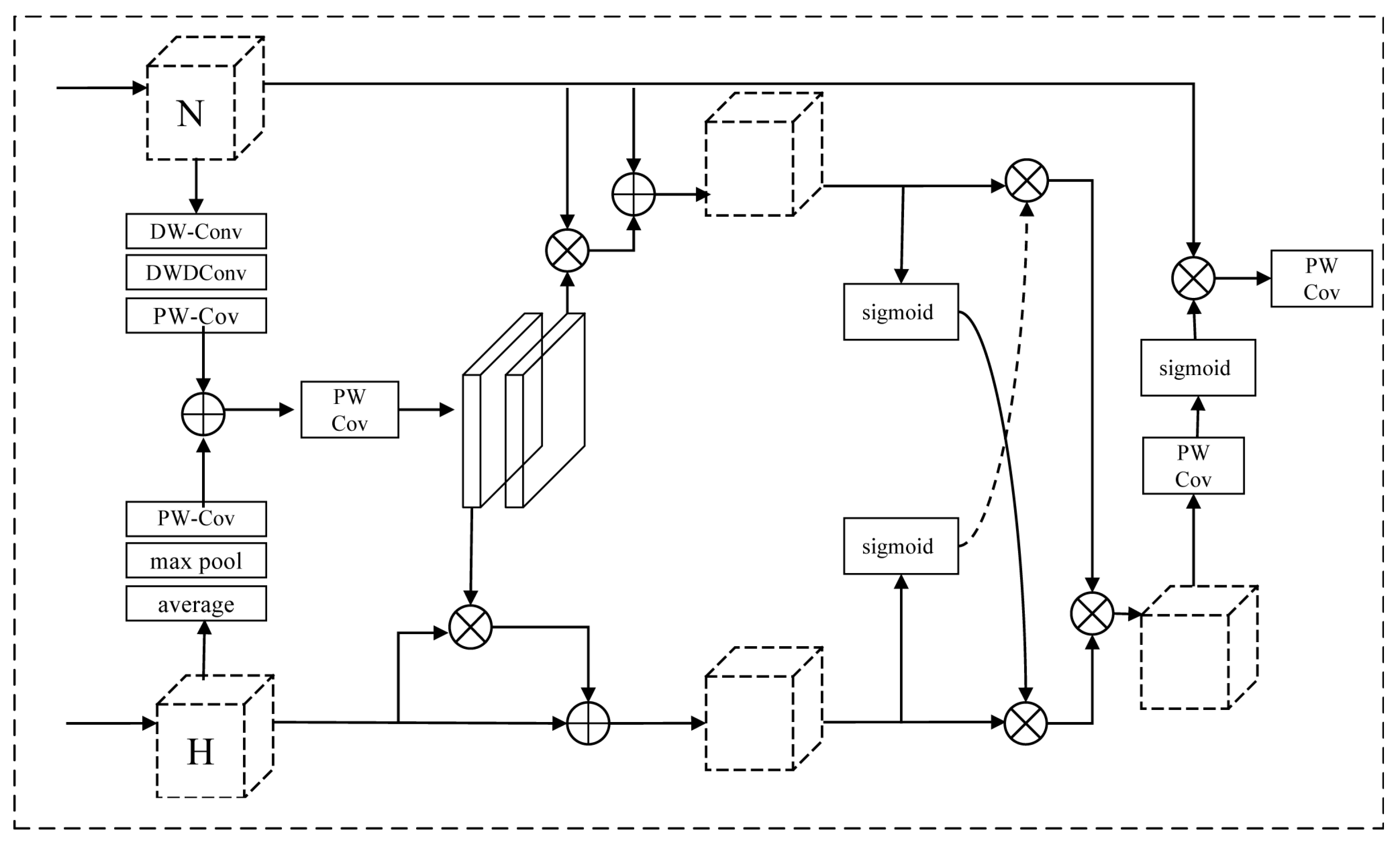

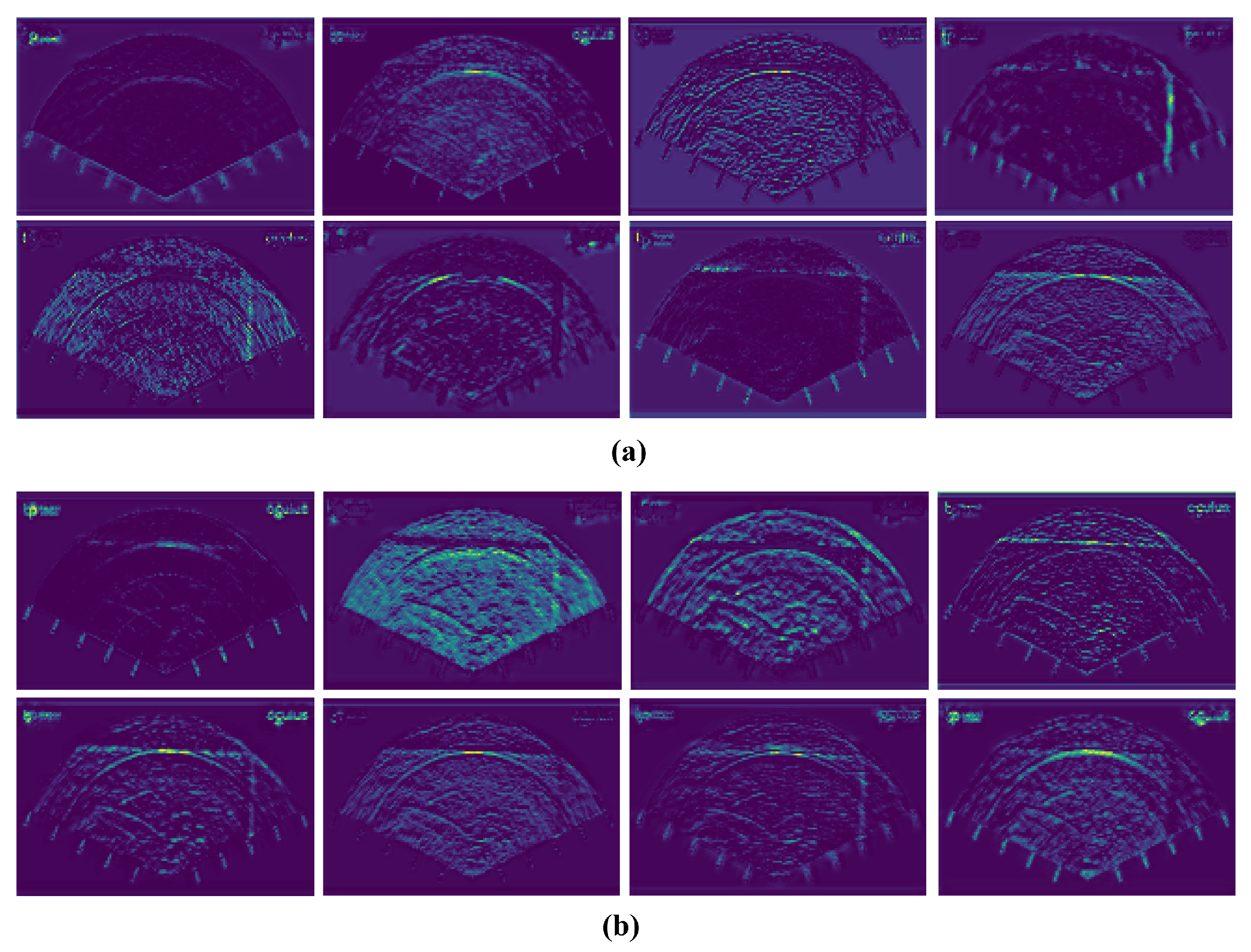

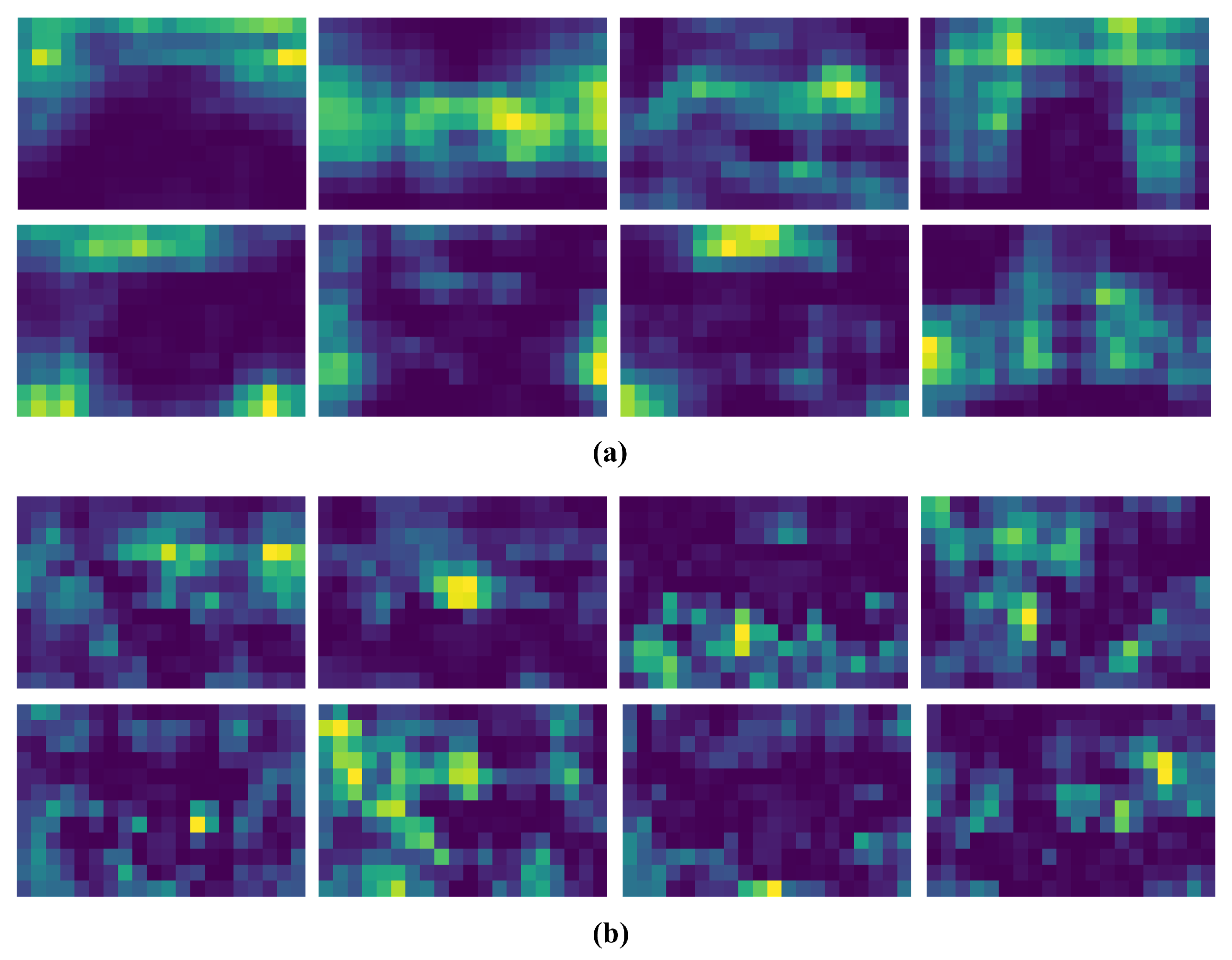

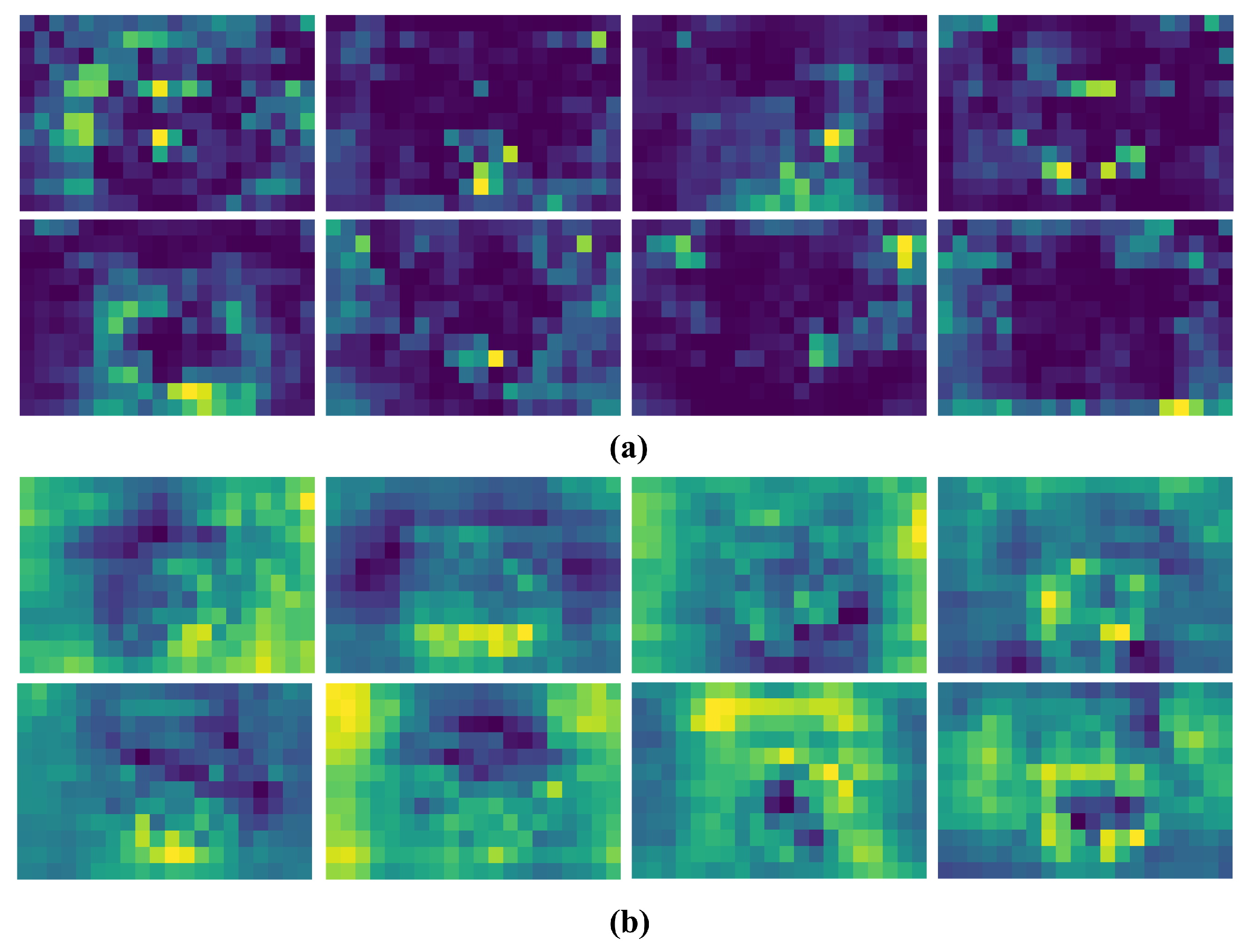

We extracted the feature outputs of the HanNet, MSFP, and ASAA layers; the visualizations are shown in Figure 7, Figure 8 and Figure 9, respectively, and the quantitative results are given in Table 3. As shown in Figure 7, the feature maps generated by HanNet (Figure 7b) exhibit sharper boundaries and better defect discriminability. In contrast, the boundary features extracted by the baseline model (Figure 7a) are noticeably blurred, which substantially impairs discrimination between defect categories.
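How the feature maps were rendered is not stated. A common approach, assumed here for illustration, averages absolute activations across channels and min-max normalizes the result to [0, 1] before applying a color map; the random feature tensor below is a hypothetical stand-in for a layer output.

```python
import numpy as np


def feature_heatmap(features, eps=1e-8):
    """Collapse a (C, H, W) feature tensor into an (H, W) map scaled to [0, 1].

    Channel-wise mean of absolute activations, then min-max normalization.
    """
    fmap = np.abs(features).mean(axis=0)
    fmap -= fmap.min()
    return fmap / (fmap.max() + eps)


rng = np.random.default_rng(0)
heat = feature_heatmap(rng.standard_normal((64, 40, 40)))  # hypothetical layer output
print(heat.shape, float(heat.min()), float(heat.max()) <= 1.0)
```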

Comparing the baseline model (Figure 8a) with the proposed MSFP (Figure 8b) reveals a substantial improvement in feature fusion. As illustrated in Figure 8, the baseline produces blurred feature representations, which degrades its ability to preserve fine-grained details. In contrast, the MSFP feature maps are clearer, with well-defined textures, coherent structures, and sharp boundaries.

The feature maps generated by the ASAA module (Figure 9b) show a marked improvement in defect representation over the baseline. Without ASAA (Figure 9a), the feature map is pronouncedly blurred, with spatially scattered, diffuse activations and no well-defined structural clusters. In contrast, the ASAA-enhanced feature map presents more continuous and sharply delineated high-response regions, reflecting targeted feature activation.

In summary, the proposed hybrid neural network is compact, and its modules play distinct yet collaborative roles: some suppress noise, while others strengthen feature representation. Together, these modules contribute to accurate, robust, and reliable detection of multiple underwater structural defects.

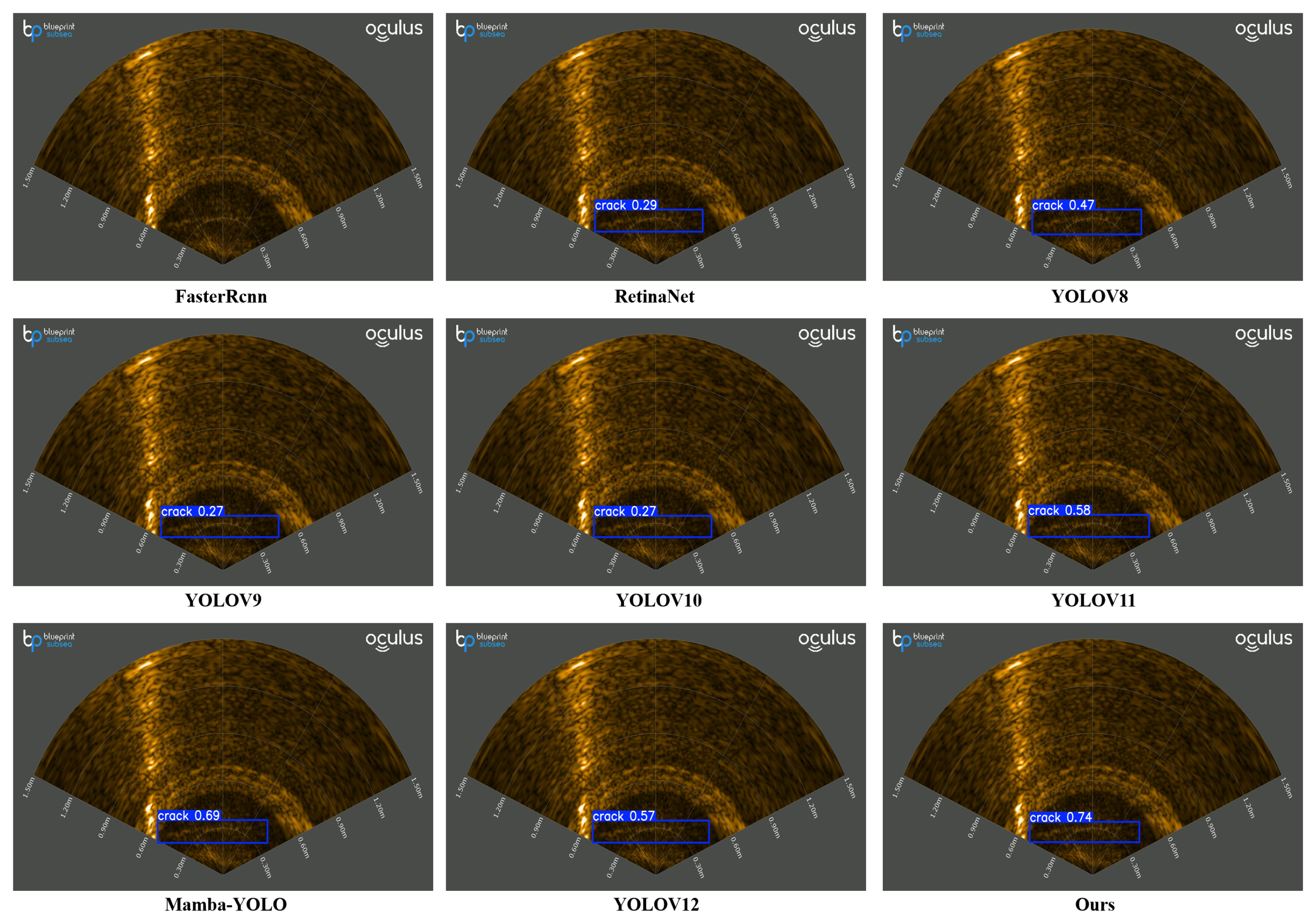

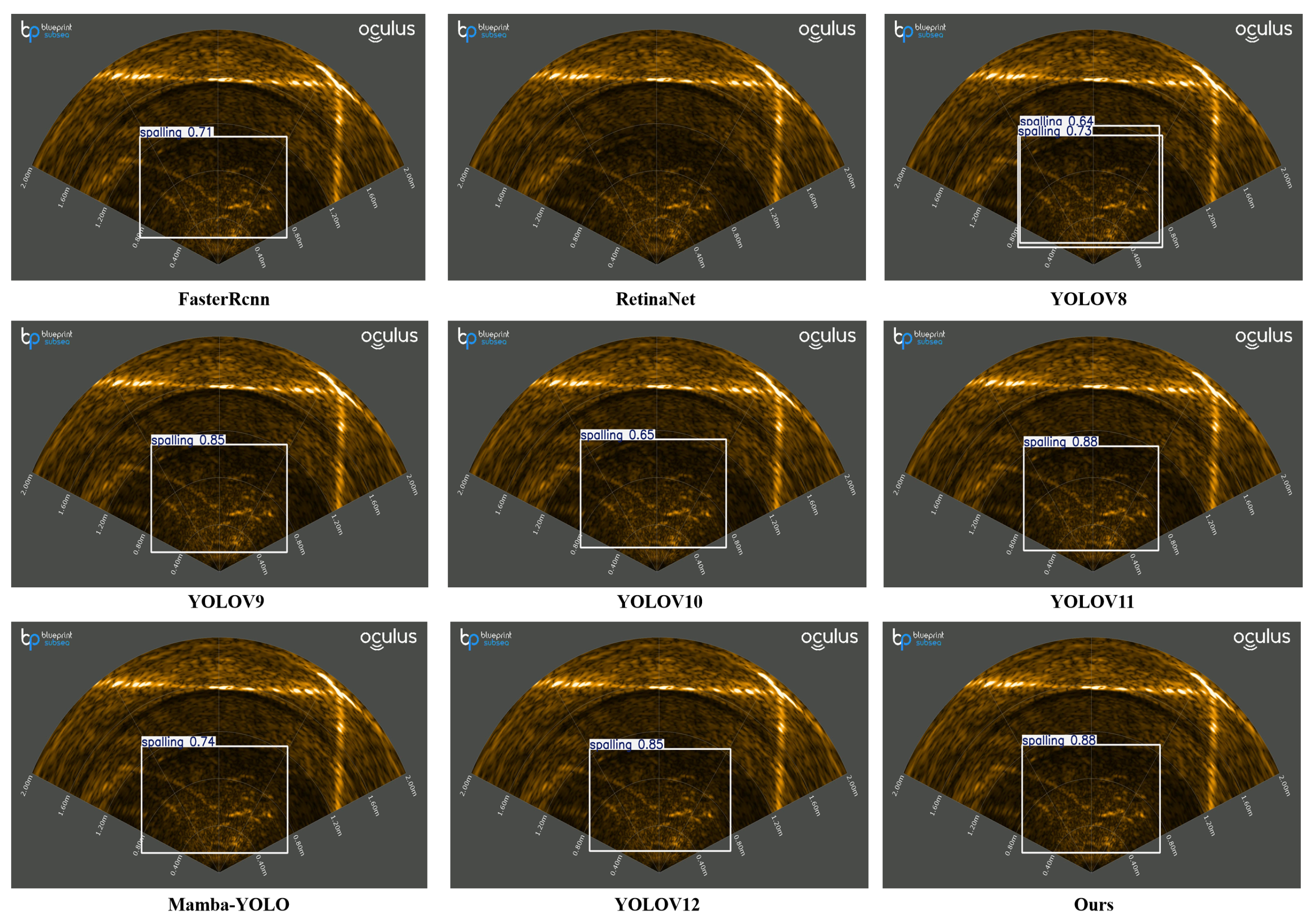

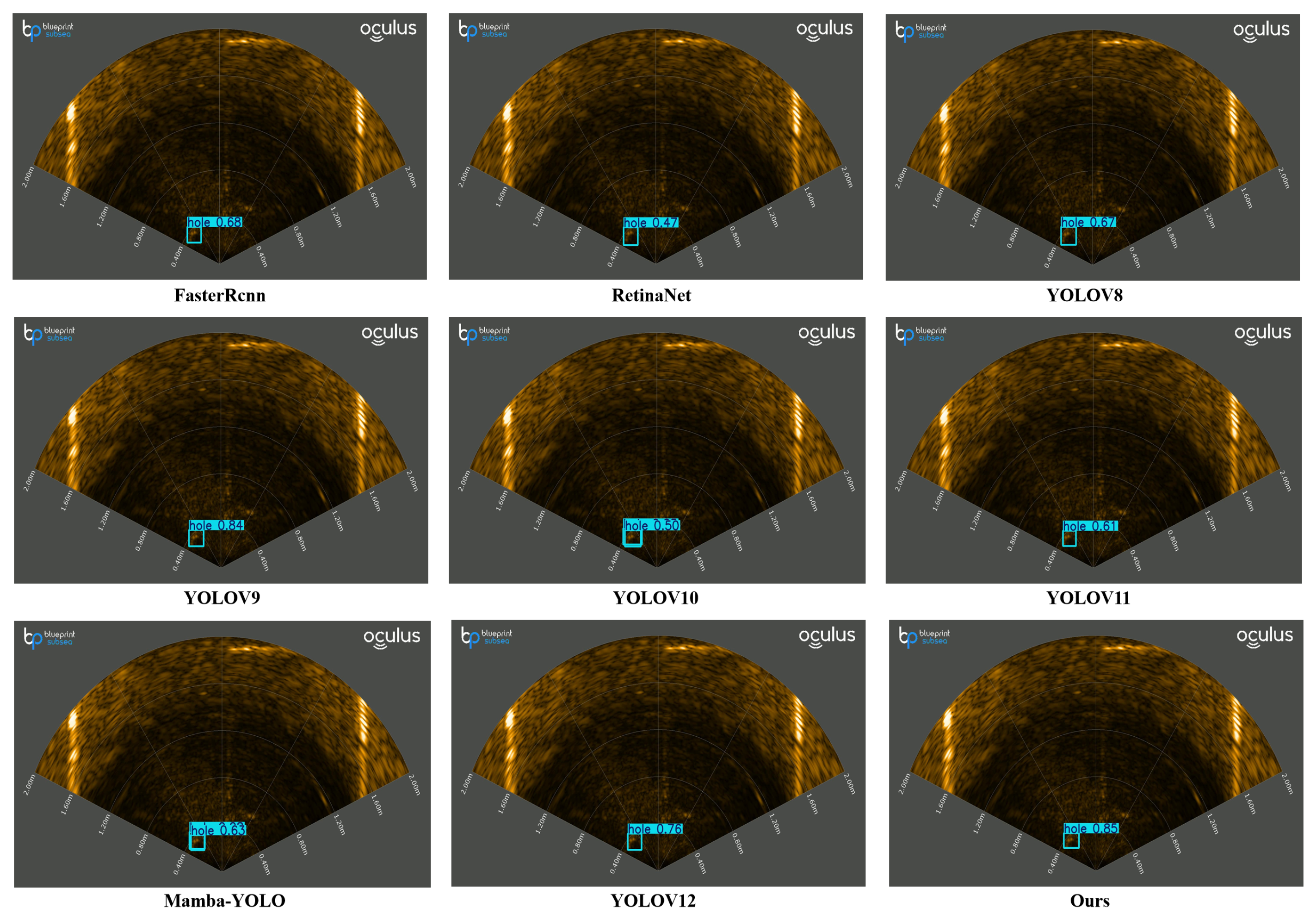

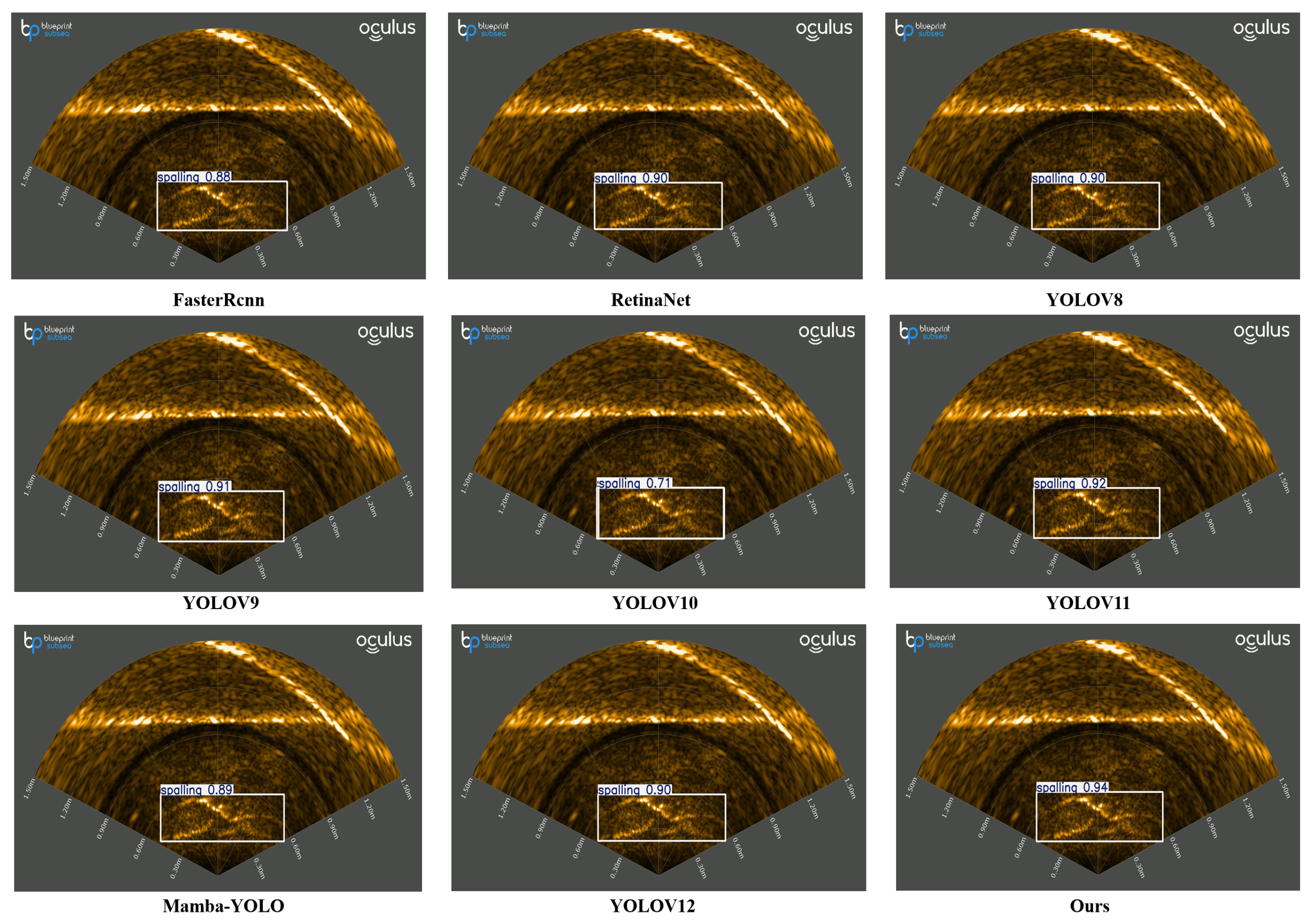

5.4. Comparison Experiment

To systematically evaluate the proposed method under small-target and real-time constraints, we compare it with eight representative detectors at a unified input resolution and a shared compute budget. Faster R-CNN [33] serves as a two-stage, accuracy-oriented reference with strong region-level recall on small objects but higher latency; RetinaNet [34] is a one-stage baseline whose Focal Loss mitigates the severe foreground–background imbalance typical of sparse small defects. YOLOv8n [35] is a widely used lightweight real-time baseline within our FLOPs/parameter limits; YOLOv9s [36] introduces GELAN/PGI to enhance information flow and small-object recall at similar FLOPs. YOLOv10 [37,38] adopts an end-to-end, NMS-free design that improves the accuracy–latency Pareto frontier and has been validated on small, occluded PPE targets in safety-helmet monitoring with surveillance and body-worn cameras. YOLOv11 provides a contemporary real-time baseline under identical input resolution; Mamba-YOLO [39] integrates state-space modules to model long-range dependencies in linear time, potentially benefiting elongated crack patterns; and YOLOv12 [40] evaluates attention-centric refinements for tiny targets while maintaining FPS. Qualitative comparisons are shown in Figure 10, Figure 11, Figure 12 and Figure 13, and quantitative results in Table 4. Faster R-CNN achieved competitive hole/spalling detection (mAP50: 78.2/76.5) but performed poorly on cracks (mAP50: 42.1), whereas RetinaNet outperformed it on cracks (mAP50: 48.7) yet struggled with large spalling regions (mAP50: 51.3). YOLOv8n achieved strong hole/spalling detection (mAP50: 83.4/81.2) but produced redundant detections and detected cracks poorly (mAP50: 45.9). YOLOv9s maintained strong hole/spalling performance (mAP50: 82.1/79.8) with a marginal improvement on cracks (mAP50: 50.3), whereas YOLOv10 achieved moderate hole detection (mAP50: 75.4) but lagged significantly on cracks and spalling (mAP50: 43.2/52.7). YOLOv11 emerged as a strong competitor, excelling in crack/spalling detection (mAP50: 85.3/80.6) but slightly weaker on holes (mAP50: 78.9). Mamba-YOLO performed well on cracks and holes (mAP50: 86.2/82.5) but was markedly deficient on spalling (mAP50: 54.1), while YOLOv12 delivered promising spalling/hole detection (mAP50: 83.7/81.5) yet struggled with fine cracks (mAP50: 47.8). The proposed method achieved the highest mAP50 in all three defect categories: 92.4 (cracks), 90.1 (holes), and 89.7 (spalling). We attribute this advantage to its multi-scale feature fusion architecture and adaptive attention mechanism, which together address the main challenges of detecting multiple underwater structural defects.
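The per-class mAP50 values above are average precision at an IoU threshold of 0.5, and averaging over classes gives the overall mAP50. The evaluation code is not given, so this stdlib sketch assumes VOC-style all-point interpolation with greedy, score-ordered matching (duplicate detections count as false positives) and at least one ground-truth box per class; the boxes and scores are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_thr=0.5):
    """Per-class AP with all-point interpolation.

    detections: list of (image_id, score, box); ground_truths: dict image_id -> list of boxes.
    """
    n_gt = sum(len(boxes) for boxes in ground_truths.values())
    matched = {img: [False] * len(boxes) for img, boxes in ground_truths.items()}
    tp_flags = []
    for img, _score, box in sorted(detections, key=lambda d: -d[1]):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True
            tp_flags.append(1)
        else:
            tp_flags.append(0)  # false positive: low IoU, no GT, or duplicate
    precisions, recalls, cum_tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        cum_tp += flag
        precisions.append(cum_tp / k)
        recalls.append(cum_tp / n_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the envelope: precision times each recall increment.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


gts = {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]}
dets = [("img1", 0.9, (0, 0, 10, 10)),   # true positive (IoU 1.0)
        ("img1", 0.8, (0, 0, 10, 10)),   # duplicate -> false positive
        ("img2", 0.7, (1, 1, 10, 10))]   # true positive (IoU 0.81)
print(round(average_precision(dets, gts), 4))  # 0.8333
```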

To fairly assess the efficiency of the proposed detector under real-time constraints, we benchmark the eight representative frameworks at a unified input size and report model complexity and throughput. As shown in Table 5, the two-stage and large one-stage baselines are heavy and slow (e.g., Faster R-CNN: 41.40 M/173.6 G/32.6 FPS; RetinaNet: 36.52 M/182.5 G/51.4 FPS), whereas our model attains the highest throughput at comparable computation (2.87 M params, 8.3 GFLOPs, 190.2 FPS), exceeding YOLOv8n (177.3 FPS) and YOLOv10 (187.3 FPS). Among the remaining baselines, YOLOv9s (7.16 M, 26.8 G) reaches 67.6 FPS; YOLOv11 (2.58 M, 6.3 G) delivers 93.8 FPS; Mamba-YOLO is relatively heavy (26.9 M, 74.2 G) and runs at 73.5 FPS; and YOLOv12 (2.55 M, 6.4 G) achieves 129.1 FPS. Overall, our detector offers the highest measured throughput while requiring only modestly more parameters and FLOPs than the lightest baselines (YOLOv11 and YOLOv12), placing it on a favorable accuracy–efficiency frontier.
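Throughput figures depend on the timing protocol, which is not detailed here. The sketch below shows a generic harness (warm-up runs, then timed single-image calls) with a toy callable standing in for a detector; for a GPU model, the callable would need to synchronize the device before returning, otherwise only kernel-launch time is measured. For reference, 190.2 FPS corresponds to about 5.26 ms per image and 177.3 FPS to about 5.64 ms.

```python
import time


def measure_fps(infer, inputs, warmup=10, runs=100):
    """Estimate throughput (images/s) of a callable over repeated single-image runs.

    `infer` must block until its result is ready; for GPU models this means
    synchronizing the device inside the callable.
    """
    for i in range(warmup):                      # warm-up: caches, lazy init, autotuning
        infer(inputs[i % len(inputs)])
    start = time.perf_counter()
    for i in range(runs):
        infer(inputs[i % len(inputs)])
    elapsed = time.perf_counter() - start
    return runs / elapsed


# Toy workload standing in for a detector forward pass (hypothetical).
fps = measure_fps(lambda x: sum(v * v for v in x), [list(range(1000))] * 4)
print(f"{fps:.1f} FPS -> {1000 / fps:.3f} ms per image")
```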

6. Conclusions

This paper presents a hybrid neural network for multi-defect detection in underwater environments. The architecture integrates the HanNet, MSFP, and ASAA modules, leveraging their complementary strengths to enable accurate detection of diverse defect types under challenging conditions. To address the data scarcity in underwater multi-defect detection, this paper also introduces a dataset specifically designed for underwater structural defects, the Underwater Structural Defect Dataset (USDD), which serves as a foundational resource for data-driven model training. Experiments on USDD show that our method outperforms the eight compared detectors, achieving the highest mAP50 for cracks, holes, and spalling together with the highest measured throughput.

Despite these promising results, several areas remain for future work. The proposed model still produces missed or erroneous detections for complex crack geometries, so its feature representation should be further strengthened to improve feature extraction for such cracks. Furthermore, noise reduction for sonar-based detection is essential for improving detection quality and ensuring accurate defect identification. Finally, future research should explore methods for measuring defect attributes, e.g., length, width, and depth.