Multi-Attention Pyramid Context Network for Infrared Small Ship Detection

Abstract

1. Introduction

- A scale attention mechanism in AGPC-Net for small target detection. To account for the characteristics of small targets, we extend the baseline network, AGPC-Net, with a scale attention mechanism placed after the feature pyramid, which reweights the feature maps from different scales.

- A channel attention mechanism in the upsampling path of AGPC-Net. We add channel attention during the upsampling process so that the network learns the relationships between channels and obtains more effective feature representations.

- The Maritime-SIRST infrared small ship dataset. We present Maritime-SIRST, an infrared small ship dataset built from satellite infrared band images of complex sea surface scenes, designed to meet the requirements of our research and to support work in related fields.
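The channel attention listed among the contributions can be illustrated with a Squeeze-and-Excitation style block, the module named in Section 3.1.3. The following is a minimal pure-Python sketch under stated assumptions, not the authors' implementation: the function names, the bias-free layers, the toy tensor size, and the reduction ratio of 2 are all hypothetical choices made for illustration.

```python
import math
import random


def global_avg_pool(fmap):
    """Squeeze: reduce each channel (a 2D list of floats) to its mean."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]


def dense(vec, weights):
    """Bias-free fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def se_block(fmap, w_reduce, w_expand):
    """Return the channel-reweighted feature map and the per-channel gates."""
    squeezed = global_avg_pool(fmap)                           # C values
    hidden = [max(0.0, h) for h in dense(squeezed, w_reduce)]  # ReLU, C/r values
    # Excitation: a sigmoid maps each channel score to a gate in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-e)) for e in dense(hidden, w_expand)]
    # Scale: multiply every value of a channel by that channel's gate.
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, fmap)]
    return scaled, gates


# Toy input (hypothetical): 4 channels of 3x3 features, reduction ratio r = 2.
rng = random.Random(0)
channels, height, width, ratio = 4, 3, 3, 2
fmap = [[[rng.random() for _ in range(width)] for _ in range(height)]
        for _ in range(channels)]
w_reduce = [[rng.uniform(-1, 1) for _ in range(channels)]
            for _ in range(channels // ratio)]
w_expand = [[rng.uniform(-1, 1) for _ in range(channels // ratio)]
            for _ in range(channels)]

scaled, gates = se_block(fmap, w_reduce, w_expand)
print([round(g, 3) for g in gates])  # one gate per channel
```

The output keeps the input shape; only the relative weight of each channel changes. In a trained network the two weight matrices would be learned rather than drawn at random.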

2. Related Work

2.1. Infrared Small Target Detection Networks

2.2. Attention Mechanism

2.3. Datasets for Infrared Small Targets

3. Materials and Methods

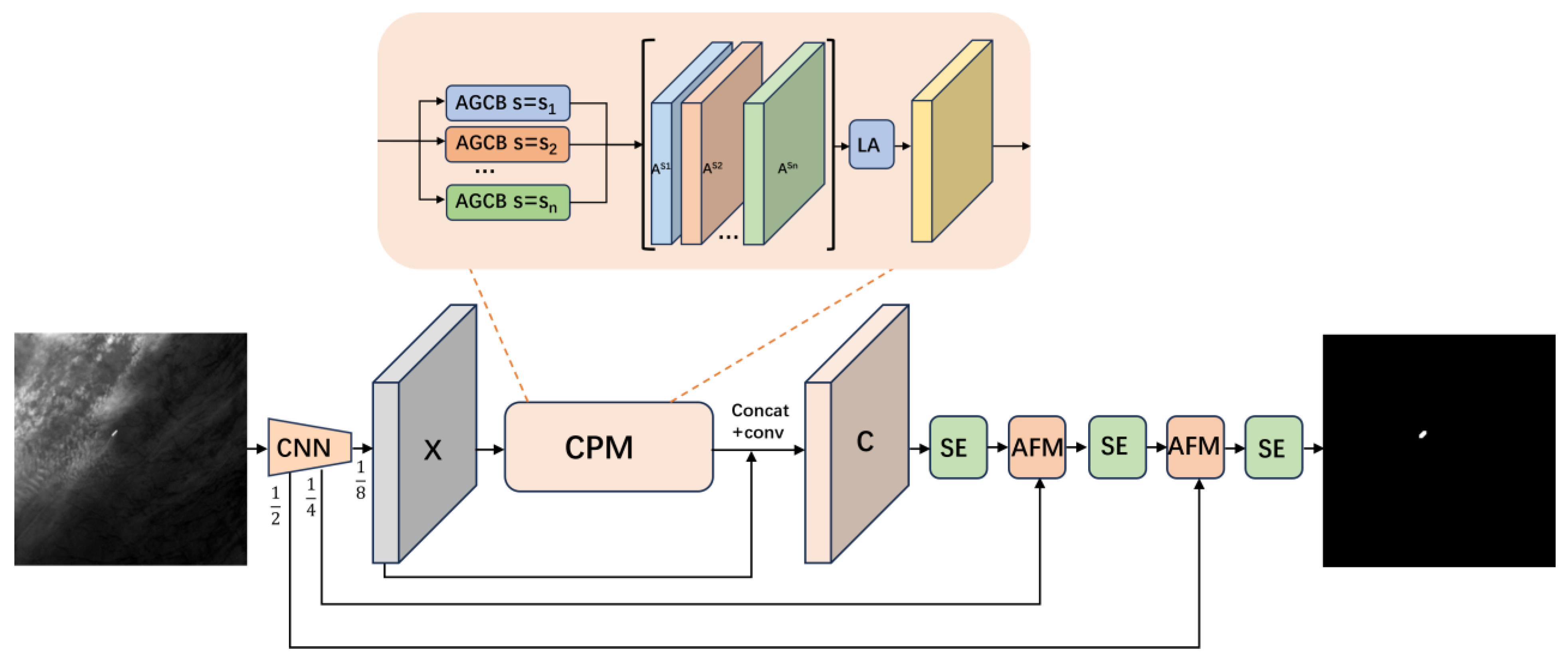

3.1. MAPC-Net

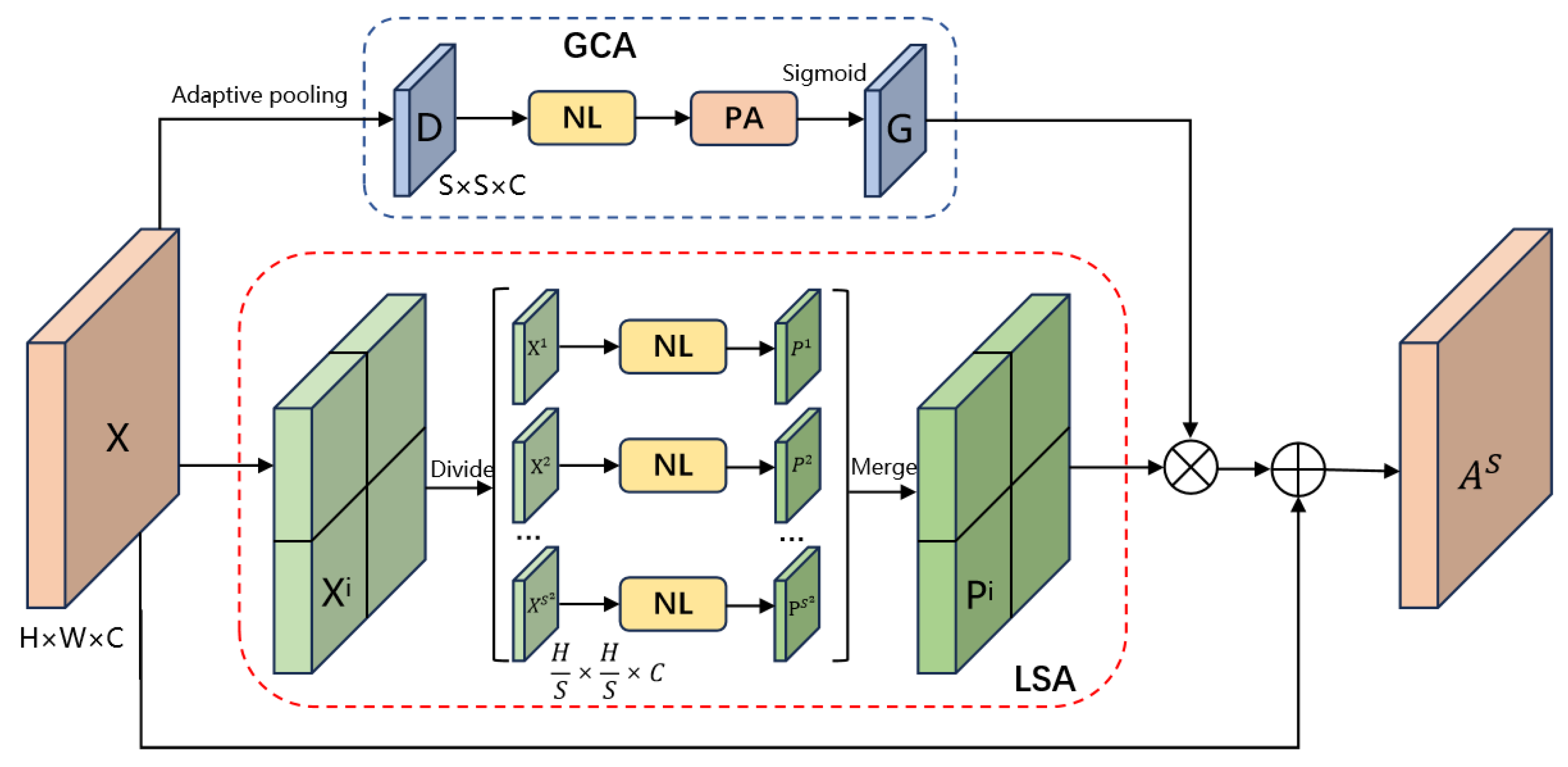

3.1.1. Attention-Guided Context Block

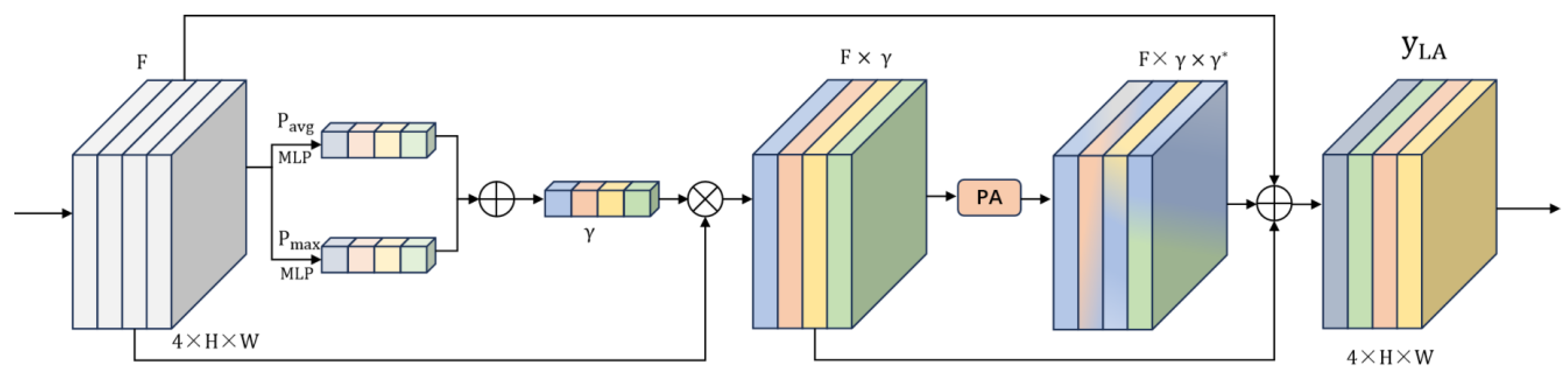

3.1.2. Scale Attention Module

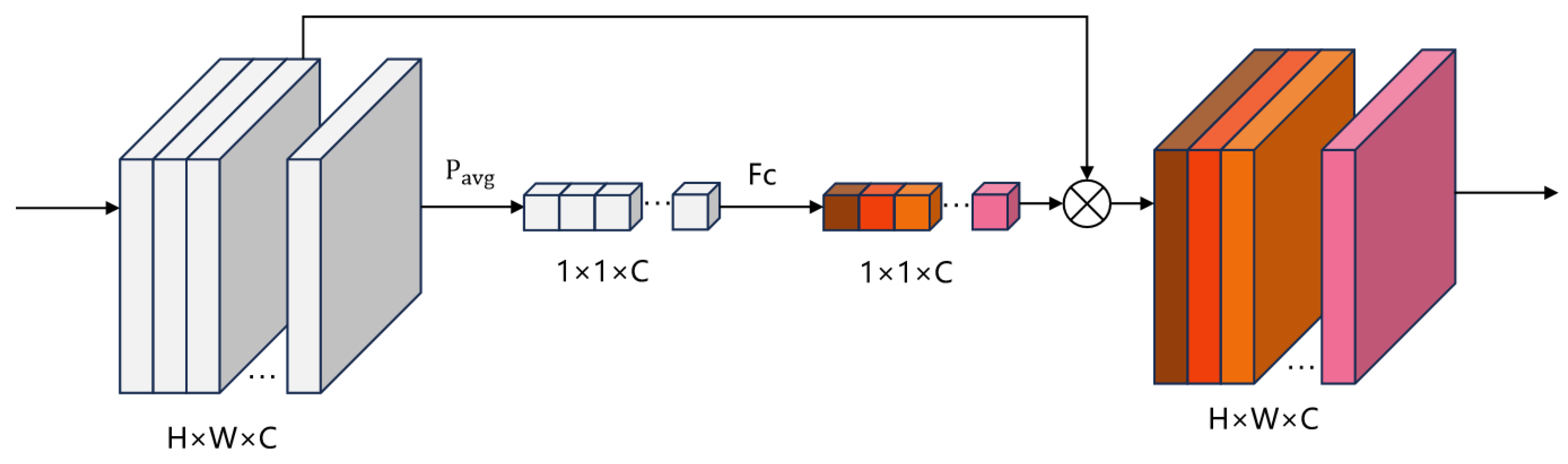

3.1.3. Squeeze-and-Excitation Block

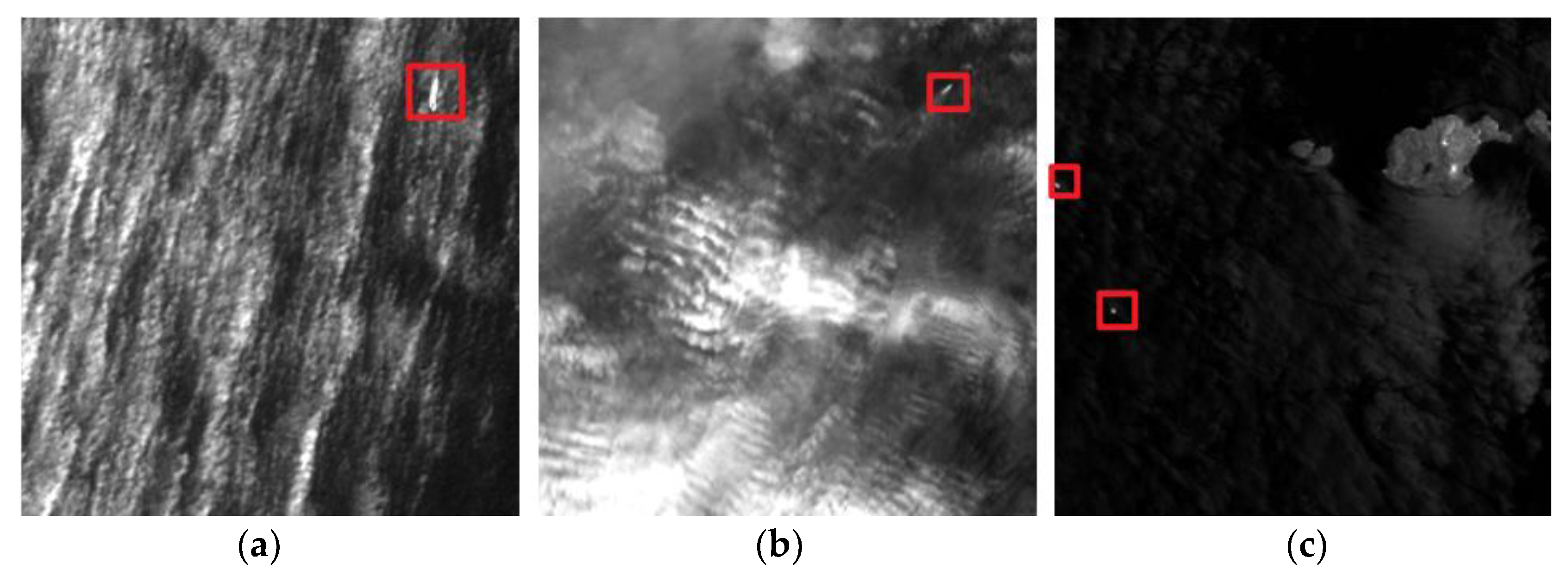

3.2. Maritime-SIRST

4. Experiment and Discussion

4.1. Experimental Setting

4.2. Evaluation Metrics

4.3. Quantitative Results

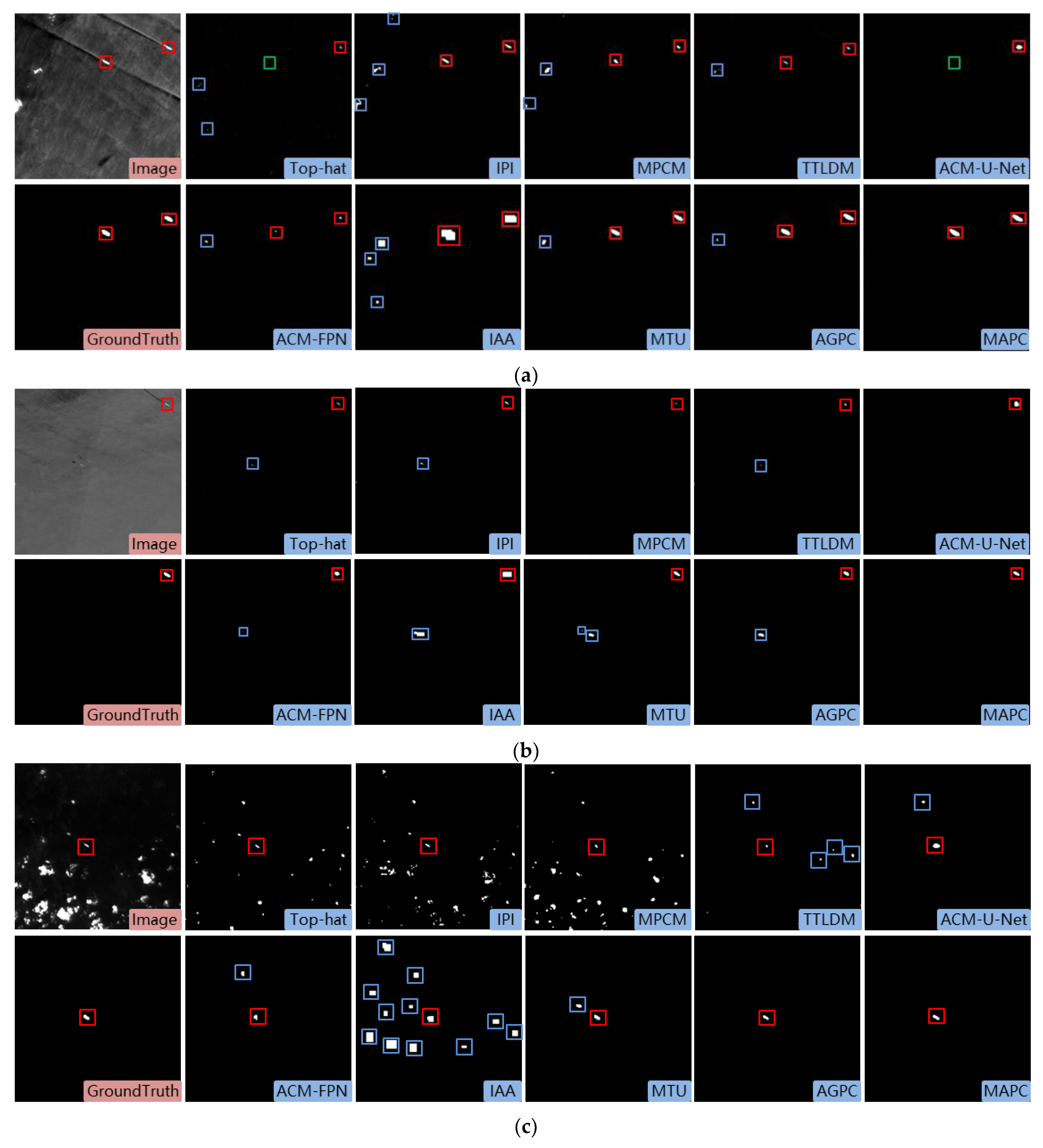

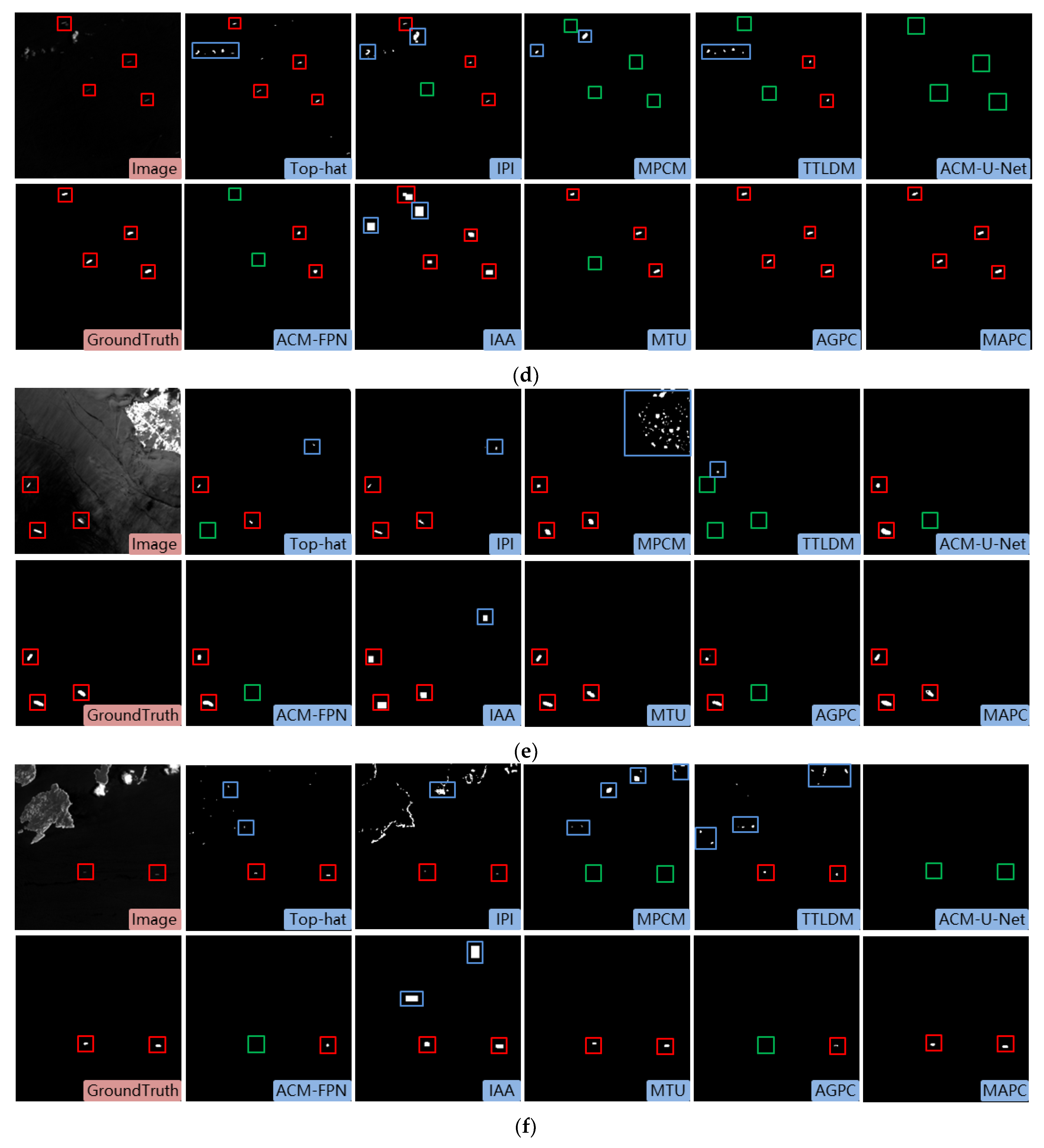

4.4. Visual Results

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Feature | Category | Number | Ratio/% |
|---|---|---|---|
| Background | simple | 203 | 35.9 |
| Background | waves | 62 | 11.0 |
| Background | clouds | 272 | 48.2 |
| Background | islands, ports | 28 | 4.9 |
| Target size/pixel | <25 | 55 | 9.7 |
| Target size/pixel | 25–81 | 319 | 56.4 |
| Target size/pixel | 81–225 | 174 | 30.7 |
| Target size/pixel | >225 | 18 | 3.2 |
| Target number | 0 | 21 | 3.7 |
| Target number | 1 | 388 | 68.6 |
| Target number | 1–8 | 157 | 27.7 |

| Methods | Prec./% | Rec./% | mIOU/% | F1/% | AUC/% | Time/s |
|---|---|---|---|---|---|---|
| Top-hat | 38.83 | 22.53 | 14.96 | 23.75 | 62.32 | 0.0019 |
| IPI | 39.65 | 38.26 | 18.32 | 26.89 | 67.78 | 7.780 |
| MPCM | 27.11 | 59.57 | 16.73 | 22.98 | 64.05 | 1.240 |
| TTLDM | 42.92 | 52.75 | 31.15 | 40.81 | 82.36 | 0.017 |
| ACM-FPN | 58.10 | 54.69 | 39.22 | 56.34 | 74.06 | 0.160 |
| ACM-U-NET | 58.15 | 47.87 | 35.60 | 52.51 | 78.29 | 0.160 |
| IAA-NET | 69.59 | 52.55 | 45.03 | 59.88 | 83.80 | 0.160 |
| MTU-NET | 70.46 | 85.62 | 63.01 | 77.31 | 93.91 | 0.125 |
| AGPC-NET | 71.60 | 85.29 | 63.73 | 77.85 | 93.63 | 0.130 |
| Ours | 77.39 | 87.78 | 69.87 | 82.26 | 94.83 | 0.135 |
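As a sanity check on the table above, F1 can be recomputed from the reported precision and recall. The sketch below assumes the standard harmonic-mean definition, since the metric definitions are not given in this section; the helper name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same unit in, same unit out)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (in %) copied from the comparison table.
rows = {
    "AGPC-NET": (71.60, 85.29),
    "Ours": (77.39, 87.78),
}

for name, (p, r) in rows.items():
    print(f"{name}: F1 = {f1_score(p, r):.2f}%")
# AGPC-NET: F1 = 77.85%
# Ours: F1 = 82.26%
```

Both values agree with the F1 column. Not every row reproduces this way: for Top-hat the same formula gives about 28.5 against a reported 23.75, which may mean the metrics were aggregated differently (for instance per image), so the check should be read as indicative only.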

| Module | Prec./% | Rec./% | mIOU/% | F1/% | AUC/% |
|---|---|---|---|---|---|
| AGPC | 71.60 | 85.29 | 63.73 | 77.85 | 93.63 |
| AGPC + LA | 72.03 | 86.41 | 64.70 | 78.57 | 94.19 |
| AGPC + SE | 75.69 | 86.83 | 67.89 | 80.88 | 94.48 |
| AGPC + LA + SE | 77.39 | 87.78 | 69.87 | 82.26 | 94.83 |
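The contribution of each module is easier to read as a gain over the AGPC baseline. The sketch below computes those differences from the ablation rows above; the labels LA and SE are taken from the table as printed, and the helper name `gains_over_baseline` is a hypothetical one chosen for illustration.

```python
# Ablation rows copied from the table: (Prec., Rec., mIOU, F1, AUC) in %.
METRICS = ("Prec.", "Rec.", "mIOU", "F1", "AUC")
ablation = {
    "AGPC": (71.60, 85.29, 63.73, 77.85, 93.63),
    "AGPC + LA": (72.03, 86.41, 64.70, 78.57, 94.19),
    "AGPC + SE": (75.69, 86.83, 67.89, 80.88, 94.48),
    "AGPC + LA + SE": (77.39, 87.78, 69.87, 82.26, 94.83),
}


def gains_over_baseline(table, baseline="AGPC"):
    """Return per-metric differences (percentage points) against the baseline row."""
    base = table[baseline]
    return {
        name: tuple(round(v - b, 2) for v, b in zip(vals, base))
        for name, vals in table.items()
        if name != baseline
    }


gains = gains_over_baseline(ablation)
for name, deltas in gains.items():
    print(name, dict(zip(METRICS, deltas)))
```

On mIOU the gains are 0.97 points for LA alone, 4.16 for SE alone, and 6.14 for both together, so in this table the combined gain is larger than the sum of the two individual gains.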

| Module | Prec./% | Rec./% | mIOU/% | F1/% | AUC/% |
|---|---|---|---|---|---|
| AGPC + GAM | 74.35 | 84.26 | 65.29 | 79.00 | 93.44 |
| AGPC + CA | 70.44 | 87.94 | 64.24 | 78.22 | 94.21 |
| AGPC + CBAM | 62.08 | 86.96 | 56.80 | 72.45 | 94.71 |
| AGPC + SE | 75.69 | 86.83 | 67.89 | 80.88 | 94.48 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, F.; Ma, H.; Li, L.; Lv, M.; Jia, Z. Multi-Attention Pyramid Context Network for Infrared Small Ship Detection. J. Mar. Sci. Eng. 2024, 12, 345. https://doi.org/10.3390/jmse12020345
