The Wide-Area Coverage Path Planning Strategy for Deep-Sea Mining Vehicle Cluster Based on Deep Reinforcement Learning

Abstract

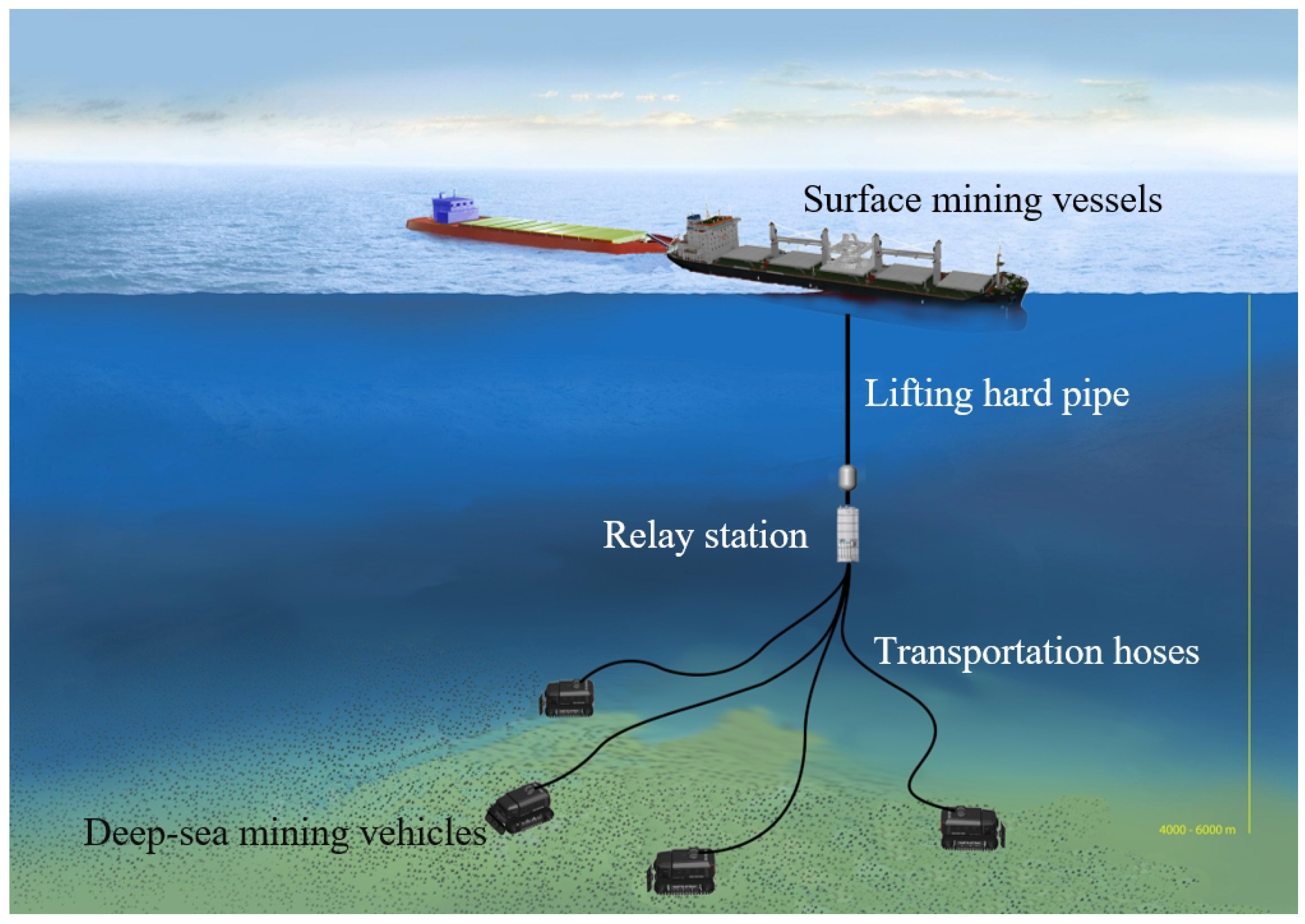

1. Introduction

2. Technical Method

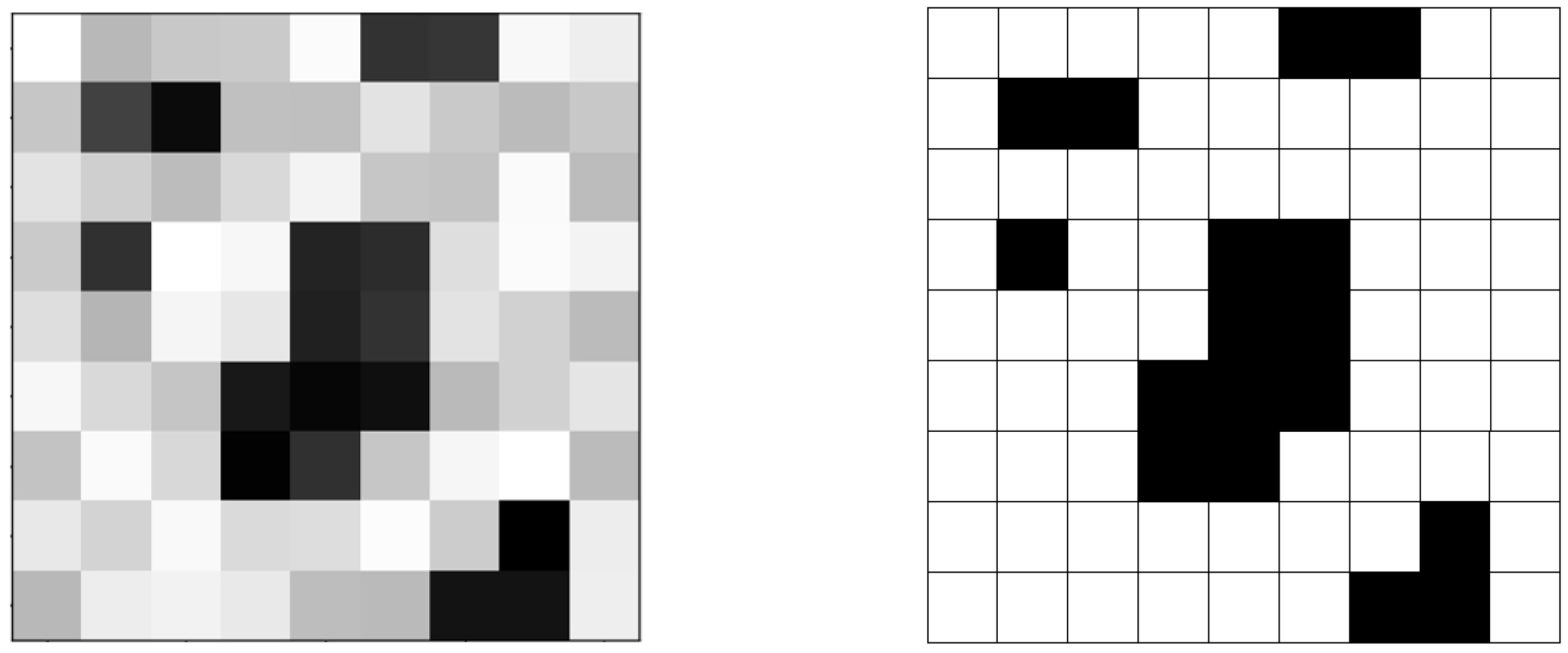

2.1. Deep-Sea Environmental Modeling

2.2. Map Decomposition

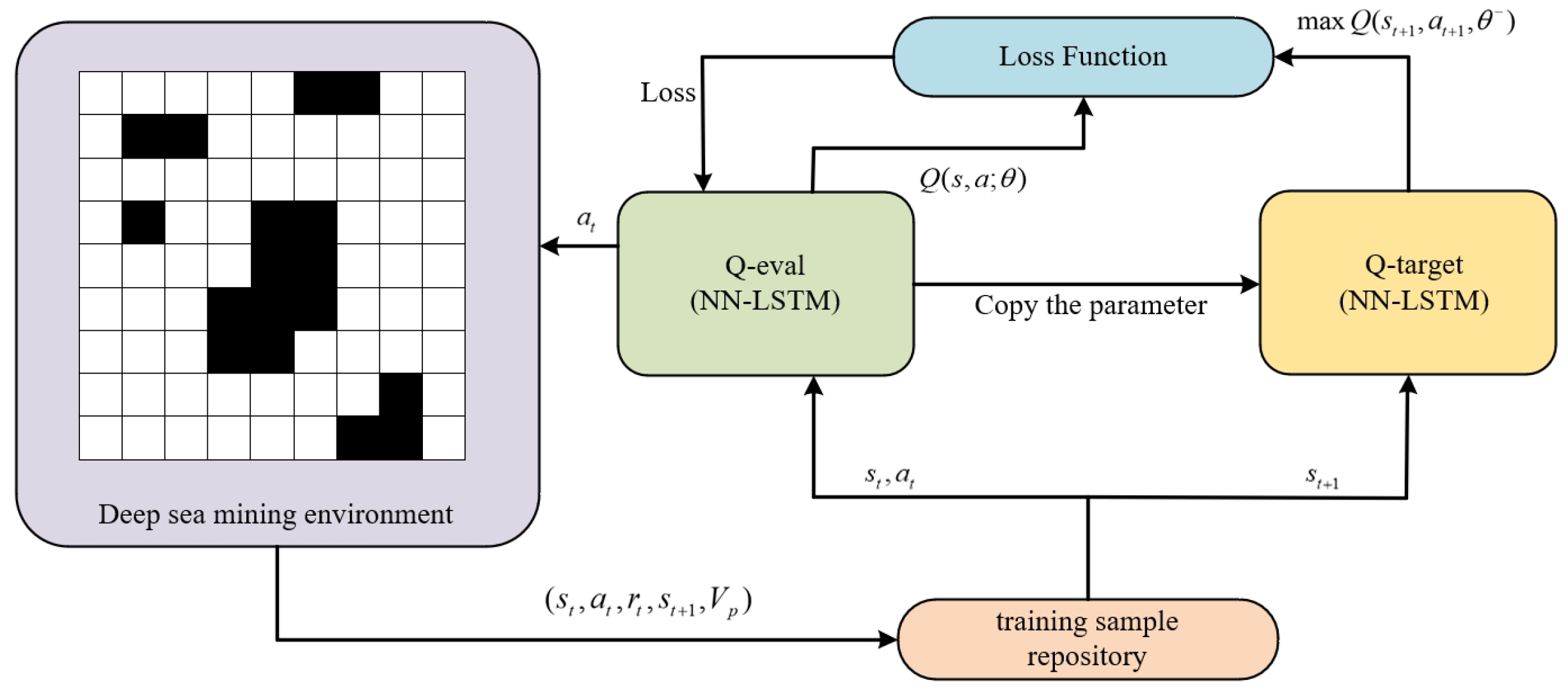

3. Design and Optimization of Algorithms

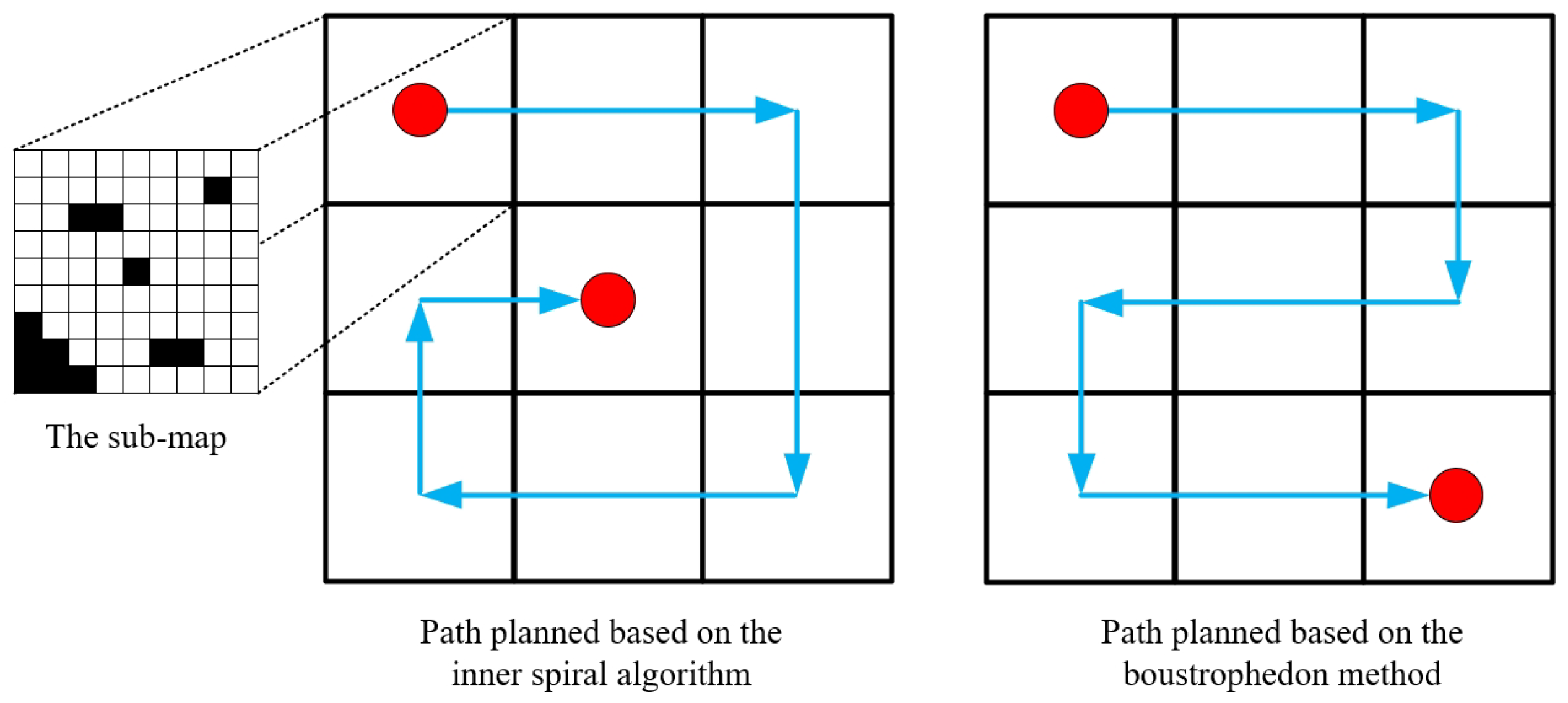

3.1. Design of Path Planning Strategy for Relay Station

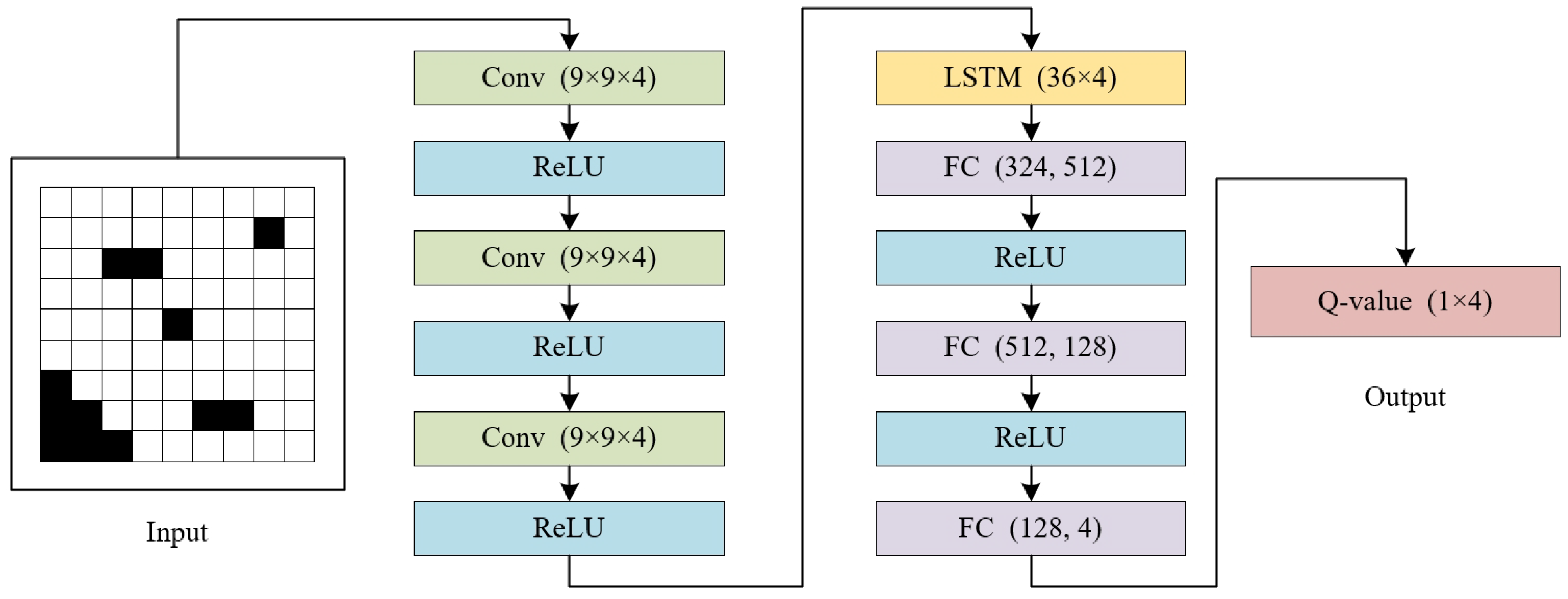

3.2. Design of Path Planning Algorithms for Mining Vehicle Cluster

3.3. Design of Constraints

3.4. Design of Reward Function

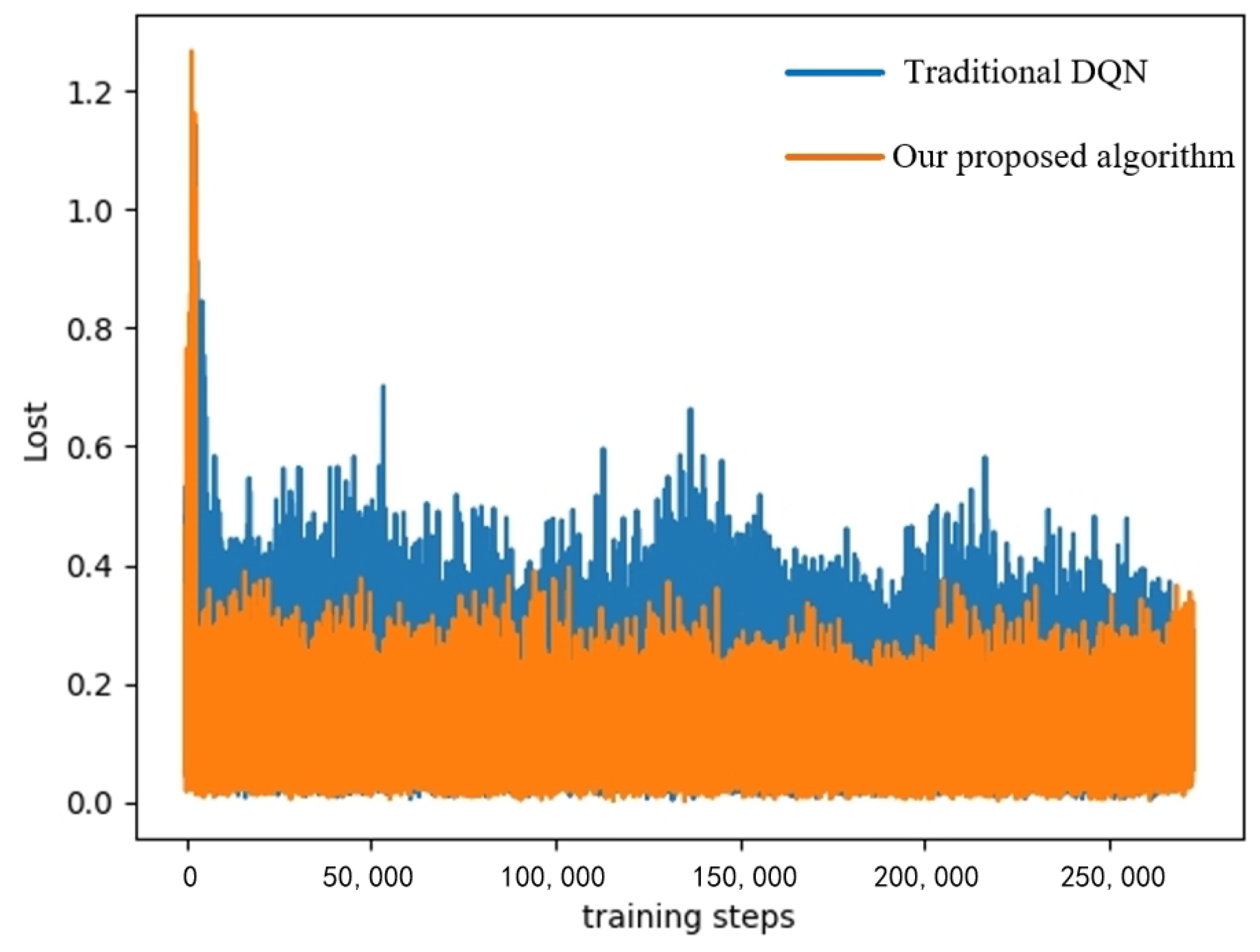

4. Algorithm Simulation and Discussion

4.1. Simulation Platform and Parameters

4.2. Simulation Results

5. Conclusions

5.1. Main Result

1. A deep-sea mining area environment modeling method and a map decomposition method were proposed; together they effectively process the original map data.

2. Based on actual operating conditions, path-planning strategies were designed for the relay stations and the mining vehicle clusters, using traditional algorithms and deep reinforcement learning algorithms.

3. A series of DQN optimization methods was proposed: the loss function, neural network structure, and sample selection mechanism of the traditional DQN were improved to meet the requirements of deep-sea mining missions.

4. A reward function was designed for the path-planning algorithm of deep-sea mining tasks, taking into account safety, coverage, efficiency, and the transfer of mining vehicles between sub-maps.

5. An angle-based constraint was designed to address the hose entanglement problem in deep-sea mining missions.
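For context on the DQN improvements listed above, the loss minimized by a traditional DQN is the mean squared temporal-difference error, shown here in its standard textbook form. This is the baseline only; the improved loss proposed in this paper is not reproduced here. The symbols $\theta$ (online network parameters), $\theta^{-}$ (target network parameters), and $\mathcal{D}$ (replay memory) follow common convention and are assumptions, not necessarily this paper's notation.

```latex
L(\theta) = \mathbb{E}_{(s,a,r,s') \sim \mathcal{D}}
\left[ \left( r + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta) \right)^{2} \right]
```

Here $\gamma$ is the discount factor and the expectation is taken over transitions sampled from the replay memory.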

5.2. Main Limitation of the Method

5.3. Future Research Prospects

1. Establishing a higher-precision kinematic model of the mining vehicles to simulate their actual movement capabilities;

2. Optimizing collaborative operation between the mining vehicle cluster and the relay stations;

3. Continuing to improve the performance of the path planning algorithm and reducing the path coverage and energy consumption of mining vehicles while meeting basic requirements;

4. Building on A3C and DDPG, further researching wide-area coverage path planning strategies for deep-sea mining vehicle clusters;

5. Exploring solutions to the hose twisting problem through constraint design or system hardware design;

6. After completing the theoretical research at the pre-project stage of deep-sea mining, conducting experiments in actual environments at the mid-project stage to validate and improve the path-planning strategy proposed in this paper. Performance data from real deep-sea mining experiments will also be collected and analyzed to further strengthen the paper's contribution.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Value | State |
|---|---|
| 0 | Uncovered security area |
| 1 | Covered security area |
| −1 | Dangerous area with obstacles |
| 7 | Area currently occupied by the mining vehicle |
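The cell codes in the table above can be illustrated with a small grid-map sketch. The following is a minimal example, not the authors' implementation: the four-connected action set, the function names, and the rule that a blocked move leaves the map unchanged are all assumptions made for illustration.

```python
from enum import IntEnum


class Cell(IntEnum):
    """Grid-cell codes taken from the state table above."""
    UNCOVERED = 0    # uncovered security area
    COVERED = 1      # covered security area
    OBSTACLE = -1    # dangerous area with obstacles
    VEHICLE = 7      # area currently occupied by the mining vehicle


# Hypothetical action set: four-connected moves as (row, col) offsets.
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def find_vehicle(grid):
    """Return the (row, col) of the cell marked as the vehicle."""
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            if value == Cell.VEHICLE:
                return r, c
    raise ValueError("grid contains no vehicle cell")


def step(grid, action):
    """Apply one move in place; return True if the vehicle moved.

    Moves that leave the map or enter an obstacle are rejected and the
    grid is left unchanged (an assumption of this sketch).
    """
    r, c = find_vehicle(grid)
    dr, dc = MOVES[action]
    nr, nc = r + dr, c + dc
    inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
    if not inside or grid[nr][nc] == Cell.OBSTACLE:
        return False
    grid[r][c] = Cell.COVERED      # the cell just left counts as covered
    grid[nr][nc] = Cell.VEHICLE
    return True


def coverage_ratio(grid):
    """Fraction of non-obstacle cells that are covered or occupied."""
    free = [v for row in grid for v in row if v != Cell.OBSTACLE]
    done = [v for v in free if v in (Cell.COVERED, Cell.VEHICLE)]
    return len(done) / len(free)
```

A grid is a plain list of lists of integers, so a sub-map such as `[[7, 0, -1], [0, 0, 0]]` can be passed to `step` and `coverage_ratio` directly.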

| Framework | Language | CPU | GPU | RAM |
|---|---|---|---|---|
| PyTorch | Python 3.11 | Intel-12490F (Intel, Santa Clara, CA, USA) | RTX4060Ti (NVIDIA, Santa Clara, CA, USA) | 32 GB |

| Parameter | Value | Definition |
|---|---|---|
| | 0.001 | learning rate |
| | 0.9 | discount factor |
| | 100,000 | training sample repository |
| | 128 | batch size |
| | 10,000 | maximum of training episodes |
| | 200 | parameter update frequency |
| | 0.125 | adjustment factor for coverage |
| | 0.0625 | adjustment factor for |
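To show how the training parameters above typically fit together, the following is a minimal sketch of a traditional DQN configuration with a uniform replay memory and the one-step target. It is not the authors' code: the field names are hypothetical, "parameter update frequency" is read here as the target-network synchronization period (an assumption), and uniform sampling is the standard baseline rather than the improved sample selection mechanism described in the paper. The truncated 0.0625 entry is omitted because its meaning is not recoverable.

```python
import random
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class DQNConfig:
    """Values from the parameter table; the field names are hypothetical."""
    learning_rate: float = 0.001
    discount_factor: float = 0.9
    replay_capacity: int = 100_000     # "training sample repository"
    batch_size: int = 128
    max_episodes: int = 10_000
    update_frequency: int = 200        # assumed: target-network sync period
    coverage_factor: float = 0.125     # adjustment factor for coverage


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done)."""

    def __init__(self, capacity):
        self._items = deque(maxlen=capacity)   # oldest samples drop out first

    def push(self, transition):
        self._items.append(transition)

    def sample(self, batch_size, rng=random):
        # Uniform sampling, as in a traditional DQN.
        return rng.sample(list(self._items), batch_size)

    def __len__(self):
        return len(self._items)


def td_target(reward, next_q_values, done, gamma):
    """One-step target: r if terminal, else r + gamma * max_a' Q(s', a')."""
    return reward if done else reward + gamma * max(next_q_values)


def should_sync_target(step_count, cfg):
    """True on every cfg.update_frequency-th learning step."""
    return step_count > 0 and step_count % cfg.update_frequency == 0
```

In a training loop, learning would typically start once `len(buffer) >= cfg.batch_size`, with `should_sync_target` deciding when the target network copies the online network's parameters.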

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xing, B.; Wang, X.; Liu, Z. The Wide-Area Coverage Path Planning Strategy for Deep-Sea Mining Vehicle Cluster Based on Deep Reinforcement Learning. J. Mar. Sci. Eng. 2024, 12, 316. https://doi.org/10.3390/jmse12020316
