Abstract

Turbulence arises frequently in engineering and scientific applications and involves a great deal of uncertainty and complexity, so it requires careful modeling and simulation. Traditional modeling methods, however, pose certain difficulties. As computer technology continues to improve, machine learning has proven to be a useful solution to some of these problems. To promote the development of turbulence modeling based on data-driven machine learning, this paper first reviews, in chronological order, the development of turbulence modeling techniques and of machine learning applications in turbulence modeling. It then examines the application of different algorithms to turbulent flows and discusses some methods for data assimilation. Based on this review, analysis, and discussion, the paper identifies limitations in the development of the field and suggests related developments and goals. In some respects, it may therefore serve as a guide for further development.

1. Introduction

Turbulence occurs frequently in engineering and scientific applications and is associated with a great deal of uncertainty and complexity. As a result, appropriate modeling and simulation studies are required. Turbulence modeling is the branch of fluid mechanics that uses numerical computation and analysis to study complex turbulent flows in fluid systems [1].

Many fields in engineering and science utilize turbulence modeling, including aircraft aerodynamic noise control, automotive aerodynamics optimization, ship flow resistance and stability analysis, oil drilling flow simulation, and atmospheric and ocean simulation. Engineers can use turbulence modeling to gain a deeper understanding of fluid systems, optimize them, and improve the accuracy and reliability of their calculations. Several examples can be found in refs. [2,3,4,5].

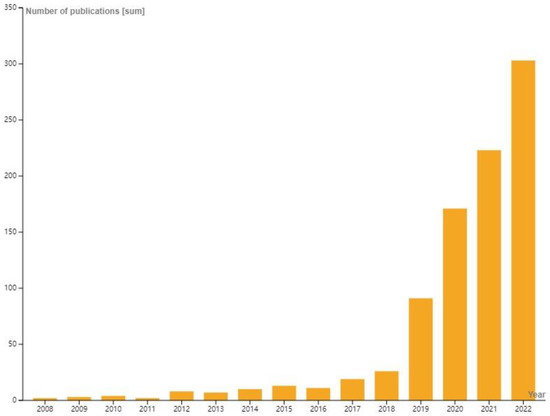

Traditional turbulence models, such as Reynolds-averaged Navier-Stokes (RANS) models, are limited by oversimplified assumptions, an incomplete physical description, mesh dependence, and unreliable data, all of which affect their accuracy and reliability. Higher-fidelity methods, such as direct numerical simulation (DNS) and large eddy simulation (LES), require large computational resources and therefore incur high simulation costs [6]. Wu et al. found that RANS simulations with turbulence closure models are limited for flows with nonequilibrium turbulence [7]; Ishihara et al. reported that the number of degrees of freedom required by DNS increases rapidly with the Reynolds number (Re) [8]; and according to Yang, LES still needs to address important modeling and numerical challenges, such as accurately simulating transitional flows and gas turbine combustor flows [9]. These shortcomings can cause many difficulties in engineering projects, including increased costs and increased safety risks.

Recent years have seen rapid advances in information and computer technology. Thanks to cloud computing, high-performance processors, big data analytics, and machine learning, computers can now process information more efficiently than ever before [10]. As Figure 1 shows, the application of data-driven machine learning methods to turbulence modeling has gradually gained attention. Machine learning algorithms can extract features from experimental or simulated turbulence data and make predictions from them, which gives data-driven methods several advantages over traditional modeling techniques.

Figure 1. The number of published results on machine learning turbulence modeling in the Web of Science.

In this paper, we aim to contribute to the development of machine learning-based turbulence modeling. We first review the history of turbulence modeling, analyze the characteristics of the approaches proposed in different periods, and identify some limitations of the traditional methods. We then review, analyze, and discuss the relevant algorithms for turbulence modeling with data-driven machine learning, together with some methods for optimizing data. The final section presents recommendations based on the obstacles discussed throughout the paper. These limitations and suggestions are intended to help researchers in this field understand the current state of the research and identify directions for its future development, so that the paper can serve, to a certain extent, as a guide for the development of turbulence modeling using data-driven machine learning.

2. Literature Review

2.1. History of Turbulence Modeling

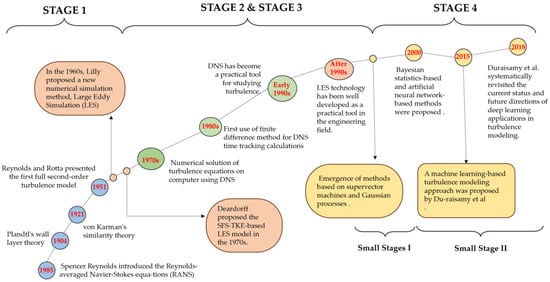

As shown in Figure 2, the development of turbulence modeling methods can be described in four stages. The first stage is traditional turbulence modeling, developed gradually since the 1950s and based mainly on the Reynolds-averaged Navier-Stokes (RANS) equations. The second stage is direct numerical simulation (DNS), which began to mature in the 1970s. DNS provides more accurate information on the turbulent flow field, but it is computationally costly and is feasible only at low Reynolds numbers. The third stage is large eddy simulation (LES), which has better physical description capabilities than RANS but has limitations as well. The fourth stage is machine learning-based turbulence modeling, which can be divided into two sub-stages. In the first sub-stage, statistical learning is used: the turbulence data are processed, features are extracted, and models are constructed using machine learning algorithms. The second sub-stage uses deep learning techniques, which learn the model directly from the data. These algorithms are efficient and versatile; they can handle more complex turbulent flow fields and improve the prediction capability and accuracy of the model.

Figure 2. An overview of the development history of turbulence modeling. Because the stages overlap, the figure distinguishes them by color: blue for the first stage, green for the second, orange for the third, and yellow for the fourth.

2.1.1. Reynolds Averaged Navier-Stokes Equation Model

As early as 1895, Reynolds introduced the Reynolds-averaged Navier-Stokes (RANS) equations [4], which describe turbulent flows. By incorporating approximations based on turbulence properties, these equations provide an approximate time-averaged solution to the Navier-Stokes equations. Zero-equation models were developed first [11,12]. These models were built from empirical and experimental data: the flow near a wall was assumed to be stable and was described by the wall friction, and the characteristic length scale of the flow was determined using the wall friction velocity and pressure.
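For reference, the time averaging behind these equations can be written out in its standard incompressible textbook form. The notation is an assumption of this sketch rather than something taken from the cited works: an overbar denotes the time average, a prime the fluctuation, $\rho$ the density, and $\nu$ the kinematic viscosity.

```latex
\begin{align}
u_i &= \bar{u}_i + u_i', \qquad \overline{u_i'} = 0, \\
\frac{\partial \bar{u}_i}{\partial x_i} &= 0, \\
\frac{\partial \bar{u}_i}{\partial t}
  + \bar{u}_j \frac{\partial \bar{u}_i}{\partial x_j}
  &= -\frac{1}{\rho}\frac{\partial \bar{p}}{\partial x_i}
  + \nu \frac{\partial^2 \bar{u}_i}{\partial x_j \partial x_j}
  - \frac{\partial \overline{u_i' u_j'}}{\partial x_j}.
\end{align}
```

The last term contains the Reynolds stresses $\overline{u_i' u_j'}$, which are not known from the mean flow alone. Zero-equation, one-equation, and higher-order models are different ways of closing this term.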

Following the zero-equation models, one-equation models were developed [13]. These models consider the transport of turbulent kinetic energy without factoring in the turbulent eddy viscosity. The Spalart-Allmaras model [14] is a well-known one-equation model; other models cited in this line of development include the Baldwin-Lomax model [15] and the Sarkar model [16]. However, one-equation models have limitations and struggle to accurately model rotating flows and pressure gradients. To overcome these limitations, full second-order models were developed by incorporating the transport of turbulent kinetic energy, the turbulent dissipation rate, and the Reynolds stress tensor [17]. Reynolds and Rotta proposed the first full second-order model in 1951 [18], but it had limitations regarding non-uniform and rotating flows. Scholars continued to improve and modify the model, leading to models such as the Reynolds Stress Transport Equation Model (RSTM) by Launder and Spalding in 1972 [19] and the k-ω model proposed by Wilcox in 1988 [20].

2.1.2. Direct Numerical Simulation

The direct numerical simulation (DNS) method is used for solving the Navier-Stokes equations that describe fluid motion. In DNS, the equations are solved numerically on a computer without using any modeling or simplifying assumptions. Since its development in the 1970s and 1980s, the method has become an important tool in the study of turbulence [21].

Among the key developments of the DNS method was the use of time-tracking techniques [22]. In the early days of DNS, the equations were solved in Fourier space using spectral methods [23]. However, this method was not able to capture the full range of turbulence scales. In contrast, time-tracking methods allow for the direct calculation of turbulence at its full extent. DNS time-tracking calculations were first performed using finite difference methods in the early 1980s [24]. The Navier-Stokes equations could be directly calculated in physical space without any assumptions regarding the flow. However, the computational requirements of these methods were so high that DNS did not become a practical tool for the study of turbulence until the late 1980s and early 1990s. DNS is widely used in turbulence research today and has provided many important insights into turbulence physics [25]. Despite this, the method remains computationally expensive and is limited to relatively simple geometries and flow configurations.
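The cost of DNS can be made concrete with the classical Kolmogorov scaling argument, which is not taken from the works cited above: the ratio of the largest to the smallest turbulent scale grows like Re^(3/4), so a three-dimensional grid needs on the order of Re^(9/4) points. The sketch below evaluates this order-of-magnitude estimate; the function name is hypothetical and the prefactor is deliberately omitted.

```python
def dns_grid_points(reynolds_number):
    """Order-of-magnitude grid-point count for 3D DNS, N ~ Re^(9/4).

    Assumption: classical Kolmogorov scaling with the prefactor set to 1,
    so only the growth rate with Re is meaningful, not the absolute value.
    """
    return reynolds_number ** (9.0 / 4.0)


for reynolds in (1e3, 1e4, 1e5):
    print(f"Re = {reynolds:.0e}: N ~ {dns_grid_points(reynolds):.1e}")
```

Each tenfold increase in Re multiplies the estimated grid size by about 180, before the smaller time step is even taken into account.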

2.1.3. Large Eddy Simulation

LES was developed in the 1960s, when Lilly proposed it as a new numerical simulation method for turbulence in the atmospheric boundary layer [26]. LES resolves the large-scale structures of the flow directly and parameterizes the small-scale structures, which reduces the computational cost relative to direct numerical simulation (DNS).

LES was widely used and developed in the following decades. To describe the small-scale structure of turbulent flows, Deardorff proposed in the 1970s an LES model based on the subfilter-scale turbulent kinetic energy (SFS-TKE) [27]. LES was first applied to the simulation of cloud boundary layers in the 1980s [28], and it was further developed in the 1990s.

LES has also been applied in other fields such as engineering [29], marine science [30], and environmental science [31]. As computer technology continues to develop, the computational capability available to LES has been greatly enhanced, and its models and algorithms have been continuously improved and optimized. Examples include LES models based on wall functions [32] and LES models based on hybrid approaches [33].
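The split between directly solved large scales and parameterized small scales can be illustrated with a spatial filter. The sketch below is a minimal one-dimensional illustration, assuming a periodic box filter and a made-up velocity signal; it is not the filter or model of any cited study.

```python
import math


def box_filter(signal, width):
    """Periodic moving-average (box) filter; `width` is assumed to be odd."""
    n = len(signal)
    half = width // 2
    return [
        sum(signal[(i + k) % n] for k in range(-half, half + 1)) / width
        for i in range(n)
    ]


n = 64
x = [2.0 * math.pi * i / n for i in range(n)]
# Hypothetical velocity signal: one large-scale wave plus one small-scale wave.
u = [math.sin(xi) + 0.2 * math.sin(16.0 * xi) for xi in x]

u_resolved = box_filter(u, width=9)                     # large scales, solved directly in LES
u_subgrid = [ui - ri for ui, ri in zip(u, u_resolved)]  # small scales, left to the model

energy_resolved = sum(v * v for v in u_resolved)
energy_subgrid = sum(v * v for v in u_subgrid)
```

In this toy signal the filtered field keeps most of the energy, while the small remainder is the part an LES subgrid model would have to represent.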

2.1.4. Machine Learning-Based Turbulence Modeling

The first sub-stage of this period dates back to the 1980s, when wavelet analysis was used for analyzing the local features of turbulent signals [34]. Methods based on support vector machines [35] and Gaussian processes emerged in the 1990s [36]. Around 2000, Bayesian statistics-based [37] and artificial neural network-based methods were proposed [38].

The second sub-stage began roughly in 2015, when Zhang and Duraisamy proposed a machine learning-based turbulence modeling approach that uses statistical inference and machine learning techniques to calibrate uncertainty in the RANS model [39]. In 2016, Parish and Duraisamy developed this approach and applied it to the LES model. To calibrate the traditional turbulence model, they used statistical inference to extract model discrepancies from the dataset and embedded them into the RANS solver using machine learning techniques [40]. In 2017, Singh et al. used the same strategy, embedding the inferred model discrepancies into the Spalart-Allmaras (SA) model, and reported significant improvements for turbulent flow [41]. Deep learning has been applied to turbulence modeling more and more frequently since then. In 2018, Duraisamy et al. systematically reviewed the current status and future directions of deep learning applications in the field of turbulence modeling. They note that deep learning has been applied to turbulence modeling with some initial success, but that numerous challenges and problems remain to be resolved [42].

2.1.5. Comparison of the Characteristics of Different Stages

Table 1 summarizes the results of the above review. The analysis indicates that the RANS, DNS, and LES models suffer from high computational costs, high computational difficulty, low computational efficiency, and a lack of wide applicability. Machine learning techniques can be used to address these problems effectively. The machine learning approach to turbulence modeling, however, requires a large amount of data to train the model, and it faces challenges in collecting, processing, and selecting the data, as well as in generalizing the model. Further research and exploration are therefore necessary to refine the application of the method. Section 3 and Section 4 of this paper discuss these limitations and the related development proposals.

Table 1. Disadvantages of different turbulence modeling methods.

2.2. Elements of Data-Driven Modeling

This section analyzes and discusses the data and algorithms used in data-driven, machine learning-based turbulence modeling.

2.2.1. Related Algorithms

Turbulence modeling algorithms include Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), Random Forest, Support Vector Machines (SVM), Deep Reinforcement Learning (DRL), and Physics-informed Neural Networks (PINNs) [43].

Convolutional Neural Networks

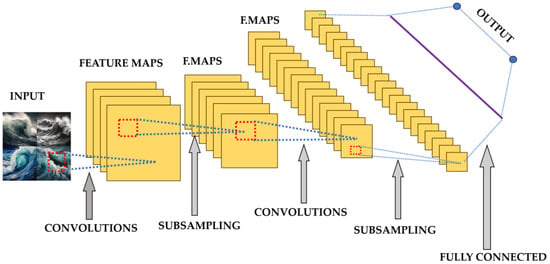

The convolutional neural network (CNN) is a type of deep neural network that is commonly used to recognize, classify, and process images and videos. It learns spatial hierarchies of features automatically and adaptively from the input data. The features are extracted using a mathematical operation known as convolution and are then passed through multiple layers of the network for further processing and classification. By using convolution, the network can identify patterns and features in the input data, including edges, corners, and textures, which can then be used to make predictions or classifications [44]. Figure 3 shows a typical convolutional neural network framework.

Figure 3. Typical CNN architecture.
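The convolution operation at the core of a CNN layer can be sketched in a few lines. The example below is a minimal illustration, assuming a made-up 4x5 scalar field and a hand-picked gradient kernel; in a trained CNN the kernel weights are learned from data rather than chosen by hand. As in most deep learning libraries, the kernel is not flipped, so the operation is strictly a cross-correlation.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation of a field with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Element-wise nonlinear activation applied after the convolution."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical field with a sharp step between columns 1 and 2.
field = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]
# Hand-picked kernel that responds to an increase from left to right.
kernel = [[-1.0, 1.0], [-1.0, 1.0]]

features = relu(conv2d(field, kernel))
```

The resulting feature map is nonzero only at the column where the step sits, which is the sense in which a convolutional layer detects local patterns such as edges.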

Turbulence modeling can benefit from the use of convolutional neural networks (CNNs). First, they are able to automatically learn and extract features from the input data, which is particularly helpful when dealing with complex and high-dimensional data, such as turbulent flow fields. Second, CNNs can capture spatial correlations and patterns in the data, which is important because turbulence is inherently spatially correlated. Third, CNNs can be trained on large datasets, which is crucial for developing accurate and robust turbulence models. Finally, CNNs can be used to predict complex quantities, such as heat transfer and pressure fluctuations, that are difficult to model using traditional turbulence models [45].

For optical orbital angular momentum (OAM) multiplexing communication, Xiong et al. proposed a CNN-based atmospheric turbulence (AT) compensation method. The model automatically extracts feature parameters from the intensity distribution of a vortex beam (VB) distorted by atmospheric turbulence. Their experimental results demonstrate that the proposed method improves the mode purity of the distorted VB from 26.91% to 93.12%. The CNN model consists of 11 convolutional layers and 4 deconvolutional layers, and it was optimized by adding input training data, which provided additional feature information about the relationship between inputs and outputs. Its advantages include the ability to automatically extract features from the input data, to learn from large datasets, and to generalize to new datasets. The method nevertheless has limitations. Because the experiments were conducted under specific atmospheric turbulence conditions, the method may perform differently under other conditions. Additionally, the authors emphasize that collecting and preparing a large amount of training data may prove difficult [46].

Guastoni et al. proposed a fully convolutional neural network (FCN) to predict the streamwise velocity field at multiple wall-normal locations in turbulent open channel flow. The FCN model was shown to accurately predict the mean flow as well as the streamwise velocity fluctuation profiles, including the near-wall peaks. However, the study is based on training data generated through direct numerical simulation (DNS) at a friction Reynolds number of 180, and the extent to which the approach applies to other Reynolds numbers or other types of wall-bounded flows is unclear [47,48].

Using a CNN, Zhang et al. modeled turbulence in a large virtual river with wall-mounted piers. The CNN algorithm has two parts, an encoder and a decoder: the encoder maps the input image into the feature space, and the decoder reconstructs the output image from the learned features. The encoder contains two types of layers, convolutional layers and sampling layers; each convolutional layer performs a convolution operation followed by a nonlinear activation function. The CNN is trained on LES results from training testbed rivers, using both instantaneous and time-averaged flow field data. Training methods with and without physical constraints are used; in the method without physical constraints, the CNN is trained to minimize the error between the LES results and the CNN predictions. Because the CNN was trained using the LES results from a training testbed river, it remains to be seen whether it can be generalized to other rivers with different geometries and boundary conditions [49].

Lapeyre et al. proposed a new approach to modeling premixed turbulent combustion using CNNs. To estimate the subgrid-scale flame wrinkling, the authors designed and trained a CNN on direct numerical simulation (DNS) data of premixed turbulent flames. The CNN model was found to be more effective than classical algebraic models at predicting subgrid-scale wrinkling and at extracting the topological properties of the flame. Using topological information to estimate unresolved flame wrinkling improves the accuracy, and the technique allows automated, multi-scale extraction of that information. The CNN in the study is based on the U-Net architecture, a type of CNN commonly used for image segmentation. U-Net architectures are characterized by a contracting path that captures context and a symmetric expanding path that enables precise localization. In the contracting path, convolutional and pooling layers downsample the input image, while in the expanding path, convolutional and up-sampling layers upsample the feature map. The performance of other CNN architectures may differ. In addition, the study only considers a specific slot burner configuration, and the method may need to be adjusted for other geometries or boundary conditions [50].

Liang et al. developed an artificial neural network (ANN) turbulence model using data generated with the Spalart-Allmaras (SA) turbulence model. Data-driven turbulence models based on ANNs have the potential to improve the convergence efficiency of RANS computations with complex turbulence models. However, because the ANN model was trained on data generated by the SA turbulence model, it may not generalize to other turbulence models or flow conditions [51].

Anantrasirichai et al. proposed a deep learning-based framework for mitigating atmospheric turbulence distortion in dynamic scenes. By using complex-valued convolution, the framework captures phase information that has been altered by atmospheric turbulence. The proposed framework consists of two modules: a distortion mitigation module and an optimization module. In order to train the models, synthetic and real datasets are combined. Despite strong atmospheric turbulence, the proposed approach performs well in experiments [52].

Recurrent Neural Networks

Recurrent neural networks (RNNs) are a class of neural networks designed to process sequential data, such as time series or natural language. RNNs contain loops that allow information to persist and be passed between steps in the network. For this reason, RNNs are particularly useful for tasks that involve the prediction or generation of data sequences [53].
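The loop that lets information persist can be sketched with a single scalar hidden state. This is a minimal Elman-style illustration with hand-picked weights, which are assumptions of the example; a real RNN uses weight matrices learned during training.

```python
import math


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = h0
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states


# A single impulse followed by zeros: later states are nonzero only
# because the hidden state carries information from the first step.
states = run_rnn([1.0, 0.0, 0.0])
```

The hidden state decays gradually after the impulse instead of dropping to zero, which is the memory effect that makes RNNs suitable for time series.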

There are several advantages to using recurrent neural networks (RNNs) for the modeling of turbulence [54,55,56].

- RNNs can be used to capture the time-dependent characteristics of data, which is important for modeling the time-varying nature of turbulence.

- RNNs can handle variable-length input sequences, which is useful when modeling turbulent data that may have different lengths.

- RNNs can be trained using backpropagation through time (BPTT), a powerful optimization algorithm that can efficiently train RNNs on large datasets.

Hassanian et al. developed deep learning (DL) prediction models using RNN methods, specifically long short-term memory (LSTM) and gated recurrent units (GRU). The time series data came from a laboratory turbulence experiment and were extracted using two-dimensional Lagrangian particle tracking (LPT). The proposed method predicts the turbulent velocity field accurately, with a low mean absolute error (MAE) and a high coefficient of determination (R^2). Because the method was tested only on this limited dataset, further research is required to extend it to other datasets [57].
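The two metrics named above have standard definitions, sketched here for a short velocity series. The sample values are made up for illustration and are not data from the cited study.

```python
def mean_absolute_error(y_true, y_pred):
    """MAE: average magnitude of the prediction error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured and predicted velocities.
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]

mae = mean_absolute_error(measured, predicted)  # about 0.15
r2 = r2_score(measured, predicted)              # about 0.98
```

An MAE close to zero and an R^2 close to one both indicate that the predictions track the measurements closely.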

Guastoni et al. investigated the predictive power of recurrent neural networks in a low-order model of near-wall turbulence. The results demonstrate that turbulence statistics and the dynamic behavior of the flow can be well predicted by a properly trained long short-term memory (LSTM) network, with relative errors below 1%. In addition, the study highlights the importance of defining the stopping criteria based on the calculated statistics and using more complex loss functions in order to improve the speed of the neural network training. However, there is no comprehensive comparison of the proposed neural network model with other state-of-the-art methods for predicting turbulence, such as large eddy simulations (LES) and direct numerical simulations (DNS) [58].

Elsaraiti et al. proposed a modified long short-term memory (LSTM) model for wind speed prediction that updates the network state in each prediction using observations rather than gates. The authors report that this model yields more accurate wind speed predictions [59].

Mehdipour et al. proposed a hybrid network that combines recurrent neural networks (RNNs), which consider the temporal dependence of the data recorded at each site, with convolutional neural networks (CNNs), which take into consideration the spatial correlations among neighboring sites. Both serial (tandem) and parallel connections of the RNNs and CNNs are considered, and a number of popular data interpolation techniques are used to check the robustness of the hybrid network to missing values. With the serial-parallel hybrid network and mean interpolation, the method achieves the lowest error in predicting traffic flow. Whether trained on complete or incomplete data, the model remains robust when applied to incomplete test data with missing rates of up to 21% [60].

Using long short-term memory (LSTM) neural networks, Mohan et al. proposed a deep learning approach to construct reduced-order models (ROMs) for turbulence control applications. A ROM represents the key physical features of the problem without solving the full Navier-Stokes equations. With dimensionality reduction techniques, such as proper orthogonal decomposition (POD) coupled with Galerkin projection, the high-dimensional dynamics of the flow field are projected onto a low-dimensional subspace. The simulations were based on canonical DNS datasets, namely the Forced Isotropic Turbulence dataset (ISO) and the Magnetohydrodynamic Turbulence dataset (MHD). The ISO dataset was derived from a 3D DNS of the Navier-Stokes equations solved with a spectral approach on a 1024^3 grid and retains 5023 time steps of 3D data frames. The MHD dataset is derived from a 3D DNS on a grid size of 1024 by 384 and contains 1024 time steps of 3D data frames. All of the results in the study were presented for the U-velocity field. According to this study, LSTM networks can model the temporal dynamics of turbulent flows, capturing the key physics of the flow field without computing the full Navier-Stokes equations [61].

Eivazi et al. modeled unsteady fluid flows using long short-term memory (LSTM) networks, a recurrent neural network architecture designed for learning sequential data such as numerical or experimental time series. By combining autoencoders with LSTM networks, the approach exploits nonlinear dimensionality reduction and sequential data learning to model unsteady flows non-intrusively, which can make reduced-order modeling more efficient. Conventional methods, by contrast, may have difficulty capturing the complex nonlinear dynamics of unsteady fluid flows [62].
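The dimensionality reduction step can be written compactly. The formulation below is the common snapshot form of POD based on the singular value decomposition; it is given as an illustration under that assumption, and the exact procedure of the cited study may differ. Here $\mathbf{u}(t_j) \in \mathbb{R}^n$ is the (mean-subtracted) flow field at time $t_j$, $m$ is the number of snapshots, and $r \ll n$ is the number of retained modes.

```latex
\begin{align}
X &= \begin{bmatrix} \mathbf{u}(t_1) & \mathbf{u}(t_2) & \cdots & \mathbf{u}(t_m) \end{bmatrix}
   = U \Sigma V^{\mathsf{T}}, \\
\mathbf{u}(t) &\approx \sum_{k=1}^{r} a_k(t)\, \boldsymbol{\phi}_k,
\qquad a_k(t) = \boldsymbol{\phi}_k^{\mathsf{T}} \mathbf{u}(t),
\end{align}
```

where $\boldsymbol{\phi}_k$ is the $k$-th column of $U$. A sequence model such as an LSTM then only needs to advance the $r$ coefficients $a_k(t)$ in time, rather than the full $n$-dimensional field.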

Random Forest

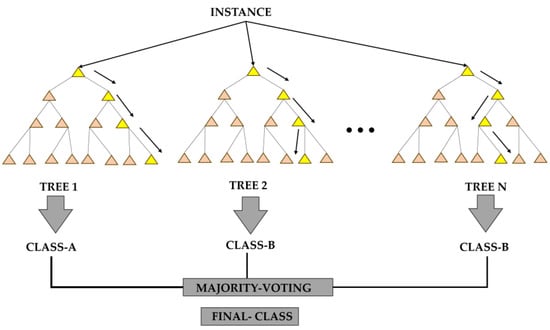

The random forest algorithm combines multiple decision trees into an ensemble. Individual decision trees are themselves useful for modeling fluids; for example, random tree algorithms have proven useful in hydraulics for predicting the stone size needed to protect dams, embankments, and other structures from being eroded by overtopping flows [63,64,65]. A random forest trains each decision tree on a randomly selected set of features [66]. It is a powerful tool for modeling turbulence due to its robustness, accuracy, and ability to handle complex data [67]. Figure 4 shows a simple example of the random forest algorithm.

Figure 4. A simple example of the random forest algorithm. Colors highlight feature selection: at each node of a decision tree, a subset of features is randomly selected, and the yellow triangles indicate the selected subset.
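The two sources of randomness in a random forest, bootstrap resampling of the data and random selection of candidate features, can be sketched as follows. This is a deliberately reduced illustration that uses depth-one regression trees (stumps) and toy data; all names and values are assumptions of the example, not part of any cited method.

```python
import random


def fit_stump(rows, targets, feature):
    """Best single-threshold split on one feature (a depth-1 regression tree)."""
    best = None
    for threshold in sorted({row[feature] for row in rows}):
        left = [t for row, t in zip(rows, targets) if row[feature] <= threshold]
        right = [t for row, t in zip(rows, targets) if row[feature] > threshold]
        if not left or not right:
            continue
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((t - left_mean) ** 2 for t in left)
                 + sum((t - right_mean) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, feature, threshold, left_mean, right_mean)
    return best


def fit_forest(rows, targets, n_trees=25, n_sub_features=1, seed=0):
    """Train stumps on bootstrap samples, each restricted to a random feature subset."""
    rng = random.Random(seed)
    n_features = len(rows[0])
    forest = []
    while len(forest) < n_trees:
        idx = [rng.randrange(len(rows)) for _ in rows]           # bootstrap sample
        sample_rows = [rows[i] for i in idx]
        sample_targets = [targets[i] for i in idx]
        candidates = rng.sample(range(n_features), n_sub_features)  # random features
        stumps = [fit_stump(sample_rows, sample_targets, f) for f in candidates]
        stumps = [s for s in stumps if s is not None]
        if stumps:
            forest.append(min(stumps, key=lambda s: s[0])[1:])
    return forest


def predict(forest, row):
    """Average the predictions of all trees in the ensemble."""
    votes = [lm if row[f] <= th else rm for f, th, lm, rm in forest]
    return sum(votes) / len(votes)


# Hypothetical toy data: feature 0 drives the target, feature 1 is unrelated.
rows = [[i / 10, ((7 * i) % 10) / 10] for i in range(10)]
targets = [0.0 if i < 5 else 1.0 for i in range(10)]

forest = fit_forest(rows, targets)
low = predict(forest, [0.0, 0.5])
high = predict(forest, [0.9, 0.5])
```

Averaging over many randomized trees is what gives the ensemble its robustness; production implementations grow much deeper trees and repeat the feature selection at every node, as Figure 4 indicates.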

Nagawkar et al. proposed a new random forest-based machine learning algorithm that improves low-fidelity potential flow fields in order to predict high-fidelity Reynolds-averaged Navier-Stokes (RANS) flow fields. The results show that the algorithm predicts the RANS flow field with improved accuracy. In the first two cases, its relative L2-norm error is consistently lower than that of TensorFlow Foam (TFF). In the third case, the pressure and friction coefficients are better predicted for the RAE 2822 airfoil than for the NACA 0012 airfoil. However, the study was limited to three cases, and the performance of the algorithm may vary for other cases [68].

Afzal et al. used a random forest algorithm for the regression of base and wall pressures. Highly nonlinear and sensitive data are very difficult to model mathematically, which makes the random forest algorithm well suited to predicting such data and makes regression analysis on large datasets of this kind much easier [69].

Williams et al. used random forests to diagnose aviation turbulence associated with thunderstorms. A variety of data sources are incorporated into the algorithm, including Doppler radar, geosynchronous satellites, lightning detection networks, and numerical weather prediction models. A retrospective dataset that includes objective turbulence reports and synoptic prediction data from commercial aircraft is used to train the algorithm. Multiple performance metrics, including receiver operating characteristic curves, are evaluated on an independent test set. For air traffic managers, dispatchers, and pilots, the algorithm generates deterministic and probabilistic turbulence evaluations [70].

In Matha et al., machine learning methods, in particular random forests, are employed to quantify epistemic uncertainty in turbulence models. A random forest approach is used to perturb the Reynolds stress tensor using eigenspace perturbations. In their study, the authors evaluate the effect of different hyperparameters on the accuracy of the random regression forest model, and they train the model according to different Reynolds numbers. According to the results, the proposed method can effectively estimate the uncertainty bound for computational fluid dynamics. However, the paper does not provide any numerical results or comparisons with other methods [71].
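For readers unfamiliar with eigenspace perturbation, the idea is usually written in terms of the eigendecomposition of the Reynolds stress anisotropy tensor. The form below is the commonly used formulation from the uncertainty quantification literature and is given here as an assumption, since the exact variant used in the cited study is not stated in this review. Here $k$ is the turbulent kinetic energy, $v_{in}$ holds the eigenvectors, $\Lambda_{nl}$ is the diagonal matrix of eigenvalues, and starred quantities are perturbed.

```latex
\begin{align}
a_{ij} &= \frac{R_{ij}}{2k} - \frac{\delta_{ij}}{3} = v_{in}\, \Lambda_{nl}\, v_{jl}, \\
R_{ij}^{*} &= 2k^{*} \left( \frac{\delta_{ij}}{3} + v_{in}^{*}\, \Lambda_{nl}^{*}\, v_{jl}^{*} \right).
\end{align}
```

In this setting, the role of a machine learning model such as a random forest is to suggest how strongly the eigenvalues, eigenvectors, or kinetic energy should be perturbed at each location, instead of applying a uniform worst-case perturbation.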

Muoz-Esparza uses random forest models to predict turbulence in aviation. In order to facilitate manipulation within the ML modeling framework, a dataset of 2.42 million model-observation pairs, including 114 predictors for each comparison instance, was created and arranged into a 2D array. After conducting sensitivity analysis of the two most relevant hyperparameters of the RF algorithm, namely the number of trees and maximum tree depth, the researchers concluded that the reduction in the tree depth caused a reduction in the skill for low EDRs (0.1 m2/3 s−1), while td > 30 did not lead to significant improvements. When considering the number of trees in the forest, nt = 100 provided good results, with more trees adding negligible skill to the model, while a decrease in nt resulted in a significant decrease in skill. In this paper, the authors demonstrated how the RF model can be used to improve the turbulence prediction skill, particularly by reducing the frequency of false alarms in conditions of null or very light turbulence, as well as simplifying the current turbulence prediction methodology [72].

To improve the ability to model separated flows, Ho et al. used a random forest machine learning approach. The authors used field inversion and machine learning (FIML) to obtain correction factors for the production term in a given dissipation rate equation, with adjoint methods used in the inversion. They then used the inversion results to train a random forest model and tested it on three unseen cases, including a 3D non-axisymmetric bump. In all test cases, applying the FIML method improved the prediction of the mean velocity and turbulent kinetic energy. The authors also discussed the importance of feature selection and feature importance analysis in improving the accuracy of the random forest model. The main contribution of this paper is therefore a new method for improving the turbulence model, together with a demonstration of its effectiveness. However, only a specific range of Reynolds numbers is considered in this study, and the effects at other Reynolds numbers may differ [73].

Support Vector Machines

The support vector machine (SVM) is a machine learning algorithm used for classification and regression analysis. For classification, the method determines the hyperplane that best separates the data into different categories. Support vector machines handle both linear and nonlinear classification problems and are particularly useful for high-dimensional data. They are also known for their ability to handle noisy data and outliers [74]. Many scholars have attempted to model or predict turbulent phenomena using SVM algorithms. SVM regression can be used to correlate the input features of a turbulent flow field with the corresponding output labels. The input features may include physical quantities of the flow field, such as the velocity and pressure, while the output labels indicate specific characteristics or behaviors of the turbulence. By analyzing the relationship between the input features and the output labels, the SVM regression algorithm produces a regression equation that describes the turbulence phenomenon. Regression equations learned from large amounts of turbulence data can thus support a better understanding of the nature and evolution of turbulence, as well as its prediction and control [75,76,77].
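As a minimal sketch of what SVM regression optimizes, the Python code below evaluates the ε-insensitive loss and the linear SVR objective for a given weight vector. It does not train a model. The feature choice (a velocity and a pressure value), the weights, and the parameter values are hypothetical and serve only to illustrate the formulation.

```python
from typing import Sequence


def predict(weights: Sequence[float], bias: float, features: Sequence[float]) -> float:
    """Linear regression function f(x) = w . x + b."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def epsilon_insensitive_loss(prediction: float, target: float, epsilon: float) -> float:
    """Errors smaller than epsilon cost nothing; larger errors cost linearly."""
    return max(0.0, abs(prediction - target) - epsilon)


def svr_objective(weights, bias, samples, targets, epsilon=0.1, c=1.0) -> float:
    """Linear SVR objective: 0.5 * ||w||^2 + C * sum of epsilon-insensitive losses."""
    margin_term = 0.5 * sum(w * w for w in weights)
    loss_term = sum(
        epsilon_insensitive_loss(predict(weights, bias, x), y, epsilon)
        for x, y in zip(samples, targets)
    )
    return margin_term + c * loss_term


# Hypothetical samples: each row is [velocity, pressure]; targets are a flow quantity.
example_samples = [[1.0, 2.0], [2.0, 0.0]]
example_targets = [2.05, 3.0]
example_value = svr_objective([1.0, 0.5], 0.0, example_samples, example_targets)
```

The first sample lies inside the ε-tube and contributes no loss, which is the property that makes the regression tolerant of small noise.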

Turbulence modeling can benefit from support vector machines (SVMs) in several ways. First, they display excellent generalization performance, making them suitable for problems with a limited number of samples. Second, they are capable of adapting to the noise distribution, which makes them effective at denoising signals. Third, they can be used for nonparametric regression modeling, which allows them to capture complex relationships between variables. Fourth, they can be tuned using bootstrap resampling techniques, which provide a reliable method for selecting the algorithm’s optimal free parameters [78,79].

To address the problem of wavenumber spectrum mismatch caused by invalid data in turbulence observations, Wang et al. proposed an SVM-based algorithm for matching wavenumber spectra. Cross-validation is used to obtain the class labels, and an SVM classifier is trained to determine the optimal parameters and kernel functions. Using sea trial data, the algorithm was found to match spectra with high accuracy and to reduce the subjective factors associated with the manual identification of wavenumber spectra. By matching the turbulent wavenumber spectra, this study offers a new method for calculating the turbulent kinetic energy dissipation rate, which improves the calculation efficiency and accuracy. Because of the complexity of the ocean environment and the limited amount of data available, more experiments and more extensive validation are required to confirm the applicability of the algorithm [80].

Zhang et al. proposed a correction algorithm for underwater turbulence-induced image distortions based on the estimated pixel movement. Using reference frame selection and two-dimensional pixel registration, the algorithm recovers and reconstructs distorted images. A support vector machine-based kernel correlation filtering algorithm is also proposed and applied to improve the speed and efficiency of the correction. The work is primarily concerned with developing a fast algorithm for correcting underwater turbulent image distortions. A controlled simulation system for turbulent water and field experiments in rivers and oceans were used to validate the algorithm. The authors compared the experimental results with conventional, theoretical model-based, and particle image velocimetry-based recovery and reconstruction algorithms. The proposed algorithm effectively suppresses image distortion and can be used for real-time underwater target detection [81].

According to Hanbay et al., least squares support vector machine (LS-SVM) models can be used to predict the flow conditions and aeration efficiency of stepped cascades. The models take as inputs the ratio between the critical flow depth and step height, the channel slope, and the flow conditions, and were found to predict the flow conditions and aeration efficiency accurately. However, this study considered only a few parameters of the stepped cascade, namely the ratio of the critical flow depth to step height, the channel slope, and the flow conditions, without considering other factors that may influence the aeration efficiency [82].

Deep Reinforcement Learning

Deep reinforcement learning (DRL) is a subfield of machine learning that combines deep neural networks with reinforcement learning algorithms, allowing agents to learn from their environment and make decisions based on their observations [83].

In the context of turbulence modeling, DRL has several advantages. As a data-driven approach that learns from experience, it is well suited to complex, nonlinear systems such as turbulence. It allows for adaptability and flexibility in the modeling process, as the agent can learn to adjust its policy based on the current environment. Because it optimizes a specific objective function, such as the energy spectrum of a turbulent flow, it can produce highly accurate models. In contrast to traditional analytical models, DRL models can be trained on a specific set of conditions and then applied to new conditions, making them more generalizable. Furthermore, DRL can automate the modeling process, which reduces the need for human intervention and expertise. Overall, DRL has the potential to provide accurate, adaptable, and automated turbulence models that can be applied to a wide range of conditions [83].

Novati et al. proposed a multi-agent reinforcement learning (MARL) method for the automatic discovery of closure models for subgrid-scale (SGS) physics in turbulence simulations. It is applied to large eddy simulations (LES) of isotropic turbulence with the aim of reproducing the energy spectrum predicted by direct numerical simulation (DNS) by controlling the coefficients of the eddy viscosity closure model. The main contributions of this work are the discovery of turbulence models using MARL, the incorporation of MARL agents into flow solvers, and a reward function based on the similarity between the statistics generated by the model and the reference data for the quantities of interest. The authors demonstrate the potential of their approach for the LES of isotropic turbulence and show that the learned turbulence model generalizes to different grid sizes and flow conditions. However, the method has only been tested in isotropic turbulence and may not be applicable to other types of turbulence. Additionally, it requires considerable computational resources and may not be practical for real-time applications [84].

Kurz et al. used deep reinforcement learning to improve the turbulence model in large eddy simulation (LES). A neural network-based reinforcement learning framework dynamically tunes the coefficients of the Smagorinsky model. A key contribution of this research is a new data-driven turbulence model that outperforms the Smagorinsky model and implicit modeling strategies in terms of the energy spectrum. The authors argue that reinforcement learning paradigms allow state-of-the-art turbulence models to be developed more efficiently, given the resources available on massively parallel systems. However, the proposed approach requires significant computational resources that may not be available to all researchers. Furthermore, reinforcement learning may not be applicable to all turbulence modeling problems, as its effectiveness may depend on the particular characteristics of the problem [85].
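To show where a tuned coefficient enters the closure, the Python sketch below evaluates the standard Smagorinsky eddy viscosity, ν_t = (C_s Δ)² |S| with |S| = sqrt(2 S_ij S_ij), for a given velocity gradient tensor. The reinforcement learning policy itself is not implemented; the coefficient value, filter width, and velocity gradient are hypothetical inputs standing in for what an agent would supply.

```python
import math
from typing import List


def strain_rate_magnitude(grad_u: List[List[float]]) -> float:
    """|S| = sqrt(2 S_ij S_ij), with S_ij the symmetric part of the velocity gradient."""
    total = 0.0
    for i in range(3):
        for j in range(3):
            s_ij = 0.5 * (grad_u[i][j] + grad_u[j][i])
            total += s_ij * s_ij
    return math.sqrt(2.0 * total)


def smagorinsky_eddy_viscosity(grad_u: List[List[float]], filter_width: float, c_s: float) -> float:
    """Eddy viscosity nu_t = (C_s * Delta)^2 * |S|."""
    return (c_s * filter_width) ** 2 * strain_rate_magnitude(grad_u)


# Hypothetical simple shear: du/dy = 10 1/s, all other gradients zero.
example_grad_u = [[0.0, 10.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
example_nu_t = smagorinsky_eddy_viscosity(example_grad_u, filter_width=0.1, c_s=0.17)
```

Because the coefficient enters quadratically, doubling C_s quadruples the eddy viscosity, which is why a learned, locally varying coefficient can change the resolved energy spectrum substantially.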

A further study combines scientific computing and multi-agent reinforcement learning (MARL) to automatically discover closure models for wall turbulence simulations. Within a large eddy simulation (LES) that solves the filtered Navier-Stokes equations, the method develops a wall model as a control strategy that recovers the correct mean wall shear stress. Although trained on moderate Reynolds number flows, the method performs well in extreme Reynolds number flows. Its robustness was evaluated by studying two models with different state spaces. The method can be applied to a wide range of grid resolutions and Reynolds numbers and can be extended to more complex geometries and flow configurations [86].

Linot et al. used deep reinforcement learning (RL) to reduce drag and control turbulent Couette flow. This work contributes the data-driven manifold dynamics RL framework (DManD-RL). There are two main learning objectives: obtaining a low-dimensional surrogate model of the underlying dynamics of the turbulent DNS, and using this model for RL training to obtain an effective control agent as quickly and efficiently as possible. The authors demonstrate that the DManD-RL agent can significantly reduce the drag of turbulent Couette flow and laminarize 21 of 25 turbulent conditions. However, DManD-RL requires a large dataset to learn an accurate surrogate model, which may be difficult to obtain for some systems. Furthermore, the framework may not be suitable for systems with highly complex dynamics or those that are difficult to model accurately [87].

For the application of deep reinforcement learning (DRL) to fluid dynamics, Wang et al. proposed an open source Python platform called DRLinFluids. The platform utilizes OpenFOAM, a popular Navier-Stokes solver, and Tensorforce or Tianshou, which are widely used DRL packages. The authors demonstrate the reliability and efficiency of DRLinFluids on two wake stabilization benchmark problems. The platform significantly reduces the workload of DRL applications in fluid mechanics, which is expected to accelerate academic and industrial research [88].

Another study uses deep reinforcement learning (DRL) to control the flow around a cylinder under turbulent conditions, with the aim of reducing the drag and lift fluctuations experienced by the cylinder. The authors demonstrate that DRL can find effective control strategies under turbulent conditions, but that the learning process requires more iterations to converge. They also propose two different learning strategies for more challenging control at higher Reynolds numbers. The study is limited to two-dimensional flows, and the computational cost of using DRL for hydrodynamic problems can be very high [89].

Zeng et al. proposed a method that combines neural ordinary differential equations (NODEs) with deep reinforcement learning (RL) to learn and implement flow control strategies. The method is demonstrated on the Kuramoto-Sivashinsky equation and planar Couette flow. According to the results, it can be applied to reduced-order models and full systems to learn control strategies that minimize energy losses and total power input. The authors also demonstrate that the method can stabilize unstable equilibria and improve the performance of turbulent Couette flow [90].

Physics-Informed Neural Networks

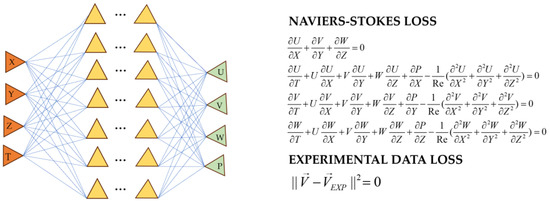

The physics-informed neural network (PINN) is a machine learning algorithm that combines neural networks with physical laws in order to solve complex problems in science and engineering [91]. PINNs seamlessly integrate noisy data with mathematical models, which makes them suitable for inverse problems in fluid dynamics, biomedical flow, and other fields. In PINNs, all differential operators are evaluated by automatic differentiation, which eliminates the need for explicit grid generation. The governing equations and any other constraints are incorporated directly into the loss function of the neural network, which is then minimized to reach a solution. Many problems related to compressible flows, turbulent convection, and free boundaries have been solved using PINNs [92]. Figure 5 illustrates a physics-informed neural network for solving the Navier-Stokes equations.

Figure 5.

Physics-informed neural network for solving the Navier-Stokes equations. Red triangles denote inputs, green triangles denote outputs, and yellow triangles denote end to end. The inputs X, Y, Z are the spatial coordinates and T is the time; the outputs U, V, W are the velocity components of the flow field and P is the pressure.
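The way the governing equations enter the loss function can be sketched as follows for the incompressible Navier-Stokes equations. This is a generic formulation under stated assumptions: the weight $\lambda$, the sample counts $N_d$ and $N_r$, and the omission of separate boundary and initial condition terms are illustrative choices, not details taken from the cited works.

```latex
% Network with parameters \theta maps coordinates to flow variables
(\mathbf{u}_\theta,\, p_\theta) = f_\theta(x, y, z, t), \qquad \mathbf{u}_\theta = (u, v, w)

% Residuals of momentum and continuity, evaluated by automatic differentiation
\mathbf{e}_{\mathrm{mom}} = \frac{\partial \mathbf{u}_\theta}{\partial t}
  + (\mathbf{u}_\theta \cdot \nabla)\,\mathbf{u}_\theta
  + \frac{1}{\rho}\nabla p_\theta - \nu \nabla^{2}\mathbf{u}_\theta,
\qquad
e_{\mathrm{div}} = \nabla \cdot \mathbf{u}_\theta

% Composite loss: data mismatch at N_d measurement points plus
% equation residuals at N_r collocation points
\mathcal{L}(\theta) = \frac{1}{N_d}\sum_{i=1}^{N_d}
  \left\lVert \mathbf{u}_\theta(\mathbf{x}_i, t_i) - \mathbf{u}_i \right\rVert^{2}
  + \frac{\lambda}{N_r}\sum_{j=1}^{N_r}
  \left( \left\lVert \mathbf{e}_{\mathrm{mom}}(\mathbf{x}_j, t_j) \right\rVert^{2}
  + e_{\mathrm{div}}(\mathbf{x}_j, t_j)^{2} \right)
```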

Pioch et al. used PINNs to model turbulence by training neural networks to satisfy the Reynolds-averaged Navier-Stokes (RANS) equations, turbulence models, and boundary conditions while matching DNS data. The PINNs are trained to predict the velocity and pressure fields of a two-dimensional turbulent backward-facing step flow using different turbulence models, including the mixing length model, the k-ω model, the νt model, and the pseudo-Reynolds stress model. A fully connected feedforward PINN was trained with different amounts of labeled data to compare the prediction quality of the turbulence models, and the PINN method was demonstrated as a surrogate model for steering or optimization problems, as well as an initial condition generator for computational fluid dynamics calculations. However, PINNs cannot accurately predict the corner vortices in backward-facing step flows if physics-based equations are not specified. PINN training can also be time-consuming, and it may not be suitable for all turbulence modeling applications [93].

Yang et al. applied physics-informed neural networks (PINNs) to predict wall shear stresses and spanwise velocities in large eddy simulations (LES) of incompressible turbulence. A key contribution of this research is the incorporation of physical insights into the model inputs, such as inputs based on the vertically integrated thin boundary layer equations and eddy population density scaling. In addition, the trained network is able to capture the law of the wall at arbitrary Reynolds numbers and outperforms traditional equilibrium models in non-equilibrium flows. The results suggest that data-based models can extrapolate to conditions that are not closely represented in the training data when prior knowledge is incorporated into the neural networks without imposing exact scaling, and that they can handle sparse and incompletely sampled parameter spaces [94].

Cruz et al. used physics-informed neural networks (PINNs) with the Reynolds force vector as a learning objective for turbulence modeling. The important contribution of this approach is that it predicts the Reynolds stress tensor and the Reynolds force vector field, which are important quantities for turbulence modeling. Additionally, using the Reynolds force vector as a learning target helps to reduce the errors that may arise when the Reynolds stress tensor is propagated to the mean velocity. However, this approach is computationally intensive and requires a large amount of data for training [95].

The Navier-Stokes equations for natural convection in three-dimensional turbulence were solved by Lucor et al. using physical information neural networks (PINNs). Their main contribution was demonstrating the effectiveness of PINNs in solving such problems and proposing some methodological improvements regarding data and residual sampling strategies. It is worth noting, however, that the study does not provide a posteriori error estimates or convergence theory, which limits the application of PINNs to more parametric surrogate models. Additionally, the high-dimensional nonconvex optimization problem with composite loss functions involves significant training costs as a result of the time integration of nonlinear PDEs and the depth of the neural network architecture [96].

Raissi et al. proposed a method to improve the performance of neural networks in small data environments by incorporating physical laws into them. In particular, the authors used the physics-informed neural network (PINN) approach to solve the partial differential equations (PDEs) governing fluid dynamics. They demonstrated the effectiveness of their approach using two examples: the Schrödinger equation and the Navier-Stokes equations for flow past a cylinder [97].

2.3. Data Assimilation

The above review shows that data are of the utmost importance for machine learning methods: training a model requires a large amount of high-quality data. Turbulence modeling draws on large amounts of experimental, numerical, and observational data, which must be processed with a suitable technique. One such technique is data assimilation, which combines actual observational data with model predictions to produce more accurate and reliable simulations. Some examples of data assimilation follow.

As a unified approach for data assimilation and the turbulence modeling of high Reynolds number separated flows, Wang et al. proposed an ensemble Kalman inversion method. A high-fidelity turbulence model that closely matches the experiment is obtained by combining data assimilation and model training into one step [98].

He et al. used a continuous adjoint formulation to assimilate turbulence data. The Spalart-Allmaras turbulence model is modified by multiplying the turbulence production term by a spatially varying coefficient β. The model form error is corrected by optimizing the β distribution to minimize the discrepancy between the predictions and observations. An eddy viscosity constraint is applied in order to avoid flow instability resulting from low eddy viscosity [99].
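The optimization problem behind this kind of correction can be sketched in a generic field inversion form. The regularization term and its weight $\lambda$ are assumptions added for illustration; the exact cost function and constraint handling used by He et al. are not given here.

```latex
% Corrected production term in the turbulence transport equation
P(\mathbf{x}) \;\longrightarrow\; \beta(\mathbf{x})\, P(\mathbf{x}), \qquad \beta = 1 \text{ recovers the baseline model}

% Cost function: mismatch between observations d^{obs} and model predictions d(\beta),
% with an assumed penalty keeping \beta close to the baseline value
J(\beta) = \sum_{i=1}^{N_{\mathrm{obs}}} \left( d_i^{\mathrm{obs}} - d_i(\beta) \right)^{2}
  + \lambda \sum_{j=1}^{N_{\mathrm{cells}}} \left( \beta_j - 1 \right)^{2}

% The adjoint equations supply the gradient dJ/d\beta for all cells at the cost of
% roughly one additional solve, enabling gradient-based updates with step size \alpha
\beta^{(n+1)} = \beta^{(n)} - \alpha\, \frac{\mathrm{d}J}{\mathrm{d}\beta}\bigg|_{\beta^{(n)}}
```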

Kato et al. proposed a method for optimizing turbulence model parameters using data assimilation. The proposed method yields a better match between the computed and experimental results for a1 = 1.0 than for a1 = 0.31; that is, the SST-2003 model performs better at a1 = 1.0 than at a1 = 0.31. These results indicate that the proposed data assimilation method is effective in optimizing the parameters of the turbulence model [100].

Cook et al. discussed the uncertainties associated with turbulence models and how they lead to suboptimal designs in design optimization. The authors propose a design-under-uncertainty approach to obtain an optimal design that is robust to structural uncertainties in turbulence models [101].

Mayo et al. proposed a new digital data processing technique for turbulence measurements obtained with laser velocimetry. The technique can estimate turbulence power spectra online from randomly timed samples at low data rates. It relies on an autocovariance estimator: the lag axis is discretized into lag units, the products of sample pairs occurring at each lag are accumulated, and the accumulated sum is divided by the histogram of lag-product counts to estimate the turbulence autocovariance at each lag. An estimate of the turbulent power spectrum is then obtained by calculating the discrete Fourier transform of the autocovariance [102].
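The estimator described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of Mayo et al.: sample times are assumed to be sorted in ascending order, the mean is removed before forming products, the spectrum is computed as a cosine transform of the (even) autocovariance, and the sample data are hypothetical.

```python
import math
from typing import List, Sequence


def slotted_autocovariance(times: Sequence[float], values: Sequence[float],
                           slot_width: float, n_slots: int) -> List[float]:
    """Autocovariance from irregularly timed samples (times sorted ascending)."""
    mean = sum(values) / len(values)
    fluct = [v - mean for v in values]
    sums = [0.0] * n_slots   # accumulated lag products per slot
    counts = [0] * n_slots   # histogram of lag-product counts per slot
    for i in range(len(times)):
        for j in range(i, len(times)):
            slot = int((times[j] - times[i]) / slot_width + 0.5)  # nearest lag slot
            if slot < n_slots:
                sums[slot] += fluct[i] * fluct[j]
                counts[slot] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def power_spectrum(autocov: Sequence[float], slot_width: float,
                   frequencies: Sequence[float]) -> List[float]:
    """Cosine transform of the even autocovariance at the requested frequencies."""
    spectrum = []
    for f in frequencies:
        total = autocov[0]
        for m in range(1, len(autocov)):
            total += 2.0 * autocov[m] * math.cos(2.0 * math.pi * f * m * slot_width)
        spectrum.append(slot_width * total)
    return spectrum


# Hypothetical, slightly irregular sample times of an alternating signal.
example_times = [0.0, 1.1, 1.9, 3.0]
example_values = [1.0, -1.0, 1.0, -1.0]
example_autocov = slotted_autocovariance(example_times, example_values, 1.0, 3)
example_spectrum = power_spectrum(example_autocov, 1.0, [0.0, 0.5])
```

In this toy example the alternating signal produces an autocovariance that changes sign from slot to slot, and the spectral estimate is larger at a frequency of 0.5 than at zero frequency.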

Data assimilation has been proposed by Franceschini et al. for the reconstruction of turbulent mean values at high Reynolds numbers from a few time-averaged measurements. Using a variational approach, the method involves correcting a given baseline model by adjusting the spatially dependent source terms in order to obtain solutions that are in agreement with the available measurements [103].

Meldi et al. proposed a reduced-order Kalman filter model for the sequential data assimilation of turbulent flows. A sequential estimator based on the Kalman filter is incorporated into the structure of a segregated solver for the analysis of incompressible flows. The proposed method provides an augmented flow state that integrates the available observations into the CFD model and naturally maintains the zero-divergence condition of the velocity field. Additionally, the paper presents two strategies for reducing the cost of a full Kalman filter application. The paper concludes that the proposed technique can effectively reduce the computational costs of Kalman filter applications while maintaining their accuracy [104].
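The core of any Kalman filter-based assimilation is the analysis step that blends a model forecast with an observation according to their uncertainties. The Python sketch below shows this step for a single scalar quantity; it is not the reduced-order filter of Meldi et al., and the numerical values are hypothetical.

```python
from typing import Tuple


def kalman_update(forecast: float, forecast_var: float,
                  observation: float, observation_var: float) -> Tuple[float, float]:
    """Scalar Kalman analysis step: returns the updated state and its variance."""
    # The gain weights the observation by the relative uncertainty of the forecast.
    gain = forecast_var / (forecast_var + observation_var)
    analysis = forecast + gain * (observation - forecast)
    analysis_var = (1.0 - gain) * forecast_var
    return analysis, analysis_var


# Hypothetical values: an uncertain model velocity and a more precise measurement.
example_state, example_var = kalman_update(
    forecast=10.0, forecast_var=4.0, observation=12.0, observation_var=1.0
)
```

The updated state lies between the forecast and the observation, closer to whichever has the smaller variance, and its variance is smaller than both inputs.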

Rosu et al. used advanced lidar data processing techniques to retrieve various atmospheric parameters, including turbulence data optimization. The paper presents a semi-empirical model that uses the data collected by a standard two-axis elastic lidar platform to calculate the refractive index structure coefficient of the atmosphere, $C_N^2(z)$, and the height profiles of other relevant turbulence parameters [105].

He et al. proposed an anisotropic formulation for the assimilation of turbulent flow data using a continuous adjoint system. The scheme combines flow measurement data with a predictive model based on the Reynolds-averaged Navier-Stokes method. Data profiles measured at multiple locations are used as observations. To compensate for the contribution of the anisotropic eddy viscosity to the momentum equation, a forcing term F is added, while the conventional turbulence model determines the isotropic component of the eddy viscosity. A continuous adjoint equation is used to optimize F so that the predicted flow is driven towards the observed flow. Scalar predictions are treated in a similar manner. The data assimilation (DA) scheme is evaluated and validated using three test cases. With the optimized F, the mean flow field and scalar field closely reproduce the observations [106].

Parent et al. discussed the importance of data preprocessing techniques (e.g., scaling, centering, and outlier removal) in principal component analysis (PCA). Outliers in the dataset can significantly alter the principal component (PC) structure and cause specific variables to be overestimated. The authors conclude that PCA has shown promise in identifying low-dimensional chemical manifolds in turbulent reacting systems, and that the proposed data preprocessing approach may enhance the accuracy of PCA [107].
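The preprocessing steps named above can be sketched in plain Python. This is a minimal illustration, assuming a z-score rule for outlier removal and scaling by the standard deviation; the cited study may use different criteria, and the data and threshold below are hypothetical.

```python
import math
from typing import List, Tuple


def column_stats(data: List[List[float]]) -> Tuple[List[float], List[float]]:
    """Mean and (population) standard deviation of each column."""
    n, d = len(data), len(data[0])
    means = [sum(row[k] for row in data) / n for k in range(d)]
    stds = [math.sqrt(sum((row[k] - means[k]) ** 2 for row in data) / n) for k in range(d)]
    return means, stds


def remove_outliers(data: List[List[float]], z_max: float = 3.0) -> List[List[float]]:
    """Drop samples in which any variable lies more than z_max deviations from its mean."""
    means, stds = column_stats(data)
    return [
        row for row in data
        if all(s == 0.0 or abs((x - m) / s) <= z_max for x, m, s in zip(row, means, stds))
    ]


def center_and_scale(data: List[List[float]]) -> List[List[float]]:
    """Subtract the column mean and divide by the column standard deviation."""
    means, stds = column_stats(data)
    return [
        [(x - m) / s if s > 0.0 else 0.0 for x, m, s in zip(row, means, stds)]
        for row in data
    ]


# Hypothetical samples of two variables; the last row contains an outlier.
example_data = [[1.0, 10.0], [2.0, 12.0], [3.0, 11.0],
                [2.0, 10.0], [1.0, 12.0], [2.0, 100.0]]
example_cleaned = remove_outliers(example_data, z_max=2.0)
example_scaled = center_and_scale(example_cleaned)
```

Removing outliers before computing the scaling statistics matters: if the outlier were kept, it would inflate the standard deviation of the second variable and suppress that variable's contribution to the principal components.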

Deng et al. proposed a deep neural network-based strategy for optimal sensor placement (DNN-OSP) in turbulence data assimilation. The strategy uses a feature importance layer to obtain the spatial sensitivity of the velocity to variations in the RANS model constants. Preliminary tests on free circular jet flows and further studies on separated and reattached flows around a blunt plate demonstrated the effectiveness of the proposed strategy. The assimilation results indicate that the DNN-OSP strategy can be successfully applied to ensemble Kalman filter (EnKF)-based DA techniques [108].

3. Limitations

Considering the above review, it can be concluded that the current limitations of the data-driven machine learning-based turbulence modeling approach are mainly caused by a lack of high-quality data used for training the model, the high consumption of computational resources, and the algorithm’s shortcomings. Here is a specific analysis.

3.1. Data Issues

3.1.1. Data Quality Issues

- (1) There is often high noise in turbulent data, which makes it difficult for machine learning algorithms to identify the key patterns and features that can be used for classification.

- (2) The algorithm may fail to accurately predict the results when there are missing values or incomplete data in the turbulence data.

- (3) Turbulent data may also contain anomalies, which may affect machine learning algorithms and result in inaccurate predictions.

3.1.2. Lack of Physics Foundation

- (1) Physical models can be inaccurate if the underlying physical concepts and principles are not understood. A kinetic model may be inaccurate if the relationship between the mass, force, and acceleration is not properly considered.

- (2) A lack of knowledge of physical fundamentals can lead to inaccurate results when using physical models to predict or explain phenomena. An incorrect numerical result or conclusion may be obtained for a complex physical phenomenon if the underlying principles are not fully understood.

- (3) A lack of a physical basis can also limit the scope of research applications. In the absence of sufficient knowledge of physics, it is impossible to apply and extend the existing physical models or to conduct more comprehensive research and development.

3.1.3. Curse of Dimensionality

Data analysis and machine learning are affected by the curse of dimensionality because, for a fixed number of samples, the density of data points in a high-dimensional space decreases dramatically as the dimensionality increases.

- (1) Due to the sparse distribution of data points in high-dimensional spaces, it is difficult to produce accurate results when searching for relationships between the data points, which can affect the accuracy of the data analysis and machine learning.

- (2) The computation of distances in high-dimensional spaces becomes more difficult and time-consuming, as well as requiring the use of more computational resources. This can make data analysis and machine learning in high-dimensional spaces extremely difficult, or even impossible.

- (3) There are a large number of features in the high-dimensional space, but not all of them contribute to the accuracy of the model. It is therefore necessary to select the most important features for modeling, which is especially important for high-dimensional data.
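Two simple calculations illustrate how quickly data become sparse as the dimensionality grows. The Python sketch below is purely illustrative; the function names and the chosen resolutions are assumptions.

```python
import math


def samples_needed(points_per_axis: int, dimensions: int) -> int:
    """Number of samples needed to keep a fixed resolution along every axis."""
    return points_per_axis ** dimensions


def ball_to_cube_volume_ratio(dimensions: int) -> float:
    """Fraction of a hypercube (side 2) occupied by the inscribed unit ball."""
    ball_volume = math.pi ** (dimensions / 2) / math.gamma(dimensions / 2 + 1)
    return ball_volume / 2 ** dimensions


# Ten points per axis: the required sample count grows exponentially.
example_counts = [samples_needed(10, d) for d in (1, 3, 6)]

# The share of the cube that lies near its center vanishes with dimension.
example_ratios = [ball_to_cube_volume_ratio(d) for d in (2, 10)]
```

With ten points per axis, one dimension needs 10 samples while six dimensions need a million. In ten dimensions the inscribed ball covers well under one percent of the cube, so most of the volume lies in the corners, far from any centrally located sample.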

3.2. High Demand for Computing Resources

Due to the high computational requirements, machine learning-based turbulence modeling requires a significant amount of computation power and storage space, as demonstrated by the following:

- (1) Training machine learning models typically requires large amounts of data, and several iterations of tuning are often needed to optimize the model. Because this iterative process consumes large amounts of computational resources, training is lengthy.

- (2) The accuracy and generalization ability of machine learning models are influenced by the quality and quantity of the training data. Because of its complexity, turbulence modeling requires a large amount of data, which further increases the demand for computational resources.

- (3) As turbulence modeling involves the analysis of high-dimensional, large-scale data, the machine learning models developed are usually complex, with many parameters and layers. This can significantly increase the amount of storage space and computational power required.

3.3. Limited Generalization Capability

A limited generalization capability refers to the inability of a machine learning model to handle new, unseen data effectively, resulting in low accuracy and performance. This limitation can be attributed to a variety of factors. Overfitting makes a model too specific to the training data, resulting in poor generalization. Underfitting occurs when the model is too simple to capture complex relationships in the data. Data bias hinders generalization when there is a significant difference between the training data and the application data. Lastly, selecting the wrong algorithm can limit generalization, as different algorithms have different strengths and weaknesses. Developing robust and high-performing machine learning models that generalize well requires an understanding of these factors.

3.4. Poor Interpretability

Machine learning algorithms gain predictive power by efficiently learning from and fitting data. However, machine learning models are typically black boxes; that is, their underlying rationale or decision basis cannot be explained. When the prediction of a model is in doubt, a sufficient explanation is often unavailable, which impedes the assessment of the validity and applicability of the model.

4. Suggestions and Prospects

As a result of the problems identified in the above review and discussion, it can be concluded that access to quality data and algorithmic limitations are the main factors hindering machine learning turbulence modeling development. Thus, the following recommendations are made based on the analysis presented above.

4.1. Developments in Data

4.1.1. Collect More Experimental Data

The purpose of experimental research in the field of turbulence is to obtain more realistic and reliable ground observation data and numerical simulation data for computational fluid dynamics. Here are some suggestions regarding this issue:

- (1)

- Design the experimental equipment, measurement instruments, and measurement methods scientifically and rationally, in accordance with the research directions and problems in the field of turbulence.

- (2)

- Use numerical simulations rationally to support experiments by providing additional turbulence data.

- (3)

- Strengthen the data quality control process in order to ensure that the experimental data are accurate and reliable.

- (4)

- Establish relationships with domestic and international turbulence research groups to promote the sharing and exchange of experimental data.

- (5)

- Develop data processing and analysis techniques for the large amounts of data generated in turbulence experiments, in order to extract key information and analyze the turbulence characteristics in depth.

- (6)

- Apply the experimental data and analysis results to practical engineering problems, continuously verify and improve the experimental data and analysis methods, and enhance their application value.

4.1.2. Extending the Coverage of Data Sources

Data collection should not be limited to a single source of physical field data, since a sufficiently large increase in data quantity can bring about a qualitative improvement. Physical field data should be gathered from a variety of sources, including ground-based observations, weather station data, satellite remote sensing data, and regional model data. The following are some suggestions in this regard:

- (1)

- Improve the existing data collection and processing systems in order to increase data accuracy and reliability and to expand the range of data sources.

- (2)

- Enhance cross-disciplinary collaboration with meteorology, geophysics, remote sensing technology, and other disciplines in order to explore and utilize their data resources.

- (3)

- Promote digital technology: make use of digital technology for data collection and processing, create a digital data collection and processing system for the entire process, and improve the ability to visualize and share data.

- (4)

- Conduct customized research: collect and process data according to the specific research needs and application scenarios. Examples include analyzing satellite images or focusing on weather station data and actual measurements within local areas.

- (5)

- In basic research, focus on the physical field itself, strengthen the integration, mining, and utilization of the existing data resources, and improve the quality and reliability of the physical field data.

4.1.3. Improve Data Quality

Turbulence modeling requires accurate data. Beyond being available in large quantities, the data also need to be filtered and processed to ensure their accuracy and usability. Here are some recommendations in this regard:

- (1)

- Analyze the data in depth through statistical analysis, multi-scale analysis, and other methods. For example, richer structural information can be extracted by computing gradients and averages of the data.

- (2)

- Research and develop high-precision sensors, and apply them to the measurement and monitoring of turbulent flow fields in order to provide more reliable experimental results.

- (3)

- Research and develop artificial intelligence techniques and machine learning algorithms for turbulence in order to improve the accuracy and stability of the models, as well as their interpretability and practicality.
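
As a minimal sketch of the gradient and average processing mentioned in item (1), the example below splits a velocity record into its time average and fluctuations (a Reynolds-type decomposition) and estimates a derivative by finite differences. The probe signal, the function names, and the uniform sample spacing are assumptions made for illustration only and are not taken from any dataset discussed in this paper.

```python
import math
import statistics


def reynolds_decompose(u):
    """Split a velocity record into its time average and fluctuations."""
    mean = statistics.fmean(u)
    return mean, [value - mean for value in u]


def gradient(values, spacing):
    """Finite-difference derivative: central inside, one-sided at the ends.

    Assumes uniformly spaced samples and at least two values.
    """
    n = len(values)
    grad = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        grad.append((values[hi] - values[lo]) / ((hi - lo) * spacing))
    return grad


# Hypothetical probe signal: a mean flow of 2.0 plus a periodic fluctuation.
u = [2.0 + 0.3 * math.sin(2 * math.pi * i / 50) for i in range(200)]
u_mean, u_prime = reynolds_decompose(u)

# Ratio of the fluctuation RMS to the mean velocity (turbulence intensity).
intensity = statistics.pstdev(u) / u_mean

# Time derivative of the record, assuming a sample interval of 0.01.
du_dt = gradient(u, spacing=0.01)
print(f"mean={u_mean:.3f}  intensity={intensity:.3f}")
```

Derived quantities of this kind (means, fluctuation statistics, and gradients) are typical candidates for input features of a data-driven model.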

4.2. Suggestions for Missing Physics Fundamentals

Turbulence modeling requires a deep understanding of the physical mechanisms and foundations due to its complexity and uncertainty. To address the lack of a physical basis, the following work is required.

4.2.1. Strengthening Basic Theoretical Research

- (1)

- Conduct in-depth research on the physical mechanisms of turbulence by tracking, analyzing, and simulating the various fluid motions in turbulence, in order to reveal the causes and evolutionary laws of turbulence phenomena and thus improve the understanding of turbulent behavior.

- (2)

- The research on basic turbulence theory is primarily concerned with the theoretical analysis and modeling of turbulence from a mathematical perspective, with the aim of improving the theoretical understanding of turbulence by exploring some basic problems in turbulence, including the turbulent energy spectrum, turbulent structure, and turbulent decay.

4.2.2. Application of Machine Learning and Artificial Intelligence

To obtain a deeper understanding of turbulence, machine learning and artificial intelligence techniques should be combined with traditional physical models. Interpreting and visualizing the model parameters makes it possible to examine the key factors and processes in the physical mechanisms of turbulence. Furthermore, turbulence phenomena can be studied using a data-driven approach in order to discover new laws and properties and to expand the field of turbulence research.

4.3. Suggestions for Improving Computing Resources

Machine learning-based turbulence modeling involves processing large datasets and performing complex computations, and therefore requires substantial computational resources, including storage, computation, and network resources. The following work is required in order to address this problem.

4.3.1. Optimization of Hardware

High-performance computing (HPC) clusters or high-performance hardware such as GPUs are used to accelerate computing processes and improve computational efficiency.

- (1)

- Turbulence modeling is performed using a high-performance computing cluster. The speed of computation can be significantly increased by assigning tasks to multiple processing nodes and using parallel computing techniques. For turbulence modeling, it is recommended to choose an HPC cluster with a large number of computational nodes, a high-speed interconnection network, and a high-performance storage system.

- (2)

- GPUs can significantly improve the computational efficiency of turbulence modeling when used to accelerate the computation process. Although originally designed for graphics processing, GPUs have massively parallel architectures that are well suited to accelerating the numerical computations and data processing in turbulence simulation. Use a high-performance GPU that supports a GPU programming framework, such as CUDA or OpenCL, and adapt the code for GPU computation using parallel programming techniques.

- (3)

- Algorithms and codes for turbulence modeling can be parallelized and vectorized to take full advantage of the computing power of multi-core processors.
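
The idea of assigning tasks to multiple processing units can be sketched with a simple domain decomposition. In the example below, a one-dimensional field is split into blocks that each carry one halo cell copied from the neighbouring block, a three-point smoothing stencil is applied to every block independently, and the pieces are reassembled. The stencil, the field, and the function names are hypothetical. Threads are used here only as stand-ins for cluster nodes or GPU thread blocks: in CPython they do not speed up pure-Python arithmetic, and a production code would more likely rely on MPI ranks or GPU kernels.

```python
from concurrent.futures import ThreadPoolExecutor


def smooth_block(block):
    """Apply a 3-point smoothing stencil to the interior of one block.

    The first and last entries act as halo cells and are not returned.
    """
    return [(block[i - 1] + 2.0 * block[i] + block[i + 1]) / 4.0
            for i in range(1, len(block) - 1)]


def smooth_serial(field):
    """Reference result: stencil over the whole field, end points kept."""
    return [field[0]] + smooth_block(field) + [field[-1]]


def smooth_decomposed(field, n_blocks, max_workers=4):
    """Split the interior into blocks with one halo cell on each side."""
    interior = len(field) - 2
    bounds = [1 + (interior * b) // n_blocks for b in range(n_blocks + 1)]
    blocks = [field[start - 1:stop + 1]
              for start, stop in zip(bounds, bounds[1:])]
    # Each block is independent, so the blocks can be processed concurrently.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pieces = list(pool.map(smooth_block, blocks))
    return [field[0]] + [v for piece in pieces for v in piece] + [field[-1]]


# Hypothetical field values, chosen only to be non-trivial.
field = [float((7 * i) % 11) for i in range(32)]
result = smooth_decomposed(field, n_blocks=4)
print(result == smooth_serial(field))
```

Because every block only needs its own data plus the halo cells, the same pattern carries over to distributed-memory clusters, where the halo cells are exchanged between nodes before each stencil update.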

4.3.2. Targeted Feature Selection and Parameter Tuning

Machine learning models and training methods can be optimized through targeted feature selection and parameter tuning in order to improve their performance and accuracy:

- (1)

- Data dimensionality and redundant information can be reduced by selecting the important features in a targeted manner, which improves the model training efficiency and prediction accuracy. In order to identify and select the features that are most relevant and effective, methods such as correlation analysis, information gain, and variance thresholding are recommended.

- (2)

- The model performance and accuracy can be improved by tuning the model’s hyperparameters (e.g., the learning rate and regularization parameters). The tuning process can be automated by using techniques such as grid search and Bayesian optimization, with cross-validation used to score each candidate setting.

- (3)

- The performance and stability of the overall model can be improved by combining the prediction results of multiple base models using ensemble learning methods, such as random forests and gradient-boosted trees. To optimize the ensemble, it is recommended to experiment with different ensemble learning algorithms and with strategies such as model fusion and weight adjustment.

- (4)

- By using data augmentation techniques, such as random flipping, rotation, and cropping, the training dataset can be expanded to increase the diversity and number of samples and improve the generalization ability of the model. Furthermore, the application of regularization techniques, such as L1 and L2 regularization, can alleviate the overfitting problem and improve the model’s generalization capability.

- (5)

- The expressiveness and learning ability of the model can be improved by adjusting its architecture and number of layers. To optimize the performance and efficiency of the model, it is recommended to try different combinations of model architectures, activation functions, and optimizers.
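
Several of the steps above can be sketched together: variance thresholding, correlation analysis, and a grid search over an L2 regularization parameter scored by cross-validation. The example is a sketch under stated assumptions, not a recommended pipeline. The feature names, the synthetic target, the one-parameter ridge model, and the candidate grid are all hypothetical.

```python
import random
import statistics


def variance_threshold(features, min_var):
    """Keep the names of features whose variance exceeds min_var."""
    return [name for name, col in features.items()
            if statistics.pvariance(col) > min_var]


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def ridge_slope(x, y, lam):
    """Closed-form L2-regularized slope for the model y ~ w * x."""
    return sum(a * b for a, b in zip(x, y)) / (sum(a * a for a in x) + lam)


def cv_mse(x, y, lam, k=5):
    """Mean held-out squared error over k interleaved folds."""
    fold_errors = []
    for fold in range(k):
        train = [i for i in range(len(x)) if i % k != fold]
        held_out = [i for i in range(len(x)) if i % k == fold]
        w = ridge_slope([x[i] for i in train], [y[i] for i in train], lam)
        fold_errors.append(
            statistics.fmean([(y[i] - w * x[i]) ** 2 for i in held_out]))
    return statistics.fmean(fold_errors)


# Hypothetical inputs: one informative feature, one constant, one pure noise.
rng = random.Random(0)
n = 60
features = {
    "strain_rate": [rng.uniform(-1.0, 1.0) for _ in range(n)],
    "constant_flag": [1.0] * n,
    "sensor_noise": [rng.gauss(0.0, 1.0) for _ in range(n)],
}
target = [3.0 * s + rng.gauss(0.0, 0.1) for s in features["strain_rate"]]

# Step 1: variance thresholding drops the constant column.
kept = variance_threshold(features, min_var=1e-8)
# Step 2: correlation analysis ranks the remaining features.
best_feature = max(kept, key=lambda name: abs(pearson(features[name], target)))
# Step 3: grid search over the L2 penalty, scored by cross-validation.
grid = [0.0, 0.1, 1.0, 10.0, 100.0]
best_lam = min(grid, key=lambda lam: cv_mse(features[best_feature], target, lam))
print(kept, best_feature, best_lam)
```

The hyperparameter is chosen by its held-out error rather than its training error, which ties the tuning step in item (2) to the regularization mentioned in item (4).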

4.3.3. Optimization of Algorithms

Develop more efficient and accurate turbulence modeling algorithms in order to meet the needs of practical applications:

- (1)

- Modeling turbulence using deep learning techniques, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), can yield more accurate results more efficiently. These methods can learn features from large-scale turbulence data and predict the development and evolution of turbulence. Research into methods that combine deep learning with turbulence modeling is recommended.

- (2)

- Use machine learning techniques, such as support vector machines (SVMs) and random forests, to extract the main features of turbulence and use them to construct simpler and more efficient models. This approach reduces the computational complexity and increases the speed of the algorithm while maintaining a certain level of accuracy.

- (3)

- In order to describe turbulent behavior more accurately, it is important to take into account the interactions and couplings between different physical fields and the effects of turbulence at different scales. The simulation and analysis of turbulence should incorporate multi-physics coupling and multi-scale modeling approaches in order to consider a wider range of physical mechanisms.

- (4)

- To improve the efficiency and accuracy, high-performance computing platforms and parallelization techniques can be used to accelerate the computational process of turbulence modeling algorithms. The implementation and optimization of turbulence modeling algorithms can benefit from techniques such as parallel computing, accelerated computing, and distributed computing.
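
To indicate how the convolutional networks mentioned in item (1) extract local features from a flow field, the sketch below implements the forward pass of a single one-dimensional convolutional layer. The kernels are set by hand purely for illustration, whereas in a trained network they would be learned from data. The velocity profile and all names are hypothetical.

```python
def conv1d(signal, kernel):
    """'Valid' cross-correlation, the sliding dot product used in CNN layers."""
    width = len(kernel)
    return [sum(k * signal[i + j] for j, k in enumerate(kernel))
            for i in range(len(signal) - width + 1)]


def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


def conv_layer(signal, kernels, biases):
    """One convolutional layer: each kernel yields one feature map."""
    return [relu([v + b for v in conv1d(signal, kernel)])
            for kernel, b in zip(kernels, biases)]


# Hypothetical velocity profile with a sharp shear layer in the middle.
profile = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]

# Hand-set kernels (assumed, not learned): one responds to increasing
# velocity, the other to decreasing velocity.
kernels = [[-0.5, 0.0, 0.5], [0.5, 0.0, -0.5]]
feature_maps = conv_layer(profile, kernels, biases=[0.0, 0.0])
print(feature_maps)
```

The first feature map is non-zero only around the shear layer, which illustrates how a convolutional layer localizes gradient-like structures before deeper layers combine them.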

4.4. Suggestions for Improving the Poor Interpretability of Turbulence Modeling

It is difficult to understand the decision process within machine learning-based turbulence models and the factors that influence them. Because of this lack of interpretability, scientists and engineers may overlook some important factors that may affect the accuracy of the final results. In order to improve the interpretability of the model, the following steps should be taken:

- (1)

- Feature selection: Reduce the redundancy of the model by selecting appropriate features manually or automatically; restricting the model to a limited number of features also makes its internal operation easier to interpret.

- (2)

- Visualization of turbulence modeling data: Use data visualization to present the model inputs and outputs as images or graphs that humans can readily perceive, in order to facilitate the understanding and analysis of the model’s behavior.

- (3)

- Model evaluation criteria: Develop reasonable model evaluation criteria so that the model performance and interpretability can be quantified and compared.
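
One way in which the influence of individual inputs could be quantified is permutation importance, which measures how much the prediction error grows when the values of one input are shuffled across samples. This technique is not named in the text above and is offered only as an assumed example of a quantitative interpretability criterion. The surrogate model, its coefficients, and the data are hypothetical.

```python
import random
import statistics


def mse(model, rows, targets):
    """Mean squared error of model predictions against targets."""
    return statistics.fmean([(model(r) - t) ** 2 for r, t in zip(rows, targets)])


def permutation_importance(model, rows, targets, column, rng):
    """Increase in MSE after shuffling one input column across samples."""
    baseline = mse(model, rows, targets)
    shuffled = [r[column] for r in rows]
    rng.shuffle(shuffled)
    permuted = [r[:column] + [v] + r[column + 1:]
                for r, v in zip(rows, shuffled)]
    return mse(model, permuted, targets) - baseline


def surrogate(row):
    """Hypothetical trained model: strong use of input 0, weak use of input 1,
    and no use of input 2."""
    return 3.0 * row[0] + 0.5 * row[1]


rng = random.Random(0)
rows = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(200)]
targets = [surrogate(r) for r in rows]

scores = [permutation_importance(surrogate, rows, targets, c, rng)
          for c in range(3)]
print([round(s, 3) for s in scores])
```

An input that the model ignores receives a score of zero, so a ranking of this kind gives a comparable number for each feature and can support the feature selection in item (1).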

Author Contributions

Conceptualization, Y.Z. and D.Z.; methodology, D.Z. and H.J.; software, D.Z.; validation, D.Z. and Y.Z.; formal analysis, Y.Z. and D.Z.; investigation, Y.Z. and D.Z.; resources, D.Z.; data curation, Y.Z.; writing—original draft preparation, Y.Z.; writing—review and editing, D.Z.; visualization, D.Z.; supervision, D.Z. and H.J.; funding acquisition, D.Z. and H.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Program for Scientific Research Start-up Funds of Guangdong Ocean University, grant number 060302072101, and Zhanjiang Marine Youth Talent Project—Comparative Study and Optimization of Horizontal Lifting of Subsea Pipeline, grant number 2021E5011, and the National Natural Science Foundation of China, grant number 62272109.

Institutional Review Board Statement

The study did not require ethical approval.

Informed Consent Statement

The study did not involve humans.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lumley, J.L. Turbulence modeling. ASME J. Appl. Mech. 1983, 50, 1097–1103.