An Efficient Underwater Navigation Method Using MPC with Unknown Kinematics and Non-Linear Disturbances

Abstract

1. Introduction

- To deal with unknown kinematics, we use a linear approximation that is based only on linear algebra and is therefore computationally efficient. If the AUV has enough computational capacity, this module can be replaced by other techniques such as LWPR (Locally Weighted Projection Regression) [23,24], XCSF (eXtended Classifier Systems for Function approximation) [25,26] or ISSGPR (Incremental Sparse Spectrum Gaussian Process Regression) [27], to mention some.

- Although it is possible to include the effect of the disturbances in the kinematics estimator, we derive a specific non-linear disturbance estimator based on optimization, which significantly improves the results, with cost improvements of up to 47%.
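As an illustration of the linear-algebra-only approach described in the two points above, the following minimal sketch fits a linear transition model by ordinary least squares. It assumes a model of the form x_next ≈ A x + B u, optionally with a constant offset d that absorbs a constant disturbance inside the kinematics estimator. The function name `fit_linear_transition`, the offset term, and the synthetic data are illustrative assumptions and are not the estimators derived later in the paper.

```python
import numpy as np

def fit_linear_transition(states, controls, next_states, with_offset=False):
    """Least-squares fit of x_next ≈ A x + B u (+ d). Hypothetical helper."""
    states = np.asarray(states, dtype=float)
    controls = np.asarray(controls, dtype=float)
    next_states = np.asarray(next_states, dtype=float)
    n, m = states.shape[1], controls.shape[1]
    regressors = [states, controls]
    if with_offset:
        # A column of ones lets the fit absorb a constant disturbance d.
        regressors.append(np.ones((states.shape[0], 1)))
    Z = np.hstack(regressors)
    # Solve Z @ theta ≈ next_states in the least-squares sense.
    theta, *_ = np.linalg.lstsq(Z, next_states, rcond=None)
    A = theta[:n].T
    B = theta[n:n + m].T
    d = theta[n + m] if with_offset else np.zeros(n)
    return A, B, d

# Synthetic, noise-free data (all values are made up for illustration).
rng = np.random.default_rng(0)
A_true = np.array([[1.0, 0.1], [0.0, 0.9]])
B_true = np.array([[0.0], [0.5]])
d_true = np.array([0.2, -0.1])
X = rng.normal(size=(200, 2))
U = rng.normal(size=(200, 1))
X_next = X @ A_true.T + U @ B_true.T + d_true

A_hat, B_hat, d_hat = fit_linear_transition(X, U, X_next, with_offset=True)
```

With noise-free data the fit recovers the generating matrices up to numerical precision. A constant offset can only capture a constant field, which is one way to see why position-dependent disturbances such as swirls call for a dedicated non-linear estimator.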

2. Setup Description

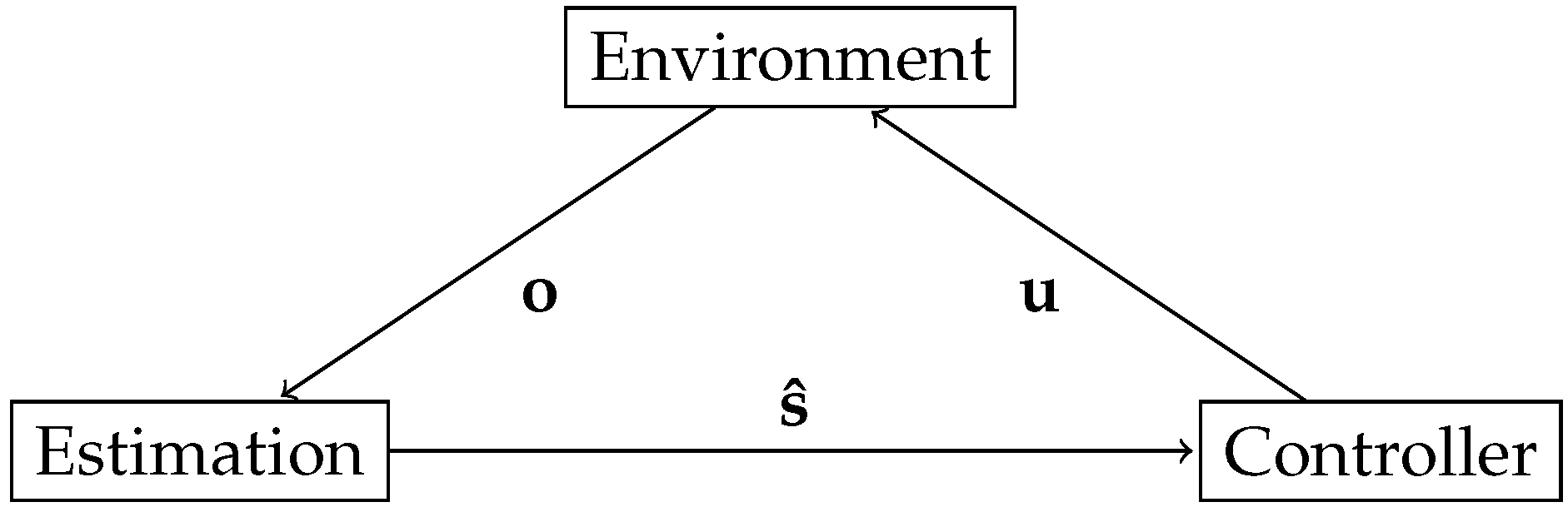

2.1. General Diagram

- The environment block, which simulates the underwater conditions, that is, the kinematics. This block takes as input the control, which contains the change in the AUV actuators, and returns an observation, which reflects the information that the sensors of the AUV capture.

- The estimation block, whose main purpose is to extract meaningful information for the controller block from the signals provided by the AUV sensors.

- The controller block, which decides which controls are to be applied at each time instant. This block receives as input the information extracted by the estimation block, and outputs the adequate control.
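The interaction between the three blocks can be sketched as a simple closed loop. The code below is only a toy illustration: the class names, the linear environment, the identity estimator and the proportional feedback law are all assumptions chosen to keep the example short. In particular, the proportional controller is a placeholder for the MPC controller used in this work.

```python
import numpy as np

class Environment:
    """Toy environment block: applies a control and returns an observation."""
    def __init__(self, A, B, x0):
        self.A, self.B = A, B
        self.x = np.asarray(x0, dtype=float)

    def observe(self):
        # Full observability is assumed here: the observation is the state.
        return self.x.copy()

    def step(self, u):
        self.x = self.A @ self.x + self.B @ u
        return self.observe()

class Estimator:
    """Toy estimation block: passes the observation through unchanged."""
    def update(self, obs):
        return obs

class Controller:
    """Toy controller block: proportional feedback (placeholder for MPC)."""
    def __init__(self, gain):
        self.gain = gain

    def act(self, state_estimate):
        return -self.gain @ state_estimate

def run_loop(env, estimator, controller, n_steps):
    obs = env.observe()
    trajectory = [obs]
    for _ in range(n_steps):
        x_hat = estimator.update(obs)   # estimation block
        u = controller.act(x_hat)       # controller block
        obs = env.step(u)               # environment block
        trajectory.append(obs)
    return np.array(trajectory)

x0 = np.array([4.0, -2.0])
env = Environment(A=np.eye(2), B=np.eye(2), x0=x0)
traj = run_loop(env, Estimator(), Controller(0.5 * np.eye(2)), n_steps=10)
```

In this toy setting each step halves the distance to the origin, so the loop converges geometrically. Swapping the estimator or the controller for a different implementation does not change the loop itself, which is the point of the block decomposition.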

2.2. Environment Block

2.2.1. Underwater Navigation Model

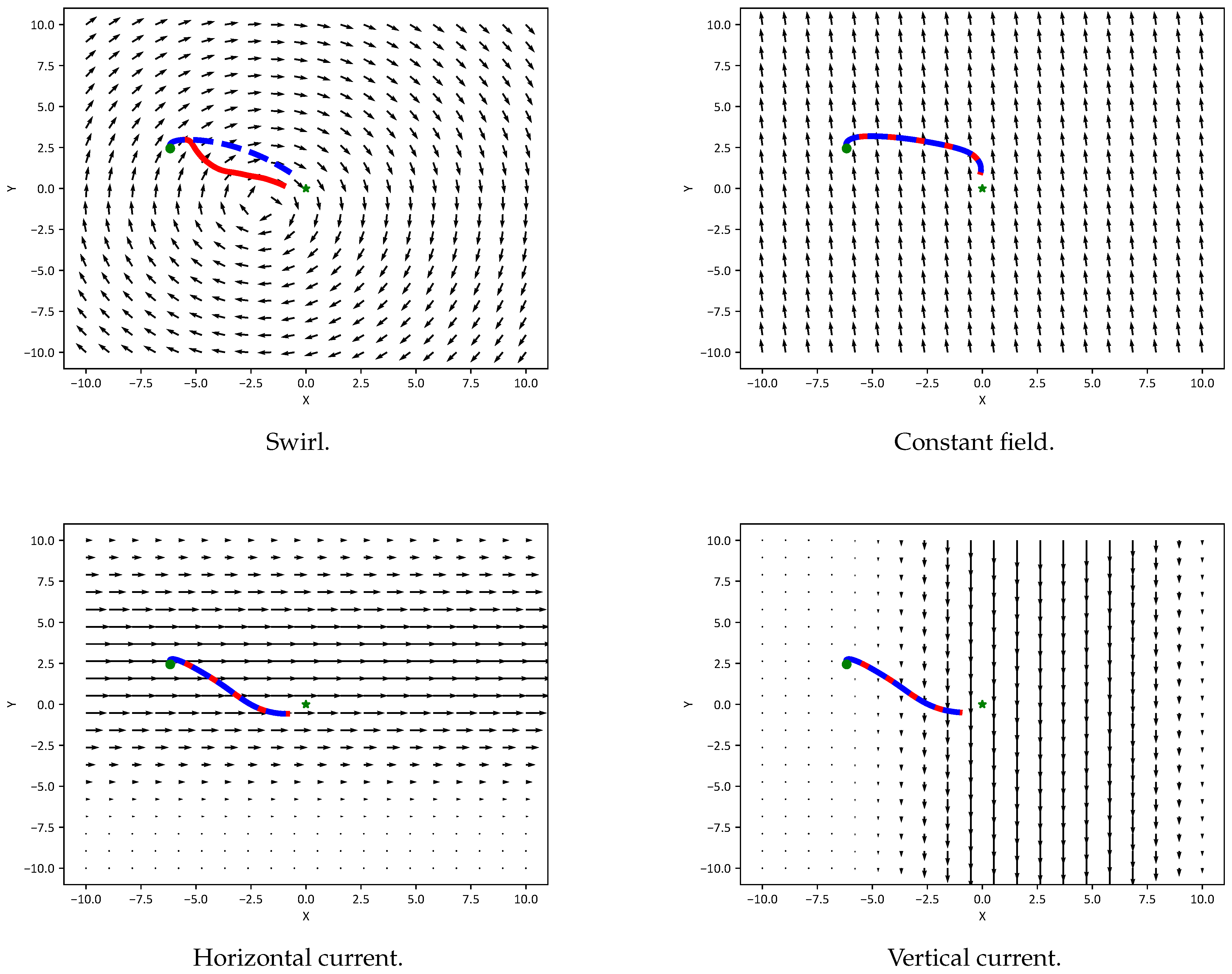

2.2.2. Disturbance Models

2.2.3. Observation Model

2.3. Estimation Block

2.4. Controller Block

3. Estimation of the Model and Disturbances

3.1. Linear Transition Model Estimation

3.2. Linear Transition Model Estimator with Disturbances

3.3. Disturbance Estimator

3.3.1. Constant Field

3.3.2. Currents

3.3.3. Swirls

3.4. Joint Transition and Disturbance Estimator

Algorithm 1. Joint transition and Full Disturbance Estimator (FDE).

Algorithm 2. Joint transition and Constant Disturbance Estimator (CDE).

4. Simulations

4.1. Testbench Description

4.1.1. Environment

4.1.2. Estimation

- The case with full knowledge of the transition model: here, the model matrices from (34) are assumed known. Note that this is the least realistic case, as this knowledge is seldom available, but it serves as the best-case baseline against which we can compare our results.

- The case in which the transition model is estimated without the help of the disturbance estimator. In this case, we estimate the model matrices using (19).

- The case in which the transition model is estimated with the help of the disturbance estimator. In this case, we estimate the model matrices using (21), following Algorithm 1 when using FDE for the disturbances and Algorithm 2 when using CDE.

- No state estimator, so the observation is used directly as the state. This is exact when we have full observability, but under partial observability it introduces error.

- A KF used as state estimator, which relies on the known model matrices when we have full knowledge, or on the estimated ones when we use the transition model estimator.
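To make the role of the model matrices in the KF concrete, the following sketch shows one predict/update step of a standard linear Kalman filter. The names `A`, `B`, `C`, `Q` and `R` (transition, control, observation, process-noise covariance and observation-noise covariance matrices) are assumptions of this example, as is the toy scenario of a static state observed under noise. It is not meant to reproduce the exact configuration used in the simulations.

```python
import numpy as np

def kalman_step(x_hat, P, u, y, A, B, C, Q, R):
    """One predict/update step of a linear Kalman filter (illustrative)."""
    # Predict with the (known or estimated) transition model.
    x_pred = A @ x_hat + B @ u
    P_pred = A @ P @ A.T + Q
    # Update with the new observation y.
    S = C @ P_pred @ C.T + R
    K = P_pred @ C.T @ np.linalg.inv(S)
    x_new = x_pred + K @ (y - C @ x_pred)
    P_new = (np.eye(len(x_hat)) - K @ C) @ P_pred
    return x_new, P_new

# Toy scenario (made-up values): a static state observed with Gaussian noise.
rng = np.random.default_rng(1)
x_true = np.array([1.0, 2.0])
A, B, C = np.eye(2), np.zeros((2, 1)), np.eye(2)
Q, R = np.zeros((2, 2)), 0.25 * np.eye(2)

x_hat, P = np.zeros(2), 100.0 * np.eye(2)
u = np.zeros(1)
for _ in range(200):
    y = x_true + rng.normal(scale=0.5, size=2)
    x_hat, P = kalman_step(x_hat, P, u, y, A, B, C, Q, R)
```

When `A` and `B` come from the transition model estimator rather than from full knowledge, any error in those estimates propagates into the prediction step, which is why the quality of the model estimate also affects the state estimate.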

4.1.3. Controller

4.1.4. Simulation Conditions

4.2. Transition Estimator Accuracy

- After each simulation, we recorded the latest estimated disturbance. The column “Disturbance estimated” shows the proportion of times that the estimated disturbance was, in this order, a swirl, a horizontal current, a vertical current, and a constant field when using FDE (recall that CDE only estimates a Constant Field). For ease of visualization, we show in bold the actual disturbance for each case. Note that the actual disturbance is always the one that is estimated most often, with proportions of at least 0.93 for the Constant Field and the swirl, and of at least 0.59 for the currents (Table 1). The drop in accuracy for the currents can be explained because they can be confused with a Constant Field if we are far from the current center, as can be seen in Figure 2. Thus, the accuracy of the FDE model in detecting the right disturbance is very high, regardless of the observation model and the state estimation used.

- Every time that the FDE model identified the right disturbance, as seen in the previous point, we also computed the relative error of the estimated parameters with respect to the actual parameters. When there was no actual disturbance, the error is the absolute value of the estimated disturbance parameter (i.e., the strength of the estimated disturbance, which reflects the error committed). In this metric, we can appreciate the effect of the observation model, as the error is considerably lower with total observation than with partial observation. This is because the noise in the observation translates into inaccuracies in the disturbance estimator, and this effect is particularly noticeable in the currents, which show the highest errors.

- For both FDE and CDE, we have also measured the error gain, which compares the norm of the difference between the estimated model and the real model when using only the transition model estimator (subscript T) with the same norm when also using a disturbance estimator (i.e., FDE or CDE). When this gain is positive, the disturbance estimator provides a more accurate model estimate than the transition estimator alone (thus, higher is better). Note that this gain is always positive (or very close to 0) in FDE, meaning that using FDE provides a consistent improvement in the transition estimator. The gains are particularly dramatic in case of total observability, where the gain is around 85–93% whenever a disturbance is present, while when there is noise, the maximum gain reduces to around 8% (Table 1). This reduction is due to the presence of noise and is to be expected, but observe that the disturbance estimator still manages to improve the model estimation. In case of CDE, it is remarkable that the error gains are similar to the FDE case, except for the swirls: as seen in Figure 2, the swirls are the most non-linear disturbance, and hence, CDE commits a higher error.

- We have also measured the number of steps that MPC takes to reach the origin, and we have defined the steps gain by comparing the average number of steps that the transition model alone took to reach the origin with the average number of steps that the transition model with disturbance estimator (i.e., FDE or CDE) took. Again, higher is better: a positive number indicates that the transition estimator alone took more steps, which means that using the disturbance estimator requires fewer steps to reach the target position, as the model is estimated better. The gain without noise for FDE and CDE is around 40% and reduces to around 15–30% when there is observation noise. Note that when there is no actual disturbance, the gains are close to 0. Again, the only significant difference between FDE and CDE arises with a swirl, where CDE performance degrades significantly.

- We have also measured the time gain, which compares the average simulation time that the transition model alone took to reach the origin with the average simulation time that the transition model with disturbance estimator (i.e., FDE or CDE) took. Again, higher is better, as a positive number indicates that the transition estimator with FDE/CDE took less simulation time than the transition estimator without it. Note that for FDE there are many positive numbers, which indicates that the use of FDE, which translates into fewer steps to the origin as we have seen, also translates into less simulation time (i.e., less computational load). In other words, the extra computation time of the disturbance estimator is compensated by needing fewer calls to the optimizer to reach the origin. Additionally, note that the cases with negative gain are correlated with the cases with low steps gain. In case of CDE, the gains are dramatic, due to its reduced computational complexity, which nonetheless still allows it to require fewer steps to reach the origin (the exception is, again, the swirl).
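The error, steps and time gains above all compare a quantity obtained with the transition estimator alone against the same quantity obtained with a disturbance estimator. A minimal sketch of such a metric is given below, assuming that the gain is the percentage reduction relative to the transition-estimator-alone baseline. This form is an assumption consistent with "higher is better" and with the percentage-like values in the tables, and the exact definition used in the paper may differ. The function name and the numbers in the example are hypothetical.

```python
def relative_gain(baseline, with_estimator):
    """Percentage reduction of a quantity relative to a baseline.

    Assumed form: 100 * (baseline - with_estimator) / baseline.
    Positive values mean the disturbance estimator reduced the quantity.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (baseline - with_estimator) / baseline

# Hypothetical example: 80 steps on average with the transition estimator
# alone versus 60 steps when a disturbance estimator is added.
steps_gain = relative_gain(80, 60)   # 25.0

# A negative gain means the disturbance estimator made things worse.
time_gain = relative_gain(2.0, 2.5)  # -25.0
```

Under this assumed definition, a gain of 0 means that both configurations perform identically, which matches the behaviour reported when there is no actual disturbance.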

4.3. Cost Gains

- K: full knowledge of the model, i.e., the actual model matrices are known, but the disturbances are neither known nor estimated.

- T: Transition Estimator alone, i.e., without any disturbance estimator.

- TFDE: Transition Estimator and FDE model for estimating the disturbances.

- TCDE: Transition Estimator and CDE model for estimating the disturbances.

- If we compare the transition estimator alone with knowing the model, we see that the latter has a clear advantage in most cases, especially when there is no noise or there is a constant disturbance. However, the advantage of knowing the model vanishes if we also estimate the disturbances. This is a very important conclusion: in terms of cost, knowing the model is similar to estimating it using our proposed disturbance estimator. In a real environment, where the model is unknown, our results suggest that estimating the disturbance has a consistent advantage in terms of cost. Moreover, the results from Table 1 and Table 2 indicate that this advantage also extends to the computational load.

- The previous conclusion is reinforced by observing that using the disturbance estimator provides a consistent gain over using the transition estimator alone. Note that, when estimating the disturbances using CDE, the only case where the gain is negative (i.e., the transition estimator alone is better) is when the disturbance is a swirl, which is to be expected. However, when using FDE, the gain compared to the transition estimator alone is never negative, reaching up to 47% with perfect observability and up to 22% when there is observation noise.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned aerial vehicles (UAVs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634.

2. Winston, C.; Karpilow, Q. Autonomous Vehicles: The Road to Economic Growth? Brookings Institution Press: Washington, DC, USA, 2020.

3. Sahoo, A.; Dwivedy, S.K.; Robi, P. Advancements in the field of autonomous underwater vehicle. Ocean Eng. 2019, 181, 145–160.

4. Paull, L.; Saeedi, S.; Seto, M.; Li, H. AUV navigation and localization: A review. IEEE J. Ocean. Eng. 2013, 39, 131–149.

5. Stutters, L.; Liu, H.; Tiltman, C.; Brown, D.J. Navigation technologies for autonomous underwater vehicles. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2008, 38, 581–589.

6. Schillai, S.M.; Turnock, S.R.; Rogers, E.; Phillips, A.B. Evaluation of terrain collision risks for flight style autonomous underwater vehicles. In Proceedings of the 2016 IEEE/OES Autonomous Underwater Vehicles (AUV), Tokyo, Japan, 6–9 November 2016; pp. 311–318.

7. Zhang, Y.; Liu, X.; Luo, M.; Yang, C. MPC-based 3-D trajectory tracking for an autonomous underwater vehicle with constraints in complex ocean environments. Ocean Eng. 2019, 189, 106309.

8. Xiang, X.; Lapierre, L.; Jouvencel, B. Smooth transition of AUV motion control: From fully-actuated to under-actuated configuration. Robot. Auton. Syst. 2015, 67, 14–22.

9. Londhe, P.S.; Patre, B. Adaptive fuzzy sliding mode control for robust trajectory tracking control of an autonomous underwater vehicle. Intell. Serv. Robot. 2019, 12, 87–102.

10. Yan, Z.; Wang, M.; Xu, J. Robust adaptive sliding mode control of underactuated autonomous underwater vehicles with uncertain dynamics. Ocean Eng. 2019, 173, 802–809.

11. Shojaei, K. Three-dimensional neural network tracking control of a moving target by underactuated autonomous underwater vehicles. Neural Comput. Appl. 2019, 31, 509–521.

12. Parras, J.; Zazo, S. Robust Deep Reinforcement Learning for Underwater Navigation with Unknown Disturbances. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 3440–3444.

13. González-García, J.; Gómez-Espinosa, A.; Cuan-Urquizo, E.; García-Valdovinos, L.G.; Salgado-Jiménez, T.; Escobedo Cabello, J.A. Autonomous underwater vehicles: Localization, navigation, and communication for collaborative missions. Appl. Sci. 2020, 10, 1256.

14. Hou, X.; Zhou, J.; Yang, Y.; Yang, L.; Qiao, G. Adaptive two-Step bearing-only underwater uncooperative target tracking with uncertain underwater disturbances. Entropy 2021, 23, 907.

15. Parras, J.; Apellániz, P.A.; Zazo, S. Deep Learning for Efficient and Optimal Motion Planning for AUVs with Disturbances. Sensors 2021, 21, 5011.

16. Yan, Z.; Gong, P.; Zhang, W.; Wu, W. Model predictive control of autonomous underwater vehicles for trajectory tracking with external disturbances. Ocean Eng. 2020, 217, 107884.

17. Peng, Z.; Wang, J.; Wang, J. Constrained control of autonomous underwater vehicles based on command optimization and disturbance estimation. IEEE Trans. Ind. Electron. 2018, 66, 3627–3635.

18. Liu, S.; Liu, Y.; Wang, N. Nonlinear disturbance observer-based backstepping finite-time sliding mode tracking control of underwater vehicles with system uncertainties and external disturbances. Nonlinear Dyn. 2017, 88, 465–476.

19. Kim, J.; Joe, H.; Yu, S.c.; Lee, J.S.; Kim, M. Time-delay controller design for position control of autonomous underwater vehicle under disturbances. IEEE Trans. Ind. Electron. 2015, 63, 1052–1061.

20. Kawano, H.; Ura, T. Motion planning algorithm for nonholonomic autonomous underwater vehicle in disturbance using reinforcement learning and teaching method. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No. 02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 4, pp. 4032–4038.

21. Desaraju, V.; Michael, N. Leveraging Experience for Computationally Efficient Adaptive Nonlinear Model Predictive Control. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May 2017–3 June 2017; pp. 5314–5320.

22. Desaraju, V.; Spitzer, A.; O’Meadhra, C.; Lieu, L.; Michael, N. Leveraging experience for robust, adaptive nonlinear MPC on computationally constrained systems with time-varying state uncertainty. Int. J. Robot. Res. 2018, 37, 1690–1712.

23. Vijayakumar, S.; Schaal, S. Locally weighted projection regression: An O (n) algorithm for incremental real time learning in high dimensional space. In Proceedings of the seventeenth international conference on machine learning (ICML 2000), Stanford, CA, USA, 29 June–2 July 2000; Volume 1, pp. 288–293.

24. Vijayakumar, S.; D’Souza, A.; Schaal, S. Incremental Online Learning in High Dimensions. Neural Comput. 2005, 17, 2602–2634.

25. Vijayakumar, S.; D’Souza, A.; Schaal, S. Current XCSF Capabilities and Challenges. Learn. Classif. Syst. 2010, 6471.

26. Stalph, P.; Rubinsztajn, J.; Sigaud, O.; Butz, M. A comparative study: Function approximation with LWPR and XCSF. In Proceedings of the 12th Annual Conference Companion on Genetic and Evolutionary Computation, Portland, OR, USA, 7–11 July 2010; pp. 1–8.

27. Gijsberts, A.; Metta, G. Real-time model learning using incremental sparse spectrum gaussian process regression. Neural Netw. 2013, 41, 59–69.

28. Thrun, S. Probabilistic robotics. Commun. ACM 2002, 45, 52–57.

29. Li, Q.; Li, R.; Ji, K.; Dai, W. Kalman filter and its application. In Proceedings of the 2015 8th International Conference on Intelligent Networks and Intelligent Systems (ICINIS), Tianjin, China, 1–3 November 2015; pp. 74–77.

30. Shaiju, A.J.; Petersen, I.R. Formulas for Discrete Time LQR, LQG, LEQG and Minimax LQG Optimal Control Problems. In Proceedings of the 17th World Congress The International Federation of Automatic Control, Seoul, Republic of Korea, 6–11 July 2008; pp. 8773–8778.

31. Bemporad, A.; Morari, M.; Dua, V.; Pistikopoulos, E.N. The explicit linear quadratic regulator for constrained systems. Automatica 2002, 38, 3–20.

32. Zhu, C.; Byrd, R.H.; Lu, P.; Nocedal, J. Algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound-constrained optimization. ACM Trans. Math. Softw. (TOMS) 1997, 23, 550–560.

33. Barthelemy, J.; Haftka, R. Function approximations. Prog. Astronaut. Aeronaut. 1993, 150, 51.

34. Wang, W.; Yan, J.; Wang, H.; Ge, H.; Zhu, Z.; Yang, G. Adaptive MPC trajectory tracking for AUV based on Laguerre function. Ocean Eng. 2022, 261, 111870.

35. Dismuke, C.; Lindrooth, R. Ordinary least squares. Methods Des. Outcomes Res. 2006, 93, 93–104.

36. Islam, S.A.U.; Bernstein, D.S. Recursive least squares for real-time implementation [lecture notes]. IEEE Control Syst. Mag. 2019, 39, 82–85.

37. Owen, J.P.; Ryu, W.S. The effects of linear and quadratic drag on falling spheres: An undergraduate laboratory. Eur. J. Phys. 2005, 26, 1085.

38. Isaacs, R. Differential Games: A Mathematical Theory with Applications to Warfare and Pursuit, Control and Optimization; Dover Publications: Mineola, NY, USA, 1999.

39. Zhang, R.; Gao, W.; Yang, S.; Wang, Y.; Lan, S.; Yang, X. Ocean Current-Aided Localization and Navigation for Underwater Gliders With Information Matching Algorithm. IEEE Sens. J. 2021, 21, 26104–26114.

40. Yang, M.; Wang, Y.; Liang, Y.; Song, Y.; Yang, S. A Novel Method of Trajectory Optimization for Underwater Gliders Based on Dynamic Identification. J. Mar. Sci. Eng. 2022, 10, 307.

Table 1. Results when using FDE as disturbance estimator. Obs: total (T) or partial (P) observation. State: no state estimator (N) or Kalman filter (KF). Disturbance Estimated: proportions of [swirl, horizontal current, vertical current, constant field], with the actual disturbance in bold.

| Obs | State | Disturbance | Disturbance Estimated | p Error | Error Gain | Time Gain | Steps Gain |
|---|---|---|---|---|---|---|---|
| T | N | None | [0.04 0.01 0.03 0.92] | 0.00 | −0.09 | −0.36 | 0.00 |
| T | N | Swirl | [**0.94** 0.01 0.01 0.04] | 8.44 | 85.51 | 44.24 | 48.74 |
| T | N | H. Current | [0.00 **0.86** 0.00 0.14] | 8.60 | 89.33 | 15.81 | 43.94 |
| T | N | V. Current | [0.00 0.00 **0.67** 0.33] | 12.09 | 87.52 | 29.01 | 44.73 |
| T | N | Constant | [0.00 0.00 0.00 **1.00**] | 0.00 | 93.31 | 34.79 | 40.62 |
| P | N | None | [0.00 0.45 0.39 0.16] | 3.19 | −0.12 | −11.47 | 0.09 |
| P | N | Swirl | [**0.94** 0.02 0.01 0.03] | 12.59 | 3.70 | −5.68 | 15.81 |
| P | N | H. Current | [0.00 **0.64** 0.15 0.21] | 81.88 | 6.90 | −2.79 | 30.98 |
| P | N | V. Current | [0.00 0.14 **0.59** 0.27] | 118.96 | 0.49 | −3.70 | 22.69 |
| P | N | Constant | [0.00 0.03 0.03 **0.94**] | 0.51 | 7.85 | 10.21 | 25.98 |
| P | KF | None | [0.00 0.33 0.23 0.44] | 0.54 | 0.04 | −6.61 | 0.03 |
| P | KF | Swirl | [**0.93** 0.03 0.02 0.02] | 30.37 | 5.14 | 0.42 | 15.97 |
| P | KF | H. Current | [0.02 **0.59** 0.14 0.25] | 75.99 | 6.60 | −1.28 | 28.97 |
| P | KF | V. Current | [0.00 0.12 **0.62** 0.26] | 95.53 | −0.28 | −11.69 | 16.89 |
| P | KF | Constant | [0.00 0.04 0.00 **0.96**] | 1.19 | 7.51 | −25.41 | −1.30 |

Table 2. Results when using CDE as disturbance estimator. Obs: total (T) or partial (P) observation. State: no state estimator (N) or Kalman filter (KF).

| Obs | State | Disturbance | Error Gain | Time Gain | Steps Gain |
|---|---|---|---|---|---|
| T | N | None | −0.12 | −1.42 | 0.00 |
| T | N | Swirl | −10.77 | −26.62 | −28.85 |
| T | N | H. Current | 53.07 | 39.32 | 41.14 |
| T | N | V. Current | 57.04 | 32.91 | 32.56 |
| T | N | Constant | 93.31 | 52.98 | 40.62 |
| P | N | None | −0.03 | 0.38 | 0.00 |
| P | N | Swirl | −7.58 | −37.30 | −32.00 |
| P | N | H. Current | 6.38 | 29.63 | 29.67 |
| P | N | V. Current | 2.12 | 23.15 | 20.16 |
| P | N | Constant | 7.86 | 31.83 | 25.98 |
| P | KF | None | 0.06 | 5.47 | 0.00 |
| P | KF | Swirl | −8.82 | −37.73 | −37.74 |
| P | KF | H. Current | 6.04 | 23.72 | 21.09 |
| P | KF | V. Current | 1.61 | 3.42 | 8.87 |
| P | KF | Constant | 7.53 | 10.39 | −1.30 |

Table 3. Cost gains between the K, T, TCDE and TFDE models. Obs: total (T) or partial (P) observation. State: no state estimator (N) or Kalman filter (KF).

| Obs | State | Disturbance | Gain T/K | Gain TCDE/K | Gain TFDE/K | Gain TCDE/T | Gain TFDE/T |
|---|---|---|---|---|---|---|---|
| T | N | None | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| T | N | Swirl | −54.33 | −149.55 | −3.15 | −61.70 | 33.16 |
| T | N | H. Current | −46.24 | −2.85 | −1.92 | 29.67 | 30.30 |
| T | N | V. Current | −59.70 | −2.99 | −1.71 | 35.51 | 36.31 |
| T | N | Constant | −91.37 | −0.33 | −0.33 | 47.57 | 47.57 |
| P | N | None | 3.60 | 3.60 | 3.62 | −0.00 | 0.02 |
| P | N | Swirl | −0.36 | −12.38 | 1.92 | −11.98 | 2.27 |
| P | N | H. Current | −2.18 | 2.19 | 2.36 | 4.27 | 4.44 |
| P | N | V. Current | −5.92 | 3.26 | 3.55 | 8.67 | 8.94 |
| P | N | Constant | −21.40 | 2.85 | 2.85 | 19.98 | 19.98 |
| P | KF | None | 5.93 | 5.93 | 5.93 | −0.00 | 0.00 |
| P | KF | Swirl | 4.80 | −8.85 | 9.21 | −14.34 | 4.64 |
| P | KF | H. Current | −0.48 | 5.62 | 6.40 | 6.07 | 6.85 |
| P | KF | V. Current | 0.80 | 5.74 | 6.52 | 4.98 | 5.77 |
| P | KF | Constant | −15.17 | 10.41 | 10.41 | 22.21 | 22.21 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Barreno, P.; Parras, J.; Zazo, S. An Efficient Underwater Navigation Method Using MPC with Unknown Kinematics and Non-Linear Disturbances. J. Mar. Sci. Eng. 2023, 11, 710. https://doi.org/10.3390/jmse11040710
