Adaptive Sampling Path Planning for a 3D Marine Observation Platform Based on Evolutionary Deep Reinforcement Learning

Abstract

1. Introduction

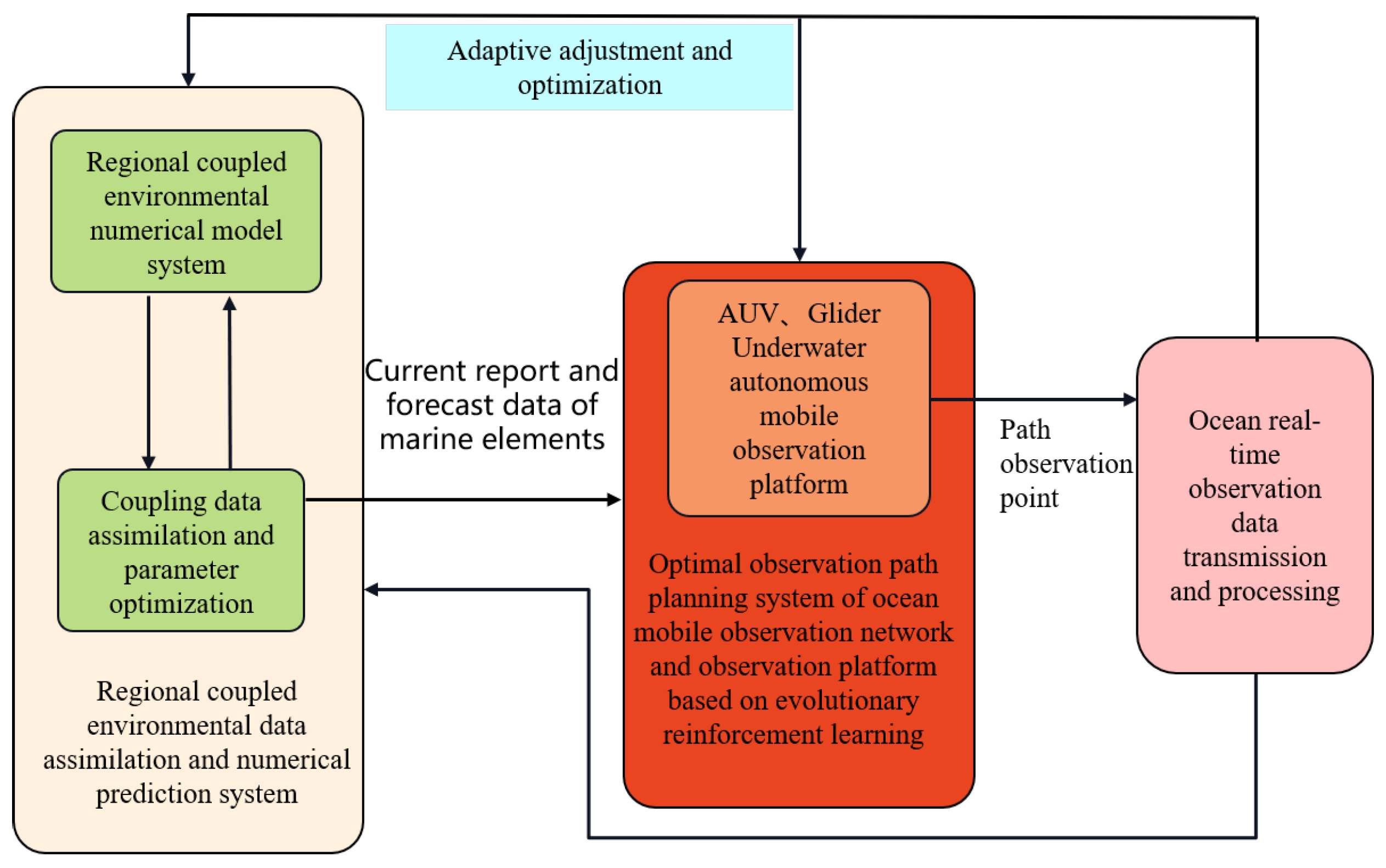

- Reinforcement learning (RL) was applied to the sampling path planning of marine observation platforms (MOPs) in a 3D dynamic ocean environment, together with coupled modeling of ocean observations that incorporates a priori environmental information. Because RL lets the MOP interact directly with the marine environment, it avoids problems that arise with heuristic algorithms: the difficulty of modeling the tight coupling between environmental information and the observation process, the difficulty of solving for optimal observation paths, and low observation efficiency.

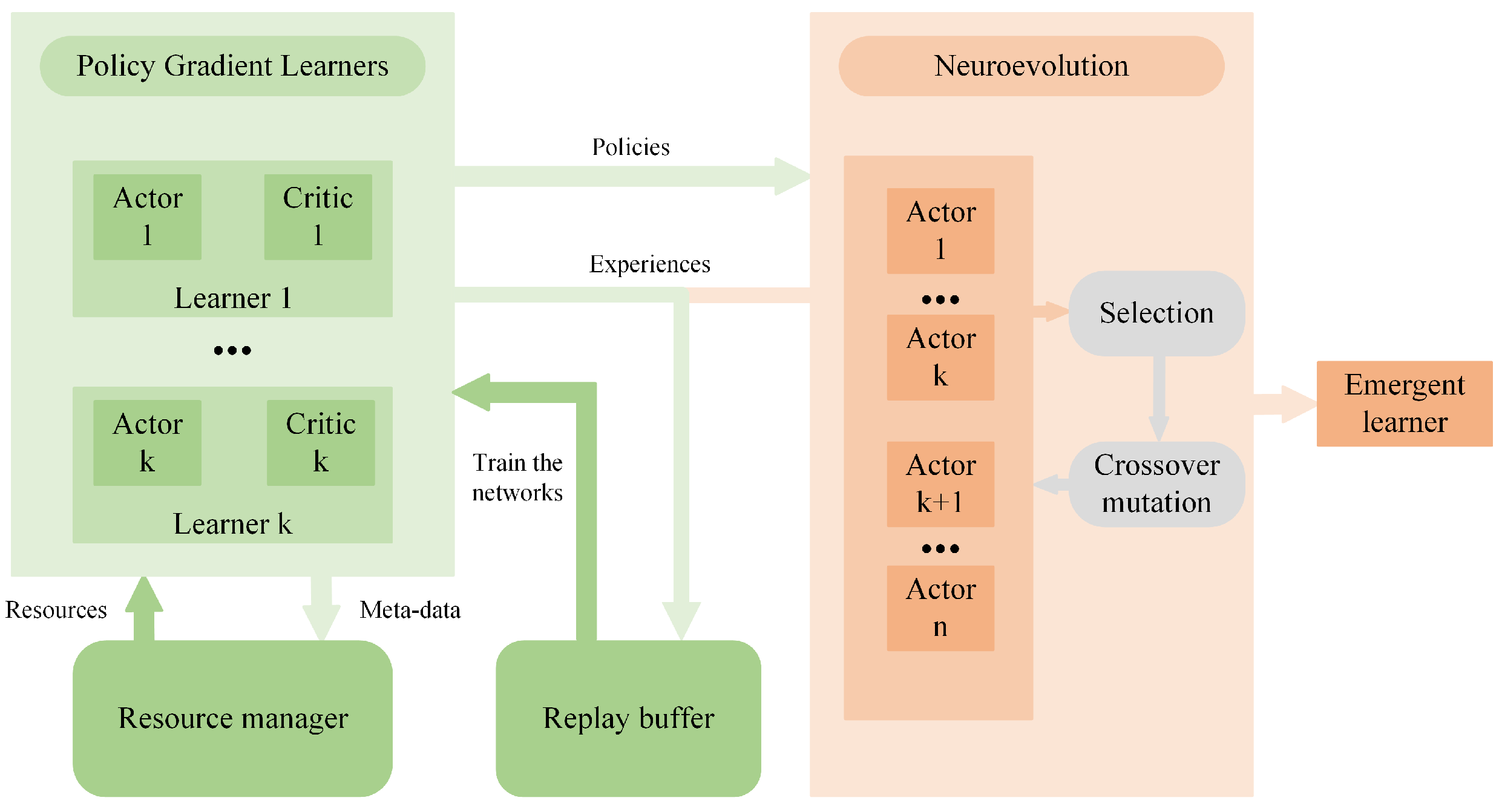

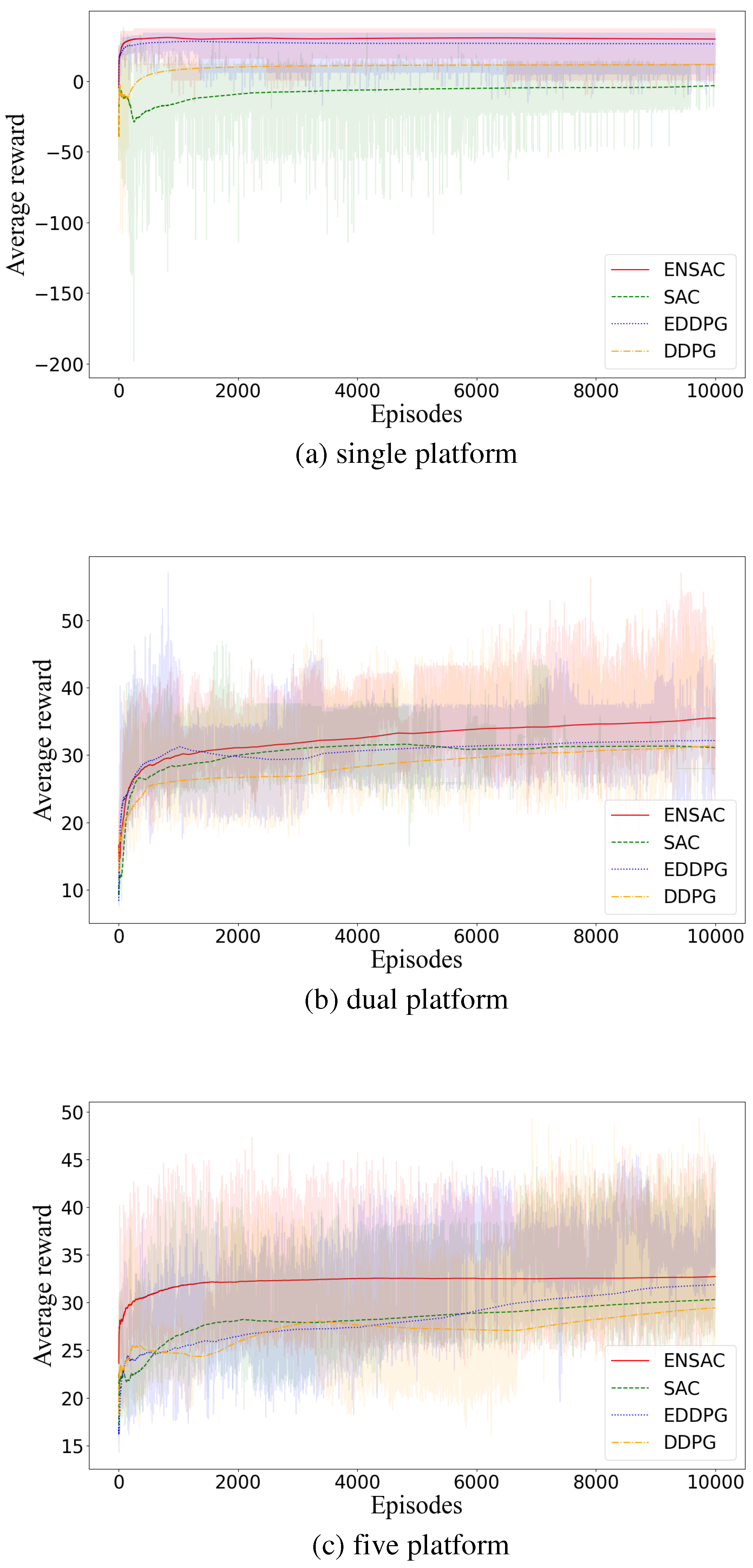

- The evolutionary reinforcement learning (ERL) algorithm was introduced to overcome the low sampling efficiency and poor robustness of traditional RL in MOP path planning, and an adaptive path planning method for MOPs based on ERL was designed. We analyzed the fusion of an evolutionary algorithm with two policy-gradient reinforcement learning algorithms, Deep Deterministic Policy Gradient (DDPG) and the maximum-entropy Soft Actor-Critic (SAC); the fused algorithms are termed EDDPG and ENSAC, respectively. Path-planning simulation results for ENSAC, EDDPG, SAC, and DDPG in a 3D environment field were compared, highlighting the advantages of ERL.

- Data assimilation experiments with the sampling results of the four algorithms verified that ENSAC and EDDPG are more effective than SAC and DDPG. ENSAC in particular effectively improves the observational efficiency and the analytical forecasting capability for marine environmental elements.

- Single-platform, dual-platform, and five-platform observation experiments were also conducted, and their results were assimilated. The assimilation results show that adding platforms improves the accuracy of numerical predictions of the marine environment, but the benefit diminishes beyond two platforms.
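To make the idea of fusing an evolutionary algorithm with a gradient-based learner more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a toy quadratic `fitness` in place of an episode return, a plain gradient-ascent `gradient_step` in place of a DDPG or SAC update, and a hypothetical `sync_every` interval at which the learner is copied into the population in place of the weakest individual. The function names and the `mutation_std` parameter are illustrative only; the population size, elite fraction, and crossover and mutation probabilities default to the values listed in the parameter table.

```python
import random


def fitness(params):
    """Toy stand-in for an episode return (assumption): maximal at the origin."""
    return -sum(p * p for p in params)


def gradient_step(params, lr=0.1):
    """Stand-in for a DDPG/SAC update: one gradient-ascent step on the toy fitness."""
    return [p - lr * 2.0 * p for p in params]


def next_generation(pop, rng, elite_fraction=0.2, crossover_prob=0.01,
                    mutation_prob=0.2, mutation_std=0.1):
    """Build the next population by elitism, tournament selection, crossover, mutation."""
    ranked = sorted(pop, key=fitness, reverse=True)
    n_elite = max(1, round(elite_fraction * len(pop)))
    new_pop = [list(p) for p in ranked[:n_elite]]  # elites survive unchanged
    while len(new_pop) < len(pop):
        a, b = rng.sample(ranked, 2)  # binary tournament
        child = list(a if fitness(a) >= fitness(b) else b)
        if rng.random() < crossover_prob:
            mate = rng.choice(ranked[:n_elite])
            cut = rng.randrange(1, len(child))  # single-point crossover
            child[cut:] = mate[cut:]
        if rng.random() < mutation_prob:
            child = [g + rng.gauss(0.0, mutation_std) for g in child]
        new_pop.append(child)
    return new_pop


def run_erl(generations=30, pop_size=7, dim=4, sync_every=5, seed=0):
    """Alternate evolution and gradient learning; return best fitness per generation."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(pop_size)]
    learner = [rng.uniform(-1, 1) for _ in range(dim)]
    history = []
    for gen in range(generations):
        learner = gradient_step(learner)
        pop = next_generation(pop, rng)
        if (gen + 1) % sync_every == 0:
            # Inject the gradient learner in place of the weakest individual.
            worst = min(range(pop_size), key=lambda i: fitness(pop[i]))
            pop[worst] = list(learner)
        history.append(max(fitness(p) for p in pop))
    return history


if __name__ == "__main__":
    best = run_erl()
    print(f"best fitness after {len(best)} generations: {best[-1]:.6f}")
```

Because elites are carried over unchanged, the best fitness in this sketch never decreases from one generation to the next, while the injected learner lets gradient information reach the population. In a real planner the toy fitness would be replaced by the return of a sampling episode in the simulated ocean field.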

2. Methods

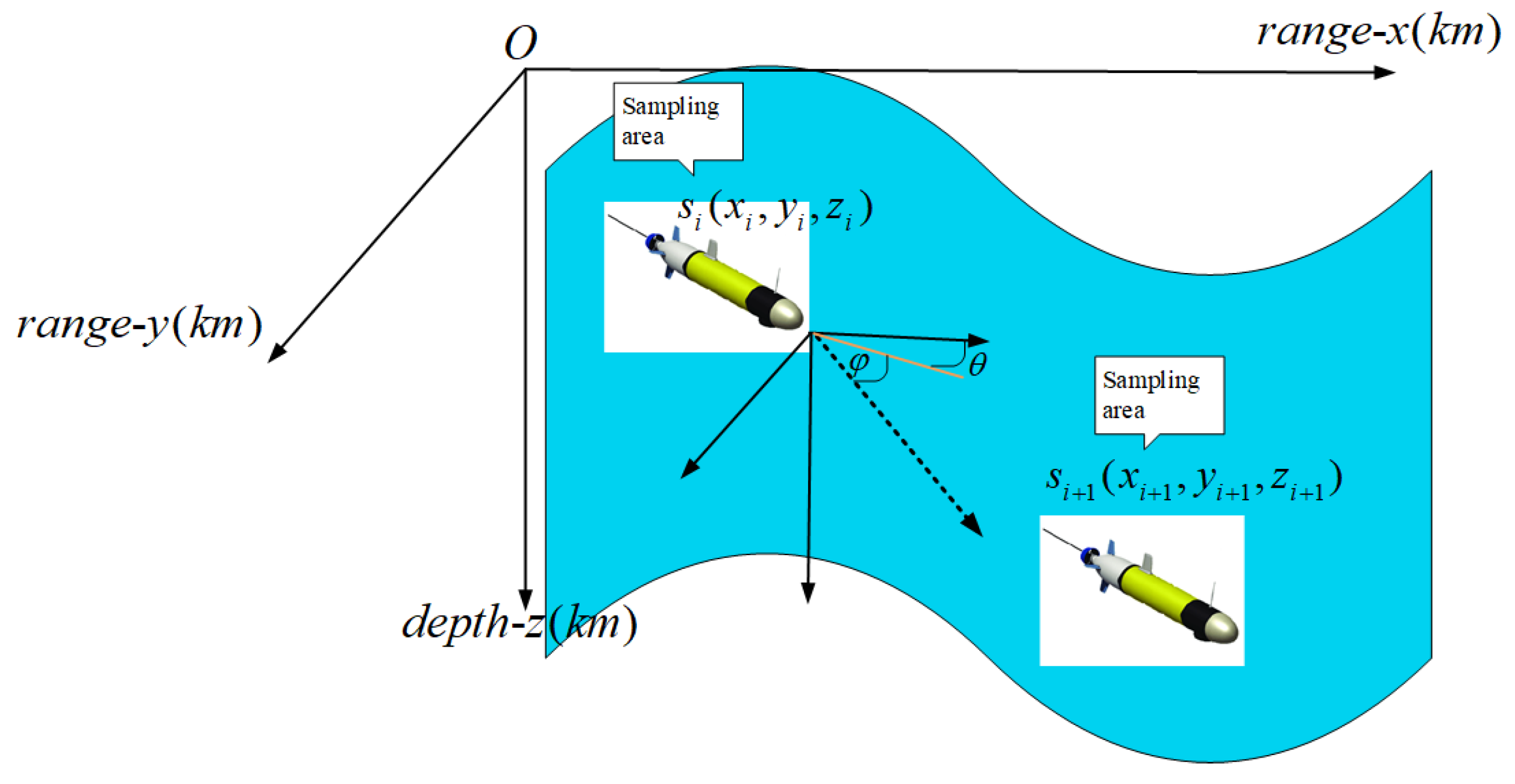

2.1. Adaptive Model of the 3D Ocean MOP

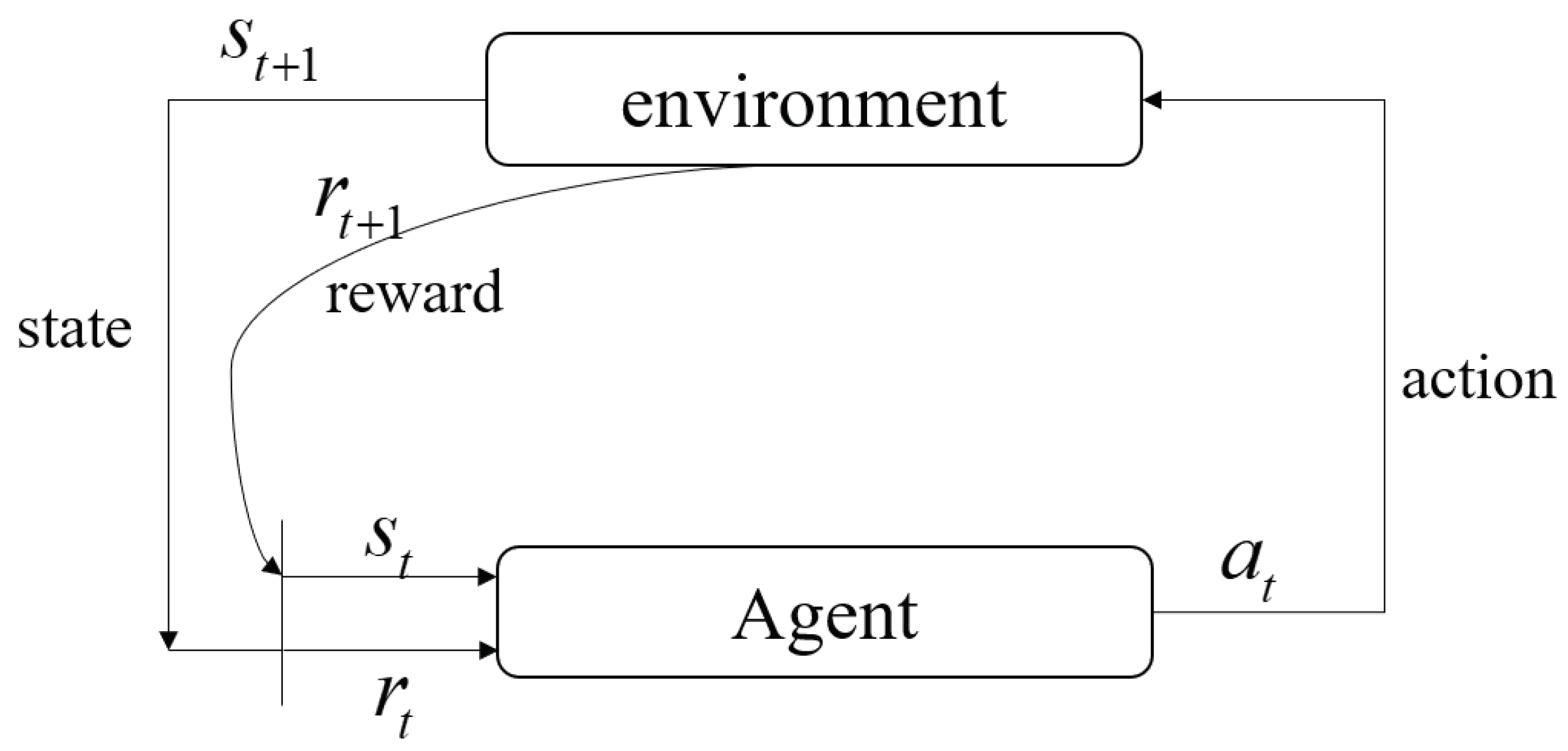

2.2. Deep Reinforcement Learning

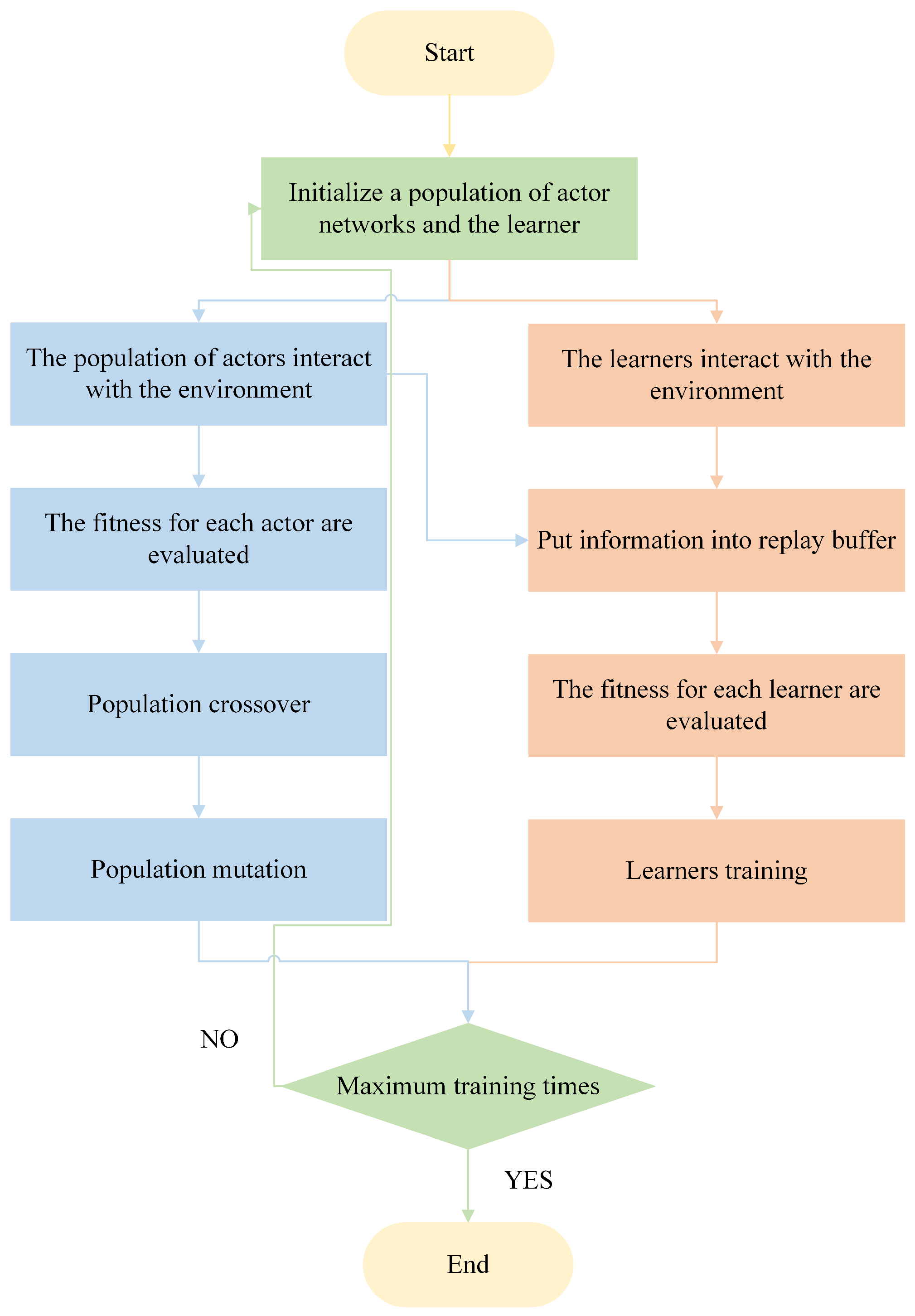

2.3. Evolutionary Reinforcement Learning

3. ERL Adaptive Sampling Path Planning for 3D Ocean MOPs

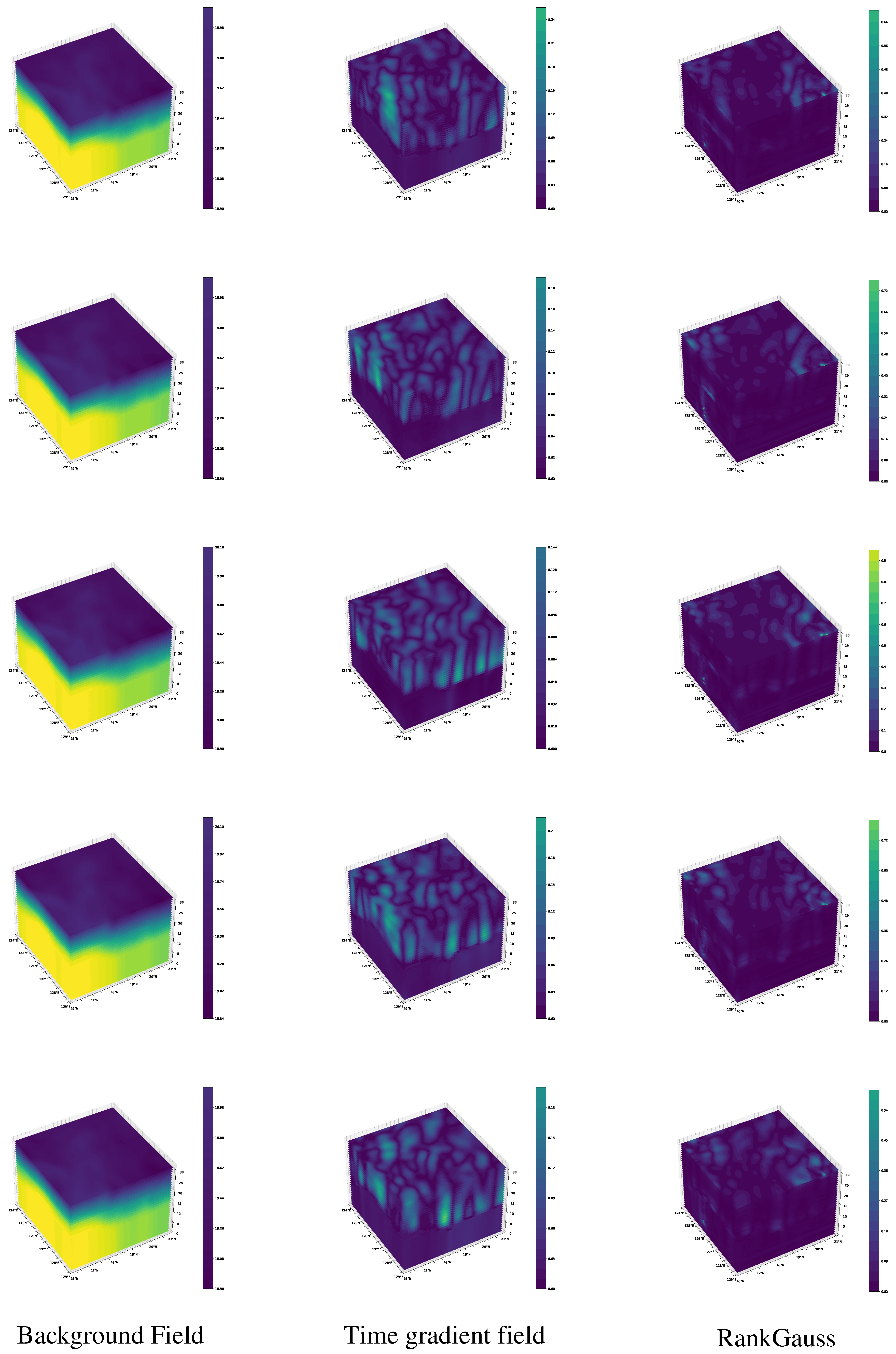

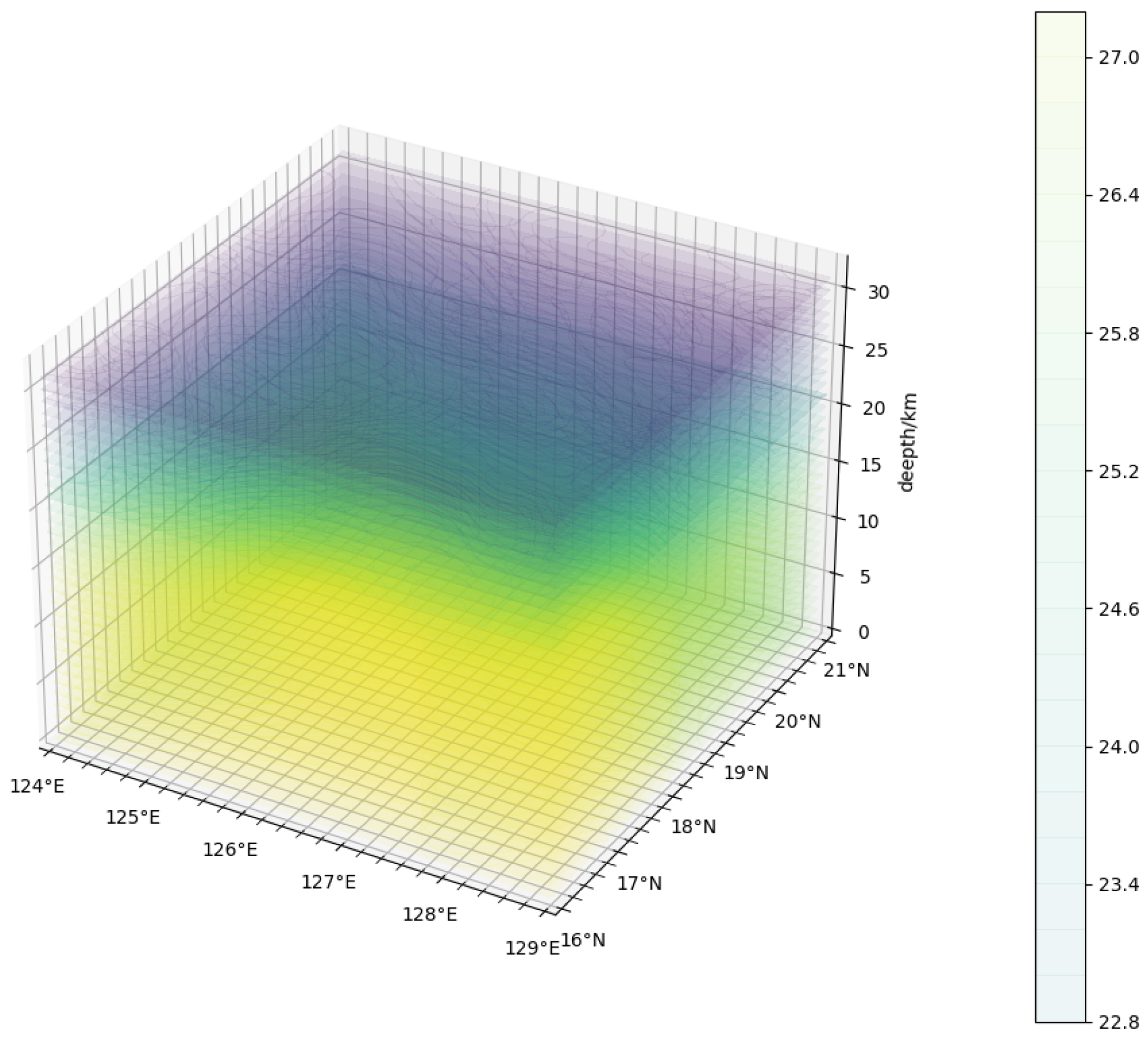

3.1. Acquisition of Marine Environmental Background Fields and Verification of Observations

3.2. Simulation Environment Modeling

3.2.1. Data Collection and Processing

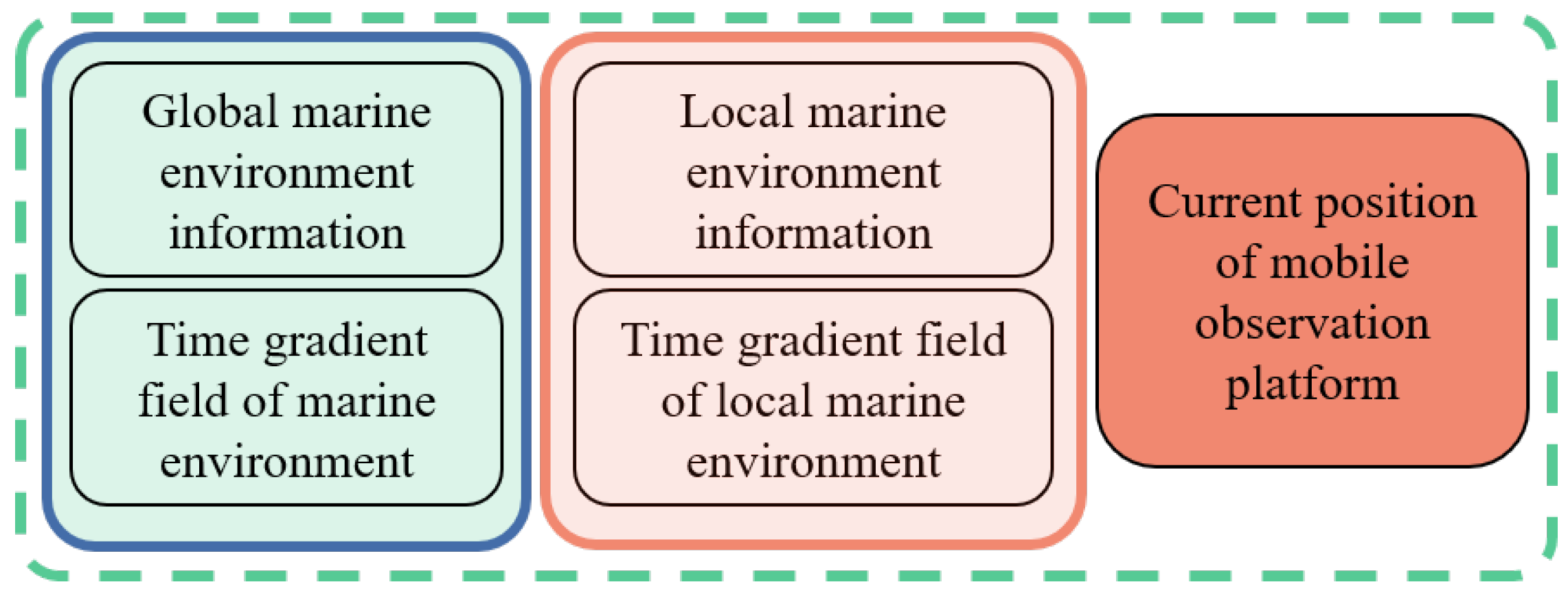

3.2.2. State Space

3.2.3. Action Space

3.2.4. Reward Function

3.3. Regional Marine Environment Mobile Observation Network Model

4. Experiment and Analysis

4.1. Parameter Setting

4.2. Adaptive Sampling Results, Data Assimilation Results, and Analysis of Observation Platform

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value | Description |
|---|---|---|
| minibatch size | 64 | Samples per gradient update (makes the gradient descent direction more accurate) |
| buffer size | 10,000 | Replay buffer capacity |
| discount factor | 0.9 | Reward attenuation coefficient |
| rollout_size | 3 | Size of learner rollouts |
| init_w | 1 | Whether the neural network parameters are initialized |
| actor_lr | | Actor learning rate |
| critic_lr | | Critic learning rate |
| noise_std | 0.1 | Standard deviation of Gaussian exploration noise |
| ucb_coefficient | 0.9 | Exploration coefficient in UCB |
| pop_size | 7 | Population size |
| elite_fraction | 0.2 | Proportion of elites in the population |
| crossover_prob | 0.01 | Crossover probability |
| mutation_prob | 0.2 | Mutation probability |
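As an illustration of how these settings might be grouped in code, the sketch below collects the listed values in a hypothetical `ERLConfig` dataclass. The class name and the two helper methods are assumptions made for this example. The `actor_lr` and `critic_lr` values are absent from the table, so they are left as `None` rather than guessed, and the rounding rule used for the elite count is likewise an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ERLConfig:
    """Hypothetical container for the hyperparameters listed in the table."""
    minibatch_size: int = 64
    buffer_size: int = 10_000
    discount_factor: float = 0.9
    rollout_size: int = 3
    init_w: int = 1
    actor_lr: Optional[float] = None   # value not given in the table
    critic_lr: Optional[float] = None  # value not given in the table
    noise_std: float = 0.1
    ucb_coefficient: float = 0.9
    pop_size: int = 7
    elite_fraction: float = 0.2
    crossover_prob: float = 0.01
    mutation_prob: float = 0.2

    def n_elites(self) -> int:
        """Elites kept per generation (assumed rule: round, with at least one)."""
        return max(1, round(self.elite_fraction * self.pop_size))

    def discount_weight(self, k: int) -> float:
        """Weight applied to a reward received k steps in the future."""
        return self.discount_factor ** k


if __name__ == "__main__":
    cfg = ERLConfig()
    print("elites per generation:", cfg.n_elites())
    print("weight of a reward 10 steps ahead:", round(cfg.discount_weight(10), 4))
```

Under the assumed rounding rule, an elite fraction of 0.2 with a population of 7 keeps a single elite per generation, and with a discount factor of 0.9 a reward ten steps ahead carries roughly a third of the weight of an immediate one.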

| Platform | ENSAC | EDDPG | DDPG | SAC |
|---|---|---|---|---|
| single | 0.16539 | 0.17408 | 0.17938 | 0.16775 |
| dual | 0.12013 | 0.13619 | 0.13052 | 0.13284 |
| five | 0.16952 | 0.17350 | 0.17213 | 0.17151 |
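The values in this table can be compared more directly by computing, for each platform configuration, how far ENSAC sits below each of the other algorithms. The sketch below does this arithmetic. It assumes the tabulated quantity is an error-type metric for which lower is better; the extracted table carries no caption, so this reading is an assumption. The names `RESULTS` and `relative_gap` are hypothetical.

```python
# Values copied from the table; rows are platform configurations.
RESULTS = {
    "single": {"ENSAC": 0.16539, "EDDPG": 0.17408, "DDPG": 0.17938, "SAC": 0.16775},
    "dual":   {"ENSAC": 0.12013, "EDDPG": 0.13619, "DDPG": 0.13052, "SAC": 0.13284},
    "five":   {"ENSAC": 0.16952, "EDDPG": 0.17350, "DDPG": 0.17213, "SAC": 0.17151},
}


def relative_gap(results, reference="ENSAC"):
    """Return (other - reference) / other for every other algorithm in each row."""
    gaps = {}
    for platform, row in results.items():
        ref = row[reference]
        gaps[platform] = {
            name: (value - ref) / value
            for name, value in row.items()
            if name != reference
        }
    return gaps


if __name__ == "__main__":
    for platform, row in relative_gap(RESULTS).items():
        summary = ", ".join(f"{name}: {gap:.1%}" for name, gap in row.items())
        print(f"{platform:>6}: ENSAC lower by {summary}")
```

In every row ENSAC has the smallest value, and every algorithm attains its smallest value in the dual-platform row, which matches the statement that the benefit of adding platforms diminishes beyond two.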


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Liu, Y.; Zhou, W. Adaptive Sampling Path Planning for a 3D Marine Observation Platform Based on Evolutionary Deep Reinforcement Learning. J. Mar. Sci. Eng. 2023, 11, 2313. https://doi.org/10.3390/jmse11122313
