Prediction of Wave Energy Flux in the Bohai Sea through Automated Machine Learning

Abstract

1. Introduction

- Apply a method for time series point-to-point prediction.

- Compare models of traditional machine learning and automated machine learning in the scenario of wave energy prediction.

- Predict the significant wave height, mean wave period, and wave energy flux of the Bohai Sea, providing a theoretical characterization of the ocean parameters that govern wave development there.

2. Materials and Methods

2.1. Data

2.2. Mann–Kendall Test
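
As a rough illustration of the test named in this heading, the sketch below computes the standard Mann–Kendall statistic S, its tie-corrected variance, the continuity-corrected Z score, and a two-sided p-value from the normal approximation. The function name `mann_kendall` and the sample series are hypothetical and chosen only for illustration; the exact variant, significance level, and software used in the study are not stated here.

```python
import math
from collections import Counter


def mann_kendall(values):
    """Return (S, Z, two-sided p-value) of the Mann-Kendall trend test.

    Illustrative sketch using the normal approximation with tie correction;
    not necessarily the implementation used in the study.
    """
    x = list(values)
    n = len(x)
    if n < 3:
        raise ValueError("need at least 3 observations")

    # S counts concordant minus discordant pairs over all i < j.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = x[j] - x[i]
            s += (diff > 0) - (diff < 0)

    # Variance of S, reduced by a correction term for groups of tied values.
    tie_term = sum(t * (t - 1) * (2 * t + 5)
                   for t in Counter(x).values() if t > 1)
    var_s = (n * (n - 1) * (2 * n + 5) - tie_term) / 18.0

    # Continuity-corrected standard score.
    if var_s == 0 or s == 0:
        z = 0.0
    elif s > 0:
        z = (s - 1) / math.sqrt(var_s)
    else:
        z = (s + 1) / math.sqrt(var_s)

    # Two-sided p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return s, z, p


# Hypothetical, strictly increasing series (not data from the study).
s, z, p = mann_kendall([0.8, 0.9, 1.1, 1.2, 1.4, 1.5, 1.7, 1.9, 2.0, 2.2])
print(s, round(z, 3), p < 0.05)
```

A positive Z with a small p-value suggests an increasing monotonic trend, and a negative Z a decreasing one. The pairwise loop is quadratic in the series length, which is usually acceptable for monthly or annual series.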

2.3. Forecast

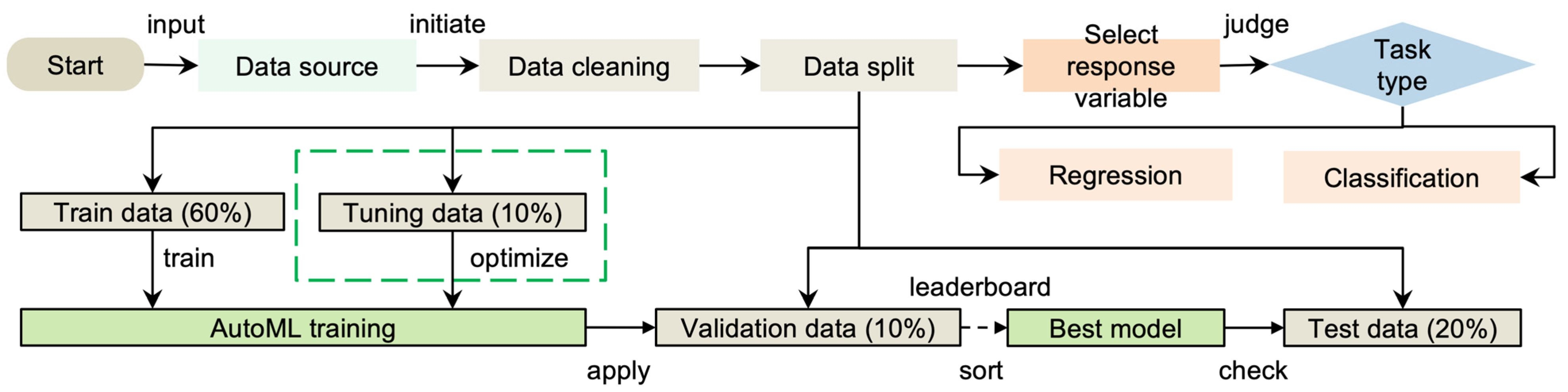

2.3.1. Data Processing

2.3.2. Conventional Machine Learning Models

2.3.3. Automated Machine Learning Models

2.3.4. Automated Deep Learning Models

2.3.5. Experimental Conditions

2.4. Wave Energy Flux Calculation
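
For orientation, the sketch below evaluates the widely used deep-water approximation of wave energy flux per metre of wave crest, P = ρ g² H_s² T / (64π). Several things here are assumptions rather than details taken from the study: the deep-water form itself (which may not hold in shallow water, where a depth-dependent formulation would be needed), the seawater density of 1025 kg/m³, the use of the mean wave period in place of the energy period, and the function name `deep_water_wave_energy_flux`.

```python
import math

RHO_SEAWATER = 1025.0  # kg/m^3, assumed typical seawater density
G = 9.81               # m/s^2, standard gravitational acceleration


def deep_water_wave_energy_flux(hs_m: float, period_s: float) -> float:
    """Return wave energy flux in W per metre of wave crest.

    Deep-water approximation P = rho * g^2 * Hs^2 * T / (64 * pi).
    hs_m is the significant wave height in metres; period_s is the wave
    period in seconds (assumed here to stand in for the energy period).
    """
    if hs_m < 0 or period_s < 0:
        raise ValueError("wave height and period must be non-negative")
    return RHO_SEAWATER * G ** 2 * hs_m ** 2 * period_s / (64.0 * math.pi)


# Hypothetical sea state: Hs = 1 m, T = 5 s (not values from the study).
flux_w_per_m = deep_water_wave_energy_flux(1.0, 5.0)
print(f"{flux_w_per_m / 1000.0:.2f} kW/m")
```

Because the flux scales with the square of the wave height, an error in predicted H_s is amplified in the derived flux, which is one reason the height and period are usually forecast first and the flux computed from them.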

2.5. Statistical Metrics

3. Results

3.1. Study Area

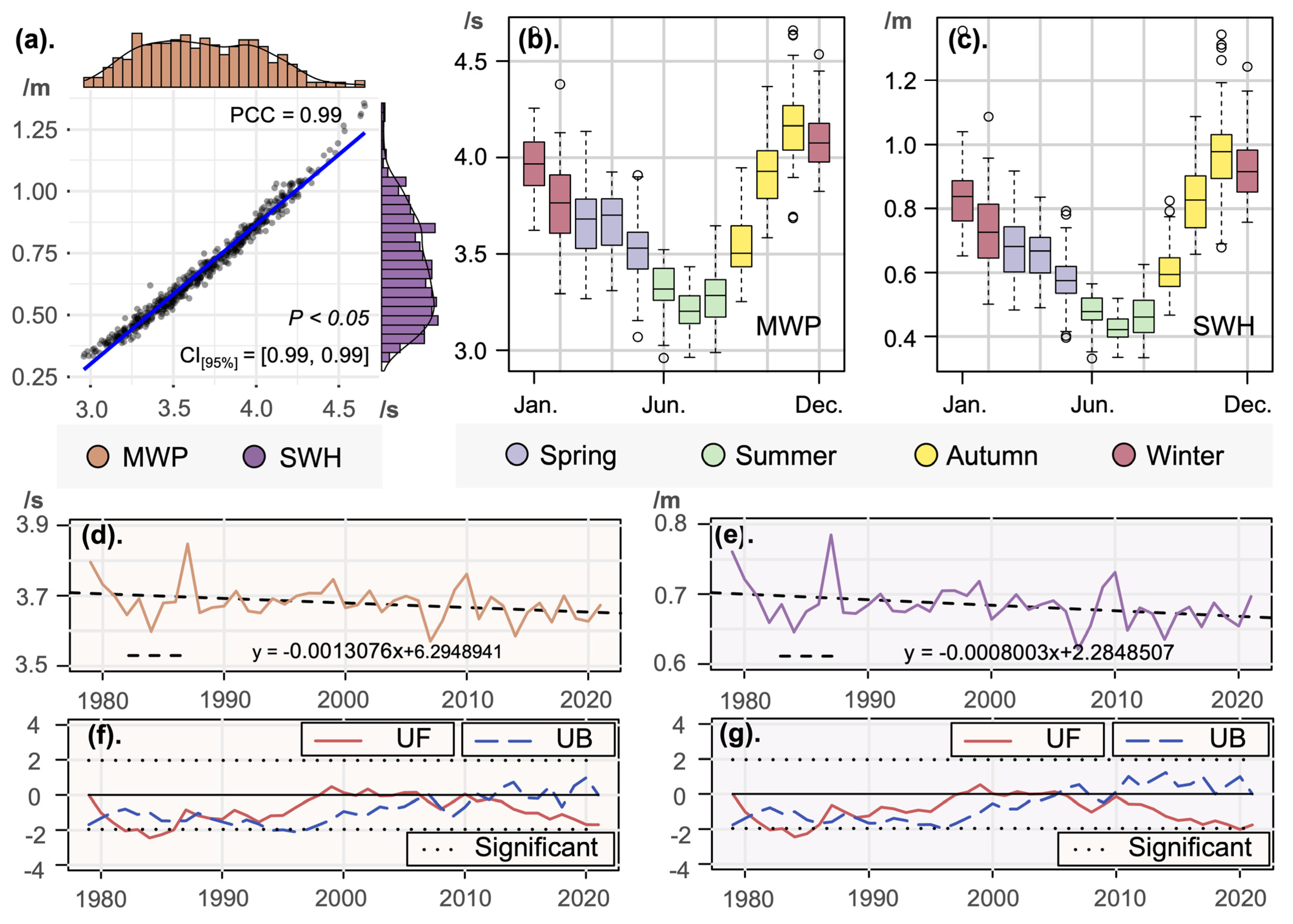

3.2. Statistical Analysis

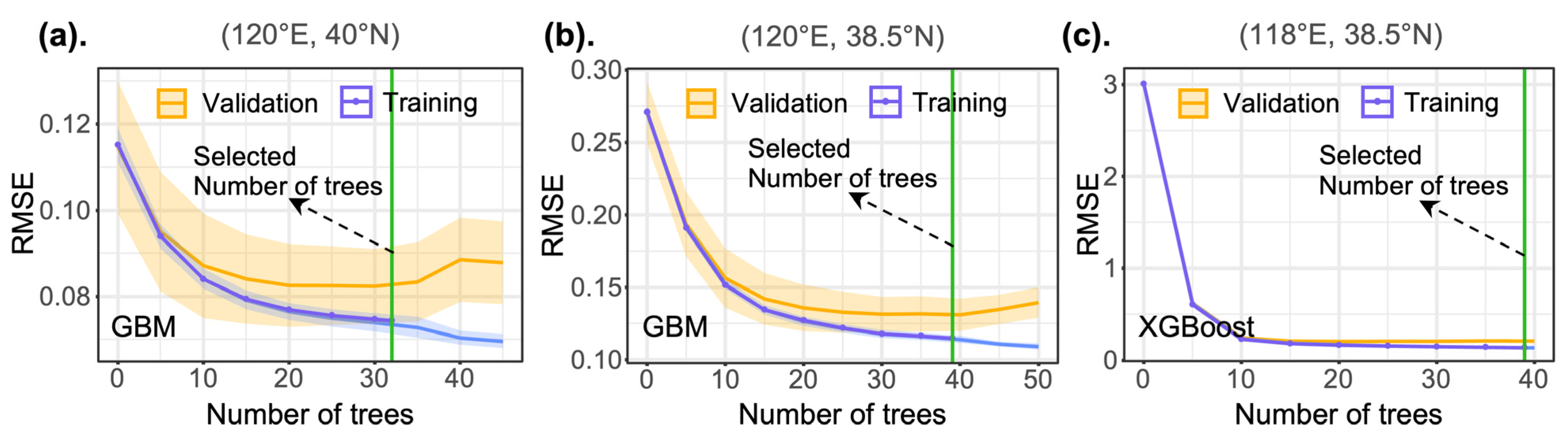

3.3. Model Performance

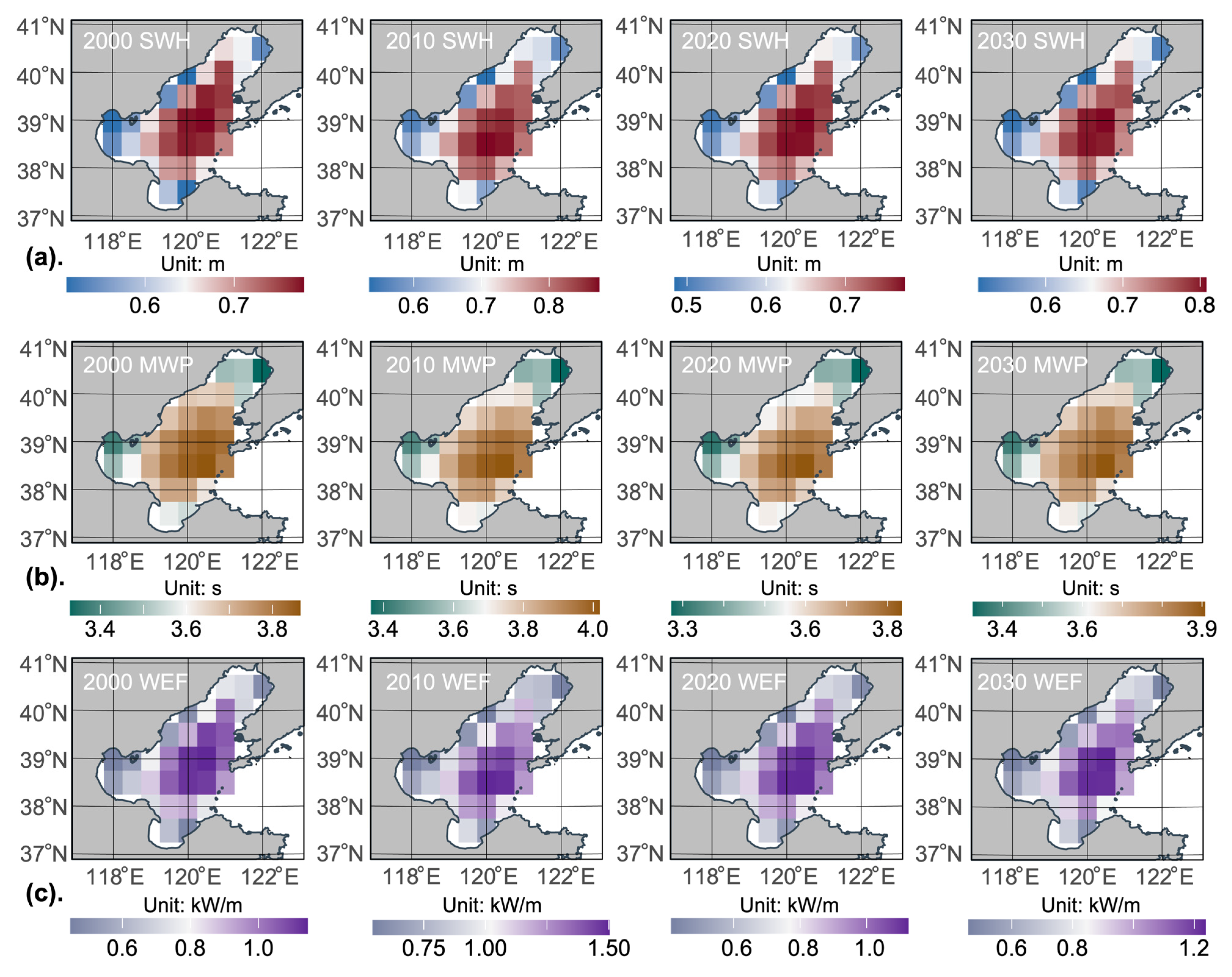

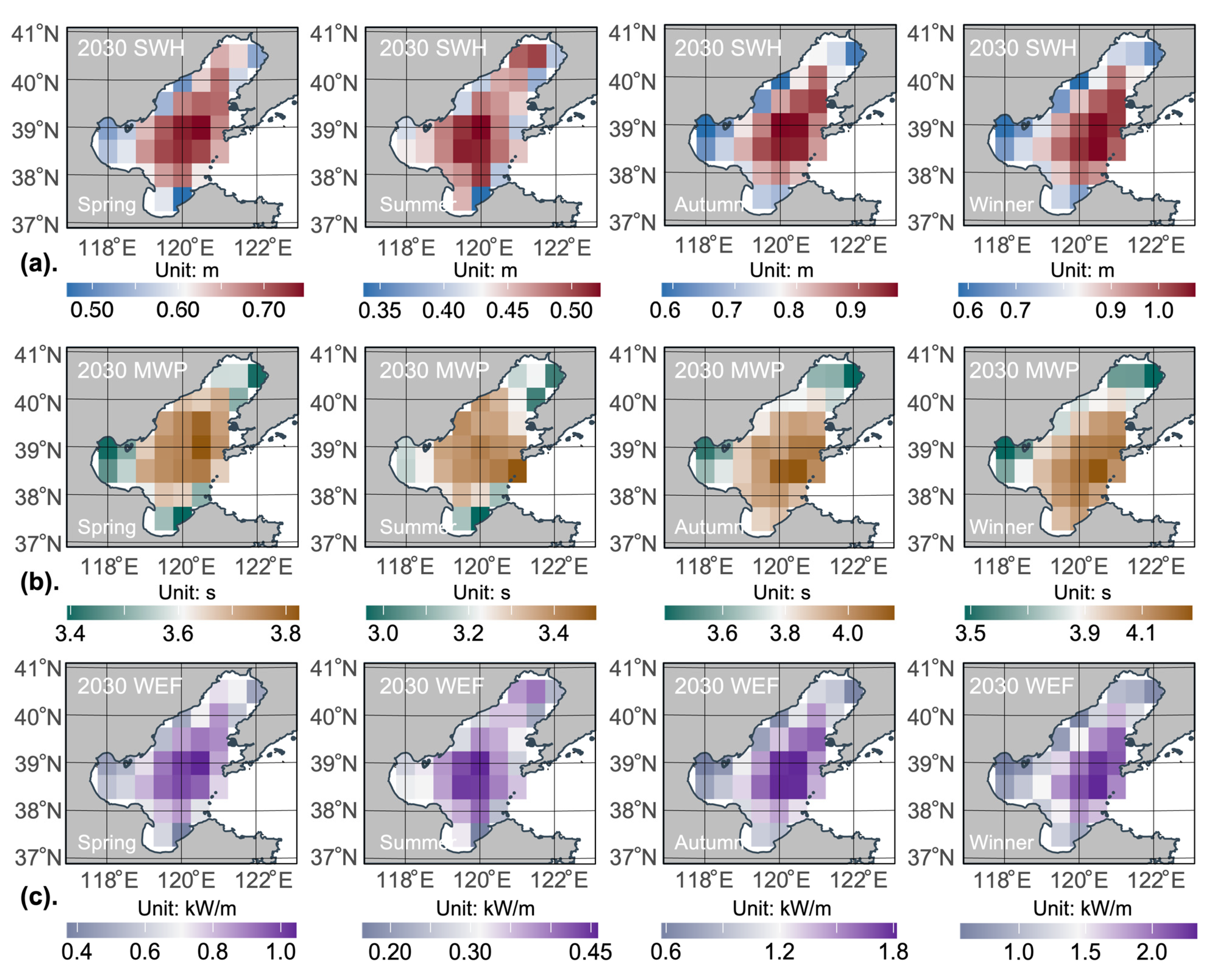

3.4. Wave Energy Flux Prediction

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | 118° E | 118.5° E | 119° E | 119.5° E | 120° E | 120.5° E | 121° E | 121.5° E | 122° E |
|---|---|---|---|---|---|---|---|---|---|
| 40.5° N | 1 | 2 | 3 | | | | | | |
| 40.0° N | 4 | 5 | 6 | 7 | | | | | |
| 39.5° N | 8 | 9 | 10 | 11 | | | | | |
| 39.0° N | 12 | 13 | 14 | 15 | 16 | 17 | 18 | | |
| 38.5° N | 19 | 20 | 21 | 22 | 23 | 24 | 25 | | |
| 38.0° N | 26 | 27 | 28 | 29 | | | | | |
| 37.5° N | 30 | 31 | | | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, H.; Wang, H.; Ma, Y.; Xu, M. Prediction of Wave Energy Flux in the Bohai Sea through Automated Machine Learning. J. Mar. Sci. Eng. 2022, 10, 1025. https://doi.org/10.3390/jmse10081025