Multi-Scale Object Detection Model for Autonomous Ship Navigation in Maritime Environment

Abstract

1. Introduction

- We use a deformable convolutional network (DCN) to optimize the backbone. Learnable offsets describe the feature orientation of the object, so the receptive field is no longer confined to a fixed sampling grid and can adapt more flexibly to the varying geometry of sea-surface objects.

- We combine the DIoU loss function with the Soft-NMS (SNMS) method to evaluate and filter the candidate boxes in the same grid cell multiple times, which improves the reliability of the detection boxes in dense maritime scenes.

- We identify network-training and data augmentation tricks that are useful for object detection in scenes captured by unmanned surface vehicles (USVs), and filter out those that are not.

- Experimental results in a variety of complex maritime scenarios demonstrate the superior accuracy and robustness of our sea-surface object detection.

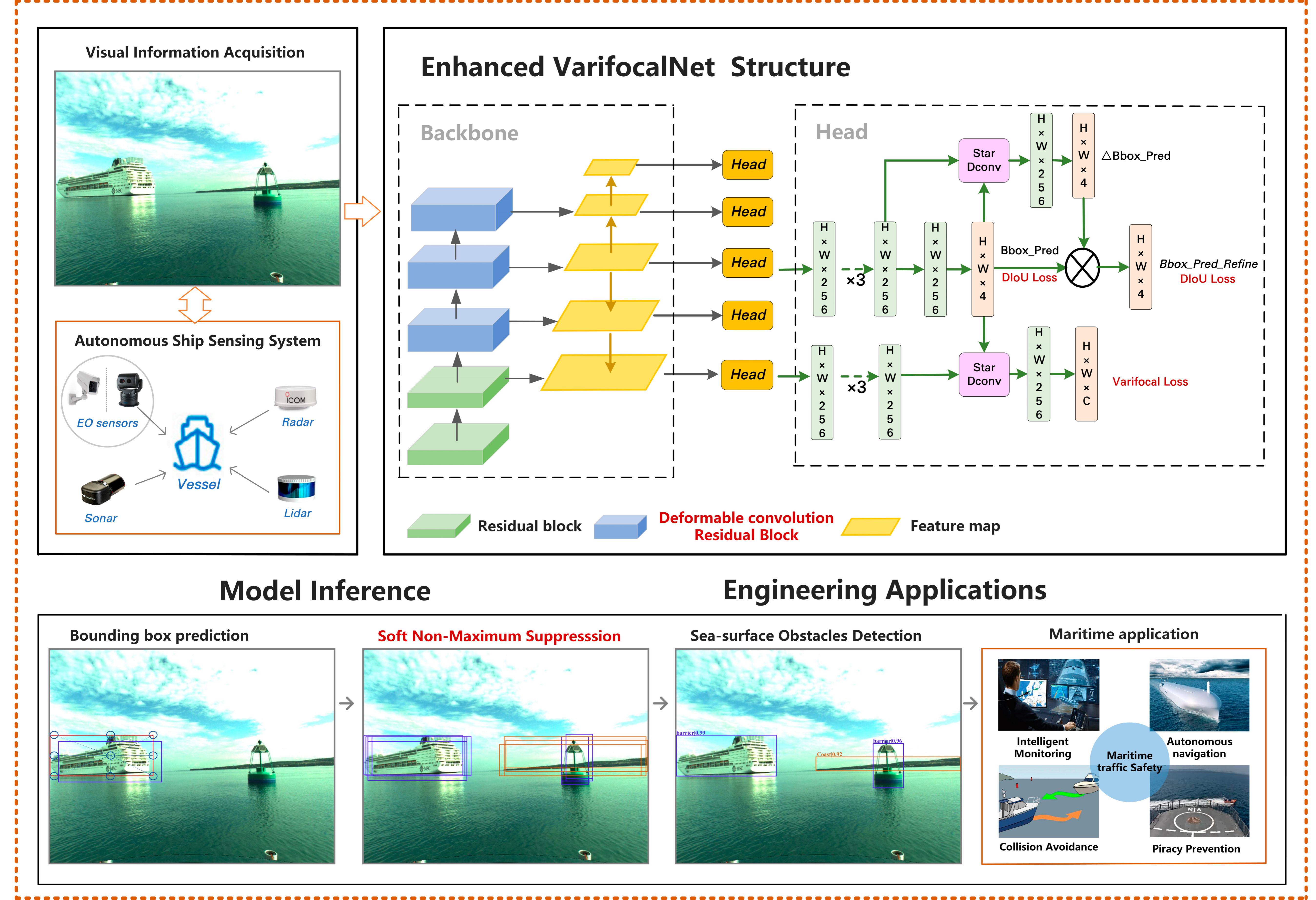

2. The Proposed CNN-Enhanced Maritime Obstacle Object Detection Model

2.1. Network Architecture

2.2. Deformable Convolutional Module

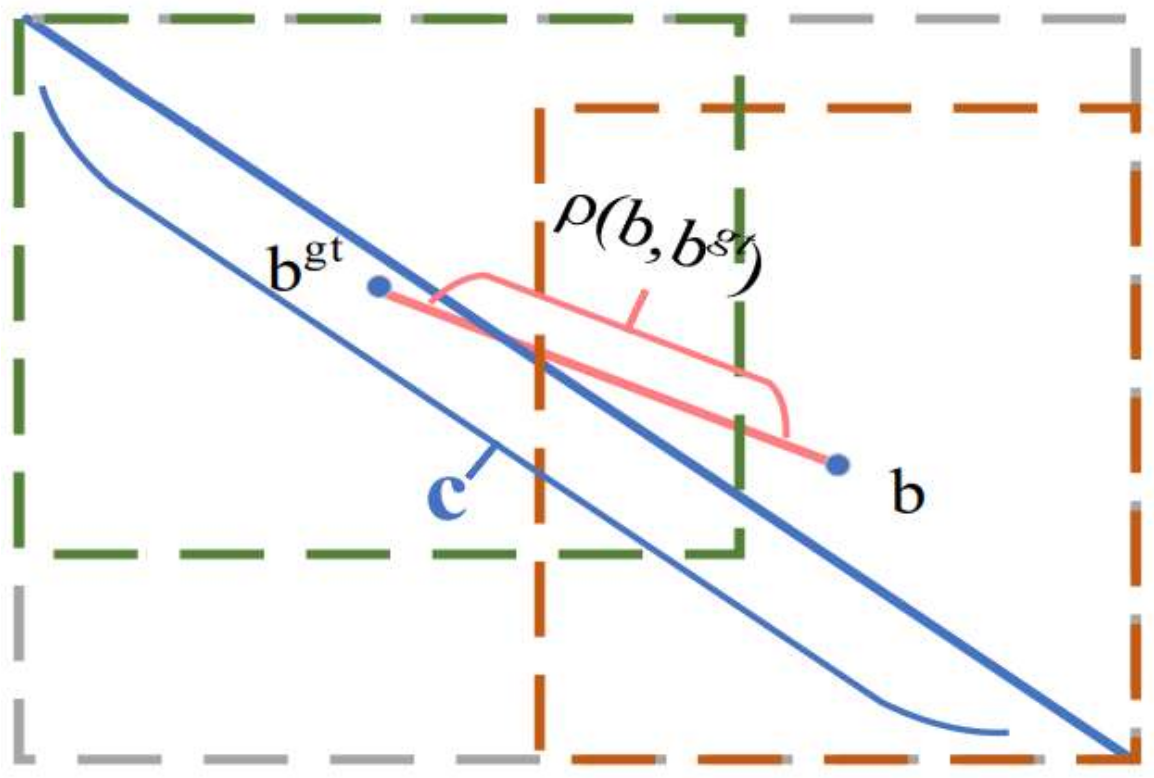
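The contributions list describes DCN as adding learnable offsets so that the receptive field is no longer tied to a fixed grid. The sketch below illustrates that idea with a single-channel 3×3 deformable convolution in the style of Dai et al. (DCNv1). It is not the authors' implementation: the offsets are passed in directly, whereas a real DCN layer predicts them with an extra convolution over the same feature map and learns them end to end. The names `deform_conv3x3` and `bilinear_sample` are hypothetical.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]
Offsets = List[List[List[Tuple[float, float]]]]  # [row][col][tap] -> (dy, dx)

# Regular 3x3 sampling grid R: the fixed kernel positions p_k around p0
KERNEL_TAPS: List[Tuple[int, int]] = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def bilinear_sample(x: Grid, py: float, px: float) -> float:
    """Read x at a fractional position; neighbours outside the map count as 0."""
    h, w = len(x), len(x[0])
    y0, x0 = math.floor(py), math.floor(px)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # bilinear weight shrinks linearly with distance to the neighbour
                value += (1 - abs(py - yy)) * (1 - abs(px - xx)) * x[yy][xx]
    return value


def deform_conv3x3(x: Grid, weight: List[float], offsets: Offsets) -> Grid:
    """Single-channel 3x3 deformable convolution, stride 1, zero padding.

    y(p0) = sum_k weight[k] * x(p0 + p_k + dp_k), where dp_k = offsets[i][j][k].
    With all offsets zero this reduces to an ordinary 3x3 convolution.
    """
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for k, (dy, dx) in enumerate(KERNEL_TAPS):
                oy, ox = offsets[i][j][k]
                acc += weight[k] * bilinear_sample(x, i + dy + oy, j + dx + ox)
            out[i][j] = acc
    return out


if __name__ == "__main__":
    ones = [[1.0] * 3 for _ in range(3)]
    no_offsets = [[[(0.0, 0.0)] * 9 for _ in range(3)] for _ in range(3)]
    y = deform_conv3x3(ones, [1.0] * 9, no_offsets)
    print(y[1][1], y[0][0])  # 9.0 4.0: behaves like a plain 3x3 box filter
```

Fractional offsets are what make the sampling positions continuous, which is why bilinear interpolation is needed. For real models, torchvision provides this operation as `torchvision.ops.deform_conv2d`, which takes the input, a predicted offset tensor and the kernel weights.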

2.3. Loss Function
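The contributions name DIoU as the box-regression loss. Following the standard definition by Zheng et al., the DIoU loss adds a normalised centre-distance penalty to 1 - IoU: rho is the distance between the predicted and ground-truth box centres and c is the diagonal of the smallest box enclosing both. A minimal sketch, assuming `(x1, y1, x2, y2)` boxes in pixels; the function names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def diou_loss(pred: Box, gt: Box) -> float:
    """L_DIoU = 1 - IoU + rho^2(b, b_gt) / c^2."""
    # rho^2: squared distance between the two box centres
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    rho2 = dx * dx + dy * dy
    # c^2: squared diagonal of the smallest box enclosing both
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    return 1.0 - iou(pred, gt) + rho2 / (cw * cw + ch * ch)


if __name__ == "__main__":
    gt = (2.0, 0.0, 3.0, 1.0)
    print(diou_loss((0.0, 0.0, 1.0, 1.0), gt))    # no overlap, nearby
    print(diou_loss((-2.0, 0.0, -1.0, 1.0), gt))  # no overlap, farther away
```

Both example predictions have IoU = 0, so a plain IoU loss would assign them the same value of 1. The DIoU loss grows with distance (about 1.40 versus 1.62), so a non-overlapping prediction still receives a gradient pulling it towards the target.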

2.4. Inference Algorithm
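The contributions pair SNMS with DIoU so that candidate boxes are re-scored repeatedly instead of being discarded in a single pass. Exactly how the two are combined is not detailed here, so the sketch below shows only Gaussian Soft-NMS as described by Bodla et al., with plain IoU as the overlap measure. The defaults `sigma=0.5` and `score_floor=0.001` are illustrative, not values taken from this paper.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def soft_nms(boxes: List[Box], scores: List[float], sigma: float = 0.5,
             score_floor: float = 0.001) -> List[Tuple[Box, float]]:
    """Gaussian Soft-NMS: decay scores of overlapping boxes instead of deleting them."""
    remaining = list(zip(boxes, scores))
    kept: List[Tuple[Box, float]] = []
    while remaining:
        # 1. take the highest-scoring remaining candidate
        best = max(range(len(remaining)), key=lambda i: remaining[i][1])
        top_box, top_score = remaining.pop(best)
        kept.append((top_box, top_score))
        # 2. re-score every other candidate: s_i <- s_i * exp(-IoU^2 / sigma)
        rescored = []
        for box, score in remaining:
            score *= math.exp(-(iou(top_box, box) ** 2) / sigma)
            if score >= score_floor:  # drop only boxes whose score became negligible
                rescored.append((box, score))
        remaining = rescored
    return kept


if __name__ == "__main__":
    A = (0.0, 0.0, 10.0, 10.0)
    C = (50.0, 50.0, 60.0, 60.0)
    for box, score in soft_nms([A, A, C], [0.9, 0.8, 0.7]):
        print(box, round(score, 3))  # the duplicate of A survives with score ~0.108
```

Hard NMS with a 0.5 IoU threshold would delete the second copy of `A` outright; Soft-NMS keeps it with a strongly reduced score, which helps when two real vessels overlap closely in a crowded scene. Replacing `iou` with a DIoU-based overlap is one possible way to bring in the DIoU term, but that variant is an assumption rather than something stated here.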

2.5. Multi-Scale Technology
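Which multi-scale scheme is used is not specified here. In the complexity table, enabling MS leaves the parameter count at 33.07 M while inference time rises from 48 ms to 91 ms, which is consistent with test-time multi-scale inference: running the same network on several resized copies of a frame. Assuming that reading, a minimal sketch follows. `detect_at_scale` and `fake_detector` are hypothetical placeholders for the real network, and merging the pooled boxes with plain NMS is also an assumption.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]
Detection = Tuple[Box, float]  # (box in original-image pixels, confidence)


def iou(a: Box, b: Box) -> float:
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def nms(dets: List[Detection], thresh: float = 0.5) -> List[Detection]:
    """Greedy hard NMS, used here only to merge the pooled multi-scale detections."""
    kept: List[Detection] = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, k_box) < thresh for k_box, _ in kept):
            kept.append((box, score))
    return kept


def multi_scale_detect(detect_at_scale: Callable[[float], List[Detection]],
                       scales: Sequence[float], iou_thresh: float = 0.5) -> List[Detection]:
    """Run a detector at several input scales and merge results in original coordinates."""
    pooled: List[Detection] = []
    for s in scales:
        for (x1, y1, x2, y2), score in detect_at_scale(s):
            # boxes come back in resized-image pixels; divide by s to undo the resize
            pooled.append(((x1 / s, y1 / s, x2 / s, y2 / s), score))
    return nms(pooled, iou_thresh)


def fake_detector(scale: float) -> List[Detection]:
    """Hypothetical stand-in for the network run on the image resized by `scale`."""
    dets = [((10 * scale, 10 * scale, 110 * scale, 60 * scale), 0.9)]  # large ship: found at every scale
    if scale >= 2.0:
        # a 6x4-pixel buoy only becomes detectable after upsampling
        dets.append(((300 * scale, 40 * scale, 306 * scale, 44 * scale), 0.6))
    return dets


if __name__ == "__main__":
    print(multi_scale_detect(fake_detector, scales=[1.0, 2.0]))
```

The large ship is found at both scales and collapses to one box after merging, while the small buoy is only recovered from the upsampled pass. This trade-off (better small-object recall for roughly one extra forward pass per added scale) matches the direction of the timing numbers, though the actual scales used are not given.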

3. Experimental Results and Analysis

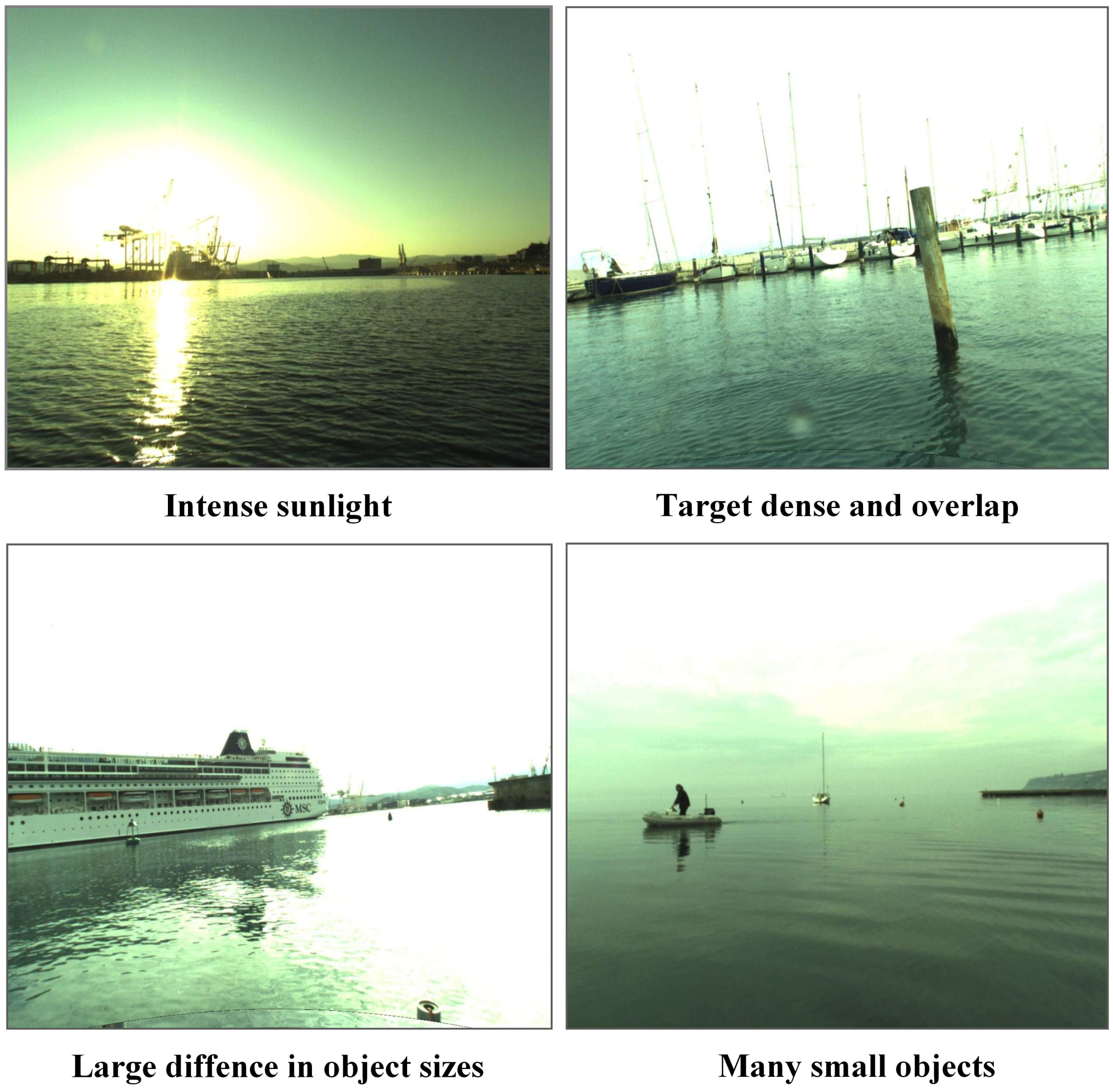

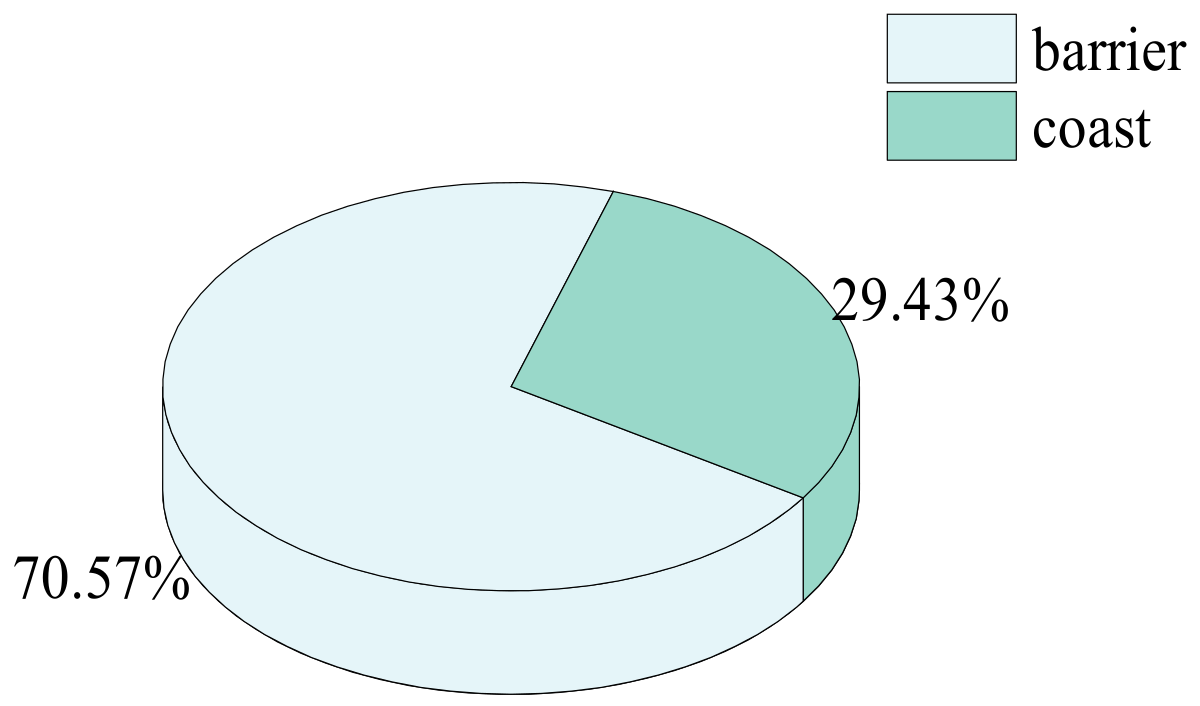

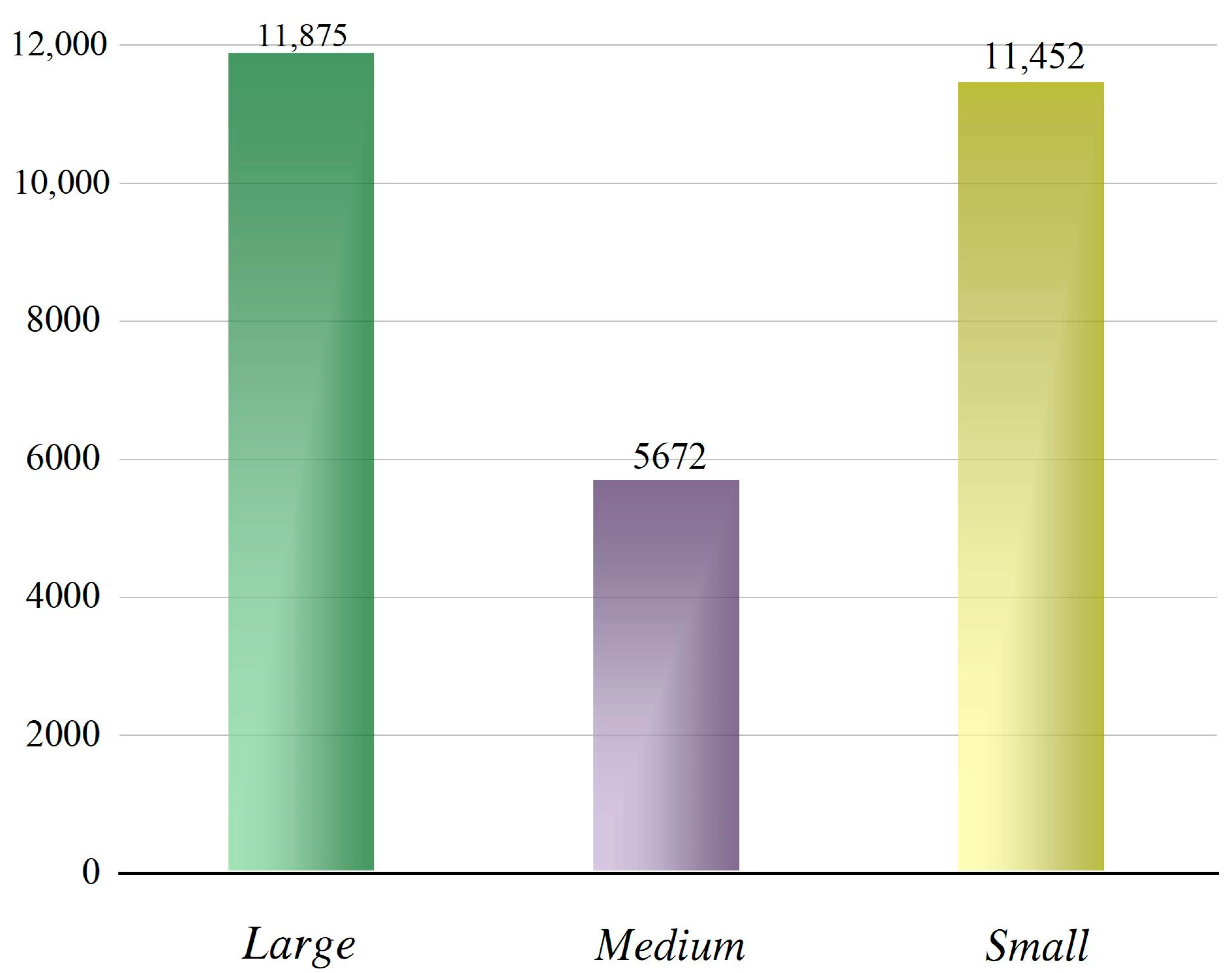

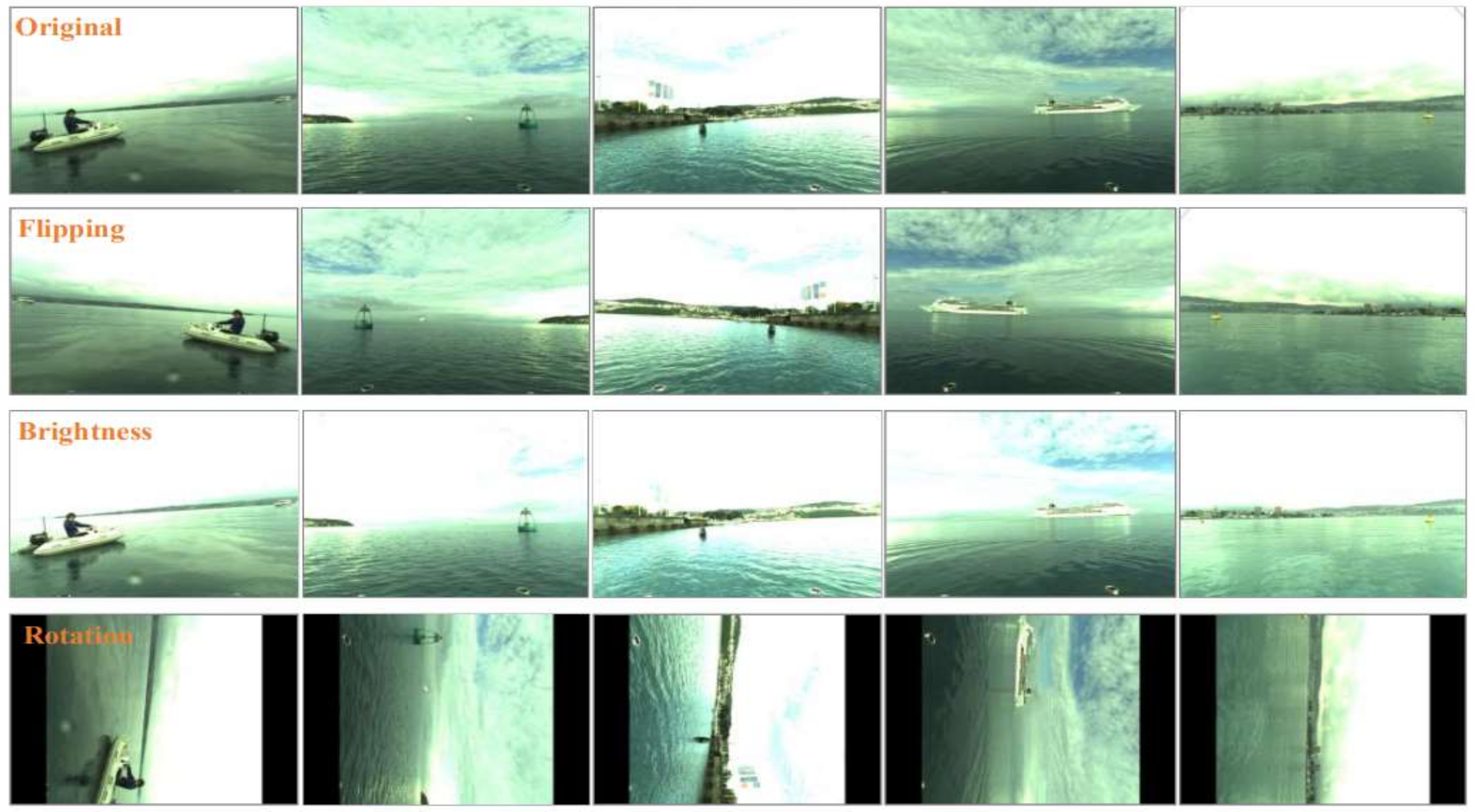

3.1. Datasets Description and Data Augmentation
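The specific training and augmentation tricks retained for USV imagery are not enumerated in this section. As an illustration only, horizontal flipping, a common detection augmentation, shows the detail that matters for any geometric trick: box labels must be transformed together with the pixels. The name `hflip` and the continuous `(x1, y1, x2, y2)` box convention in pixel-edge units are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), pixel-edge coordinates in [0, width]


def hflip(image: List[list], boxes: List[Box]) -> Tuple[List[list], List[Box]]:
    """Mirror an image (a list of pixel rows) left-right and remap its boxes."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    # x-coordinates mirror around the image centre; x1 and x2 swap roles
    new_boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes


if __name__ == "__main__":
    img = [[1, 2, 3, 4]]
    print(hflip(img, [(0, 0, 1, 1)]))  # pixel 1 moves to the last column, and so does its box
```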

3.2. Evaluation Metrics

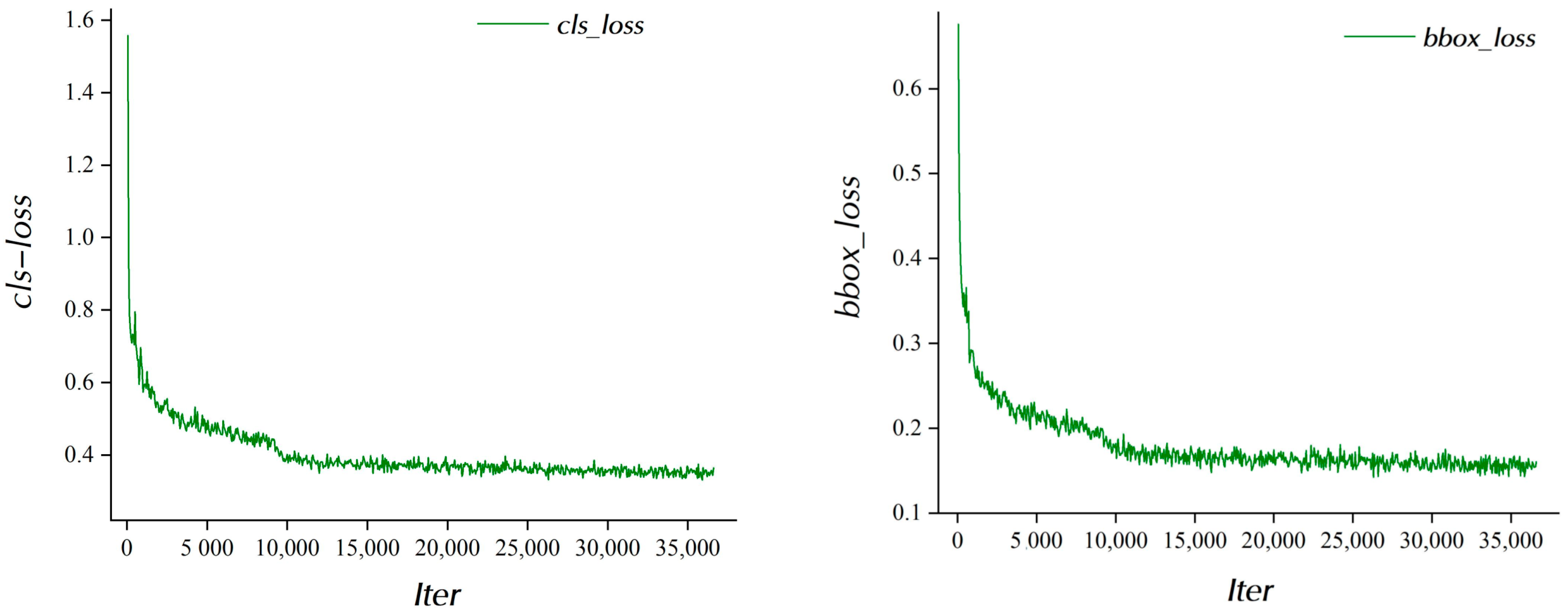

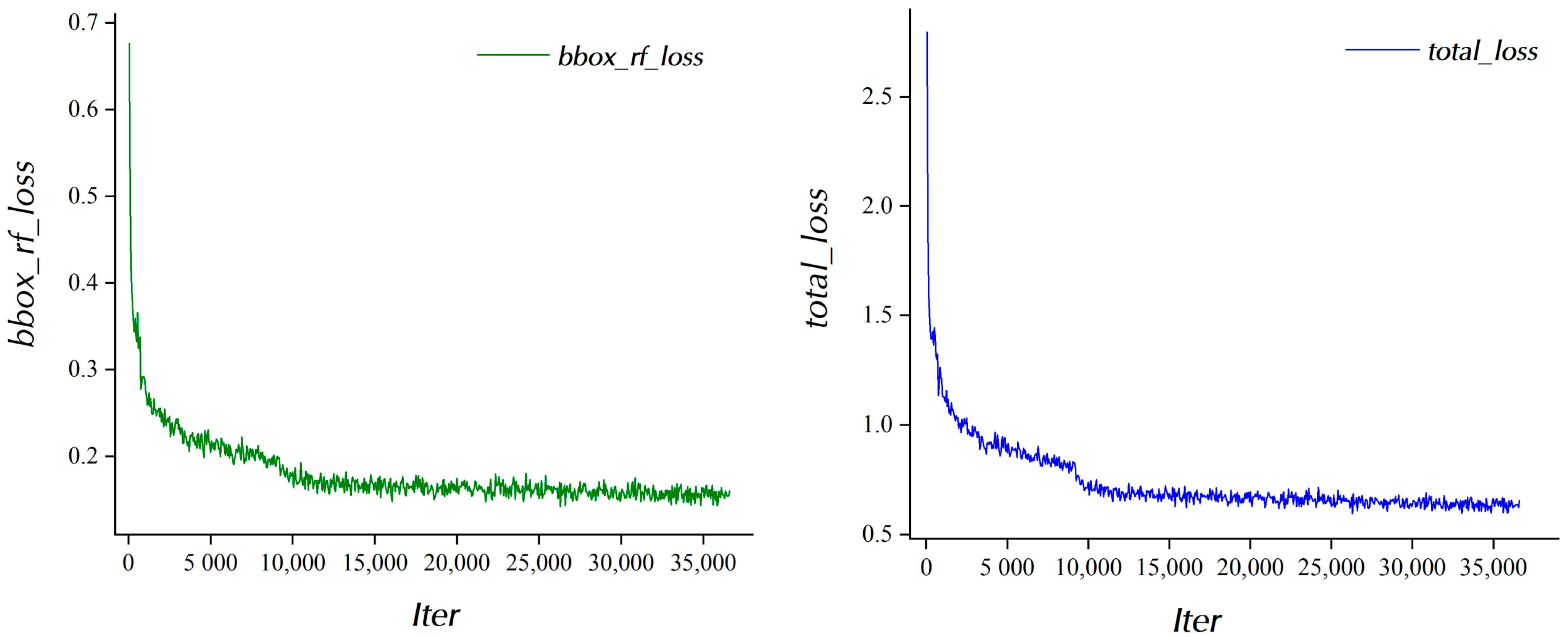

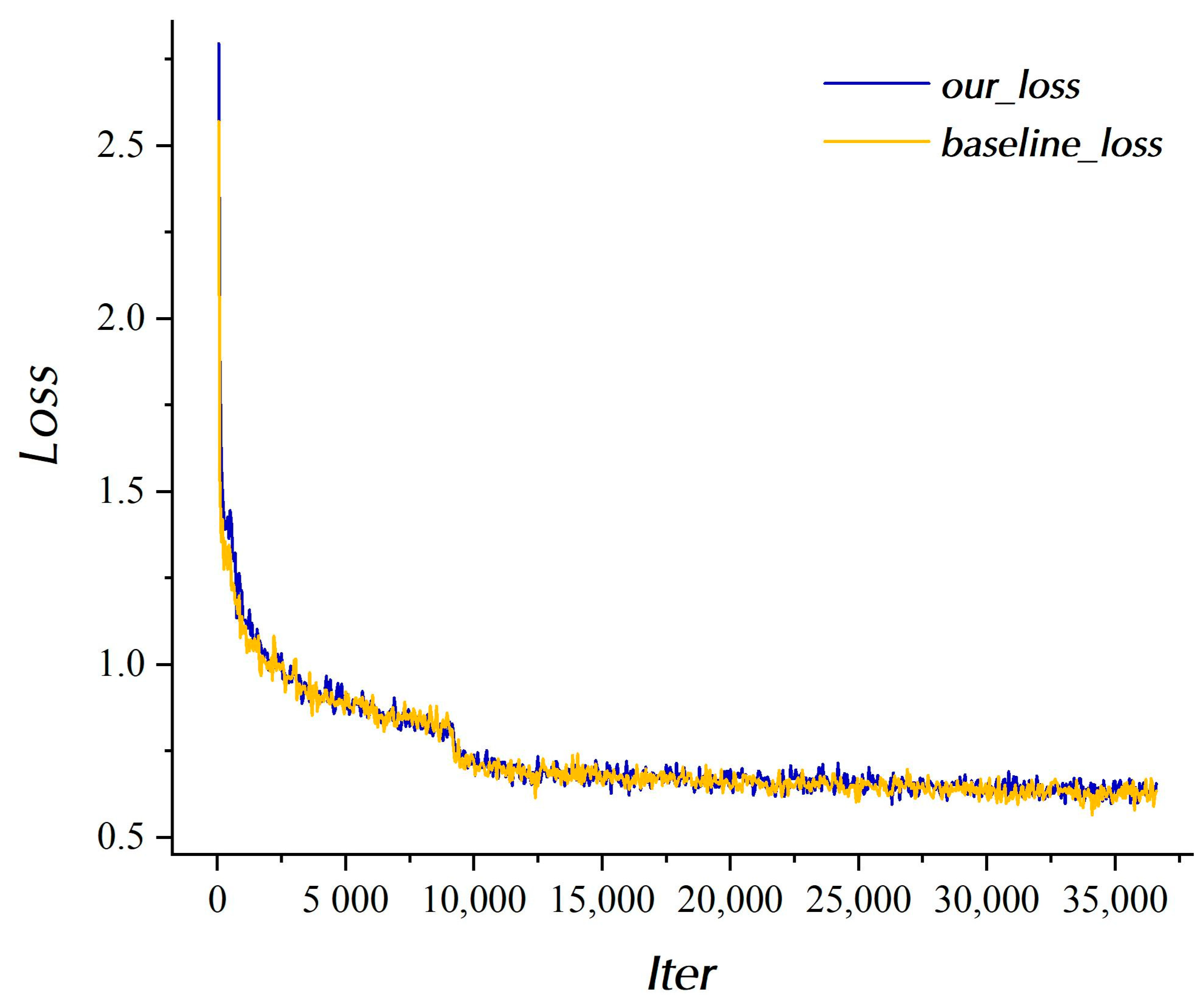
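The column labels in the result tables (P0.5, P[0.5:0.95], Ps/Pm/Pl, R[0.5:0.95], Rs/Rm/Rl) read like COCO-style average precision and recall. Assuming COCO conventions (IoU thresholds from 0.50 to 0.95 in steps of 0.05; small, medium and large objects split at box areas of 32² and 96² pixels), the sketch below computes single-class AP at one threshold and averages it over the ten thresholds. It uses all-point interpolation, whereas the official COCO evaluator samples 101 recall points, so values can differ slightly. All function names are hypothetical; for Ps/Pm/Pl the same computation would be restricted to objects in one size bucket.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]
Detection = Tuple[Box, float]  # (box, confidence)


def iou(a: Box, b: Box) -> float:
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def size_bucket(box: Box) -> str:
    """COCO-style size class from box area in pixels (assumed convention)."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def average_precision(dets: List[Detection], gts: List[Box], thr: float) -> float:
    """AP for one class at one IoU threshold, all-point interpolation."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    hits, precisions, recalls = 0, [], []
    for k, (box, _) in enumerate(sorted(dets, key=lambda d: d[1], reverse=True), start=1):
        # greedily match the detection to the best still-unmatched ground truth
        best_iou, best_j = 0.0, -1
        for j, g in enumerate(gts):
            o = iou(box, g)
            if not matched[j] and o > best_iou:
                best_iou, best_j = o, j
        if best_j >= 0 and best_iou >= thr:
            matched[best_j] = True
            hits += 1
        precisions.append(hits / k)
        recalls.append(hits / len(gts))
    # make precision non-increasing in recall (the interpolation envelope)
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # area under the stepwise precision-recall curve
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def ap_50_95(dets: List[Detection], gts: List[Box]) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)
```

With two ground truths, a correct top detection, one false positive and then a second correct detection, precision drops to 0.5 before recovering to 2/3, and the sketch returns an AP of 5/6.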

3.3. Training Loss

3.4. Ablation Experiment

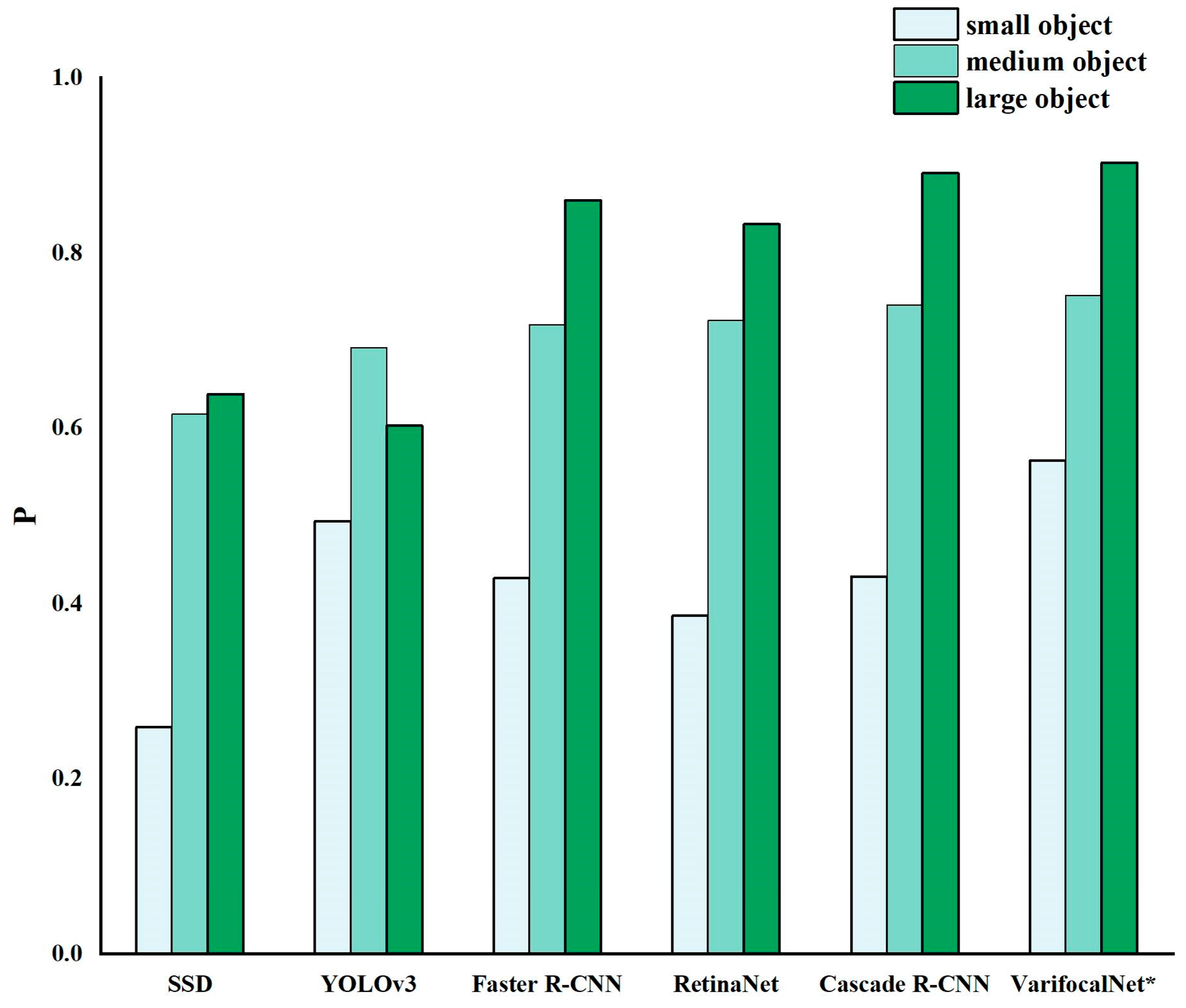

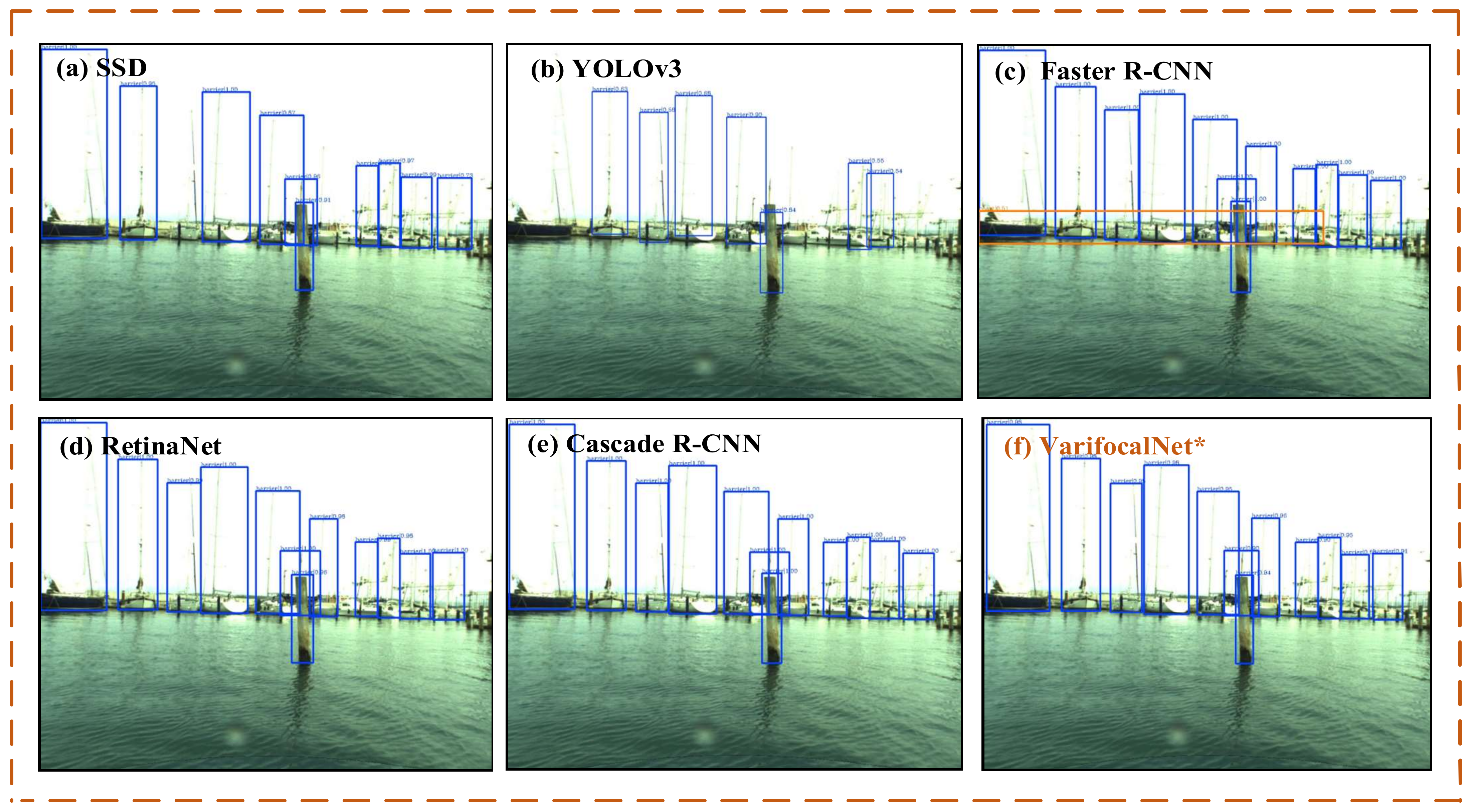

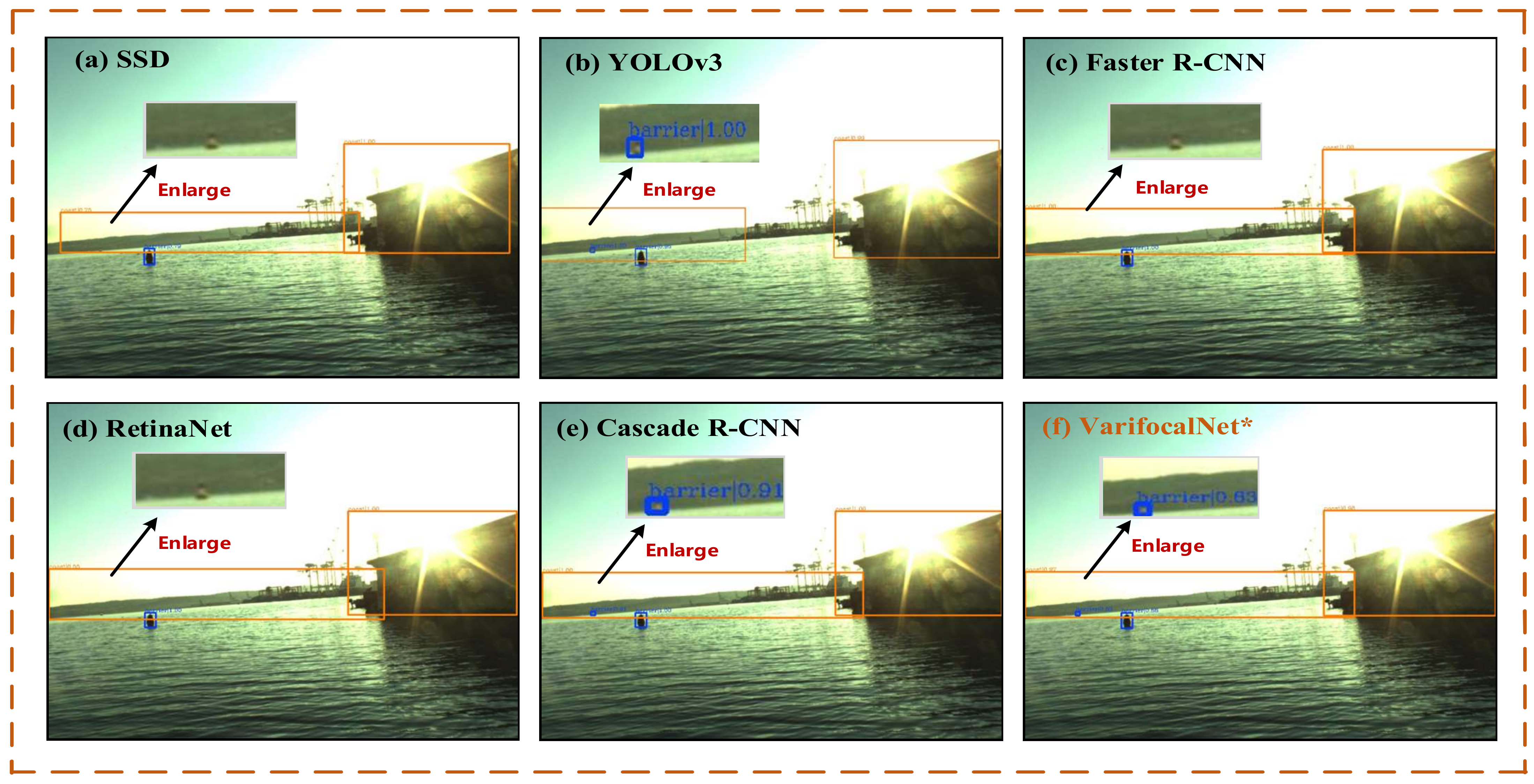

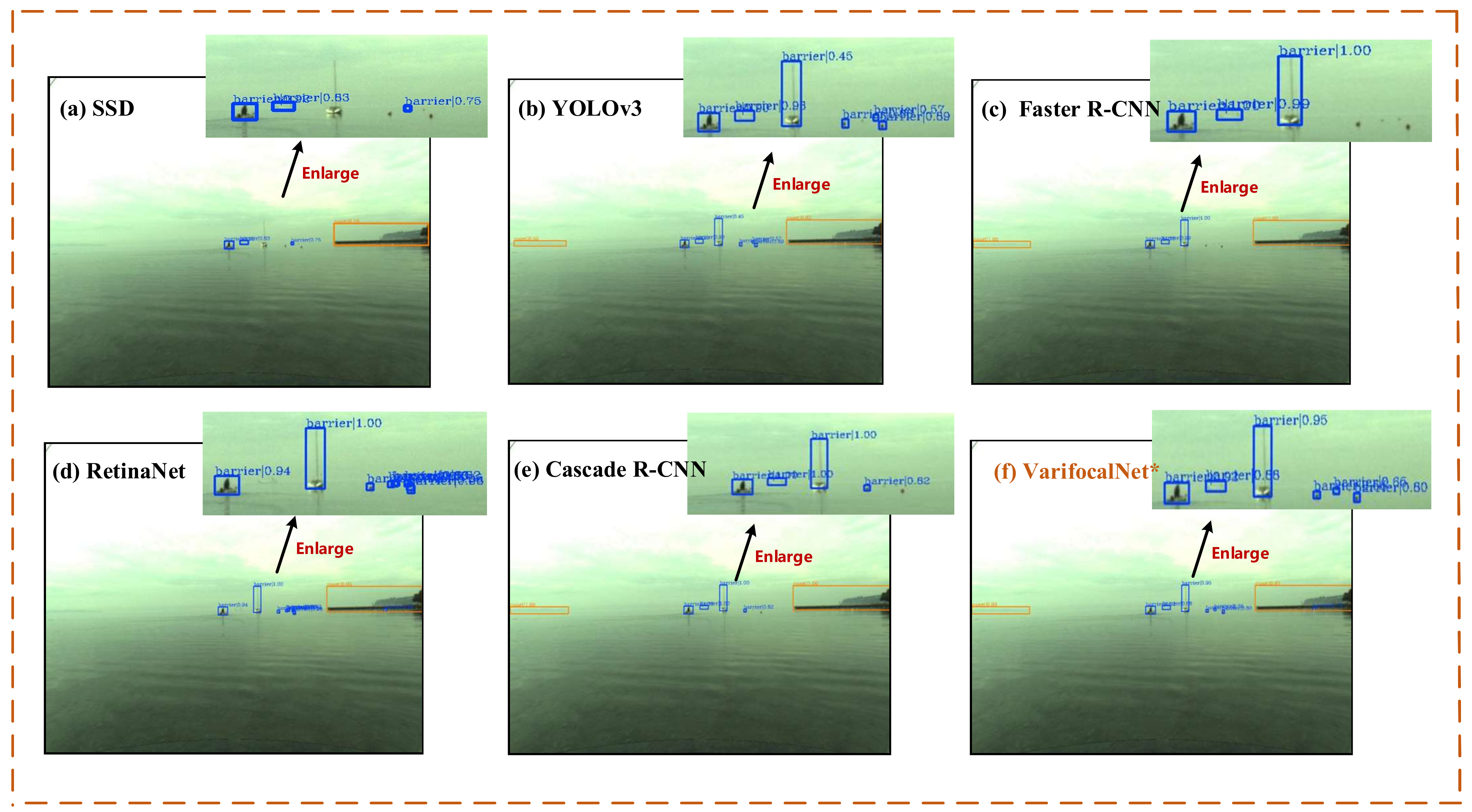

3.5. Comparisons with Other Detection Methods

4. Discussion

4.1. Advantages of the Model

- (1)

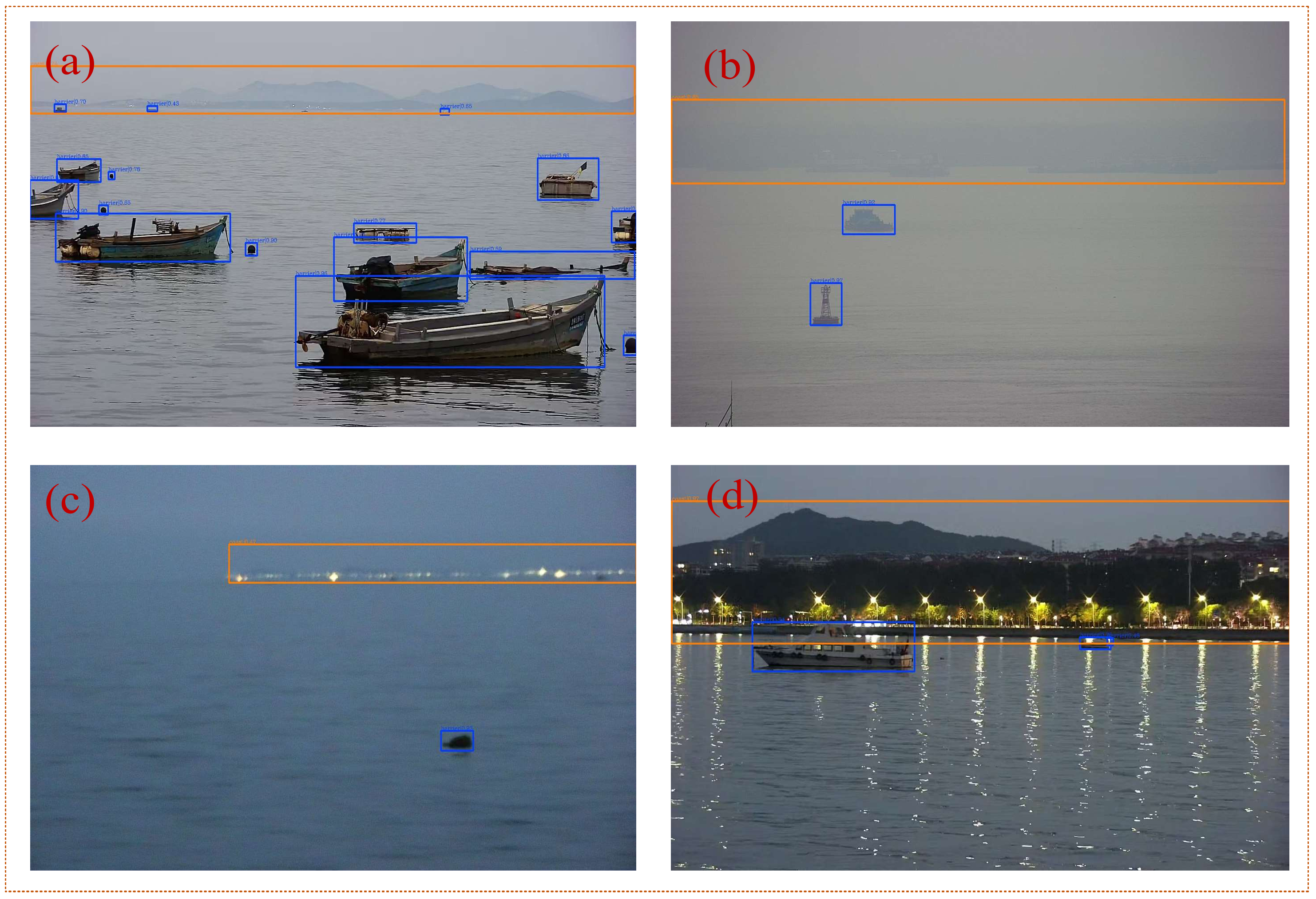

- Adaption to complex maritime environment

- (2)

- Good performance for detecting multi-scale objects

- (3)

- High capability for detecting small objects

4.2. Limitations

5. Summary and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Object Size | Barrier | Coast | Number of Labels | Proportion (%) |
|---|---|---|---|---|
| Large objects | 5290 | 6585 | 11,875 | 40.9 |
| Medium objects | 3736 | 1936 | 5672 | 19.6 |
| Small objects | 11,439 | 13 | 11,452 | 39.5 |
| Total | 20,465 | 8534 | 28,999 | 100.0 |
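As a quick arithmetic check, the Proportion (%) column follows from the label counts: each row total divided by the 28,999 labels overall. The sketch below recomputes it using only the numbers in the table above. It also makes one property of the dataset explicit: almost all small objects (11,439 of 11,452) fall in the Barrier column.

```python
# Label counts (Barrier, Coast) per size class, copied from the table above
counts = {
    "Large objects": (5290, 6585),
    "Medium objects": (3736, 1936),
    "Small objects": (11439, 13),
}

total = sum(b + c for b, c in counts.values())  # all labels in the dataset
proportions = {name: round(100 * (b + c) / total, 1) for name, (b, c) in counts.items()}

barrier_small, coast_small = counts["Small objects"]
small_barrier_share = round(100 * barrier_small / (barrier_small + coast_small), 1)

print(total)                # 28999
print(proportions)          # {'Large objects': 40.9, 'Medium objects': 19.6, 'Small objects': 39.5}
print(small_barrier_share)  # 99.9
```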

| Methods | SNMS | DCN | DIoU | MS | P0.5 | P[0.5:0.95] | Ps | Pm | Pl | R[0.5:0.95] | Rs | Rm | Rl |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| VarifocalNet | × | × | × | × | 0.979 | 0.771 | 0.525 | 0.738 | 0.893 | 0.763 | 0.576 | 0.809 | 0.919 |
| Our methods | √ | × | × | × | 0.979 | 0.779 | 0.536 | 0.746 | 0.901 | 0.819 | 0.603 | 0.819 | 0.936 |
| | √ | √ | × | × | 0.980 | 0.781 | 0.545 | 0.746 | 0.903 | 0.822 | 0.605 | 0.824 | 0.936 |
| | √ | √ | √ | × | 0.981 | 0.783 | 0.548 | 0.749 | 0.903 | 0.823 | 0.613 | 0.826 | 0.937 |
| | √ | √ | √ | √ | 0.986 | 0.789 | 0.563 | 0.752 | 0.903 | 0.837 | 0.650 | 0.829 | 0.940 |

| Detection Network | P0.5 | P[0.5:0.95] | Ps | Pm | Pl | R[0.5:0.95] | Rs | Rm | Rl |
|---|---|---|---|---|---|---|---|---|---|
| SSD | 0.863 | 0.493 | 0.259 | 0.616 | 0.639 | 0.575 | 0.380 | 0.676 | 0.702 |
| YOLOv3 | 0.937 | 0.528 | 0.494 | 0.692 | 0.603 | 0.639 | 0.595 | 0.747 | 0.681 |
| Faster R-CNN | 0.870 | 0.689 | 0.429 | 0.718 | 0.860 | 0.722 | 0.451 | 0.777 | 0.894 |
| RetinaNet | 0.962 | 0.704 | 0.386 | 0.723 | 0.833 | 0.764 | 0.577 | 0.777 | 0.872 |
| Cascade R-CNN | 0.910 | 0.742 | 0.431 | 0.741 | 0.891 | 0.768 | 0.463 | 0.792 | 0.921 |
| VarifocalNet | 0.979 | 0.771 | 0.525 | 0.738 | 0.893 | 0.763 | 0.576 | 0.809 | 0.919 |
| VarifocalNet * | 0.986 | 0.789 | 0.563 | 0.752 | 0.903 | 0.837 | 0.650 | 0.829 | 0.940 |

| Methods | SNMS | DIoU | DCN | MS | Model Parameters (M) | Inference Time (ms) |
|---|---|---|---|---|---|---|
| VarifocalNet | × | × | × | × | 32.49 | 45 |
| Our methods | √ | × | × | × | 32.49 | 45 |
| | √ | √ | × | × | 32.49 | 45 |
| | √ | √ | √ | × | 33.07 | 48 |
| | √ | √ | √ | √ | 33.07 | 91 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shao, Z.; Lyu, H.; Yin, Y.; Cheng, T.; Gao, X.; Zhang, W.; Jing, Q.; Zhao, Y.; Zhang, L. Multi-Scale Object Detection Model for Autonomous Ship Navigation in Maritime Environment. J. Mar. Sci. Eng. 2022, 10, 1783. https://doi.org/10.3390/jmse10111783
