Stereo Visual Odometry and Real-Time Appearance-Based SLAM for Mapping and Localization in Indoor and Outdoor Orchard Environments

Abstract

1. Introduction

2. Materials and Methods

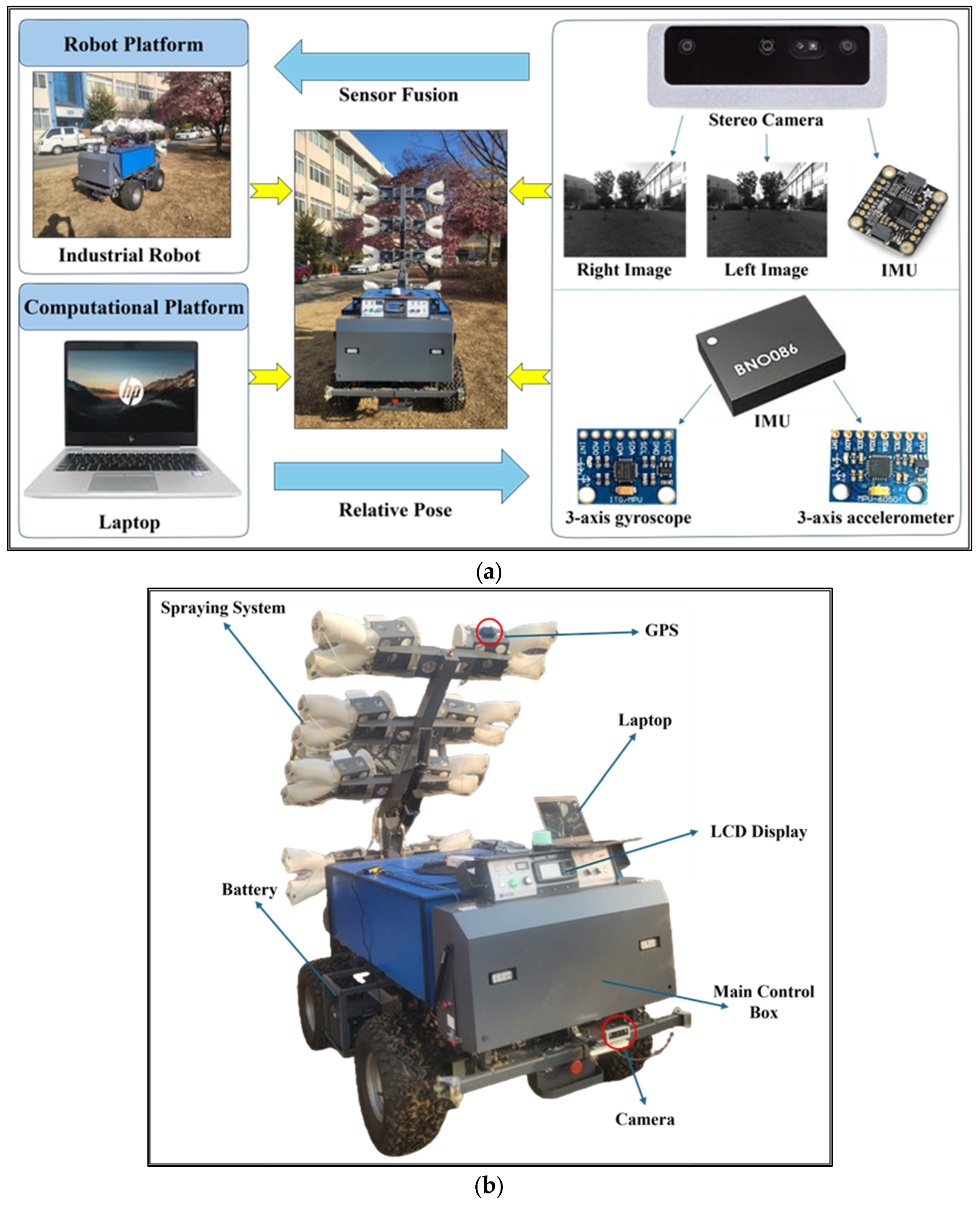

2.1. System Hardware Components

2.2. Stereo Visual Odometry

2.2.1. Stereo Image Rectification

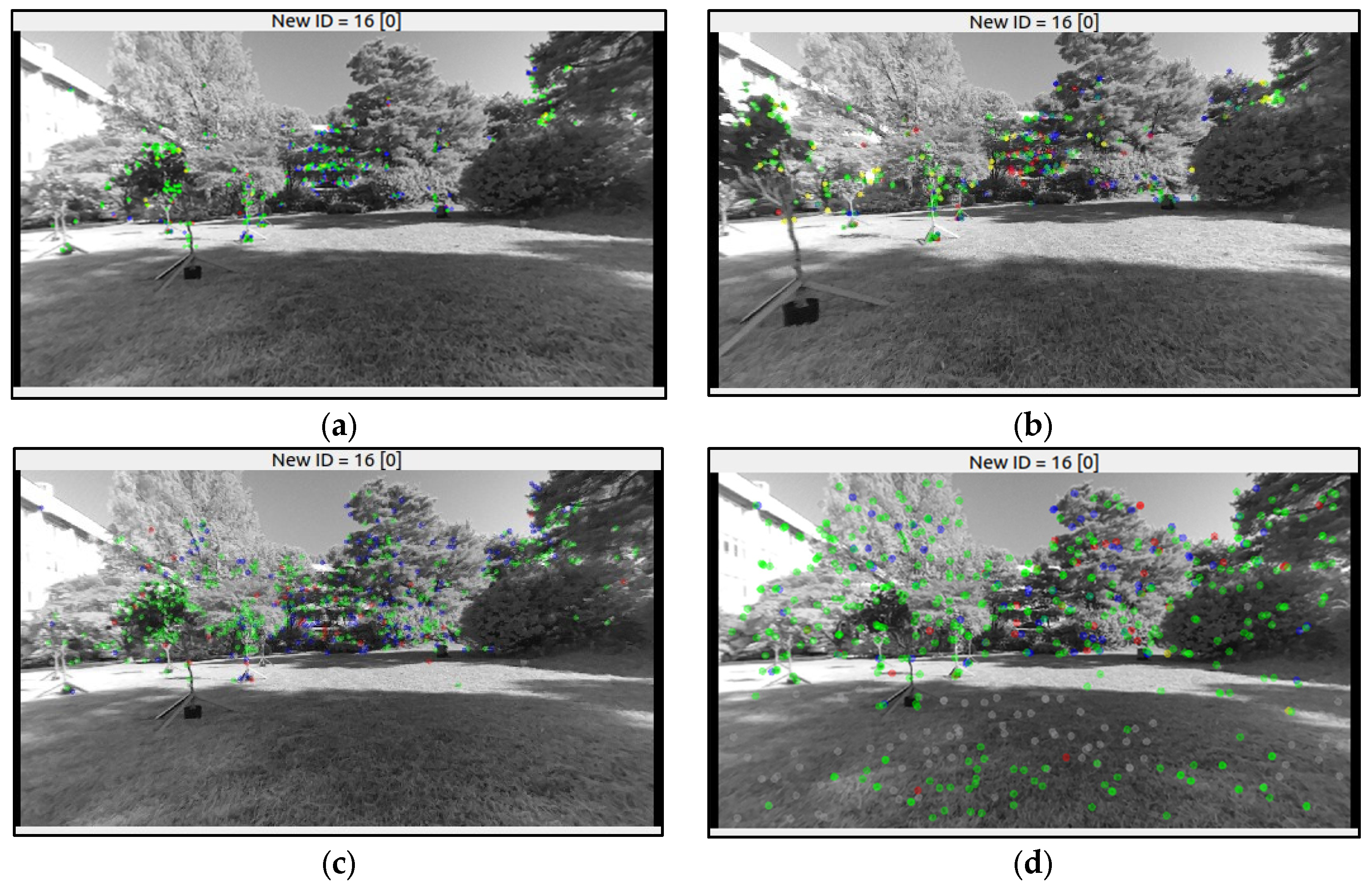

2.2.2. Feature Detection

2.2.3. Optical Flow Estimation and Stereo Image Matching

2.3. IMU Integration

2.3.1. IMU Integration in Visual Odometry

2.3.2. IMU Integration in SLAM

2.4. Dense 3D Point Cloud Generation and Mapping

2.5. Overall SLAM Framework

2.6. Experimental Setup

3. Results

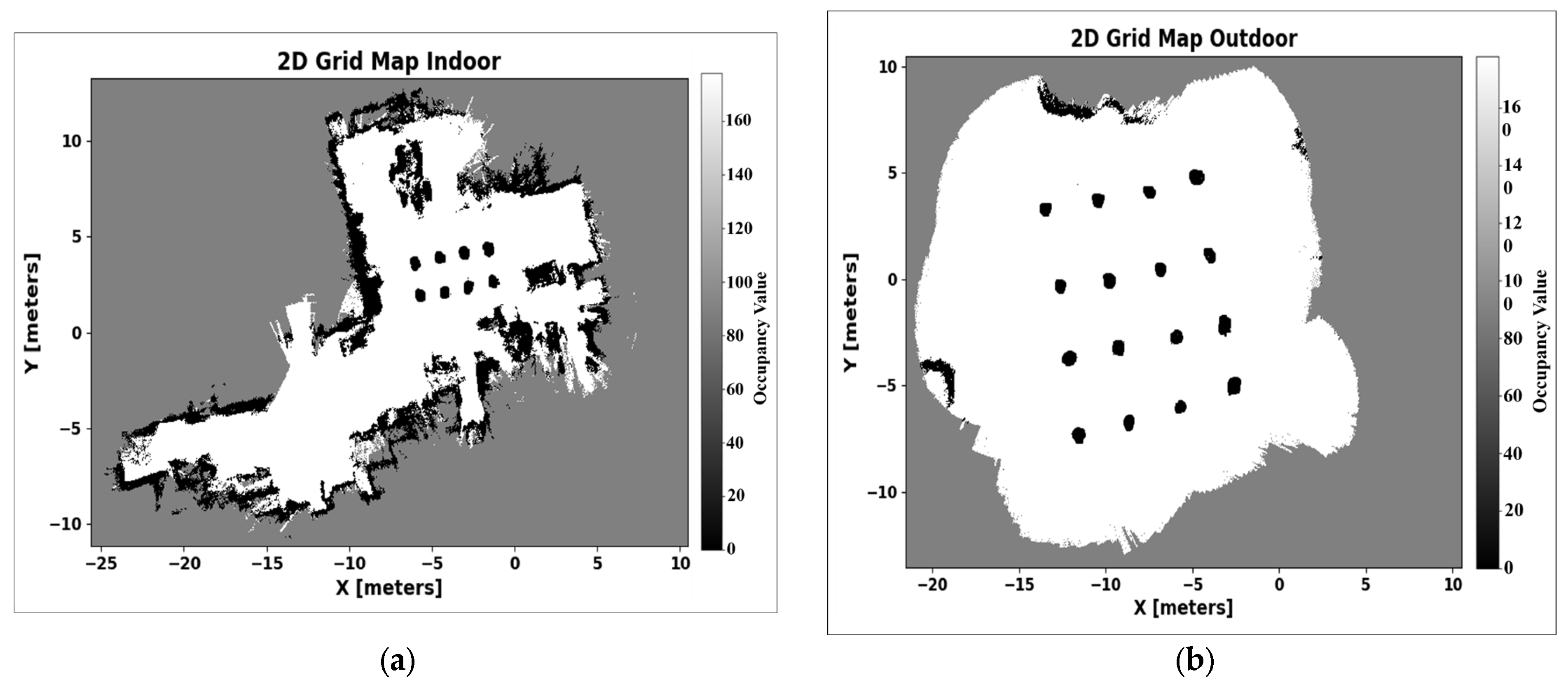

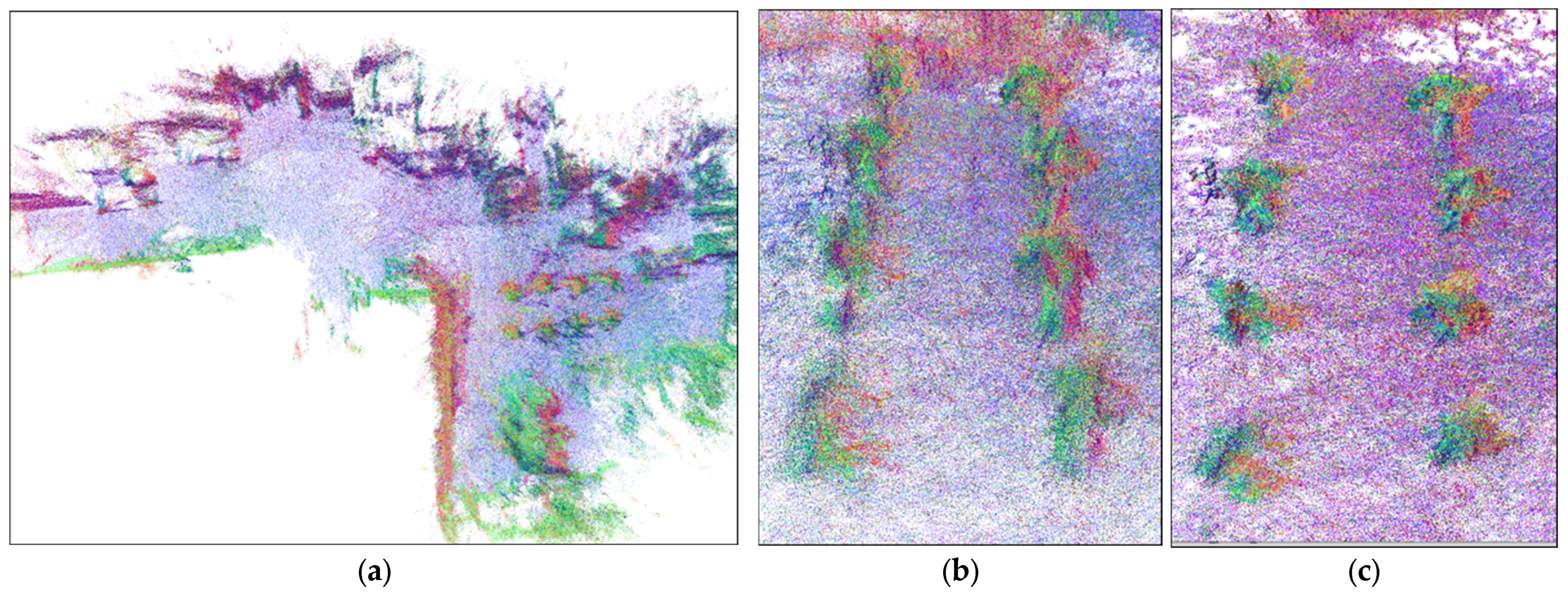

3.1. Mapping Results for the Indoor and Outdoor Environments

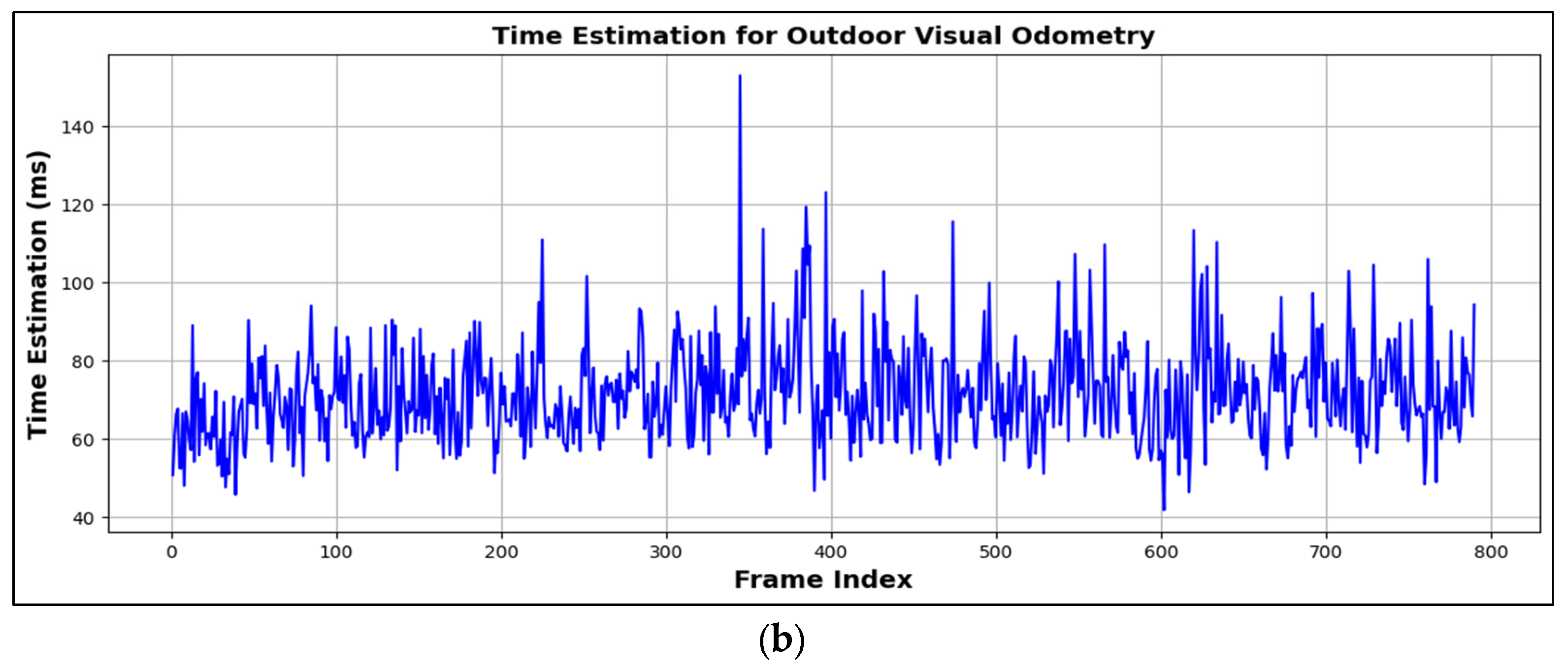

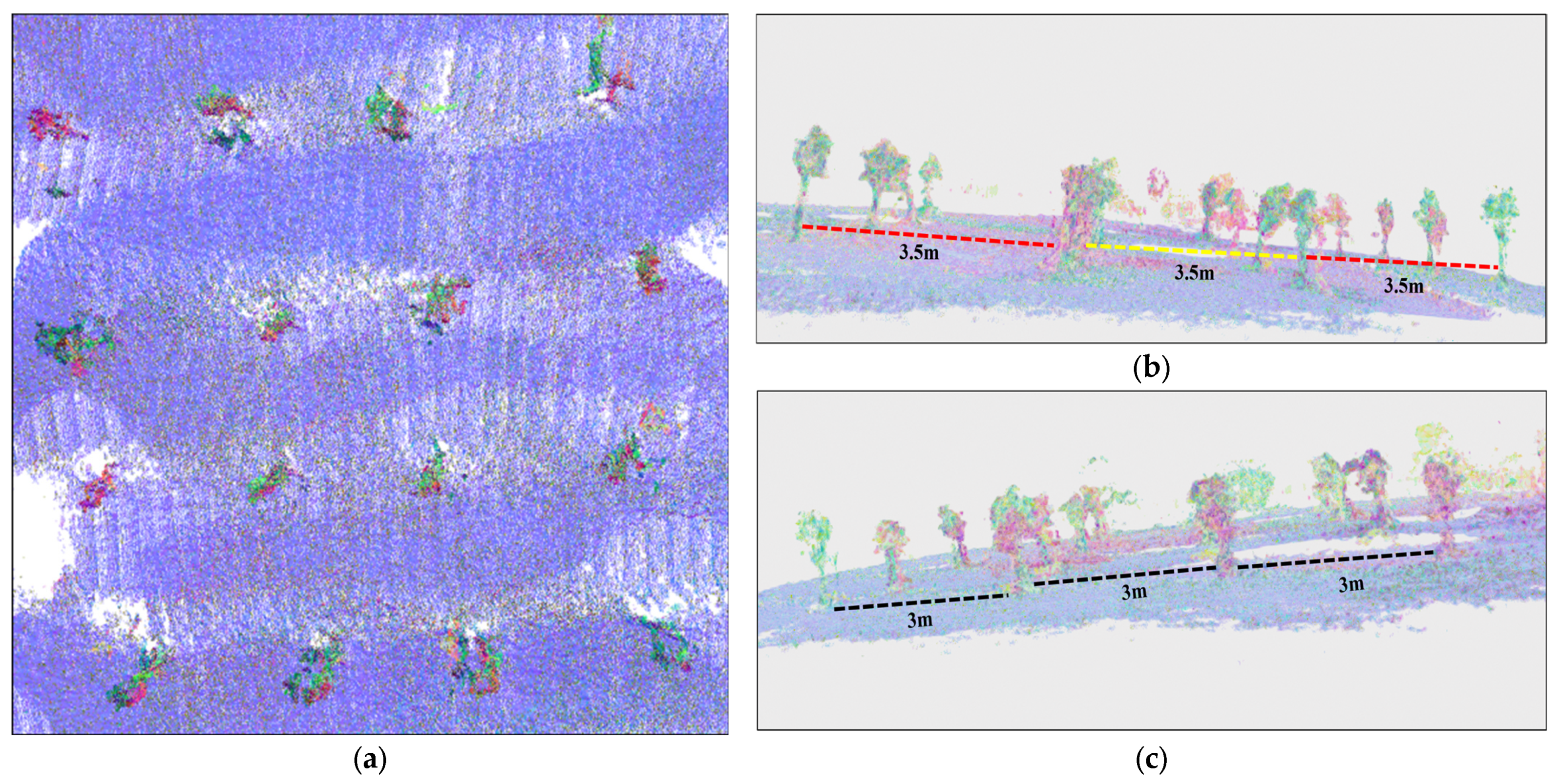

3.2. Mapping Accuracy Analysis

3.3. Three-Dimensional Dense Point Cloud Processing

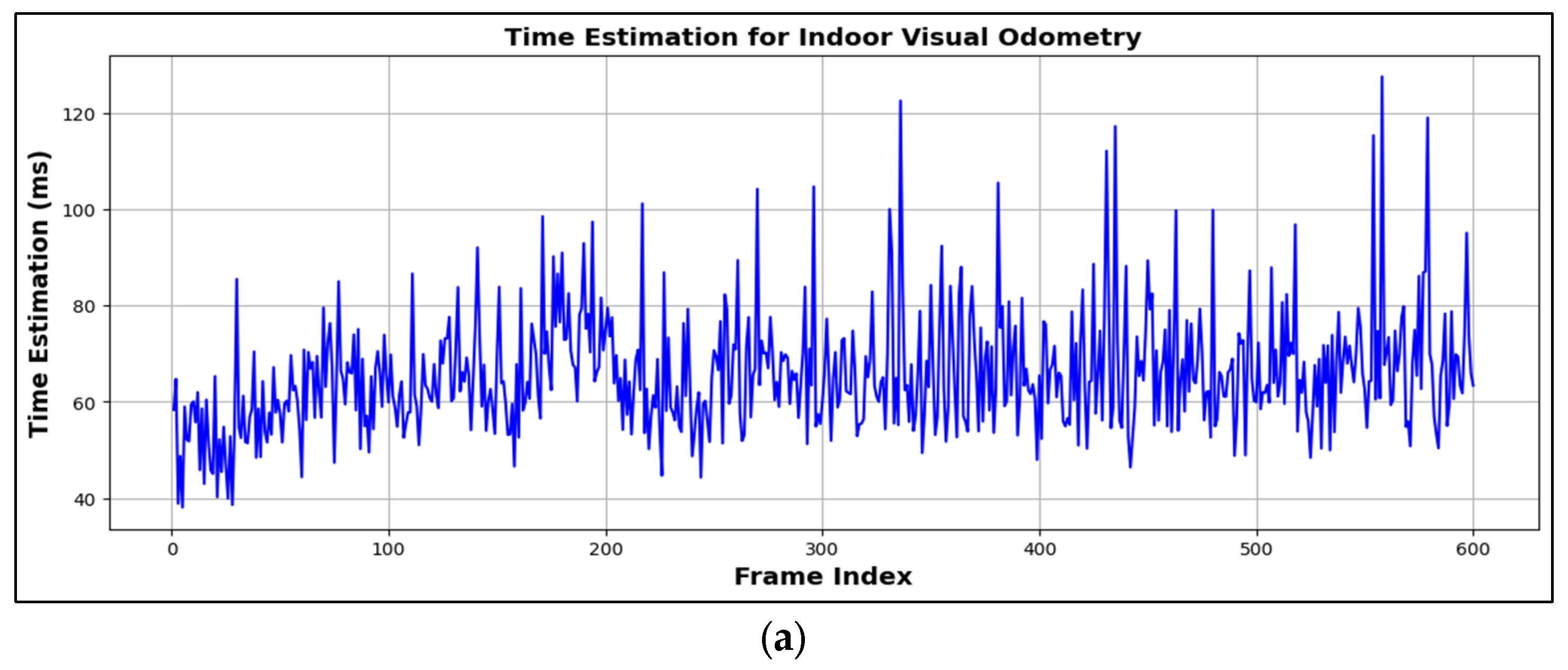

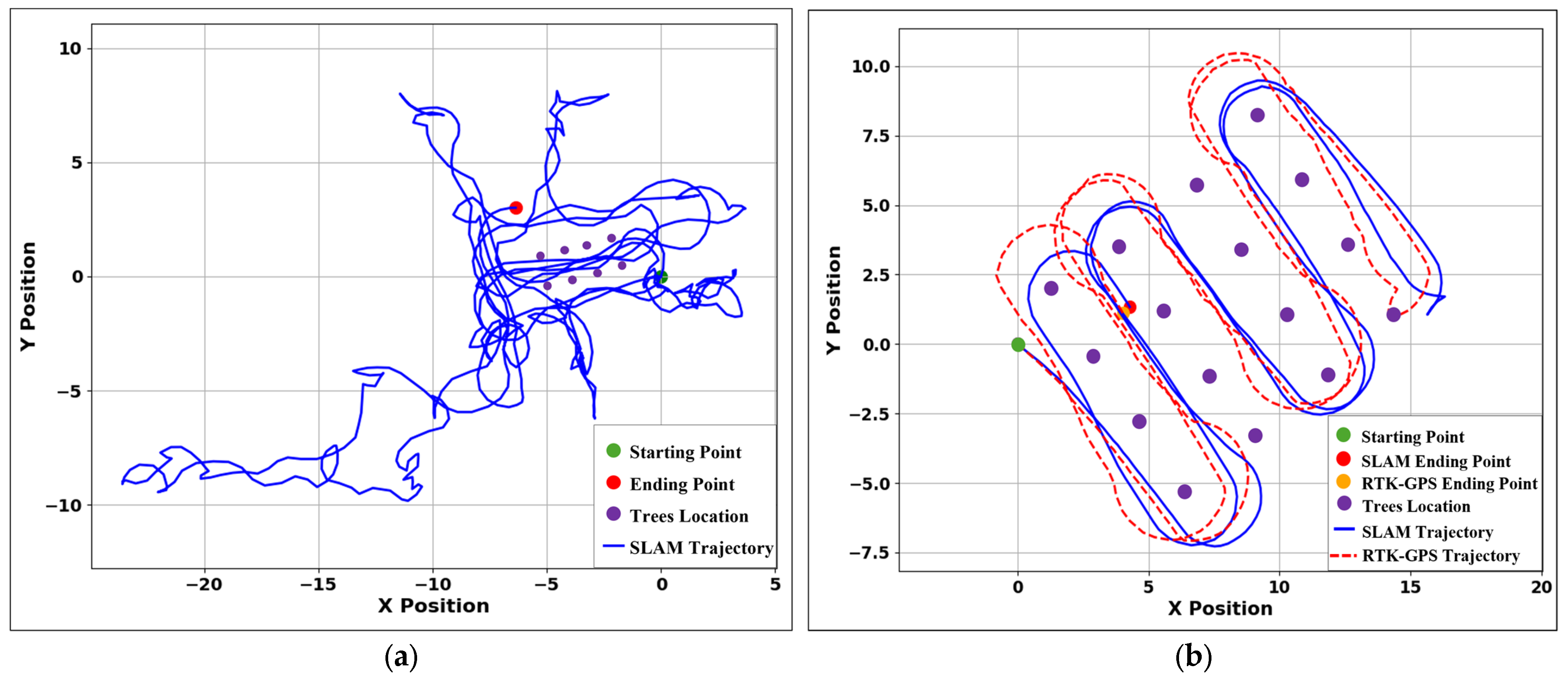

3.4. Visual Odometry Trajectory and Time Estimation

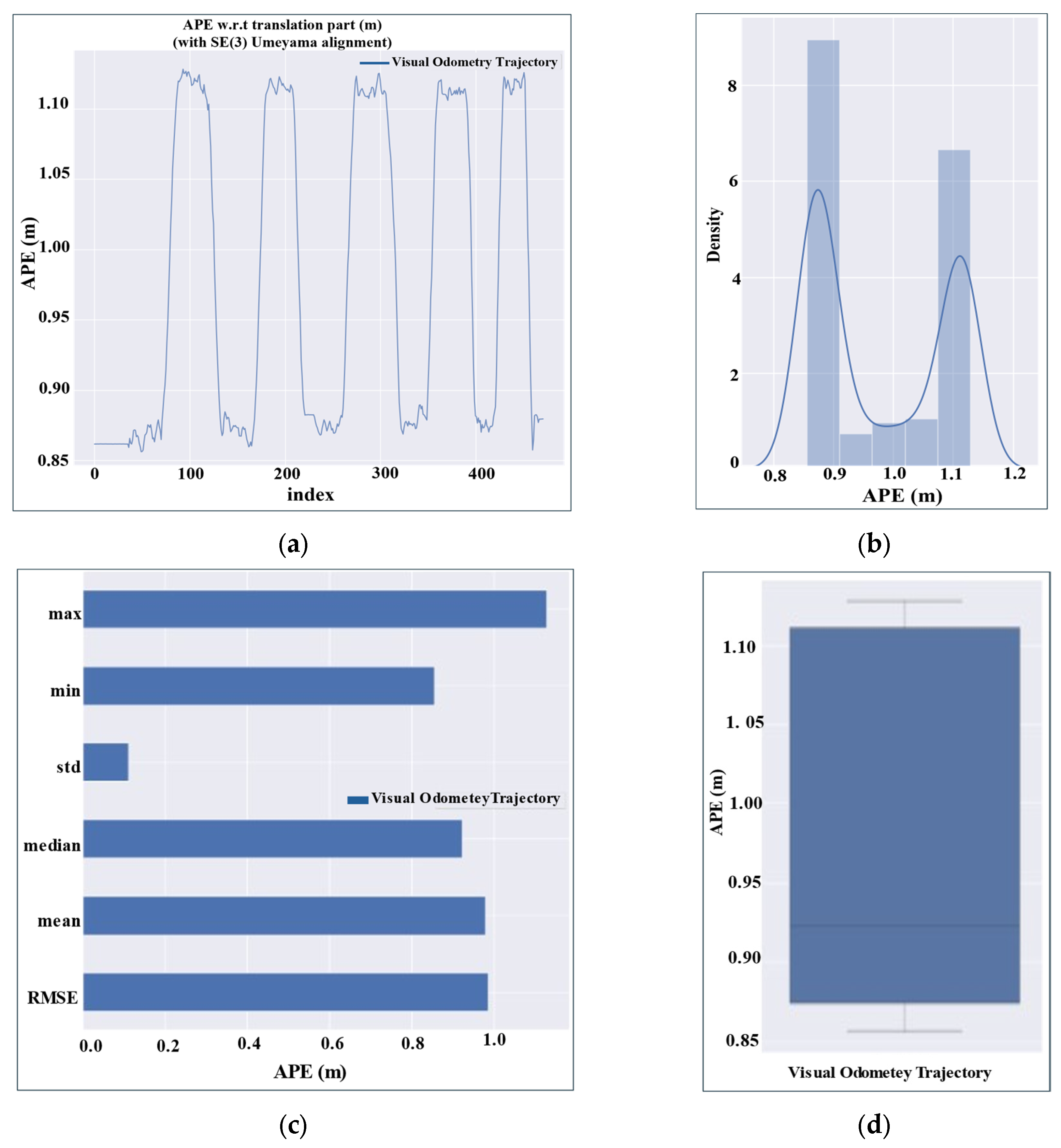

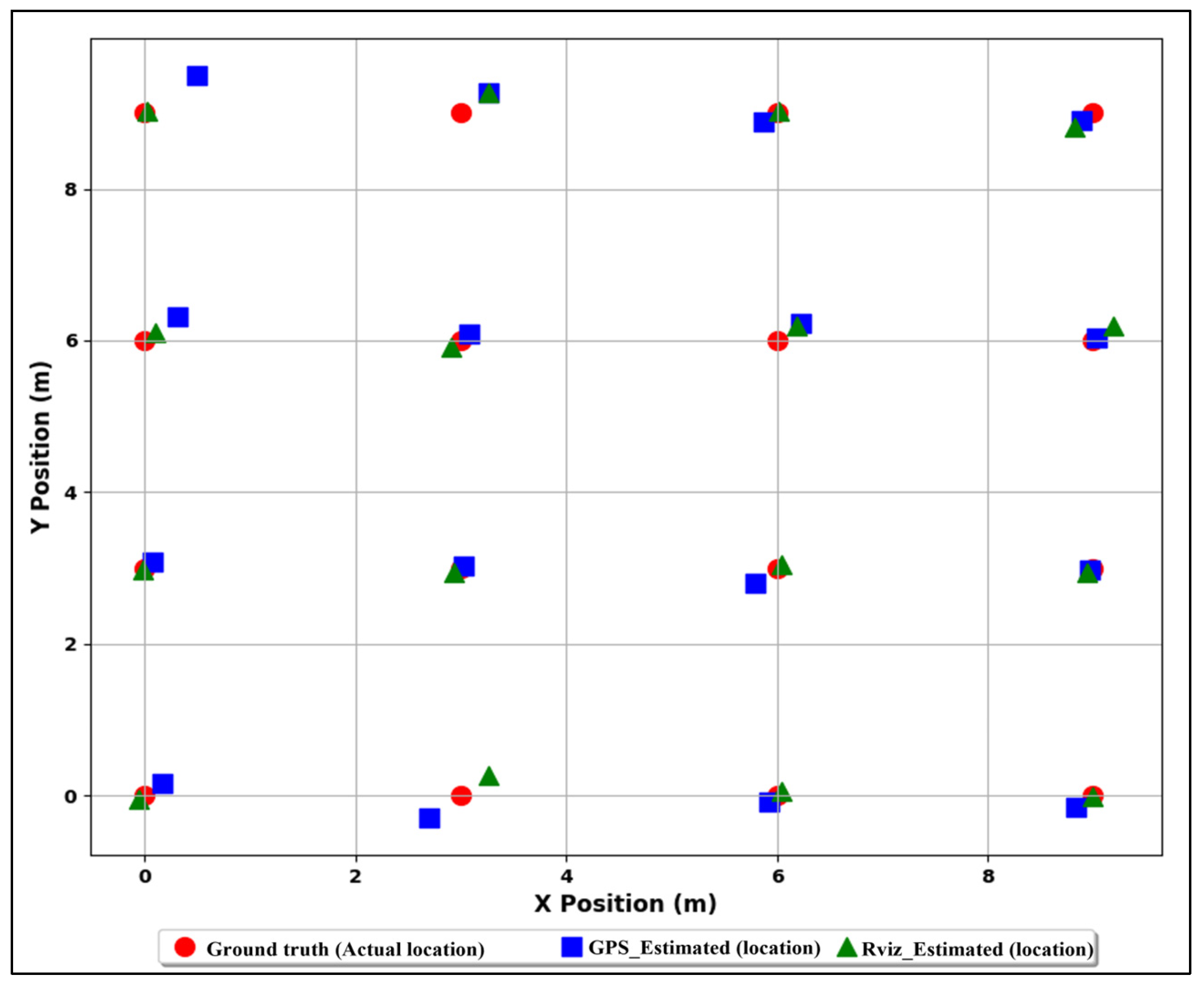

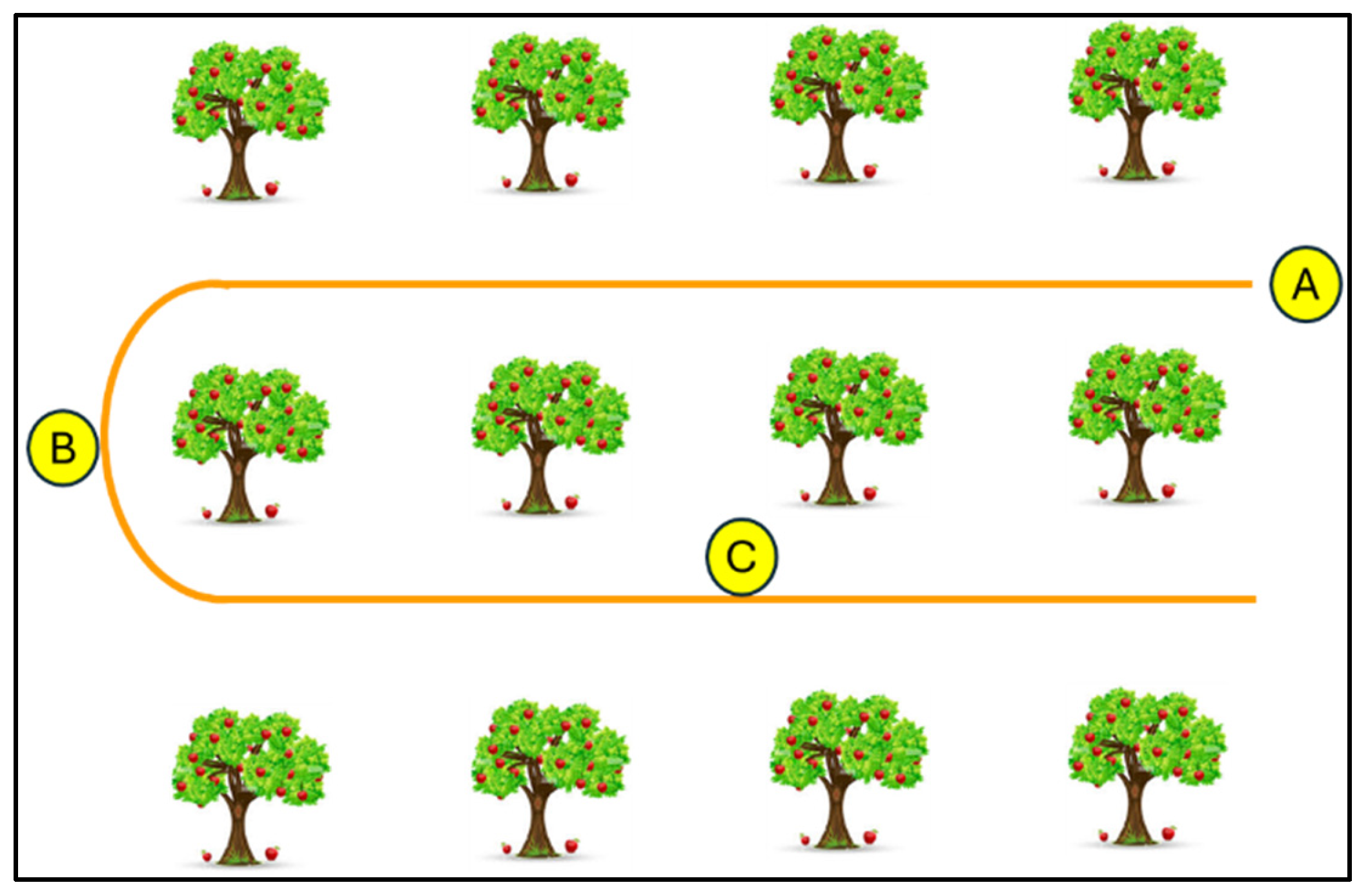

3.5. Localization Accuracy Test

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SLAM | Simultaneous Localization and Mapping |
| GPS | Global Positioning System |
| RTAB-Map | Real-Time Appearance-Based Mapping |
| IMU | Inertial Measurement Unit |
| CAN | Controller Area Network |
| FOV | Field of View |
| DFOV | Diagonal Field of View |
| HFOV | Horizontal Field of View |
| VFOV | Vertical Field of View |
| ORB | Oriented FAST and Rotated BRIEF |
| 6-DOF | Six Degrees of Freedom |
| RTK | Real-Time Kinematic |
| MAE | Mean Absolute Error |
| RMSE | Root Mean Square Error |
| APE | Absolute Pose Error |
| SSE | Sum of Squared Errors |
| RANSAC | RANdom SAmple Consensus |
| ROS | Robot Operating System |
| GTSAM | Georgia Tech Smoothing and Mapping |

Appendix A


| Camera Module | Calibration Type | Error Metric | Error Value (cm) |
|---|---|---|---|
| RGB | Intrinsic Parameter Optimization | Reprojection Error | 1.589 |
| Left Camera | Intrinsic Parameter Optimization | Reprojection Error | 0.157 |
| Right Camera | Intrinsic Parameter Optimization | Reprojection Error | 0.175 |
| Left–Right Stereo | Extrinsic Parameter Refinement | Average Epipolar Error | 0.246 |
| Right–RGB Stereo | Extrinsic Parameter Refinement | Average Epipolar Error | 0.225 |

| Industrial Robot Parameter | Value | Stereo-Depth Camera Parameter | Value |
|---|---|---|---|
| Dimension | 2397 × 1383 × 2841 mm | Dimension | 111 × 31.3 × 40 mm |
| Weight | 700 kg | Range | 0–10 m |
| Payload | 500 L | DFOV/HFOV/VFOV | 150°/127°/79.5° |
| Maximum Speed | 5.3 km/h | Stereo Frame Resolution | 120 FPS at 1280 × 800 Pixels |
| Maximum Speed Rotation | 8000 rpm | Depth Accuracy | <2% at 4 m, <4% at 6.5 m and <6% at 9 m |
| Battery | 48 V Rechargeable | Integrated IMU | 9-axis BNO085 |

| Measurement Tool | Environment | Average Error (m) | Error Consistency (m) | Smallest Error (m) | Largest Error (m) |
|---|---|---|---|---|---|
| GPS_Estimated | Outdoor | 0.063 | 0.208 | −0.295 | 0.495 |
| Rviz_Estimated | Outdoor | 0.018 | 0.147 | −0.18 | 0.34 |
| Rviz_Estimated | Indoor | 0.03 | 0.15 | −0.08 | 0.41 |

| Environment | Total Points | Points After Voxel Filtering | Points After Outlier Removal | Points for Trees | Points for Other Objects | Average Points per Tree |
|---|---|---|---|---|---|---|
| Outdoor | 2,119,222 | 2,046,906 | 1,877,329 | 63,669 | 4630 | 4086 |
| Indoor | 3,065,270 | 2,944,767 | 2,669,318 | 84,769 | 449,376 | 11,995 |
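The "Points After Voxel Filtering" column refers to voxel-grid downsampling of the dense point cloud. The following is a minimal pure-Python sketch of that idea, assuming each occupied voxel is replaced by the centroid of its points. The function names `voxel_downsample` and `retention` and the voxel size used are illustrative assumptions, not the authors' implementation or parameters.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid.

    points: iterable of (x, y, z) tuples in metres.
    voxel_size: voxel edge length in metres (hypothetical value, not from the paper).
    """
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    # One centroid per occupied voxel, in first-seen voxel order.
    return [
        tuple(sum(axis) / len(group) for axis in zip(*group))
        for group in buckets.values()
    ]


def retention(points_after, points_before):
    """Fraction of points kept by a filtering stage."""
    return points_after / points_before


# Toy cloud: two nearby points share a voxel, the third is far away.
toy = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (1.0, 1.0, 1.0)]
reduced = voxel_downsample(toy, voxel_size=0.05)

# Stage-by-stage retention using the outdoor counts from the table above.
outdoor_voxel_keep = retention(2_046_906, 2_119_222)
outdoor_outlier_keep = retention(1_877_329, 2_046_906)
```

Applied to the outdoor row, the voxel filter keeps roughly 96.6% of the points and outlier removal keeps roughly 91.7% of what remains, so most of the reduction comes from the later tree and object segmentation rather than from these two filters.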

| Metric | Max. Error (m) | Mean Error (m) | Median Error (m) | Min. Error (m) | RMSE (m) | SSE (m²) | Standard Deviation (m) |
|---|---|---|---|---|---|---|---|
| Value | 1.1281 | 0.0979 | 0.9222 | 0.8561 | 0.9854 | 458.3559 | 0.1113 |
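The error statistics in this table are linked by two standard identities: RMSE² = mean² + std² (for the population standard deviation) and SSE = n · RMSE². The sketch below shows how such a summary could be computed from per-pose errors and how the identities can be used as a consistency check on the tabulated values. The helper names are illustrative, and using the population form of the standard deviation is an assumption, since the source does not say which form was used.

```python
import math
import statistics


def ape_summary(errors):
    """Summarise per-pose absolute position errors given in metres."""
    sse = sum(e * e for e in errors)
    return {
        "max": max(errors),
        "mean": statistics.fmean(errors),
        "median": statistics.median(errors),
        "min": min(errors),
        "rmse": math.sqrt(sse / len(errors)),
        "sse": sse,
        "std": statistics.pstdev(errors),  # population form (assumption)
    }


def implied_mean(rmse, std):
    """Mean implied by RMSE^2 = mean^2 + std^2."""
    return math.sqrt(rmse ** 2 - std ** 2)


def implied_sample_count(sse, rmse):
    """Number of poses implied by SSE = n * RMSE^2."""
    return sse / rmse ** 2


# Consistency check using the RMSE, standard deviation, and SSE from the table above.
table_mean_check = implied_mean(rmse=0.9854, std=0.1113)
table_n_check = implied_sample_count(sse=458.3559, rmse=0.9854)
```

With the tabulated RMSE and standard deviation, the implied mean is about 0.979 m, which lies between the reported minimum (0.8561 m) and maximum (1.1281 m). The tabulated mean of 0.0979 m does not lie in that range, so it may reflect a decimal-place slip in the source. The implied sample count is about 472 poses.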

| Testing Date | Testing Time | Speed (m/s) | Localization Status: A | Localization Status: B | Localization Status: C |
|---|---|---|---|---|---|
| 22 January 2025 | Morning | 0.3 | ✓ | ✓ | ✓ |
| 22 January 2025 | Morning | 0.5 | ✓ | ✓ | ✓ |
| 22 January 2025 | Morning | 1.0 | ✓ | ✓ | ✓ |
| 22 January 2025 | Evening | 0.3 | ✓ | ✓ | ✓ |
| 22 January 2025 | Evening | 0.5 | ✓ | ✖ | ✓ |
| 22 January 2025 | Evening | 1.0 | ✓ | ✖ | ✓ |
| 23 January 2025 | Morning | 0.3 | ✓ | ✓ | ✓ |
| 23 January 2025 | Morning | 0.5 | ✓ | ✓ | ✓ |
| 23 January 2025 | Morning | 1.0 | ✓ | ✖ | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hussain, I.; Han, X.; Ha, J.-W. Stereo Visual Odometry and Real-Time Appearance-Based SLAM for Mapping and Localization in Indoor and Outdoor Orchard Environments. Agriculture 2025, 15, 872. https://doi.org/10.3390/agriculture15080872
