A Unmanned Aerial Vehicle-Based Image Information Acquisition Technique for the Middle and Lower Sections of Rice Plants and a Predictive Algorithm Model for Pest and Disease Detection

Abstract

1. Introduction

1.1. Research Background

1.2. Development Status of Farmland Image Information Acquisition Technology

1.3. Limitations of Existing UAV and Disease Detection Methods

- (1) Single acquisition area: Most current UAV systems focus on acquiring rice canopy images, whereas rice disease occurs mainly in the middle and lower parts of the plant, so disease monitoring results are incomplete.

- (2) Insufficient image quality and stability: Limited by equipment performance and the flight environment, captured images are susceptible to factors such as illumination and wind speed, so image stability and clarity are low.

- (3) Limitations of the algorithm in small target detection: The lesions of rice false smut are small, densely distributed, and close to the background in color, and traditional target detection algorithms such as Faster R-CNN and SSD perform poorly on small targets.

- (4) Lack of data fusion and system optimization: RGB images alone cannot capture the early spectral characteristics of rice disease, and existing systems do not make full use of infrared, hyperspectral, and other multi-source data.

1.4. Research Objectives and Contents

- (1) The UAV was equipped with an automatic telescopic rod and a 360° automatic turntable to acquire multi-angle images of the middle and lower areas of the plants. An HD RGB camera, an infrared camera, and a hyperspectral camera were integrated to provide multi-dimensional data support.

- (2) A UAV flight path was designed using a path planning algorithm combined with an ant colony algorithm to improve collection efficiency and coverage.

- (3) RGB, infrared, and hyperspectral data were aligned and fused using a deep learning algorithm.

- (4) An improved YOLOv8 target detection model and a lightweight Transformer network were proposed for small target detection of rice false smut. A feature pyramid network and an attention mechanism were combined to improve detection accuracy and stability.

- (5) Field experiments were conducted to verify the image acquisition performance of the system and the performance of the rice disease detection model.
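
The ant colony step in objective (2) can be illustrated with a basic ant system that orders a set of sampling waypoints into a short closed tour. This is a minimal sketch, not the planner used in the study: the waypoint coordinates, the parameter values (`n_ants`, `alpha`, `beta`, `evaporation`), and the function names are all assumptions introduced for illustration, and the waypoints are assumed to be distinct.

```python
import math
import random


def tour_length(points, tour):
    """Length of the closed tour that visits points in the given order."""
    return sum(
        math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def ant_colony_tour(points, n_ants=10, n_iters=50, alpha=1.0, beta=2.0,
                    evaporation=0.5, seed=0):
    """Return (best_tour, best_length) found by a basic ant system.

    All parameter defaults are illustrative assumptions, not values
    reported in the paper. Waypoints must be distinct.
    """
    rng = random.Random(seed)
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    pheromone = [[1.0] * n for _ in range(n)]
    best_tour, best_len = None, float("inf")

    for _ in range(n_iters):
        tours = []
        for _ in range(n_ants):
            tour = [rng.randrange(n)]
            unvisited = set(range(n)) - {tour[0]}
            while unvisited:
                i = tour[-1]
                candidates = sorted(unvisited)
                # Attractiveness: pheromone^alpha * (1 / distance)^beta
                weights = [
                    pheromone[i][j] ** alpha * (1.0 / dist[i][j]) ** beta
                    for j in candidates
                ]
                nxt = rng.choices(candidates, weights=weights)[0]
                tour.append(nxt)
                unvisited.remove(nxt)
            length = tour_length(points, tour)
            tours.append((tour, length))
            if length < best_len:
                best_tour, best_len = tour, length

        # Evaporate old pheromone, then deposit in proportion to tour quality
        for i in range(n):
            for j in range(n):
                pheromone[i][j] *= 1.0 - evaporation
        for tour, length in tours:
            for k in range(n):
                a, b = tour[k], tour[(k + 1) % n]
                pheromone[a][b] += 1.0 / length
                pheromone[b][a] += 1.0 / length

    return best_tour, best_len


# Hypothetical waypoints at the corners of a 1 m x 1 m plot
waypoints = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
best_tour, best_len = ant_colony_tour(waypoints)
```

Shorter edges and edges used by good tours accumulate pheromone and are chosen more often, which is how the search concentrates on efficient coverage paths.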

1.5. Technical Architecture and Innovation Points

2. Drone Monitoring and Forecasting System for Rice Disease

2.1. YOLOv8 Model Introduction

2.1.1. Anchor-Free Mechanism

- (1) The process of anchor box generation and matching is eliminated, which significantly reduces the number of parameters and the computational complexity of the model;

- (2) The anchor-free mechanism is particularly suitable for detecting small targets such as rice disease spots, because it avoids the missed detections and reduced precision caused by mismatched anchor boxes;

- (3) The model does not depend on preset anchor box sizes, so it adapts to a wider range of target shapes and scales.
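
To make the anchor-free idea concrete, the sketch below decodes a box directly from a grid cell: the cell centre acts as the reference point and the network output is read as four distances to the box sides. This is one common anchor-free formulation and is given only as an assumption; the function name, argument layout, and example numbers are hypothetical and not taken from the paper.

```python
def decode_anchor_free(cell_x, cell_y, ltrb, stride):
    """Convert per-cell side distances into an (x1, y1, x2, y2) box in pixels.

    cell_x, cell_y: integer grid coordinates of the predicting cell.
    ltrb: predicted distances (left, top, right, bottom) in grid units.
    stride: downsampling factor of the feature map relative to the input.
    """
    cx = cell_x + 0.5  # reference point at the cell centre
    cy = cell_y + 0.5
    left, top, right, bottom = ltrb
    return (
        (cx - left) * stride,
        (cy - top) * stride,
        (cx + right) * stride,
        (cy + bottom) * stride,
    )


# A small lesion predicted from cell (10, 20) on a stride-8 feature map
box = decode_anchor_free(10, 20, (1.0, 0.5, 1.0, 0.5), stride=8)
```

No preset box sizes appear anywhere in the decoding, which is why the same head can fit lesions of arbitrary shape and scale.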

2.1.2. Multi-Scale Feature Fusion

2.1.3. Lightweight Design

- (1) The weights of the convolutional kernels are dynamically adjusted to improve the efficiency of feature extraction.

- (2) Modules such as the CBAM (Convolutional Block Attention Module) are combined to weight channel and spatial features, further reducing redundant computation.

- (3) YOLOv8 is available at several model scales (Nano, Small, Medium, Large), and the appropriate version can be chosen according to the requirements of the scene. In this study, the YOLOv8-Small model was selected; it has a low parameter count and computational cost and is suitable for real-time deployment in UAV rice field monitoring.

2.1.4. Integrated Framework of Training and Inference

2.1.5. Model Optimization in This Study

- (1) The parameter configuration of the prediction head was adjusted to make it more suitable for bounding box prediction of small targets such as rice disease spots.

- (2) The CBAM was introduced to better distinguish rice stalk lesion features from the background, and the fusion of high-level and low-level features was strengthened to improve detection accuracy in complex scenes.

- (3) The width and depth of the network were adjusted to reduce computing costs while maintaining efficient detection performance; using a smaller convolution kernel (e.g., a 3 × 3 convolution instead of a 5 × 5 convolution) further reduced the number of parameters.

- (4) Through these optimizations, the overall detection performance of the model was significantly improved, making it more suitable for the real-time monitoring needs of the UAV platform.
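
The saving from the smaller kernel in item (3) follows from the parameter count of a standard convolution, which grows with the square of the kernel size. The short calculation below is a worked example only; the channel count of 64 is an assumed value, not one reported for the model.

```python
def conv_params(c_in, c_out, k, bias=True):
    """Number of learnable parameters in a standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


# Assumed layer with 64 input and 64 output channels
params_5x5 = conv_params(64, 64, 5)  # 64 * 64 * 25 + 64 = 102464
params_3x3 = conv_params(64, 64, 3)  # 64 * 64 * 9 + 64 = 36928
ratio = params_3x3 / params_5x5      # roughly 0.36
```

For equal channel counts, the 3 × 3 layer therefore needs about 36% of the parameters of the 5 × 5 layer, at the cost of a smaller receptive field per layer.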

2.1.6. Advantages of YOLOv8 in Monitoring Rice Disease

2.2. Improved Feature Pyramid (FPN)

2.2.1. Feature Pyramid Network (FPN)

- (1) Downward propagation of high-level features: After upsampling, the high-level features are added pixel by pixel to the low-level features, giving the low-level features richer semantic information.

- (2) Enhancement of low-level features: While retaining detailed information, low-level features become more discriminative by integrating the global semantics of high-level features [23].
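
The top-down step in item (1) can be sketched for a single channel as nearest-neighbour upsampling followed by element-wise addition. This is a simplified illustration: a full FPN also applies 1 × 1 lateral convolutions to match channel counts, which is omitted here, and the function names and toy values are assumptions.

```python
def upsample_nearest_2x(fmap):
    """Nearest-neighbour 2x upsampling of a 2-D feature map (list of rows)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out


def top_down_merge(high, low):
    """Upsample the coarse map and add it element-wise to the fine map."""
    up = upsample_nearest_2x(high)
    if len(up) != len(low) or len(up[0]) != len(low[0]):
        raise ValueError("low-level map must be exactly 2x the high-level map")
    return [[u + v for u, v in zip(up_row, low_row)]
            for up_row, low_row in zip(up, low)]


# Coarse semantic map (1 x 2) merged into a finer detail map (2 x 4)
high_level = [[1.0, 2.0]]
low_level = [[10.0, 10.0, 10.0, 10.0],
             [10.0, 10.0, 10.0, 10.0]]
merged = top_down_merge(high_level, low_level)
```

Each fine-resolution position keeps its own detail value and gains the semantic value of the coarse cell that covers it.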

2.2.2. Convolutional Block Attention Module (CBAM)

- (1) Channel attention module

- (2) Spatial attention module
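
The two modules can be sketched in plain Python following the standard CBAM formulation: channel attention passes average-pooled and max-pooled channel descriptors through a shared two-layer MLP, and spatial attention convolves the stacked channel-wise mean and max maps. The weights `w1`, `w2`, and `kernel` are hypothetical placeholders for learned parameters, and nothing here is taken from the authors' implementation.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat, w1, w2):
    """Per-channel weights. feat: [C][H][W]; w1: [C_hidden][C]; w2: [C][C_hidden]."""
    def mlp(vec):
        hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(max(row) for row in ch) for ch in feat]
    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(feat, kernel):
    """Per-pixel weights. kernel: [2][k][k] over the stacked (mean, max) maps."""
    channels, height, width = len(feat), len(feat[0]), len(feat[0][0])
    mean_map = [[sum(feat[c][y][x] for c in range(channels)) / channels
                 for x in range(width)] for y in range(height)]
    max_map = [[max(feat[c][y][x] for c in range(channels))
                for x in range(width)] for y in range(height)]
    k = len(kernel[0])
    pad = k // 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            s = 0.0
            for m, src in enumerate((mean_map, max_map)):
                for dy in range(k):
                    for dx in range(k):
                        yy, xx = y + dy - pad, x + dx - pad
                        if 0 <= yy < height and 0 <= xx < width:  # zero padding
                            s += kernel[m][dy][dx] * src[yy][xx]
            out[y][x] = sigmoid(s)
    return out


def cbam(feat, w1, w2, kernel):
    """Apply channel attention first, then spatial attention."""
    ca = channel_attention(feat, w1, w2)
    scaled = [[[v * ca[c] for v in row] for row in ch] for c, ch in enumerate(feat)]
    sa = spatial_attention(scaled, kernel)
    return [[[v * sa[y][x] for x, v in enumerate(row)]
             for y, row in enumerate(ch)] for ch in scaled]
```

With all weights set to zero both gates evaluate to 0.5, so the output is the input scaled by 0.25; trained weights instead raise the gate on lesion-bearing channels and positions and lower it on background.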

2.2.3. Synergistic Effect of FPN and CBAM

2.3. Selection of Activation Function (SiLU)

2.3.1. Definition and Mathematical Expression of SiLU Activation Function

- (1) SiLU provides a smooth nonlinear mapping across different input ranges, which gives the network stronger expressive ability.

- (2) The SiLU function is continuously differentiable over its entire domain, which stabilizes gradient backpropagation and helps accelerate model optimization.
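
For reference, the standard definition of SiLU and its first derivative are written out below, with σ denoting the logistic sigmoid. These are the textbook expressions rather than equations copied from the paper.

```latex
\mathrm{SiLU}(x) = x\,\sigma(x) = \frac{x}{1 + e^{-x}},
\qquad
\sigma(x) = \frac{1}{1 + e^{-x}}

\frac{d}{dx}\,\mathrm{SiLU}(x)
  = \sigma(x) + x\,\sigma(x)\bigl(1 - \sigma(x)\bigr)
  = \sigma(x)\Bigl(1 + x\bigl(1 - \sigma(x)\bigr)\Bigr)
```

The derivative equals 1/2 at x = 0, approaches 1 as x grows large, and approaches 0 as x becomes very negative, so the function behaves almost linearly for large positive inputs while remaining smooth around zero.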

2.3.2. Comparison with Other Activation Functions

- (1) Avoiding the “neuron death” problem

- (2) Linear mapping characteristics

- (3) Smooth gradient update

- (4) Performance advantage
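
The first three points can be checked numerically by comparing the gradients of SiLU and ReLU. The sketch below uses the standard definitions of both functions; the function names are chosen here for illustration.

```python
import math


def silu(x):
    """SiLU(x) = x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def silu_grad(x):
    """Analytic derivative of SiLU."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 + x * (1.0 - s))


def relu_grad(x):
    """Derivative of ReLU (taken as 0 at x = 0)."""
    return 1.0 if x > 0 else 0.0


# For a negative pre-activation ReLU passes no gradient, SiLU still does
grad_relu = relu_grad(-1.0)
grad_silu = silu_grad(-1.0)
```

Because the SiLU gradient is nonzero for negative inputs, a unit whose pre-activation drifts below zero can still be updated, whereas a ReLU unit in the same state receives no gradient. For large positive inputs SiLU approaches the identity, which is the near-linear behaviour referred to in point (2).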

2.3.3. Application of SiLU in YOLOv8

2.3.4. Mathematical Features and Performance Advantages

2.4. Multi-Dimensional Information Collection Scheme

2.4.1. Data Enhancement

2.4.2. Attention Mechanism

2.4.3. Anchor Box Optimization

2.4.4. High-Resolution Input

2.5. Model Architecture Adjustment

2.5.1. Detection Head Optimization

- (1) Increase the number of image information collection devices

- (2) Adjust the anchor-free mechanism parameters

2.5.2. Improved Loss Function

2.5.3. Adjusting the Network Depth and Width

- (1) Increase network width

- (2) Reduce network depth

- (3) Depth-to-width ratio optimization

2.6. Rice Disease Monitoring Model (YOLOv8-EDCA)

2.6.1. Model Integration

- (1) The core idea of EDCA: The EDCA mechanism builds on the CBAM (Convolutional Block Attention Module) and captures richer context information between feature channels by adding parallel convolutional paths with different kernel sizes [32]. Specifically, the EDCA uses two parallel attention paths to process features with different receptive fields: a small convolution kernel (e.g., 3 × 3) extracts local detail features, and a large convolution kernel (e.g., 5 × 5 or 7 × 7) extracts global context information.

- (2) The recall rate of small target detection can be improved by integrating the prediction results of multiple models.
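
The two-path idea in item (1) can be illustrated with a toy gate that combines a small and a large receptive field over a single-channel map. This is only a sketch under strong assumptions: fixed mean filters stand in for the learned convolutions, the two paths are merged by simple addition, and the function names are hypothetical. It is not the EDCA module as implemented in the study.

```python
import math


def box_filter(fmap, k):
    """Same-size k x k mean filter with zero padding (stand-in for a learned conv)."""
    height, width = len(fmap), len(fmap[0])
    pad = k // 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            for yy in range(max(0, y - pad), min(height, y + pad + 1)):
                for xx in range(max(0, x - pad), min(width, x + pad + 1)):
                    total += fmap[yy][xx]
            out[y][x] = total / (k * k)
    return out


def dual_path_gate(fmap, small_k=3, large_k=7):
    """Gate each position by a sigmoid of local-detail plus wide-context responses."""
    local = box_filter(fmap, small_k)    # small receptive field: local detail
    context = box_filter(fmap, large_k)  # large receptive field: surrounding context
    return [
        [v / (1.0 + math.exp(-(l + c))) for v, l, c in zip(row, l_row, c_row)]
        for row, l_row, c_row in zip(fmap, local, context)
    ]
```

A position is emphasised when both its immediate neighbourhood and its wider surroundings respond strongly, which is the intuition behind pairing a 3 × 3 path with a 5 × 5 or 7 × 7 path.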

2.6.2. Design and Optimization of Lightweight Model

- (1) Use the SiLU activation function with a lightweight convolution structure

- (2) Adjust the stacking mode of the network layers

- (3) Network parameter adjustment

3. Experiment and Result Analysis

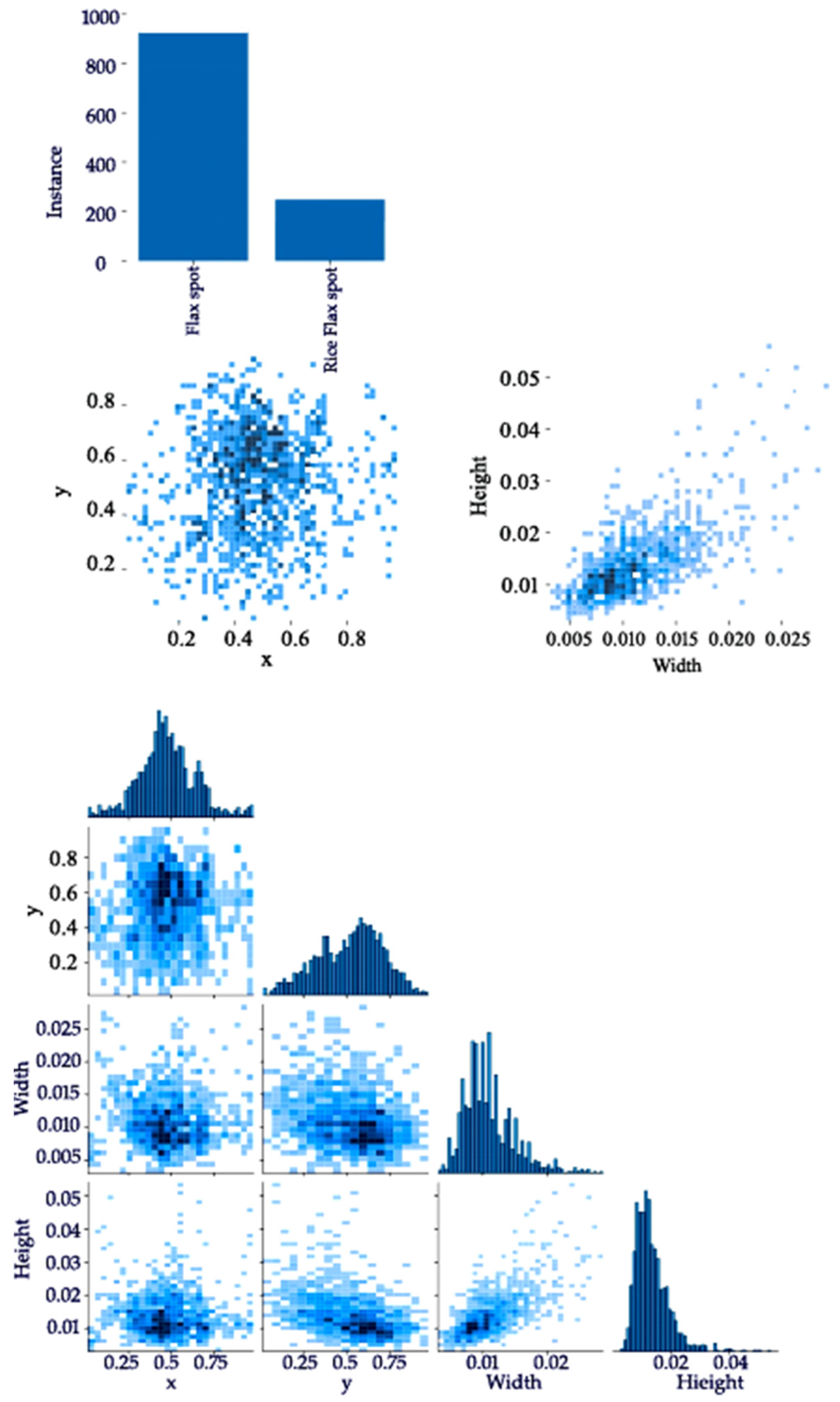

3.1. Introduction to Data Sets

3.2. Experimental Environment

3.3. Model Evaluation Index

3.4. Model Performance Comparison

3.5. Ablation Experiment

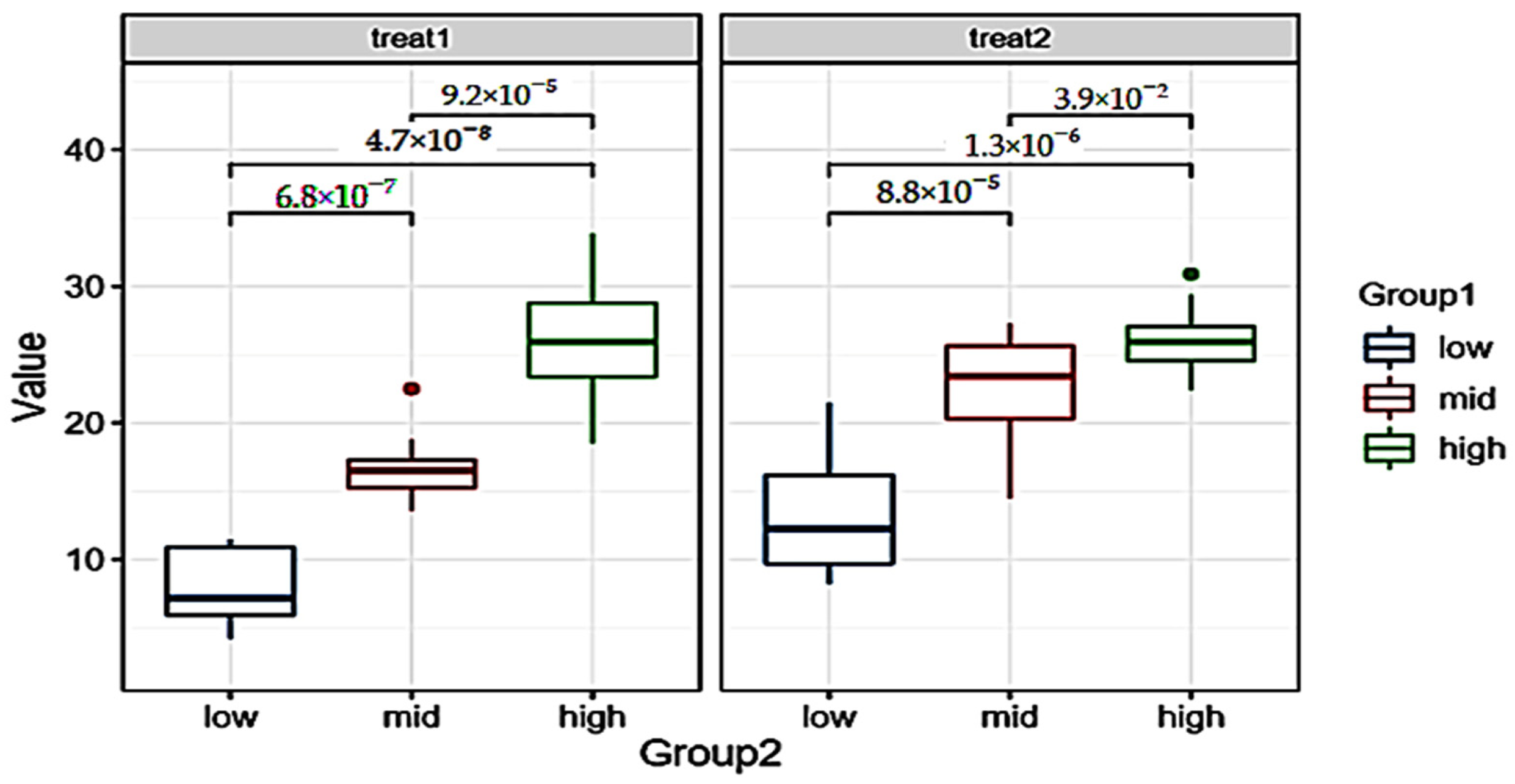

3.6. Analysis of Results Under Different Treatment Groups and Detection Degrees

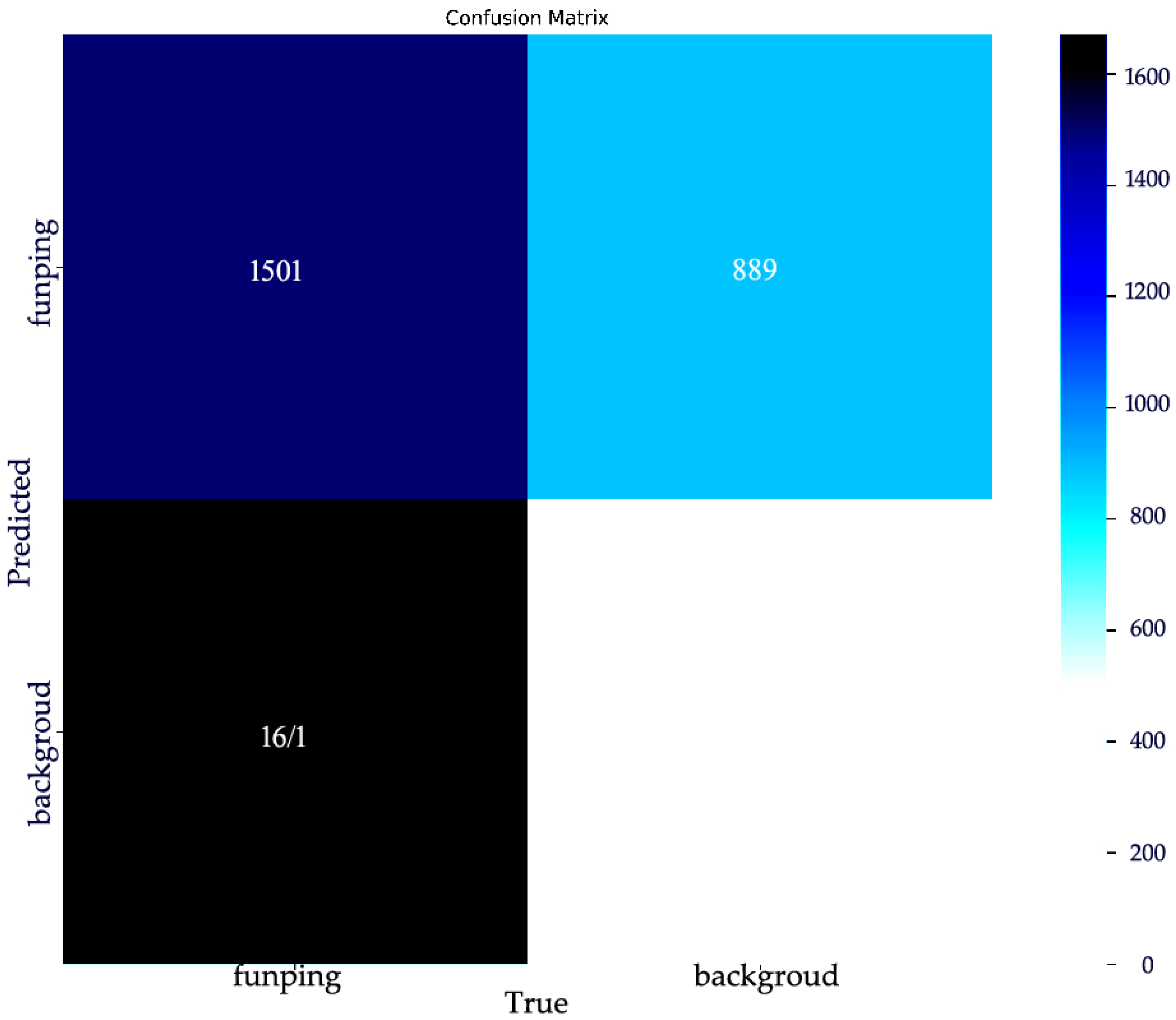

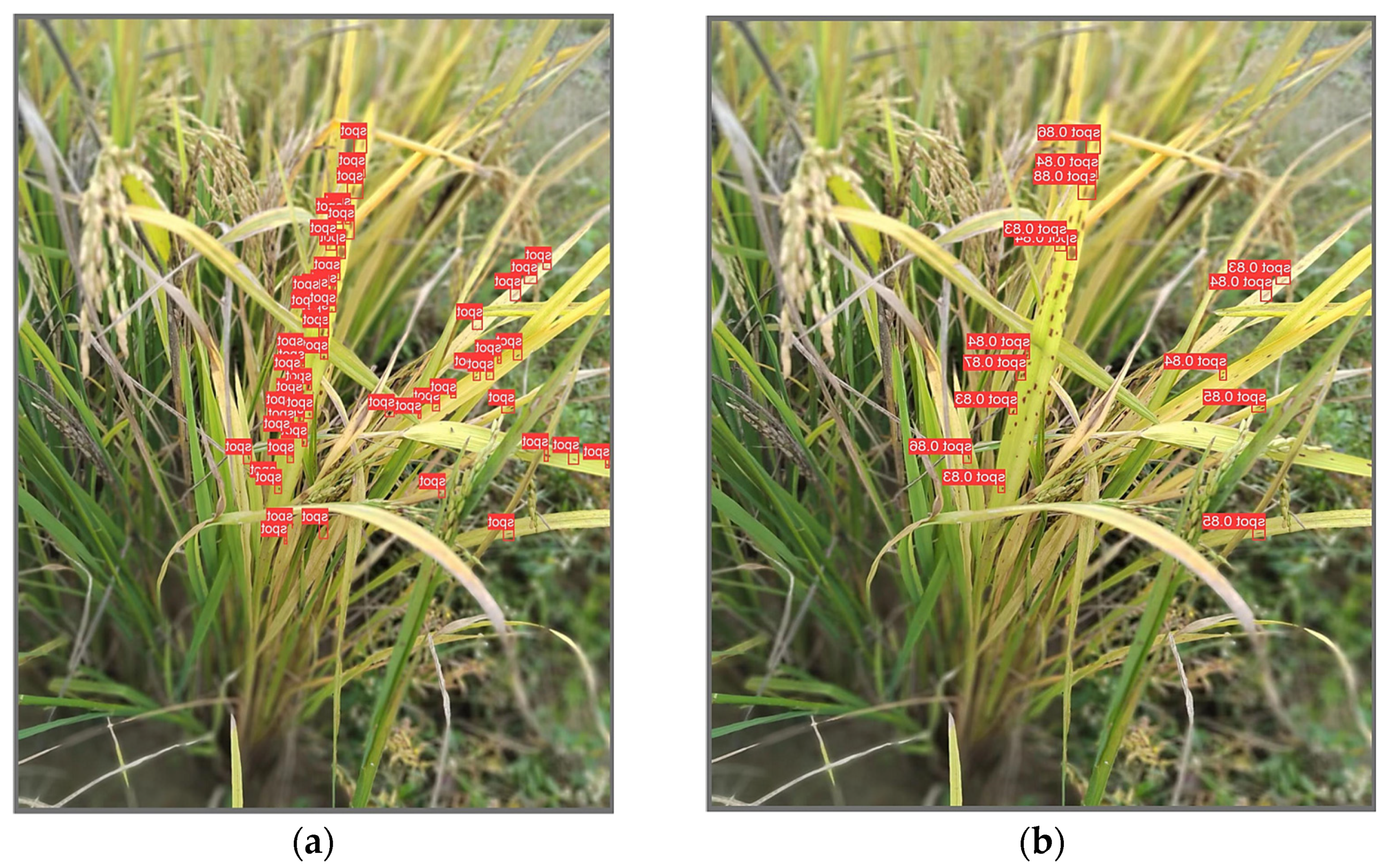

3.7. Analysis of Visual Results

3.8. Summary

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lv, Q.; Zhao, X.; Yang, X.; Huang, F.; Liang, W. Paddy rice soil bacteria chlamydospore piece of wintering capability study. J. Plant Pathol. 2024, 777–786.

- Zheng, G. Research on Monitoring and Early Warning Technology of Rice Pests and Diseases Based on Machine Vision. Ph.D. Thesis, Chongqing Three Gorges College, Chongqing, China, 2023.

- Cai, N. Rice Disease and Efficacy Evaluation Based on UAV Image Research. Ph.D. Thesis, Anhui University, Hefei, China, 2022.

- Cui, X.D. Integrated control technology of rice pests and diseases in Northeast China. Spec. Econ. Flora Fauna 2022, 25, 125–127. (In Chinese)

- Li, J.; Zhang, T.; Peng, X.; Yan, G.; Chen, Y. Application of small UAV in farmland information monitoring. Agric. Mech. Res. 2010, 183–186. (In Chinese)

- Li, F.; Duan, Y.; Shi, Y.; Wu, W.; Huang, P. Using a single drone image to accurately identify fruit trees. Agric. Inf. China 2019, 4, 56–69.

- Yao, Q.; Guan, Z.; Zhou, Y.; Tang, J.; Hu, Y.; Yang, B. Application of support vector machine for detecting rice diseases using shape and color texture features. In Proceedings of the 2009 International Conference on Engineering Computation, ICEC 2009, Hong Kong, China, 2–3 May 2009; pp. 79–83.

- Yang, T.-L.; Qian, Y.-S.; Wu, W.; Liu, T.; Sun, C. Intelligent acquisition of rice disease images based on Python crawler and feature matching. Henan Agric. Sci. 2020, 12, 45–57.

- Deng, R.; Pan, W.; Wang, Z. Research progress and prospect of crop phenotype technology and its intelligent equipment. Mod. Agric. Equip. 2021, 42, 2–9. (In Chinese)

- Mu, Y.; Li, A.; Wang, S. Research on rice growth image acquisition and growth monitoring. Priv. Sci. Technol. 2014, 3, 254–261.

- Ge, J.; Shao, L.; Ding, K.; Li, J.; Zhao, S. Detection of disease degree of maize small spot by image. Trans. Chin. Soc. Agric. Mach. 2008, 39, 1142117.

- Lang, L.-Y.; Tao, J.-J. Study on the infection degree of cotton red blight based on image processing. Agric. Mech. Res. 2012, 6, 126–131.

- Li, J. Study on Image Processing Technology and Physiological Index of Maize Small Spot Disease. Master’s Thesis, Anhui Agricultural University, Hefei, China, 2008.

- Palva, R.; Kaila, E.; Pascual, G.B.; Bloch, V. Assessment of the Performance of a Field Weeding Location-Based Robot Using YOLOv8. J. Agron. 2024, 14, 2215.

- Liu, J.; Huang, X.; Guo, J. Lightweight cotton grade field detection based on YOLOv8. Comput. Eng. 2024, 1–13.

- Zhao, C.; Tang, Q.; Xu, H.; Zhu, X.; Li, Y.; Tao, Y.; Zhang, X. Cdd-yolo: Based on Improved YOLOv8n UAV small target lightweight detection algorithm. J. Zhejiang Norm. Univ. (Nat. Sci. Ed.) 2024, 1–10.

- Xiao, B.; Nguyen, M.; Yan, W.Q. Fruit ripeness identification using YOLOv8 model. Multimed. Tools Appl. 2023, 83, 28039–28056.

- Deng, L.; Zhou, J.; Liu, Q. Flames and smoke detection algorithm based on improved YOLOv8. J. Tsinghua Univ. (Nat. Sci. Ed.) 2024, 8, 1–9.

- Liu, G.; Di, X.; Yang, Y.; Wang, J. The rice pest detection algorithm based on YOLOv8. J. Yangtze River Inf. Commun. 2024, 9, 13–16.

- Zhang, S.; Chen, S.; Zhao, Z. Improve YOLOv8 crop leaf diseases and insect pests recognition algorithm. Chin. J. Agric. Mech. 2024, 45, 255–260.

- Li, Y.; Fan, Q.; Huang, Z.; Gu, Q. A Modified YOLOv8 Detection Network for UAV Aerial Image Recognition. Drones 2023, 7, 304.

- Wang, Y. Research on SMFF-YOLO Algorithm Based on Multilevel Feature Fusion and Scale Adaptive in UAV Remote Sensing Images. Master’s Thesis, Wuhan Textile University, Wuhan, China, 2024.

- Guo, Z.; Li, Y. HCI-YOLO: Detection model of eggplant fruit pests and diseases based on improved YOLOv8. Softw. Eng. 2024, 27, 63–68.

- Cao, Y.; Chen, X.; Lin, Y.; Li, Y.; Guo, Z. Weeds semantic segmentation method based on multi-scale information fusion. J. Shenyang Agric. Univ. 2024, 6, 1–9.

- Song, W.; Zhai, W.; Gao, M.; Li, Q.; Chehri, A.; Jeon, G. Multiscale aggregation and illumination-aware attention network for infrared and visible image fusion. Concurr. Comput. Pract. Exp. 2023, 36, e7712.

- Sun, M.; Sun, T.; Hao, F.; Mu, C.; Ma, D. Recognition of lightweight maize kernel varieties based on MobileNetV2 and convolutional attention mechanism. Shandong Agric. Sci. 2024, 12, 1–11.

- Li, Q.Q.; Chen, J.Q.; Hao, K.W.; Mu, C.; Ma, D. A lightweight blind spot detection based on YOLOv8 network. J. Mod. Electron. Technol. 2024, 47, 163–170.

- Li, Y.; Wu, Z.; Sun, S.; Lin, M.; Wu, Z.; Shen, H. YOLOv5 algorithm of apple leaf diseases more detection. J. Chin. Agric. Mech. 2024, 7, 230–237+353.

- Chen, J.; Hu, H.; Yang, J. Plant leaf disease recognition based on improved SinGAN and improved ResNet34. Front. Artif. Intell. 2024, 7, 1414274.

- Chen, W.; Yuan, H. Improved Forest Pest Detection Method of YOLOv8n. J. Beijing For. Univ. 2025, 47, 119–131.

- Wen, X. Lightweight for Embedded Network Research. Ph.D. Thesis, Huazhong University of Science and Technology, Wuhan, China, 2020.

- Feng, C. For Image Retrieval in Depth and the Bottom of the Visual Convolution Feature Extraction Method Research. Ph.D. Thesis, Northeastern University, Shenyang, China, 2020.

- Li, B.; Song, T.; Gao, J.; Li, D.; Gao, P.; Li, R.; Zhao, D. Calcium strawberry leaf recognition method based on YOLO v5 model. J. Jiangsu Agric. Sci. 2024, 52, 74–82.

- Zhang, G.; Li, C.; Li, G.; Lu, W. Small target detection algorithm of UAV aerial image based on improved YOLOv7-tiny. Eng. Sci. Technol. 2024, 4, 1–14.

| Test Serial Number | Date | Collection Period | Rice Variety | Growth Period | Height (cm) | Specification (p/m²) | Weather | Wind Speed (m/s) | Humidity (%) |
|---|---|---|---|---|---|---|---|---|---|
| Test 1 | 14 October 2022 | 06:00–08:00 | Huangguang oil | Booting stage | 102–107 | 138 | Cloudy | 3.6 | 78 |

| Number | Function | Parameter |
|---|---|---|
| 1 | Effective focal length | Autofocus |
| 2 | Continuous working time on a single charge | 60 min |
| 3 | Pixel count | 16 million |
| 4 | Mass | 50 g |

| Index | Parameter |
|---|---|
| Image resolution | 640 × 640 |
| Batch size | 16 |
| Learning rate | 0.01 (dynamically adjusted by a cosine annealing strategy) |
| Optimizer | AdamW |
| Training epochs | 2000 |
| Loss function | Focal loss combined with IoU loss, used to improve localization accuracy |
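
The loss listed in the table combines a focal classification term with an IoU localization term. The sketch below uses the standard binary focal loss and a plain `1 - IoU` box term. The values `alpha=0.25` and `gamma=2.0`, the weighting `box_weight`, and the way the two terms are summed are assumptions for illustration, since the paper's exact formulation is not given here.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for predicted probability p and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    p_t = min(max(p_t, 1e-7), 1.0)  # clamp to keep the logarithm finite
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t)^gamma down-weights examples that are already well classified
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def detection_loss(p, y, pred_box, gt_box, box_weight=1.0):
    """Focal classification term plus a (1 - IoU) localization term."""
    return focal_loss(p, y) + box_weight * (1.0 - iou(pred_box, gt_box))


# Hypothetical prediction for one lesion
loss = detection_loss(0.5, 1, (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
```

The focal term concentrates the gradient on hard examples such as small, low-contrast lesions, while the IoU term penalizes boxes in proportion to how poorly they overlap the ground truth.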

| Model | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Inference Time (ms/Image) | Model Size (MB) |
|---|---|---|---|---|
| YOLOv8 original version | 91.3 | 72.4 | 7.2 | 17.9 |
| YOLOv8-EDCA | 94.7 | 76.8 | 6.5 | 13.7 |
| Faster R-CNN | 78.9 | 54.3 | 19.8 | 108.2 |
| SSD-VGG | 69.4 | 41.7 | 8.5 | 28.9 |

| Module Combination | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Inference Time (ms/Image) |
|---|---|---|---|
| Original YOLOv8 | 91.3 | 72.4 | 7.2 |
| +Dual Channel Attention (EDCA) | 93.5 | 74.6 | 6.9 |
| +SiLU activation function | 92.8 | 73.8 | 7.0 |
| +Model architecture optimization | 94.7 | 76.8 | 6.5 |

| Model Name | Precision (%) | Recall (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Model Size (MB) |
|---|---|---|---|---|---|
| YOLOv8-EDCA | 87.51 | 89.92 | 92.93 | 70.14 | 25.9 |
| YOLOv8 | 82.16 | 83.54 | 87.30 | 65.61 | 28.9 |
| SSD-VGG | 81.76 | 37.60 | 47.92 | 45.17 | 28.96 |
| Faster R-CNN | 65.17 | 42.20 | 39.91 | 36.80 | 108.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, X.; Ou, Y.; Deng, K.; Fan, X.; Gao, R.; Zhou, Z. A Unmanned Aerial Vehicle-Based Image Information Acquisition Technique for the Middle and Lower Sections of Rice Plants and a Predictive Algorithm Model for Pest and Disease Detection. Agriculture 2025, 15, 790. https://doi.org/10.3390/agriculture15070790
