Comparative Analysis of CNN-Based Semantic Segmentation for Apple Tree Canopy Size Recognition in Automated Variable-Rate Spraying

Abstract

1. Introduction

2. Materials and Methods

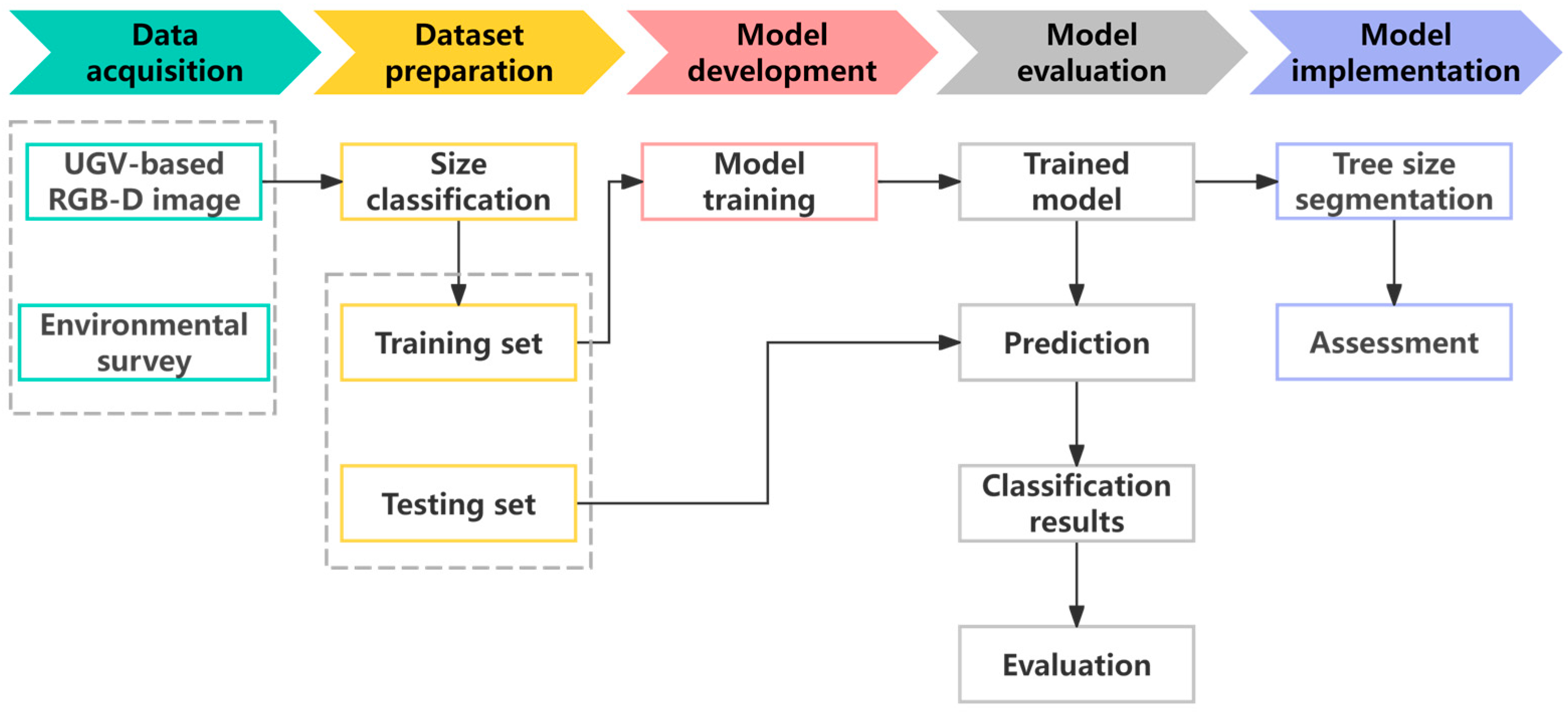

2.1. Overall Workflow

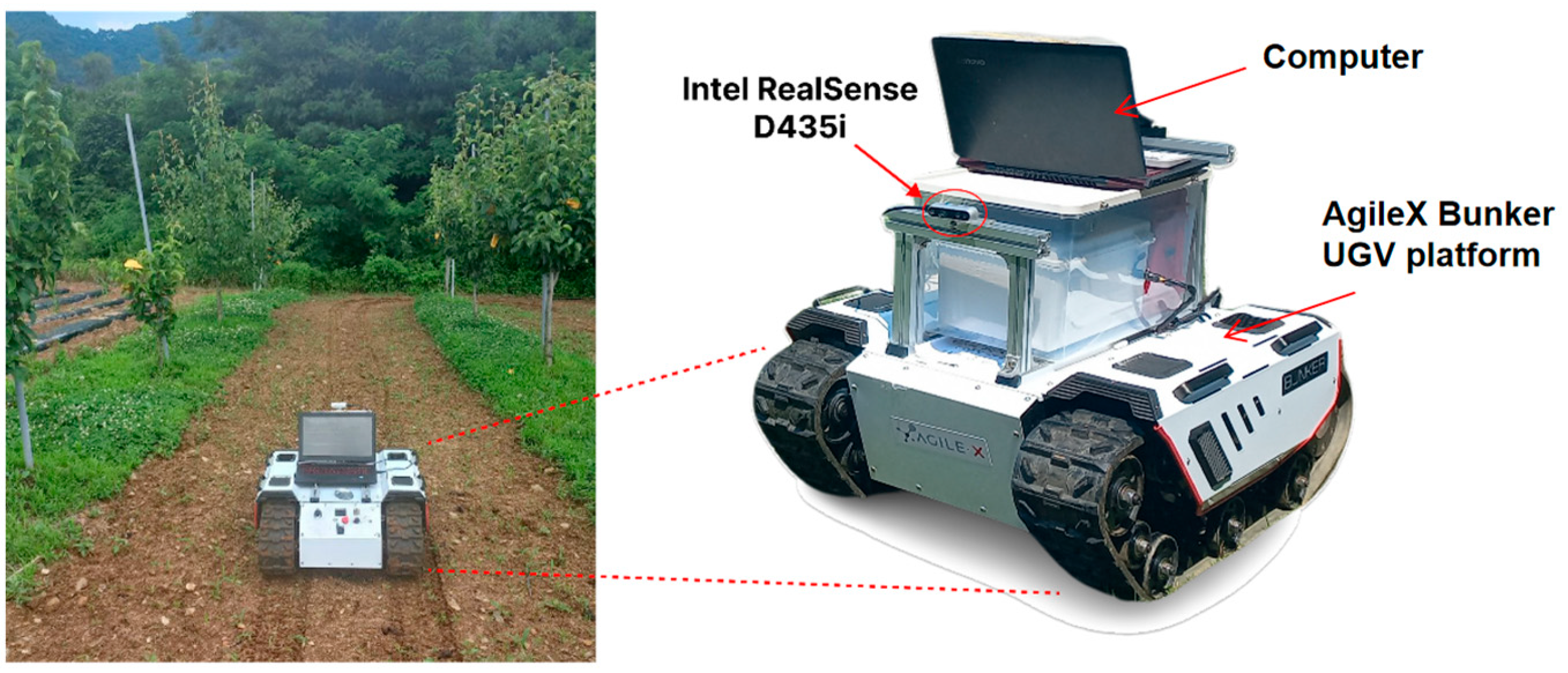

2.2. Data Acquisition

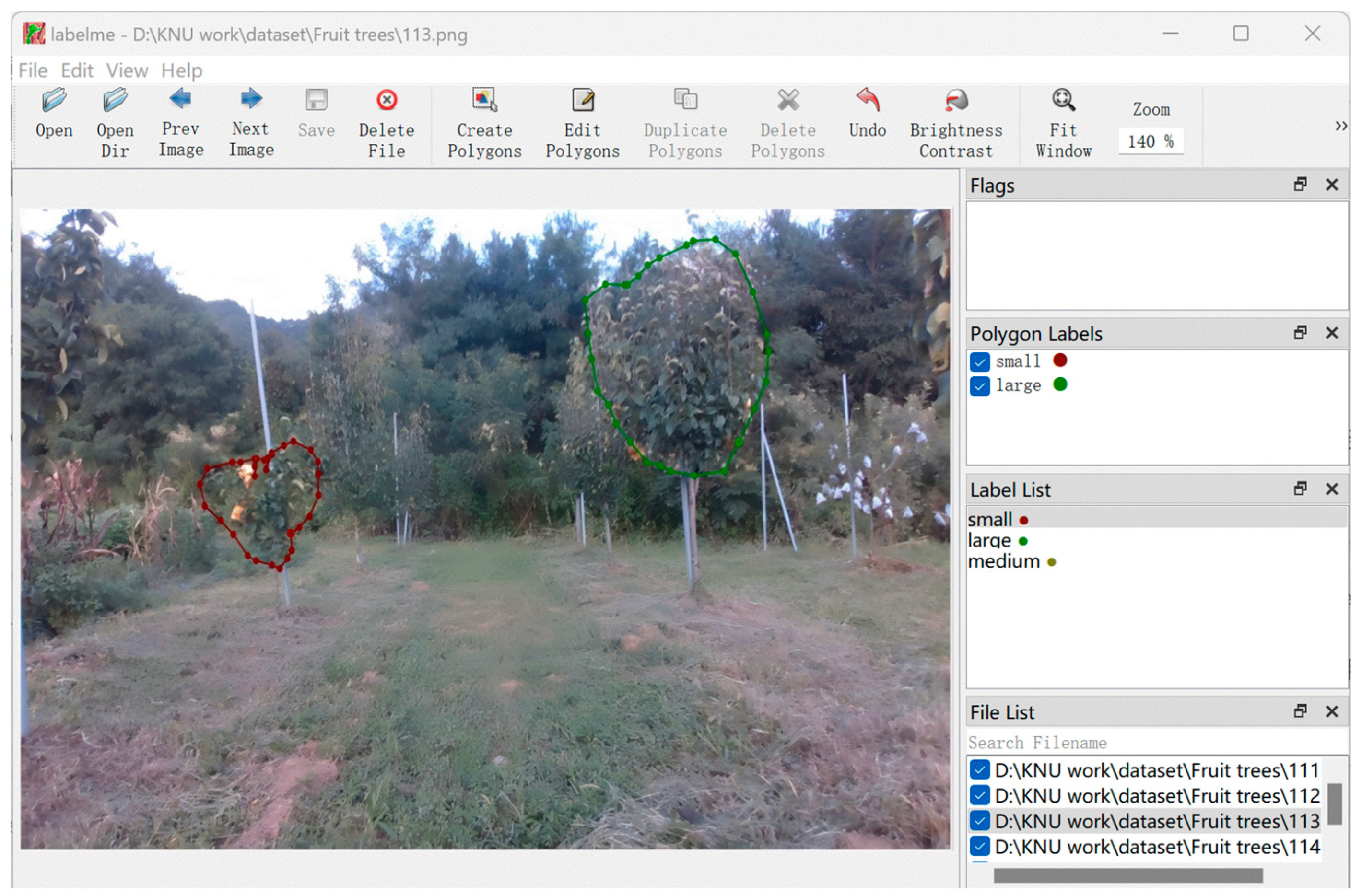

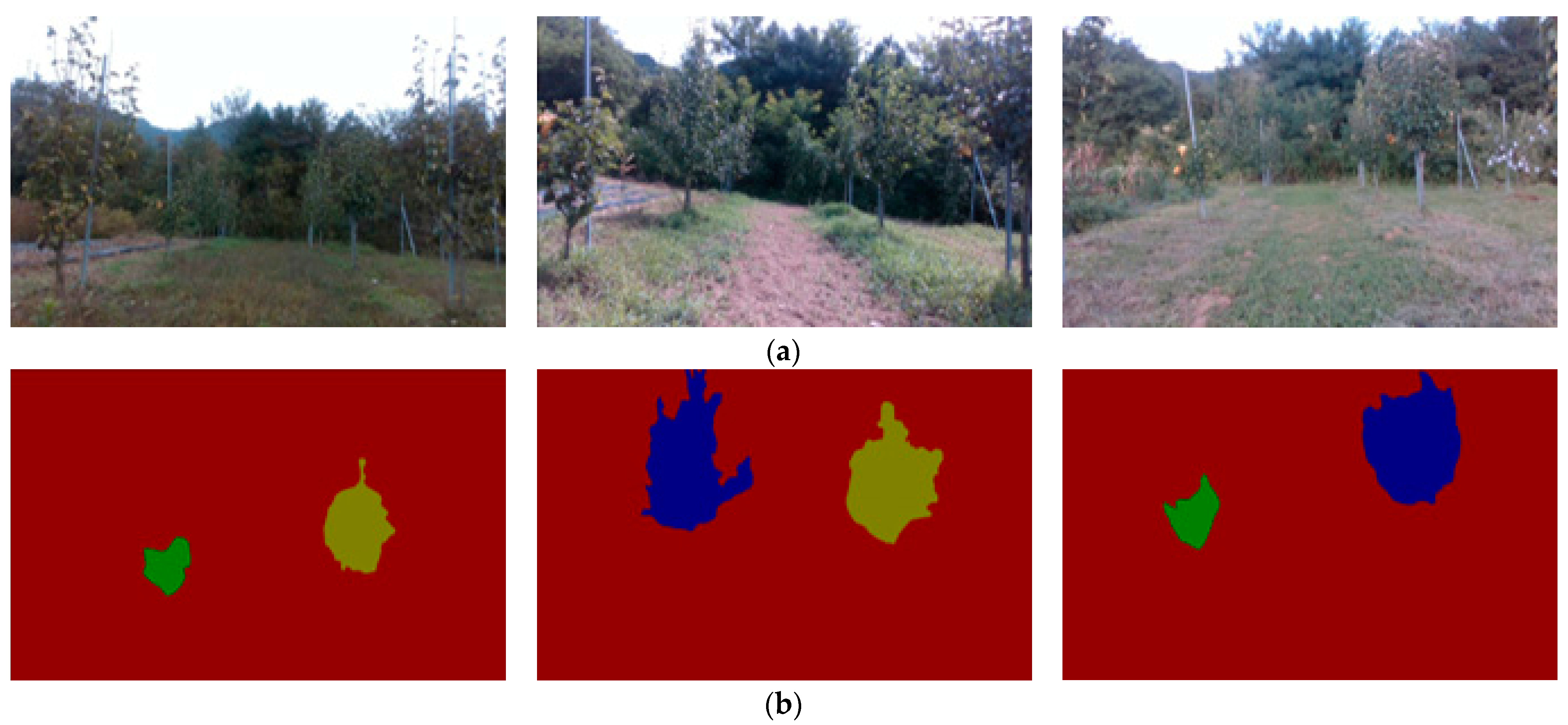

2.3. Dataset Preparation

2.4. Enhanced Deep CNN Architectures for Semantic Segmentation

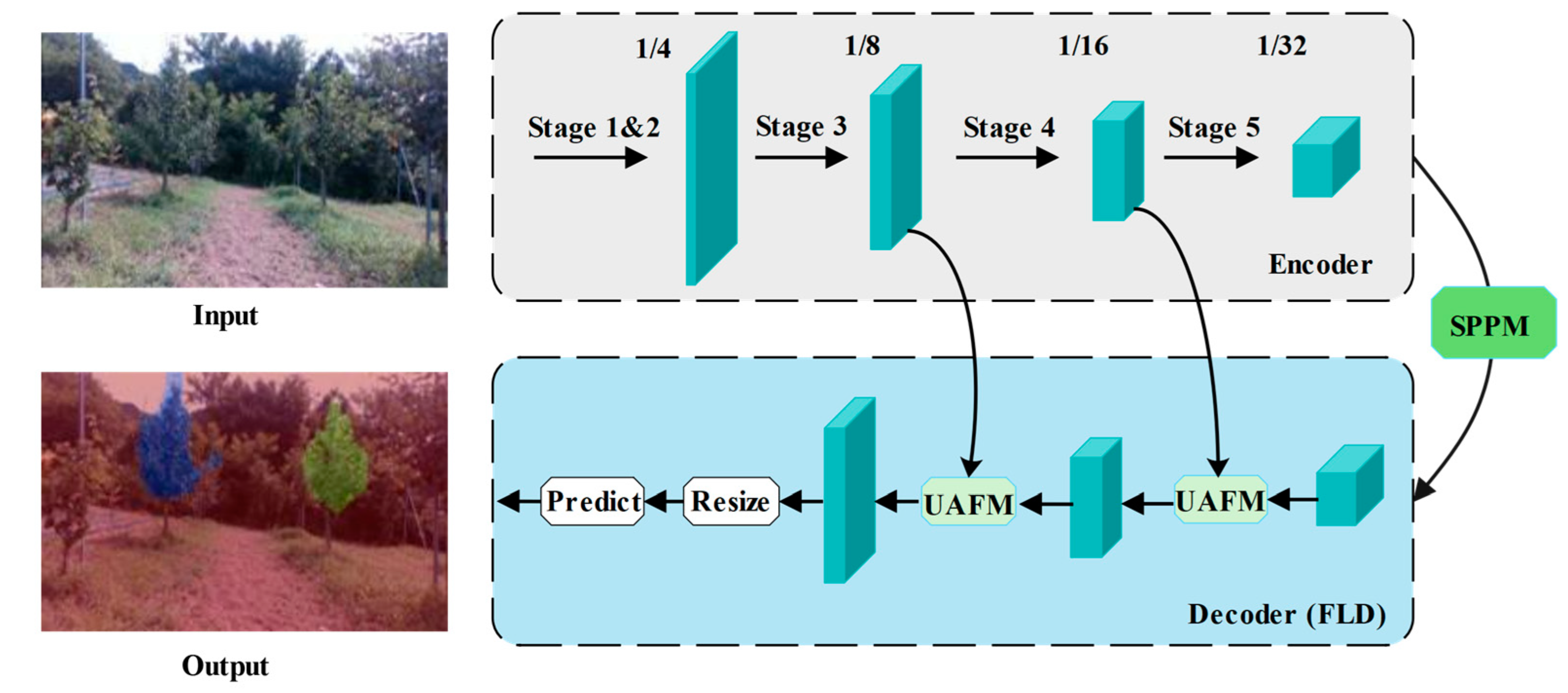

2.4.1. PP-LiteSeg Model

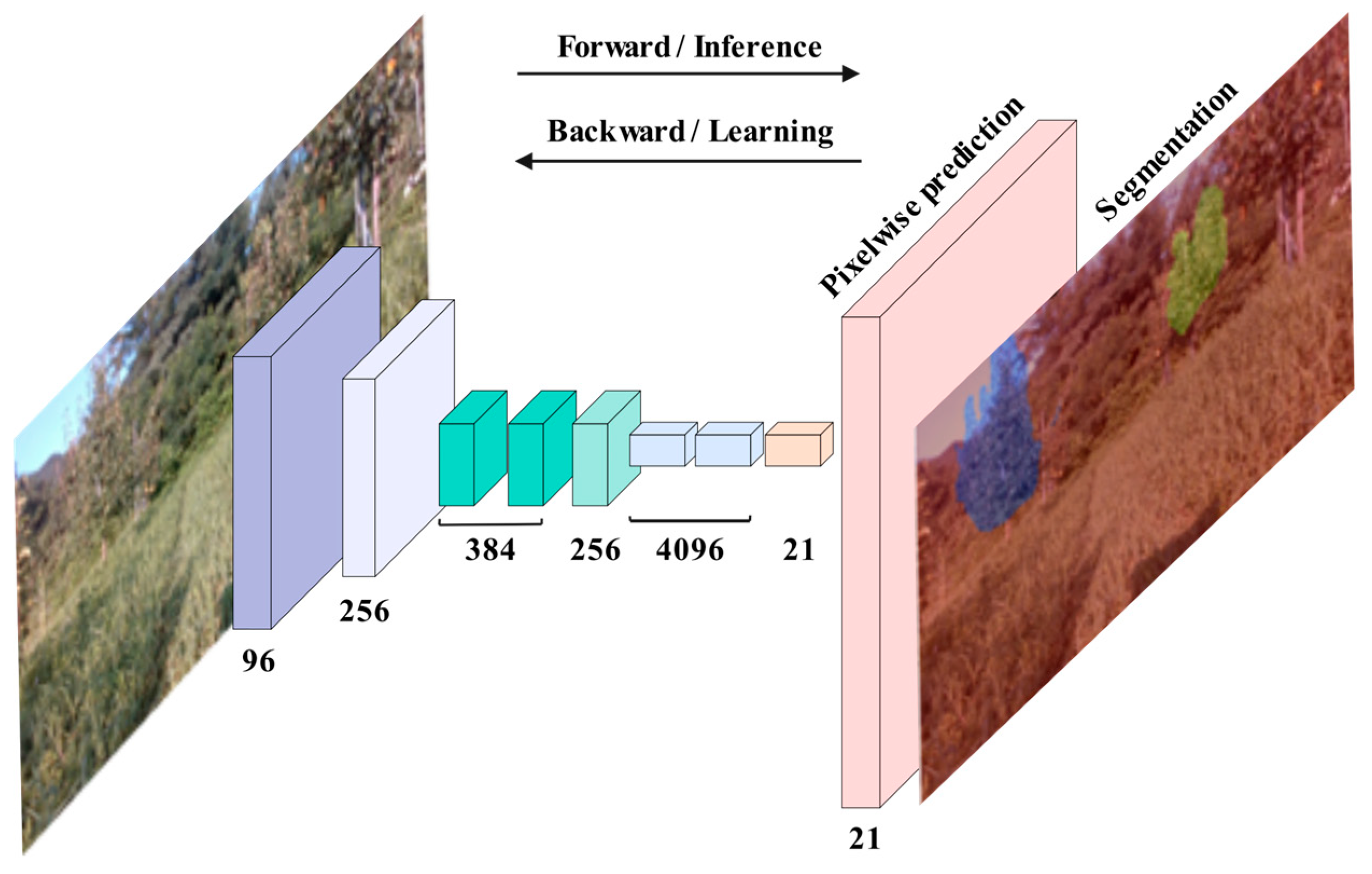

2.4.2. FCN Model

2.4.3. STDC Backbone

2.5. Comprehensive Metrics for Model Performance Evaluation

3. Results

3.1. Empirical Analysis of Model Training Performance

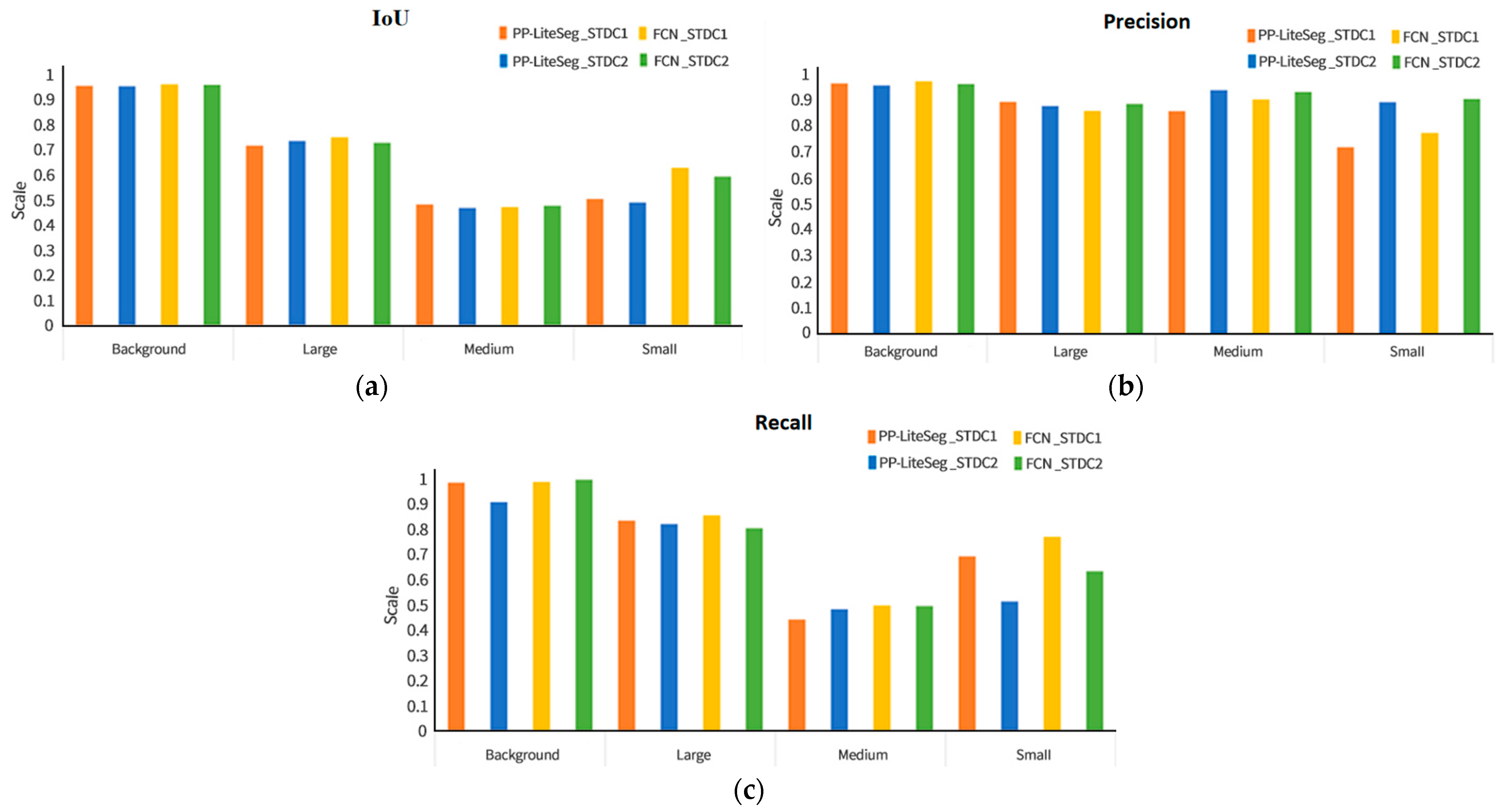

3.2. Comparative Evaluation of Model Performance

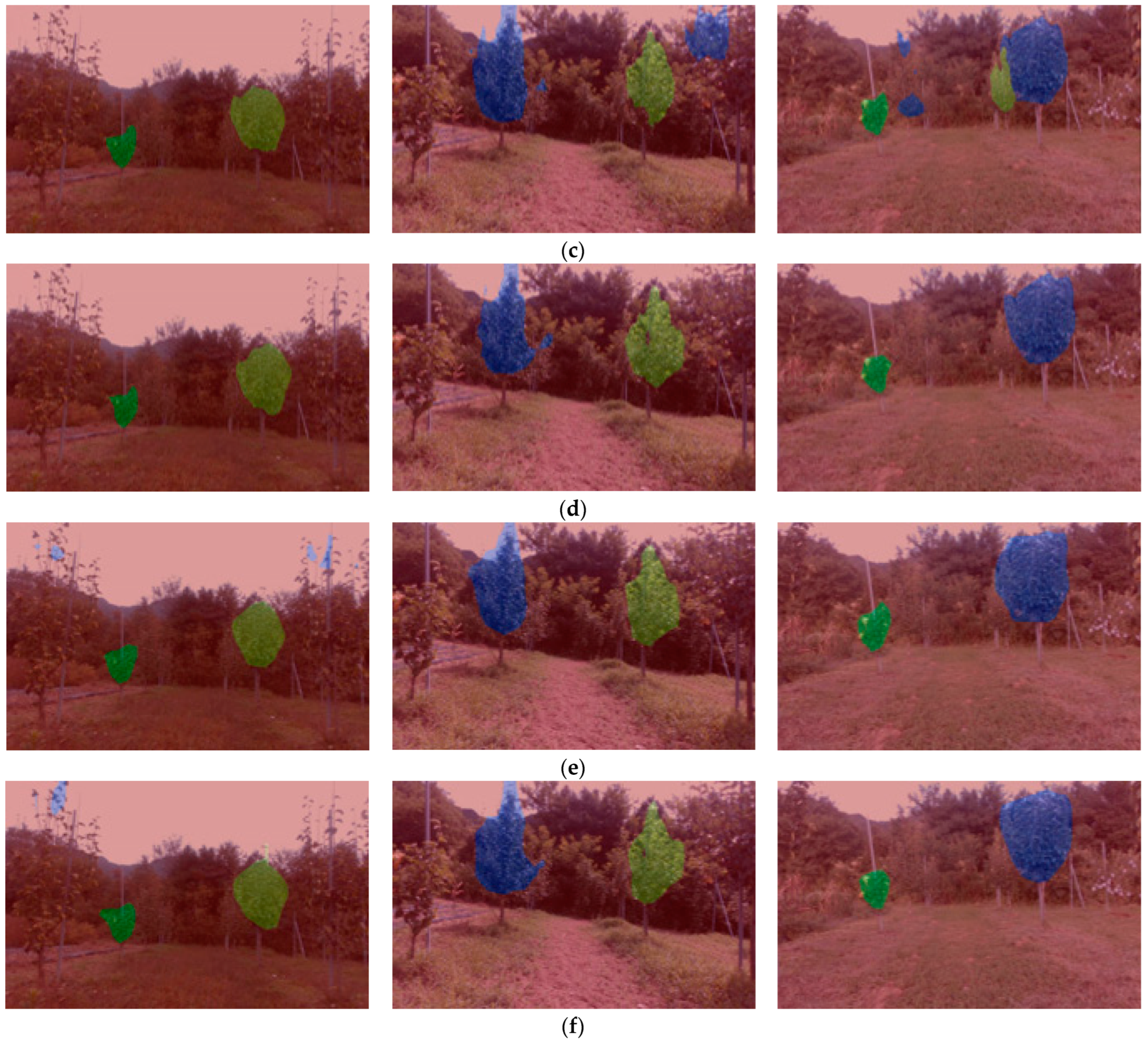

3.3. Automated Recognition of Apple Tree Canopy Sizes

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Pérez-Lucas, G.; Navarro, G.; Navarro, S. Adapting agriculture and pesticide use in mediterranean regions under climate change scenarios: A comprehensive review. Eur. J. Agron. 2024, 161, 127337.
2. Omoyajowo, K. Sustainable Environmental Policies: An Impact Analysis of U.S. Regulations on Pesticides and Chemical Discharges. SSRN Preprints 2024.4943299. Available online: https://dx.doi.org/10.2139/ssrn.4943299 (accessed on 9 February 2025).
3. Statista. Forecast Agricultural Consumption of Pesticides Worldwide from 2023 to 2027 (in 1000 Metric Tons). Available online: https://www.statista.com/statistics/1401556/global-agricultural-use-of-pesticides-forecast/ (accessed on 9 February 2025).
4. Khan, Z.; Liu, H.; Shen, Y.; Zeng, X. Deep learning improved yolov8 algorithm: Real-time precise instance segmentation of crown region orchard canopies in natural environment. Comput. Electron. Agric. 2024, 224, 109168.
5. Kansotia, K.; Saikanth, D.R.; Kumar, R.; Pamala, P.; Kumar, P.; Pandey, S.; Kushwaha, T. Role of modern technologies in plant disease management: A comprehensive review of benefits, challenges, and future perspectives. Int. J. Environ. Clim. Change 2023, 13, 1325–1335.
6. Liu, L.; Liu, Y.; He, X.; Liu, W. Precision variable-rate spraying robot by using single 3D lidar in orchards. Agronomy 2022, 12, 2509.
7. Liu, H.; Du, Z.; Shen, Y.; Du, W.; Zhang, X. Development and evaluation of an intelligent multivariable spraying robot for orchards and nurseries. Comput. Electron. Agric. 2024, 222, 109056.
8. Maghsoudi, H.; Minaei, S.; Ghobadian, B.; Masoudi, H. Ultrasonic sensing of pistachio canopy for low-volume precision spraying. Comput. Electron. Agric. 2015, 112, 149–160.
9. Nan, Y.; Zhang, H.; Zheng, J.; Yang, K.; Ge, Y. Low-volume precision spray for plant pest control using profile variable rate spraying and ultrasonic detection. Front. Plant Sci. 2022, 13, 1042769.
10. Luo, S.; Wen, S.; Zhang, L.; Lan, Y.; Chen, X. Extraction of crop canopy features and decision-making for variable spraying based on unmanned aerial vehicle lidar data. Comput. Electron. Agric. 2024, 224, 109197.
11. Xue, X.; Luo, Q.; Bu, M.; Li, Z.; Lyu, S.; Song, S. Citrus tree canopy segmentation of orchard spraying robot based on RGB-D image and the improved DeepLabv3+. Agronomy 2023, 13, 2059.
12. Wang, X.; Tang, J.; Whitty, M. Side-view apple flower mapping using edge-based fully convolutional networks for variable rate chemical thinning. Comput. Electron. Agric. 2020, 178, 105673.
13. Gu, C.; Wang, X.; Wang, X.; Yang, F.; Zhai, C. Research progress on variable-rate spraying technology in orchards. Appl. Eng. Agric. 2020, 36, 927–942.
14. Mahmud, M.S.; Zahid, A.; He, L.; Martin, P. Opportunities and possibilities of developing an advanced precision spraying system for tree fruits. Sensors 2021, 21, 3262.
15. Abbas, I.; Liu, J.; Faheem, M.; Noor, R.S.; Shaikh, S.A.; Solangi, K.A.; Raza, S.M. Different sensor based intelligent spraying systems in agriculture. Sens. Actuators A Phys. 2020, 316, 112265.
16. Azizi, A.; Abbaspour-Gilandeh, Y.; Vannier, E.; Dusséaux, R.; Mseri-Gundoshmian, T.; Moghaddam, H.A. Semantic segmentation: A modern approach for identifying soil clods in precision farming. Biosyst. Eng. 2020, 196, 172–182.
17. Abioye, A.E.; Larbi, P.A.; Hadwan, A.A.K. Deep learning guided variable rate robotic sprayer prototype. Smart Agric. Technol. 2024, 9, 100540.
18. Hussain, N.; Farooque, A.A.; Schumann, A.W.; McKenzie-Gopsill, A.; Esau, T.; Abbas, F.; Acharya, B.; Zaman, Q. Design and development of a smart variable rate sprayer using deep learning. Remote Sens. 2020, 12, 4091.
19. Asaei, H.; Jafari, A.; Loghavi, M. Site-specific orchard sprayer equipped with machine vision for chemical usage management. Comput. Electron. Agric. 2019, 162, 431–439.
20. Pathan, R.K.; Lim, W.L.; Lau, S.L.; Ho, C.C.; Khare, P.; Koneru, R.B. Experimental analysis of U-Net and Mask R-CNN for segmentation of synthetic liquid spray. In Proceedings of the 2022 IEEE International Conference on Computing (ICOCO), Kota Kinabalu, Malaysia, 14–16 November 2022; pp. 237–242.
21. Li, X.; Duan, F.; Hu, M.; Hua, J.; Du, X. Weed density detection method based on a high weed pressure dataset and improved PSP Net. IEEE Access 2023, 11, 98244–98255.
22. Zhao, J.; Li, Z.; Lei, Y.; Huang, L. Application of UAV RGB images and improved PSP Net network to the identification of wheat lodging areas. Agronomy 2023, 13, 1309.
23. Chang, Z.; Li, H.; Chen, D.; Liu, Y.; Zou, C.; Chen, J.; Han, W.; Liu, S.; Zhang, N. Crop type identification using high-resolution remote sensing images based on an improved DeepLabv3+ network. Remote Sens. 2023, 15, 5088.
24. Czymmek, V.; Köhn, C.; Harders, L.O.; Hussmann, S. Review of energy-efficient embedded system acceleration of convolution neural networks for organic weeding robots. Agriculture 2023, 13, 2103.
25. Son, H.; Weiland, J. Lightweight Semantic Segmentation Network for Semantic Scene Understanding on Low-Compute Devices. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 62–69.
26. Zhang, J.; Qiang, Z.; Lin, H.; Chen, Z.; Li, K.; Zhang, S. Research on tobacco field semantic segmentation method based on multispectral unmanned aerial vehicle data and improved PP-LiteSeg model. Agronomy 2024, 14, 1502.
27. Liu, Y.; Chu, L.; Chen, G.; Wu, Z.; Chen, Z.; Lai, B.; Hao, Y. PaddleSeg: A high-efficient development toolkit for image segmentation. arXiv 2021, arXiv:2101.06175.
28. Torralba, A.; Russell, B.C.; Yuen, J. Labelme: Online image annotation and applications. Proc. IEEE 2010, 98, 1467–1484.
29. Peng, J.; Liu, Y.; Tang, S.; Hao, Y.; Chu, L.; Chen, G.; Wu, Z.; Chen, Z.; Yu, Z.; Du, Y.; et al. PP-LiteSeg: A superior real-time semantic segmentation model. arXiv 2022, arXiv:2204.02681.
30. Zheng, Z.; Yuan, J.; Yao, W.; Yao, H.; Liu, Q.; Guo, L. Crop classification from drone imagery based on lightweight semantic segmentation methods. Remote Sens. 2024, 16, 4099.
31. Yang, M.; Huang, C.; Li, Z.; Shao, Y.; Yuan, J.; Yang, W.; Song, P. Autonomous navigation method based on RGB-D camera for a crop phenotyping robot. J. Field Robot. 2024, 41, 2663–2675.
32. Zhao, S.; Zhao, X.; Huo, Z.; Zhang, F. BMSeNet: Multiscale context pyramid pooling and spatial detail enhancement network for real-time semantic segmentation. Sensors 2024, 24, 5145.
33. Cao, X.; Tian, Y.; Yao, Z.; Zhao, Y.; Zhang, T. Semantic segmentation network for unstructured rural roads based on improved SPPM and fused multiscale features. Appl. Sci. 2024, 14, 8739.
34. Xu, H.; Li, C.; Liu, Y.; Shao, G.; Hussain, Z.K. A Fast Rooftop Extraction Deep Learning Method Based on PP-LiteSeg for UAV Imagery. In Proceedings of the 2023 IEEE International Conference on Artificial Intelligence and Automation Control (AIAC), Xiamen, China, 17–19 November 2023; pp. 70–76.
35. Tan, Y.; Li, X.; Lai, J.; Ai, J. Real-time tunnel lining leakage image semantic segmentation via multiple attention mechanisms. Meas. Sci. Technol. 2024, 35, 075204.
36. Qu, G.; Wu, Y.; Lv, Z.; Zhao, D.; Lu, Y.; Zhou, K.; Tang, J.; Zhang, Q.; Zhang, A. Road-MobileSeg: Lightweight and accurate road extraction model from remote sensing images for mobile devices. Sensors 2024, 24, 531.
37. Zhang, W.; Ling, M. An improved point cloud denoising method in adverse weather conditions based on PP-LiteSeg network. PeerJ Comput. Sci. 2024, 10, e1832.
38. Dang, T.-V.; Bui, N.-T. Multi-scale fully convolutional network-based semantic segmentation for mobile robot navigation. Electronics 2023, 12, 533.
39. Bilinski, P.; Prisacariu, V. Dense decoder shortcut connections for single-pass semantic segmentation. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6596–6605.
40. Xing, Y.; Zhong, L.; Zhong, X. An Encoder-Decoder Network Based FCN Architecture for Semantic Segmentation. Wirel. Commun. Mob. Comput. 2020, 2020, 8861886.
41. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.
42. Chen, G.; Tan, X.; Guo, B.; Zhu, K.; Liao, P.; Wang, T.; Wang, Q.; Zhang, X. SDFCNv2: An improved FCN framework for remote sensing images semantic segmentation. Remote Sens. 2021, 13, 4902.
43. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
44. Tian, L.; Zhong, X.; Chen, M. Semantic segmentation of remote sensing image based on GAN and FCN network model. Sci. Program. 2021, 2021, 9491376.
45. Tian, T.; Chu, Z.; Hu, Q.; Ma, L. Class-wise fully convolutional network for semantic segmentation of remote sensing images. Remote Sens. 2021, 13, 3211.
46. Wang, C.; Chen, X.; Wang, B.; Zhang, L.; Liu, B. SLTM network: Efficient application of lightweight image segmentation technology in detecting drivable areas for unmanned line-marking machines. IEEE Access 2024, 12, 169001–169012.
47. Wang, X.; Li, Z.; Zhang, Y.; An, G. Water level recognition based on deep learning and character interpolation strategy for stained water gauge. River 2023, 2, 506–517.
48. Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking BiSeNet for Real-Time Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 20–25 June 2021; pp. 9716–9725.
49. Gong, D.; Du, Y.; Li, Z.; Li, D.; Xi, W. Semantic Segmentation for Tool Wear Monitoring: A Comparative Study. In Proceedings of the Seventh International Symposium on Laser Interaction with Matter (LIMIS), Shenzhen, China, 20–22 November 2024. Proc. SPIE 2025, 13543, 135430Y.
50. Wang, X.; Liu, R.; Yang, X.; Zhang, Q.; Zhou, D. MCFNet: Multi-attentional class feature augmentation network for real-time scene parsing. ACM Trans. Multimedia Comput. Commun. Appl. 2024, 20, 166.
51. Jin, T.; Han, X.; Wang, P.; Zhang, Z.; Guo, J.; Ding, F. Enhanced deep learning model for apple detection, localization, and counting in complex orchards for robotic arm-based harvesting. Smart Agric. Technol. 2025, 10, 100784.
52. Culman, M.; Delalieux, S.; Van Tricht, K. Individual palm tree detection using deep learning on RGB imagery to support tree inventory. Remote Sens. 2020, 12, 3476.
53. Gan, Y.; Wang, Q.; Iio, A. Tree crown detection and delineation in a temperate deciduous forest from UAV RGB imagery using deep learning approaches: Effects of spatial resolution and species characteristics. Remote Sens. 2023, 15, 778.
54. Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual tree-crown detection in RGB imagery using semi-supervised deep learning neural networks. Remote Sens. 2019, 11, 1309.

| Parameter | Intel RealSense D435i | AgileX Bunker |
|---|---|---|
| Size | 90 × 25 × 25 mm | 1020 × 760 × 360 mm |
| Weight | 0.72 kg | 130 kg |
| Power supply | Powered via USB-C | 48 V/30 Ah lithium battery |
| Maximum angle | Not applicable | 35° |
| Speed range | Not applicable | 0–15 m/s |
| Receiver | Not applicable | 2.4 GHz; maximum range 1 km |
| Communication interface | USB-C | CAN |
| Field of view (FOV) | Horizontal: 87°; vertical: 58°; diagonal: 95° | Not applicable |
| Brushless servo motor | Not applicable | 2 × 650 W |
| Resolution | Depth: 1280 × 720 pixels; RGB: 1920 × 1080 pixels | Not applicable |
| Frame rate | Depth: up to 90 fps; RGB: 30 fps | Not applicable |
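
As a worked illustration of what the FOV entries in the specification table mean in the orchard, the width of the scene covered at distance d by a camera with field of view θ follows from the pinhole relation w = 2·d·tan(θ/2). The sketch below is only illustrative: it assumes an ideal pinhole camera, uses the FOV values listed for the D435i, and takes a hypothetical camera-to-canopy distance of 1.5 m, since the actual mounting distance is not stated in this section.

```python
import math

# FOV values taken from the specification table (degrees).
H_FOV_DEG = 87.0
V_FOV_DEG = 58.0


def footprint(distance_m: float, fov_deg: float) -> float:
    """Extent of the scene covered at a given distance by an ideal pinhole camera."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


# Hypothetical camera-to-canopy distance (assumption, not a value from the study).
d = 1.5
width = footprint(d, H_FOV_DEG)
height = footprint(d, V_FOV_DEG)
print(f"{width:.2f} m x {height:.2f} m")  # → 2.85 m x 1.66 m
```

Under this assumption a single frame spans roughly 2.85 m horizontally and 1.66 m vertically; lens distortion and the distance actually used in the field would change these figures.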

| Model | Backbone | Class | IoU | Precision | Recall |
|---|---|---|---|---|---|
| PP-LiteSeg | STDC1 | Background | 0.9525 | 0.9647 | 0.9823 |
| PP-LiteSeg | STDC1 | Small | 0.5020 | 0.7187 | 0.6902 |
| PP-LiteSeg | STDC1 | Medium | 0.4815 | 0.8586 | 0.4399 |
| PP-LiteSeg | STDC1 | Large | 0.7147 | 0.8942 | 0.8323 |
| PP-LiteSeg | STDC2 | Background | 0.9511 | 0.9562 | 0.9045 |
| PP-LiteSeg | STDC2 | Small | 0.4887 | 0.8918 | 0.5106 |
| PP-LiteSeg | STDC2 | Medium | 0.4669 | 0.9376 | 0.4810 |
| PP-LiteSeg | STDC2 | Large | 0.7340 | 0.8770 | 0.8183 |
| FCN | STDC1 | Background | 0.9576 | 0.9712 | 0.9855 |
| FCN | STDC1 | Small | 0.6266 | 0.7735 | 0.7674 |
| FCN | STDC1 | Medium | 0.4706 | 0.9022 | 0.4958 |
| FCN | STDC1 | Large | 0.7476 | 0.8588 | 0.8524 |
| FCN | STDC2 | Background | 0.9570 | 0.9624 | 0.9942 |
| FCN | STDC2 | Small | 0.5912 | 0.9305 | 0.6311 |
| FCN | STDC2 | Medium | 0.4761 | 0.9315 | 0.4933 |
| FCN | STDC2 | Large | 0.7258 | 0.8851 | 0.8014 |
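
The per-class IoU, precision, and recall in the results table are, in their standard pixel-wise form, all derived from a confusion matrix between ground-truth and predicted label maps: IoU = TP/(TP + FP + FN), precision = TP/(TP + FP), and recall = TP/(TP + FN). The sketch below shows those standard definitions with NumPy; it is not the evaluation code used in the study, and the class order (0 = background, 1 = small, 2 = medium, 3 = large) and the toy label maps are assumptions for illustration.

```python
import numpy as np

# Assumed class order, matching the rows of the results table.
CLASSES = ["Background", "Small", "Medium", "Large"]


def per_class_metrics(pred: np.ndarray, target: np.ndarray, num_classes: int = 4):
    """Per-class IoU, precision and recall from two integer label maps of equal shape."""
    # Confusion matrix: rows = ground-truth class, columns = predicted class.
    idx = target.ravel() * num_classes + pred.ravel()
    cm = np.bincount(idx, minlength=num_classes * num_classes).reshape(num_classes, num_classes)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # pixels predicted as class c but labelled otherwise
    fn = cm.sum(axis=1) - tp  # pixels labelled as class c but predicted otherwise
    with np.errstate(divide="ignore", invalid="ignore"):  # absent classes give NaN
        iou = tp / (tp + fp + fn)
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
    return iou, precision, recall


# Toy 2 x 3 label maps (hypothetical data, for illustration only).
target = np.array([[0, 0, 1], [2, 3, 3]])
pred = np.array([[0, 1, 1], [2, 3, 0]])
iou, precision, recall = per_class_metrics(pred, target)
for name, i, p, r in zip(CLASSES, iou, precision, recall):
    print(f"{name:<10} IoU={i:.3f} P={p:.3f} R={r:.3f}")
```

Because IoU penalizes both false positives and false negatives, a class such as "Medium" can show high precision but low IoU when its recall is low, which is the pattern visible in several rows of the table.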

| Method | Task | Accuracy (P = precision; R = recall) | Speed | Reference |
|---|---|---|---|---|
| RetinaNet | Detection (orchard scene) | P: 0.79; R: 0.65 | Not reported | [52] |
| DeepForest | Detection and delineation | P: 0.59; R: 0.46 | Not reported | [53] |
| Detectree2 | Detection and delineation | P: 0.66; R: 0.50 | Not reported | [53] |
| Semi-supervised | Detection | IoU: 0.5; P: 0.69; R: 0.61 | Not reported | [54] |
| FCN_STDC1 | Canopy segmentation and size classification | IoU: 0.70; P: 0.88; R: 0.78 | 27.8 FPS | This study |
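
The FCN_STDC1 summary row in the comparison table appears to be consistent with an unweighted (macro) average of the four per-class values reported for FCN with the STDC1 backbone, background included. This is our reading of the numbers rather than a procedure stated in this section; the sketch below reproduces the arithmetic and also converts the reported 27.8 FPS into an approximate per-frame time.

```python
# Per-class values for FCN with the STDC1 backbone, copied from the results table
# (order: Background, Small, Medium, Large).
FCN_STDC1 = {
    "iou": [0.9576, 0.6266, 0.4706, 0.7476],
    "precision": [0.9712, 0.7735, 0.9022, 0.8588],
    "recall": [0.9855, 0.7674, 0.4958, 0.8524],
}


def macro_average(values):
    """Unweighted mean over classes: every class, including background, counts equally."""
    return sum(values) / len(values)


summary = {name: round(macro_average(vals), 2) for name, vals in FCN_STDC1.items()}

# Approximate inference time per frame implied by the reported throughput.
ms_per_frame = 1000.0 / 27.8
print(summary, f"{ms_per_frame:.1f} ms/frame")
# → {'iou': 0.7, 'precision': 0.88, 'recall': 0.78} 36.0 ms/frame
```

A macro average lets the large, easy background class contribute as much as each canopy-size class, so it sits well above the small- and medium-canopy IoUs; a pixel-weighted average would be pulled even further toward the background score.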

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, T.; Kang, S.M.; Kim, N.R.; Kim, H.R.; Han, X. Comparative Analysis of CNN-Based Semantic Segmentation for Apple Tree Canopy Size Recognition in Automated Variable-Rate Spraying. Agriculture 2025, 15, 789. https://doi.org/10.3390/agriculture15070789
