Abstract

It is challenging to achieve accurate tea bud detection in optical images with complex backgrounds because the foregrounds and backgrounds of these images are difficult to distinguish. Several studies have distinguished foregrounds and backgrounds implicitly through various attention mechanisms, but explicit distinction between them has seldom been explored. Inspired by recent successful applications of the Segment Anything Model (SAM) in computer vision, this study proposes a SAM-assisted dual-branch YOLOv8 model named SD-YOLOv8 for tea bud detection, which explicitly distinguishes foregrounds from backgrounds. The SD-YOLOv8 model mainly consists of two key components: (1) the SAM-based foreground segmenter (SFS), which generates foreground masks of tea bud images without any training, and (2) the heterogeneous feature extractor, which captures color features in optical images and edge features in foreground masks in parallel. The experimental results show that the proposed SD-YOLOv8 significantly improves the performance of tea bud detection through the explicit distinction between foregrounds and backgrounds. The mean Average Precision (mAP) of the SD-YOLOv8 model reaches 86.0%, surpassing YOLOv8 (mAP 81.6%) by 4.4 percentage points and outperforming recent object detection models, including Faster R-CNN (mAP 60.7%), DETR (mAP 64.6%), YOLOv5 (mAP 72.4%), and YOLOv7 (mAP 80.6%). This demonstrates its superior capability in detecting tea buds against complex backgrounds. Additionally, this study contributes a self-built tea bud dataset covering three seasons to address the data shortage in tea bud detection.

1. Introduction

As one of the top three beverages globally, tea has a great impact worldwide [1]. Firstly, the popularity of tea stems from its nutritional value: tea is rich in vitamins and polyphenols, so its consumption contributes to good health. Secondly, the tea trade has played a central role in the development of international trade, and the global tea market is growing rapidly. Thirdly, a wide range of social drinking activities reflecting various tea cultures have been passed down [2]. With increasing consumer demand, tea production has also risen significantly [3]. Although tea cultivation is a relatively mature activity, the technology applied to the picking and management of tea buds remains limited [4]. Existing tea bud picking machines used in production mainly pick tea buds in a coarse-grained way and cannot automatically distinguish the grades of tea buds [5]. This is because these machines fundamentally lack the visual perception capabilities required for morphology-based quality grading, which is a core requirement for the automated picking of high-quality tea. Consequently, research into methods for accurately picking tea buds has become imperative.

Early tea bud detection strategies mainly relied on unsupervised algorithms and traditional machine learning models. Zhao et al. [6] utilized handcrafted color features and a threshold-based algorithm to segment tea buds. Zhang et al. [7] employed an unsupervised corner detection method to locate picking points. Shao et al. [8] used color features and K-means clustering to extract tea buds. Karunasena et al. [9] explored the effectiveness of a support vector machine (SVM) and gradient features in tea bud detection. However, these unsupervised algorithms and traditional machine learning models depend on manually designed features, so their adaptivity and robustness are relatively weak [10].

With the development of convolutional neural networks (CNNs) [11], researchers in computer vision have proposed a series of deep learning-based generic object detectors [12], which can adaptively extract robust image features through supervised training. The strong capability of deep learning has been demonstrated in agricultural vision tasks [13]. Thus, many scholars have introduced deep learning-based generic detectors, typified by the You-Only-Look-Once (YOLO) series [14,15,16,17,18,19,20], into the field of tea bud detection [21] to meet the requirements of real-time detection, as the YOLO series models are specifically designed to balance complexity and performance. This is achieved through a single-stage detection framework that eliminates the need for region proposal networks (RPNs), significantly reducing computational overhead. Additionally, YOLO models employ techniques such as grid-based localization and, in many versions, anchor box prediction to further optimize efficiency, enabling high accuracy with low latency.

However, the specific application requirements of tea bud detection make recent generic object detectors not entirely suitable for this task. The detection model in this study is designed for a tea bud picking machine, which, in each picking pass, picks and counts only the tea buds nearest to the camera and then moves to the next position to repeat the process. Because tea bud detection and picking typically rely on optical images taken at close range, the autofocus mechanism used in close-range photography usually leaves regions away from the focused subject blurred [22]: the nearest tea buds appear sharp, while farther tea buds appear blurred. The sharp region is called the foreground and the blurred region is called the background. Since the machine picks tea buds sequentially from front to back, the system should detect and count only the tea buds in the foreground, rather than those in the background, to avoid counting the same bud twice. Therefore, only detections of the nearest tea buds are regarded as true positives, while any detection of a tea bud in the background is regarded as a false positive.

Therefore, several scholars have explored algorithms that integrate attention mechanisms [23] to implicitly highlight the foreground and filter out the background during model inference. Li et al. [24] and Zhu et al. [25] integrated SENet [26] into YOLOv5 [18] to adaptively filter redundant features in the channel dimension of feature maps. Similarly, Jiang et al. [27] incorporated CBAM [28] into YOLOv7 [19] to selectively eliminate redundant features across both channel and spatial dimensions.

Nevertheless, recent tea bud detection models usually focus on implicit distinction while neglecting explicit separation between the foreground and background [29]. As mentioned above, a detection counts as a true positive only when it corresponds to a tea bud in the foreground, whereas a detected tea bud in the background counts as a false positive. Thus, clearly separating the foreground and background regions before detection should reduce the complexity of tea bud detection, because it narrows the model’s search space [30].

Recently, since the Segment Anything Model (SAM) [31] has shown outstanding performance in zero-shot instance segmentation across various scenes [32,33], several studies have explored using SAM to assist semantic segmentation and object detection by reducing the search spaces of deep learning-based models. Inspired by the success of recent SAM-assisted visual models and SAM’s strength in contour perception, this study proposes a SAM-assisted dual-branch YOLOv8 model termed SD-YOLOv8 to explicitly distinguish the foreground and background in tea bud images and further improve the accuracy of tea bud detection.

This study makes three main contributions, which are outlined below:

- First, since a tea bud picking machine only needs to detect tea buds in the foreground, this study explores using SAM to explicitly distinguish the foregrounds and backgrounds of tea bud images and thereby improve the accuracy of tea bud detection.

- Second, a SAM-assisted dual-branch YOLOv8 (SD-YOLOv8) model is proposed to effectively extract heterogeneous features from optical tea bud images and foreground masks.

- Third, to evaluate the performance of the proposed SD-YOLOv8, an annotated self-built tea bud detection dataset of high-resolution optical images is constructed.

2. Methodology

2.1. The Self-Built Tea Bud Detection Dataset

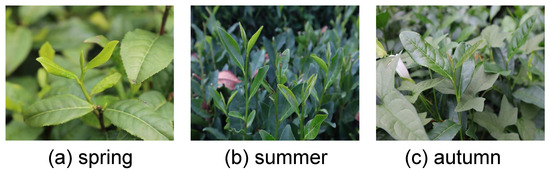

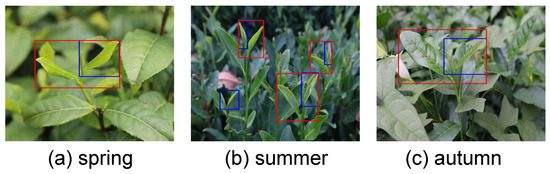

The dataset employed in this experiment is self-built; its images were collected with a handheld SONY ZV-E10L camera (Sony Corporation, Tokyo, Japan) in a tea garden in Hangzhou, China, from April to September 2024. For image acquisition, the camera was equipped with a 15–45 mm zoom lens set to an aperture of f/5.6 and operated in Aperture Priority (Av) mode, which automatically optimizes the exposure parameters. The dataset contains 748 tea bud images captured during spring, summer, and autumn, each with a resolution of 4096 × 3072 pixels, and the images are densely populated with tea buds. The morphology, color, and texture of tea buds differ significantly across seasons. By training on data fused across seasons, the model can capture seasonal variations in tea bud phenotypes, learn a better feature representation, and reduce the bias in the feature distribution that would result from using data from a single season. Owing to the camera’s autofocus capability, the foreground in each image remains sharp while the background appears blurred. In addition, to simulate the viewing angle of the tea bud picking machine, the camera was positioned at angles of 0° to 30° relative to the horizontal, at shooting distances of 20 to 40 cm. A subset of the tea bud images in this dataset is shown in Figure 1. In this study, the tea buds are divided into two categories: “one bud with one leaf” and “one bud with two leaves”. The tea buds were manually annotated with rectangular bounding boxes using the LabelImg annotation tool, as shown in Figure 2. The category “one bud with one leaf” is marked with blue bounding boxes, while “one bud with two leaves” is marked with red bounding boxes.
The label information contains the image filename, the path of the stored image, the image size in pixels, the category name, and the pixel coordinates of each target box for the subsequent object detection tasks. To improve the generalization ability of the model, the original dataset was augmented to 3745 tea bud images by applying Gaussian noise with a standard deviation (σ) of 0.05 and image rotations within a range of . The augmented dataset was randomly divided into training, validation, and test sets in a ratio of 6:2:2 for model training and testing. The dataset details are presented in Table 1.
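The augmentation and 6:2:2 split described above can be illustrated with a minimal NumPy sketch; it is not the authors’ pipeline. It assumes images are float arrays scaled to [0, 1] (so σ = 0.05 is relative to that range), and because the rotation range is not stated, it uses 90° rotations via `numpy.rot90` purely as a placeholder. The helper names (`add_gaussian_noise`, `rotate_quarter_turns`, `split_6_2_2`) are hypothetical.

```python
import random

import numpy as np


def add_gaussian_noise(image, sigma=0.05, rng=None):
    """Add zero-mean Gaussian noise to an image scaled to [0, 1], then clip to that range."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)


def rotate_quarter_turns(image, k):
    """Rotate an H x W x C image by k * 90 degrees (placeholder for the unstated rotation range)."""
    return np.rot90(image, k=k, axes=(0, 1))


def split_6_2_2(items, seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists in a 6:2:2 ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 6 // 10  # integer arithmetic avoids float rounding surprises
    n_val = len(items) * 2 // 10
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


rng = np.random.default_rng(0)
image = rng.random((64, 48, 3))                  # stand-in for a resized tea bud image
print(add_gaussian_noise(image, rng=rng).shape)  # (64, 48, 3)
print(rotate_quarter_turns(image, k=1).shape)    # (48, 64, 3)
train, val, test = split_6_2_2(range(3745))
print(len(train), len(val), len(test))           # 2247 749 749
```

One design option worth noting: splitting the 748 original photos first and augmenting each subset afterwards keeps augmented copies of the same photo from landing in different subsets.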

Figure 1.

Illustration of collected optical tea bud images.

Figure 2.

Annotation instances of tea bud images.

Table 1.

The initial dataset and post-augmentation dataset.

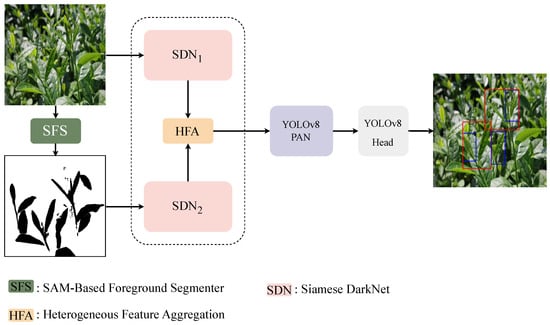

2.2. The SAM-Assisted Dual-Branch YOLOv8 (SD-YOLOv8)

The SD-YOLOv8 is a YOLOv8-based single-stage tea bud detection model whose architecture is illustrated in Figure 3. It primarily comprises four components: (1) the SAM-based foreground segmenter (SFS), (2) the heterogeneous feature extractor (HFE), (3) the path aggregation network (PAN) from the original YOLOv8, and (4) the detection head (DH) from the original YOLOv8. The forward computation of SD-YOLOv8 involves four main steps. Firstly, the SFS takes an optical image of tea buds as input and outputs a binary foreground mask. Secondly, the HFE takes the optical image and the foreground mask as input and produces three feature maps. Thirdly, the PAN takes these three feature maps as input and outputs three scale-wise feature maps. Finally, the DH takes the three scale-wise feature maps as input and outputs N predicted bounding boxes, where N is the total number of detected bounding boxes. The overall procedure of SD-YOLOv8 can be formulated as follows:
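In notation introduced here for illustration only (not necessarily the symbols of the original formulation), with $I$ the optical image, $M$ the binary foreground mask, $F_1, F_2, F_3$ the HFE outputs, $P_1, P_2, P_3$ the PAN outputs, and $\hat{B}$ the set of $N$ predicted boxes, the four steps can be written as:

```latex
\begin{aligned}
M &= \mathrm{SFS}(I), \\
(F_1, F_2, F_3) &= \mathrm{HFE}(I, M), \\
(P_1, P_2, P_3) &= \mathrm{PAN}(F_1, F_2, F_3), \\
\hat{B} = \{\hat{b}_j\}_{j=1}^{N} &= \mathrm{DH}(P_1, P_2, P_3).
\end{aligned}
```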

Figure 3.

The feed-forward procedure and core components of SD-YOLOv8.

Furthermore, since the PAN module and DH module employed in the SD-YOLOv8 directly follow the design of the original YOLOv8, the subsequent sections provide detailed descriptions only for the SFS and HFE.

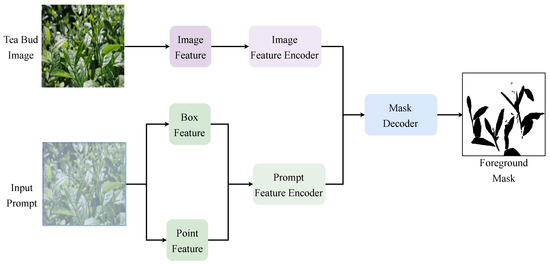

2.3. The SAM-Based Foreground Segmenter

SAM [31] is a large pre-trained, promptable image segmentation model trained on the SA-1B dataset, which contains 11 million images and over 1 billion masks. Owing to this pre-training, SAM has three notable capabilities that have been demonstrated across various visual tasks. Firstly, SAM can take an image and a prompt as inputs and output instance masks without any additional training. Secondly, it adapts well to images from a wide range of scenes. Thirdly, SAM is sensitive to the contours of instances as long as they are sufficiently clear. Leveraging these capabilities, SAM is employed to generate binary masks that distinguish between the foreground and background in tea bud images.

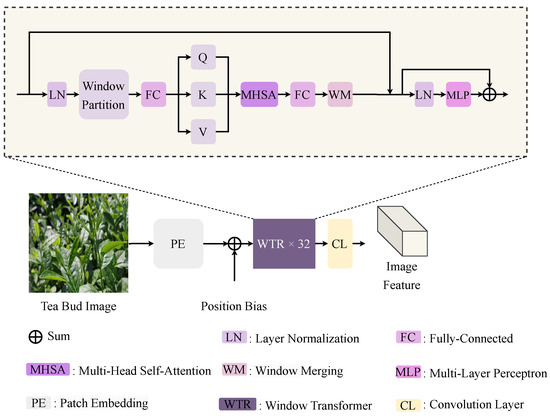

The SFS (illustrated in Figure 4) is a refined variant of SAM, designed to reduce redundancy while retaining critical details so that fine-grained edges are captured without excessive fragmentation. It encompasses three core components: (1) a pre-trained global feature encoder (GFE) with a Patch Embedding (PE) module and 32 Window Transformer (WTR) modules, (2) a pre-trained prompt feature encoder (PFE) with a Point Embedding (PTE) module and a Bounding Box Embedding (BBE) module, and (3) a pre-trained mask decoder (MD). SAM supports three types of prompts (point, bounding box, and text) to guide the segmentation of regions and instances. Because subsequent processing only needs a binary foreground mask of the whole tea bud image, this study uses 1024 evenly distributed points together with a bounding box covering the whole image as prompts, without text prompts. As shown in Figure 4, the SFS inference procedure proceeds as follows. Firstly, the GFE processes the input image and outputs the global feature map. Next, the PFE takes the prompts as input and outputs the prompt feature map. Subsequently, the MD predicts a binary foreground mask, in which white regions indicate foregrounds and black regions represent backgrounds. The SFS procedure can be formulated as follows:
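The prompt set described above (1024 evenly distributed points plus a whole-image box) can be generated as in the following sketch. It assumes the 1024 points form a 32 × 32 grid centred in equal cells; this matches the default `points_per_side=32` of `SamAutomaticMaskGenerator` in the public segment-anything package, but how the SFS actually lays out and batches its prompts is not specified here. The function name `build_prompt_grid` is hypothetical.

```python
import numpy as np


def build_prompt_grid(width, height, points_per_side=32):
    """Return (points, box): a (points_per_side**2, 2) array of (x, y) pixel coordinates
    spread evenly over the image, and a whole-image box [x0, y0, x1, y1]."""
    offset = 1.0 / (2 * points_per_side)                 # centre each point in its grid cell
    ticks = np.linspace(offset, 1.0 - offset, points_per_side)
    xs, ys = np.meshgrid(ticks, ticks)                   # normalized coordinates in (0, 1)
    points = np.stack([xs.ravel() * width, ys.ravel() * height], axis=1)
    box = np.array([0.0, 0.0, float(width), float(height)])
    return points, box


points, box = build_prompt_grid(4096, 3072)              # resolution of the dataset images
print(points.shape)                                       # (1024, 2)
```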

Figure 4.

The feed-forward procedure and core components of the SAM-based foreground segmenter.

2.3.1. The Global Feature Encoder in SFS

As illustrated in Figure 5, the GFE comprises a Patch Embedding (PE) module and 32 Window Transformer (WTR) modules. In the PE module, the input image is first divided into 1600 image patches by a sliding window (SW) [34] of the same size as the patches, which slides globally over the spatial dimensions of the image with a stride of 16. Each image patch, indexed by its serial number m, is then transformed into an embedding vector through a fully connected (FC) layer. These embedding vectors are concatenated to form an embedding matrix E. Finally, the initial feature map is obtained by adding a position bias B to E. The computation of the initial feature map in the patch embedding module is formulated as follows:
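A minimal NumPy sketch of the patch embedding follows, assuming 16 × 16 patches and a 640 × 640 input; a 40 × 40 grid yields the 1600 patches mentioned above, but the input size itself is an assumption. The embedding width of 64, the random weights, and the name `patch_embed` are hypothetical. Flattening non-overlapping tiles and applying an FC layer is equivalent to a convolution whose kernel size and stride both equal the patch size, which is how the public SAM code implements its patch embedding.

```python
import numpy as np


def patch_embed(image, weight, bias, pos_bias, patch=16):
    """Cut an H x W x C image into non-overlapping patch x patch tiles (stride = patch),
    flatten each tile, project it with an FC layer (matrix E), and add a position bias B."""
    h, w, c = image.shape
    gh, gw = h // patch, w // patch
    tiles = image[:gh * patch, :gw * patch].reshape(gh, patch, gw, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4).reshape(gh * gw, patch * patch * c)  # row-major patches
    embeddings = tiles @ weight + bias                   # E: one embedding vector per patch
    return embeddings + pos_bias                         # initial feature map = E + B


rng = np.random.default_rng(0)
dim = 64                                                 # illustrative embedding width
image = rng.random((640, 640, 3))                        # assumed input size -> 1600 patches
weight = rng.normal(0, 0.02, (16 * 16 * 3, dim))
bias = np.zeros(dim)
pos_bias = rng.normal(0, 0.02, (1600, dim))
features = patch_embed(image, weight, bias, pos_bias)
print(features.shape)                                    # (1600, 64)
```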

Figure 5.

The structure of GFE. The PE module is employed to extract the local features, the WTR module aims to extract the global contexts, and the CL module is used for feature downsampling.

Subsequently, the initial feature map is transformed into the global feature map through 32 serial WTR modules, which are designed to extract global contextual information from the input image; this configuration follows the original design of SAM. In each WTR module, the input feature map X undergoes the following processes:

(1) Layer Normalization: X is first processed by a Layer Normalization (LN) module.

(2) Patch Partitioning: The normalized feature map is then partitioned into 36 patches using a sliding window (SW) that moves globally across the spatial dimensions of the feature map with a stride of 14; each patch has the size of the sliding window.

(3) Linear Projection: Each patch m is processed through a fully connected (FC) layer. Since the FC layer is a linear computation, its three output matrices, the query, key, and value matrices, are linear projections of the patch.

(4) Multi-Head Self-Attention: A Multi-Head Self-Attention (MHSA) layer [35], followed by another FC layer, takes these three projections as input and outputs a context matrix for each patch.

(5) Window Merging: The context matrices of all patches are concatenated in the spatial dimension via a Window Merging (WM) module, forming the global context matrix G.

(6) Feature Fusion: To balance global contexts and local details, the input feature map X and the global context matrix G are summed to produce a temporary feature map.

(7) Channel Enhancement: Finally, to enhance features in the channel dimension, the temporary feature map is processed through a residual module consisting of Layer Normalization (LN) and a Multi-Layer Perceptron (MLP).

Given an input X, the calculations for the output Y in each WTR module can be formulated as follows:
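Steps (1) to (7) can be sketched in NumPy as one window Transformer block. This is a simplified illustration under stated assumptions, not SAM’s implementation: learnable LayerNorm scale/shift, projection biases, and any relative position terms are omitted, the weights are random, and the window size of 14 and 4 heads are assumptions. The number of windows depends on the feature-map size and padding (a 40 × 40 map padded to 42 × 42 gives 9 windows, not 36). All function names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Normalize over the last (channel) axis; learnable scale/shift omitted."""
    mu = x.mean(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)

def gelu(x):
    """Tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def window_partition(x, win):
    """Zero-pad an H x W x C map to multiples of win; return (num_windows, win*win, C)."""
    h, w, c = x.shape
    hp, wp = h + (-h) % win, w + (-w) % win
    x = np.pad(x, ((0, hp - h), (0, wp - w), (0, 0)))
    x = x.reshape(hp // win, win, wp // win, win, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, win * win, c), (hp, wp)

def window_merge(windows, win, padded_hw, hw):
    """Inverse of window_partition: stitch windows back together and crop the padding."""
    hp, wp = padded_hw
    c = windows.shape[-1]
    x = windows.reshape(hp // win, wp // win, win, win, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(hp, wp, c)[: hw[0], : hw[1]]

def wtr_block(x, p, win=14, heads=4):
    """LN, window partition, Q/K/V projections, multi-head self-attention with an output
    FC layer, window merge, residual sum, and a residual LN + MLP."""
    h, w, c = x.shape
    windows, padded = window_partition(layer_norm(x), win)            # steps (1) and (2)
    n, t, _ = windows.shape
    d = c // heads

    def split_heads(m):                                                 # (n, t, c) -> (n, heads, t, d)
        return m.reshape(n, t, heads, d).transpose(0, 2, 1, 3)

    q, k, v = (split_heads(windows @ p[name]) for name in ("wq", "wk", "wv"))  # step (3)
    attn = softmax(q @ k.transpose(0, 1, 3, 2) / np.sqrt(d))           # step (4)
    ctx = (attn @ v).transpose(0, 2, 1, 3).reshape(n, t, c) @ p["wo"]
    g = window_merge(ctx, win, padded, (h, w))                          # step (5): G
    x_tmp = x + g                                                       # step (6)
    return x_tmp + gelu(layer_norm(x_tmp) @ p["w1"]) @ p["w2"]          # step (7)

rng = np.random.default_rng(0)
c = 32
params = {name: rng.normal(0, 0.05, (c, c)) for name in ("wq", "wk", "wv", "wo")}
params["w1"] = rng.normal(0, 0.05, (c, 4 * c))
params["w2"] = rng.normal(0, 0.05, (4 * c, c))
x = rng.normal(size=(40, 40, c))                                        # e.g. a 40 x 40 token grid
y = wtr_block(x, params)
print(y.shape)                                                          # (40, 40, 32)
```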

2.3.2. Prompt Encoder of SAM

As illustrated in Figure 6, since the prompts encompass both a point vector and a bounding box vector, the Prompt Feature Encoder (PFE) primarily comprises two parallel modules: a Point Embedding (PTE) module and a Bounding Box Embedding (BBE) module. In the PTE module, the point vector is first normalized through a Coordinate Normalization Layer (CNL). The Gaussian representation of the points is derived by computing the inner product of a Gaussian matrix, which is learned from the data, and the normalized point vector. The relative position matrix of the points is subsequently extracted using trigonometric functions and channel concatenation. Lastly, the point feature map is calculated as the sum of the relative position matrix and a learnable embedding matrix.

Figure 6.

The feed-forward procedure and core components of PFE.

The BBE follows a transformation process similar to that of the PTE. In the BBE module, the input bounding box vector is first normalized through the Coordinate Normalization Layer (CNL). Thereafter, the Gaussian representation of the bounding box is derived by computing the inner product of the Gaussian matrix and the normalized bounding box vector. The relative position matrix of the bounding box is subsequently extracted using trigonometric functions and channel concatenation. Finally, the bounding box feature map is computed as the sum of this relative position matrix and a learnable embedding matrix.

The prompt feature map is then computed as the sum of the point feature map and the bounding box feature map.
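The PTE and BBE computations can be sketched as below: coordinate normalization, projection by a Gaussian matrix, sin/cos channel concatenation, and the addition of a learnable embedding. This mirrors the random Fourier positional encoding used in SAM’s prompt encoder, but it is only an illustration. Here the Gaussian matrix is sampled once at random (the text describes it as learned), the embeddings are zeros, the box is encoded as its two corner points, and the final summation of point and box features is not shown because the text does not specify how the differently shaped maps are aligned. Function names are hypothetical.

```python
import numpy as np


def fourier_encode(coords_xy, image_size, gaussian):
    """Coordinate normalization, Gaussian projection, then sin/cos channel concatenation."""
    w, h = image_size
    unit = coords_xy / np.array([w, h], dtype=float)     # CNL: pixels -> [0, 1]
    centred = 2.0 * unit - 1.0                           # shift to [-1, 1]
    proj = 2.0 * np.pi * (centred @ gaussian)           # inner product with the Gaussian matrix
    return np.concatenate([np.sin(proj), np.cos(proj)], axis=-1)


def embed_prompts(points, box, image_size, gaussian, point_embed, box_embed):
    """PTE and BBE: positional encoding plus a learnable embedding for each prompt type."""
    point_feat = fourier_encode(points, image_size, gaussian) + point_embed  # (N, 2F)
    corners = np.asarray(box, dtype=float).reshape(2, 2)                   # [x0, y0, x1, y1] -> 2 corners
    box_feat = fourier_encode(corners, image_size, gaussian) + box_embed     # (2, 2F)
    return point_feat, box_feat


rng = np.random.default_rng(0)
num_feats = 64
gaussian = rng.normal(size=(2, num_feats))              # sampled once here; learned in the text
points = rng.random((1024, 2)) * np.array([4096.0, 3072.0])
box = [0.0, 0.0, 4096.0, 3072.0]                        # whole-image box prompt
point_feat, box_feat = embed_prompts(points, box, (4096, 3072), gaussian,
                                     np.zeros(2 * num_feats), np.zeros((2, 2 * num_feats)))
print(point_feat.shape, box_feat.shape)                 # (1024, 128) (2, 128)
```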

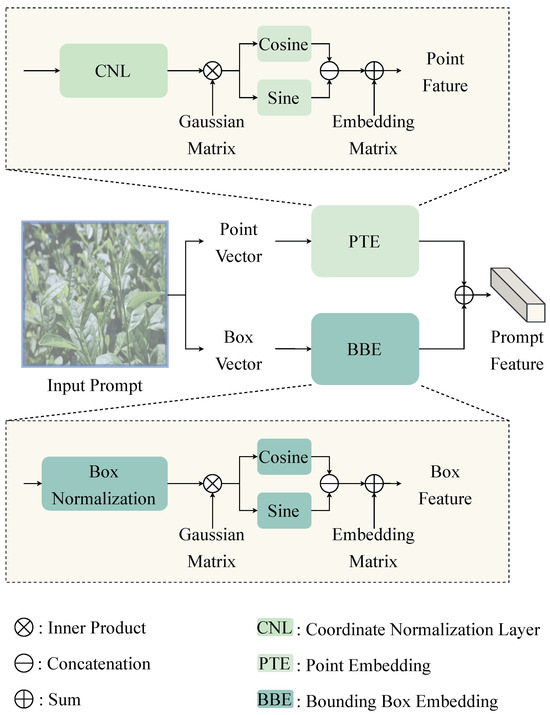

2.3.3. Mask Decoder of SAM

As shown in Figure 7, the mask decoder mainly consists of one convolution layer (CL), four MLP layers, and two transposed convolution layers (TCLs). Initially, to bridge the gap between the prompt feature and the image feature, the prompt feature map is processed by a series of MLPs. After that, to align its dimensions with those of the global image feature, the feature undergoes transposed convolutions. Subsequently, the joint feature is calculated as the inner product of the prompt feature and the global image feature. Following this, the joint feature is resized back to the width and height of the input through the transposed convolutions of three upsampling (US) layers to obtain the instance mask. The upsampling layer is built on a bilinear interpolation operator. The Mask Binarization (MB) layer then applies a fixed threshold of 0.5 to convert the instance mask into a binary mask: the instance segmentation map is traversed pixel by pixel, and each pixel belonging to an instance is labeled as a foreground pixel, while all other pixels are labeled as background pixels. The calculations within the MD module can be formulated as follows:
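The Mask Binarization step can be sketched as follows, assuming the decoder yields one probability map per instance and that the foreground mask is the union of all instance pixels above the 0.5 threshold; the union rule is an interpretation of the pixel-wise description above, and the function names are hypothetical. Thresholding sigmoid probabilities at 0.5 is equivalent to thresholding logits at 0, which is the default `mask_threshold` of the `Sam` class in the public segment-anything code.

```python
import numpy as np


def sigmoid(logits):
    """Convert mask logits to per-pixel probabilities."""
    return 1.0 / (1.0 + np.exp(-logits))


def foreground_from_instances(instance_probs, threshold=0.5):
    """Threshold each instance probability map, then mark a pixel as foreground
    (1, white) if it belongs to any instance, else background (0, black)."""
    instance_masks = instance_probs > threshold          # (num_instances, H, W) boolean
    return instance_masks.any(axis=0).astype(np.uint8)


logits = np.array([[[2.0, -1.0], [-3.0, 0.5]],           # instance 1
                   [[-2.0, -1.0], [4.0, -0.2]]])         # instance 2
mask = foreground_from_instances(sigmoid(logits))
print(mask.tolist())                                     # [[1, 0], [1, 1]]
```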

Figure 7.

The feed-forward procedure and core components of MD.

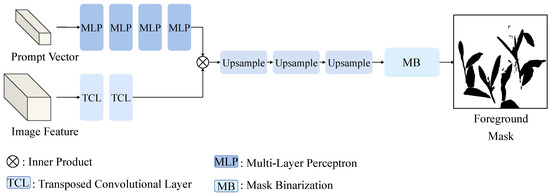

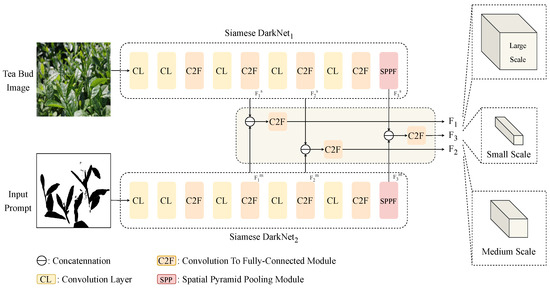

2.4. The Heterogeneous Feature Extractor

As shown in Figure 8, the heterogeneous feature extractor mainly consists of two Siamese DarkNet (SDN) encoders and one heterogeneous feature aggregation (HFA) module. The two SDNs separately extract local semantic features from the tea bud image and local edge features from the foreground mask via two parallel branches. Specifically, the first branch takes the optical image as input and outputs three multi-scale semantic feature maps. In parallel, the second branch takes the foreground mask as input and outputs three multi-scale edge feature maps. The HFA module is designed to fuse the local semantic features and local edge features. The overall procedure of the two SDN encoders and the HFA module can be formulated as follows:

Figure 8.

The feed-forward procedure and core components of HFE.

2.4.1. The Siamese DarkNet Encoder

The two SDN encoders share the same network structure, which contains five convolution layers (CLs), four C2F modules, and one spatial pyramid pooling (SPP) module [36]. The CL layers are designed to extract local features of various patterns within images. Given an input X, the output Y of a CL layer is calculated by Formula 30, $Y = \mathrm{SiLU}(\mathrm{BN}(C(X)))$, where C is a convolution operator, BN is a batch normalization operator, and SiLU is the activation function.

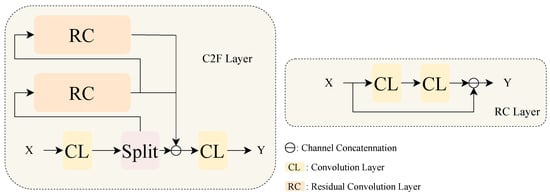

As shown in Figure 9, the C2F modules are designed to strike a balance between low-level features with rich details and high-level features with abstract semantics. This is achieved through CL layers and residual convolution layers. Given an input X, the output Y of a C2F module is calculated by the following steps:

Figure 9.

The feed-forward procedure and core components of the C2F Module.

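(1) Split the input X into two parts, $X_1$ and $X_2$, along the channel dimension using a CL layer: $[X_1, X_2] = \mathrm{Split}(\mathrm{CL}(X))$.

(2) Apply two residual convolution layers (RCL) to $X_2$ in sequence: $X_2' = \mathrm{RCL}(\mathrm{RCL}(X_2))$.

(3) Concatenate $X_1$ with $X_2'$ to obtain the output: $Y = \mathrm{Concat}(X_1, X_2')$.

Thereby, the C2F module maps an input X to the output Y through these three steps.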
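The three C2F steps can be sketched in NumPy as follows. For brevity, every convolution is a 1 × 1 convolution (a per-pixel matrix multiply over channels) with batch normalization assumed folded into the weights. YOLOv8’s residual layers actually use 3 × 3 convolutions, and Ultralytics’ `C2f` also concatenates every intermediate bottleneck output before a final convolution, which this simplified version omits. All names and weights are hypothetical.

```python
import numpy as np


def silu(x):
    return x / (1.0 + np.exp(-x))


def cl(x, weight):
    """CL layer sketch: 1 x 1 convolution (channel matmul) + SiLU; BN assumed folded in."""
    return silu(x @ weight)


def residual_conv(x, w_a, w_b):
    """Residual convolution layer: two CL layers plus an identity shortcut."""
    return x + cl(cl(x, w_a), w_b)


def c2f(x, p):
    """Split-transform-concatenate pattern of steps (1) to (3)."""
    x1, x2 = np.split(cl(x, p["w_in"]), 2, axis=-1)     # step (1): CL, then channel split
    x2 = residual_conv(x2, *p["res1"])                   # step (2): two residual conv layers
    x2 = residual_conv(x2, *p["res2"])
    return np.concatenate([x1, x2], axis=-1)             # step (3): concatenation


rng = np.random.default_rng(0)
c, half = 16, 8
params = {
    "w_in": rng.normal(0, 0.1, (c, c)),
    "res1": (rng.normal(0, 0.1, (half, half)), rng.normal(0, 0.1, (half, half))),
    "res2": (rng.normal(0, 0.1, (half, half)), rng.normal(0, 0.1, (half, half))),
}
x = rng.normal(size=(20, 20, c))                         # H x W x C feature map
print(c2f(x, params).shape)                              # (20, 20, 16)
```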

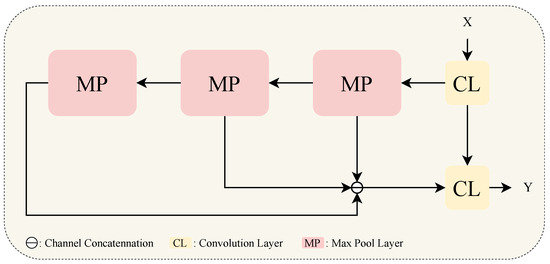

As shown in Figure 10, the SPP module assigns multi-scale receptive fields to semantic features via max pooling layers and CL layers. Given an input X, the output Y of the SPP module is calculated by the following formulas:
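The pooling part of the SPP module can be sketched as below; the CL layers before and after pooling are omitted. The kernel sizes (5, 9, 13) are an assumption (a common choice in earlier YOLO versions). Ultralytics’ YOLOv8 implements this stage as SPPF, which applies one 5 × 5 pool three times in sequence; with stride 1 and −inf padding, stacked 5 × 5 pools reproduce the 9 × 9 and 13 × 13 pools, so both variants give the same output. Function names are hypothetical.

```python
import numpy as np


def max_pool_same(x, k):
    """Stride-1 k x k max pooling on an H x W x C map with -inf padding (keeps H x W)."""
    r = k // 2
    h, w, _ = x.shape
    padded = np.pad(x, ((r, r), (r, r), (0, 0)), constant_values=-np.inf)
    out = np.full(x.shape, -np.inf)
    for dy in range(k):
        for dx in range(k):
            out = np.maximum(out, padded[dy:dy + h, dx:dx + w])
    return out


def spp(x, kernels=(5, 9, 13)):
    """SPP: concatenate the input with parallel max-pooled copies at several receptive fields."""
    return np.concatenate([x] + [max_pool_same(x, k) for k in kernels], axis=-1)


def sppf(x, k=5, repeats=3):
    """SPPF: apply the same k x k pool sequentially; each stage widens the receptive field."""
    outs = [x]
    for _ in range(repeats):
        outs.append(max_pool_same(outs[-1], k))
    return np.concatenate(outs, axis=-1)


x = np.random.default_rng(0).normal(size=(12, 10, 3))
print(spp(x).shape)                                      # (12, 10, 12)
print(np.allclose(spp(x), sppf(x)))                      # True
```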

Figure 10.

The feed-forward procedure and core components of the SPP module.

2.4.2. The Heterogeneous Feature Aggregation

Since the feature maps extracted via two Siamese SDNs represent heterogeneous features from two distinct image types, the HFA module is designed to fuse these features via channel concatenations and three C2F layers. The computational steps of the HFA module can be formulated as follows:
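In notation introduced here for illustration, with $S_i$ the semantic feature maps from the optical-image branch, $E_i$ the edge feature maps from the foreground-mask branch, and $F_i$ the fused outputs, the HFE can be summarized as follows. The sketch assumes one C2F layer per scale and concatenation along the channel dimension:

```latex
\begin{aligned}
(S_1, S_2, S_3) &= \mathrm{SDN}_{\mathrm{opt}}(I), \qquad
(E_1, E_2, E_3) = \mathrm{SDN}_{\mathrm{mask}}(M), \\
F_i &= \mathrm{C2F}_i\big(\mathrm{Concat}(S_i, E_i)\big), \qquad i = 1, 2, 3.
\end{aligned}
```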

3. Experimental Settings

3.1. Evaluation Metrics

To evaluate the performance of SD-YOLOv8 in tea bud detection, this study employs three widely adopted metrics: precision (P), recall (R), and mean average precision (mAP). Precision is the ratio of correctly identified positive samples to the total number of predicted positive samples. Recall is the ratio of correctly identified positive samples to the total number of actual positive samples. TP denotes the number of true positive samples, FP the number of false positive samples, and FN the number of false negative samples. P and R are calculated as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

Furthermore, the average precision (AP) is the area under the precision–recall curve, and mAP is the mean of the AP values over all detected categories. AP and mAP are calculated as follows:

$$AP = \int_0^1 P(r)\,dr, \qquad mAP = \frac{1}{n}\sum_{i=1}^{n} AP_i$$

where $P(r)$ indicates the precision at recall level r, $AP_i$ represents the average precision of the ith category, and n is the number of categories (n = 2 in this study).
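The metric definitions above can be turned into a short pure-Python sketch. It assumes detections have already been matched to ground-truth boxes (for example at an IoU threshold, which is not specified here) and approximates the AP integral with all-point interpolation, that is, the area under the precision–recall curve after making precision monotonically non-increasing. This is one common way to compute AP, not necessarily the exact evaluation code used in this study; the function names are illustrative.

```python
def precision_recall(tp, fp, fn):
    """P = TP / (TP + FP) and R = TP / (TP + FN); a zero denominator gives 0.0."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores, is_tp, num_gt):
    """AP for one category from detection confidences, match flags, and the ground-truth count."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:                                  # sweep the confidence threshold downwards
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    for k in range(len(precisions) - 2, -1, -1):     # precision envelope (non-increasing)
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p                       # rectangle between consecutive recall levels
        prev_r = r
    return ap


def mean_average_precision(ap_per_category):
    return sum(ap_per_category) / len(ap_per_category)


print(precision_recall(3, 1, 1))                                        # (0.75, 0.75)
print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], 4))  # 0.625
print(mean_average_precision([0.625, 0.875]))                           # 0.75
```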

3.2. Settings of Experimental Environment and Model Parameters

The experiments were run on a machine with an AMD Ryzen 7 5800H CPU, an NVIDIA RTX 3060 GPU, and 16 GB of RAM, with GPU acceleration provided through CUDA 11.7. The software environment consisted of the Windows 11 operating system, the Python 3.8 interpreter, and the PyTorch 1.10.0 deep learning library, which supports GPU-accelerated tensor operations. This hardware–software configuration was sufficient for the training requirements of the tea bud detection models.

The model training in this study employed the following hyperparameter configuration: the number of epochs was set to 100 to allow sufficient iterations for feature learning, and the batch size was fixed at 32 to maintain memory efficiency and gradient stability. An initial learning rate of 0.01 was used with the Adam optimizer for adaptive parameter updates, and a momentum coefficient of 0.937 was applied to accelerate convergence. This configuration balanced training speed and accuracy in the tea bud detection experiments.

4. Results and Discussion

4.1. Performance Evaluation of SD-YOLOv8

To evaluate the performance of SD-YOLOv8, this study conducted a comparative analysis with five classic object detection models: Faster-RCNN [37], DETR [38], YOLOv5 [18], YOLOv7 [19], and YOLOv8 [20]. The comparison was based on the test set of this study’s custom-built dataset, specifically designed for tea bud detection.

Table 2 shows that the mean Average Precision (mAP) of the SD-YOLOv8 model reaches 86.0%, surpassing YOLOv8 (mAP 81.6%) by 4.4 percentage points and outperforming current mainstream object detection models, including Faster R-CNN (mAP 60.7%), DETR (mAP 64.6%), YOLOv5 (mAP 72.4%), and YOLOv7 (mAP 80.6%). The performance of Faster R-CNN and DETR is relatively inferior, primarily because of their difficulty in detecting small and densely packed buds. Faster R-CNN relies on a region proposal network (RPN), which may not generate high-quality proposal regions for small targets. DETR uses an end-to-end Transformer architecture that lacks the ability to extract detailed features from small buds. YOLOv5 performs better than Faster R-CNN and DETR thanks to its Bottleneck CSP module [17], which balances low-level and abstract features. YOLOv7 surpasses YOLOv5 by introducing a more efficient detection head, which enhances the capture of multi-level feature information. However, the detection of small-scale tea buds by YOLOv5 is constrained by its reliance on loss function optimization, which results in slower convergence during model training. Meanwhile, YOLOv7’s dependence on conventional label assignment strategies limits its ability to balance positive and negative samples, potentially compromising detection accuracy. YOLOv8 further improves detection capability by introducing new bottleneck structures. The proposed SD-YOLOv8 model exhibits a pronounced performance edge, which is attributed to its dual-branch architecture that simultaneously extracts semantic features and foreground edge features. By taking the SAM-generated mask image and the original RGB image as parallel inputs, SD-YOLOv8 obtains complementary heterogeneous features.
Specifically, the SAM-generated mask image strengthens the edge features of the tea buds, mitigating the problem of blurred edges in tea bud detection, while the RGB image fully retains the biological morphological features of the tea buds in color distribution and surface texture. This multi-modal data fusion strategy enriches the feature information extracted by the model and may also help it generalize across diverse environments. To intuitively display the performance of SD-YOLOv8 in tea bud detection, this study selected tea bud images with varying lighting conditions and angles as visualization samples and compared the results with those of YOLOv5, YOLOv7, YOLOv8, Faster R-CNN, and DETR. As shown in Figure 11, SD-YOLOv8 outperforms the other models. Faster R-CNN and DETR missed some small buds, and DETR in particular failed to recognize some obvious tea bud regions. YOLOv5 produced numerous false detections in images with complex backgrounds. YOLOv7 had difficulty identifying areas with dense buds. Although YOLOv8 improves on the aforementioned models, its detection of small buds remains inadequate. SD-YOLOv8, leveraging its multi-modal data fusion strategy, accurately identifies small buds and detects tea buds accurately against complex backgrounds.

Table 2.

Performance comparison between SD-YOLOv8 and five classic object detection models.

Figure 11.

Illustrations of the tea bud detection results of different models.

4.2. Ablation Analysis

This section analyzes the effectiveness of the SFS and HFE in SD-YOLOv8 for tea bud detection. The standard YOLOv8 model, whose mAP is 81.6%, is used as the baseline. Five ablation experiments were conducted, and the results are shown in Table 3.

Table 3.

Ablation analysis of SD-YOLOv8.

Firstly, to explore the effectiveness of the foreground mask, this study replaced the red (R), green (G), and blue (B) channels of the input tea bud image, one at a time, with the foreground mask generated by the SFS module, while keeping the baseline model unchanged. The mAP is 84.1% for the red channel replacement, 82.9% for the green channel replacement, and 82.8% for the blue channel replacement. All three channel replacements outperform the baseline, which suggests that, despite the loss of some color information, the foreground mask contributes more useful information than any single replaced color channel.

Secondly, to further explore the effectiveness of the foreground mask, this study used a four-channel TIFF image combining the RGB channels with the foreground mask as the input for YOLOv8. Owing to the additional information available, this four-channel input reached an mAP of 83.8%. The four-channel YOLOv8 model outperformed the baseline and the green- and blue-channel replacements, although it remained slightly below the red-channel replacement (84.1%), which suggests that integrating foreground features with color features can enhance tea bud detection.

Finally, SD-YOLOv8 demonstrated superior performance compared with the four-channel YOLOv8 model. This result underscores the benefit of the HFE module, which employs a dual-branch encoder to extract color features and foreground mask features separately. Unlike simple channel concatenation, the dual-branch encoder processes the SAM-generated mask image and the original RGB image in parallel, so that the heterogeneous features complement each other.
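The two single-branch ablation inputs (channel replacement and the four-channel RGB + mask image) can be built as in the following sketch. It assumes the foreground mask is stored in the same 0 to 255 range as the image, with white (255) marking foreground; writing the four-channel array to a TIFF file is not shown, and the detector’s first convolution must accept four input channels for the stacked variant. Function names are hypothetical.

```python
import numpy as np


def replace_channel(rgb, mask, channel):
    """Channel-replacement input: overwrite channel 0 (R), 1 (G) or 2 (B) with the mask."""
    out = rgb.copy()
    out[..., channel] = mask
    return out


def stack_rgbm(rgb, mask):
    """Four-channel input: append the foreground mask as a fourth channel (H x W x 4)."""
    return np.concatenate([rgb, mask[..., None]], axis=-1)


rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(4, 5, 3), dtype=np.uint8)   # stand-in for a tea bud image
mask = np.zeros((4, 5), dtype=np.uint8)
mask[1:3, 1:4] = 255                                           # white = foreground
print(replace_channel(rgb, mask, 0).shape)                    # (4, 5, 3)
print(stack_rgbm(rgb, mask).shape)                            # (4, 5, 4)
```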

4.3. Feature Visualization

To illustrate how SD-YOLOv8 detects tea buds, this study visualized the models’ attention with heatmaps. As shown in Figure 12, different colors represent different levels of importance: warmer regions receive more attention from the model and are more significant to the detection process, while cooler regions receive less attention and contribute little to detection. Table 4 provides quantitative evaluation data for YOLOv8 and SD-YOLOv8 in the foreground and background discrimination task. It can be seen that the proposed model pays almost no attention to the background regions, whereas YOLOv8 focuses more on the background. Correspondingly, this visualization demonstrates that SD-YOLOv8 targets tea buds precisely with a heightened focus.

Figure 12.

Comparative feature visualization of SD-YOLOv8.

Table 4.

Quantitative evaluation of foreground and background attention for YOLOv8 and SD-YOLOv8.

4.4. Analysis of Foreground and Background Distinguishing Techniques

The ablation analysis has already confirmed the effectiveness of the SAM-based explicit distinction between foreground and background. This subsection further evaluates its advantages over two attention mechanism-based implicit distinction methods, SENet and CBAM. As shown in Table 5 and Figure 13, SD-YOLOv8 outperforms both SE-YOLOv8 and CBAM-YOLOv8, indicating that the SAM-based explicit distinction of foreground and background is more advantageous than attention mechanism-based implicit distinction.
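For contrast with the explicit SAM mask, the sketch below shows the core of a squeeze-and-excitation (SE) block, the implicit mechanism behind SE-YOLOv8: each channel is globally average-pooled, the resulting descriptor passes through a small bottleneck MLP, and a sigmoid turns it into per-channel weights, with no foreground mask involved. CBAM extends this idea with a max-pooled descriptor in its channel branch and an additional spatial attention map. In this sketch the weights are hand-picked, biases are omitted, and feature maps are plain nested lists; how the block was inserted into YOLOv8 in this study is not shown.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # C x H x W as nested lists
Weights = List[List[float]]           # rows = output units, columns = input units


def squeeze(x: FeatureMap) -> List[float]:
    """Global average pooling: one descriptor per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def excite(z: List[float], w1: Weights, w2: Weights) -> List[float]:
    """Bottleneck MLP (C -> C/r -> C) with ReLU, then sigmoid gates in (0, 1).
    Biases are omitted for brevity."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w2]
    return [1.0 / (1.0 + math.exp(-s)) for s in logits]


def se_block(x: FeatureMap, w1: Weights, w2: Weights) -> FeatureMap:
    """Rescale every channel of x by its gate; the gates are learned, not mask-driven."""
    gates = excite(squeeze(x), w1, w2)
    return [[[v * g for v in row] for row in ch] for ch, g in zip(x, gates)]


if __name__ == "__main__":
    x = [[[1.0, 3.0]], [[0.0, 0.0]]]  # 2 channels, each 1 x 2
    w1 = [[1.0, 0.0]]                 # C = 2 -> C/r = 1 (r = 2), hand-picked
    w2 = [[1.0], [-1.0]]              # C/r = 1 -> C = 2, hand-picked
    print(squeeze(x))                 # [2.0, 0.0]
    print(se_block(x, w1, w2))
```

Because the gates depend only on pooled channel statistics, any foreground and background separation must emerge indirectly from training, which is the "implicit" distinction compared against the SAM mask.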

Table 5.

Quantitative evaluation of various foreground and background distinguishing methods.

Figure 13.

Illustrations of the effectiveness of various foreground and background distinguishing methods.

5. Conclusions

This study first proposes a Segment Anything Model-based foreground segmenter (SFS), designed to explicitly distinguish foreground and background in tea bud images through zero-shot instance segmentation. Second, it proposes a Heterogeneous Feature Extractor (HFE) that captures heterogeneous features from optical tea bud images and foreground masks in parallel. Third, by integrating SFS and HFE, this study presents a novel model named SAM-assisted dual-branch YOLOv8 (SD-YOLOv8), which efficiently leverages the foreground information in tea bud images. The experimental results show that the SAM-based explicit distinction of foreground and background is more advantageous than attention mechanism-based implicit distinction. Furthermore, comparative evaluations show that SD-YOLOv8 achieves the best overall tea bud detection performance among the compared models, outperforming Faster R-CNN, DETR, YOLOv5, YOLOv7, and the baseline YOLOv8. Additionally, to address the shortage of datasets for tea bud detection, this study constructs a self-built, annotated dataset of high-resolution optical images.

Although the self-built dataset in this article covers multiple seasons, the number of images is relatively limited, and most images come from tea gardens in Hangzhou, China. This may restrict the model’s generalization ability when it is applied to images from other regions or captured under different environmental conditions. To address this limitation, future work should expand the dataset’s diversity by increasing the number of images and incorporating data from a wider range of geographical locations and environmental scenarios. In addition, the proposed model currently lacks an explicit occlusion-aware learning mechanism. Although occlusion handling is not the primary focus of this study, it is a critical challenge in practical tea bud counting applications, so future work should explore dedicated solutions that improve the model’s robustness and adaptability to occlusion. For instance, incorporating real tea leaves and twigs as occluders into the training data could enhance the model’s ability to handle natural occlusions. Alternatively, integrating attention mechanisms such as CBAM (Convolutional Block Attention Module) could enable the model to focus on visible tea bud regions while suppressing interference from occluded areas.
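The occluder-based augmentation suggested above could be prototyped as a copy-paste step: a cropped leaf or twig with a binary alpha mask is pasted onto a training image, and the visible fraction of each tea bud box is recomputed so that labels for almost fully hidden buds can be dropped. This is a minimal sketch under stated assumptions: images are nested lists, the occluder crop and its alpha mask are assumed to come from a separate source, and the 0.3 visibility threshold is hypothetical rather than a value from this study.

```python
from typing import List, Tuple

Image = List[List[List[float]]]  # H x W x C as nested lists
Box = Tuple[int, int, int, int]  # x1, y1, x2, y2 (x2 and y2 exclusive)


def paste_occluder(image: Image, occluder: Image, alpha: List[List[int]],
                   top: int, left: int) -> Tuple[Image, List[List[int]]]:
    """Paste an occluder (e.g. a cropped leaf) wherever alpha == 1.
    Returns a new augmented image and a binary map of occluded pixels."""
    h, w = len(image), len(image[0])
    out = [[list(px) for px in row] for row in image]  # copy; the input stays untouched
    occ = [[0] * w for _ in range(h)]
    for dy, (orow, arow) in enumerate(zip(occluder, alpha)):
        for dx, (px, a) in enumerate(zip(orow, arow)):
            y, x = top + dy, left + dx
            if a and 0 <= y < h and 0 <= x < w:  # clip at the image border
                out[y][x] = list(px)
                occ[y][x] = 1
    return out, occ


def visible_fraction(box: Box, occ: List[List[int]]) -> float:
    """Share of a bounding box's pixels that remain unoccluded."""
    x1, y1, x2, y2 = box
    area = (x2 - x1) * (y2 - y1)
    if area <= 0:
        return 0.0
    hidden = sum(occ[y][x] for y in range(y1, y2) for x in range(x1, x2))
    return 1.0 - hidden / area


def filter_boxes(boxes: List[Box], occ: List[List[int]],
                 min_visible: float = 0.3) -> List[Box]:
    """Drop labels that become mostly hidden; the 0.3 threshold is hypothetical."""
    return [b for b in boxes if visible_fraction(b, occ) >= min_visible]


if __name__ == "__main__":
    image = [[[0.0] for _ in range(4)] for _ in range(4)]  # 4 x 4, one channel
    leaf = [[[9.0], [9.0]], [[9.0], [9.0]]]                # 2 x 2 occluder patch
    aug, occ = paste_occluder(image, leaf, [[1, 1], [1, 1]], top=0, left=0)
    print(visible_fraction((0, 0, 2, 2), occ))              # 0.0
    print(visible_fraction((0, 0, 4, 4), occ))              # 0.75
    print(filter_boxes([(0, 0, 2, 2), (0, 0, 4, 4)], occ))  # [(0, 0, 4, 4)]
```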

Author Contributions

X.Z.: Data curation, methodology, software, validation, visualization, writing – original draft; D.W.: Conceptualization, data curation, funding acquisition, supervision, writing – review and editing; F.X.: Investigation, project administration, resources, writing – original draft. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Zhejiang Forestry Science and Technology Project (Grant No. 2023SY08). The work was also supported by the Open Research Fund of the Engineering Research Center of Intelligent Technology for Agriculture, Ministry of Education (Grant No. ERCITA-KF003).

Data Availability Statement

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Xu, Q.; Yang, Y.; Hu, K.; Chen, J.; Djomo, S.N.; Yang, X.; Knudsen, M.T. Economic, environmental, and emergy analysis of China’s green tea production. Sustain. Prod. Consum. 2021, 28, 269–280.

- Heiss, M.; Heiss, R. The Story of Tea: A Cultural History and Drinking Guide; Cookery, Food and Drink Series; Clarkson Potter/Ten Speed: Berkeley, CA, USA, 2007.

- Guan, J. The Tea Industry in Modern China and Public Demand for Tea. In Making Food in Local and Global Contexts; Nobayashi, A., Ed.; Springer: Singapore, 2022; pp. 173–185.

- Huang, B.; Zou, S. Tea Image Recognition and Research on Structure of Tea Picking End-Effector. J. Electron. Cool. Therm. Control. 2024, 13, 51–60.

- Zhang, X.; Wu, Z.; Cao, C.; Luo, K.; Qin, K.; Huang, Y.; Cao, J. Design and operation of a deep-learning-based fresh tea-leaf sorting robot. Comput. Electron. Agric. 2023, 206, 107664.

- Zhao, B.; Wei, D.; Sun, W.; Liu, Y.; Wei, K. Research on tea bud identification technology based on HSI/HSV color transformation. In Proceedings of the 2019 6th International Conference on Information Science and Control Engineering (ICISCE), Shanghai, China, 20–22 December 2019; IEEE: New York, NY, USA, 2019; pp. 511–515.

- Zhang, L.; Zou, L.; Wu, C.; Chen, J.; Chen, H. Locating Famous Tea’s Picking Point Based on Shi-Tomasi Algorithm. Comput. Mater. Contin. 2021, 69, 1109–1122.

- Shao, P.; Wu, M.; Wang, X.; Zhou, J.; Liu, S. Research on the tea bud recognition based on improved k-means algorithm. MATEC Web Conf. 2018, 232, 03050.

- Karunasena, G.; Priyankara, H. Tea bud leaf identification by using machine learning and image processing techniques. Int. J. Sci. Eng. Res. 2020, 11, 624–628.

- Meng, J.; Wang, Y.; Zhang, J.; Tong, S.; Chen, C.; Zhang, C.; An, Y.; Kang, F. Tea Bud and Picking Point Detection Based on Deep Learning. Forests 2023, 14, 1188.

- Nebauer, C. Evaluation of convolutional neural networks for visual recognition. IEEE Trans. Neural Netw. 1998, 9, 685–696.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Comparison of different computer vision methods for vineyard canopy detection using UAV multispectral images. Comput. Electron. Agric. 2024, 225, 109277.

- Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 2778–2788.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Xu, W.; Zhao, L.; Li, J.; Shang, S.; Ding, X.; Wang, T. Detection and classification of tea buds based on deep learning. Comput. Electron. Agric. 2022, 192, 106547.

- Wang, S.; Wu, D.; Zheng, X. TBC-YOLOv7: A refined YOLOv7-based algorithm for tea bud grading detection. Front. Plant Sci. 2023, 14, 1223410.

- Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- Li, Y.; Ma, R.; Zhang, R.; Cheng, Y.; Dong, C. A Tea Buds Counting Method Based on YOLOv5 and Kalman Filter Tracking Algorithm. Plant Phenomics 2023, 5, 0030.

- Zhu, L.; Zhang, Z.; Lin, G.; Chen, P.; Li, X.; Zhang, S. Detection and Localization of Tea Bud Based on Improved YOLOv5s and 3D Point Cloud Processing. Agronomy 2023, 13, 2412.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Jiang, K.; Xie, T.; Yan, R.; Wen, X.; Li, D.; Jiang, H.; Jiang, N.; Feng, L.; Duan, X.; Wang, J. An attention mechanism-improved YOLOv7 object detection algorithm for hemp duck count estimation. Agriculture 2022, 12, 1659.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Liu, Z.; Zhuo, L.; Dong, C.; Li, J. YOLO-TBD: Tea Bud Detection with Triple-Branch Attention Mechanism and Self-Correction Group Convolution. Ind. Crop. Prod. 2025, 226, 120607.

- Xia, C.; Zhang, H.; Gao, X.; Li, K. Exploiting background divergence and foreground compactness for salient object detection. Neurocomputing 2020, 383, 194–211.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4015–4026.

- Zhang, M.; Wang, Y.; Guo, J.; Li, Y.; Gao, X.; Zhang, J. IRSAM: Advancing Segment Anything Model for Infrared Small Target Detection. In Proceedings of the Computer Vision—ECCV 2024, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 233–249.

- Zhang, Y.; Yin, M.; Bi, W.; Yan, H.; Bian, S.; Zhang, C.H.; Hua, C. ZISVFM: Zero-Shot Object Instance Segmentation in Indoor Robotic Environments With Vision Foundation Models. IEEE Trans. Robot. 2025, 41, 1568–1580.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).