A Variable-Threshold Segmentation Method for Rice Row Detection Considering Robot Travelling Prior Information

Abstract

1. Introduction

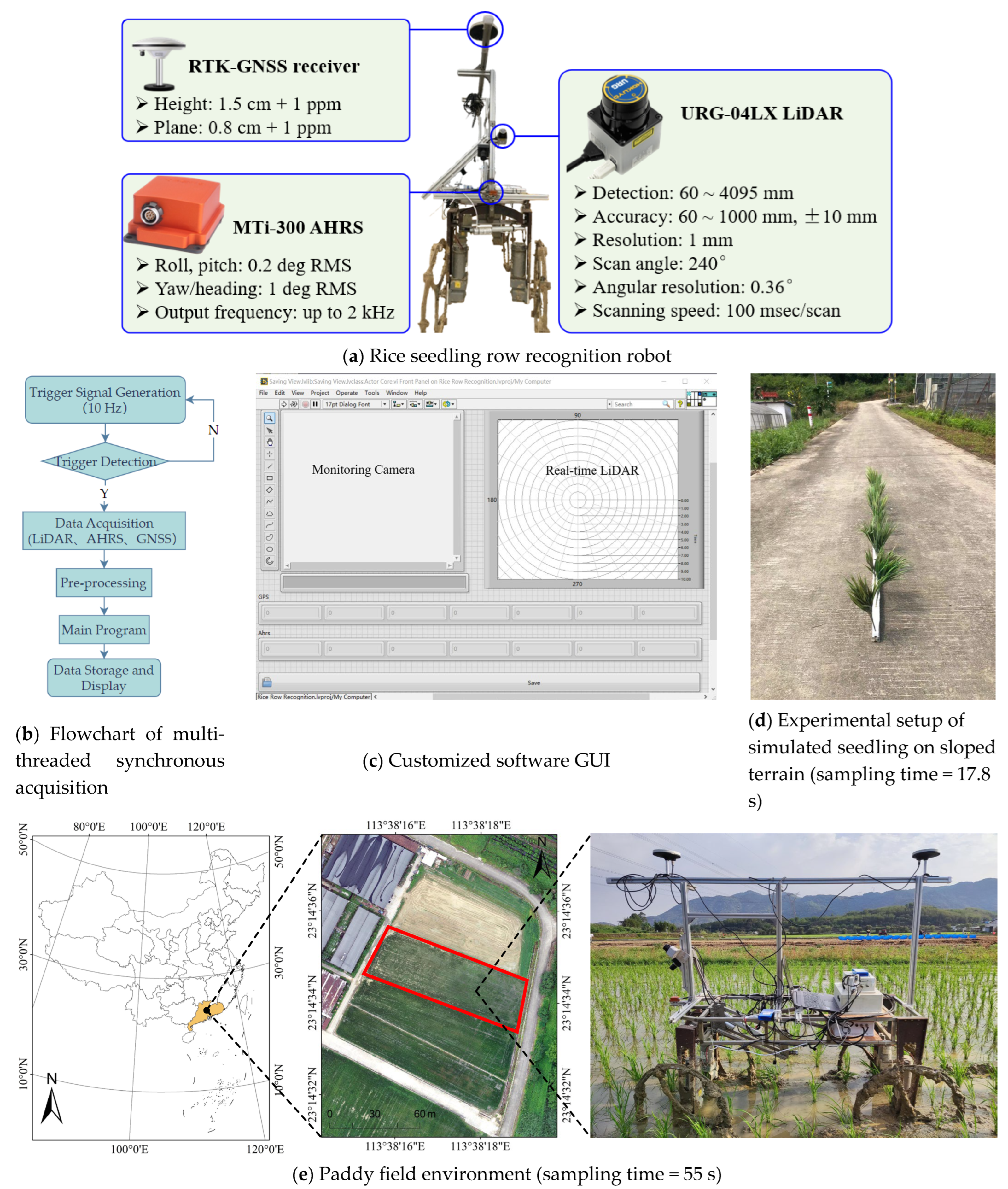

2. Materials and Methods

2.1. Three-Dimensional Point Cloud Acquisition and Preprocessing

2.1.1. LiDAR and AHRS Sensor Coordinate Calibration

- (1) Conversion of LiDAR Coordinate System to Vehicle Coordinate System

- (2) Conversion of the Vehicle Coordinate System to the Geodetic Coordinate System
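The two conversions named above are rigid-body transforms of the form p' = R p + t. The following is a minimal sketch, not the calibration used in the paper. It assumes a Z-Y-X (yaw, pitch, roll) Euler convention for the AHRS attitude and a local level frame standing in for the geodetic coordinate system. The function names, the mounting rotation and offset, and the vehicle position are all hypothetical.

```python
import math

def rotation_zyx(roll, pitch, yaw):
    """Return R = Rz(yaw) * Ry(pitch) * Rx(roll) as a 3x3 nested list (radians)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def transform(point, rotation, translation):
    """Apply p' = R p + t to one 3D point given as (x, y, z)."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )

def lidar_to_geodetic(point, mount_rotation, mount_offset, attitude, vehicle_position):
    """Chain LiDAR -> vehicle -> local level frame for one point.

    mount_rotation / mount_offset: assumed fixed pose of the LiDAR on the vehicle.
    attitude: (roll, pitch, yaw) in radians, assumed to come from the AHRS.
    vehicle_position: assumed vehicle origin expressed in the local level frame.
    """
    in_vehicle = transform(point, mount_rotation, mount_offset)
    roll, pitch, yaw = attitude
    return transform(in_vehicle, rotation_zyx(roll, pitch, yaw), vehicle_position)
```

With an identity mounting rotation, a 0.5 m vertical mounting offset, and a 90° yaw, a point 1 m ahead of the LiDAR ends up 1 m along the second horizontal axis from the vehicle position, at 0.5 m height.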

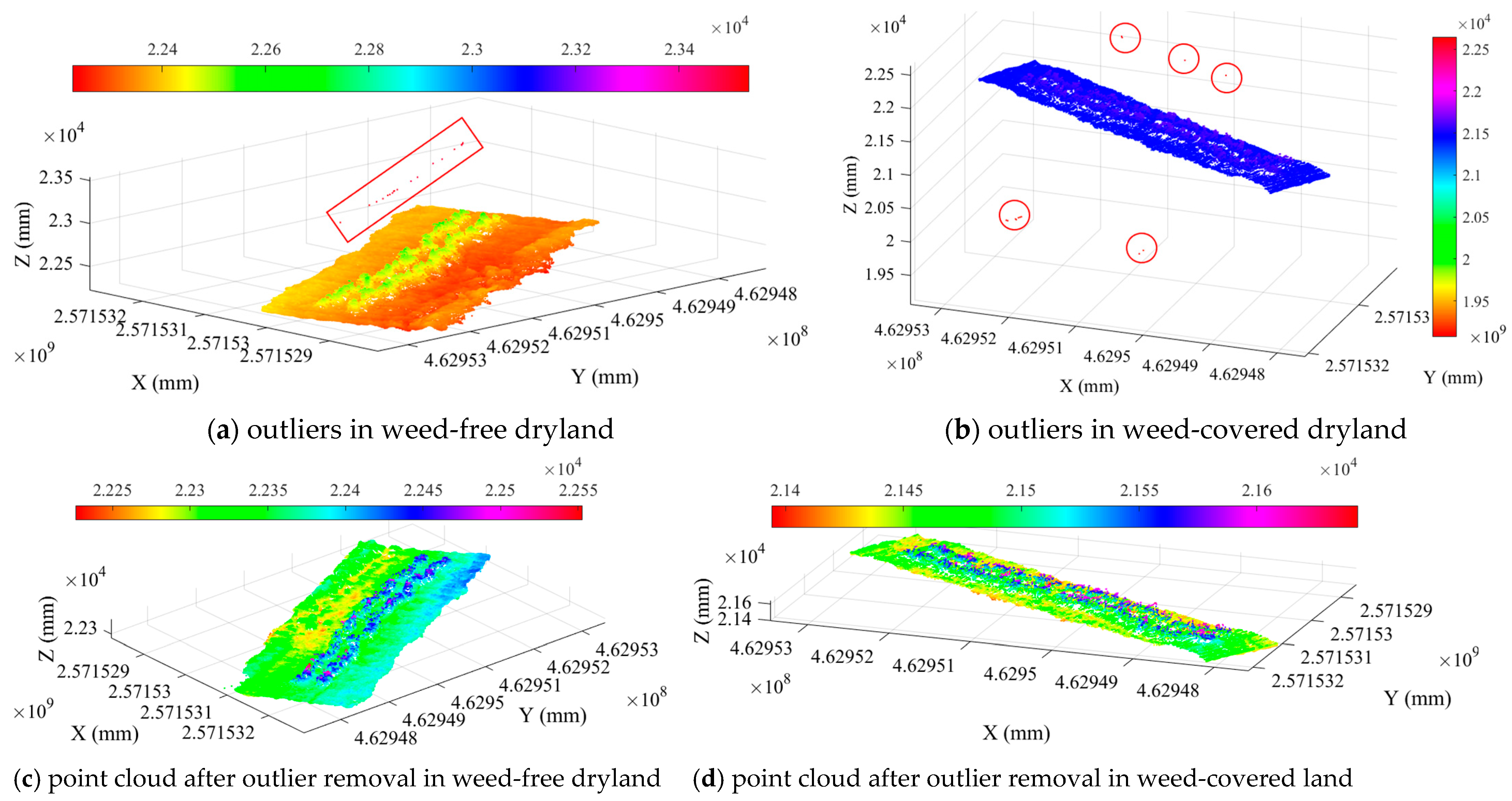

2.1.2. Outlier Elimination Based on the Pauta Criterion
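The Pauta criterion (the 3σ rule) treats a measurement as an outlier when it deviates from the sample mean by more than three standard deviations. The sketch below is a minimal single-pass version. It assumes the rule is applied to one scalar per point, such as point height, which this outline does not specify. The function name and the `k` parameter are hypothetical.

```python
import statistics

def pauta_filter(values, k=3.0):
    """Keep values within k sample standard deviations of the mean (Pauta / 3-sigma rule)."""
    mean = statistics.fmean(values)
    sigma = statistics.stdev(values)  # sample standard deviation, needs >= 2 values
    return [v for v in values if abs(v - mean) <= k * sigma]

# Hypothetical heights in metres: 20 plausible readings plus one spurious return.
heights = [0.10, 0.11, 0.09, 0.10, 0.12, 0.08, 0.10, 0.11, 0.09, 0.10] * 2 + [5.0]
cleaned = pauta_filter(heights)
```

Because the outlier inflates both the mean and the standard deviation, the rule needs a reasonably large sample to be effective. With roughly ten or fewer values, no single point can exceed three sample standard deviations. Repeating the filter until nothing is removed is a common variant.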

2.2. Rice Row Recognition Method Based on Multi-Sensor Information

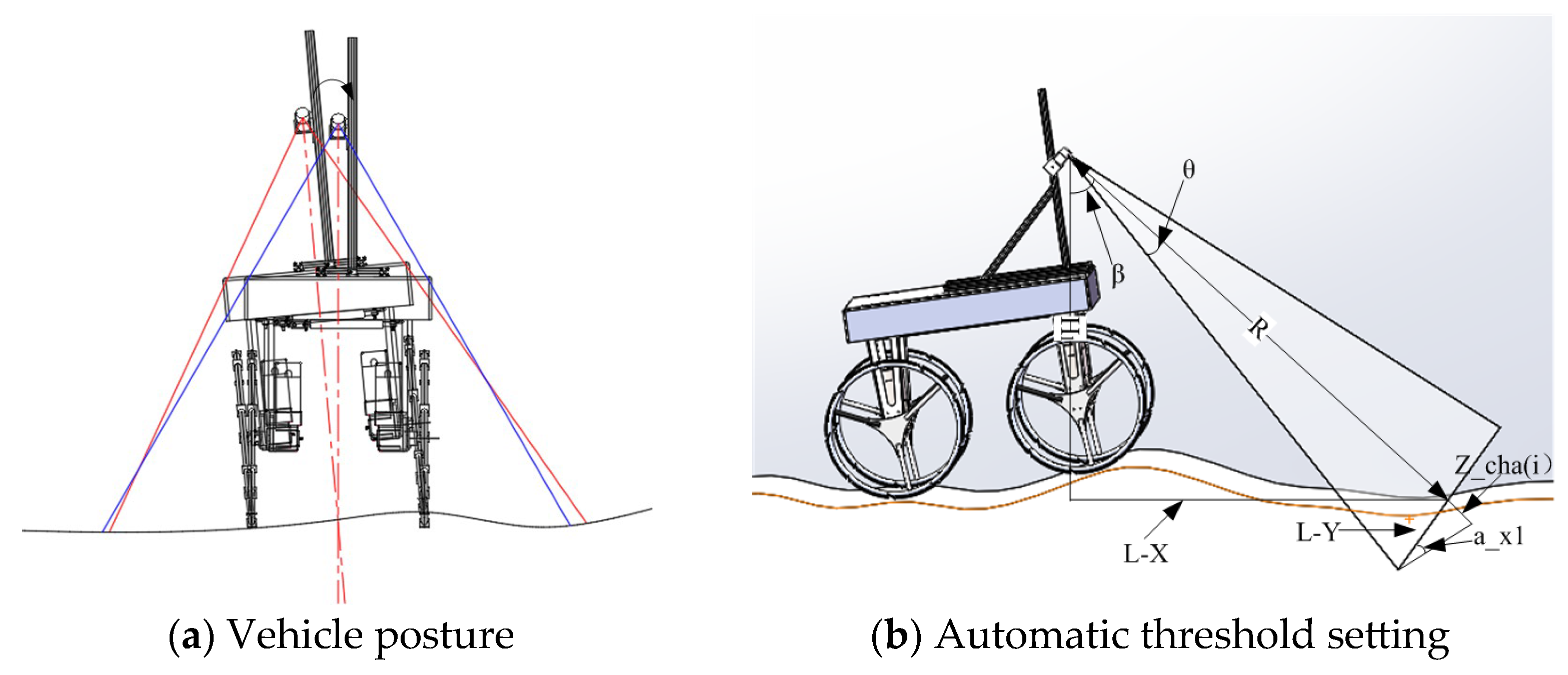

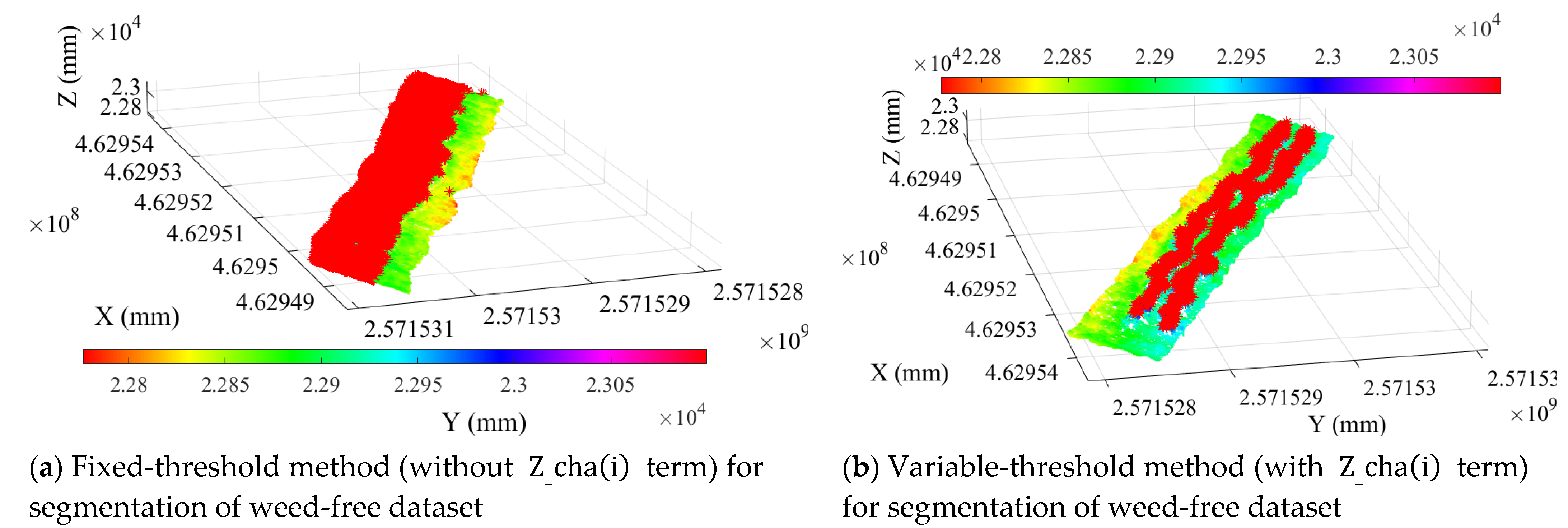

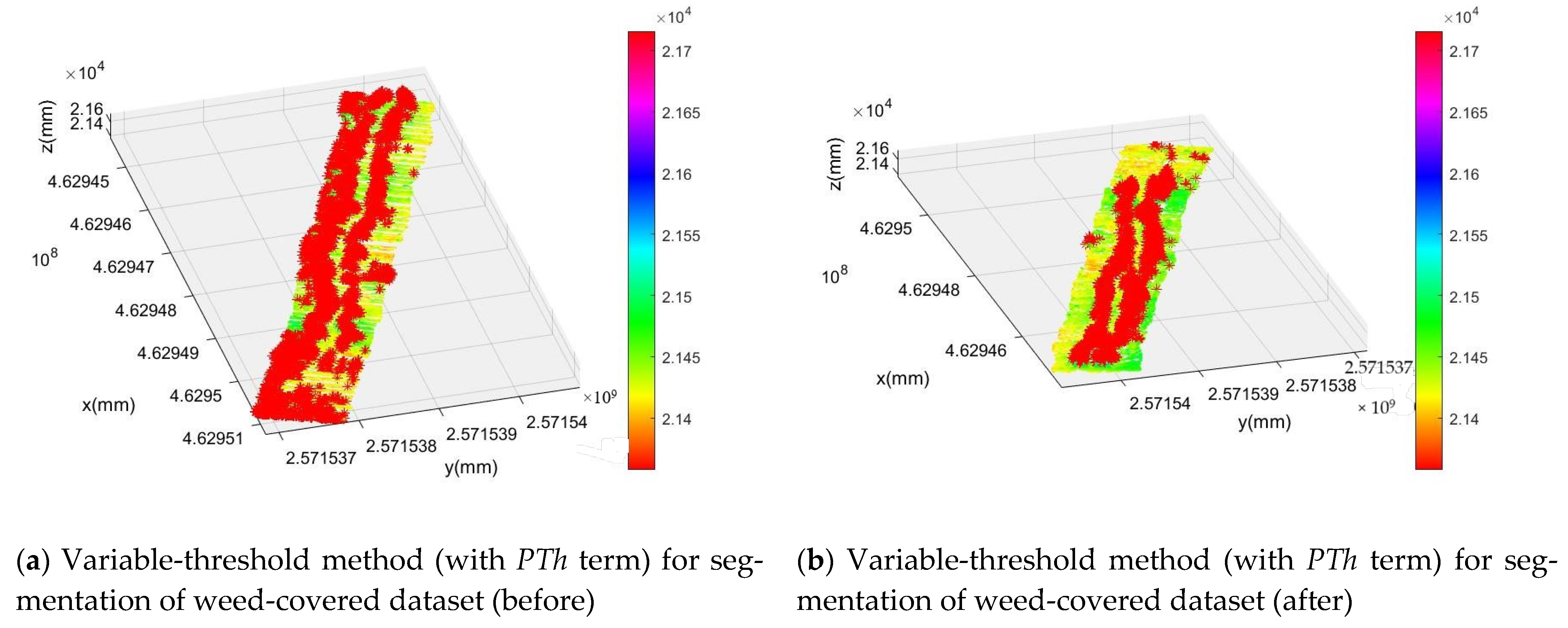

2.2.1. Variable-Threshold Object Segmentation Method Based on Posture Perception

2.2.2. Rice Row Cluster Method Based on Prior Information

- (1)

- Extraction of the Center Point of Rice Rows

- Threshold Setting

- Data Classification

- Center Point Extraction

- (2)

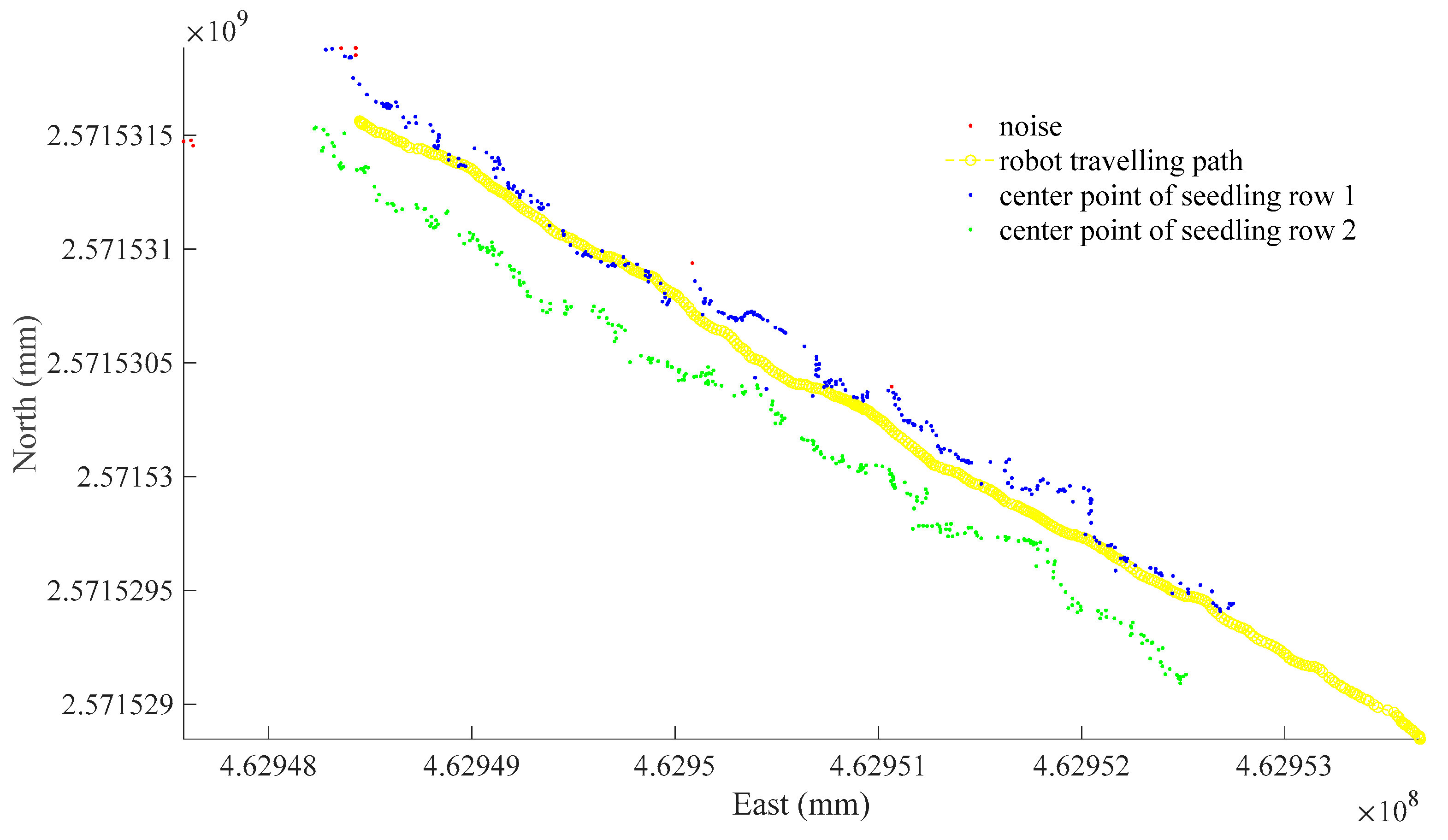

- Center Point Classification Based on Robot Travelling Path

- Horizontal Distance Calculation

- Point Clustering

- Elimination of Small Weed Interference
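The steps listed under (2) can be illustrated with a simple one-dimensional grouping. This is only a sketch under stated assumptions, not the clustering rule of the paper. It assumes the travelling path is a straight line defined by an origin and a heading, that center points belonging to one row lie at similar signed horizontal distances from that line, and that small weeds show up as groups with very few points. The function names and the `gap` and `min_points` values are hypothetical.

```python
import math

def signed_distance(point, path_origin, heading):
    """Signed horizontal distance from a point to the travel line (left of travel is positive)."""
    dx = point[0] - path_origin[0]
    dy = point[1] - path_origin[1]
    return -dx * math.sin(heading) + dy * math.cos(heading)

def cluster_by_distance(points, path_origin, heading, gap=0.15, min_points=3):
    """Group points by distance to the path, then drop groups smaller than min_points.

    gap: assumed largest distance jump (m) allowed inside one row.
    min_points: assumed smallest group size accepted as a rice row.
    """
    ranked = sorted(points, key=lambda p: signed_distance(p, path_origin, heading))
    clusters, current, previous = [], [], None
    for p in ranked:
        d = signed_distance(p, path_origin, heading)
        if previous is not None and d - previous > gap:
            clusters.append(current)  # distance jump: start a new group
            current = []
        current.append(p)
        previous = d
    if current:
        clusters.append(current)
    return [c for c in clusters if len(c) >= min_points]

# Hypothetical center points: two rows at about +/-0.3 m and one isolated weed.
centers = [(0.0, 0.30), (1.0, 0.31), (2.0, 0.29),
           (0.0, -0.30), (1.0, -0.29), (2.0, -0.31),
           (1.0, 0.0)]
rows = cluster_by_distance(centers, path_origin=(0.0, 0.0), heading=0.0)
```

Sorting by distance keeps the grouping independent of how far along the path each point was observed, which is the role the travelling path plays as prior information in this sketch.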

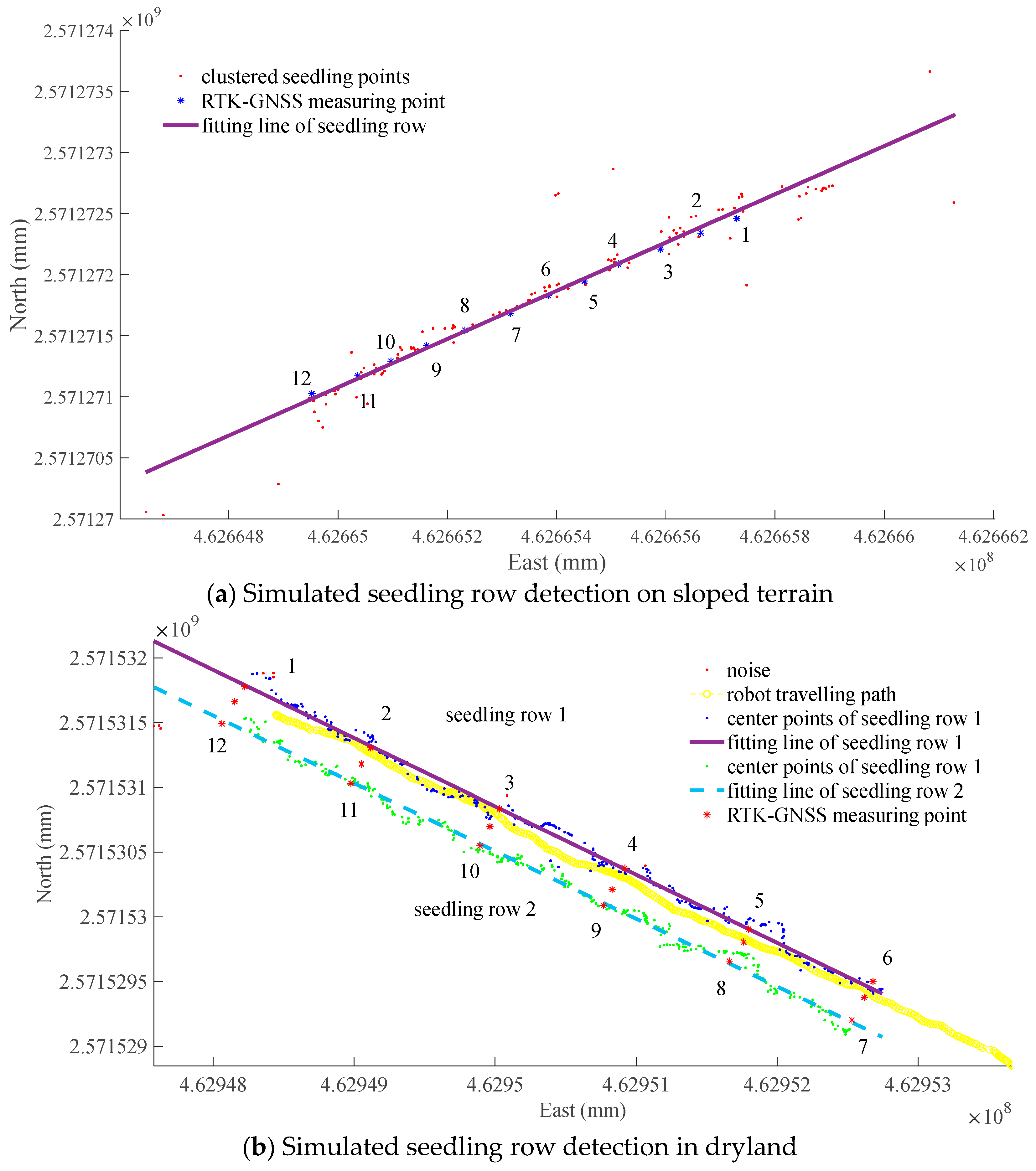

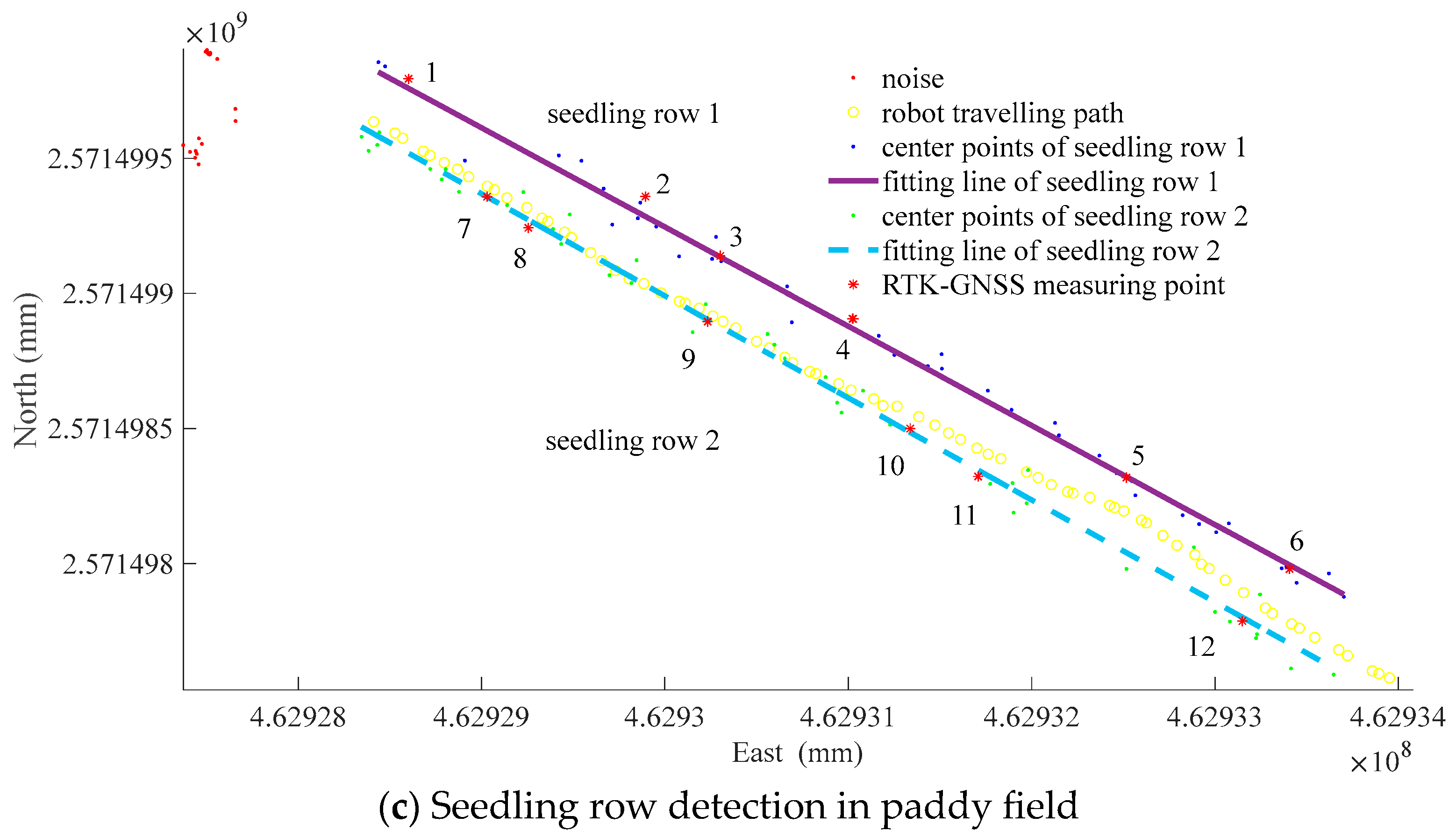

3. Experiment and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Dehghanpir, S.; Bazrafshan, O.; Nadi, S.; Jamshidi, S. Assessing the sustainability of Agricultural Water Use Based on Water Footprints of wheat and rice production. In Sustainability and Water Footprint; Springer: Cham, Switzerland, 2024; Volume 10, pp. 57–82.

- Bwire, D.; Saito, H.; Sidle, R.C.; Nishiwaki, J. Water management and hydrological characteristics of paddy-rice fields under alternate wetting and drying irrigation practice as climate smart practice: A review. Agronomy 2024, 14, 1421.

- Zhang, L.; Zhang, F.; Zhang, K.; Liao, P.; Xu, Q. Effect of agricultural management practices on rice yield and greenhouse gas emissions in the rice–wheat rotation system in China. Sci. Total Environ. 2024, 916, 170307.

- Peng, S.; Zheng, C.; Yu, X. Progress and challenges of rice ratooning technology in China. Crop Environ. 2023, 2, 5–11.

- Chen, Q. China Agricultural Mechanization Yearbook; China Agricultural Science and Technology Press: Beijing, China, 2020.

- He, Y.; Jiang, H.; Fang, H.; Wang, Y.; Liu, Y. Research progress of intelligent obstacle detection methods of vehicles and their application on agriculture. Trans. Chin. Soc. Agric. Eng. 2018, 34, 21–32.

- Ji, Y.; Xu, H.; Zhang, M.; Li, S.; Cao, R.; Li, H. Design of Point Cloud Acquisition System for Farmland Environment Based on LiDAR. Trans. Chin. Soc. Agric. Mach. 2019, 50, 1–7.

- Ji, C.; Zhou, J. Current Situation of Navigation Technologies for Agricultural Machinery. Trans. Chin. Soc. Agric. Mach. 2014, 45, 44–54.

- Zhang, Q.; Chen, M.E.S.; Li, B. A visual navigation algorithm for paddy field weeding robot based on image understanding. Comput. Electron. Agric. 2017, 143, 66–78.

- Kaizu, Y.; Imou, K. A dual-spectral camera system for paddy rice seedling row detection. Comput. Electron. Agric. 2008, 63, 49–56.

- Zhang, X.; Li, X.; Zhang, B.; Zhou, J.; Tian, G.; Xiong, Y.; Gu, B. Automated robust crop-row detection in maize fields based on position clustering algorithm and shortest path method. Comput. Electron. Agric. 2018, 154, 165–175.

- Jing, G.; Yang, X.; Wang, Z.; Liu, H. Crop rows detection based on image characteristic point and particle swarm optimization-clustering algorithm. Trans. Chin. Soc. Agric. Eng. 2017, 33, 165–170.

- Choi, K.H.; Han, S.K.; Han, S.H.; Park, K.H.; Kim, K.S.; Kim, S. Morphology-based guidance line extraction for an autonomous weeding robot in paddy fields. Comput. Electron. Agric. 2015, 113, 266–274.

- Wang, S.; Dai, X.; Xu, N.; Zhang, P. Overview on Environment Perception Technology for Unmanned Ground Vehicle. J. Chang. Univ. Sci. Technol. (Nat. Sci. Ed.) 2017, 40, 1–6.

- Chateau, T.; Debain, C.; Collange, F.; Trassoudaine, L.; Alizon, J. Automatic guidance of agricultural vehicles using a laser sensor. Comput. Electron. Agric. 2000, 28, 243–257.

- Barawid, O.C., Jr.; Mizushima, A.; Ishii, K.; Noguchi, N. Development of an Autonomous Navigation System using a Two-dimensional Laser Scanner in an Orchard Application. Biosyst. Eng. 2007, 96, 139–149.

- Hiremath, S.A.; Van Der Heijden, G.W.; Van Evert, F.K.; Stein, A.; Ter Braak, C.J. Laser range finder model for autonomous navigation of a robot in a maize field using a particle filter. Comput. Electron. Agric. 2014, 100, 41–50.

- Zhang, Y.; Zhou, J. Laser radar based orchard trunk detection. J. China Agric. Univ. 2015, 20, 249–255.

- Hämmerle, M.; Höfle, B. Effects of reduced terrestrial LiDAR point density on high-resolution grain crop surface models in precision agriculture. Sensors 2014, 14, 24212–24230.

- Keightley, K.E.; Bawden, G.W. 3D volumetric modeling of grapevine biomass using Tripod LiDAR. Comput. Electron. Agric. 2010, 74, 305–312.

- Xue, J.; Dong, S.; Fan, B. Detection of Obstacles Based on Information Fusion for Autonomous Agricultural Vehicles. Trans. Chin. Soc. Agric. Mach. 2018, 49, 36–41.

- Peng, Y.; Qu, D.; Zhong, Y.; Xie, S.; Luo, J.; Gu, J. The obstacle detection and obstacle avoidance algorithm based on 2-D LiDAR. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; IEEE: New York, NY, USA, 2015.

- Malavazi, F.B.P.; Guyonneau, R.; Fasquel, J.B.; Lagrange, S.; Mercier, F. LiDAR-only based navigation algorithm for an autonomous agricultural robot. Comput. Electron. Agric. 2018, 154, 71–79.

- Liu, W.; Li, W.; Feng, H.; Xu, J.; Yang, S.; Zheng, Y.; Liu, X.; Wang, Z.; Yi, X.; He, Y.; et al. Overall integrated navigation based on satellite and lidar in the standardized tall spindle apple orchards. Comput. Electron. Agric. 2024, 216, 108489.

- Liu, Y.; He, Y.; Noboru, N. Development of a collision avoidance system for agricultural airboat based on laser sensor. J. Zhejiang Univ. (Agric. Life Sci.) 2018, 44, 431–439.

- Colaço, A.F.; Trevisan, R.G.; Molin, J.P.; Rosell-Polo, J.R.; Escolà, A. A Method to Obtain Orange Crop Geometry Information Using a Mobile Terrestrial Laser Scanner and 3D Modeling. Remote Sens. 2017, 9, 763.

- Garrido, M.; Paraforos, D.S.; Reiser, D.; Vázquez Arellano, M.; Griepentrog, H.W.; Valero, C. 3D Maize Plant Reconstruction Based on Georeferenced Overlapping LiDAR Point Clouds. Remote Sens. 2015, 7, 17077–17096.

- Reiser, D.; Vázquez-Arellano, M.; Paraforos, D.S.; Garrido-Izard, M.; Griepentrog, H.W. Iterative individual plant clustering in maize with assembled 2D LiDAR data. Comput. Ind. 2018, 99, 42–52.

- Zhao, R.; Hu, L.; Luo, X.; Zhou, H.; Du, P.; Tang, L.; He, J.; Mao, T. A novel approach for describing and classifying the unevenness of the bottom layer of paddy fields. Comput. Electron. Agric. 2019, 162, 552–560.

- He, J.; Zang, Y.; Luo, X.; Zhao, R.; He, J.; Jiao, J. Visual detection of rice rows based on Bayesian decision theory and robust regression least squares method. Int. J. Agric. Biol. Eng. 2021, 14, 199–206.

- Hu, L.; Lin, C.; Luo, X.; Yang, W.; Xu, Y.; Zhou, H.; Zhang, Z. Design and experiment on auto leveling control system of agricultural implements. Trans. Chin. Soc. Agric. Eng. 2015, 31, 15–20.

- Balduzzi, M.A.; Van der Zande, D.; Stuckens, J.; Verstraeten, W.W.; Coppin, P. The Properties of Terrestrial Laser System Intensity for Measuring Leaf Geometries: A Case Study with Conference Pear Trees (Pyrus communis). Sensors 2011, 11, 1657–1681.

- Li, B.; Fang, Z.; Ren, J. Extraction of Building’s Feature from Laser Scanning Data. Geomat. Inf. Sci. Wuhan Univ. 2003, 28, 65–70.

- Guan, Y.L.; Liu, S.T.; Zhou, S.J.; Zhang, L.; Lu, T. Robust Plane Fitting of Point Clouds Based On TLS. J. Geod. Geodyn. 2011, 31, 80–83.

- Reiser, D.; Miguel, G.; Arellano, M.V.; Griepentrog, H.W.; Paraforos, D.S. Crop row detection in maize for developing navigation algorithms under changing plant growth stages. In Proceedings of the Robot 2015: Second Iberian Robotics Conference, Lisbon, Portugal, 19–21 November 2015; Springer: Cham, Switzerland, 2016.

- Wang, C. Selection of smoothing coefficient via exponential smoothing algorithm. J. North Univ. China (Nat. Sci. Ed.) 2006, 27, 4.

- Li, S.; Liu, K. Quadric Exponential Smoothing Model with Adapted Parameter and Its Applications. Syst. Eng. Theory Pract. 2004, 24, 94–99.

- Zhang, S.; Ma, Q.; Cheng, S.; An, D.; Yang, Z.; Ma, B.; Yang, Y. Crop row detection in the middle and late periods of maize under sheltering based on solid state LiDAR. Agriculture 2022, 12, 2011.

- Yang, Y.; Ma, Q.; Chen, Z.; Wen, X.; Zhang, G.; Zhang, T.; Dong, X.; Chen, L. Real-time extraction of the navigation lines between sugarcane ridges using LiDAR. Trans. Chin. Soc. Agric. Eng. 2022, 38, 8.

Absolute error (mm) of seedling position recognition at each RTK-GNSS measuring point:

| Scenario | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sloped terrain | 27.42 | 21.82 | 15.86 | 1.81 | 9.72 | 5.03 | 9.40 | 4.12 | 9.67 | 9.86 | 10.09 | 19.55 |
| Dryland | 15.18 | 14.70 | 0.86 | 10.57 | 4.89 | 59.41 | 27.04 | 10.87 | 13.31 | 16.59 | 16.41 | 16.09 |
| Paddy field | 32.60 | 69.36 | 4.28 | 33.79 | 3.34 | 13.81 | 7.01 | 0.53 | 27.66 | 13.48 | 21.69 | 13.17 |
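The per-point absolute errors tabulated above can be condensed into one mean and one maximum per scenario. The snippet below is a small worked example that uses only the tabulated values. The dictionary keys and the `summarize` helper are hypothetical names chosen for illustration.

```python
from statistics import fmean

# Absolute errors (mm) at RTK-GNSS measuring points 1-12, copied from the table.
ABS_ERROR_MM = {
    "sloped terrain": [27.42, 21.82, 15.86, 1.81, 9.72, 5.03,
                       9.40, 4.12, 9.67, 9.86, 10.09, 19.55],
    "dryland": [15.18, 14.70, 0.86, 10.57, 4.89, 59.41,
                27.04, 10.87, 13.31, 16.59, 16.41, 16.09],
    "paddy field": [32.60, 69.36, 4.28, 33.79, 3.34, 13.81,
                    7.01, 0.53, 27.66, 13.48, 21.69, 13.17],
}

def summarize(errors):
    """Return the mean and the largest absolute error of one scenario."""
    return {"mean": fmean(errors), "max": max(errors)}

summary = {name: summarize(values) for name, values in ABS_ERROR_MM.items()}
for name, stats in summary.items():
    print(f"{name}: mean {stats['mean']:.2f} mm, max {stats['max']:.2f} mm")
```

The means are based on only twelve points per scenario, so a single large reading, such as point 6 in dryland or point 2 in the paddy field, shifts them noticeably.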

| Algorithm Type | Mean Angular Error (°) | Mean Processing Time (ms) |
|---|---|---|
| Proposed (paddy field) | 0.6489 | 50.67 |
| [38] | 3.14 | 192.52 |
| [39] | 1.124 | 20.1 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, J.; Dong, W.; Tan, Q.; Li, J.; Song, X.; Zhao, R. A Variable-Threshold Segmentation Method for Rice Row Detection Considering Robot Travelling Prior Information. Agriculture 2025, 15, 413. https://doi.org/10.3390/agriculture15040413
