Capsicum Counting Algorithm Using Infrared Imaging and YOLO11

Abstract

1. Introduction

2. Materials and Methods

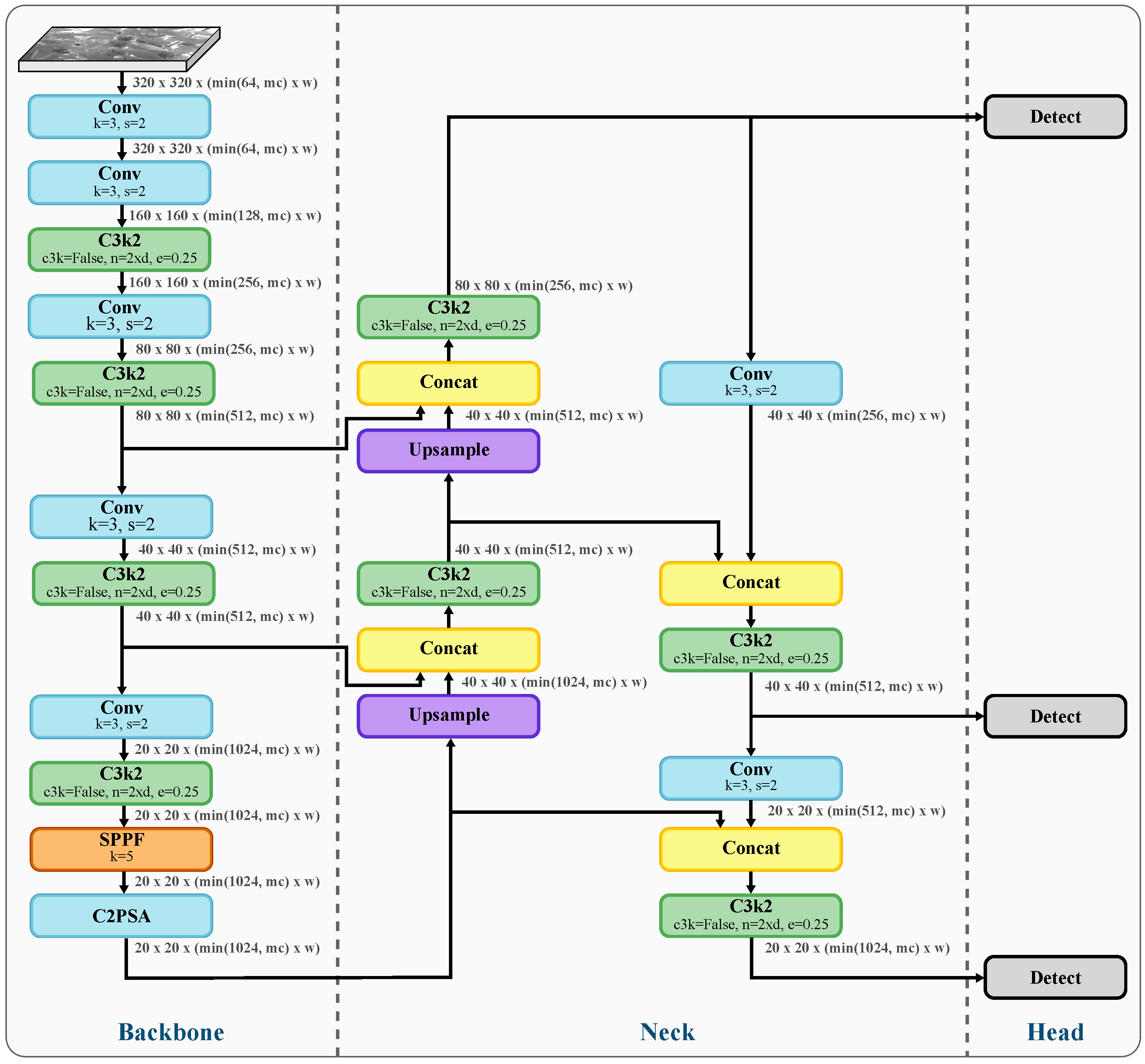

2.1. Object Detection

2.2. Tracking Algorithms

| Model Variant | d (Depth_Multiple) | w (Width_Multiple) | mc (Max_Channels) |
|---|---|---|---|
| n | 0.50 | 0.25 | 1024 |
| s | 0.50 | 0.50 | 1024 |
| m | 0.50 | 1.00 | 512 |
| l | 1.00 | 1.00 | 512 |
| xl | 1.00 | 1.50 | 512 |
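All variants share one base configuration, and the three multipliers above rescale it. The following is a minimal sketch. It assumes the Ultralytics-style rule, in which a layer's output channels are first capped at mc, then multiplied by w and rounded up to a multiple of 8, and the repeat count of a multi-block stage is multiplied by d and kept at least 1. The helper names and the example base layer (1024 channels, 2 repeats) are hypothetical.

```python
import math

# Hypothetical lookup built from the table: variant -> (d, w, mc).
SCALES = {
    "n": (0.50, 0.25, 1024),
    "s": (0.50, 0.50, 1024),
    "m": (0.50, 1.00, 512),
    "l": (1.00, 1.00, 512),
    "xl": (1.00, 1.50, 512),
}


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor."""
    return int(math.ceil(x / divisor) * divisor)


def scale_layer(base_channels: int, base_repeats: int, variant: str) -> tuple[int, int]:
    """Return (scaled_channels, scaled_repeats) for one base-config layer (assumed rule)."""
    depth, width, max_channels = SCALES[variant]
    # Cap at mc first, then apply the width multiple.
    channels = make_divisible(min(base_channels, max_channels) * width)
    # Single blocks stay single; repeated blocks are scaled but never drop below 1.
    repeats = max(round(base_repeats * depth), 1) if base_repeats > 1 else base_repeats
    return channels, repeats
```

For example, a 1024-channel stage with 2 repeats becomes 256 channels and 1 repeat in n, 512 and 1 in m, and 768 and 2 in xl. Note that mc = 512 caps the channel count for m, l, and xl before the width multiple is applied.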

2.2.1. Kalman Filter for Motion Prediction
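The sketch below illustrates the prediction step with a generic constant-velocity Kalman filter. Its state holds the box center, width, and height plus their velocities, which is the state layout used by BoT-SORT [53]. It is a simplified, hypothetical sketch: the class name and the fixed noise levels q and r are assumptions, whereas practical trackers usually scale the noise with box size.

```python
import numpy as np


class ConstantVelocityKF:
    """Kalman filter over the state [xc, yc, w, h, vxc, vyc, vw, vh] (illustrative)."""

    def __init__(self, box, q=1e-2, r=1e-1):
        # Start from the first detection with zero velocity.
        self.x = np.concatenate([np.asarray(box, dtype=float), np.zeros(4)])
        self.P = np.eye(8)                                  # state covariance
        self.F = np.eye(8)
        self.F[:4, 4:] = np.eye(4)                          # box += velocity each frame (dt = 1)
        self.H = np.hstack([np.eye(4), np.zeros((4, 4))])   # only the box is observed
        self.Q = q * np.eye(8)                              # process noise (assumed constant)
        self.R = r * np.eye(4)                              # measurement noise (assumed constant)

    def predict(self):
        """Propagate the state one frame ahead and return the predicted box."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:4].copy()

    def update(self, box):
        """Correct the state with a matched detection [xc, yc, w, h] and return the box."""
        z = np.asarray(box, dtype=float)
        y = z - self.H @ self.x                       # innovation
        S = self.H @ self.P @ self.H.T + self.R       # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)      # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(8) - K @ self.H) @ self.P
        return self.x[:4].copy()
```

On each frame, `predict()` supplies the box used for the IoU distance. When a detection is matched to the track, `update()` corrects the state, and the estimated velocity carries the box forward through short occlusions.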

2.2.2. Re-Identification and Data Association

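Following BoT-SORT [53], motion and appearance cues are fused into a single cost matrix:

$$
\hat{d}^{cos}_{i,j} =
\begin{cases}
0.5 \cdot d^{cos}_{i,j}, & \left(d^{cos}_{i,j} < \theta_{emb}\right) \wedge \left(d^{iou}_{i,j} < \theta_{iou}\right) \\
1, & \text{otherwise}
\end{cases}
$$

$$
C_{i,j} = \min\left\{ d^{iou}_{i,j},\ \hat{d}^{cos}_{i,j} \right\}
$$

where:

- $\hat{d}^{cos}_{i,j}$ is the appearance cost.

- $d^{cos}_{i,j}$ is the cosine distance between the average appearance descriptor of tracklet i and the descriptor of new detection j.

- $\theta_{emb}$ is the appearance threshold, which separates positive associations of tracklet appearance states and detection embedding vectors from negative ones.

- $\theta_{iou}$ is a proximity threshold, set to 0.5 as in [53], used to reject unlikely pairs of tracklets and detections.

- $d^{iou}_{i,j}$ represents the motion cost: the IoU distance between the predicted bounding box of the i-th tracklet and the j-th detection bounding box.

- $C_{i,j}$ is the (i, j) element of the cost matrix $C$.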
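The fused cost and the subsequent assignment can be sketched as follows, assuming NumPy and SciPy are available. The appearance cost is halved only when a pair is close in both appearance and IoU distance, and is set to 1 otherwise. The final cost is the minimum of the IoU distance and that appearance cost. The proximity threshold of 0.5 comes from the text. The appearance threshold of 0.25 is an assumed value, and so is reusing match_thresh = 0.8 from the tracker settings as the cutoff for rejecting assigned pairs. The assignment uses the Hungarian method [57] through `scipy.optimize.linear_sum_assignment`. The function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def fused_cost(d_iou, d_cos, theta_iou=0.5, theta_emb=0.25):
    """BoT-SORT-style fused cost: min(IoU distance, gated appearance cost).

    d_iou, d_cos: (num_tracklets, num_detections) distance matrices.
    theta_emb = 0.25 is an assumption, not a value stated in this study.
    """
    d_iou = np.asarray(d_iou, dtype=float)
    d_cos = np.asarray(d_cos, dtype=float)
    # Keep the halved appearance distance only for pairs that pass both gates.
    gate = (d_cos < theta_emb) & (d_iou < theta_iou)
    d_cos_hat = np.where(gate, 0.5 * d_cos, 1.0)
    return np.minimum(d_iou, d_cos_hat)


def associate(cost, max_cost=0.8):
    """Hungarian assignment; assigned pairs whose cost exceeds max_cost are dropped."""
    rows, cols = linear_sum_assignment(cost)
    return [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= max_cost]
```

With two tracklets and two detections, for instance, a pair with a small IoU distance and a similar embedding gets a cost near zero. Unmatched tracklets and detections (those not in the returned list) then go to later association stages or track initialization.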

2.2.3. Integration with YOLO11

3. Experimental Setup

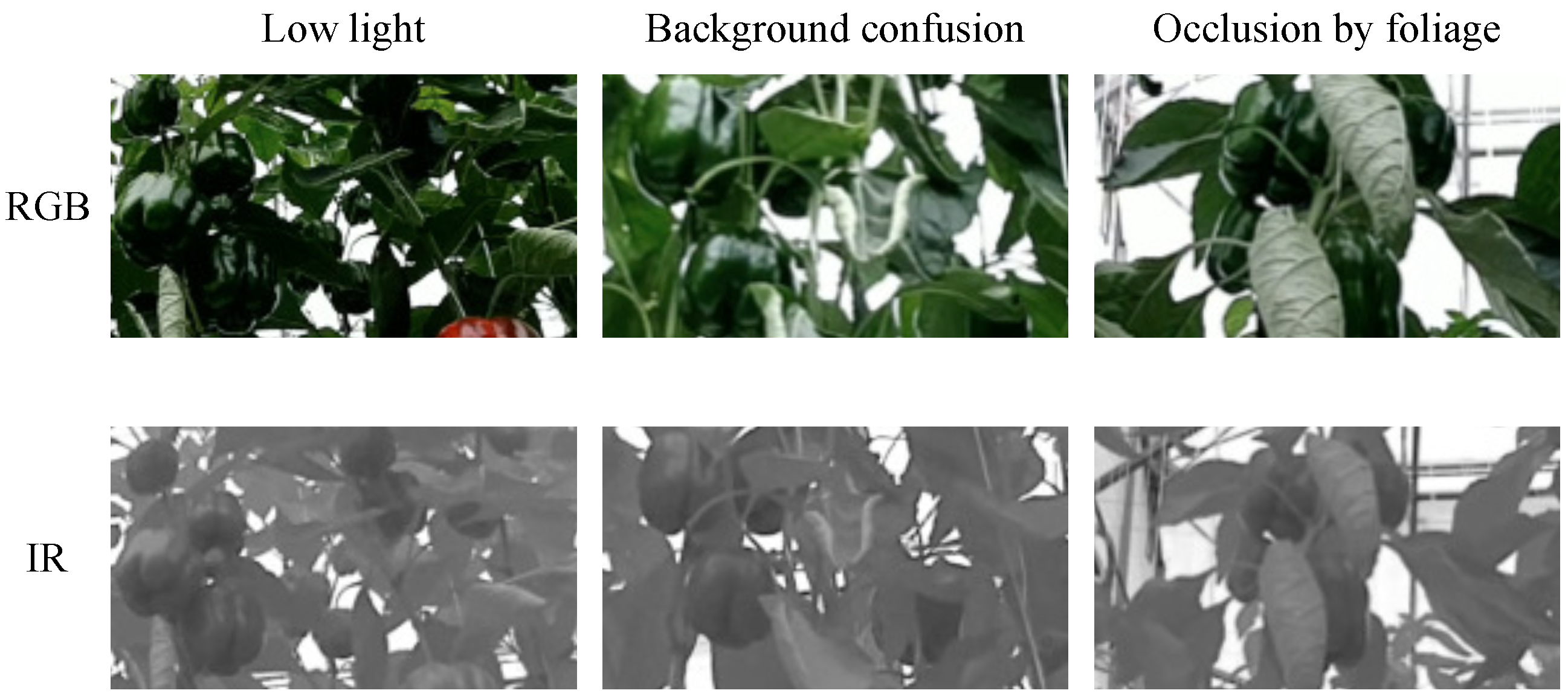

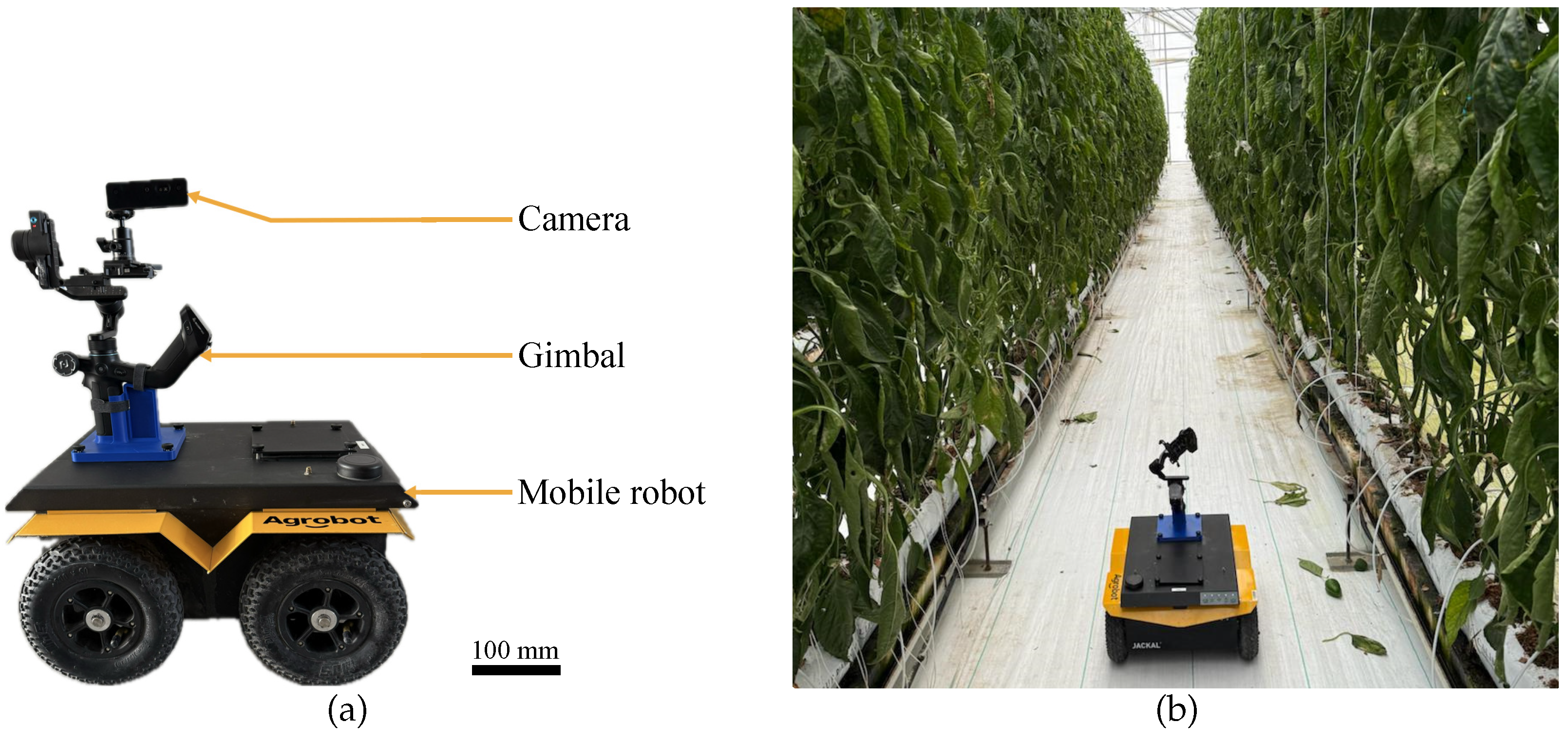

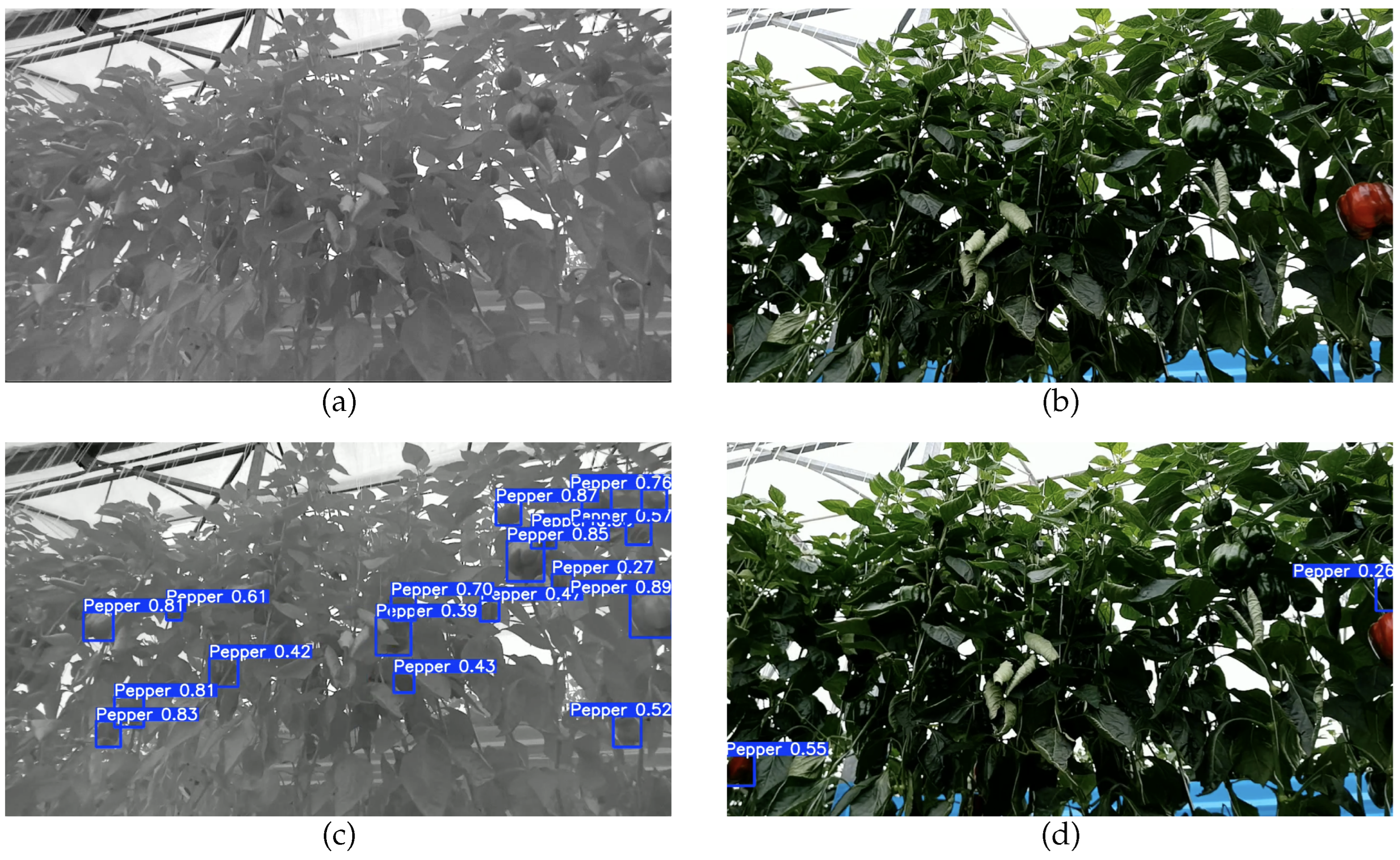

3.1. Image Acquisition

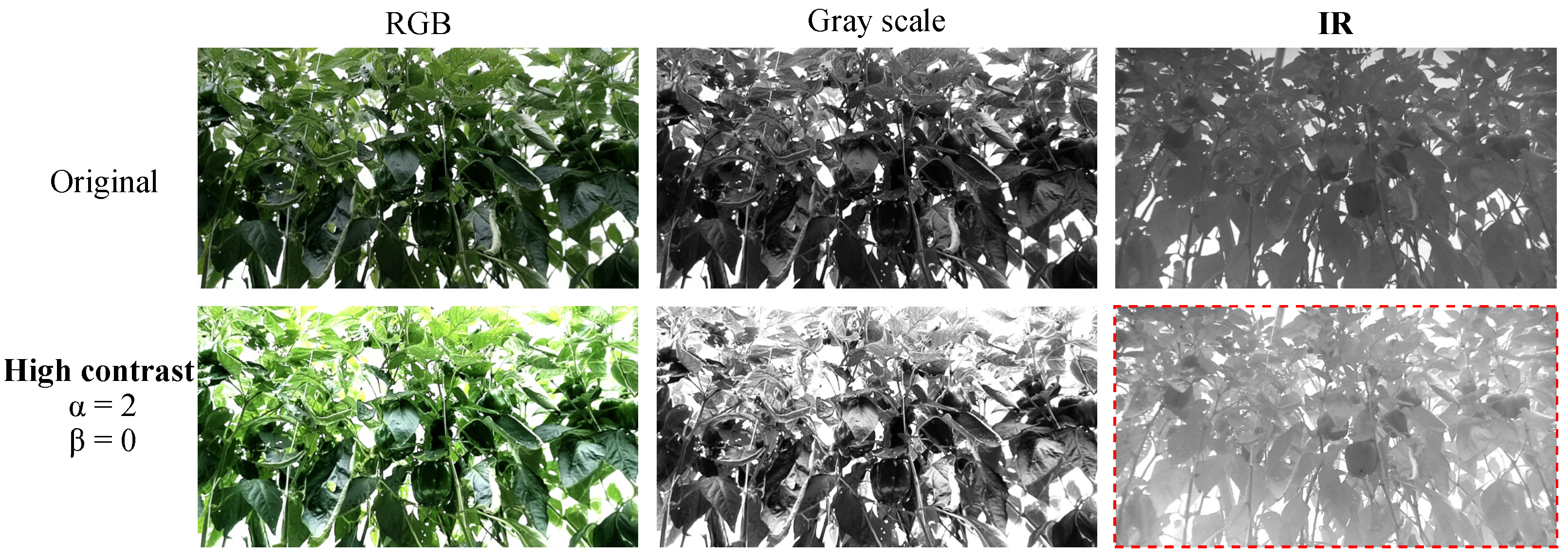

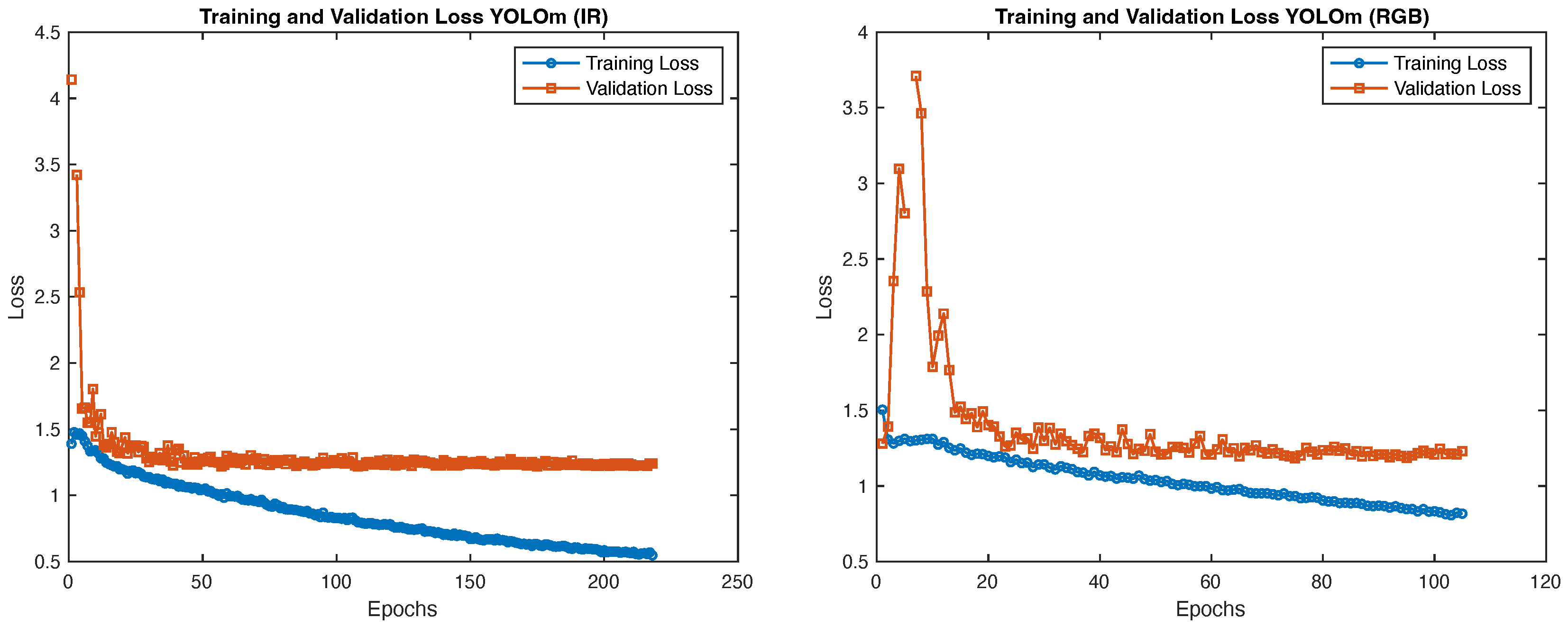

3.2. Dataset and Model Training

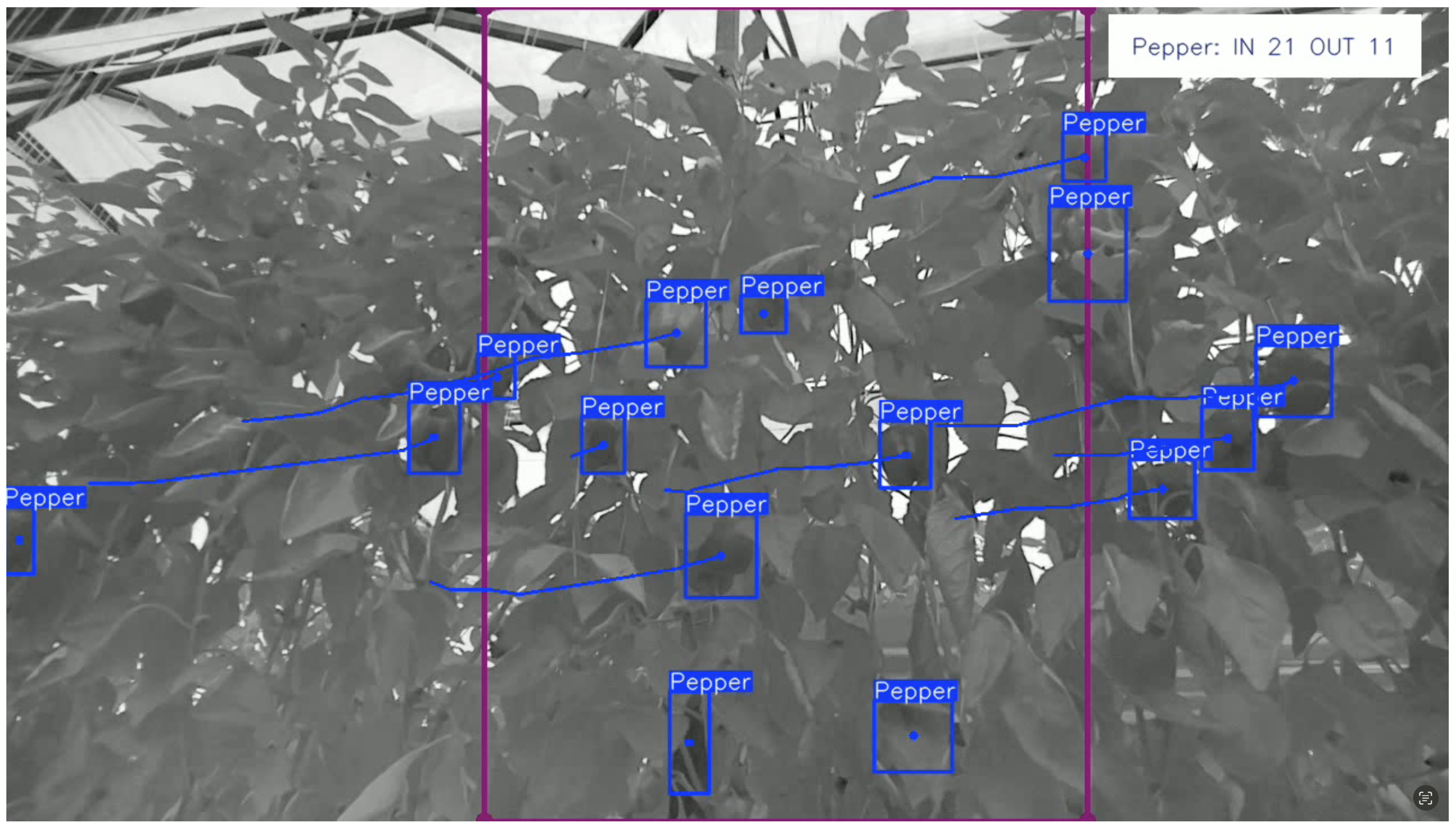

3.3. Capsicum Counting
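A common way to turn tracker output into a fruit count is to count each track ID once. The sketch below assumes that approach. The `min_frames` filter, which discards short-lived spurious tracks, is a hypothetical addition, and so is the function name. Under this scheme an identity switch (IDS) can split one capsicum across two IDs and inflate the count.

```python
from collections import Counter


def count_unique_tracks(frames, min_frames=3):
    """Count objects as distinct track IDs observed in at least min_frames frames.

    frames: iterable of per-frame lists of track IDs reported by the tracker.
    """
    hits = Counter()
    for ids in frames:
        hits.update(set(ids))  # count each ID at most once per frame
    return sum(1 for n in hits.values() if n >= min_frames)
```

For example, `count_unique_tracks([[1, 2], [1, 2], [1, 2, 3], [1], [4]])` keeps IDs 1 and 2 and ignores IDs 3 and 4, which appear only once.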

4. Results and Discussion

4.1. Performance Metrics

- True Positives (TP): The number of correctly identified capsicums.

- True Negatives (TN): The number of correctly rejected non-capsicum instances, such as foliage or background.

- False Positives (FP): The number of non-capsicum instances incorrectly identified as capsicums.

- False Negatives (FN): The number of capsicums that the algorithm failed to identify.
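Precision, recall, and F1-score follow directly from these counts, as the minimal sketch below shows. TN does not enter these metrics, because in object detection the set of correctly rejected background regions is effectively unbounded. The function name and the counts in the usage example are illustrative, not results from this study.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict[str, float]:
    """Precision, recall and F1-score from detection counts (TN is not needed)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

For instance, 90 TP, 10 FP, and 30 FN give a precision of 0.90, a recall of 0.75, and an F1-score of about 0.82.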

4.2. Object Detection

4.3. Multi-Object Tracker and Counting

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ma, L.; Long, H.; Zhang, Y.; Tu, S.; Ge, D.; Tu, X. Agricultural labor changes and agricultural economic development in China and their implications for rural vitalization. J. Geogr. Sci. 2019, 29, 163–179.

2. Yang, X.; Shu, L.; Chen, J.; Ferrag, M.A.; Wu, J.; Nurellari, E.; Huang, K. A Survey on Smart Agriculture: Development Modes, Technologies, and Security and Privacy Challenges. IEEE/CAA J. Autom. Sin. 2021, 8, 273–302.

3. Afonso, M.; Fonteijn, H.; Fiorentin, F.S.; Lensink, D.; Mooij, M.; Faber, N.; Polder, G.; Wehrens, R. Tomato Fruit Detection and Counting in Greenhouses Using Deep Learning. Front. Plant Sci. 2020, 11, 571299.

4. Li, C.; Ma, W.; Liu, F.; Fang, B.; Lu, H.; Sun, Y. Recognition of citrus fruit and planning the robotic picking sequence in orchards. Signal Image Video Process. 2023, 17, 4425–4434.

5. Montoya-Cavero, L.E.; Díaz de León Torres, R.; Gómez-Espinosa, A.; Escobedo Cabello, J.A. Vision systems for harvesting robots: Produce detection and localization. Comput. Electron. Agric. 2022, 192, 106562.

6. Maktab Dar Oghaz, M.; Razaak, M.; Kerdegari, H.; Argyriou, V.; Remagnino, P. Scene and Environment Monitoring Using Aerial Imagery and Deep Learning. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; pp. 362–369.

7. Osman, Y.; Dennis, R.; Elgazzar, K. Yield Estimation and Visualization Solution for Precision Agriculture. Sensors 2021, 21, 6657.

8. Huang, S.C.; Le, T.H. Chapter 12—Object detection. In Principles and Labs for Deep Learning; Huang, S.C., Le, T.H., Eds.; Academic Press: Cambridge, MA, USA, 2021; pp. 283–331.

9. Hou, G.; Chen, H.; Jiang, M.; Niu, R. An Overview of the Application of Machine Vision in Recognition and Localization of Fruit and Vegetable Harvesting Robots. Agriculture 2023, 13, 1814.

10. Lin, G.; Tang, Y.; Zou, X.; Xiong, J.; Fang, Y. Color-, depth-, and shape-based 3D fruit detection. Precis. Agric. 2020, 21, 1–17.

11. Akbar, J.U.M.; Kamarulzaman, S.F.; Muzahid, A.J.M.; Rahman, M.A.; Uddin, M. A Comprehensive Review on Deep Learning Assisted Computer Vision Techniques for Smart Greenhouse Agriculture. IEEE Access 2024, 12, 4485–4522.

12. Paul, A.; Machavaram, R.; Ambuj; Kumar, D.; Nagar, H. Smart solutions for capsicum Harvesting: Unleashing the power of YOLO for Detection, Segmentation, growth stage Classification, Counting, and real-time mobile identification. Comput. Electron. Agric. 2024, 219, 108832.

13. Jiang, Y.; Li, C. Convolutional Neural Networks for Image-Based High-Throughput Plant Phenotyping: A Review. Plant Phenomics 2020, 2020, 4152816.

14. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2012; Volume 25.

15. Espinoza, S.; Aguilera, C.; Rojas, L.; Campos, P.G. Analysis of Fruit Images With Deep Learning: A Systematic Literature Review and Future Directions. IEEE Access 2024, 12, 3837–3859.

16. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587, ISSN 1063-6919.

17. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788, ISSN 1063-6919.

18. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

19. Tu, S.; Pang, J.; Liu, H.; Zhuang, N.; Chen, Y.; Zheng, C.; Wan, H.; Xue, Y. Passion fruit detection and counting based on multiple scale faster R-CNN using RGB-D images. Precis. Agric. 2020, 21, 1072–1091.

20. Behera, S.K.; Rath, A.K.; Sethy, P.K. Fruits yield estimation using Faster R-CNN with MIoU. Multimed. Tools Appl. 2021, 80, 19043–19056.

21. Assunção, E.T.; Gaspar, P.D.; Mesquita, R.J.M.; Simões, M.P.; Ramos, A.; Proença, H.; Inacio, P.R.M. Peaches Detection Using a Deep Learning Technique—A Contribution to Yield Estimation, Resources Management, and Circular Economy. Climate 2022, 10, 11.

22. Sapkota, R.; Ahmed, D.; Karkee, M. Comparing YOLOv8 and Mask R-CNN for instance segmentation in complex orchard environments. Artif. Intell. Agric. 2024, 13, 84–99.

23. Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090.

24. Bazame, H.C.; Molin, J.P.; Althoff, D.; Martello, M. Detection, classification, and mapping of coffee fruits during harvest with computer vision. Comput. Electron. Agric. 2021, 183, 106066.

25. Chen, W.; Zhang, J.; Guo, B.; Wei, Q.; Zhu, Z. An Apple Detection Method Based on Des-YOLO v4 Algorithm for Harvesting Robots in Complex Environment. Math. Probl. Eng. 2021, 2021, 7351470.

26. Mirhaji, H.; Soleymani, M.; Asakereh, A.; Abdanan Mehdizadeh, S. Fruit detection and load estimation of an orange orchard using the YOLO models through simple approaches in different imaging and illumination conditions. Comput. Electron. Agric. 2021, 191, 106533.

27. Gai, R.; Chen, N.; Yuan, H. A detection algorithm for cherry fruits based on the improved YOLO-v4 model. Neural Comput. Appl. 2023, 35, 13895–13906.

28. Tsai, F.T.; Nguyen, V.T.; Duong, T.P.; Phan, Q.H.; Lien, C.H. Tomato Fruit Detection Using Modified Yolov5m Model with Convolutional Neural Networks. Plants 2023, 12, 3067.

29. Du, X.; Cheng, H.; Ma, Z.; Lu, W.; Wang, M.; Meng, Z.; Jiang, C.; Hong, F. DSW-YOLO: A detection method for ground-planted strawberry fruits under different occlusion levels. Comput. Electron. Agric. 2023, 214, 108304.

30. Giacomin, R.; Constantino, L.; Nogueira, A.; Ruzza, M.; Morelli, A.; Branco, K.; Rossetto, L.; Zeffa, D.; Gonçalves, L. Post-harvest quality and sensory evaluation of mini sweet peppers. Horticulturae 2021, 7, 287.

31. Zou, X.; Ma, Y.; Dai, X.; Li, X.; Yang, S. Spread and Industry Development of Pepper in China. Acta Hortic. Sin. 2020, 47, 1715.

32. Cong, P.; Li, S.; Zhou, J.; Lv, K.; Feng, H. Research on Instance Segmentation Algorithm of Greenhouse Sweet Pepper Detection Based on Improved Mask RCNN. Agronomy 2023, 13, 196.

33. López-Barrios, J.D.; Escobedo Cabello, J.A.; Gómez-Espinosa, A.; Montoya-Cavero, L.E. Green Sweet Pepper Fruit and Peduncle Detection Using Mask R-CNN in Greenhouses. Appl. Sci. 2023, 13, 6296.

34. Viveros Escamilla, L.D.; Gómez-Espinosa, A.; Escobedo Cabello, J.A.; Cantoral-Ceballos, J.A. Maturity Recognition and Fruit Counting for Sweet Peppers in Greenhouses Using Deep Learning Neural Networks. Agriculture 2024, 14, 331.

35. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649, ISSN 2381-8549.

36. Zhang, X.; Zhu, D.; Wen, R. SwinT-YOLO: Detection of densely distributed maize tassels in remote sensing images. Comput. Electron. Agric. 2023, 210, 107905.

37. Feng, J.; Zeng, L.; He, L. Apple Fruit Recognition Algorithm Based on Multi-Spectral Dynamic Image Analysis. Sensors 2019, 19, 949.

38. Wu, X.; Wu, X.; Huang, H.; Zhang, F.; Wen, Y. Characterization of Pepper Ripeness in the Field Using Hyperspectral Imaging (HSI) with Back Propagation (BP) Neural Network and Kernel Based Extreme Learning Machine (KELM) Models. Anal. Lett. 2024, 57, 409–424.

39. Zhang, H.; Zhang, S.; Dong, W.; Luo, W.; Huang, Y.; Zhan, B.; Liu, X. Detection of common defects on mandarins by using visible and near infrared hyperspectral imaging. Infrared Phys. Technol. 2020, 108, 103341.

40. Gan, H.; Lee, W.S.; Alchanatis, V.; Abd-Elrahman, A. Active thermal imaging for immature citrus fruit detection. Biosyst. Eng. 2020, 198, 291–303.

41. Paul, A.; Machavaram, R. Greenhouse capsicum detection in thermal imaging: A comparative analysis of a single-shot and a novel zero-shot detector. Next Res. 2024, 1, 100076.

42. Tsuchikawa, S.; Ma, T.; Inagaki, T. Application of near-infrared spectroscopy to agriculture and forestry. Anal. Sci. 2022, 38, 635–642.

43. Patel, K.K.; Pathare, P.B. Principle and applications of near-infrared imaging for fruit quality assessment—An overview. Int. J. Food Sci. Technol. 2024, 59, 3436–3450.

44. Fan, S.; Liang, X.; Huang, W.; Jialong Zhang, V.; Pang, Q.; He, X.; Li, L.; Zhang, C. Real-time defects detection for apple sorting using NIR cameras with pruning-based YOLOV4 network. Comput. Electron. Agric. 2022, 193, 106715.

45. Fan, S.; Li, C.; Huang, W.; Chen, L. Detection of blueberry internal bruising over time using NIR hyperspectral reflectance imaging with optimum wavelengths. Postharvest Biol. Technol. 2017, 134, 55–66.

46. Wang, C.; Liu, S.; Wang, Y.; Xiong, J.; Zhang, Z.; Zhao, B.; Luo, L.; Lin, G.; He, P. Application of Convolutional Neural Network-Based Detection Methods in Fresh Fruit Production: A Comprehensive Review. Front. Plant Sci. 2022, 13, 868745.

47. Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

48. Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

49. Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO (Version 8.0.0) [Computer software]. Available online: https://github.com/ultralytics/ultralytics (accessed on 18 November 2025).

50. Huang, J.; Wang, K.; Hou, Y.; Wang, J. LW-YOLO11: A Lightweight Arbitrary-Oriented Ship Detection Method Based on Improved YOLO11. Sensors 2025, 25, 65.

51. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

52. Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-Object Tracking by Associating Every Detection Box. arXiv 2022, arXiv:2110.06864.

53. Aharon, N.; Orfaig, R.; Bobrovsky, B.Z. BoT-SORT: Robust Associations Multi-Pedestrian Tracking. arXiv 2022, arXiv:2206.14651.

54. Siriani, A.L.R.; Miranda, I.B.D.C.; Mehdizadeh, S.A.; Pereira, D.F. Chicken Tracking and Individual Bird Activity Monitoring Using the BoT-SORT Algorithm. AgriEngineering 2023, 5, 1677–1693.

55. Yang, X.; Gao, Y.; Yin, M.; Li, H. Automatic Apple Detection and Counting with AD-YOLO and MR-SORT. Sensors 2024, 24, 7012.

56. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

57. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

58. Dwyer, B.; Nelson, J.; Hansen, T. Roboflow (Version 1.0) [Software]. Computer Vision Software. 2025. Available online: https://roboflow.com (accessed on 18 November 2025).

59. Mendez, E. Capsicum IR Box Annotation Computer Vision Dataset. 1000 Images with 11,916 Labeled Instances. 2025. Available online: https://universe.roboflow.com/enrico-mendez-research-s9yeb/capsicum-ir-box (accessed on 18 November 2025).

| Parameter | Description | Value |
|---|---|---|
| track_high_thresh | Threshold for the first association | 0.5 |
| track_low_thresh | Threshold for the second association | 0.3 |
| new_track_thresh | Threshold for initializing a new track if no match is found | 0.29 |
| track_buffer | Buffer to determine when to remove tracks | 35 |
| match_thresh | Threshold for matching tracks | 0.8 |
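These thresholds drive the two-stage association that BoT-SORT inherits from ByteTrack [52]. The minimal sketch below assumes ByteTrack-style logic. Detections scoring at or above track_high_thresh enter the first association. Those strictly between track_low_thresh and track_high_thresh are held for a second association with still-unmatched tracks. An unmatched detection starts a new track only if its score reaches new_track_thresh. Scaling track_buffer by frame_rate / 30 is assumed here to follow the Ultralytics tracker. The function names are hypothetical.

```python
def split_detections(scores, track_high_thresh=0.5, track_low_thresh=0.3):
    """Split detection indices into first- and second-association pools."""
    first = [i for i, s in enumerate(scores) if s >= track_high_thresh]
    # Low-confidence boxes are not discarded outright; they get a second chance.
    second = [i for i, s in enumerate(scores) if track_low_thresh < s < track_high_thresh]
    return first, second


def can_start_track(score, new_track_thresh=0.29):
    """An unmatched detection may initialize a new track only at or above this score."""
    return score >= new_track_thresh


def max_frames_lost(track_buffer=35, frame_rate=30):
    """Frames a lost track is kept before removal (buffer scaled to frame rate, assumed)."""
    return int(frame_rate / 30.0 * track_buffer)
```

With scores [0.9, 0.45, 0.31, 0.2, 0.5], detections 0 and 4 enter the first association, 1 and 2 are kept for the second, and 3 is dropped. At 30 frames per second, a lost track survives 35 frames.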

| Augmentation (argument) | Description | Value |
|---|---|---|
| Hue shift () | Random perturbation of image hue in HSV space | 0.015 (maximum hue offset) |
| Saturation shift () | Random perturbation of color saturation | 0.70 (maximum relative change in saturation) |
| Brightness shift () | Random perturbation of brightness | 0.40 (maximum relative change in brightness) |
| Translation () | Random x/y translation | 0.10 (maximum fraction of image size used for shifting) |
| Scale () | Random zoom in/out | 0.50 (maximum relative scaling factor) |
| Horizontal flip () | Left–right flip | 0.50 (probability of flipping each training image) |
| Mosaic () | Combines four training images into a single mosaic image | 1.0 (probability of applying mosaic) |
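The geometric augmentations also move the bounding-box labels. The minimal sketch below draws translation, scale, and flip parameters from the ranges in the table and applies them to one box. It assumes normalized YOLO boxes (xc, yc, w, h) and zooming about the image center. The function and parameter names are hypothetical, clipping to the image border is omitted, and the color (HSV) entries are not modeled because they leave boxes unchanged.

```python
import random


def sample_geometric_params(rng, translate_frac=0.10, scale_gain=0.50, flip_prob=0.50):
    """Draw one set of geometric augmentation parameters from the table's ranges."""
    return {
        "flip": rng.random() < flip_prob,
        "scale": rng.uniform(1 - scale_gain, 1 + scale_gain),
        "dx": rng.uniform(-translate_frac, translate_frac),
        "dy": rng.uniform(-translate_frac, translate_frac),
    }


def transform_box(box, params):
    """Flip, zoom about the image center, then shift a normalized (xc, yc, w, h) box."""
    xc, yc, w, h = box
    if params["flip"]:
        xc = 1.0 - xc  # a horizontal flip mirrors only the x coordinate
    s = params["scale"]
    xc = 0.5 + (xc - 0.5) * s + params["dx"]
    yc = 0.5 + (yc - 0.5) * s + params["dy"]
    return (xc, yc, w * s, h * s)
```

Typical use is `transform_box(label, sample_geometric_params(random.Random(0)))`. Seeding the generator makes an augmented sample reproducible.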

| Parameter | Description | Value |
|---|---|---|
| epochs | Number of complete passes over the training dataset. | 500 |
| batch | Number of images per training batch. Setting this argument to a fraction such as 0.9 makes the code choose the batch size that uses 90% of the GPU memory. | 64 |
| imgsz | Input image size in pixels; images are resized to this size for training. | 800 |
| cache | How the dataset is loaded for training; ram caches the dataset in RAM. | ram |
| optimizer | Optimization algorithm used to update the model weights during training. | Adam |
| patience | Early-stopping patience: the number of epochs to wait without improvement before training stops. | 100 |
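The patience rule can be illustrated with a short sketch. It assumes that a validation fitness score is computed once per epoch and that higher is better. The function name and the synthetic score curve are hypothetical. With patience = 100 and epochs = 500, a run lasts at most 500 epochs. It ends earlier once 100 consecutive epochs pass without a new best score.

```python
def early_stop_epoch(val_scores, patience=100, max_epochs=500):
    """Return the 0-based epoch at which training stops under a patience rule."""
    best, best_epoch = float("-inf"), 0
    for epoch, score in enumerate(val_scores[:max_epochs]):
        if score > best:
            best, best_epoch = score, epoch     # new best: reset the patience window
        elif epoch - best_epoch >= patience:
            return epoch                        # no improvement for `patience` epochs
    return min(len(val_scores), max_epochs) - 1  # ran to the end without stopping early
```

For a curve that peaks at epoch 2 and then plateaus, training stops at epoch 102. The weights from epoch 2 would be the ones kept as best.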

| Model | Recall (Best) | Precision (Best) | F1-Score (Best) | Dataset |
|---|---|---|---|---|
| YOLO11n | 0.88 | 1.00 | 0.81 | IR |
| YOLO11s | 0.96 | 1.00 | 0.81 | IR |
| YOLO11m | 0.92 | 1.00 | 0.82 | IR |
| YOLO11n | 0.92 | 1.00 | 0.82 | RGB |
| YOLO11s | 0.95 | 1.00 | 0.81 | RGB |
| YOLO11m | 0.93 | 1.00 | 0.82 | RGB |

| Sensor | Ground Truth | Counted Capsicums | FP | FN | IDS | MOTA |
|---|---|---|---|---|---|---|
| IR | 70 | 67 | 5 | 4 | 1 | 0.85 |
| RGB | 70 | 25 | 3 | 43 | 0 | 0.34 |
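Under the standard CLEAR MOT definition, MOTA = 1 − (FN + FP + IDS) / GT, with the counts summed over the sequence. The sketch below evaluates that formula on the counts in the table. It gives about 0.857 for IR and 0.343 for RGB, both within 0.01 of the tabulated values. The function name is illustrative.

```python
def mota(fn: int, fp: int, ids: int, gt: int) -> float:
    """CLEAR MOT accuracy: 1 - (FN + FP + IDS) / GT."""
    if gt <= 0:
        raise ValueError("ground-truth count must be positive")
    return 1.0 - (fn + fp + ids) / gt


# Counts from the tracking table: (GT, FP, FN, IDS) per sensor.
rows = {"IR": (70, 5, 4, 1), "RGB": (70, 3, 43, 0)}
for sensor, (gt, fp, fn, ids) in rows.items():
    print(f"{sensor}: MOTA = {mota(fn, fp, ids, gt):.3f}")
```

The formula makes the gap between sensors explicit. The 43 missed capsicums in the RGB sequence alone remove about 0.61 from its MOTA.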

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mendez, E.; Escobedo Cabello, J.A.; Gómez-Espinosa, A.; Cantoral-Ceballos, J.A.; Ochoa, O. Capsicum Counting Algorithm Using Infrared Imaging and YOLO11. Agriculture 2025, 15, 2574. https://doi.org/10.3390/agriculture15242574