Abstract

Artificial Intelligence (AI) is a key technological enabler for shifting agricultural production and management from experience-driven to data-driven practice, continuously advancing modern agriculture toward smart agriculture. This evolution ultimately aims to achieve a precise agricultural production model characterized by low resource consumption, high safety, high quality, high yield, and stable, sustainable development. Although machine learning, deep learning, computer vision, the Internet of Things, and other AI technologies have made significant progress in numerous agricultural applications, most studies focus on a single agricultural scenario or a specific AI algorithm, such as object detection, navigation, agricultural machinery maintenance, or food safety, resulting in relatively limited coverage. To comprehensively elucidate the applications of AI in agriculture and provide a valuable reference for practitioners and policymakers, this paper reviews relevant research across the entire agricultural production process (planting, management, and harvesting). It covers application scenarios such as seed selection during the cultivation phase, pest and disease identification and intelligent management during the growth phase, and agricultural product grading during the harvest phase, as well as topics related to agricultural machinery and devices, such as fault diagnosis and predictive maintenance of agricultural equipment, agricultural robots, and the agricultural Internet of Things. The paper first analyzes the fundamental principles and potential advantages of typical AI technologies and then systematically reviews the latest progress in applying these core technologies to smart agriculture.
The challenges faced by existing technologies are also explored, including the inherent limitations of AI models (poor generalization capability, low interpretability, and insufficient real-time performance) and complex agricultural operating environments that produce multi-source, heterogeneous, low-quality, and unevenly annotated data. Furthermore, future research directions are discussed, such as lightweight network models, transfer learning, embodied intelligent agricultural robots, multimodal perception technologies, and large language models for agriculture. The aim is to provide meaningful insights for both theoretical research and practical applications of AI technologies in agriculture.

1. Introduction

Faced with global population growth and food security challenges, coupled with increasing demand for high-quality agricultural products, there is an urgent need for precise, automated, and intelligent management of agricultural production, namely smart agriculture [1]. However, the traditional manual production model relies heavily on limited experience and subjective judgment, making it impossible to achieve continuous and comprehensive macro- and micro-level perception and decision-making throughout the entire agricultural production process. Specifically:

- It fails to enable real-time operational responses and long-term predictions, such as addressing soil nutrient variations, early diagnosis of crop diseases, and yield forecasting.

- Extensive agricultural management leads to resource waste and environmental pollution, including excessive use of chemical fertilizers and pesticides, soil degradation, and inefficient flood irrigation.

- The aging of the agricultural workforce leads to low operational efficiency, high costs, and unstable production quality, and places immense pressure on high-intensity, high-precision repetitive tasks such as planting, management, and harvesting.

- Farmers’ personal safety cannot be adequately ensured, for example, during manual pesticide spraying in large-scale plant protection environments or in complex indoor temperature, humidity, and gas conditions in livestock and poultry farming.

- Reliance on subjective product grading hinders standardized quality assurance and prevents effective traceability of agricultural product quality.

In essence, deficiencies in perception, decision-making, physical capability, and safety demonstrate that manual operations are no longer sufficient to meet the requirements of smart agriculture. With the rapid development of information technologies such as Artificial Intelligence (AI), Internet of Things, robotics, and big data, smart agriculture can leverage the Internet of Things for large-scale environmental perception and data collection, use AI to build predictive control models and make optimal decisions, and employ robotics to replace high-intensity human labor. Ultimately, this will enable efficient, low-cost, and safe agricultural production across the entire process [2]. Among these advanced technologies, data-driven AI has become the core of smart agriculture.

AI aims to analyze datasets, identify patterns, and make predictions about targets, enabling machines to perform complex tasks such as learning, reasoning, perception, and decision-making. It encompasses various fields, including machine learning [3], deep learning [4], and computer vision [5], and is widely applied in areas such as industrial automation, autonomous driving, smart healthcare, and public safety. In agricultural production, unlike traditional experience-based analysis and decision-making or the simple mechanical replacement of labor, AI technologies are reshaping production methods in terms of data, algorithms, and equipment. These technologies cover almost all stages of agricultural production, including planting, cultivation, management, and harvesting, with applications in production management, soil and crop monitoring, pest and disease detection, yield prediction and crop planning, food quality, climate-smart agriculture, supply chain optimization, autonomous farming equipment, and mechanical equipment fault analysis. However, the application of AI in agriculture faces significant challenges: open, unstructured, and complex agricultural environments; scarce and unevenly distributed high-quality annotated data; difficult fusion of multi-source heterogeneous data; and AI models with inadequate generalization ability, poor interpretability, and insufficient real-time performance.

In recent years, substantial research has emerged on the application of AI technologies in agriculture. Our earlier published study analyzed relevant publications in the Web of Science and Engineering Village databases from 1 January 2014 to 1 April 2025, further confirming a steadily rising trend in AI applications in agriculture [6]. Statistical reports also indicate that the global market value of AI in agriculture is expected to reach $16.92 billion by 2034, with a compound annual growth rate of 23.32% over the forecast period from 2024 to 2034 [7]. Typical applications include:

In the area of detection and identification, most studies leverage acquired crop images and integrate machine learning algorithms such as Support Vector Machines (SVM) [8] and Random Forests (RF) [9], as well as deep learning algorithms like You Only Look Once (YOLO) [10], ResNet [11], Visual Geometry Group (VGG) [12], and Transformer [13], to achieve accurate pest and disease identification and agricultural product quality inspection. These approaches are particularly suitable for detecting early and subtle symptoms, minor product defects (such as small bruises, early mold, and slight color unevenness), and internal quality attributes (e.g., sugar content and internal hollowness).

In crop growth and environment prediction, time-series data on growing environments and management history, together with spatial data on crop growth collected by Unmanned Aerial Vehicles (UAVs), field cameras, and other sensors, are typically combined with neural network models such as Long Short-Term Memory (LSTM) [14] and Gated Recurrent Unit (GRU) [15] networks to predict crop growth trends and forecast growing conditions.

In agricultural robotics, the core AI technologies involved are computer vision [16], navigation path planning [17], and operation control [18]. These technologies are widely applied in planting, weeding, spraying, fertilization, and picking, enabling refined operations with a consistency and precision that are difficult to achieve manually. This enhances operational safety and mitigates the cost pressures and inefficiencies caused by labor shortages.

To enable predictive maintenance of agricultural machinery and equipment, and thereby improve utilization rates and service life, data reflecting operational status, such as vibration, temperature, pressure, infrared images, and sound, are integrated. Techniques such as autoencoders [19] are employed for anomaly detection, while SVM [20], RF [21], and Convolutional Neural Networks (CNN) [22] are used for fault classification. Additionally, time-series analysis and regression models based on LSTM networks [23] are applied for fault prediction. The application of these technologies has laid a solid foundation for the development of smart agriculture.
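The LSTM and GRU models mentioned above for crop growth and environment prediction process a time series one step at a time while carrying a hidden state. As a minimal sketch, the Python code below implements a single-unit GRU with a scalar input and scalar hidden state using the standard update-gate and reset-gate equations. The parameter names and values are hypothetical and untrained; a practical forecasting model would use vector states, learned weights, and a deep learning framework.

```python
import math

def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x: float, h: float, p: dict) -> float:
    """One GRU update for a scalar input x and scalar hidden state h.

    p holds hypothetical parameters: W* act on the input, U* on the
    previous hidden state, and b* are biases.
    """
    z = sigmoid(p["Wz"] * x + p["Uz"] * h + p["bz"])               # update gate
    r = sigmoid(p["Wr"] * x + p["Ur"] * h + p["br"])               # reset gate
    h_cand = math.tanh(p["Wh"] * x + p["Uh"] * (r * h) + p["bh"])  # candidate state
    # Blend the previous and candidate states (one of two common conventions).
    return (1.0 - z) * h + z * h_cand

def run_gru(series, p, h0=0.0):
    """Feed a time series (e.g. daily soil-moisture readings) through the cell."""
    h, states = h0, []
    for x in series:
        h = gru_step(x, h, p)
        states.append(h)
    return states

# Hypothetical, untrained parameters for illustration only.
params = {"Wz": 0.5, "Uz": 0.1, "bz": 0.0,
          "Wr": 0.3, "Ur": 0.2, "br": 0.0,
          "Wh": 0.8, "Uh": 0.4, "bh": 0.0}
states = run_gru([0.21, 0.24, 0.30, 0.28], params)
```

In a forecasting model, the final hidden state would typically feed a regression layer that outputs the next value of the target variable.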

In order to gain a clearer understanding of how AI technologies facilitate the development of smart agriculture, this paper focuses on various application domains, including pest and disease identification, product quality detection, growth and environmental prediction, agricultural robotics, fault diagnosis, agricultural Internet of Things (IoT), etc. Furthermore, a systematic and in-depth investigation is conducted for each category, covering aspects such as planting, management, and harvesting, with a review of related methods and application cases. Additionally, this paper analyzes the current challenges in applying AI to smart agriculture and outlines future research directions. Unlike existing reviews that primarily focus on simply listing current research methods, this paper adopts an application-driven approach to dissect recent studies in the context of agricultural production processes, highlighting their strengths and limitations.

2. Representative AI Fundamentals

In this section, several typical AI algorithms are outlined to better understand their application in smart agriculture.

2.1. Machine Learning, Deep Learning, and Reinforcement Learning

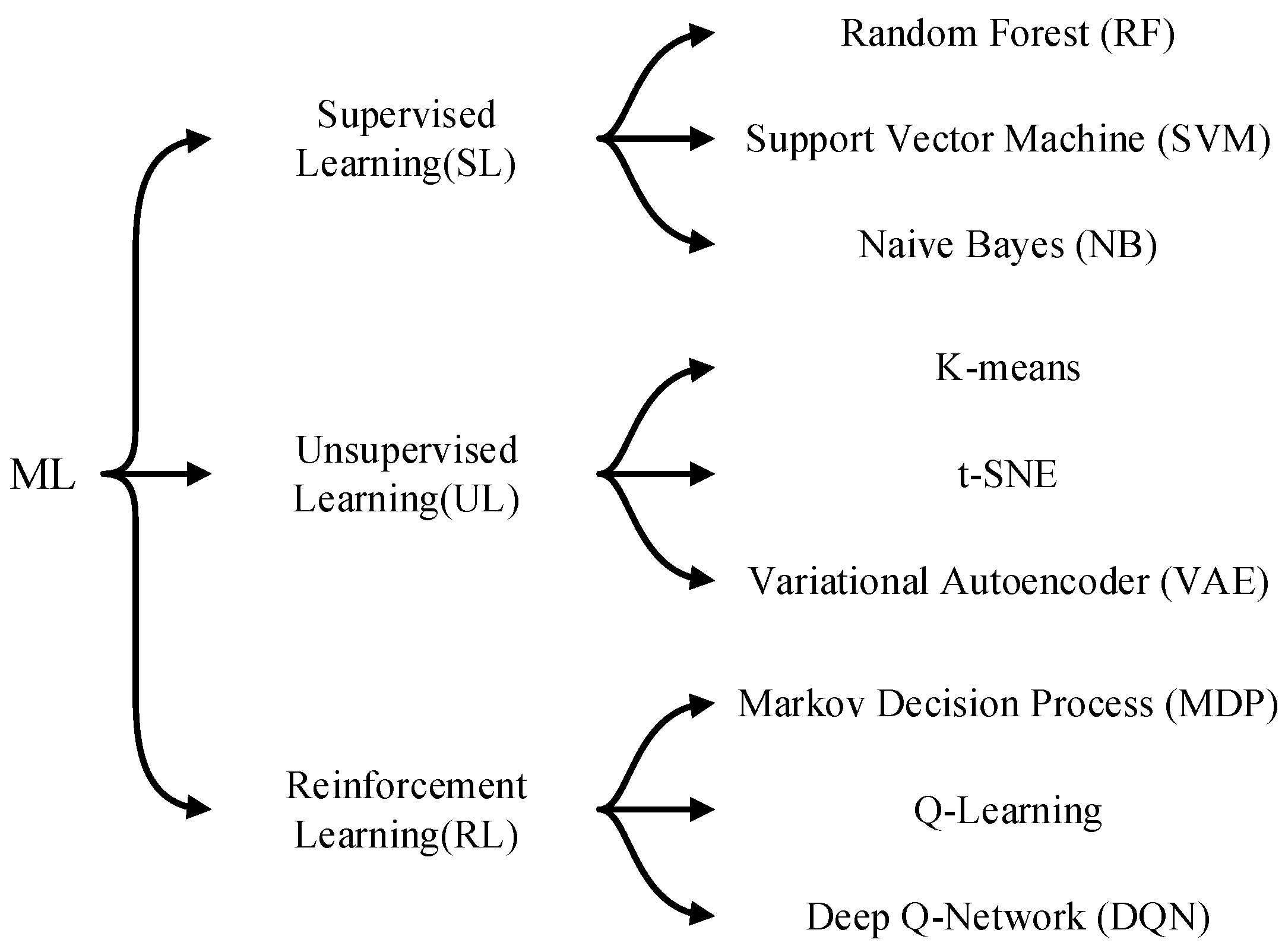

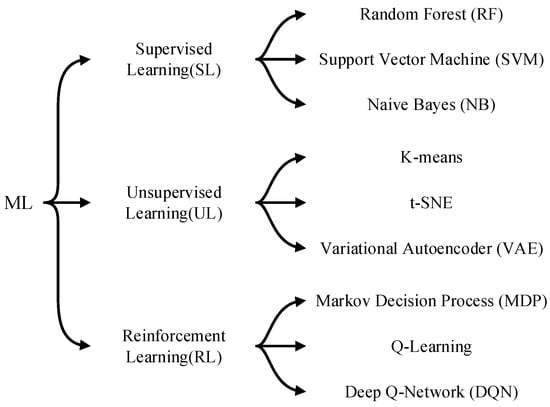

Machine Learning (ML) enables machines to learn automatically from data without being explicitly programmed [24]. Its core principle is that algorithms discover patterns and rules from large volumes of known data, optimize themselves accordingly, and use these patterns and rules to predict or classify unknown data. Machine learning algorithms can be categorized in various ways depending on the classification criterion; Figure 1 classifies them by learning paradigm and presents several representative models.

Figure 1.

Classification of ML algorithms.

The evolution of machine learning can be divided into two phases: shallow learning and deep learning. Deep learning, a branch of machine learning, builds networks from many simple processing units (neurons) that work together. Each neuron computes a weighted sum of its inputs plus a bias, the same linear form used in traditional statistical linear regression, and then applies a nonlinear activation function [25]. Deep neural networks stack multiple such nonlinear hidden layers to learn hierarchical features of data automatically. Because these features are learned from data rather than manually engineered, the networks can efficiently model complex nonlinear relationships. Current deep learning models primarily include Deep Neural Networks (DNN), CNN, Recurrent Neural Networks (RNN), Generative Adversarial Networks (GAN), and Transformer architectures.
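To make the neuron description concrete, the minimal Python sketch below builds a dense layer as an affine map followed by an activation. It then uses hand-set (not learned) weights to show that one ReLU hidden layer can represent XOR, which no single linear model can. The weights and the XOR task are illustrative assumptions, not part of the cited work.

```python
def relu(v: float) -> float:
    return max(0.0, v)

def identity(v: float) -> float:
    return v

def dense(inputs, weights, biases, activation):
    """Each output unit computes activation(sum_j w_ij * x_j + b_i)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def xor_net(a: float, b: float) -> float:
    # Hidden layer: h1 = relu(a + b), h2 = relu(a + b - 1)
    hidden = dense([a, b], [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu)
    # Linear output layer: y = h1 - 2 * h2
    (y,) = dense(hidden, [[1.0, -2.0]], [0.0], identity)
    return y

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

Without the ReLU, the two layers would collapse into a single linear map, which is why the nonlinear activation is essential.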

Reinforcement Learning (RL) is an important branch of ML that investigates how agents autonomously learn behavioral strategies to maximize long-term rewards or achieve specific goals through interaction with an environment [26]. It primarily consists of seven elements: agent, environment, state, action, reward, policy, and objective. Unlike supervised learning, which relies on labeled data for training, reinforcement learning does not provide demonstrations of correct actions. Instead, it evaluates the quality of actions and provides feedback signals to guide the agent in progressively optimizing its decision-making strategy. This mechanism allows for more flexible reward function design and requires less prior knowledge, making reinforcement learning particularly well-suited for highly complex, sequential decision-making tasks.
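The seven elements can be mapped onto a toy problem. The minimal sketch below assumes a hypothetical one-dimensional corridor as the environment (a stand-in for, e.g., a robot moving along a crop row). The agent observes its position (state), chooses left or right (action) with an ε-greedy policy, receives a reward of 1 only on reaching the goal, and pursues the objective of maximizing discounted return. Tabular Q-learning is used here as one representative RL algorithm; the environment, reward, and hyperparameters are all illustrative assumptions.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left, move right

def step(state, action):
    """Environment: a walled corridor; reaching GOAL gives reward 1 and ends the episode."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def greedy(q, state, rng):
    """Index of the best-valued action, breaking ties at random."""
    best = max(q[state])
    return rng.choice([a for a, v in enumerate(q[state]) if v == best])

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # action-value table Q[state][action]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            # Policy: explore with probability epsilon, otherwise act greedily.
            a = rng.randrange(2) if rng.random() < epsilon else greedy(q, s, rng)
            s2, r, done = step(s, ACTIONS[a])
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])  # temporal-difference update
            s = s2
            if done:
                break
    return q

q = q_learning()
# Learned greedy policy for the non-goal states
policy = [ACTIONS[max(range(2), key=lambda a: q[s][a])] for s in range(GOAL)]
```

No correct actions are ever demonstrated to the agent; the reward signal alone drives it toward always moving right.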

2.2. Computer Vision

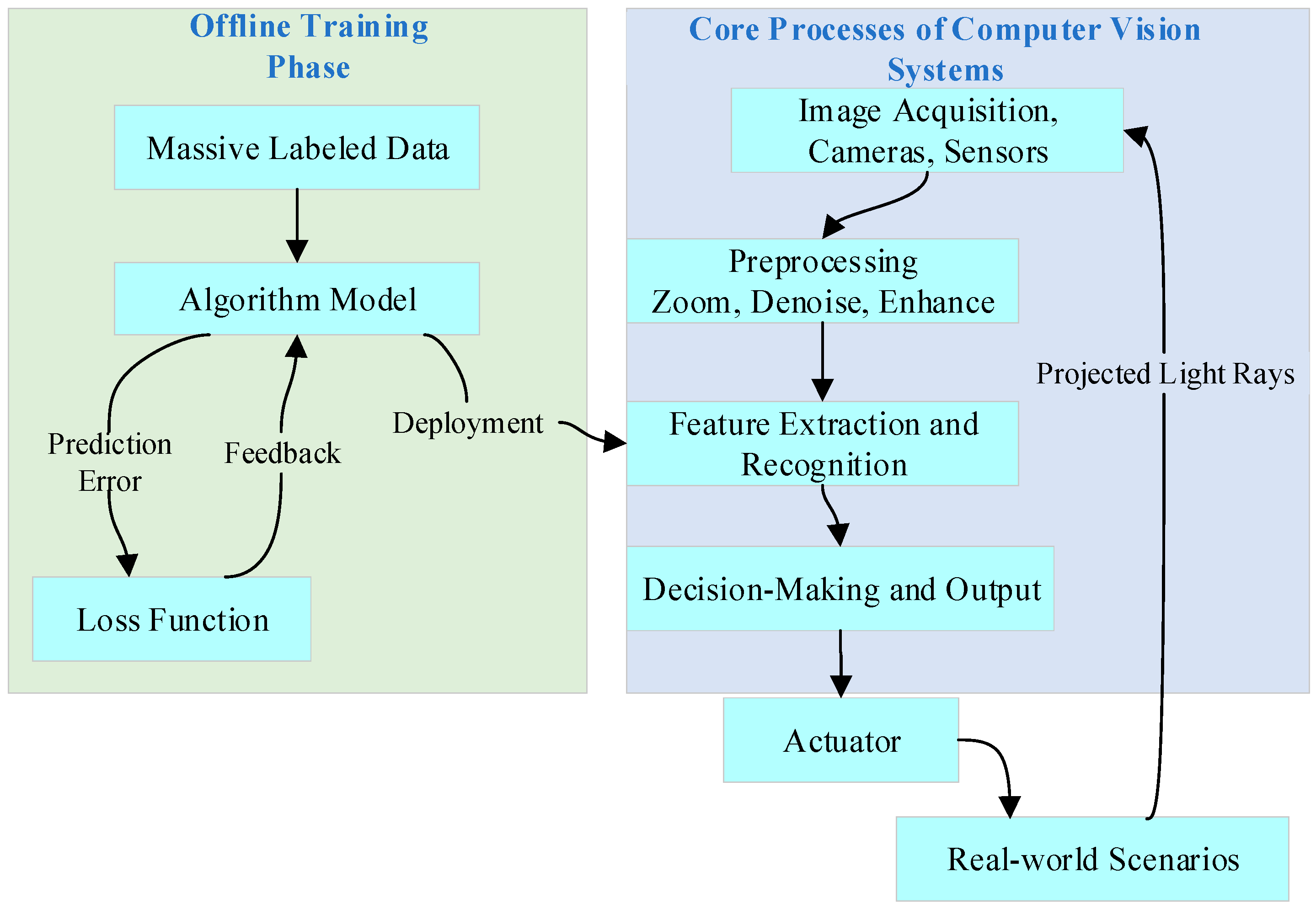

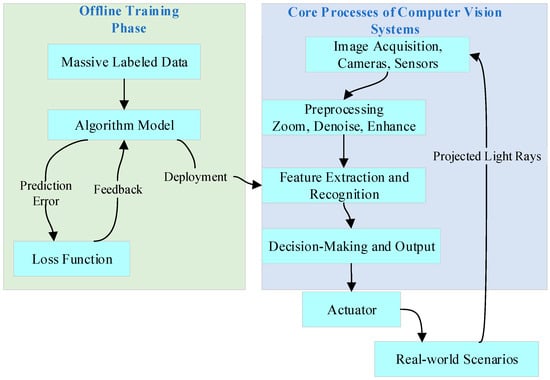

As a pivotal domain within AI, computer vision aims to teach computers how to understand images and videos. This involves not only extracting meaningful information from visual data but also interpreting and judging it to achieve intelligent recognition and interaction across diverse scenarios [27]. Computer vision primarily trains machines using cameras, massive datasets, and algorithmic models, enabling them to execute visual tasks at speeds far exceeding human capabilities. The workflow diagram for computer vision is shown in Figure 2. Currently, computer vision is mainly applied in image classification, object detection, image segmentation, and pose estimation.

Figure 2.

Workflow diagram of computer vision.

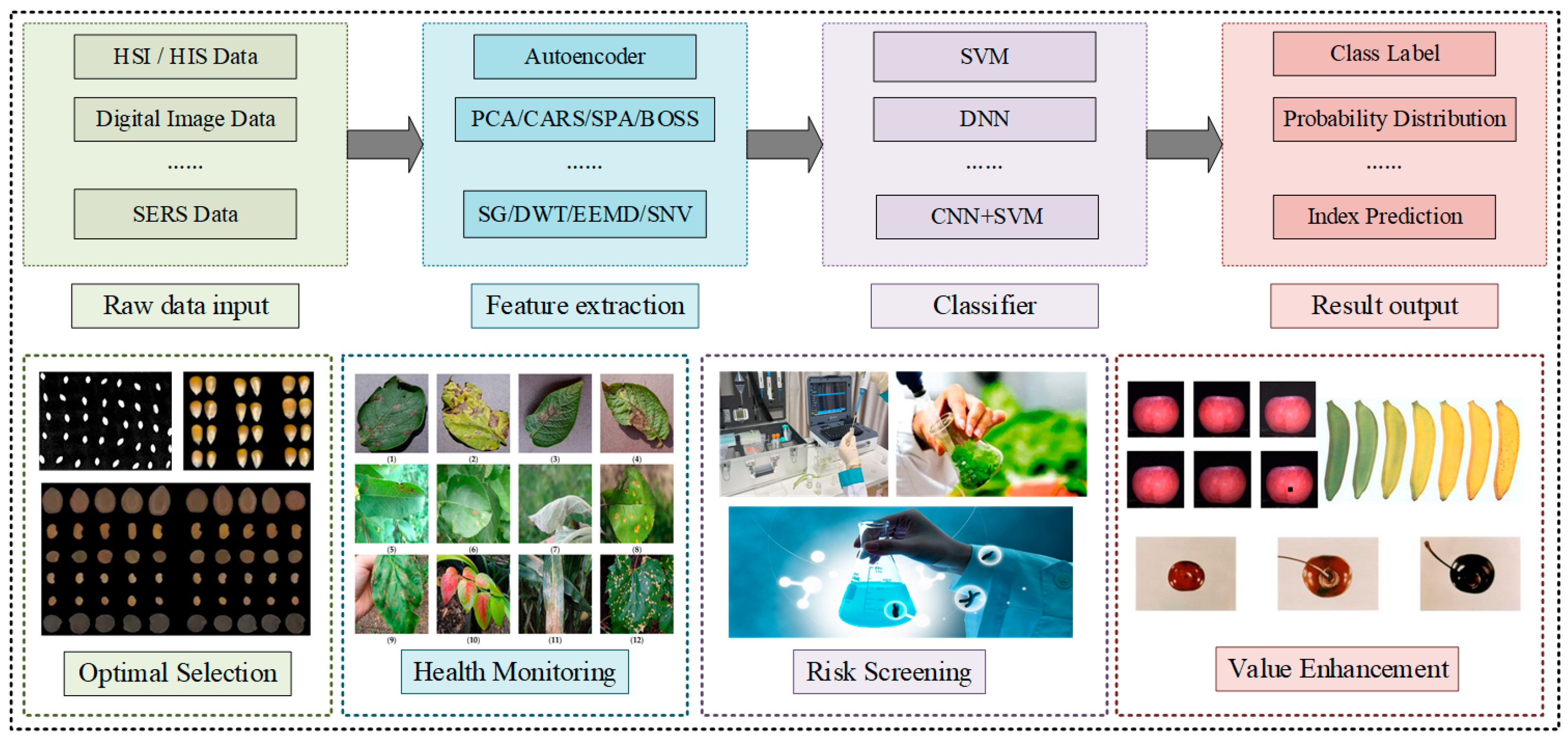

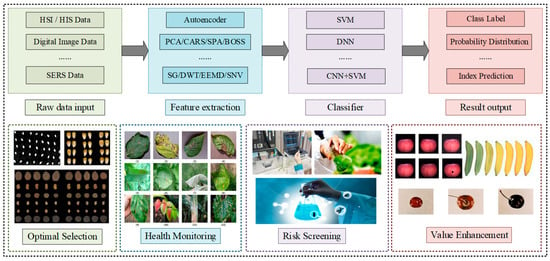

3. Applications of AI in Crop Detection

AI technologies are transforming crop detection by combining multi-source data, including spectral, vibrational, and chemical sensing data, with intelligent, high-precision analysis. As shown in Figure 3 [28,29,30] and Table 1, this section highlights applications of AI across four key domains: seed variety identification, pest and disease detection, food safety monitoring (including pesticide residues, heavy metals, and mycotoxins), and product quality upgrading (such as non-destructive meat quality evaluation and agricultural product grading). It systematically reviews recent advances in combining vibration signal processing, Hyperspectral Imaging (HSI), nano-enhanced sensing, and neural networks, which provide technical foundations for next-generation intelligent agricultural detection systems.

Figure 3.

Illustration of AI-crop detection.

Table 1.

Summary of AI crop-detection methods.

3.1. Seed Variety Identification-Optimal Selection

As a significant area of agricultural scientific innovation, seed variety identification and detection play a critical role in modern variety management, quality control, and crop yield enhancement, and are therefore indispensable for agricultural development. At the same time, AI is driving the transition of seed detection toward intelligent analysis.

Recent advances in non-destructive seed identification have leveraged hybrid machine learning methods that integrate spectral imaging and feature optimization techniques. Fu et al. [31] introduced a method combining a Stacked Sparse Autoencoder, Cuckoo Search, and SVM (SSAE-CS-SVM) for rapid screening of corn (Zea mays L.) seeds. Near-infrared spectra are first preprocessed with Savitzky–Golay (SG) smoothing and Standard Normal Variate (SNV) correction; features are then extracted by the SSAE and classified with a CS-optimized SVM model. Similarly, Sun et al. [32] developed an Artificial Fish Swarm Algorithm-optimized SVM (AFSA-SVM) model for rice (Oryza sativa L.) seed identification, integrating multimodal spectral and image features with the Bootstrapping Soft Shrinkage (BOSS) variable selection method. This approach outperformed conventional techniques, offering a viable tool for agricultural quality control.
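The SG and SNV preprocessing steps can be sketched in a few lines. The minimal Python version below assumes a 5-point window with a quadratic fit (convolution weights -3, 12, 17, 12, -3 divided by 35) and leaves the two points at each end unsmoothed; the window size and edge handling used by Fu et al. [31] are not stated here, and the autoencoder and SVM stages are not shown.

```python
import statistics

# Savitzky–Golay convolution weights for a 5-point window and a quadratic fit
SG5_QUAD = (-3, 12, 17, 12, -3)

def savgol_smooth(spectrum):
    """Replace each interior point by its local quadratic fit; keep the 2 edge points at each end."""
    out = list(spectrum)
    for i in range(2, len(spectrum) - 2):
        window = spectrum[i - 2:i + 3]
        out[i] = sum(c * v for c, v in zip(SG5_QUAD, window)) / 35.0
    return out

def snv(spectrum):
    """Standard Normal Variate: centre a spectrum on its own mean and scale by its own standard deviation."""
    mu = statistics.mean(spectrum)
    sd = statistics.stdev(spectrum)
    return [(v - mu) / sd for v in spectrum]

def preprocess(spectrum):
    return snv(savgol_smooth(spectrum))

# Hypothetical absorbance values at 8 consecutive wavelengths
raw = [0.42, 0.45, 0.51, 0.60, 0.58, 0.55, 0.49, 0.47]
clean = preprocess(raw)
```

Because SNV rescales each spectrum individually, a spectrum that differs only by a multiplicative gain and an additive offset (typical light-scattering effects) yields the same preprocessed output. For production use, `scipy.signal.savgol_filter(spectrum, window_length=5, polyorder=2)` is a library alternative that also handles the edges.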

Further extending these methodologies, Zhang et al. [33] proposed a framework for detecting selenium-enriched millet (Setaria italica (L.) P. Beauv.) that combines SG smoothing with dual feature selection via Competitive Adaptive Reweighted Sampling and the Successive Projections Algorithm (CARS-SPA) to build an SVM model. The model achieved higher accuracy than methods using a single feature-selection algorithm. For fruit seeds, Xu et al. [34] developed a hyperspectral approach that incorporates joint denoising by Ensemble Empirical Mode Decomposition (EEMD) and the Discrete Wavelet Transform (DWT), along with CARS-SPA feature selection and SVM modeling, to discriminate grape (Vitis vinifera L.) varieties effectively. Meanwhile, Sun et al. [35] established a non-destructive testing system for watermelon (Citrullus lanatus (Thunb.) Matsum. & Nakai) seed viability that combines HSI with principal component analysis (PCA) feature extraction and an Artificial Bee Colony (ABC)-optimized SVM classifier. These studies collectively highlight the trend of combining signal preprocessing, feature selection, and machine learning optimization to enhance accuracy and efficiency in seed identification.
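Of the feature-selection steps above, the Successive Projections Algorithm (SPA) is simple enough to sketch. The minimal Python version below is an illustration, not the cited authors' code. It starts from one wavelength column and repeatedly projects the remaining columns onto the subspace orthogonal to the most recently selected column, then picks the column with the largest remaining norm; this favours wavelengths with minimal collinearity. In practice, SPA is usually run from several starting columns and the candidate subsets are compared with a calibration model. CARS is a separate step and is not shown.

```python
def spa(columns, n_select, start=0):
    """Successive Projections Algorithm.

    columns: one list per wavelength, holding that wavelength's value for every sample.
    Returns the indices of the selected wavelengths in selection order.
    """
    work = [list(c) for c in columns]  # columns are progressively projected
    selected = [start]
    while len(selected) < min(n_select, len(columns)):
        last = work[selected[-1]]
        denom = sum(v * v for v in last)
        if denom == 0.0:  # nothing left to project onto
            break
        best, best_norm = None, -1.0
        for j, col in enumerate(work):
            if j in selected:
                continue
            coef = sum(a * b for a, b in zip(col, last)) / denom
            work[j] = [a - coef * b for a, b in zip(col, last)]  # remove the component along `last`
            norm = sum(v * v for v in work[j])
            if norm > best_norm:
                best, best_norm = j, norm
        selected.append(best)
    return selected

# Toy data: 3 samples x 4 wavelengths; wavelength 1 is collinear with wavelength 0
cols = [[1.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 3.0]]
print(spa(cols, 3))
```

The redundant, collinear wavelength (index 1) is selected last, because its projection onto the orthogonal complement of wavelength 0 vanishes.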

3.2. Pest and Disease Detection-Health Monitoring

Plant diseases and insect pests lead to significant reductions in crop yield and quality, while also raising control expenses and impinging upon environmental and food safety. Consequently, the advancement of intelligent monitoring and precision control technologies has become essential in modern agricultural practices.

To accurately identify pests and diseases in field grain crops, several advanced approaches have been developed. Zuo et al. [29] introduced a multi-fine-grained feature aggregation network for crop disease classification, integrating multi-source features to improve the recognition of discriminative symptom characteristics. Zhu et al. [13] presented a hybrid recognition model combining Transformer and CNN architectures. The Transformer encoder strengthens global feature representation, and CenterLoss optimization produces a compact feature distribution, improving discriminative power. Cross-dataset validation confirmed its generalization capacity and robustness, particularly in distinguishing visually similar diseases. Zhao et al. [11] developed an attention-guided deep CNN for multi-disease diagnosis, incorporating residual learning and attention mechanisms to improve recognition under complex conditions. The model achieved high accuracy and efficiency, demonstrating promise for real-time applications. Peng et al. [8] implemented a grape leaf disease recognition system in which CNN-extracted features are fed into an SVM classifier, attaining high classification accuracy with reduced computational overhead. Cap et al. [36] designed LeafGAN, an attention-based image synthesis model that generates realistic diseased leaf images from healthy reference images. This data augmentation strategy significantly enhanced the generalization performance of diagnostic models.
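CenterLoss, as commonly formulated, penalizes the squared distance between each deep feature x_i and the learned center c_{y_i} of its class, L_C = 1/2 * sum_i ||x_i - c_{y_i}||^2, and is added to the softmax classification loss with a weighting factor. The minimal Python sketch below computes this term and performs one center-update step of the form used in the original CenterLoss formulation. The feature values, labels, and update rate `alpha` are hypothetical, and the exact configuration used by Zhu et al. [13] is not stated here.

```python
def center_loss(features, labels, centers):
    """L_C = 1/2 * sum_i ||x_i - c_{y_i}||^2 over a mini-batch."""
    return 0.5 * sum(
        sum((f - c) ** 2 for f, c in zip(x, centers[y]))
        for x, y in zip(features, labels)
    )

def update_centers(features, labels, centers, alpha=0.5):
    """Move each class center toward the mean of its batch members.

    delta_c = sum_{i in class}(c - x_i) / (1 + n_class);  c <- c - alpha * delta_c
    """
    new = {k: list(v) for k, v in centers.items()}
    for cls, c in centers.items():
        members = [x for x, y in zip(features, labels) if y == cls]
        if not members:
            continue  # classes absent from the batch keep their center
        delta = [sum(ck - x[d] for x in members) / (1 + len(members))
                 for d, ck in enumerate(c)]
        new[cls] = [ck - alpha * dk for ck, dk in zip(c, delta)]
    return new

# Hypothetical 2-D features of two samples from class 0
feats, labels = [[1.0, 0.0], [3.0, 0.0]], [0, 0]
centers = {0: [0.0, 0.0]}
before = center_loss(feats, labels, centers)
centers = update_centers(feats, labels, centers)
after = center_loss(feats, labels, centers)
```

Pulling same-class features toward a shared center is what produces the compact feature distribution described above.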

Beyond image-based approaches, researchers have also integrated spectroscopy with AI for non-destructive disease detection. Lu et al. [37] established an Extreme Learning Machine (ELM) framework for tea (Camellia sinensis (L.) Kuntze) disease recognition by applying masking techniques to isolate spectral signatures of disease spots, yielding higher accuracy than full-leaf spectral analysis. This methodology offers a new reference for hyperspectral disease classification. Yang et al. [38] introduced an early diagnostic technique for rice blast based on spore diffraction fingerprint textures and CNN, achieving 97.18% accuracy and a 90% increase in detection speed. This approach provides an efficient and accurate solution for early-stage disease prevention. However, existing methods rely heavily on high-quality images or spectral data and remain limited in their adaptability to complex and variable field conditions, such as lighting variations, shading, and different growth stages. Furthermore, most of these models focus on single-disease identification, and their diagnostic capabilities for concurrent multiple pests and diseases or early latent symptoms still need improvement.

3.3. Food Safety Monitoring-Risk Screening

3.3.1. Detection of Pesticide Residues in Agricultural Products

The rapid detection of pesticide residues is crucial for safeguarding food safety and public health. To overcome the limitations of conventional methods, recent research has increasingly integrated advanced sensing technologies (e.g., Surface-Enhanced Raman Spectroscopy (SERS)) with deep learning algorithms, significantly enhancing detection sensitivity and efficiency. This section reviews emerging applications of nanomaterial-enhanced SERS substrates and CNN for quantifying pesticide residues in food commodities such as tea, fruits, and vegetables.

The quantitative analysis of pesticide residues in tea has benefited from combining SERS-based sensors with nanotechnology, enabling highly sensitive detection of trace residues. For example, Li et al. [39] developed a sensing platform using gold-silver octahedral hollow cages (Au–Ag OHCs) as a SERS substrate, coupled with a CNN model for quantifying thiram and pymetrozine residues. The CNN achieved determination coefficients (R2) of 0.995 for thiram and 0.977 for pymetrozine, with detection limits of 0.286 ppb and 29 ppb, respectively. In a related study, Chen et al. [57] fabricated a SERS substrate based on gold@silver core–shell nanorods (Au@Ag NRs) for rapid detection of thiabendazole in fruit juices such as apple (Malus domestica (Suckow) Borkh.) and peach (Prunus persica (L.) Batsch).
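The determination coefficients (R2) reported for these quantitative models follow the standard definition R² = 1 − SS_res / SS_tot. Here SS_res is the sum of squared prediction errors, and SS_tot is the sum of squared deviations of the reference values from their mean. A minimal Python sketch, using hypothetical concentration values:

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Hypothetical reference vs. predicted residue concentrations (same units)
reference = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
print(round(r_squared(reference, predicted), 3))  # → 0.98
```

An R² computed on an independent prediction set, rather than on the calibration data, is the more meaningful indicator of a usable quantitative model.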

To address the need for efficient screening of diverse pesticide residues across multiple agricultural products, innovative approaches such as molecularly imprinted polymers and fluorescence-based sensors have been introduced. Qiu et al. [58] synthesized silica-based Fluorescent Molecularly Imprinted Polymers (FMIPs) for selective detection of beta-cyfluthrin. Li et al. [59] constructed an upconversion fluorescence nanosensor capable of screening organophosphorus pesticides, including dimethoate.

3.3.2. Detection of Heavy Metals in Food

The high-sensitivity detection of heavy metal contamination in food is essential for preventing and controlling food safety risks. Researchers are increasingly combining advanced sensing technologies—such as microwave sensing and HSI—with deep feature extraction networks to develop efficient quantitative models for heavy metal analysis. This section reviews recent technological advances and practical applications in detecting heavy metals in foods, including edible oils and vegetables, with a particular emphasis on multi-source sensor data fusion, wavelet transforms, and residual neural networks.

The identification of heavy metal residues in edible oils has made progress through the combination of microwave sensing and SERS, enabling efficient and quantitative safety assessments. Deng et al. [40] introduced a method combining microwave detection with an attention-based deep residual network for quantitative analysis of lead (Pb) in edible oils. Chen et al. [60] synthesized silver nanoparticles and established a quantitative model for cadmium (Cd) using SERS coupled with chemometric techniques.

Monitoring heavy metal stress in vegetable crops increasingly uses HSI combined with deep learning-based feature extraction to support non-invasive diagnosis. Zhou et al. [41] proposed a method integrating fluorescence HSI, Wavelet Transform (WT), and a Stacked Denoising Autoencoder (SDAE) for detecting Pb in rapeseed leaves. Zhou et al. [42] also presented a fusion approach linking hyperspectral technology, WT, and a Stacked Convolutional Autoencoder (SCAE) for detecting composite contamination from Cd and Pb in lettuce (Lactuca sativa L.). However, these methods often rely on sophisticated laboratory equipment and complex sample preparation procedures, making rapid, on-site testing difficult to implement within the actual food supply chain.
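Wavelet transforms appear in several pipelines reviewed here, both for denoising (the DWT step in Section 3.1) and as a WT stage ahead of the autoencoders in [41,42]. The wavelet family, decomposition level, and thresholding rules used in those studies are not specified here. As a minimal sketch, the Python code below applies the simplest wavelet (Haar) at a single level with soft thresholding of the detail coefficients. The toy signal is hypothetical, and the SDAE/SCAE stages are not shown.

```python
import math

SQRT2 = math.sqrt(2.0)

def haar_dwt(signal):
    """Single-level Haar transform: returns (approximation, detail) coefficients."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    pairs = list(zip(signal[0::2], signal[1::2]))
    approx = [(a + b) / SQRT2 for a, b in pairs]
    detail = [(a - b) / SQRT2 for a, b in pairs]
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse single-level Haar transform."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / SQRT2, (a - d) / SQRT2])
    return out

def soft_threshold(values, t):
    """Shrink coefficients toward zero; those with |v| <= t become 0."""
    return [math.copysign(max(abs(v) - t, 0.0), v) for v in values]

def wavelet_denoise(signal, t):
    approx, detail = haar_dwt(signal)
    return haar_idwt(approx, soft_threshold(detail, t))

# Hypothetical spectral segment: a step profile plus small alternating noise
noisy = [5.1, 4.9, 5.1, 4.9, 1.1, 0.9, 1.1, 0.9]
denoised = wavelet_denoise(noisy, t=0.2)
```

The high-frequency noise lives almost entirely in the detail coefficients, so thresholding them removes it while the approximation coefficients preserve the underlying profile.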

3.3.3. Detection of Mycotoxins in Agricultural Products

The detection of mycotoxins in agricultural products is of critical practical importance, playing an essential role in enhancing product quality, protecting consumer health, and ensuring food safety. In recent years, AI-based detection technologies have been rapidly advancing, offering increasingly robust tools to support the reliable monitoring of mycotoxin contamination.

Novel AI-driven approaches are effectively overcoming the limitations of traditional methods, such as low sensitivity and poor interference resistance. For instance, Yang et al. [43] developed an SVM classification model using line-scan Raman HSI combined with SG smoothing and adaptive iteratively reweighted Penalized Least Squares (airPLS) preprocessing to accurately detect aflatoxin contamination in peanuts (Arachis hypogaea L.). This method offers a high-precision and rapid detection strategy for fungal toxins. Similarly, Lin et al. [44] introduced an aflatoxin detection method for maize (Zea mays L.) based on a highly sensitive nano-colorimetric sensor array. By combining colorimetric features with Linear Discriminant Analysis (LDA) or the K-Nearest Neighbors (KNN) algorithm, the approach achieved 100% accuracy in identifying toxigenic Aspergillus flavus infections, providing an efficient tool for on-site screening.
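KNN, one of the two classifiers used with the colorimetric sensor array, assigns a sample the majority label among its k closest training samples in feature space. The minimal Python sketch below assumes hypothetical three-value colour-change features and Euclidean distance; the actual feature definition and the value of k in [44] are not stated here.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training samples (Euclidean distance)."""
    nearest = sorted(zip(train_x, train_y),
                     key=lambda xy: math.dist(xy[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical colour-change features (e.g. dR, dG, dB of one sensor spot)
train_x = [(1.0, 0.5, 0.2), (0.8, 0.7, 0.1), (1.2, 0.4, 0.3),
           (9.5, 8.8, 7.9), (10.1, 9.2, 8.4), (9.0, 9.9, 8.1)]
train_y = ["non-toxigenic"] * 3 + ["toxigenic"] * 3
print(knn_predict(train_x, train_y, (9.2, 9.0, 8.0)))
```

Because KNN relies directly on distances, features are usually standardized first so that no single sensor channel dominates. LDA, the other classifier named above, is not shown.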

With the expanding adoption of deep learning, mycotoxin detection is increasingly shifting toward more intelligent and user-friendly applications. Wang et al. [45] proposed a high-accuracy model for aflatoxin B1 detection in corn by combining Near-Infrared Reflectance Spectroscopy (NIRS) with a CNN. Using a Markov Transition Field (MTF) to convert 1D spectral data into 2D images, the proposed two-dimensional MTF-CNN architecture significantly outperformed conventional techniques. Zhu et al. [46] developed a method for detecting zearalenone (ZEN) in corn by integrating surface-enhanced Raman spectroscopy (SERS) with a deep learning model. By adopting a composite enhancement strategy for SERS spectral optimization and constructing a 2D-CNN model, the method achieved markedly higher prediction accuracy. Zhao et al. [47] designed a non-destructive detection system for ZEN in wheat (Triticum aestivum L.) using a colorimetric sensor array and CNN. This method combined multispectral and colorimetric features within a CNN-based quantitative model, substantially outperforming traditional prediction models and offering a new practical tool for grain safety inspection. However, these data-driven approaches face a dual challenge: an over-reliance on scarce, high-quality labeled data, and the potential loss of features during image transformation.
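The Markov Transition Field step can be sketched as follows. This minimal Python version follows the commonly described construction. The 1D series is split into q equal-frequency bins, and first-order transition probabilities between bins are estimated from consecutive points. Entry (i, j) of the resulting n × n image is then the probability of moving from the bin of point i to the bin of point j. The bin count and the toy series are assumptions; Wang et al. [45] may use different settings, including downsampling before the CNN.

```python
def quantile_bins(series, q):
    """Assign each point to one of q equal-frequency bins by rank."""
    order = sorted(range(len(series)), key=lambda i: series[i])
    bins = [0] * len(series)
    for rank, i in enumerate(order):
        bins[i] = rank * q // len(series)
    return bins

def markov_transition_field(series, q=4):
    """Return an n x n matrix M with M[i][j] = P(bin of x_i -> bin of x_j)."""
    bins = quantile_bins(series, q)
    w = [[0.0] * q for _ in range(q)]
    for a, b in zip(bins, bins[1:]):  # count first-order transitions
        w[a][b] += 1.0
    for row in w:                     # normalise rows into probabilities
        total = sum(row)
        if total:
            row[:] = [v / total for v in row]
    n = len(series)
    return [[w[bins[i]][bins[j]] for j in range(n)] for i in range(n)]

# Hypothetical 8-point absorbance spectrum (monotonic for easy checking)
spectrum = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
mtf_image = markov_transition_field(spectrum, q=4)
```

The resulting matrix encodes how the spectrum moves between intensity levels and can be treated as a single-channel image by a 2D-CNN.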

3.4. Product Quality Upgrading-Value Enhancement

3.4.1. Non-Destructive Meat Quality Detection

Non-destructive meat quality detection is a critical technological foundation for ensuring food safety and upholding quality standards. Driven by the deep fusion of spectral imaging and deep learning, researchers are developing high-precision intelligent inspection systems based on cross-modal data fusion. This section highlights advances combining optical technologies, such as HSI and fluorescence HSI (F-HSI), with CNNs and attention mechanisms, demonstrating their applications in pork freshness evaluation, meat adulteration identification, and quantitative component prediction.

Quantitative and non-destructive evaluation of key indicators in pork, including freshness and oxidative damage, has been achieved through the combination of spectral imaging and deep learning. Cheng et al. [48] introduced a fusion framework integrating HSI with a multi-task CNN, enabling simultaneous monitoring of lipid and protein oxidation in freeze-thawed pork. The model achieved predictive coefficients of determination (R2p) of 0.97 and 0.96 for these key metrics, respectively. Sun et al. [49] innovatively incorporated two-dimensional Correlation Spectroscopy (2D-COS) images into a dual-branch CNN architecture. By leveraging synchronous-asynchronous spectral feature fusion, the model achieved an R2p of 0.958 for predicting Total Volatile Basic Nitrogen (TVB-N), a key indicator of freshness. Cheng et al. [50] proposed a Hybrid Fusion Attention Network (HFA-Net) to integrate F-HSI and electronic nose data. Through attention-guided feature optimization, the model reached an R2 of 0.937 for TVB-N prediction.
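The 2D-COS images used by Sun et al. [49] are derived from a series of spectra recorded under a perturbation such as storage time. In the generalized 2D correlation formulation, as commonly stated, the mean spectrum is subtracted to give the dynamic spectra Ỹ (m perturbation steps × n wavelengths). The synchronous map is then Φ = ỸᵀỸ / (m − 1), and the asynchronous map is Ψ = ỸᵀNỸ / (m − 1), where N is the Hilbert–Noda matrix with N_jk = 0 if j = k and 1 / (π(k − j)) otherwise. The minimal Python sketch below computes both maps for hypothetical data; how [49] renders them as CNN inputs is not specified here.

```python
import math

def two_d_cos(spectra):
    """Synchronous and asynchronous 2D correlation maps.

    spectra: m rows (perturbation steps, e.g. storage days) x n columns (wavelengths).
    """
    m, n = len(spectra), len(spectra[0])
    mean = [sum(row[j] for row in spectra) / m for j in range(n)]
    dyn = [[row[j] - mean[j] for j in range(n)] for row in spectra]  # dynamic spectra
    # Hilbert–Noda matrix over the perturbation steps
    noda = [[0.0 if j == k else 1.0 / (math.pi * (k - j)) for k in range(m)]
            for j in range(m)]
    sync = [[sum(dyn[r][a] * dyn[r][b] for r in range(m)) / (m - 1)
             for b in range(n)] for a in range(n)]
    # N @ dyn, then dyn^T @ (N @ dyn) / (m - 1)
    ndyn = [[sum(noda[j][k] * dyn[k][b] for k in range(m)) for b in range(n)]
            for j in range(m)]
    asyn = [[sum(dyn[r][a] * ndyn[r][b] for r in range(m)) / (m - 1)
             for b in range(n)] for a in range(n)]
    return sync, asyn

# Hypothetical spectra of one sample on 4 storage days at 3 wavelengths
spectra = [[0.10, 0.40, 0.20],
           [0.15, 0.38, 0.25],
           [0.22, 0.35, 0.27],
           [0.30, 0.31, 0.26]]
sync, asyn = two_d_cos(spectra)
```

The synchronous map is symmetric and captures simultaneous intensity changes. The asynchronous map is antisymmetric and highlights out-of-phase changes, which is why the two are often treated as complementary inputs.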

To ensure meat authenticity, HSI coupled with transfer learning has been employed for accurate detection of foreign substances in chicken products. Yang et al. [51] combined HSI with a fine-tuned GoogLeNet model to non-destructively identify starch adulteration in minced chicken. Similarly, Sun et al. [12] applied a VGG16-SVM fusion model to detect soy protein impurities, demonstrating robust performance in authenticity verification.

NIRS combined with CNNs provides an efficient tool for rapid geographical origin traceability and authenticity discrimination of herbal medicines. Huang et al. [52] implemented a 1D-CNN model with preprocessed NIRS data for recognizing the origin of Chuanxiong (Ligusticum chuanxiong Hort.) slices, achieving 92.2% accuracy—a 12.1% improvement over conventional techniques.

In summary, the cross-modal fusion of spectral technologies and deep learning has established itself as a prominent paradigm in meat quality detection. Methodologies such as multi-task learning, cross-sensor feature fusion, and transfer learning have substantially elevated detection accuracy and model generalizability, opening avenues for intelligent food inspection systems.

3.4.2. Quality Grading and Detection

Grading and quality detection of agricultural products are essential for enhancing their market value and meeting diverse consumer demands. Moreover, these practices significantly contribute to accelerating market circulation and fostering a sustainable agricultural ecosystem.

In recent years, non-destructive testing has expanded from evaluating external quality to precisely assessing internal physiological indicators. Li et al. [61] introduced a method for diagnosing potassium nutrition levels in tomato (Solanum lycopersicum L.) leaves using electrical impedance spectroscopy; the resulting prediction model was more robust against environmental interference than optical techniques. In fruit quality evaluation, Xu et al. [53] developed a model for the rapid, non-destructive assessment of grape quality. This method employed Variational Mode Decomposition combined with Regression Coefficient (VMD-RC) to process hyperspectral data and constructed Least Squares SVM (LSSVM) models on both full-spectrum and feature wavelengths to predict Total Soluble Solids (TSS), achieving the best TSS prediction accuracy among the models compared. Qiu et al. [30] conducted a feasibility study using CNNs for apple classification based on color and deformity, assembling a machine vision dataset for model training. AlexNet, GoogLeNet, and VGG16 were deployed for three-class quality evaluation based on visual traits, and VGG16 attained the highest accuracy of 92.29%, establishing a practical deep learning framework for agricultural quality inspection. Ji et al. [10] proposed an apple grading method based on the YOLOv5s network, incorporating Omni-dimensional Dynamic Convolution (ODConv) to improve surface defect feature extraction and designing a lightweight architecture combining Grouped Spatial Convolution (GSConv) and VoVGSCSP; the model effectively recognized defects and graded quality. Xu et al. [54] tackled the low accuracy and efficiency of automated apple grading by integrating Squeeze-and-Excitation (SE) modules into the YOLOv5 backbone to strengthen feature representation and adopting Distance-IoU Loss (DIoU_Loss) to accelerate convergence. This approach attained high grading speed and accuracy in practical automated grading systems.
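The DIoU loss used for bounding-box regression, as commonly defined, adds a penalty ρ²/c² to the usual 1 − IoU term. Here ρ is the distance between the predicted and ground-truth box centres, and c is the diagonal of the smallest box enclosing both. This penalty still produces a useful training signal when the boxes do not overlap, which is the usual explanation for faster convergence. The minimal Python sketch below assumes boxes given as (x1, y1, x2, y2); the exact loss configuration in [54] is not given here.

```python
def _area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])

def diou_loss(pred, gt):
    """DIoU loss = 1 - IoU + rho^2 / c^2 for boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(pred[2], gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[3], gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    iou = inter / (_area(pred) + _area(gt) - inter)
    # rho^2: squared distance between the two box centres
    rho2 = (((pred[0] + pred[2]) - (gt[0] + gt[2])) ** 2
            + ((pred[1] + pred[3]) - (gt[1] + gt[3])) ** 2) / 4.0
    # c^2: squared diagonal of the smallest box enclosing both boxes
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    return 1.0 - iou + rho2 / (cw ** 2 + ch ** 2)

gt_box = (2, 0, 3, 1)
near = diou_loss((0, 0, 1, 1), gt_box)  # no overlap, centres 2 apart
far = diou_loss((5, 0, 6, 1), gt_box)   # no overlap, centres 3 apart
```

Both predictions have IoU = 0, so a plain IoU loss cannot distinguish them, whereas the DIoU loss is larger for the more distant box.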

Turning to other agricultural products, Guo et al. [55] developed a non-destructive and efficient method for analyzing tea blend ratios and matching samples. Built on a ResNet architecture with the Convolutional Block Attention Module (CBAM), the model captured features more effectively and achieved high precision in tea categorization and mixture analysis. Zhang et al. [56] proposed a YOLOv4-Tiny-based deep learning model for vegetable quality inspection. Using the Cross Stage Partial Network (CSPNet) structure to design a feature enhancement network, the method extracted discriminative features of various cherry tomato categories, offering a practical and efficient solution for multi-class detection. However, existing methods still recognize defects unstably when agricultural products vary widely in appearance, lighting conditions change, and backgrounds are complex.
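The attention modules mentioned above (SE in [54], CBAM in [55]) reweight feature channels according to their global statistics. The sketch below shows only the channel-attention half of CBAM for a single feature map, under stated assumptions: random placeholder weights, a reduction ratio of 2, and no MLP biases; CBAM's spatial-attention step is not reproduced. It is an illustration of the mechanism, not the configuration used by Guo et al.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(feat, w1, w2):
    """CBAM-style channel attention for one feature map of shape (C, H, W).

    w1 (C//r, C) and w2 (C, C//r) form an MLP shared by the average-pooled and
    max-pooled channel descriptors (biases omitted in this sketch).
    """
    def shared_mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)   # hidden layer with ReLU

    avg = feat.mean(axis=(1, 2))              # (C,) global average pooling
    mx = feat.max(axis=(1, 2))                # (C,) global max pooling
    weights = sigmoid(shared_mlp(avg) + shared_mlp(mx))  # (C,) values in (0, 1)
    return feat * weights[:, None, None], weights


# Example with random placeholder weights (C = 8 channels, reduction ratio r = 2)
rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 16, 16))
w1 = 0.1 * rng.standard_normal((4, 8))
w2 = 0.1 * rng.standard_normal((8, 4))
refined, channel_weights = channel_attention(feat, w1, w2)
```

An SE block differs mainly in using only the average-pooled descriptor; CBAM adds the max-pooled branch and then a separate spatial attention map.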

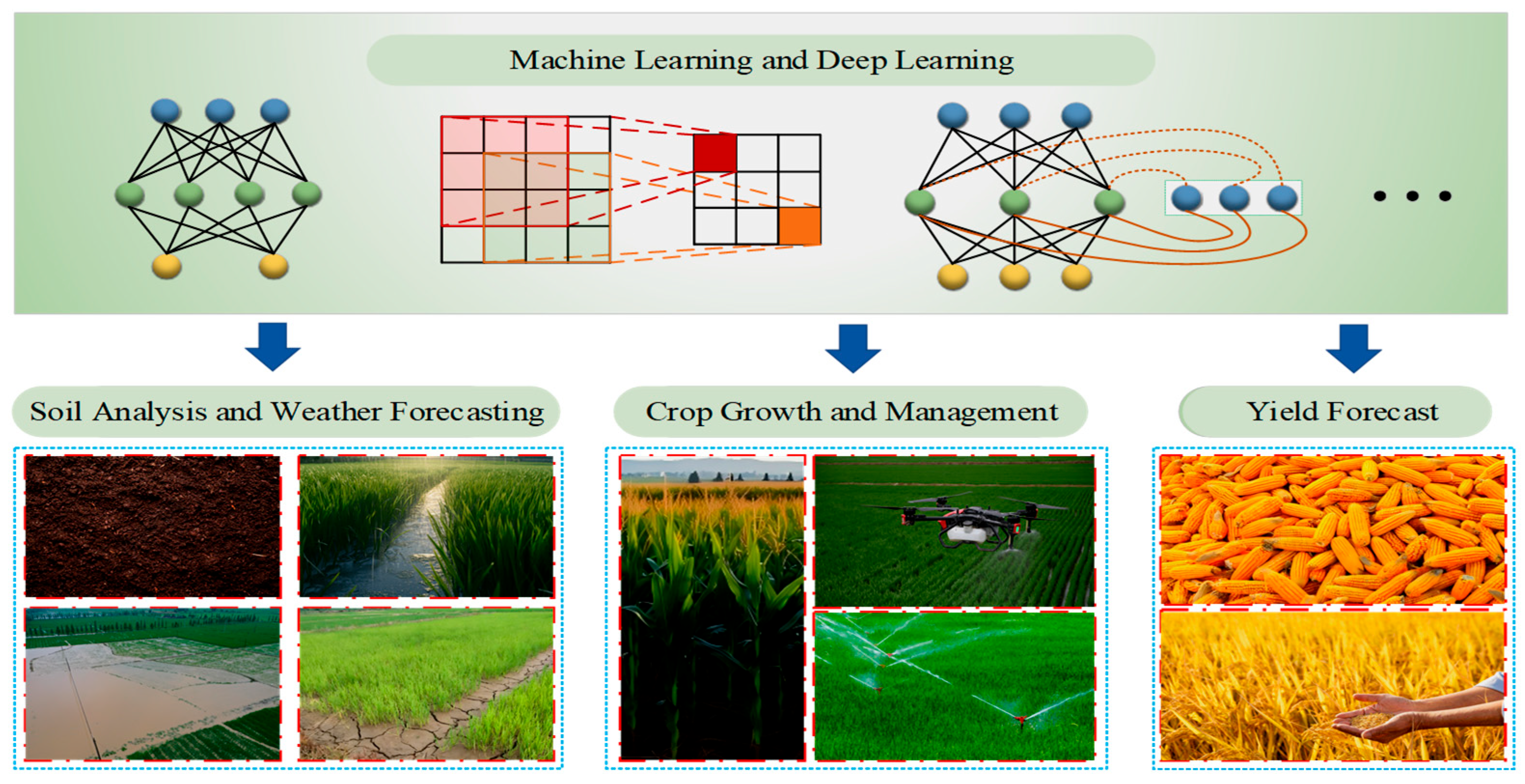

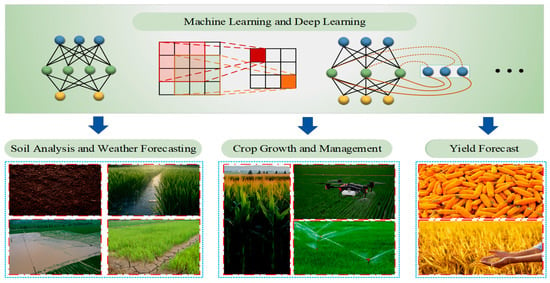

4. AI in Crop Growth and Agricultural Condition Forecasting

Systems that monitor crop growth and forecast agricultural conditions can help farmers understand crop development, improve production efficiency, and safeguard agricultural output [62]. With advances in AI, learning-based methods have shown strong performance in predicting crop growth and agricultural conditions. As illustrated in Figure 4, this section briefly reviews recent research in three domains: growing environment, crop growth and management, and yield forecasting.

Figure 4.

Illustration of crop growth and agricultural forecasts.

4.1. Growing Environment

Soil nutrient content is crucial for crop selection and pre-planting fertilization, directly affecting crop growth and yield [63]. Soil nutrient forecasting mainly involves predicting soil moisture and nutrient concentrations. Reda et al. [64] employed multiple machine learning algorithms to build estimation models for Soil Organic Carbon (SOC) and Total Nitrogen (TN). Khanal et al. [65] used aerial remote sensing imagery of farmland to build datasets for predicting soil nutrient content, applying SVM, Cubist, RF, and neural networks to forecast soil pH and the concentrations of elements such as magnesium (Mg) and potassium (K). Bhattacharyya et al. [66] proposed a two-stage ensemble prediction model integrating the Gaussian Probability Method (GPM), CNN, and SVM to forecast soil moisture, validated on soil moisture measurements from the Godavari River Plateau and Krishna River Plateau. The method reached a prediction accuracy of 89.53%, compared with only 82.56% for traditional single-stage ensemble models, a significant improvement. Cui et al. [67] used three machine learning algorithms (RF, Support Vector Regression (SVR), and Artificial Neural Network (ANN)) to construct Soil Salinity Content (SSC) estimation models. Using multi-temporal Sentinel-2 imagery and ground-truth data, they classified sunflower (Helianthus annuus L.) and maize fields, analyzed temporal characteristics across growth stages, and estimated SSC by combining vegetation and salinity indices. Their results show that separating crop types and accounting for the time series markedly strengthens the correlation between SSC and the spectral indices, improving SSC estimation. However, existing analytical and predictive methods remain inefficient, cover limited areas, and are difficult to deploy on edge devices. Future work should further optimize multi-source data fusion and lightweight algorithms so that soil analysis can move from monitoring to supporting agricultural decisions.
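The two-stage ensemble idea behind models such as that of Bhattacharyya et al. [66] can be illustrated with a generic stacked regressor: stage 1 fits several base models, and stage 2 fits a meta-model on their out-of-fold predictions so that it never sees in-sample fits. This is a minimal sketch under loudly stated assumptions: the base learners here are simple stand-ins (ordinary least squares and k-nearest-neighbour regression, not GPM, CNN, or SVM), the meta-model is linear, and the data are synthetic.

```python
import numpy as np


def fit_linear(X, y):
    """Ordinary least squares with intercept; returns a predict function."""
    A = np.c_[np.ones(len(X)), X]
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda Xq: np.c_[np.ones(len(Xq)), Xq] @ coef


def fit_knn(X, y, k=5):
    """k-nearest-neighbour regression (mean of the k closest targets)."""
    def predict(Xq):
        d = np.linalg.norm(Xq[:, None, :] - X[None, :, :], axis=2)
        return y[np.argsort(d, axis=1)[:, :k]].mean(axis=1)
    return predict


def fit_stacked(X, y, base_fitters, n_folds=5, seed=0):
    """Two-stage (stacked) ensemble with a linear stage-2 meta-model."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), n_folds)
    oof = np.zeros((len(X), len(base_fitters)))   # out-of-fold base predictions
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(X)), test_idx)
        for j, fit in enumerate(base_fitters):
            oof[test_idx, j] = fit(X[train_idx], y[train_idx])(X[test_idx])
    meta = fit_linear(oof, y)                      # stage 2
    bases = [fit(X, y) for fit in base_fitters]    # refit stage 1 on all data
    return lambda Xq: meta(np.column_stack([b(Xq) for b in bases]))


# Synthetic example: one soil covariate with a nonlinear effect on moisture
rng = np.random.default_rng(1)
X = rng.uniform(0, 6, size=(200, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(200)
model = fit_stacked(X, y, [fit_linear, fit_knn])
pred = model(X)
```

The meta-model learns how much to trust each base learner; here it should lean on the kNN stand-in, because the linear model cannot follow the sine-shaped relationship.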

Climate factors also strongly influence crop growth: suitable temperature and rainfall directly affect plant development and final yield [68]. Yue et al. [69] proposed an encoder–decoder model based on Convolutional LSTM (ConvLSTM) that integrates LSTM and ConvLSTM architectures, achieving high-precision annual forecasting of three key meteorological elements: sunshine duration, cumulative precipitation, and daily mean temperature. In practice, however, the model becomes much less reliable when real-time weather inputs are insufficient, and emergency response mechanisms for extreme climate events remain underdeveloped. Targeting extreme weather, Devarashetti et al. [70] proposed a climate prediction model that combines residual LSTM (R-LSTM) with Artificial Gorilla Troops Optimized Deep Learning Networks (AGTO-DLN), substantially improving the forecasting accuracy of extreme weather events. However, the model does not incorporate real-time climatic factors, which severely restricts its applicability in dynamic agricultural environments.
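ConvLSTM differs from an ordinary LSTM in that its gates are computed with convolutions, so the hidden and cell states remain spatial grids (for example, gridded meteorological fields). The sketch below implements one ConvLSTM time step for single-channel inputs with 3×3 kernels. It is an illustration of the cell only, not the encoder–decoder of Yue et al. [69]; the peephole terms of the original ConvLSTM formulation are omitted, and the weights and inputs are random placeholders.

```python
import numpy as np


def conv2d_same(x, k):
    """2-D cross-correlation of a single-channel map x with an odd-sized kernel k,
    zero-padded so the output has the same shape as x."""
    ph, pw = k.shape[0] // 2, k.shape[1] // 2
    xp = np.pad(x, ((ph, ph), (pw, pw)))
    out = np.zeros_like(x, dtype=float)
    for i in range(k.shape[0]):
        for j in range(k.shape[1]):
            out += k[i, j] * xp[i:i + x.shape[0], j:j + x.shape[1]]
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def convlstm_step(x, h, c, params):
    """One ConvLSTM step without peephole terms. params maps each gate name
    ('i', 'f', 'o', 'g') to (input kernel, hidden kernel, scalar bias)."""
    pre = {name: conv2d_same(x, wx) + conv2d_same(h, wh) + b
           for name, (wx, wh, b) in params.items()}
    i, f, o = sigmoid(pre["i"]), sigmoid(pre["f"]), sigmoid(pre["o"])
    g = np.tanh(pre["g"])
    c_new = f * c + i * g            # cell state keeps its spatial layout
    h_new = o * np.tanh(c_new)       # hidden state, also a grid
    return h_new, c_new


rng = np.random.default_rng(0)
params = {name: (0.1 * rng.standard_normal((3, 3)), 0.1 * rng.standard_normal((3, 3)), 0.0)
          for name in "ifog"}
h = c = np.zeros((10, 12))
for t in range(5):
    x_t = rng.standard_normal((10, 12))   # hypothetical gridded input, e.g. a temperature field
    h, c = convlstm_step(x_t, h, c, params)
```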

Agricultural drought is a recurrent natural disaster in crop cultivation, typically arising from meteorological or soil drought. Soil moisture then remains below crop requirements throughout the growing season, causing reduced yields, complete crop failure, or plant death [71]. Consequently, many researchers have developed drought prediction systems. Statistical methods such as the Autoregressive Integrated Moving Average (ARIMA) model are commonly used, but as linear models they cannot capture the nonlinear temporal patterns in remote sensing data. Guo et al. [72] therefore combined ARIMA with LSTM, exploiting the former's ability to model linear trends and the latter's ability to extract nonlinear temporal information. Using both the linear trend and the temporal information in the Vegetation Temperature Condition Index (VTCI) time series, they achieved high-precision drought forecasting for the Sichuan Basin. Khan et al. [73] designed a hybrid model combining the wavelet transform with ARIMA-ANN (W-2A), using the Standardized Precipitation Index (SPI) and the Standard Index of Annual Precipitation (SIAP) as drought indices to forecast future drought events. Gowri et al. [74] used a Triple Exponential-LSTM (TEX-LSTM) model to forecast the Standard Vegetation Index (SVI) and the Vegetation Health Index (VHI) and thereby determine drought occurrence. Mokhtarzad et al. [75] applied SVM, ANN, and Adaptive Neuro-Fuzzy Inference Systems (ANFIS) to drought prediction, with SPI as the drought indicator. De Vos et al. [76] integrated Earth Observation (EO)-based monitoring with Categorical Boosting (CatBoost) regression to forecast negative anomalies of the Normalized Difference Vegetation Index (NDVI), a key drought indicator, three months in advance and with high precision across Africa. This shifts drought management from reactive response to proactive early warning, although forecasting during the transition from the rainy season remains challenging.
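The ARIMA-plus-LSTM combination of Guo et al. [72] follows a common hybrid pattern: a linear model captures the autoregressive trend, a nonlinear model forecasts what the linear model leaves in its residuals, and the two forecasts are added. The sketch below shows that pattern under simplifying assumptions: the linear part is an AR(p) model fitted by least squares (no differencing or moving-average terms), and a k-nearest-neighbour forecaster on lagged residuals stands in for the LSTM. The series is synthetic, not VTCI data.

```python
import numpy as np


def lagged(series, p):
    """Columns hold the values at t-1, ..., t-p for each target index t >= p."""
    return np.column_stack([series[p - k - 1:len(series) - k - 1] for k in range(p)])


def fit_ar(series, p):
    """Least-squares AR(p) with intercept (linear stand-in for ARIMA)."""
    X = np.c_[np.ones(len(series) - p), lagged(series, p)]
    coef, *_ = np.linalg.lstsq(X, series[p:], rcond=None)
    return coef, series[p:] - X @ coef


def knn_next(resid, q, k=5):
    """Nonlinear residual forecast (stand-in for the LSTM): average the successors
    of the k past residual windows closest to the most recent window."""
    windows, targets = lagged(resid, q), resid[q:]
    latest = resid[::-1][:q]                 # residuals at n-1, ..., n-q
    d = np.linalg.norm(windows - latest, axis=1)
    return targets[np.argsort(d)[:k]].mean()


def hybrid_forecast(series, p=2, q=3, k=5):
    coef, resid = fit_ar(series, p)
    linear = coef[0] + coef[1:] @ series[::-1][:p]   # uses y_{n-1}, ..., y_{n-p}
    return linear + knn_next(resid, q, k)


# Synthetic AR(1) series standing in for a drought-index time series
rng = np.random.default_rng(42)
series = np.zeros(500)
for t in range(1, 500):
    series[t] = 0.8 * series[t - 1] + 0.1 * rng.standard_normal()
coef, _ = fit_ar(series, p=1)
next_value = hybrid_forecast(series, p=1, q=3)
```

The additive split is the key design choice: each component is only asked to model the structure it is suited for.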

4.2. Crop Growth and Management

The growth status of crops largely reflects their health. Predicting crop growth stages helps schedule cultivation practices, time mechanical harvesting, and forecast yields. Some researchers predict growth stages indirectly, first forecasting growth-related indicators and then leaving decision-makers to determine the specific stage [77,78,79]. This approach, however, requires decision-makers to have the specialized knowledge needed to interpret the predicted indicators. Other researchers therefore forecast crop growth characteristics directly for a comprehensive assessment. Pal et al. [80] proposed a Digital Twin (DT) system for cotton (Gossypium hirsutum L.) based on UAV remote sensing data and machine learning that accurately predicts key canopy parameters, including Canopy Cover (CC), Canopy Height (CH), Canopy Volume (CV), and the Excess Green index (ExG). Lou et al. [81] used a 1D-ResNet18 deep learning model to predict polyphenol and crude fiber content in fresh tea leaves. Tsai et al. [82] used ensemble machine learning to assess bean sprout (Glycine max (L.) Merr.) growth conditions and forecast growth requirements. Yue et al. [69] combined a ConvLSTM encoder–decoder with traditional neural networks, achieving high accuracy in predicting maize growth stages.
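ExG, one of the parameters predicted in [80], is computed per pixel from RGB chromatic coordinates as ExG = 2g − r − b, where r = R/(R+G+B) and similarly for g and b. A common way to derive canopy cover from RGB imagery is to threshold ExG and take the fraction of vegetation pixels. The sketch below assumes an (H, W, 3) RGB array; the threshold of 0.1 is a hypothetical value that would normally be tuned or chosen automatically (e.g. with Otsu's method).

```python
import numpy as np


def excess_green(rgb):
    """ExG = 2g - r - b on chromatic coordinates; black pixels map to 0."""
    rgb = rgb.astype(float)
    total = rgb.sum(axis=-1, keepdims=True)
    chrom = np.divide(rgb, total, out=np.zeros_like(rgb), where=total > 0)
    r, g, b = chrom[..., 0], chrom[..., 1], chrom[..., 2]
    return 2 * g - r - b


def canopy_cover(rgb, threshold=0.1):
    """Fraction of pixels classified as vegetation (ExG above a hypothetical threshold)."""
    return float((excess_green(rgb) > threshold).mean())


# One pure-green pixel and one grey (soil-like) pixel
img = np.array([[[0, 255, 0], [100, 100, 100]]], dtype=np.uint8)
exg = excess_green(img)
cover = canopy_cover(img)
```

A pure-green pixel reaches the maximum ExG of 2, while any grey pixel scores 0, which is why ExG separates green canopy from soil and residue reasonably well.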

In agricultural production management and decision-making, intelligent monitoring and prediction systems can reduce workers' labor intensity, minimize losses of grain and other agricultural products during storage, and improve storage quality, thereby supporting the development of smart agricultural warehouses and precision farming. Agricultural greenhouses are core facilities for the intensive, intelligent, and sustainable development of modern agriculture [83], and many researchers have studied intelligent management systems for them. Francik et al. [84] proposed an ANN-based system for predicting the internal temperature of heated plastic greenhouses. Lee et al. [85] combined ConvLSTM, CNN, and a regression Backpropagation Neural Network (BPNN) in a hybrid deep learning model to build an AI-powered greenhouse environmental control system. Scientific irrigation significantly improves crop yield and quality, whereas improper irrigation wastes resources and causes ecological problems [86,87,88]. Elbeltagi et al. [89] and Raza et al. [90] evaluated the predictive performance of multiple machine learning algorithms using evapotranspiration (ET) as the irrigation indicator. Tong et al. [91] combined Competitive Adaptive Reweighted Sampling (CARS) with CatBoost to forecast the transpiration rate (Tr) of greenhouse tomatoes, providing an intelligent basis for precise irrigation control. Appropriate nitrogen fertilization aids crop growth, but excessive application causes soil compaction, water pollution, and greater susceptibility to lodging [92]. Zhang et al. [93] used multiple machine learning algorithms to build estimation models from UAV RGB imagery, integrating spectral and textural features to estimate the Plant Nitrogen Content (PNC) of winter wheat and demonstrating the feasibility of UAV RGB systems for this purpose. Zhang et al. [94] combined SVM with UAV multispectral vegetation indices to predict the Leaf Chlorophyll Content (LCC) of winter wheat, enabling indirect nitrogen fertilizer management.
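When ET is used as an irrigation indicator, machine learning models are often trained or evaluated against reference evapotranspiration (ET0) computed with the FAO-56 Penman–Monteith equation. Whether [89] and [90] used exactly this target is an assumption here. The sketch computes daily ET0 (mm/day) from daily weather; inputs follow FAO-56 units (temperature in °C, vapour pressure and pressure in kPa, radiation and soil heat flux in MJ m⁻² day⁻¹, wind speed at 2 m in m/s).

```python
import math


def sat_vp(t):
    """Saturation vapour pressure (kPa) at air temperature t (deg C)."""
    return 0.6108 * math.exp(17.27 * t / (t + 237.3))


def et0_fao56(t_max, t_min, ea, rn, u2, g=0.0, pressure=101.3):
    """Daily reference evapotranspiration ET0 (mm/day), FAO-56 Penman-Monteith.

    ea: actual vapour pressure (kPa); rn, g: net radiation and soil heat flux
    (MJ m-2 day-1); u2: wind speed at 2 m (m/s); pressure: atmospheric pressure (kPa).
    """
    t = (t_max + t_min) / 2
    es = (sat_vp(t_max) + sat_vp(t_min)) / 2          # mean saturation vapour pressure
    delta = 4098 * sat_vp(t) / (t + 237.3) ** 2       # slope of the vapour pressure curve
    gamma = 0.665e-3 * pressure                       # psychrometric constant (kPa/degC)
    num = 0.408 * delta * (rn - g) + gamma * 900 / (t + 273) * u2 * (es - ea)
    return num / (delta + gamma * (1 + 0.34 * u2))


# Illustrative mid-latitude summer day
et0 = et0_fao56(t_max=21.5, t_min=12.3, ea=1.409, rn=13.28, u2=2.078, pressure=100.1)
```

The first numerator term is the radiation-driven component and the second the aerodynamic (vapour-pressure-deficit) component; an ML model predicting ET effectively learns this mapping from whatever subset of these variables is available.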

In summary, despite the immense potential of smart technologies across all aspects of agricultural management, current practical applications still commonly face challenges such as reliance on experience-based decision-making, inefficient resource utilization, and difficulties in real-time monitoring of key physiological parameters.

4.3. Yield Forecast

Reliable crop yield forecasting is of paramount importance to agricultural producers and remains a prominent research focus in smart agriculture worldwide [95]. Yield prediction commonly relies on statistical models or crop growth models, but both have limitations: the former require high-quality data, while the latter carry substantial uncertainty at large spatial scales. With the advancement of AI, more researchers are applying machine learning to crop yield prediction. Some have evaluated the performance of individual machine learning algorithms [96,97,98], while others have combined machine learning algorithms with different prediction models, validating the feasibility of hybrid prediction models [99,100,101,102]. Feng et al. [103] developed a hybrid dynamic prediction model that integrates APSIM-simulated biomass at multiple crop growth stages, extreme weather events before the prediction date, NDVI, and the Standardized Precipitation Evapotranspiration Index (SPEI); using random forests and multiple linear regression, the model achieved high prediction accuracy in experiments. Lee et al. [104] proposed a country-specific maize yield forecasting system based on Earth Observation (EO) data and Extremely Randomized Trees (ERT), achieving high-precision maize yield predictions for multiple countries in Southern Africa.

Although machine learning performs well in crop yield prediction, traditional machine learning methods have limited ability to extract features from input data and therefore cannot fully exploit the information in remote sensing data. Deep learning overcomes this limitation with stronger fitting capability and greater flexibility. Accordingly, many researchers have used deep neural networks to extract spatial features from remote sensing data for yield forecasting [105,106,107], and LSTM-based models are frequently used to predict wheat and rice yields [108,109,110]. Ren et al. [111] combined the World Food Studies (WOFOST) growth model with a GRU, using different feature combinations at different growth stages to forecast maize yields. Fieuzal et al. [112] proposed a dual-mode, neural network-based maize yield prediction system that integrates multi-source satellite data (optical and microwave remote sensing) to support both diagnostic predictions over the entire growth period and real-time forecasting during the growing season, achieving optimal prediction accuracy three months before harvest. However, cloud cover during remote sensing acquisition frequently causes data gaps that reduce prediction accuracy. Future research should prioritize hybrid models that mitigate the impact of such missing data.
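As a point of reference for the cloud-gap problem, the simplest baseline replaces cloud-contaminated observations by linear interpolation in time between the nearest clear acquisitions. The sketch below is such a baseline, not the hybrid approach the text calls for; the acquisition days, NDVI values, and cloud flags are hypothetical, and values outside the first/last clear dates are held constant (the behaviour of `np.interp`).

```python
import numpy as np


def fill_cloud_gaps(days, values, cloudy):
    """Replace cloud-flagged observations by linear interpolation in time
    between the nearest clear acquisitions."""
    days = np.asarray(days, dtype=float)
    values = np.asarray(values, dtype=float)
    clear = ~np.asarray(cloudy, dtype=bool)
    filled = values.copy()
    filled[~clear] = np.interp(days[~clear], days[clear], values[clear])
    return filled


# Hypothetical NDVI series: acquisitions on days 10 and 30 are cloud-contaminated
days = [0, 10, 20, 30, 40]
ndvi = [0.2, 0.9, 0.4, 0.1, 0.6]
cloudy = [False, True, False, True, False]
filled = fill_cloud_gaps(days, ndvi, cloudy)
```

Linear interpolation cannot recover events that occur entirely under cloud, which is why fusing microwave data (as in [112]) or learned gap-filling models is attractive.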

The strong performance of AI-based crop growth and agricultural condition prediction relies heavily on large-scale, high-quality data. However, the industry lacks unified data collection standards, and collection is susceptible to noise, so datasets vary widely in quality. Moreover, most existing models are tailored to specific crops in particular regions, generalize poorly, and demand substantial computational resources. Future research should therefore focus on lightweight models and better generalization. The methods used in some of the literature cited in this section are summarized in Table 2.

Table 2.

Summary of AI methods for crop growth and agricultural condition forecasting.

5. AI in Fault Diagnosis of Agricultural Machinery

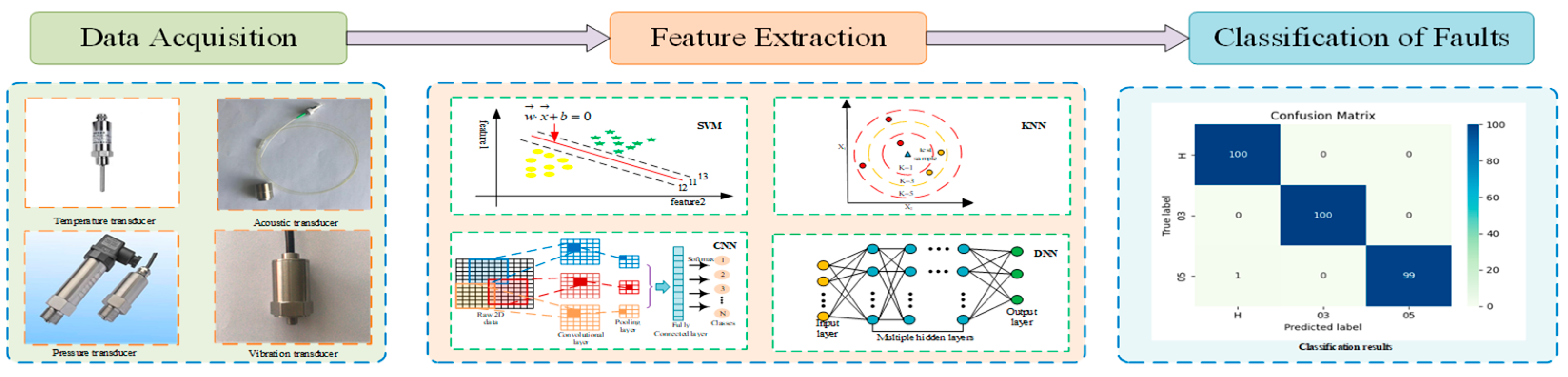

Condition monitoring and fault diagnosis of agricultural machinery are pivotal to stable agricultural production and have shown considerable potential as AI technology advances [6]. This section reviews AI-based fault diagnosis in agricultural machinery, highlighting selected recent studies grouped by machine type. As shown in Figure 5, AI-based fault diagnosis typically consists of data acquisition, feature extraction, and fault classification.

Figure 5.

Illustration of AI-fault diagnosis in agricultural machinery.
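The feature-extraction stage of this pipeline often starts with simple time-domain condition indicators computed on each vibration segment. The sketch below computes four widely used ones (RMS, peak, crest factor, kurtosis) on synthetic signals; which indicators any particular study cited in this section used is not assumed.

```python
import numpy as np


def time_domain_features(x):
    """Common time-domain condition indicators for one vibration segment."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                                     # remove DC offset
    rms = np.sqrt(np.mean(x ** 2))
    peak = np.max(np.abs(x))
    kurtosis = np.mean(x ** 4) / np.mean(x ** 2) ** 2    # non-excess; about 3 for Gaussian noise
    return {"rms": rms, "peak": peak, "crest_factor": peak / rms, "kurtosis": kurtosis}


fs = 1000
t = np.arange(fs) / fs
healthy = np.sin(2 * np.pi * 10 * t)      # smooth periodic vibration (synthetic)
faulty = healthy.copy()
faulty[::100] += 5.0                       # periodic impacts, e.g. from a local defect (synthetic)
f_healthy = time_domain_features(healthy)
f_faulty = time_domain_features(faulty)
```

Impulsive faults raise kurtosis and crest factor far more than RMS, which is why these indicators are often more sensitive to early-stage defects than overall vibration level.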

5.1. Harvesters

Harvesters, as essential equipment in agriculture, frequently experience various faults due to their complex structure and harsh working conditions [113]. Wang et al. [114] comprehensively summarized the current status and future development trends of data-driven methods, including signal processing and AI methods, in structural fault detection for combine harvesters. Traditional signal processing methods have proven valuable in diagnosing faults in agricultural machinery, enabling the extraction of fault characteristics [115]. For example, Li et al. [116] employed order tracking to locate gearbox bearing fault frequencies precisely. However, the performance and generalization capabilities of signal processing methods depend on expert experience and prior knowledge. In contrast, AI-based approaches such as machine learning and deep learning can adaptively learn the mapping relationships between fault features and signals, thereby enhancing diagnostic accuracy and robustness [117].
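Order tracking, as used by Li et al. [116], exploits the fact that bearing defect frequencies scale with shaft speed. Expressed as orders (multiples of shaft rotation frequency), the characteristic fault frequencies stay fixed even when a harvester's speed varies. The sketch below computes the standard kinematic fault frequencies from bearing geometry; the geometry values in the example are hypothetical.

```python
import math


def bearing_fault_orders(n_balls, ball_d, pitch_d, contact_deg=0.0):
    """Characteristic rolling-bearing fault frequencies as orders of shaft speed."""
    ratio = ball_d / pitch_d * math.cos(math.radians(contact_deg))
    return {
        "BPFO": n_balls / 2 * (1 - ratio),                 # outer-race defect
        "BPFI": n_balls / 2 * (1 + ratio),                 # inner-race defect
        "FTF": 0.5 * (1 - ratio),                          # cage (fundamental train)
        "BSF": pitch_d / (2 * ball_d) * (1 - ratio ** 2),  # ball spin
    }


def orders_to_hz(orders, shaft_rpm):
    """Convert orders to frequencies (Hz) at a given shaft speed."""
    fr = shaft_rpm / 60.0
    return {name: order * fr for name, order in orders.items()}


# Hypothetical bearing geometry (mm): 9 balls, 7.94 mm balls, 39.04 mm pitch diameter
orders = bearing_fault_orders(n_balls=9, ball_d=7.94, pitch_d=39.04)
freqs_1800 = orders_to_hz(orders, shaft_rpm=1800)
```

Resampling the vibration signal at constant shaft-angle increments turns these orders into fixed spectral lines, so a peak at the BPFO order indicates an outer-race defect regardless of speed.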

To address component faults in harvesters, Xu et al. [20] presented an SVM-based model for detecting bolt loosening in combine harvesters; by analyzing critical bolt torque and extracting a high-dimensional feature matrix, the method accurately identified bolt failure states under various excitation conditions. Gomez-Gil et al. [118] explored combining a KNN classifier with the Harmony Search (HS) algorithm to diagnose the operational status of rotating harvester components from vibration signals. Martínez-Martínez et al. [119] used a single vibration signal with an ANN optimized by a Genetic Algorithm (GA) to identify the operational states of multiple rotating components in agricultural machinery. Yang et al. [19] used SDAE to denoise vibration signals and then diagnosed combine harvester rolling bearing faults with SVM. To cope with insufficient samples, She et al. [120] proposed a meta-transfer learning approach combining Multi-step Loss Optimization (MSL) and Conditional Domain Adversarial Networks (CDAN) for diagnosing combine harvester gearbox faults under variable operating conditions with limited data, maintaining high diagnostic accuracy in few-shot settings. Ling et al. [121] proposed a threshing cylinder blockage diagnosis model based on a hybrid search sparrow algorithm (HSSA) and SVM, which significantly improved diagnostic accuracy and reduced sample requirements by optimizing algorithm initialization and perturbation strategies. However, existing models depend heavily on relatively clean vibration signals and struggle with complex noise and material interference in the field, so they generalize poorly under real-world conditions.
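The classification stage can be as simple as a k-nearest-neighbour vote over feature vectors, the classifier family used by Gomez-Gil et al. [118]. The sketch below shows only the kNN step; the Harmony Search optimization from that work is not reproduced, and the two-feature vectors (e.g. RMS and kurtosis) and labels are hypothetical.

```python
import numpy as np


def knn_classify(train_X, train_y, query_X, k=3):
    """Majority vote among the k nearest training feature vectors (Euclidean)."""
    train_X, query_X = np.asarray(train_X, float), np.asarray(query_X, float)
    train_y = np.asarray(train_y)
    # Standardize with training statistics so no single feature dominates the distance
    mu, sd = train_X.mean(axis=0), train_X.std(axis=0) + 1e-12
    a, b = (train_X - mu) / sd, (query_X - mu) / sd
    d = np.linalg.norm(b[:, None, :] - a[None, :, :], axis=2)
    nearest = train_y[np.argsort(d, axis=1)[:, :k]]
    labels = []
    for row in nearest:
        vals, counts = np.unique(row, return_counts=True)
        labels.append(vals[np.argmax(counts)])
    return np.array(labels)


# Hypothetical (RMS, kurtosis) features for healthy and faulty rotating components
train_X = [[1.0, 3.0], [1.1, 2.9], [0.9, 3.2], [3.0, 8.0], [3.2, 7.5], [2.9, 8.3]]
train_y = ["normal"] * 3 + ["fault"] * 3
labels = knn_classify(train_X, train_y, [[1.05, 3.1], [3.1, 7.9]])
```

Standardization matters here: without it, whichever feature has the largest numeric range would dominate the distance.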

5.2. Sensors

Sensors are the core of the Agricultural Internet of Things (Ag-IoT), collecting the various data generated during agricultural production and enabling agricultural machinery to operate better in complex farmland environments [122]. Timely detection and diagnosis of sensor failures are therefore crucial for smart agriculture [123]. Karimzadeh et al. [124] investigated five machine learning models for fault diagnosis of Electrical Conductivity (EC) and potential of Hydrogen (pH) sensors in closed-loop hydroponic systems, and proposed a sensor-independent EC prediction framework that does not rely on the target sensor's readings. Kaur et al. [125] proposed an intelligent agricultural diagnosis system that uses reinforcement learning to optimize mobile sink path planning and a hyperparameter-tuned least squares SVM (HT-LS-SVM) to improve sensor fault detection accuracy, improving network reliability and agricultural monitoring efficiency. Ling et al. [126] likewise optimized SVM, proposing an improved dung beetle optimization (IDBO) SVM model for Ag-IoT sensor fault diagnosis that significantly improved diagnostic accuracy with limited sample data. As technology advances, wireless sensor networks (WSNs) are increasingly applied in agricultural monitoring [127]. Barriga et al. [128] proposed an expert system for detecting faults in leaf-turgor pressure sensors; trained on real-world agricultural data, its machine learning model shows potential to replace human experts and improve the reliability and efficiency of precision irrigation. Salhi et al. [129] explored real-time monitoring and intelligent fault diagnosis of farmland environments and equipment using a WSN-based Evolutionary Recurrent Self-Organizing Map (ERSOM) deep learning model. Most models, however, adapt poorly to varying climatic and soil conditions and are susceptible to signal interference between sensors, while scarce samples constrain their generalization. These issues require further research.
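Several of the sensor-diagnosis methods above build on the least squares SVM, whose appeal is that training reduces to solving one linear system rather than a quadratic program. The sketch below implements the standard LS-SVM classifier with an RBF kernel. The regularization `gamma` and kernel width `sigma` are the hyperparameters that tuning schemes such as those in [125] and [126] would search over; here they are fixed, hypothetical values, and the two-class data are synthetic.

```python
import numpy as np


def rbf(A, B, sigma):
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2 * sigma ** 2))


def lssvm_fit(X, y, gamma=10.0, sigma=1.0):
    """LS-SVM classifier: solve
        [0   y^T              ] [b    ]   [0]
        [y   Omega + I / gamma] [alpha] = [1],  Omega_kl = y_k y_l K(x_k, x_l).
    Labels y must be -1 or +1."""
    n = len(y)
    A = np.zeros((n + 1, n + 1))
    A[0, 1:], A[1:, 0] = y, y
    A[1:, 1:] = np.outer(y, y) * rbf(X, X, sigma) + np.eye(n) / gamma
    sol = np.linalg.solve(A, np.r_[0.0, np.ones(n)])
    return sol[0], sol[1:]                      # bias b, multipliers alpha


def lssvm_predict(X_train, y, b, alpha, X_new, sigma=1.0):
    """Decision: sign(sum_k alpha_k y_k K(x, x_k) + b)."""
    return np.sign(rbf(X_new, X_train, sigma) @ (alpha * y) + b)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.3, (20, 2)), rng.normal(2.0, 0.3, (20, 2))])
y = np.r_[-np.ones(20), np.ones(20)]            # -1 = healthy sensor, +1 = faulty (synthetic)
b, alpha = lssvm_fit(X, y)
pred = lssvm_predict(X, y, b, alpha, np.array([[0.0, 0.0], [2.0, 2.0]]))
```

The trade-off is that every training point receives a nonzero multiplier, so LS-SVM loses the sparsity of a standard SVM; for the small sample sizes typical of sensor fault data, this is rarely a problem.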

5.3. Tractors

Tractors are another typical example of agricultural power machinery [130]. Xu et al. [23] used feature fusion based on an improved CNN-Bidirectional LSTM (CNN-BiLSTM) to diagnose faults in tractor transmission systems, maintaining high accuracy even in noisy environments; the same authors also integrated TimeGAN with a multi-head self-attention Transformer model to address sample deficiency [131]. Ni et al. [132] used SVM to identify various faults in the tractor PST electro-hydraulic system, analyzing flow and pressure signals to achieve high classification and fault identification performance. Hosseinpour-Zarnaq et al. [21] proposed a fault diagnosis method for tractor transmissions based on vibration analysis and an RF classifier: vibration signals were acquired at different rotational speeds, features were extracted with the Discrete Wavelet Transform (DWT) and optimized with Correlation-based Feature Selection (CFS), yielding accurate classification. Xue et al. [133] investigated a method based on PCA and an improved Gaussian Naive Bayes Algorithm (GNBA) for diagnosing faults in the control system of a tractor hydrostatic power-split continuously variable transmission (CVT); with optimized time window selection and feature extraction, it achieved higher fault classification accuracy than other conventional algorithms. In addition, Wang et al. [22] developed a fault diagnosis method for diesel generators based on Infrared Thermography (IRT) and CNN, and Xiao et al. [134] proposed the Competitive Multiswarm Cooperative Particle Swarm Optimizer (COM-MCPSO) algorithm for diesel engine fault diagnosis.
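DWT-based features, as in Hosseinpour-Zarnaq et al. [21], are often the energies of the wavelet sub-bands of each vibration segment. The source does not name the wavelet family, so the sketch assumes the Haar wavelet because it is the simplest orthonormal choice. Because the transform is orthonormal, the band energies sum to the signal energy (for lengths divisible by 2^levels), so each feature measures how vibration energy is distributed across frequency bands.

```python
import numpy as np


def haar_dwt_energies(x, levels=3):
    """Multi-level orthonormal Haar DWT; returns the energy of each detail band
    (finest first) followed by the energy of the final approximation band."""
    a = np.asarray(x, dtype=float)
    energies = []
    for _ in range(levels):
        if len(a) % 2:                    # pad odd-length input by repeating the last sample
            a = np.append(a, a[-1])
        even, odd = a[0::2], a[1::2]
        detail = (even - odd) / np.sqrt(2)
        a = (even + odd) / np.sqrt(2)     # approximation passed to the next level
        energies.append(np.sum(detail ** 2))
    energies.append(np.sum(a ** 2))
    return np.array(energies)


rng = np.random.default_rng(0)
segment = rng.standard_normal(1024)       # synthetic vibration segment
features = haar_dwt_energies(segment, levels=4)
```

A feature-selection step such as CFS would then choose which of these band energies (possibly from several sensors or speeds) to pass to the classifier.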

A major limitation in current tractor fault diagnosis research is that most methods conduct isolated analyses of individual subsystems, such as the transmission or engine, failing to adequately account for systemic coupling faults within the tractor as a complex integrated machine. Furthermore, the decision-making processes within these models often lack interpretability, hindering direct on-site repairs.

5.4. Pumps

Pumps are widely used in agricultural irrigation and urban water supply because of their high flow capacity and reliability [135], so extensive research has addressed stable pump operation [136,137]. Wang et al. [138] combined a self-attention mechanism with a Dense Neural Network (DNN) to identify flexible winding faults in double-suction centrifugal pumps, maintaining superior classification accuracy even in noisy environments. Prasshanth et al. [139] converted sensor vibration signals into images to enhance feature representation and applied transfer learning with 15 pre-trained deep learning models to classify different faults; the optimized AlexNet achieved the highest accuracy with the shortest processing time. In practice, however, it is often difficult to obtain enough data to train such models. Zou et al. [140] therefore combined a Self-Calibrating Attention Mechanism (SCAM) with a Distributed Edge Prediction Strategy (DEPS) for pump fault diagnosis: SCAM transferred features from the source domain and generated new samples following a Gaussian distribution, and DEPS was used to design an edge discriminator that prevents feature aliasing, improving classification accuracy under few-shot conditions. Zou et al. [141] also integrated a diffusion mechanism and a self-adaptive learning rate with the Model-Agnostic Meta-Learning Strategy (MAMLS) to improve the accuracy of pump anomaly detection.
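The self-attention mechanism used by Wang et al. [138] lets each element of a sequence weight all other elements by learned similarity. The sketch below is standard single-head scaled dot-product self-attention. How [138] arranges its inputs is not described here; purely as an assumption, each row of `X` is taken to be a feature vector from one segment of a vibration signal, and the projection matrices are random placeholders.

```python
import numpy as np


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over a sequence X of shape (T, d)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])
    scores -= scores.max(axis=1, keepdims=True)        # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)      # softmax over sequence positions
    return weights @ V, weights


rng = np.random.default_rng(0)
X = rng.standard_normal((6, 4))                        # 6 signal segments x 4 features (hypothetical)
Wq, Wk, Wv = (rng.standard_normal((4, 4)) for _ in range(3))
out, attn = self_attention(X, Wq, Wk, Wv)
```

Because each output row is a weighted average over all segments, a noisy segment can be down-weighted in favour of informative ones, which is consistent with the noise robustness reported for [138].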

Although these advanced methods enhance performance in scenarios with small samples and noisy environments, their model structures are often highly complex, demanding significant computational resources. Furthermore, when addressing unknown or compound failures in pump equipment, their interpretability and generalization capabilities may be limited.

5.5. Others

Several other applications, each with limited research to date, are grouped here into a single category. Bai et al. [6] summarized deep learning-based motor fault diagnosis for agricultural machinery, providing reliable solutions for sustainable agricultural practices. Xie et al. applied Graph Convolutional Networks (GCNs) [142] and SVM [143], respectively, to fault diagnosis of rolling bearings in agricultural machines.
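A GCN layer updates each node's features by aggregating those of its neighbours through a normalized adjacency matrix. The sketch below implements the widely used propagation rule ReLU(D^-1/2 (A + I) D^-1/2 H W). How Xie et al. [142] construct the graph from bearing signals is not described here; the three-node graph and identity features are hypothetical (for instance, nodes could be signal segments connected by similarity).

```python
import numpy as np


def gcn_layer(A, H, W):
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    A_hat = A + np.eye(len(A))                      # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))   # degrees of A_hat
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ H @ W, 0.0)


# Hypothetical 3-node path graph (e.g. consecutive signal segments)
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
out = gcn_layer(A, np.eye(3), np.eye(3))
```

Stacking such layers and pooling the node features gives a graph-level representation that a classifier can map to fault categories.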

For faults in agricultural vehicles, Rajakumar et al. [144] acquired vehicle acoustic signals with smartphones and used an optimized deep CNN (DCNN) for feature extraction and classification to identify vehicle faults. Gupta et al. [145] investigated lightweight ANNs optimized with a GA for vehicle fault detection. Mystkowski et al. [146] presented a method for diagnosing faults in a rotary hay tedder based on vibration data and Multilayer Perceptron (MLP) neural networks; time- and frequency-domain metrics of the vibration signals were combined to train the MLP, which achieved high detection accuracy in both laboratory and real-world conditions. Luque et al. [147] likewise combined vibration signals with the RF algorithm to diagnose the gripping pliers of bottling plants. These studies remain few, however, and lack systematic comparison and in-depth optimization for specific agricultural applications; moreover, the effectiveness and robustness of most methods have yet to be fully validated in real farmland environments.
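Frequency-domain metrics complement time-domain indicators when building inputs for classifiers such as the MLP of Mystkowski et al. [146]. The exact metrics used in that work are not given here; the sketch computes two common ones, the dominant frequency and the spectral centroid, from the magnitude spectrum of a synthetic signal.

```python
import numpy as np


def frequency_features(x, fs):
    """Dominant frequency and spectral centroid (Hz) of a real signal sampled at fs Hz."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    mag = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    dominant = freqs[np.argmax(mag)]
    centroid = np.sum(freqs * mag) / np.sum(mag)
    return dominant, centroid


fs = 1000
t = np.arange(fs) / fs
signal = np.sin(2 * np.pi * 50 * t)        # synthetic 50 Hz vibration
dominant, centroid = frequency_features(signal, fs)
```

In practice these values are concatenated with time-domain indicators into one feature vector per segment before training the classifier.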

Despite the significant achievements of the AI-based fault diagnosis methods summarized in Table 3, these methods require abundant data to train the diagnostic model, covering various load and supply conditions and different fault severities. In addition, training consumes substantial computational resources and time, and the resulting models perform poorly when operating conditions change. Future research could therefore focus on operational efficiency, learning from limited samples, and model generalization to further improve the effectiveness of AI methods.

Table 3.

Summary of AI-based fault diagnosis methods.

6. AI Agricultural Robots

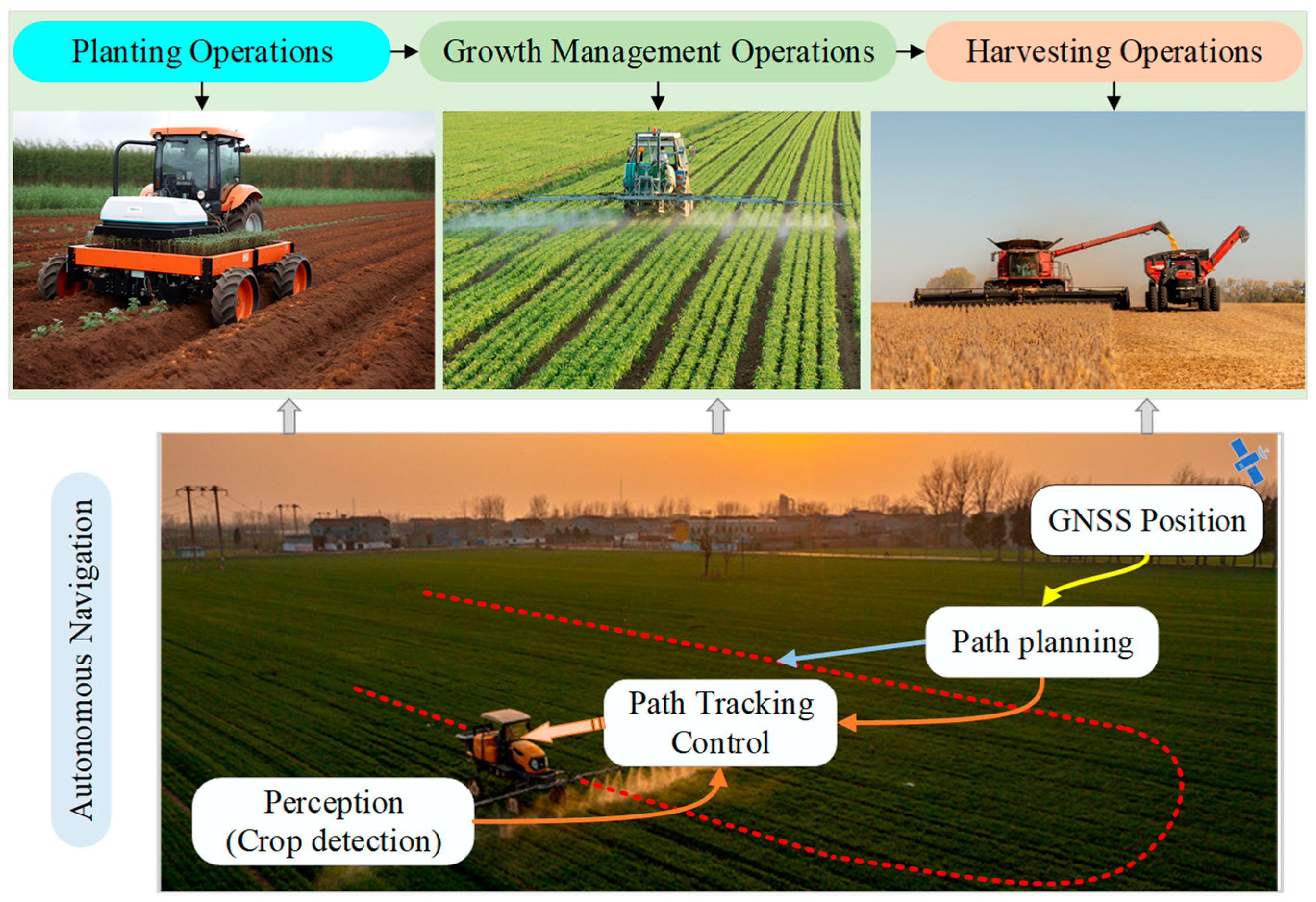

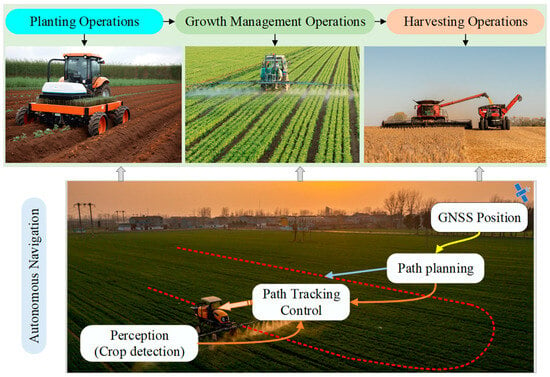

AI agricultural robots represent a cutting-edge application of AI and robotics in modern agriculture and are increasingly adopted in agricultural production, mainly because of their potential to support environmental protection, raise production efficiency, mitigate labor shortages, and improve food safety [5,148,149]. The technological evolution of agricultural robots currently centers on intelligent field operations, particularly planting, growth management, and harvesting, and numerous robots capable of replacing manual labor have emerged across these three stages of agricultural production, as illustrated in Figure 6. This transformation has become a crucial pathway for addressing global food security challenges and for automating and upgrading agriculture [150]. In this context, autonomous navigation has become a core pillar of intelligent agricultural robots. Their autonomous navigation framework relies mainly on three modules: localization and perception [151,152,153,154], path planning [155,156], and path tracking control [157].

Figure 6.

AI agricultural robot application scenarios.

6.1. Autonomous Navigation Technologies of AI Agricultural Robots

6.1.1. Localization and Perception

Localization and perception form the foundation of autonomous navigation for AI agricultural robots: high-precision positioning and perception technologies supply the core data for situational awareness in complex field environments.

AI agricultural robots mainly achieve relatively high-precision localization by integrating Global Navigation Satellite Systems (GNSS) and Inertial Navigation Systems (INS) [158]. Both have limitations, however: GNSS signals may degrade or be lost in adverse weather or complex environments, while INS errors accumulate over time without external correction. Zhou et al. [159] proposed a neural network-based Simultaneous Localization and Mapping (SLAM)/GNSS fusion localization algorithm to prevent agricultural robots from losing control when GNSS signals degrade or are lost in complex agricultural environments. In orchard field tests, the algorithm achieved an overall average positioning error of 0.12 m, showing that it maintains accuracy on the order of 10 cm even when GNSS signals fluctuate or are denied. Li et al. [160] proposed a navigation method integrating Real-Time Kinematic GNSS (RTK-GNSS), INS, and Light Detection and Ranging (LiDAR). Using dynamic switching strategies and Proportional Integral Derivative (PID) path tracking control, it achieved an average lateral error of 0.1 m and improved positioning accuracy by 1.6 m in orchard environments, effectively addressing satellite signal loss.
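The complementary behaviour of GNSS and INS is classically exploited with a Kalman filter: INS motion data drive the prediction, and GNSS fixes correct the accumulated drift whenever they are available. The sketch below is a deliberately minimal one-dimensional, loosely coupled version, not the neural SLAM/GNSS fusion of [159] or the switching scheme of [160]. The process noise `q`, measurement noise `r`, the INS velocity bias, and the 10 s outage are hypothetical.

```python
import numpy as np


def fuse_gnss_ins(ins_velocity, gnss_position, dt, q=0.05, r=0.5, x0=0.0, p0=1.0):
    """1-D Kalman filter: INS velocity drives the prediction, GNSS position corrects it.
    gnss_position entries that are None represent outages (prediction only)."""
    x, p = x0, p0
    track = []
    for v, z in zip(ins_velocity, gnss_position):
        x, p = x + v * dt, p + q              # predict by dead reckoning; uncertainty grows
        if z is not None:                     # update only when a GNSS fix is available
            k = p / (p + r)                   # Kalman gain
            x, p = x + k * (z - x), (1 - k) * p
        track.append(x)
    return np.array(track), p


dt, n = 1.0, 50
true_pos = np.arange(1, n + 1) * 1.0          # robot moving at 1 m/s
ins_v = np.full(n, 1.1)                       # INS velocity with a +0.1 m/s bias
gnss = [None if 20 <= i < 30 else p for i, p in enumerate(true_pos)]  # 10 s outage
track, final_var = fuse_gnss_ins(ins_v, gnss, dt)
```

During the outage the estimate drifts with the INS bias, and once fixes return the enlarged variance produces a large gain that quickly pulls the track back, the same qualitative behaviour that fusion schemes aim for under signal loss.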

The perception technology of AI agricultural robots relies mainly on sensors, such as visual sensors [16] and LiDAR [161], to perceive the surrounding environment. Both have been widely adopted for autonomous navigation of agricultural robots in the field, playing a crucial role in tasks such as spraying and harvesting.

To address navigation challenges for agricultural robots under varying conditions, numerous visual navigation technologies based on deep learning and traditional image processing have emerged. Liu et al. [162] proposed a machine vision navigation method based on field ridge color, which achieved an average recognition success rate of 98.8% in four typical ridge environments through the gray reconstruction method and the approximate quadrilateral method, successfully enabling automated navigation of agricultural machinery on crop-free field ridges. Syed et al. [163] introduced a CNN-based model for obstacle classification in orchard environments, enabling agricultural robots to operate collision-free during autonomous navigation. Zhang et al. [164] developed a seedling band-based navigation line extraction model—Seedling Navigation CNN (SN-CNN)—to achieve autonomous navigation for cross-ridge field operation robots. However, vision-based navigation suffers from poor environmental adaptability and is highly vulnerable to adverse weather and complex surroundings.
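Whatever segmentation produces the crop-row or seedling-band pixels (color thresholds as in [162], or a CNN as in [164]), a navigation line is then commonly fitted to those pixels. The sketch below assumes a binary plant mask is already available and fits the line x = a·y + b by least squares to the per-row centroids of plant pixels; it is a generic post-processing step, not the specific procedure of either study.

```python
import numpy as np


def fit_navigation_line(mask):
    """Fit x = a*y + b to the per-row centroids of plant pixels in a binary mask (H, W)."""
    ys, cs = [], []
    for y, row in enumerate(mask):
        cols = np.flatnonzero(row)
        if cols.size:                         # skip image rows without plant pixels
            ys.append(y)
            cs.append(cols.mean())
    a, b = np.polyfit(ys, cs, 1)              # x as a function of y: rows are near-vertical
    return a, b


def heading_error_deg(a):
    """Angle between the fitted line and the image's vertical axis."""
    return np.degrees(np.arctan(a))


# Hypothetical mask: a diagonal two-pixel-wide row of plants
mask = np.zeros((20, 20), dtype=bool)
for y in range(18):
    mask[y, y] = True
    mask[y, y + 2] = True
a, b = fit_navigation_line(mask)
```

Parameterizing x as a function of y avoids the infinite slope that a vertical crop row would cause in the usual y = a·x + b form; the heading error then feeds the path tracking controller.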

Compared with vision-based navigation, LiDAR offers superior stability and environmental adaptability in complex settings, providing both high-precision distance measurements and detailed point cloud data. Teng et al. [165] proposed an adaptive LiDAR odometry and mapping framework that uses generalized iterative closest point (ICP) matching and selective map updates to address motion distortion and dynamic interference in unstructured agricultural environments; while remaining computationally efficient, it achieved better odometry accuracy and environmental adaptability than competing methods. Firkat et al. [166] proposed a ground segmentation algorithm designed for complex agricultural fields that is compatible with a wide range of LiDAR sensors and enables agricultural robots to distinguish effectively between horizontal and sloped terrain during operations. However, the high cost of LiDAR sensors greatly limits the large-scale adoption of LiDAR-based solutions.
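A common baseline for LiDAR ground segmentation, distinct from the specialized algorithm of Firkat et al. [166], is to fit a plane to the point cloud with RANSAC and label points close to it as ground. Fitting a tilted plane rather than cutting at a fixed height lets the baseline cope with uniformly sloped fields. The sketch below uses a synthetic cloud; the iteration count and 5 cm distance threshold are hypothetical settings.

```python
import numpy as np


def ransac_ground(points, n_iter=200, dist_thresh=0.05, seed=0):
    """Label ground points in an (N, 3) cloud by fitting a plane with RANSAC."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(points), dtype=bool)
    for _ in range(n_iter):
        p1, p2, p3 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p2 - p1, p3 - p1)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:                      # skip degenerate (collinear) samples
            continue
        normal /= norm
        inliers = np.abs((points - p1) @ normal) < dist_thresh  # point-to-plane distance
        if inliers.sum() > best.sum():
            best = inliers
    return best


# Synthetic field: sloped ground (z = 0.2 x) plus raised obstacle points
rng = np.random.default_rng(1)
xy = rng.uniform(0, 10, size=(300, 2))
ground = np.c_[xy, 0.2 * xy[:, 0] + rng.normal(0, 0.01, 300)]
oxy = rng.uniform(0, 10, size=(50, 2))
obstacles = np.c_[oxy, 0.2 * oxy[:, 0] + rng.uniform(0.5, 1.5, 50)]
cloud = np.vstack([ground, obstacles])
is_ground = ransac_ground(cloud)
```

A single plane breaks down on terrain whose slope changes across the field, which is one reason dedicated agricultural ground-segmentation algorithms exist.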

GNSS and the vision and LiDAR sensors discussed above each have their own advantages and limitations. A thorough understanding of their characteristics and their effective integration would significantly enhance the environmental perception and autonomous operation capabilities of agricultural machinery.
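One common way to integrate complementary sensors is a Kalman filter. The one-dimensional sketch below predicts position from wheel-odometry increments and corrects it with GNSS fixes when they are available. During a GNSS outage (represented as None), the estimate coasts on odometry and its variance grows. The noise variances q and r and the scalar state are simplifying assumptions; a field system would track at least 2-D position and heading.

```python
def kalman_fuse(x0, p0, odom_steps, gnss_meas, q=0.01, r=0.25):
    """1-D Kalman filter: predict with odometry, correct with GNSS.

    x0, p0: initial position estimate (m) and its variance (m^2).
    odom_steps: displacement per step from wheel odometry (prediction input).
    gnss_meas: GNSS position per step, or None when the fix is lost.
    q: process noise variance added per step (assumed value).
    r: GNSS measurement noise variance (assumed value).
    Returns a list of (estimate, variance) after each step.
    """
    x, p = x0, p0
    history = []
    for u, z in zip(odom_steps, gnss_meas):
        # Predict: move by the odometry increment; uncertainty grows.
        x, p = x + u, p + q
        # Update only when a GNSS fix is available.
        if z is not None:
            k = p / (p + r)          # Kalman gain
            x = x + k * (z - x)
            p = (1 - k) * p
        history.append((x, p))
    return history


# Hypothetical run: 1 m odometry steps, GNSS fix lost on the second step.
history = kalman_fuse(0.0, 1.0, [1.0, 1.0, 1.0], [1.2, None, 3.1])
for x, p in history:
    print(f"x = {x:.3f} m, variance = {p:.3f}")
```

The fused estimate always lies between the odometry prediction and the GNSS reading, weighted by their variances, which is the basic mechanism by which fusion compensates for each sensor's weaknesses.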

6.1.2. Path Planning

Path planning is a critical component of autonomous navigation for agricultural robots. Well-designed path planning improves operational efficiency and multi-machine collaboration while reducing unnecessary energy consumption [167,168]. Path planning includes full-coverage path planning, headland turn planning, and multi-vehicle cooperative path planning, among others.

The primary objective of full-coverage path planning is to cover the entire operational area while minimizing path overlap and missed zones. Multi-vehicle cooperative path planning arranges the operational paths of multiple agricultural robots precisely and rationally, and it represents a major direction in the development of agricultural automation. Jeon et al. [169] developed an automated tillage path planning method for polygonal paddy fields that jointly optimizes interior and peripheral field paths, improving trajectory tracking accuracy and reducing missed areas. Wang et al. [170] addressed target constraints in complex environments with a full-coverage path planning approach that combines an improved ant colony algorithm with a shuttle strategy, achieving high coverage rates and operational efficiency for harvesting machinery. Soitinaho et al. [171] introduced a dual-machine cooperative full-coverage path planning method that uses short-path decomposition and real-time collision detection scheduling, enabling autonomous agricultural machines to perform tillage and seeding simultaneously in the field. However, these cooperative schemes generally assume regular field geometry and zero-delay communication. When irregular ridges, crop occlusion, or local signal loss occur in practice, positioning drift readily causes overlapping or missed coverage, so truly robust large-scale multi-robot coordination has yet to be achieved.
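For the simplest case, a single machine in a rectangular field, full-coverage planning reduces to the boustrophedon (back-and-forth) pattern sketched below. Passes are spaced one working width apart and alternate direction, and the last pass is shifted inward so it stays inside the boundary, which produces exactly the kind of overlap that planners try to minimize. The function name and parameters are hypothetical; headland turn paths, obstacles, and polygonal boundaries such as those handled in [169,170] are deliberately omitted.

```python
import math


def boustrophedon_waypoints(field_length, field_width, swath_width, headland=0.0):
    """Back-and-forth coverage waypoints for a rectangular field (hypothetical helper).

    Passes run parallel to the x-axis (field length); pass centerlines are one
    swath apart in y. `headland` trims each pass at both ends to leave room for
    turns (the turn paths themselves are not generated here).
    Returns (waypoints, excess_width), where excess_width is the width covered
    twice because the field width is not a multiple of the swath width.
    """
    if swath_width <= 0:
        raise ValueError("swath_width must be positive")
    n_passes = math.ceil(field_width / swath_width)
    # Highest allowed centerline keeps the whole swath inside the field.
    y_max = max(field_width - swath_width / 2, field_width / 2)
    waypoints = []
    for i in range(n_passes):
        y = min((i + 0.5) * swath_width, y_max)
        start, end = headland, field_length - headland
        if i % 2 == 1:
            start, end = end, start  # alternate direction on every other pass
        waypoints += [(start, y), (end, y)]
    excess_width = n_passes * swath_width - field_width
    return waypoints, excess_width


# Hypothetical field: 100 m x 10 m, 3 m working width.
wps, excess = boustrophedon_waypoints(100.0, 10.0, 3.0)
print(wps)
print(excess)  # 2.0
```

In a cooperative setting, the passes produced here would additionally be partitioned among machines and scheduled to avoid conflicts, which is where the collision detection and communication assumptions discussed above come into play.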

6.1.3. Path Tracking Control

Path tracking control is critical for the autonomous navigation of agricultural robots and a key determinant of navigation system performance [172,173]. Its primary function is to drive agricultural machinery to accurately follow planned trajectories while maintaining system stability during dynamic operations. However, high-precision path tracking control in complex field environments remains challenging because of external environmental disturbances, parameter inaccuracies, and actuator saturation. Path tracking control algorithms for agricultural settings must therefore combine environmental adaptability with strong robustness.
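Actuator saturation is a concrete source of trouble for integral control: while the steering actuator is pinned at its limit, the integral term keeps accumulating and later causes overshoot (integrator windup). The sketch below shows a PID controller on cross-track error with output clamping and conditional-integration anti-windup. The gains, limits, and sign convention (positive error produces positive steering) are assumptions, and this is a generic textbook scheme rather than any controller cited in this section.

```python
class SaturatedPID:
    """PID on cross-track error with output clamping and anti-windup.

    Anti-windup uses conditional integration: the integral is only updated
    when the resulting command is within the actuator limits.
    """

    def __init__(self, kp, ki, kd, u_min, u_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.u_min, self.u_max = u_min, u_max
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        # No derivative on the first call (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        candidate_integral = self.integral + error * dt
        u = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        u_sat = min(max(u, self.u_min), self.u_max)
        if u == u_sat:
            # Actuator not saturated: accept the integral update.
            self.integral = candidate_integral
        return u_sat


# Hypothetical steering limits of +/-0.5 rad and a large 2 m lateral error.
pid = SaturatedPID(kp=1.0, ki=0.5, kd=0.0, u_min=-0.5, u_max=0.5)
outputs = [pid.step(2.0, 0.1) for _ in range(10)]
print(outputs[-1], pid.integral)  # 0.5 0.0
u_small = pid.step(0.1, 0.1)     # back in the linear range
```

Because the integral did not grow while the output was clamped, the controller responds immediately once the error becomes small instead of first having to unwind an accumulated integral.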

Currently, path tracking control methods for unmanned agricultural vehicles are primarily based on three types of models: geometric, kinematic, and dynamic. Yang et al. [174] proposed a path tracking algorithm for agricultural robots that incorporates optimal goal points, simulating driver preview behavior and using evaluation functions to optimize target points adaptively. This approach reduces tracking errors by over 20% compared with traditional algorithms, significantly improving navigation accuracy in complex field environments. Cheng et al. [175] developed a path tracking method that integrates preview (pre-aiming) theory with an adaptive PID architecture, significantly improving the tractor's tracking robustness and disturbance rejection across diverse paths and operating conditions. Yang et al. [176] developed a Fast Super-Twisting Sliding Mode (FSTSM) control method based on an Anti-peaking Extended State Observer (AESO), enabling finite-time stable tracking of unmanned agricultural vehicles under disturbances while significantly improving disturbance rejection and convergence. However, these path tracking methods still rely on high-frequency GNSS-RTK and multi-sensor fusion. Under orchard canopies, on rolling terrain, or in dusty conditions, positioning signals are prone to drift, so real-world tracking errors exceed simulation results, and low-cost, all-weather, all-terrain robust deployment remains out of reach.
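Geometric path tracking with a preview point is commonly illustrated by pure pursuit, which steers a bicycle-model vehicle toward a look-ahead point on the path using delta = atan(2 L sin(alpha) / l_d), where L is the wheelbase, alpha is the angle between the vehicle heading and the look-ahead point, and l_d is the distance to that point. The sketch below is this textbook form, not the optimal-goal-point method of [174] or the preview-PID method of [175]. It assumes waypoints are ordered from the vehicle forward and simply picks the first one at least the look-ahead distance away.

```python
import math


def pure_pursuit_steering(pose, path, lookahead, wheelbase):
    """Front-wheel steering angle (rad) from textbook pure pursuit.

    pose: (x, y, heading) with heading in radians, counter-clockwise from +x.
    path: list of (x, y) waypoints, assumed ordered from the vehicle forward.
    Positive output means steering left.
    """
    x, y, heading = pose
    target = path[-1]  # fall back to the final waypoint near the path end
    for px, py in path:
        if math.hypot(px - x, py - y) >= lookahead:
            target = (px, py)
            break
    tx, ty = target
    ld = math.hypot(tx - x, ty - y)            # actual preview distance
    alpha = math.atan2(ty - y, tx - x) - heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


# Hypothetical straight row along the x-axis, 3 m look-ahead, 2.5 m wheelbase.
path = [(float(i), 0.0) for i in range(11)]
on_path = pure_pursuit_steering((0.0, 0.0, 0.0), path, 3.0, 2.5)
right_of_path = pure_pursuit_steering((0.0, -1.0, 0.0), path, 3.0, 2.5)
left_of_path = pure_pursuit_steering((0.0, 1.0, 0.0), path, 3.0, 2.5)
print(on_path, right_of_path, left_of_path)
```

The look-ahead distance plays the role of the driver's preview: longer values smooth the response but cut corners, shorter values track tightly but can oscillate, which is why adaptive selection of the preview point is an active research topic.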

Table 4 classifies and summarizes the autonomous navigation technologies for agricultural robots discussed above.

Table 4.

Autonomous navigation technologies for agricultural robots.

6.2. Categories of AI-Agricultural Robots

6.2.1. Planting Operations

Planting is a critical stage in agricultural production that directly affects crop yield and quality. Agricultural robots for planting operations can be broadly divided into automatic transplanting robots [177,178] and automatic seeding robots [179], which are designed for different types of crops. Liu et al. [180] developed an autonomous strawberry transplanting system that integrates photoelectric navigation with a pneumatic dual-gripper mechanism. The system performs arched-back oriented transplanting in elevated cultivation facilities with a success rate of 95.3% and an operational efficiency of 1047.8 plants per hour, significantly advancing the automation of strawberry (Fragaria × ananassa Duchesne) transplantation. Abo-habaga et al. [181] proposed an Automated Precision Seeding Unit (APSU) for greenhouses that achieves precise seeding in pots for four crop types by optimizing the size of the seed-aspiration nozzle, with an operational efficiency of 35 s per pot. Liu et al. [182] introduced an automatic seeding robot based on basketball motion capture technology that achieves a seeding positioning accuracy of 95.5% and a target recognition rate of 98.2%, substantially improving high-precision seeding performance and crop yield. However, when confronted with uneven substrate, deformed plug trays, or variations in seed coating, such transplanting and seeding robots tend to miss plantings, double-plant, or damage roots. Combined with delayed real-time sensing and feedback during high-speed operation, these failures reduce overall reliability, so stable unmanned operation in large, complex field environments has not yet been achieved.
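The throughput figures above are reported in different units (plants per hour in [180], seconds per pot in [181]); converting them to a common basis makes their scale easier to grasp. The helpers below are a trivial sketch with hypothetical names. The per-pot figure covers a whole pot rather than a single plant, so the two systems are still not directly comparable.

```python
def seconds_per_item(rate_per_hour):
    """Convert a rate in items per hour to seconds per item."""
    return 3600.0 / rate_per_hour


def items_per_hour(seconds_each):
    """Convert seconds per item to items per hour."""
    return 3600.0 / seconds_each


print(f"{seconds_per_item(1047.8):.2f} s per plant")  # 3.44 s per plant
print(f"{items_per_hour(35):.1f} pots per hour")      # 102.9 pots per hour
```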

6.2.2. Growth Management Operations

The crop growth management phase is the longest and most complex stage of agricultural production, and agricultural robots play a more diverse and intelligent role in it than in the planting stage. Key types of robots for growth management include pollination robots, weeding robots, and pesticide spraying robots.

Pollination is one of the key factors in fruit development. Common pollination methods include natural pollination and artificial pollination [183]. Natural pollination relies primarily on insects [184] and is susceptible to environmental influences, while artificial pollination is highly labor-intensive. Robotic pollination overcomes these limitations by using computer vision to locate flowers and then employing mechanical devices to transfer pollen to the stigma. Yang et al. [185] proposed a pistil orientation detection method named PSTL_Orient; by integrating visual servo technology with a robotic arm system, their autonomous pollination robot achieved an 86.19% pollination success rate on forsythia (Forsythia suspensa (Thunb.) Vahl) flowers. Cao et al. [186] developed a kiwifruit (Actinidia chinensis Planch.) pollination robot integrating vision, air-liquid spraying, and a robotic arm, achieving a 99.3% pollination success rate and an 88.5% fruit set rate with pollen consumption of only 0.15 g per 60 flowers, which significantly improves pollen utilization efficiency. Masuda et al. [187] introduced a rail-suspended pollination robot equipped with a flexible multi-degree-of-freedom manipulator and a dual-actuator system, enabling automated pollination of tomato flowers in greenhouses. However, current pollination robots still face several bottlenecks: clustered flower spikes cause missed detections, insufficient force control of the actuator damages stamens, pollen viability is highly sensitive to fluctuations in temperature and humidity, and continuous operating endurance is short. These issues must be resolved before large-scale deployment in complex open environments outside greenhouses.
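Guiding a robotic arm to a detected flower generally requires lifting a 2-D detection into 3-D. With an RGB-D or stereo camera, the pinhole model back-projects pixel (u, v) at depth Z to X = (u - c_x) Z / f_x and Y = (v - c_y) Z / f_y, and a hand-eye calibration transform then maps the point into the arm's base frame. The intrinsics, the rotation and translation values, and the function names below are hypothetical, and the cited pollination robots may use different pipelines, for example visual servoing that corrects the arm motion directly from image feedback.

```python
def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth Z (m) into camera-frame (X, Y, Z)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_base(point, rotation, translation):
    """Apply p_base = R p_cam + t (R as a 3x3 nested list, t as a 3-tuple)."""
    return tuple(
        sum(rotation[r][k] * point[k] for k in range(3)) + translation[r]
        for r in range(3)
    )


# Hypothetical intrinsics and hand-eye calibration.
FX = FY = 600.0
CX, CY = 320.0, 240.0
R_IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_CAM_IN_BASE = (0.1, 0.0, 0.2)

# Flower center detected at pixel (380, 210), 0.5 m from the camera.
p_cam = pixel_to_camera(380, 210, 0.5, FX, FY, CX, CY)
p_base = camera_to_base(p_cam, R_IDENTITY, T_CAM_IN_BASE)
print(p_cam, p_base)
```

Depth noise enters X and Y multiplicatively, so centimeter-level depth errors at close range already translate into millimeter-scale positioning errors at the stigma, which is relevant to the force-control and damage issues noted above.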

Weeding robots primarily use machine vision and AI to distinguish crops from weeds and then locate and eliminate the weeds. By weeding method, these robots fall into two types: physical weed removal [188,189] and precision spraying [190]. Zhao et al. [191] developed an autonomous laser weeding robot for strawberry fields that navigates by detecting drip irrigation pipes and removes weeds with a laser, achieving a 92.6% field weeding rate with only 1.2% crop damage and enabling precise weed identification and removal in strawberry plantations. Zheng et al. [192] proposed an electric swing-type intra-row weeding control system that integrates deep learning for accurate cabbage (Brassica oleracea L.) recognition with dynamic obstacle avoidance, attaining a 96% weeding accuracy and a 1.57% crop injury rate at a low speed of 0.1 m/s. Balabantaray et al. [193] designed an AI-powered weeding robot targeting Palmer amaranth (Amaranthus palmeri S. Watson) that uses a self-developed AI recognition model and robotic technology to achieve real-time weed identification and spot spraying. Fan et al. [194] introduced a cotton field weed detection model incorporating a Convolutional Block Attention Module (CBAM), a Bidirectional Feature Pyramid Network (BiFPN), and bilinear interpolation, enabling robots to recognize weeds with high precision and spray them effectively. However, these weeding robots generally rely on AI recognition models and multi-sensor fusion, which makes them costly overall. In dense or tall-stalk crops, plant occlusion still causes recognition accuracy to drop sharply and seedling damage rates to surge, which remains a bottleneck for large-scale deployment.
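In spot spraying, the camera is often mounted some distance ahead of the nozzles, so each valve must open after a delay that depends on travel speed and valve response time. The sketch below computes the valve command times under the assumptions of constant forward speed, a fixed camera-to-nozzle distance, and equal valve opening and closing latency. All names and numbers are hypothetical and are not taken from [190,193,194]. It also shows why inference speed matters: processing delay consumes the lead time that the camera-to-nozzle distance provides.

```python
def spray_window(t_pass, camera_to_nozzle, weed_length, speed,
                 inference_delay=0.0, valve_latency=0.0):
    """Valve open/close command times (s) for one detected weed, or None if too late.

    t_pass: time the weed's leading edge crosses the camera reference line.
    camera_to_nozzle: along-track distance from that line to the nozzle (m).
    weed_length: along-track extent of the weed (m).
    speed: constant forward speed (m/s), assumed.
    inference_delay: time until the detection result is available (s).
    valve_latency: valve response time, assumed equal for opening and closing (s).
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    # Command early by the valve latency so spray starts as the weed arrives.
    open_time = t_pass + camera_to_nozzle / speed - valve_latency
    close_time = open_time + weed_length / speed
    if open_time < t_pass + inference_delay:
        return None  # detection arrives too late to open the valve in time
    return open_time, close_time


# Hypothetical setup: camera 0.25 m ahead of the nozzle, 5 cm weed.
slow = spray_window(0.0, 0.25, 0.05, 0.5, inference_delay=0.1, valve_latency=0.02)
fast = spray_window(0.0, 0.25, 0.05, 3.0, inference_delay=0.1, valve_latency=0.02)
print(slow, fast)
```

At the higher speed the same 0.1 s inference delay exceeds the available lead time, so either the camera must look farther ahead or the model must run faster, which is the real-time constraint that lightweight detection models aim to relax.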

6.2.3. Harvesting Operations

Harvesting is the final and most challenging stage of agricultural production. It demands high speed and precision from robotic harvesters, because many fruits ripen at about the same time and are highly perishable.

Fruit harvesting robots primarily identify and locate fruits through machine vision and AI, then use robotic arms for precise picking and transport [195,196]. Yang et al. [197] developed an automated pumpkin (Cucurbita moschata Duchesne) harvesting robot system based on AI and an RGB-D camera, achieving 99% fruit detection accuracy and a 90% picking success rate and thereby reducing the labor needed to harvest this heavy fruit. Yang et al. [198] proposed a grape harvesting robot that uses a multi-camera system and an AI object detection algorithm to accurately identify grape cutting points and harvest grapes automatically outdoors. Chang et al. [199] introduced a wire-driven multi-joint robotic arm for strawberry harvesting that combines deep learning with two-stage fuzzy logic control to recognize and harvest strawberries precisely. Choi et al. [200] developed an AI robotic system for harvesting citrus (Citrus sinensis (L.) Osbeck) that uses eye-hand coordination of the robotic arm and 6D fruit pose sensing to achieve adaptive grasping and precise stem cutting, demonstrating high success rates and harvesting efficiency in real orchard environments. Although fruit harvesting robots can alleviate labor shortages, they still generalize poorly across scenes and have high fruit damage rates.
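Object detectors of the kind used for fruit recognition typically output many overlapping candidate boxes, which are pruned with non-maximum suppression (NMS) based on intersection over union (IoU). The greedy sketch below is the standard textbook form, not the post-processing of any cited system, and the 0.5 threshold is an assumption. In dense clusters such as grape bunches, a threshold that is too low can suppress genuinely adjacent fruits, which is one reason detection in cluttered scenes remains difficult.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: keep boxes in descending score order unless they overlap a kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: two overlapping boxes on one fruit, one separate fruit.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```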

Despite continuous technological breakthroughs in agricultural robots, their application in real-world field environments still faces numerous challenges. On the one hand, existing navigation algorithms struggle to remain stable and reliable in highly unstructured, dynamically changing farmland. On the other hand, AI models struggle to recognize targets accurately in complex agricultural environments. For agricultural robots to achieve widespread adoption, further breakthroughs are therefore needed in both the robustness of autonomous navigation algorithms and the accuracy of AI recognition. Table 5 summarizes the application scenarios and methods of AI-agricultural robots at different stages of crop production.

Table 5.

Agricultural robots used for different stages of crop growth.

7. AI-Internet of Things for Smart Agriculture

The rapid development of the Internet of Things and AI has strongly driven the transformation of agriculture toward intelligence and automation. By integrating wireless sensing technology, cloud computing, machine learning, and other methods, smart agriculture achieves accurate monitoring, intelligent decision-making, and automatic control across the entire agricultural production process; this integration is referred to here as the AI-Internet of Things for smart agriculture. Existing research can be grouped into three categories: environmental monitoring and intelligent control, bioinformation perception and processing, and system architecture and prospects.
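At its simplest, the automatic control in an agricultural IoT system closes a loop from a sensor reading to an actuator. The sketch below is a rule-based baseline rather than one of the machine learning approaches surveyed in this section. It opens an irrigation valve when soil moisture falls below a lower threshold and closes it above an upper threshold, holding its state in between (hysteresis) so that sensor noise near a single set point does not toggle the valve rapidly. The class name, threshold values, and units are hypothetical.

```python
class HysteresisIrrigation:
    """On/off irrigation valve control with a moisture dead band (hypothetical)."""

    def __init__(self, low, high):
        if low >= high:
            raise ValueError("low threshold must be below high threshold")
        self.low, self.high = low, high
        self.valve_open = False

    def update(self, moisture):
        """Return the valve state after one soil-moisture reading."""
        if moisture < self.low:
            self.valve_open = True       # too dry: start irrigating
        elif moisture > self.high:
            self.valve_open = False      # wet enough: stop irrigating
        # Inside [low, high]: keep the previous state to avoid rapid toggling.
        return self.valve_open


# Hypothetical volumetric water content readings (%), thresholds 20% and 30%.
controller = HysteresisIrrigation(low=20.0, high=30.0)
readings = [25, 18, 22, 28, 31, 26]
states = [controller.update(m) for m in readings]
print(states)  # [False, True, True, True, False, False]
```

Learning-based controllers typically replace the fixed thresholds with decisions informed by weather forecasts, crop stage, and historical data, but the sensing, decision, and actuation loop remains the same.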

7.1. Environmental Monitoring and Intelligent Control