Abstract

Crop row detection is one of the key technologies that enable agricultural robots to navigate autonomously and operate precisely. It directly affects the precision and stability of agricultural machinery operations, and its progress will strongly influence the development of intelligent agriculture. This paper first summarizes the mainstream technical methods, performance evaluation systems, and adaptability to typical agricultural scenes of crop row detection. It explains the technical principles and characteristics of traditional methods based on visual sensors; LiDAR-based point cloud preprocessing, row structure extraction, and 3D feature calculation methods; and multi-sensor fusion methods. Secondly, it reviews performance evaluation criteria such as accuracy, efficiency, robustness, and practicality, and analyzes and compares the applicability of different methods in typical scenarios such as open fields, facility agriculture, orchards, and special terrains. Based on this multidimensional analysis, it concludes that any single technology has specific environmental adaptability limitations. Multi-sensor fusion can help improve robustness in complex scenarios, and the fusion advantage gradually increases with the number of sensors. Suggestions for the development of agricultural robot navigation technology are made based on the status of technological applications over the past five years and the needs of future development. This review systematically summarizes crop row detection technology, providing a clear technical framework and scenario adaptation reference for research in this field, and aims to promote precise and efficient agricultural production.

1. Introduction

1.1. Research Background

Crop row detection is an important prerequisite for robots to achieve precise positioning and navigation, and it plays a decisive role in ensuring the accuracy and stability of agricultural machinery during sowing, weeding, fertilization, and other operations. However, adverse factors such as harsh farmland environments, weeds and occluding branches and leaves that are difficult to avoid, and poor lighting conditions can significantly degrade crop row detection. Hence, it is crucial to conduct an in-depth analysis of the research status, technical difficulties, and research directions of crop row detection.

Shi et al. [1] conducted a comprehensive analysis of crop row detection technology in agricultural machinery navigation. They compared the advantages and disadvantages of traditional techniques and deep-learning-based methods, and explained the effectiveness of different methods in different application scenarios. They concluded that accurate crop detection requires sensor matching and target model design tailored to each scenario. Zhang et al. [2] briefly introduced the advantages and disadvantages of visual inspection methods for crop row detection. These methods have improved the autonomous positioning ability of agricultural equipment and, to some extent, solved the problem of poor Global Navigation Satellite System (GNSS) signals. They summarized the typical visual inspection process as image acquisition, feature extraction, and centerline detection, and listed the corresponding equipment selection, model design, and algorithm optimization. They pointed out that current research gives insufficient consideration to complex situations in detection.

Yao et al. [3] classified automatic navigation of agricultural machinery into three categories: GNSS, machine vision, and Light Detection and Ranging (LiDAR). By comparing the structural characteristics, advantages, disadvantages, and technical difficulties of these three navigation methods, they found that the integration of GNSS and machine vision is the most mature path. They described four types of path planning algorithms and 22 scene matching algorithms as a reference for technology implementation, and summarized the challenges that remain to be overcome. Bai et al. [4] also emphasized the important role of machine vision in agricultural robot navigation. They summarized the characteristics of visual sensors and focused on the use of key technologies such as filtering, segmentation, and row detection in environmental perception, positioning, and path planning. Wang et al. [5], noting the increasing emphasis on autonomous navigation in intelligent agriculture, used different visual sensors and algorithms to achieve autonomous navigation, motivated by the high benefit and low cost of machine vision. They also explained the scope and limitations of these visual sensors and algorithms.

Scholars agree that each sensor has limitations in specific scenarios. Used alone, GNSS suffers from weak signal coverage, cameras are susceptible to light interference and vibration, and LiDAR is affected by occlusion, so a single sensor may not provide sufficient accuracy. Bonacini et al. [6] proposed a dynamic selection method based on sensor data features, which enables the system to select the better of camera or LiDAR in crop areas and to use GNSS in other areas. This method achieves an accuracy of ≥87% and a 20 s GNSS transition adaptation. Xie et al. [7] used an extended Kalman filter and a particle filter to fuse vehicle motion information with GNSS, ultra-wideband, and other information, improving vehicle positioning accuracy. Wang et al. [8] proposed LiDAR/IMU/GNSS fusion navigation to improve the positioning accuracy of orchard pesticide robots, achieving centimeter-level positioning accuracy and an average lateral offset of 2.215 cm. Qu et al. [9] addressed the issue of LiDAR SLAM map distortion and optimized the map with an improved SLAM, resulting in a distortion error of 0.205 m.

Research on path planning and tracking optimization has also advanced significantly. Wen et al. [10] addressed the issue of low path tracking accuracy in agricultural machinery by adding a speed-forward distance correlation function to the algorithm to adjust its parameters. The measured average lateral offset was 3 cm, and the yaw angle was less than 5°. Su et al. [11] proposed a method based on multi-sensor fusion to address the limitations of GNSS navigation in orchards. The system was built from a two-dimensional LiDAR, an electronic compass, an encoder, and other devices, and the extended Kalman filter (EKF) was used to fuse pose data. The experimental results showed that the system had high positioning and navigation accuracy and strong robustness. Kaewkorn et al. [12] showed that a positioning method based on a low-cost IMU coupled with three-laser guidance (TLG) achieves an accuracy of 1.68/0.59 cm in the horizontal/vertical directions and 0.76–0.90° in yaw angle, at a much lower cost than commercial GNSS-INS systems.

Researchers have also continued to optimize technologies for specific scenarios. Cui et al. [13] combined the deep learning model DoubleNet with a multi-head self-attention mechanism and an improved activation function; experiments showed that this method achieves a localization accuracy of 95.75% at a speed of 71.16 fps. Chen et al. [14] proposed a path planning method based on a genetic algorithm (GA) and Bezier curves, achieving a balance between minimizing navigation error and maximizing land use efficiency. Jin et al. [15] proposed a field ridge navigation line extraction method based on Res2net50 to address poor real-time performance and susceptibility to lighting in field navigation path recognition. The pixel error was approximately 8.27 pixels under different lighting conditions. Gao et al. [16] proposed a GRU Transformer hybrid model to improve the accuracy of GNSS/INS fusion navigation when GNSS signals in orchards are obstructed, improving the root mean square error of the position. In addition, a YOLOv5-based crop–weed recognition model for an unmanned plant protection robot achieved an accuracy of 87.7% and an average lateral error of 0.036 m in straight-line operations [17].

1.2. Research Content

Although mature technological methods are currently being applied in many scenarios, in practice, there are still problems such as poor technical adaptability, incomplete multi-sensor fusion mechanisms, and weak robustness to complex scenarios. Therefore, starting from the current mainstream crop row detection algorithms, the paper briefly describes the principles of each algorithm and provides corresponding performance evaluation indicators. Based on this, combined with typical farmland scenarios, the applicability of each method is analyzed and discussed, in order to provide some reference materials for relevant researchers.

1.3. Main Contributions

Numerous research studies have been conducted on single- or multi-source vision, LiDAR, GNSS, etc., but there are limitations in practical application, such as low scene applicability, immature multi-sensor fusion methods, and poor robustness.

This paper reviews the mainstream crop row detection technologies of the past five years, and, for the first time, sorts out the technical spectrum from the following three dimensions: visual sensor technology, LiDAR technology, and multi-sensor fusion. It analyzes the relevant technical principles and characteristics of visual artificial feature extraction, deep learning automatic feature learning, LiDAR point cloud preprocessing, structured extraction, and multi-sensor information complementary fusion technology under various methods, and elaborates on the development trends and application boundaries of various technologies.

This paper constructs a multidimensional analysis framework covering accuracy, efficiency, robustness, and practicality. It elaborates on detection accuracy, positioning error, real-time performance, computational cost, environmental adaptability, scene fault tolerance, hardware cost, and deployment difficulty. Drawing on research from the past five years, it analyzes the advantages and disadvantages of crop row detection technologies as compared by scholars.

This paper combines four typical agricultural scenarios, including open-air farmland, facility agriculture, orchards, and special terrain. Starting from the characteristics of each scenario, it analyzes the detection technologies applicable to each scenario and proposes corresponding technical selection suggestions to guide the selection of detection schemes in practical application scenarios.

Based on the application of these technologies over the past five years and the development needs of intelligent agriculture, this paper analyzes the current technological shortcomings, including poor robustness in extreme environments, low data consistency among multiple sensors, and the inability to meet low-cost deployment requirements. Based on the extensive literature, it identifies the development trends of several research directions, including multimodal deep fusion, model lightweighting and edge deployment, and data-driven scene generalization, in order to promote the development of intelligent agriculture.

2. Conventional Technical Methods and Principles for Crop Row Detection

2.1. Detection Methods Based on Visual Sensors

Visual sensors have become the core technology carrier for crop row detection due to their low cost and rich data. Their methods can be divided into traditional visual methods that rely on manual features and data-driven deep learning methods, each with its own emphasis in different scenarios.

2.1.1. Traditional Visual Methods

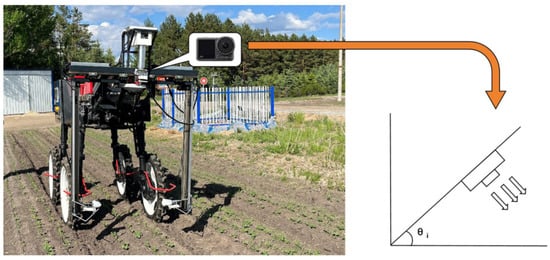

Traditional visual methods use manually extracted features and geometric methods to detect crop rows. They achieve high accuracy and stability in structured farmland scenes, with the advantages of low computational complexity and strong real-time performance. Gai et al. [18] proposed a frequency domain processing pipeline that identifies crop row spacing and direction from the frequency domain components of crop rows through the two-dimensional discrete Fourier transform (DFT). The positioning accuracy is improved through frequency domain interpolation and a weighted Hanning window optimization algorithm. When combined with an LQG controller, the mean absolute tracking error of the robot is only 3.74 cm, and the method remains stable and robust under complex lighting conditions. As shown in Figure 1, Zhang et al. [19] addressed the problem of soybean seedlings being covered with straw after lodging during the seedling stage: the image was segmented using the Hue–Saturation–Value (HSV) color model and Otsu's thresholding (OTSU) method, and an improved adaptive DBSCAN clustering was used to extract feature points. The navigation line extraction method was validated on 20 images drawn from a multi-level sample. The navigation path was fitted by the least squares method, with an average distance deviation of 7.38 cm, an average angle deviation of 0.32°, and an accuracy rate of 96.77%.

Figure 1.

Crop row navigation for soybean seedlings [19].
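The threshold-then-fit idea behind such pipelines can be sketched in a few lines. The example below is a minimal illustration, not the method of [19]: it applies Otsu's threshold to a hypothetical single-channel "greenness" image and fits a line x = a·y + b through the foreground pixels by least squares. The function names `otsu_threshold` and `fit_row_line` and the synthetic image are assumptions made for this sketch, and the clustering step is omitted.

```python
def otsu_threshold(values, levels=256):
    """Return the level t that maximizes between-class variance (foreground: value > t)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]              # number of pixels at or below t
        sum0 += t * hist[t]
        w1 = total - w0            # number of pixels above t
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mean0, mean1 = sum0 / w0, (sum_all - sum0) / w1
        # Unnormalized between-class variance; the argmax is unchanged by scaling.
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def fit_row_line(points):
    """Least-squares fit of x = a * y + b through (x, y) pixel coordinates."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    s_yy = sum((y - mean_y) ** 2 for _, y in points)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = s_xy / s_yy
    return a, mean_x - a * mean_y


# Hypothetical 5 x 12 image: background level 30, one crop row along x = 1 + 2*y.
image = [[200 if x == 1 + 2 * y else 30 for x in range(12)] for y in range(5)]
t = otsu_threshold([v for row in image for v in row])
crop_pixels = [(x, y) for y, row in enumerate(image)
               for x, v in enumerate(row) if v > t]
slope, intercept = fit_row_line(crop_pixels)
print(t, slope, intercept)
```

Fitting x as a function of y is a deliberate choice here: crop rows seen from a forward-looking camera are close to vertical in the image, where a fit of y against x would be ill-conditioned.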

The rice guidance system studied by Ruangurai et al. [20] exploits the trajectory pattern of tractor wheels and combines principal component analysis with a Hough transform optimized by initial value estimation, keeping the row position error below 28.25 mm and enabling automated precision rice sowing. Zhou et al. [21] proposed a feature extraction method based on the a* component of the CIELAB color space for multi-period high-ridge broad-leaved crops, using adaptive clustering and linear regression with Huber loss to fit the centerline. The average image processing time is only 38.53 ms, which makes the method suitable for long-term crops. Gai et al. [22] proposed an under-canopy navigation system based on ToF depth cameras that constructs an occupancy grid map of the crop field from depth maps. The mean absolute errors of lateral positioning in corn and sorghum fields are 5.0 cm and 4.2 cm, respectively, providing a solution for scenarios with weak GPS signals.
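To make the Hough transform step concrete, the following is a minimal sketch of the plain voting scheme; it does not include the PCA or initial value estimation described for [20]. Each feature point votes for every line x·cos θ + y·sin θ = ρ passing through it, and the most voted (ρ, θ) cell is taken as the row line. The function name `hough_strongest_line`, the bin sizes, and the synthetic points are assumptions for illustration.

```python
import math


def hough_strongest_line(points, theta_steps=180, rho_res=1.0):
    """Return (rho, theta, votes) of the most voted line x*cos(theta) + y*sin(theta) = rho."""
    votes = {}
    for x, y in points:
        for k in range(theta_steps):
            theta = math.pi * k / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            key = (round(rho / rho_res), k)      # quantize rho into bins
            votes[key] = votes.get(key, 0) + 1
    (rho_bin, k), count = max(votes.items(), key=lambda item: item[1])
    return rho_bin * rho_res, math.pi * k / theta_steps, count


# Hypothetical feature points: a vertical crop row at x = 5 plus three weed outliers.
row_points = [(5, y) for y in range(0, 100, 2)]
weeds = [(20, 10), (40, 70), (63, 33)]
rho, theta, count = hough_strongest_line(row_points + weeds)
print(rho, math.degrees(theta), count)
```

Because every point votes independently, a few weed pixels spread their votes over unrelated cells and barely affect the peak, which is one reason the transform is popular for row detection in noisy segmentations.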

The stereoscopic vision guidance method proposed by Yun et al. [23] introduces dynamic pitch and roll compensation and anti-ghosting algorithms to track ridges and furrows effectively. The lateral root mean square error (RMSE) is 2.5 cm on a flat plot and 6.2 cm in a field with a 20% slope, which is sufficient for practical operation guidance. For a jujube harvester [24], after Lab color segmentation and noise reduction filtering, the tree line was fitted using the least squares method. The average missed detection rate was 3.98%, the average detection rate was 95.07%, and the average detection speed was 41 fps, which meets the requirements of autonomous driving.

2.1.2. Deep Learning Methods

Because it balances accuracy and speed, the YOLO series of object detection models is currently the main choice for real-time field detection. Li et al. [25] added DyFasterNet and a dynamic FM IoU loss to the D3-YOLOv10 framework. They applied the enhanced DyFasterNet and enhanced dynamic FM IoU loss in 11 places in their model and achieved an mAP0.5 of 91.18% in tomato detection. Compared with the original model, this model reduces the number of parameters by 54.0% and the computational complexity by 64.9%, and achieves a frame rate of 80.1 fps, meeting the real-time requirements of facility scenarios. The lightweight LBDC-YOLO model applies the GSConv Slim Neck and Triplet Attention designs to broccoli detection, achieving an average accuracy of 94.44%. Compared with YOLOv8n, this model reduces parameters by 47.8%, making it more suitable for resource-constrained field harvesting [26]. Duan et al. [27] proposed a YOLOv8-GCI model based on RepGFPN backbone network feature map fusion and CA attention mechanism optimization. The gradient descent method combined with the population approximation algorithm was used for accurate detection of pepper phytophthora, which improved the accuracy by 0.9% and the recall by 1.8%, at over 60 fps.
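Metrics such as mAP0.5 rest on the intersection over union (IoU) between a predicted box and a ground-truth box: a detection counts as a match when IoU is at least 0.5. The sketch below shows that building block only, not a full mAP computation; the function name `box_iou` and the example boxes are assumptions for illustration.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred, truth, iou_threshold=0.5):
    """True when the prediction overlaps the ground truth enough to count at this threshold."""
    return box_iou(pred, truth) >= iou_threshold


truth = (0, 0, 10, 10)
print(box_iou((2, 0, 12, 10), truth), is_match((2, 0, 12, 10), truth))
print(box_iou((5, 0, 15, 10), truth), is_match((5, 0, 15, 10), truth))
```

A box shifted by 20% of its width still matches (IoU of 2/3), while one shifted by 50% does not (IoU of 1/3), which gives a feel for how strict the 0.5 threshold is.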

For crop row geometric feature extraction, end-to-end detection and semantic segmentation both give good results. Yang et al. [28] proposed autonomous ROI extraction, which uses YOLO to predict the drivable area and narrow the detection range. Corn rows are then extracted through excess green operator segmentation and FAST corner detection. Processing one image takes only 25 ms, giving a frame rate of over 40 fps and an angle error of 1.88°. Quan et al. [29] used the row-and-column-based Anchor Points Selecting Classification (RCASC) algorithm and an end-to-end Convolutional Neural Network (CNN) for prediction. The F1-score reached 92.6% while maintaining a frame rate of over 100 fps, with good results on various corn rows. The E2CropDet end-to-end network of Li et al. [30] directly models crop rows as independent objects, with a centerline lateral deviation of 5.945 pixels and a detection frame rate of 166 fps, without any additional post-processing. Luo et al. [31] proposed CAD UNet, which introduces CBAM and DCNv2 into UNet, to detect rice seedling rows. The detection accuracy reached 91.14% and the F1-score reached 89.52%, enabling evaluation of the operation quality of rice transplanters.
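The angle error and lateral deviation quoted above compare a detected centerline with a reference line. The following sketch shows one plausible way to compute both in pixel coordinates; the exact definitions differ between the cited papers, so the conventions here (lines given by two endpoints, heading measured from the image vertical, lateral deviation taken along a chosen image row) are assumptions for illustration.

```python
import math


def line_heading_deg(p0, p1):
    """Heading of the line p0 -> p1 in degrees, measured from the image y axis."""
    return math.degrees(math.atan2(p1[0] - p0[0], p1[1] - p0[1]))


def angle_error_deg(line_a, line_b):
    """Smallest angle between two undirected lines, in [0, 90] degrees."""
    diff = abs(line_heading_deg(*line_a) - line_heading_deg(*line_b)) % 180.0
    return min(diff, 180.0 - diff)


def x_at(line, y):
    """x coordinate where a non-horizontal line crosses image row y."""
    (x0, y0), (x1, y1) = line
    if y0 == y1:
        raise ValueError("line is horizontal; x is not a function of y")
    return x0 + (x1 - x0) * (y - y0) / (y1 - y0)


def lateral_deviation(line, reference, y):
    """Horizontal pixel distance between two lines at image row y."""
    return abs(x_at(line, y) - x_at(reference, y))


reference = ((100, 0), (100, 200))     # hypothetical ground-truth centerline
detected = ((100, 0), (110, 200))      # hypothetical detected centerline
print(angle_error_deg(detected, reference))
print(lateral_deviation(detected, reference, y=100))
```

Folding the difference into [0, 90] degrees makes the result independent of the order in which the two endpoints of each line are listed.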

Gomez et al. [32] showed that the method based on YOLO-NAS whole leaf labeling can achieve an mAP of 97.9% and a recall rate of 98.8%. The bean detection method based on YOLOv7/8 is superior. The ST-YOLOv8s network proposed by Diao et al. [33] uses a Swin Transformer as the backbone, which improves the accuracy of corn row detection by 4.03% to 11.92% compared to traditional methods at different growth stages, and reduces the angle error to 0.7° to 3.78°. Liu et al. [34] proposed an improved YOLOv5 model, which adds a small object detection layer to the neck and optimizes the loss function. Clustering the feature points of pineapple rows and fitting the centerline achieves an accuracy of 89.13% on sunny days and 85.32% on cloudy days. The accuracy of row recognition is 89.29%, with an angle error of 3.54°, which is adequate for harvester navigation.

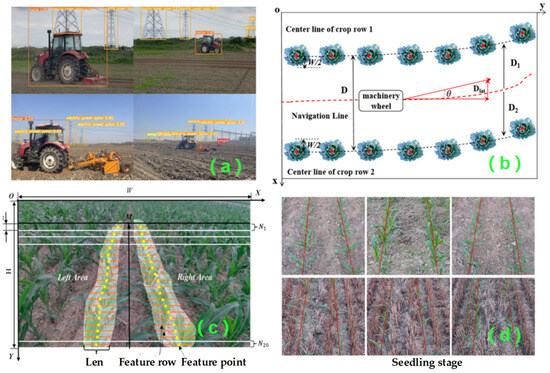

The lightweight VC UNet model uses VGG16 as the backbone and introduces CBAM and convolutional-layer channel pruning in upsampling. The ROI is determined by vertical projection, and the centerline of the rapeseed row is fitted using the least squares method, resulting in a quasi intersection rate of 84.67% and a mean accuracy of 94.11%. The segmentation is optimized with the minimum mean error, and the processing speed is 24.47 fps. After transfer learning, the recognition rate was 90.76% for soybeans and 91.57% for corn [35]. As shown in Figure 2, Zhou et al. [36] improved the YOLOv8 model by integrating the CBAM attention mechanism into the backbone and using a BiFPN feature fusion module in the neck for multi-scale information aggregation. The recognition accuracy for field obstacles reached 85.5%, with an average accuracy of 82.5% and a frame rate of 52 fps, which meets the needs of real-time obstacle avoidance. To improve the adaptability of the model to the environment and facilitate lightweight deployment, Shi et al. [37] developed the DCGA-YOLOv8 algorithm by fusing DCGA and YOLOv8; the target detection results for cabbage and rice crops were both greater than 95.9%. Liu et al. [38] proposed a crop root row detection method, which first extracts the canopy ROI through semantic segmentation and then obtains canopy row detection lines through horizontal stripe division and midpoint clustering. A root representation learning model is then used to obtain root row detection lines, which are corrected by an alignment equation. The method achieves a single-frame processing time of 30.49 ms and an accuracy of 97.1%, and reduces the risk of crushing tall-stalk crops. Wang et al. [39] established the MV3-DeepLabV3 model based on MobileNetV3_Large and experimentally obtained the best segmentation results across different wheat plant heights, maturity levels, and occlusion conditions, with an accuracy of 98.04% and an IoU of 95.02%.

Figure 2.

Deep learning-based visual methods: (a) obstacle detection algorithm based on YOLO [36]; (b) relationship between the crop row and the motion wheel of the farm machine [37]; (c) crop root row detection based on crop canopy image [38]; (d) crop row detection at different growth stages [39].

The dual-branch CNN architecture proposed by Osco and Lucas [40] simultaneously counts crop plants and detects planting rows, and refines detection over multiple stages using feedback from the row information. For corn rows, the detection accuracy is 0.913, the recall rate is 0.941, and the F1-score is 0.925. Lv et al. [41] improved the YOLOv8 segmentation algorithm by using MobileNetV4 as the backbone and ShuffleNetV2 as the feature module. The average accuracy of the model increased to 90.4%, the model size was reduced to 1.8 M, and the frame rate was 49.5 fps, enabling real-time recognition of navigation routes on crop-free ridges.

Models tailored to a specific task adapt well to that task. For broccoli seedling replacement, Zhang et al. [42] used Seedling-YOLO, adding the Efficient Layer Aggregation Network-Path (ELAN_P) module and a coordinate attention module to enhance the detection of difficult samples. They obtained AP values of 94.2% and 92.2% for the “exposed seedlings” and “missing holes” categories, respectively. Wang et al. [43] proposed the YOLOv8 TEA instance segmentation algorithm, an improvement on YOLOv8 seg that replaces some C2f (CSP bottleneck with two convolutions) modules with the MobileViT Block (MVB), adds C2PSA and CoTAttention, and uses dynamic upsampling and dilated convolution. Experiments show that the algorithm achieves an mAP (Box) of 86.9% and an mAP (Mask) of 68.8% at 52.7 giga floating-point operations (GFLOPs), and can effectively recognize tea buds, supporting intelligent tea picking.

For mature lotus seed detection, Ma et al. [44] introduced the CBAM module into a modified YOLOv8-seg, achieving a mask mAP of 97.4% with an average detection time of only 25.9 ms, and applied this method to path planning for lotus pond robots. In addition, to address the low navigation speed and poor accuracy of most Automated Guided Vehicles (AGVs) that rely on reflectors, Wang et al. [45] built on the MTNet network model and combined feature offset aggregation with multi-task collaboration to improve driving speed and positioning accuracy. The resulting driving speed compares favorably with that of the original You Only Look Once for Panoptic Driving Perception (YOLOP).

2.1.3. Summary of Detection Methods Based on Visual Sensors

Crop row detection based on visual sensors follows two technical routes: traditional vision and deep learning. Traditional vision relies on manually extracted features or geometric methods. In structured agricultural scenes, it offers high accuracy and stability with relatively low computational complexity, which enables real-time detection. By processing images with methods such as the HSV color model and the OTSU threshold method, it can quickly fit the travel path and is suitable for simple crop row detection tasks. However, this approach adapts poorly to unstructured environments and is prone to failure under dense weeds and large changes in light intensity. Deep learning methods, represented by the YOLO series, SSD, Faster R-CNN, etc., learn features automatically to improve detection accuracy. The introduction of multi-scale information fusion and attention mechanisms further improves model performance. For resource-limited scenes, lightweight models can improve detection efficiency while maintaining a certain level of accuracy, meeting the needs of detecting different types of crops. However, deep learning relies on a large amount of annotated data, and model deployment requires certain hardware conditions. In relatively harsh environments, the model also needs continuous tuning and retraining to meet the corresponding requirements. Visual sensors have the advantages of low cost and rich data, but a single visual modality cannot capture the three-dimensional shape of crop rows, and its stability is poor in complex agricultural environments. Combining vision with other sensors such as LiDAR can help compensate for these shortcomings.

2.2. LiDAR-Based Detection Methods

Compared to visual sensors, LiDAR has great advantages in utilizing the precise positioning and depth information contained in three-dimensional point cloud data. It is more effective than visual sensors in poor lighting environments or severe tree canopy occlusion, and is also a supplementary technology for crop row detection. Below are some common tools and techniques used for LiDAR-based detection models.

2.2.1. Point Cloud Preprocessing Technology

Point cloud preprocessing is a core step in LiDAR-based crop row detection. Preprocessing operations such as ground filtering, denoising, and downsampling remove redundant point cloud data, preserve important crop information, and prepare for the subsequent row structure extraction. Karim et al. [46] reported an R² of 0.97 for wheat plants after airborne LiDAR preprocessing, and the relative error of soybean canopy estimation was only 5.14%. The accurate recognition rate of plants can reach 100%.
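Two of these preprocessing steps are easy to illustrate on a toy point cloud. The sketch below removes near-ground points with a simple height threshold and then downsamples by replacing all points in each voxel with their centroid. It assumes a flat ground plane at a known height, which real fields rarely satisfy; the function names, thresholds, and points are hypothetical and not taken from the cited works.

```python
import math
from collections import defaultdict


def remove_ground(points, ground_z=0.0, min_height=0.05):
    """Keep points more than min_height above an assumed flat ground plane."""
    return [p for p in points if p[2] - ground_z > min_height]


def voxel_downsample(points, voxel=0.1):
    """Replace all points falling in the same cubic voxel by their centroid."""
    cells = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / voxel), math.floor(y / voxel), math.floor(z / voxel))
        cells[key].append((x, y, z))
    centroids = []
    for pts in cells.values():
        n = len(pts)
        centroids.append((sum(p[0] for p in pts) / n,
                          sum(p[1] for p in pts) / n,
                          sum(p[2] for p in pts) / n))
    return centroids


# Hypothetical scan (metres): three ground returns and four plant returns.
cloud = [
    (0.00, 0.00, 0.00), (0.20, 0.10, 0.01), (0.50, 0.30, 0.02),
    (0.01, 0.01, 0.31), (0.02, 0.03, 0.32), (0.04, 0.02, 0.33),
    (0.55, 0.02, 0.45),
]
plants = remove_ground(cloud)
reduced = voxel_downsample(plants, voxel=0.1)
print(len(cloud), len(plants), len(reduced))
```

The voxel size trades detail for speed: larger voxels shrink the cloud more aggressively but blur thin structures such as stems.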

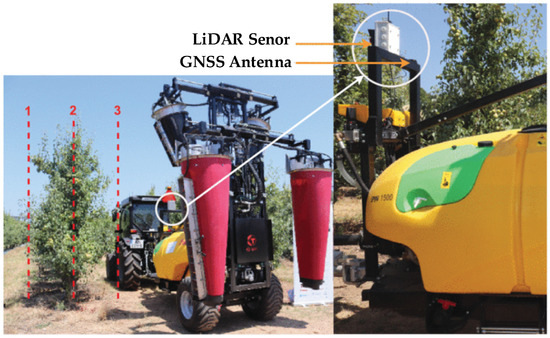

As shown in Figure 3, the 2D LiDAR canopy sensing system developed by Baltazar et al. [47] detects tree canopy boundaries after preprocessing to enable precise spraying, resulting in a 28% reduction in excessive spraying and a 78% water saving. Li et al. [48] used LiDAR filtering and downsampling to recognize obstacles between corn rows, and achieved a downsampling rate of 17.91% in the YOLOv5 framework. After supplementing visual information, the navigation accuracy improved significantly. Yan et al. [49] optimized the flight parameters of Unmanned Aerial Vehicle (UAV) LiDAR and proposed a path and phenotype index p downsampling scheme. They suggested that the accuracy of phenotype estimation after downsampling the s-alone path should be p > 0.75, and the efficiency should be improved by about 42% to 44%. Bhattarai et al. [50] identified an optimal height of 24.4 m and grid sampling of 20 cm to balance accuracy and efficiency for cotton UAV LiDAR, and provided data processing standards.

Figure 3.

Two-dimensional LiDAR-based system for canopy sensing [47]. The lines in the picture indicate the positions of the tree rows.

2.2.2. Row Structure Extraction Methods

Row structure extraction uses fitting algorithms and clustering analysis to extract crop row features from preprocessed point cloud data and to distinguish crops from the background. Since this step determines the resulting navigation lines and their positioning accuracy, the algorithm should be selected according to the actual situation. For dense wheat point clouds, Zou et al. [51] preprocessed the data using the octree segmentation voxel grid merging method and extracted wheat ear point clouds through a clustering algorithm to construct a linear regression model. The R² of the model is 0.97, and the R² against actual field measurements is 0.93. Liu et al. [52] combined 3D LiDAR observations with EKF localization and corrected trajectory errors through crown center detection. They found that the average lateral and vertical positioning offsets in the orchard were less than 0.1 m, and the heading offset was less than 6.75°. Nehme et al. [53] proposed a grape row structure tracking method that requires no prior data measurement and uses the Hough transform and Random Sample Consensus (RANSAC) to fit row features; its suitability for various vineyard layouts was verified with RTK-GNSS.
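RANSAC line fitting, one of the techniques named above, can be sketched as follows for points projected onto the ground plane. This is a generic minimal version, not the implementation of [53]: it repeatedly samples two points, builds the line through them, and keeps the line supported by the most inliers. The function name `ransac_line`, the tolerance, the iteration count, and the synthetic data are assumptions for illustration.

```python
import math
import random


def ransac_line(points, n_iter=200, tol=0.05, seed=0):
    """Return ((a, b, c), inliers) for the line a*x + b*y + c = 0 with the most inliers."""
    rng = random.Random(seed)          # fixed seed so the sketch is repeatable
    best_line, best_inliers = None, []
    for _ in range(n_iter):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        a, b = y2 - y1, x1 - x2        # normal vector of the candidate line
        norm = math.hypot(a, b)
        if norm == 0:
            continue                   # degenerate sample (coincident points)
        c = -(a * x1 + b * y1)
        inliers = [p for p in points
                   if abs(a * p[0] + b * p[1] + c) / norm <= tol]
        if len(inliers) > len(best_inliers):
            best_line, best_inliers = (a / norm, b / norm, c / norm), inliers
    return best_line, best_inliers


# Hypothetical stem positions along the row y = 2x + 1, plus three off-row returns.
row = [(i * 0.1, 2 * i * 0.1 + 1) for i in range(20)]
clutter = [(0.5, 3.0), (1.2, 0.0), (0.3, 2.5)]
line, inliers = ransac_line(row + clutter)
print(len(inliers), line)
```

In practice the inlier set is usually refit by least squares afterwards, since the line through two sampled points is only a rough estimate.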

Ban et al. [54] combined a monocular camera with 3D LiDAR and used adaptive radius filtering and DBSCAN clustering to extract stem point clouds; the navigation line extraction accuracy reached 93.67%, and the mean absolute error of the heading angle was 1.53°. Luo et al. [55] showed that a cotton variable-rate spraying system based on UAV LiDAR can use the alpha shape algorithm to calculate the canopy volume (R² = 0.89), adjust the flow in real time, and save 43.37% of the dosage in field tests. The PointCleanNet deep learning method of Nazeri et al. [56] improved the R² of leaf area index (LAI) prediction by removing outliers, while reducing the RMSE. Cruz et al. [57] found that the fertilization positioning error can be reduced from 13 mm to 11 mm by comparing the general point cloud map (G-PC) with the local point cloud map (L-PC), providing robust positioning support for precision fertilization.
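DBSCAN groups points that are densely packed and labels isolated points as noise, which suits stem point clouds where each stem forms a compact blob. The sketch below is a plain textbook DBSCAN with a brute-force neighbor search, without the adaptive radius filtering of [54]; `dbscan`, `cluster_centroids`, the parameter values, and the points are assumptions for illustration. It requires Python 3.8 or later for `math.dist`.

```python
import math


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)

    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1                  # provisional noise, may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster         # noise reachable from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reach = neighbors(j)
            if len(reach) >= min_pts:       # only core points expand the cluster
                queue.extend(reach)
    return labels


def cluster_centroids(points, labels):
    """Centroid of each cluster, usable as one feature point per stem."""
    groups = {}
    for p, lab in zip(points, labels):
        if lab != -1:
            groups.setdefault(lab, []).append(p)
    return {lab: (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))
            for lab, pts in groups.items()}


# Hypothetical ground-plane points (metres): two stems and one stray return.
stems = [(0, 0), (0.05, 0), (0, 0.05), (0.05, 0.05),
         (1, 0), (1.05, 0), (1, 0.05), (1.05, 0.05),
         (0.5, 2.0)]
labels = dbscan(stems, eps=0.1, min_pts=3)
print(labels, cluster_centroids(stems, labels))
```

The resulting centroids can then be passed to a line fit to obtain the navigation line.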

2.2.3. Three-Dimensional Feature Calculation

Three-dimensional (3D) feature calculation quantifies crop growth and supports guidance. Nazeri et al. [58] used statistical features of LiDAR point clouds to establish a regression model: the R² of the predicted sorghum LAI ranged from 0.4 to 0.6, and the height difference was used to infer the growth status. Lin et al. [59] proposed a multi-temporal UAV LiDAR framework for automatic recognition of row position and orientation angle; the net vertical and planimetric discrepancies between multi-temporal point clouds are ±3 cm and ±8 cm, and the framework adapts to different planting orientations and crop types.

Karim et al. [60] used machine learning models to quantify the morphological features of apple orchards. The results showed that the mean absolute error (MAE) of tree height was 0.08 m, the MAE of canopy volume was 0.57 m³, the RMSE of row spacing was 0.07 m, and R² was 0.94. Escolà et al. [61] compared Multi-Temporal Laser Scanning (MTLS-LiDAR) and Unmanned Aerial Vehicle-Digital Aerial Photography (UAV-DAP) methods and found that using both LiDAR and TLM to obtain canopy stereo data can provide more detailed information about the canopy. When a large area is occluded, the two can complement each other. The approach also has a high degree of spatial redundancy, making it suitable for large-scale monitoring and for monitoring overall canopy volume (19–25 m³).
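The error measures used throughout this section (MAE, RMSE, and R²) have standard definitions, shown in the short sketch below. The sample values are invented purely to exercise the functions and are not data from the cited studies.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Invented tree heights in metres: field measurements vs. LiDAR estimates.
measured = [1.0, 2.0, 3.0, 4.0]
estimated = [1.1, 1.9, 3.2, 3.8]
print(mae(measured, estimated), rmse(measured, estimated), r_squared(measured, estimated))
```

RMSE penalizes large individual errors more heavily than MAE, so reporting both, as several of the cited studies do, gives a fuller picture of the error distribution.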

2.2.4. Summary of LiDAR-Based Detection Methods

LiDAR-based crop row detection relies on the accurate positioning and depth perception provided by 3D point cloud data, which gives it strong advantages in low light or under severe canopy occlusion. The technical system begins with point cloud preprocessing, which uses filtering, denoising, downsampling, and other methods to remove noise and reduce redundant data, and uses optimization methods such as dynamic clustering to preserve key data in the point cloud. This lays a solid foundation for subsequent processing and helps meet the real-time requirements of assisted driving for agricultural machinery. Row structure extraction applies fitting and clustering algorithms to the preprocessed point cloud to segment crops from the background, and generates low-offset navigation lines close to the actual path based on positioning information. Three-dimensional feature calculation quantifies crop growth parameters and obtains relatively complete canopy information even under large-scale occlusion. However, LiDAR-based methods have certain limitations, such as sparse point clouds, high equipment costs, and difficulty in popularization. In some complex scenarios, objects may be fragmented, and the extraction quality may also deteriorate where ground objects occlude each other or point clouds overlap.

2.3. Multi-Sensor Fusion Method

As discussed above, a single sensor is not robust against environmental influences and is prone to problems such as low recognition rates and inaccurate positioning. Multi-sensor fusion combines the complementary advantages of multiple sensors to achieve a higher detection rate.

2.3.1. Visual–LiDAR Fusion

Vision and LiDAR are combined to co-extract texture and geometric features. Xie et al. [62] pointed out that multi-sensor fusion and edge computing will become the future development trend and can achieve centimeter-level positioning. Using drones for obstacle detection and parameter extraction, together with K-means parameter extraction and Markov chain dynamic programming, improved the collaborative operation of agricultural machinery compared with traditional collaborative operation methods [63]. A system based on 2D LiDAR-AHRS-RTK-GNSS adopted a variable threshold segmentation method and was tested on fragmented dryland and paddy fields, with MAEs of 59.41 mm and 69.36 mm, respectively, and an angle error of 0.6489° [64].

Shi et al. [65] proposed a vision and LiDAR fusion method for navigation line extraction in a pomegranate orchard, based on the YOLOv8 ResCBAM tree trunk detection model. Field experiments showed an average lateral error of 5.2 cm, an RMS error of 6.6 cm, and a navigation success rate of 95.4%. Song et al. [66] registered RGB-D camera and LiDAR point clouds and obtained R² values of 0.9–0.96 for corn plant height and 0.8–0.86 for LAI during the seedling stage. Li et al. [67] reduced the seeding depth error by 10.7% to 22.9% by combining laser ranging with array LiDAR. Guan et al. [68] fused features from RGB images and depth images using the YOLO-GS algorithm and achieved a water pump recognition accuracy of 95.7% with a positioning error of less than 5.01 mm. Hu et al. [69] improved apple detection by combining YOLOX with RGB-D images, obtaining an F1-score of 93% and a 3D positioning error of less than 7 mm.

2.3.2. Visual–GNSS/IMU Fusion

This fusion method combines the local accuracy of vision with the stability of GNSS/IMU, addressing the limitations of single-sensor localization. Wu et al. [70] proposed a surveying scheme that combines vision with unmanned aerial vehicle GNSS, and the RMS navigation accuracy for curved rice seedling rows reached 5.9 cm. The three schemes proposed by Mwitta et al. [71] for cotton fields integrate GPS and vision, with an average lateral error of 9.5 cm for local–global planning. The fusion strategy designed by Li et al. [72] for phenotyping robots has a lateral error of 0.8 cm and a crop positioning error of 6.4 cm in continuous scenes without GNSS. Li et al. [73] combined RTK-GNSS and an odometer to solve the problems of missed spraying at breakpoints and large flow control error in agricultural spraying operations.
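The complementary roles of the two sources can be sketched with a scalar Kalman filter step, in which dead reckoning (IMU or odometry) predicts the lateral offset and a vision measurement corrects it. This is a generic textbook formulation and is not the scheme of any of the cited works; the function name and all variances below are hypothetical.

```python
def fuse_lateral_offset(prior, prior_var, motion, motion_var, vision, vision_var):
    """One predict/update step of a scalar Kalman filter.

    prior, prior_var   : previous lateral offset estimate (m) and its variance
    motion, motion_var : offset change from dead reckoning (m) and its variance
    vision, vision_var : lateral offset measured by the camera (m) and its variance
    """
    # Predict: dead reckoning shifts the estimate and inflates the uncertainty.
    predicted = prior + motion
    predicted_var = prior_var + motion_var
    # Update: weight the vision measurement by the relative uncertainties.
    gain = predicted_var / (predicted_var + vision_var)
    estimate = predicted + gain * (vision - predicted)
    estimate_var = (1.0 - gain) * predicted_var
    return estimate, estimate_var


# Hypothetical numbers: the vehicle drifted 2 cm by dead reckoning,
# while the camera reports a 5 cm offset from the row centre line.
est, var = fuse_lateral_offset(0.0, 0.01, 0.02, 0.01, 0.05, 0.02)
print(est, var)
```

When vision is unavailable the update step can simply be skipped, so the variance keeps growing until the next valid measurement arrives; the same holds with the roles reversed when GNSS is lost.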

2.3.3. Other Multi-Sensor Fusion Methods

Chen et al. [74] fused visual and infrared/tactile methods for rice lodging recognition, achieving F1-scores of 91.80% and 91.82% in visual and tactile recognition, respectively. The average accuracy of fusion-based weed density detection in paddy fields is 91.7%, higher than the 64.17% achieved by visual methods alone [75]. The accuracy and reliability of rice recognition devices are balanced through tactile stimulation of visual acquisition [76]. Gronewold et al. [77] proposed a hybrid tactile-visual detection and positioning system for navigation that can be used for obstacle avoidance with an accuracy of 97%. The system achieves autonomous driving over a maximum distance of more than 30 m.

2.3.4. Summary of Multi-Sensor Fusion Method

Multi-sensor fusion exploits the complementary advantages of each sensor, improving the robustness and accuracy of crop row detection and mitigating the distortion, low recognition rate, and large positioning deviation that a single sensor suffers in harsh environments. The combination of vision and LiDAR is the most common: the two sensors compensate for each other’s weaknesses, extract both texture and geometric features, achieve centimeter-level positioning, and accurately measure crop parameters such as plant height, reducing operational errors. Integrating vision with GNSS/IMU combines the local accuracy of vision with the global accuracy of GNSS/IMU, keeping the positioning error low even in continuous scenes without GNSS. In specific scenes, fusing vision with infrared or tactile sensing can break through the limitation of a single modality and improve the weed recognition rate and obstacle avoidance rate to some extent. However, these methods also have clear drawbacks: (1) multi-source data must be acquired simultaneously and synchronized accurately; (2) algorithm complexity is relatively high; and (3) system cost is higher than that of a single sensor.
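Drawback (1) can be made concrete with a minimal sketch of software time synchronization, assuming each sensor delivers a sorted list of timestamps in seconds. Each camera frame is paired with the nearest LiDAR scan, and pairs whose time gap exceeds a tolerance are dropped. The function name, sampling rates, and tolerance are illustrative assumptions; hardware triggering or interpolation may be preferable in a real system.

```python
from bisect import bisect_left


def match_nearest(camera_stamps, lidar_stamps, max_gap):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    Both lists must be sorted in ascending order (seconds).
    Returns a list of (camera_index, lidar_index) pairs whose time
    difference does not exceed max_gap.
    """
    pairs = []
    if not lidar_stamps:
        return pairs
    for i, t in enumerate(camera_stamps):
        j = bisect_left(lidar_stamps, t)
        # The nearest scan is either just before or just after position j.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(lidar_stamps)]
        best = min(candidates, key=lambda k: abs(lidar_stamps[k] - t))
        if abs(lidar_stamps[best] - t) <= max_gap:
            pairs.append((i, best))
    return pairs


# Hypothetical streams: a 30 Hz camera and a 10 Hz LiDAR, 20 ms tolerance.
camera = [0.000, 0.033, 0.066, 0.100]
lidar = [0.000, 0.100]
pairs = match_nearest(camera, lidar, max_gap=0.02)
print(pairs)
```

Only the frames that coincide with a scan survive the tolerance check, which shows how a slow sensor limits the effective rate of the fused output.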

2.4. Summary of Conventional Crop Row Detection Methods and Principles

This section summarizes the mainstream technical methods for crop row detection: vision-based methods, LiDAR-based methods, and multi-sensor fusion methods. Traditional vision-based methods rely on manual feature extraction techniques such as threshold segmentation and the Hough transform, which are suitable for scenarios such as broken seedling rows or lighting changes in structured backgrounds, but generalize poorly to complex environments. Deep-learning-based methods, in contrast, use models such as CNN or YOLO to learn features automatically and achieve good accuracy in high-complexity scenarios such as dense weeds and curved rows; lightweight design can, to a certain extent, balance efficiency and performance. LiDAR-based methods preprocess point clouds, extract row structure, and calculate 3D features to obtain accurate geometric information, providing a precise geometric reference that supplements vision in scenarios such as canopy occlusion and weak GNSS signals. Multi-sensor fusion improves robustness by exploiting the texture–geometry complementarity of vision and LiDAR and the long- and short-term localization complementarity of vision and GNSS/IMU. Table 1 compares the advantages and disadvantages of the methods in terms of principle type, application scenario, and robustness characteristics, so that an appropriate method can be chosen for a given practical application.

Table 1. Comparison of different methods for crop row detection technology.

The mainstream technologies for crop row detection fall into three categories: visual sensors, LiDAR, and multi-sensor fusion. Traditional visual technology extracts manually designed features and uses geometric algorithms for localization. It runs quickly, with very low latency, and is accurate in structured farmland scenes, where its localization error can be kept within a few centimeters. However, it is not robust in unstructured farmland scenes with overgrown weeds and large changes in lighting. Deep learning methods such as the YOLO series achieve good accuracy by introducing attention mechanisms and multi-scale fusion. Most reported detection results are above 90%, and a few models reach 166 fps. Lightweight designs adapt well to environments with limited computing power, but they require large-scale annotated data. LiDAR technology based on three-dimensional point clouds offers accurate distance measurement, good stability, and low sensitivity to weak light. It can quantify crop growth parameters and has a positioning error of less than 0.5 mm. Its shortcomings are sparse point clouds, high cost, and reduced extraction accuracy in complex scenes. Multi-sensor fusion has become a research focus: fusing vision and LiDAR combines texture and geometric information to achieve centimeter-level positioning, while fusing vision and GNSS/IMU achieves both local accuracy and global stability and keeps the error low even after GNSS loss of lock. However, fusion must address data synchronization, algorithmic complexity, and system cost. Different technologies suit different scenarios, and fusion can compensate for the deficiencies of individual sensors.

3. Performance Evaluation Indicators for Crop Row Detection Methods

3.1. Accuracy Indicators

Accuracy is the core indicator for measuring the practicality of crop row detection methods, directly reflecting the reliability of navigation and operations. This section summarizes the performance of mainstream methods from two dimensions: detection accuracy and positioning error, providing a quantitative basis for technical optimization and scene adaptation.

3.1.1. Detection Accuracy

Accuracy, F1-score, and recall are commonly used indicators of the ability to detect crop row features. Sun et al. [101] used an adaptive disturbance observer sliding mode control method to address the issue of unknown disturbances affecting tractor path tracking, reducing the average lateral error by 20–31.7%, and the heading error by 5–21%. Cui et al. [102] added a path search function to the traditional Stanley model, and the average lateral error of straight-line tracking increased from 5.2 cm to 6.0 cm at a vehicle speed of 1 m/s, which was 10.3% higher than using the traditional model, and the maximum error across the entire scene was reduced from 34 cm to 27 cm.

Afzaal et al. [103] proposed a crop row detection framework integrating deep learning and deep modeling ideas, achieving an F1-score of 0.8012, accuracy of 0.8512, and recall of 0.7584 on the validation set, and achieving a good balance between accuracy and recall. Gong et al. [104] improved the YOLOX Tiny network with an attention mechanism, achieving an average accuracy of 92.2%, which is 16.4% higher than the original YOLOX Tiny, and an angle error of 0.59°.
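The relation between these metrics can be checked with a short calculation. The sketch below assumes that the value reported as accuracy (0.8512) is the precision, which is an interpretation and is not stated in the source. Under that assumption the harmonic mean of precision and recall is about 0.802, close to but not identical to the reported F1-score of 0.8012; the small gap may come from rounding or from a different averaging procedure.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Values reported for [103]; treating "accuracy" as precision is an assumption.
f1 = f1_score(0.8512, 0.7584)
print(round(f1, 4))  # 0.8021
```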

Li et al. [105] used a row-column attention network, achieving 95.75% accuracy on the tea and vegetable datasets, about 8.5% higher than the state of the art (SOTA). The mean absolute error of the lateral distance was 8.8 pixels, and processing a 1920 × 1080 image took only 23.81 ms. Wei et al. [106] used the Crop BiSeNet V2 algorithm to obtain an accuracy of 0.9811, a detection speed of 65.54 ms/frame, and strong generalization ability on the corn seedling dataset. YOLOv11 detects weeds in the field at about 34 fps with an mAP of up to 97.5%, making it more suitable for field deployment [107].

Diao et al. [108] proposed the ASPPF-YOLOv8s model, which obtained 90.2% mAP and 91% F1-score. The navigation line fitting accuracy was 94.35%, and the angular error was 0.63°. Zhu et al. [109] used the SN-YOLOXNano ECA model to achieve 97.86% classification accuracy, 98.52% recall, and >96% accuracy for foliar fertilizer spraying information. Liang et al. [110] used the DE YOLO model to detect rice impurities and obtained 97.55% mAP, which was 2.9% higher than YOLOX and had 48.89% fewer parameters than YOLOX. The improved YOLOv8n by Jiang et al. [111] can achieve an accuracy rate of 94.7% for strawberry detection, and can achieve a correct grouping rate greater than 94%. Memon et al. [112] established an inter-row weed and cotton field recognition system, with 89.4% and 85.0% recognition rates, respectively.

3.1.2. Positioning Error

The magnitude of the positioning error affects the accuracy of crop row geometric parameter estimation and thus the effectiveness of navigation path planning. Zhang et al. [113] proposed an adaptive system for unmanned tracked harvesters in paddy fields, with a steady-state tracking deviation of 0.09 m on concrete pavement, a steady-state tracking deviation of 0.032 m in paddy fields, and a cutting platform utilization rate of 91.3%. The tractor–trailer dual-layer closed-loop control system proposed by Lu et al. [114], which accounts for parameter uncertainty, keeps the position error within 0.1 m and the heading error within 0.1°. Yang et al. [115] used a 3D LiDAR method to obtain canopy point clouds through dual thresholding; their algorithm achieved >86% correct detection rate and <120 ms average processing time across four corn growth stages, with the accuracy of canopy localization unaffected by crop density. Kong et al. [116] improved the ENet network for identifying navigation lines at the rice heading stage, with a segmentation IoU of 89.3% and an average deviation of less than 5 cm from the actual path.
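The error statistics quoted throughout this review (mean lateral error, MAE, RMS error) can be computed as in the following minimal sketch. It assumes the reference path is a straight line given by a point and a heading angle, and that the vehicle positions are logged in the same planar coordinate frame in metres. The function names and the sample track are hypothetical.

```python
import math


def lateral_error(point, line_point, heading_rad):
    """Signed perpendicular distance from point to the reference line.

    Positive values lie to the left of the travel direction.
    """
    dx = point[0] - line_point[0]
    dy = point[1] - line_point[1]
    return -dx * math.sin(heading_rad) + dy * math.cos(heading_rad)


def mean_absolute_error(errors):
    return sum(abs(e) for e in errors) / len(errors)


def root_mean_square_error(errors):
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical track: the reference line runs along the x axis from the origin.
track = [(1.0, 0.03), (2.0, -0.04), (3.0, 0.05)]
errors = [lateral_error(p, (0.0, 0.0), 0.0) for p in track]
mae = mean_absolute_error(errors)
rmse = root_mean_square_error(errors)
print(mae, rmse)
```

Because the RMS error squares each deviation, it is never smaller than the MAE and reacts more strongly to occasional large deviations, which is why both values are often reported together.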

3.1.3. Summary of Accuracy Indicators

The evaluation of crop row detection methods focuses first on accuracy, that is, detection accuracy and positioning error, which determines the reliability of navigation operations. Detection performance is generally measured by accuracy, F1-score, or recall. Different optimized models perform well in different application scenarios; for example, optimized YOLO-series models suit large-scale, multi-category crop row detection, and in some scenarios they correctly distinguish crops from weeds. Many models report an accuracy above 95% on specific datasets while maintaining a detection speed that permits field deployment.

The positioning error affects the accuracy of geometric parameter estimation of crop rows and the effectiveness of navigation path planning, and it can be reduced by optimizing the control system and algorithms. Unmanned tracked harvesters achieve small tracking deviations in paddy fields, and some algorithms maintain small errors throughout the crop growth period without being affected by crop density. Taken together, accuracy indicators evaluate detection results in terms of feature recognition and geometric position while accounting for differences in the accuracy, speed, and robustness of detection methods. They distinguish the advantages and disadvantages of different methods, guide subsequent improvement and application, and support a more reasonable and comprehensive performance evaluation system that meets actual production needs.

3.2. Efficiency Indicators

Efficiency indicators measure how well a crop row detection method can actually be deployed; they directly affect the real-time response of agricultural robots to crop rows and the match with hardware. In complex field environments, crop row detection needs to balance accuracy and speed while considering computational resource consumption. This section summarizes the performance of various algorithms from the perspectives of real-time performance and computational cost.

3.2.1. Real-Time Performance

Real-time performance concerns detection speed and responsiveness to environmental changes; frames per second (fps) and single-frame processing time are the two commonly used indicators. Zhang et al. [117] proposed a lightweight TS-YOLO model, which replaces the YOLOv4 convolutions with MobileNetV3 and depth-wise separable convolution and adds deformable convolution, a coordinate attention module, and other components, reducing the model size to 11.78 M (only 18.30% of YOLOv4). Compared with YOLOv4, it is 11.68 fps faster and reaches an accuracy of 85.35% under various lighting conditions.
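Since the studies in this review report speed either as fps or as milliseconds per frame, a short conversion sketch helps to compare them. It also estimates how far a vehicle travels during the processing of one frame; the 5 cm travel limit used in the example is a hypothetical requirement and is not taken from the source.

```python
def fps_from_frame_time(ms_per_frame):
    """Convert single-frame processing time (ms) to frames per second."""
    return 1000.0 / ms_per_frame


def frame_time_from_fps(fps):
    """Convert frames per second to single-frame processing time (ms)."""
    return 1000.0 / fps


def travel_per_frame(speed_m_s, ms_per_frame):
    """Distance (m) the vehicle covers while one frame is processed."""
    return speed_m_s * ms_per_frame / 1000.0


# Figures reported in this review: 49 ms/frame [118] and about 34 fps [107].
print(round(fps_from_frame_time(49.0), 2))   # 20.41
print(round(frame_time_from_fps(34.0), 2))   # 29.41

# Hypothetical requirement: at 1 m/s, move no more than 5 cm per processed frame.
ok = travel_per_frame(1.0, 49.0) <= 0.05
print(ok)
```

The travel-per-frame view ties the speed metric to the driving speed of the machine, so the same frame rate may be sufficient for slow weeding robots and insufficient for faster tractors.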

Luo et al. [118] studied harvest boundary detection methods for various crops based on stereo vision and achieved >98% accuracy for rice, rapeseed, and corn. Automatic turning of the combine harvester during automatic harvesting was also achieved, with a processing speed of 49 ms/frame. He et al. [119] proposed the YOLO Broccoli Seg model, which improves YOLOv8n Seg by adding a triple attention module to enhance feature fusion capability. The mean average precision values mAP50 (Mask), mAP95 (Mask), mAP50 (bounding box, Bbox), and mAP95 (Bbox) are 0.973, 0.683, 0.973, and 0.748, respectively, with an 8.7% increase in accuracy. This model provides real-time recognition support for automatic broccoli harvesting. Lac et al. [120] proposed an algorithm for crop stem detection and tracking. In experiments on corn and legumes, it achieved F1-scores of 94.74% and 93.82%, respectively, and it can operate in real time on embedded computers with power consumption not exceeding 30 W, supporting precise mechanical weeding in vegetable fields.

3.2.2. Computational Cost

The CornNet navigation line extraction algorithm proposed by Guo et al. [121], based on YOLOv8, replaces the original Conv module with Depthwise Convolution (DWConv) and the C2f module with PP LCNet, reducing the number of parameters and GFLOPs to make the model lightweight. Yang et al. [122] added a small object detection layer (P2) to the original YOLOv8-obb model and obtained the best performance on 2640 × 1978 images captured at a height of 7 m. Three proportional head structures were added to the improved YOLOv8-obb model, and comparative experiments were conducted on this basis. The model improved the accuracy for the smallest objects by 0.1–2% and the F1-score by 0.5–3% compared with the comparative model. K-means clustering and linear fitting were used for row information extraction to reduce the cost of quantifying and calculating the planting layout. Lin et al. [123] used an enhanced multitask YOLO algorithm based on C2F and anchor-free modules to segment passable areas and detect weeds in pineapple fields. Compared with previous methods, accuracy increased by 4.27% and mIoU by 2.5%. The error in navigation line extraction was 5.472 cm, addressing the inaccurate perception of ground robots.
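Why depthwise convolutions reduce the parameter count can be shown with a short calculation. The sketch compares a standard k × k convolution with a generic depthwise separable convolution (a depthwise k × k layer followed by a 1 × 1 pointwise layer), ignoring biases. The layer sizes are hypothetical and do not describe the architecture of any cited model.

```python
def conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def dw_separable_params(c_in, c_out, k):
    """Weights of a depthwise k x k layer plus a 1 x 1 pointwise layer."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer: 128 input channels, 256 output channels, 3 x 3 kernel.
standard = conv_params(128, 256, 3)
separable = dw_separable_params(128, 256, 3)
ratio = separable / standard
print(standard, separable, round(ratio, 4))  # 294912 33920 0.115
```

The ratio equals 1/c_out + 1/k², so for 3 × 3 kernels and wide layers the separable form needs roughly one ninth of the weights, with a comparable reduction in multiply-accumulate operations at the same output resolution.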

3.2.3. Summary of Efficiency Indicators

For crop row detection methods, efficiency indicators examine whether a method can respond in real time and run on acceptable hardware in practical applications, balancing accuracy and speed while considering computational resource consumption. The main focus is on real-time performance and computational cost. Real-time performance is described by two indicators: frame rate and single-frame processing time. TS-YOLO improves speed through model slimming and can adapt to all-weather operations. SEEDING-YOLO and YOLO-like models with U-Net as the backbone network can maintain high detection accuracy while meeting the frame rate requirements of seedling monitoring. Some models support automatic steering of autonomous harvesters and precise seeding.

Computational cost quantifies the hardware demand in terms of the number of parameters and the amount of computation. Models are optimized with depth-wise separable convolutions and similar methods that reduce parameters and computational complexity, easing the model load while compensating for accuracy loss. Some algorithms improve the accuracy of small object detection by changing their structure while also reducing the cost of quantifying planting layouts. Efficiency indicators thus measure technical feasibility through real-time response and resource consumption. Lightweight models and optimized algorithms are an effective way to balance accuracy and efficiency, and they ease the selection of methods for different application scenarios and hardware platforms.

3.3. Robustness Indicators

Robustness measures whether crop row detection technology operates stably; it is a focus of research and directly determines the practical application value of the technology. Building on accuracy and efficiency, the evaluation of robustness depends mainly on whether a method tolerates changes in lighting, weed interference, and broken crop rows.

3.3.1. Environmental Adaptability

Environmental adaptability refers to the ability of a method to resist common field disturbances such as light fluctuations and weed density. Patidar et al. [124] combined YOLOv7 object detection with the ByteTrack Simple Online and Real Time Tracker (BTSORT) to estimate the weed density in chili fields. The YOLOv7 recognition model had a recognition accuracy of 0.92, a recall of 0.94, and a frame rate of 47.39 fps. BTSORT combined with YOLOv7 achieved a multi-object tracking accuracy (MOTA) of 0.85, a multi-object tracking precision (MOTP) of 0.81, and an overall classification accuracy of 0.87. Processing 180 × 720 images was 1.38 times faster than processing 1920 × 1080 images, enabling dynamic estimation of weed density in chili fields.
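For readers unfamiliar with tracking metrics, the multi-object tracking accuracy is commonly defined as one minus the ratio of all tracking errors (missed objects, false positives, and identity switches) to the number of ground-truth objects. The sketch below applies this standard definition to hypothetical counts chosen only to illustrate how a value of 0.85 can arise; they are not the counts of [124].

```python
def mota(false_negatives, false_positives, id_switches, ground_truth_objects):
    """Multi-object tracking accuracy summed over all frames."""
    if ground_truth_objects <= 0:
        raise ValueError("ground_truth_objects must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / ground_truth_objects


# Hypothetical counts accumulated over a video sequence.
score = mota(false_negatives=80, false_positives=60, id_switches=10,
             ground_truth_objects=1000)
print(round(score, 2))  # 0.85
```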

Song et al. [125] combined semantic segmentation with RGB-D and HHG data in a wheat field navigation algorithm, achieving 95% mIoU for HHG, <0.1° average navigation line angle deviation with a 2.0° standard deviation, and 30 mm average distance deviation. This method can avoid interference caused by crop occlusion within a certain range. Costa et al. [126] found that mixing image sets from different cameras and different fields improves the robustness of CNN-based crop row detection when only a small amount of data is available. Vrochidou et al. [127] noted that visual systems are affected by lighting and that multispectral imaging mitigates the adverse effects of noise; stereo vision is more stable and better suited to navigating crops such as cotton and corn in large fields. Zhao et al. [128] combined the ExGR index, OTSU, and DBSCAN, which outperformed traditional algorithms in characterizing crop rows among weeds and shadows; the DBSCAN clustering method is better suited to characterizing crop rows in complex environments. Pang et al. [129] proposed a corn row detection system that combines geometric descriptors with MaxArea Mask Scoring RCNN, achieving an estimation accuracy of 95.8% for seedling emergence rate.
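The first two steps of such a pipeline can be sketched as follows. The code assumes the commonly used definitions ExG = 2g − r − b and ExR = 1.4r − g on normalized chromatic coordinates, with ExGR = ExG − ExR, and a plain histogram-based Otsu threshold. It is not the implementation of [128]; the bin count and the sample pixels are hypothetical, and the DBSCAN clustering step is omitted.

```python
def exgr(r, g, b):
    """Excess green minus excess red index of one RGB pixel."""
    total = r + g + b
    if total == 0:
        return 0.0
    rn, gn, bn = r / total, g / total, b / total
    excess_green = 2.0 * gn - rn - bn
    excess_red = 1.4 * rn - gn
    return excess_green - excess_red


def otsu_threshold(values, bins=64):
    """Threshold that maximizes the between-class variance of a histogram."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_var, best_idx = -1.0, 0
    count0, sum0 = 0, 0.0
    for i in range(bins - 1):
        count0 += hist[i]
        sum0 += i * hist[i]
        count1 = total - count0
        if count0 == 0 or count1 == 0:
            continue
        mean0 = sum0 / count0
        mean1 = (sum_all - sum0) / count1
        between = count0 * count1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_var, best_idx = between, i
    # Upper edge of the last bin assigned to the background class.
    return lo + (best_idx + 1) * width


# Hypothetical pixels: two green plant pixels followed by two brownish soil pixels.
pixels = [(40, 160, 30), (50, 170, 40), (120, 90, 60), (130, 100, 70)]
index = [exgr(*p) for p in pixels]
threshold = otsu_threshold(index)
mask = [v >= threshold for v in index]
print(mask)
```

The resulting vegetation mask would then be passed to a clustering step such as DBSCAN to group plant pixels into rows.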

3.3.2. Scene Fault Tolerance

Scene fault tolerance refers to adaptability to abnormal crop growth and extreme weather conditions. Liang et al. [130] proposed a navigation line detection method for cotton ridge cutting based on edge detection and OTSU, which uses the least squares method to fit navigation lines in narrow row gaps, achieving a visual detection positioning accuracy of 99.2% and a processing speed of 6.63 ms per frame during the cotton seedling stage. Its recognition accuracy for corn and soybeans reached 98.1% and 98.4%, respectively, with a mean lateral drift of 2 cm and a mean longitudinal drift of 0.57°. It is robust against missing seedlings and ruts. Zhang et al. [131] designed a corn missed sowing detection and compensation system using microcontrollers and fiber optic sensors. The accuracy of missed sowing detection is 96%, the error of replanting is 4%, and the replanting rate is 90%. The qualified rate of sowing when the tractor travels at a speed of 3–8 km/h reaches 90%, which can meet the requirements of missed sowing detection and replanting.
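Least squares fitting of a navigation line can be sketched in a few lines. Because crop rows are usually close to vertical in the image, the sketch fits the column coordinate x as a linear function of the row coordinate y, which avoids very large slopes. The feature points and function names are hypothetical, and the code is a generic illustration, not the method of [130].

```python
import math


def fit_line_x_of_y(points):
    """Ordinary least squares fit of x = slope * y + intercept."""
    n = len(points)
    if n < 2:
        raise ValueError("at least two points are required")
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points)
    if syy == 0:
        raise ValueError("points must span more than one image row")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / syy
    intercept = mean_x - slope * mean_y
    return slope, intercept


def deviation_from_vertical_deg(slope):
    """Angle between the fitted line and the vertical image axis, in degrees."""
    return math.degrees(math.atan(slope))


# Hypothetical feature points (x, y) in pixels along the gap between two rows.
points = [(320.0, 0.0), (330.0, 100.0), (340.0, 200.0), (350.0, 300.0)]
slope, intercept = fit_line_x_of_y(points)
angle = deviation_from_vertical_deg(slope)
print(slope, intercept, round(angle, 2))
```

Missing seedlings remove some feature points but leave the fit well defined as long as the remaining points span the image, which is one reason why line fitting tolerates gaps in the row.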

3.3.3. Summary of Robustness Indicators

Among the performance evaluation indicators of crop row detection methods, robustness indicates whether the technology can work stably and directly determines whether it has practical application value. Robustness is judged mainly from the perspectives of environmental adaptability and scene fault tolerance, examining how well a method tolerates changes in lighting, weed interference, and broken crop rows. For environmental adaptability, optimization algorithms and multimodal techniques enhance anti-interference ability: the YOLOv7 tracker model can dynamically estimate weed density, algorithms that integrate semantic segmentation with multi-source data reduce the interference caused by crop occlusion, and image acquisition technologies such as multispectral imaging and stereo vision alleviate the impact of lighting noise. These methods are more effective than traditional algorithms among weeds and shadows. In terms of scene fault tolerance, specialized detection methods have also shown good robustness under abnormal crop growth and extreme environmental conditions. The cotton seedling navigation line detection method tolerates missing seedlings and ruts and also applies to other crops, and the corn missed-sowing detection and compensation system accurately detects missed sowing and quickly replants at different driving speeds. Robustness indicators thus quantify how well a technology adapts to the environment and scene and tolerates abnormal situations, in order to evaluate whether it can truly be applied in the field. They are one of the key reference standards for the transition from the laboratory to the field.

3.4. Practical Indicators

The practicality index focuses on the practical feasibility of crop row detection technology and directly relates to the potential for large-scale application of the technology. Compared to accuracy, efficiency, and robustness, practicality focuses more on the controllability of hardware costs and the convenience of deployment processes, which are key considerations for technology transitioning from the laboratory to the field.

3.4.1. Hardware Cost

Hardware is one of the factors that determine whether a technology is economical, and low-cost solutions are easier to deploy. Cox et al. [132] proposed Visual Teach and Generalize (VTAG), which uses a low-cost uncalibrated monocular camera and wheel odometry to navigate crop rows automatically in a greenhouse environment. After teaching over a distance of only 25 m, the robot can navigate more than 3.5 km, a generalization gain of about 140, providing a low-cost method for environments where GNSS signals are obstructed. Calera et al. [133] proposed an under-canopy agricultural rover navigation system that combines low-cost hardware with multiple image methods to achieve seamless crop navigation for robots in different field conditions and locations without human intervention. Torres et al. [134] compared estimates of woody crop canopy parameters from MTLS-LiDAR and UAV-DAP and found R² values of 0.82–0.94, providing a reliable data reference for the selection of orchard cultivation techniques. Hong et al. [135] improved the Adaptive Monte Carlo Localization and Normal Distributions Transform (AMCL-NDT) localization algorithm and applied it with 2D LiDAR on agricultural robots. Simulations in a palm garden showed that the absolute pose error of the robot was reduced by more than 53%, demonstrating the strong cost-effectiveness of 2D LiDAR.

3.4.2. Deployment Difficulty

The difficulty of deployment affects the feasibility of technology implementation, and simplifying labeling and annotation lowers the threshold for use. The robust crop row detection algorithm proposed by de Silva et al. [136], based on a sugar beet dataset containing 11 field variables, uses deep learning to segment crop rows in images from low-cost cameras under changing field conditions and performs better than the baseline. The InstaCropNet dual-branch structure labeling method based on camera histogram correction proposed by Guo et al. [137] can effectively eliminate blade interference and achieves labeling by simulating the strip-shaped structure at the center of the row. The average angle deviation of detection is ≤2°, and the detection accuracy reaches 96.5%. Jayathunga et al. [138] used unlabeled UAV-DAP point cloud information to detect coniferous tree seedlings, with a total accuracy of 95.2% and an F1-score of 96.6%, saving a significant amount of labeling work. Rana et al. [139] established the GobhiSet cabbage dataset, which provides automatic annotation options that reduce manual annotation time and can be used further for model improvement.

3.4.3. Summary of Practical Indicators

Among the performance evaluation indicators of crop row detection methods, practicality indicators address technical feasibility and indicate whether a technology can be applied at scale. Compared with accuracy, efficiency, and robustness, they place more emphasis on controllable hardware cost and simple, feasible deployment, which guide the transition from the test bench to farmland. In terms of hardware cost, low-cost solutions require little investment and are easy to deploy; for example, an uncalibrated monocular camera with wheel odometry can navigate long distances without GNSS signals and generalizes well across environments. Combining 2D LiDAR with optimized positioning algorithms meets certain accuracy and performance requirements while remaining cost-effective, making it more suitable for economically disadvantaged areas. Concerning deployment difficulty, simplifying annotation and calibration lowers the barrier to using machine vision systems. Some algorithms simulate the central structure of rows or use unlabeled point cloud data, which greatly reduces manual annotation and allows positioning without specially calibrated equipment, promoting adoption. Overall, technology selection should weigh economy and ease of operation together with accuracy, efficiency, and robustness. The four types of indicators together form a comprehensive quantitative evaluation that helps ensure improved crop row detection technology is used in actual production.

3.5. Summary of Performance Evaluation Indicators

The performance evaluation indicators quantify crop row detection methods from four aspects: accuracy, efficiency, robustness, and practicality. Accuracy indicators represent the ability to identify features and estimate geometric parameters through detection accuracy and positioning error. Efficiency indicators measure the balance between speed and cost through real-time performance and computational cost. Robustness indicators characterize environmental adaptability and scene fault tolerance, that is, stability under harsh conditions. Practicality indicators consider feasibility in terms of hardware cost and deployment difficulty. The four types of indicators complement each other and provide quantitative support for technical optimization and application.

Table 2 summarizes the typical indicators of various methods/models, and based on their main differences in accuracy, efficiency, and robustness, various technical indices can be measured and compared, providing a more intuitive and effective basis for the selection of crop row detection methods.

Table 2.

Comparison of performance evaluation indicators for crop row detection.

This section establishes an evaluation system for crop row detection methods based on four dimensions: accuracy, efficiency, robustness, and practicality. Detection accuracy is often measured by accuracy, F1-score, and similar metrics. The vast majority of models can achieve an accuracy of over 95% on specific datasets, and the highest mAP of YOLO after optimization can reach 97.55%. Positioning error can be lowered by optimizing control algorithms: the crop-density-independent steady-state tracking error of unmanned tracked harvesters in rice fields can reach 0.032 m, and the angular error of certain algorithms can be as low as 0.59°. In terms of efficiency, real-time performance is measured by frame rate and processing time per frame. The frame rate of the lightweight model TS-YOLO can already meet the needs of all-weather work, while models like SEEDING–YOLO improve both accuracy and speed. Computational cost can be reduced by methods such as depth-wise separable convolution, which minimize the number of parameters and calculations in the model while compensating for the loss of accuracy as much as possible. Robustness focuses on environmental adaptability and scene fault tolerance. It can be improved by combining multi-source data or using multimodal methods to enhance anti-interference ability. Adding RGB-D data to semantic segmentation can mitigate occlusion interference, and some methods have a certain fault tolerance for scenarios such as missing seedlings and ruts. Practicality considerations include hardware cost and deployment difficulty. A low-cost, uncalibrated monocular camera combined with a wheel speed sensor can achieve high-precision positioning on a small budget, and simplifying the labeling process greatly lowers the technical barrier to entry for long-distance navigation. Overall, lightweight design, low cost, and strong environmental adaptability are the main development directions for the future and are important factors in moving research from the laboratory to real-world scenarios.
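To make the parameter saving from depth-wise separable convolution concrete, the sketch below compares the weight counts of a standard convolution and its depth-wise separable counterpart. It assumes square kernels and ignores bias terms and normalization layers. The function names and the example layer size are illustrative only and do not describe any specific model in Table 2.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard convolution: one k x k x c_in filter per output channel."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depth-wise (k x k per input channel) plus point-wise (1 x 1) pair."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


def reduction_ratio(kernel: int, c_in: int, c_out: int) -> float:
    """Separable / standard weight ratio, equal to 1 / c_out + 1 / kernel**2."""
    return depthwise_separable_params(kernel, c_in, c_out) / standard_conv_params(
        kernel, c_in, c_out
    )
```

For a hypothetical 3 × 3 layer with 64 input and 128 output channels, the standard form has 73,728 weights and the separable form 8,768, roughly 12% of the original.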

4. Comparison of Adaptability in Farmland Scenes

4.1. Comparison of Methods for Open-Air Scenarios

As an agricultural production scenario, open-air fields are strongly affected by the external natural environment, and detection difficulty varies with weed density and crop growth stage. In simple scenarios, crop rows are regular and interference is minimal, so detection should prioritize efficiency. In complex scenarios, weeds are dense and crop morphology varies widely, so strong anti-interference performance is required.

4.1.1. Simple Scenarios in Open-Air Fields

In simple scenarios, crop rows are regular and interference is minimal, so lightweight methods have the advantage. Sun et al. [150] used GD-YOLOv10n-seg and PCA fitting to detect soybean and corn seedling rows. By integrating GhostModule and DynamicConv, the network size was reduced by 18.3%, the centerline fitting accuracy was 95.08%, the angle offset was 1.75°, and the processing speed was 61.47 fps, meeting the requirements of composite planting navigation. Gong et al. [151] modified YOLOv5s into YOLOv5-M3 by replacing the backbone with MobileNetv3 and adding CBAM, achieving 92.2% mAP for corn seedlings at a recognition speed of 39 fps while effectively denoising images and improving resistance to interference.
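The idea of fitting a row centerline with PCA can be sketched as follows. This is a minimal illustration, not the implementation of Sun et al. [150]: it assumes that the segmentation stage has already produced a list of (x, y) pixel coordinates belonging to one crop row, and it uses the closed-form orientation of the principal axis of a 2 × 2 covariance matrix. The function names are hypothetical.

```python
import math


def pca_centerline(points):
    """Fit a line to 2D points by PCA.

    Returns the centroid (cx, cy) and the principal-axis angle in degrees,
    measured from the x-axis and lying in [-90, 90].
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points) / n
    syy = sum((y - cy) ** 2 for _, y in points) / n
    sxy = sum((x - cx) * (y - cy) for x, y in points) / n
    # Closed-form orientation of the largest-eigenvalue eigenvector
    # of the covariance matrix [[sxx, sxy], [sxy, syy]].
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return (cx, cy), math.degrees(theta)


def offset_from_vertical_deg(angle_deg):
    """Deviation of the fitted line from the image's vertical axis, in degrees."""
    return abs(90.0 - abs(angle_deg))
```

The fitted line passes through the centroid along the direction of greatest variance, so unlike ordinary least squares on y it remains well defined for rows that appear nearly vertical in the image.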

Zheng et al. [152] used vertical projection and the Hough transform to extract corn row features and developed an automatic row-oriented pesticide application system. The algorithm took 42 ms on average, with an optimal accuracy of 93.3% and a field deviation of 4.36 cm, and reduced pesticide use by 11.4–20.4% compared with traditional methods. The SN-CNN model proposed by Zhang et al. [153], which incorporates the C2f_UIB module and SimAM attention, has 2.37 M parameters and reaches an mAP@0.5 of 94.6%. The RMSE of its RANSAC fitting is 5.7 pixels, and a whole image can be processed on an embedded platform in 25 ms, indicating high real-time performance. Geng et al. [154] developed an automatic row alignment system for corn harvesters that combines touch detection with adaptive fuzzy PID control. The proportion of stalk offsets within ±15 cm exceeded 95.4%, which significantly reduced ear loss. Hruska et al. [155] used a machine learning model based on near-infrared data for real-time weed detection in wide-row maize (Zea mays) fields. The customized model achieved a recognition accuracy of 94.5%, and the authors emphasized the practical limitations of the dataset.
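The RANSAC fitting step and its RMSE in pixels can be illustrated with a short sketch. This is a generic RANSAC line fit under stated assumptions, not the procedure of Zhang et al. [153]: feature points are given as (x, y) pixel coordinates, the inlier threshold and iteration count are arbitrary illustrative values, and the RMSE is computed from perpendicular distances of the inliers to the best sampled line without a final refit.

```python
import math
import random


def line_through(p, q):
    """Return (a, b, c) with a*x + b*y + c = 0 and a^2 + b^2 = 1, or None if p == q."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = math.hypot(a, b)
    if norm == 0.0:
        return None
    a, b = a / norm, b / norm
    return a, b, -(a * x1 + b * y1)


def ransac_line(points, threshold=2.0, iterations=200, seed=0):
    """Keep the two-point line that has the most points within `threshold` pixels."""
    rng = random.Random(seed)
    best_line, best_inliers = None, []
    for _ in range(iterations):
        p, q = rng.sample(points, 2)
        line = line_through(p, q)
        if line is None:
            continue
        a, b, c = line
        inliers = [pt for pt in points if abs(a * pt[0] + b * pt[1] + c) <= threshold]
        if len(inliers) > len(best_inliers):
            best_line, best_inliers = line, inliers
    return best_line, best_inliers


def rmse_to_line(points, line):
    """Root-mean-square perpendicular distance of points to the line, in pixels."""
    a, b, c = line
    return math.sqrt(sum((a * x + b * y + c) ** 2 for x, y in points) / len(points))
```

Because each candidate line is built from only two samples, isolated weed pixels rarely gather enough support to win, which is why this family of estimators is common for row fitting in the presence of outliers.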

4.1.2. Complex Scenarios in Open-Air Fields

Complex scenarios require strong anti-interference ability, and deep learning and multi-sensor fusion are currently among the most popular approaches. Yang et al. [156] applied a log transformation in a convolutional neural network to enhance corn ear features. By using a micro ROI to extract corn ear feature points, accuracy improved by 5% and real-time performance by 62.3%. Guo et al. [157] formulated crop row detection as Transformer-based curve approximation that outputs shape parameters end-to-end, which reduces processing steps and improves generality in complex environments. To address the low detection accuracy of tea buds in open-air tea gardens, Yu et al. [158] proposed Tea CycleGAN and a data augmentation method, which raised the mAP of YOLOv7 detection to 83.54% and alleviated the influence of lighting and other factors on detection accuracy.

Deng et al. [159] proposed the HAD-YOLO model based on indoor images of three common field weeds. On a greenhouse-collected dataset, the mean average precision (mAP) of weed detection was 94.2%. With weights pre-trained on the greenhouse-collected dataset, the mAP on the field-collected dataset reached 96.2%, with a detection frame rate of 30.6 fps. The HAD-YOLO model can therefore meet the requirements of precise weed identification in crop growth environments and provide a reference for automated weed control. Zuo et al. [160] introduced the LBDC-YOLO model, which is based on an improved YOLOv8, adopts a GSConv-based Slim-neck design, and incorporates a triple attention module. In open-air tests, the average detection accuracy for broccoli reached 94.44%, showing that the model can adapt to complex field disturbances. A corn row weeding robot using YOLOv5 and a seedling extraction method achieved a weed removal rate of 79.8% and a seedling damage rate of 7.3%, completing integrated operations [161]. An intelligent weeding machine based on an enhanced YOLOv5 achieved an average weeding rate of 96.87% and a seedling damage rate of 1.19%, improving adaptability to complex scenarios [162].

4.1.3. Open-Air Scenarios Challenges and Responses

In open-air scenarios, extreme weather such as rainstorms and strong winds has a large impact on sensors. Strong wind causes crops to lodge or sway, so that a relatively larger share of LiDAR returns is scattered to the ground and the point cloud becomes sparser, which seriously degrades subsequent point cloud preprocessing and row structure extraction. Some existing methods improve resistance to interference through hardware or sensing choices, such as using waterproof LiDAR to reduce rain damage to sensors or using a canopy navigation system based on ToF depth cameras. For the latter, the average horizontal and vertical position errors under complex lighting conditions were reported as 5.0 cm and 4.2 cm, respectively, in corn and sorghum fields, indicating a certain degree of resistance to harsh environments. In traditional vision, the HSV color model is combined with the OTSU thresholding method for image segmentation. In deep learning, YOLO-series models that integrate attention mechanisms, such as the ST-YOLOv8s network, have improved corn row detection accuracy by 4.03–11.92% and reduced the angle error by 0.7–3.78°. All of these alleviate the impact of weather interference on feature extraction to some extent. So far, however, few methods specifically address sensor hardware damage and severely sparse point clouds caused by rainstorms and strong wind, and most effort goes into making algorithms more resistant to interference. Outdoor crop row detection requires both stronger sensor hardware protection and more robust algorithms. Current methods work well under ordinary interference, but long-term stable and reliable operation in harsh weather remains an open problem.
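The combination of the HSV color model with OTSU thresholding mentioned above can be sketched in a few lines. The text does not state which HSV channel is thresholded, so this example assumes the saturation channel purely for illustration. It takes a flat list of 8-bit (R, G, B) tuples and uses only the Python standard library, and the function names are hypothetical.

```python
import colorsys


def otsu_threshold(values):
    """Return the 8-bit threshold t that maximizes between-class variance.

    Values greater than t are treated as foreground.
    """
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        if w_bg == 0:
            continue
        w_fg = total - w_bg
        if w_fg == 0:
            break
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def saturation_channel(rgb_pixels):
    """HSV saturation of each 8-bit RGB pixel, rescaled to 0..255."""
    return [
        round(colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)[1] * 255)
        for r, g, b in rgb_pixels
    ]


def segment_by_saturation(rgb_pixels):
    """Binary mask: True where saturation exceeds the Otsu threshold (assumed rule)."""
    sat = saturation_channel(rgb_pixels)
    t = otsu_threshold(sat)
    return [s > t for s in sat]
```

Because the threshold is recomputed from each image's histogram, it shifts with overall illumination, which is the property that makes this kind of segmentation less sensitive to lighting changes than a fixed threshold.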

4.2. Comparison of Methods for Facility Agriculture Scenarios

Facility agriculture provides a relatively stable basis for crop row detection because conditions such as light and temperature are controllable. However, heavy occlusion and vine entanglement during crop production place special requirements on sensor equipment.

4.2.1. Simple Scenarios in Facility Agriculture

When crop growth conditions are relatively simple, the main requirements for detection technology are higher detection accuracy and speed. Wang et al. [163] proposed a “Data Process Organization” three-dimensional collaborative framework, on which an agricultural big data platform integrating sensor data from multiple sources was built. Its application to greenhouse vegetable crops, linking cultivation, planting, management, harvesting, and other stages, showed that agricultural machinery efficiency increased by 30%, land utilization increased by 15%, and cost decreased by 20%.

Ulloa et al. [164] proposed a CNN-based vegetable detection and characterization algorithm. The network was trained on data collected in an experimental field and integrated with data processing and driving operations on ROS. Field experiments showed a vegetable detection accuracy of 90.5% and a vegetable characterization error of ±3%. With low-cost RGB cameras, precise fertilization at the level of individual plants can be achieved. Yan et al. [165] used the YOLOv5x model to identify seeds in tomato plug trays, with an average detection accuracy of 92.84% and an average detection time of 13.475 s per tray. When the missed sowing rate was 5–20%, the replanting success rate reached 91.7% and the sowing productivity reached 42.4 trays/h, improving sowing accuracy and production efficiency. Wang et al. [166] fused CNN and LSTM into an integrated model for predicting cucumber downy mildew by introducing disease-related information and environmental data from inside and outside the greenhouse. The model had an MAE of 0.069, an R² of 0.9127, and an average error of 6.6478%, providing technical support for early warning of cucumber downy mildew, an airborne disease.
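For readers less familiar with the regression indicators quoted above, the following minimal sketch shows how MAE and R² are conventionally computed from paired observations and predictions. The function names and the sample values in the usage note are illustrative and are not data from Wang et al. [166].

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between observations and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined when all observations are identical")
    return 1.0 - ss_res / ss_tot
```

With observations [1, 2, 3, 4] and predictions [1.1, 1.9, 3.2, 3.8], the MAE is 0.15 and R² is 0.98. MAE is expressed in the unit of the predicted quantity, whereas R² is dimensionless, so the two are usually reported together.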

4.2.2. Complex Scenarios in Facility Agriculture

Because of dense branch and leaf cover and vine entanglement, detection algorithms for such complex scenarios need stronger anti-interference ability and robustness. To this end, Wang et al. [167] proposed a visual navigation method that integrates a vegetation index with ridge segmentation. First, the vegetation index and ridge semantic segmentation are used to obtain plant segmentation maps. Then, an improved PROSAC algorithm and a distance filtering method are used to fit the navigation line. The experimental results showed that this method was more resistant to weeds and missing rows than traditional methods. Its running speed reached 10 fps, meeting the requirements of real-time navigation, and it can be extended to vegetable farms growing crops such as broccoli.
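The first stage of such a pipeline, turning a vegetation index into feature points for line fitting, can be sketched as follows. The text does not name the index used by Wang et al. [167], so this example assumes the widely used Excess Green index (ExG = 2g - r - b on normalized chromatic coordinates) and an arbitrary threshold of 0.1. The ridge semantic segmentation, improved PROSAC fitting, and distance filtering are not reproduced here, and all function names are hypothetical.

```python
def excess_green(r, g, b):
    """Excess Green index 2g - r - b on chromatic coordinates (channels sum to 1)."""
    total = r + g + b
    if total == 0:
        return 0.0
    return (2.0 * g - r - b) / total


def vegetation_mask(image, threshold=0.1):
    """Binary mask: True where ExG exceeds the (assumed) threshold.

    `image` is a list of rows, each row a list of (R, G, B) tuples.
    """
    return [[excess_green(*px) > threshold for px in row] for row in image]


def row_centroids(mask):
    """Mean column index of vegetation pixels for each image row that has any."""
    points = []
    for y, row in enumerate(mask):
        cols = [x for x, is_veg in enumerate(row) if is_veg]
        if cols:
            points.append((sum(cols) / len(cols), y))
    return points
```

The resulting (x, y) centroids are the kind of feature points that a robust estimator would then fit a navigation line through. In a multi-row image the mask would first need to be split into per-row regions, which this sketch omits.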

The dual-arm collaborative harvesting robot for ridge-grown strawberries developed by Yu et al. [168] integrates a lightweight Mask R-CNN vision system and a CAN bus control system. Greenhouse experiments showed a harvesting success rate of 49.30% after removing flowers and fruits (30.23% in the untrimmed state), with a harvesting time of 7 s per fruit for a single arm and 4 s per fruit for dual-arm collaboration. However, harvesting performance needs improvement when the fruit is severely occluded. Jin et al. [169] used an Intel RealSense D415 depth camera to identify the edges of leafy vegetable seedlings and reduced transplanting losses through an L-shaped seedling path. The accuracy in the calibration experiment was 98.4%, and the average X/Y coordinate deviations were 5 mm and 4 mm, respectively. Compared with the control group, the machine vision group had an injury rate of 8.59%, which was 11.11% lower than that of the manual group, and achieved higher efficiency.

4.2.3. Facility Agriculture Scenarios Challenges and Responses