A Semi-Automatic and Visual Leaf Area Measurement System Integrating Hough Transform and Gaussian Level-Set Method

Abstract

1. Introduction

- 1. Geometric Fidelity: We address perspective distortion algorithmically, not through fixed imaging rigs, by using Hough transform-based detection to correct scaling and angular inconsistencies. This correction establishes an accurate spatial mapping, so that measurements rest on a uniform metric scale and are independent of the camera’s position and angle.

- 2. Precision Segmentation with Minimal Interaction: We introduce a novel segmentation workflow in which minimal user input guides high-precision automation. Through simple interactive sampling, the user supplies prior knowledge by indicating approximate regions of the leaf and the reference object. From these regions the system automatically generates initial contours, which serve as the starting point for the variational level set evolution. The subsequent segmentation is guided by a multivariate Gaussian color model that uses all three RGB channels and their correlations, enabling robust performance across natural color variations.

2. Materials and Methods

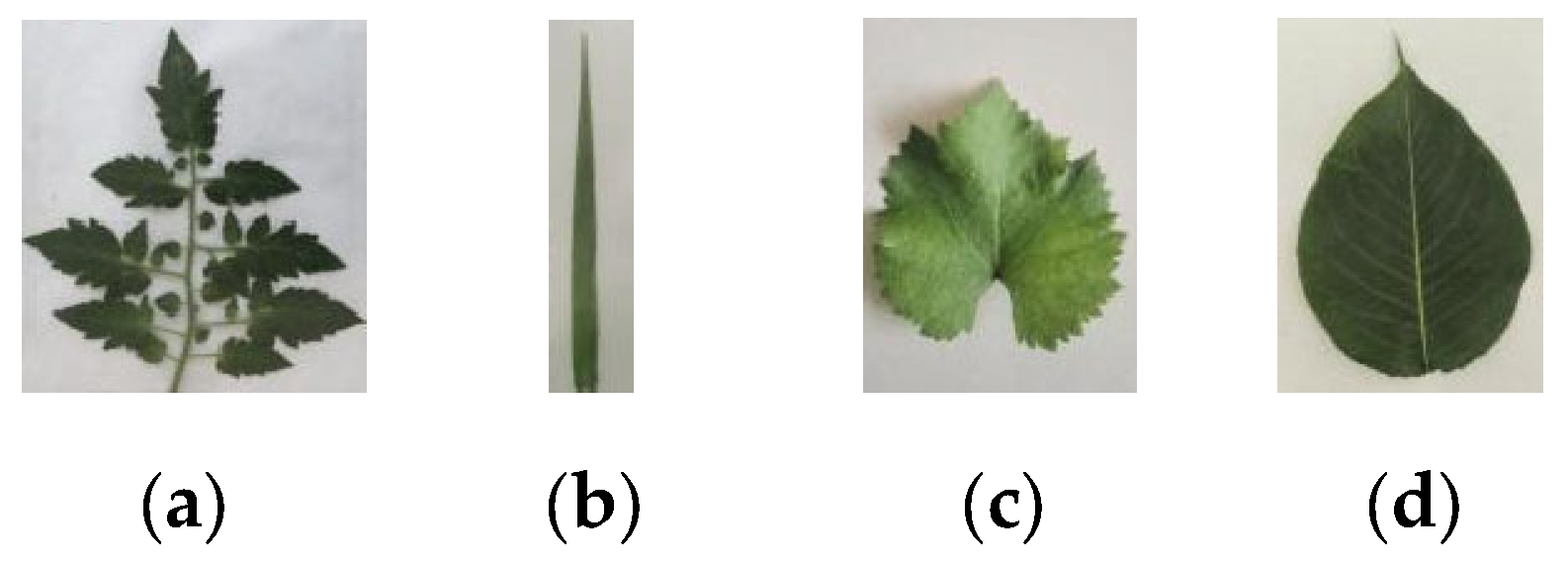

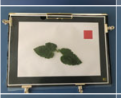

2.1. Experimental Materials

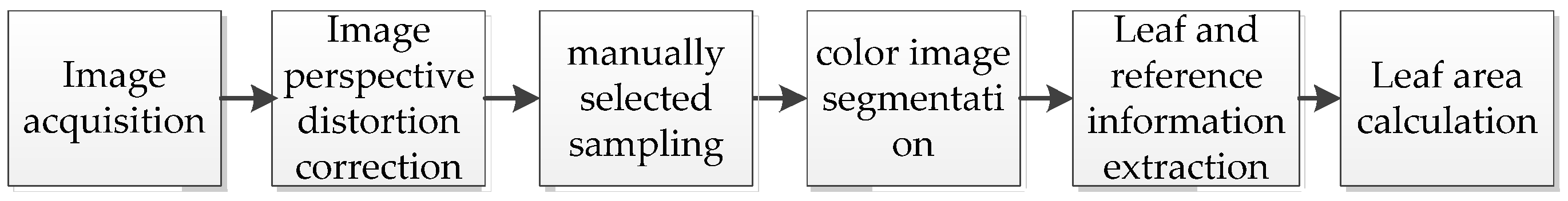

2.2. Method for Calculating Leaf Area

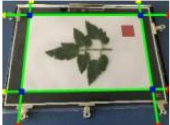

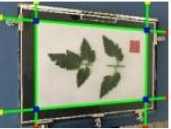

2.2.1. Image Acquisition

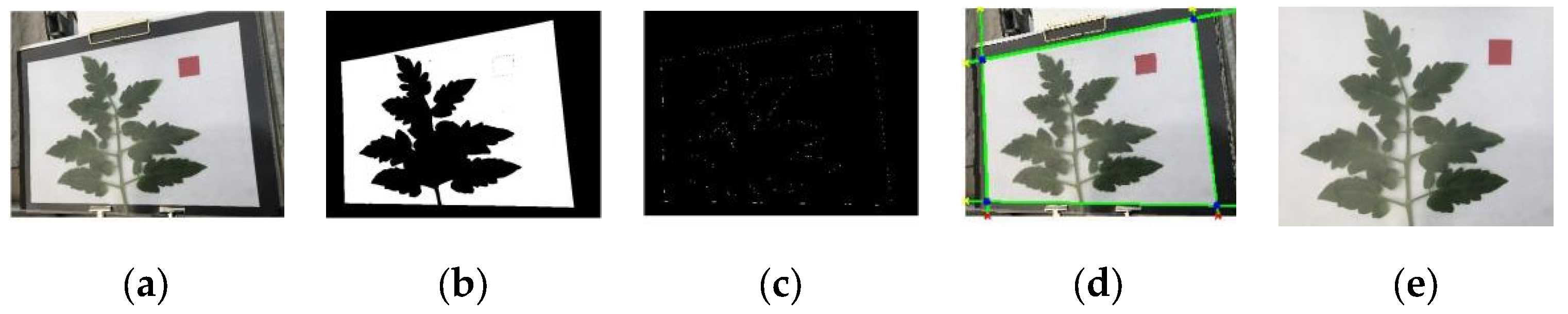

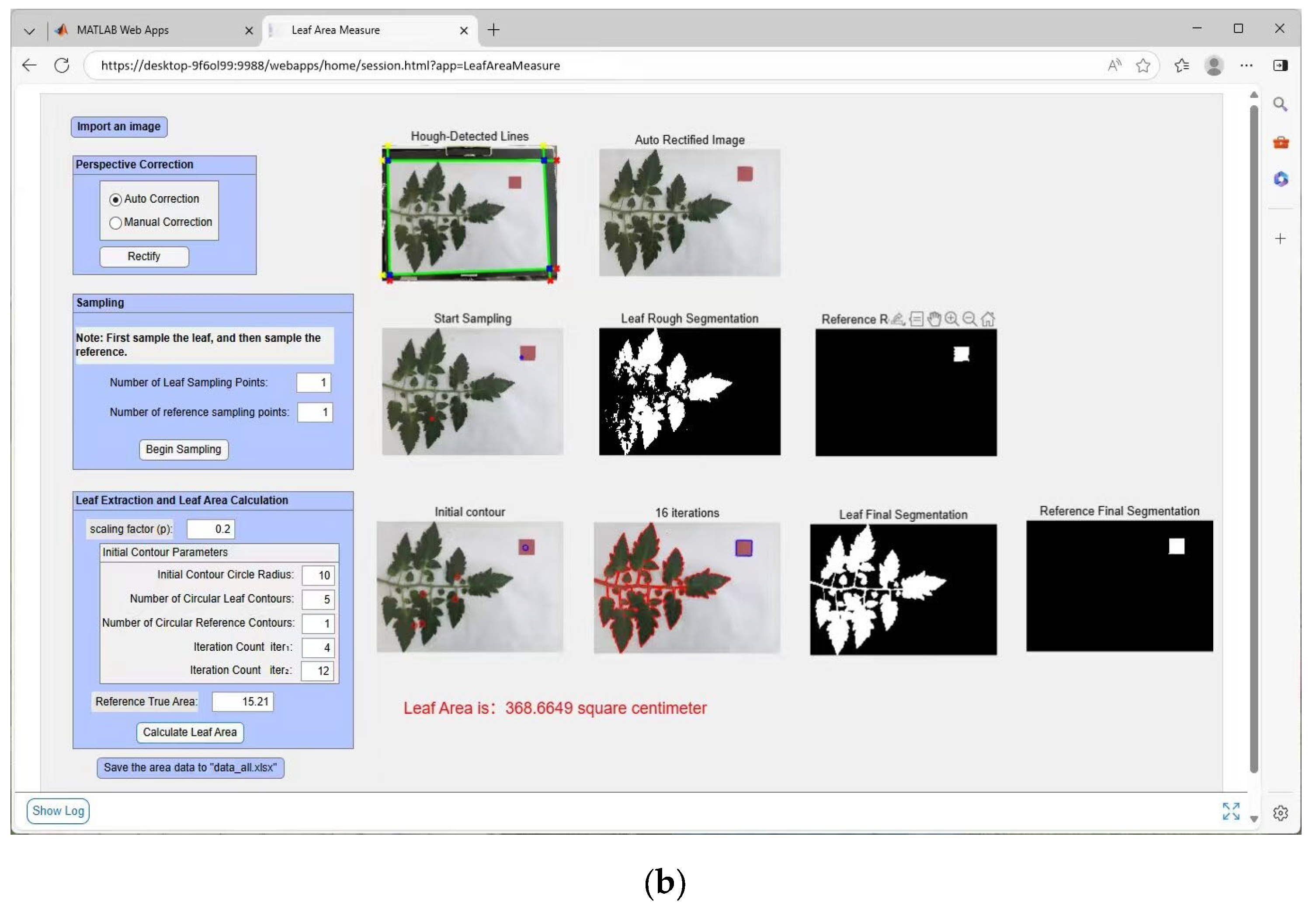

2.2.2. Image Rectification

1. Perspective Transformation

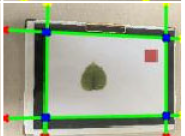

2. Hough Transform-Based Perspective Rectification

- (1)

- (2)

- (3) Geometric Registration:

- a. Source vertices: Intersection coordinates of the detected lines, computed from the line equations.

- b. Target rectangle: Constructed from the maximum horizontal and vertical spans.

- (4) Spatial Transformation:

- a. Perspective matrix derivation: Substitute the source and target coordinates into Equation (2).

- b. Bicubic interpolation: Mitigates pixel loss during warping.

- c. Background pruning: Extraneous regions beyond the detection area are removed (Figure 5e).

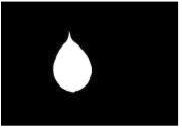
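The registration and transformation steps above can be illustrated with a minimal sketch. It assumes the Hough lines are given in the usual (rho, theta) normal form and that the perspective matrix is the standard eight-parameter homography with its last entry fixed to 1; Equation (2) itself is not reproduced here, so the exact parameterization used by the authors may differ. The function names (`intersect`, `target_rectangle`, `homography`, `warp_point`), the vertex ordering and the sample coordinates are hypothetical, and bicubic resampling of the image is left out.

```python
import math

def intersect(line_a, line_b):
    """Intersection of two Hough lines (rho, theta),
    each satisfying x*cos(theta) + y*sin(theta) = rho."""
    (r1, t1), (r2, t2) = line_a, line_b
    a1, b1 = math.cos(t1), math.sin(t1)
    a2, b2 = math.cos(t2), math.sin(t2)
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("lines are parallel")
    # Cramer's rule on the 2x2 system
    return ((r1 * b2 - r2 * b1) / det, (a1 * r2 - a2 * r1) / det)

def target_rectangle(src):
    """Axis-aligned rectangle spanning the maximum horizontal and
    vertical extent of the source vertices (assumed construction)."""
    xs = [p[0] for p in src]
    ys = [p[1] for p in src]
    w, h = max(xs) - min(xs), max(ys) - min(ys)
    return [(0.0, 0.0), (w, 0.0), (w, h), (0.0, h)]

def solve(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def homography(src, dst):
    """3x3 perspective matrix mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]

def warp_point(h, x, y):
    """Apply the perspective matrix to one point."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)

# Hypothetical source vertices, ordered top-left, top-right,
# bottom-right, bottom-left (this ordering is an assumption).
src = [(10.0, 20.0), (210.0, 40.0), (230.0, 180.0), (0.0, 160.0)]
dst = target_rectangle(src)
H = homography(src, dst)
corner = intersect((5.0, 0.0), (3.0, math.pi / 2))  # lines x = 5 and y = 3
```

In a full pipeline the four source vertices would come from `intersect` applied to adjacent pairs of detected border lines, and the matrix `H` would then drive an image warp with bicubic interpolation.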

2.2.3. Color Image Segmentation

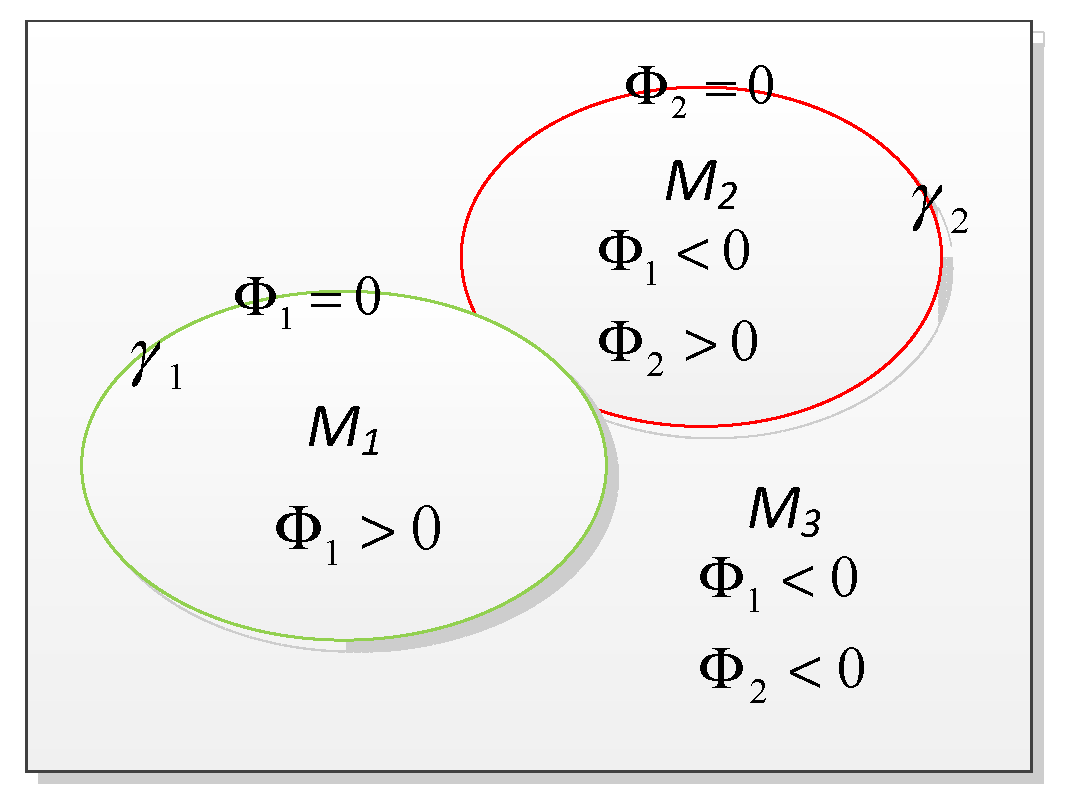

1. Probabilistic Segmentation Model

2. Level Set Curve Evolution Implementation

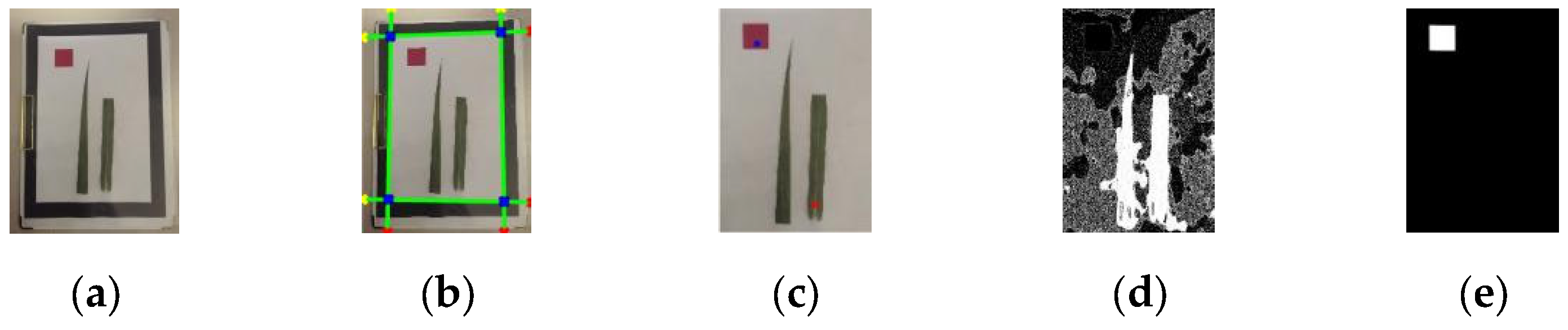
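As a rough illustration of the multivariate Gaussian color model mentioned in the Introduction, the sketch below fits a mean vector and a full 3 × 3 covariance matrix to sampled RGB values and scores a pixel by its log-likelihood. It is a generic Gaussian fit under stated assumptions, not the authors' energy functional: the function names and sample colors are hypothetical, and the covariance is assumed to be non-singular (at least four samples that do not lie in a common plane of RGB space).

```python
import math

def fit_gaussian(samples):
    """Mean vector and unbiased 3x3 covariance of RGB samples."""
    n = len(samples)
    mean = [sum(s[k] for s in samples) / n for k in range(3)]
    cov = [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / (n - 1)
            for j in range(3)] for i in range(3)]
    return mean, cov

def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate (cyclic cofactors)."""
    d = det3(m)
    return [[(m[(j + 1) % 3][(i + 1) % 3] * m[(j + 2) % 3][(i + 2) % 3]
              - m[(j + 1) % 3][(i + 2) % 3] * m[(j + 2) % 3][(i + 1) % 3]) / d
             for j in range(3)] for i in range(3)]

def log_likelihood(pixel, mean, cov):
    """Log-density of one RGB pixel under the fitted Gaussian."""
    diff = [pixel[k] - mean[k] for k in range(3)]
    inv = inv3(cov)
    maha = sum(diff[i] * inv[i][j] * diff[j]
               for i in range(3) for j in range(3))
    return -0.5 * (maha + math.log(det3(cov)) + 3 * math.log(2 * math.pi))

# Hypothetical leaf samples (R, G, B); not taken from the paper's data.
leaf_samples = [(40, 160, 60), (60, 180, 50), (50, 150, 70),
                (45, 170, 40), (70, 165, 65)]
leaf_mean, leaf_cov = fit_gaussian(leaf_samples)
ll_leaf = log_likelihood(leaf_mean, leaf_mean, leaf_cov)
ll_white = log_likelihood((255, 255, 255), leaf_mean, leaf_cov)
```

Because the off-diagonal covariance terms are retained, correlated shifts across channels (for example, a leaf that is uniformly darker) are penalized less than uncorrelated color changes, which is the practical benefit of modeling the channels jointly rather than independently.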

2.2.4. Algorithm Description and System Development

1. Algorithm Description

- (1) Image Rectification: Acquired images are rectified using the method detailed in Section 2.2.2. Rectification can be performed either manually or automatically.

- (2)

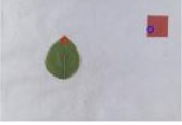

- Adaptive Thresholding and Rough Segmentation: On the rectified image, manually select n1 sample points on the leaf and n2 points on the reference object to obtain two sets of adaptive thresholds. The RGB color values acquired from these samples are converted to the HSV color space. The minimum and maximum hue (H) values from all sampled points are then calculated to define the hue range of the leaf. This hue range is used as a threshold to segment the approximate regions of the leaf and the reference object, thereby providing a foundation for the automatic initialization of the level set initial contour.

- (3) Initial Contour Definition: Within the rough regions, multiple small circles are placed automatically at random positions as initial contours using Equation (13) (where d is the function value). The parameters are the scaling factor p, the circle radius r, the number of initial leaf contours c1, and the number of initial reference object contours c2.

- (4)

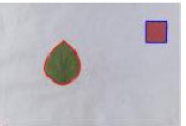

- Curve Evolution: Perform curve evolution for iter1 iterations according to Equation (15), followed by iter2 iterations according to Equation (11) (total iterations iter = iter1 + iter2). This process converges with the final contours of the leaf and reference object.

- (5) Area Calculation: The final contours are extracted to count the pixels within the leaf region (N_leaf) and the reference object region (N_ref). Given the known true area of the reference object (A_ref), the true leaf area (A_leaf) is calculated as [30]:

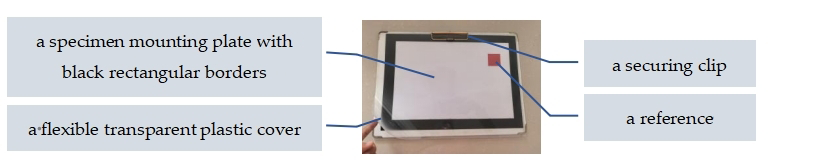
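The area calculation in step (5) amounts to scaling the known reference area by the ratio of pixel counts, A_leaf = A_ref × N_leaf / N_ref. A minimal sketch follows; the function name and the pixel counts in the example are hypothetical and chosen only for illustration.

```python
def leaf_area(n_leaf, n_ref, a_ref):
    """Leaf area from pixel counts and the known reference area.

    n_leaf: pixels inside the final leaf contour
    n_ref:  pixels inside the final reference-object contour
    a_ref:  true area of the reference object (e.g. in cm2)
    """
    if n_ref <= 0:
        raise ValueError("reference region must contain pixels")
    return a_ref * n_leaf / n_ref

# Hypothetical counts: a 25 cm2 reference covering 10,000 pixels
# and a leaf covering 17,212 pixels.
example_area = leaf_area(17212, 10000, 25.0)
```

The result is only meaningful on the rectified image, where both regions share one uniform pixel scale.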

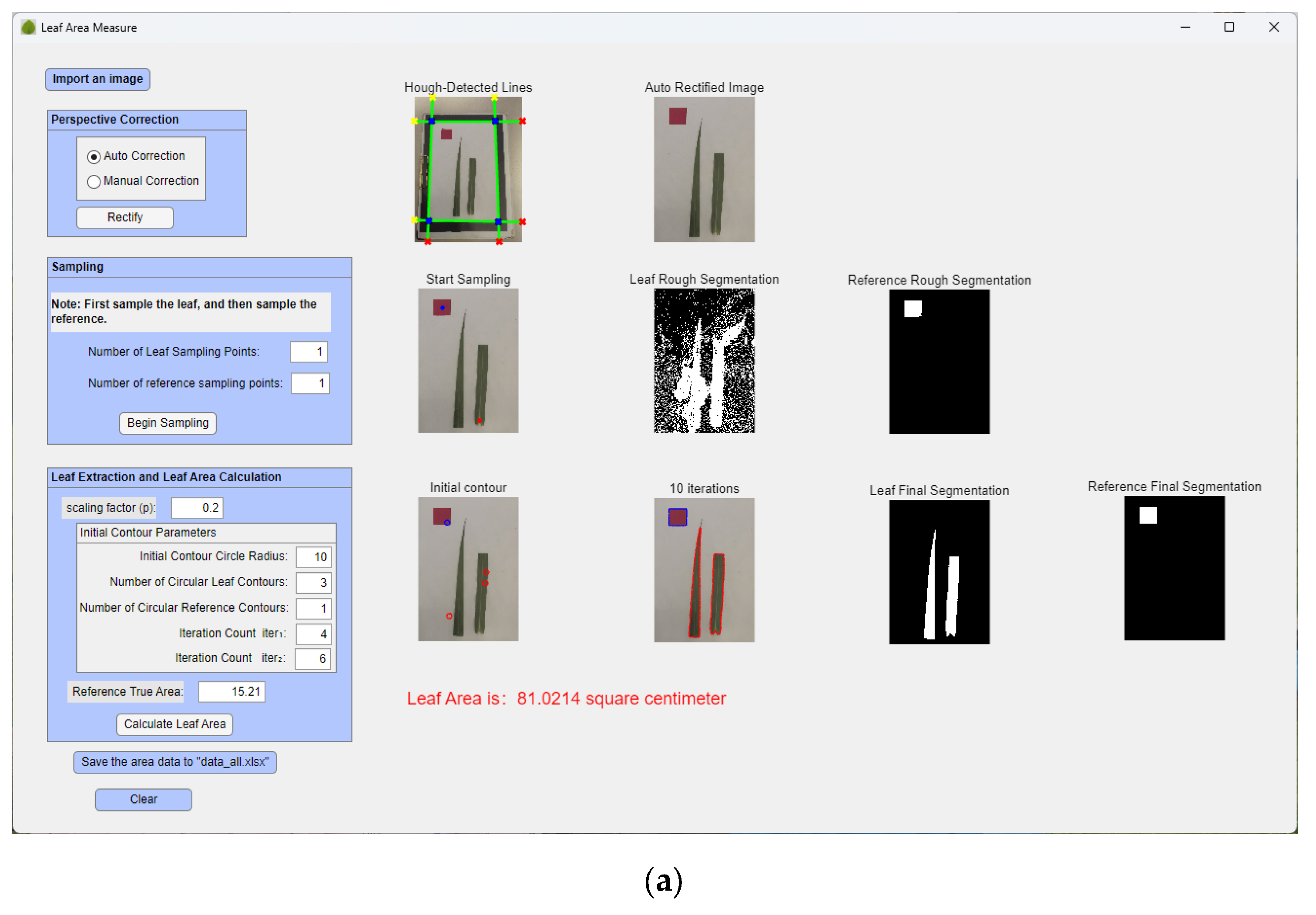
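Step (2) of the algorithm description, the hue-range thresholding, can be sketched as follows. This is a simplified illustration using Python's standard `colorsys` module, where hue lies in [0, 1); the function names, sample colors and the tiny test image are hypothetical, and hues that wrap around 0 (reds) are not handled.

```python
import colorsys

def hue_of(rgb):
    """Hue in [0, 1) of an 8-bit (R, G, B) triple."""
    r, g, b = rgb
    return colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[0]

def hue_range(samples):
    """Minimum and maximum hue over the manually sampled points."""
    hues = [hue_of(s) for s in samples]
    return min(hues), max(hues)

def rough_mask(image, h_lo, h_hi):
    """Boolean mask of pixels whose hue falls inside [h_lo, h_hi]."""
    return [[h_lo <= hue_of(px) <= h_hi for px in row] for row in image]

# Hypothetical sampled leaf colors (the n1 points on the leaf).
leaf_samples = [(40, 160, 60), (60, 180, 50)]
h_lo, h_hi = hue_range(leaf_samples)

# A 2 x 2 toy image: green, white, blue, green.
image = [[(50, 170, 55), (255, 255, 255)],
         [(30, 30, 200), (45, 165, 58)]]
mask = rough_mask(image, h_lo, h_hi)
```

The same two functions would be applied a second time with the n2 reference-object samples to obtain the rough reference region. The resulting masks are only approximate, which is why they are used to place initial contours and not as the final segmentation.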

2. System Development

- (1) Computational Environment

- (2) Imaging Setup

- (3) App Development

3. Results

3.1. Image Rectification Functionality Validation

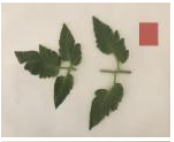

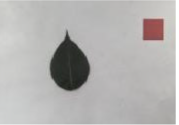

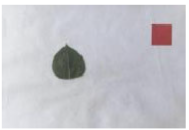

3.2. Leaf Extraction Workflows for Diverse Crop Species

- (1) Size-dependent scaling: Increase the initial circle radius, circle count, and iteration count for larger leaves; decrease them for smaller specimens.

- (2) Over-segmentation compensation: Increase the circle count when the rough segmentation exceeds the target boundaries, to ensure adequate on-leaf initialization.

- (3) Algorithmic resilience: Randomized circle placement achieves accurate contours even with suboptimal initialization (e.g., broomcorn millet, Row 5: 2/5 circles overlapping the leaf).

3.3. Comprehensive Leaf Area Measurements Across Crops and Parameters

3.4. Comparison with OpenPheno and ImageJ

4. Discussion

4.1. Impact of Manual Sampling Points

4.2. Impact of Initial Contour Configuration

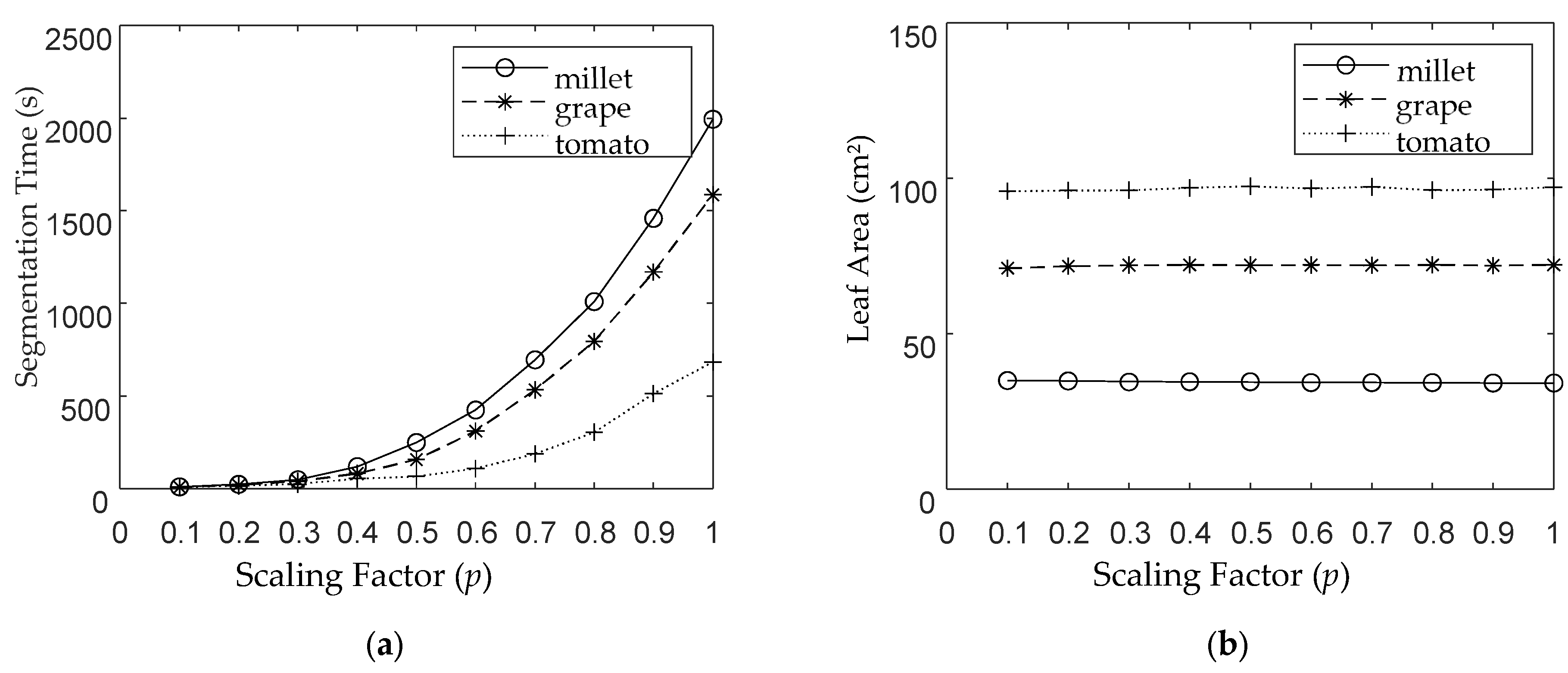

4.3. Impact of Scaling Factor Variation

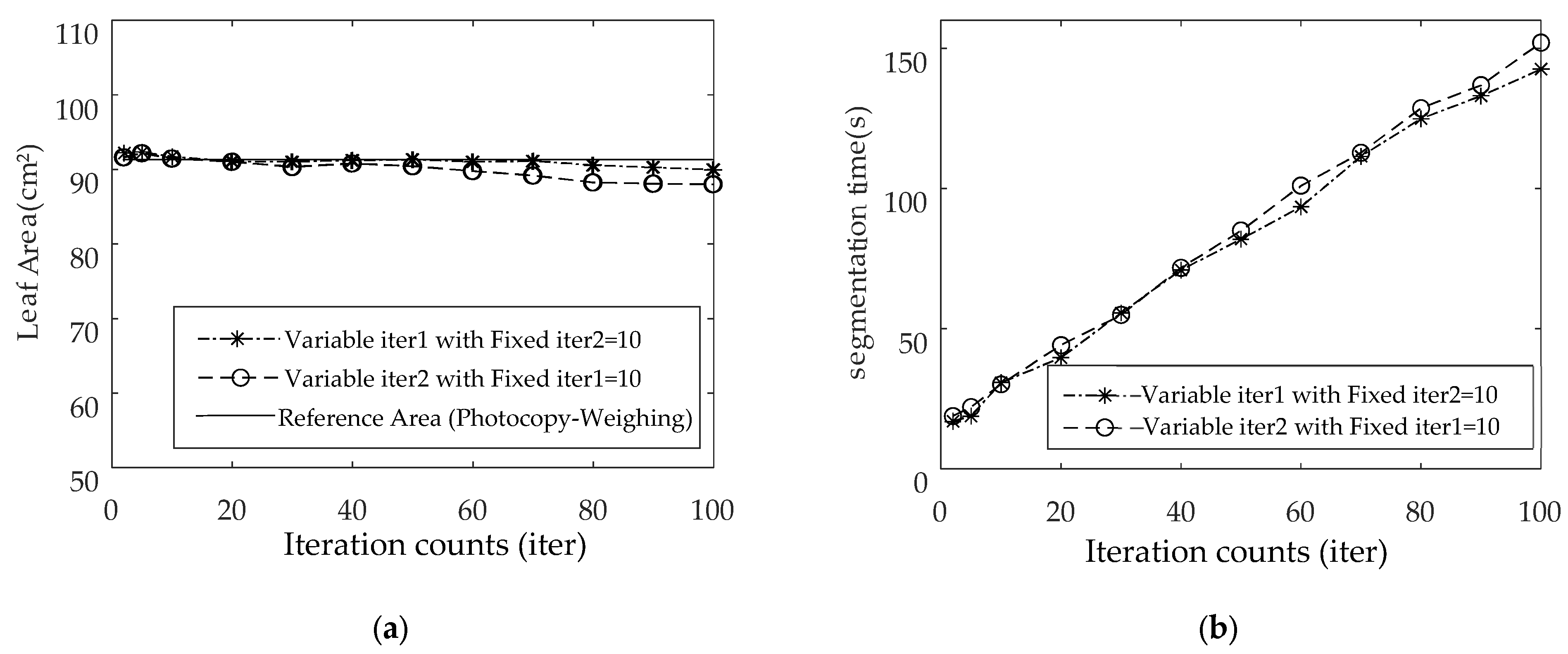

4.4. Impact of Iteration Counts

4.5. Impact of Shooting Angle and Height

4.6. Impact of Leaf Color Variation

4.7. Performance Evaluation of Different Approaches

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Term |
|---|---|
| SD | Standard deviation |
| CV | Coefficient of variation |
| RMSE | Root mean square error |
| AE | Absolute error |
| RE | Relative error |
| R2 | Coefficient of determination |

Appendix A

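| Metric (Abbreviation) | Formula | Variable Definitions |
|---|---|---|
| AE | $AE = \lvert A_m - A_t \rvert$ | $A_m$: measured value; $A_t$: true value |
| RE | $RE = \dfrac{AE}{A_t} \times 100\%$ | |
| SD | $SD = \sqrt{\dfrac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}$ | $n$: total number of samples; $x_i$: the i-th sample value; $\bar{x}$: sample mean |
| CV | $CV = \dfrac{SD}{\bar{x}} \times 100\%$ | |
| RMSE | $RMSE = \sqrt{\dfrac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$ | $y_i$: the i-th true value; $\hat{y}_i$: the i-th measured or predicted value; $n$: total number of samples |
| R2 | | |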

References

- Jiang, N. A Non-Destructive Method for Total Green Leaf Area Estimation of Individual Rice Plants. Ph.D. Dissertation, Huazhong University of Science and Technology, Wuhan, China, 2014.

- Yin, C.; Shi, Z.; Tian, C.; Chen, C.; Li, D.; Dong, W.; Zhang, Y. Effects of Red and Blue Light on Photosynthetic Carbon Assimilation and Growth Development in Plants: A Review. Chin. J. Appl. Ecol. 2025, 36, 2246–2256.

- Shi, Y.; Xia, C. Research on Leaf Area Measurement of Variable Reference Based on Checkerboards. J. Chin. Agric. Mech. 2021, 42, 191–196.

- Zhang, F.; Li, X.; Zhao, Q.; Feng, Y.; Wu, Y. Regression Estimation on Leaf Area of Hevea Brasiliensis Clones. Trop. Agric. Sci. Technol. 2024, 47, 63–68.

- Zhang, Z.; Jia, S.; Zhao, N.; Liu, S.; Fan, F.; Nie, L. A Measurement Approach of Leaf Area Based on Digital Image Processing and Regression Equation of Leaves Area on Watermelon. China Cucurbits Veg. 2021, 34, 51–54.

- Li, Q.; Yang, M.; Yuan, P.; Xue, Y. Image Measurement Method of Leaf Area Based on Saturation Segmentation. J. For. Eng. 2021, 6, 147–152.

- Xu, Z.; Sun, L.; Li, B.; Lin, J.; Lan, W.; Zhang, S. Design of Leaf Area Measurement System Based on Android Smartphone. Exp. Technol. Manag. 2023, 40, 89–94.

- Tan, X.; Tan, L.; Yang, S. Leaf Area Measurement Method Based on Black-White Scanning Image. J. Guangxi Agric. 2022, 37, 46–52.

- Li, F.; Li, J.; Huang, H.; Guan, M.; Guan, C. Research on Non-Destructive Measurement Methods and Strategies of Individual Rape Leaf Area in Field. Acta Laser Biol. Sin. 2021, 30, 505–517.

- Wang, P.; Deng, H.; Guo, J.; Ji, S.; Meng, D.; Bao, J.; Zuo, P. Leaf Segmentation Using Modified YOLOv8-Seg Models. Life 2024, 14, 780.

- Huang, F.; Li, Y.; Liu, Z.; Gong, L.; Liu, C. A Method for Calculating the Leaf Area of Pak Choi Based on an Improved Mask R-CNN. Agriculture 2024, 14, 101.

- Khan, A.T.; Jensen, S.M. LEAF-Net: A Unified Framework for Leaf Extraction and Analysis in Multi-Crop Phenotyping Using YOLOv11. Agriculture 2025, 15, 196.

- Guo, X.; Cheng, J.; Wang, L. Research and Realisation the Statistics Algorithm of Wheat Leaf Area. J. Henan Inst. Sci. Technol. Sci. Ed. 2019, 47, 56–59.

- Yang, Z.; Yang, P.; Zhang, M.; Xiao, Y.; Liu, H. Study on Correction Method of Local Geometric Distortion in Projection Phantom Imaging. Acta Photonica Sin. 2022, 51, 0311004.

- Zhou, D.; Yang, Y.; Zhu, J.; Wang, K. Tilt Correction Method of Pointer Meter Based on Deep Learning. J. Comput.-Aided Des. Comput. Graph. 2020, 32, 1976–1984.

- Matarneh, S.; Elghaish, F.; Al-Ghraibah, A.; Abdellatef, E.; Edwards, D.J. An Automatic Image Processing Based on Hough Transform Algorithm for Pavement Crack Detection and Classification. Smart Sustain. Built Environ. 2025, 14, 1–22.

- Sasmita, W.A.; Mubarok, H.; Widiyasono, N. Agricultural Path Detection Systems Using Canny-Edge Detection and Hough Transform. IAES Int. J. Robot. Autom. 2024, 13, 247.

- Wang, P.; Liu, Z.; Ma, C.; Peng, A.; Ren, F.; Cai, M. Investigation of Fast Identification of Joint Traces Information of Rock Mass Based on Hough Detection Method and Its Application. Rock Soil Mech. 2022, 43, 2887–2897.

- Cremers, D.; Rousson, M.; Deriche, R. A Review of Statistical Approaches to Level Set Segmentation: Integrating Color, Texture, Motion and Shape. Int. J. Comput. Vis. 2007, 72, 195–215.

- Qu, H.-B.; Xiang, L.; Wang, J.-Q.; Li, B.; Tao, H.-J. Variational Bayesian Level Set for Image Segmentation. In Proceedings of the International Conference on Machine Vision, London, UK, 24 December 2013; p. 90670D.

- Chan, T.F.; Vese, L.A. Active Contours without Edges. IEEE Trans. Image Process. 2001, 10, 266–277.

- Ben Ayed, I.; Hennane, N.; Mitiche, A. Unsupervised Variational Image Segmentation/Classification Using a Weibull Observation Model. IEEE Trans. Image Process. 2006, 15, 3431–3439.

- Reska, D.; Kretowski, M. GPU-Accelerated Image Segmentation Based on Level Sets and Multiple Texture Features. Multimed. Tools Appl. 2021, 80, 5087–5109.

- Han, T.; Cao, H.; Yang, Y. AS2LS: Adaptive Anatomical Structure-Based Two-Layer Level Set Framework for Medical Image Segmentation. IEEE Trans. Image Process. 2024, 33, 6393–6408.

- Joshi, A.; Saquib Khan, M.; Choi, K.N. Medical Image Segmentation Using Combined Level Set and Saliency Analysis. IEEE Access 2024, 12, 102016–102026.

- Li, W.; Liu, W.; Zhu, J.; Cui, M.; Hua, X.-S.; Zhang, L. Box2Mask: Box-Supervised Instance Segmentation with Level Set Evolution. In Proceedings of the Computer Vision – ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 1–18.

- Aghazadeh, N.; Moradi, P.; Castellano, G.; Noras, P. An Automatic MRI Brain Image Segmentation Technique Using Edge–Region-Based Level Set. J. Supercomput. 2023, 79, 7337–7359.

- Olfa, B.; Ziad, B.; Nozha, B. Adaptive Satellite Images Segmentation by Level Set Multiregion Competition. Inst. Natl. Rech. Inform. Autom. 1992, 37, 55–57.

- Li, C.; Xu, C.; Gui, C.; Fox, M.D. Distance Regularized Level Set Evolution and Its Application to Image Segmentation. IEEE Trans. Image Process. 2010, 19, 3243–3254.

- He, A.; Li, B.; Xu, Z.; Yang, Y.; Li, Z. Detection of Bamboo Leaf Area Based on Self-Made Colored Base Plate and Spectral Features. Res. Explor. Lab. 2024, 43, 39–44.

- Hu, T.; Shen, P.; Zhang, Y.; Zhang, J.; Li, X.; Xia, C.; Liu, P.; Lu, H.; Wu, T.; Han, Z. OpenPheno: An Open-Access, User-Friendly, and Smartphone-Based Software Platform for Instant Plant Phenotyping. Plant Methods 2025, 21, 76.

| Abbreviation | Full Term | Abbreviation | Full Term |

|---|---|---|---|

| Orig. | Original | Fin. | Final |

| Rect. | Rectified | Meas. | Measured |

| Ref. | Reference | Prop. | Proposed Method |

| Seg. | Segmentation | OP. | OpenPheno |

| Auto-Init. | Auto-Initialized | IJ. | ImageJ |

| Cont. | Contours | Sh. | Shooting |

| Evol. | Evolution | H1–H4 | Height 1–Height 4 |

| Orig. Image | Hough Lines | Rect. Image | Ref. Ratio | Ratio (Pre) | Ratio (Post) | RE (Pre) (%) | RE (Post) (%) |
|---|---|---|---|---|---|---|---|
|  |  |  | 1 | 1.3197 | 1.0008 | 31.97 | 0.08 |
|  |  |  | 1 | 1.0931 | 0.9923 | 9.31 | 0.77 |
|  |  |  | 1 | 0.7118 | 0.9949 | 28.82 | 0.51 |
|  |  |  | 1 | 0.9107 | 1.0016 | 8.93 | 0.16 |
|  |  |  | 1 | 0.8593 | 1.0027 | 14.07 | 0.27 |
|  |  |  | 1 | 1.2966 | 0.9976 | 29.66 | 0.24 |
|  |  |  | 1 | 0.6668 | 1.0091 | 33.32 | 0.91 |
|  |  |  | 1 | 0.9309 | 0.9915 | 6.91 | 0.85 |

[Image table: six rows of image panels. Columns: Orig. Image, Hough Lines, Manual Seeds, Rough Seg. (Leaf), Rough Seg. (Ref.), Auto-Init. Cont., Evol. Stage, Fin. Cont., Leaf Fin. Seg., Ref. Fin. Seg.]

| Leaf Type | GT Mean Area (cm2) | Meas. Mean Area (cm2) | SD (cm2) | CV (%) | RMSE (cm2) | AE (cm2) | RE (%) | Min Meas. Time (s) |

|---|---|---|---|---|---|---|---|---|

| Pear_1 | 54.32 | 53.91 | 0.98 | 1.82 | 1.82 | 0.41 | 0.75 | 5.23 |

| Pear_2 | 79.34 | 79.00 | 0.98 | 1.06 | 1.24 | 0.34 | 0.43 | 5.28 |

| Pear_3 | 37.18 | 36.84 | 0.90 | 2.44 | 0.96 | 0.34 | 0.91 | 3.40 |

| Pear_4 | 26.65 | 26.91 | 0.55 | 2.04 | 0.95 | 0.26 | 0.98 | 3.36 |

| Pear_5 | 43.03 | 43.24 | 0.94 | 2.18 | 0.96 | 0.21 | 0.49 | 5.93 |

| Tomato_1 | 37.71 | 37.34 | 0.69 | 1.84 | 0.783 | 0.37 | 0.98 | 5.24 |

| Tomato_2 | 77.31 | 77.83 | 0.98 | 1.26 | 1.108 | 0.52 | 0.67 | 4.54 |

| Tomato_3 | 91.09 | 90.19 | 0.97 | 1.08 | 1.322 | 0.9 | 0.99 | 5.39 |

| Tomato_4 | 96.42 | 96.40 | 0.99 | 1.03 | 0.991 | 0.02 | 0.02 | 7.47 |

| Tomato_5 | 112.18 | 111.68 | 0.97 | 0.87 | 1.094 | 0.5 | 0.45 | 8.12 |

| Tomato_6 | 136.70 | 137.07 | 1.94 | 1.42 | 1.97 | 0.37 | 0.27 | 9.05 |

| B_millet_1 | 19.36 | 19.47 | 0.54 | 2.77 | 0.549 | 0.11 | 0.57 | 3.86 |

| B_millet_2 | 31.43 | 31.41 | 0.90 | 2.86 | 0.896 | 0.02 | 0.06 | 6.80 |

| B_millet_3 | 33.10 | 33.29 | 0.72 | 2.16 | 0.74 | 0.19 | 0.57 | 4.62 |

| B_millet_4 | 34.56 | 34.85 | 0.71 | 2.05 | 0.768 | 0.29 | 0.84 | 6.98 |

| B_millet_5 | 70.59 | 70.78 | 0.96 | 1.36 | 0.976 | 0.19 | 0.27 | 5.74 |

| Grape_1 | 60.77 | 60.28 | 0.94 | 1.56 | 1.06 | 0.49 | 0.81 | 5.11 |

| Grape_2 | 71.58 | 71.17 | 1.00 | 1.40 | 1.074 | 0.41 | 0.57 | 5.65 |

| Grape_3 | 88.12 | 88.16 | 0.97 | 1.3 | 1.103 | 0.04 | 0.05 | 4.54 |

| Grape_4 | 91.31 | 90.91 | 0.98 | 1.44 | 1.077 | 0.4 | 0.44 | 5.23 |

| Grape_5 | 112.18 | 111.22 | 0.94 | 0.85 | 1.339 | 0.96 | 0.86 | 5.21 |

| Leaf Type | Repetitions |
|---|---|
| Pear_1 | 110 |
| Pear_2 | 113 |
| Pear_3 | 195 |
| Pear_4 | 177 |
| Pear_5 | 107 |
| Tomato_1 | 116 |
| Tomato_2 | 156 |
| Tomato_3 | 106 |
| Tomato_4 | 222 |
| Tomato_5 | 200 |
| Tomato_6 | 313 |
| B_millet_1 | 143 |
| B_millet_2 | 151 |
| B_millet_3 | 164 |
| B_millet_4 | 174 |
| B_millet_5 | 221 |
| Grape_1 | 282 |
| Grape_2 | 294 |
| Grape_3 | 229 |
| Grape_4 | 236 |
| Grape_5 | 371 |

[Image columns Sh. 1 to Sh. 12: photographs of each leaf per shooting.]

| Shooting Name | Meas. Leaf Area (cm2) | Meas. Time (s) | Scaling Factor (p) | iter1 | iter2 | c1 | c2 | r |
|---|---|---|---|---|---|---|---|---|
| Sh. 1 | 44.3877 | 6.0915 | 0.2 | 1 | 3 | 3 | 1 | 10 |
| Sh. 1 | 44.4377 | 8.6088 | 0.2 | 1 | 5 | 1 | 1 | 10 |
| Sh. 1 | 44.7547 | 8.3736 | 0.2 | 1 | 5 | 3 | 1 | 10 |
| Sh. 1 | 44.6804 | 6.6870 | 0.1 | 2 | 4 | 2 | 1 | 5 |
| Sh. 1 | 44.8210 | 9.3218 | 0.2 | 2 | 4 | 3 | 1 | 10 |
| Sh. 2 | 42.0385 | 9.1475 | 0.2 | 1 | 5 | 1 | 1 | 10 |
| Sh. 2 | 42.1926 | 8.9257 | 0.2 | 1 | 5 | 3 | 1 | 10 |
| Sh. 2 | 42.8226 | 11.5620 | 0.2 | 1 | 7 | 1 | 1 | 10 |
| Sh. 2 | 42.4920 | 9.5679 | 0.2 | 2 | 4 | 3 | 1 | 10 |
| Sh. 2 | 42.2359 | 15.0976 | 0.2 | 2 | 6 | 3 | 1 | 10 |
| Sh. 3 | 42.1047 | 12.1156 | 0.2 | 1 | 5 | 1 | 1 | 10 |
| Sh. 3 | 42.5355 | 12.8461 | 0.2 | 2 | 4 | 1 | 1 | 10 |
| Sh. 3 | 43.8534 | 28.6916 | 0.3 | 2 | 4 | 2 | 1 | 15 |
| Sh. 3 | 43.4826 | 7.3114 | 0.1 | 2 | 4 | 2 | 2 | 5 |
| Sh. 3 | 43.5182 | 8.4654 | 0.1 | 2 | 6 | 2 | 1 | 5 |
| Sh. 4 | 43.9285 | 8.4622 | 0.2 | 2 | 4 | 2 | 1 | 10 |
| Sh. 4 | 43.6243 | 8.5215 | 0.2 | 2 | 4 | 2 | 1 | 10 |
| Sh. 4 | 43.6756 | 8.6725 | 0.2 | 2 | 4 | 2 | 1 | 10 |
| Sh. 4 | 43.9184 | 8.8893 | 0.2 | 2 | 4 | 2 | 1 | 10 |
| Sh. 4 | 42.5147 | 9.1388 | 0.2 | 2 | 4 | 2 | 1 | 10 |
| Sh. 5 | 43.7698 | 5.9310 | 0.1 | 2 | 4 | 2 | 1 | 5 |
| Sh. 5 | 43.8995 | 6.0577 | 0.1 | 2 | 4 | 2 | 1 | 5 |
| Sh. 5 | 43.9360 | 6.4218 | 0.1 | 2 | 4 | 2 | 1 | 5 |
| Sh. 5 | 44.5514 | 6.4735 | 0.1 | 2 | 4 | 2 | 1 | 5 |
| Sh. 5 | 43.7880 | 6.6547 | 0.1 | 2 | 4 | 2 | 1 | 5 |

| Metric | Value | Metric | Value |
|---|---|---|---|
| Mean Area (cm2) | 43.5185 | RMSE (cm2) | 1.0053 |
| SD (cm2) | 0.8963 | CV (%) | 2.06 |
| GT Area (cm2) | 43.0292 | Min Measured Time (s) | 5.9310 |
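The summary statistics above can be recomputed from the 25 listed area measurements. The sketch below assumes the sample standard deviation (n − 1 denominator), CV as SD divided by the mean, and RMSE taken against the GT area with a 1/n denominator; under these assumptions the results should land close to the tabulated mean, SD, CV and RMSE. Variable names are illustrative.

```python
import math

# Measured leaf areas (cm2) for shootings Sh. 1 to Sh. 5, as listed above.
areas = [
    44.3877, 44.4377, 44.7547, 44.6804, 44.8210,
    42.0385, 42.1926, 42.8226, 42.4920, 42.2359,
    42.1047, 42.5355, 43.8534, 43.4826, 43.5182,
    43.9285, 43.6243, 43.6756, 43.9184, 42.5147,
    43.7698, 43.8995, 43.9360, 44.5514, 43.7880,
]
gt_area = 43.0292  # ground-truth area (cm2) from the summary rows

n = len(areas)
mean = sum(areas) / n
# Sample standard deviation (n - 1 in the denominator)
sd = math.sqrt(sum((a - mean) ** 2 for a in areas) / (n - 1))
# Coefficient of variation in percent
cv = sd / mean * 100
# Root mean square error against the ground truth (1/n denominator)
rmse = math.sqrt(sum((a - gt_area) ** 2 for a in areas) / n)

print(f"mean={mean:.4f}  SD={sd:.4f}  CV={cv:.2f}%  RMSE={rmse:.4f}")
```

Note that RMSE exceeds SD here because it also absorbs the offset between the measured mean and the GT area, whereas SD reflects only the spread across repetitions.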

| Description | Multi-Branch Leaf | Single-Branch Leaf | ImageJ: Different References | OpenPheno: Different Heights | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Prop. | OP. | IJ. | Prop. | OP. | IJ. | Coin | Rectangle | H1 | H2 | H3 | H4 | |||

| Original Image |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| Leaf Extraction |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| Local Zoom |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| Leaf Area (cm2) | 86.25 | 82.82 | 84.76 | 54.75 | 49.69 | 52.04 | 87.78 | 85.88 | 89.48 | 91.60 | 53.29 | 52.59 | 47.68 | 51.24 |

| Manual Seeds | Rough Seg. (Leaf) | Auto-Init. Cont. | Evol. Stage | Fin. Cont. | Meas. Area (cm2) | Meas. Time (s) |
|---|---|---|---|---|---|---|
|  |  |  |  |  | 445.21 | 25.64 |
|  |  |  |  |  | 446.12 | 24.66 |
|  |  |  |  |  | 446.01 | 24.67 |
|  |  |  |  |  | 444.17 | 23.91 |
|  |  |  |  |  | 446.49 | 23.31 |
|  |  |  |  |  | 445.91 | 25.33 |

| Parameter/Metric | c1 = 1 | c1 = 1 | c1 = 2 | c1 = 2 | c1 = 3 | c1 = 4 | c1 = 6 | c1 = 10 |

|---|---|---|---|---|---|---|---|---|

| Auto-Init. Cont. |  |  |  |  |  |  |  |  |

| Iter = 2 |  |  |  |  |  |  |  |  |

| Iter = 10 |  |  |  |  |  |  |  |  |

| Meas. Area (cm2) | 71.87 | 81.17 | 81.54 | 81.14 | 80.58 | 82.16 | 81.09 | 81.99 |

| Meas. Time (s) | 20.73 | 21.10 | 19.18 | 20.33 | 18.08 | 19.03 | 18.35 | 18.42 |

| Species/Morphology | Parameter | p = 0.1 | p = 0.2 | p = 0.3 | p = 0.4 | p = 0.5 | p = 0.6 | p = 0.7 | p = 0.8 | p = 0.9 | p = 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Broomcorn millet (Smooth margins) | Area (cm2) | 34.97 | 34.82 | 34.50 | 34.60 | 34.56 | 34.24 | 34.31 | 34.29 | 34.19 | 34.12 |
|  | Time (s) | 9.58 | 22.61 | 49.51 | 120.12 | 248.08 | 425.27 | 695.68 | 1010.94 | 1458.95 | 1995.62 |
| Grape (Serrated-only margins) | Area (cm2) | 71.09 | 71.78 | 72.09 | 72.19 | 72.13 | 72.13 | 72.05 | 72.21 | 71.95 | 72.20 |
|  | Time (s) | 9.22 | 19.14 | 40.43 | 81.93 | 157.62 | 310.65 | 533.57 | 796.52 | 1169.33 | 1586.34 |
| Tomato (Serrated + Lobed) | Area (cm2) | 95.83 | 96.09 | 96.15 | 96.95 | 97.45 | 96.71 | 97.26 | 96.17 | 96.37 | 97.15 |
|  | Time (s) | 8.35 | 15.59 | 24.65 | 55.02 | 66.07 | 109.23 | 188.29 | 304.56 | 513.01 | 684 |

| Metric | Broomcorn Millet (Smooth) | Grape (Serrated) | Tomato (Serrated + Lobed) |

|---|---|---|---|

| MaxΔArea (cm2) | 0.85 | 1.12 | 1.62 |

| SD (cm2) | 0.28 | 0.34 | 0.57 |

| CV (%) | 0.81 | 0.47 | 0.59 |

| GT Area (cm2) | 34.56 | 71.58 | 96.42 |

| RMSE (cm2) | 0.28 | 0.52 | 0.57 |

| Time Model R2 | 0.9909 | 0.9894 | 0.9915 |

| Iteration Counts | Meas. Area (cm2) | Meas. Time (s) |
|---|---|---|
| iter1 = 0, iter2 = 100 | 91.41 | 143.17 |
| iter1 = 100, iter2 = 0 | 86.67 | 136.58 |
| iter1 = 80, iter2 = 20 | 91.32 | 127.07 |

[Image columns: Auto-Init. Cont.; evolution snapshots at iter = iter1 + iter2 = 2, 4, 6, 10, 20, 40, 60, 80, 100; Leaf Fin. Seg.]

| Orig. Image | Hough Lines | Rect. Image | Meas. Mean Area (cm2) | Meas. Mean Time (s) |
|---|---|---|---|---|
|  |  |  | 137.37 | 21.16 |
|  |  |  | 137.61 | 20.94 |
|  |  |  | 136.26 | 20.32 |
|  |  |  | 136.10 | 19.89 |
|  |  |  | 137.50 | 20.79 |

| Orig. Image | Hough Lines | Rect. Image | Auto-Init. Cont. | Fin. Cont. | Leaf Fin. Seg. | Meas. Area (cm2) | Meas. Time (s) | GT Area (cm2) | AE (cm2) |
|---|---|---|---|---|---|---|---|---|---|
|  |  |  |  |  |  | 54.75 | 9.98 | 54.72 | 0.03 |
|  |  |  |  |  |  | 29.87 | 12.56 | 29.55 | 0.32 |
|  |  |  |  |  |  | 37.19 | 10.30 | 37.33 | 0.14 |
|  |  |  |  |  |  | 27.26 | 8.65 | 26.65 | 0.61 |
|  |  |  |  |  |  | 42.22 | 8.80 | 43.03 | 0.81 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, L.; Hao, C.; Zhang, X.; Guo, W.; Bi, Z.; Lan, Z.; Zhang, L.; Han, Y. A Semi-Automatic and Visual Leaf Area Measurement System Integrating Hough Transform and Gaussian Level-Set Method. Agriculture 2025, 15, 2101. https://doi.org/10.3390/agriculture15192101
