SPMF-YOLO-Tracker: A Method for Quantifying Individual Activity Levels and Assessing Health in Newborn Piglets

Abstract

1. Introduction

2. Materials and Methods

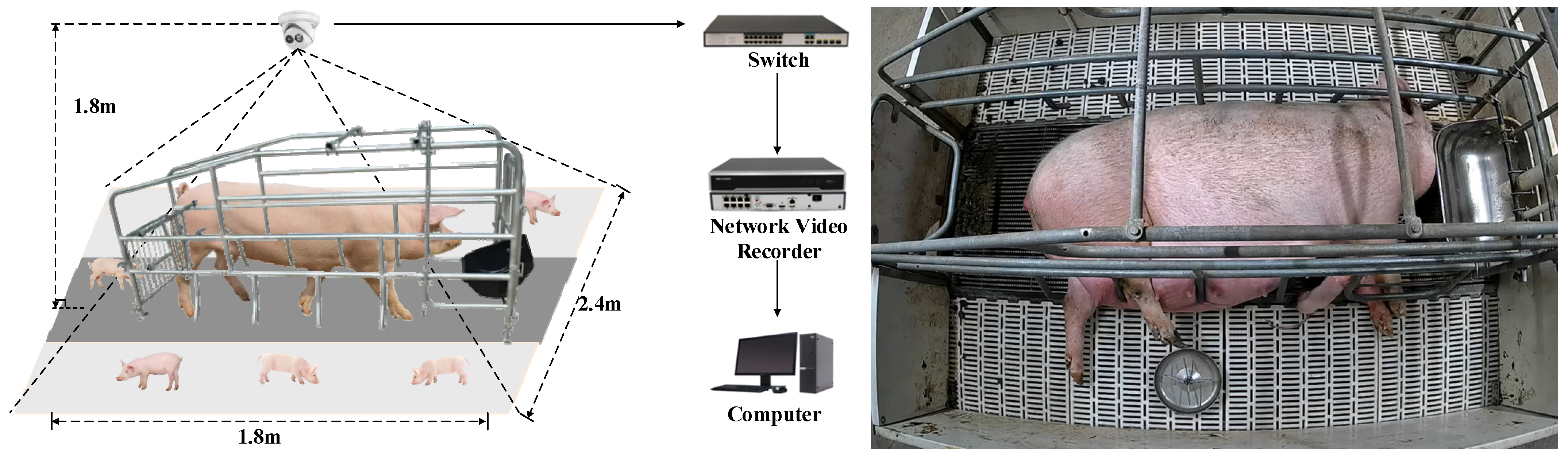

2.1. Test Site and Data Acquisition

2.2. Dataset Construction

2.2.1. Object Detection Dataset

2.2.2. Multi-Object Tracking Dataset

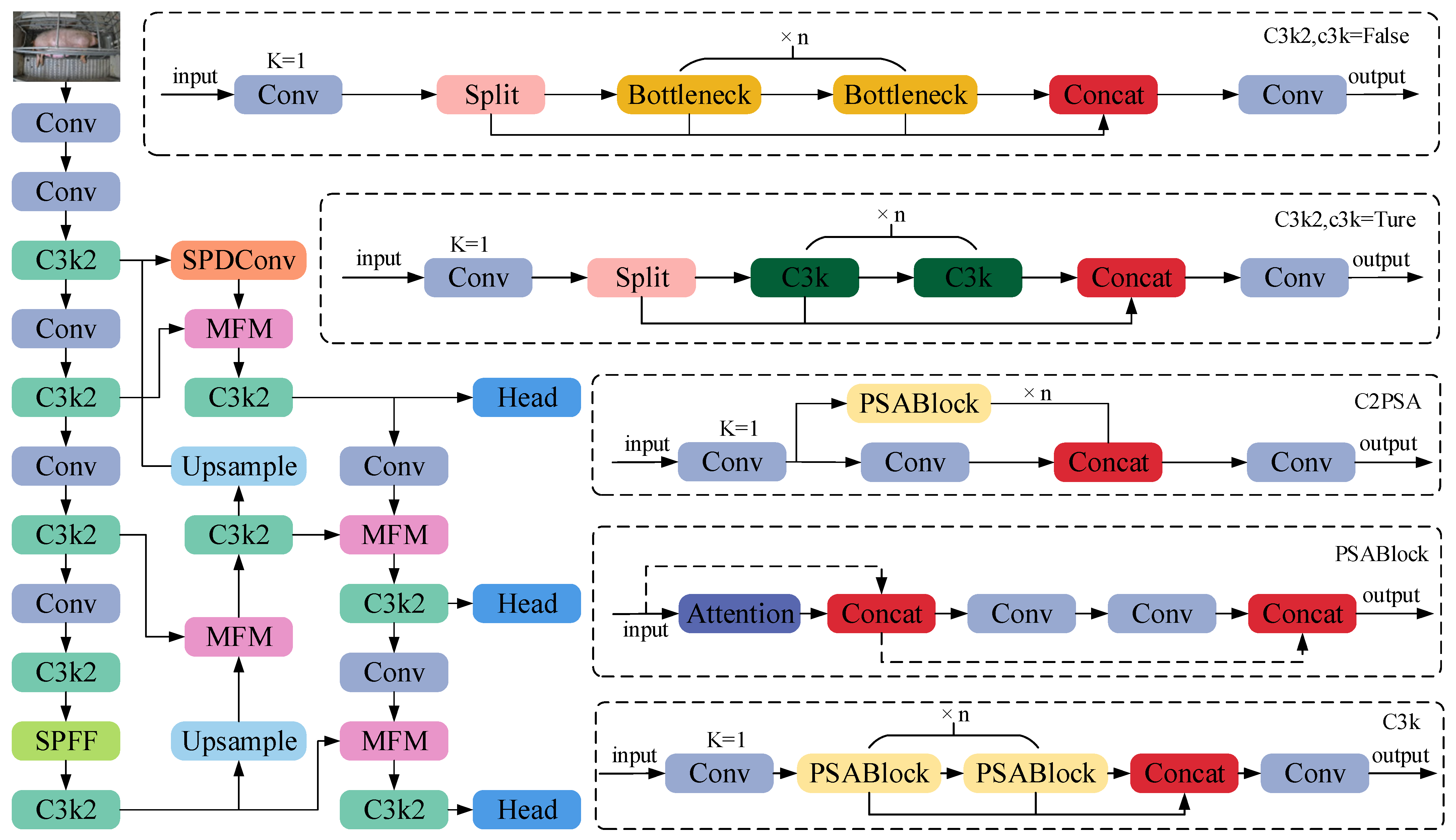

2.3. SPMF-YOLO Model

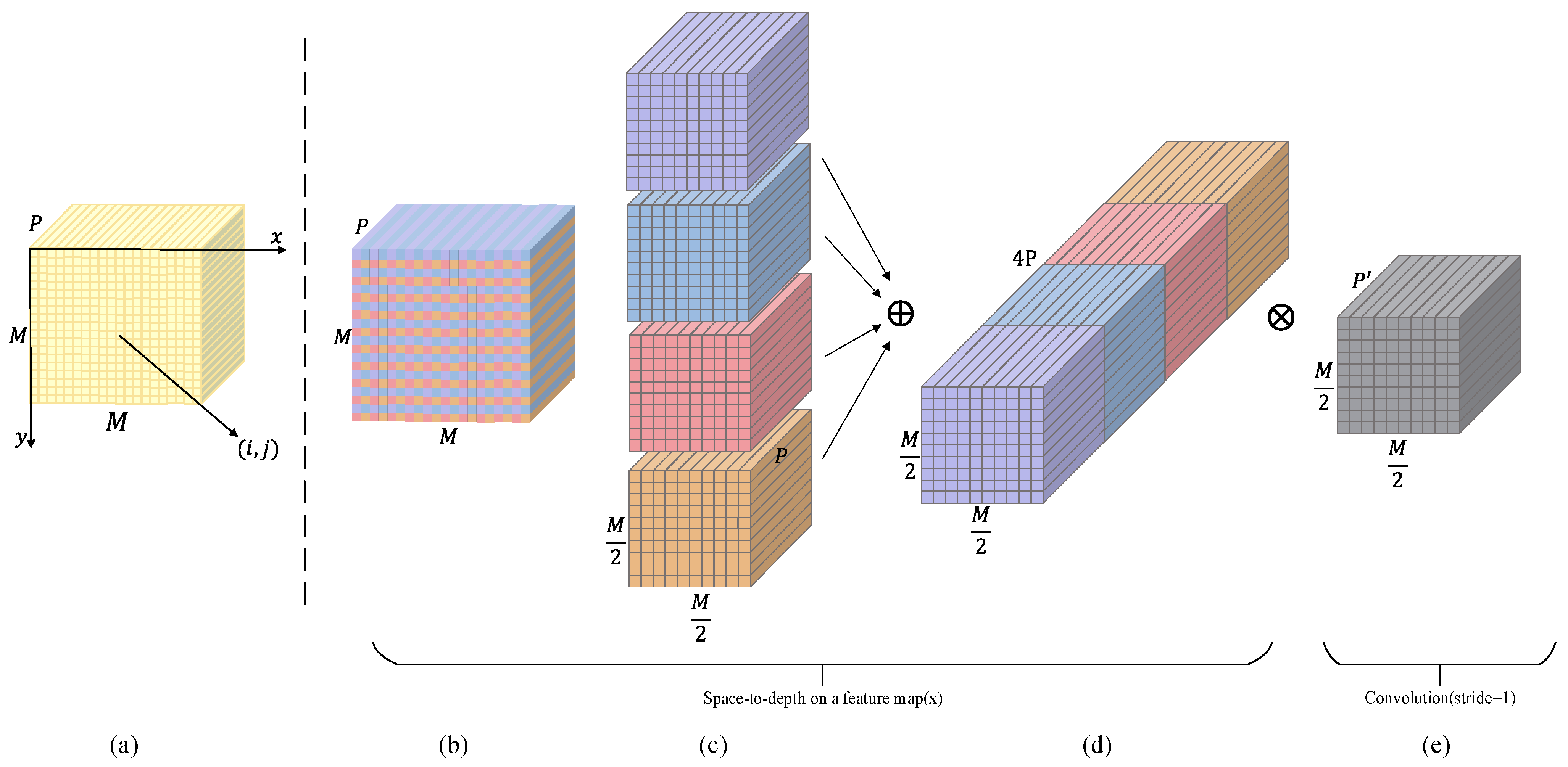

2.3.1. SPDConv Module

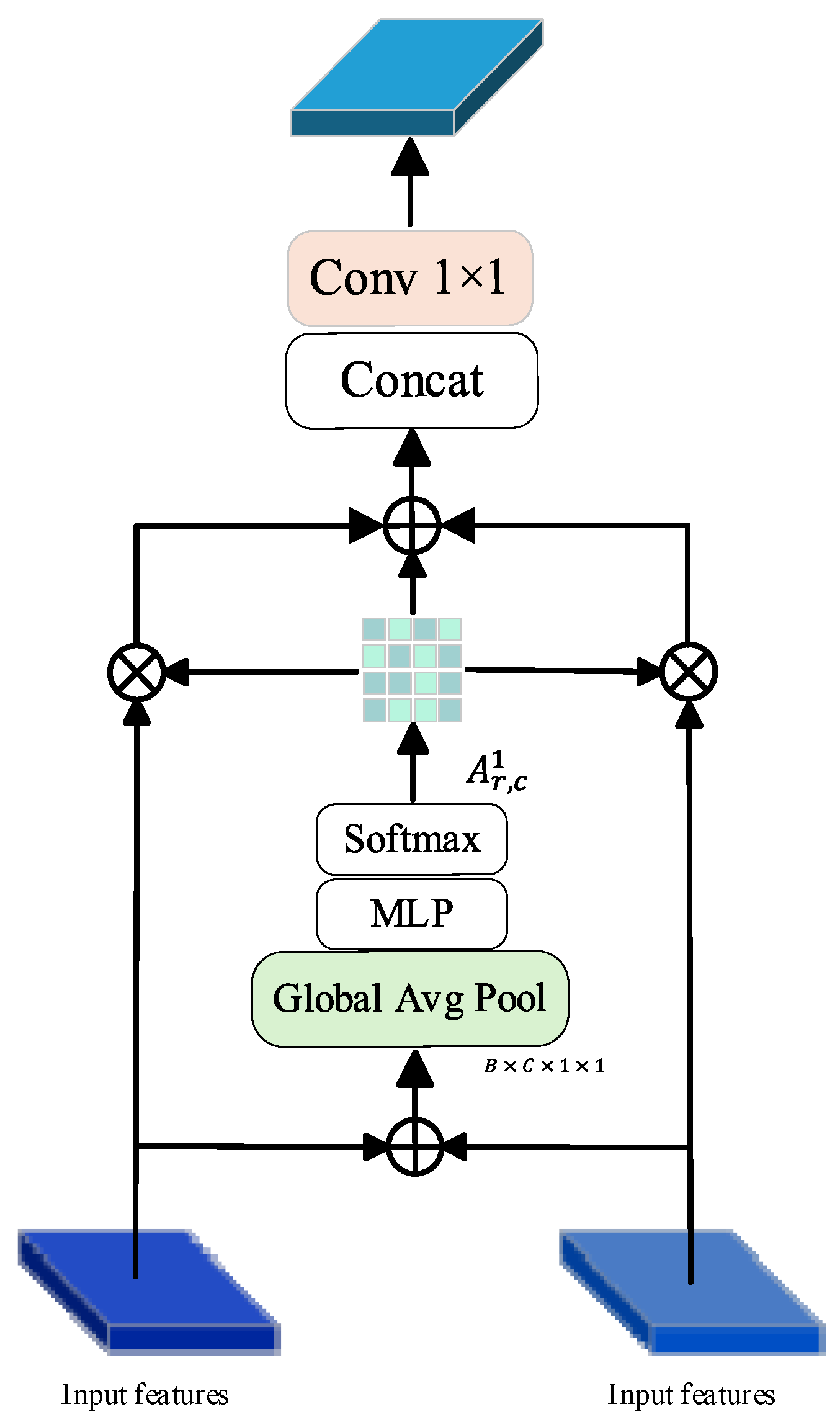
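SPD-Conv, as described by Sunkara and Luo, replaces a strided convolution or pooling step with a space-to-depth (SPD) rearrangement followed by a non-strided convolution. Downsampling therefore keeps every pixel, which matters for small targets such as newborn piglets. The sketch below shows only the SPD rearrangement, in pure Python. It assumes a channel-first feature map stored as nested lists and a scale of 2. The function name `space_to_depth` is illustrative, and neither the subsequent stride-1 convolution nor the placement of SPDConv inside SPMF-YOLO is reproduced here.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def space_to_depth(x: FeatureMap, scale: int = 2) -> FeatureMap:
    """Rearrange a (C, H, W) map into (C*scale^2, H/scale, W/scale) without dropping pixels."""
    height, width = len(x[0]), len(x[0][0])
    if height % scale or width % scale:
        raise ValueError("H and W must be divisible by scale")
    out: FeatureMap = []
    # One sub-map per (row offset, col offset). Stacking them along the channel
    # axis keeps every input value, unlike a strided convolution or pooling.
    for i in range(scale):
        for j in range(scale):
            for channel in x:
                out.append([row[j::scale] for row in channel[i::scale]])
    return out


if __name__ == "__main__":
    # A single 4x4 channel holding the values 0..15.
    x = [[[r * 4 + c for c in range(4)] for r in range(4)]]
    y = space_to_depth(x)
    print(len(y), len(y[0]), len(y[0][0]))  # 4 2 2
    print(y[0])  # [[0, 2], [8, 10]]
```

With scale 2, a C×H×W map becomes 4C×(H/2)×(W/2). A following stride-1 convolution can then learn how to mix the four offsets, rather than discarding three of them.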

2.3.2. MFM Module

2.3.3. Normalized Wasserstein Distance Loss Function

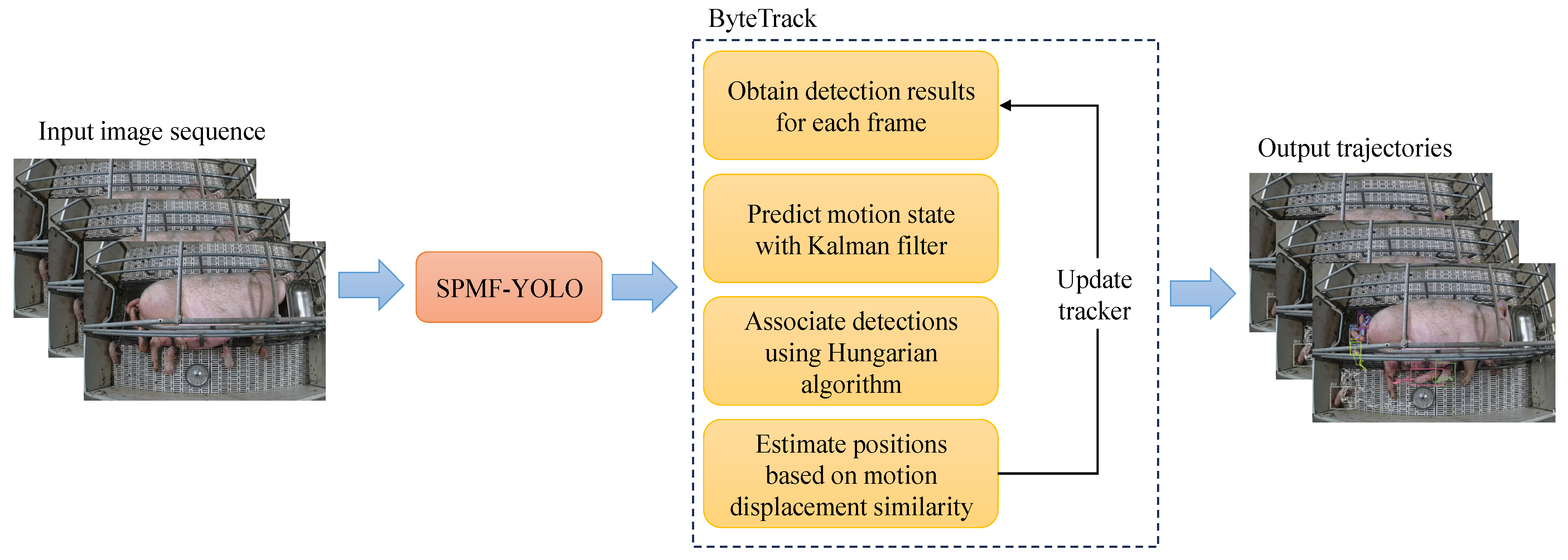
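The Normalized Wasserstein Distance of Wang et al. models each box (cx, cy, w, h) as a 2D Gaussian with mean (cx, cy) and covariance diag(w²/4, h²/4). The 2-Wasserstein distance W₂ between two such Gaussians has a closed form, and NWD = exp(−W₂/C) maps it into (0, 1]. The minimal sketch below makes two assumptions: that the loss is simply 1 − NWD, and that C = 12.8. C is dataset-dependent, and 12.8 is a placeholder rather than a value reported for SPMF-YOLO. How SPMF-YOLO weights NWD against its IoU-based loss is not stated here.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h) in pixels


def nwd(box_a: Box, box_b: Box, c: float = 12.8) -> float:
    """Normalized Gaussian Wasserstein Distance; 1.0 means identical boxes."""
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    # Closed-form squared 2-Wasserstein distance between N([cx, cy], diag(w^2/4, h^2/4)).
    w2_sq = (cxa - cxb) ** 2 + (cya - cyb) ** 2 + ((wa - wb) / 2) ** 2 + ((ha - hb) / 2) ** 2
    return math.exp(-math.sqrt(w2_sq) / c)


def nwd_loss(pred: Box, target: Box, c: float = 12.8) -> float:
    """Assumed loss form: 1 - NWD."""
    return 1.0 - nwd(pred, target, c)


def iou(box_a: Box, box_b: Box) -> float:
    """Plain IoU for comparison; it drops to 0 once boxes stop overlapping."""
    def corners(b: Box):
        cx, cy, w, h = b
        return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2

    ax1, ay1, ax2, ay2 = corners(box_a)
    bx1, by1, bx2, by2 = corners(box_b)
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    a, b = (10.0, 10.0, 8.0, 8.0), (20.0, 10.0, 8.0, 8.0)
    print(iou(a, b), round(nwd(a, b), 2))  # 0.0 0.46
```

Consider two 8×8 boxes whose centers are 10 px apart. Their IoU is 0 because they do not overlap, but their NWD is about 0.46, so the loss still changes smoothly as the prediction moves toward the target. This tolerance to small positional offsets is the usual motivation for NWD on small objects.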

2.4. ByteTrack Model
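ByteTrack (Zhang et al.) associates every detection box instead of discarding low-confidence ones. It runs in three steps:

- High-score detections are matched to existing tracks first.
- The tracks left over are then matched to low-score detections, which are often partly occluded targets.
- Only unmatched high-score detections start new identities.

The sketch below illustrates that two-stage logic under several simplifying assumptions. Matching uses IoU only, with a greedy assignment instead of the Hungarian algorithm. There is no Kalman-filter motion prediction, and lost tracks are never deleted. The thresholds (0.6, 0.1, IoU 0.3) are illustrative, not SPMF-YOLO's settings, and the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(track_ids: List[int], tracks: Dict[int, Box], dets: List[Box], min_iou: float):
    """Greedy IoU assignment (a simplified stand-in for Hungarian matching)."""
    candidates = sorted(
        ((iou(tracks[t], d), t, k) for t in track_ids for k, d in enumerate(dets)),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for score, t, k in candidates:
        if score < min_iou:
            break  # sorted descending, so no remaining pair can reach min_iou
        if t in used_t or k in used_d:
            continue
        used_t.add(t)
        used_d.add(k)
        matches.append((t, k))
    left_t = [t for t in track_ids if t not in used_t]
    left_d = [k for k in range(len(dets)) if k not in used_d]
    return matches, left_t, left_d


@dataclass
class ByteTrackSketch:
    high_thresh: float = 0.6  # illustrative values, not the paper's settings
    low_thresh: float = 0.1
    min_iou: float = 0.3
    tracks: Dict[int, Box] = field(default_factory=dict)
    next_id: int = 1

    def update(self, detections: List[Tuple[Box, float]]) -> Dict[int, Box]:
        high = [b for b, s in detections if s >= self.high_thresh]
        low = [b for b, s in detections if self.low_thresh <= s < self.high_thresh]
        # Stage 1: every existing track against high-confidence detections.
        m1, left_tracks, left_high = greedy_match(list(self.tracks), self.tracks, high, self.min_iou)
        for t, k in m1:
            self.tracks[t] = high[k]
        # Stage 2: still-unmatched tracks against low-confidence detections.
        m2, _, _ = greedy_match(left_tracks, self.tracks, low, self.min_iou)
        for t, k in m2:
            self.tracks[t] = low[k]
        # Only unmatched high-confidence detections start new identities;
        # unmatched low-confidence detections are treated as background.
        for k in left_high:
            self.tracks[self.next_id] = high[k]
            self.next_id += 1
        return dict(self.tracks)


if __name__ == "__main__":
    tracker = ByteTrackSketch()
    tracker.update([((0, 0, 10, 10), 0.9), ((50, 50, 60, 60), 0.8)])
    # Frame 2: piglet 1 is partly occluded, so its box scores only 0.3.
    print(tracker.update([((1, 0, 11, 10), 0.3), ((51, 50, 61, 60), 0.85)]))
    # {1: (1, 0, 11, 10), 2: (51, 50, 61, 60)}
```

In the second frame the partly occluded box scores only 0.3. A tracker that dropped low-score boxes would lose identity 1 at that point, whereas the second association stage keeps it.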

2.5. Model Evaluation Approach

2.5.1. Performance Indicators for Object Detection
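The detection metrics reported in the tables follow the usual definitions:

- Precision is P = TP/(TP + FP), and recall is R = TP/(TP + FN).
- AP is the area under the precision-recall curve.
- mAP@0.5 uses an IoU threshold of 0.5 to decide whether a detection is a true positive.
- mAP@0.5–0.95 averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05.

The sketch below computes P, R, and a single-class AP with all-point interpolation. It assumes that detections have already been marked as TP or FP at one IoU threshold. Evaluators differ in their interpolation (COCO, for example, uses 101 recall points), so this is not necessarily the exact procedure behind the reported numbers.

```python
from typing import Sequence, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """P = TP / (TP + FP), R = TP / (TP + FN); returns 0.0 for an empty denominator."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at a single IoU threshold."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: best precision achievable at this recall or higher.
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Area under the stepwise curve (terms vanish where recall does not change).
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))


if __name__ == "__main__":
    print(precision_recall(tp=8, fp=2, fn=2))  # (0.8, 0.8)
    # Illustrative: four ranked detections against three ground-truth piglets.
    print(round(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], 3), 4))  # 0.8333
```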

2.5.2. Evaluation Metrics for Target Tracking
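The tracking metrics in the results tables are defined as follows:

- MOTA = 1 − (FN + FP + IDSW)/GT, with counts summed over all frames.
- IDF1 = 2·IDTP/(2·IDTP + IDFP + IDFN), computed after a global one-to-one matching of predicted and ground-truth identities.
- HOTA at a localization threshold α is √(DetA·AssA), averaged over α = 0.05, 0.10, …, 0.95.

The sketch below evaluates these formulas from precomputed counts only. It assumes that the matching steps producing those counts (as implemented, for example, in the TrackEval toolkit) have already been run. The example counts are illustrative, not taken from this study.

```python
import math
from statistics import mean
from typing import Sequence


def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """MOTA = 1 - (FN + FP + IDSW) / GT; negative when errors exceed GT objects."""
    return 1.0 - (fn + fp + idsw) / num_gt


def idf1(idtp: int, idfp: int, idfn: int) -> float:
    """IDF1 = 2 IDTP / (2 IDTP + IDFP + IDFN)."""
    return 2 * idtp / (2 * idtp + idfp + idfn)


def hota_at_alpha(tp: int, fn: int, fp: int,
                  tpa: Sequence[int], fna: Sequence[int], fpa: Sequence[int]) -> float:
    """HOTA at one threshold alpha; tpa/fna/fpa hold one entry per TP match."""
    det_a = tp / (tp + fn + fp)
    # AssA averages the association IoU TPA / (TPA + FNA + FPA) over all TP matches.
    ass_a = mean(a / (a + b + c) for a, b, c in zip(tpa, fna, fpa))
    return math.sqrt(det_a * ass_a)


def hota(per_alpha: Sequence[float]) -> float:
    """Final HOTA: mean of HOTA_alpha over alpha = 0.05, 0.10, ..., 0.95."""
    return mean(per_alpha)


if __name__ == "__main__":
    print(f"{mota(fn=50, fp=20, idsw=10, num_gt=1000):.3f}")  # 0.920
    print(f"{idf1(idtp=80, idfp=10, idfn=10):.3f}")  # 0.889
    print(f"{hota_at_alpha(4, 1, 0, [3] * 4, [1] * 4, [0] * 4):.4f}")  # 0.7746
```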

3. Results and Analysis

3.1. Training Settings

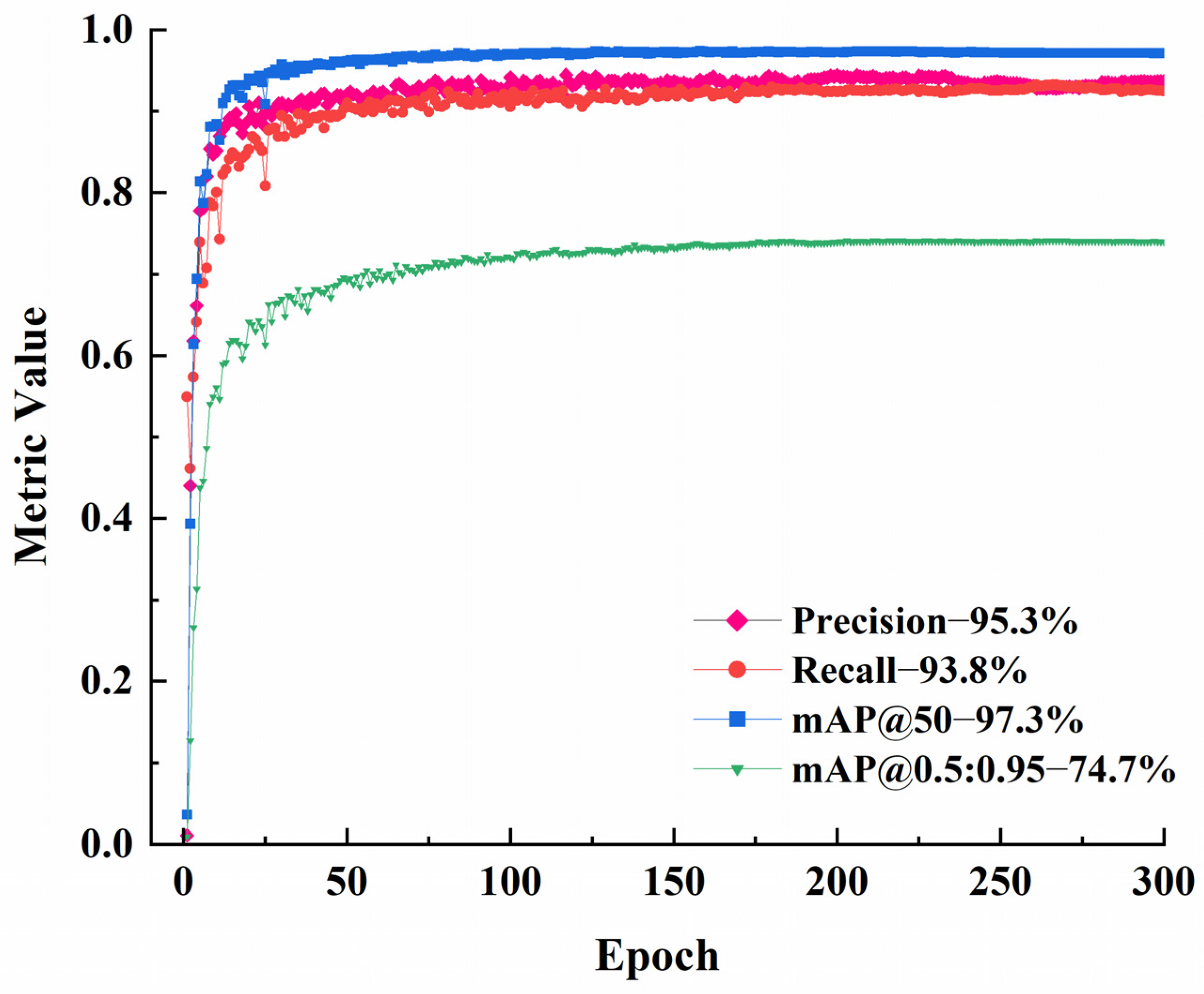

3.2. SPMF-YOLO Detection Performance

3.3. Results and Analysis of Multi-Target Tracking Algorithms

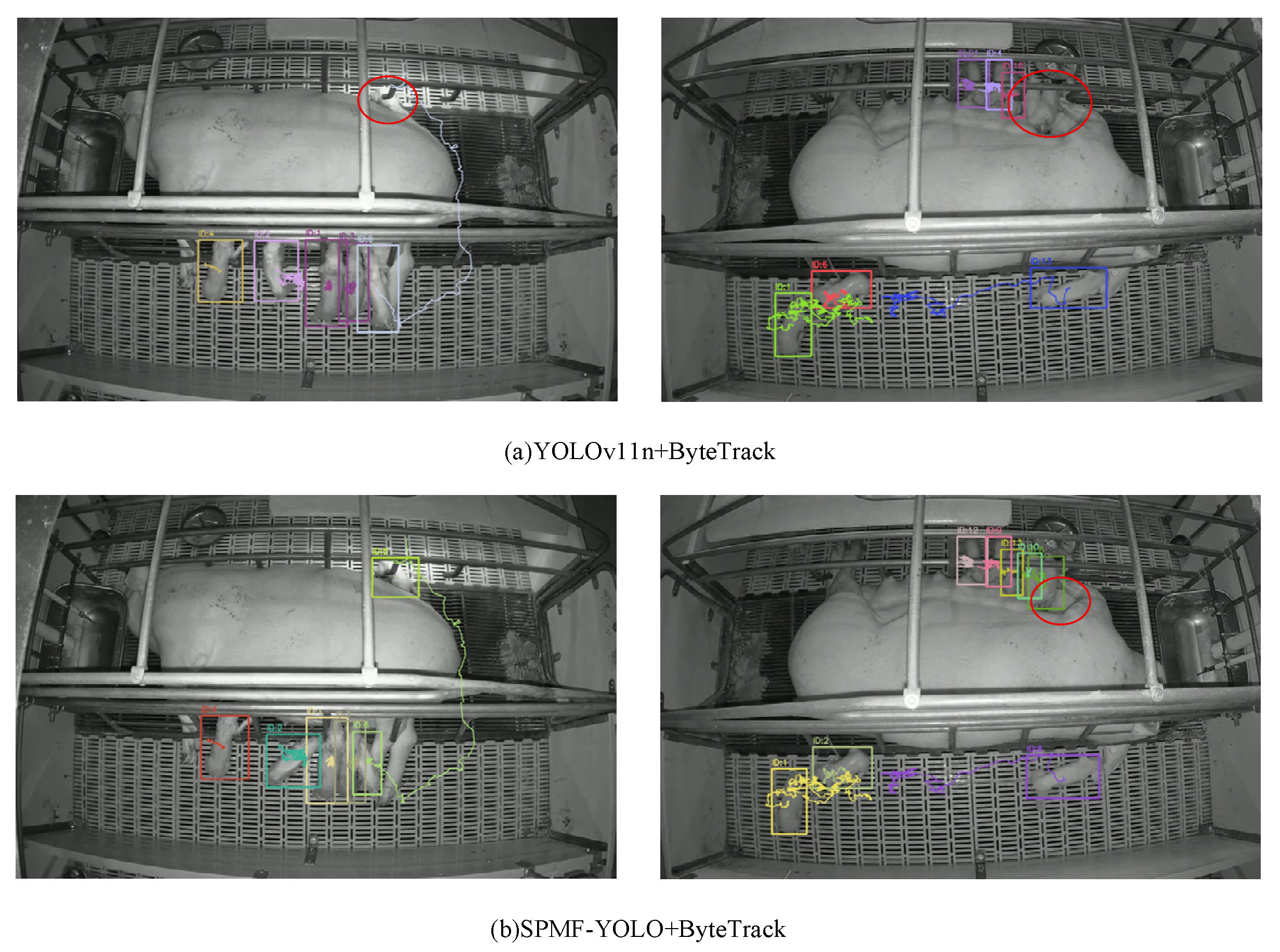

3.3.1. Performance Comparison of the Algorithm Before and After Improvement

3.3.2. Performance Evaluation Across Multiple Multi-Target Tracking Approaches

3.4. Performance Tracking of Newborn Piglets

3.5. Quantification of Activity Levels and Health Assessment Analysis in Newborn Piglets

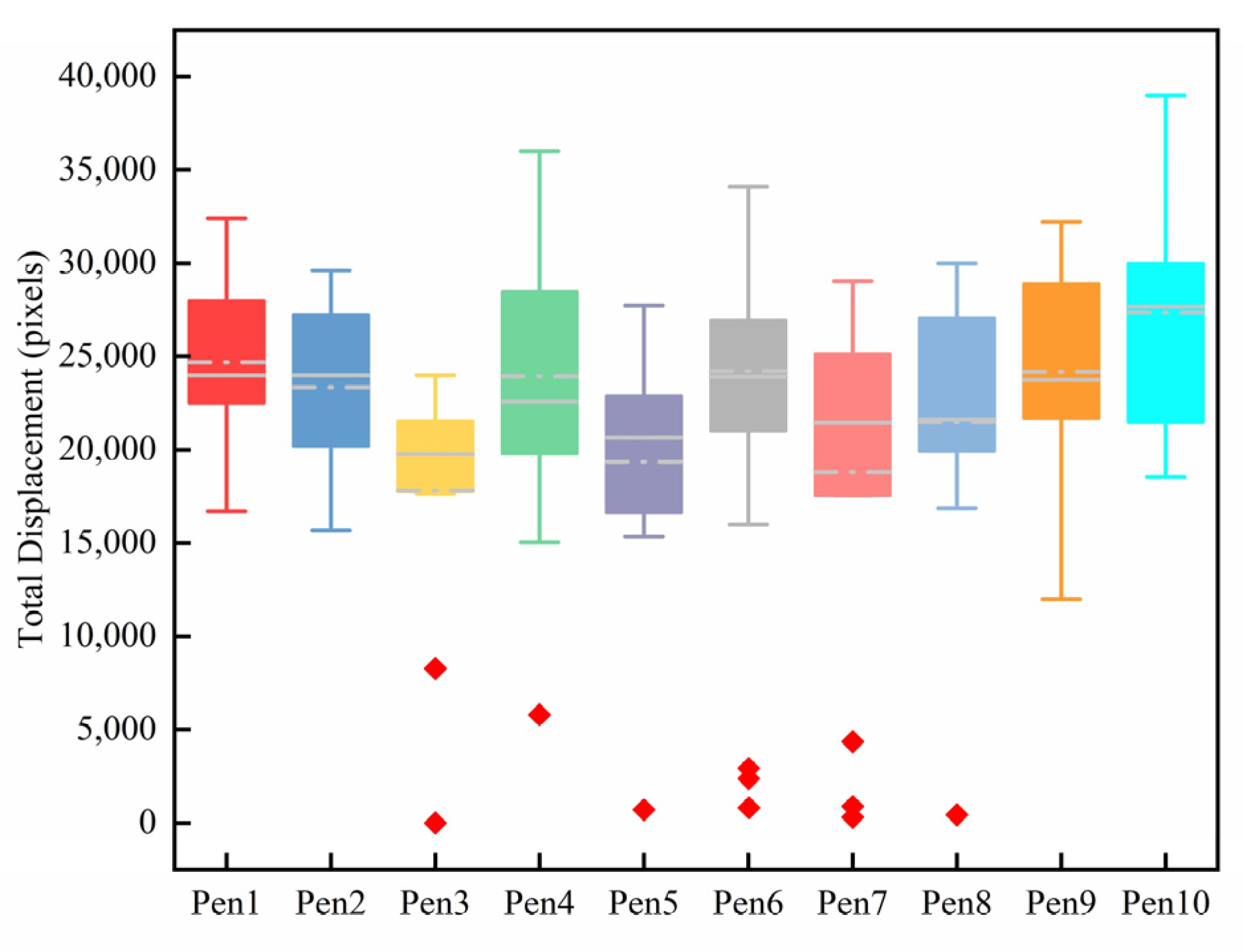

3.5.1. Quantification of Activity Levels in Newborn Piglets

3.5.2. Experimental Results of Health Assessment

3.5.3. Case Analysis of Misclassification

4. Discussion

5. Conclusions

- (1) Object Detection Performance: The enhanced SPMF-YOLO model markedly improves detection of small targets. On the test dataset it reaches a precision of 95.3%, a recall of 93.8%, and an mAP@0.5 of 97.3%, and it remains stable under occlusion and overlap, which supports its use in complex farrowing environments.

- (2) Multi-Object Tracking Performance: Combined with the ByteTrack tracking algorithm, the system reliably captures the postnatal movement trajectories of newborn piglets, achieving HOTA, MOTA, and IDF1 values of 79.1%, 92.2%, and 84.7%, respectively. Compared with mainstream multi-object tracking methods, our approach shows better identity retention and trajectory continuity. Identity switches (IDSW) drop to 15, against 24 for the next-best tracker (C-BIoU Tracker) and 26 for YOLOv11n + ByteTrack, which matters most in occlusion and dense-interference scenarios.

- (3) Activity Quantification and Health Identification: Based on accurate individual movement trajectories, the system quantifies the cumulative movement distance of each newborn piglet within 30 min after birth and uses it as the core indicator of activity level. Against manual labels, the overall consistency rate is 98.2% (111 of 113 piglets), and the accuracy in identifying abnormal individuals is 92.9%. Retrospective video review further confirmed behavioral and physical abnormalities in low-activity individuals. This shows that the method can identify potential health risks and provide data to support early intervention.
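The activity indicator in (3) amounts to summing frame-to-frame centroid displacements along each piglet's trajectory over the 30 min after birth. The minimal sketch below assumes that each track is a list of (time in seconds, cx, cy) centroid samples in pixels and that each piglet's birth time is known. It does not cover pixel-to-distance calibration. The `min_step` jitter filter and the median-based `flag_low_activity` rule are hypothetical stand-ins for the study's actual criterion of activity "below group norms".

```python
import math
from statistics import median
from typing import Dict, List, Tuple

TrackPoint = Tuple[float, float, float]  # (time_s, cx, cy); cx, cy in pixels


def cumulative_distance(track: List[TrackPoint], birth_s: float,
                        window_s: float = 30 * 60, min_step: float = 0.0) -> float:
    """Sum of centroid displacements between birth_s and birth_s + window_s."""
    pts = sorted(p for p in track if birth_s <= p[0] <= birth_s + window_s)
    total = 0.0
    for (_, x0, y0), (_, x1, y1) in zip(pts, pts[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        if step >= min_step:  # hypothetical filter for detection-box jitter
            total += step
    return total


def flag_low_activity(distances: Dict[int, float], ratio: float = 0.5) -> List[int]:
    """Hypothetical rule: flag piglets moving less than `ratio` x the pen median."""
    threshold = ratio * median(distances.values())
    return sorted(pid for pid, d in distances.items() if d < threshold)


if __name__ == "__main__":
    track = [(0, 0, 0), (1, 3, 4), (2, 3, 4), (3, 6, 8), (2000, 100, 100)]
    print(cumulative_distance(track, birth_s=0))  # 10.0 (last point is after 30 min)
    print(flag_low_activity({1: 100.0, 2: 90.0, 3: 20.0}))  # [3]
```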

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Singh, A.; Jadoun, Y.S.; Brar, P.S.; Kour, G. Smart Technologies in Livestock Farming. In Smart and Sustainable Food Technologies; Sehgal, S., Singh, B., Sharma, V., Eds.; Springer Nature: Singapore, 2022; pp. 25–57. ISBN 978-981-19-1746-2.

- Quesnel, H.; Resmond, R.; Merlot, E.; Père, M.-C.; Gondret, F.; Louveau, I. Physiological Traits of Newborn Piglets Associated with Colostrum Intake, Neonatal Survival and Preweaning Growth. Animal 2023, 17, 100843.

- Tullo, E.; Finzi, A.; Guarino, M. Review: Environmental Impact of Livestock Farming and Precision Livestock Farming as a Mitigation Strategy. Sci. Total Environ. 2019, 650, 2751–2760.

- Lao, F.; Brown-Brandl, T.; Stinn, J.P.; Liu, K.; Teng, G.; Xin, H. Automatic Recognition of Lactating Sow Behaviors Through Depth Image Processing. Comput. Electron. Agric. 2016, 125, 56–62.

- Nasirahmadi, A.; Edwards, S.A.; Matheson, S.M.; Sturm, B. Using Automated Image Analysis in Pig Behavioural Research: Assessment of the Influence of Enrichment Substrate Provision on Lying Behaviour. Appl. Anim. Behav. Sci. 2017, 196, 30–35.

- Xu, J.; Zhou, S.; Xu, A.; Ye, J.; Zhao, A. Automatic Scoring of Postures in Grouped Pigs Using Depth Image and CNN-SVM. Comput. Electron. Agric. 2022, 194, 106746.

- Gan, H.; Xu, C.; Hou, W.; Guo, J.; Liu, K.; Xue, Y. Spatiotemporal Graph Convolutional Network for Automated Detection and Analysis of Social Behaviours Among Pre-Weaning Piglets. Biosyst. Eng. 2022, 217, 102–114.

- Ji, H.; Yu, J.; Lao, F.; Zhuang, Y.; Wen, Y.; Teng, G. Automatic Position Detection and Posture Recognition of Grouped Pigs Based on Deep Learning. Agriculture 2022, 12, 1314.

- Chen, J.; Liu, L.; Li, P.; Yao, W.; Shen, M.; Liu, L. Resting Posture Recognition Method for Suckling Piglets Based on Piglet Posture Recognition (PPR)–You Only Look Once. Agriculture 2025, 15, 230.

- Cowton, J.; Kyriazakis, I.; Bacardit, J. Automated Individual Pig Localisation, Tracking and Behaviour Metric Extraction Using Deep Learning. IEEE Access 2019, 7, 108049–108060.

- Guo, Q.; Sun, Y.; Orsini, C.; Bolhuis, J.E.; de Vlieg, J.; Bijma, P.; de With, P.H.N. Enhanced Camera-Based Individual Pig Detection and Tracking for Smart Pig Farms. Comput. Electron. Agric. 2023, 211, 108009.

- Tu, S.; Cai, Y.; Liang, Y.; Lei, H.; Huang, Y.; Liu, H.; Xiao, D. Tracking and Monitoring of Individual Pig Behavior Based on YOLOv5-Byte. Comput. Electron. Agric. 2024, 221, 108997.

- Yang, Q.; Hui, X.; Huang, Y.; Chen, M.; Huang, S.; Xiao, D. A Long-Term Video Tracking Method for Group-Housed Pigs. Animals 2024, 14, 1505.

- Yu, S.; Baek, H.; Son, S.; Seo, J.; Chung, Y. FTO-SORT: A Fast Track-Id Optimizer for Enhanced Multi-Object Tracking with SORT in Unseen Pig Farm Environments. Comput. Electron. Agric. 2025, 237, 110540.

- Cangar, Ö.; Leroy, T.; Guarino, M.; Vranken, E.; Fallon, R.; Lenehan, J.; Mee, J.; Berckmans, D. Automatic Real-Time Monitoring of Locomotion and Posture Behaviour of Pregnant Cows Prior to Calving Using Online Image Analysis. Comput. Electron. Agric. 2008, 64, 53–60.

- Chen, J.; Liu, L.; Li, P.; Yao, W.; Shen, M.; Ding, Q.; Liu, L. PKL-Track: A Keypoint-Optimized Approach for Piglet Tracking and Activity Measurement. Comput. Electron. Agric. 2025, 237, 110578.

- Valle, J.E.D.; Pereira, D.F.; Neto, M.M.; Filho, L.R.A.G.; Salgado, D.D. Unrest Index for Estimating Thermal Comfort of Poultry Birds (Gallus Gallus Domesticus) Using Computer Vision Techniques. Biosyst. Eng. 2021, 206, 123–134.

- Ho, K.-Y.; Tsai, Y.-J.; Kuo, Y.-F. Automatic Monitoring of Lactation Frequency of Sows and Movement Quantification of Newborn Piglets in Farrowing Houses Using Convolutional Neural Networks. Comput. Electron. Agric. 2021, 189, 106376.

- Vanden Hole, C.; Ayuso, M.; Aerts, P.; Prims, S.; Van Cruchten, S.; Van Ginneken, C. Glucose and Glycogen Levels in Piglets That Differ in Birth Weight and Vitality. Heliyon 2019, 5, e02510.

- Panzardi, A.; Bernardi, M.L.; Mellagi, A.P.; Bierhals, T.; Bortolozzo, F.P.; Wentz, I. Newborn Piglet Traits Associated with Survival and Growth Performance Until Weaning. Prev. Vet. Med. 2013, 110, 206–213.

- Tucker, B.S.; Craig, J.R.; Morrison, R.S.; Smits, R.J.; Kirkwood, R.N. Piglet Viability: A Review of Identification and Pre-Weaning Management Strategies. Animals 2021, 11, 2902.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Sunkara, R.; Luo, T. No More Strided Convolutions or Pooling: A New CNN Building Block for Low-Resolution Images and Small Objects. arXiv 2022.

- Zhang, Y.; Zhou, S.; Li, H. Depth Information Assisted Collaborative Mutual Promotion Network for Single Image Dehazing. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 2846–2855.

- Wang, J.; Xu, C.; Yang, W.; Yu, L. A Normalized Gaussian Wasserstein Distance for Tiny Object Detection. arXiv 2022.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-Object Tracking by Associating Every Detection Box. arXiv 2022.

- Girshick, R. Fast R-CNN. arXiv 2015.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Alif, M.A.R.; Hussain, M. YOLOv12: A Breakdown of the Key Architectural Features. arXiv 2025.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. arXiv 2024, arXiv:2304.08069v3.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple Online and Realtime Tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; IEEE: Piscataway, NJ, USA.

- Pujara, A.; Bhamare, M. DeepSORT: Real Time & Multi-Object Detection and Tracking with YOLO and TensorFlow. In Proceedings of the 2022 International Conference on Augmented Intelligence and Sustainable Systems (ICAISS), Trichy, India, 24–26 November 2022; pp. 456–460.

- Yang, F.; Odashima, S.; Masui, S.; Jiang, S. Hard to Track Objects with Irregular Motions and Similar Appearances? Make It Easier by Buffering the Matching Space. arXiv 2023.

- Yang, M.; Han, G.; Yan, B.; Zhang, W.; Qi, J.; Lu, H.; Wang, D. Hybrid-SORT: Weak Cues Matter for Online Multi-Object Tracking. AAAI 2024, 38, 6504–6512.

- Du, Y.; Zhao, Z.; Song, Y.; Zhao, Y.; Su, F.; Gong, T.; Meng, H. StrongSORT: Make DeepSORT Great Again. arXiv 2023.

- Aharon, N.; Orfaig, R.; Bobrovsky, B.-Z. BoT-SORT: Robust Associations Multi-Pedestrian Tracking. arXiv 2022.

| Dataset Type | Number of Images | Dataset Purpose |
|---|---|---|
| Object Detection Dataset | 1780 | Training, testing, and validating object detection models |
| Multi-Object Tracking Model Dataset | 4950 | Testing multi-object tracking algorithms |

| SPDConv | MFM | NWD | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Params (M) | FLOPs (G) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|---|
| × | × | × | 93.0 | 91.5 | 96.3 | 70.1 | 2.6 | 6.3 | 6.3 |
| √ | × | × | 93.3 | 91.9 | 96.9 | 74.0 | 5.6 | 9.0 | 7.4 |
| √ | √ | × | 94.2 | 92.9 | 97.1 | 74.3 | 6.2 | 12.3 | 10.1 |
| √ | √ | √ | 95.3 | 93.8 | 97.3 | 74.7 | 6.2 | 12.3 | 10.1 |

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Params (M) | FLOPs (G) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|
| Fast R-CNN | 73.5 | 79.2 | 80.5 | 40.6 | 45.31 | 117.4 | 96.4 |
| RT-DETR-L | 91.3 | 89.3 | 94.3 | 59.6 | 32 | 131.3 | 68.4 |
| SSD | 80.5 | 86.6 | 87.8 | 57.2 | 36.28 | 139.7 | 114.7 |
| YOLOv8n | 92.7 | 90.9 | 95.4 | 62.3 | 3.2 | 7.5 | 6.7 |
| YOLOv11n | 93.0 | 91.5 | 96.3 | 70.1 | 2.6 | 6.3 | 6.2 |
| YOLOv12n | 93.0 | 90.5 | 96.1 | 68.8 | 6.2 | 6.7 | 7.1 |
| SPMF-YOLO | 95.3 | 93.8 | 97.3 | 74.7 | 6.2 | 12.3 | 10.1 |

| Model | HOTA (%) ↑ | MOTA (%) ↑ | IDF1 (%) ↑ | IDSW ↓ | FPS ↑ |
|---|---|---|---|---|---|
| YOLOv11n + ByteTrack | 65.3 | 87.5 | 71.1 | 26 | 38.9 |
| SPMF-YOLO + ByteTrack | 79.1 | 92.2 | 84.7 | 15 | 35.9 |

| Model | HOTA (%) ↑ | MOTA (%) ↑ | IDF1 (%) ↑ | IDSW ↓ | FPS ↑ |
|---|---|---|---|---|---|
| SORT | 56.5 | 70.2 | 52.2 | 151 | 6.1 |
| DeepSORT | 58.8 | 72.1 | 53.4 | 140 | 28.3 |
| C-BIoU Tracker | 69.3 | 81.5 | 66.1 | 24 | 38.9 |
| Hybrid-SORT | 64.2 | 80.2 | 67.7 | 84 | 40.5 |
| StrongSORT | 59.4 | 77.6 | 51.7 | 151 | 7.3 |
| BoT-SORT | 70.2 | 84.6 | 63.9 | 63 | 38.2 |
| Ours (SPMF-YOLO + ByteTrack) | 79.1 | 92.2 | 84.7 | 15 | 35.9 |

| Label | Criteria for Label Classification |
|---|---|
| Healthy piglets | Crawls independently and is relatively active; frequently explores and suckles, moves naturally, and appears alert. |
| Abnormal piglets | Sluggish movement, prolonged inactivity in corners, and little exploration; activity below the group norm; abnormal posture and low energy, and in severe cases a risk of death. |

| Pen | Piglet Count | Healthy Piglets (Actual/Predicted) | Abnormal Piglets (Actual/Predicted) | Pen Consistency Rate |
|---|---|---|---|---|
| Pen1 | 7 | 7/7 | 0/0 | 100% |
| Pen2 | 11 | 10/10 | 1/1 | 100% |
| Pen3 | 12 | 10/10 | 2/2 | 100% |
| Pen4 | 11 | 11/10 | 0/1 | 90.9% |
| Pen5 | 10 | 9/9 | 1/1 | 100% |
| Pen6 | 11 | 8/8 | 3/3 | 100% |
| Pen7 | 14 | 11/11 | 3/3 | 100% |
| Pen8 | 13 | 11/11 | 2/2 | 100% |
| Pen9 | 14 | 13/14 | 1/0 | 92.9% |
| Pen10 | 10 | 10/10 | 0/0 | 100% |
| Total | 113 | 100/100 | 13/13 | 98.2% |
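The consistency rates in the table can be checked directly. Each pen's rate is the share of piglets whose predicted label matches the manual label. The disagreement counts (one piglet in Pen4 and one in Pen9) follow from the 90.9% and 92.9% entries. The short sketch below uses illustrative names. It also shows that the Total row pools all piglets (111/113) rather than averaging the ten pen rates.

```python
# Piglet count per pen and label disagreements, read off the table above
# (Pen4 and Pen9 each contain one piglet whose predicted label differs).
PEN_COUNTS = {"Pen1": 7, "Pen2": 11, "Pen3": 12, "Pen4": 11, "Pen5": 10,
              "Pen6": 11, "Pen7": 14, "Pen8": 13, "Pen9": 14, "Pen10": 10}
DISAGREEMENTS = {"Pen4": 1, "Pen9": 1}


def pen_rate(pen: str) -> float:
    """Share of piglets in one pen whose predicted label matches the manual label."""
    n = PEN_COUNTS[pen]
    return (n - DISAGREEMENTS.get(pen, 0)) / n


def overall_rate() -> float:
    """Pooled over all piglets, as in the table's Total row."""
    total = sum(PEN_COUNTS.values())
    return (total - sum(DISAGREEMENTS.values())) / total


if __name__ == "__main__":
    print(f"Pen4: {pen_rate('Pen4'):.1%}, Pen9: {pen_rate('Pen9'):.1%}")  # Pen4: 90.9%, Pen9: 92.9%
    print(f"Overall: {overall_rate():.1%}")  # Overall: 98.2%
    mean_of_pens = sum(pen_rate(p) for p in PEN_COUNTS) / len(PEN_COUNTS)
    print(f"Mean of pen rates: {mean_of_pens:.1%}")  # Mean of pen rates: 98.4%
```

Pooling weights each piglet equally, so larger pens count for more. Averaging the ten pen rates instead would give about 98.4%.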

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wei, J.; Tang, Y.; Chen, J.; Wang, K.; Li, P.; Shen, M.; Liu, L. SPMF-YOLO-Tracker: A Method for Quantifying Individual Activity Levels and Assessing Health in Newborn Piglets. Agriculture 2025, 15, 2087. https://doi.org/10.3390/agriculture15192087
