Research Progress of Deep Learning-Based Artificial Intelligence Technology in Pest and Disease Detection and Control

Abstract

1. Introduction

1.1. Research Background and Significance

1.2. Evolution of Agricultural Pest and Disease Detection and Control Technologies

1.2.1. Conventional Approaches to Pest and Disease Detection and Control

1.2.2. Emerging AI-Based Approaches for Pest and Disease Detection and Control

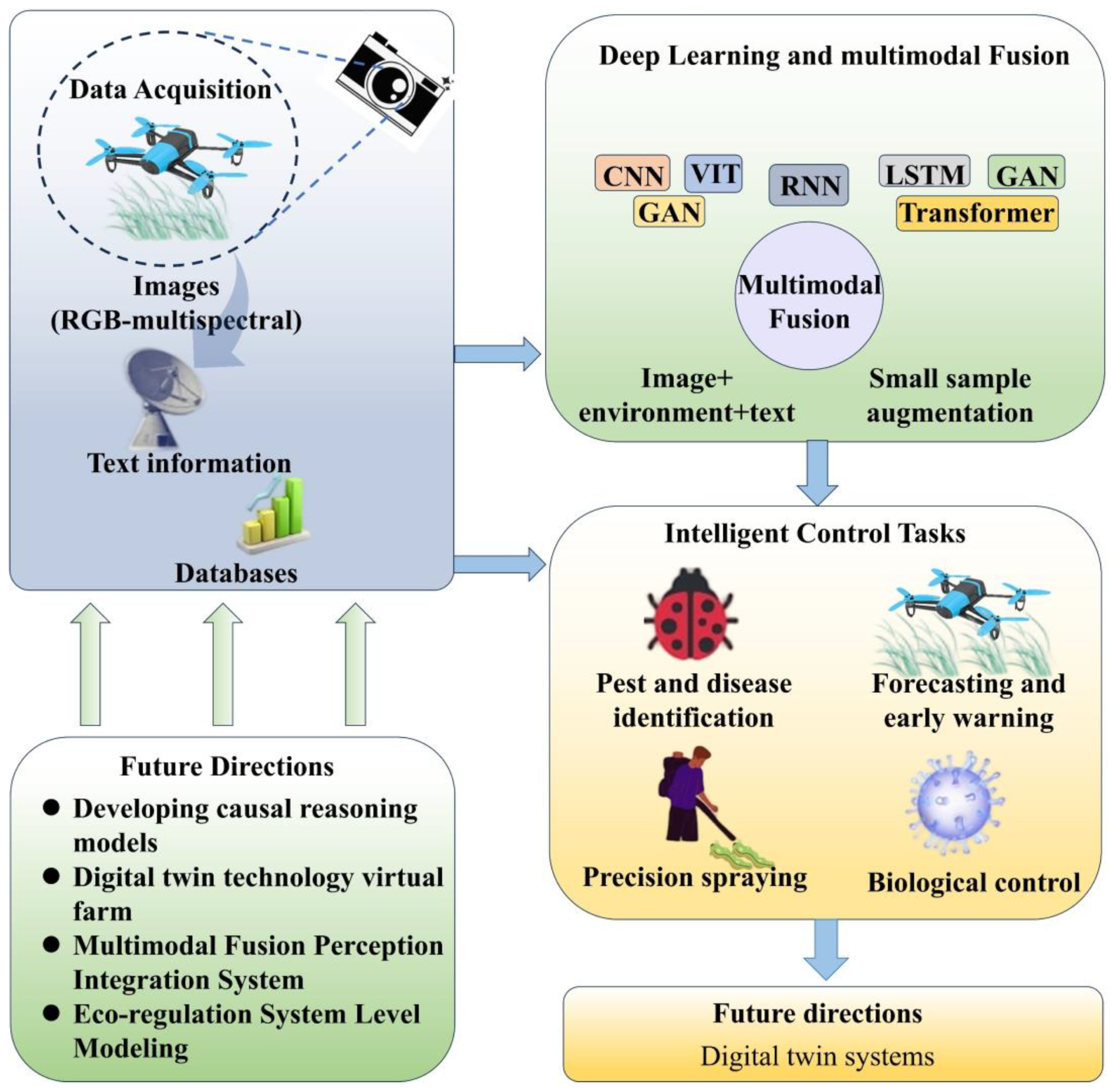

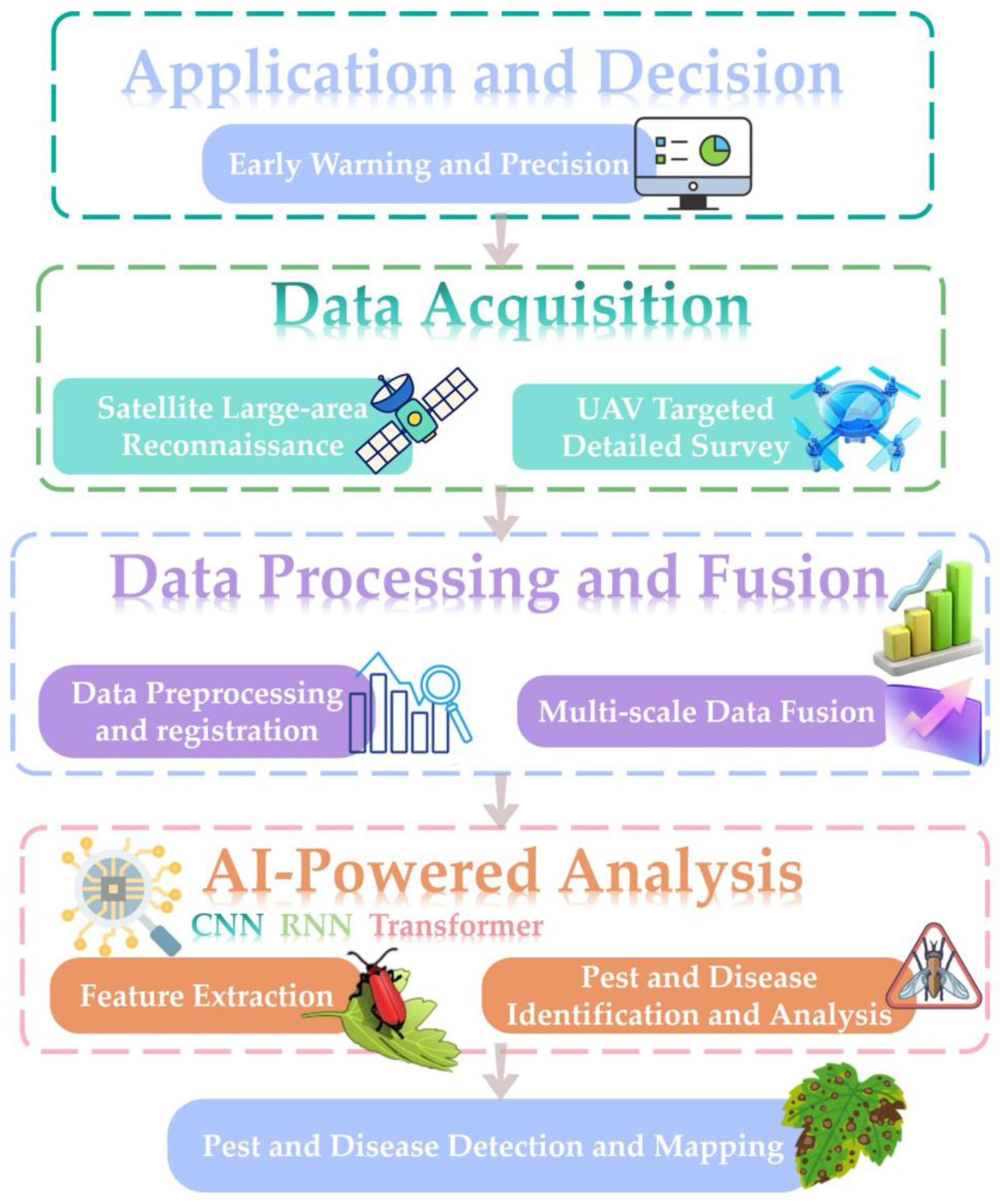

2. Applications of Artificial Intelligence in Agricultural Pest and Disease Identification and Detection

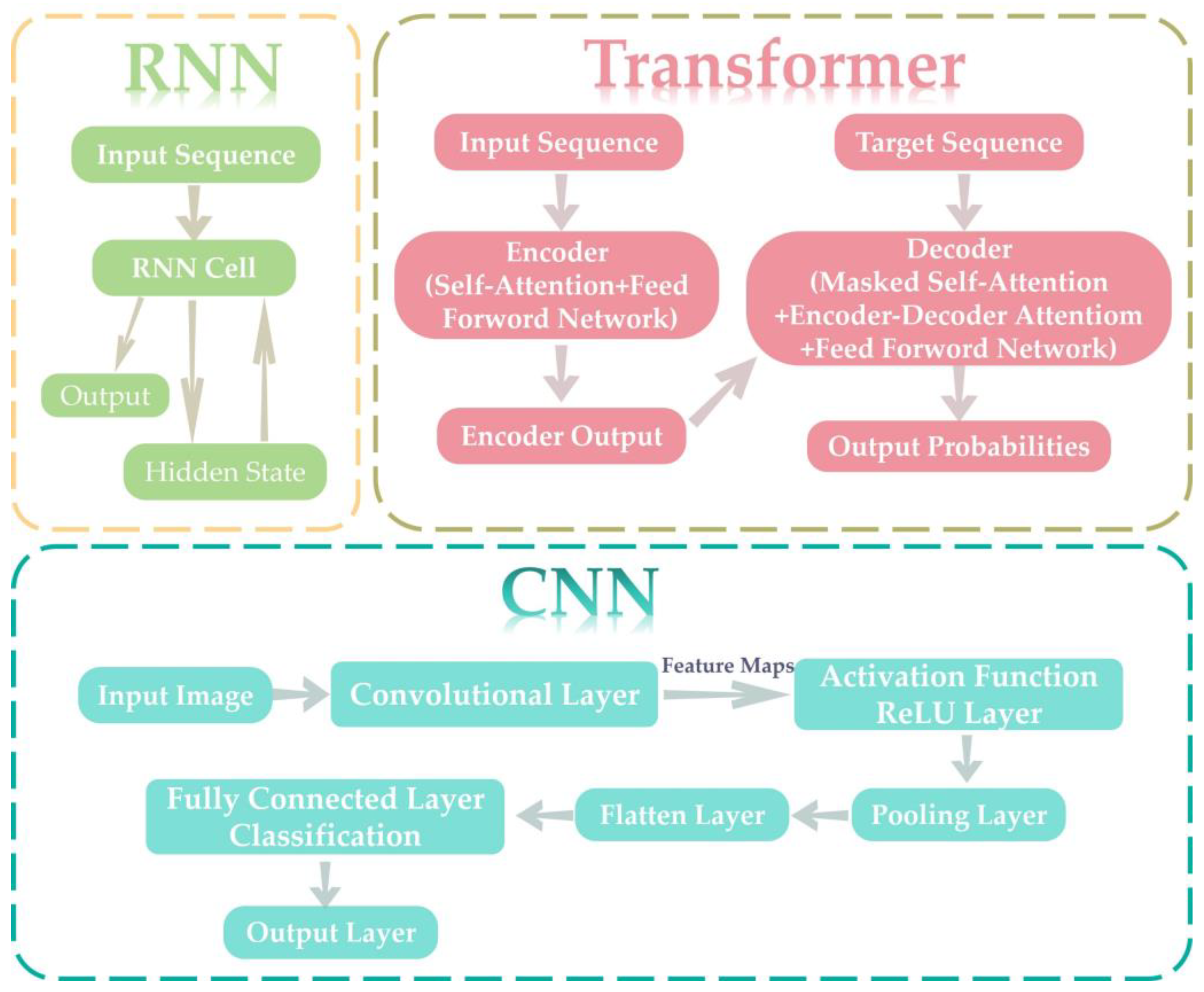

2.1. Deep Learning and Image Recognition Models

2.1.1. Mainstream Deep Learning Architectures

2.1.2. Image Recognition and Detection Technology

2.2. Remote Sensing and UAV Image Analysis Technology

2.3. Internet of Things Sensors and Smart Monitoring Technologies

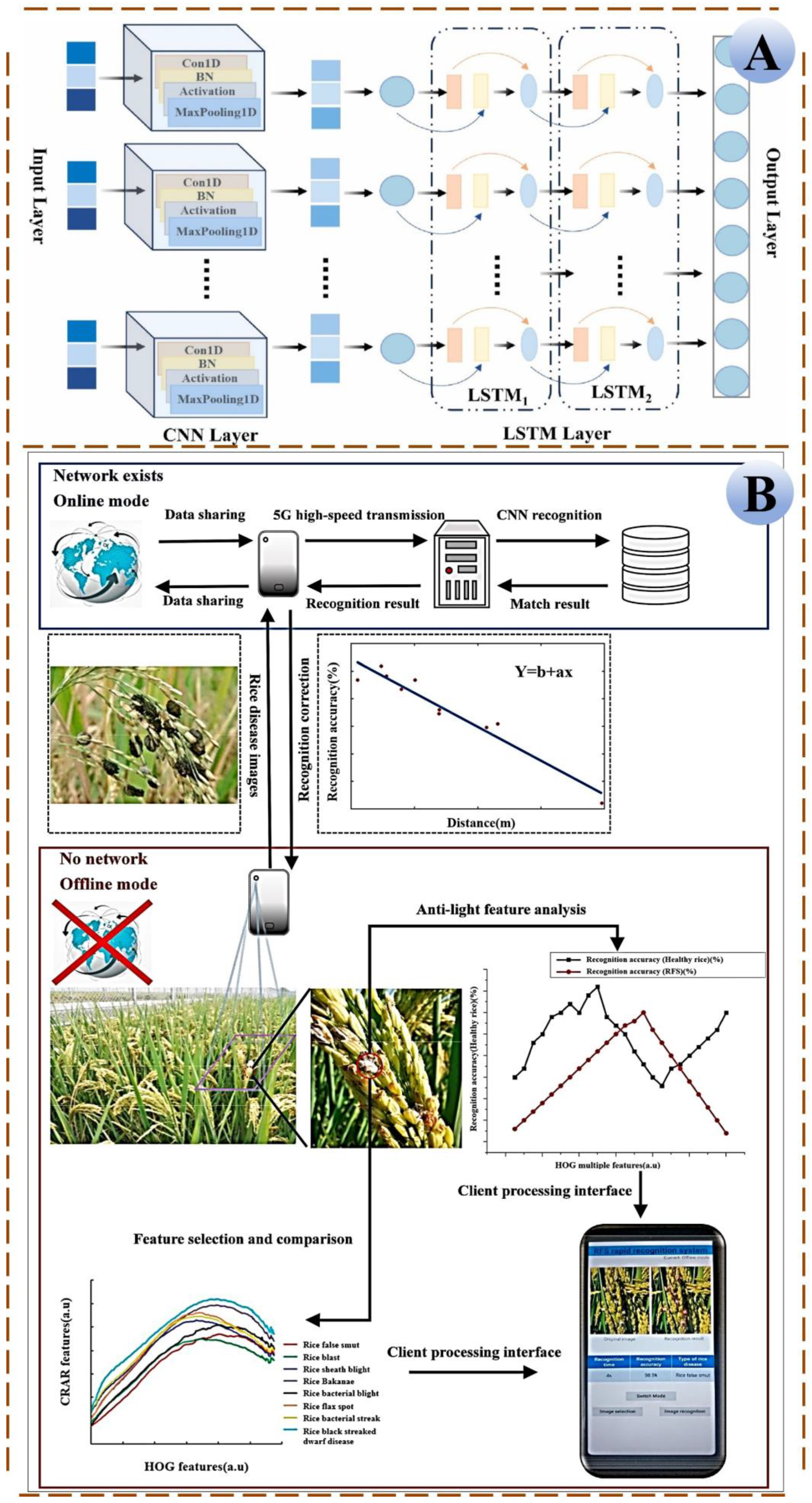

2.4. Rapid Detection and Mobile Application Technologies

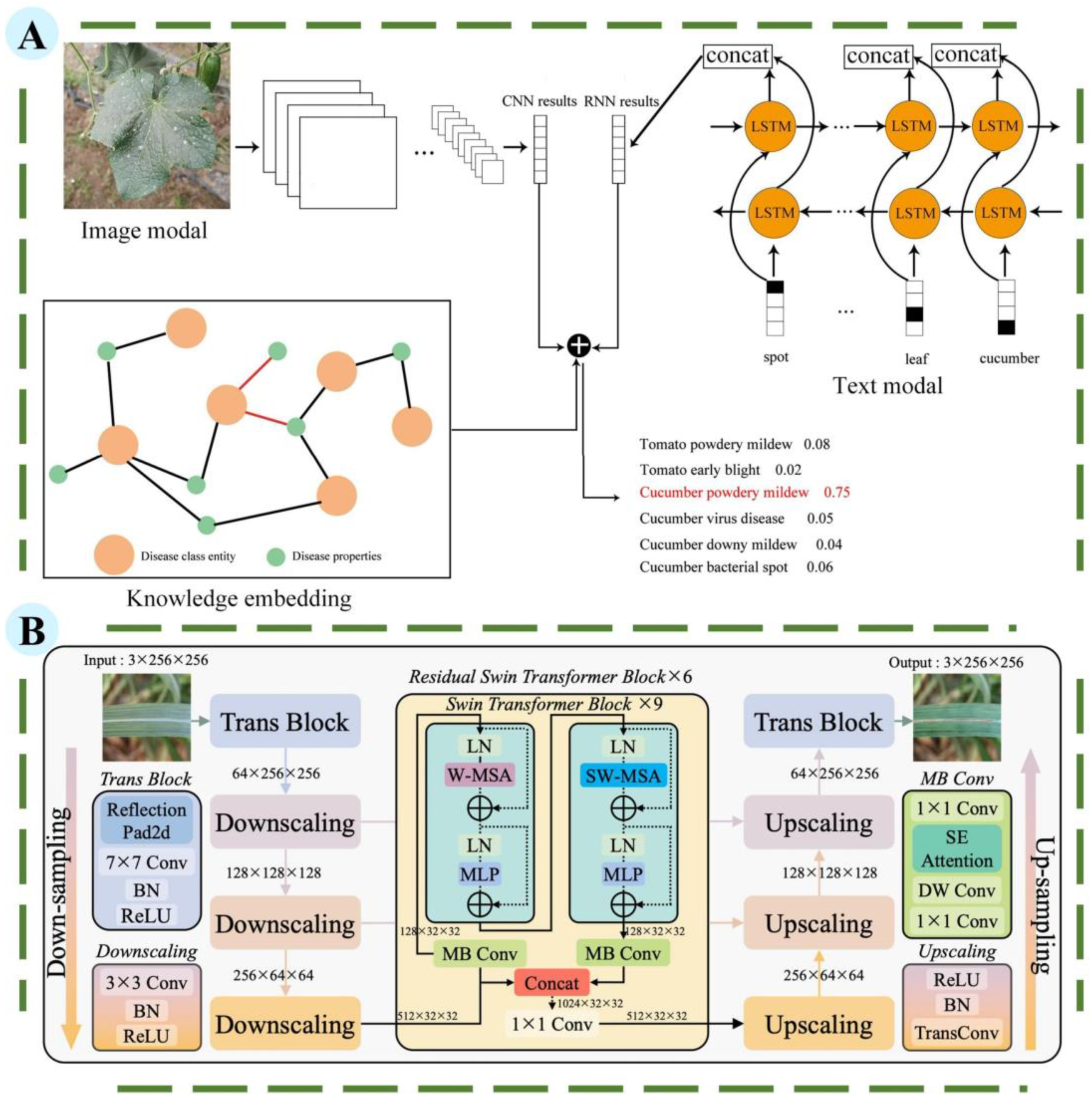

2.5. Multimodal Fusion and Data Analysis Technologies

3. Application of Artificial Intelligence Technologies in Agricultural Pest and Disease Control

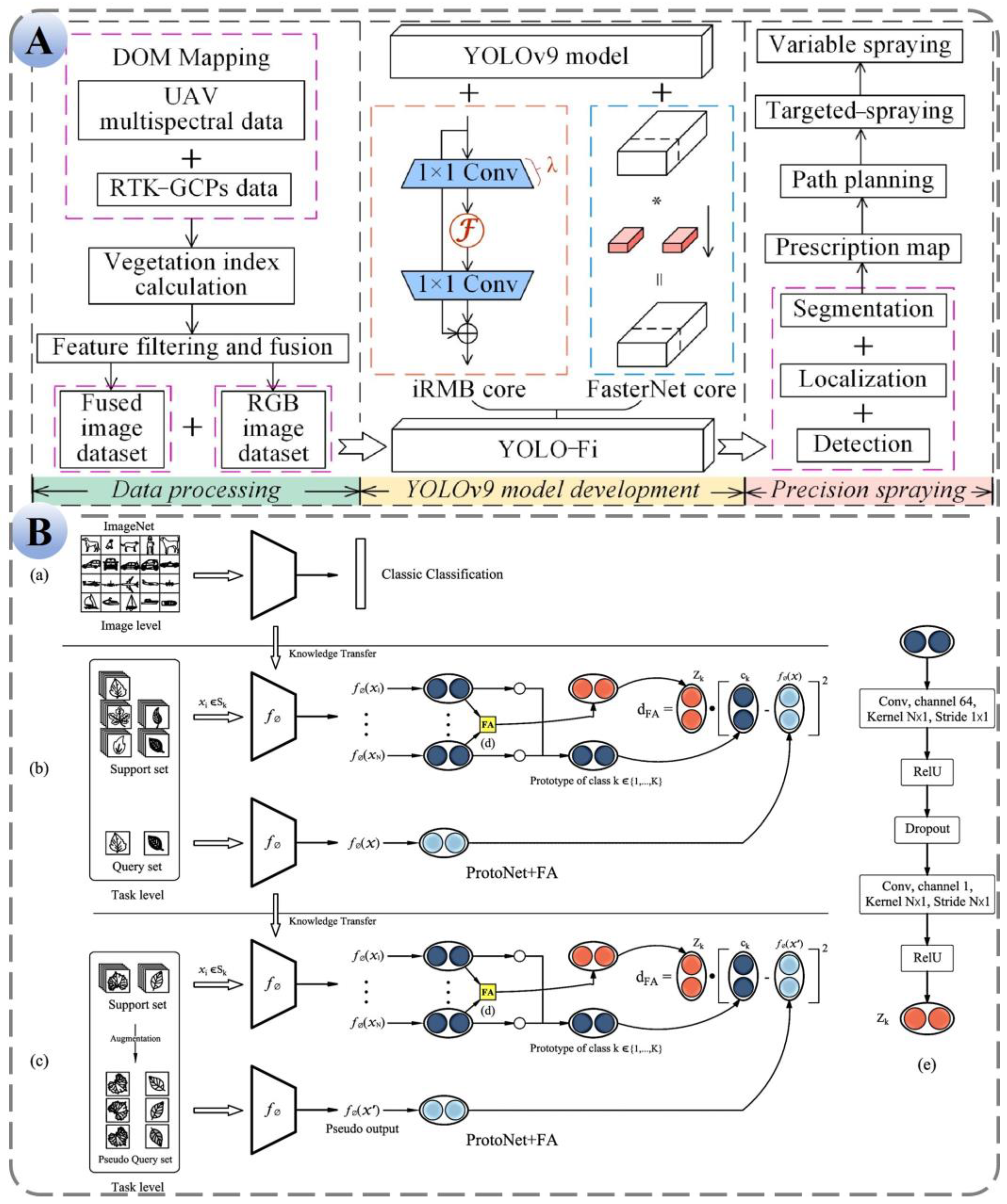

3.1. Precision Application and Intelligent Spraying Equipment

3.2. Smart Early-Warning and Pest Control Models

3.3. AI-Assisted Biological Control and Ecological Regulation

3.4. Green Intelligent Control Strategies for Disease-Resistant Varieties

4. Conclusions and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CNN | convolutional neural network |
| RNN | recurrent neural network |
| CNN-LSTM | convolutional neural network–long short-term memory network model |
| SE-ResNet50 | squeeze-and-excitation residual network 50-layer model |
| SugarcaneGAN | sugarcane generative adversarial network |
| MobileNet-V2 | MobileNet version 2 |
| GNN | graph neural network |
| TRL-GAN | transformer-reinforced learning generative adversarial network |
| DDMA-YOLO | dual-dimensional mixed attention YOLO |
| DTL-SE-ResNet50 | dual transfer learning squeeze-and-excitation residual network 50-layer model |

References

- Anders, M.; Grass, I.; Linden, V.M.; Taylor, P.J.; Westphal, C. Smart orchard design improves crop pollination. J. Appl. Ecol. 2023, 60, 624–637. [Google Scholar] [CrossRef]

- Jiao, L.; Dong, S.; Zhang, S.; Xie, C.; Wang, H. AF-RCNN: An anchor-free convolutional neural network for multi-categories agricultural pest detection. Comput. Electron. Agric. 2020, 174, 105522. [Google Scholar] [CrossRef]

- Zhang, Z.; Lu, Y.; Yang, M.; Wang, G.; Zhao, Y.; Hu, Y. Optimal training strategy for high-performance detection model of multi-cultivar tea shoots based on deep learning methods. Sci. Hortic. 2024, 328, 112949. [Google Scholar] [CrossRef]

- Zhou, X.; Sun, J.; Tian, Y.; Lu, B.; Hang, Y.; Chen, Q. Hyperspectral technique combined with deep learning algorithm for detection of compound heavy metals in lettuce. Food Chem. 2020, 321, 126503. [Google Scholar] [CrossRef]

- Fu, X.; Ma, Q.; Yang, F.; Zhang, C.; Zhao, X.; Chang, F.; Han, L. Crop pest image recognition based on the improved ViT method. Inf. Process. Agric. 2024, 11, 249–259. [Google Scholar] [CrossRef]

- Chen, X.; Yang, B.; Liu, Y.; Feng, Z.; Lyu, J.; Luo, J.; Wu, J.; Yao, Q.; Liu, S. Intelligent survey method of rice diseases and pests using AR glasses and image-text multimodal fusion model. Comput. Electron. Agric. 2025, 237, 110574. [Google Scholar] [CrossRef]

- Kim, D.; Zarei, M.; Lee, S.; Lee, H.; Lee, G.; Lee, S.G. Wearable Standalone Sensing Systems for Smart Agriculture. Adv. Sci. 2025, 12, 2414748. [Google Scholar] [CrossRef]

- Zeng, T.; Wang, Y.; Yang, Y.; Liang, Q.; Fang, J.; Li, Y.; Zhang, H.; Fu, W.; Wang, J.; Zhang, X. Early detection of rubber tree powdery mildew using UAV-based hyperspectral imagery and deep learning. Comput. Electron. Agric. 2024, 220, 108909. [Google Scholar] [CrossRef]

- Yuan, L.; Yan, P.; Han, W.; Huang, Y.; Wang, B.; Zhang, J.; Zhang, H.; Bao, Z. Detection of anthracnose in tea plants based on hyperspectral imaging. Comput. Electron. Agric. 2019, 167, 105039. [Google Scholar] [CrossRef]

- Lu, Y.; Yi, S.; Zeng, N.; Liu, Y.; Zhang, Y. Identification of rice diseases using deep convolutional neural networks. Neural Comput. 2017, 267, 378–384. [Google Scholar] [CrossRef]

- Chen, Z.; Lin, H.; Bai, D.; Qian, J.; Zhou, H.; Gao, Y. PWDViTNet: A lightweight early pine wilt disease detection model based on the fusion of ViT and CNN. Comput. Electron. Agric. 2025, 230, 109910. [Google Scholar] [CrossRef]

- Zhang, Y.; Hao, X.; Li, F.; Wang, Z.; Li, D.; Li, M.; Mao, R. Unsupervised domain adaptation semantic segmentation method for wheat disease detection based on UAV multispectral images. Comput. Electron. Agric. 2025, 236, 110473. [Google Scholar] [CrossRef]

- Ma, J.; Du, K.; Zheng, F.; Zhang, L.; Gong, Z.; Sun, Z. A recognition method for cucumber diseases using leaf symptom images based on deep convolutional neural network. Comput. Electron. Agric. 2018, 154, 18–24. [Google Scholar] [CrossRef]

- Zhang, S.; Zhang, S.; Zhang, C.; Wang, X.; Shi, Y. Cucumber leaf disease identification with global pooling dilated convolu-tional neural network. Comput. Electron. Agric. 2019, 162, 422–430. [Google Scholar] [CrossRef]

- Tiwari, S.; Gehlot, A.; Singh, R.; Twala, B.; Priyadarshi, N. Design of an iterative method for disease prediction in finger millet leaves using graph networks, dyna networks, autoencoders, and recurrent neural networks. Results Eng. 2024, 24, 103301. [Google Scholar] [CrossRef]

- Li, G.; Jiao, L.; Chen, P.; Liu, K.; Wang, R.; Dong, S.; Kang, C. Spatial convolutional self-attention-based transformer module for strawberry disease identification under complex background. Comput. Electron. Agric. 2023, 212, 108121. [Google Scholar] [CrossRef]

- Li, X.; Chen, X.; Yang, J.; Li, S. Transformer helps identify kiwifruit diseases in complex natural environments. Comput. Electron. Agric. 2022, 200, 107258. [Google Scholar] [CrossRef]

- Xiao, D.; Zeng, R.; Liu, Y.; Huang, Y.; Liu, J.; Feng, J.; Zhang, X. Citrus greening disease recognition algorithm based on classification network using TRL-GAN. Comput. Electron. Agric. 2022, 200, 107206. [Google Scholar] [CrossRef]

- Bao, W.; Zhu, Z.; Hu, G.; Zhou, X.; Zhang, D.; Yang, X. UAV remote sensing detection of tea leaf blight based on DDMA-YOLO. Comput. Electron. Agric. 2023, 205, 107637. [Google Scholar] [CrossRef]

- Franke, J.; Menz, G. Multi-temporal wheat disease detection by multi-spectral remote sensing. Precis. Agric. 2007, 8, 161–172. [Google Scholar] [CrossRef]

- Deng, J.; Hong, D.; Li, C.; Yao, J.; Yang, Z.; Zhang, Z.; Chanussot, J. RustQNet: Multimodal deep learning for quantitative inversion of wheat stripe rust disease index. Comput. Electron. Agric. 2024, 225, 109245. [Google Scholar] [CrossRef]

- Too, E.C.; Yujian, L.; Njuki, S.; Yingchun, L. A comparative study of fine-tuning deep learning models for plant disease identification. Comput. Electron. Agric. 2019, 161, 272–279. [Google Scholar] [CrossRef]

- Ripper, W.E. Biological control as a supplement to chemical control of insect pests. Nature 1944, 153, 448–452. [Google Scholar] [CrossRef]

- Lamichhane, J.R.; Dürr, C.; Schwanck, A.A.; Robin, M.; Sarthou, J.; Cellier, V.; Messéan, A.; Aubertot, J. Integrated management of damping-off diseases. A review. Agron. Sustain. Dev. 2017, 37, 10. [Google Scholar] [CrossRef]

- Stenberg, J.A.; Sundh, I.; Becher, P.G.; Björkman, C.; Dubey, M.; Egan, P.A.; Friberg, H.; Gil, J.F.; Jensen, D.F.; Jonsson, M. When is it biological control? A framework of definitions, mechanisms, and classifications. J. Pest. Sci. 2021, 94, 665–676. [Google Scholar] [CrossRef]

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Automation in agriculture by machine and deep learning techniques: A review of recent developments. Precis. Agric. 2021, 22, 2053–2091. [Google Scholar] [CrossRef]

- Wang, T.; Zhao, L.; Li, B.; Liu, X.; Xu, W.; Li, J. Recognition and counting of typical apple pests based on deep learning. Ecol. Inform. 2022, 68, 101556. [Google Scholar] [CrossRef]

- Ahanger, T.A.; Bhatia, M.; Alabduljabbar, A.; Albanyan, A. Blockchain-based intelligent equipment assessment in manufacturing industry. J. Intell. Manuf. 2025, 1–19. [Google Scholar] [CrossRef]

- Li, H.; Guo, Y.; Zhao, H.; Wang, Y.; Chow, D. Towards automated greenhouse: A state of the art review on greenhouse monitoring methods and technologies based on internet of things. Comput. Electron. Agric. 2021, 191, 106558. [Google Scholar] [CrossRef]

- Ding, H.; Gao, R.X.; Isaksson, A.J.; Landers, R.G.; Parisini, T.; Yuan, Y. State of AI-based monitoring in smart manufacturing and introduction to focused section. IEEE/ASME Trans. Mechatron. 2020, 25, 2143–2154. [Google Scholar] [CrossRef]

- Liu, J.; Abbas, I.; Noor, R.S. Development of deep learning-based variable rate agrochemical spraying system for targeted weeds control in strawberry crop. Agronomy 2021, 11, 1480. [Google Scholar] [CrossRef]

- Guo, J.; Zhang, K.; Adade, S.Y.S.S.; Lin, J.; Lin, H.; Chen, Q. Tea grading, blending, and matching based on computer vision and deep learning. J. Sci. Food Agric. 2025, 105, 3239–3251. [Google Scholar] [CrossRef]

- Liu, H.; Zhan, Y.; Xia, H.; Mao, Q.; Tan, Y. Self-supervised transformer-based pre-training method using latent semantic masking auto-encoder for pest and disease classification. Comput. Electron. Agric. 2022, 203, 107448. [Google Scholar] [CrossRef]

- Zhang, S.; Wu, J.; Shi, E.; Yu, S.; Gao, Y.; Li, L.C.; Kuo, L.R.; Pomeroy, M.J.; Liang, Z.J. MM-GLCM-CNN: A multi-scale and multi-level based GLCM-CNN for polyp classification. Comput. Med. Imaging Graph. 2023, 108, 102257. [Google Scholar] [CrossRef] [PubMed]

- You, J.; Li, D.; Wang, Z.; Chen, Q.; Ouyang, Q. Prediction and visualization of moisture content in Tencha drying processes by computer vision and deep learning. J. Sci. Food Agric. 2024, 104, 5486–5494. [Google Scholar] [CrossRef] [PubMed]

- Qiu, D.; Guo, T.; Yu, S.; Liu, W.; Li, L.; Sun, Z.; Peng, H.; Hu, D. Classification of apple color and deformity using machine vision combined with cnn. Agriculture 2024, 14, 978. [Google Scholar] [CrossRef]

- Pei, E.; Zhao, Y.; Oveneke, M.C.; Jiang, D.; Sahli, H. A Bayesian filtering framework for continuous affect recognition from facial images. IEEE Trans. Multimed. 2022, 25, 3709–3722. [Google Scholar] [CrossRef]

- Xu, C.; Sui, X.; Liu, J.; Fei, Y.; Wang, L.; Chen, Q. Transformer in optronic neural networks for image classification. Opt. Laser Technol. 2023, 165, 109627. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90. [Google Scholar] [CrossRef]

- Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018, 145, 311–318. [Google Scholar] [CrossRef]

- Zhu, H.; Wang, D.; Wei, Y.; Zhang, X.; Li, L. Combining Transfer Learning and Ensemble Algorithms for Improved Citrus Leaf Disease Classification. Agriculture 2024, 14, 1549. [Google Scholar] [CrossRef]

- Okedi, T.I.; Fisher, A.C. Time series analysis and long short-term memory (LSTM) network prediction of BPV current density. Energ. Environ. Sci. 2021, 14, 2408–2418. [Google Scholar] [CrossRef]

- Chen, X.; Hassan, M.M.; Yu, J.; Zhu, A.; Han, Z.; He, P.; Chen, Q.; Li, H.; Ouyang, Q. Time series prediction of insect pests in tea gardens. J. Sci. Food Agric. 2024, 104, 5614–5624. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Zhang, L.; Wang, X.; Liang, B. Forecasting greenhouse air and soil temperatures: A multi-step time series approach employing attention-based LSTM network. Comput. Electron. Agric. 2024, 217, 108602. [Google Scholar] [CrossRef]

- Ji, W.; Zhai, K.; Xu, B.; Wu, J. Green Apple Detection Method Based on Multidimensional Feature Extraction Network Model and Transformer Module. J. Food Protect. 2025, 88, 100397. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. Impact of dataset size and variety on the effectiveness of deep learning and transfer learning for plant disease classification. Comput. Electron. Agric. 2018, 153, 46–53. [Google Scholar] [CrossRef]

- Hu, J.; Ren, Z.; He, J.; Wang, Y.; Wu, Y.; He, P. Design of an intelligent vibration screening system for armyworm pupae based on image recognition. Comput. Electron. Agric. 2021, 187, 106189. [Google Scholar] [CrossRef]

- Sun, J.; Cao, Y.; Zhou, X.; Wu, M.; Sun, Y.; Hu, Y. Detection for lead pollution level of lettuce leaves based on deep belief network combined with hyperspectral image technology. J. Food Saf. 2021, 41, e12866. [Google Scholar] [CrossRef]

- Yang, C.; Guo, Z.; Fernandes Barbin, D.; Dai, Z.; Watson, N.; Povey, M.; Zou, X. Hyperspectral Imaging and Deep Learning for Quality and Safety Inspection of Fruits and Vegetables: A Review. J. Agric. Food Chem. 2025, 73, 10019–10035. [Google Scholar] [CrossRef]

- Zhang, Z.; Yang, M.; Pan, Q.; Jin, X.; Wang, G.; Zhao, Y.; Hu, Y. Identification of tea plant cultivars based on canopy images using deep learning methods. Sci. Hortic. 2025, 339, 113908. [Google Scholar]

- Xu, M.; Sun, J.; Zhou, X.; Tang, N.; Shen, J.; Wu, X. Research on nondestructive identification of grape varieties based on EEMD-DWT and hyperspectral image. J. Food Sci. 2021, 86, 2011–2023. [Google Scholar] [CrossRef] [PubMed]

- Wan, L.; Li, H.; Li, C.; Wang, A.; Yang, Y.; Wang, P. Hyperspectral sensing of plant diseases: Principle and methods. Agronomy 2022, 12, 1451. [Google Scholar] [CrossRef]

- Jonak, M.; Mucha, J.; Jezek, S.; Kovac, D.; Cziria, K. SPAGRI-AI: Smart precision agriculture dataset of aerial images at different heights for crop and weed detection using super-resolution. Agric. Syst. 2024, 216, 103876. [Google Scholar] [CrossRef]

- Zuo, X.; Chu, J.; Shen, J.; Sun, J. Multi-granularity feature aggregation with self-attention and spatial reasoning for fine-grained crop disease classification. Agriculture 2022, 12, 1499. [Google Scholar] [CrossRef]

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712. [Google Scholar] [CrossRef]

- Yang, N.; Qian, Y.; EL Mesery, H.S.; Zhang, R.; Wang, A.; Tang, J. Rapid detection of rice disease using microscopy image identification based on the synergistic judgment of texture and shape features and decision tree–confusion matrix method. J. Sci. Food Agric. 2019, 99, 6589–6600. [Google Scholar] [CrossRef]

- Yang, N.; Yuan, M.; Wang, P.; Zhang, R.; Sun, J.; Mao, H. Tea diseases detection based on fast infrared thermal image processing technology. J. Sci. Food Agric. 2019, 99, 3459–3466. [Google Scholar] [CrossRef]

- Wang, Y.; Mao, H.; Zhang, X.; Liu, Y.; Du, X. A rapid detection method for tomato gray mold spores in greenhouse based on microfluidic chip enrichment and lens-less diffraction image processing. Foods 2021, 10, 3011. [Google Scholar] [CrossRef]

- Yang, N.; Yu, J.; Wang, A.; Tang, J.; Zhang, R.; Xie, L.; Shu, F.; Kwabena, O.P. A rapid rice blast detection and identification method based on crop disease spores’ diffraction fingerprint texture. J. Sci. Food Agric. 2020, 100, 3608–3621. [Google Scholar] [CrossRef]

- Lu, B.; Jun, S.; Ning, Y.; Xiaohong, W.; Xin, Z. Identification of tea white star disease and anthrax based on hyperspectral image information. J. Food Process Eng. 2021, 44, e13584. [Google Scholar] [CrossRef]

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A deep learning-based approach for automated yellow rust disease detection from high-resolution hyperspectral UAV images. Remote Sens. 2019, 11, 1554. [Google Scholar] [CrossRef]

- Memon, M.S.; Chen, S.; Shen, B.; Liang, R.; Tang, Z.; Wang, S.; Zhou, W.; Memon, N. Automatic visual recognition, detection and classification of weeds in cotton fields based on machine vision. Crop Prot. 2025, 187, 106966. [Google Scholar] [CrossRef]

- Zhao, X.; Li, K.; Li, Y.; Ma, J.; Zhang, L. Identification method of vegetable diseases based on transfer learning and attention mechanism. Comput. Electron. Agric. 2022, 193, 106703. [Google Scholar] [CrossRef]

- Zhu, W.; Sun, J.; Wang, S.; Shen, J.; Yang, K.; Zhou, X. Identifying field crop diseases using transformer-embedded convolutional neural network. Agriculture 2022, 12, 1083. [Google Scholar] [CrossRef]

- Cong, S.; Sun, J.; Shi, L.; Dai, C.; Wu, X.; Zhang, B.; Yao, K. Hyperspectral imaging combined with a universal hybrid deep network for identifying early chilling injury in kiwifruit across varieties. Postharvest Biol. Technol. 2025, 230, 113752. [Google Scholar] [CrossRef]

- Zhao, S.; Peng, Y.; Liu, J.; Wu, S. Tomato leaf disease diagnosis based on improved convolution neural network by attention module. Agriculture 2021, 11, 651. [Google Scholar] [CrossRef]

- Awais, M.; Li, W.; Hussain, S.; Cheema, M.J.M.; Li, W.; Song, R.; Liu, C. Comparative evaluation of land surface temperature images from unmanned aerial vehicle and satellite observation for agricultural areas using in situ data. Agriculture 2022, 12, 184. [Google Scholar] [CrossRef]

- Yuan, L.; Yu, Q.; Xiang, L.; Zeng, F.; Dong, J.; Xu, O.; Zhang, J. Integrating UAV and high-resolution satellite remote sensing for multi-scale rice disease monitoring. Comput. Electron. Agric. 2025, 234, 110287. [Google Scholar] [CrossRef]

- Wei, J.; Dong, W.; Liu, S.; Song, L.; Zhou, J.; Xu, Z.; Wang, Z.; Xu, T.; He, X.; Sun, J. Mapping super high resolution evapotranspiration in oasis-desert areas using UAV multi-sensor data. Agric. Water Manag. 2023, 287, 108466. [Google Scholar] [CrossRef]

- Lu, Y.; Young, S. A survey of public datasets for computer vision tasks in precision agriculture. Comput. Electron. Agric. 2020, 178, 105760. [Google Scholar] [CrossRef]

- Feng, G.; Wang, C.; Wang, A.; Gao, Y.; Zhou, Y.; Huang, S.; Luo, B. Segmentation of Wheat Lodging Areas from UAV Imagery Using an Ultra-Lightweight Network. Agriculture 2024, 14, 244. [Google Scholar] [CrossRef]

- Zhang, L.; Song, X.; Niu, Y.; Zhang, H.; Wang, A.; Zhu, Y.; Zhu, X.; Chen, L.; Zhu, Q. Estimating Winter Wheat Plant Nitrogen Content by Combining Spectral and Texture Features Based on a Low-Cost UAV RGB System Throughout the Growing Season. Agriculture 2024, 14, 456. [Google Scholar] [CrossRef]

- Zhu, H.; Lin, C.; Liu, G.; Wang, D.; Qin, S.; Li, A.; Xu, J.; He, Y. Intelligent agriculture: Deep learning in UAV-based remote sensing imagery for crop diseases and pests detection. Front. Plant Sci. 2024, 15, 1435016. [Google Scholar] [CrossRef]

- Pei, H.; Sun, Y.; Huang, H.; Zhang, W.; Sheng, J.; Zhang, Z. Weed detection in maize fields by UAV images based on crop row preprocessing and improved YOLOv4. Agriculture 2022, 12, 975. [Google Scholar] [CrossRef]

- Qin, W.; Qiu, B.; Xue, X.; Chen, C.; Xu, Z.; Zhou, Q. Droplet deposition and control effect of insecticides sprayed with an unmanned aerial vehicle against plant hoppers. Crop Prot. 2016, 85, 79–88. [Google Scholar] [CrossRef]

- Shi, Q.; Liu, D.; Mao, H.; Shen, B.; Li, M. Wind-induced response of rice under the action of the downwash flow field of a multi-rotor UAV. Biosyst. Eng. 2021, 203, 60–69. [Google Scholar] [CrossRef]

- Ahmed, S.; Qiu, B.; Ahmad, F.; Kong, C.; Xin, H. A state-of-the-art analysis of obstacle avoidance methods from the perspective of an agricultural sprayer UAV’s operation scenario. Agronomy 2021, 11, 1069. [Google Scholar] [CrossRef]

- Niu, Y.; Han, W.; Zhang, H.; Zhang, L.; Chen, H. Estimating maize plant height using a crop surface model constructed from UAV RGB images. Biosyst. Eng. 2024, 241, 56–67. [Google Scholar] [CrossRef]

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. 2018, 146, 124–136. [Google Scholar]

- Zhu, W.; Feng, Z.; Dai, S.; Zhang, P.; Wei, X. Using UAV multispectral remote sensing with appropriate spatial resolution and machine learning to monitor wheat scab. Agriculture 2022, 12, 1785. [Google Scholar] [CrossRef]

- Gu, C.; Cheng, T.; Cai, N.; Li, W.; Zhang, G.; Zhou, X.; Zhang, D. Assessing narrow brown leaf spot severity and fungicide efficacy in rice using low altitude UAV imaging. Ecol. Inform. 2023, 77, 102208. [Google Scholar] [CrossRef]

- Zhou, H.; Huang, F.; Lou, W.; Gu, Q.; Ye, Z.; Hu, H.; Zhang, X. Yield prediction through UAV-based multispectral imaging and deep learning in rice breeding trials. Agric. Syst. 2025, 223, 104214. [Google Scholar] [CrossRef]

- Wang, A.; Song, Z.; Xie, Y.; Hu, J.; Zhang, L.; Zhu, Q. Detection of Rice Leaf SPAD and Blast Disease Using Integrated Aerial and Ground Multiscale Canopy Reflectance Spectroscopy. Agriculture 2024, 14, 1471. [Google Scholar] [CrossRef]

- Xu, S.; Xu, X.; Zhu, Q.; Meng, Y.; Yang, G.; Feng, H.; Yang, M.; Zhu, Q.; Xue, H.; Wang, B. Monitoring leaf nitrogen content in rice based on information fusion of multi-sensor imagery from UAV. Precis. Agric. 2023, 24, 2327–2349. [Google Scholar] [CrossRef]

- Wei, L.; Yang, H.; Niu, Y.; Zhang, Y.; Xu, L.; Chai, X. Wheat biomass, yield, and straw-grain ratio estimation from multi-temporal UAV-based RGB and multispectral images. Biosyst. Eng. 2023, 234, 187–205. [Google Scholar] [CrossRef]

- Hu, G.; Ye, R.; Wan, M.; Bao, W.; Zhang, Y.; Zeng, W. Detection of tea leaf blight in low-resolution UAV remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5601218. [Google Scholar] [CrossRef]

- Jamali, A.; Lu, B.; Gerbrandt, E.M.; Teasdale, C.; Burlakoti, R.R.; Sabaratnam, S.; McIntyre, J.; Yang, L.; Schmidt, M.; McCaffrey, D. High-resolution UAV-based blueberry scorch virus mapping utilizing a deep vision transformer algorithm. Comput. Electron. Agric. 2025, 229, 109726. [Google Scholar] [CrossRef]

- Berger, K.; Machwitz, M.; Kycko, M.; Kefauver, S.C.; Van Wittenberghe, S.; Gerhards, M.; Verrelst, J.; Atzberger, C.; Van der Tol, C.; Damm, A. Multi-sensor spectral synergies for crop stress detection and monitoring in the optical domain: A review. Remote Sens. Environ. 2022, 280, 113198. [Google Scholar] [CrossRef]

- Chang, S.; Chi, Z.; Chen, H.; Hu, T.; Gao, C.; Meng, J.; Han, L. Development of a Multiscale XGBoost-based Model for Enhanced Detection of Potato Late Blight Using Sentinel-2, UAV, and Ground Data. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4415014. [Google Scholar] [CrossRef]

- Meng, L.; Liu, H.; Zhang, X.; Ren, C.; Ustin, S.; Qiu, Z.; Xu, M.; Guo, D. Assessment of the effectiveness of spatiotemporal fusion of multi-source satellite images for cotton yield estimation. Comput. Electron. Agric. 2019, 162, 44–52. [Google Scholar] [CrossRef]

- Zhang, S.; He, L.; Duan, J.; Zang, S.; Yang, T.; Schulthess, U.; Guo, T.; Wang, C.; Feng, W. Aboveground wheat biomass estimation from a low-altitude UAV platform based on multimodal remote sensing data fusion with the introduction of terrain factors. Precis. Agric. 2024, 25, 119–145. [Google Scholar] [CrossRef]

- Sawant, S.; Durbha, S.S.; Jagarlapudi, A. Interoperable agro-meteorological observation and analysis platform for precision agriculture: A case study in citrus crop water requirement estimation. Comput. Electron. Agric. 2017, 138, 175–187. [Google Scholar] [CrossRef]

- Luo, L.; Ma, S.; Li, L.; Liu, X.; Zhang, J.; Li, X.; Liu, D.; You, T. Monitoring zearalenone in corn flour utilizing novel self-enhanced electrochemiluminescence aptasensor based on NGQDs-NH2-Ru@ SiO2 luminophore. Food Chem. 2019, 292, 98–105. [Google Scholar] [CrossRef]

- Zhou, J.; Zou, X.; Song, S.; Chen, G. Quantum dots applied to methodology on detection of pesticide and veterinary drug residues. J. Agric. Food Chem. 2018, 66, 1307–1319. [Google Scholar] [CrossRef] [PubMed]

- Guzman, B.G.; Talavante, J.; Fonseca, D.F.; Mir, M.S.; Giustiniano, D.; Obraczka, K.; Loik, M.E.; Childress, S.; Wong, D.G. Toward sustainable greenhouses using battery-free LiFi-enabled Internet of Things. IEEE Commun. Mag. 2023, 61, 129–135. [Google Scholar] [CrossRef]

- Zheng, P.; Solomon Adade, S.Y.; Rong, Y.; Zhao, S.; Han, Z.; Gong, Y.; Chen, X.; Yu, J.; Huang, C.; Lin, H. Online system for monitoring the degree of fermentation of oolong tea using integrated visible–near-infrared spectroscopy and image-processing technologies. Foods 2024, 13, 1708. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Cai, J.; Lin, H.; Ouyang, Q.; Liu, Z. Deep learning-assisted self-cleaning cellulose colorimetric sensor array for monitoring black tea withering dynamics. Food Chem. 2025, 487, 144727. [Google Scholar] [CrossRef]

- Gutiérrez, S.; Hernández, I.; Ceballos, S.; Barrio, I.; Díez-Navajas, A.M.; Tardaguila, J. Deep learning for the differentiation of downy mildew and spider mite in grapevine under field conditions. Comput. Electron. Agric. 2021, 182, 105991. [Google Scholar] [CrossRef]

- Li, X.H.; Li, M.Z.; Li, J.Y.; Gao, Y.Y.; Liu, C.R.; Hao, G.F. Wearable sensor supports in-situ and continuous monitoring of plant health in precision agriculture era. Plant Biotechnol. J. 2024, 22, 1516–1535. [Google Scholar] [CrossRef]

- Guo, Z.; Zhang, Y.; Xiao, H.; Jayan, H.; Majeed, U.; Ashiagbor, K.; Jiang, S.; Zou, X. Multi-sensor fusion and deep learning for batch monitoring and real-time warning of apple spoilage. Food Control 2025, 172, 111174. [Google Scholar] [CrossRef]

- Velásquez, D.; Sánchez, A.; Sarmiento, S.; Toro, M.; Maiza, M.; Sierra, B. A method for detecting coffee leaf rust through wireless sensor networks, remote sensing, and deep learning: Case study of the Caturra variety in Colombia. Appl. Sci. 2020, 10, 697. [Google Scholar] [CrossRef]

- Deng, J.; Ni, L.; Bai, X.; Jiang, H.; Xu, L. Simultaneous analysis of mildew degree and aflatoxin B1 of wheat by a multi-task deep learning strategy based on microwave detection technology. LWT 2023, 184, 115047. [Google Scholar] [CrossRef]

- Hu, Y.; Sheng, W.; Adade, S.Y.S.; Wang, J.; Li, H.; Chen, Q. Comparison of machine learning and deep learning models for detecting quality components of vine tea using smartphone-based portable near-infrared device. Food Control 2025, 174, 111244. [Google Scholar] [CrossRef]

- Xing, S.; Lee, H.J. Crop pests and diseases recognition using DANet with TLDP. Comput. Electron. Agric. 2022, 199, 107144. [Google Scholar] [CrossRef]

- Santos, C.F.G.D.; Arrais, R.R.; Silva, J.V.S.D.; Silva, M.H.M.D.; Neto, W.B.G.D.; Lopes, L.T.; Bileki, G.A.; Lima, I.O.; Rondon, L.B.; Souza, B.M.D. ISP Meets Deep Learning: A Survey on Deep Learning Methods for Image Signal Processing. ACM Comput. Surv. 2025, 57, 127. [Google Scholar] [CrossRef]

- Guo, Z.; Wu, X.; Jayan, H.; Yin, L.; Xue, S.; El-Seedi, H.R.; Zou, X. Recent developments and applications of surface enhanced Raman scattering spectroscopy in safety detection of fruits and vegetables. Food Chem. 2024, 434, 137469. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Sun, J.; Wu, Z.; Jia, Y.; Dai, C. Application of Non-Destructive Technology in Plant Disease Detection. Agriculture 2025, 15, 1670. [Google Scholar] [CrossRef]

- Cunha, C.R.; Peres, E.; Morais, R.; Oliveira, A.A.; Matos, S.G.; Fernandes, M.A.; Ferreira, P.J.S.; Reis, M.J.C.D. The use of mobile devices with multi-tag technologies for an overall contextualized vineyard management. Comput. Electron. Agric. 2010, 73, 154–164. [Google Scholar] [CrossRef]

- Yang, N.; Chang, K.; Dong, S.; Tang, J.; Wang, A.; Huang, R.; Jia, Y. Rapid image detection and recognition of rice false smut based on mobile smart devices with anti-light features from cloud database. Biosyst. Eng. 2022, 218, 229–244. [Google Scholar] [CrossRef]

- Li, S.; Yuan, Z.; Peng, R.; Leybourne, D.; Xue, Q.; Li, Y.; Yang, P. An effective farmer-centred mobile intelligence solution using lightweight deep learning for integrated wheat pest management. J. Ind. Inf. Integr. 2024, 42, 100705. [Google Scholar] [CrossRef]

- Peng, Y.; Zhao, S.; Liu, J. Fused-deep-features based grape leaf disease diagnosis. Agronomy 2021, 11, 2234. [Google Scholar] [CrossRef]

- Elsherbiny, O.; Gao, J.; Guo, Y.; Tunio, M.H.; Mosha, A.H. Fusion of the deep networks for rapid detection of branch-infected aeroponically cultivated mulberries using multimodal traits. Int. J. Agric. Biol. Eng. 2025, 18, 75–88. [Google Scholar] [CrossRef]

- Zhu, N.; Liu, X.; Liu, Z.; Hu, K.; Wang, Y.; Tan, J.; Huang, M.; Zhu, Q.; Ji, X.; Jiang, Y. Deep learning for smart agriculture: Concepts, tools, applications, and opportunities. Int. J. Agric. Biol. Eng. 2018, 11, 32–44. [Google Scholar] [CrossRef]

- Chen, L.; Wu, Y.; Yang, N.; Sun, Z. Advances in Hyperspectral and Diffraction Imaging for Agricultural Applications. Agriculture 2025, 15, 1775. [Google Scholar] [CrossRef]

- Liu, B.; Tan, C.; Li, S.; He, J.; Wang, H. A data augmentation method based on generative adversarial networks for grape leaf disease identification. IEEE Access 2020, 8, 102188–102198. [Google Scholar] [CrossRef]

- Li, R.; Liu, J.; Shi, B.; Zhao, H.; Li, Y.; Zheng, X.; Peng, C.; Lv, C. High-Performance Grape Disease Detection Method Using Multimodal Data and Parallel Activation Functions. Plants 2024, 13, 2720. [Google Scholar] [CrossRef] [PubMed]

- Zhou, J.; Li, J.; Wang, C.; Wu, H.; Zhao, C.; Teng, G. Crop disease identification and interpretation method based on multimodal deep learning. Comput. Electron. Agric. 2021, 189, 106408. [Google Scholar] [CrossRef]

- Li, X.; Li, X.; Zhang, M.; Dong, Q.; Zhang, G.; Wang, Z.; Wei, P. SugarcaneGAN: A novel dataset generating approach for sugarcane leaf diseases based on lightweight hybrid CNN-Transformer network. Comput. Electron. Agric. 2024, 219, 108762. [Google Scholar] [CrossRef]

- Wang, Y.; Li, T.; Chen, T.; Zhang, X.; Taha, M.F.; Yang, N.; Mao, H.; Shi, Q. Cucumber downy mildew disease prediction using a CNN-LSTM approach. Agriculture 2024, 14, 1155. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, D. Intelligent pest forecasting with meteorological data: An explainable deep learning approach. Expert Syst. Appl. 2024, 252, 124137. [Google Scholar] [CrossRef]

- Zhang, L.; Zhao, Y.; Zhou, C.; Zhang, J.; Yan, Y.; Chen, T.; Lv, C. JuDifformer: Multimodal fusion model with transformer and diffusion for jujube disease detection. Comput. Electron. Agric. 2025, 232, 110008. [Google Scholar] [CrossRef]

- Chen, T.; Miao, Z.; Li, W.; Pan, Q. A learning-based memetic algorithm for a cooperative task allocation problem of multiple unmanned aerial vehicles in smart agriculture. Swarm Evol. Comput. 2024, 91, 101694. [Google Scholar] [CrossRef]

- Abbas, I.; Liu, J.; Amin, M.; Tariq, A.; Tunio, M.H. Strawberry fungal leaf scorch disease identification in real-time strawberry field using deep learning architectures. Plants 2021, 10, 2643. [Google Scholar] [CrossRef]

- Peng, H.; Li, Z.; Zhou, Z.; Shao, Y. Weed detection in paddy field using an improved RetinaNet network. Comput. Electron. Agric. 2022, 199, 107179. [Google Scholar] [CrossRef]

- Yan, W.; Li, L.; Song, J.; Hu, P.; Xu, G.; Wu, Q.; Zhang, R.; Chen, L. Deep Learning-Assisted Measurement of Liquid Sheet Structure in the Atomization of Hydraulic Nozzle Spraying. Agronomy 2025, 15, 409. [Google Scholar] [CrossRef]

- Bongiovanni, R.; Lowenberg-DeBoer, J. Precision agriculture and sustainability. Precis. Agric. 2004, 5, 359–387. [Google Scholar] [CrossRef]

- Wang, A.; Li, W.; Men, X.; Gao, B.; Xu, Y.; Wei, X. Vegetation detection based on spectral information and development of a low-cost vegetation sensor for selective spraying. Pest. Manag. Sci. 2022, 78, 2467–2476. [Google Scholar] [CrossRef] [PubMed]

- Wei, P.; Yan, X.; Yan, W.; Sun, L.; Xu, J.; Yuan, H. Precise extraction of targeted apple tree canopy with YOLO-Fi model for advanced UAV spraying plans. Comput. Electron. Agric. 2024, 226, 109425.

- Zhao, J.; Fan, S.; Zhang, B.; Wang, A.; Zhang, L.; Zhu, Q. Research Status and Development Trends of Deep Reinforcement Learning in the Intelligent Transformation of Agricultural Machinery. Agriculture 2025, 15, 1223.

- Upadhyay, A.; Sunil, G.C.; Zhang, Y.; Koparan, C.; Sun, X. Development and evaluation of a machine vision and deep learning-based smart sprayer system for site-specific weed management in row crops: An edge computing approach. J. Agric. Food Res. 2024, 18, 101331.

- Wang, Y.; Zhang, Z.; Jia, W.; Ou, M.; Dong, X.; Dai, S. A review of environmental sensing technologies for targeted spraying in orchards. Horticulturae 2025, 11, 551.

- Liu, H.; Du, Z.; Shen, Y.; Du, W.; Zhang, X. Development and evaluation of an intelligent multivariable spraying robot for orchards and nurseries. Comput. Electron. Agric. 2024, 222, 109056.

- Rezaei, M.; Diepeveen, D.; Laga, H.; Jones, M.G.; Sohel, F. Plant disease recognition in a low data scenario using few-shot learning. Comput. Electron. Agric. 2024, 219, 108812.

- Escribà-Gelonch, M.; Liang, S.; van Schalkwyk, P.; Fisk, I.; Long, N.V.D.; Hessel, V. Digital Twins in Agriculture: Orchestration and Applications. J. Agric. Food Chem. 2024, 72, 10737–10752.

- Zhang, R.; Zhu, H.; Chang, Q.; Mao, Q. A Comprehensive Review of Digital Twins Technology in Agriculture. Agriculture 2025, 15, 903.

- Mekala, M.S.; Viswanathan, P. CLAY-MIST: IoT-cloud enabled CMM index for smart agriculture monitoring system. Measurement 2019, 134, 236–244.

- SS, V.C.; Hareendran, A.; Albaaji, G.F. Precision farming for sustainability: An agricultural intelligence model. Comput. Electron. Agric. 2024, 226, 109386.

- Yin, L.; Jayan, H.; Cai, J.; El-Seedi, H.R.; Guo, Z.; Zou, X. Spoilage monitoring and early warning for apples in storage using gas sensors and chemometrics. Foods 2023, 12, 2968.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Barbedo, J.G.A. Plant disease identification from individual lesions and spots using deep learning. Biosyst. Eng. 2019, 180, 96–107.

- Chen, T.; Liu, C.; Meng, L.; Lu, D.; Chen, B.; Cheng, Q. Early warning of rice mildew based on gas chromatography-ion mobility spectrometry technology and chemometrics. J. Food Meas. Charact. 2021, 15, 1939–1948.

- Baltrušaitis, T.; Ahuja, C.; Morency, L. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443.

- Bi, H.; Li, T.; Xin, X.; Shi, H.; Li, L.; Zong, S. Non-destructive estimation of wood-boring pest density in living trees using X-ray imaging and edge computing techniques. Comput. Electron. Agric. 2025, 233, 110183.

- Chen, J.; Zhang, D.; Zeb, A.; Nanehkaran, Y.A. Identification of rice plant diseases using lightweight attention networks. Expert Syst. Appl. 2021, 169, 114514.

- Bonser, S.P.; Gabriel, V.; Zeng, K.; Moles, A.T. The biocontrol paradox. Trends Ecol. Evol. 2025, 40, 586–592.

- Mahunu, G.K.; Zhang, H.; Apaliya, M.T.; Yang, Q.; Zhang, X.; Zhao, L. Bamboo leaf flavonoid enhances the control effect of Pichia caribbica against Penicillium expansum growth and patulin accumulation in apples. Postharvest Biol. Technol. 2018, 141, 1–7.

- Godana, E.A.; Yang, Q.; Wang, K.; Zhang, H.; Zhang, X.; Zhao, L.; Abdelhai, M.H.; Legrand, N.N.G. Bio-control activity of Pichia anomala supplemented with chitosan against Penicillium expansum in postharvest grapes and its possible inhibition mechanism. LWT 2020, 124, 109188.

- Zhang, H.; Serwah Boateng, N.A.; Ngolong Ngea, G.L.; Shi, Y.; Lin, H.; Yang, Q.; Wang, K.; Zhang, X.; Zhao, L.; Droby, S. Unravelling the fruit microbiome: The key for developing effective biological control strategies for postharvest diseases. Compr. Rev. Food Sci. Food Saf. 2021, 20, 4906–4930.

- Kang, Y.; Bai, D.; Tapia, L.; Bateman, H. Dynamical effects of biocontrol on the ecosystem: Benefits or harm? Appl. Math. Model. 2017, 51, 361–385.

- Yang, Q.; Solairaj, D.; Apaliya, M.T.; Abdelhai, M.; Zhu, M.; Yan, Y.; Zhang, H. Protein expression profile and transcriptome characterization of Penicillium expansum induced by Meyerozyma guilliermondii. J. Food Qual. 2020, 2020, 8056767.

- Xu, M.; Yang, Q.; Boateng, N.A.S.; Ahima, J.; Dou, Y.; Zhang, H. Ultrastructure observation and transcriptome analysis of Penicillium expansum invasion in postharvest pears. Postharvest Biol. Technol. 2020, 165, 111198.

- Wang, K.; Ngea, G.L.N.; Godana, E.A.; Shi, Y.; Lanhuang, B.; Zhang, X.; Zhao, L.; Yang, Q.; Wang, S.; Zhang, H. Recent advances in Penicillium expansum infection mechanisms and current methods in controlling P. expansum in postharvest apples. Crit. Rev. Food Sci. Nutr. 2023, 63, 2598–2611.

- Zhao, L.; Zhou, Y.; Liang, L.; Godana, E.A.; Zhang, X.; Yang, X.; Wu, M.; Song, Y.; Zhang, H. Changes in quality and microbiome composition of strawberry fruits following postharvest application of Debaryomyces hansenii, a yeast biocontrol agent. Postharvest Biol. Technol. 2023, 202, 112379.

- Godana, E.A.; Yang, Q.; Zhang, X.; Zhao, L.; Wang, K.; Dhanasekaran, S.; Mehari, T.G.; Zhang, H. Biotechnological and biocontrol approaches for mitigating postharvest diseases caused by fungal pathogens and their mycotoxins in fruits: A review. J. Agric. Food Chem. 2023, 71, 17584–17596.

- Li, Y.; Wang, L.; Cao, Q.; Gu, X.; Lu, Y.; Luo, Y. Exploring an adaptive management model for “status-optimization-regulation” of mining city in transition: A case study of Huangshi, China. Appl. Geogr. 2024, 172, 103438.

- Su, H.; Gu, M.; Qu, Z.; Wang, Q.; Jin, J.; Lu, P.; Zhang, J.; Cao, P.; Ren, X.; Tao, J. Discovery of antimicrobial peptides from Bacillus genomes against phytopathogens with deep learning models. Chem. Biol. Technol. Agric. 2025, 12, 35.

- Sun, L.; Cai, Z.; Liang, K.; Wang, Y.; Zeng, W.; Yan, X. An intelligent system for high-density small target pest identification and infestation level determination based on an improved YOLOv5 model. Expert Syst. Appl. 2024, 239, 122190.

- Mansourvar, M.; Funk, J.; Petersen, S.D.; Tavakoli, S.; Hoof, J.B.; Corcoles, D.L.; Pittroff, S.M.; Jelsbak, L.; Jensen, N.B.; Ding, L. Automatic classification of fungal-fungal interactions using deep leaning models. Comput. Struct. Biotechnol. J. 2024, 23, 4222–4231.

- Zhang, X.; Zhang, G.; Tian, L.; Huang, L. Ecological regulation network of quality in American ginseng: Insights from macroscopic-mesoscopic-microscopic perspectives. Ind. Crops Prod. 2023, 206, 117617.

- Anand, G.; Koniusz, P.; Kumar, A.; Golding, L.A.; Morgan, M.J.; Moghadam, P. Graph neural networks-enhanced relation prediction for ecotoxicology (GRAPE). J. Hazard. Mater. 2024, 472, 134456.

- Zeng, H.; Wu, Y.; Xu, L.; Dong, J.; Huang, B. Banana defense response against pathogens: Breeding disease-resistant cultivars. Hortic. Plant J. 2024.

- Wang, H.; Cimen, E.; Singh, N.; Buckler, E. Deep learning for plant genomics and crop improvement. Curr. Opin. Plant Biol. 2020, 54, 34–41.

- Xu, M.; Godana, E.A.; Dhanasekaran, S.; Zhang, X.; Yang, Q.; Zhao, L.; Zhang, H. Comparative proteome and transcriptome analyses of the response of postharvest pears to Penicillium expansum infection. Postharvest Biol. Technol. 2023, 196, 112182.

- Crossa, J.; Pérez-Rodríguez, P.; Cuevas, J.; Montesinos-López, O.; Jarquín, D.; De Los Campos, G.; Burgueño, J.; González-Camacho, J.M.; Pérez-Elizalde, S.; Beyene, Y. Genomic selection in plant breeding: Methods, models, and perspectives. Trends Plant Sci. 2017, 22, 961–975.

- Ma, J.; Qin, N.; Cai, B.; Chen, G.; Ding, P.; Zhang, H.; Yang, C.; Huang, L.; Mu, Y.; Tang, H. Identification and validation of a novel major QTL for all-stage stripe rust resistance on 1BL in the winter wheat line 20828. Theor. Appl. Genet. 2019, 132, 1363–1373.

- Sarkar, S.; Ganapathysubramanian, B.; Singh, A.; Fotouhi, F.; Kar, S.; Nagasubramanian, K.; Chowdhary, G.; Das, S.K.; Kantor, G.; Krishnamurthy, A. Cyber-agricultural systems for crop breeding and sustainable production. Trends Plant Sci. 2024, 29, 130–149.

- Ganapathysubramanian, B.; Sarkar, S.; Singh, A.; Singh, A.K. Digital twins for the plant sciences. Trends Plant Sci. 2025, 30, 576–577.

| Model Name | Detection Technology | Disease Type | Research Advantage |
|---|---|---|---|
| Convolutional Neural Network [8] | Hyperspectral Imaging | Powdery Mildew | Early warning, low-cost monitoring |
| TARI/TANI + Unsupervised Classification + Adaptive Thresholding [9] | Hyperspectral Imaging | Tea Anthracnose | Robust against background noise, effective for automated tea disease detection |
| Deep Convolutional Neural Network [10] | Multispectral Images | Rice Diseases | High recognition accuracy |
| CNN + Transformer [11] | Multispectral Images | Pine Wilt Disease | Lightweight implementation for real-time monitoring |
| DATS-ASSFormer [12] | Multispectral Images | Wheat Rust, Wheat Scab, Wheat Yellow Dwarf | Reduces dependency on manual labeling |
| Deep Convolutional Neural Network [13] | RGB Images | Anthracnose, Downy Mildew, Powdery Mildew | High computational efficiency |
| Global Pooling Dilated Convolutional Neural Network [14] | RGB Images | Cucumber Leaf Diseases | Improved feature extraction ability and computational performance |
| Recurrent Neural Network [15] | RGB Images | Finger Millet Leaf Diseases | Applicable to small datasets or changing environments |
| Spatial Convolutional Self-attention Transformer [16] | RGB Images | Strawberry Diseases | Enhanced robustness and accuracy under noisy conditions |
| Transformer [17] | RGB Images | Kiwi Diseases | Strong feature extraction ability |
| TRL-GAN [18] | RGB Images | Citrus Greening | Small sample learning, early detection |
| DDMA-YOLO [19] | UAV Remote Sensing | Leaf Blight Disease | Precise positioning, efficient detection |
| Decision Tree + MTMF + NDVI [20] | UAV Multispectral Remote Sensing | Wheat Powdery Mildew, Wheat Leaf Rust | Enables spatiotemporal monitoring of disease progression |
| RustQNet [21] | RGB Images + Hyperspectral Resolution Multispectral | Stripe Rust Disease | Quantitative evaluation, precision spraying |

| Model Name | Detection Technology | Research Object | Research Advantage |
|---|---|---|---|
| Decision Tree–Based Model and Confusion Matrix Method [56] | Microscopic Imaging | Rice Pyricularia Disease | Integration of multiple features to minimize misclassification rate |
| Classification Model [57] | Thermal Infrared Imaging | Tea Anthracnose | Contact-free detection offering rapid response |
| Logistic Regression and Random Forest Classification Model [58] | Diffraction Imaging | Tomato Botrytis cinerea Spores | Sensitivity enhancement via integrated microfluidic enrichment |
| Support Vector Machine Classification Model [59] | Diffraction Imaging | Magnaporthe oryzae Spores | High-specificity detection capability |
| Support Vector Machine Classification Model [60] | Hyperspectral Imaging | Tea White Spot Disease and Anthracnose | Enables non-destructive leaf analysis |
| 3D Convolutional Neural Network [61] | Hyperspectral Imaging | Wheat Stripe Rust | Efficient wide-area monitoring |
| Faster Region-Based Convolutional Neural Network [62] | Visible Light Imaging | Weeds in Cotton Field | Rapid identification and precise weed categorization |
| DTL-SE-ResNet50 [63] | Visible Light Imaging | Tomato Early Blight, Cucumber Downy Mildew, and Pepper Anthracnose | Accurate performance with reduced reliance on large datasets |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, Y.; Chen, L.; Yang, N.; Sun, Z. Research Progress of Deep Learning-Based Artificial Intelligence Technology in Pest and Disease Detection and Control. Agriculture 2025, 15, 2077. https://doi.org/10.3390/agriculture15192077