YOLO-SCA: A Lightweight Potato Bud Eye Detection Method Based on the Improved YOLOv5s Algorithm

Abstract

1. Introduction

2. Materials and Methods

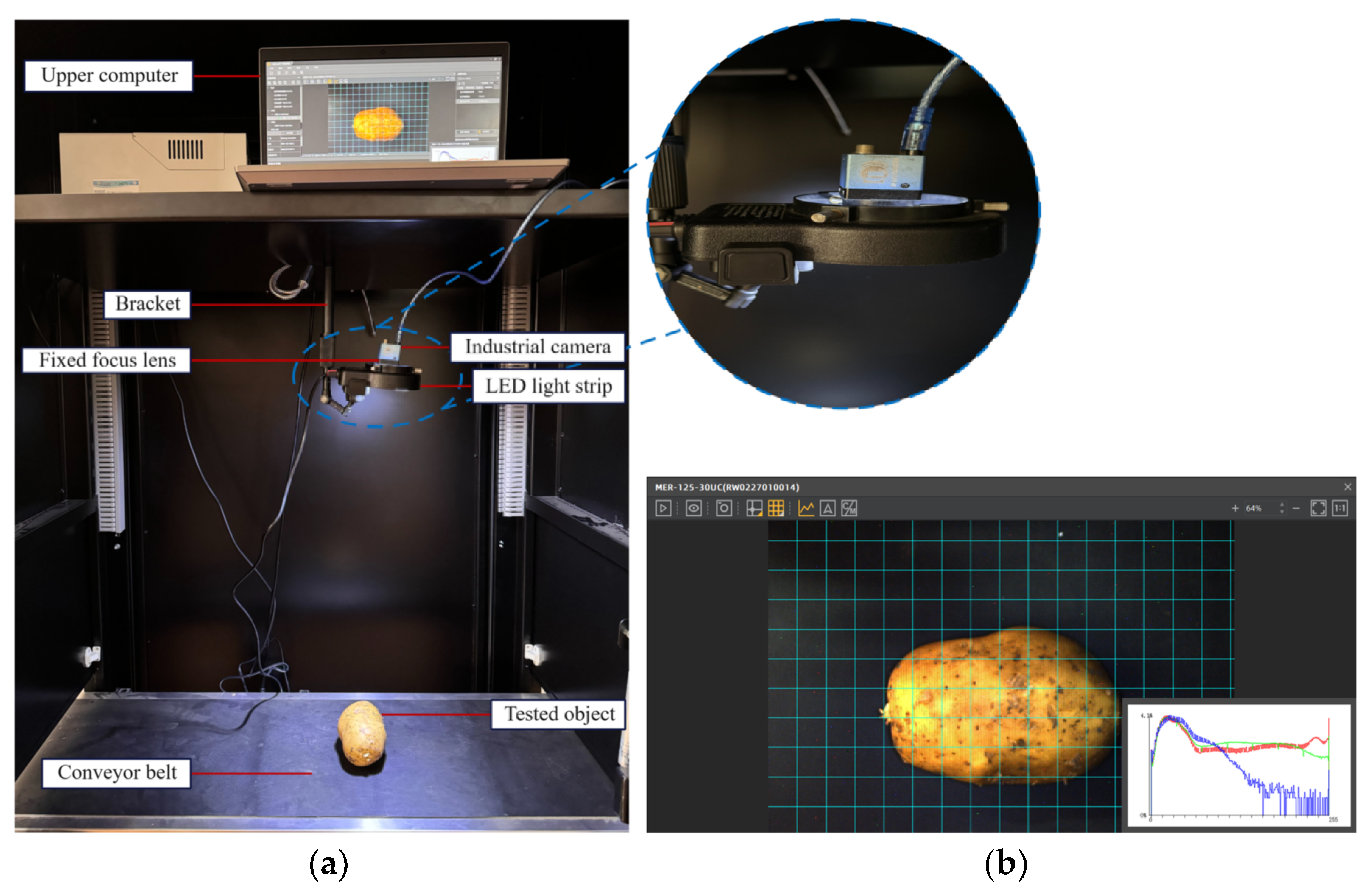

2.1. Test Equipment and Environment Parameter Settings

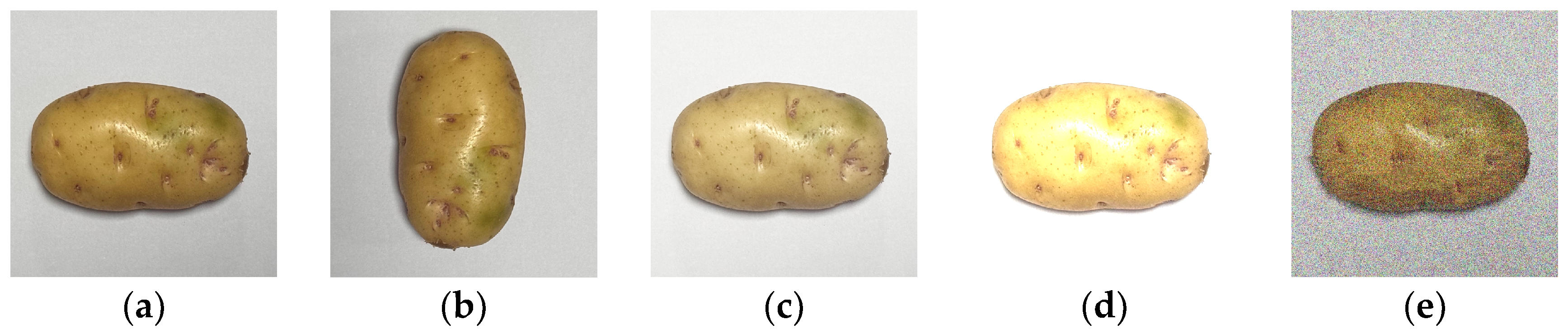

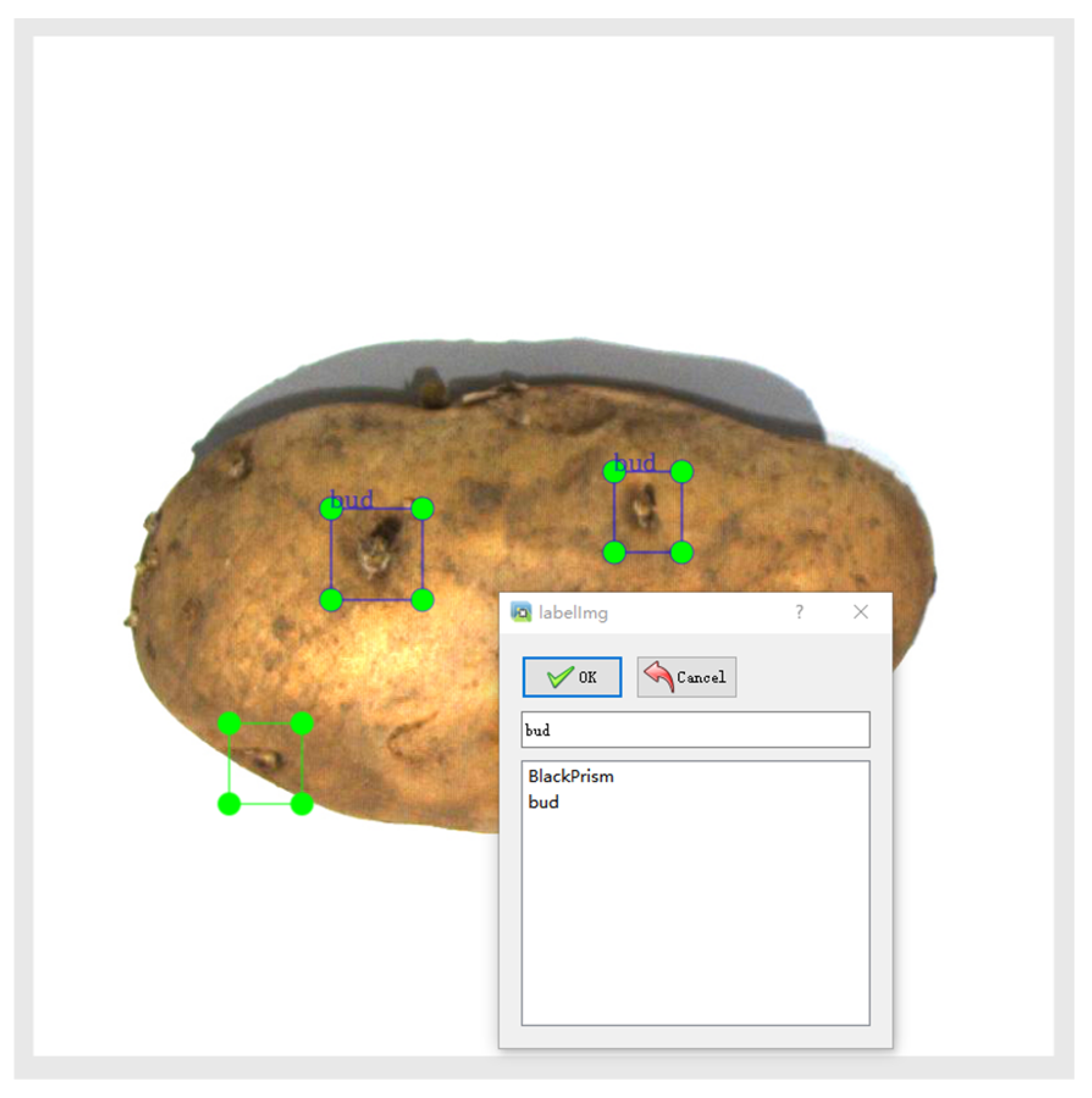

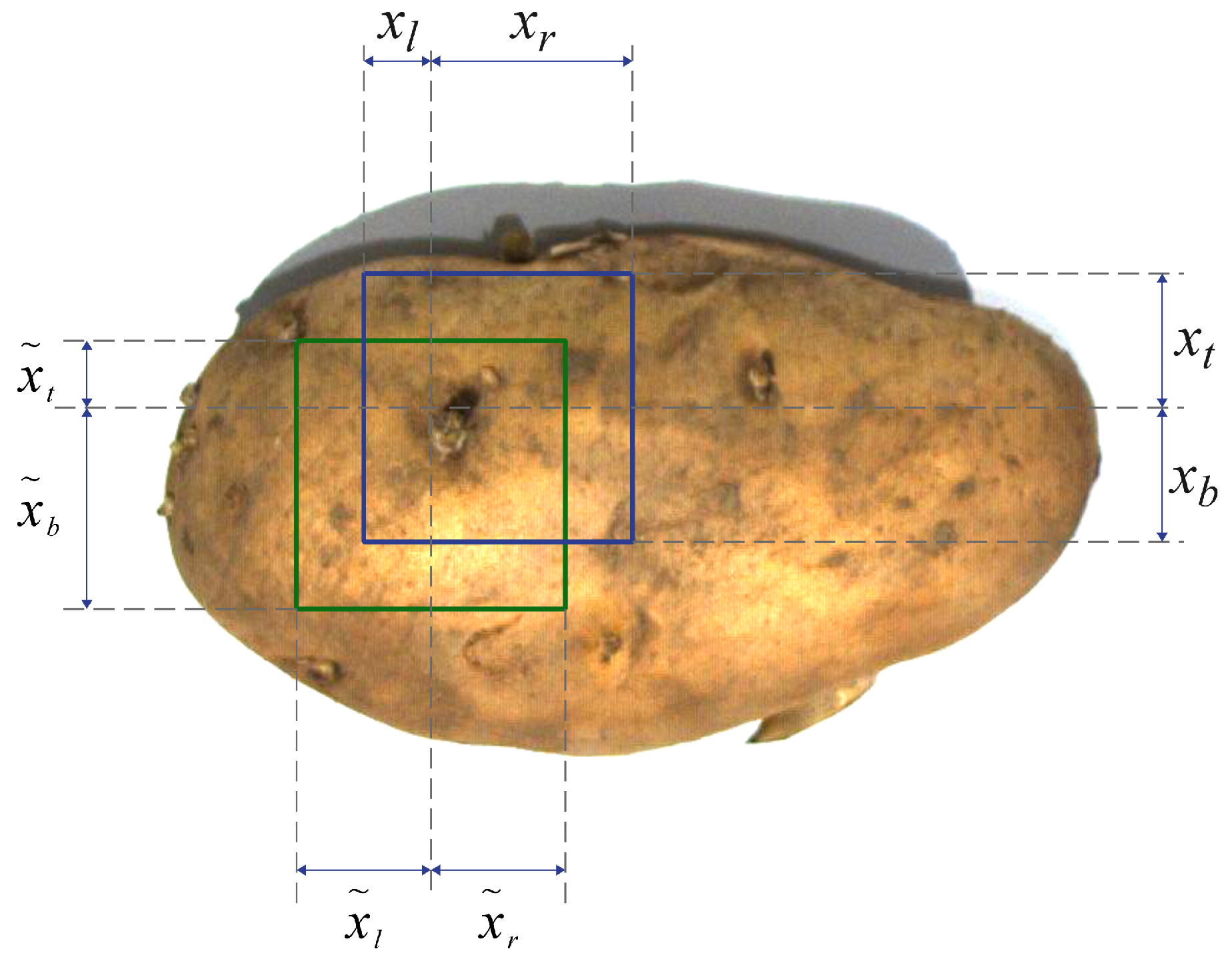

2.2. Production and Construction of Dataset

2.3. Selection of the Original Model

2.4. Summary of Improved Methods Based on YOLOv5s

2.5. An Improved Potato Bud Eye Detection Model Based on YOLOv5s

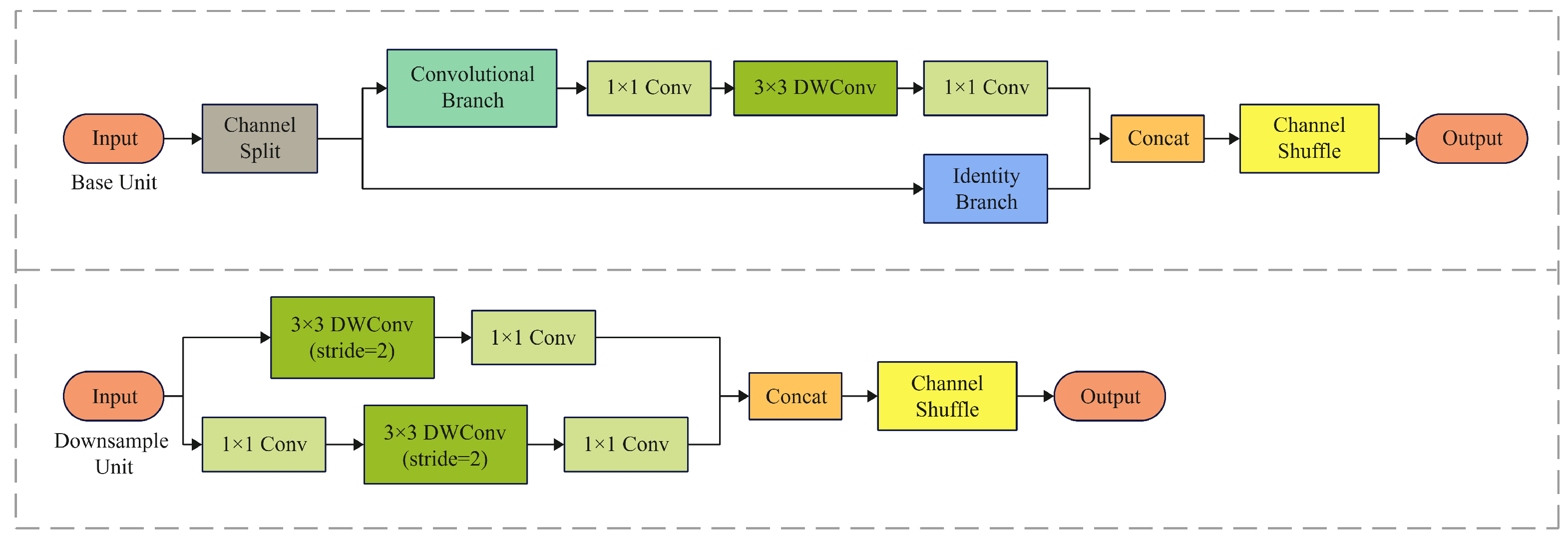

2.5.1. ShuffleNetv2

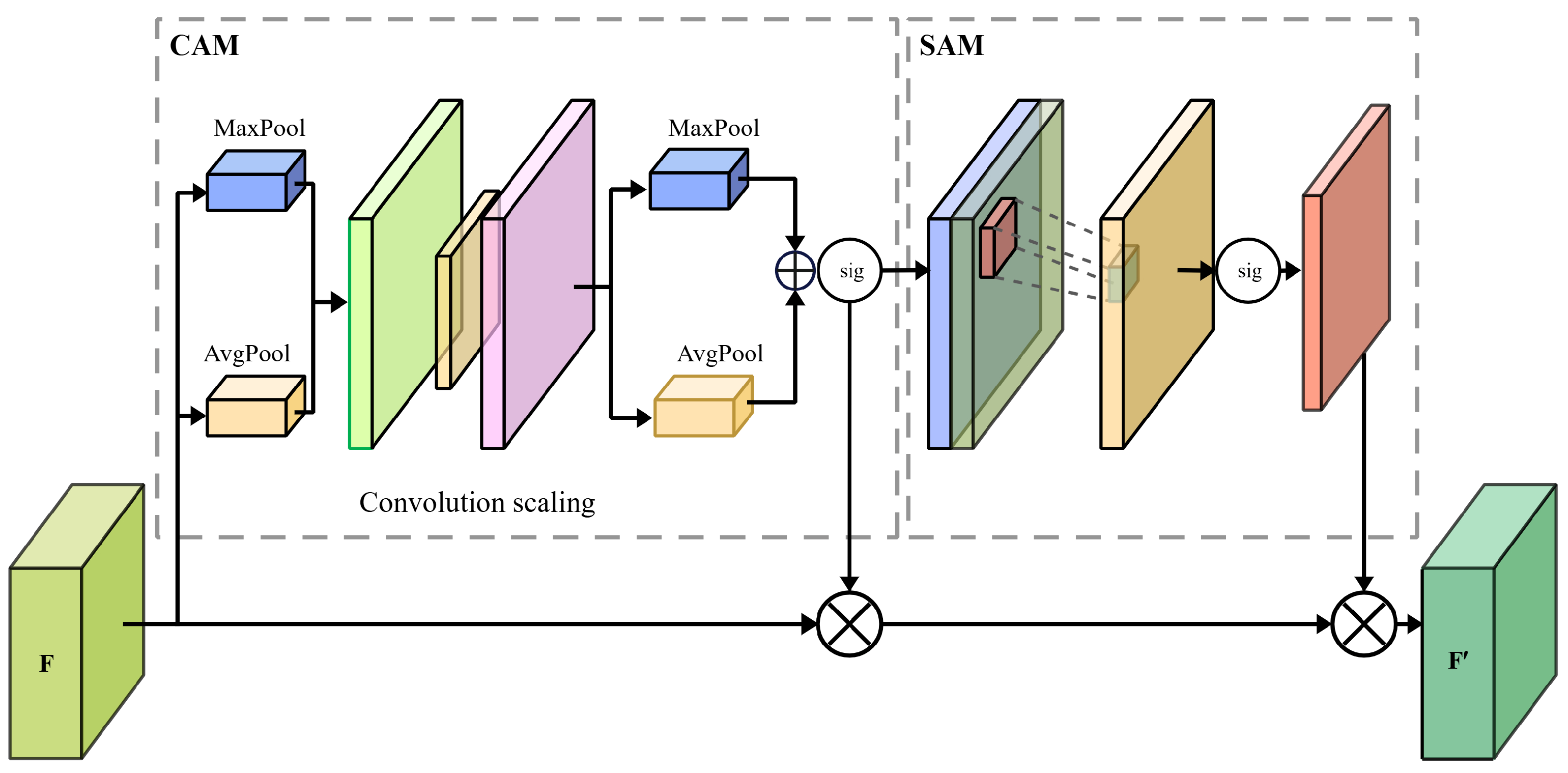

2.5.2. The CBAM Attention Mechanism

2.5.3. Alpha-IoU Loss Function

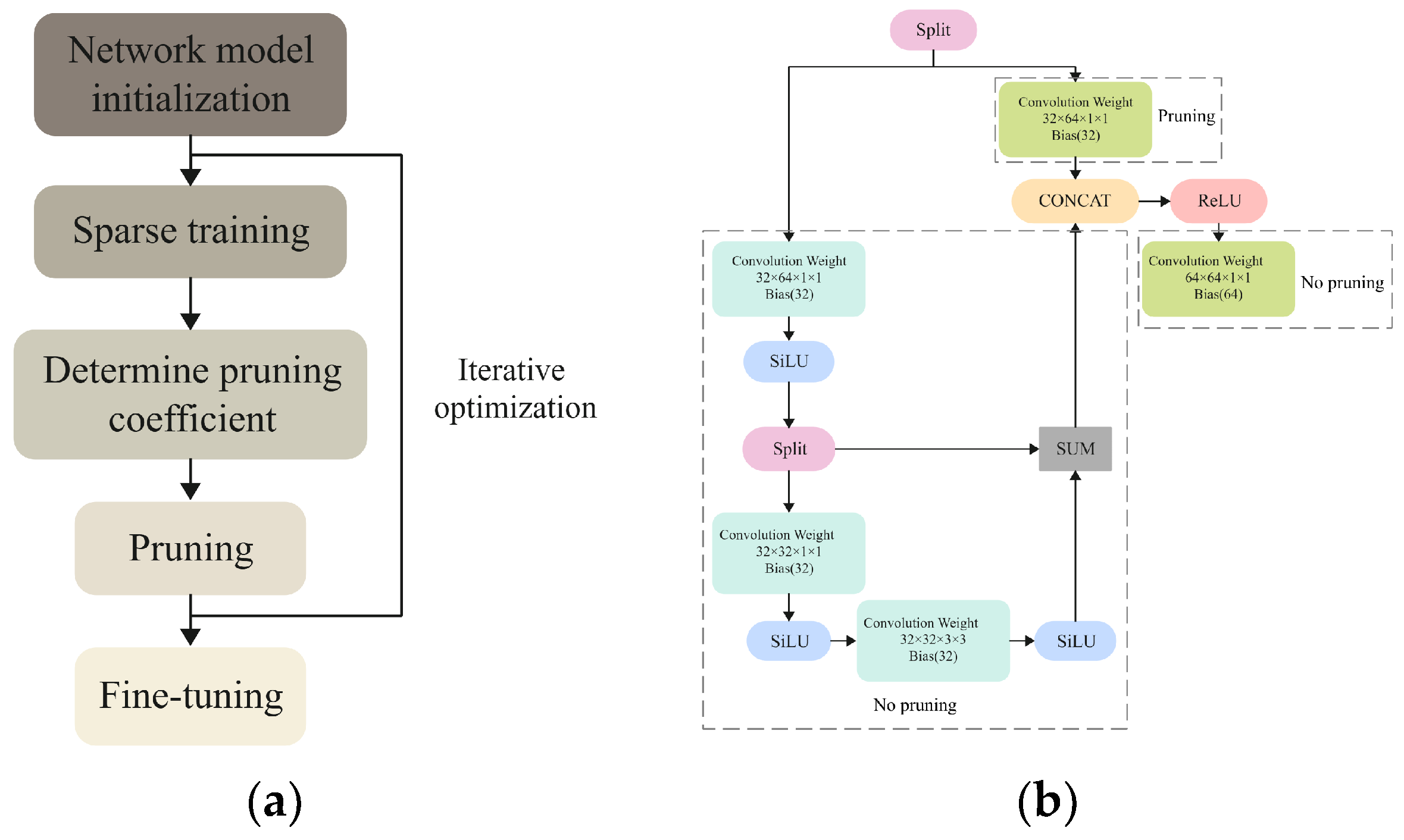

2.5.4. Model Pruning

3. Results and Analysis

3.1. Evaluation Index

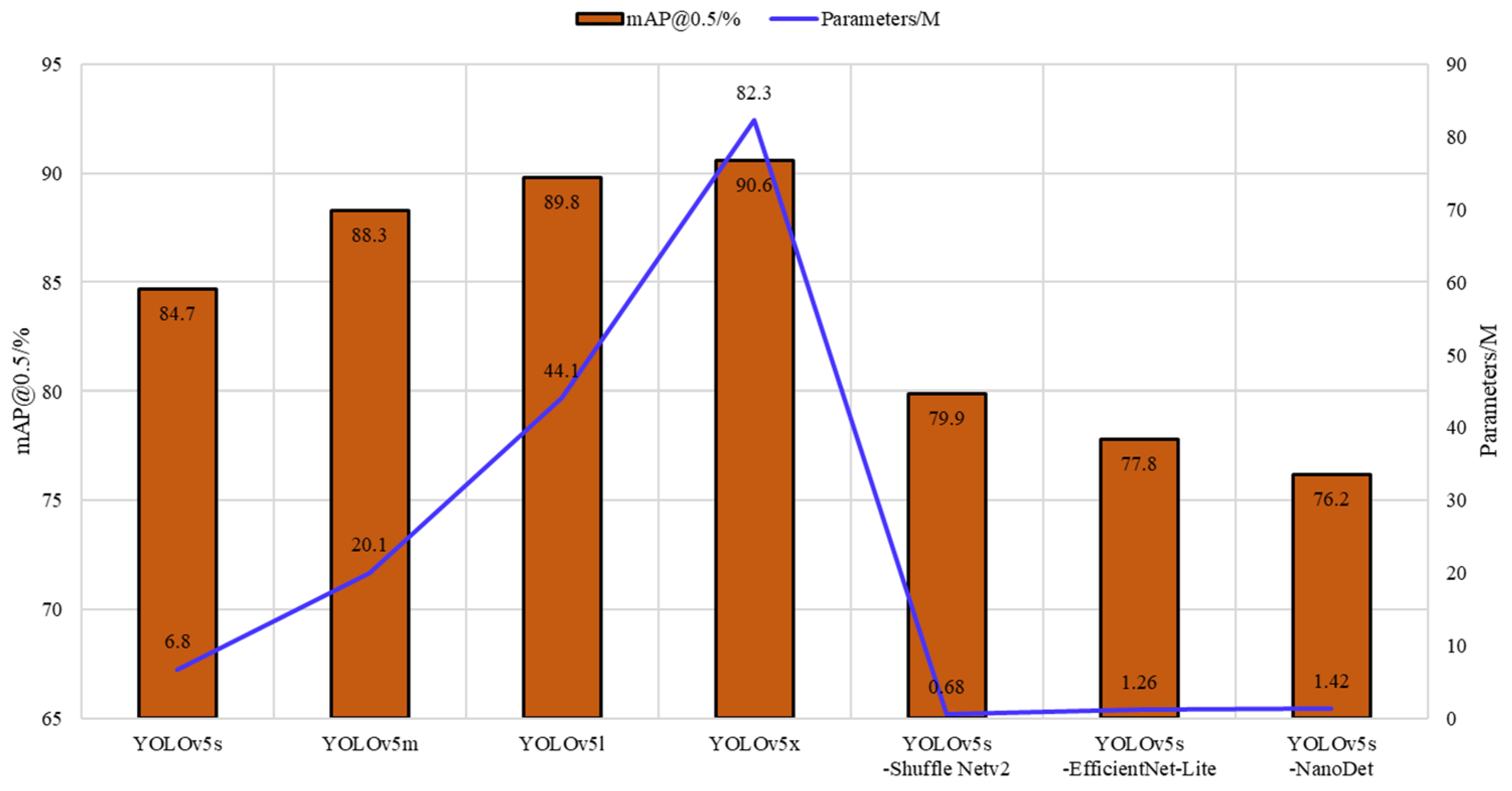

3.2. Comparison of Lightweight Backbone Networks

3.3. Performance Comparison of Various Attention Mechanisms

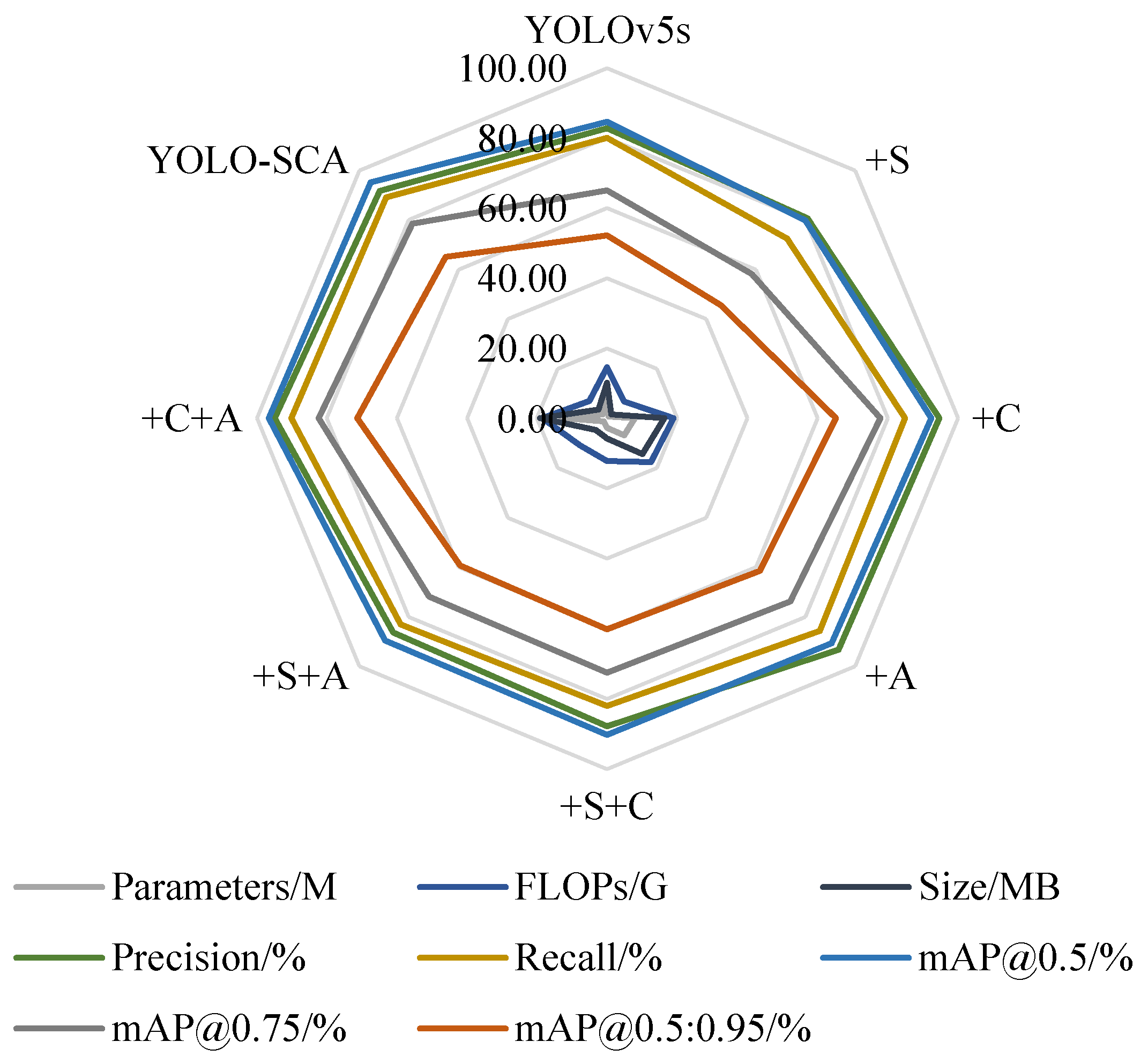

3.4. Ablation Experiments

3.5. Comparative Experiments Based on Improvements to Different Original Models

3.6. Ablation Experiment for the α Parameter

4. Discussion

4.1. Limitations of Deep Learning Models

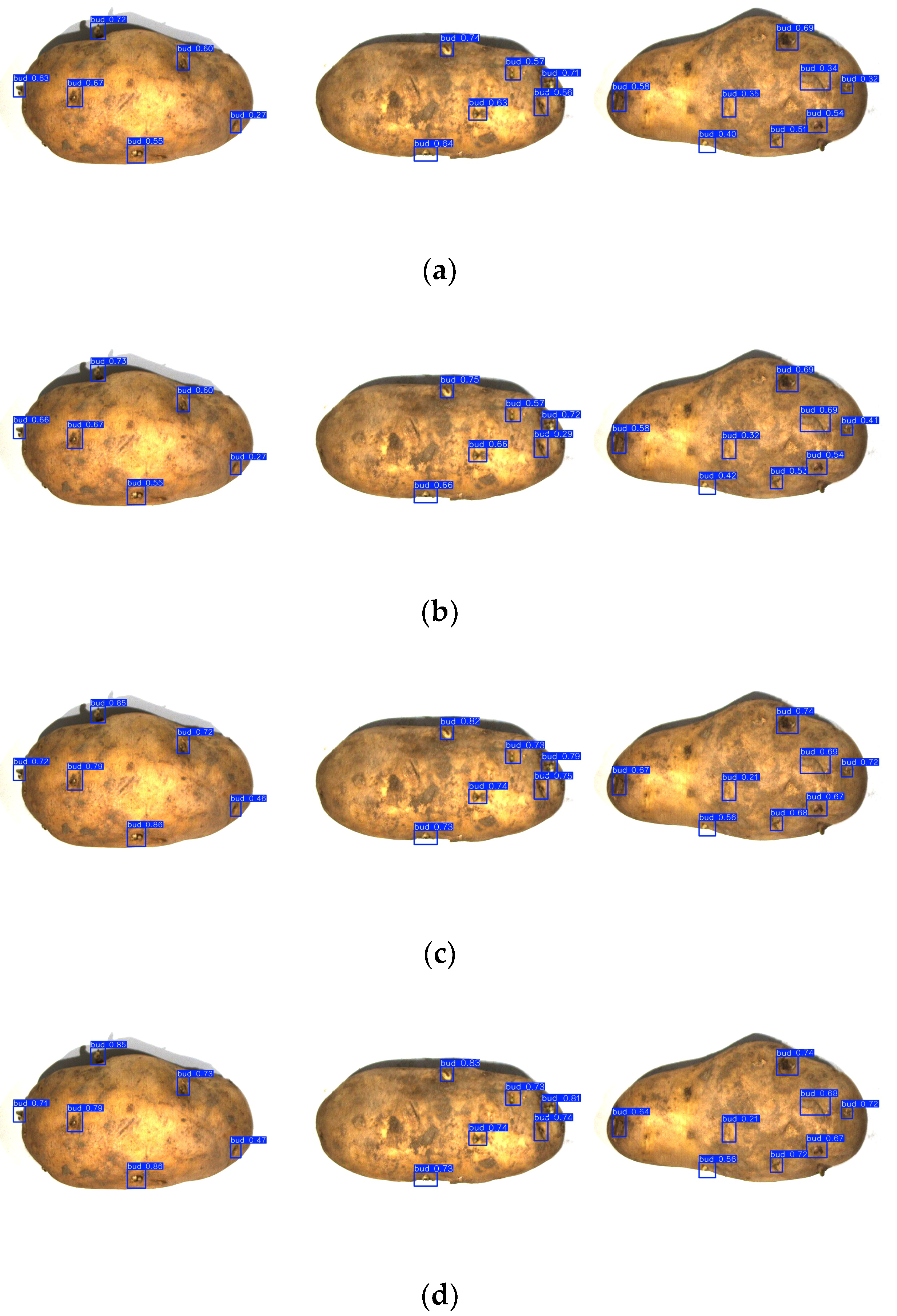

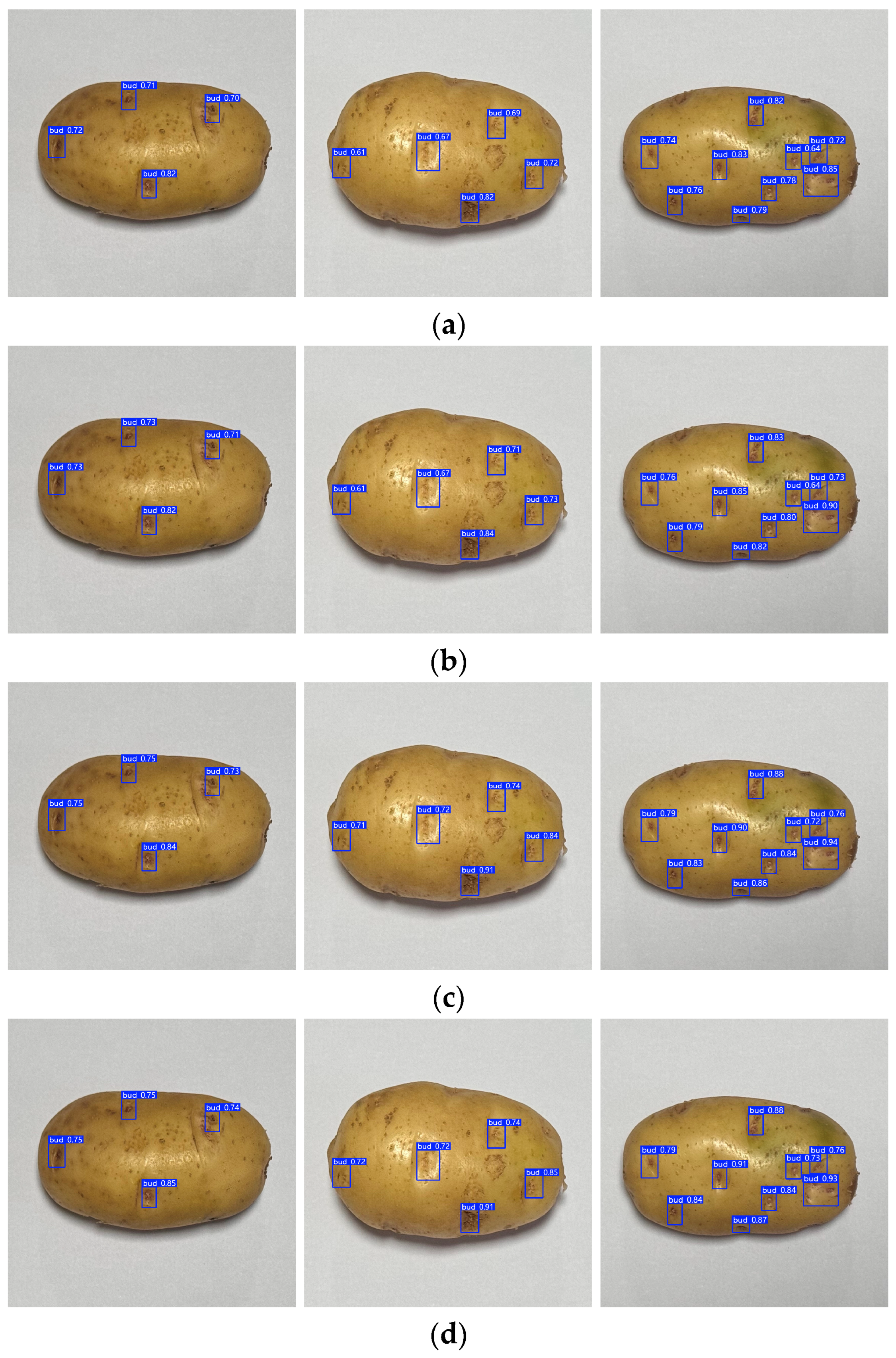

4.2. Analysis of Failure Cases

4.3. Research Prospects

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| YOLO | You Only Look Once |
| CBAM | Convolutional Block Attention Module |
| IoU | Intersection over Union |
| LBP | Local Binary Pattern |
| SVM | Support Vector Machine |
| BiFPN | Bidirectional Feature Pyramid Network |
| ECA | Efficient Channel Attention |
| SGD | Stochastic Gradient Descent |
| mAP | Mean Average Precision |
| FPS | Frames Per Second |
| FPN | Feature Pyramid Network |
| PAN | Path Aggregation Network |
| WNMS | Weighted Non-Maximum Suppression |
| DC | Depthwise Convolution |
| PC | Pointwise Convolution |
| CAM | Channel Attention Module |
| SAM | Spatial Attention Module |
| MLP | Multilayer Perceptron |
| BN | Batch Normalization |
| GC | Global Context |
| AA | Axial Attention |
| R-CNN | Region-based Convolutional Neural Network |
| DETR | Detection Transformer |
| CNN-ViT | Convolutional Neural Network-Vision Transformer |
| ASFF | Adaptive Spatial Feature Fusion |


| Model | Parameters/M | FLOPs/G | Size/MB | Precision/% | Recall/% | mAP@0.5/% | mAP@0.75/% | mAP@0.5:0.95/% | FPS/(s⁻¹) | Depth Multiple | Width Multiple |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 6.80 | 14.6 | 10.2 | 82.8 | 80.1 | 84.7 | 65.1 | 52.3 | 158 | 0.33 | 0.50 |
| YOLOv5m | 20.1 | 44.2 | 30.1 | 85.5 | 83.7 | 88.3 | 70.8 | 58.1 | 104 | 0.67 | 0.75 |
| YOLOv5l | 44.1 | 99.5 | 63.0 | 86.9 | 85.3 | 89.8 | 73.5 | 61.7 | 79 | 1.00 | 1.00 |
| YOLOv5x | 82.3 | 188.7 | 117.1 | 87.6 | 86.2 | 90.6 | 75.2 | 63.9 | 53 | 1.33 | 1.25 |
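
The depth and width multiples in the last two columns are the scaling factors that distinguish the four YOLOv5 variants. The sketch below illustrates how such factors are commonly applied when a YOLOv5 model is built: repeat counts are multiplied by the depth multiple and rounded, and channel counts are multiplied by the width multiple and rounded up to a multiple of 8. The function names `scale_depth` and `scale_channels` are hypothetical, and the rounding rules are an assumption based on the usual YOLOv5 convention rather than something stated in this article.

```python
import math

# Depth/width multiples copied from the table above.
VARIANTS = {
    "YOLOv5s": (0.33, 0.50),
    "YOLOv5m": (0.67, 0.75),
    "YOLOv5l": (1.00, 1.00),
    "YOLOv5x": (1.33, 1.25),
}


def scale_depth(repeats: int, depth_multiple: float) -> int:
    """Scale a block's repeat count; single blocks are left unchanged (assumed rule)."""
    if repeats <= 1:
        return repeats
    return max(round(repeats * depth_multiple), 1)


def scale_channels(channels: int, width_multiple: float, divisor: int = 8) -> int:
    """Scale a channel count and round up to a multiple of `divisor` (assumed rule)."""
    return math.ceil(channels * width_multiple / divisor) * divisor


if __name__ == "__main__":
    for name, (depth, width) in VARIANTS.items():
        # A nominal block with 9 repeats and 1024 output channels.
        print(name, scale_depth(9, depth), scale_channels(1024, width))
```

Under these assumptions, a nominal layer with 64 channels shrinks to 32 channels in YOLOv5s and grows to 80 in YOLOv5x, which is consistent with the parameter counts in the table rising with the width multiple.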

| Model | Parameters/M | FLOPs/G | Size/MB | Precision/% | Recall/% | mAP@0.5/% | mAP@0.75/% | mAP@0.5:0.95/% |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 6.80 | 14.6 | 10.2 | 82.8 | 80.1 | 84.7 | 65.1 | 52.3 |
| YOLOv5s-ShuffleNetv2 | 0.68 | 6.9 | 1.7 | 80.7 | 72.5 | 79.9 | 58.3 | 45.8 |
| YOLOv5s-EfficientNet-Lite | 1.26 | 7.3 | 2.1 | 79.5 | 71.0 | 77.8 | 56.9 | 44.1 |
| YOLOv5s-NanoDet | 1.42 | 7.2 | 2.5 | 76.1 | 70.3 | 76.2 | 55.1 | 42.5 |

| Model | Parameters/M | FLOPs/G | Size/MB | Precision/% | Recall/% | mAP@0.5/% | mAP@0.75/% | mAP@0.5:0.95/% |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 6.80 | 14.6 | 10.2 | 82.8 | 80.1 | 84.7 | 65.1 | 52.3 |
| YOLOv5s-CBAM | 7.82 | 18.9 | 16.3 | 94.6 | 84.8 | 92.4 | 77.8 | 65.2 |
| YOLOv5s-GC | 8.85 | 18.4 | 19.4 | 93.1 | 86.1 | 91.1 | 75.1 | 62.9 |
| YOLOv5s-AA | 8.13 | 18.7 | 17.3 | 93.2 | 84.6 | 93.8 | 78.5 | 66.8 |
| YOLOv5s-SimAM | 7.20 | 18.6 | 17.2 | 91.0 | 81.7 | 88.6 | 70.2 | 57.4 |
| YOLOv5s-Triplet | 8.86 | 19.0 | 19.1 | 94.9 | 84.3 | 93.9 | 79.1 | 67.5 |

| Component Combination | Parameters/M | FLOPs/G | Size/MB | Precision/% | Recall/% | mAP@0.5/% | mAP@0.75/% | mAP@0.5:0.95/% |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 6.80 | 14.6 | 10.2 | 82.8 | 80.1 | 84.7 | 65.1 | 52.3 |
| YOLOv5s+S | 0.68 | 6.9 | 1.7 | 80.7 | 72.5 | 79.9 | 58.3 | 45.8 |
| YOLOv5s+C | 7.82 | 18.9 | 16.3 | 94.6 | 84.8 | 92.4 | 77.8 | 65.2 |
| YOLOv5s+A | 6.80 | 17.6 | 14.2 | 93.2 | 85.7 | 90.6 | 73.9 | 61.5 |
| YOLOv5s+S+C | 2.64 | 12.2 | 5.8 | 87.8 | 82.0 | 90.2 | 72.5 | 60.1 |
| YOLOv5s+S+A | 1.28 | 10.9 | 4.7 | 86.4 | 83.2 | 89.6 | 71.8 | 59.3 |
| YOLOv5s+C+A | 12.82 | 18.6 | 19.3 | 94.9 | 90.1 | 96.5 | 82.3 | 71.4 |
| YOLO-SCA | 1.70 | 7.1 | 3.6 | 91.7 | 89.2 | 95.3 | 78.5 | 65.2 |
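
To make the trade-off in the ablation table easier to read, the following sketch computes how much smaller YOLO-SCA is than the YOLOv5s baseline and how much mAP@0.5 it gains. All numbers are copied from the first and last rows of the table; the dictionary keys and the helper `relative_reduction` are illustrative names, not part of the original work.

```python
# Baseline and final-model rows copied from the ablation table above.
baseline = {"params_m": 6.80, "flops_g": 14.6, "size_mb": 10.2, "map50": 84.7}
yolo_sca = {"params_m": 1.70, "flops_g": 7.1, "size_mb": 3.6, "map50": 95.3}


def relative_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100.0


# Relative savings in model cost, rounded to one decimal place.
reductions = {
    key: round(relative_reduction(baseline[key], yolo_sca[key]), 1)
    for key in ("params_m", "flops_g", "size_mb")
}

# Absolute gain in mAP@0.5, in percentage points.
map50_gain = round(yolo_sca["map50"] - baseline["map50"], 1)

if __name__ == "__main__":
    print(reductions)
    print(map50_gain)
```

With the table values, this gives roughly 75.0% fewer parameters, 51.4% fewer FLOPs, and a 64.7% smaller model file, alongside a 10.6-point gain in mAP@0.5.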

| Model | Parameters/M | FLOPs/G | Size/MB | Precision/% | Recall/% | mAP@0.5/% | mAP@0.75/% | mAP@0.5:0.95/% | Advantage | Disadvantage |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv3-SCA | 6.2 | 16.8 | 12.7 | 84.4 | 85.1 | 89.7 | 70.2 | 58.9 | Target features are fully preserved. | Large number of parameters. |
| YOLOv4-SCA | 4.1 | 14.2 | 9.8 | 86.7 | 97.2 | 92.8 | 75.8 | 64.1 | Improved accuracy. | Computationally heavy. |
| YOLOv8-SCA | 3.2 | 10.5 | 6.3 | 92.3 | 89.1 | 95.7 | 79.8 | 67.1 | Enhanced detail perception. | Large memory footprint; poor real-time performance. |
| YOLO-SCA | 1.7 | 7.1 | 3.6 | 91.7 | 89.2 | 95.3 | 78.5 | 65.2 | Lightweight model. | Slightly lower robustness on small targets. |

| α Value | Precision/% | Recall/% | mAP@0.5/% | mAP@0.75/% | mAP@0.5:0.95/% |
|---|---|---|---|---|---|
| 2 | 90.8 | 88.5 | 94.1 | 72.5 | 63.5 |
| 2.5 | 91.2 | 88.9 | 94.8 | 75.8 | 64.3 |
| 3 | 91.7 | 89.2 | 95.3 | 78.5 | 65.2 |
| 3.5 | 91.5 | 89.0 | 95.1 | 77.2 | 64.9 |
| 4 | 91.3 | 88.8 | 94.9 | 76.4 | 64.6 |
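
The α values in the table are the exponent of the Alpha-IoU loss. As a minimal sketch, assuming the basic form of that loss, 1 − IoU^α, the code below shows how α changes the penalty for a given box overlap. The exact variant used in the article (for example, one with extra distance or aspect-ratio penalty terms) is not shown in this extract, so this is only an illustration; `box_iou` and `alpha_iou_loss` are hypothetical helper names, and boxes are assumed to be `(x1, y1, x2, y2)` tuples.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def alpha_iou_loss(pred: Box, target: Box, alpha: float = 3.0) -> float:
    """Basic Alpha-IoU loss, 1 - IoU**alpha (assumed form, no penalty terms)."""
    return 1.0 - box_iou(pred, target) ** alpha


if __name__ == "__main__":
    pred, target = (0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 3.0, 2.0)
    for alpha in (2.0, 2.5, 3.0, 3.5, 4.0):  # values swept in the table above
        print(alpha, alpha_iou_loss(pred, target, alpha))
```

With α = 1 this reduces to the ordinary IoU loss. Since IoU lies between 0 and 1, a larger α raises the loss for any partially overlapping pair of boxes.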

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, Q.; Zhao, P.; Wang, X.; Xu, Q.; Liu, S.; Ma, T. YOLO-SCA: A Lightweight Potato Bud Eye Detection Method Based on the Improved YOLOv5s Algorithm. Agriculture 2025, 15, 2066. https://doi.org/10.3390/agriculture15192066
