1. Introduction

Laying hen farming is fundamental to the agricultural sector [1,2]. Over the past few years, consumer demand for eggs has increased continuously [3,4]. Large-scale egg weighing is necessary for calculating the feed-to-egg ratio of a laying hen farm. This ratio reflects the feed utilization rate and helps farmers optimize feed formulations and adjust feeding management to improve production efficiency. The traditional manual egg-weighing method requires substantial labor [5]. As the egg production industry expands, there is an urgent need for an automated egg weight measurement method [6].
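As a minimal illustration of why individual egg weights matter here, the feed-to-egg ratio is conventionally computed as the feed consumed divided by the total mass of eggs produced over the same period. The function name `feed_to_egg_ratio` and the units (kg of feed, grams per egg) are our assumptions for this sketch, not part of the described system.

```python
from typing import Sequence


def feed_to_egg_ratio(feed_kg: float, egg_weights_g: Sequence[float]) -> float:
    """Return kg of feed consumed per kg of egg mass produced (hypothetical helper)."""
    egg_mass_kg = sum(egg_weights_g) / 1000.0  # convert summed egg weights from g to kg
    if egg_mass_kg <= 0:
        raise ValueError("no egg mass recorded for the period")
    return feed_kg / egg_mass_kg


# Example: 0.36 kg of feed for three 60 g eggs gives a ratio of 2.0.
print(feed_to_egg_ratio(0.36, [60.0, 60.0, 60.0]))
```

With automated per-egg weighing, `egg_weights_g` would simply be the stream of predicted weights for the period.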

Recently, automated approaches based on computer vision and deep learning have been widely adopted in intelligent poultry farming [7]. These advances offer a promising route to automatic egg weight measurement, and several computer vision-based systems have already been developed for weight measurement in agricultural engineering. For example, Rao et al. [8] applied color-threshold segmentation to eggs, extracted features such as the transverse and longitudinal diameters, and established a polynomial regression model with an R² of 0.898 and an absolute error of ±3 g. Aragua and Mabayo [6] proposed an egg weight estimation method based on a computer vision system and traditional image analysis; tested on 15 eggs, it achieved an average accuracy of 96.31%, and the authors noted room for further improvement. Thipakorn [9] extracted 13 geometric features from segmented eggs to build a linear regression model, which achieved an R² of 0.983; the author also used a support vector machine (SVM) for egg weight grading, with a grading accuracy of 87.58%. Zalhan et al. [11] and Ab Nasir et al. [12] used geometric parameters of eggs, such as diameter, perimeter, and area, directly for egg grading, with grading accuracies of 96% and 94.16%, respectively. Yang et al. [5] segmented eggs using RTMDet, extracted the lengths of the major and minor axes, and then used the Random Forest (RF) algorithm to predict egg weight, achieving an R² of 0.96.

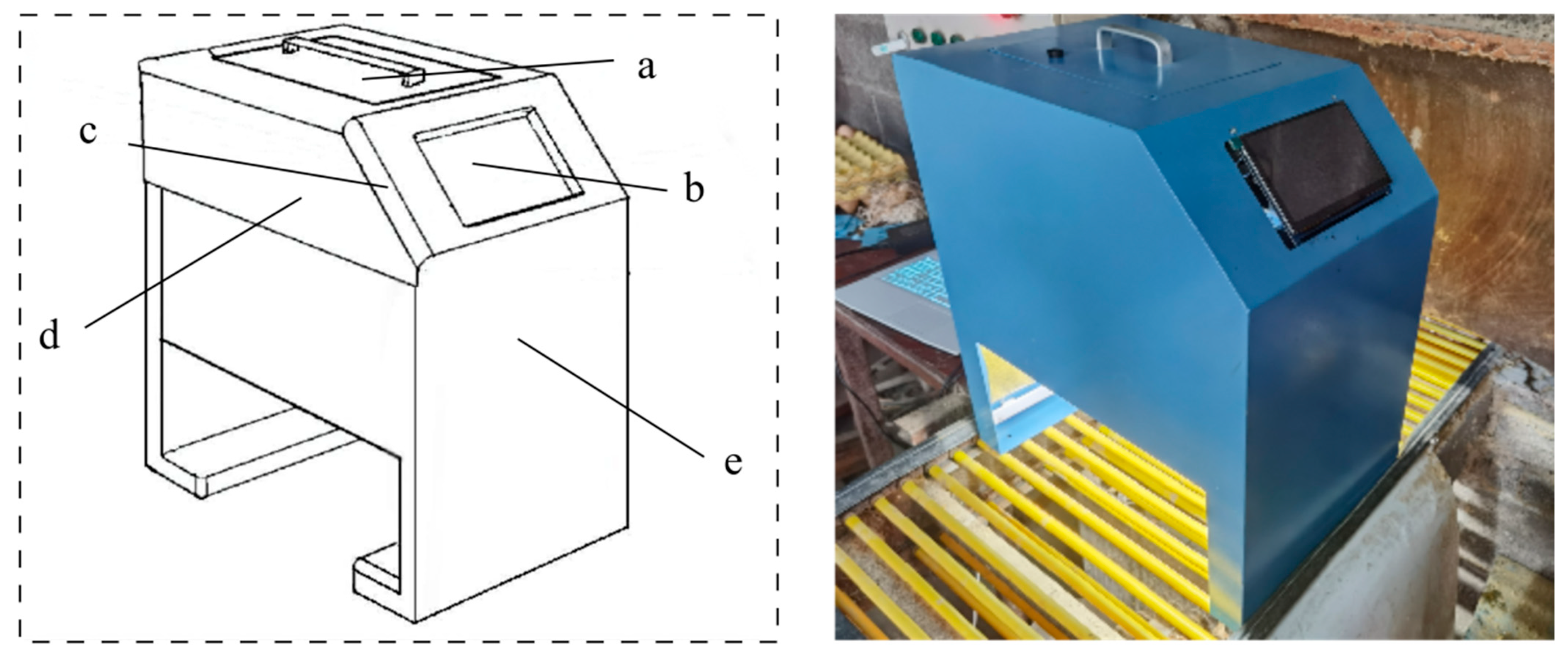

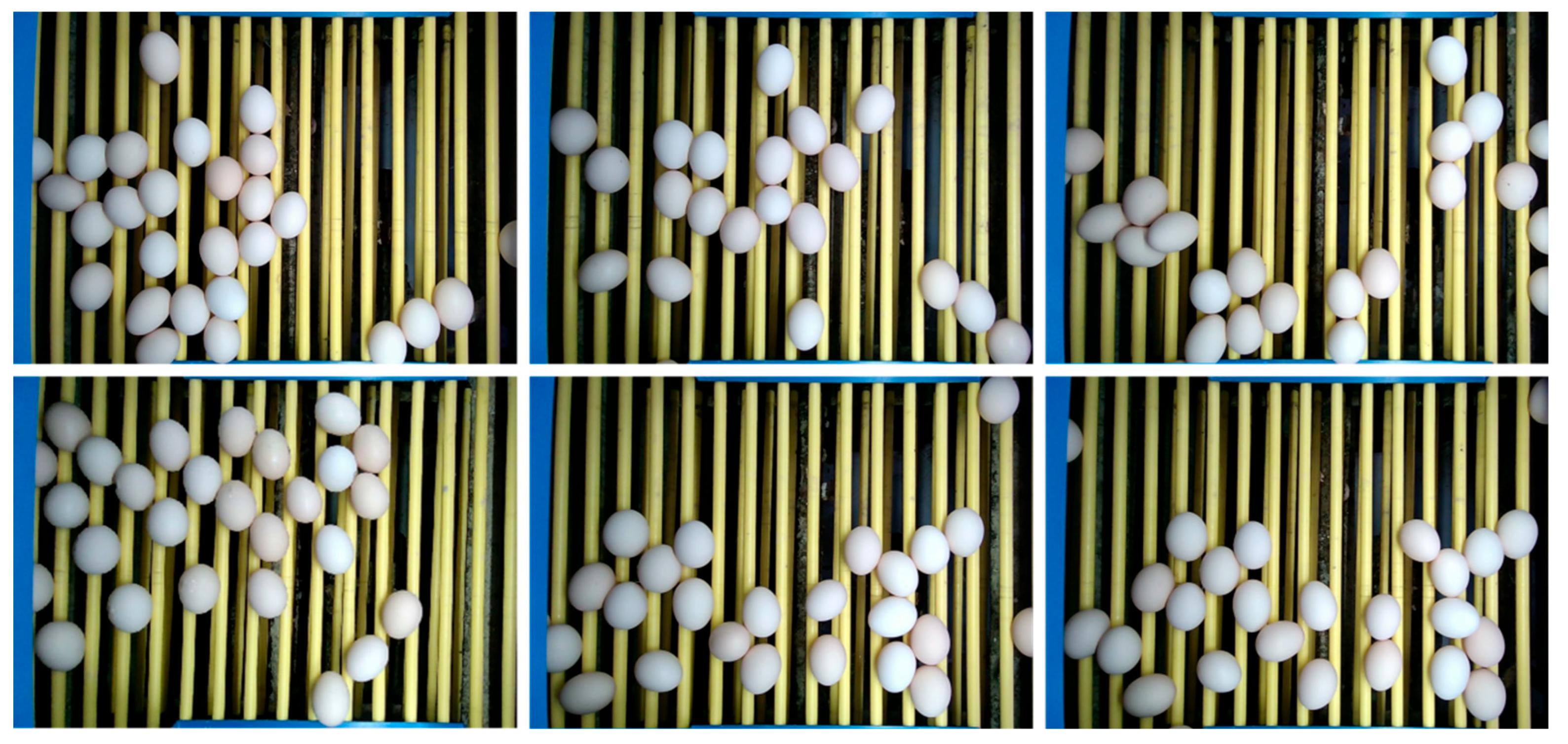

The research suggests that computer vision-based approaches hold significant potential for enabling efficient egg weight measurement. However, while the aforementioned research was conducted in a laboratory setting, the practical implementation of such solutions poses several critical challenges. (1) In egg collection transmission lines, eggs are often clustered together, leading to potential occlusions or mutual compression that may tilt them relative to the horizontal plane. As a result, eggs appear as oblique projections (non-orthogonal projections) in the camera installed from a top-down perspective. These complexities make high-precision weight measurement an exceptionally challenging task under such conditions. (2) From a user perspective, most farm owners strongly prefer convenient, cost-effective, and plug-and-play monitoring systems requiring no additional peripheral connections. This preference makes it difficult to standardize image acquisition conditions, thereby imposing stricter demands on the algorithm’s generalizability and robustness. (3) Most existing studies extract geometric features directly from egg segmentation results; however, the edge segmentation results are often unsmooth, which introduces errors into the extracted geometric features and thereby affects the weight estimation accuracy [

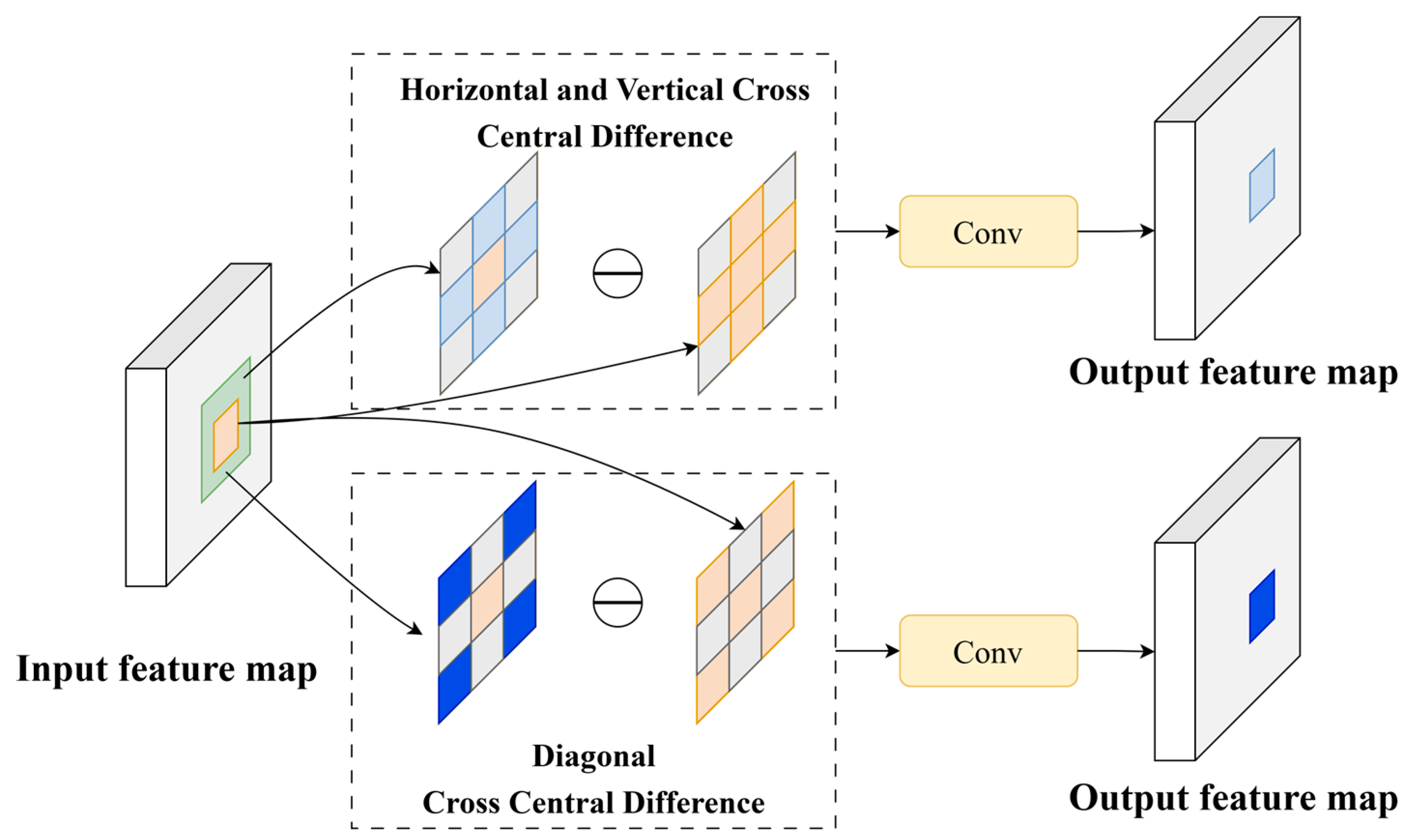

10]. This is because traditional convolution operators tend to inadvertently blur and smooth boundaries with low contrast [

13], leading to inaccurate contour segmentation of eggs with varying quality. Although these inaccuracies may be negligible for generic object detection, they prove particularly detrimental to our framework, where subpixel-level mask precision is paramount for weight prediction. Due to the above difficulties, the current vision-based egg-weighing method remains in the laboratory scenario stage, largely inconsistent with the actual production scenario (as shown in

Figure 1a). Installing monitoring equipment on the egg-laying area transmission line (

Figure 1b) is another potential solution, but this would necessitate dozens of monitoring equipment for a single laying hen house. Compared with monitoring egg collection transmission lines (

Figure 1c), this significantly increases costs, which is unacceptable to farmers.

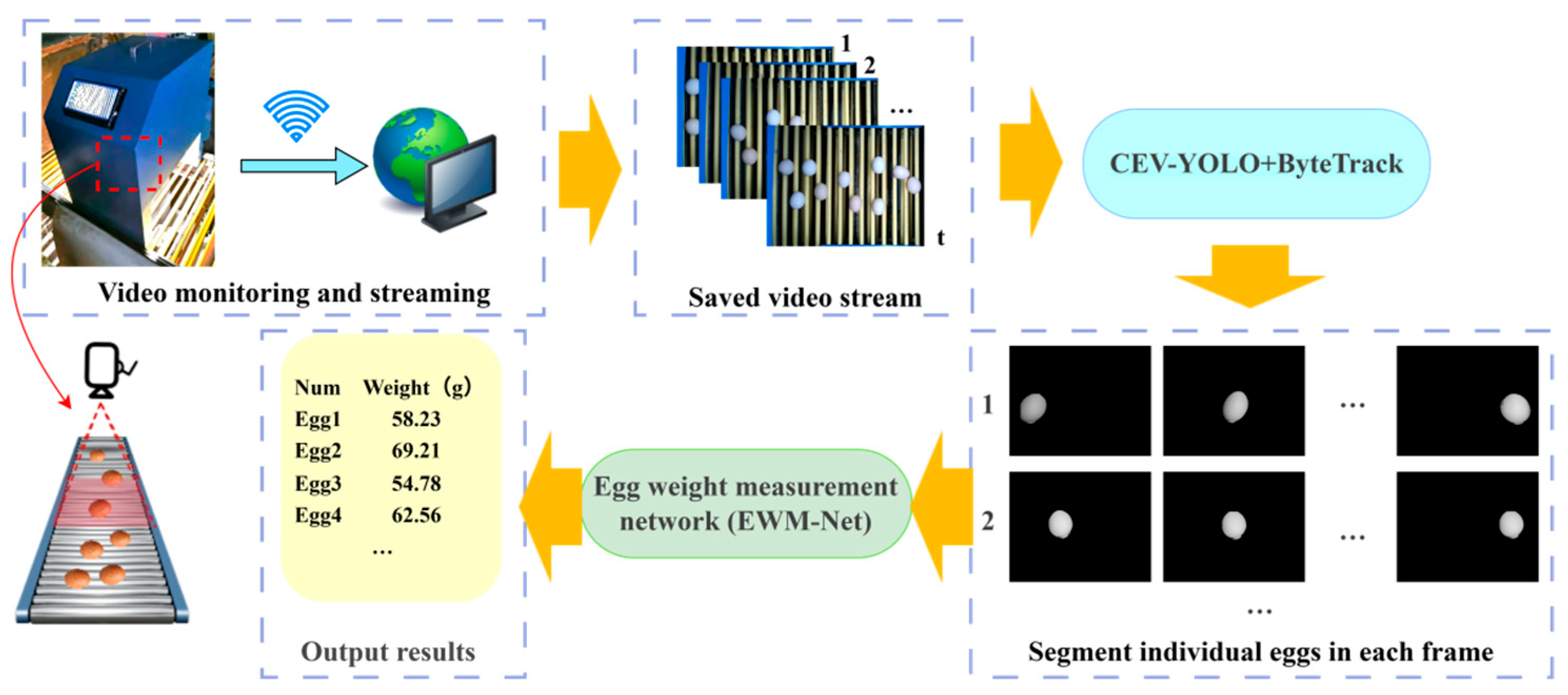

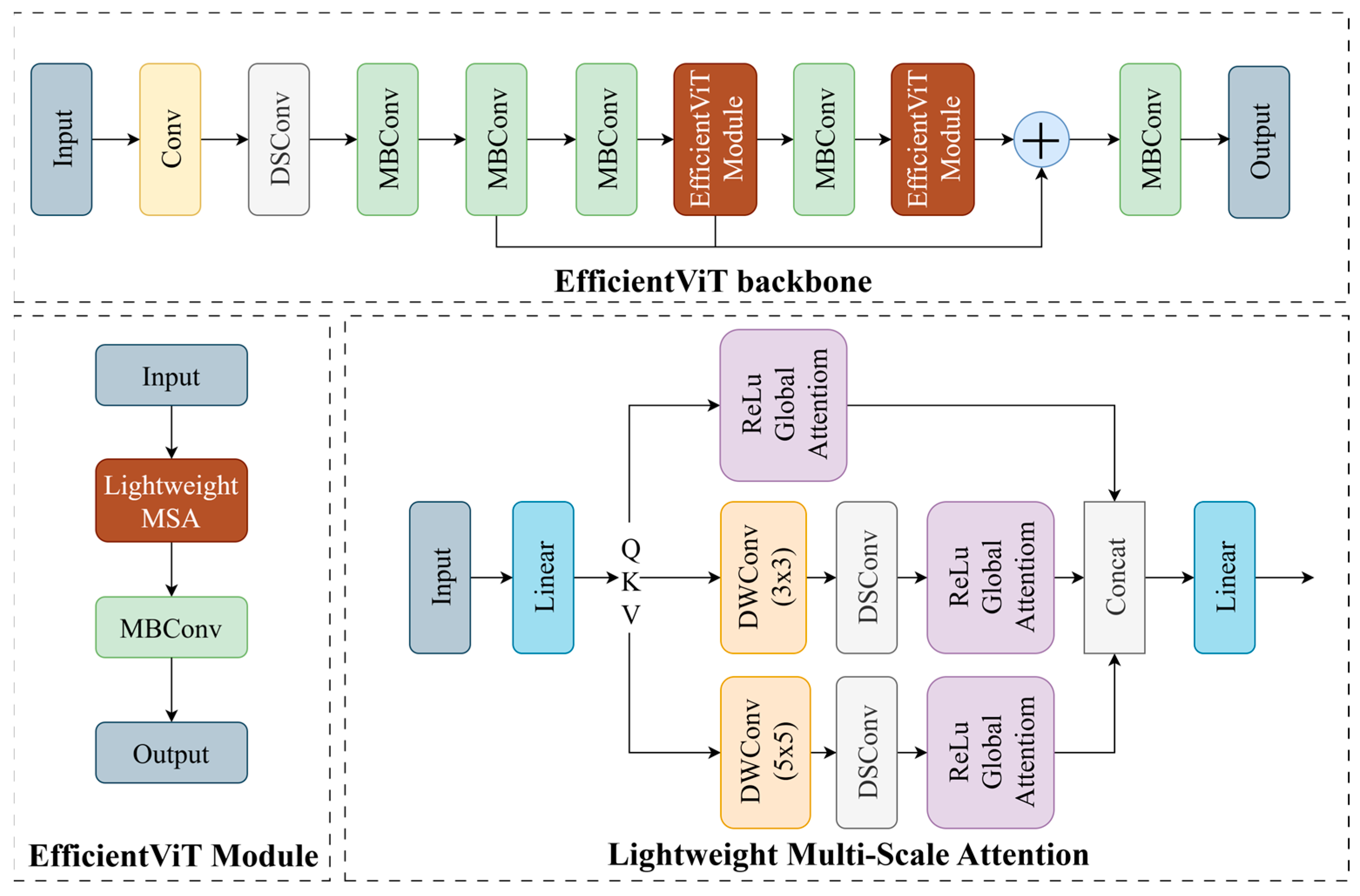

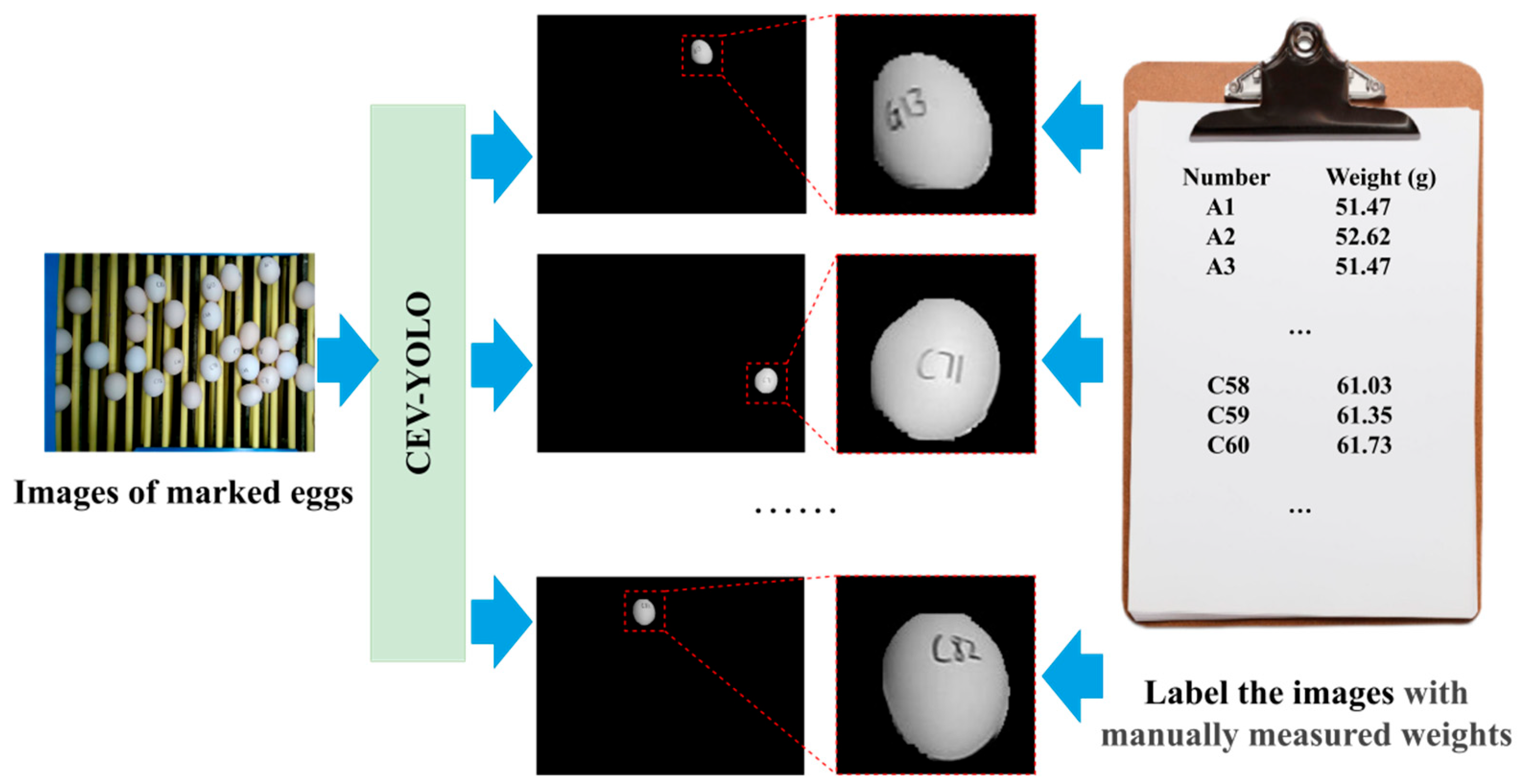

To address the above issues and bring the technology into practical production, we propose a computer vision-based multi-feature extraction and regression algorithm for precise egg weight measurement. We introduce two artificial neural networks and specialized data processing methods to enhance the robustness and accuracy of the algorithm. In our pipeline, we present Central Differential-EfficientViT YOLO (CEV-YOLO), an enhanced variant of YOLOv11 optimized for stronger edge extraction and more robust handling of occlusion. Integrated with the ByteTrack algorithm, our framework produces continuous mask output across video streams. These segmented masks are then fed into our custom egg weight measurement network (EWM-Net), which performs multi-feature extraction and weight prediction from the masks. We constructed an egg image dataset and an egg weight dataset, which are used to train and validate CEV-YOLO and EWM-Net, respectively. The detailed design of the algorithm, model training, and dataset construction are described in the Materials and Methods section.
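ByteTrack’s central idea is a two-stage association: existing tracks are first matched to high-confidence detections, and the tracks left over are then matched to low-confidence detections, which often correspond to partially occluded objects. The sketch below is a heavily simplified, hypothetical illustration of that idea (the class `SimpleByteTracker` and the thresholds 0.6 and 0.3 are our assumptions). It uses greedy IoU matching on bounding boxes, whereas the actual ByteTrack predicts track positions with a Kalman filter, uses Hungarian matching, and expires lost tracks; none of that is reproduced here.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: dict[int, Box], det_ids: list[int], boxes: list[Box], thr: float):
    """Pair track ids with detection indices greedily by descending IoU."""
    candidates = sorted(
        ((iou(box, boxes[d]), t, d) for t, box in tracks.items() for d in det_ids),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for score, t, d in candidates:
        if score < thr:
            break  # remaining candidates have even lower IoU
        if t not in used_t and d not in used_d:
            used_t.add(t)
            used_d.add(d)
            matches.append((t, d))
    return matches


class SimpleByteTracker:
    """Hypothetical, simplified two-stage tracker in the spirit of ByteTrack."""

    def __init__(self, high_thr: float = 0.6, iou_thr: float = 0.3):
        self.high_thr = high_thr
        self.iou_thr = iou_thr
        self.tracks: dict[int, Box] = {}  # track id -> last associated box
        self.next_id = 0

    def update(self, boxes: list[Box], scores: list[float]) -> dict[int, int]:
        """Associate one frame's detections; returns {detection index: track id}."""
        high = [i for i, s in enumerate(scores) if s >= self.high_thr]
        low = [i for i, s in enumerate(scores) if s < self.high_thr]
        assigned: dict[int, int] = {}
        # Stage 1: every existing track against high-confidence detections.
        first = greedy_match(self.tracks, high, boxes, self.iou_thr)
        assigned.update({d: t for t, d in first})
        # Stage 2: still-unmatched tracks against low-confidence detections,
        # which often correspond to partially occluded eggs.
        matched = {t for t, _ in first}
        leftover = {t: b for t, b in self.tracks.items() if t not in matched}
        assigned.update({d: t for t, d in greedy_match(leftover, low, boxes, self.iou_thr)})
        # Unmatched high-confidence detections start new tracks;
        # unmatched low-confidence detections are discarded.
        for d in high:
            if d not in assigned:
                assigned[d] = self.next_id
                self.next_id += 1
        # Store the latest box per track (no Kalman prediction, no track expiry).
        for d, t in assigned.items():
            self.tracks[t] = boxes[d]
        return assigned
```

Keeping a stable track id per egg is what lets per-frame masks of the same egg be grouped before weight prediction.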

The main contributions of this paper can be summarized as follows:

Optimize the YOLOv11 architecture by integrating a Central Differential Convolution (CDC) block and EfficientViT backbone to develop a new model (CEV-YOLO), aiming to enhance the accuracy of pixel-level egg image segmentation for complex real-world scenarios.

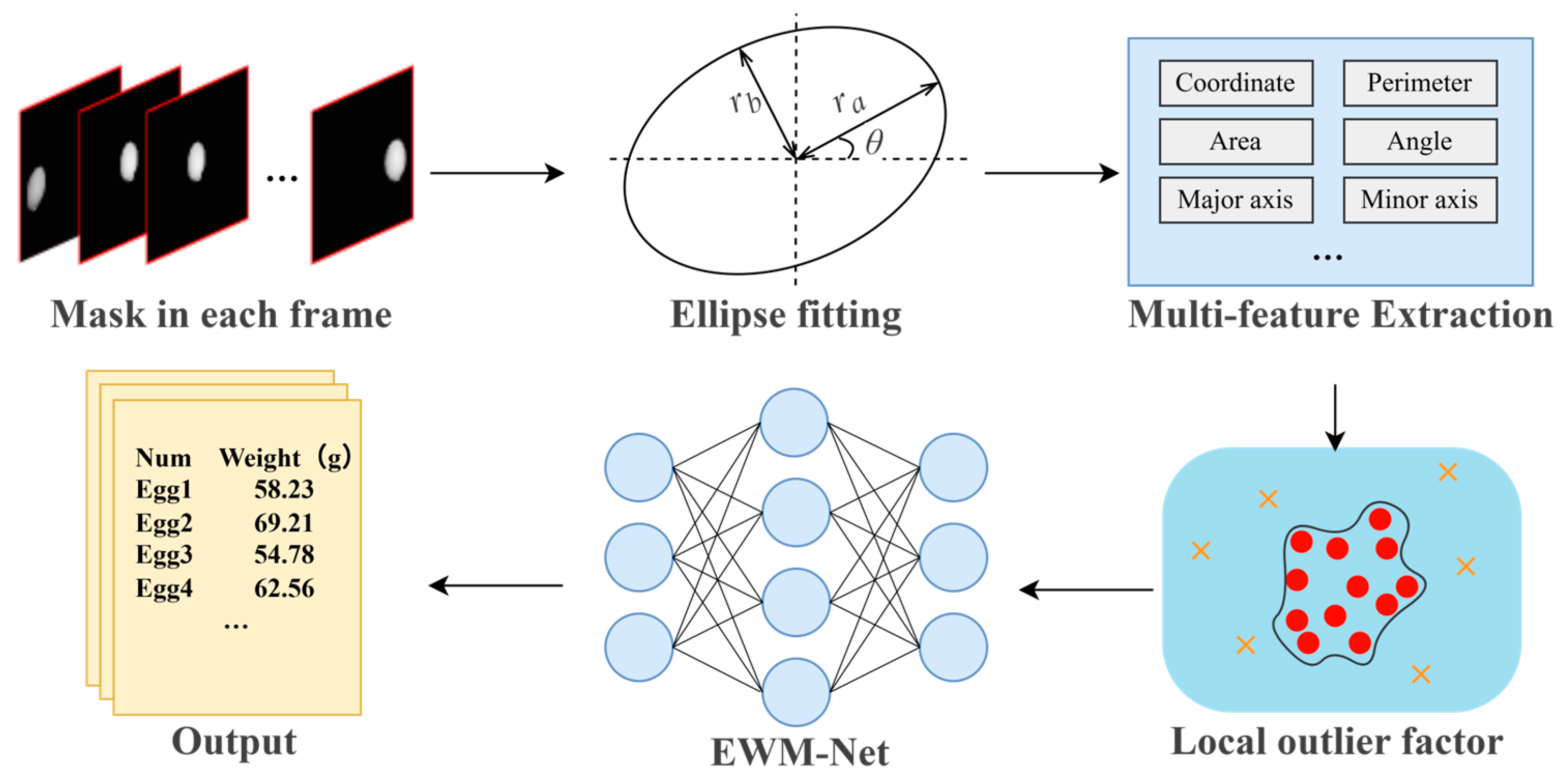

Design a high-precision Egg Weight Measurement Network (EWM-Net) that enables efficient feature extraction and weight estimation based on egg masks (after ellipse fitting and outlier filtering), with the goal of improving the reliability of egg weight prediction.

Construct a novel technical pipeline that combines image segmentation (CEV-YOLO + ByteTrack) and mask-based weight prediction (EWM-Net), which can process video data collected by customized devices to realize automated, high-precision egg weight measurement.

3. Results and Discussion

3.1. Comparison of Precision of Different Segmentation Models

Computer vision is an important component of agricultural engineering. In recent years, YOLO has become one of the most popular visual neural networks in agriculture owing to its strong performance in terms of accuracy, speed, and model size [42]. Our CEV-YOLO is also built on the YOLO framework.

To demonstrate the effectiveness of CEV-YOLO, we compared it with five other advanced YOLO models [

43,

44,

45,

46,

47], and the visualization results are shown in

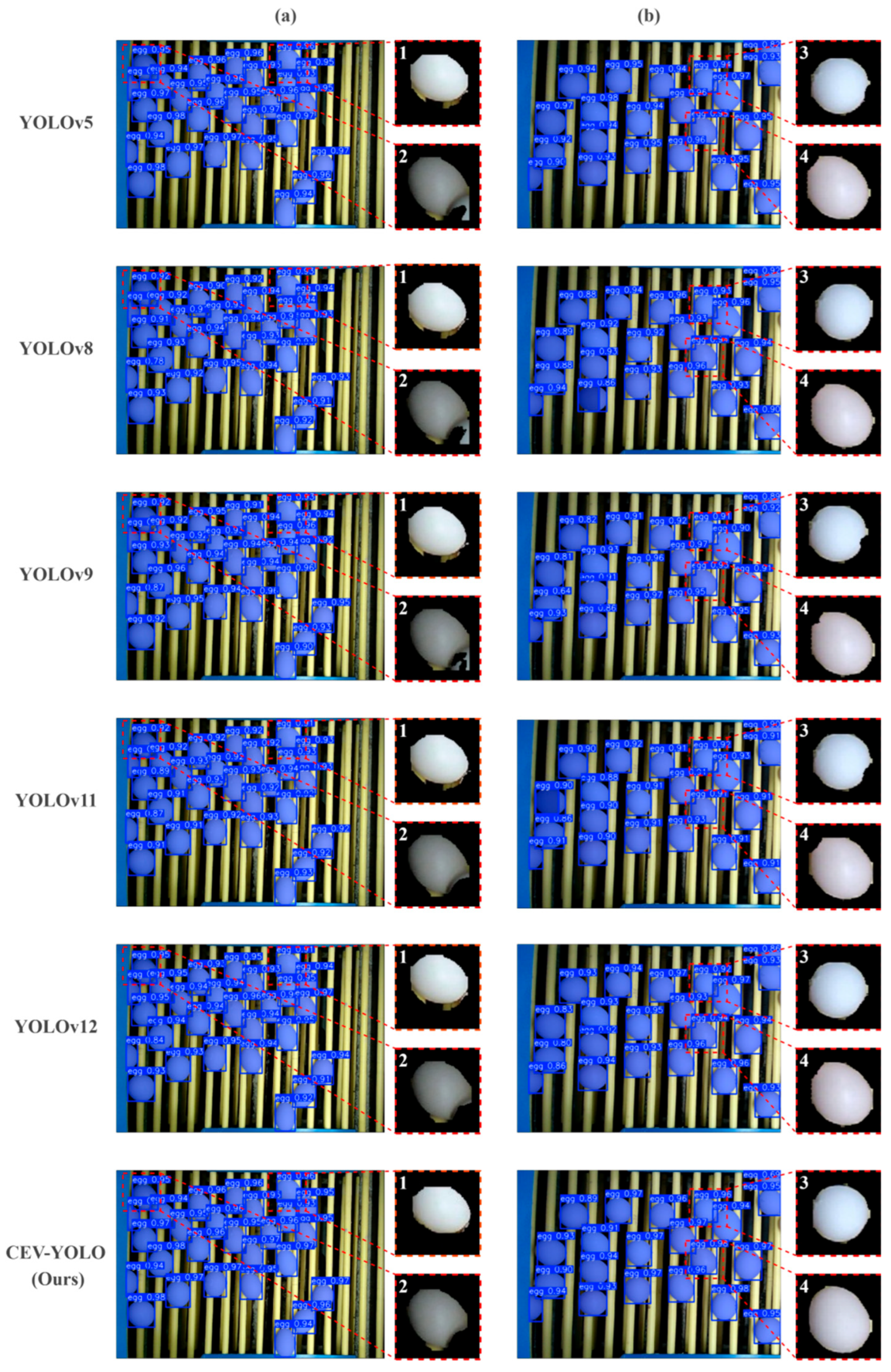

Figure 14.

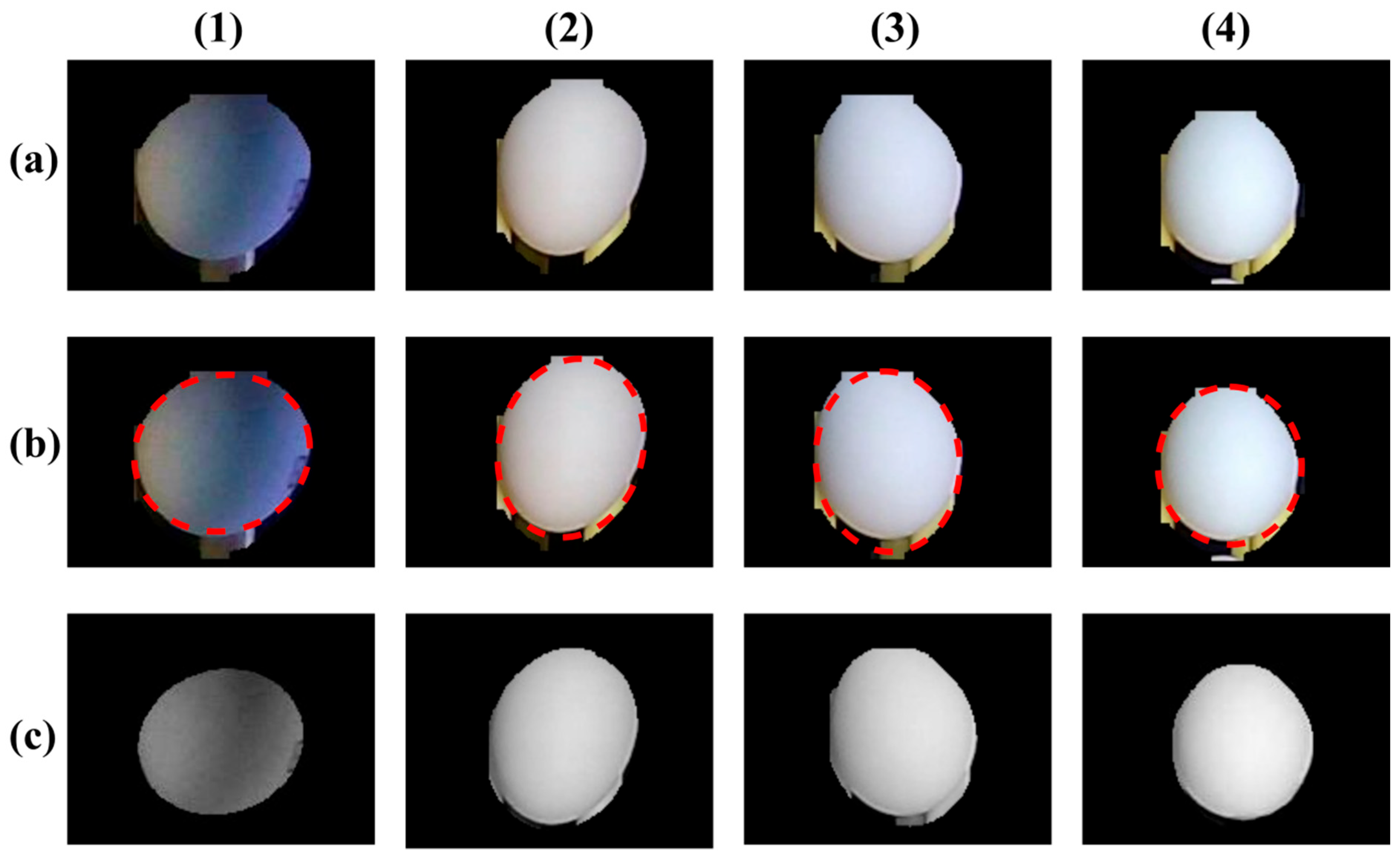

Figure 14 compares the egg segmentation results of different models. We selected two representative images from the test set (Columns a and b) and ran all six models on them. We then selected four targets from these images, extracted their corresponding masks, and magnified them locally (within the red dashed boxes) to display the comparison more intuitively.

In Column a, most models mistakenly included background in the mask of Target 1, whereas the CEV-YOLO segmentation is much more accurate. For Target 2, owing to the stacking and occlusion of eggs, most models mistakenly included part of another, overlapping egg in the target mask, but CEV-YOLO did not have this problem. In Column b, the smoothness and accuracy of the edge segmentation of Targets 3 and 4 are compared across algorithms; again, the segmentation results of CEV-YOLO are superior. As stated previously, although the Column b results from different models differ only slightly at the edges, we use the segmented masks for ellipse fitting and weight estimation, so even a tiny segmentation deviation may lead to a significant weight error. A more precise segmentation means that the feature values input into the weight estimation network are closer to the actual dimensions of the eggs.

Table 7 provides a quantitative comparison of the above models.

It should be emphasized that although each new YOLO iteration improves on its predecessor on the COCO dataset [41], this does not guarantee superior performance in specific applications. The performance of YOLO-series networks largely depends on the characteristics of the dataset [48,49]. For instance, as shown in Table 7, the AP90 of YOLOv9 for egg segmentation is much lower than that of YOLOv8.

As shown in Table 7, the number of parameters, GFLOPs, and FPS of the proposed CEV-YOLO are 3.99, 11.8, and 39.4, respectively. In terms of running speed, our algorithm is slightly slower than YOLOv5 and YOLOv11 and close to YOLOv12. However, CEV-YOLO has a significant advantage in segmentation accuracy (precision of 98.9%, recall of 97.5%, and AP90 of 89.8%). Overall, our algorithm achieves a better balance between running speed and egg segmentation accuracy.
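AP90 conventionally denotes average precision computed at a mask IoU threshold of 0.90, so a predicted egg mask counts as correct only if it overlaps a ground-truth mask almost completely. The sketch below shows that logic for one image and one class under our own simplifying assumptions: predictions are matched greedily in descending confidence order, and AP is the area under the all-point interpolated precision–recall curve. COCO-style evaluators (for example `pycocotools`) use 101-point interpolation and aggregate over the whole test set, so their numbers can differ slightly; the function names here are hypothetical.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """IoU of two boolean masks of equal shape."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter / union) if union else 0.0


def ap_at_iou(preds, gts, thr: float = 0.90) -> float:
    """preds: list of (score, mask); gts: list of masks. Single image, single class."""
    preds = sorted(preds, key=lambda p: p[0], reverse=True)
    matched, tp = set(), []
    for _, m in preds:
        best_iou, best_g = 0.0, -1
        for g, gm in enumerate(gts):
            if g in matched:
                continue  # each ground-truth egg may be matched only once
            v = mask_iou(m, gm)
            if v > best_iou:
                best_iou, best_g = v, g
        if best_iou >= thr:
            matched.add(best_g)
            tp.append(1.0)
        else:
            tp.append(0.0)  # false positive at this IoU threshold
    cum_tp = np.cumsum(np.array(tp))
    precision = cum_tp / np.arange(1, len(tp) + 1)
    recall = cum_tp / max(len(gts), 1)
    # All-point interpolation: make precision non-increasing, then integrate over recall.
    mrec = np.concatenate([[0.0], recall, [1.0]])
    mpre = np.concatenate([[0.0], precision, [0.0]])
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
```

A mask with IoU 0.8 would still count as correct under AP50 or AP75 but not under AP90, which is why this metric is sensitive to the edge quality discussed above.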

When considering both the visualization results and quantitative comparisons, our model exhibits greater advantages over other models in handling occlusions, as well as in the precision and smoothness of edge segmentation for eggs. This superiority in segmentation performance constitutes a critical prerequisite for achieving highly accurate weight estimation.

3.2. Ablation Experiment of CEV-YOLO

Compared with the baseline YOLOv11, CEV-YOLO modifies the network structure for egg segmentation. To verify the effectiveness of these modifications, we conducted an ablation study by replacing the modules in the baseline network one at a time. The results are shown in Table 8.

As can be seen from Table 8, the AP90 of the baseline model is only 85.7%. Replacing the backbone with EfficientViT increased AP90 by 2.6 percentage points, demonstrating the advantages of EfficientViT in handling occlusion and dense segmentation. However, this change increased the number of parameters, and the FPS dropped from 53.97 to 41.27. Further replacing the conventional convolutions with CDC raised AP90 to 90.9% without significantly affecting computational complexity or running speed. This result indicates that CDC enhances the model’s ability to segment edge contours and effectively addresses blurred, low-contrast egg edges. Overall, CEV-YOLO improves AP90 by 5.2 percentage points over the baseline. Although the GFLOPs and number of parameters increase, reducing the FPS from 53.97 to 39.38, we consider this acceptable: in our task, segmentation precision matters more than a slight reduction in running speed.
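Central difference convolution (CDC), originally proposed for face anti-spoofing, blends a vanilla convolution with a central-difference term: y(p0) = θ·Σ w(pn)·(x(p0 + pn) − x(p0)) + (1 − θ)·Σ w(pn)·x(p0 + pn), which simplifies to the vanilla response minus θ·x(p0)·Σ w(pn). The NumPy sketch below shows that formulation on a single-channel image. It illustrates the operator only; the default θ = 0.7 and the helper names are our assumptions, not the configuration used in CEV-YOLO.

```python
import numpy as np


def conv2d_same(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Plain 2D cross-correlation (deep-learning 'convolution'), zero padding, stride 1."""
    kh, kw = w.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, ph), (pw, pw)))
    out = np.zeros(x.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += w[i, j] * xp[i:i + x.shape[0], j:j + x.shape[1]]
    return out


def central_difference_conv(x: np.ndarray, w: np.ndarray, theta: float = 0.7) -> np.ndarray:
    """CDC: theta * sum w*(x_n - x_0) + (1 - theta) * sum w*x_n == conv(x, w) - theta * x_0 * sum(w)."""
    return conv2d_same(x, w) - theta * x * w.sum()


# Step edge: left half dark (0), right half bright (1); 3x3 averaging kernel.
img = np.zeros((5, 6))
img[:, 3:] = 1.0
kernel = np.ones((3, 3)) / 9.0
print(np.round(central_difference_conv(img, kernel, theta=1.0)[2], 3))
```

With θ = 1, interior pixels of flat regions produce zero response and only pixels next to the intensity step remain non-zero, which is the edge-emphasizing behavior the ablation attributes to CDC. With θ = 0 the operator reduces to an ordinary convolution.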

3.3. Comparison of Weight Measurement Accuracy Between Different Regression Models

Egg weight measurement can be treated as a regression task. To demonstrate the precision of our model, we selected six mainstream machine learning and deep learning algorithms for comparison with EWM-Net: SVM (Support Vector Machine) [50], KRR (Kernel Ridge Regression) [51], RF (Random Forest) [52], DT (Decision Tree) [53], MLP (Multi-Layer Perceptron) [54], and RNN (Recurrent Neural Network) [55]. The comparison results are shown in Table 9.
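The regression comparisons in this section are reported as MAE, RMSE, R², and an accuracy score (Acc). MAE, RMSE, and R² follow their standard definitions. This section does not define Acc, so the sketch below assumes Acc = 100 × (1 − mean relative error); treat that line as our assumption. The function name `regression_metrics` is hypothetical.

```python
import math
from typing import Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> dict[str, float]:
    """MAE and RMSE (g), R^2, and an assumed percentage accuracy for weight predictions."""
    n = len(y_true)
    err = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in err) / n
    rmse = math.sqrt(sum(e * e for e in err) / n)
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in err)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    # ASSUMPTION: "Acc" taken as 100 * (1 - mean relative error).
    acc = 100.0 * (1.0 - sum(abs(e) / t for e, t in zip(err, y_true)) / n)
    return {"MAE": mae, "RMSE": rmse, "R2": r2, "Acc": acc}


print(regression_metrics([50.0, 60.0, 70.0], [51.0, 59.0, 70.0]))
```

Because RMSE squares each error before averaging, it is never smaller than MAE and grows faster when a few eggs (for example tilted ones) are badly mispredicted.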

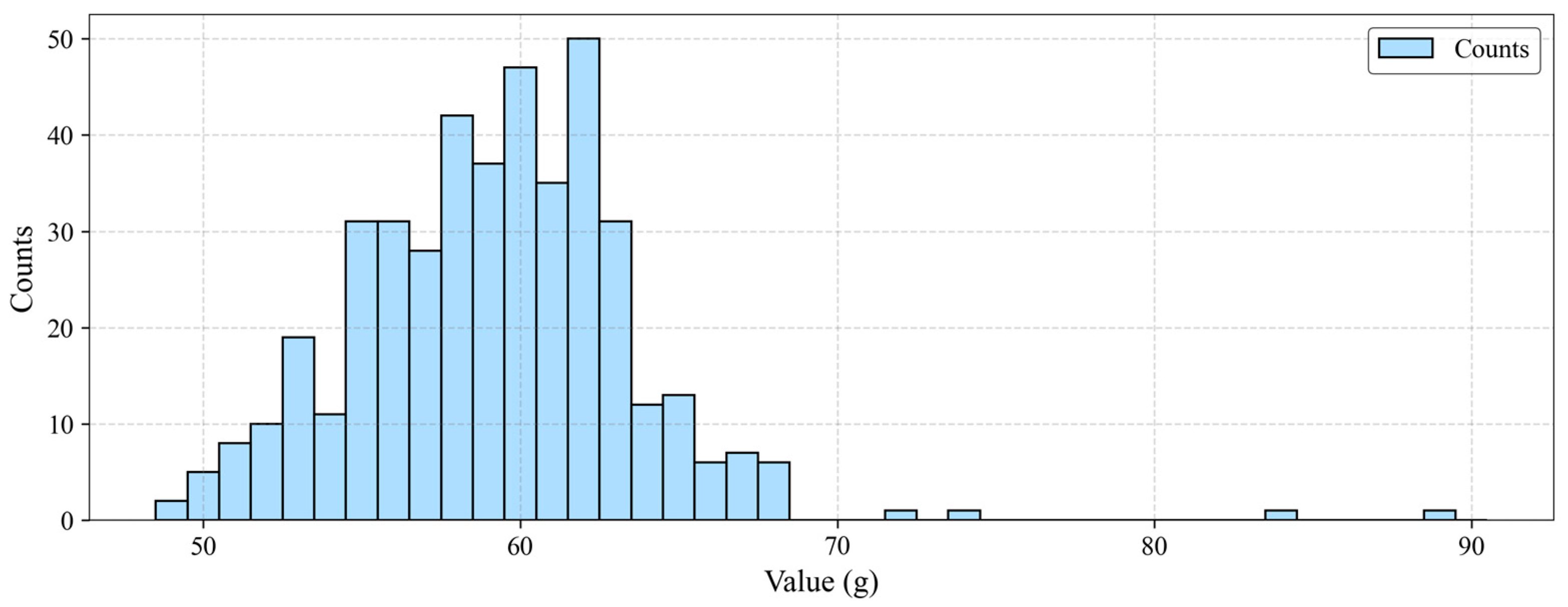

Figure 15 shows scatter plots of the test results, where the red dashed line represents the ground truth and the blue dots denote the predicted values. The closer the blue dots are to the red dashed line, the more accurate the weight prediction. In Figure 15, the display range is set between 50 g and 68 g for visual clarity.

As shown in Table 9, among the machine learning models, the accuracies of SVM and KRR (96.17% and 93.53%) are significantly lower than those of RF and DT (98.18% and 98.01%). Among the deep learning models, the accuracy of the relatively complex RNN (94.67%) is also significantly lower than that of the MLP (98.20%). This suggests that an overly complex network structure is unsuitable for predicting weight from dimensionality-reduced egg features: such a network tends to learn the noise and outliers in the data, resulting in overfitting, weaker generalization, and lower accuracy.

However, an overly simple network is also unsuitable. Our dataset contains 37,361 data records from 669 eggs, and an overly simple network would struggle to fit such a large amount of data, leading to underfitting. As the results show, EWM-Net strikes an appropriate balance in network complexity.

Building on the above experiments, we conducted replicate experiments to verify the reliability of the results. Specifically, we performed five training and validation runs with different random seeds. Across these runs, the mean MAE, RMSE, R², and Acc of EWM-Net are 0.898, 1.176, 0.926, and 98.48%, respectively, with standard deviations of 0.01, 0.01, 0.01, and 0.02. These results are consistent with the reported point metrics. We further conducted t-tests comparing the MAE of our algorithm over the five repeated runs with that of each of the six other models. All p-values are below 0.05: the p-values for SVM, KRR, and RNN are each below 0.0001, and those for RF, DT, and MLP are 0.0015, 0.0004, and 0.029, respectively. These results indicate that our algorithm significantly outperforms the compared algorithms.
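The text does not state which t-test variant was used, so the sketch below assumes Welch’s unequal-variance two-sample t-test on the per-run MAE values. It computes the t statistic and the Welch–Satterthwaite degrees of freedom using only the standard library. A two-sided p-value is then 2·sf(|t|, df) from Student’s t distribution, which `scipy.stats.t.sf` provides; alternatively, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the whole test. The inputs in the usage line are synthetic and are not the paper’s results.

```python
import math
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of mean(a) (sample variance, n - 1)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Synthetic example: five runs of two hypothetical models.
t_stat, dof = welch_t([1.0, 2.0, 3.0, 4.0, 5.0], [3.0, 4.0, 5.0, 6.0, 7.0])
print(t_stat, dof)
```

A negative t here means the first model has the lower mean MAE; Welch’s variant is a cautious default when the compared models may have different run-to-run variance.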

Figure 15 also shows that the weights predicted by EWM-Net lie closer to the ground-truth line (red dashed line) than those of the other methods, demonstrating the robustness and accuracy of our model. Additionally, EWM-Net is the only model with an MAE below 1 g (0.88 g); its R² reaches 0.926, and its accuracy is 98.52%. An analysis of the errors in this experiment shows that 74.8% of the predictions have small errors (relative error below 2%), while 4% have larger errors (relative error above 4%). The maximum error is 4.59 g, occurring for an egg whose true weight is 59.40 g but whose predicted weight is 54.81 g. Inspection shows that this egg was squeezed by neighboring eggs and therefore lay non-horizontally under the camera, which reduced feature values such as its mask area. Overall, however, our algorithm still outperforms the other algorithms.

3.4. Impact of Geometric Fitting and LOF Filtering on Weight Prediction

Our model applies ellipse fitting to egg segmentation masks to extract morphological features, which EWM-Net then processes for weight prediction. Regarding the geometric representation of eggs, Narushin et al. [30] demonstrated that both ellipse fitting and oval curve fitting provide optimal mathematical representations of eggs in 2D images. To evaluate this systematically, we implemented three fitting approaches for comparative accuracy analysis: (1) direct prediction without geometric fitting, (2) oval curve fitting, and (3) ellipse fitting. For each approach, we further examined the impact of Local Outlier Factor (LOF) filtering through controlled experiments. The comparison results are presented in Table 10.

As shown in Table 10, EWM-Net achieves an MAE of only 1.35 g in egg weight estimation without any curve fitting, and LOF filtering further reduces this error to 1.10 g. This demonstrates that, given precise segmentation, EWM-Net can achieve relatively accurate weight prediction from egg mask features alone. However, when oval (egg-shaped) curve fitting is applied, the MAE increases to 1.96 g and remains at 1.81 g even after LOF filtering. In contrast, ellipse fitting improves accuracy, reducing the MAE by 0.11 g (to 1.24 g) compared with the no-fitting baseline. With LOF refinement, the error drops to 0.88 g, with an RMSE of 1.16 g, an R² of 0.926, and an Acc of 98.52%, making it the best-performing approach among all compared methods.

These results indicate that while ellipse fitting enhances the precision of weight prediction, oval fitting leads to degradation in performance. This discrepancy suggests that the oval equation does not provide an optimal fit for egg contours, likely due to its higher complexity and greater number of parameters, making it more susceptible to noise and segmentation errors. In comparison, elliptical fitting benefits from a more constrained and regularized shape, exhibiting greater robustness to minor mask imperfections. Moreover, elliptical fitting effectively corrects segmentation inaccuracies and better captures the morphological characteristics of egg masks. Thus, it proves more suitable for our application.
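This section does not state how the ellipse is fitted to each mask. One common option, sketched below as an assumption, is a moment-based fit: the ellipse is chosen to have the same centroid and second-order central moments as the mask pixels. For a filled ellipse, the variance along an axis of full length L is L²/16, so each full axis length equals 4√λ for the corresponding covariance eigenvalue λ. A contour-based least-squares fit (for example OpenCV’s `cv2.fitEllipse`) is an equally plausible alternative; the helper name `fit_ellipse_moments` is hypothetical.

```python
import numpy as np


def fit_ellipse_moments(mask: np.ndarray):
    """Return (cx, cy, major, minor, angle_deg) of the ellipse with the mask's 2nd moments.

    Axis lengths are full lengths in pixels; the angle is measured from the image
    x-axis (image y-axis points down) and folded into [0, 180).
    """
    ys, xs = np.nonzero(mask)
    if xs.size < 3:
        raise ValueError("mask too small to fit an ellipse")
    cx, cy = xs.mean(), ys.mean()
    cov = np.cov(np.vstack([xs - cx, ys - cy]), bias=True)  # population covariance
    evals, evecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    minor_var, major_var = evals
    vx, vy = evecs[:, 1]  # eigenvector of the major axis
    angle = float(np.degrees(np.arctan2(vy, vx)) % 180.0)
    # Filled ellipse: variance along an axis of full length L is L^2 / 16.
    major = 4.0 * float(np.sqrt(major_var))
    minor = 4.0 * float(np.sqrt(max(minor_var, 0.0)))
    return float(cx), float(cy), major, minor, angle


# Synthetic axis-aligned ellipse with full axes 80 px and 50 px.
yy, xx = np.mgrid[0:201, 0:201]
demo = ((xx - 100) / 40.0) ** 2 + ((yy - 100) / 25.0) ** 2 <= 1.0
print(fit_ellipse_moments(demo))
```

Because moments average over all mask pixels, small jagged edge errors tend to cancel, which is consistent with the robustness argument made above for constrained elliptical fits.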

Regarding LOF, all three fitting methods exhibit significant MAE reductions (by 0.25 g, 0.15 g, and 0.36 g, respectively) when incorporating this preprocessing step. This demonstrates LOF’s effectiveness in filtering out outliers in egg segmentation results, which may arise from occlusions, edge effects, or artifacts introduced during segmentation and fitting. By removing such anomalies, LOF provides more reliable input data, enabling the model to better learn the underlying weight–contour relationship and ultimately improving weight prediction accuracy.
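LOF compares the local density around each sample with the densities around its k nearest neighbors; scores near 1 indicate inlier behavior, and markedly larger scores indicate outliers. The text does not specify which samples LOF is applied to or with what settings. The NumPy sketch below assumes, purely for illustration, that it runs on the per-frame feature vectors of one tracked egg, with k = 5 and a cut-off of 1.5 chosen arbitrarily; both helper names are hypothetical. For production use, scikit-learn’s `sklearn.neighbors.LocalOutlierFactor` provides a maintained implementation.

```python
import numpy as np


def local_outlier_factor(X: np.ndarray, k: int = 5) -> np.ndarray:
    """LOF score per row of X; values well above 1 suggest outliers.

    Simplification: exactly k neighbors are used (distance ties are broken
    arbitrarily), whereas the original definition includes all tied points.
    """
    n = X.shape[0]
    if not 0 < k < n:
        raise ValueError("need 0 < k < number of samples")
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)  # pairwise distances
    np.fill_diagonal(d, np.inf)  # a point is not its own neighbor
    nbrs = np.argsort(d, axis=1)[:, :k]  # indices of the k nearest neighbors
    k_dist = d[np.arange(n), nbrs[:, -1]]  # distance to the k-th neighbor
    # Reachability distance of p from neighbor o: max(k_dist(o), d(p, o)).
    reach = np.maximum(k_dist[nbrs], d[np.arange(n)[:, None], nbrs])
    lrd = 1.0 / (reach.mean(axis=1) + 1e-12)  # local reachability density
    return lrd[nbrs].mean(axis=1) / lrd


def filter_inliers(X: np.ndarray, k: int = 5, threshold: float = 1.5) -> np.ndarray:
    """Keep rows whose LOF score does not exceed the (assumed) threshold."""
    return X[local_outlier_factor(X, k) <= threshold]


# Six consistent feature vectors plus one corrupted frame (e.g. an occluded mask).
frames = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5], [0, 0.5], [10, 10]], float)
print(local_outlier_factor(frames, k=3).round(2))
```

Because LOF is density-relative, it can reject a frame that is only moderately far from the others in absolute terms, provided the remaining frames of the same egg are tightly clustered.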

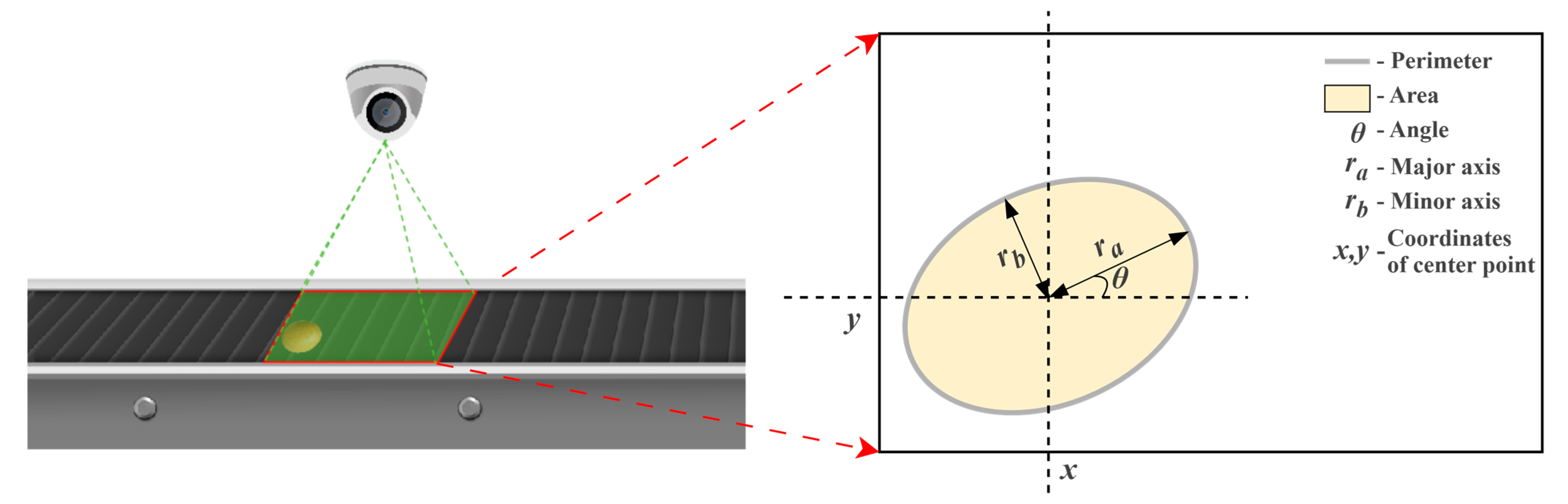

3.5. Impact of Multi-Feature Extraction on Weight Prediction

In contrast to traditional vision-based egg weight prediction methods, our approach uses six parameters for multi-feature regression. Notably, for the camera’s field of view to cover the egg collection transmission line, the camera must be mounted relatively high. Consequently, eggs at different positions in the image lie at significantly different distances from the camera. Therefore, in addition to the characteristics of the egg itself (the lengths of the major and minor axes, perimeter, projected area, and horizontal tilt angle), we also use the coordinates of the egg’s center point in the image as a regression feature. We conducted ablation experiments on these features to investigate their impact on weight prediction accuracy. The results are shown in Table 11.
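The sketch below turns fitted ellipse parameters into a feature vector of the kind described above. This section does not specify how perimeter and area are computed, so we assume both come from the fitted ellipse: area as π·a·b and perimeter via Ramanujan’s second approximation, where a and b are the semi-axes. Normalizing the center coordinates by image width and height is also our assumption. The sketch emits seven numbers because the grouping into the six parameters named above is not given here, and the function name `egg_features` is hypothetical.

```python
import math


def egg_features(cx: float, cy: float, major: float, minor: float,
                 angle_deg: float, img_w: int, img_h: int) -> list[float]:
    """Build a per-frame feature vector from a fitted ellipse (full axis lengths in px)."""
    a, b = major / 2.0, minor / 2.0  # semi-axes
    h = ((a - b) / (a + b)) ** 2
    # Ramanujan's second approximation to the ellipse perimeter.
    perimeter = math.pi * (a + b) * (1.0 + 3.0 * h / (10.0 + math.sqrt(4.0 - 3.0 * h)))
    area = math.pi * a * b  # projected ellipse area in px^2
    # Position features let the regressor compensate for camera distance and perspective.
    return [major, minor, perimeter, area, angle_deg, cx / img_w, cy / img_h]


print(egg_features(320.0, 240.0, 60.0, 45.0, 12.0, 640, 480))
```

Feeding the normalized center coordinates alongside the shape features gives the regressor a way to learn how the same physical egg appears larger or smaller in different parts of the frame.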

Initially, we only used the perimeter of the egg’s projection plane for weight regression prediction, resulting in a relatively large MAE of 2.19 g. When the projection area was added as a feature, the MAE significantly decreased to 1.74 g, representing a 0.45 g reduction compared to single-feature regression. Subsequently, by incorporating the egg’s tilt angle and the lengths of its major and minor axes as regression features, the MAE further decreased by 0.1 g. Most importantly, after inputting the central coordinates of the egg in the image as a feature into EWM-Net, the model learned the influence of the egg’s different positions on its projection relationship and linear distance. At this point, the MAE of weight measurement was only 0.88 g, with an accuracy of 98.52%.

This result indicates that single features have limited representational ability for the egg image–weight relationship. By introducing multi-feature extraction methods, especially the inclusion of coordinate information, the model’s capability to construct projection geometric relationships and environmental perception is enhanced, significantly improving the accuracy of egg weight prediction.

4. Conclusions

This study develops a computer vision-based multi-feature extraction and regression method to address the challenges of efficient, automated egg weight measurement in egg production. Specifically, integrating the CDC block and EfficientViT backbone into YOLOv11 yields CEV-YOLO, which significantly improves pixel-level egg segmentation accuracy. It effectively handles occlusion and dense segmentation while maintaining an acceptable running speed. The proposed EWM-Net, with its optimized network structure, accurately models the relationship between egg features and weight, outperforming other mainstream regression models in precision. Moreover, ellipse fitting and LOF filtering further improve weight estimation accuracy by extracting features effectively and removing outliers. The experimental results, with an MAE of 0.88 g and an R² of 0.926, demonstrate the high precision of this method. This research provides a practical and efficient solution for automated egg weight measurement in laying hen farms. It is expected to improve production efficiency, reduce labor costs, and offer valuable references for weight estimation of other agricultural products.

However, our model has not yet been tested for generalization across different breeds or laying hen farms; future work will address this limitation. We will also refine the model to further improve the accuracy of egg weight prediction and deploy it on edge devices. Integrated with our custom monitoring system, the deployed model will support offline computation while storing results in an online database. Additionally, we will develop other functions to meet farmers’ needs, such as detecting dirty or cracked eggs and an automated computer vision-based sorting system.