1. Introduction

Litchi is an important economic fruit tree widely cultivated in South China and globally, valued for its tender flesh and unique flavor [1]. As the world’s leading producer of litchi, China contributes approximately one-third of the annual global litchi yield. Cultivation is primarily concentrated in southern hilly regions, with the related industry generating an annual output value exceeding 4 billion USD [2].

Litchi fruit yield is influenced not only by external factors such as climate, fertilization, and irrigation [3], but also by the number of flowers, a key indicator of internal tree development, which significantly determines fruit set rate, fruit weight, and skin color [4]. An excessive flower number may cause uneven nutrient distribution and inhibit fruit development, while an insufficient number can reduce final yield [5].

Currently, litchi flower monitoring relies primarily on manual counting, a process that is time-consuming, labor-intensive, and highly dependent on human experience [6]. This approach suffers from strong subjectivity, high error rates, and low efficiency, making it difficult to meet the modern orchard’s demand for large-scale, automated, and precise management. Consequently, developing efficient automated litchi flower detection technology has substantial practical significance and application value.

In recent years, computer vision technology, especially deep learning-based methods, has made significant progress in agricultural target detection tasks and has gradually been applied to fruit flower identification and counting. Early studies primarily adopted traditional image processing approaches. For instance, D’Orj et al. [7] utilized Gaussian filters and color analysis for orange blossom detection; Hočevar et al. [8] employed the HSL (hue, saturation, lightness) color space to analyze image color features for estimating apple flower cluster density. However, these conventional techniques are sensitive to lighting conditions, background complexity, and variations in flower morphology, leading to limited robustness in practical scenarios.

Breakthroughs in deep learning have driven significant progress in multi-species fruit flower detection. Dias et al. [9] proposed an improved semantic segmentation network for robust detection of apple, peach, and pear flowers across diverse scenarios. Additionally, their team [10] developed an apple flower detection model based on deep convolutional neural networks, achieving an average precision (AP) of 97.20% and an F1-score of 92.10% on public datasets, substantially outperforming traditional image processing approaches. Bhattarai et al. [11] proposed CountNet, a weakly supervised regression algorithm for apple flower detection and counting. Jaju and Chandak [12] classified and identified multi-class flower images using ResNet-50 and transfer learning strategies. Lin et al. [13] utilized an improved VGG19 backbone with Faster R-CNN to detect strawberry flowers, achieving an accuracy of 86.1% and providing support for yield estimation. Farjon et al. [14] proposed an apple flower detection system based on Faster R-CNN to assist precision thinning decisions, although detection accuracy still required improvement. Sun et al. [15] proposed an improved YOLOv5-based peach flower detection method, introducing CAM and FSM modules along with K-means++ clustering and SIoU loss, which increased the AP for flower buds, flowers, and fallen flowers by 7.8%, 10.1%, and 3.4%, respectively. Bai et al. [16] incorporated a Swin Transformer prediction head and a GS-ELAN optimization module into YOLOv7, improving the detection performance of strawberry flowers and fruits under small object, similar color, and occlusion conditions. More recently, Wang et al. [17] introduced YO-AFD, an apple flower detection method based on improved YOLOv8, which enhances detection accuracy and model robustness in complex scenarios by incorporating a C2f-IS module and an optimized FIoU (Focal Intersection over Union) regression loss function.

Although the aforementioned studies have made progress in detecting flowers of apples, peaches, strawberries, and other fruit trees, research on litchi flower detection remains relatively scarce. Lin et al. [5] addressed male litchi flower estimation using a multicolumn convolutional neural network based on a density map, achieving a mean absolute error (MAE) of 16.29 and a mean squared error (MSE) of 25.40 on a self-built dataset. Although this represents a breakthrough, the method does not provide target-level detection or position information, and challenges remain for the actual detection of dense flower clusters.

These research findings indicate that deep learning demonstrates significant advantages in the field of fruit flower detection.

In addition to algorithmic research, the integration of remote sensing and artificial intelligence technologies has further advanced the development of precision agriculture. Unmanned aerial vehicle (UAV) remote sensing systems offer advantages such as high image resolution, fast data collection, low cost, and wide coverage, making them particularly suitable for crop detection and yield estimation tasks in large-scale orchards [18]. Researchers have combined UAV imagery with object detection algorithms and applied them widely to fruit detection tasks. For instance, Xiong et al. [19] achieved 96.1% accuracy detecting green mangoes using UAV imagery with YOLOv2, while Wang et al. [20] improved the YOLOv5 model to build a litchi fruit detection model, achieving a mean average precision (mAP) of 92.4% on the test set, and developed a corresponding mobile app, with the correlation (R²) between predicted results and actual observations reaching 0.9879.

Additionally, Huang et al. [21] designed a citrus detection system based on mobile platforms and edge computing devices, combining UAV-collected images with an improved YOLOv5 algorithm, achieving 93.32% detection accuracy on edge devices. Zheng et al. [22] combined UAV imagery with YOLOv5 to develop the Fruit-YOLO model, and further improved citrus detection performance through image augmentation, achieving an mAP of 0.929. Concurrently, Yang et al. [23] coupled YOLOv8 with UAV RGB imagery for oil tea fruit detection and counting, achieving 82% mAP and a correlation coefficient R² of 0.94, demonstrating robust versatility. In the litchi domain, Xiong et al. [24] proposed an improved YOLOv5-based litchi fruit detection method, enhancing the feature fusion network and introducing a new loss function, which increased overall AP to 72.6%, effectively achieving precise detection of dense litchi fruits in UAV imagery. Liang et al. [25] adapted YOLOv7 to attain 96.1% mAP on custom datasets, while Peng et al. [26] constructed a litchi remote sensing dataset and proposed the YOLO-Litchi model, achieving improvements of 1.5% and 2.4% in mAP and recall rate, respectively, compared to the original YOLOv5.

Although existing studies have shown that combining UAVs with deep object detection algorithms achieves good accuracy and deployability in fruit detection, research on the automatic detection of litchi flowers remains scarce. Compared to fruit targets, litchi flowers exhibit dense cluster-like distributions, loose structures, complex shapes, varying scales, and severe occlusions in natural environments, posing greater challenges for object detection.

As a leading single-stage object detection framework, the YOLO family of algorithms strikes an effective compromise between inference speed and precision through its end-to-end modeling, making it well suited to agricultural contexts where real-time processing and lightweight deployment are critical. In contrast, two-stage approaches such as R-CNN [27], Fast R-CNN [28], and Faster R-CNN [29] can achieve higher accuracy but exhibit slower inference, which limits their suitability for real-time detection and for use on mobile platforms or other resource-constrained devices.

Given these considerations, this paper introduces a litchi flower cluster detection technique utilizing an enhanced YOLO11n. The main contributions are summarized below:

- (1)

We present the WSD-YOLO litchi flower cluster detection model, which incorporates three advancements based on YOLO11 aimed at boosting the detection accuracy of litchi flower clusters;

- (2)

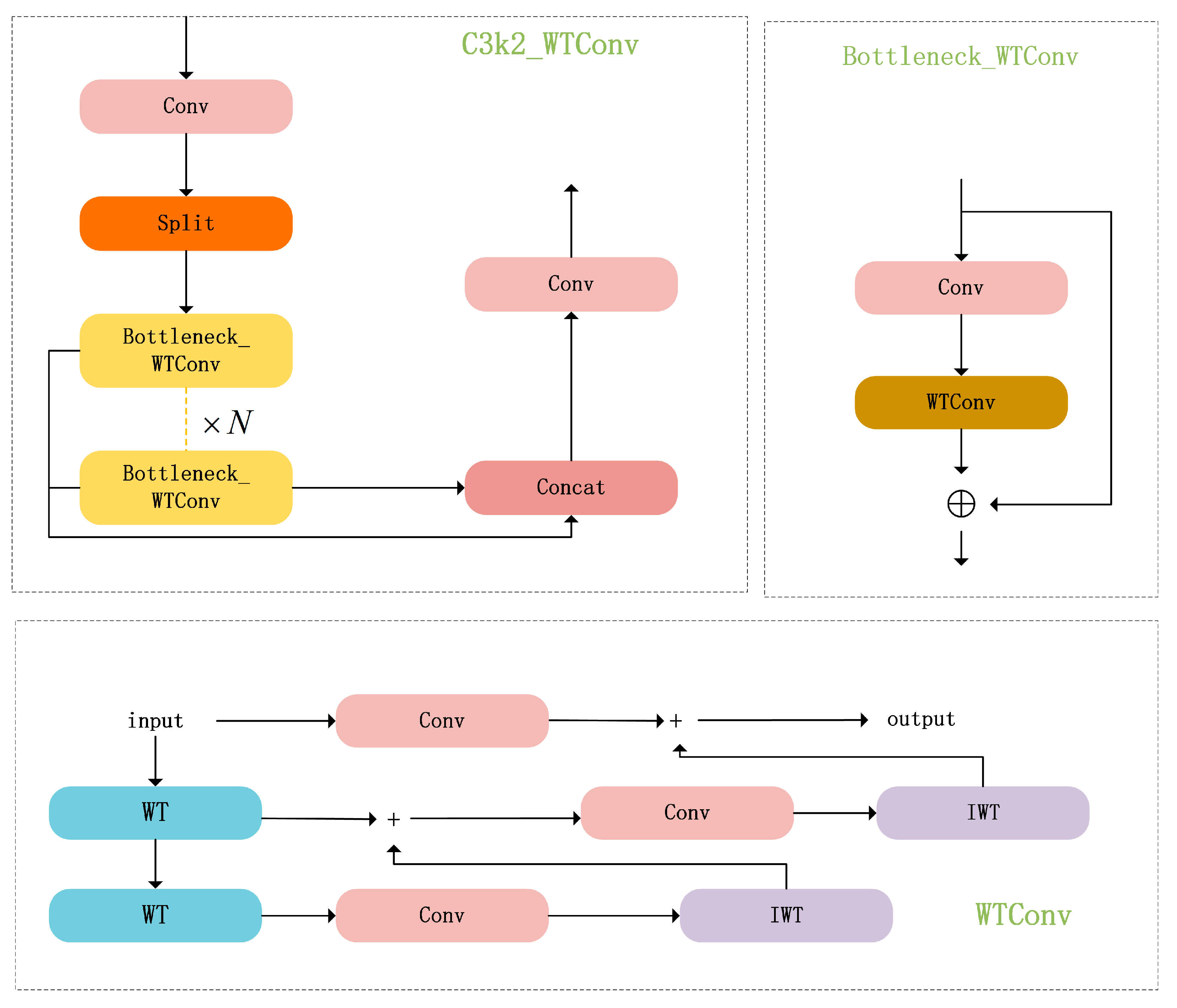

The WTConv module, featuring wavelet transformation, is added to refine the C3k2 module within the backbone network. The C3k2_WTConv module is intended to broaden the network’s receptive field, thus strengthening its feature extraction capabilities;

- (3)

The lightweight SlimNeck module is incorporated within the neck network to improve the efficiency of multi-scale feature fusion and integration of various morphological features, while decreasing parameter redundancy;

- (4)

Replacing the upsampling module in YOLO11 with the adaptive scale-aware DySample module to effectively mitigate detection degradation caused by scale changes.

The improved model is intended to boost the accuracy and robustness of litchi flower cluster detection, providing reliable technical support for intelligent management of orchard flowering periods and yield prediction, while also offering a referenceable model optimization approach for small-object dense target detection tasks.

2. Materials and Methods

This section describes the materials and methods that support model development and experimental validation. It first introduces the UAV image acquisition scheme and the dataset construction process, then outlines the network structure of YOLO11 and its advantages, and finally details the three core improvement modules of the WSD-YOLO model (C3k2_WTConv, SlimNeck, and DySample), providing the methodological basis for the performance experiments in the subsequent sections.

2.1. Image Acquisition

This study utilized the DJI Mavic 3T UAV (Manufacturer: DJI; Location: Shenzhen, China) as the data collection platform, conducting field data collection at the Litchi Exposition Park in Conghua District, Guangzhou City, Guangdong Province, from 16 to 17 April 2025. The geographical coordinates of the site are 23°33′ N and 113°35′ E. The main litchi variety was Jinggang Hongnuo, and the data collection scene is shown in Figure 1. During the data collection period, the weather was clear with almost no wind, ensuring that environmental factors such as wind or leaf movement did not interfere with the acquisition of experimental data.

The litchi trees in the experimental orchard were approximately 3–6 m in height, and the UAV’s flight altitude was maintained between 5 m and 10 m (measured as the relative height from the ground to the UAV) to ensure image clarity and the discernibility of flower cluster details. The UAV was equipped with a high-resolution camera (4000 × 3000), and images were captured at this resolution. Shooting sessions were conducted daily from 9:00 AM to 4:00 PM, covering various natural lighting conditions such as front lighting and backlighting. A combination of aerial view and side view was used to enhance the diversity of the image scenes, as shown in Figure 2.

In the sampling design, four evenly distributed plots were selected within the Litchi Exposition Park, and five Jinggang Hongnuo litchi trees were randomly chosen from each plot as sampling targets, resulting in a total of 20 trees.

2.2. Dataset Construction and Data Augmentation

After data collection, the original images were screened to remove low-quality images such as blurry or overexposed ones. Specifically, the variance of Laplacian was used as a sharpness metric, and images with a variance lower than 100 were considered blurry and discarded. For overexposure detection, each image was converted to grayscale to compute its brightness histogram; images with more than 10% of pixels having grayscale values greater than 240 were considered overexposed and removed. These quantitative criteria ensured the objectivity and reproducibility of the filtering process. A total of 807 valid images were retained. The original dataset was divided based on individual trees: 14 trees were used for training, 4 for validation, and 2 for testing, following a ratio of 7:2:1.
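The two screening rules above can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function names are hypothetical, the Laplacian is computed with a plain 4-neighbour kernel in NumPy over the image interior (library implementations handle borders differently, so variances may differ slightly), and the input is assumed to be an 8-bit grayscale array.

```python
import numpy as np

BLUR_THRESHOLD = 100.0   # variance-of-Laplacian cutoff stated in the text
BRIGHT_LEVEL = 240       # grayscale value above which a pixel counts as very bright
BRIGHT_FRACTION = 0.10   # maximum tolerated fraction of very bright pixels


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour Laplacian, evaluated on interior pixels only."""
    g = gray.astype(np.float64)  # avoid uint8 overflow
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def is_blurry(gray: np.ndarray, threshold: float = BLUR_THRESHOLD) -> bool:
    """An image is treated as blurry when its Laplacian variance is below the cutoff."""
    return laplacian_variance(gray) < threshold


def is_overexposed(gray: np.ndarray, level: int = BRIGHT_LEVEL,
                   fraction: float = BRIGHT_FRACTION) -> bool:
    """An image is treated as overexposed when too many pixels exceed `level`."""
    return float(np.mean(gray > level)) > fraction


def keep_image(gray: np.ndarray) -> bool:
    """Retain an image only if it passes both screening rules."""
    return not is_blurry(gray) and not is_overexposed(gray)
```

Because both rules reduce to a single scalar compared against a fixed threshold, the screening can be rerun on new flights without manual inspection.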

LabelImg software (https://github.com/tzutalin/labelImg (accessed on 3 December 2018)) was used to label the samples in the images. The samples were annotated by category as “flower cluster (cluster)”, with rectangular boxes drawn around the regions of interest (ROIs). The annotation interface is shown in Figure 3. The image annotation information was saved in YOLO format, with each image corresponding to a similarly named .txt file.
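For readers unfamiliar with the YOLO label format, each line of the .txt file stores a class index followed by the box center and size, all normalized by the image dimensions. The helpers below are a hypothetical sketch of that conversion (the function names are not from the paper); the single "flower cluster" class is assumed to have index 0.

```python
def to_yolo_line(class_id, xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert a pixel-space corner box into one YOLO-format label line."""
    xc = (xmin + xmax) / 2.0 / img_w   # normalized box center x
    yc = (ymin + ymax) / 2.0 / img_h   # normalized box center y
    w = (xmax - xmin) / img_w          # normalized box width
    h = (ymax - ymin) / img_h          # normalized box height
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line, img_w, img_h):
    """Parse one YOLO-format label line back into a pixel-space corner box."""
    cls, xc, yc, w, h = line.split()
    xc, yc = float(xc) * img_w, float(yc) * img_h
    w, h = float(w) * img_w, float(h) * img_h
    return int(cls), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


# Example with the 4000 x 3000 image size used in this study
line = to_yolo_line(0, 1000, 750, 3000, 2250, 4000, 3000)
```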

To ensure consistent annotation standards and reduce the impact of human subjectivity on model training, all flower clusters were uniformly labeled as a single category. This strategy was adopted because the images were captured from long-range wide-angle views to cover single litchi trees with a large canopy area, where the image resolution is limited, litchi flowers are small, densely distributed, and often partially occluded. Under such conditions, it is extremely difficult to visually distinguish subtle differences in flower size or flowering stage, making fine-grained classification impractical. Therefore, using a single “flower cluster” category allows the model to focus on accurate overall quantity detection and improves its generalization performance on large-scale aerial imagery.

Subsequently, in order to strengthen the model’s robustness and generalization, augmentation techniques were utilized on the images in the original training, validation, and test sets, while ensuring that the annotation information was synchronously updated and preserved during the augmentation process.

Specifically, the data augmentation operations included rotation, brightness adjustment, random cropping, and adding Gaussian noise. For rotation, the images were randomly rotated within the range of [−30°, +30°] to simulate different UAV attitudes and viewpoints during image capture. For brightness adjustment, a brightness factor was randomly sampled within [0.5, 1.5] to simulate varying lighting conditions. For random cropping, 70–90% of the original image area was randomly retained with random starting coordinates to simulate scenarios where targets are partially occluded or the image regions are incomplete. For noise addition, Gaussian noise with a mean of 0 and variance of 0.1 was added to simulate random environmental interference during actual data collection. After the augmentation, the total number of images was expanded to 1630, 467, and 233 for the training, validation, and test sets, respectively, maintaining the original 7:2:1 ratio. The augmented dataset contained 148,865 flower cluster samples, as shown in Table 1. The dataset was used for training the model, optimizing hyperparameters, and assessing performance.

Figure 4 displays a selection of the augmented images.
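Three of the four augmentation operations can be sketched in NumPy as shown below; rotation is omitted for brevity because it also requires rotating the box corners. The sketch rests on several assumptions that the text does not specify: images are float arrays scaled to [0, 1], the noise variance of 0.1 is interpreted on that scale, the crop preserves the aspect ratio, and boxes are pixel-space corner boxes that are clipped to the crop and dropped when nothing remains. All function names are hypothetical.

```python
import numpy as np


def adjust_brightness(img, rng, low=0.5, high=1.5):
    """Scale intensities by a factor sampled uniformly from [low, high]."""
    factor = rng.uniform(low, high)
    return np.clip(img * factor, 0.0, 1.0)


def add_gaussian_noise(img, rng, mean=0.0, var=0.1):
    """Add zero-mean Gaussian noise with the given variance (on the [0, 1] scale)."""
    noise = rng.normal(mean, np.sqrt(var), size=img.shape)
    return np.clip(img + noise, 0.0, 1.0)


def random_crop(img, boxes, rng, min_area=0.7, max_area=0.9):
    """Keep 70-90% of the image area and update (N, 4) xmin/ymin/xmax/ymax boxes."""
    h, w = img.shape[:2]
    side = np.sqrt(rng.uniform(min_area, max_area))   # per-side scale factor
    ch, cw = int(round(h * side)), int(round(w * side))
    y0 = int(rng.integers(0, h - ch + 1))             # random top-left corner
    x0 = int(rng.integers(0, w - cw + 1))
    cropped = img[y0:y0 + ch, x0:x0 + cw]

    # Shift boxes into crop coordinates, then clip them to the crop window
    shifted = boxes.astype(np.float64) - np.array([x0, y0, x0, y0])
    shifted[:, [0, 2]] = np.clip(shifted[:, [0, 2]], 0, cw)
    shifted[:, [1, 3]] = np.clip(shifted[:, [1, 3]], 0, ch)

    # Discard boxes that fall entirely outside the crop
    keep = (shifted[:, 2] > shifted[:, 0]) & (shifted[:, 3] > shifted[:, 1])
    return cropped, shifted[keep]
```

Updating the boxes inside the same function as the crop is one simple way to keep annotations synchronized with the transformed image, as the text requires.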

2.3. YOLO11 Model

YOLO11 [30] is an object detection algorithm released by Ultralytics (Frederick, MD, USA) in 2024. Figure 5 illustrates the architecture, which is composed of four primary components: Input layer, Backbone, Neck, and Head. YOLO11 offers an improved trade-off between detection speed and accuracy, which is valuable for challenging tasks such as small object detection and real-time processing. It is therefore a suitable basis for detecting litchi flower clusters.

2.4. Improved YOLO11n Model

An enhanced YOLO11n-based approach for litchi flower cluster detection, termed WSD-YOLO, is presented in this work to improve detection accuracy. The architectural layout of the WSD-YOLO model can be found in Figure 6.

2.4.1. C3k2_WTConv

This paper introduces WTConv [31] to improve the Bottleneck in the C3k2 module of the YOLO11 backbone network. The improved C3k2_WTConv module is shown in Figure 7.

WTConv (Wavelet Transform Convolution) is an improved convolution method that combines wavelet transform with traditional convolution operations. By integrating frequency domain analysis and spatial feature extraction, it effectively enhances feature representation capabilities. Its working principle is as follows:

First, the one-dimensional wavelet transform is extended to a two-dimensional form, constructing four sets of feature filters, $f_{LL}$, $f_{LH}$, $f_{HL}$, and $f_{HH}$, as shown in Equation (1).

For a given input image $X$, applying convolution (Conv) with these filters decomposes it into four corresponding frequency-domain subband components $X_{LL}$, $X_{LH}$, $X_{HL}$, and $X_{HH}$, as shown in Equation (2).

Then, the inverse wavelet transform is obtained via transposed convolution to reconstruct the original image, as shown in Equation (3).
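The decomposition and reconstruction steps referenced as Equations (1) to (3) can be written out as follows. This is a reconstruction that assumes the Haar wavelet basis used in the original WTConv formulation [31], with the decomposition applied as a stride-2 depthwise convolution; the symbol names follow the subband labels (LL, LH, HL, HH) and are assumptions rather than a transcription.

$$
f_{LL} = \frac{1}{2}\begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix},\quad
f_{LH} = \frac{1}{2}\begin{bmatrix} 1 & -1 \\ 1 & -1 \end{bmatrix},\quad
f_{HL} = \frac{1}{2}\begin{bmatrix} 1 & 1 \\ -1 & -1 \end{bmatrix},\quad
f_{HH} = \frac{1}{2}\begin{bmatrix} 1 & -1 \\ -1 & 1 \end{bmatrix}
\tag{1}
$$

$$
\left[X_{LL}, X_{LH}, X_{HL}, X_{HH}\right] = \mathrm{Conv}\left(\left[f_{LL}, f_{LH}, f_{HL}, f_{HH}\right], X\right)
\tag{2}
$$

$$
X = \mathrm{Conv}^{T}\left(\left[f_{LL}, f_{LH}, f_{HL}, f_{HH}\right], \left[X_{LL}, X_{LH}, X_{HL}, X_{HH}\right]\right)
\tag{3}
$$

Because the four Haar filters form an orthonormal basis, the transposed convolution in Equation (3) recovers the input exactly.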

This process implements the wavelet transform (WT) and its inverse transform (IWT) based on convolution operations, as shown in Equations (4) and (5).

where Conv denotes the convolution operation, $T$ denotes transposition, WT denotes the wavelet transform, IWT denotes the inverse wavelet transform operation, $Y$ denotes the reconstructed features obtained through inverse wavelet transform and convolution, and $W$ denotes the convolution kernel weights.
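In compact form, the relations described here can be sketched as below. The grouping into Equations (4) and (5) is an assumption based on the symbol definitions given in the text and on the WTConv formulation in [31]; $F$ is shorthand introduced here for the filter bank.

$$
\mathrm{WT}(X) = \mathrm{Conv}(F, X), \qquad
\mathrm{IWT}(\cdot) = \mathrm{Conv}^{T}(F, \cdot), \qquad
F = \left[f_{LL}, f_{LH}, f_{HL}, f_{HH}\right]
\tag{4}
$$

$$
Y = \mathrm{IWT}\left(\mathrm{Conv}\left(W, \mathrm{WT}(X)\right)\right)
\tag{5}
$$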

To achieve multi-scale feature extraction, WTConv employs a cascaded decomposition strategy. At the $i$-th layer ($i \geq 1$), the low-frequency component $X_{LL}^{(i-1)}$ from the previous layer is further subjected to the wavelet transform, as shown in Equation (6).

At each decomposition level, the extracted frequency-domain components (integrating low-frequency $X_{LL}^{(i)}$ and high-frequency $X_{H}^{(i)}$) are subjected to convolution (Conv) for feature learning, and then the low-frequency and high-frequency feature outputs $Y_{LL}^{(i)}$ and $Y_{H}^{(i)}$ are obtained, as shown in Equation (7).

The final feature fusion and output reconstruction are performed using the inverse wavelet transform. This method leverages the linear properties of the inverse wavelet transform to establish a top-down feature fusion framework, as shown in Equations (8) and (9).

where $X$ and $Y$ represent two feature maps, $Z^{(i)}$ and $Z^{(i+1)}$ denote the fused features at the $i$-th and $(i+1)$-th layers, respectively, and $Y_{LL}^{(i)}$ and $Y_{H}^{(i)}$ denote the low-frequency and high-frequency feature components at the $i$-th layer, respectively.
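The cascade and fusion steps referenced as Equations (6) to (9) can be reconstructed as follows, assuming the formulation of the original WTConv work [31]. Here $X_{LL}^{(0)} = X$, $X_{H}^{(i)}$ collects the three high-frequency subbands at level $i$, and $W^{(i)}$ denotes the level-$i$ convolution weights; these conventions are assumptions.

$$
X_{LL}^{(i)},\; X_{H}^{(i)} = \mathrm{WT}\left(X_{LL}^{(i-1)}\right)
\tag{6}
$$

$$
Y_{LL}^{(i)},\; Y_{H}^{(i)} = \mathrm{Conv}\left(W^{(i)}, \left(X_{LL}^{(i)}, X_{H}^{(i)}\right)\right)
\tag{7}
$$

$$
\mathrm{IWT}(X + Y) = \mathrm{IWT}(X) + \mathrm{IWT}(Y)
\tag{8}
$$

$$
Z^{(i)} = \mathrm{IWT}\left(Y_{LL}^{(i)} + Z^{(i+1)},\; Y_{H}^{(i)}\right)
\tag{9}
$$

Equation (8) is what allows the deeper-level result $Z^{(i+1)}$ to be added to the low-frequency output before a single inverse transform, rather than inverting each level separately.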

By integrating frequency-domain analysis with spatial feature extraction, WTConv expands the receptive field of the YOLO network, which is intended to improve the detection of litchi flower clusters in complex natural environments. The multi-scale characteristics of wavelets enhance robustness against partial occlusion or shadows of flower clusters, while high-frequency components aid in detecting small target flower clusters, and low-frequency components stabilize the localization of the main object.
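To make the wavelet step concrete, the sketch below implements a single-level 2D Haar transform and its inverse on one channel in NumPy, together with the cascaded decomposition. It is an illustration only, under the assumption of a Haar basis: it omits the learnable convolutions that WTConv applies to each subband, and the function names are hypothetical.

```python
import numpy as np


def haar_wt(x):
    """Single-level 2D Haar transform of an (H, W) array with even H and W."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]   # top-left and top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]   # bottom-left and bottom-right
    ll = (a + b + c + d) / 2.0            # low-frequency approximation
    lh = (a - b + c - d) / 2.0            # horizontal differences
    hl = (a + b - c - d) / 2.0            # vertical differences
    hh = (a - b - c + d) / 2.0            # diagonal differences
    return ll, lh, hl, hh


def haar_iwt(ll, lh, hl, hh):
    """Inverse of haar_wt: rebuild the (2H, 2W) array from its four subbands."""
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w), dtype=np.float64)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x


def cascade(x, levels):
    """Repeatedly decompose the low-frequency band, as in the cascaded strategy."""
    high_bands = []
    ll = x
    for _ in range(levels):
        ll, lh, hl, hh = haar_wt(ll)
        high_bands.append((lh, hl, hh))
    return ll, high_bands
```

Each level halves the spatial resolution of the low-frequency band, so a small kernel applied at a deeper level covers a proportionally larger region of the original input. This is the mechanism by which the receptive field grows without enlarging the kernel.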

2.4.2. SlimNeck

This paper introduces the SlimNeck [32] architecture to enhance the neck network of YOLO11, improving the efficiency of multi-scale feature fusion and diverse morphological feature integration while reducing parameter redundancy.

SlimNeck is a lightweight neck network architecture designed to address the trade-off between real-time performance and edge deployment requirements in object detection tasks. Traditional convolutional models often find it challenging to reconcile lightweight characteristics with detection accuracy. To address this limitation, the SlimNeck architecture introduces a lightweight convolution technique termed GSConv. Based on GSConv, it designs the GSBottleneck module and the VoVGSCSP module, which together form the SlimNeck lightweight neck network. This design reduces model complexity and computational overhead while preserving detection accuracy, achieving a balance between lightweight design and detection performance.

GSConv consists of standard convolution (Conv), depthwise separable convolution (DSC) [33], and shuffle operations, with its structure shown in Figure 8. Its workflow proceeds as follows: First, the input information is split into multiple groups containing an equal number of channels. After grouping, half of the information is processed by DSC, a lightweight convolution operation. Then, the DSC output is fused with the remaining, unprocessed half. Finally, a shuffle operation integrates information across different channels, enhancing the connections between features and thereby improving the feature map’s expressiveness and information flow. This group-based convolution design of GSConv enhances the model’s ability to perceive different features while increasing feature diversity, which helps litchi flower cluster detection while reducing computational overhead.
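The final shuffle step and the parameter savings can be illustrated with a short sketch. Two assumptions are made that go beyond the text: the layout follows the commonly used public GSConv implementation (a dense convolution producing half of the output channels, followed by a 5 × 5 depthwise convolution on those channels), and bias and normalization parameters are ignored. The function names are hypothetical.

```python
import numpy as np


def channel_shuffle(x, groups=2):
    """Interleave channels from `groups` equal blocks of a (C, H, W) array."""
    c, h, w = x.shape
    assert c % groups == 0, "channel count must be divisible by groups"
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)


def standard_conv_params(c_in, c_out, k):
    """Weight count of a dense k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def gsconv_params(c_in, c_out, k, dw_k=5):
    """Weight count of the assumed GSConv layout (bias ignored)."""
    half = c_out // 2
    dense = c_in * half * k * k     # dense conv producing half of the outputs
    depthwise = half * dw_k * dw_k  # one dw_k x dw_k filter per channel
    return dense + depthwise


# Six channels whose values equal their index, to make the shuffle visible
x = np.arange(6, dtype=np.float64).reshape(6, 1, 1) * np.ones((6, 2, 2))
order_after_shuffle = channel_shuffle(x)[:, 0, 0].astype(int).tolist()
```

After the shuffle, channels from the dense branch and the depthwise branch alternate, so later layers see both kinds of features in every local channel neighbourhood. Under these assumptions, a 3 × 3 layer mapping 64 to 128 channels needs roughly half the weights of a dense convolution.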

SlimNeck replaces standard convolutions in the Bottleneck structure with GSConv, forming the lightweight GSBottleneck structure shown in Figure 9a. Furthermore, the SlimNeck architecture employs a one-shot aggregation strategy to construct the cross stage partial network (GSCSP) module VoVGSCSP, whose structure is shown in Figure 9b, where c1 and c2 denote the number of input and output channels, respectively. Within the VoVGSCSP module, the channels are divided into two parts: one part is sent to the GSBottleneck module for feature extraction after convolution processing, while the other part passes through a convolution module as a residual branch. Finally, the outputs of the two branches are fused and passed through a convolution module to produce the output feature map. This design enables the VoVGSCSP module to fuse multi-scale features and diverse morphological characteristics efficiently, improving the model’s capacity for detecting multi-scale targets within complex backgrounds. The module attains a favorable performance-efficiency trade-off, reducing computational cost and model complexity while maintaining competitive detection accuracy.

2.4.3. DySample

Traditional upsampling operators typically employ fixed interpolation strategies, such as bilinear interpolation. This approach has limitations when handling complex scenes, as it lacks sufficient reconstruction capability for low-resolution feature maps, leading to loss of feature information or blurred details. This makes it difficult to adapt to the detection requirements of multi-scale targets, resulting in high missed detection and false detection rates for litchi flower clusters. Therefore, this paper introduces the DySample [34] upsampling module to replace the Upsample module in YOLO11, aiming to achieve more precise feature recovery and scale adaptation. The DySample structure is shown in Figure 10a. DySample is a lightweight and efficient upsampling module that dynamically generates sampling positions based on the local context of the input feature map. By resampling the feature map at these content-dependent positions, it reduces detail loss during the upsampling process.

In the DySample module, a sampling point generator first predicts spatial offsets O from the input feature map x, and these offsets are then used to generate a dynamic sampling grid δ. The grid sampling function resamples x based on δ, outputting the upsampled feature map.

To optimize offset learning, DySample provides two sampling point generators, static scaling and dynamic scaling, as shown in Figure 10b.

Static scaling constrains the offset range with a fixed factor of 0.25, combines pixel shuffle with a linear layer to generate O, and superimposes it on a regular grid G to control the local search area, avoiding sampling overlap and feature blurring, as shown in Equation (10).

Dynamic scaling adapts the offset through a dual-branch structure. Branch 1 uses a linear layer L1 and a sigmoid function to generate a dynamic scaling factor in the range [0, 0.5], while branch 2 uses a linear layer L2 to predict the original offset. Finally, factor modulation is used to achieve content-aware offset adjustment, enhancing the model’s robustness to changes in feature scale, as shown in Equations (11) and (12).

The introduction of a dynamic scaling factor allows the sampling point step size to be adjusted adaptively based on input features (color, texture, and shape characteristics of litchi flowers), thereby significantly improving the flexibility and sensitivity of the sampling process to changes in the shape of litchi flowers.
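The offset generation described in this subsection can be summarized as follows. This is a reconstruction under stated assumptions: it follows the original DySample formulation [34], omits the pixel shuffle reshaping for brevity, and introduces $\alpha$ for the dynamic scaling factor and $x'$ for the upsampled output; the assignment of equation numbers is also an assumption.

$$
O = 0.25 \cdot \mathrm{linear}(x), \qquad \delta = G + O
\tag{10}
$$

$$
\alpha = 0.5 \cdot \sigma\left(L_{1}(x)\right)
\tag{11}
$$

$$
O = \alpha \odot L_{2}(x), \qquad \delta = G + O
\tag{12}
$$

$$
x' = \mathrm{grid\_sample}(x, \delta)
$$

Since the sigmoid $\sigma$ maps to $(0, 1)$, the factor $\alpha$ stays within the [0, 0.5] range stated above, and the fixed factor 0.25 of static scaling is its midpoint.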

3. Results

The design and implementation details of the WSD-YOLO model were described in the previous section. To verify the effectiveness of the improvement strategy, this section evaluates the model experimentally in terms of detection accuracy, computational efficiency, and counting performance.

3.1. Experimental Setup and Evaluation Metrics

The training environment used in this study includes a Windows 10 64-bit system, 24 GB of RAM, and an NVIDIA GeForce RTX 3090 GPU. The model building and training environment consists of Python 3.8, PyTorch 1.12.1, and CUDA 11.8. An input image size of 640 × 640 is used, with a batch size of 16, 300 training epochs, and AdamW [35] as the optimizer with an initial learning rate of 0.01 and a weight decay of 0.0005. During training, a cosine decay strategy was applied to adjust the learning rate, with the minimum learning rate set to 0.0001. To prevent overfitting, an early stopping strategy was adopted, where training was terminated if the validation accuracy did not improve for 50 consecutive epochs.
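The learning-rate schedule and the stopping rule can be sketched as below. This is a generic illustration using the stated hyperparameters, not the training framework's own scheduler, whose exact formula may differ; `cosine_lr` and `EarlyStopping` are hypothetical names.

```python
import math


def cosine_lr(epoch, total_epochs=300, lr_max=0.01, lr_min=0.0001):
    """Cosine decay from lr_max at epoch 0 to lr_min at the final epoch."""
    progress = min(max(epoch, 0), total_epochs) / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


class EarlyStopping:
    """Signal a stop when the monitored metric has not improved for `patience` epochs."""

    def __init__(self, patience=50):
        self.patience = patience
        self.best = float("-inf")
        self.stale_epochs = 0

    def step(self, metric):
        """Record one epoch's validation metric; return True when training should stop."""
        if metric > self.best:
            self.best = metric
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience
```

With a patience of 50 and a budget of 300 epochs, a run whose validation metric peaks early ends well before the schedule reaches its minimum learning rate.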

This study comprehensively evaluates model performance through two distinct tasks: detection and counting. For the detection task, common evaluation metrics are adopted, including Precision (P), Recall (R), F1-score, Average Precision (AP), Mean Average Precision (mAP), model computational cost (floating point operations, FLOPs), and Parameters. For the counting task, four metrics are used to further evaluate the model’s quantitative prediction accuracy: Mean Absolute Error (MAE), Root Mean Square Error (RMSE), Mean Absolute Percentage Error (MAPE), and the coefficient of determination (R²). They are calculated as follows:
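The standard definitions of these metrics are given below. The numbers (13) to (16) follow the references in the accompanying text; the remaining expressions are left unnumbered because their original numbering is not recoverable here. The symbols $y_i$, $\hat{y}_i$, and $\bar{y}$ denote the actual value, the predicted value, and the mean of the actual values.

$$
P = \frac{TP}{TP + FP}
\tag{13}
$$

$$
R = \frac{TP}{TP + FN}
\tag{14}
$$

$$
AP = \int_{0}^{1} P(R)\, dR
\tag{15}
$$

$$
mAP = \frac{1}{c} \sum_{i=1}^{c} AP_{i}
\tag{16}
$$

$$
F1 = \frac{2 \times P \times R}{P + R}
$$

$$
MAE = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|, \qquad
RMSE = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^{2}}
$$

$$
MAPE = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right|, \qquad
R^{2} = 1 - \frac{\sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^{2}}{\sum_{i=1}^{n} \left( y_i - \bar{y} \right)^{2}}
$$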

In Equations (13) and (14), TP refers to instances where the model accurately classifies positive samples as belonging to the positive class, FP indicates cases where negative samples are incorrectly assigned to the positive category, and FN denotes positive samples that are mistakenly categorized as negative. In Equation (15), P(R) denotes the Precision–Recall (PR) curve function. The PR curve is generated by plotting precision against recall at varying confidence levels, and the Average Precision (AP) is calculated as the area under this curve. In Equation (16), c stands for the total number of classes, i indexes the detected class, and the mean average precision (mAP) is computed by averaging the AP values over all classes. mAP@0.5 signifies the mean AP at an IoU threshold of 0.5, while mAP@0.5:0.95 is the average mAP across IoU thresholds ranging from 0.5 to 0.95 in increments of 0.05. MAE expresses the mean absolute error between prediction and ground truth; RMSE, which is more responsive to large discrepancies, illustrates error variability; MAPE measures the mean absolute percentage difference between predicted and actual values, enhancing interpretability; R² evaluates how well predictions match observed values, with values approaching 1 signifying superior fit. Here, $y_i$ denotes the actual value, $\hat{y}_i$ is the predicted value, $\bar{y}$ indicates the mean of actual values, and n refers to the sample count.
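A minimal NumPy sketch of the scalar metrics is given below for reference. It covers precision, recall, F1-score, box IoU, and the four counting metrics; AP and mAP are omitted because they require ranking detections by confidence. The function names are hypothetical, boxes are assumed to be (xmin, ymin, xmax, ymax) tuples, and MAPE assumes that no actual count is zero.

```python
import numpy as np


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1-score from detection counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    iw = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    ih = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = iw * ih
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def counting_metrics(y_true, y_pred):
    """MAE, RMSE, MAPE (in percent), and R^2 between actual and predicted counts."""
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(y_pred, dtype=float)
    err = p - y
    return {
        "mae": float(np.mean(np.abs(err))),
        "rmse": float(np.sqrt(np.mean(err ** 2))),
        "mape": float(100.0 * np.mean(np.abs(err) / np.abs(y))),
        "r2": float(1.0 - np.sum(err ** 2) / np.sum((y - y.mean()) ** 2)),
    }
```

A prediction is typically counted as a true positive when its IoU with a ground-truth box reaches the chosen threshold, which is how the IoU function connects to the TP, FP, and FN counts.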

3.2. Comparative Experiments

3.2.1. Comparison Experiments of YOLO11 Models with Different Scales

YOLO11-Detect is available in five network configurations of increasing scale (n, s, m, l, and x), ranging from lightweight to high-performance. As the model scale increases, both the parameter count and the computational complexity grow accordingly. To select the most suitable model structure for the litchi flower detection task, comparative experiments were conducted using three commonly used model sizes (YOLO11n, YOLO11s, and YOLO11m) under identical training parameters, datasets, and strategies. This comparison assesses the balance between accuracy and efficiency to identify the best configuration.

Table 2 presents the results of the experiments.

As shown in Table 2, the recall of YOLO11n is only 0.32% lower than that of YOLO11m, while its computational cost (FLOPs) is merely 9.33% of that of YOLO11m. This indicates a favorable balance between performance and efficiency; therefore, YOLO11n was selected as the baseline model.

3.2.2. Comparison Experiment Between YOLO11n and Different YOLO Models

To verify whether YOLO11n can serve as the optimal benchmark model for this research task, this study selected current mainstream YOLO series models, including YOLOv5n, YOLOv8n, YOLOv10n, and YOLOv12n, as well as RT-DETR-l, for comparison experiments. All models were trained and evaluated with the same dataset, input size (640 × 640), and training strategy. Table 3 presents the results of the experiments.

Table 3 demonstrates that YOLO11n attains top performance in every major evaluation metric, including Precision (P), Recall (R), and mAP@0.5. Although YOLO11n has marginally more parameters than both YOLOv5n and YOLOv12n, its overall parameter count remains relatively small. These results support the choice of YOLO11n as the baseline model for this research task.

3.2.3. Comparison Experiments of Different Input Sizes for YOLO11n

To validate whether 640 × 640 is the optimal input size and to explore the impact of input size on YOLO11n’s detection performance, five typical input resolutions are evaluated: 320 × 320, 480 × 480, 640 × 640, 800 × 800, and 960 × 960. The YOLO11n model is trained and evaluated under identical training set and hyper-parameter settings for all resolutions.

The results in Table 4 show that, as the input size increases, the overall detection performance of the model first improves and then levels off or even declines slightly. When the input size increases from 320 to 640, detection improves markedly, and the model reaches its peak Precision (P), Recall (R), and mAP@0.5 at 640. Further enlargement to 800 and 960 leads to varying degrees of metric reduction. Consequently, 640 × 640 was identified as the optimal input size for YOLO11n in this study.

3.2.4. Comparison Experiment Between WSD-YOLO and Different YOLO Models

To validate the detection performance of the proposed WSD-YOLO model (which introduces the C3k2_WTConv, SlimNeck, and DySample modules), this paper conducts comparative experiments between WSD-YOLO and the mainstream YOLO series models (YOLOv5n, YOLOv8n, YOLOv10n, YOLO11n, and YOLOv12n) as well as RT-DETR-l. Table 5 and Figure 11 present the results of the experiments.

Table 5 indicates that WSD-YOLO surpasses the other models on all evaluated accuracy metrics, attaining 83.85% precision, 82.37% recall, 83.10% F1-score, and 87.28% mAP@0.5. Although its parameter count is marginally greater than that of YOLOv5n, WSD-YOLO requires the least computation (FLOPs) at just 5.8 G, lowering computational demands while maintaining high detection performance. This demonstrates an effective balance between accuracy and efficiency.
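As a quick consistency check on these figures, the F1-score can be recomputed from the reported precision and recall. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; the function name `f1_score` is illustrative and is not taken from the authors' code.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall of WSD-YOLO in percent, as quoted from Table 5.
wsd_precision, wsd_recall = 83.85, 82.37

f1 = f1_score(wsd_precision, wsd_recall)
print(f"WSD-YOLO F1 = {f1:.2f}%")  # prints: WSD-YOLO F1 = 83.10%
```

The recomputed value agrees with the reported 83.10% F1-score, which suggests the reported precision, recall, and F1-score are mutually consistent.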

Figure 11 illustrates that WSD-YOLO exceeds the other models on every evaluated metric. For precision, recall, F1-score, and mAP alike, WSD-YOLO rises faster during training and reaches higher final values than the other YOLO-series models. Among them, the baseline YOLO11n achieves the second-highest performance, but its training ends after approximately 240 epochs because the early-stopping mechanism was triggered.

Figure 12 reveals that YOLOv5, YOLOv8, YOLOv10, YOLO11, and YOLOv12 all miss detections to varying degrees. Among these, YOLO11 has relatively few missed detections, failing mainly on certain occluded small targets. In addition, YOLOv5, YOLOv8, YOLO11, and YOLOv12 produce a number of duplicate boxes, which lowers detection accuracy. In contrast, WSD-YOLO significantly reduces missed detections and shows stronger robustness and accuracy in identifying small targets and detecting occluded regions.

Figure 13 presents heatmap visualizations of the baseline model and the proposed WSD-YOLO model. The improved model attends more strongly to occluded regions and small targets and focuses more precisely on flower cluster areas, with higher localization accuracy. These results highlight the limitations of the original model and demonstrate the benefit of the proposed improvements.

3.3. Module Ablation Experiments

To verify the contribution of each improved module, ablation experiments were conducted on the three modules introduced into the YOLO11n model (C3k2-WTConv, SlimNeck, and DySample). The experimental results are shown in Table 6.

Table 6 presents the ablation results for the individual modules and their combinations in WSD-YOLO. As the modules are introduced and combined, the overall performance of the model improves steadily. Specifically, all three modules have a positive impact on model performance when introduced individually. Introducing the C3k2-WTConv module alone enhances feature representation capability. The multi-scale properties of wavelets improve robustness against partial occlusion: high-frequency components enhance the detection of small flower clusters, and low-frequency components stabilize subject localization. This raises precision, recall, F1-score, and mAP@0.5 from 77.59%, 78.34%, 77.96%, and 82.12% to 82.24%, 81.35%, 81.79%, and 86.47%, respectively. SlimNeck, as a lightweight neck network, efficiently fuses multi-scale features and reduces computational redundancy. When used alone, it improves mAP@0.5 to 85.40% and reduces FLOPs to 5.9 G. The DySample dynamic upsampling module reduces fine-grained feature loss and enhances the detection of dense and small flower clusters, raising the F1-score and mAP@0.5 to 82.06% and 86.70%, respectively.

Combining these modules showed clear complementary effects. Configurations such as C3k2-WTConv + SlimNeck, C3k2-WTConv + DySample, or SlimNeck + DySample improved detection accuracy while reducing computational cost, indicating that the modules are complementary rather than redundant. For example, adding SlimNeck to C3k2-WTConv improved recall by 0.92% and mAP@0.5 by 0.13%, while reducing FLOPs to 5.8 G. Ultimately, when C3k2-WTConv, SlimNeck, and DySample were all integrated (WSD-YOLO), the model achieved optimal performance, with precision, recall, F1-score, and mAP@0.5 reaching 83.85%, 82.37%, 83.10%, and 87.28%, respectively, while reducing FLOPs to 5.8 G and maintaining a parameter count of approximately 2.52 M. These results demonstrate that the three modules work synergistically through different mechanisms to effectively improve flower cluster detection performance in complex natural environments, while maintaining the lightweight and efficient characteristics of the model.
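The gains discussed in this section are differences in percentage points between two configurations. A minimal sketch of that arithmetic, using only the values quoted in the text for the baseline, C3k2-WTConv alone, and the full WSD-YOLO model, is given below; the dictionary layout and the helper name `point_gains` are illustrative assumptions.

```python
# Metrics in percent, as quoted in the text of this section.
baseline = {"P": 77.59, "R": 78.34, "F1": 77.96, "mAP@0.5": 82.12}     # YOLO11n
c3k2_wtconv = {"P": 82.24, "R": 81.35, "F1": 81.79, "mAP@0.5": 86.47}  # one module
wsd_yolo = {"P": 83.85, "R": 82.37, "F1": 83.10, "mAP@0.5": 87.28}     # all three


def point_gains(model: dict, reference: dict) -> dict:
    """Gain of `model` over `reference` in percentage points, per metric."""
    return {name: round(model[name] - reference[name], 2) for name in reference}


print("C3k2-WTConv vs. baseline:", point_gains(c3k2_wtconv, baseline))
print("WSD-YOLO vs. baseline:   ", point_gains(wsd_yolo, baseline))
print("WSD-YOLO vs. C3k2-WTConv:", point_gains(wsd_yolo, c3k2_wtconv))
```

The last comparison isolates what SlimNeck and DySample add on top of C3k2-WTConv, which is one simple way to read the complementarity claim from the quoted numbers.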

3.4. Counting Experiments

In this section, counting performance was evaluated on the test set of the litchi flower dataset, with YOLO11n as the benchmark. The number of flower clusters detected by each model was recorded and compared with the manually annotated ground-truth counts. Counting accuracy was assessed with MAE, RMSE, MAPE, and R². The results are given in Table 7, Figure 14, and Figure 15.
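For reference, the four counting metrics can be computed from per-image counts as sketched below. This sketch assumes the standard definitions: MAPE is averaged over images and requires non-zero ground-truth counts, and R² is taken as the coefficient of determination, 1 − SS_res/SS_tot. The function name and the example counts are hypothetical and are not the paper's data.

```python
import math


def counting_metrics(true_counts, pred_counts):
    """Return MAE, RMSE, MAPE (%) and R^2 for per-image cluster counts."""
    n = len(true_counts)
    errors = [p - t for t, p in zip(true_counts, pred_counts)]

    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    # MAPE is undefined for images whose ground-truth count is zero.
    mape = 100.0 * sum(abs(e) / t for e, t in zip(errors, true_counts)) / n

    mean_true = sum(true_counts) / n
    ss_res = sum(e * e for e in errors)                       # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in true_counts)   # total sum of squares
    r2 = 1.0 - ss_res / ss_tot

    return {"MAE": mae, "RMSE": rmse, "MAPE": mape, "R2": r2}


# Hypothetical counts for four images (illustration only).
ground_truth = [10, 20, 30, 40]
predicted = [12, 18, 33, 37]
print(counting_metrics(ground_truth, predicted))
```

Lower MAE, RMSE, and MAPE and an R² closer to 1 all indicate that predicted counts track the manual annotations more closely.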

As shown in Table 7, compared with YOLO11n, WSD-YOLO reduces the MAE from 6.73 to 5.23, the RMSE from 8.79 to 6.89, and the MAPE from 13.14% to 9.72%, and improves R² from 0.8405 to 0.9205. These results demonstrate the performance advantage of WSD-YOLO on the litchi flower counting task.
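To put these changes on a common scale, each can also be expressed relative to the YOLO11n value. The sketch below uses only the numbers quoted from Table 7; the helper `relative_change` is an illustrative name, and the relative figures it prints are derived here rather than reported by the authors.

```python
def relative_change(before: float, after: float) -> float:
    """Signed change from `before` to `after`, as a percentage of `before`."""
    return 100.0 * (after - before) / before


# (YOLO11n, WSD-YOLO) pairs as quoted from Table 7.
table7 = {
    "MAE": (6.73, 5.23),
    "RMSE": (8.79, 6.89),
    "MAPE": (13.14, 9.72),
    "R2": (0.8405, 0.9205),
}

for name, (yolo11n, wsd_yolo) in table7.items():
    print(f"{name}: {relative_change(yolo11n, wsd_yolo):+.1f}% relative to YOLO11n")
```

Negative values for MAE, RMSE, and MAPE correspond to reduced error, while a positive value for R² corresponds to a better fit between predicted and ground-truth counts.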

Furthermore, Figure 14 and Figure 15 present the statistical errors for all test images. As the number of flower clusters increases (i.e., larger Ground Truth Count values on the x-axis of Figure 14 and higher Cluster Number values on the y-axis of Figure 15, representing images with more clusters and higher density), the counting errors of the proposed method remain consistently smaller than those of YOLO11n. More importantly, as the cluster density increases, the proposed method does not exhibit a noticeable increase in statistical error (i.e., the difference between the true count and the predicted count). Specifically, in Figure 14, the scatter points of WSD-YOLO are more compactly distributed around the diagonal, with fewer points deviating from the y = x line, while in Figure 15, the gap between the predicted counts and the ground-truth counts is narrower. These results indicate that the predictions of WSD-YOLO align more closely with the actual number of clusters and that the proposed method maintains good detection performance even under high-density conditions.

4. Discussion

Litchi flower detection is an important research topic in precision agriculture, but the dense distribution, complex morphology, variable scale, and severe occlusion of litchi flowers in natural environments pose significant challenges for target detection. Traditional manual counting is inefficient, time-consuming, error-prone, and highly dependent on subjective experience, making it unsuitable for the large-scale, automated management requirements of modern orchards. Additionally, complex lighting conditions and background interference lead to the loss of critical information during feature extraction, further increasing detection difficulty. Compared to fruit detection tasks, litchi flower clusters present greater challenges due to their loose structure and diverse morphology, necessitating efficient and robust automated solutions.

To tackle these challenges, this paper introduces an enhanced YOLO11n-based litchi flower cluster detection model, termed WSD-YOLO, which incorporates the C3k2-WTConv, SlimNeck, and DySample modules to markedly improve detection capability and counting accuracy in complex scenarios. Compared to the original YOLO11n, WSD-YOLO shows advantages in all key metrics and has the lowest computational complexity, which supports the effectiveness of the proposed improvement strategy. Combined with high-resolution UAV imagery, WSD-YOLO offers an efficient approach for the automated detection and counting of litchi flower clusters, significantly reducing labor costs and errors and providing a scientific basis for flowering period management and yield prediction.

From the perspective of practical agricultural production, the WSD-YOLO model has good feasibility and practical value and can directly serve litchi growers’ needs for flowering management. In terms of hardware, the UAV used in this study is a common consumer-grade product, so farmers do not need to purchase professional remote sensing equipment, and WSD-YOLO can run inference on ordinary computers or tablets without high-performance servers, which reduces hardware investment. In terms of ease of use, the model’s image upload and result output processes can be simplified into a visual software interface. Farmers only need basic UAV aerial photography and software operation skills, not professional knowledge of computer vision or artificial intelligence, which greatly lowers the barrier to adoption. The system can be deployed on portable edge devices or smartphones, allowing farmers to quickly obtain the number and distribution of litchi flower clusters in the orchard. Traditional manual counting is labour-intensive, inefficient, and prone to errors caused by visual fatigue and subjective judgement bias. By contrast, WSD-YOLO greatly reduces labour costs and reduces errors from omission, repeated counting, and subjective inconsistency, which can lower farmers’ production costs and increase profitability.

However, this study has certain limitations. First, data collection was limited to clear weather conditions and did not include images under extreme weather conditions (such as heavy rain or dense fog), which may affect the model’s robustness in complex environments. Second, the counting experiments were based solely on test set images, and no target tracking or counting experiments were conducted using video data. Third, the current dataset annotations only include a single “flower cluster” category, without differentiating between flower sizes or flowering stages, which limits the model’s ability to learn fine-grained variations. This is mainly because images were captured from long-range wide-angle views, where the resolution is limited, litchi flowers are small, densely distributed, and often partially occluded, making fine-grained classification extremely difficult.

Future research can be conducted in the following directions: first, constructing a more diverse litchi flower dataset covering multiple weather and lighting conditions to enhance the model’s environmental adaptability; second, introducing video target tracking and temporal information fusion strategies to improve detection and counting stability in video scenes; third, optimizing computational efficiency through model pruning or quantization techniques to enable real-time deployment on UAV platforms and low-power edge devices; and fourth, building a fine-grained litchi flower dataset captured in close-range scenarios with flower stage classification, and exploring multi-class detection models for recognizing and counting flowers at different flowering stages.

5. Conclusions

For the visual detection of litchi flower clusters, this study presents the WSD-YOLO model, an enhanced version of YOLO11. First, WTConv is used to refine the Bottleneck within the C3k2 module of the YOLO11 backbone, yielding the C3k2-WTConv module, which combines frequency-domain analysis with spatial feature extraction to strengthen feature extraction capability. Second, the SlimNeck framework, together with lightweight GSConv and VoVGSCSP components, is adopted to optimize the YOLO11 neck network, improving feature fusion efficiency and reducing parameter redundancy. Third, the original upsampling module in YOLO11 is replaced with an adaptive, scale-aware DySample module, which alleviates the decline in detection performance caused by scale variation. These enhancements greatly improve the model’s detection effectiveness and counting precision under complex conditions while lowering computational complexity.

Experimental findings indicate that WSD-YOLO achieves an mAP@0.5 of 87.28%, an improvement of 5.16 percentage points over the baseline YOLO11n, with precision, recall, and F1-score improving by 6.26, 4.03, and 5.14 percentage points, respectively. Meanwhile, computational load and parameter count were reduced by 7.69% and 2.37%, respectively. In counting tasks, compared to YOLO11n, WSD-YOLO’s MAE decreased from 6.73 to 5.23, RMSE decreased from 8.79 to 6.89, MAPE decreased from 13.14% to 9.72%, and R² increased from 0.8405 to 0.9205. Comparative experiments with other YOLO-series models (YOLOv5n, YOLOv8n, YOLOv10n, YOLOv12n) as well as RT-DETR-l show that WSD-YOLO surpasses the other models in precision, recall, F1-score, and mAP while maintaining low computational requirements, which supports real-time detection and deployment of the model on edge platforms such as UAVs. Future research will concentrate on overcoming the limitations discussed earlier, especially enhancing the model’s generalization capability for litchi flower detection under diverse environmental conditions.