1. Introduction

Blueberry is an important agricultural economic crop that originated in North America and parts of Asia. It is now widely cultivated, particularly in China. Studies indicate that blueberries are rich in anthocyanins, vitamin C, polyphenols, and dietary fiber, which contribute to antioxidation, promote cardiovascular health, enhance immunity, and effectively prevent various chronic diseases [

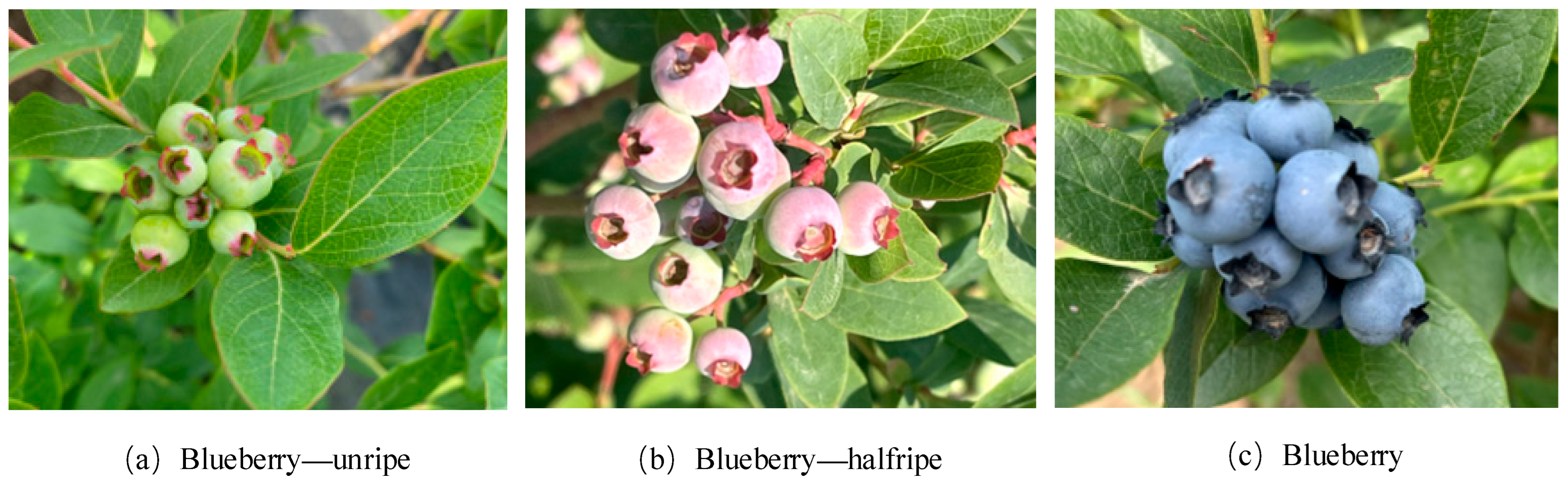

1]. During the planting period, the color of the blueberry peel serves as a crucial indicator for assessing the maturity of the fruit. Throughout the ripening process, the levels of endogenous hormones and soluble sugars in the fruit increase; the decomposition rate of chlorophyll accelerates; its synthesis is hindered; and the concentrations of chlorophyll and carotenoids decrease. Meanwhile, anthocyanins continue to accumulate, resulting in a color transition from initial green to red, and ultimately to blue [

2]. Blueberry fruits have a short harvest cycle, with multiple stages of ripeness coexisting. Furthermore, blueberry products at different ripeness levels exhibit significant variations in taste and nutritional content. Therefore, accurate identification of ripeness is essential. Currently, traditional manual detection methods are employed to assess the ripeness of blueberries. However, this approach is not only time-consuming and inefficient but also leads to considerable fruit waste during the actual picking process. Consequently, there is an urgent need for an efficient method to detect the maturity of blueberry fruits.

In recent years, fruit maturity detection algorithms based on traditional image processing have become increasingly diverse. For instance, Liu et al. developed a method for detecting partially red apples that uses color and shape features, combining Histogram of Oriented Gradients (HOG) features with a linear Support Vector Machine (SVM) classifier to assess apple ripeness [3]. Wan et al. introduced a tomato maturity detection approach that integrates color feature values with a Back Propagation Neural Network (BPNN) classifier, achieving an accuracy of 99.31% on tomato samples [4]. Ripardo et al. proposed a technique for melon maturity detection based on predicting the Soluble Solids Content (SSC) from digital images, where a Multilayer Perceptron (MLP) model combined with RGB features yielded the highest accuracy [5]. Additionally, Li et al. combined Non-Negative Matrix Factorization (NMF) filtering and Root Mean Square (RMS) normalization with an SVM model to classify watermelons at different stages of maturity [6]. Although these traditional fruit recognition algorithms are effective under specific conditions, they generally suffer from poor environmental adaptability, high computational complexity, and slow processing speeds. Moreover, their recognition accuracy tends to decline significantly with complex backgrounds or fruit occlusion, which limits their applicability in dynamic real-world environments.

With the advancement of deep learning techniques, methods for detecting fruit maturity in natural environments have been extensively investigated. For instance, Huang et al. introduced a fuzzy Mask R-CNN model to automatically identify the ripeness of cherry tomatoes. Their approach converts RGB images into the HSV color space to extract color features from the tomato surface and then identifies the ripening stage by applying fuzzy inference rules [7]. Similarly, Jing et al. developed the Slim Neck paradigm, leveraging MobileNetv3 to efficiently assess the maturity of melons, achieving promising results on a greenhouse dataset [8]. Momeny et al. employed a fine-tuned ResNet-50 model to classify four types of citrus fruits according to maturity, demonstrating accurate detection even in fruits exhibiting black spots [9]. Additionally, Pisharody et al. proposed SegLoRA, a novel segmentation framework designed to precisely estimate tomato maturity and predict yield [10]. Collectively, these studies underscore the significant potential of deep learning approaches for fruit maturity detection in natural settings and offer valuable insights for advancing maturity assessment techniques in blueberry fruits.
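As a rough illustration of the RGB-to-HSV color feature extraction step mentioned above, the following minimal sketch computes summary color features for a set of fruit-surface pixels. It is not the implementation used in any of the cited works: the function name `hsv_features`, the choice of a saturation-weighted circular mean for hue, and the input format (a list of 8-bit RGB tuples) are all assumptions made for this example.

```python
import colorsys
import math


def hsv_features(pixels):
    """Return (mean_hue_deg, mean_saturation, mean_value) for 8-bit RGB pixels.

    Hypothetical helper for illustration only. Hue is an angle, so it is
    averaged on the circle (reds near 0 and 360 degrees do not cancel out)
    and weighted by saturation so grey pixels barely influence the result.
    """
    if not pixels:
        raise ValueError("pixels must not be empty")

    sin_sum = cos_sum = sat_sum = val_sum = 0.0
    for r, g, b in pixels:
        # colorsys expects channels in [0, 1] and returns h, s, v in [0, 1].
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        angle = 2.0 * math.pi * h
        sin_sum += s * math.sin(angle)
        cos_sum += s * math.cos(angle)
        sat_sum += s
        val_sum += v

    n = len(pixels)
    mean_hue_deg = math.degrees(math.atan2(sin_sum, cos_sum)) % 360.0
    return mean_hue_deg, sat_sum / n, val_sum / n
```

Features of this kind could then be passed to a rule-based or learned classifier. Any mapping from hue ranges to ripeness stages would have to be calibrated on labeled images and is deliberately left out here.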

Previous research has extensively investigated blueberry fruit analysis. For instance, Tan et al. introduced a computer vision approach for recognizing blueberries at various ripening stages using color images. This technique integrates Histogram of Oriented Gradients (HOG) features with color information to differentiate ripeness levels; the average accuracy for immature, medium, and mature fruits is 86.0%, 94.2%, and 96.0%, respectively [11]. To address the dense aggregation and occlusion of blueberries in natural environments, Yang et al. developed a lightweight recognition model based on an enhanced YOLOv5 architecture [12]. To tackle issues arising from the small size and dense distribution of blueberries during detection, Gai et al. proposed the TL-YOLOv8 algorithm, which leverages transfer learning to improve the model’s generalization capability and training efficiency [13]. Additionally, MacEachern et al. constructed six deep learning convolutional neural network (CNN) models for determining the maturity stages of wild blueberries and estimating yield [14]. These studies provide effective recognition models for blueberry fruit detection and form an important technical foundation for this paper. However, they still face challenges in practical deployment. High-precision models often incur large computing and storage overheads, which makes it difficult to meet the stringent resource constraints of embedded platforms. In addition, the effectiveness of many methods is easily affected by the complex field environment (such as changes in illumination, occlusion, and background interference), so their generalization ability and robustness need to be improved.

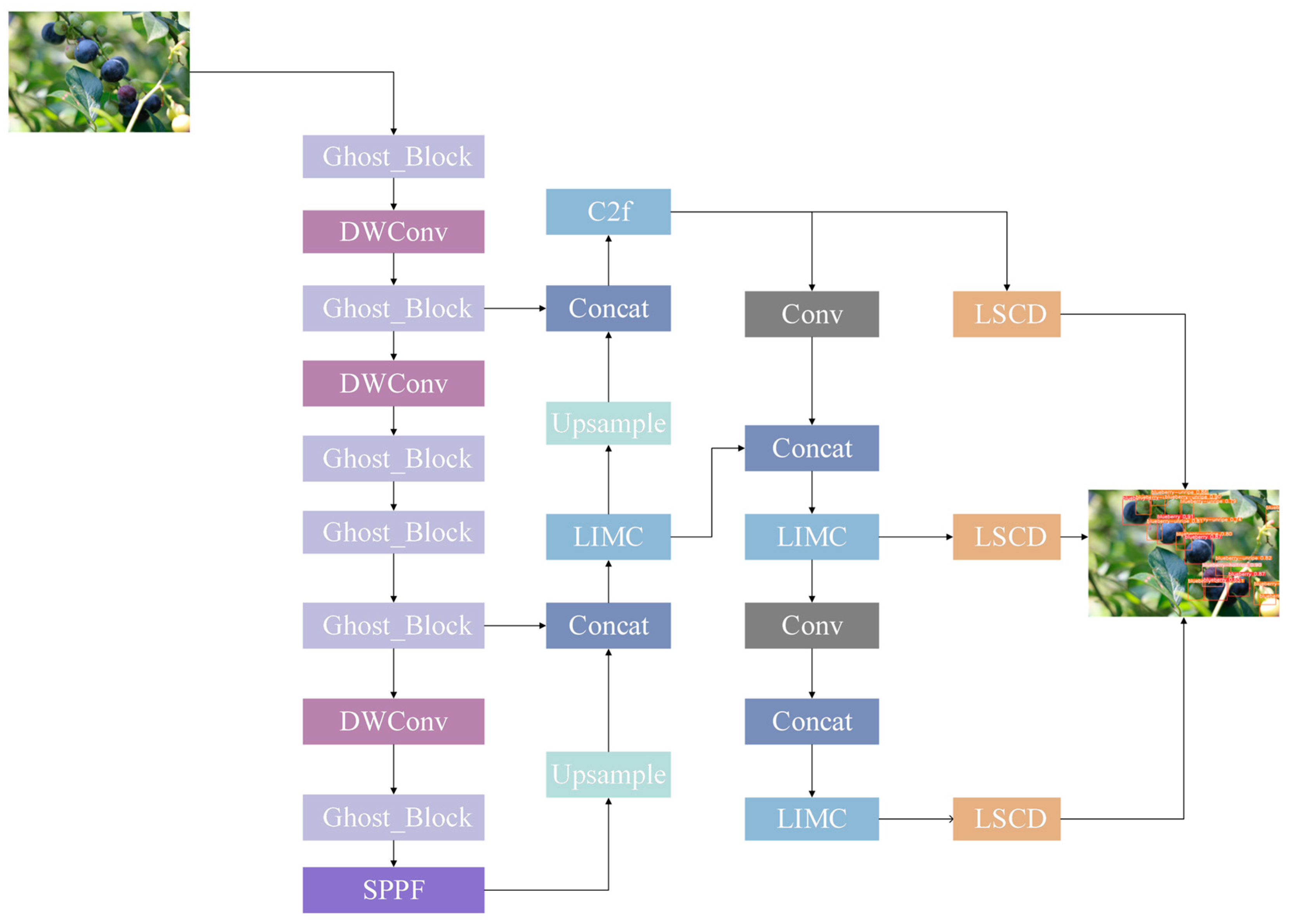

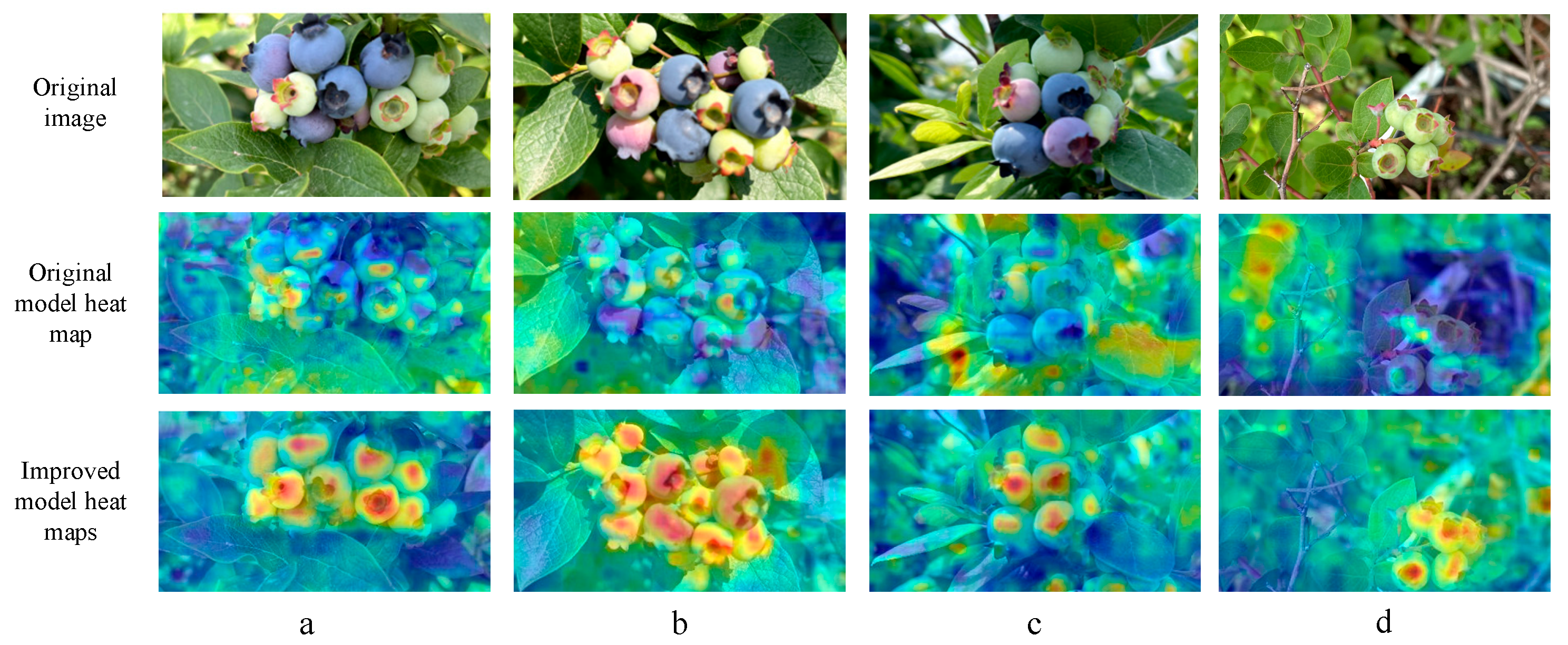

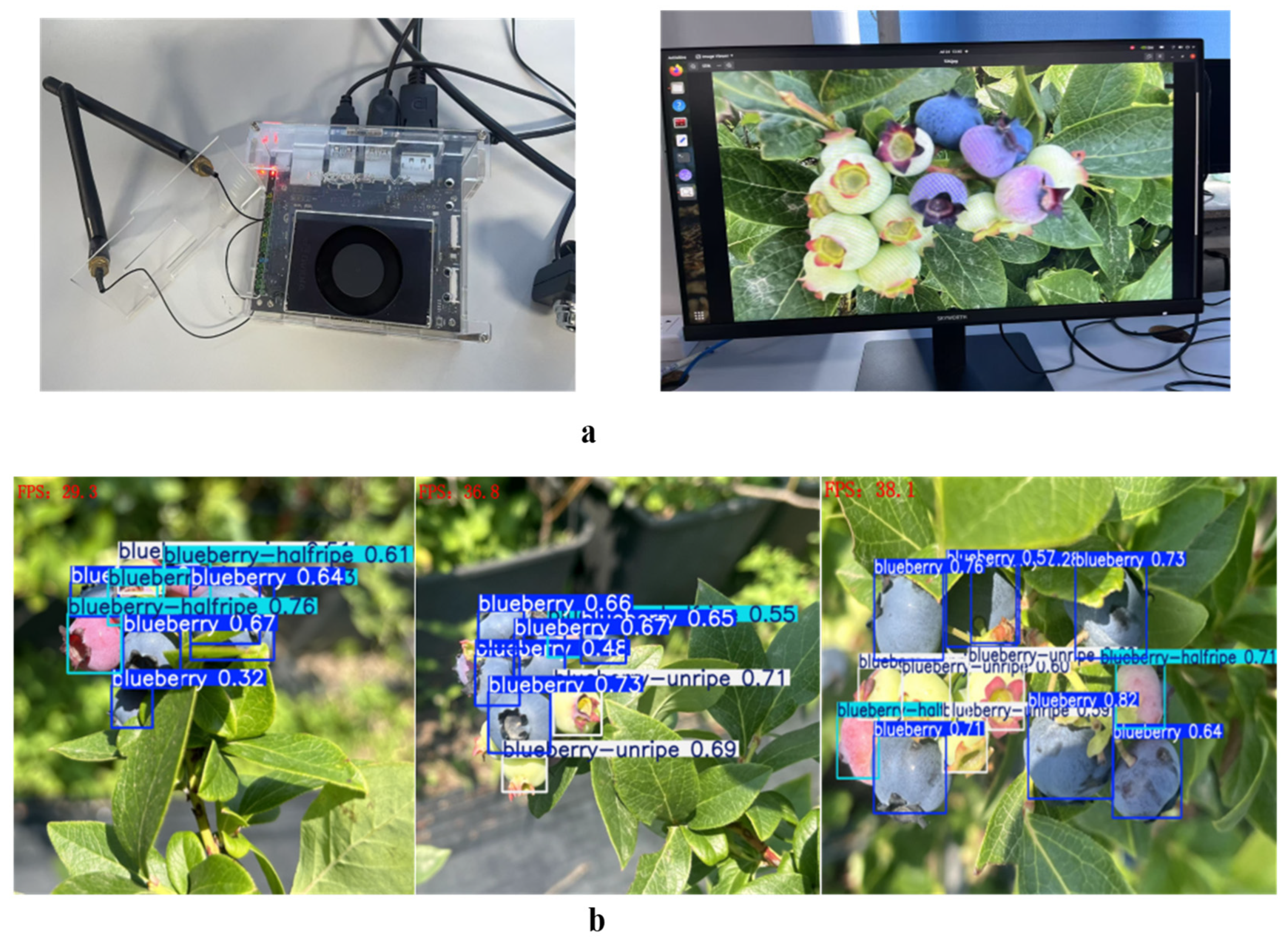

Despite advances in blueberry fruit detection research, challenges persist in balancing model complexity with detection accuracy, particularly for small target identification and robustness to occlusion. Blueberries are small and densely clustered, and their detection in natural settings is complicated by complex backgrounds, variable lighting conditions, and fruit occlusion. These factors pose significant obstacles to the precision and robustness of deep learning-based models. Although prior studies have addressed some of these issues by enhancing the YOLO architecture, employing transfer learning, and utilizing convolutional neural networks, these approaches often entail substantial computational demands and exhibit limited adaptability to complex real-world environments. Consequently, this study seeks to develop a lightweight yet highly accurate method for blueberry fruit detection and ripeness recognition, specifically designed to handle densely distributed small targets and complex background interference. The proposed approach aims to enable precise maturity assessment, efficient harvesting, and real-time monitoring, thereby contributing to improved operational efficiency, quality assurance, loss reduction, and the sustainable and intelligent development of the blueberry industry.