Advances in Hyperspectral and Diffraction Imaging for Agricultural Applications

Abstract

1. Introduction

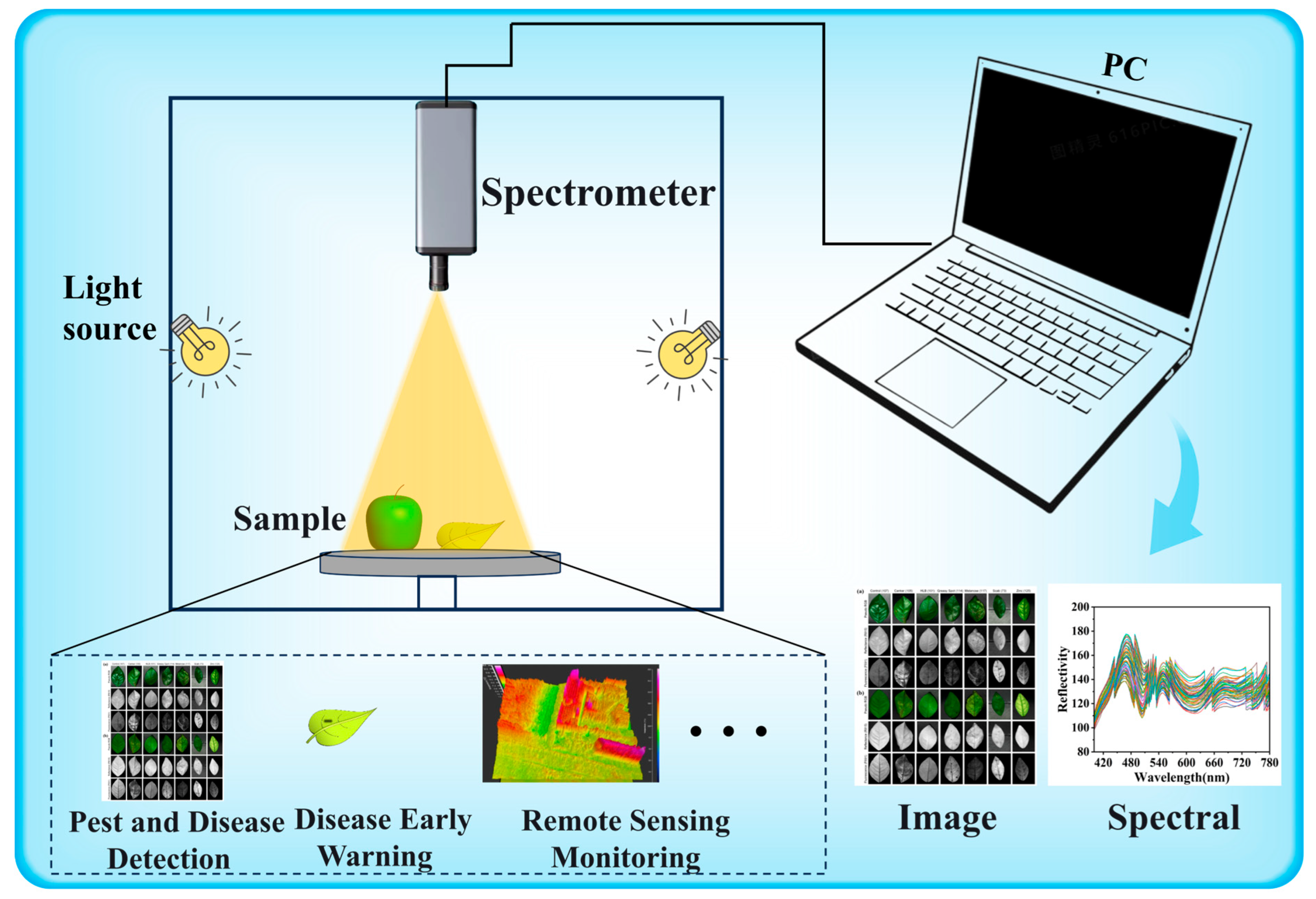

1.1. Hyperspectral Imaging Technology

| Application Scenarios | Algorithm Type | Advantages | Limitations |
|---|---|---|---|
| Remote sensing classification | Super PCA [41] | Superpixel-based PCA; Preserves spatial structure; Enhances computational efficiency; Unsupervised learning | Computational complexity |
| | PCA [42] | Effective dimensionality reduction | Potential loss of some important information |
| | CNN [43] | Generates spectral images from RGB images; Low cost | Resulting image quality depends on training data |
| | Linear mixing model [44] | Suitable for spectral unmixing; Good model generalization | Model may oversimplify complex spectral variations |
| | Gabor filter + Unsupervised discriminant analysis [45] | High classification accuracy | High model complexity |
| | CNN + Dual Swin Transformer [46] | Captures both local and global features; High classification accuracy | Complex architecture; High training cost; Requires large datasets |
| Crop image classification | Feature selection + Folded-PCA [47] | Combines information-theoretic optimized feature selection | Implementation complexity |
| | Information theory-based feature selection + Folded-PCA [48] | Combines information-theoretic feature selection; Enhances classification accuracy | High computational complexity |
| | CNN + SVM [49] | Combines CNN feature extraction with SVM classification; Adaptable to various crop types | High computational resource demands |
| | Spatial-spectral homogeneous block extraction [50] | Integrates spatial and spectral information; High accuracy | High model complexity |
| | CNN [51] | Strong adaptability; High classification accuracy | Long training time |
| | 3D CNN (LeNet-5) [52] | Integrates spatial and spectral information; High classification accuracy | High computational complexity |
| | GNN + ARMA filter + Parallel CNN [53] | Superior classification performance | High model complexity |
| | CNN + GAT + Fuzzy C-means [54] | Combines spatial and spectral data; High segmentation accuracy | High model complexity |
| Soil analysis | Optimal band selection + Random Forest [55] | Improves soil salinity estimation accuracy | Performance depends on the band selection method |
| | Evaluation of ML models (SVM, RF, CNN, etc.) [56] | Provides a comprehensive comparison of classification performance | Model accuracy depends on sample quality; Affected by spectral interference |
| | HSI + SVM/RF/PLS-DA [57] | Enables accurate identification of PE and PA microplastics in soil | Feature selection relies on manual design; Limited generalization ability |
| | HSI combined with radar + PLSR [58] | Reduces interference from soil moisture and surface roughness in SOC estimation | Complex fusion process; Requires consistent data sources |
| | Spectral feature extraction methods (PCA, CARS, GA) [59] | Enhances SOC prediction accuracy through multi-feature integration | High computational cost; Uncertainty in feature selection |
| | HSI + PLSR + RBF neural network [60] | Enables non-destructive detection of available silicon and moisture in soils from different regions | Requires retraining for different soil types; Limited model transferability |
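Several entries in the table ([41], [42], [47], [48], [59]) use principal component analysis to compress the many contiguous bands of a hyperspectral cube into a few uncorrelated components before classification or regression. The following is a minimal sketch of plain PCA only, not the superpixel-based or folded variants cited above; it assumes NumPy and a cube stored as (rows, columns, bands), and the function name `pca_reduce_cube` is a hypothetical illustration.

```python
import numpy as np


def pca_reduce_cube(cube: np.ndarray, n_components: int) -> tuple[np.ndarray, np.ndarray]:
    """Project a hyperspectral cube (rows, cols, bands) onto its leading principal components.

    Returns the reduced cube (rows, cols, n_components) and the explained-variance
    ratio of each retained component, in descending order.
    """
    rows, cols, bands = cube.shape
    if not 1 <= n_components <= bands:
        raise ValueError("n_components must be between 1 and the number of bands")
    # Treat every pixel as one observation with `bands` spectral features.
    pixels = cube.reshape(-1, bands).astype(np.float64)
    # Mean-center each band so the covariance describes spectral variation only.
    centered = pixels - pixels.mean(axis=0)
    # Band-by-band sample covariance matrix (bands x bands).
    cov = centered.T @ centered / (centered.shape[0] - 1)
    # eigh is meant for symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Project each pixel onto the first n_components eigenvectors (the loadings).
    scores = centered @ eigvecs[:, :n_components]
    explained = eigvals[:n_components] / eigvals.sum()
    return scores.reshape(rows, cols, n_components), explained
```

The number of retained components is commonly chosen from the cumulative explained-variance ratio. The limitation listed for [42] (possible loss of important information) arises because low-variance components, which may still carry diagnostic absorption features, are discarded.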
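The linear mixing model listed for [44] treats each pixel spectrum as a weighted sum of pure-material (endmember) spectra, with the weights interpreted as abundances. A minimal sketch of inverting that model with NumPy, assuming the endmember spectra are already known (for example, from a spectral library). It enforces only the sum-to-one constraint on the abundances, solved exactly through the Lagrange (KKT) system; nonnegativity is not enforced, unlike fully constrained variants. The function name `unmix_sum_to_one` is hypothetical and this is not presented as the specific method of [44].

```python
import numpy as np


def unmix_sum_to_one(pixels: np.ndarray, endmembers: np.ndarray) -> np.ndarray:
    """Sum-to-one constrained least-squares unmixing under the linear mixing model.

    pixels:     (n_pixels, bands) observed spectra
    endmembers: (k, bands) pure-material spectra, assumed known
    returns:    (n_pixels, k) abundance estimates whose rows sum to one
    """
    k = endmembers.shape[0]
    e = endmembers.T  # (bands, k): each column is one endmember spectrum
    # KKT system for: minimize ||e @ a - x||^2 subject to sum(a) == 1
    #   [e^T e  1] [a     ]   [e^T x]
    #   [1^T    0] [lambda] = [1    ]
    kkt = np.zeros((k + 1, k + 1))
    kkt[:k, :k] = e.T @ e
    kkt[:k, k] = 1.0
    kkt[k, :k] = 1.0
    rhs = np.vstack([e.T @ pixels.T, np.ones((1, pixels.shape[0]))])  # (k + 1, n_pixels)
    solution = np.linalg.solve(kkt, rhs)
    return solution[:k].T  # drop the Lagrange-multiplier row
```

The system is solvable only when the endmember spectra are linearly independent; highly similar endmembers make it ill-conditioned, which is one practical reason the table notes that the model may oversimplify complex spectral variations.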

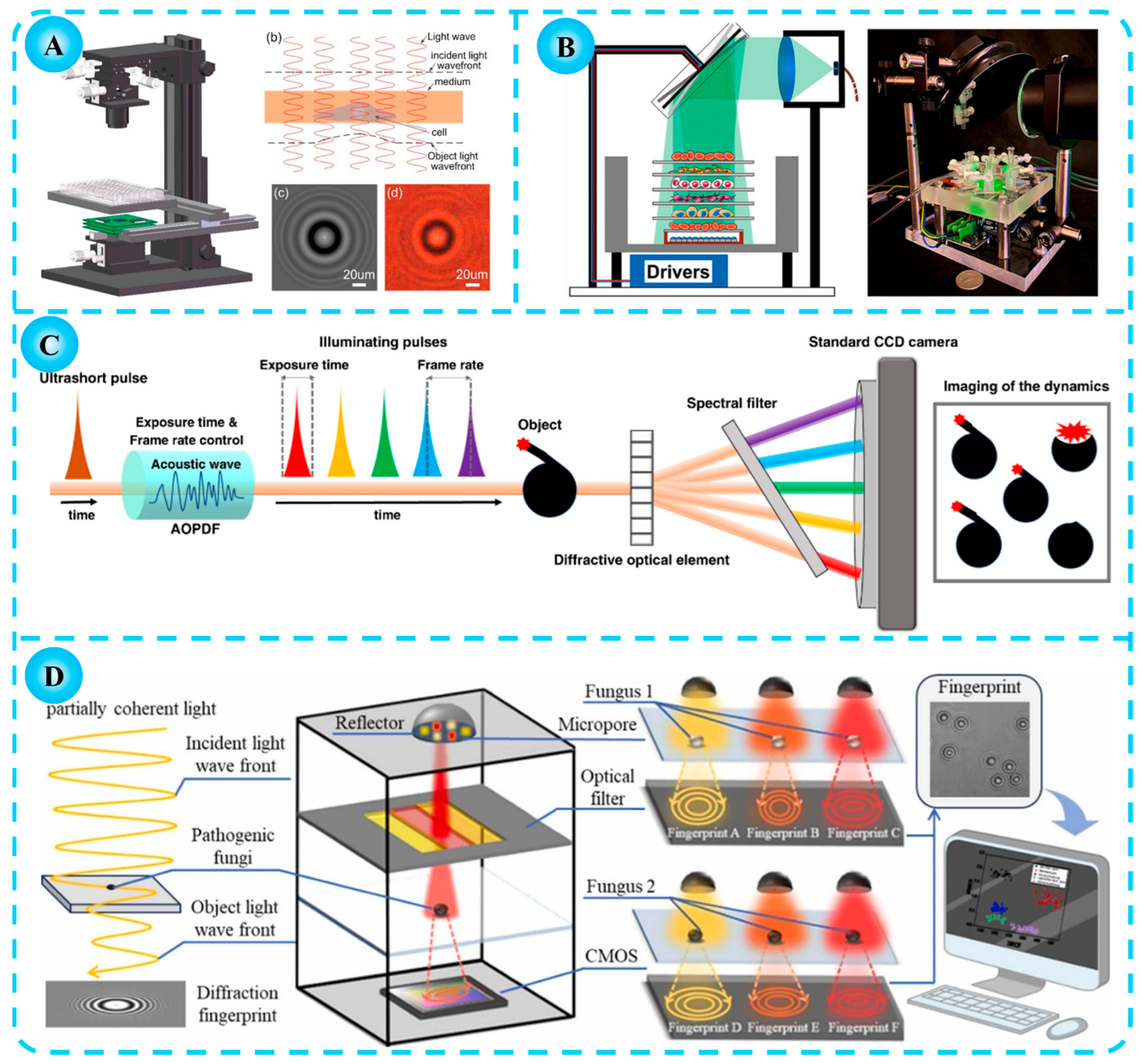

1.2. Diffraction Imaging Technology

2. Applications of Hyperspectral Imaging Technology in Agriculture

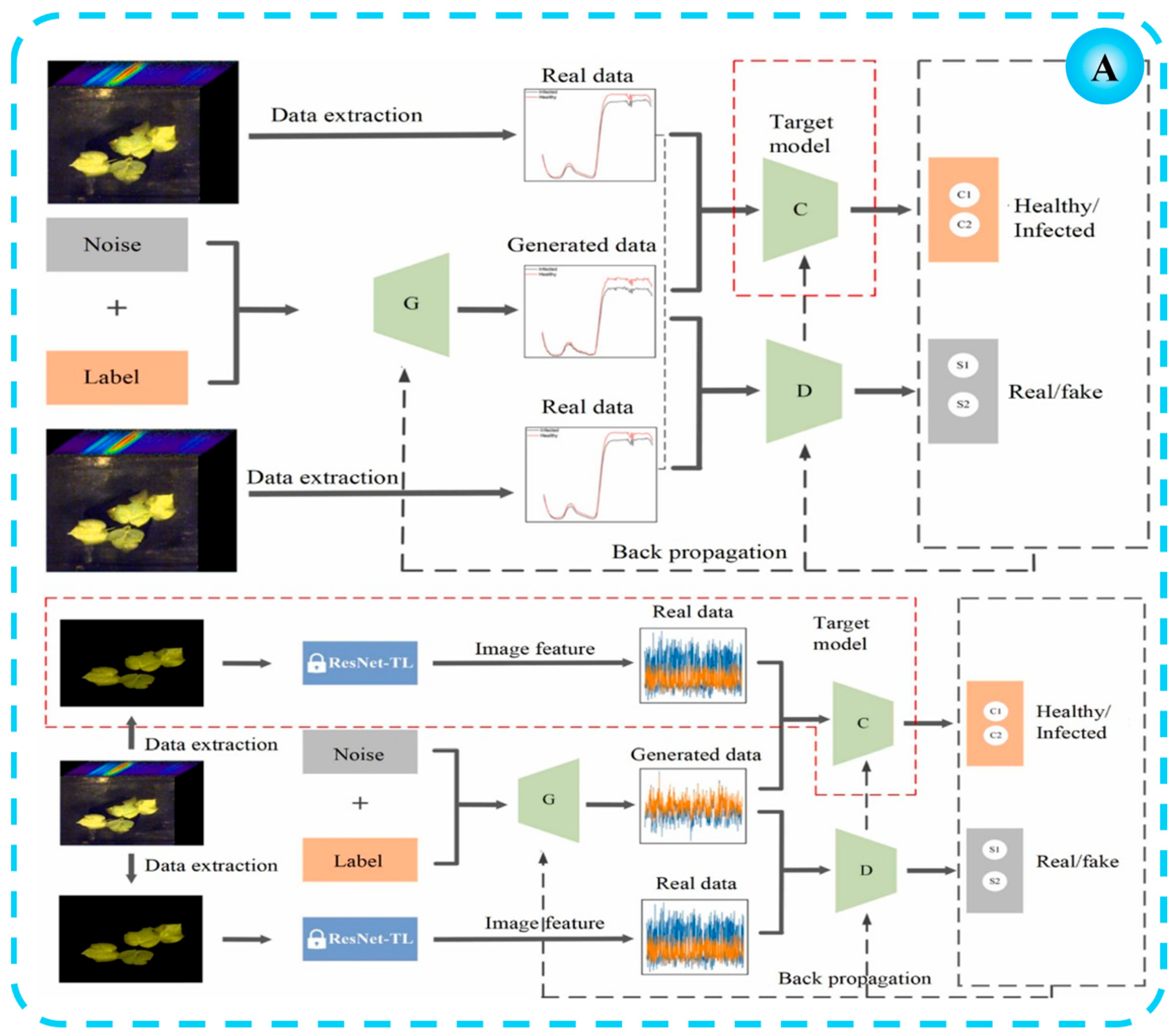

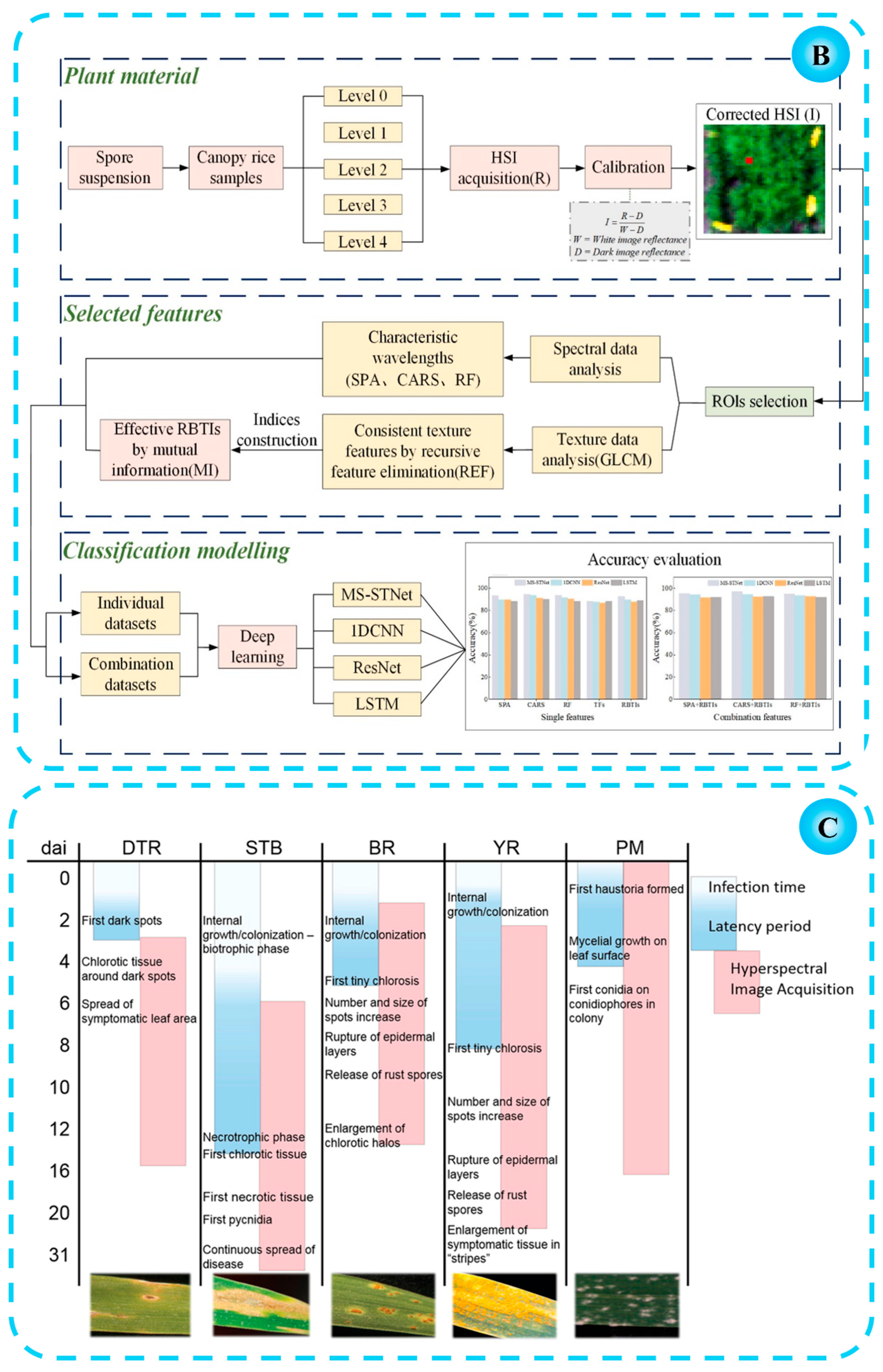

2.1. Disease Detection

2.2. Disease Early Warning

2.3. Crop Remote Sensing and Monitoring

2.4. Extended Applications of Hyperspectral Imaging Techniques

3. Applications of Diffraction Imaging Technology in Agriculture

3.1. Classification and Identification of Pathogenic Spores

3.2. Pathogenic Spore Counting

3.3. Cell Viability Detection

3.4. Other Agricultural Applications of Diffraction Imaging Technology

4. Conclusions

4.1. Integration of Hyperspectral and Diffraction Imaging

4.2. Advanced Data Analytics and Artificial Intelligence

4.3. Development of Low-Cost, Portable Devices

4.4. Enhanced Environmental Adaptability and Robustness

4.5. Interdisciplinary Integration and Smart Agriculture Applications

5. Future Prospects

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PCA | principal component analysis |
| CNN | convolutional neural network |
| SVM | support vector machine |
| GNN | graph neural network |
| ARMA | autoregressive moving average |
| GAT | graph attention network |
| RF | random forest |
| ML | machine learning |
| PE | polyethylene |
| PA | polyamide |
| PLSR | partial least squares regression |
| SOC | soil organic carbon |
| CARS | competitive adaptive reweighted sampling |
| GA | genetic algorithm |
| RBF | radial basis function |
| CARS-Ridge | competitive adaptive reweighted sampling combined with ridge regression |
| VIS-NIR | visible–near-infrared |
| LDA | linear discriminant analysis |
| KNN | k-nearest neighbor |
| PLS-DA | partial least squares discriminant analysis |
| FFNN | feedforward neural network |
| UAV | unmanned aerial vehicle |
| RGB | red–green–blue |
| DNN | deep neural network |
| ANN-BPN | artificial neural network with backpropagation |
| HSI | hyperspectral imaging |
| SEM | scanning electron microscopy |
| MTT | 3-(4,5-dimethylthiazol-2-yl)-2,5-diphenyltetrazolium bromide |
| FOV | field of view |
| FIC | fringe intensity contrast |
| LUCAS | laser ultrasonic camera system |
| SSAW | standing surface acoustic wave |
| DOE | diffractive optical element |
| SPA | successive projections algorithm |
| GLCM | gray-level co-occurrence matrix |

References

- Ozcelik, A.E. Driving initiatives for future improvements of specialty agricultural crops. Comput. Electron. Agr. 2016, 121, 122–134. [Google Scholar] [CrossRef]

- Gebbers, R.; Adamchuk, V.I. Precision Agriculture and Food Security. Science 2010, 327, 828–831. [Google Scholar] [CrossRef] [PubMed]

- Shi, Y.; Wang, Y.T.; Hu, X.T.; Li, Z.Z.; Huang, X.W.; Liang, J.; Zhang, X.A.; Zheng, K.Y.; Zou, X.B.; Shi, J.T. Nondestructive discrimination of analogous density foreign matter inside soy protein meat semi-finished products based on transmission hyperspectral imaging. Food Chem. 2023, 411, 135431. [Google Scholar] [CrossRef]

- Nirere, A.; Sun, J.; Kama, R.; Atindana, V.A.; Nikubwimana, F.D.; Dusabe, K.D.; Zhong, Y.H. Nondestructive detection of adulterated wolfberry (Lycium Chinense) fruits based on hyperspectral imaging technology. J. Food Process Eng. 2023, 46, e14293. [Google Scholar] [CrossRef]

- Ram, B.G.; Oduor, P.; Igathinathane, C.; Howatt, K.; Sun, X. A systematic review of hyperspectral imaging in precision agriculture: Analysis of its current state and future prospects. Comput. Electron. Agr. 2024, 222, 109037. [Google Scholar] [CrossRef]

- Massaro, G.; Francesco, V.P.; And, M.D.A. Correlation Hyperspectral Imaging. Phys. Rev. Lett. 2024, 18, 183802. [Google Scholar] [CrossRef]

- Yang, C.; Guo, Z.; Fernandes Barbin, D.; Dai, Z.; Watson, N.; Povey, M.; Zou, X. Hyperspectral Imaging and Deep Learning for Quality and Safety Inspection of Fruits and Vegetables: A Review. J. Agr. Food Chem. 2025, 73, 10019–10035. [Google Scholar] [CrossRef]

- Sun, J.; Zhang, L.; Zhou, X.; Yao, K.S.; Tian, Y.; Nirere, A. A method of information fusion for identification of rice seed varieties based on hyperspectral imaging technology. J. Food Process Eng. 2021, 44, e13797. [Google Scholar] [CrossRef]

- Sun, J.; Lu, X.Z.; Mao, H.P.; Jin, X.M.; Wu, X.H. A Method for Rapid Identification of Rice Origin by Hyperspectral Imaging Technology. J. Food Process Eng. 2017, 40, e12297. [Google Scholar] [CrossRef]

- Tang, N.Q.; Sun, J.; Yao, K.S.; Zhou, X.; Tian, Y.; Cao, Y.; Nirere, A. Identification of Lycium barbarum varieties based on hyperspectral imaging technique and competitive adaptive reweighted sampling-whale optimization algorithm-support vector machine. J. Food Process Eng. 2021, 44, e13603. [Google Scholar] [CrossRef]

- Fu, L.H.; Sun, J.; Wang, S.M.; Xu, M.; Yao, K.S.; Cao, Y.; Tang, N.Q. Identification of maize seed varieties based on stacked sparse autoencoder and near-infrared hyperspectral imaging technology. J. Food Process Eng. 2022, 45, e14120. [Google Scholar] [CrossRef]

- Zhang, L.; Sun, J.; Zhou, X.; Nirere, A.; Wu, X.; Dai, R. Classification detection of saccharin jujube based on hyperspectral imaging technology. J. Food Process. Pres. 2020, 44, e14591. [Google Scholar] [CrossRef]

- Sun, J.; Lu, X.Z.; Mao, H.P.; Wu, X.H.; Gao, H.Y. Quantitative Determination of Rice Moisture Based on Hyperspectral Imaging Technology and BCC-LS-SVR Algorithm. J. Food Process Eng. 2017, 40, e12446. [Google Scholar] [CrossRef]

- Shi, L.; Sun, J.; Zhang, B.; Wu, Z.; Jia, Y.; Yao, K.; Zhou, X. Simultaneous detection for storage condition and storage time of yellow peach under different storage conditions using hyperspectral imaging with multi-target characteristic selection and multi-task model. J. Food Compos. Anal. 2024, 135, 106647. [Google Scholar] [CrossRef]

- Zhou, X.; Sun, J.; Mao, H.P.; Wu, X.H.; Zhang, X.D.; Yang, N. Visualization research of moisture content in leaf lettuce leaves based on WT-PLSR and hyperspectral imaging technology. J. Food Process Eng. 2018, 41, e12647. [Google Scholar] [CrossRef]

- Lu, B.; Sun, J.; Yang, N.; Wu, X.H.; Zhou, X.; Shen, J.F. Quantitative detection of moisture content in rice seeds based on hyperspectral technique. J. Food Process Eng. 2018, 41, e12916. [Google Scholar] [CrossRef]

- Sun, J.; Wu, M.M.; Hang, Y.Y.; Lu, B.; Wu, X.H.; Chen, Q.S. Estimating cadmium content in lettuce leaves based on deep brief network and hyperspectral imaging technology. J. Food Process Eng. 2019, 42, e13293. [Google Scholar] [CrossRef]

- Zhou, X.; Sun, J.; Tian, Y.; Lu, B.; Hang, Y.Y.; Chen, Q.S. Hyperspectral technique combined with deep learning algorithm for detection of compound heavy metals in lettuce. Food Chem. 2020, 321, 126503. [Google Scholar] [CrossRef]

- Tian, X.Y.; Aheto, J.H.; Bai, J.W.; Dai, C.X.; Ren, Y.; Chang, X.H. Quantitative analysis and visualization of moisture and anthocyanins content in purple sweet potato by Vis–NIR hyperspectral imaging. J. Food Process. Pres. 2021, 45, e15128. [Google Scholar] [CrossRef]

- Zhou, X.; Zhao, C.J.; Sun, J.; Yao, K.S.; Xu, M. Detection of lead content in oilseed rape leaves and roots based on deep transfer learning and hyperspectral imaging technology. Spectrochim. Acta Part A 2023, 290, 122288. [Google Scholar] [CrossRef]

- Zhou, X.; Zhao, C.J.; Sun, J.; Cao, Y.; Yao, K.S.; Xu, M. A deep learning method for predicting lead content in oilseed rape leaves using fluorescence hyperspectral imaging. Food Chem. 2023, 409, 135251. [Google Scholar] [CrossRef]

- Lu, X.; Sun, J.; Mao, H.P.; Wu, X.H.; Gao, H.Y. Quantitative determination of rice starch based on hyperspectral imaging technology. Int. J. Food Prop. 2017, 20, S1037–S1044. [Google Scholar] [CrossRef]

- Sun, J.; Cong, S.L.; Mao, H.P.; Wu, X.H.; Yang, N. Quantitative detection of mixed pesticide residue of lettuce leaves based on hyperspectral technique. J. Food Process Eng. 2018, 41, e12654. [Google Scholar] [CrossRef]

- Cheng, J.H.; Sun, J.; Yao, K.S.; Xu, M.; Wang, S.M.; Fu, L.H. Hyperspectral technique combined with stacking and blending ensemble learning method for detection of cadmium content in oilseed rape leaves. J. Sci. Food Agr. 2023, 103, 2690–2699. [Google Scholar] [CrossRef]

- Shi, J.Y.; Zhang, F.; Wu, S.B.; Guo, Z.M.; Huang, X.W.; Hu, X.T.; Holmes, M.; Zou, X.B. Noise-free microbial colony counting method based on hyperspectral features of agar plates. Food Chem. 2019, 274, 925–932. [Google Scholar] [CrossRef]

- Cao, Y.; Li, H.R.; Sun, J.; Zhou, X.; Yao, K.S.; Nirere, A. Nondestructive determination of the total mold colony count in green tea by hyperspectral imaging technology. J. Food Process Eng. 2020, 43, e13570. [Google Scholar] [CrossRef]

- Sun Li, C.; Jun, S.; Han Ping, M.; Xiao Hong, W.; Pei, W.; Xiao Dong, Z. Non-destructive detection for mold colonies in rice based on hyperspectra and GWO-SVR. J. Sci. Food Agr. 2018, 98, 1453–1459. [Google Scholar] [CrossRef] [PubMed]

- Ouyang, Q.; Wang, L.; Park, B.; Kang, R.; Wang, Z.; Chen, Q.S.; Guo, Z.M. Assessment of matcha sensory quality using hyperspectral microscope imaging technology. Lwt 2020, 125, 109254. [Google Scholar] [CrossRef]

- Sun, J.; Yang, F.Y.; Cheng, J.H.; Wang, S.M.; Fu, L.H. Nondestructive identification of soybean protein in minced chicken meat based on hyperspectral imaging and VGG16-SVM. J. Food Compos. Anal. 2024, 125, 105713. [Google Scholar] [CrossRef]

- Xiong, C.H.; She, Y.X.; Jiao, X.; Zhang, T.W.; Wang, M.; Wang, M.Q.; Abd El-Aty, A.M.; Wang, J.; Xiao, M. Rapid nondestructive hardness detection of black highland Barley Kernels via hyperspectral imaging. J. Food Compos. Anal. 2024, 127, 105966. [Google Scholar] [CrossRef]

- Cheng, J.H.; Sun, J.; Xu, M.; Zhou, X. Nondestructive detection of lipid oxidation in frozen pork using hyperspectral imaging technology. J. Food Compos. Anal. 2023, 123, 105497. [Google Scholar] [CrossRef]

- Zhu, W.J.; Li, J.Y.; Li, L.; Wang, A.C.; Wei, X.H.; Mao, H.P. Nondestructive diagnostics of soluble sugar, total nitrogen and their ratio of tomato leaves in greenhouse by polarized spectra–hyperspectral data fusion. Int. J. Agr. Biol. Eng. 2020, 13, 189–197. [Google Scholar] [CrossRef]

- Yao, K.S.; Sun, J.; Zhang, L.; Zhou, X.; Tian, Y.; Tang, N.Q.; Wu, X.H. Nondestructive detection for egg freshness based on hyperspectral imaging technology combined with harris hawks optimization support vector regression. J. Food Saf. 2021, 41, e12888. [Google Scholar] [CrossRef]

- Yao, K.S.; Sun, J.; Chen, C.; Xu, M.; Zhou, X.; Cao, Y.; Tian, Y. Non-destructive detection of egg qualities based on hyperspectral imaging. J. Food Eng. 2022, 325, 111024. [Google Scholar] [CrossRef]

- Khulal, U.; Zhao, J.W.; Hu, W.W.; Chen, Q.S. Nondestructive quantifying total volatile basic nitrogen (TVB-N) content in chicken using hyperspectral imaging (HSI) technique combined with different data dimension reduction algorithms. Food Chem. 2016, 197, 1191–1199. [Google Scholar] [CrossRef]

- Li, H.H.; Kutsanedzie, F.; Zhao, J.W.; Chen, Q.S. Quantifying Total Viable Count in Pork Meat Using Combined Hyperspectral Imaging and Artificial Olfaction Techniques. Food Anal. Methods 2016, 9, 2–9913. [Google Scholar] [CrossRef]

- Li, Y.T.; Sun, J.; Wu, X.H.; Lu, B.; Wu, M.M.; Dai, C. Grade Identification of Tieguanyin Tea Using Fluorescence Hyperspectra and Different Statistical Algorithms. J. Food Sci. 2019, 84, 2234–2241. [Google Scholar] [CrossRef]

- Xu, M.; Sun, J.; Yao, K.S.; Wu, X.H.; Shen, J.F.; Cao, Y.; Zhou, X. Nondestructive detection of total soluble solids in grapes using VMD-RC and hyperspectral imaging. J. Food Sci. 2022, 87, 326–338. [Google Scholar] [CrossRef]

- Yao, K.S.; Sun, J.; Cheng, J.H.; Xu, M.; Chen, C.; Zhou, X.; Dai, C.X. Development of Simplified Models for Non-Destructive Hyperspectral Imaging Monitoring of S-ovalbumin Content in Eggs during Storage. Foods 2022, 11, 2024. [Google Scholar] [CrossRef]

- Yang, F.Y.; Sun, J.; Cheng, J.H.; Fu, L.H.; Wang, S.M.; Xu, M. Detection of starch in minced chicken meat based on hyperspectral imaging technique and transfer learning. J. Food Process Eng. 2023, 46, e14304. [Google Scholar] [CrossRef]

- Zhang, X.; Jiang, X.; Jiang, J.; Zhang, Y.; Liu, X.; Cai, Z. Spectral–Spatial and Superpixelwise PCA for Unsupervised Feature Extraction of Hyperspectral Imagery. IEEE T. Geosci. Remote 2022, 60, 1–10. [Google Scholar] [CrossRef]

- Gao, S.; Xu, J.H. Hyperspectral image information fusion-based detection of soluble solids content in red globe grapes. Comput. Electron. Agr. 2022, 196, 106822. [Google Scholar] [CrossRef]

- Mei, S.H.; Geng, Y.H.; Hou, J.H.; Du, Q. Learning hyperspectral images from RGB images via a coarse-to-fine CNN. Sci. China Inf. Sci. 2021, 65, 152102. [Google Scholar] [CrossRef]

- Yang, J.Y.; Lee, Y.K.; Chi, J. Spectral unmixing-based Arctic plant species analysis using a spectral library and terrestrial hyperspectral Imagery: A case study in Adventdalen, Svalbard. Int. J. Appl. Earth Obs. 2023, 125, 103583. [Google Scholar] [CrossRef]

- Jia, S.; Zhao, Q.Q.; Zhuang, J.Y.; Tang, D.D.; Long, Y.Q.; Xu, M. Flexible Gabor-Based Superpixel-Level Unsupervised LDA for Hyperspectral Image Classification. IEEE T. Geosci. Remote 2021, 59, 10394–10409. [Google Scholar] [CrossRef]

- Li, D.; Neira-Molina, H.; Huang, M.; Syam, S.M.; Zhang, Y.; Feng, Z.J.; Bhatti, U.A.; Asif, M.; Sarhan, N.; Awwad, E.M. CSTFNet: A CNN and Dual Swin-Transformer Fusion Network for Remote Sensing Hyperspectral Data Fusion and Classification of Coastal Areas. IEEE J. Stars 2025, 18, 5853–5865. [Google Scholar] [CrossRef]

- Uddin; Md, P.; Mamun, M.A.; Md, A.H. Effective feature extraction through segmentation-based folded-PCA for hyperspectral image classification. Int. J. Remote Sens. 2019, 40, 7190–7220. [Google Scholar] [CrossRef]

- Uddin, M.P.; Mamun, M.A.; Afjal, M.I.; Hossain, M.A. Information-theoretic feature selection with segmentation-based folded principal component analysis (PCA) for hyperspectral image classification. Int. J. Remote Sens. 2021, 42, 286–321. [Google Scholar] [CrossRef]

- Chen, Y.X.; Liu, Z.H.; Chen, Z.Q. AMS: A hyperspectral image classification method based on SVM and multi-modal attention network. Knowl. Based Syst. 2025, 314, 113236. [Google Scholar] [CrossRef]

- Sahadevan, A.S. Extraction of spatial-spectral homogeneous patches and fractional abundances for field-scale agriculture monitoring using airborne hyperspectral images. Comput. Electron. Agr. 2021, 188, 106325. [Google Scholar] [CrossRef]

- Zhang, Z.; Huang, L.H.; Tang, B.; Wang, Q.W.; Ge, Z.X.; Jiang, L.H. Non-Euclidean Spectral-Spatial feature mining network with Gated GCN-CNN for hyperspectral image classification. Expert Syst. Appl. 2025, 272, 126811. [Google Scholar] [CrossRef]

- Lin, J.; Mou, L.; Zhu, X.X.; Ji, X.; Wang, Z.J. Attention-Aware Pseudo-3-D Convolutional Neural Network for Hyperspectral Image Classification. IEEE T. Geosci. Remote 2021, 59, 7790–7802. [Google Scholar] [CrossRef]

- Yang, J.; Sun, J.; Ren, Y.P.; Li, S.B.; Ding, S.J.; Hu, J.J. GACP: Graph neural networks with ARMA filters and a parallel CNN for hyperspectral image classification. Int J. Digit Earth 2023, 16, 1770–1800. [Google Scholar] [CrossRef]

- Peng, M.; Liu, Y.X.; Qadri, I.A.; Bhatti, U.A.; Ahmed, B.; Sarhan, N.M.; Awwad, E.M. Advanced image segmentation for precision agriculture using CNN-GAT fusion and fuzzy C-means clustering. Comput. Electron. Agr. 2024, 226, 109431. [Google Scholar] [CrossRef]

- Zhu, C.M.; Ding, J.L.; Zhang, Z.P.; Wang, Z. Exploring the potential of UAV hyperspectral image for estimating soil salinity: Effects of optimal band combination algorithm and random forest. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2022, 279, 121416. [Google Scholar] [CrossRef] [PubMed]

- Ali, M.A.; Lyu, X.; Ersan, M.S.; Xiao, F. Critical evaluation of hyperspectral imaging technology for detection and quantification of microplastics in soil. J. Hazard. Mater. 2024, 476, 135041. [Google Scholar] [CrossRef]

- Chen, H.; Shin, T.; Park, B.; Ro, K.; Jeong, C.; Jeon, H.J.; Tan, P. Coupling hyperspectral imaging with machine learning algorithms for detecting polyethylene (PE) and polyamide (PA) in soils. J. Hazard. Mater. 2024, 471, 134346. [Google Scholar] [CrossRef]

- Jiang, R.Z.; Sui, Y.Y.; Zhang, X.; Lin, N.; Zheng, X.M.; Li, B.Z.; Zhang, L.; Li, X.K.; Yu, H.Y. Estimation of soil organic carbon by combining hyperspectral and radar remote sensing to reduce coupling effects of soil surface moisture and roughness. Geoderma 2024, 444, 116874. [Google Scholar] [CrossRef]

- Li, X.Y.; Qiu, H.M.; Fan, P.P. A review of spectral feature extraction and multi-feature fusion methods in predicting soil organic carbon. Appl. Spectrosc. Rev. 2025, 60, 78–101. [Google Scholar] [CrossRef]

- Xu, H.; Ran, J.; Chen, M.X.; Sui, B.W.; Bai, X.Y. Non-Destructive Testing Based on Hyperspectral Imaging for Determination of Available Silicon and Moisture Contents in Ginseng Soils of Different Origins. J. Food Sci. 2025, 90, e70285. [Google Scholar] [CrossRef]

- Bohnenkamp, D.; Behmann, J.; Mahlein, A. In-Field Detection of Yellow Rust in Wheat on the Ground Canopy and UAV Scale. Remote Sens. 2019, 11, 2495. [Google Scholar] [CrossRef]

- Moghimi, A.; Yang, C.; Anderson, J.A. Aerial hyperspectral imagery and deep neural networks for high-throughput yield phenotyping in wheat. Comput. Electron. Agr. 2020, 172, 105299. [Google Scholar] [CrossRef]

- Ahmed, M.T.; Monjur, O.; Khaliduzzaman, A.; Kamruzzaman, M. A comprehensive review of deep learning-based hyperspectral image reconstruction for agri-food quality appraisal. Artif. Intell. Rev. 2025, 58, 96. [Google Scholar] [CrossRef]

- Genangeli, A.; Avola, G.; Bindi, M.; Cantini, C.; Cellini, F.; Riggi, E.; Gioli, B. A Novel Correction Methodology to Improve the Performance of a Low-Cost Hyperspectral Portable Snapshot Camera. Sens. 2023, 23, 9685. [Google Scholar] [CrossRef]

- Arad, O.; Cheplanov, L.; Afgin, Y.; Reshef, L.; Brikman, R.; Elatrash, S.; Stern, A.; Tsror, L.; Bonfil, D.J.; Klapp, I. Low-Cost Dispersive Hyperspectral Sampling Scanner for Agricultural Imaging Spectrometry. IEEE Sens. J. 2023, 23, 18292–18303. [Google Scholar] [CrossRef]

- Li, Q.F.; Yang, Y.P.; Tan, M.; Xia, H.; Peng, Y.X.; Fu, X.R.; Huang, Y.G.; Yang, X.P.; Ma, X.Y. Rapid pesticide residues detection by portable filter-array hyperspectral imaging. Spectrochim. Acta. Part A Mol. Biomol. Spectrosc. 2025, 330, 125703. [Google Scholar] [CrossRef] [PubMed]

- Habib, A.; Han, Y.; Xiong, W.F.; Fang Ning, H.; Zhou, Z.; Crawford, A. Automated Ortho-Rectification of UAV-Based Hyperspectral Data over an Agricultural Field Using Frame RGB Imagery. Remote Sens. 2016, 8, 796. [Google Scholar] [CrossRef]

- Mäkynen, J.; Litkey, P.; Hakala, T.; Pölönen, I.; Kaivosoja, J.; Saari, H.; Honkavaara, E.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039. [Google Scholar]

- Sun, M.J.; Jing, X.T.; Ma, Y.X.; Huang, H.X. Lensless imaging via LED array based computational ghost imaging. Opt. Laser Technol. 2025, 180, 111401. [Google Scholar] [CrossRef]

- Greenbaum, A.; Zhang, Y.B.; Feizi, A.; Chung, P.; Luo, W.; Kandukuri, S.R.; Ozcan, A. Wide-field computational imaging of pathology slides using lens-free on-chip microscopy. Sci. Transl. Med. 2014, 6, 267ra175. [Google Scholar] [CrossRef]

- Xu, M.; Sun, J.; Zhou, X.; Tang, N.Q.; Shen, J.F.; Wu, X.H. Research on nondestructive identification of grape varieties based on EEMD-DWT and hyperspectral image. J. Food Sci. 2021, 86, 2011–2023. [Google Scholar] [CrossRef] [PubMed]

- Jia, W.Y.; Ferragina, A.; Hamill, R.; Koidis, A. Modelling and numerical methods for identifying low-level adulteration in ground beef using near-infrared hyperspectral imaging (NIR-HSI). Talanta 2024, 276, 126199. [Google Scholar] [CrossRef]

- Savary, S.; Willocquet, L.; Pethybridge, S.J.; Esker, P.; McRoberts, N.; Nelson, A. The global burden of pathogens and pests on major food crops. Nat. Ecol. Evol. 2019, 3, 430–439. [Google Scholar] [CrossRef]

- Shen, G.H.; Cao, Y.Y.; Yin, X.C.; Dong, F.; Xu, J.H.; Shi, J.R.; Lee, Y. Rapid and nondestructive quantification of deoxynivalenol in individual wheat kernels using near-infrared hyperspectral imaging and chemometrics. Food Control 2022, 131, 108420. [Google Scholar] [CrossRef]

- Liu, J.; Wang, X.W. Plant diseases and pests detection based on deep learning: A review. Plant Methods 2021, 17, 22. [Google Scholar] [CrossRef]

- Lu, B.; Jun, S.; Ning, Y.; Xiao Hong, W.; Xin, Z. Identification of tea white star disease and anthrax based on hyperspectral image information. J. Food Process Eng. 2021, 44, e13584. [Google Scholar] [CrossRef]

- Wan, L.; Li, H.; Li, C.S.; Wang, A.C.; Yang, Y.H.; Wang, P. Hyperspectral Sensing of Plant Diseases: Principle and Methods. Agronomy 2022, 12, 1451. [Google Scholar] [CrossRef]

- Zhang, X.D.; Wang, Y.F.; Zhou, Z.K.; Zhang, Y.X.; Wang, X.Z. Detection Method for Tomato Leaf Mildew Based on Hyperspectral Fusion Terahertz Technology. Foods 2023, 12, 535. [Google Scholar] [CrossRef]

- Jiang, X.P.; Zhen, J.N.; Miao, J.; Zhao, D.M.; Shen, Z.; Jiang, J.C.; Gao, C.J.; Wu, G.F.; Wang, J.J. Newly-developed three-band hyperspectral vegetation index for estimating leaf relative chlorophyll content of mangrove under different severities of pest and disease. Ecol. Indic. 2022, 140, 108978. [Google Scholar] [CrossRef]

- Feng, L.; Wu, B.H.; He, Y.; Zhang, C. Hyperspectral Imaging Combined With Deep Transfer Learning for Rice Disease Detection. Front. Plant Sci. 2021, 12, 693521. [Google Scholar] [CrossRef]

- Zhang, H.; Zhao, J.; Huang, L.; Huang, W.; Dong, Y.; Ma, H.; Ruan, C. Development of new indices and use of CARS-Ridge algorithm for wheat fusarium head blight detection using in-situ hyperspectral data. Biosyst. Eng. 2024, 237, 13–25. [Google Scholar] [CrossRef]

- Rady, A.; Ekramirad, N.; Adedeji, A.A.; Li, M.; Alimardani, R. Hyperspectral imaging for detection of codling moth infestation in GoldRush apples. Postharvest Biol. Tec. 2017, 129, 37–44. [Google Scholar] [CrossRef]

- Cheshkova, A.F. A review of hyperspectral image analysis techniques for plant disease detection and identification. Vavilovskii Zh Genet. 2022, 26, 202–213. [Google Scholar]

- Shi, Y.; Han, L.X.; Kleerekoper, A.; Chang, S.; Hu, T.L. A Novel CropdocNet for Automated Potato Late Blight Disease Detection from the Unmanned Aerial Vehicle-based Hyperspectral Imagery. arXiv 2021, arXiv:2107.13277. [Google Scholar] [CrossRef]

- Gu, Q.; Sheng, L.; Zhang, T.H.; Lu, Y.W.; Zhang, Z.J.; Zheng, K.F.; Hu, H.; Zhou, H.K. Early detection of tomato spotted wilt virus infection in tobacco using the hyperspectral imaging technique and machine learning algorithms. Comput. Electron. Agr. 2019, 167, 105066. [Google Scholar] [CrossRef]

- Bai, Y.L.; Nie, C.W.; Yu, X.; Gou, M.Y.; Liu, S.B.; Zhu, Y.Q.; Jiang, T.T.; Jia, X.; Liu, Y.D.; Nan, F.; et al. Comprehensive analysis of hyperspectral features for monitoring canopy maize leaf spot disease. Comput. Electron. Agr. 2024, 225, 109350. [Google Scholar] [CrossRef]

- Shi, Y.; Wang, Y.Y.; Hu, X.T.; Li, Z.H.; Huang, X.W.; Liang, J.; Zhang, X.A.; Zhang, D.; Zou, X.B.; Shi, J.Y. Quantitative characterization of the diffusion behavior of sucrose in marinated beef by HSI and FEA. Meat Sci. 2023, 195, 109002. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Liu, X.; Meng, K.X.; Zhang, K.X.; Yang, W.J.; Yang, J.T.; Feng, L.Y.; Gong, H.R.; Zhou, C.A. Discrimination of leaf diseases in Maize/Soybean intercropping system based on hyperspectral imaging. Front. Plant Sci. 2024, 15, 1434163. [Google Scholar] [CrossRef]

- Baek, I.; Kim, M.S.; Cho, B.; Changyeun, M.; Jinyoung, Y.B.; McClung, A.M.; Oh, M. Selection of Optimal Hyperspectral Wavebands for Detection of Discolored, Diseased Rice Seeds. Appl. Sci. 2019, 9, 1027. [Google Scholar] [CrossRef]

- Qi, C.; Sandroni, M.; Westergaard, J.C.; Sundmark, E.H.R.; Bagge, M.; Alexandersson, E.; Gao, J.F. In-field early disease recognition of potato late blight based on deep learning and proximal hyperspectral imaging. arXiv 2021, arXiv:2111.12155. [Google Scholar] [CrossRef]

- Zhang, Y.; Li, X.; Wang, M.; Xu, T.; Huang, K.; Sun, Y.; Yuan, Q.; Lei, X.; Qi, Y.; Lv, X. Early detection and lesion visualization of pear leaf anthracnose based on multi-source feature fusion of hyperspectral imaging. Front. Plant Sci. 2024, 15, 1461855. [Google Scholar] [CrossRef]

- Bharadwaj, S.; Midha, A.; Sharma, S.; Sidhu, G.S.; Kumar, R. Optical screening of citrus leaf diseases using label-free spectroscopic tools: A review. J. Agr. Food Res. 2024, 18, 101303. [Google Scholar] [CrossRef]

- Ferreira, L.D.C.; Carvalho, I.C.B.; Jorge, L.A.D.C.; Quezado-Duval, A.M.; Rossato, M. Hyperspectral imaging for the detection of plant pathogens in seeds: Recent developments and challenges. Front. Plant Sci. 2024, 15, 1387925. [Google Scholar] [CrossRef] [PubMed]

- Jia, Z.C.; Qi Feng, D.; Yue, W.; Ke, W.; Hong Zhe, J. Detection Model and Spectral Disease Indices for Poplar (Populus L.) Anthracnose Based Hyperspectral Reflectance. Forest 2024, 15, 1309. [Google Scholar] [CrossRef]

- Rouš, R.; Peller, J.; Polder, G.; Hageraats, S.; Ruigrok, T.; Blok, P.M. Apple scab detection in orchards using deep learning on colour and multispectral images. arXiv 2023, arXiv:2302.08818. [Google Scholar] [CrossRef]

- Guo, L.; Sun, X.R.; Fu, P.; Shi, T.Z.; Dang, L.N.; Chen, Y.Y.; Linderman, M.; Zhang, G.L.; Zhang, Y.; Jiang, Q.H.; et al. Mapping soil organic carbon stock by hyperspectral and time-series multispectral remote sensing images in low-relief agricultural areas. Geoderma 2021, 398, 115118. [Google Scholar] [CrossRef]

- Mehedi, I.M.; Bilal, M.; Hanif, M.S.; Palaniswamy, T.; Vellingiri, M.T. Leveraging Hyperspectral Remote Sensing Imaging for Agricultural Crop Classification Using Coot Bird Optimization With Entropy-Based Feature Fusion Model. IEEE Access 2024, 12, 130214–130227. [Google Scholar] [CrossRef]

- Zhang, Y.T.; Wang, X.; Shan, J.J.; Zhao, J.B.; Zhang, W.; Liu, L.F.; Wu, F.C. Hyperspectral Imaging Based Method for Rapid Detection of Microplastics in the Intestinal Tracts of Fish. Environ. Sci. Technol. 2019, 53, 5151–5158. [Google Scholar] [CrossRef]

- Duma, Z.; Zemcik, T.; Bilik, S.; Sihvonen, T.; Honec, P.; Reinikainen, S.; Horak, K. Varroa destructor detection on honey bees using hyperspectral imagery. Comput. Electron. Agr. 2024, 224, 109219. [Google Scholar] [CrossRef]

- Chen, S.Y.; Chang, C.Y.; Ou, C.S.; Lien, C.T. Detection of Insect Damage in Green Coffee Beans Using VIS-NIR Hyperspectral Imaging | Semantic Scholar. Remote Sens. 2020, 15, 2348. [Google Scholar] [CrossRef]

- Dashti, A.; Müller-Maatsch, J.; Roetgerink, E.; Wijtten, M.; Weesepoel, Y.; Parastar, H.; Yazdanpanah, H. Comparison of a portable Vis-NIR hyperspectral imaging and a snapscan SWIR hyperspectral imaging for evaluation of meat authenticity. Food Chem. X 2023, 18, 100667. [Google Scholar] [CrossRef]

- Zheng, L.; Zhao, M.Y.; Zhu, J.C.; Huang, L.S.; Zhao, J.L.; Liang, D.; Zhang, D.Y. Fusion of hyperspectral imaging (HSI) and RGB for identification of soybean kernel damages using ShuffleNet with convolutional optimization and cross stage partial architecture. Front. Plant Sci. 2022, 13, 1098864. [Google Scholar] [CrossRef]

- Sun, Y.; Liang, D.D.; Wang, X.C.; Hu, Y.H. Assessing and detection of multiple bruises in peaches based on structured hyperspectral imaging. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2024, 304, 123378. [Google Scholar] [CrossRef] [PubMed]

- Vignati, S.; Tugnolo, A.; Giovenzana, V.; Pampuri, A.; Casson, A.; Guidetti, R.; Beghi, R. Hyperspectral Imaging for Fresh-Cut Fruit and Vegetable Quality Assessment: Basic Concepts and Applications. Appl. Sci. 2023, 13, 9740. [Google Scholar] [CrossRef]

- Jin, S.S.; Liu, X.H.; Wang, J.L.; Pan, L.Q.; Zhang, Y.M.; Zhou, G.H.; Tang, C.B. Hyperspectral imaging combined with fluorescence for the prediction of microbial growth in chicken breasts under different packaging conditions. Lwt 2023, 181, 114727. [Google Scholar] [CrossRef]

- Wang, S.M.; Sun, J.; Fu, L.H.; Xu, M.; Tang, N.Q.; Cao, Y.; Yao, K.S.; Jing, J.P. Identification of red jujube varieties based on hyperspectral imaging technology combined with CARS-IRIV and SSA-SVM. J. Food Process Eng. 2022, 45, e14137. [Google Scholar] [CrossRef]

- Ge, X.; Sun, J.; Lu, B.; Chen, Q.S.; Xun, W.; Jin, Y.T. Classification of oolong tea varieties based on hyperspectral imaging technology and BOSS-LightGBM model. J. Food Process Eng. 2019, 42, e13289. [Google Scholar] [CrossRef]

- Tian, Y.; Sun, J.; Zhou, X.; Yao, K.S.; Tang, N.Q. Detection of soluble solid content in apples based on hyperspectral technology combined with deep learning algorithm. J. Food Process. Pres. 2022, 46, e16414. [Google Scholar] [CrossRef]

- Nirere, A.; Sun, J.; Atindana, V.A.; Hussain, A.; Zhou, X.; Yao, K.S. A comparative analysis of hybrid SVM and LS-SVM classification algorithms to identify dried wolfberry fruits quality based on hyperspectral imaging technology. J. Food Process. Pres. 2022, 46, e16320. [Google Scholar] [CrossRef]

- Terentev, A.; Badenko, V.; Shaydayuk, E.; Dmitriy, E.; Danila, E.; Dmitriy, K.; Alexander, F.; Dolzhenko, V. Hyperspectral Remote Sensing for Early Detection of Wheat Leaf Rust Caused by Puccinia triticina. Mol. Plant Pathol. 2023, 13, 1186. [Google Scholar]

- Moriya, É.A.S.; Imai, N.N.; Tommaselli, A.M.G.; Berveglieri, A.; Santos, G.H.; Soares, M.A.; Marino, M.; Reis, T.T. Detection and mapping of trees infected with citrus gummosis using UAV hyperspectral data. Comput. Electron. Agr. 2021, 188, 106298. [Google Scholar] [CrossRef]

- Bai, Y.L.; Jin, X.L. Hyperspectral approaches for rapid and spatial plant disease monitoring. Trends Plant Sci. 2024, 6, 711–712. [Google Scholar] [CrossRef]

- Xu, P.; Fu, L.; Xu, K.; Sun, W.B.; Tan, Q.; Zhang, Y.P.; Zha, X.T.; Yang, R.B. Investigation into maize seed disease identification based on deep learning and multi-source spectral information fusion techniques. J. Food Compos. Anal. 2023, 119, 105254. [Google Scholar] [CrossRef]

- Tan, F.; Cang, H.; Gao, X.W.; Wu, N.Y.; Di, R.Y.; Zhang, Y.; Gao, P.; Lv, X.; Zhang, C. Early detection of cotton Verticillium wilt based on generative adversarial networks and hyperspectral imaging technology. Ind. Crop. Prod. 2025, 231, 121167. [Google Scholar] [CrossRef]

- Liu, T.; Qi, Y.; Yang, F.; Yi, X.Y.; Guo, S.L.; Wu, P.Y.; Yuan, Q.Y.; Xu, T.Y. Early detection of rice blast using UAV hyperspectral imagery and multi-scale integrator selection attention transformer network (MS-STNet). Comput. Electron. Agr. 2025, 231, 110007. [Google Scholar] [CrossRef]

- Bohnenkamp, D.; Behmann, J.; Paulus, S.; Steiner, U.; Mahlein, A. A Hyperspectral Library of Foliar Diseases of Wheat. Phytopathology 2021, 111, 1583–1593. [Google Scholar] [CrossRef]

- Wu, G.S.; Fang, Y.L.; Jiang, Q.Y.; Cui, M.; Li, N.; Ou, Y.M.; Diao, Z.H.; Zhang, B.H. Early identification of strawberry leaves disease utilizing hyperspectral imaging combing with spectral features, multiple vegetation indices and textural features. Comput. Electron. Agr. 2023, 204, 107553. [Google Scholar] [CrossRef]

- Zhu, W.X.; Sun, Z.G.; Huang, Y.H.; Yang, T.; Li, J.; Zhu, K.Y.; Zhang, J.Q.; Yang, B.; Shao, C.X.; Peng, J.B.; et al. Optimization of multi-source UAV RS agro-monitoring schemes designed for field-scale crop phenotyping. Precis. Agric. 2021, 22, 1768–1802. [Google Scholar] [CrossRef]

- Wang, L.J.; Jin, J.; Song, Z.H.; Wang, J.L.; Zhang, L.B.; Rehman, T.U.; Ma, D.; Carpenter, N.R.; Tuinstra, M.R. LeafSpec: An accurate and portable hyperspectral corn leaf imager. Comput. Electron. Agr. 2020, 169, 105209. [Google Scholar] [CrossRef]

- Sun, Q.; Gu, X.H.; Chen, L.P.; Xu, X.B.; Wei, Z.H.; Pan, Y.C.; Gao, Y.B. Monitoring maize canopy chlorophyll density under lodging stress based on UAV hyperspectral imagery. Comput. Electron. Agr. 2022, 193, 106671. [Google Scholar] [CrossRef]

- Thangadeepiga, E.; Alagu Raja, R.A. Remote Sensing-Based Crop Identification Using Deep Learning. In Intelligent Data Engineering and Analytics; Springer: Berlin/Heidelberg, Germany, 2021; pp. 109–122. [Google Scholar]

- Li, D.S.; Chen, Q.S.; Ou Yang, Q.; Liu, Z.H. Advances of Vis/NIRS and imaging techniques assisted by AI for tea processing. Crit. Rev. Food Sci. 2025, 1–19. [Google Scholar] [CrossRef] [PubMed]

- Ou Yang, Q.; Chang, H.L.; Fan, Z.Z.; Ma, S.Z.; Chen, Q.S.; Liu, Z.H. Monitoring changes in constituents during black tea fermentation using snapshot multispectral imaging and 1D-CNN enhanced with data augmentation. Comput. Electron. Agr. 2025, 237, 110643. [Google Scholar] [CrossRef]

- Wang, Y.Y.D.; Duan, Z.J. Advances in Mask-Modulated Lensless Imaging. Electronics 2024, 3, 617. [Google Scholar] [CrossRef]

- Zhao, J.; Li, M.S. Lensless ultrafast optical imaging. Light Sci. Appl. 2022, 11, 97. [Google Scholar] [CrossRef]

- Li, T.G.; Wang, Y.F.; Yang, N.; Wang, A.Y.; Dong, S.Z.; Wang, S.H.; Jiang, F.Y.; Li, S.F. Portable multispectral diffraction microfluidic sensing system for pathogenic fungal detection. Sens. Actuators B Chem. 2024, 411, 135775. [Google Scholar] [CrossRef]

- Zhang, X.D.; Song, H.J.; Wang, Y.F.; Hu, L.; Wang, P.; Mao, H.P. Detection of Rice Fungal Spores Based on Micro-Hyperspectral and Microfluidic Techniques. Biosensors 2023, 13, 278. [Google Scholar] [CrossRef]

- Zhang, X.D.; Guo, B.X.; Wang, Y.F.; Hu, L.; Yang, N.; Mao, H.P. A Detection Method for Crop Fungal Spores Based on Microfluidic Separation Enrichment and AC Impedance Characteristics. J. Fungi 2022, 8, 1168. [Google Scholar] [CrossRef]

- Yang, N.; Hu, J.Q.; Zhou, X.; Wang, A.Y.; Yu, J.J.; Tao, X.Y.; Tang, J. A rapid detection method of early spore viability based on AC impedance measurement. J. Food Process Eng. 2020, 43, e13520. [Google Scholar] [CrossRef]

- Yang, N.; Qian, Y.; Mesery, H.S.E.; Zhang, R.B.; Wang, A.Y.; Tang, J. Rapid detection of rice disease using microscopy image identification based on the synergistic judgment of texture and shape features and decision tree–confusion matrix method. J. Sci. Food Agr. 2019, 99, 6589–6600. [Google Scholar] [CrossRef]

- Xu, P.F.; Zhang, R.B.; Yang, N.; Kwabena Oppong, P.; Sun, J.; Wang, P. High-precision extraction and concentration detection of airborne disease microorganisms based on microfluidic chip. Biomicrofluidics 2019, 13, 24110. [Google Scholar] [CrossRef] [PubMed]

- Yang, N.; Ji, Y.Y.; Wang, A.Y.; Tang, J.; Liu, S.H.; Zhang, X.D.; Xu, L.J.; He, Y. An integrated nucleic acid detection method based on a microfluidic chip for collection and culture of rice false smut spores. Lab Chip 2022, 22, 4894–4904. [Google Scholar] [CrossRef]

- Yang, N.; Chen, C.Y.; Li, T.; Li, Z.; Zou, L.R.; Zhang, R.B.; Mao, H.P. Portable Rice Disease Spores Capture and Detection Method Using Diffraction Fingerprints on Microfluidic Chip. Micromachines 2019, 10, 289. [Google Scholar] [CrossRef]

- Zhang, X.D.; Bian, F.; Wang, Y.F.; Hu, L.; Yang, N.; Mao, H.P. A Method for Capture and Detection of Crop Airborne Disease Spores Based on Microfluidic Chips and Micro Raman Spectroscopy. Foods 2022, 11, 3462. [Google Scholar] [CrossRef]

- Wang, Y.F.; Shi, Q.; Ren, S.J.; Li, T.Z.; Yang, N.; Zhang, X.D.; Ma, G.X.; Mohamed, F.T.; Mao, H.P. Application of a spore detection system based on diffraction imaging to tomato gray mold. Int. J. Agric. Biol. Eng. 2024, 6, 212–217. [Google Scholar] [CrossRef]

- Wang, Y.F.; Mao, H.P.; Zhang, X.D.; Liu, Y.; Du, X.X. A Rapid Detection Method for Tomato Gray Mold Spores in Greenhouse Based on Microfluidic Chip Enrichment and Lens-Less Diffraction Image Processing. Foods 2021, 10, 3011. [Google Scholar] [CrossRef]

- Yang, N.; Yu, J.J.; Wang, A.Y.; Tang, J.; Zhang, R.B.; Xie, L.L.; Shu, F.Y.; Kwabena, O.P. A rapid rice blast detection and identification method based on crop disease spores’ diffraction fingerprint texture. J. Sci. Food Agr. 2020, 100, 3608–3621. [Google Scholar] [CrossRef]

- Li, X.X.; Zhang, X.L.; Liu, Q.; Zhao, W.; Liu, S.X.; Sui, G.D. Microfluidic System for Rapid Detection of Airborne Pathogenic Fungal Spores. ACS Sens. 2018, 3, 2095–2103. [Google Scholar] [CrossRef]

- Wang, Y.F.; Zhang, X.D.; Taha, M.F.; Chen, T.H.; Yang, N.; Zhang, J.R.; Mao, H.P. Detection Method of Fungal Spores Based on Fingerprint Characteristics of Diffraction-Polarization Images. J. Fungi 2023, 9, 1131. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.F.; Mao, H.P.; Xu, G.L.; Zhang, X.D.; Zhang, Y.K. A Rapid Detection Method for Fungal Spores from Greenhouse Crops Based on CMOS Image Sensors and Diffraction Fingerprint Feature Processing. J. Fungi 2022, 8, 374. [Google Scholar] [CrossRef]

- Li, G.X.; Zhang, R.B.; Yang, N.; Yin, C.S.; Wei, M.J.; Zhang, Y.C.; Sun, J. An approach for cell viability online detection based on the characteristics of lensfree cell diffraction fingerprint. Biosens. Bioelectron. 2018, 107, 163–169. [Google Scholar] [CrossRef] [PubMed]

- Chen, P.; Shu Hua, L.; Yanjun, K.; Jian, T.; Ya Fei, W.; Ning, Y.; Ru Bing, H. Crop Disease Source Location and Monitoring System Based on Diffractive Light Identification Airborne Spore Sensor Network. IEEE Internet Things J. 2022, 13, 11030–11042. [Google Scholar]

- Shi, Z.S.; Zhao, Y.; Liu, S.; Wang, Y.T.; Yu, Q.L. Size-Dependent Impact of Magnetic Nanoparticles on Growth and Sporulation of Aspergillus niger. Molecules 2022, 27, 5840. [Google Scholar] [CrossRef] [PubMed]

- Jiang, S.W.; Guo, C.F.; Bian, Z.C.; Wang, R.H.; Zhu, J.K.; Song, P.M.; Hu, P.; Hu, D.; Zhang, Z.B.; Hoshino, K.; et al. Ptychographic sensor for large-scale lensless microbial monitoring with high spatiotemporal resolution. Biosens. Bioelectron. 2022, 196, 113699. [Google Scholar] [CrossRef]

- Wang, J.Y.; You, W.; Jiao, Y.H.; Zhu, Y.H.; Liu, X.J.; Jiang, X.Q.; Hu, C.F.; Lu, W.L. Quantitative phase imaging of opaque specimens with flexible endoscopic microscopy. Opt. Laser. Eng. 2024, 180, 108342. [Google Scholar] [CrossRef]

- Li, T.G.; Yang, N.; Xiao, Y.; Liu, Y.; Pan, X.Q.; Wang, S.H.; Jiang, F.Y.; Zhang, Z.Y.; Zhang, X.C. Virus detection light diffraction fingerprints for biological applications. Sci. Adv. 2024, 10, eadl3466. [Google Scholar] [CrossRef] [PubMed]

- Kalwa, U.; Legner, C.; Wlezien, E.; Tylka, G.; Pandey, S. New methods of removing debris and high-throughput counting of cyst nematode eggs extracted from field soil. PLoS ONE 2019, 14, e0223386. [Google Scholar] [CrossRef]

- Sasagawa, K.; Sasagawa, K.; Ohta, Y.; Kawahara, M.; Haruta, M.; Tokuda, T.; Ohta, J. Wide field-of-view lensless fluorescence imaging device with hybrid bandpass emission filter. AIP Adv. 2019, 3, 35108. [Google Scholar] [CrossRef]

- Feshki, M.; De Koninck, Y.; Gosselin, B. Deep Learning Empowered Fresnel-based Lensless Fluorescence Microscopy. In Proceedings of the 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–4. [Google Scholar]

- Gurkan, U.A.; Moon, S.; Geckil, H.; Xu, F.; Wang, S.Q.; Lu, T.J.; Demirci, U. Miniaturized lensless imaging systems for cell and microorganism visualization in point-of-care testing. Biotechnol. J. 2011, 6, 138–149. [Google Scholar] [CrossRef]

- Chen, J.; Han, W.; Fu, L.; Lv, Z.; Chen, H.; Fang, W.; Hou, J.; Yu, H.; Huang, X.; Sun, L. A Miniaturized and Intelligent Lensless Holographic Imaging System With Auto-Focusing and Deep Learning-Based Object Detection for Label-Free Cell Classification. IEEE Photonics J. 2024, 16, 1–8. [Google Scholar] [CrossRef]

- Bian, Y.X.; Jiang, Y.N.; Wang, J.X.; Yang, S.M.; Deng, W.J.; Yang, X.F.; Shen, R.B.; Shen, H.; Kuang, C.F. Deep learning colorful ptychographic iterative engine lens-less diffraction microscopy. Opt. Laser. Eng. 2022, 150, 106843. [Google Scholar] [CrossRef]

- Su, T.; Xue, L.; Ozcan, A. High-throughput lensfree 3D tracking of human sperms reveals rare statistics of helical trajectories. Proc. Natl. Acad. Sci. USA 2012, 109, 16018–16022. [Google Scholar] [CrossRef]

- Su, T.; Seo, S.; Erlinger, A.; Ozcan, A. High-throughput lensfree imaging and characterization of a heterogeneous cell solution on a chip. Biotechnol. Bioeng. 2009, 102, 856–868. [Google Scholar] [CrossRef]

- Young, L.W.; Parham, C.; Zhong, Z.; Chapman, D.; Reaney, M.J.T. Non-destructive diffraction enhanced imaging of seeds. J. Exp. Bot. 2007, 58, 2513–2523. [Google Scholar] [CrossRef]

- Chen, J.; Huang, X.W.; Xu, X.F.; Wang, R.J.; Wei, M.Y.; Han, W.T.; Cao, J.F.; Xuan, W.P.; Ge, Y.K.; Wang, J.C.; et al. Microfluidic Particle Separation and Detection System Based on Standing Surface Acoustic Wave and Lensless Imaging. IEEE Trans. Bio-Med. Eng. 2022, 69, 2165–2175. [Google Scholar] [CrossRef] [PubMed]

- Xu, H.; Hu, H.Q.; Chen, S.Q.; Xu, Z.H.; Li, Q.; Jiang, T.T.; Chen, Y.T. Hyperspectral image reconstruction based on the fusion of diffracted rotation blurred and clear images. Opt. Laser. Eng. 2023, 160, 107274. [Google Scholar] [CrossRef]

- Jeon, D.S.; Baek, S.; Yi, S.; Fu, Q.; Dun, X.; Heidrich, W.; Kim, M.H. Compact snapshot hyperspectral imaging with diffracted rotation. ACM Trans. Graph. 2019, 38, 117. [Google Scholar] [CrossRef]

- Chen, X.G.; Hu, J.; Chen, W.L.; Yang, S.L.; Wang, Y.F.; Tang, Z.R.; Liu, S.Y. Multi-spectral snapshot diffraction-based overlay metrology. Opt. Lett. 2023, 48, 3383–3386. [Google Scholar] [CrossRef]

- Bai, J.; Niu, Z.; Bi, K.; Yang, X.; Huang, Y.; Fu, Y.; Wu, M.; Wang, L. Toward an Advanced Method for Full-Waveform Hyperspectral LiDAR Data Processing. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16. [Google Scholar] [CrossRef]

| Application Type | Target | Detection Technology | Main Achievements |
|---|---|---|---|
| Disease detection | Wheat powdery mildew [83] | HSI | Identified the infection degree of wheat powdery mildew using hyperspectral imaging; Sensitive bands at 560 nm, 680 nm, and 758 nm. |
| | Potato late blight [84] | UAV-based HSI | Achieved early detection of potato late blight by integrating UAV-mounted hyperspectral sensors with deep learning models. |
| | Tomato spotted wilt virus [85] | HSI | Detected tomato spotted wilt virus by combining hyperspectral imaging with machine learning; Detection accuracy reached 85%. |
| | Maize leaf blight [86] | HSI | Achieved high-precision detection of maize leaf blight by integrating hyperspectral imaging with biochemical and spectral features; Overall accuracy reached 86.12%. |
| | Sucrose diffusion in beef [87] | HSI | Enabled quantitative, spatially resolved visualization of sucrose diffusion dynamics. |
| | Leaf diseases in intercropping [88] | HSI | Distinguished multiple leaf diseases using hyperspectral features. |
| | Rice seeds [89] | HSI | Identified optimal wavebands that improved detection performance and reduced data redundancy. |
| Disease early warning | Potato late blight [90] | Proximal HSI | Achieved early recognition of potato late blight by combining deep learning models with proximal hyperspectral imaging; Test set accuracy reached 73.9%. |
| | Pear leaf anthracnose [91] | HSI | Applied hyperspectral imaging to enable early warning and visual diagnosis of Sclerotinia-infected tomato, improving detection accuracy and providing a basis for timely disease management. |
| | Citrus leaf diseases [92] | HSI | Summarized the application of multiple hyperspectral imaging technologies to citrus leaf disease detection; Emphasized their advantages for fast, non-invasive detection and outlined future development directions. |
| | Crop seed-borne pathogens [93] | HSI | Demonstrated that hyperspectral imaging combined with AI can accurately distinguish infected from healthy seeds, providing a novel approach to seed pathogen detection. |
| | Poplar anthracnose [94] | HSI | Developed a spectral model for early and accurate disease detection. |
| Crop remote sensing and monitoring | Apple black rot [95] | Multispectral imaging | Combined deep learning with multispectral imaging to enable early detection of apple black rot, improving orchard disease management efficiency. |
| | Wheat [62] | UAV-based HSI | Predicted yield by analyzing wheat spectral characteristics from UAV-based hyperspectral data with a deep neural network (DNN). |
| | Rice [80] | HSI | Combined hyperspectral imaging with advanced transfer learning methods to enable rapid detection of various rice upper leaf diseases. |
| | Soil [96] | HSI + Multispectral imaging | Integrating precise spectral features from hyperspectral imaging with dynamic temporal information from multispectral data significantly improved the accuracy of soil organic carbon estimation. |
| | Seeds [93] | HSI | Demonstrated that hyperspectral imaging combined with AI can accurately distinguish infected from healthy seeds, providing a novel approach to seed pathogen detection. |
| | Crop [97] | HSI | Improved classification accuracy and robustness by combining HSI with feature fusion strategies. |
| Other applications | Fish species [98] | HSI | Proposed a method combining hyperspectral imaging with an SVM model for rapid detection of microplastics in fish intestines, providing a new approach to assessing the impact of environmental pollution on aquatic organism health. |
| | Honeybees [99] | HSI | Combined hyperspectral imaging with multivariate statistical analysis to detect Varroa destructor mites on honeybee bodies. |
| | Wheat [62] | UAV-based HSI + Deep learning | Combined UAV-based hyperspectral data with a DNN to predict wheat pest damage and yield, analyzing the correlation between spectral features and pest damage. |
| | Coffee bean [100] | HSI | Applied hyperspectral imaging to detect damage caused by coffee berry borers, enabling high-precision, non-destructive pest identification in coffee beans. |
| | Beef, lamb, and chicken [101] | HSI + SVM and ANN-BPN | Vis-NIR HSI outperformed SWIR HSI, with higher accuracy (96% vs. 88%), higher Rp (~0.99 vs. 0.86), and lower RMSEP (4–9% vs. 15–24%). |
| | Soybean kernel damage [102] | HSI + RGB + DNN | Achieved 98.36% accuracy using spectrum–RGB fusion and an optimized convolutional architecture. |
| | Peaches [103] | HSI + Multivariate analysis | Identified multiple bruises at different stages, improving quality inspection. |
| | Fruits and vegetables [104] | HSI | Enabled rapid, non-destructive assessment of moisture, color, damage, and spoilage. |
| | Microorganisms [105] | HSI + Fluorescence | Combining hyperspectral imaging with fluorescence accurately predicted microbial growth trends in chicken breasts under various packaging conditions. |
| | Red jujube [106] | HSI + CARS-IRIV and SSA-SVM | Achieved high-accuracy varietal identification through effective feature selection and model optimization. |
| | Oolong tea [107] | HSI + BOSS-LightGBM | Enabled accurate and rapid classification of tea varieties by combining band selection with advanced machine learning. |
| | Egg [31] | HSI + SVM | Provided a nondestructive and reliable method for predicting egg freshness with improved regression accuracy. |
| | Soluble solid content in apples [108] | HSI + DNN | Achieved precise prediction of soluble solid content, enhancing nondestructive apple quality evaluation. |
| | Wolfberry [109] | HSI + Hybrid SVM and LS-SVM | Demonstrated effective differentiation of dried wolfberry quality grades through comparative classification approaches. |
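Several rows in the table above report regression figures of merit, for example Rp and RMSEP in the Vis-NIR versus SWIR comparison [101]. The snippet below is a minimal sketch, assuming the conventional chemometric definitions: Rp as the Pearson correlation coefficient between reference and predicted values on an independent prediction set, and RMSEP as the root-mean-square error on that same set. The function names `rmsep` and `rp` and the sample values are hypothetical and purely illustrative; they are not taken from the cited studies.

```python
import math
from typing import Sequence


def rmsep(y_ref: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error of prediction (hypothetical helper).

    Assumes y_ref and y_pred are paired values from an independent
    prediction set; the result is in the units of y_ref.
    """
    if len(y_ref) != len(y_pred) or len(y_ref) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - r) ** 2 for r, p in zip(y_ref, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def rp(y_ref: Sequence[float], y_pred: Sequence[float]) -> float:
    """Pearson correlation between reference and predicted values (Rp)."""
    n = len(y_ref)
    if n != len(y_pred) or n < 2:
        raise ValueError("need at least two paired values")
    mean_ref = sum(y_ref) / n
    mean_pred = sum(y_pred) / n
    # Covariance and variances, all without the 1/n factor (it cancels).
    cov = sum((r - mean_ref) * (p - mean_pred) for r, p in zip(y_ref, y_pred))
    var_ref = sum((r - mean_ref) ** 2 for r in y_ref)
    var_pred = sum((p - mean_pred) ** 2 for p in y_pred)
    if var_ref == 0 or var_pred == 0:
        raise ValueError("correlation is undefined for constant inputs")
    return cov / math.sqrt(var_ref * var_pred)


if __name__ == "__main__":
    # Illustrative numbers only, not data from any cited study.
    reference = [10.0, 20.0, 30.0, 40.0]
    predicted = [12.0, 18.0, 33.0, 39.0]
    print(f"RMSEP = {rmsep(reference, predicted):.3f}")
    print(f"Rp    = {rp(reference, predicted):.3f}")
```

This sketch returns RMSEP in the units of the reference measurements. The table reports RMSEP as a percentage, which suggests some normalization (for example, by the mean or range of the reference values), but the exact normalization used in [101] is not stated here.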

| Application Type | Target | Detection Technology | Main Achievements |
|---|---|---|---|
| Spore detection | Rice disease spores [141] | Microfluidic chip + Diffraction-based optical technology | Optimized the microfluidic chip structure to purify and detect low-concentration airborne spores, significantly improving detection sensitivity. |
| | Fungal spores [139] | Diffraction–polarization imaging + Machine learning | Developed a method based on diffraction–polarization imaging features of fungal spores; Integrated an SVM algorithm to identify multiple fungal spore types with high accuracy. |
| | Rice disease spores [133] | Portable microfluidic chip + Lens-free diffraction imaging | Proposed a portable device combining a microfluidic chip with lens-free diffraction imaging for rice disease spore capture and detection; Results correlated highly with microscopic identification. |
| | Rice blast spores [137] | Diffraction-based texture feature analysis | Developed a rapid rice blast spore detection and identification method based on diffraction texture analysis, effectively distinguishing rice blast spores from other spores. |
| | Airborne crop pathogen spores [142] | Diffraction-based optical identification sensor network | Built a diffraction-based optical identification sensor network for airborne crop pathogen spores, enabling source localization and monitoring and strengthening disease early warning. |
| | Pathogenic fungal spores [127] | Multispectral diffraction-based microfluidic sensing system | Developed a portable multispectral diffraction-based microfluidic sensing system for pathogenic fungal spore detection; Demonstrated high sensitivity and specificity. |
| Pathogenic spore counting | Spores (tomato gray mold, cucumber downy and powdery mildew) [139] | Diffraction–polarization fingerprint + SVM | Classified multiple spore types with ~95.85% accuracy using diffraction–polarization texture features. |
| | Greenhouse crop fungal spores [140] | CMOS sensor + Diffraction fingerprint processing | Compact, high-throughput detection with ~92.7% SVM accuracy. |
| | Rice disease spores in microfluidic chip [136] | Microfluidic capture + Lensless diffraction | Portable system enabling automatic spore detection via diffraction fingerprint imaging. |
| | Aspergillus niger sporulation [143] | Magnetic nanoparticle exposure + Spore count analysis | Demonstrated that larger magnetic nanoparticles significantly inhibit spore production. |
| Other applications | Microorganisms in sediment [144] | Lens-free imaging | Captured images of microorganisms in sediment with lens-free imaging; Analyzed morphological and physiological characteristics to identify microorganism species. |
| | Virus-infected cells [145] | Lens-free diffraction imaging | Analyzed diffraction pattern signatures of virus-infected cells; Achieved high-throughput visual monitoring with a linear correlation of 98.9%. |
| | Virus-infected cells [146] | Lens-free imaging | Proposed a lens-free diffraction pattern analysis method for detecting virus-infected cells, enabling efficient, flexible identification and differentiation of various viruses. |
| | Cyst nematode eggs in soil samples [147] | Lensless or deep learning-based counting | Greatly improved egg purity and automated counting efficiency with minimal manual error. |
| | Rice blast fungal spores [137] | Lensless diffraction imaging + CNN | Identified fungal spores within a few seconds with 97.18% accuracy. |
| | Fluorescently labeled particles or cells [148] | Lensless fluorescence imaging + Hybrid bandpass filters | Achieved wide field-of-view imaging with a high signal-to-noise ratio using a compact, low-cost system. |
| | Cells and microorganisms [149] | Lensless imaging + Deep learning analysis | Enabled automated, high-resolution identification of cell morphology in a portable format. |
| | Cells and microorganisms [150] | Lensless holographic imaging | Presented a compact lensless holographic imaging system with auto-focusing and deep learning-based detection for label-free cell classification. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, L.; Wu, Y.; Yang, N.; Sun, Z. Advances in Hyperspectral and Diffraction Imaging for Agricultural Applications. Agriculture 2025, 15, 1775. https://doi.org/10.3390/agriculture15161775

Chen L, Wu Y, Yang N, Sun Z. Advances in Hyperspectral and Diffraction Imaging for Agricultural Applications. Agriculture. 2025; 15(16):1775. https://doi.org/10.3390/agriculture15161775

Chicago/Turabian Style: Chen, Li, Yu Wu, Ning Yang, and Zongbao Sun. 2025. "Advances in Hyperspectral and Diffraction Imaging for Agricultural Applications" Agriculture 15, no. 16: 1775. https://doi.org/10.3390/agriculture15161775

APA Style: Chen, L., Wu, Y., Yang, N., & Sun, Z. (2025). Advances in Hyperspectral and Diffraction Imaging for Agricultural Applications. Agriculture, 15(16), 1775. https://doi.org/10.3390/agriculture15161775