Dynamic Object Detection and Non-Contact Localization in Lightweight Cattle Farms Based on Binocular Vision and Improved YOLOv8s

Abstract

1. Introduction

2. Related Work

- A multimodal data fusion scheme based on the ZED2i stereo camera is proposed. It combines GPS and IMU data for high-precision target localization, improving the model’s applicability in complex farm environments.

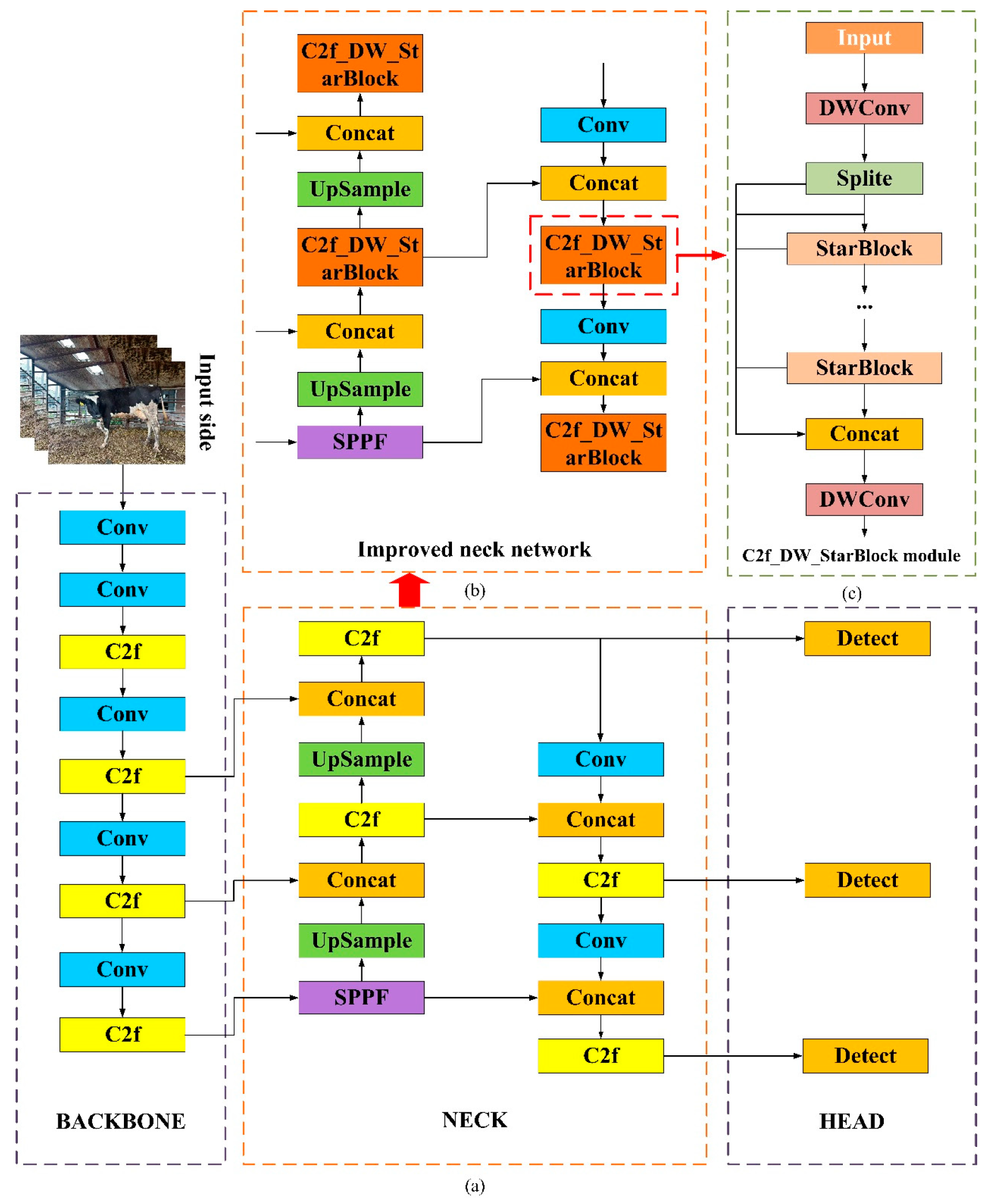

- The C2f_DW_StarBlock module is designed and optimized to replace the original module in YOLOv8s, achieving a 36.03% reduction in parameters, a 33.45% decrease in computational complexity, and a 38.67% reduction in model size while maintaining high detection accuracy.

- An efficient target localization module is proposed, which combines binocular vision depth estimation with sensor data fusion to localize dynamic targets with high precision.

- The method has been successfully deployed on the NVIDIA Jetson Orin NX platform, and its real-time application feasibility in resource-constrained environments has been verified through field testing, demonstrating its potential for smart farming applications.
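The binocular depth estimation named in the contributions rests on stereo triangulation. The following is a minimal sketch, assuming a rectified pinhole stereo pair; the focal length, baseline, and disparity values are illustrative placeholders and not the paper's calibration results.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z (metres) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the camera")
    return focal_px * baseline_m / disparity_px


def depth_error_per_pixel(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Approximate depth change caused by a 1 px disparity error: dZ ~= Z**2 / (f * B)."""
    return depth_m ** 2 / (focal_px * baseline_m)


# Hypothetical values: f = 1000 px, B = 0.12 m (placeholders, not measured parameters)
FOCAL_PX = 1000.0
BASELINE_M = 0.12

for disparity in (24.0, 12.0, 6.0):
    z = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity)
    dz = depth_error_per_pixel(FOCAL_PX, BASELINE_M, z)
    print(f"disparity {disparity:4.1f} px -> depth {z:4.1f} m, about {dz:.2f} m per px of disparity error")
```

Under these assumptions the sensitivity to disparity error grows with the square of the distance, which is consistent with the larger errors reported for the 15–20 m band in the ranging table.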

3. Materials and Methods

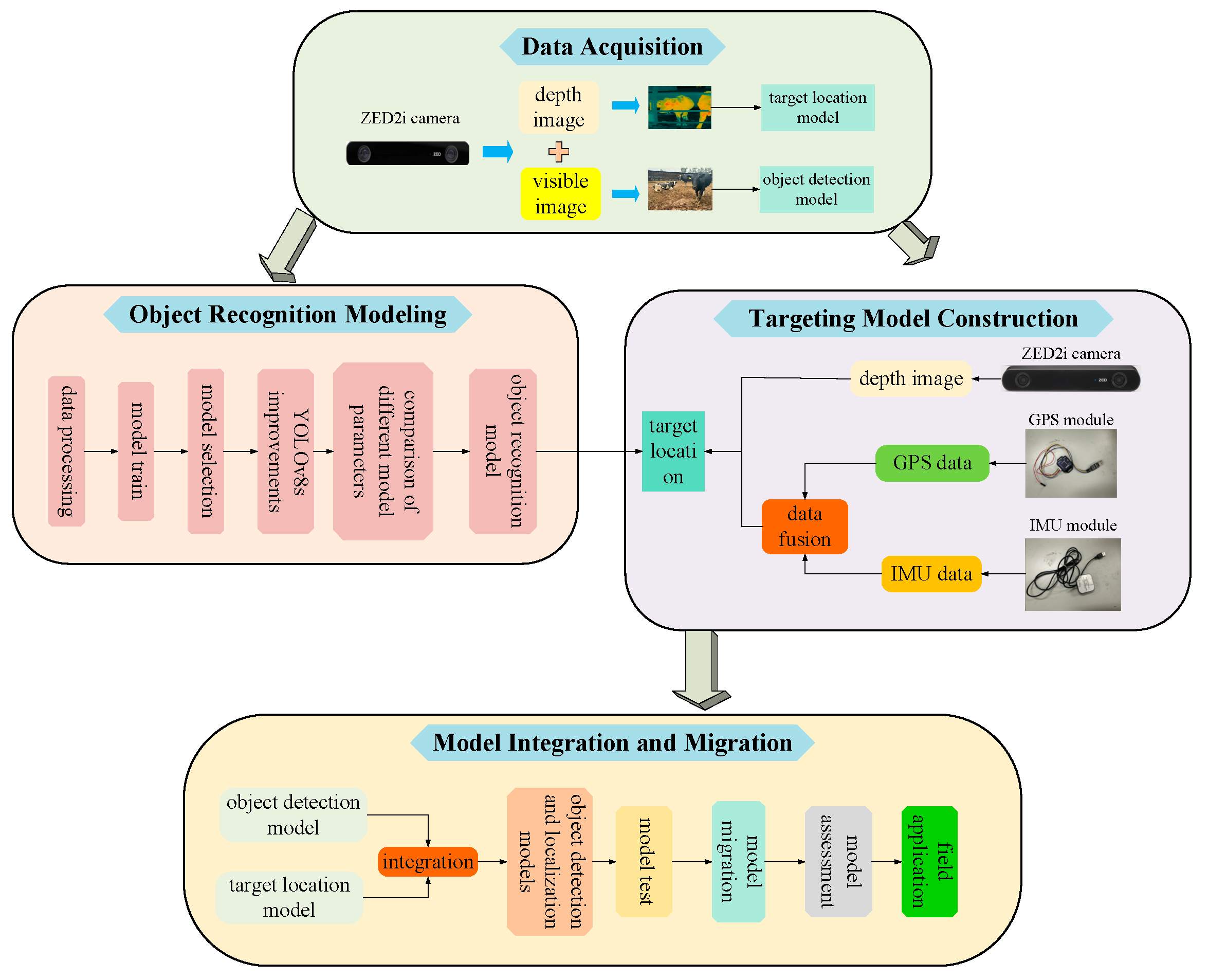

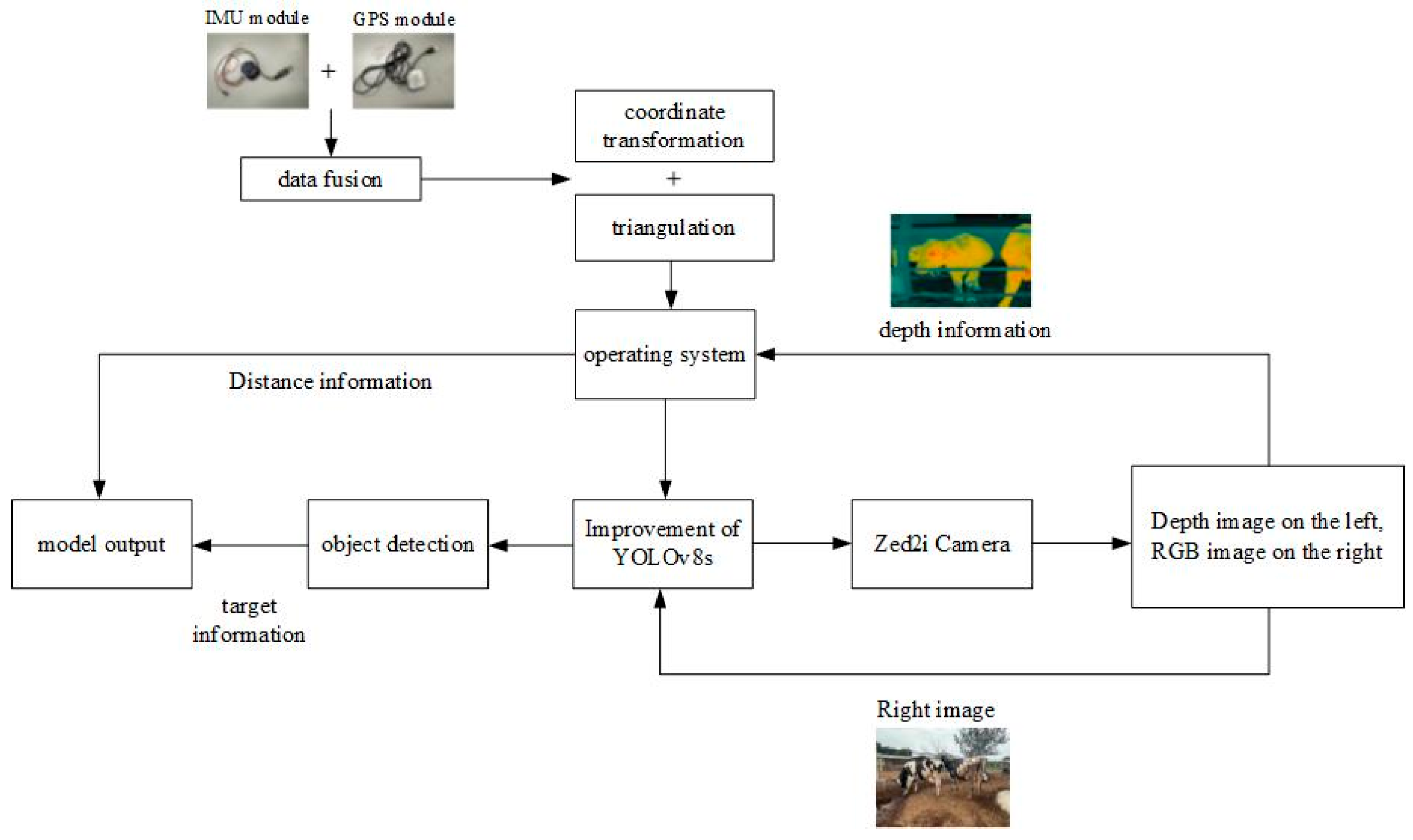

3.1. Overall Framework of the Study

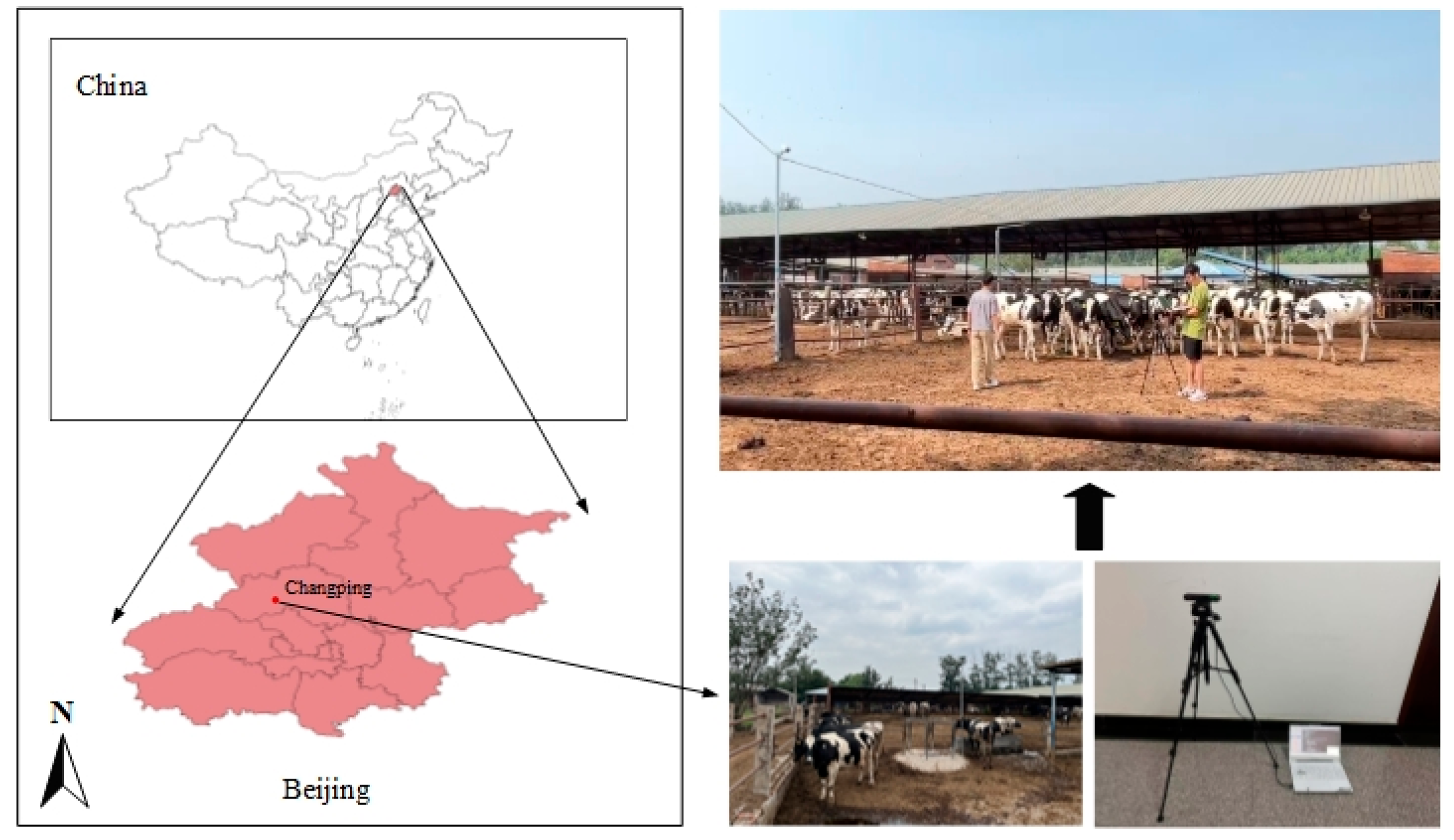

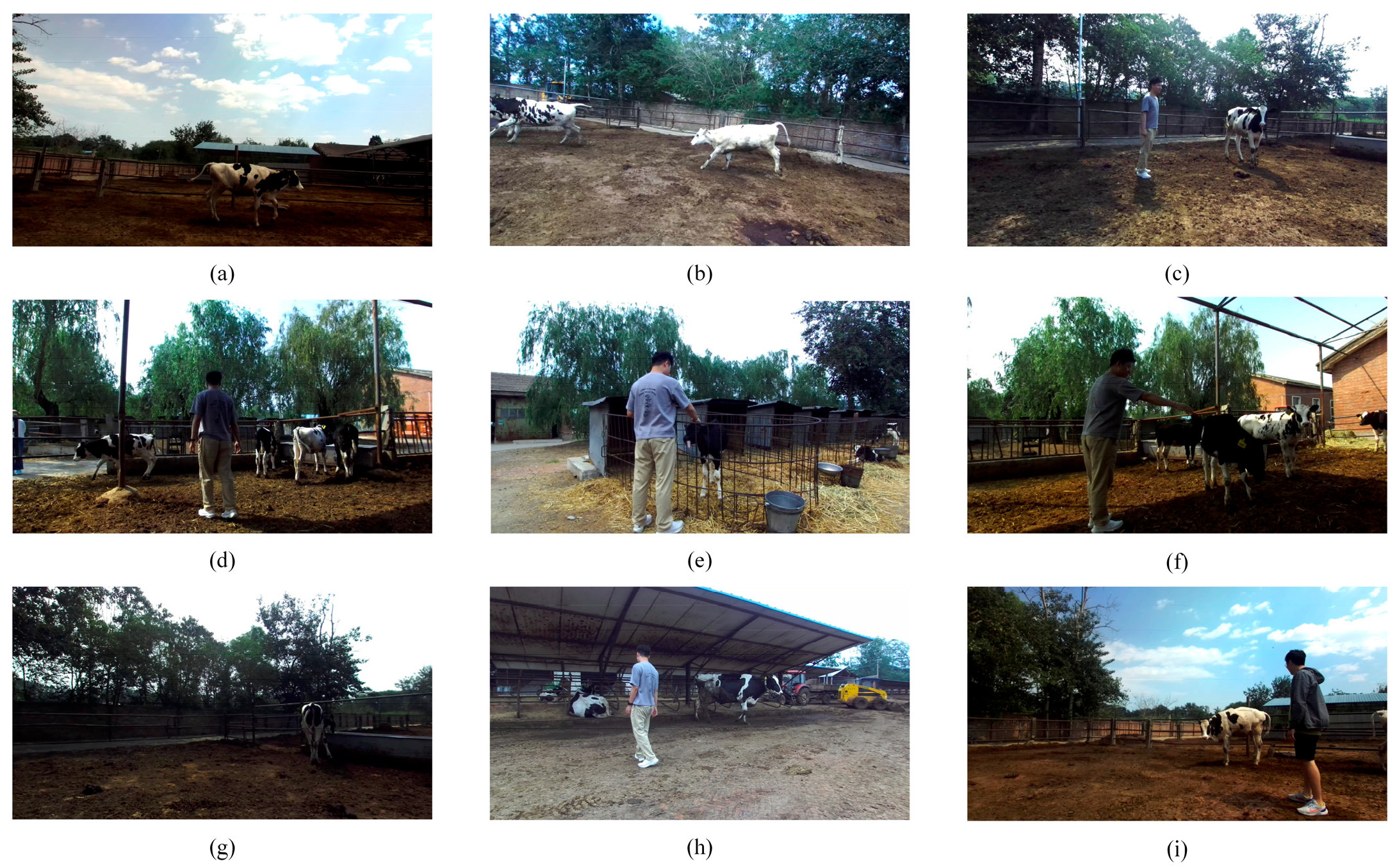

3.2. Data Acquisition

3.3. Object Detection Model Construction Based on YOLOv8

3.3.1. Selection of the Preferred YOLOv8 Series Model

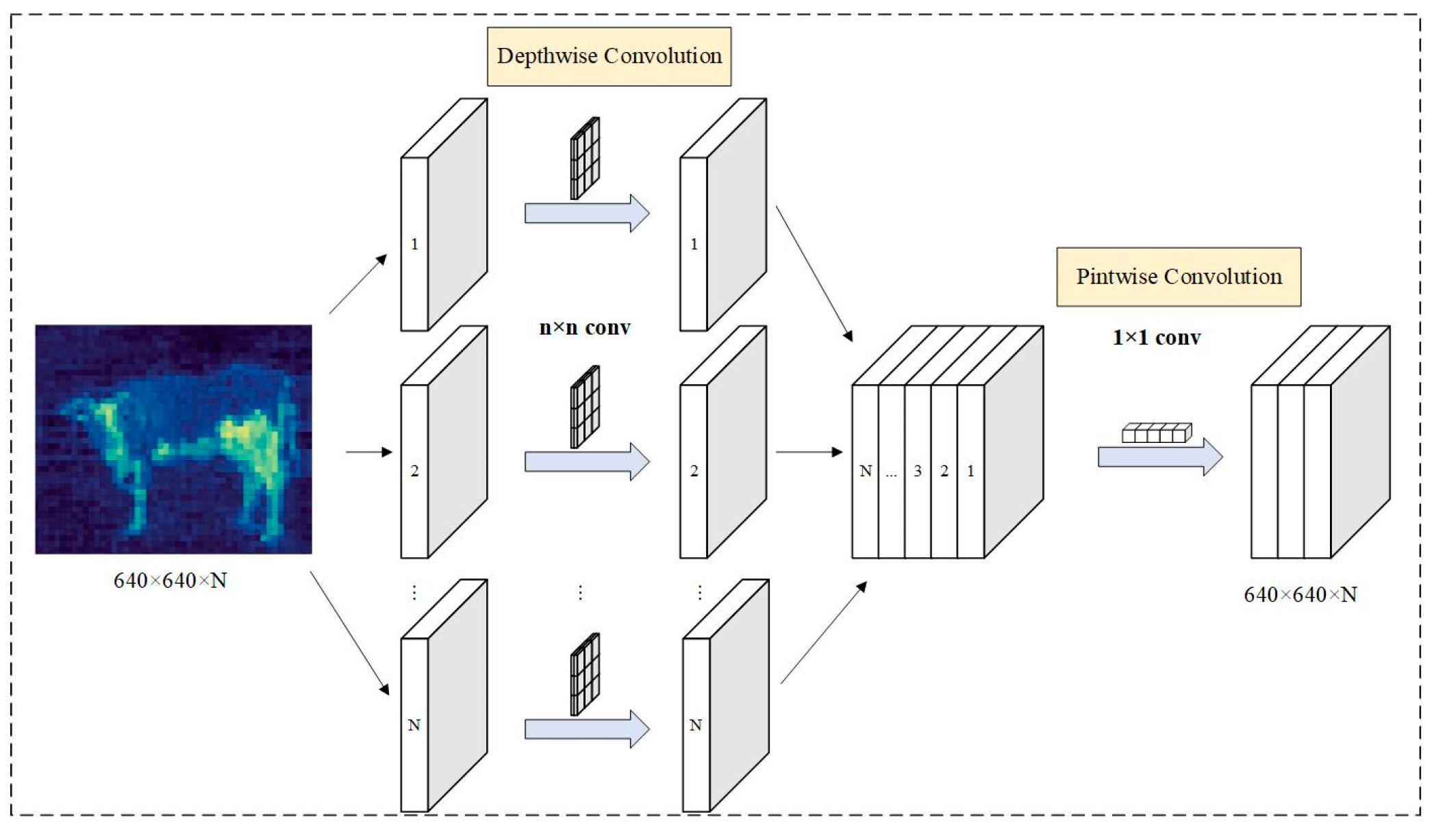

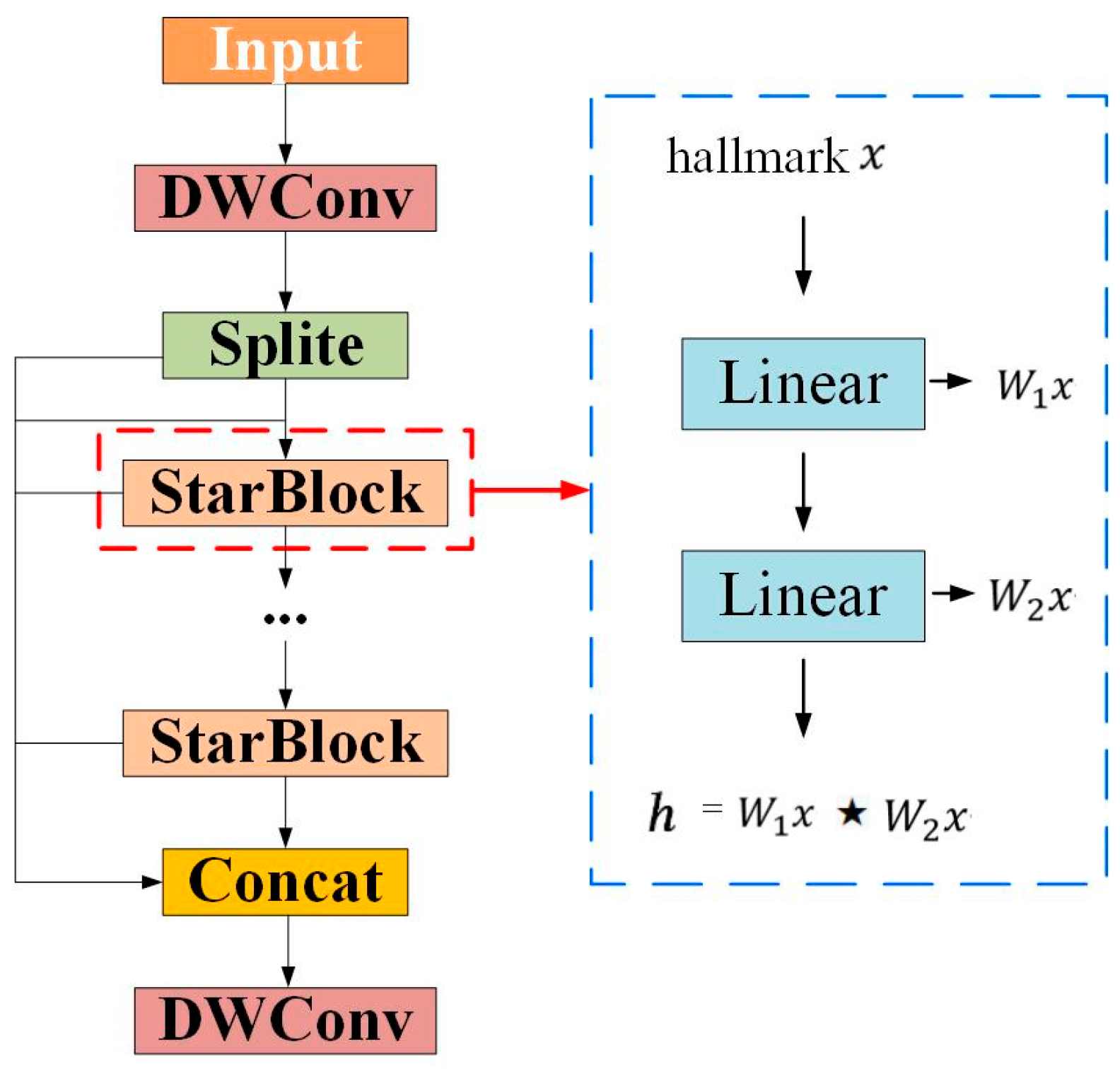

3.3.2. YOLOv8s Model Lightweight Improvements

3.4. Target Localization Model Construction for Multimodal Data Fusion

3.4.1. Precise Calibration of Binocular Camera Parameters

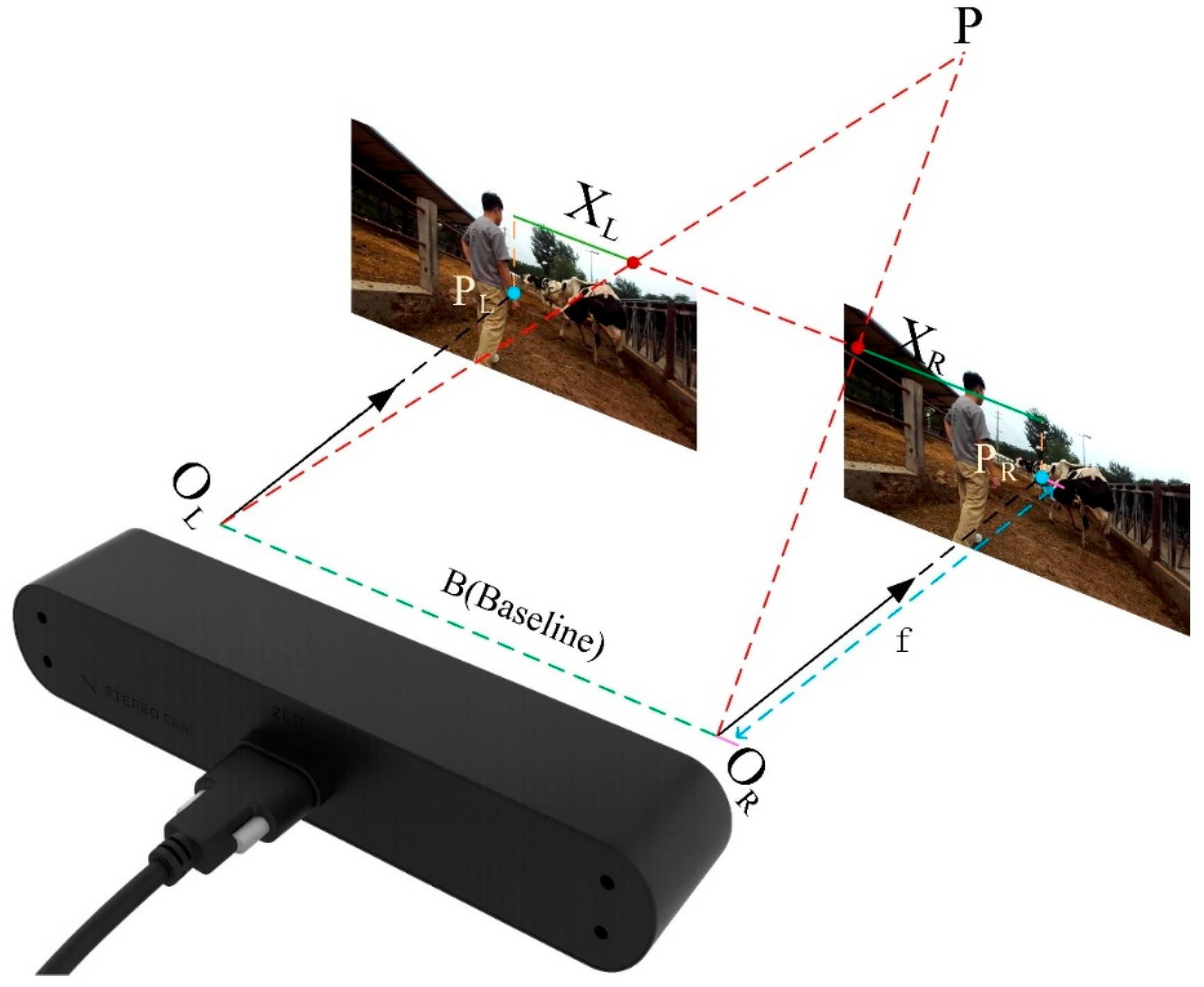

3.4.2. Binocular Vision Ranging Algorithm Design

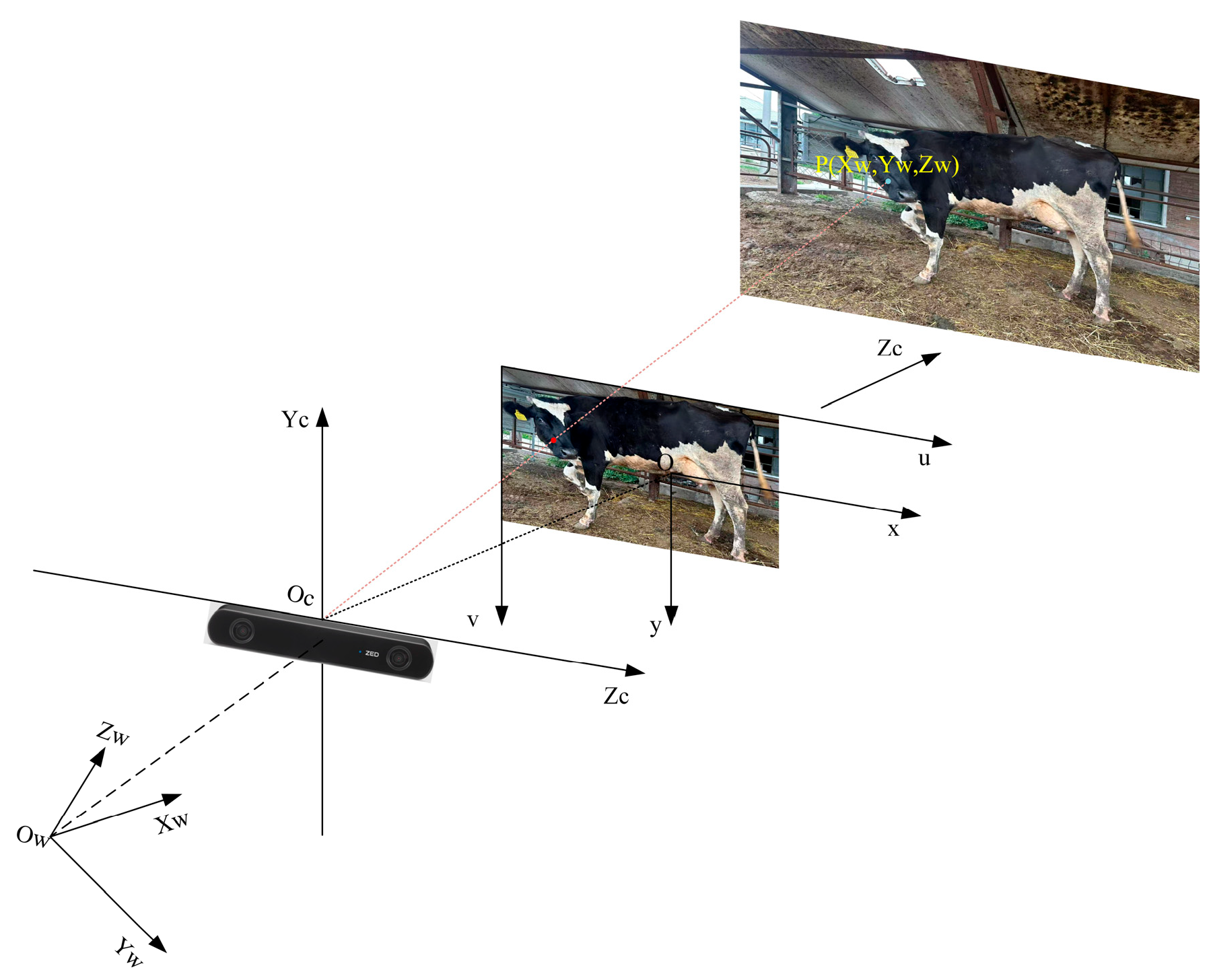

3.4.3. Binocular Visual Localization Coordinate System Conversion

3.4.4. Multimodal Localization Data Fusion

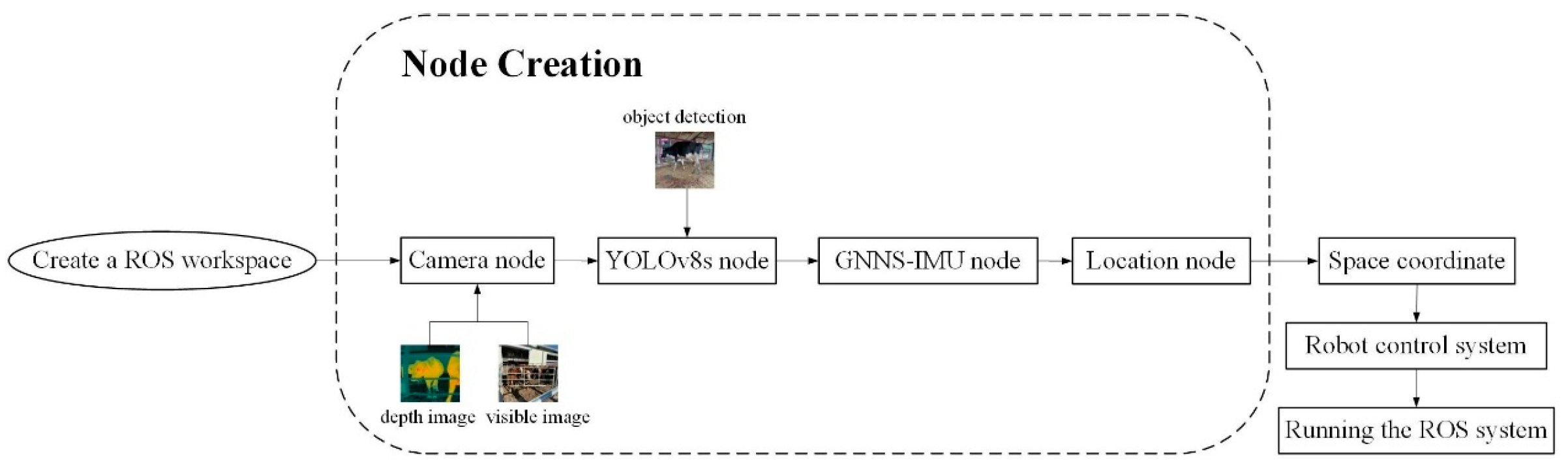

3.5. Cross-Platform Migration and Deployment of Intelligent Equipment

- (1) Perception node (camera): processes the video stream collected by the depth camera, completes the temporal and spatial synchronization and calibration of the visible and depth images, and ensures that downstream modules receive a consistent data source. This node is the input interface for visual information and supplies the raw data for target recognition and depth estimation.

- (2) Detection node (YOLOv8s): integrates the lightweight improved object detection model proposed in this paper, performs fast target recognition on the input image frames, and outputs the target category, location, and confidence level. This node supports high-frequency real-time operation and provides accurate 2D observations for the subsequent localization module.

- (3) Fusion node (GNSS-IMU): integrates the geographic position provided by the Global Navigation Satellite System (GNSS) and the attitude output by the Inertial Measurement Unit (IMU) to construct a robust position and attitude fusion result. The fusion strategy is based on an attitude correction and filtering model, which improves the stability of the positioning system in dynamic environments and provides a reliable reference for target geo-mapping.

- (4) Localization node: performs the spatial transformation of image depth information and 2D detection results. It reconstructs the 3D position of the target in the camera coordinate system by integrating visual depth, target image coordinates, and position information, and then outputs the absolute geographic position of the target through coordinate transformation and geographic alignment. This node is the core of multimodal information integration.
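The chain handled by the localization node (pixel plus depth, then camera coordinates, then geographic position) can be sketched as follows. This is an illustrative approximation and not the paper's implementation: it assumes a pinhole camera, a level camera whose heading comes from the fusion node, and a small-offset spherical-Earth conversion. All function names and the intrinsic values are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS-84 semi-major axis, used here as a spherical radius


def pixel_to_camera(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth Z into camera coordinates.

    Camera frame: x to the right, y down, z forward along the optical axis.
    depth_m is the distance along z, not the straight-line range.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


def camera_to_east_north(x, z, heading_deg):
    """Rotate the horizontal offset into east/north components.

    Assumes a level camera; heading_deg is the bearing of the optical axis,
    measured clockwise from true north.
    """
    h = math.radians(heading_deg)
    east = x * math.cos(h) + z * math.sin(h)
    north = -x * math.sin(h) + z * math.cos(h)
    return east, north


def offset_to_lat_lon(lat_deg, lon_deg, east_m, north_m):
    """Shift a latitude/longitude by a small local offset (valid for tens of metres)."""
    lat = lat_deg + math.degrees(north_m / EARTH_RADIUS_M)
    lon = lon_deg + math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg)))
    )
    return lat, lon


def locate_target(u, v, depth_m, intrinsics, camera_lat, camera_lon, heading_deg):
    """Combine a detection centre, its depth, and the camera pose into a lat/lon."""
    x, _y, z = pixel_to_camera(u, v, depth_m, *intrinsics)
    east, north = camera_to_east_north(x, z, heading_deg)
    return offset_to_lat_lon(camera_lat, camera_lon, east, north)


# Hypothetical intrinsics (fx, fy, cx, cy) and camera pose, for illustration only
intrinsics = (1000.0, 1000.0, 960.0, 540.0)
print(locate_target(960.0, 540.0, 10.0, intrinsics, 40.0, 116.0, heading_deg=90.0))
```

A full implementation would also apply the IMU pitch and roll and the camera-to-antenna offset; those are omitted here to keep the geometry readable.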

3.6. Experimental Configuration and Model Evaluation Metrics

3.6.1. Experimental Configuration

3.6.2. Evaluation Indicators

4. Results

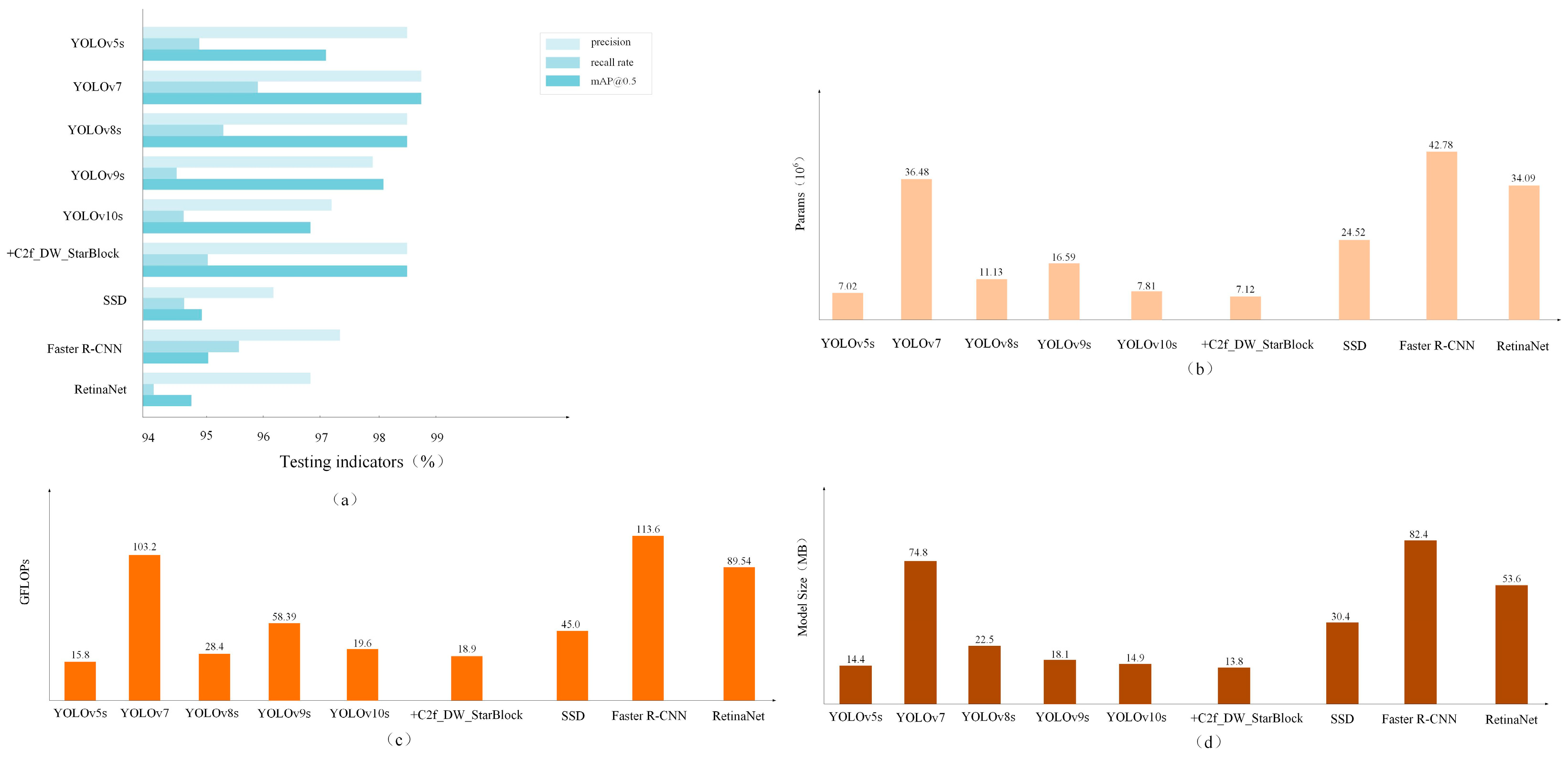

4.1. Comparison and Field Validation of Object Detection Models

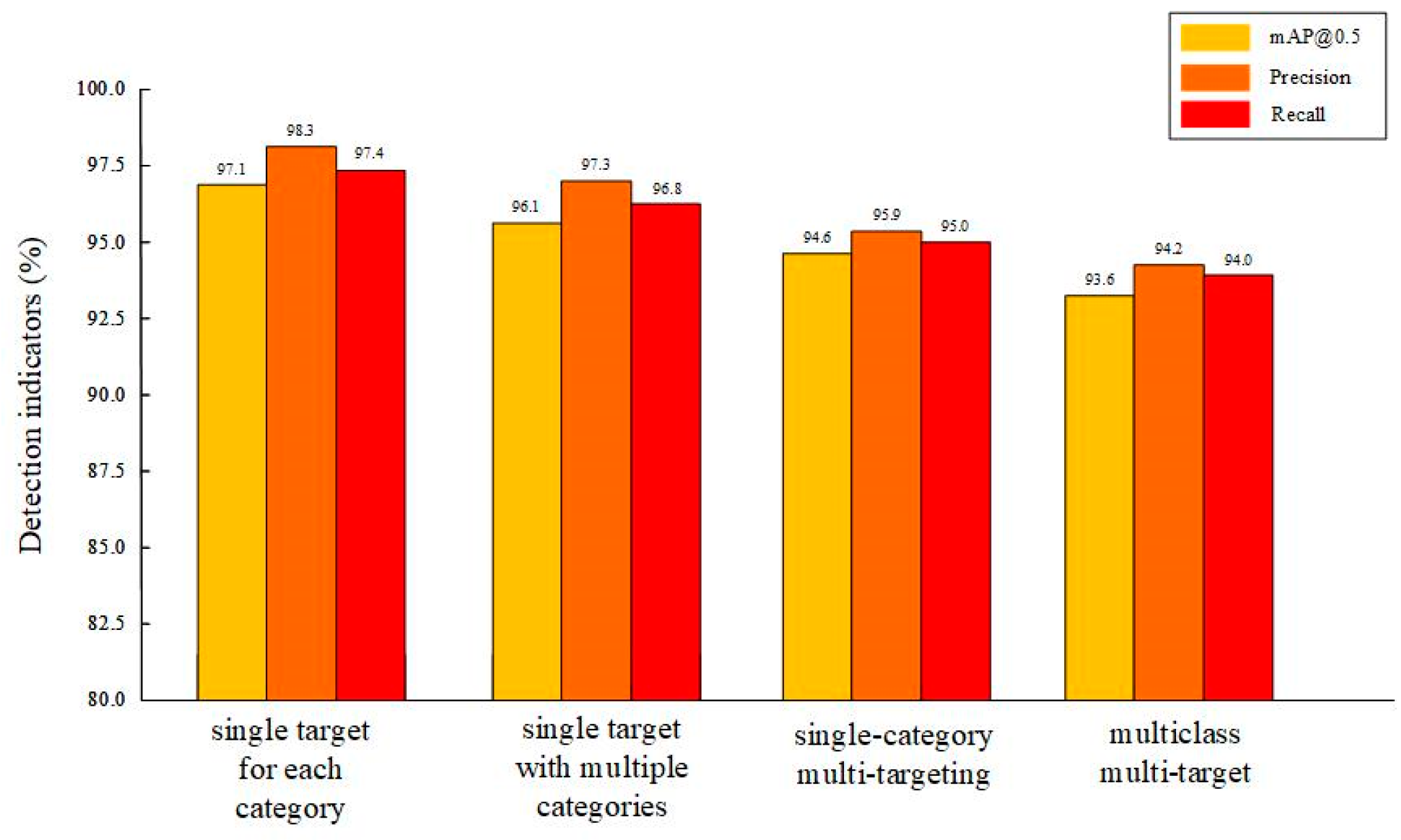

4.2. Ablation Experiment

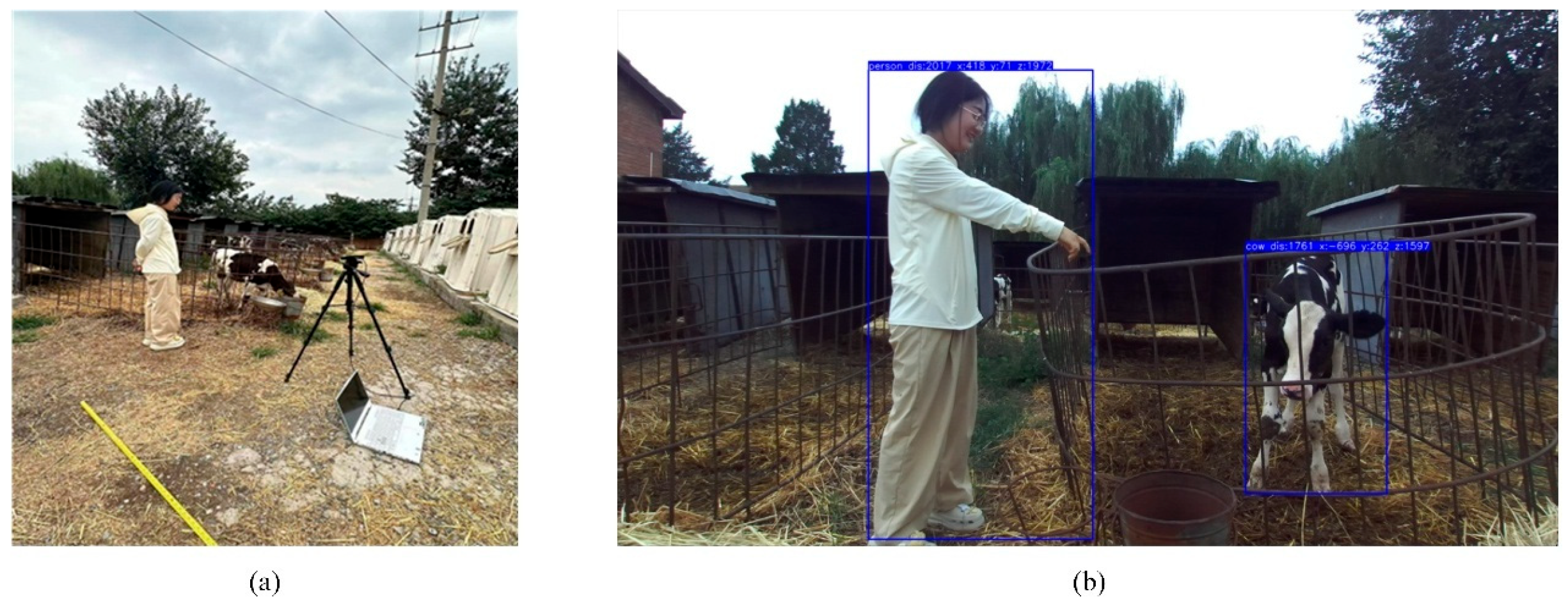

4.3. Binocular Stereo Vision Ranging Accuracy Analysis

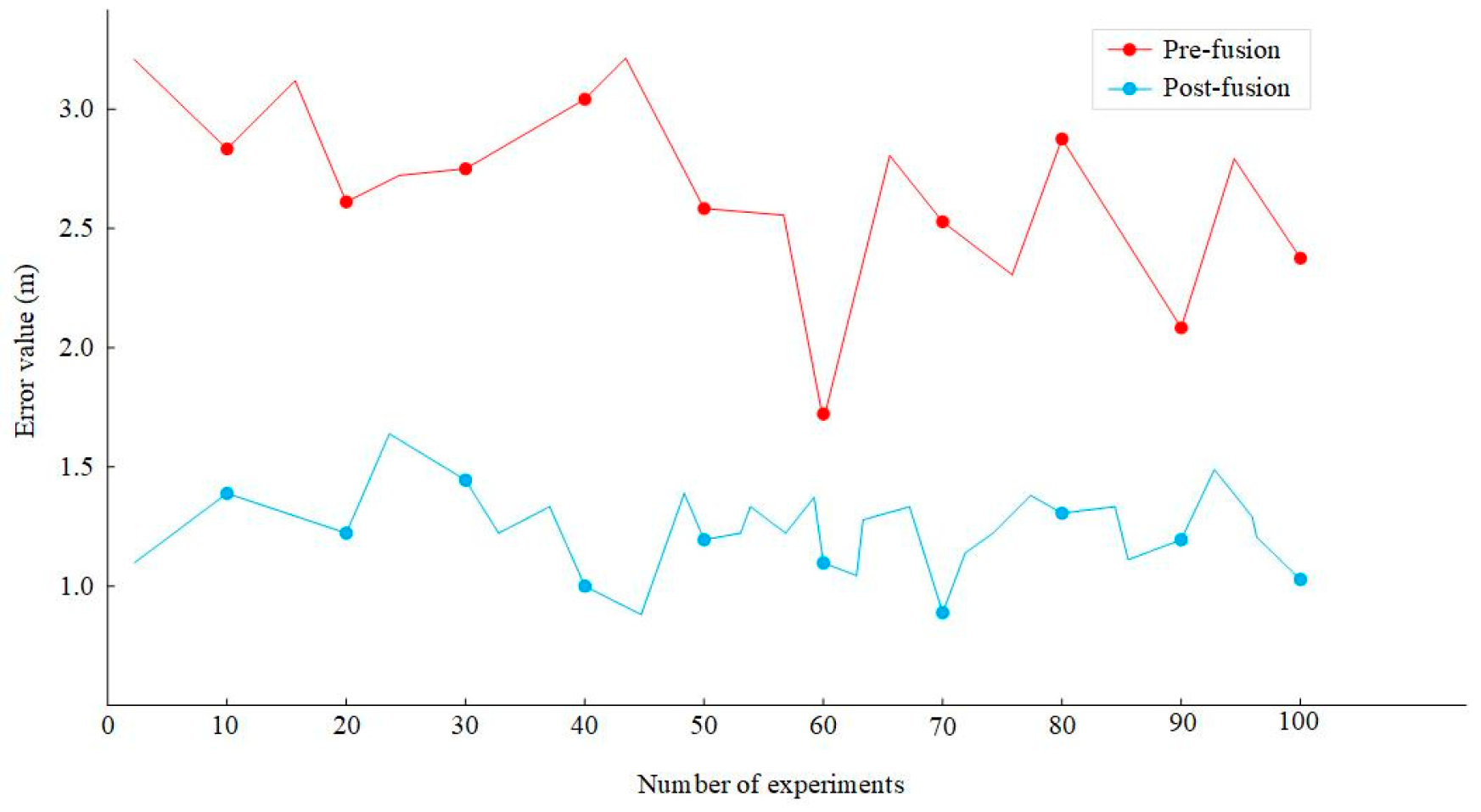

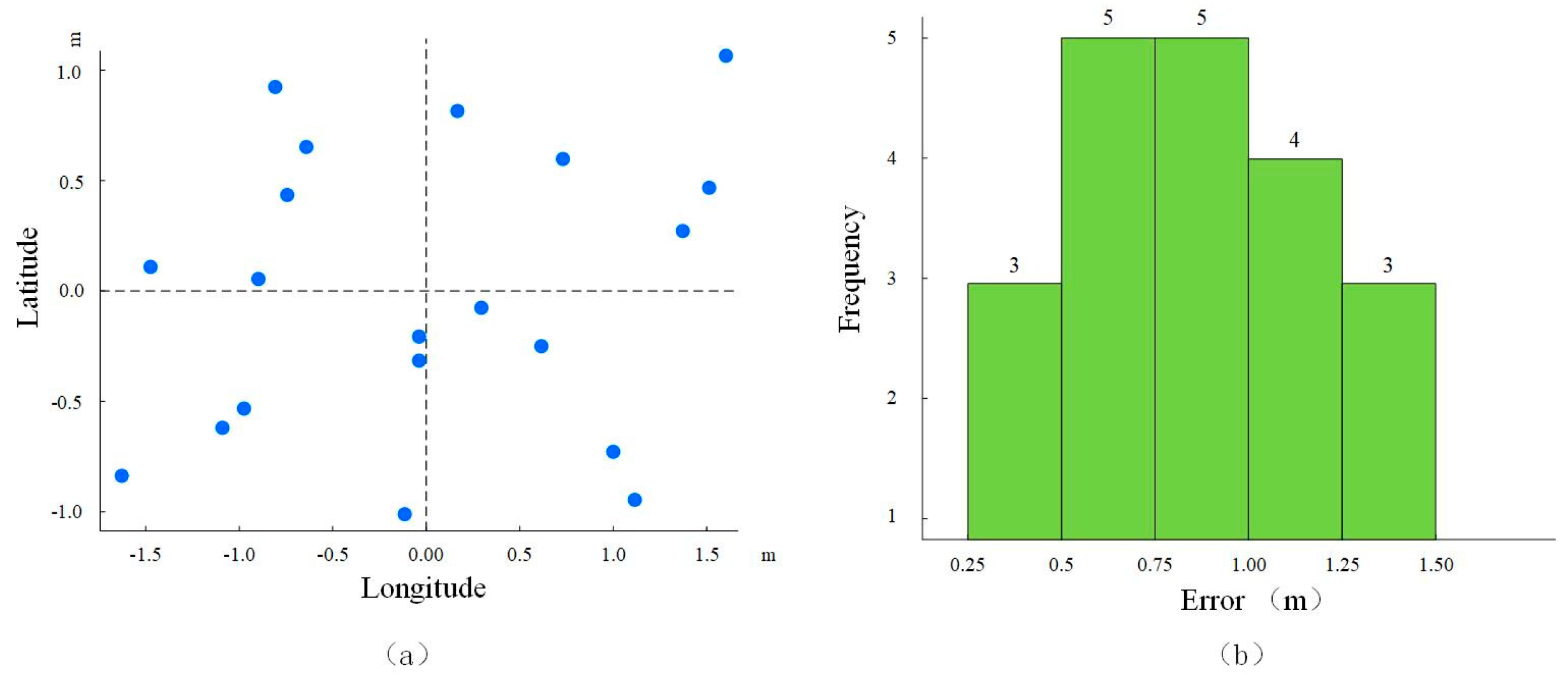

4.4. Target Localization Model Accuracy Analysis

4.5. Analysis of Model Porting Tests on Smart Equipment

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Precision (%) | Recall (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| YOLOv8n | 97.7 | 97.1 | 98.9 | 93.3 |
| YOLOv8s | 97.9 | 97.4 | 99.0 | 94.7 |
| YOLOv8m | 98.0 | 97.3 | 98.9 | 94.9 |
| YOLOv8l | 98.1 | 97.8 | 99.0 | 95.0 |
| YOLOv8x | 98.2 | 97.3 | 99.1 | 95.0 |

| Model | Precision (%) | Recall (%) | mAP@0.5 (%) | Params (M) | GFLOPs | Model Size (MB) |
|---|---|---|---|---|---|---|
| YOLOv5s | 98.5 | 94.9 | 97.1 | 7.02 | 15.8 | 14.4 |
| YOLOv7 | 98.7 | 95.9 | 98.7 | 36.48 | 103.2 | 74.8 |
| YOLOv8s | 98.5 | 95.3 | 98.5 | 11.13 | 28.4 | 22.5 |
| YOLOv9s | 97.9 | 94.5 | 98.1 | 16.59 | 58.39 | 18.1 |
| YOLOv10s | 97.3 | 94.7 | 96.8 | 7.81 | 19.6 | 14.9 |
| YOLOv8s + C2f_DW_StarBlock | 98.5 | 95.1 | 98.5 | 7.12 | 18.9 | 13.8 |
| SSD | 96.2 | 94.5 | 94.8 | 24.52 | 45.0 | 30.4 |
| Faster R-CNN | 97.3 | 95.4 | 95.1 | 42.78 | 113.6 | 82.4 |
| RetinaNet | 96.8 | 94.1 | 94.7 | 34.09 | 89.54 | 53.6 |

| Scheme Name | DWConv Used | StarBlock Used | Precision (%) | mAP@0.5 (%) | Params (M) | GFLOPs | Model Size (MB) |
|---|---|---|---|---|---|---|---|
| Original YOLOv8s | × | × | 98.5 | 98.5 | 11.13 | 28.4 | 22.5 |
| YOLOv8s + DWConv | √ | × | 98.4 | 98.1 | 9.5 | 22.5 | 19.6 |
| YOLOv8s + StarBlock | × | √ | 98.4 | 97.9 | 8.9 | 20.5 | 18.2 |
| YOLOv8s + C2f_DW_StarBlock | √ | √ | 98.5 | 98.5 | 7.12 | 18.9 | 13.8 |
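The reduction percentages quoted in the contributions can be reproduced from the first and last rows of the ablation table. The short check below is a sketch; the helper name `reduction_percent` is introduced here for illustration only.

```python
def reduction_percent(baseline: float, improved: float) -> float:
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return (baseline - improved) / baseline * 100.0


# Values copied from the ablation table: original YOLOv8s vs. YOLOv8s + C2f_DW_StarBlock
baseline = {"Params (M)": 11.13, "GFLOPs": 28.4, "Model Size (MB)": 22.5}
improved = {"Params (M)": 7.12, "GFLOPs": 18.9, "Model Size (MB)": 13.8}

for name in baseline:
    print(f"{name}: {reduction_percent(baseline[name], improved[name]):.2f}% reduction")
# Prints 36.03%, 33.45%, and 38.67% for the three rows
```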

| Spot | Measured Distance (mm) | Actual Distance (mm) | Absolute Error (mm) | Relative Error (%) |
|---|---|---|---|---|
| Position 1 (0.5–5 m) | 753 | 744 | 9 | 0.61 |
| | 1467 | 1476 | 9 | 1.21 |
| | 2139 | 2134 | 5 | 0.23 |
| | 3775 | 3822 | 47 | 1.23 |
| | 4171 | 4158 | 13 | 0.31 |
| Position 2 (5–10 m) | 5534 | 5447 | 87 | 1.60 |
| | 6386 | 6359 | 27 | 0.42 |
| | 7653 | 7755 | 102 | 1.32 |
| | 8635 | 8642 | 7 | 0.08 |
| | 9735 | 9829 | 94 | 0.96 |
| Position 3 (10–15 m) | 10,441 | 10,529 | 88 | 0.84 |
| | 11,743 | 11,799 | 56 | 0.47 |
| | 12,675 | 12,536 | 139 | 1.11 |
| | 13,317 | 13,291 | 26 | 0.20 |
| | 14,344 | 14,543 | 199 | 1.37 |
| Position 4 (15–20 m) | 16,164 | 15,481 | 683 | 4.41 |
| | 16,378 | 16,176 | 202 | 1.25 |
| | 18,099 | 17,472 | 627 | 3.46 |
| | 18,870 | 18,215 | 655 | 3.60 |
| | 20,561 | 19,787 | 774 | 3.67 |
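To summarize the ranging table per distance band, the measured and actual distances can be aggregated as sketched below. The relative error is assumed here to be the absolute error divided by the actual distance; the published column may follow a different rounding or convention, so recomputed values need not match it digit for digit.

```python
from statistics import mean

# (measured_mm, actual_mm) pairs copied from the ranging table, grouped by distance band
bands = {
    "0.5-5 m": [(753, 744), (1467, 1476), (2139, 2134), (3775, 3822), (4171, 4158)],
    "5-10 m": [(5534, 5447), (6386, 6359), (7653, 7755), (8635, 8642), (9735, 9829)],
    "10-15 m": [(10441, 10529), (11743, 11799), (12675, 12536), (13317, 13291), (14344, 14543)],
    "15-20 m": [(16164, 15481), (16378, 16176), (18099, 17472), (18870, 18215), (20561, 19787)],
}


def relative_error_percent(measured_mm: float, actual_mm: float) -> float:
    """Absolute error as a percentage of the actual distance (assumed definition)."""
    return abs(measured_mm - actual_mm) / actual_mm * 100.0


def band_summary(pairs):
    """Return (mean absolute error in mm, mean relative error in %) for one band."""
    abs_err = mean(abs(m - a) for m, a in pairs)
    rel_err = mean(relative_error_percent(m, a) for m, a in pairs)
    return abs_err, rel_err


for band, pairs in bands.items():
    abs_err, rel_err = band_summary(pairs)
    print(f"{band}: mean abs error {abs_err:.1f} mm, mean relative error {rel_err:.2f}%")
```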


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, S.; Cao, S.; Wei, P.; Sun, W.; Kong, F. Dynamic Object Detection and Non-Contact Localization in Lightweight Cattle Farms Based on Binocular Vision and Improved YOLOv8s. Agriculture 2025, 15, 1766. https://doi.org/10.3390/agriculture15161766
