Abstract

Precise wheat head detection is essential for plant counting and yield estimation in precision agriculture. To address the difficulties posed by densely packed wheat heads with diverse scales and complex occlusions under real field conditions, this study introduces YOLO v11n-GRN, an improved wheat head detection model built on the lightweight YOLO v11n framework. The model incorporates three key improvements. First, a Global Edge Information Transfer (GEIT) module architecture incorporating a Multi-Scale Edge Information Generator (MSEIG) enhances the perception of wheat head contours by modeling edge features and fusing them with deep semantic features. Second, a C3k2_RFCAConv module improves spatial awareness and multi-scale feature representation by integrating receptive field attention and a coordinate attention mechanism. Third, the Normalized Gaussian Wasserstein Distance (NWD) is adopted as the localization loss function to improve regression stability for distant small targets. Experiments were conducted on a self-built multi-temporal wheat field image dataset and on the public GWHD2021 dataset. While maintaining a lightweight design (3.6 M parameters, 10.3 GFLOPs), YOLO v11n-GRN achieved a precision, recall, and mAP@0.5 of 92.5%, 91.1%, and 95.7%, respectively, on the self-built dataset, and 91.6%, 89.7%, and 94.4%, respectively, on GWHD2021. These results demonstrate that the improvements effectively enhance the model's overall detection performance for wheat heads in complex backgrounds. This study thus offers an effective technical approach for wheat head detection and yield estimation under challenging field conditions, with promising practical implications.

1. Introduction

Global wheat production in 2023 was estimated at around 790 million tons according to the Food and Agriculture Organization (FAO) of the United Nations [1]. Wheat is the most extensively cultivated cereal crop worldwide, and its yield directly affects national food security and farmers' income. In practical agricultural production, accurate and rapid acquisition of field-level wheat yield information is a prerequisite for reliable yield forecasting and agricultural decision-making. The wheat head count is an explicit and quantifiable indicator for yield estimation, so research on automated head counting and yield prediction both supports precision field management and lays technical foundations for intelligent agriculture. Traditional field surveys for counting wheat heads and estimating yield suffer from high labor costs, low efficiency, and large measurement errors, making them inadequate for modern smart farming. Automated detection of wheat heads during the harvest season, by contrast, enables efficient and precise plant and head counting for yield estimation; comparing these counts with the actual sowing density also allows seedling survival rates and pest and disease impacts to be evaluated, which in turn guides targeted pest control and supports stable, high-yield production. Moreover, widespread adoption of this technology promotes agricultural informatization and intelligentization, makes wheat cultivation management more precise, and has significant theoretical and practical value for safeguarding grain production and optimizing planting strategies.

The rapid advancement of deep learning in recent years has led to its widespread adoption in agriculture, where it plays a crucial role in driving intelligent agricultural transformation. Unlike conventional machine learning approaches that rely on manual feature engineering, deep learning models automatically extract spatial and semantic features from images using multilayer neural networks, yielding stronger feature representation and generalization. To overcome the accuracy degradation caused by dense wheat head distribution, occlusion, and complex backgrounds in natural field settings, Li et al. [2] integrated the CBAM attention mechanism [3] into the YOLO v5 architecture. They also introduced a 4× downsampling branch to improve small-target feature extraction and adopted Mosaic-8 data augmentation with GIoU [4] regression optimization. The enhanced model achieved 94.3% mAP@0.5 and 98.0% recall on the GWHD dataset [5], surpassing existing models and confirming its practical effectiveness in wheat head detection and counting. Bhagat et al. [6] proposed a lightweight wheat ear detection network named WheatNet-Lite. This model incorporates Mixed Depthwise Convolution (MDWConv) [7] and an inverted residual bottleneck structure into the backbone network and achieves multi-scale feature extraction through an improved spatial pyramid pooling module (MSPP) [8]. Evaluated on the GWHD, SPIKE, and newly constructed ACID datasets, it achieved mAP@0.5 scores of 91.32%, 86.10%, and 76.32%, respectively, outperforming existing mainstream methods in overall detection accuracy and demonstrating good practical application potential. Khaki et al. [9] proposed a deep learning framework, WheatNet, designed to perform wheat ear counting efficiently and accurately.
This method uses a truncated version of MobileNetV2 [10] as a lightweight backbone, adapts to image scale variation by fusing multi-scale feature maps, and uses two parallel sub-networks for density-based counting and localization, respectively. Because it relies solely on point-level annotations, it also significantly reduces manual annotation costs. In the wheat ear counting task, WheatNet achieved a Mean Absolute Error (MAE) of 3.85 and a Root Mean Squared Error (RMSE) of 5.19, surpassing various state-of-the-art methods with very few parameters and demonstrating strong robustness and generalization. Zang et al. [11] modified the DM-Count network [12] to develop DMseg-Count, adding a local segmentation branch and a feature fusion mechanism to strengthen local contextual supervision. The model achieved significantly lower MAE and RMSE than mainstream detection and regression models on both custom and public datasets, with a mean relative error of 3.51%, substantially improving the accuracy and robustness of automated wheat head identification and counting in complex field environments. Fang et al. [13] enhanced YOLO v8n with SimSPPF [14], LSKA [15], and the WIoU loss [16] to build a more efficient detection model. The optimized model reduced the parameter count by 1.6 million and the computational cost by 2.2 GFLOPs while improving mAP, recall, and inference speed, enabling real-time monitoring on portable devices and improving intelligent field capabilities. For efficient wheat head counting and genetic locus mining, Li et al. [17] developed YOLO X-P by adding a CBAM attention module and an optimized SPP structure to YOLO X to improve detection accuracy. Experiments demonstrated 91.81% mAP during the LGF stage.
The study also localized four novel QTLs and developed two KASP markers from the automated identification results, offering a new approach for high-throughput phenotype–genotype association studies. Pan et al. [18] enhanced RT-DETR by integrating SPD-Conv modules and Context Guided Blocks to improve feature extraction precision and contextual modeling. The modified model achieved an AP50 of 93.5% on GWHD2021, outperforming Faster R-CNN, YOLO X, and other mainstream models across all scales and complex scenarios and demonstrating strong robustness and generalization potential.

The studies above demonstrate the feasibility and adaptability of deep learning for wheat head recognition, with significant advances in detection accuracy, model lightweighting, and practical deployment. However, the dense arrangement, scale variation, and severe occlusion of wheat heads in natural field environments remain critical challenges. This study therefore aims to improve the accuracy and robustness of wheat head detection under these conditions. Meanwhile, ongoing progress in object detection has driven iterative upgrades of the YOLO architecture. The recently released YOLO v11 incorporates substantial optimizations in feature extraction and modular design, providing a new technical foundation for efficient detection in complex agricultural scenarios. Building on this foundation, we propose YOLO v11n-GRN, an enhanced wheat head detection model based on YOLO v11n. First, a Global Edge Information Transfer (GEIT) module architecture is devised, integrating a Multi-scale Edge Information Generator (MSEIG) to enable effective cross-scale transmission and fusion of edge features across semantic hierarchies, thereby improving contour perception for wheat heads. Second, a C3k2_RFCAConv module is constructed by integrating receptive field attention and coordinate attention mechanisms into the C3k2 block, strengthening multi-scale target representation and complex background modeling. Finally, the Normalized Wasserstein Distance (NWD) [19] is adopted as the localization loss function to improve detection of distant small targets, addressing the instability of conventional IoU-based losses for far-range wheat heads.
These innovations collectively enable YOLOv11n-GRN to achieve superior detection accuracy and enhanced environmental adaptability while maintaining lightweight characteristics, providing robust technical support for efficient field-based wheat head identification and yield estimation.

2. Materials and Methods

2.1. Data Collection

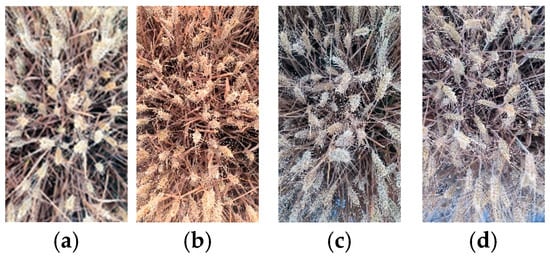

The dataset used in this study was collected manually. To match practical application scenarios, the images were collected in mid-June 2024 during the late maturation stage of wheat, the optimal period for yield measurement. Data collection took place in Jingxiu District, Baoding City, Hebei Province (GPS coordinates: 38.88° N, 115.38° E; elevation: 29.4 m). A five-point sampling method was adopted, randomly selecting five 1 m² plots per field for imaging, with all sampling points positioned more than 5 m from field boundaries to mitigate edge effects. All images were captured vertically downward from 1.2–1.5 m above ground level, each covering approximately 1 m², to support accurate quantitative yield estimation. Real-world agricultural environments are dynamic: variables such as illumination intensity and wind speed affect how information is represented in the images and, consequently, model recognition performance. Image acquisition therefore spanned three periods of the day (05:00–08:00, 11:00–13:00, and 17:00–19:00), with particular emphasis on gentle breeze conditions. Images were acquired with a Redmi K50 smartphone (58 MP effective resolution) to simulate real-world deployment scenarios. Representative dataset samples are shown in Figure 1.

Figure 1.

Dataset image samples. (a) Morning capture; (b) noon capture; (c) dusk capture; (d) breezy condition capture.

2.2. Dataset Construction

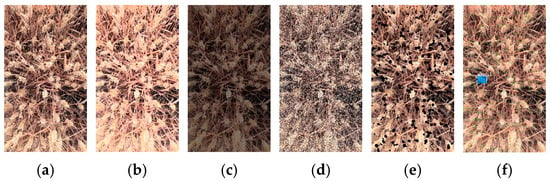

Field images typically contain substantial non-target background information and differ markedly from standardized dataset environments. During harvest, extraneous elements such as weeds and scattered straw debris frequently appear within the field of view. These elements may occlude or mimic wheat heads, substantially increasing detection complexity and causing false positives and false negatives that compromise counting accuracy. To improve the generalizability and robustness of the model, data augmentation techniques, namely brightness variation, noise injection, and randomized occlusion, were applied to simulate realistic field conditions, as depicted in Figure 2. The dataset comprises 6340 images. Wheat heads were annotated using LabelImg 1.8.6 (illustrated in Figure 2f). The image and annotation files were divided into training, testing, and validation sets at a ratio of 7:2:1. This allocation gives the model a sufficiently large training set while retaining enough samples for validation and testing, balancing training efficiency and evaluation reliability. To further validate the generalization capability of the proposed model, the widely used public wheat head detection dataset GWHD2021 (Global Wheat Head Detection 2021) [5] was also used for supplementary experiments. This dataset was jointly released by research institutions in several countries and contains images of diverse wheat varieties captured under varied conditions in multiple countries. Its high heterogeneity and representativeness make it suitable for evaluating the model's cross-domain adaptability.
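The three augmentations and the 7:2:1 split can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: images are treated as 2D lists of 0–255 grey values, and the brightness factor, noise level, occlusion size, and shuffling seed are hypothetical, since the paper does not report them.

```python
import random

# Hypothetical parameters: the paper does not report brightness factors,
# noise levels, or occlusion sizes. Images are 2D lists of 0-255 grey values.

def adjust_brightness(img, factor):
    """Scale every pixel by `factor` (>1 brightens, <1 darkens) and clip to [0, 255]."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]

def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation `sigma`, then clip."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
            for row in img]

def random_occlusion(img, box_h, box_w, rng, fill=0):
    """Overwrite one randomly placed box_h x box_w patch with a constant value."""
    h, w = len(img), len(img[0])
    top = rng.randrange(0, h - box_h + 1)
    left = rng.randrange(0, w - box_w + 1)
    out = [row[:] for row in img]
    for y in range(top, top + box_h):
        for x in range(left, left + box_w):
            out[y][x] = fill
    return out

def split_7_2_1(items, seed=0):
    """Shuffle, then split into (train, test, val) at 7:2:1 using integer arithmetic."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 7 // 10
    n_test = len(items) * 2 // 10
    return (items[:n_train],
            items[n_train:n_train + n_test],
            items[n_train + n_test:])
```

None of these three augmentations moves or rescales pixels, so the bounding boxes remain geometrically valid. When augmented copies are generated, splitting by original photograph first keeps copies of the same photo out of different subsets; the paper does not state which order it followed.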

Figure 2.

Image enhancement examples. (a) Original image; (b) brightness enhanced; (c) brightness reduced; (d) noise corrupted; (e) randomized occlusion; (f) wheat head annotations.

2.3. Experimental Environment and Configuration

The experiments used PyTorch 1.12.1 with Python 3.10 on Ubuntu 20.04.5 LTS. The hardware comprised an Intel® Xeon(R) Gold 6248R CPU @ 3.00 GHz with 96 logical cores and an NVIDIA GeForce RTX 3090 GPU with CUDA 11.4. The model was trained with the SGD optimizer, an initial learning rate of 0.01, a weight decay coefficient of 0.0005, and a batch size of 32 for 200 epochs. All experimental results reported in this paper are averages over five independent runs, each initialized with a different random seed, to reduce the influence of random initialization and make the comparisons more reliable.
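The training settings above can be collected into a single configuration, and the five-seed averaging expressed as a small helper. The sketch below assumes Ultralytics-style argument names (`optimizer`, `lr0`, `weight_decay`, `batch`, `epochs`, `seed`), which the paper does not confirm; `train_fn` is a hypothetical stand-in for whatever training entry point was used, and the seed values are placeholders.

```python
import statistics

# Settings reported in Section 2.3. Key names follow Ultralytics-style training
# arguments, which is an assumption; the paper does not name its trainer.
TRAIN_CONFIG = {
    "optimizer": "SGD",
    "lr0": 0.01,             # initial learning rate
    "weight_decay": 0.0005,
    "batch": 32,
    "epochs": 200,
}
SEEDS = [0, 1, 2, 3, 4]      # hypothetical: five distinct seeds, values not reported

def run_all_seeds(train_fn, seeds=SEEDS):
    """Call a user-supplied train_fn(seed=..., **TRAIN_CONFIG) once per seed."""
    return [train_fn(seed=s, **TRAIN_CONFIG) for s in seeds]

def summarize_runs(metric_per_run):
    """Return (mean, sample standard deviation) of one metric across runs."""
    if len(metric_per_run) < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    return statistics.mean(metric_per_run), statistics.stdev(metric_per_run)
```

Reporting the standard deviation next to the five-run mean makes it possible to judge whether differences of a few tenths of a percentage point between models exceed run-to-run variation.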

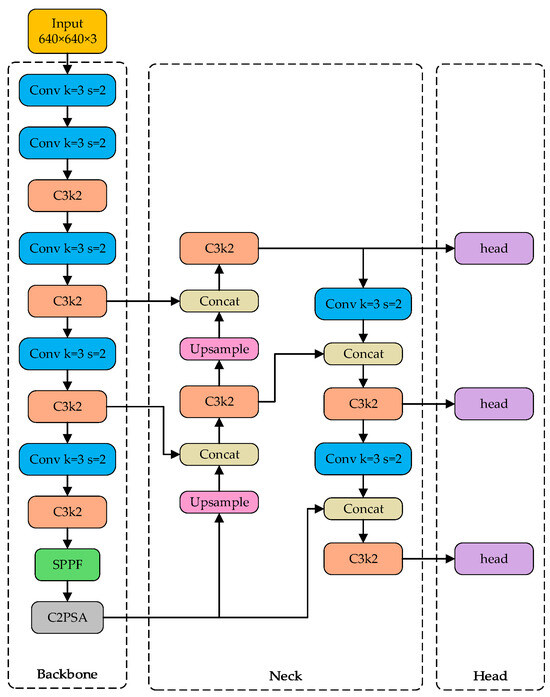

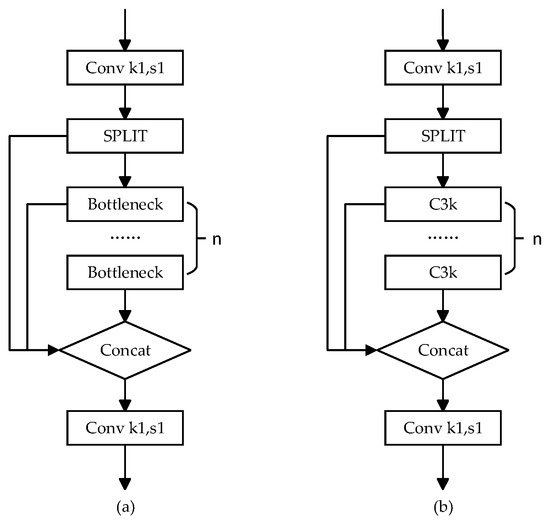

2.4. YOLO v11n Network Architecture

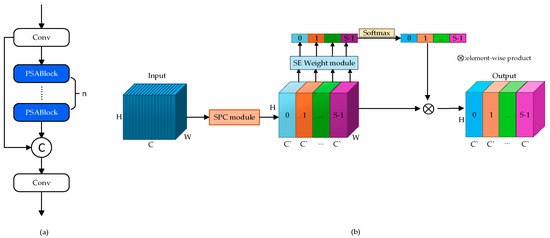

The YOLO series comprises single-stage object detection algorithms. Since the first version was proposed by Redmon et al. in 2015, successive iterations have continuously optimized the network architecture, feature extraction, and loss functions, progressively improving accuracy while maintaining high-speed detection. In contrast to two-stage detectors such as Faster R-CNN [20], YOLO performs object localization and classification simultaneously in a single forward pass, eliminating the separate region proposal stage and significantly improving detection efficiency. The YOLO v11 architecture consists of three components, the Backbone, Neck, and Detection Head, as illustrated in Figure 3. Compared to YOLO v8, YOLO v11 introduces the C3k2 (CSP Bottleneck with 2 Convolutions) module [21] and the C2PSA (Cross Stage Partial with Spatial Attention) dual-domain attention mechanism [21] in its backbone. The C3k2 module (Figure 4) is the core of the backbone: a faster variant of the CSP (Cross Stage Partial Networks) [22] design deployed in YOLO v11. C3k2 operates in two configurations: when C3k = False, it functions as the C2f module (Figure 4a); when C3k = True, it becomes the C3k2 module (Figure 4b). Building on the C3 structure, C3k2 sets this parameter to 2 to cascade two C3k blocks, improving both the efficiency and the capability of feature extraction. This design increases inference speed while maintaining model stability and indirectly boosts accuracy through deeper feature extraction. The C2PSA module (Figure 5) stacks PSA (Pyramid Split Attention) blocks [23], an enhanced adaptation of SE (Squeeze-and-Excitation) attention [24], for feature extraction and processing. SE attention explicitly models inter-channel dependencies to adaptively recalibrate channel-wise feature responses, thereby enhancing representational capacity. PSA extends this mechanism with pyramidal feature splitting, refining dependency modeling through multi-scale context aggregation.
By embedding PSA as standalone components that replace original structures, C2PSA significantly amplifies feature extraction capability, elevating both precision and mean average precision (mAP).
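Because PSA builds on SE attention, the squeeze, excitation, and recalibration steps described above can be illustrated with a minimal pure-Python SE sketch. It is not the PSA or C2PSA implementation used in YOLO v11; the weight matrices are hypothetical, biases are omitted, and the feature map is a nested list of shape C × H × W.

```python
import math

def squeeze_excite(x, w1, w2):
    """Squeeze-and-Excitation on a C x H x W feature map stored as nested lists.

    w1: (C/r) x C weights of the reduction layer; w2: C x (C/r) weights of the
    expansion layer (r is the reduction ratio; biases omitted for brevity).
    Returns the recalibrated map and the per-channel scale factors.
    """
    # Squeeze: global average pooling yields one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one weight in (0, 1) per channel.
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    scale = [1.0 / (1.0 + math.exp(-sum(w * hv for w, hv in zip(row, hidden))))
             for row in w2]
    # Recalibration: every position of channel c is multiplied by scale[c].
    out = [[[v * s for v in row] for row in ch] for ch, s in zip(x, scale)]
    return out, scale
```

The output keeps the input's shape; only the relative strength of whole channels changes, which is what "channel-wise recalibration" refers to.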

Figure 3.

YOLO v11 network architecture diagram.

Figure 4.

C3k2 module schematic diagram. (a) C2f module schematic, (b) C3k2 module schematic.

Figure 5.

(a) C2PSA module schematic diagram; (b) PSA block structure diagram.

By scale, YOLO v11 is available in variants such as YOLO v11n, YOLO v11s, YOLO v11m, YOLO v11l, and YOLO v11x. YOLO v11n is the most lightweight of these and therefore the easiest to deploy on mobile devices. As the compact member of the YOLO v11 family, it greatly reduces parameters and computational demands relative to the larger variants while retaining YOLO v11's architectural improvements in feature representation and multi-scale detection. This enables reliable wheat head detection even in resource-constrained environments. With transfer learning from pre-trained weights and task-specific training on wheat head datasets, YOLO v11n shows strong potential for accurately identifying wheat heads in complex field conditions, meeting the accuracy and reliability requirements of agricultural operations such as yield estimation.

2.5. YOLO v11n Model Optimization

2.5.1. Global Edge Information Transfer Module Architecture

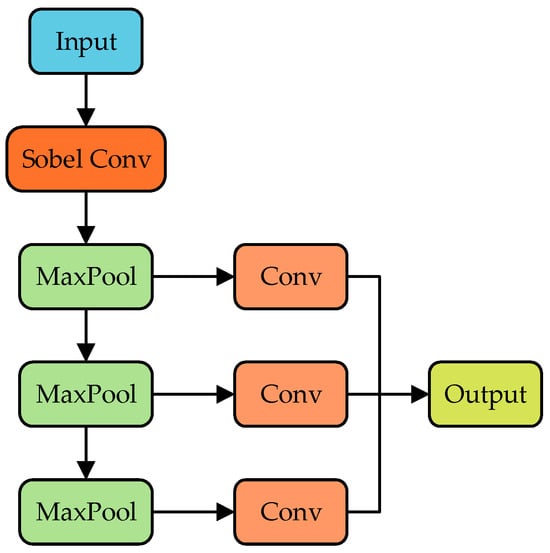

Object localization in detection tasks relies heavily on edge information. However, conventional detection networks lack dedicated components for emphasizing object edges, so a module that integrates edge details across feature hierarchies is needed. To address this, we introduce the Global Edge Information Transfer (GEIT) framework, which propagates edge information derived from shallow features through the network backbone and fuses it with multi-scale features. Extracting edges directly from raw images would carry background noise through the network, whereas shallow convolutional layers already filter out irrelevant background content. We therefore place the Multi-scale Edge Information Generator (MSEIG) at the initial network stages (Figure 6), using shallow feature layers to generate multi-scale edge feature maps that are integrated into the backbone hierarchies. Specifically, MSEIG employs the Sobel edge detection algorithm [25] to approximate image derivatives. The Sobel operator has built-in smoothing: each first-order difference is weighted by [1, 2, 1], an approximately Gaussian profile, in the perpendicular direction, which effectively suppresses common noise in farmland images (e.g., random noise caused by illumination fluctuations, leaf movement, or low-quality imaging). Compared to operators such as Canny that rely on gradient thresholds, it significantly reduces the probability of generating false edges while preserving true edges, which is critical for precise edge localization of small targets such as wheat heads. In addition, the external contours of wheat heads (e.g., the junction between the spike axis and awns) and their internal structures (e.g., the boundaries of grain arrangements) mostly exhibit strong horizontal and vertical edge features.
Using separate horizontal and vertical convolution kernels (Equations (1) and (2)), the Sobel operator accurately captures such directional edge information, providing high-quality edge priors for subsequent feature fusion.

Figure 6.

MSEIG (multi-scale edge information generator) schematic diagram.

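Horizontal Sobel operator (gradient along the x-axis, which responds to vertically oriented edges):

$$
G_x = \begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix} * I \tag{1}
$$

Vertical Sobel operator (gradient along the y-axis, which responds to horizontally oriented edges):

$$
G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ +1 & +2 & +1 \end{bmatrix} * I \tag{2}
$$

These kernels are convolved with the image $I$, and the edge intensity is computed from the resulting gradients via Equation (3):

$$
G = \sqrt{G_x^{2} + G_y^{2}} \tag{3}
$$

where $*$ denotes 2D convolution and $G_x$ and $G_y$ denote the horizontal and vertical directional gradients of the image.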

Furthermore, this algorithm has extremely low computational complexity, with its computational cost being only 1/3 to 1/2 that of the Canny operator. It also avoids complex non-maximum suppression or multi-threshold filtering steps. When integrated into the network’s feature extraction stage, it can guide the network to focus on detailed features of boundary regions without significantly increasing the model’s parameter count, thereby enhancing the localization accuracy of small targets (wheat heads).
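Equations (1)–(3) can be illustrated with a minimal pure-Python sketch that applies the two Sobel kernels to a single-channel map and combines them into an edge magnitude. It assumes valid-mode (unpadded) filtering and a nested-list image; inside a network the same kernels would act on shallow feature maps, and whether MSEIG keeps them fixed or learnable is not stated in the paper. Deep-learning "convolution" layers compute cross-correlation, which only flips the sign of $G_x$ and $G_y$ relative to true convolution and leaves $G$ unchanged.

```python
import math

# Sobel kernels for the horizontal (x) and vertical (y) gradients, Eqs. (1)-(2).
KX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
KY = [[-1, -2, -1],
      [ 0,  0,  0],
      [ 1,  2,  1]]

def correlate3x3(img, k):
    """Valid-mode 3x3 cross-correlation: output is (H - 2) x (W - 2)."""
    h, w = len(img), len(img[0])
    return [[sum(k[i][j] * img[y + i][x + j] for i in range(3) for j in range(3))
             for x in range(w - 2)]
            for y in range(h - 2)]

def sobel_magnitude(img):
    """Edge intensity G = sqrt(Gx^2 + Gy^2), as in Eq. (3)."""
    gx, gy = correlate3x3(img, KX), correlate3x3(img, KY)
    return [[math.hypot(a, b) for a, b in zip(ra, rb)] for ra, rb in zip(gx, gy)]
```

On a vertical step from 0 to 10, only the two output columns whose 3 × 3 window straddles the step respond, each with magnitude 40, while flat regions give zero; the whole computation is a handful of multiply-adds per pixel with no thresholding or non-maximum suppression.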

The choice of downsampling method requires careful consideration. Because our objective is to preserve and enhance edge information during downsampling, MaxPool is the natural choice: it retains the strongest local responses and therefore better preserves edge characteristics. AvgPool, in contrast, is better suited to feature smoothing or homogenization and underperforms MaxPool [26] in preserving fine details and edge information. For feature fusion, we propose a cross-channel approach, Conv_Edge_Fusion, which integrates edge information with standard convolutional features. This module employs Conv_Channel_Fusion to fuse edge features and convolutional representations across channels, enabling effective integration of heterogeneous feature sources. A subsequent Conv_3 × 3_Feature_Extract layer further refines the fused features to improve local detail capture, followed by dimensionality adjustment via Conv_1 × 1.
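The MaxPool argument can be made concrete with a one-pixel-wide edge. The sketch below assumes 2 × 2 pooling with stride 2 and three pyramid levels; these are hypothetical choices standing in for the backbone strides, which are not listed in this section. It builds a multi-scale edge pyramid with either pooling type.

```python
def pool2x2(img, mode="max"):
    """2x2 pooling with stride 2; an odd trailing row or column is dropped."""
    h, w = len(img) // 2 * 2, len(img[0]) // 2 * 2
    out = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            win = [img[y][x], img[y][x + 1], img[y + 1][x], img[y + 1][x + 1]]
            row.append(max(win) if mode == "max" else sum(win) / 4)
        out.append(row)
    return out

def edge_pyramid(edge_map, levels=3, mode="max"):
    """Repeatedly downsample an edge map, keeping one map per scale."""
    maps, current = [], edge_map
    for _ in range(levels):
        current = pool2x2(current, mode)
        maps.append(current)
    return maps

# A one-pixel-wide vertical edge in an 8 x 8 map.
edge = [[1.0 if x == 3 else 0.0 for x in range(8)] for _ in range(8)]
```

After three levels, max pooling still reports the edge at full strength (1.0), whereas average pooling dilutes it to 0.125, one eighth of its original value. This is the loss of edge detail that choosing MaxPool is meant to avoid.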

2.5.2. RFCAConv-Based C3k2_RFCAConv Module

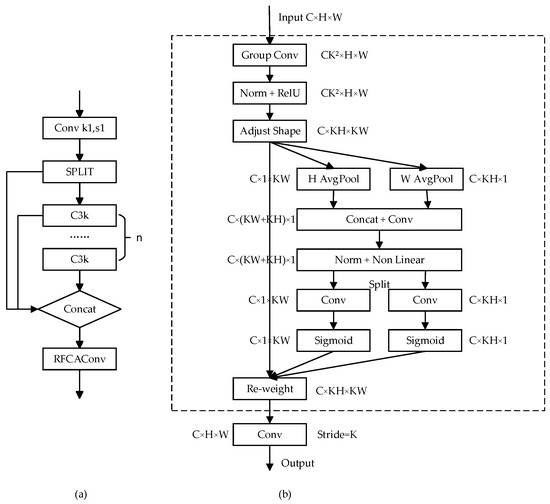

Images captured from different angles show markedly different wheat head morphology, and agricultural machinery must operate continuously during harvest, which places stringent demands on model performance. As the core feature extraction and fusion component, the C3k2 module balances representational capacity and computational efficiency through channel splitting, multi-stage convolution, and feature reuse. However, its fixed receptive field limits adaptability to multi-scale targets, the lack of a spatial awareness mechanism leaves positional information underused, and its coarse feature fusion is particularly problematic in occlusion scenarios. To overcome these limitations, we introduce the C3k2_RFCAConv module based on RFCAConv (Receptive-Field and Coordinated Attention Convolution) [27], replacing the second standard convolution in the parallel branch of C3k2 with RFCAConv (see Figure 7). RFCAConv integrates Coordinate Attention (CA) [28] into RFAConv (Receptive-Field Attention Convolution) [27]. RFAConv generates independent attention weights for each receptive field based on its spatial characteristics, emphasizing the importance of features within the receptive field and thereby enabling convolution with unshared parameters. CA factorizes 2D channel attention into two 1D encoding processes that aggregate features along the vertical and horizontal directions, respectively. This captures long-range spatial dependencies while accurately retaining positional information, which is crucial for tasks that require localizing object structures.
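The factorization at the heart of CA can be sketched as follows. Each channel is averaged along the width to give a height-wise descriptor and along the height to give a width-wise descriptor, and the two resulting gates are multiplied back in, so the reweighting depends on both the row and the column of each position. This is a deliberately simplified sketch: the shared 1 × 1 convolution, normalization, non-linearity, and per-direction 1 × 1 convolutions of the original CA block [28] are omitted, and it does not model the receptive-field attention of RFAConv.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def coordinate_attention_simplified(x):
    """x: C x H x W nested lists. Returns x reweighted by directional gates.

    Simplification (assumption): gates are sigmoids of the pooled descriptors;
    the learned 1x1 convolutions and normalization of real CA are omitted.
    """
    out = []
    for ch in x:
        h, w = len(ch), len(ch[0])
        # Pool along the width: one descriptor per row (length H).
        z_h = [sum(row) / w for row in ch]
        # Pool along the height: one descriptor per column (length W).
        z_w = [sum(ch[i][j] for i in range(h)) / h for j in range(w)]
        g_h = [sigmoid(v) for v in z_h]
        g_w = [sigmoid(v) for v in z_w]
        # y_c(i, j) = x_c(i, j) * g_h(i) * g_w(j): the gate depends on position.
        out.append([[ch[i][j] * g_h[i] * g_w[j] for j in range(w)] for i in range(h)])
    return out
```

Unlike SE, where one scalar rescales a whole channel, two pixels with the same value receive different weights here if they lie in different rows or columns, which is how positional information is retained.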

Figure 7.

(a) C3k2_RFCAConv module schematic; (b) RFCA model architecture.

2.5.3. Normalized Wasserstein Distance Loss Function

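In field-captured wheat images, foreground and background wheat heads exhibit large scale disparities: foreground heads occupy more pixels and show richer edge detail, while distant heads appear smaller, with blurred textures, due to perspective foreshortening. This exposes inherent limitations of traditional IoU-based losses when localizing distant small heads and occluded targets. To address this, we use the NWD (Normalized Gaussian Wasserstein Distance) loss, a localization loss designed specifically for small-object detection. NWD models each bounding box as a 2D Gaussian distribution and measures box similarity through the Wasserstein distance between the corresponding Gaussians, as given in Equation (4):

$$
\mathrm{NWD}(\mathcal{N}_a, \mathcal{N}_b) = \exp\left(-\frac{\sqrt{W_2^2(\mathcal{N}_a, \mathcal{N}_b)}}{C}\right) \tag{4}
$$

where $W_2^2(\mathcal{N}_a, \mathcal{N}_b)$ denotes the second-order Wasserstein distance between the Gaussians $\mathcal{N}_a$ and $\mathcal{N}_b$ of boxes $a$ and $b$, and $C$ is a dataset-dependent normalization constant. A bounding box $R = (c_x, c_y, w, h)$ with center coordinates $(c_x, c_y)$, width $w$, and height $h$ is modeled as a Gaussian with mean $(c_x, c_y)$ and covariance $\mathrm{diag}(w^2/4, h^2/4)$, for which the distance has the closed form

$$
W_2^2(\mathcal{N}_a, \mathcal{N}_b) = \left\| \left[c_{x,a},\, c_{y,a},\, \tfrac{w_a}{2},\, \tfrac{h_a}{2}\right]^{\top} - \left[c_{x,b},\, c_{y,b},\, \tfrac{w_b}{2},\, \tfrac{h_b}{2}\right]^{\top} \right\|_2^2 ,
$$

and the localization loss is $1 - \mathrm{NWD}$. This formulation is insensitive to object scale, which makes small-target regression more stable and avoids the failure modes of IoU for small objects, such as an IoU of exactly zero (and hence no useful gradient) whenever the predicted and ground-truth boxes do not overlap.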
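Equation (4) and the closed-form distance can be checked numerically with a small pure-Python sketch that also computes IoU for comparison. The normalization constant `c = 12.8` is a placeholder assumption, since the value used for wheat heads is not reported; boxes are given as (c_x, c_y, w, h) in pixels.

```python
import math

def wasserstein2_sq(box_a, box_b):
    """Squared 2-Wasserstein distance between the Gaussians of two (cx, cy, w, h) boxes."""
    (xa, ya, wa, ha), (xb, yb, wb, hb) = box_a, box_b
    return (xa - xb) ** 2 + (ya - yb) ** 2 + ((wa - wb) / 2) ** 2 + ((ha - hb) / 2) ** 2

def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein distance exp(-sqrt(W2^2) / C); c is a placeholder."""
    return math.exp(-math.sqrt(wasserstein2_sq(box_a, box_b)) / c)

def nwd_loss(pred, target, c=12.8):
    """Localization loss 1 - NWD."""
    return 1.0 - nwd(pred, target, c)

def iou(box_a, box_b):
    """Plain IoU for (cx, cy, w, h) boxes, for comparison."""
    def corners(b):
        cx, cy, w, h = b
        return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2
    ax1, ay1, ax2, ay2 = corners(box_a)
    bx1, by1, bx2, by2 = corners(box_b)
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union
```

For two 8 × 8 boxes offset by 4 pixels, IoU falls to 1/3, and once the offset exceeds the box width IoU is exactly zero regardless of distance, whereas NWD keeps decreasing smoothly with distance. This is the behavior that makes NWD more stable for small, distant wheat heads.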

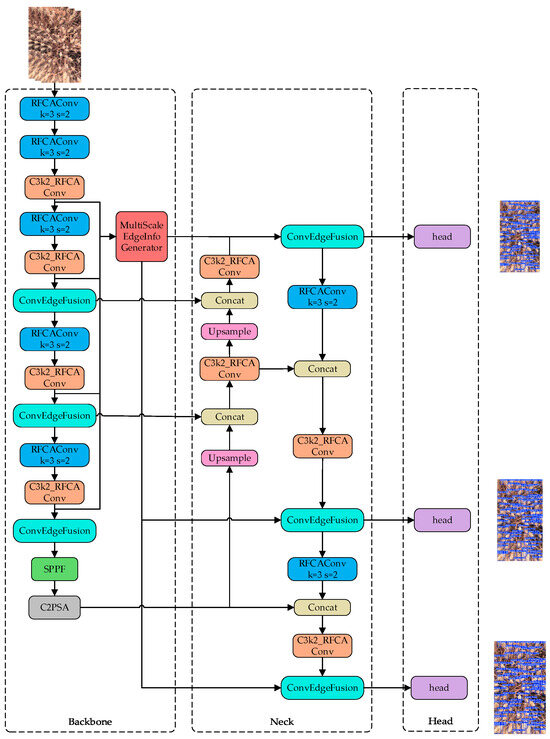

2.5.4. YOLO v11n-GRN Network Model

Field wheat head images typically exhibit complex characteristics including a dense arrangement, occlusion, and scale variation. To enhance detection model performance for wheat head recognition in complex field environments and meet high-precision yield estimation requirements, this study introduces structural improvements to the lightweight YOLO v11n framework, proposing a novel object detection network: YOLO v11n-GRN. Firstly, addressing insufficient edge feature perception in traditional detectors under complex backgrounds, we design the Global Edge Information Transfer (GEIT) module integrated with a Multi-scale Edge Information Generator (MSEIG), enabling effective transmission and fusion of shallow edge features to deep semantic features. Secondly, to overcome limited receptive fields and weak spatial modeling capabilities, we integrate the C3k2 module with RFCAConv to construct the novel C3k2_RFCAConv module and optimize convolutional operations within the YOLO framework to enhance multi-scale perception and feature localization accuracy. Finally, acknowledging inevitable scale variations in field images, we adopt the Normalized Wasserstein Distance (NWD) as the localization loss to resolve instability in small-target regression with traditional IoU losses, significantly improving robustness and accuracy for small-scale wheat head detection. The enhanced model architecture is depicted in Figure 8.

Figure 8.

YOLO v11n-GRN network architecture diagram.

3. Results and Analysis

3.1. Evaluation Index

In practical yield estimation scenarios, algorithmic performance faces rigorous demands. Models must operate efficiently on constrained computational resources (e.g., vehicle-mounted embedded devices) while maintaining detection stability and reliability without sacrificing speed.

This study uses precision (P), recall (R), and mean average precision (mAP) as the key accuracy metrics. Precision is the proportion of predicted boxes that are true positives, so it reflects how many false positives the model produces; higher precision indicates better resistance to non-target interference. Recall is the proportion of actual targets that are correctly detected, so it reflects how many targets the model misses (false negatives); higher recall indicates better target detection capability. Mean average precision (mAP) is a comprehensive object detection metric that accounts for both bounding box localization accuracy and classification correctness; this study uses mAP@0.5 (IoU threshold = 0.5). In addition, to assess detection performance on targets of different scales, we followed the COCO evaluation criteria and divided the annotated wheat head bounding boxes in the test set by area into small targets (area < 32² pixels), medium targets (area between 32² and 96² pixels), and large targets (area > 96² pixels), and calculated the corresponding average precision metrics APS, APM, and APL. Parameter count is used to evaluate spatial complexity; lower values facilitate deployment in resource-constrained settings such as mobile devices or agricultural edge terminals. Floating-point operations (FLOPs) quantify computational complexity, with lower values indicating a lighter model. Frames per second (FPS) measures real-time performance by reflecting inference speed; a higher FPS indicates a faster response in practical applications.
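The metric definitions above can be summarized in code. The sketch assumes that detections have already been matched to ground truth at IoU ≥ 0.5 (yielding the `is_tp` flags) and uses all-point interpolation, one common AP convention; COCO-style evaluators use 101-point interpolation, so values can differ slightly from a given toolkit. With a single wheat head class, mAP@0.5 equals the AP of that class. The handling of areas exactly on a size boundary is also an assumption.

```python
def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp, fn):
    return tp / (tp + fn) if tp + fn else 0.0

def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at a fixed IoU threshold.

    scores: detection confidences; is_tp: whether each detection matched a
    not-yet-matched ground-truth box; num_gt: number of ground-truth boxes.
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    rec, prec = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        rec.append(tp / num_gt)
        prec.append(tp / (tp + fp))
    mrec = [0.0] + rec + [1.0]
    mpre = [0.0] + prec + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Area under the envelope; zero-width steps contribute nothing.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))

def size_bucket(w, h):
    """COCO-style scale bucket by box area in pixels (boundary handling assumed)."""
    area = w * h
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"
```

APS, APM, and APL follow by running `average_precision` separately on the detections and ground-truth boxes that fall into each `size_bucket`.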

3.2. Model Performance Comparative Experiments

To thoroughly assess the overall performance of YOLO v11n-GRN for wheat head detection, this study selected current mainstream detection models, namely Faster R-CNN, RT-DETR, YOLO X, YOLO v5n, YOLO v8n, YOLO v11n, YOLO v11s, and YOLO v12n, as comparison baselines. Comparative experiments were conducted on both the self-built dataset and the GWHD2021 public dataset in identical experimental environments, evaluating each model on six metrics: precision, recall, mAP@0.5, number of parameters, FLOPs, and FPS. These experiments assess both detection accuracy and computational resource consumption; the results are presented in Table 1 and Table 2. YOLO v11n-GRN performed strongly across the core metrics, showing clear advantages for wheat head detection in the field. On the self-built dataset, the baseline YOLO v11n already outperformed most current mainstream detection models in accuracy: its mAP@0.5 differed from those of RT-DETR and YOLO v11s by only 0.1 and 0.2 percentage points, respectively. Given that RT-DETR and YOLO v11s require far more parameters (32.8 M vs. 9.4 M) and computation (103.4 GFLOPs vs. 21.3 GFLOPs), YOLO v11n offered the better overall trade-off and was therefore selected as the base network for this study. The improved YOLO v11n-GRN model reached an mAP@0.5 of 95.7%, a 1.6 percentage point improvement over the YOLO v11n baseline, with corresponding gains of 1.3 and 2.3 percentage points in precision and recall, respectively. Compared with other mainstream detection models such as RT-DETR, YOLO X, and YOLO v12n, the improved model also achieved varying degrees of performance gains.
In terms of inference speed, YOLO v11n-GRN reached 61.6 FPS while keeping a small parameter count (3.6 M) and low computational cost (10.3 GFLOPs). This is substantially faster than Faster R-CNN (18.8 FPS) and, although slightly slower than the unmodified YOLO models, still fully meets the real-time detection requirements of combine harvester operation, demonstrating a good balance between accuracy and speed. Despite an increase of 1 M parameters relative to YOLO v11n, YOLO v11n-GRN remains a lightweight model suitable for deployment on resource-constrained devices or field equipment. Notably, the model gained 1.6 percentage points in mAP@0.5 with only a 38.5% increase in parameters, underscoring the effectiveness of the coordinated multi-module optimization. Beyond the self-built dataset, the improved model was also evaluated on the GWHD2021 public dataset. On the GWHD2021 test set, YOLO v11n-GRN again showed strong detection capability, with precision, recall, and mAP@0.5 of 91.6%, 89.7%, and 94.4%, respectively, outperforming the baseline YOLO v11n (90.8%, 88.6%, 93.7%) and numerous mainstream detection models. In summary, the proposed YOLO v11n-GRN achieves a favorable balance between detection accuracy, robustness, and model complexity.
It demonstrates significant practical applicability and field deployability for wheat head detection and intelligent yield estimation in dynamic field environments characterized by variable lighting, occlusion, and scale variations.
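The speed figures above come from timing repeated inference. The sketch below assumes FPS is measured as images processed per second of wall-clock time after a short warm-up; this section does not state whether pre- and post-processing (for example NMS) are included, and `measure_fps`, `latency_ms`, and `relative_increase` are hypothetical helpers. It also converts throughput into per-image latency: 61.6 FPS corresponds to about 16.2 ms per image versus about 53.2 ms for Faster R-CNN at 18.8 FPS, and growing from 2.6 MB to 3.6 MB is a relative increase of about 38.5%.

```python
import time
from typing import Any, Callable, Sequence


def measure_fps(infer: Callable[[Any], Any], inputs: Sequence[Any], warmup: int = 5) -> float:
    """Images per second for `infer` over `inputs`, excluding warm-up calls."""
    for x in inputs[:warmup]:
        infer(x)  # warm-up: lazy initialisation, caches, kernel selection
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = time.perf_counter() - start
    return len(inputs) / elapsed


def latency_ms(fps: float) -> float:
    """Per-image latency implied by a throughput figure."""
    return 1000.0 / fps


def relative_increase(before: float, after: float) -> float:
    """Fractional growth, e.g. model size 2.6 MB -> 3.6 MB."""
    return (after - before) / before


if __name__ == "__main__":
    print(f"{latency_ms(61.6):.1f} ms vs {latency_ms(18.8):.1f} ms per image")
    print(f"size increase: {relative_increase(2.6, 3.6):.1%}")
```

If the model runs on a GPU, the device should be synchronized before each clock read (for example with `torch.cuda.synchronize()` in PyTorch); otherwise asynchronous kernel launches make inference appear faster than it is.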

Table 1.

Performance comparison of different models on the self-built dataset.

Table 2.

Performance comparison of different models on the GWHD2021 public dataset.

3.3. Ablation Experiment

To quantify the contributions of the GEIT module architecture, the C3k2_RFCAConv module, and the NWD loss function to model performance, eight ablation experiments were designed; their configurations and performance metrics are listed in Table 3. To better illustrate how the proposed improvements affect the detection of wheat ears at different scales, the APS, APM, and APL metrics (average precision for small, medium, and large targets, respectively) are also reported. The key findings are as follows.

Table 3.

Results of the ablation experiment.

Introducing the GEIT module architecture alone raised the model’s mAP@0.5 to 94.8%, a 0.7 percentage point improvement over the baseline YOLO v11n model. This indicates that the multi-scale transmission and fusion of shallow edge information effectively enhance the model’s perception of wheat ear contour features. Meanwhile, APS and APM increased from 6.0% and 41.5% to 6.3% and 47.4%, respectively, showing that the improved feature perception provided by GEIT benefits the detection of small and medium targets. The model size grew from 2.6 MB to 3.4 MB after GEIT integration, mainly because of the 1 × 1 and 3 × 3 convolutional layers in the MSEIG and Conv_Edge_Fusion structures, which supplement edge information across backbone scales but add trainable weights. This controlled growth buys better boundary discrimination and target perception, indicating that GEIT is cost-effective. Replacing the backbone convolutions with C3k2_RFCAConv raised mAP@0.5 by 0.4 percentage points and recall by 0.5 percentage points; although the gain in overall accuracy is limited, it shows that combining receptive field attention with coordinate attention strengthens spatial feature extraction and feature representation. Replacing the model’s original CIoU loss with the NWD loss raised mAP@0.5 to 94.4%, a 0.3 percentage point improvement over the baseline. The effect of this change is more pronounced for smaller targets: APS rose from 6.0% to 6.7% and APM from 41.5% to 47.8%, improvements of 0.7 and 6.3 percentage points, respectively. These results suggest that NWD is advantageous for regressing small and medium-sized wheat ears, consistent with its aim of stabilizing localization for distant, small targets.
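The NWD idea can be sketched in a few lines, following the formulation of Wang et al. (in the reference list): each box (cx, cy, w, h) is modelled as a 2D Gaussian with mean (cx, cy) and covariance diag(w²/4, h²/4), the 2-Wasserstein distance between two such Gaussians reduces to a Euclidean distance between (cx, cy, w/2, h/2) vectors, and NWD = exp(−W₂/C). The constant `C` is dataset-dependent; the value 12.8 below is a placeholder assumption, not the value used in this study, and the function names are hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h) in pixels


def wasserstein2(a: Box, b: Box) -> float:
    """2-Wasserstein distance between N((cx, cy), diag(w^2/4, h^2/4)) Gaussians."""
    return math.sqrt((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
                     + ((a[2] - b[2]) / 2) ** 2 + ((a[3] - b[3]) / 2) ** 2)


def nwd(a: Box, b: Box, c: float = 12.8) -> float:
    """Normalized Gaussian Wasserstein Distance in (0, 1]; c = 12.8 is a placeholder."""
    return math.exp(-wasserstein2(a, b) / c)


def nwd_loss(pred: Box, target: Box, c: float = 12.8) -> float:
    """Regression loss: 0 for a perfect match, approaching 1 as boxes drift apart."""
    return 1.0 - nwd(pred, target, c)


def iou(a: Box, b: Box) -> float:
    """IoU of two (cx, cy, w, h) boxes, included only for comparison."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0
```

As an illustration of the design choice, shifting an 8 × 8 px box by 4 px drops its IoU to 1/3, while the same shift on a 64 × 64 px box leaves IoU at about 0.88; NWD gives exp(−4/12.8) ≈ 0.73 in both cases, and it stays positive (about 0.46 for a 10 px shift) even when the small boxes no longer overlap and plain IoU is zero and provides no gradient.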

Combining the GEIT and C3k2_RFCAConv modules showed strong synergy, reaching an mAP@0.5 of 95.3% with moderate gains in precision and recall, which reflects the complementary effects of edge enhancement and the dual-attention mechanism. Incorporating all three improvements produced the best overall performance: an mAP@0.5 of 95.7%, a precision of 92.5%, and a recall of 91.1%, confirming that the modules are complementary. Compared with the original YOLO v11n model, the final model improved mAP@0.5 by 1.6 percentage points, precision by 1.3 percentage points, and recall by 2.3 percentage points, while APS, APM, and APL increased by 2, 6.7, and 2.9 percentage points, respectively. Although stacking modules inevitably increases computational complexity, the three-module combination added only 1 MB (38.5%) to the model size, keeping it within lightweight constraints. Overall, the ablation study confirms that each proposed improvement is effective and that together they substantially enhance model performance.
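The scale-specific metrics discussed above depend on how targets are binned by size. This section does not restate the thresholds, so the sketch below assumes the common COCO convention (area below 32² px is small, below 96² px is medium, otherwise large, measured at evaluation resolution); the helper names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

# Assumed COCO-style area thresholds.
SMALL_MAX_AREA = 32 ** 2   # area < 32^2 -> "small"
MEDIUM_MAX_AREA = 96 ** 2  # 32^2 <= area < 96^2 -> "medium"; larger -> "large"


def size_bucket(box: Box) -> str:
    """Assign a ground-truth box to the small / medium / large bucket by its area."""
    x1, y1, x2, y2 = box
    area = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def split_by_size(boxes: Sequence[Box]) -> Dict[str, List[Box]]:
    """Group boxes by size bucket, e.g. to count how many targets each AP metric covers."""
    groups: Dict[str, List[Box]] = {"small": [], "medium": [], "large": []}
    for box in boxes:
        groups[size_bucket(box)].append(box)
    return groups
```

In COCO-style evaluation (as implemented in pycocotools), APS is not obtained by simply discarding out-of-range boxes: ground truths outside the size range are marked as ignored, unmatched detections outside the range are excluded, and AP is then computed as usual.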

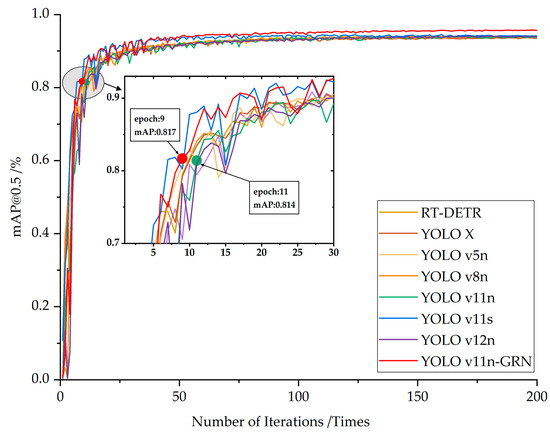

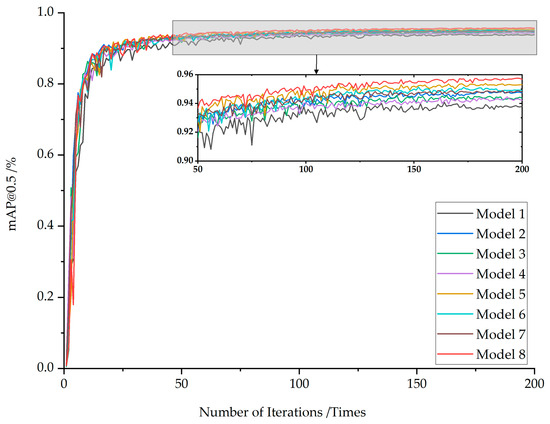

3.4. Mean Average Precision Comparative Analysis

To illustrate the performance improvement of YOLO v11n-GRN more intuitively, mAP@0.5 training curves over 200 epochs were plotted for the proposed model and the mainstream detection models (RT-DETR, YOLO X, YOLO v5n, YOLO v8n, YOLO v11n, YOLO v11s, and YOLO v12n), as shown in Figure 9. Curves for the ablation configurations, with and without each improved module, were also plotted for comparison (Figure 10).

Figure 9.

mAP@0.5 training curves across models.

Figure 10.

mAP@0.5 training curves of the ablation configurations.

As Figure 9 shows, all detection models followed broadly similar trends. YOLO v11s and YOLO v12n fluctuated more during the early training stages, and all models had stabilized by the later stages, with YOLO v11n-GRN maintaining the highest and most stable mAP@0.5. Although the mAP@0.5 of YOLO v11n-GRN rose slightly more slowly in the first few epochs, it exceeded 0.8 after only 9 epochs, whereas YOLO v11n required 11 epochs, suggesting that the module improvements accelerated early-stage feature learning. As training continued, its mAP@0.5 rose rapidly, then stabilized at a high level and ultimately reached 95.7%, surpassing all other comparison models. These results indicate strong feature learning and detection capabilities on complex wheat field images. Figure 10 further shows that Model 8 (YOLO v11n-GRN) clearly outperforms the other seven ablation configurations in convergence speed, detection accuracy, and stability. During early training its mAP@0.5 rises faster, confirming accelerated convergence. After epoch 50, when all configurations approach convergence, Model 8 maintains the highest mAP@0.5 throughout the remaining 150 epochs with minimal fluctuation, demonstrating both higher mean accuracy and better stability.
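Claims such as "exceeded 0.8 after 9 epochs" and "minimal fluctuation after epoch 50" can be checked directly from a per-epoch training log. The sketch below assumes a CSV log with one row per epoch in order; the default column name `metrics/mAP50(B)` follows the Ultralytics `results.csv` layout and is an assumption about how the logs were produced, as are the helper names.

```python
import csv
import statistics
from typing import List, Optional, TextIO, Tuple


def read_curve(f: TextIO, column: str = "metrics/mAP50(B)") -> List[float]:
    """Read one metric column (one value per epoch, in row order) from a CSV log."""
    curve = []
    for row in csv.DictReader(f):
        # Some loggers pad header names with spaces, so strip them before lookup.
        clean = {k.strip(): v for k, v in row.items() if k is not None}
        curve.append(float(clean[column]))
    return curve


def first_epoch_above(curve: List[float], threshold: float) -> Optional[int]:
    """1-based epoch at which the metric first exceeds `threshold`, or None."""
    for epoch, value in enumerate(curve, start=1):
        if value > threshold:
            return epoch
    return None


def late_stats(curve: List[float], from_epoch: int) -> Tuple[float, float]:
    """Mean and population standard deviation of the metric from `from_epoch` (1-based) on."""
    tail = curve[from_epoch - 1:]
    return statistics.fmean(tail), statistics.pstdev(tail)
```

Applied to each model’s log, `first_epoch_above(curve, 0.8)` gives the kind of epoch count compared in the text, and `late_stats(curve, 51)` turns the visual stability claim for the last 150 epochs into a number.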

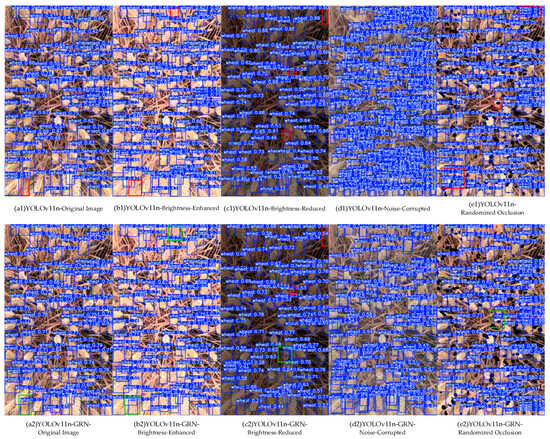

3.5. Model Detection Performance Comparison

To compare the baseline YOLO v11n and the improved YOLO v11n-GRN more concretely, detection results on wheat ear images randomly selected from the self-built dataset are visualized in Figure 11. The images cover five variants: (a) original, (b) brightness enhanced, (c) brightness reduced, (d) noise corrupted, and (e) randomly occluded. Blue bounding boxes denote correct detections, red boxes indicate false positives or missed detections, and green boxes mark targets missed by the baseline but detected by the improved model. In the original image, the baseline model missed a wheat head in the lower-left area (Figure 11(a1)), whereas YOLO v11n-GRN detected it (green box in Figure 11(a2)). Similarly, under enhanced brightness, YOLO v11n missed two targets that the proposed model detected correctly. Both models occasionally failed in the brightness-reduced image (Figure 11(c1,c2)), but YOLO v11n-GRN remained more accurate. Both models still made notable errors on the noise-corrupted and occluded images, with false positives particularly common under noise. Even so, YOLO v11n-GRN produced visibly fewer false detections than the baseline under these challenging conditions. This qualitative comparison illustrates the practical benefit of the proposed modifications.
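The five test conditions can be generated with simple pixel operations. The sketch below is illustrative only: this section does not report the brightness factors, noise level, or occlusion size used, so the values here (×1.5, ×0.5, Gaussian σ = 25, a patch spanning 25% of the image height and width) are assumptions, the helper names are hypothetical, and a grayscale list-of-rows image stands in for a real RGB array.

```python
import random
from typing import Dict, List

Image = List[List[int]]  # grayscale rows of 0-255 values (stand-in for an RGB array)


def _clip(value: float) -> int:
    """Round and clamp a pixel value to the valid 8-bit range."""
    return max(0, min(255, int(round(value))))


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale every pixel; factor > 1 brightens, factor < 1 darkens."""
    return [[_clip(p * factor) for p in row] for row in img]


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise with standard deviation `sigma` to each pixel."""
    return [[_clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def random_occlusion(img: Image, frac: float, rng: random.Random, fill: int = 0) -> Image:
    """Paint a rectangle spanning `frac` of each side at a random position."""
    h, w = len(img), len(img[0])
    oh, ow = max(1, int(h * frac)), max(1, int(w * frac))
    y0, x0 = rng.randint(0, h - oh), rng.randint(0, w - ow)
    out = [row[:] for row in img]  # copy so the original stays untouched
    for y in range(y0, y0 + oh):
        for x in range(x0, x0 + ow):
            out[y][x] = fill
    return out


def make_variants(img: Image, seed: int = 0) -> Dict[str, Image]:
    """Build the five test conditions; all parameter values are illustrative assumptions."""
    rng = random.Random(seed)
    return {
        "original": img,
        "brighter": adjust_brightness(img, 1.5),
        "darker": adjust_brightness(img, 0.5),
        "noisy": add_gaussian_noise(img, 25.0, rng),
        "occluded": random_occlusion(img, 0.25, rng),
    }
```

Seeding a dedicated `random.Random` instance keeps the noisy and occluded variants reproducible, so both models can be compared on identical perturbed inputs.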

Figure 11.

Model detection performance comparison. The blue bounding boxes in the image indicate areas where the model has detected wheat heads; red bounding boxes represent regions of missed or false detections; green bounding boxes highlight targets that were missed by the baseline model but successfully detected by the improved model.

4. Discussion

This study introduces the YOLO v11n-GRN model, built on the YOLO v11n object detection framework. The architectural improvements center on three aspects: edge information modeling, multi-scale spatial attention fusion, and small-target localization optimization. The experimental results show that each enhancement contributes to the overall performance gain.

The GEIT module architecture enhances wheat head contour perception through its MSEIG generator and cross-channel fusion, enabling robust edge feature extraction under occlusion and blur. This heightened edge sensitivity improves bounding box localization, and the experiments confirm gains in mAP, recall, and precision; it is particularly effective at reducing false detections caused by ambiguous boundaries in overlapping, occluded regions. The C3k2_RFCAConv module integrates receptive field attention and coordinate attention into parallel convolutions, capturing long-range spatial dependencies while preserving local positional details. This dual-attention design improves spatial awareness and scale adaptability, helping to address densely distributed, scale-variant wheat heads through expanded receptive fields and stronger positional modeling. The NWD loss targets small and densely packed wheat heads by measuring bounding box similarity as a distance between Gaussian distributions. Compared with IoU-based losses, it avoids the instability and excessive sensitivity to small localization errors that IoU exhibits for small targets, improving robustness for distant wheat heads. In benchmarking, our model outperforms Li et al.’s YOLO v5 variant [1] by 1.4 percentage points in mAP@0.5 (95.7% vs. 94.3%), which we attribute to the refined spatial modeling of the dual attention mechanism. It also surpasses Jing et al.’s Wheat-YOLONet [2] by 4.7 percentage points, at the cost of slightly greater complexity.
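To make the notion of an explicit edge cue concrete, the sketch below computes a Sobel gradient magnitude (Sobel and Feldman, in the reference list). This section does not specify the operator used inside MSEIG, so this is a classical illustration of edge extraction rather than the GEIT implementation; `sobel_magnitude` is a hypothetical helper operating on a grayscale list-of-rows image.

```python
import math
from typing import List

# 3 x 3 Sobel kernels for horizontal (GX) and vertical (GY) intensity gradients.
GX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
GY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: List[List[float]]) -> List[List[float]]:
    """Gradient magnitude sqrt(gx^2 + gy^2) per pixel; the one-pixel border is left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += GX[dy][dx] * p
                    gy += GY[dy][dx] * p
            out[y][x] = math.hypot(gx, gy)
    return out
```

A head boundary against a darker background produces large magnitudes along the contour and near-zero values inside uniform regions, which is the type of contour signal that edge-enhancement modules aim to preserve for deeper layers.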

Because images were acquired by standardized quadrat sampling, the number of wheat spikes per mu or per hectare can be estimated rapidly in the field directly from the detected heads. Combined with agronomic parameters (average kernels per spike and thousand-kernel weight), these counts yield theoretical yield predictions. This demonstrates the technical feasibility of an integrated yield estimation pipeline: image recognition → spike counting → yield prediction. Overall, the proposed YOLO v11n-GRN model achieves improved detection performance in complex scenarios while maintaining a compact size and low computational cost, supporting real-time field detection and precise yield estimation. Nevertheless, an inherent trade-off between model lightweighting and accuracy remains. Future research should investigate more efficient backbone architectures and optimization strategies to further reduce computational burden while improving overall model performance.
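The yield pipeline described above reduces to unit conversions. A minimal sketch, assuming quadrats of known area and agronomic inputs measured separately; the helper names are hypothetical, and the example values (600 heads in a 1 m² quadrat, 35 kernels per spike, a thousand-kernel weight of 40 g) are illustrative, not results from this study.

```python
from statistics import fmean
from typing import Sequence

M2_PER_HECTARE = 10_000.0
MU_PER_HECTARE = 15.0  # 1 mu is roughly 666.7 m^2


def spikes_per_hectare(counts: Sequence[int], quadrat_area_m2: float) -> float:
    """Mean detected head count per quadrat, scaled to one hectare."""
    return fmean(counts) / quadrat_area_m2 * M2_PER_HECTARE


def theoretical_yield_kg_per_ha(spikes_ha: float, kernels_per_spike: float, tkw_g: float) -> float:
    """Spikes/ha x kernels/spike x (TKW in g per 1000 kernels), converted from g to kg."""
    kernels_ha = spikes_ha * kernels_per_spike
    grams_ha = kernels_ha * tkw_g / 1000.0
    return grams_ha / 1000.0


if __name__ == "__main__":
    density = spikes_per_hectare([600], quadrat_area_m2=1.0)
    yield_ha = theoretical_yield_kg_per_ha(density, kernels_per_spike=35, tkw_g=40)
    print(f"{density:,.0f} spikes/ha ({density / MU_PER_HECTARE:,.0f} per mu)")
    print(f"{yield_ha:,.0f} kg/ha ({yield_ha / MU_PER_HECTARE:,.0f} kg/mu)")
```

With these example inputs the script reports 6,000,000 spikes/ha (400,000 per mu) and a theoretical yield of 8,400 kg/ha (560 kg/mu). Averaging counts over several quadrats before scaling reduces the influence of any single sampling location.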

5. Conclusions

To address the difficult detection conditions in wheat fields, including dense wheat ears, severe occlusion, and varying scales, this paper proposes YOLO v11n-GRN, an improved wheat ear detection model based on YOLO v11n. The architecture integrates (1) a Global Edge Information Transfer (GEIT) module that enhances boundary perception through shallow edge feature generation and multi-scale fusion; (2) a C3k2_RFCAConv module that combines receptive field augmentation with coordinate attention to improve feature representation in complex environments; and (3) the Normalized Gaussian Wasserstein Distance (NWD) loss, which models bounding boxes as Gaussian distributions to overcome the scale sensitivity of traditional IoU and improve the localization of distant small targets. The experimental results show that, while remaining lightweight, YOLO v11n-GRN outperforms existing mainstream models on key metrics including precision, recall, and mean average precision (mAP). On the self-built dataset, it achieves a precision of 92.5% and a recall of 91.1%, and its mAP@0.5 rises from 94.1% to 95.7%, a 1.6 percentage point improvement. Although the model size increases to 3.6 MB, it remains suitable for deployment on agricultural machinery. This work provides a high-precision solution for wheat head counting and yield estimation with demonstrated potential for practical field application, supplying reliable data for spikes-per-mu and yield calculations and an efficient pathway for advancing smart agriculture.

Author Contributions

Conceptualization, G.T.; data curation, Y.Z., Z.L., X.G., and C.L.; formal analysis, Z.L., X.G., and G.T.; funding acquisition, G.T.; investigation, Y.Z., Z.L., C.L., and G.T.; methodology, Y.Z. and Z.L.; project administration, C.L. and G.T.; resources, C.L. and G.T.; software, Y.Z., Z.L., and X.G.; supervision, C.L. and G.T.; validation, Y.Z. and Z.L.; visualization, Y.Z. and Z.L.; writing—original draft preparation, Y.Z. and Z.L.; writing—review and editing, Y.Z. and G.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (U20A20180), Agricultural Scientific and Technological Achievements Transformation Fund of Hebei (202460104030028), and Key Programs for Science and Technology Development of Hebei Province (19227210D).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

We thank the editor and reviewers for their helpful suggestions to improve the quality of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Food and Agriculture Organization of the United Nations. Available online: https://www.fao.org/faostat/zh/#data/QCL/visualize (accessed on 15 August 2025).

- Li, R.; Wu, Y. Improved YOLO v5 Wheat Ear Detection Algorithm Based on Attention Mechanism. Electronics 2022, 11, 1673.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 658–666.

- David, E.; Serouart, M.; Smith, D.; Madec, S.; Velumani, K.; Liu, S.; Wang, X.; Espinosa, F.P.; Shafiee, S.; Tahir, I.S.; et al. Global wheat head dataset 2021: More diversity to improve the benchmarking of wheat head localization methods. arXiv 2021, arXiv:2105.07660.

- Bhagat, S.; Kokare, M.; Haswani, V.; Hambarde, P.; Kamble, R. WheatNet-Lite: A novel light weight network for wheat head detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 1–17 October 2021; pp. 1332–1341.

- Tan, M.; Le, Q.V. MixConv: Mixed depthwise convolutional kernels. arXiv 2019, arXiv:1907.09595.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Khaki, S.; Safaei, N.; Pham, H.; Wang, L. WheatNet: A lightweight convolutional neural network for high-throughput image-based wheat head detection and counting. arXiv 2021, arXiv:2103.09408.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Zang, H.; Peng, Y.; Zhou, M.; Li, G.; Zheng, G.; Shen, H. Automatic detection and counting of wheat spike based on DMseg-Count. Sci. Rep. 2024, 14, 29676.

- Wang, B.; Liu, H.; Samaras, D.; Nguyen, M.H. Distribution matching for crowd counting. Adv. Neural Inf. Process. Syst. 2020, 33, 1595–1607.

- Fang, C.; Yang, X. Lightweight YOLOv8 for wheat head detection. IEEE Access 2024, 12, 66214–66222.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Lau, K.W.; Po, L.-M.; Rehman, Y.A.U. Large separable kernel attention: Rethinking the large kernel attention design in CNN. Expert Syst. Appl. 2024, 236, 121352.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding box regression loss with dynamic focusing mechanism. arXiv 2023, arXiv:2301.10051.

- Li, L.; Hassan, M.A.; Wang, D.; Wan, G.; Beegum, S.; Rasheed, A.; Xia, X.; He, Y.; Zhang, Y.; He, Z. RGB imaging and computer vision-based approaches for identifying spike number loci for wheat. Plant Phenomics 2025, 7, 100051.

- Pan, J.; Song, S.; Guan, Y.; Jia, W. Improved Wheat Detection Based on RT-DETR Model. Int. J. Comput. Sci. 2025, 52, 705.

- Wang, J.; Xu, C.; Yang, W.; Yu, L. A normalized Gaussian Wasserstein distance for tiny object detection. arXiv 2021, arXiv:2110.13389.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Wang, C.-Y.; Liao, H.-Y.M.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- Xiong, Z.; Zhan, Z.; Wang, X. Position-sensitive attention based on fully convolutional neural networks for land cover classification. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 3, 281–288.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2011–2023.

- Sobel, I.; Feldman, G. A 3 × 3 Isotropic Gradient Operator For Image Processing. Presented at a talk at the Stanford Artificial Intelligence Project. 1968, pp. 271–272. Available online: https://www.researchgate.net/publication/285159837_A_33_isotropic_gradient_operator_for_image_processing (accessed on 15 August 2025).

- Scherer, D.; Müller, A.; Behnke, S. Evaluation of pooling operations in convolutional architectures for object recognition. In Proceedings of the International Conference on Artificial Neural Networks, Thessaloniki, Greece, 15–18 September 2010; pp. 92–101.

- Zhang, X.; Liu, C.; Yang, D.; Song, T.; Ye, Y.; Li, K.; Song, Y. RFAConv: Innovating spatial attention and standard convolutional operation. arXiv 2023, arXiv:2304.03198.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).