WT-ResNet: A Non-Destructive Method for Determining the Nitrogen, Phosphorus, and Potassium Content of Sugarcane Leaves Based on Leaf Image

Abstract

1. Introduction

- (1) This study pioneers the use of deep learning models to establish a correlation between sugarcane leaf images and the phosphorus and potassium levels within the leaves.

- (2) The CNN model design integrates wavelet transform, a technique from image processing, into the residual network architecture, enabling multi-scale feature extraction.

- (3) The nitrogen, phosphorus, and potassium prediction model based on sugarcane leaf images achieves the best performance among comparable prediction models. Additionally, the introduction of the tolerance concept facilitates a more objective evaluation of the model.
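Contribution (2) relies on the wavelet transform to expose features at several scales. As a rough illustration only, the sketch below applies one level of a 2D Haar transform to a small grayscale patch and then repeats it on the resulting approximation, which is how a multi-scale pyramid is built. The Haar basis, the averaging normalization, the function name `haar_dwt2`, and the patch values are all assumptions made for this example; the wavelet convolution layer used in WT-ResNet is not reproduced here.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def haar_dwt2(img: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """One level of a 2D Haar transform (averaging normalization, an assumption).

    Returns four half-resolution sub-bands computed from each 2x2 block:
    the local average plus left-right, top-bottom, and diagonal differences.
    """
    height, width = len(img), len(img[0])
    if height % 2 or width % 2:
        raise ValueError("height and width must both be even")

    approx: Matrix = []
    col_diff: Matrix = []
    row_diff: Matrix = []
    diag_diff: Matrix = []
    for i in range(0, height, 2):
        rows = ([], [], [], [])
        for j in range(0, width, 2):
            a, b = img[i][j], img[i][j + 1]          # top-left, top-right
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom-left, bottom-right
            rows[0].append((a + b + c + d) / 4)  # low-frequency content
            rows[1].append((a - b + c - d) / 4)  # left column minus right column
            rows[2].append((a + b - c - d) / 4)  # top row minus bottom row
            rows[3].append((a - b - c + d) / 4)  # diagonal contrast
        approx.append(rows[0])
        col_diff.append(rows[1])
        row_diff.append(rows[2])
        diag_diff.append(rows[3])
    return approx, col_diff, row_diff, diag_diff


# Hypothetical 4x4 grayscale patch; the values are made up for illustration.
patch = [
    [8, 8, 2, 2],
    [8, 8, 2, 2],
    [4, 4, 6, 6],
    [4, 4, 6, 6],
]
level1 = haar_dwt2(patch)      # four 2x2 sub-bands
level2 = haar_dwt2(level1[0])  # four 1x1 sub-bands from the coarser approximation
print("level-1 approximation:", level1[0])
print("level-2 approximation:", level2[0])
```

Each additional level halves the resolution of the approximation, so a fixed-size convolution applied to it covers a proportionally larger region of the original image.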

2. Materials and Methods

2.1. Field Experiment

2.2. Field Experiment Result

2.3. WT-ResNet

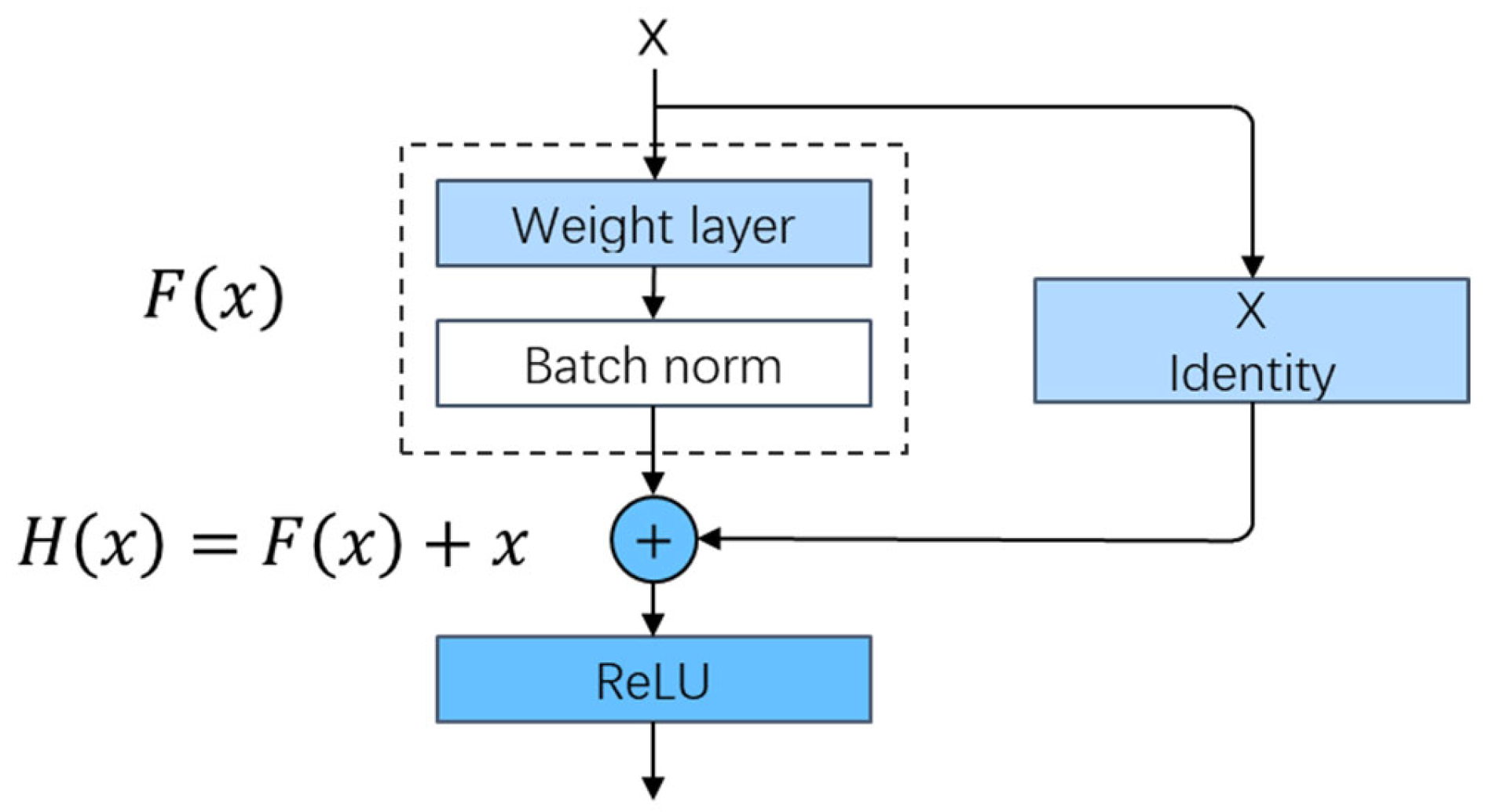

2.3.1. Residual Structure

2.3.2. Wavelet Convolution

2.3.3. WT Residual Block

2.4. Evaluation Metrics

3. Results

3.1. WT-ResNet Training Results

3.2. Grad-CAM

3.3. Backbone Comparison Experiments

3.4. Ablation Study

4. Discussion

- (1) Although the model performs well on the current dataset, its generalization ability and robustness remain limited, chiefly by the size and variety of the dataset. The dataset used in this study is limited in sample size, in the range of sugarcane growth stages and varieties represented, and in the environmental stressors encountered (such as drought, pest infestations, and diseases). Consequently, the model's predictive accuracy may decline considerably when estimating nutrient levels in diverse and unpredictable field environments not represented in the training data: the model's dependability hinges directly on how well the training data represent the full spectrum of conditions it will encounter. To overcome this limitation, we are systematically enriching the dataset to cover a broader range of sugarcane varieties, the complete growth cycle, and abiotic stress conditions. We will also investigate unsupervised and self-supervised learning methods. Their key benefit is the capacity to leverage vast amounts of unlabeled field images for pre-training, allowing the model to learn common visual characteristics of leaves, including texture, shape, and structure, without manual labels.

- (2) Automating the acquisition and preparation of field images remains difficult. In natural, unstructured agricultural settings, efficient and standardized image acquisition is a significant challenge. Background elements such as soil, weeds, and other plants disrupt the model's ability to focus on the target leaf and extract its features, thereby decreasing prediction accuracy [41]. Fluctuations in lighting are also a critical determinant of image quality [42]: uncompensated differences in illumination can introduce significant noise and directly affect the model's stability. Current automated acquisition and preprocessing methods do not yet address these challenges adequately, and they must be resolved before the technology can be applied in practice. Image acquisition protocols that standardize shooting distance and angle and specify the use of reference objects are fundamental to data consistency. In addition, incorporating illumination correction algorithms into the preprocessing pipeline can mitigate the effects of varying lighting conditions.

- (3) Although deep learning models offer high performance, they typically require substantial computational resources. This is a challenge for scenarios that require deployment on edge devices such as smartphones and other portable devices. Furthermore, existing models still have room for improvement in balancing the capture of the leaf's global structure against its local fine details, so they may not fully exploit the phenotypic information present in the images. Regarding the model architecture, we will investigate more advanced network designs to enhance both performance and efficiency. Such designs use techniques like depthwise separable convolution [33], model pruning, and quantization [43] to significantly reduce both the number of parameters and the computational complexity while preserving high accuracy, which would make deployment on mobile platforms such as drones and smartphones feasible.

- (4) The quality of the raw data collected by visual sensors sets the upper bound on the performance of subsequent model analysis [44]. Portable agricultural devices, such as drones and handheld detectors, impose stringent requirements on sensor size and power consumption. Currently available high-precision visual sensors, such as 3D cameras and hyperspectral cameras, tend to be large and to consume significant energy during prolonged operation, which makes it difficult to meet the demands of mobile applications in field environments. To handle complex crop shapes, such as vines and clusters of fruit, bionic visual sensors are being investigated with the aim of widening the field of view and minimizing blind spots. Meanwhile, flexible electronics hold promise for sensors that adhere closely to crop surfaces for monitoring.
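The parameter savings attributed to depthwise separable convolution in point (3) can be checked with simple arithmetic. The sketch below counts the weights (biases ignored) of a standard convolution and of its depthwise separable counterpart, which splits the operation into one k × k filter per input channel followed by a 1 × 1 pointwise convolution. The layer size used here (3 × 3 kernel, 64 input channels, 128 output channels) is a hypothetical example and is not taken from WT-ResNet.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise separable convolution (bias terms ignored)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer size chosen only for illustration.
k, c_in, c_out = 3, 64, 128
standard = standard_conv_params(k, c_in, c_out)
separable = separable_conv_params(k, c_in, c_out)
print(f"standard:  {standard} weights")
print(f"separable: {separable} weights")
print(f"ratio:     {separable / standard:.3f}")
```

For this layer the ratio equals 1/c_out + 1/k², so the saving grows with the kernel size and the number of output channels. Pruning and quantization reduce cost by different means and are not covered by this count.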

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Phiri, R.; Rangappa, S.M.; Siengchin, S. Sugarcane Bagasse for Sustainable Development of Thermoplastic Biocomposites. Ind. Crops Prod. 2024, 222, 120115.

2. Liu, H.; Wang, Y.; Cai, T.; He, K.; Tian, X.; Chen, Z.; Yin, Y.; Cui, Z. Integrated Management to Achieve Synergy in Sugarcane Production and Quality in China. Field Crops Res. 2024, 317, 109552.

3. Zhang, Z.; Hua, C.; Ayyamperumal, R.; Wang, M.; Wang, S. The Impact of Specialization and Large-Scale Operation on the Application of Pesticides and Chemical Fertilizers: A Spatial Panel Data Analysis in China. Environ. Impact Assess. Rev. 2024, 106, 107496.

4. Pereira Da Silva, G.; Justino Chiaia, H.L. Limitation Due to Nutritional Deficiency and Excess in Sugarcane Using the Integral Diagnosis and Recommendation System (DRIS) and Nutritional Composition Diagnosis (CND). Commun. Soil Sci. Plant Anal. 2021, 52, 1458–1467.

5. Lemaire, G.; Tang, L.; Bélanger, G.; Zhu, Y.; Jeuffroy, M.-H. Forward New Paradigms for Crop Mineral Nutrition and Fertilization towards Sustainable Agriculture. Eur. J. Agron. 2021, 125, 126248.

6. Mahlayeye, M.; Darvishzadeh, R.; Nelson, A. Characterising Maize and Intercropped Maize Spectral Signatures for Cropping Pattern Classification. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103699.

7. Siedliska, A.; Baranowski, P.; Pastuszka-Woźniak, J.; Zubik, M.; Krzyszczak, J. Identification of Plant Leaf Phosphorus Content at Different Growth Stages Based on Hyperspectral Reflectance. BMC Plant Biol. 2021, 21, 28.

8. Shankar, T.; Malik, G.C.; Banerjee, M.; Dutta, S.; Praharaj, S.; Lalichetti, S.; Mohanty, S.; Bhattacharyay, D.; Maitra, S.; Gaber, A.; et al. Prediction of the Effect of Nutrients on Plant Parameters of Rice by Artificial Neural Network. Agronomy 2022, 12, 2123.

9. Kolhar, S.; Jagtap, J. Plant Trait Estimation and Classification Studies in Plant Phenotyping Using Machine Vision—A Review. Inf. Process. Agric. 2023, 10, 114–135.

10. VanHook, A.M. Nitrogen Assimilation Gets a HY5. Sci. Signal. 2016, 9, ec59.

11. Akkem, Y.; Biswas, S.K.; Varanasi, A. Smart Farming Using Artificial Intelligence: A Review. Eng. Appl. Artif. Intell. 2023, 120, 105899.

12. Li, Y.; Chen, D.; Walker, C.N.; Angus, J.F. Estimating the Nitrogen Status of Crops Using a Digital Camera. Field Crops Res. 2010, 118, 221–227.

13. Haider, T.; Farid, M.S.; Mahmood, R.; Ilyas, A.; Khan, M.H.; Haider, S.T.-A.; Chaudhry, M.H.; Gul, M. A Computer-Vision-Based Approach for Nitrogen Content Estimation in Plant Leaves. Agriculture 2021, 11, 766.

14. Sulistyo, S.B.; Wu, D.; Woo, W.L.; Dlay, S.S.; Gao, B. Computational Deep Intelligence Vision Sensing for Nutrient Content Estimation in Agricultural Automation. IEEE Trans. Autom. Sci. Eng. 2018, 15, 1243–1257.

15. You, H.; Zhou, M.; Zhang, J.; Peng, W.; Sun, C. Sugarcane Nitrogen Nutrition Estimation with Digital Images and Machine Learning Methods. Sci. Rep. 2023, 13, 14939.

16. Sun, L.; Yang, C.; Wang, J.; Cui, X.; Suo, X.; Fan, X.; Ji, P.; Gao, L.; Zhang, Y. Automatic Modeling Prediction Method of Nitrogen Content in Maize Leaves Based on Machine Vision and CNN. Agronomy 2024, 14, 124.

17. Janani, M.; Jebakumar, R. Detection and Classification of Groundnut Leaf Nutrient Level Extraction in RGB Images. Adv. Eng. Softw. 2023, 175, 103320.

18. Lu, Z.; Sun, C.; Dou, J.; He, B.; Zhou, M.; You, H. SC-ResNeXt: A Regression Prediction Model for Nitrogen Content in Sugarcane Leaves. Agronomy 2025, 15, 175.

19. Agrawal, J.; Arafat, M.Y. Transforming Farming: A Review of AI-Powered UAV Technologies in Precision Agriculture. Drones 2024, 8, 664.

20. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-Based Plant Height from Crop Surface Models, Visible, and near Infrared Vegetation Indices for Biomass Monitoring in Barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

21. Zheng, H.; Cheng, T.; Li, D.; Zhou, X.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Evaluation of RGB, Color-Infrared and Multispectral Images Acquired from Unmanned Aerial Systems for the Estimation of Nitrogen Accumulation in Rice. Remote Sens. 2018, 10, 824.

22. Zhang, Y.; Ta, N.; Guo, S.; Chen, Q.; Zhao, L.; Li, F.; Chang, Q. Combining Spectral and Textural Information from UAV RGB Images for Leaf Area Index Monitoring in Kiwifruit Orchard. Remote Sens. 2022, 14, 1063.

23. Zhang, X.; Hu, Y.; Li, X.; Wang, P.; Guo, S.; Wang, L.; Zhang, C.; Ge, X. Estimation of Rice Leaf Nitrogen Content Using UAV-Based Spectral–Texture Fusion Indices (STFIs) and Two-Stage Feature Selection. Remote Sens. 2025, 17, 2499.

24. Zhang, S.; Duan, J.; Qi, X.; Gao, Y.; He, L.; Liu, L.; Guo, T.; Feng, W. Combining Spectrum, Thermal, and Texture Features Using Machine Learning Algorithms for Wheat Nitrogen Nutrient Index Estimation and Model Transferability Analysis. Comput. Electron. Agric. 2024, 222, 109022.

25. Yang, M.-D.; Hsu, Y.-C.; Chen, Y.-H.; Yang, C.-Y.; Li, K.-Y. Precision Monitoring of Rice Nitrogen Fertilizer Levels Based on Machine Learning and UAV Multispectral Imagery. Comput. Electron. Agric. 2025, 237, 110523.

26. Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going Deeper with Nested U-Structure for Salient Object Detection. Pattern Recognit. 2020, 106, 107404.

27. Levin, A.; Lischinski, D.; Weiss, Y. A Closed-Form Solution to Natural Image Matting. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 228–242.

28. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

29. Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet Convolutions for Large Receptive Fields. In Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2025; pp. 363–380. ISBN 978-3-031-72948-5.

30. Huang, H.; He, R.; Sun, Z.; Tan, T. Wavelet-SRNet: A Wavelet-Based CNN for Multi-Scale Face Super Resolution. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 1698–1706.

31. Gal, R.; Hochberg, D.C.; Bermano, A.; Cohen-Or, D. SWAGAN: A Style-Based Wavelet-Driven Generative Model. ACM Trans. Graph. 2021, 40, 1–11.

32. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 2818–2826.

33. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 1800–1807.

34. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 618–626.

35. De Bang, T.C.; Husted, S.; Laursen, K.H.; Persson, D.P.; Schjoerring, J.K. The Molecular–Physiological Functions of Mineral Macronutrients and Their Consequences for Deficiency Symptoms in Plants. New Phytol. 2021, 229, 2446–2469.

36. Houmani, H.; Corpas, F.J. Can Nutrients Act as Signals under Abiotic Stress? Plant Physiol. Biochem. 2024, 206, 108313.

37. Li, M.; Zhang, H.; Yang, X.; Ge, M.; Ma, Q.; Wei, H.; Dai, Q.; Huo, Z.; Xu, K.; Luo, D. Accumulation and Utilization of Nitrogen, Phosphorus and Potassium of Irrigated Rice Cultivars with High Productivities and High N Use Efficiencies. Field Crops Res. 2014, 161, 55–63.

38. Guo, Y.; Yan, Z.; Gheyret, G.; Zhou, G.; Xie, Z.; Tang, Z. The Community-level Scaling Relationship between Leaf Nitrogen and Phosphorus Changes with Plant Growth, Climate and Nutrient Limitation. J. Ecol. 2020, 108, 1276–1286.

39. Liang, C.; Tian, J.; Liao, H. Proteomics Dissection of Plant Responses to Mineral Nutrient Deficiency. Proteomics 2013, 13, 624–636.

40. Xue, Y.; Zhu, S.; Schultze-Kraft, R.; Liu, G.; Chen, Z. Dissection of Crop Metabolome Responses to Nitrogen, Phosphorus, Potassium, and Other Nutrient Deficiencies. Int. J. Mol. Sci. 2022, 23, 9079.

41. Gao, J.; Liao, W.; Nuyttens, D.; Lootens, P.; Xue, W.; Alexandersson, E.; Pieters, J. Cross-Domain Transfer Learning for Weed Segmentation and Mapping in Precision Farming Using Ground and UAV Images. Expert Syst. Appl. 2024, 246, 122980.

42. Xu, J.; Hou, Y.; Ren, D.; Liu, L.; Zhu, F.; Yu, M.; Wang, H.; Shao, L. STAR: A Structure and Texture Aware Retinex Model. IEEE Trans. Image Process. 2020, 29, 5022–5037.

43. Jiang, Z.; Xu, Y.; Xu, H.; Wang, Z.; Liu, J.; Chen, Q.; Qiao, C. Computation and Communication Efficient Federated Learning with Adaptive Model Pruning. IEEE Trans. Mob. Comput. 2024, 23, 2003–2021.

44. Fu, J.; Nie, C.; Sun, F.; Li, G.; Shi, H.; Wei, X. Bionic Visual-Audio Photodetectors with in-Sensor Perception and Preprocessing. Sci. Adv. 2024, 10, eadk8199.

| pH | Organic Carbon (g/kg) | Total Nitrogen (mg/kg) | Total Phosphorus (mg/kg) | Total Potassium (mg/kg) |
|---|---|---|---|---|
| 5.13 ± 0.01 | 11.45 ± 0.04 | 97.36 ± 1.84 | 64.37 ± 1.53 | 102.32 ± 1.45 |

| Items | Methods |
|---|---|
| pH of soil | Acidity meter method |
| Organic matter of soil | Potassium dichromate volumetric method (external heating method) |
| Nitrogen and phosphorus of soil and crop | Semi-automatic analyzer after H₂SO₄-H₂O₂ digestion (AMS, Italy; Model: SMARTCHEM 200) |
| Potassium of soil and crop | Flame photometry after H₂SO₄-H₂O₂ digestion |

| Items | Detail |
|---|---|
| Operating System | Linux |
| CPU | Intel Xeon W-2235 |
| GPU | NVIDIA GeForce RTX 3090 |
| Acceleration Environment | CUDA 12.6 |
| Language | Python 3.8.20 |
| Framework | PyTorch 2.4.1 |

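| Metrics | Definition | Formula |
|---|---|---|
| MAE (Mean Absolute Error) | The average absolute difference between predicted values and actual values. | $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert$ |
| MSE (Mean Squared Error) | The average of the squared differences between predicted values and actual values. | $\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2$ |
| R² (Determination coefficient) | The proportion of variance in the target variable explained by the model. | $R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$ |
| Accuracy within tolerance | Accuracy within a specified allowable error. | |

Here $y_i$ is the actual value of sample $i$, $\hat{y}_i$ is the predicted value, $\bar{y}$ is the mean of the actual values, and $n$ is the number of samples.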
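The four metrics in the table can be sketched in a few lines of plain Python. MAE, MSE, and R² follow their standard definitions. For accuracy within tolerance, the code assumes the allowable error is an absolute threshold `tol` in the same units as the target, so a prediction counts as correct when its absolute error does not exceed `tol`. That reading, the function names, and the sample values are assumptions made for illustration; the exact tolerance used in the study is not given here.

```python
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def accuracy_within_tolerance(
    y_true: Sequence[float], y_pred: Sequence[float], tol: float
) -> float:
    """Fraction of predictions whose absolute error is at most `tol`.

    Treating the tolerance as an absolute threshold is an assumption.
    """
    hits = sum(1 for t, p in zip(y_true, y_pred) if abs(t - p) <= tol)
    return hits / len(y_true)


# Hypothetical measured and predicted nutrient values (made up for illustration).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 4.0]
print("MAE:", mae(y_true, y_pred))
print("MSE:", mse(y_true, y_pred))
print("R2:", r2_score(y_true, y_pred))
print("Accuracy (tol=0.25):", accuracy_within_tolerance(y_true, y_pred, tol=0.25))
```

Unlike MAE and MSE, the tolerance-based accuracy is insensitive to how far a miss falls outside the band, so it complements the error metrics and does not replace them.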

| Model | R² | Accuracy | MAE | MSE |
|---|---|---|---|---|
| 2*3 × 3 | 0.8679 † | 0.7059 † | 0.3986 † | 0.2555 † |
| 2*3 × 3 WT | +0.061 | +0.1058 | −0.0245 | −0.0592 |
| 1*5 × 5 | +0.0269 | +0.0941 | −0.0462 | −0.052 |
| 1*5 × 5 WT | +0.0712 | +0.1646 | −0.1400 | −0.1376 |
| WT + 2*3 × 3 | +0.0128 | +0.0235 | −0.0347 | −0.0247 |
| WT + 2*3 × 3 WT | +0.0364 | +0.0941 | −0.0883 | −0.0705 |
| WT + 1*5 × 5 | +0.0535 | +0.0706 | −0.0844 | −0.1034 |
| WT + 1*5 × 5 WT (Ours) | +0.0741 | +0.1765 | −0.1456 | −0.1433 |

| Model | R² | Accuracy | MAE | MSE |
|---|---|---|---|---|
| 2*3 × 3 | 0.8306 † | 0.3529 † | 0.1734 † | 0.0468 † |
| 2*3 × 3 WT | +0.0311 | +0.1059 | −0.0230 | −0.0087 |
| 1*5 × 5 | −0.0432 | −0.0235 | +0.0131 | +0.0119 |
| 1*5 × 5 WT | +0.0132 | +0.1059 | −0.0180 | −0.0037 |
| WT + 2*3 × 3 | −0.0297 | −0.0235 | +0.0079 | +0.0081 |
| WT + 2*3 × 3 WT | +0.0550 | +0.1177 | −0.0443 | −0.0152 |
| WT + 1*5 × 5 | −0.0567 | −0.0941 | +0.0302 | +0.0156 |
| WT + 1*5 × 5 WT (Ours) | +0.0778 | +0.2353 | −0.0573 | −0.0215 |

| Model | R² | Accuracy | MAE | MSE |
|---|---|---|---|---|
| 2*3 × 3 | 0.6287 † | 0.4940 † | 0.5643 † | 0.4964 † |
| 2*3 × 3 WT | +0.1400 | +0.1766 | −0.1251 | −0.1870 |
| 1*5 × 5 | +0.0203 | +0.0119 | −0.0033 | −0.0268 |
| 1*5 × 5 WT | +0.1505 | +0.2001 | −0.1243 | −0.2011 |
| WT + 2*3 × 3 | +0.0338 | +0.0589 | −0.0213 | −0.0450 |
| WT + 2*3 × 3 WT | +0.1864 | +0.1884 | −0.1722 | −0.2558 |
| WT + 1*5 × 5 | −0.0696 | −0.0352 | +0.0687 | +0.0934 |
| WT + 1*5 × 5 WT (Ours) | +0.1948 | +0.2119 | −0.1789 | −0.2603 |
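The three tables above report the first row in absolute terms and the remaining rows as signed values. Assuming that the rows marked † are baseline values and that every other row is a difference from that baseline (an interpretation that the tables themselves do not spell out), the absolute metrics of the "WT + 1*5 × 5 WT (Ours)" configuration can be recovered by addition. The helper name `absolute_metrics` and the labels "table 1" to "table 3" are hypothetical and refer only to the order in which the tables appear.

```python
from typing import Dict

Metrics = Dict[str, float]


def absolute_metrics(baseline: Metrics, delta: Metrics, ndigits: int = 4) -> Metrics:
    """Add signed deltas to baseline values (assumes deltas are differences)."""
    return {name: round(baseline[name] + delta[name], ndigits) for name in baseline}


# Baseline rows (marked with a dagger) and the "Ours" rows, copied from the tables.
tables = {
    "table 1": (
        {"R2": 0.8679, "Accuracy": 0.7059, "MAE": 0.3986, "MSE": 0.2555},
        {"R2": 0.0741, "Accuracy": 0.1765, "MAE": -0.1456, "MSE": -0.1433},
    ),
    "table 2": (
        {"R2": 0.8306, "Accuracy": 0.3529, "MAE": 0.1734, "MSE": 0.0468},
        {"R2": 0.0778, "Accuracy": 0.2353, "MAE": -0.0573, "MSE": -0.0215},
    ),
    "table 3": (
        {"R2": 0.6287, "Accuracy": 0.4940, "MAE": 0.5643, "MSE": 0.4964},
        {"R2": 0.1948, "Accuracy": 0.2119, "MAE": -0.1789, "MSE": -0.2603},
    ),
}

ours = {name: absolute_metrics(base, delta) for name, (base, delta) in tables.items()}
for name, metrics in ours.items():
    print(name, metrics)
```

Under this reading, the proposed configuration reaches R² values of about 0.94, 0.91, and 0.82 across the three tables.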

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, C.; Dou, J.; He, B.; Cai, Y.; Zou, C. WT-ResNet: A Non-Destructive Method for Determining the Nitrogen, Phosphorus, and Potassium Content of Sugarcane Leaves Based on Leaf Image. Agriculture 2025, 15, 1752. https://doi.org/10.3390/agriculture15161752
