Abstract

The yield and quality of rice are severely affected by rice diseases, which can result in crop failure. Early and precise identification of rice plant diseases enables timely action, minimizing potential economic losses. Deep convolutional neural networks (CNNs) have significantly advanced image classification accuracy by leveraging powerful feature extraction capabilities, outperforming traditional machine learning methods. In this work, we propose a dual attention-guided lightweight network for fast and precise recognition of rice diseases with small lesions and high similarity. First, to efficiently extract features while reducing computational redundancy, we adopt FasterNet, which is built on partial convolution (PConv), as the backbone. Furthermore, to enhance the network’s ability to capture fine-grained lesion details, we introduce a dual-attention mechanism that aggregates long-range contextual information in both spatial and channel dimensions. Additionally, we construct a large-scale rice disease dataset, named RD-6, which contains 2196 images across six categories, to support model training and evaluation. Finally, the proposed rice disease recognition method is evaluated on the RD-6 dataset, demonstrating superior performance over other state-of-the-art methods, especially in terms of recognition efficiency. For instance, the method achieves an average accuracy of 99.9%, recall of 99.8%, precision of 100%, specificity of 100%, and F1-score of 99.9%. Additionally, the proposed method has only 3.6 M parameters, demonstrating higher efficiency without sacrificing accuracy.

1. Introduction

Rice is one of the world’s most important crops and a dietary staple for billions of people. It holds significant value in national agricultural systems and food supply strategies. Rice diseases, such as rice blast, sheath blight, and bacterial leaf blight, significantly reduce both the yield and quality of rice, resulting in substantial economic losses. Researchers have noted that these diseases severely impair the growth and development of rice, leading to significant declines in grain production. Therefore, precise and timely recognition of plant disease is essential for disease diagnosis and treatment. Fortunately, the rapid development of mobile devices makes it easy to collect images of crop diseases. In parallel, machine learning- and deep learning-based methods of crop disease recognition have improved greatly.

Computer vision methods were recently introduced into the field of rice disease recognition. To be specific, these methods have been adopted to detect three types of rice plant diseases. Kahar et al. used an artificial neural network (ANN), achieving an accuracy of 74.21% [1]. Similarly, a Support Vector Machine (SVM) has been applied to distinguish between healthy and diseased rice plants, yielding an accuracy of 82% [2]. A back-propagation neural network was trained to recognize soybean disease using extracted grey level co-occurrence matrix texture features, achieving an accuracy of 93.3% [3]. However, the recognition accuracy of these conventional machine-learning-based methods depends on the quality of hand-crafted features. Therefore, researchers have focused on convolutional neural network (CNN)-based methods to automatically learn features that are beneficial for image classification. Many studies show that CNN-based recognition modules can significantly improve the classification accuracy of rice disease. For example, inspired by the successful use of CNNs, Rahman et al. introduced deep-learning-based methods to detect diseases and pests in rice plant images. A comparison of experiments shows that the proposed CNN-based architectures can achieve a satisfactory accuracy of 93.3% with reduced module size [4]. Patel et al. used a gated recurrent network for the automatic diagnosis of rice disease, achieving an accuracy of 99% [5]. To address the problem of uneven dataset samples and similar early-stage symptoms, Ni et al. designed a new classification model, RepVGG-ECA, by fusing the ECA attention mechanism into RepVGG [6]. Their model successfully classifies five types of typical rice plant pests with an accuracy of 97.06%. To enhance the recognition speed of rice disease, Chen et al. merged an attention mechanism into the lightweight network MobileNet-V2, which significantly improved detection efficiency with higher accuracy. Experimental results also demonstrate the validity of the proposed method [7]. More recent works, like [8,9,10], adopted CNN variants for the precise recognition of plant disease. Transformer-based classification methods have become popular because they can extract local features that are beneficial for classification and have gradually been incorporated into plant disease recognition. For example, Li et al. adopted a transformer to identify kiwifruit disease, obtaining a 4.53% improvement in identification accuracy compared to ResNet, ViT, and ResMLP [11]. In addition, to identify strawberry disease, we previously proposed a novel spatial convolutional self-attention transformer module that fully exploits the advantages of CNN and Transformer [12]. A large number of experimental results show that this method is effective. However, these methods still have some limitations that need to be addressed.

- A large-scale multi-class dataset of rice diseases in complex environments is lacking. Recent works only used limited categories and quantities of rice disease images in a laboratory environment. Thus, their results are not applicable to natural scenes.

- Rice lesions tend to be small, which hinders feature extraction. Additionally, down-sampling in CNNs degrades features and discards detail, which severely affects the feature expression of small lesions and leads to poor recognition.

To overcome these limitations, a dual attention-based lightweight network module has been designed to boost the feature expression ability for minute lesions. The backbone model is FasterNet, which is pretrained on ImageNet and merged with a dual-attention module to form a new rice disease recognition network: dual attention-guided lightweight network (DAL-Net). In DAL-Net, the spatial attention module is adopted for extracting the spatial information of features, while the channel attention module is used to model inter-dependencies among channels, resulting in improved feature expression for rice disease. Additionally, a large-scale dataset comprising multiple categories of rice disease images against a complex background has been constructed, which contains 2196 images of six types of rice disease.

The main contributions of this work are listed as follows:

- We built a large-scale multi-class rice disease dataset to address the lack of samples for rice disease recognition. The rice disease images in this dataset were collected in natural environments. The dataset contains six categories and 2196 images, and no data augmentation is applied.

- We propose a novel dual-attention lightweight rice recognition module with spatial and channel attention modules developed to enhance its discriminant ability for rice disease features with high similarity, which significantly improves the recognition accuracy with few parameters.

- We carried out several experiments on our constructed rice disease dataset and compared our method with other methods, showing that the developed DAL-Net can achieve state-of-the-art results with a lower computation burden.

The remainder of this paper is structured as follows: Section 2 provides an overview of prior research relevant to our work; Section 3 details the creation of a large-scale rice disease dataset and elaborates on the key components of the proposed method; and Section 4 showcases the experimental results, including comparisons with state-of-the-art approaches. Section 5 concludes this work, highlighting its limitations and outlining potential directions for future research.

2. Related Work

2.1. Network Engineering

Network design plays a crucial role in computer vision, as a well-architected network ensures optimal performance across various tasks. Numerous CNN-based models have been proposed in recent years, achieving significant success. He et al. introduced ResNet by incorporating a simple identity skip connection to address the challenges of network optimization [13]. Building on ResNet’s success, a range of variants, such as ResNeXt [14], Inception-ResNet [15], and WideResNet [16], have emerged. Specifically, ResNeXt [14] improved image recognition by using grouped convolutions instead of standard convolutions. Meanwhile, many lightweight networks have been developed to improve recognition efficiency, including MobileNets [17,18,19], ShuffleNets [20,21], GhostNet [22], and TinyNet [23]. These models reduce the number of parameters and FLOPs by minimizing redundancy in filters. However, as the network width increases to compensate for accuracy loss, the memory access burden also grows. Therefore, in this study, we adopt FasterNet as a backbone. It reduces redundancy in feature maps and introduces a partial convolution technique to simultaneously decrease both FLOPs and memory access.

2.2. Attention Mechanism

Attention is essential in human visual perception, as it selectively highlights the most important regions, allowing for more efficient capture of visual information [24]. Inspired by the human visual system, attention mechanisms were developed to enhance model performance. Google first introduced the self-attention mechanism, which is extensively used to learn text representations [25]. Hu et al. developed the Squeeze-and-Excitation module, which uses global average-pooled features to compute attention across channels. However, the incorporation of attention modules can increase computational overhead. To address this, Cao et al. proposed GCNet [26], which replaces the traditional spatial down-sampling process with a simple spatial attention mechanism. In our earlier work [27,28], we applied attention mechanisms to feature fusion in FPN, achieving improved performance. In this study, we introduce a dual attention-guided network built on the lightweight network FasterNet and develop both spatial and channel attention modules to extract richer feature representations while maintaining accuracy and efficiency.

3. Materials and Methods

3.1. Materials

Rice disease images were captured in Hunan Province using a NIKON D700 camera produced by Nikon in Tokyo, Japan. The images were captured over an extended period to document the various diseases encountered during rice cultivation. A total of 2196 images representing six different types of rice disease were collected. Table 1 provides dataset details, including the scientific name, image count, and distribution of images into training and testing sets for each disease type. Figure 1 shows some images from each disease category. Upon inspection, it is evident that lesions are small in the early stages of rice disease. Additionally, rice sheath blight closely resembles dry leaves, which can lead to confusion during recognition. The images also feature complex backgrounds, which further complicate the accurate identification of rice diseases.

Table 1.

Description of rice disease dataset.

Figure 1.

Rice disease images.

3.2. Proposed Rice Disease Module

Overall Architecture of DAL-Net

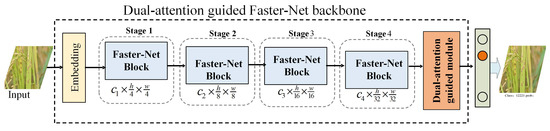

A dual attention-guided lightweight rice disease network was developed to achieve fast and accurate identification of rice diseases. Figure 2 shows the overall architecture of the proposed rice disease recognition module. A rice disease image is input into the lightweight network, FasterNet [29], to extract the feature information of the rice disease. Then, the spatial attention and channel attention modules are introduced to further extract the spatial and channel inter-dependencies of the rice disease features, improving the feature expression of rice disease images captured in natural settings. Finally, a classifier for rice disease is developed using a global average pooling layer, followed by a fully connected (FC) layer. This classifier generates the classification confidence and identifies the disease category for each rice disease image.

Figure 2.

Overall architecture of rice disease module.

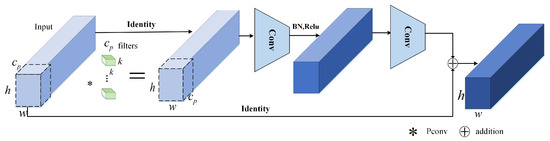
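The classification head described above (global average pooling followed by an FC layer) can be sketched without any deep learning framework. The following is a minimal illustration under stated assumptions, not the implementation used in this work: the function names, the toy feature map, and the weights are hypothetical, and a softmax is assumed for converting FC outputs into classification confidences.

```python
import math

def global_average_pool(feature_map):
    """Average each channel of a C x H x W feature map into a single value."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]

def fully_connected(vector, weights, bias):
    """Compute weights @ vector + bias, giving one logit per class."""
    return [sum(w * v for w, v in zip(row, vector)) + b for row, b in zip(weights, bias)]

def softmax(logits):
    """Numerically stable softmax (assumed here for producing confidences)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(feature_map, weights, bias):
    """Return (predicted class index, confidence) for one feature map."""
    pooled = global_average_pool(feature_map)
    probs = softmax(fully_connected(pooled, weights, bias))
    label = max(range(len(probs)), key=probs.__getitem__)
    return label, probs[label]

# Hypothetical toy input: 2 channels of size 2 x 2 and 3 classes.
features = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [2.0, 2.0]]]
weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
bias = [0.0, 0.0, 0.0]

pooled = global_average_pool(features)
label, confidence = classify(features, weights, bias)
```

In the actual network, the input to this head would be the fused output of the two attention blocks, and the FC layer would have six outputs, one per disease category.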

3.3. Review of FasterNet Module

The overall structure of FasterNet is composed of four hierarchical stages, each built from a stack of FasterNet blocks, as shown in Figure 3. Each stage is preceded by an embedding or merging layer, which is responsible for spatial down-sampling. Figure 3 illustrates the architecture of a FasterNet block [29]. Within each block, there is a PConv layer with a kernel size of $3 \times 3$, followed by two pointwise convolutional (PWConv) layers that use a $1 \times 1$ kernel. Shortcut connections are employed to reuse the input features and preserve detailed information. To prevent overuse of normalization and activation layers, which could impair network performance, they are only applied after each intermediate PWConv [29] to preserve feature diversity and reduce latency. Batch normalization (BN) is chosen as the normalization layer because it can be merged into the adjacent convolutional layer to accelerate inference. For the activation function, either GELU or ReLU is selected, depending on which provides better performance.

Figure 3.

Description of dual-attention-guided module, including position attention block and channel attention block.

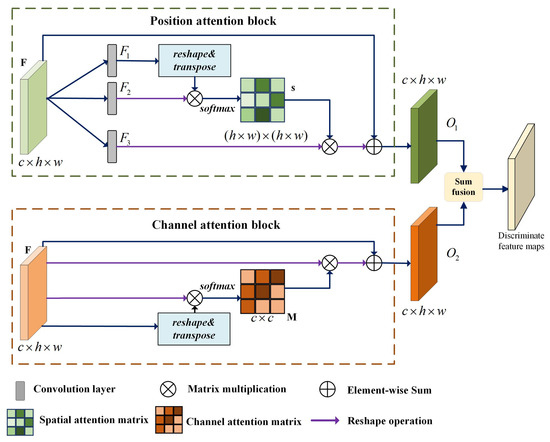
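The saving obtained from partial convolution can be checked with simple arithmetic. The sketch below uses the cost estimates given in the FasterNet paper [29] for a $k \times k$ convolution on an $h \times w$ feature map: a regular convolution over $c$ channels costs $h \cdot w \cdot k^2 \cdot c^2$ multiply-accumulates, whereas PConv convolves only $c_p$ of the channels. The function names, the example layer size, and the partial ratio of 1/4 are illustrative assumptions rather than values reported in this work.

```python
def conv_flops(h, w, k, channels):
    """Multiply-accumulate count of a k x k convolution with equal input/output channels."""
    return h * w * k * k * channels * channels

def conv_memory_access(h, w, k, channels):
    """Approximate element accesses: input and output maps plus the kernel weights."""
    return h * w * 2 * channels + k * k * channels * channels

def pconv_cost(h, w, k, channels, partial_ratio=0.25):
    """Cost of a partial convolution that touches only a fraction of the channels."""
    c_p = int(channels * partial_ratio)  # channels actually convolved
    return conv_flops(h, w, k, c_p), conv_memory_access(h, w, k, c_p)

# Hypothetical layer: 56 x 56 feature map, 64 channels, 3 x 3 kernel.
h, w, k, c = 56, 56, 3, 64
full_flops = conv_flops(h, w, k, c)
full_mem = conv_memory_access(h, w, k, c)
p_flops, p_mem = pconv_cost(h, w, k, c)

flops_ratio = p_flops / full_flops  # (c_p / c) ** 2
mem_ratio = p_mem / full_mem        # roughly c_p / c
print(f"FLOPs ratio: {flops_ratio:.4f}, memory access ratio: {mem_ratio:.4f}")
```

With a partial ratio of 1/4, the FLOPs fall to 1/16 of a regular convolution and the memory access to roughly 1/4, which is why both quantities decrease together.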

3.4. Position Attention Block

Long-range contextual information is crucial for the identification of rice diseases with small lesions and high similarity. Therefore, we introduce a position attention block to capture complex contextual relationships in the local features of rice disease. It incorporates a wider range of contextual information into the local features, enhancing their feature representation ability for images of rice disease. Figure 4 shows the details of adaptively aggregated spatial contexts. The extracted local feature of rice disease, $F \in \mathbb{R}^{C \times H \times W}$, generated from FasterNet is first processed through convolutional layers to produce three feature maps, $Q$, $K$, and $V$. $Q$ and $K$ are then reshaped to $\mathbb{R}^{C \times N}$, with $N = H \times W$ representing the total number of pixels of the rice disease feature maps. Then, matrix multiplication between the transpose of $K$ and $Q$ is performed. Finally, a softmax function is used to generate the spatial attention map $s \in \mathbb{R}^{N \times N}$:

$$s_{ji} = \frac{\exp(Q_i \cdot K_j)}{\sum_{i=1}^{N} \exp(Q_i \cdot K_j)} \tag{1}$$

where $s_{ji}$ denotes the impact of the $i$-th position on the $j$-th position. The strength of the correlation between the two positions increases with the similarity of their feature representations. Simultaneously, the feature map $V$ is reshaped into $\mathbb{R}^{C \times N}$. Subsequently, a matrix multiplication is applied between $V$ and the transpose of the spatial attention map $s$, and the resulting matrix is reshaped back to $\mathbb{R}^{C \times H \times W}$. Then, a scale factor $\alpha$ is applied to the resulting feature. Finally, the element-wise sum is computed with the original features $F$ to yield the final output $E^{P}$. The process is as follows:

$$E^{P}_j = \alpha \sum_{i=1}^{N} \left( s_{ji} V_i \right) + F_j \tag{2}$$

Figure 4.

Description of dual-attention guided module.

The scale parameter $\alpha$ is initialized to 0 and progressively learns to increase its weight. In Equation (2), the output feature at any given position is computed as a weighted combination of features from all positions, along with the original features. Consequently, the developed position attention block is capable of capturing a comprehensive global context and selectively integrating information using the spatial attention map. This process facilitates the mutual reinforcement of semantically similar features of rice diseases, enhancing intra-class cohesion and semantic uniformity for rice disease identification. This contributes to higher accuracy in recognizing rice diseases with high similarity.
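The position attention computation described in this subsection can be sketched in plain Python. This is a minimal, dependency-free illustration under stated assumptions, not the implementation used in this work: the names `w_q`, `w_k`, `w_v`, and `alpha` are hypothetical, the feature map is assumed to be already flattened to a $C \times N$ matrix, and each convolution is modelled as a $1 \times 1$ convolution, i.e., a matrix acting on the channel dimension.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def position_attention(feat, w_q, w_k, w_v, alpha):
    """feat is a C x N matrix (channels x flattened pixels).

    Returns the output features (C x N) and the N x N spatial attention map,
    where attn[j][i] is the influence of position i on position j.
    """
    q, k, v = matmul(w_q, feat), matmul(w_k, feat), matmul(w_v, feat)
    q_cols, k_cols = list(zip(*q)), list(zip(*k))  # one feature vector per position
    n = len(feat[0])
    attn = [
        softmax([sum(a * b for a, b in zip(q_cols[i], k_cols[j])) for i in range(n)])
        for j in range(n)
    ]
    # Weighted sum of value features from all positions, scaled, plus the input.
    out = [
        [alpha * sum(attn[j][i] * v[c][i] for i in range(n)) + feat[c][j] for j in range(n)]
        for c in range(len(feat))
    ]
    return out, attn

# Hypothetical toy input: 2 channels, 3 pixels, identity projections.
feat = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out_init, attn = position_attention(feat, identity, identity, identity, alpha=0.0)
out_trained, _ = position_attention(feat, identity, identity, identity, alpha=0.5)
```

With the scale parameter at its initial value of 0, the block returns the input unchanged, so training starts from the plain backbone features and the attention contribution grows only as the parameter is learned.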

3.5. Channel Attention Block

Feature maps from higher levels can be interpreted as responses specific to particular classes of rice disease, with various semantic responses being interconnected. By leveraging the relationships among these channel maps, we can enhance the feature maps that exhibit inter-dependencies, thereby refining the representation of specific semantic features related to rice diseases. Thus, a channel attention block is introduced to capture and model dependencies between different channels of feature maps of rice disease images. Figure 4 depicts the architecture of the channel attention block. Unlike the position attention block, the channel attention map of the channel attention block is computed directly from the input features $F \in \mathbb{R}^{C \times H \times W}$. To be specific, the feature map $F$ is first reshaped into $\mathbb{R}^{C \times N}$, where $N = H \times W$, followed by a matrix multiplication between $F$ and its transpose. A softmax function is then applied to generate the final channel attention map $M \in \mathbb{R}^{C \times C}$:

$$m_{ji} = \frac{\exp(F_i \cdot F_j)}{\sum_{i=1}^{C} \exp(F_i \cdot F_j)} \tag{3}$$

Here, $m_{ji}$ quantifies the influence of the $i$-th channel on the $j$-th channel. Furthermore, a matrix multiplication is carried out between the transpose of $M$ and $F$, and the resulting matrix is reshaped into $\mathbb{R}^{C \times H \times W}$. This reshaped output is then scaled by a parameter $\beta$ and combined with $F$ through an element-wise summation to produce the final output $E^{C}$:

$$E^{C}_j = \beta \sum_{i=1}^{C} \left( m_{ji} F_i \right) + F_j \tag{4}$$

Here, the parameter $\beta$ starts at 0 and progressively learns an adaptive weight. As demonstrated in Equation (4), the output feature of rice disease for each channel is computed as a weighted combination of features across all channels and the initial features. This approach effectively captures long-range semantic relationships among feature maps, thereby enhancing the discriminative power for rice disease features with small lesions.

To effectively leverage long-range contextual information on rice diseases, we combine the feature maps produced from the dual-attention-mechanism-based blocks. To achieve this, convolution is first performed on the two output feature maps from the dual-attention-based blocks, and element-wise summation is performed to accomplish rice disease feature fusion.
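The channel attention block and the final fusion step can be sketched in the same dependency-free style. This is an illustration under stated assumptions rather than the implementation used in this work: the names `channel_attention`, `fuse`, and `beta` are hypothetical, the feature map is assumed to be flattened to $C \times N$, and the convolutions applied before the element-wise summation are omitted (treated as identity) to keep the example short.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def channel_attention(feat, beta):
    """feat is a C x N matrix. Returns (output C x N, attention map C x C).

    attn[j][i] is the influence of channel i on channel j, computed from the
    inner products between channel maps.
    """
    c, n = len(feat), len(feat[0])
    attn = [
        softmax([sum(a * b for a, b in zip(feat[i], feat[j])) for i in range(c)])
        for j in range(c)
    ]
    out = [
        [beta * sum(attn[j][i] * feat[i][p] for i in range(c)) + feat[j][p] for p in range(n)]
        for j in range(c)
    ]
    return out, attn

def fuse(position_out, channel_out):
    """Element-wise sum of the two attention outputs (pre-fusion convolutions omitted)."""
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(position_out, channel_out)]

# Hypothetical toy input: 2 channels, 3 pixels.
feat = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
out_init, attn = channel_attention(feat, beta=0.0)
out_trained, _ = channel_attention(feat, beta=1.0)
fused = fuse(feat, out_trained)  # feat stands in for the position attention output
```

Note that the attention map here is $C \times C$ rather than $N \times N$, so its cost depends on the number of channels and not on the spatial resolution.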

3.6. Evaluation Criteria

To evaluate the effectiveness of the proposed rice disease recognition approach, a variety of metrics, like accuracy, precision, recall, and specificity, are computed as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{6}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{7}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{8}$$

Taking rice panicle blast as an example, True Positive ($TP$) refers to the count of samples that are correctly predicted as rice panicle blast and are indeed rice panicle blast. False Positive ($FP$) represents the number of instances that are predicted to be rice panicle blast but are actually backgrounds. True Negative ($TN$) denotes the count of samples that are correctly identified as backgrounds. Lastly, False Negative ($FN$) indicates the number of samples that are predicted to be backgrounds but which are actually rice panicle blast.

Since precision and recall are interdependent, the F1-score is introduced as an additional metric to assess the model’s performance more comprehensively. This metric is the harmonic mean of precision and recall, providing a balanced evaluation, and is calculated as follows:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{9}$$
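The metrics above can be computed per class from a multi-class confusion matrix by treating one category as positive and all others as negative. The following is a small sketch of that bookkeeping; the function names and the 3-class confusion matrix are made up for illustration and are not results from this work.

```python
def one_vs_rest_counts(confusion, cls):
    """confusion[t][p] = number of samples of true class t predicted as class p."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[cls][cls]
    fn = sum(confusion[cls]) - tp                # true cls, predicted as something else
    fp = sum(row[cls] for row in confusion) - tp  # predicted cls, actually something else
    tn = total - tp - fn - fp
    return tp, fp, tn, fn

def safe_div(num, den):
    """Return 0.0 instead of raising when a class has no relevant samples."""
    return num / den if den else 0.0

def metrics(tp, fp, tn, fn):
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": safe_div(tn, tn + fp),
        "f1": safe_div(2 * precision * recall, precision + recall),
    }

# Hypothetical 3-class confusion matrix (rows: true class, columns: prediction).
confusion = [
    [8, 1, 1],
    [0, 9, 1],
    [2, 0, 8],
]
counts = one_vs_rest_counts(confusion, cls=0)
result = metrics(*counts)
```

Averaging each metric over all categories gives the kind of per-method summary reported for a multi-class dataset.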

4. Experimental Results and Analysis

4.1. Experimental Settings

Platform configuration: The proposed approach and other similar methods were executed on a system running Ubuntu 18.04, Python 3.8, and PyTorch 1.10.0. The hardware setup consisted of an i9-10900X CPU operating at 3.74 GHz, 2 TB of RAM, and an NVIDIA GeForce RTX 3060 GPU with 12 GB of memory.

Parameter configuration: The learning rate of the rice disease network was configured at 0.002, while the batch size was set to 64. All methods mentioned in this paper were trained for 100 epochs.

Compared modules: To verify the performance of the proposed rice disease recognition module, it is compared with recent state-of-the-art classification methods, including CNN-based models (RegNet [30], DenseNet [31], MobileNet [17], and ShuffleNet [21]) and Transformer-based modules (MobileViT [32], Swin Transformer [33], and SCSA-Transformer [12]).

4.2. Experimental Results on Rice Disease Dataset

Extensive comparison experiments were conducted on the rice disease dataset, RD-6, as shown in Table 2. The proposed DAL-Net achieves an accuracy of 99.9% and a recall of 99.8%. In addition, its precision, specificity, and F1-score reach 100%, 100%, and 99.9%, respectively. Furthermore, the number of parameters is only 3.6 M. On the one hand, the proposed DAL-Net has higher accuracy than the Transformer-based modules. This improvement can likely be attributed to the introduction of spatial and channel attention blocks, which significantly boost its feature representation ability for images of rice disease. On the other hand, the computational expense of the developed rice recognition module is notably reduced compared to other advanced CNN-based classification techniques. This reduction in cost may be due to the more efficient architecture of the FasterNet backbone. The proposed DAL-Net demonstrates superior recognition accuracy while requiring fewer computational resources, making it a good choice for identifying rice disease from images taken in natural environments.

Table 2.

Performance of different methods for rice disease recognition.

We conducted further validation to assess the performance of the proposed DAL-Net across different types of rice diseases, as demonstrated in Table 3, which shows that the proposed approach is capable of accurately identifying all six categories of rice disease. For instance, the average performance metrics, accuracy, precision, recall, specificity, and F1-score, reach 99.9%, 99.93%, 99.97%, 99.95%, and 99.95%, respectively. The above experimental results show that our DAL-Net method is suitable for rice disease identification.

Table 3.

Performance of proposed DAL-Net for each type of rice disease.

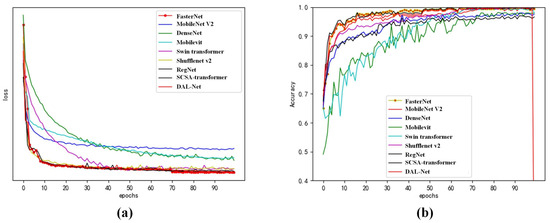

We also present the loss curves of the proposed DAL-Net and several state-of-the-art recognition methods, including RegNet, DenseNet, MobileNet, ShuffleNet, MobileViT, Swin Transformer, FasterNet, and SCSA-Transformer, as illustrated in Figure 5a. The results reveal that the proposed approach exhibits the fastest convergence speed among all methods considered, achieving the lowest loss value in the shortest training time. When combined with the accuracy plot in Figure 5b, it becomes evident that DAL-Net surpasses other classifiers in accuracy and attains optimal performance with minimal training time. These findings highlight the superior capability of our approach in recognizing rice disease images compared to existing methods.

Figure 5.

Loss (a) and accuracy curves (b) of proposed DAL-Net and other state-of-the-art methods.

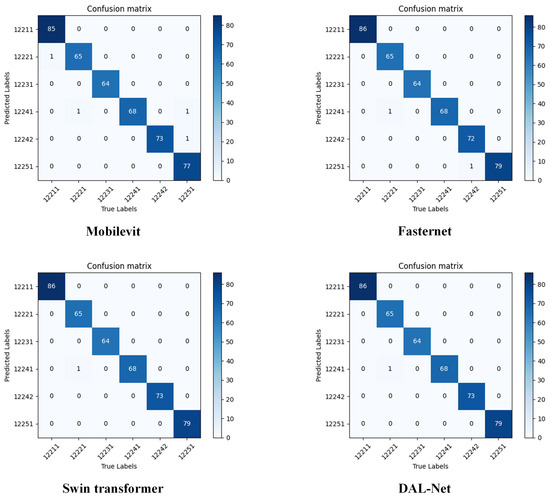

To comprehensively reveal the effectiveness of the proposed rice disease recognition module, confusion matrices were generated for the proposed method and the top three performing models, MobileViT, FasterNet, and Swin Transformer, as illustrated in Figure 6. Following the principles of confusion matrix construction, the diagonal elements represent the count of correctly predicted samples. Consequently, higher values in these elements indicate superior performance. It is worth noting that the Swin Transformer achieves results similar to the proposed method. However, DAL-Net significantly reduces the number of parameters, which is a critical and noteworthy advantage.

Figure 6.

Confusion matrix of four best performing models.

4.3. Ablation Study

We ablated various components of the position and channel attention mechanisms on the rice disease dataset, as shown in Table 4. Here, we used FasterNet as the baseline model but without dual attention. Table 4 demonstrates the effectiveness of the proposed position attention block and channel attention block. It shows that the introduction of the position attention block and channel attention block resulted in 0.7% and 0.4% improvement, respectively. This means that the introduction of position and channel attention blocks enhances the feature extraction ability of the proposed module.

Table 4.

Ablation studies on DAL-Net on rice disease dataset. FasterNet is taken as baseline.

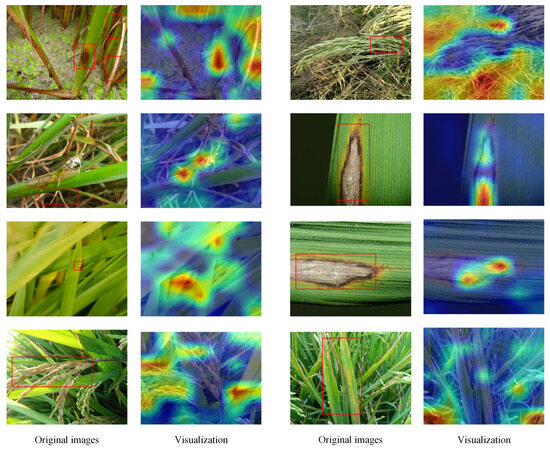

4.4. Visualization Analysis

Gradient-weighted Class Activation Mapping (Grad-CAM) was employed to visualize the process of rice disease recognition, as illustrated in Figure 7. In the heat maps of rice disease images, regions with a darker red hue indicate higher model attention, while blue areas signify regions that the model disregards. This demonstrates that the dual-attention-guided lightweight network, which incorporates both spatial and channel attention, enhances the rice recognition module’s ability to concentrate on disease-affected areas. This improves accuracy in identifying rice diseases in images with complex backgrounds.

Figure 7.

Visualized results of proposed DAL-Net on testing set of rice disease dataset. Red bounding boxes denote the lesion areas.
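For readers unfamiliar with Gradient-weighted Class Activation Mapping, the core computation can be sketched as follows: each channel of a convolutional feature map is weighted by the spatial average of the class-score gradient with respect to that channel, the weighted channels are summed, and negative values are clipped. The sketch assumes that the activations and gradients have already been obtained from a network (here they are made-up toy values), and the final normalization to $[0, 1]$ is a common visualization convention assumed for illustration.

```python
def grad_cam(activations, gradients):
    """activations, gradients: K x H x W nested lists for one image and one class.

    Returns an H x W heat map scaled to [0, 1].
    """
    # Channel weights: global average of the gradients over the spatial dimensions.
    weights = [sum(sum(row) for row in g) / (len(g) * len(g[0])) for g in gradients]
    h, w = len(activations[0]), len(activations[0][0])
    # Weighted sum of the channels followed by ReLU.
    cam = [
        [max(0.0, sum(wk * a[y][x] for wk, a in zip(weights, activations))) for x in range(w)]
        for y in range(h)
    ]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam

# Hypothetical toy values: 2 channels of size 2 x 2.
activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 opposes the class
]
heat_map = grad_cam(activations, gradients)
```

The heat map is then upsampled to the input resolution and overlaid on the image, so high values correspond to the red regions in the visualization.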

To further validate the effectiveness of the proposed rice disease recognition approach, a subset of recognition results from the testing dataset was randomly chosen. Figure 8 illustrates that the proposed DAL-Net method successfully makes accurate predictions with high classification confidence, highlighting the efficacy of the dual-attention-guided framework for rice disease identification. Even though the proposed method performs well in recognizing rice diseases, it is not suitable for assessing disease severity, as it does not provide lesion area measurements.

Figure 8.

Recognition results of proposed DAL-Net on randomly chosen images from the testing set.

5. Conclusions and Future Work

Rice is one of the most important staple crops worldwide, with significant economic value. Rice diseases severely impact the yield and quality of rice products, resulting in substantial economic losses. Accurate and timely recognition of rice diseases enables early intervention and helps limit their spread. However, due to a lack of large-scale datasets, recognition accuracy is low for rice diseases with small lesions and high similarity. To overcome these problems, we first constructed a large-scale rice disease dataset, called the RD-6 dataset, which contains 2196 images featuring six categories of rice diseases against complex backgrounds. Furthermore, a dual-attention-guided lightweight network was introduced to accurately and quickly recognize the category of rice disease. To be specific, FasterNet was introduced to effectively extract rice disease features using partial convolution, which dramatically reduces the number of parameters. In addition, a module based on dual attention was designed to capture long-range contextual information across both spatial and channel dimensions. This is conducive to the recognition of rice diseases with small lesions and high similarity. To evaluate the performance of the proposed module, comprehensive experiments were carried out on the RD-6 dataset, demonstrating that the proposed method of rice disease recognition outperforms other state-of-the-art approaches, particularly in recognition efficiency. For example, it achieves average accuracy, recall, precision, specificity, and F1-score of 99.9%, 99.8%, 100%, 100%, and 99.9%, respectively. Additionally, our proposed method only requires 3.6 M parameters. The dual-attention-guided rice recognition module offers a novel approach for crop disease identification in real-world scenarios. In future work, we will further deploy the model on mobile devices to facilitate its use by rice growers in the field.

Author Contributions

Conceptualization, C.K. and L.J.; methodology, C.K. and L.J.; software, C.K., L.J. and K.L.; validation, C.K., L.J. and K.L.; formal analysis, K.L. and Z.L.; investigation, L.J. and K.L.; resources, R.W.; data curation, C.K. and R.W.; writing—original draft preparation, C.K.; writing—review and editing, C.K., L.J., K.L., Z.L. and R.W.; visualization, Z.L.; supervision, L.J.; project administration, Z.L. and R.W.; funding acquisition, L.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the 2024 Central Guiding Local Science and Technology Development Special Plan Funding and Projects (No. 202407a12020010), Hefei unveiling and leading project (No. 2024SGJ039), Natural Science Foundation of Anhui Higher Education Institutions of China (No. KJ2021A0025), the Open Research Fund of the National Engineering Research Center for Agro-Ecological Big Data Analysis Application, Anhui University (No. AE202213), and the Natural Science Foundation of Anhui Province (No. 2208085MC57).

Data Availability Statement

Due to a confidentiality agreement, this dataset cannot be fully disclosed at present. However, if there is a research need, the interested researcher can contact the authors via email to obtain it.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kahar, M.A.; Mutalib, S.; Abdul-Rahman, S. Early Detection and Classification of Paddy Diseases with Neural Networks and Fuzzy Logic. In Proceedings of the 17th International Conference on Mathematical and Computational Methods in Science and Engineering, Kuala Lumpur, Malaysia, 23–25 April 2015.

- Singh, A.K.; Rubiya, A.; Raja, B. Classification of rice disease using digital image processing and svm classifier. Int. J. Electr. Electron. Eng. 2015, 7, 294–299.

- Gharge, S.; Singh, P. Image processing for soybean disease classification and severity estimation. In Proceedings of the Emerging Research in Computing, Information, Communication and Applications: ERCICA 2015, Bengaluru, India, 31 July–1 August 2015; Springer: Berlin/Heidelberg, Germany, 2016; Volume 2, pp. 493–500.

- Rahman, C.R.; Arko, P.S.; Ali, M.E.; Khan, M.A.I.; Apon, S.H.; Nowrin, F.; Wasif, A. Identification and recognition of rice diseases and pests using convolutional neural networks. Biosyst. Eng. 2020, 194, 112–120. [Google Scholar] [CrossRef]

- Patel, B.; Sharaff, A. Automatic Rice Plant’s disease diagnosis using gated recurrent network. Multimed. Tools Appl. 2023, 82, 28997–29016. [Google Scholar] [CrossRef]

- Ni, H.; Shi, Z.; Karungaru, S.; Lv, S.; Li, X.; Wang, X.; Zhang, J. Classification of Typical Pests and Diseases of Rice Based on the ECA Attention Mechanism. Agriculture 2023, 13, 1066. [Google Scholar] [CrossRef]

- Chen, J.; Zhang, D.; Zeb, A.; Nanehkaran, Y.A. Identification of rice plant diseases using lightweight attention networks. Expert Syst. Appl. 2021, 169, 114514. [Google Scholar] [CrossRef]

- Dogra, R.; Rani, S.; Singh, A.; Albahar, M.A.; Barrera, A.E.; Alkhayyat, A. Deep learning model for detection of brown spot rice leaf disease with smart agriculture. Comput. Electr. Eng. 2023, 109, 108659. [Google Scholar] [CrossRef]

- Jiang, M.; Feng, C.; Fang, X.; Huang, Q.; Zhang, C.; Shi, X. Rice Disease Identification Method Based on Attention Mechanism and Deep Dense Network. Electronics 2023, 12, 508. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, H.; Peng, Z. Rice diseases detection and classification using attention based neural network and bayesian optimization. Expert Syst. Appl. 2021, 178, 114770. [Google Scholar] [CrossRef]

- Li, X.; Chen, X.; Yang, J.; Li, S. Transformer helps identify kiwifruit diseases in complex natural environments. Comput. Electron. Agric. 2022, 200, 107258. [Google Scholar] [CrossRef]

- Li, G.; Jiao, L.; Chen, P.; Liu, K.; Wang, R.; Dong, S.; Kang, C. Spatial convolutional self-attention-based transformer module for strawberry disease identification under complex background. Comput. Electron. Agric. 2023, 212, 108121. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500. [Google Scholar]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Zagoruyko, S.; Komodakis, N. Wide residual networks. arXiv 2016, arXiv:1605.07146.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Han, K.; Wang, Y.; Zhang, Q.; Zhang, W.; Xu, C.; Zhang, T. Model rubik’s cube: Twisting resolution, depth and width for tinynets. Adv. Neural Inf. Process. Syst. 2020, 33, 19353–19364.

- Larochelle, H.; Hinton, G.E. Learning to combine foveal glimpses with a third-order Boltzmann machine. Adv. Neural Inf. Process. Syst. 2010, 23, 1243–1251.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. Gcnet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

- Wang, R.; Jiao, L.; Xie, C.; Chen, P.; Du, J.; Li, R. S-RPN: Sampling-balanced region proposal network for small crop pest detection. Comput. Electron. Agric. 2021, 187, 106290.

- Jiao, L.; Kang, C.; Dong, S.; Chen, P.; Li, G.; Wang, R. An attention-based feature pyramid network for single-stage small object detection. Multimed. Tools Appl. 2023, 82, 18529–18544.

- Chen, J.; Kao, S.H.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.; Dollár, P. Designing network design spaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10428–10436.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Mehta, S.; Rastegari, M. Mobilevit: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).