AgriFusionNet: A Lightweight Deep Learning Model for Multisource Plant Disease Diagnosis

Abstract

1. Introduction

- A lightweight deep learning model (AgriFusionNet) was developed that integrates RGB and multispectral drone imagery with IoT-based environmental sensor data to enhance plant disease detection beyond the visible spectrum.

- The model builds on EfficientNetV2-B4, using Fused-MBConv blocks and Swish activations, and achieves high accuracy (94.3%) while keeping inference time and computational overhead low, making it suitable for deployment on edge devices.

- A custom, balanced multimodal dataset was collected across diverse farm zones in Saudi Arabia over six months to support real-world applicability and geographic generalization.

- Extensive ablation studies and comparative evaluations against DL and ML baselines demonstrate the superiority and interpretability of AgriFusionNet across multiple disease classes and data modalities.
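Swish, mentioned above, is defined as swish(x) = x · σ(βx), where σ is the logistic sigmoid; EfficientNet-family networks use β = 1 (the form also known as SiLU). Unlike ReLU, it is smooth and passes small negative values instead of zeroing them. The following is a minimal framework-free sketch for illustration only, not the author's implementation:

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish activation x * sigmoid(beta * x); beta = 1 gives SiLU."""
    return x * sigmoid(beta * x)


def relu(x: float) -> float:
    """Rectified linear unit, for comparison."""
    return max(0.0, x)


# Compare the two activations on a few inputs.
for v in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={v:+.1f}  relu={relu(v):.4f}  swish={swish(v):+.4f}")
```

In PyTorch the same activation is available as `torch.nn.SiLU`.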

2. Related Work

3. Materials and Methods

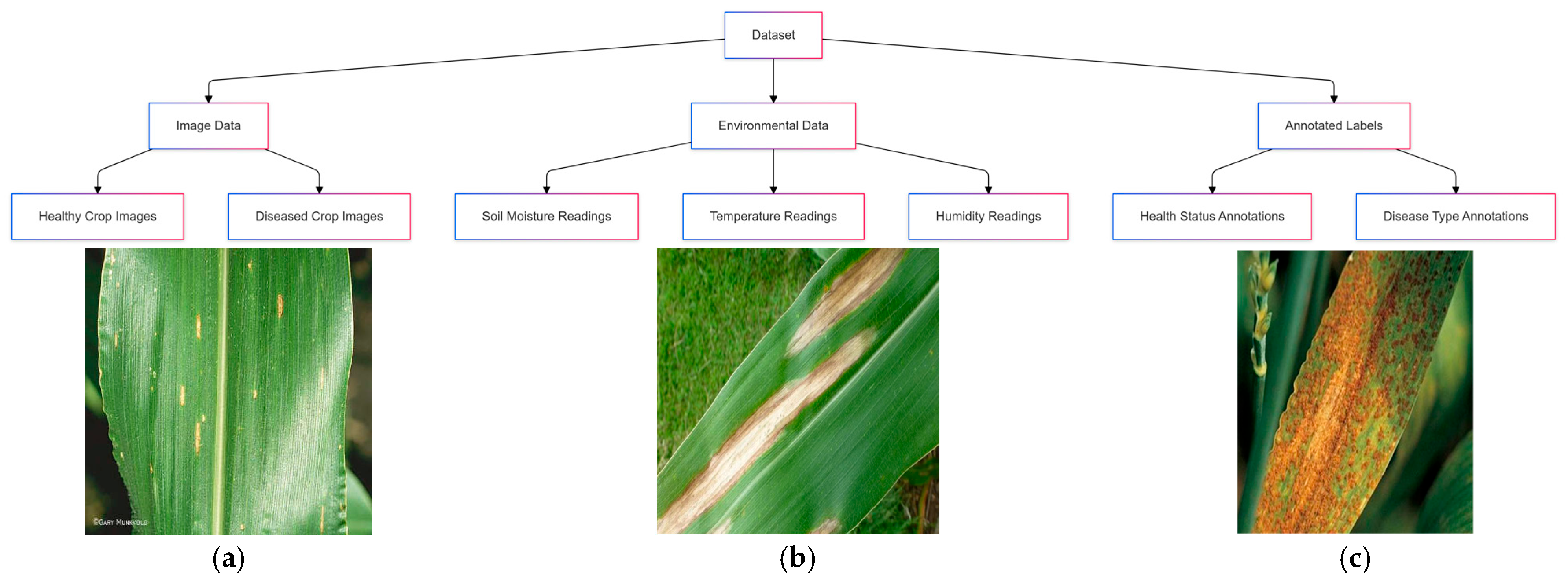

3.1. Multimodal Data Acquisition (Datasets)

3.2. Sample Environmental Sensor Data

3.3. Data Preprocessing and Augmentation

3.4. Model Architecture

3.5. Training Configuration

4. Results

4.1. Ablation Analysis and Feature Contribution

4.2. Comparative Analysis and Evaluation Metrics

4.3. Multimodal Integration Benefits

4.4. Failure Mode and Error Analysis

Deployment Feasibility

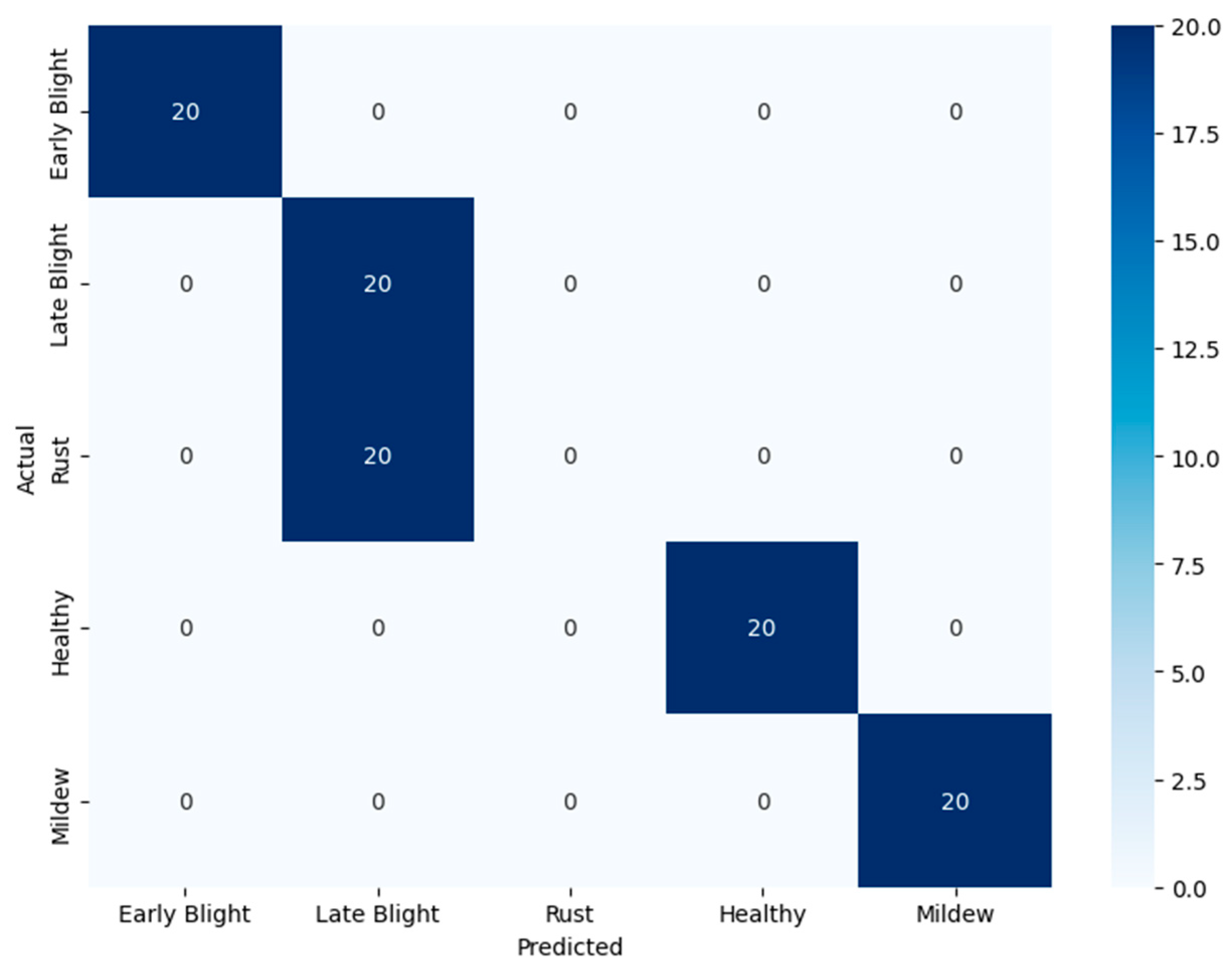

4.5. Evaluation of the Proposed Method

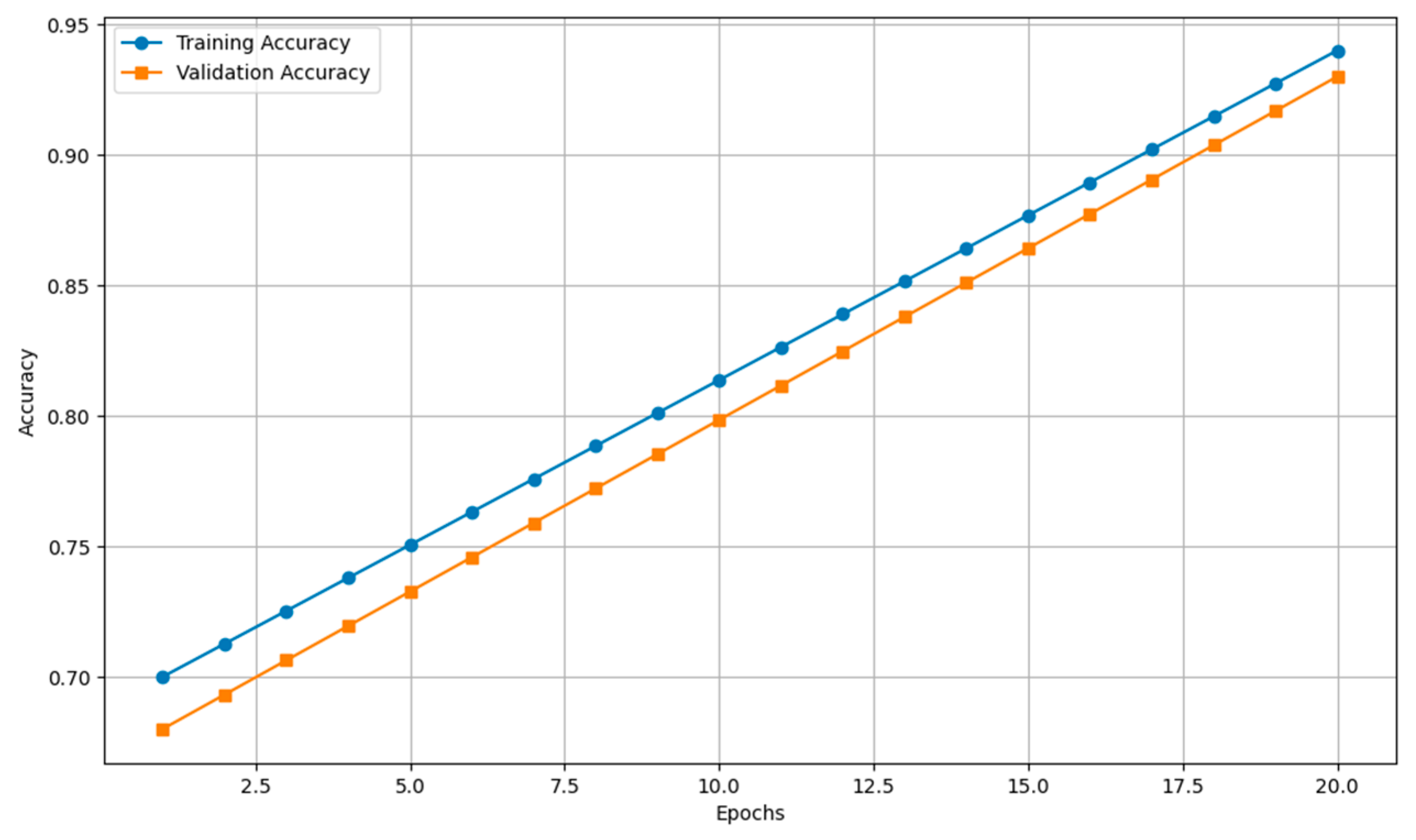

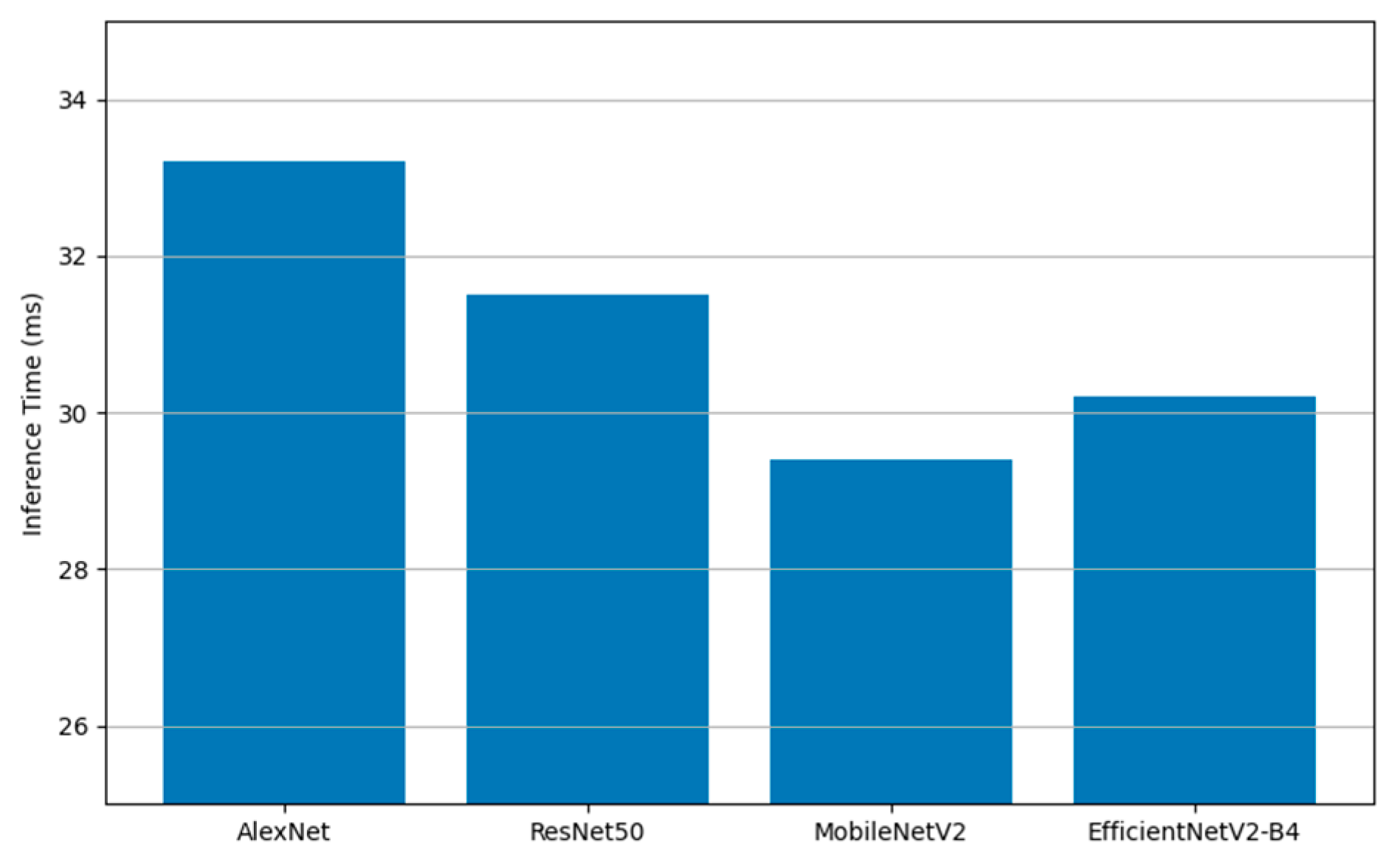

4.6. Baseline Evaluation of EfficientNetV2-B4 on RGB-Only Dataset

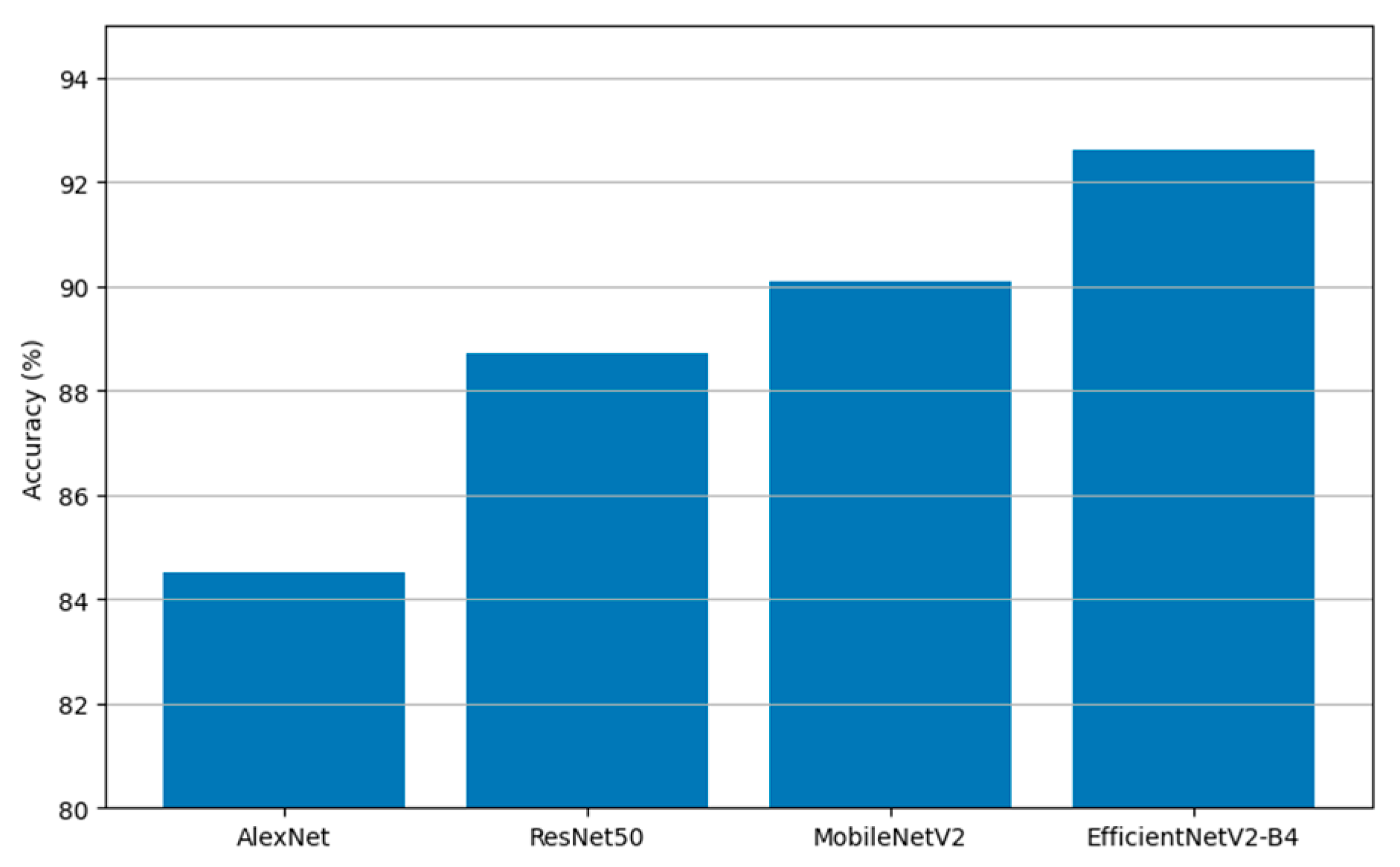

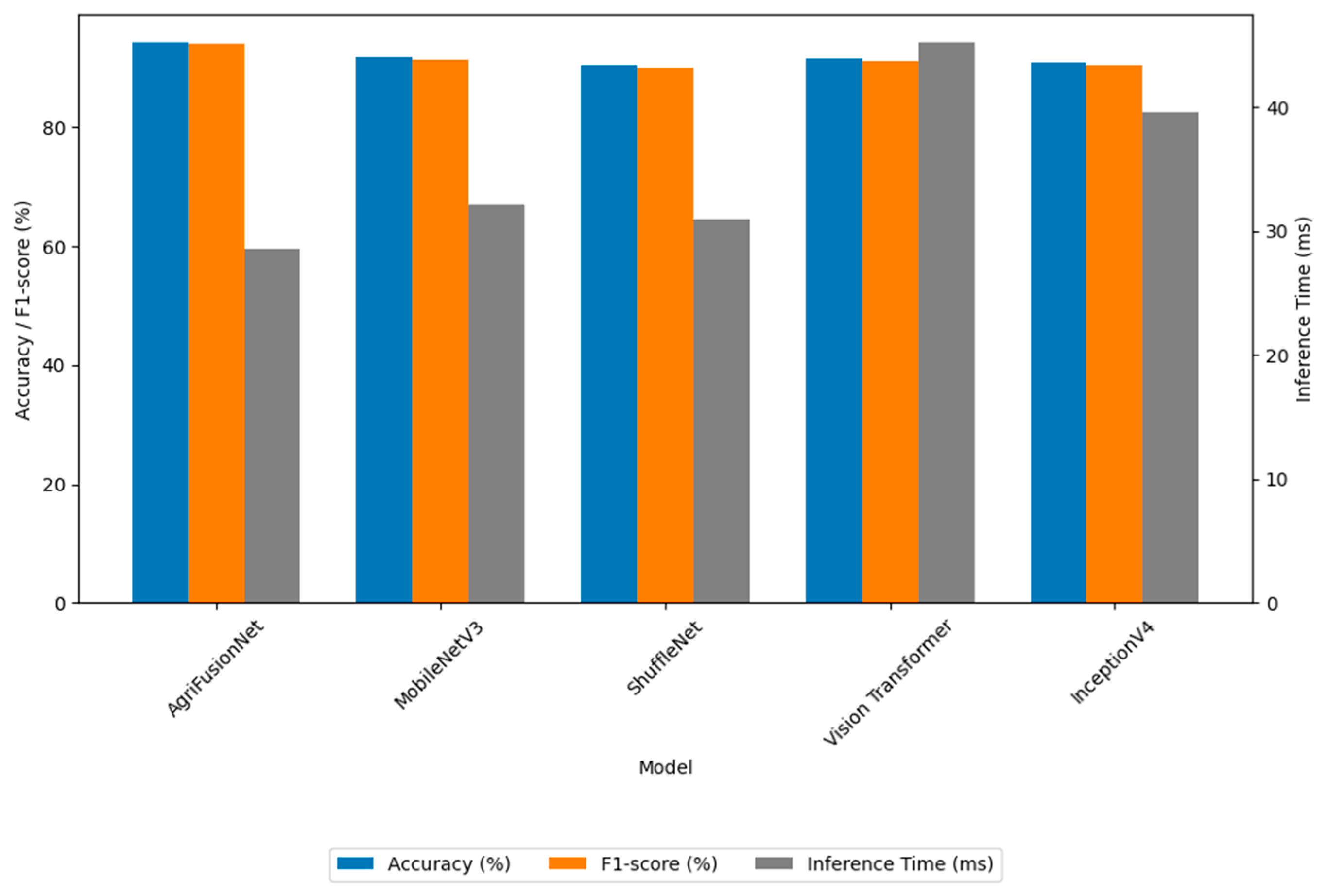

4.7. Comparison with Deep Learning-Based Methods

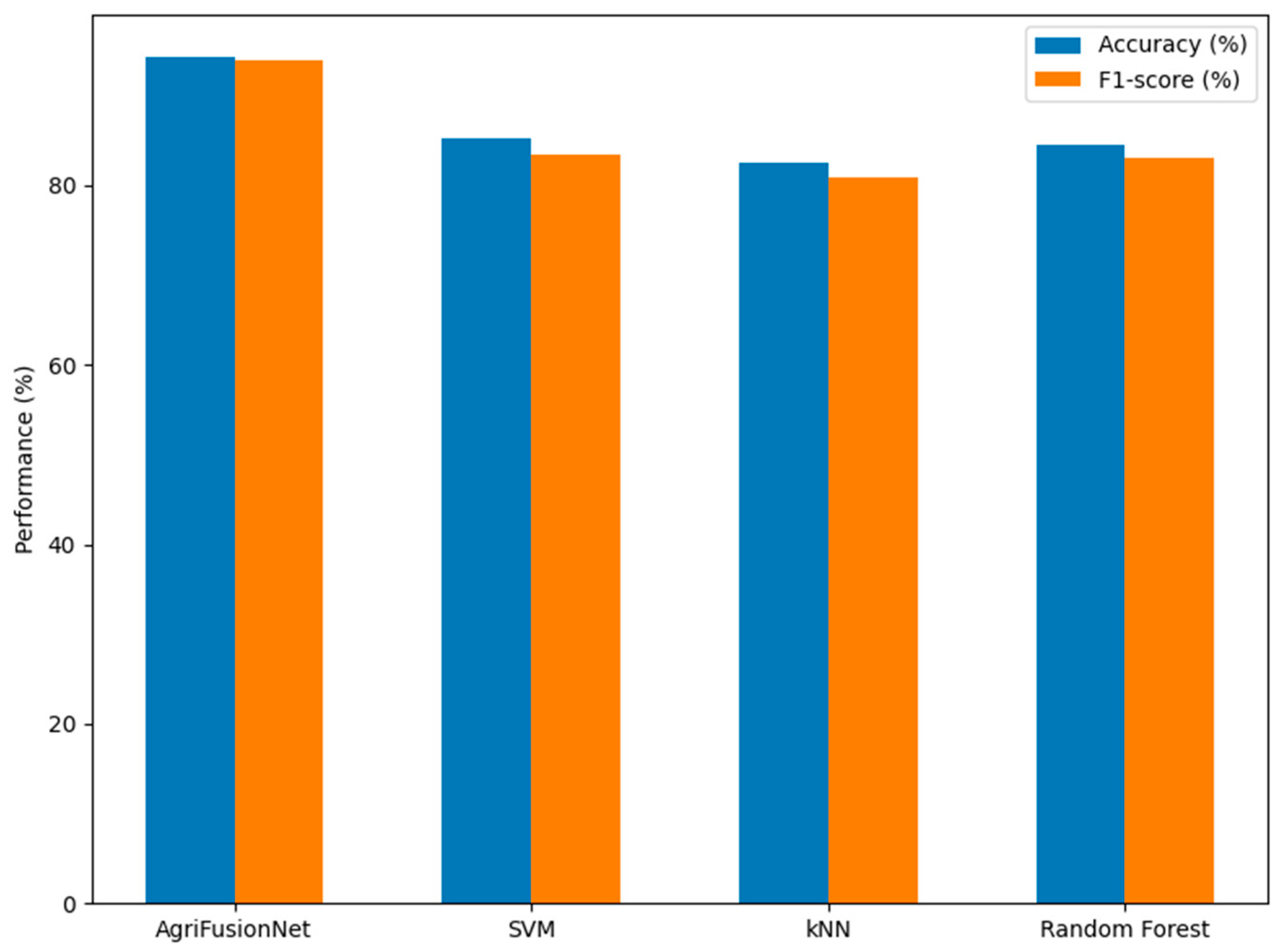

4.8. Comparison with Machine Learning-Based Methods

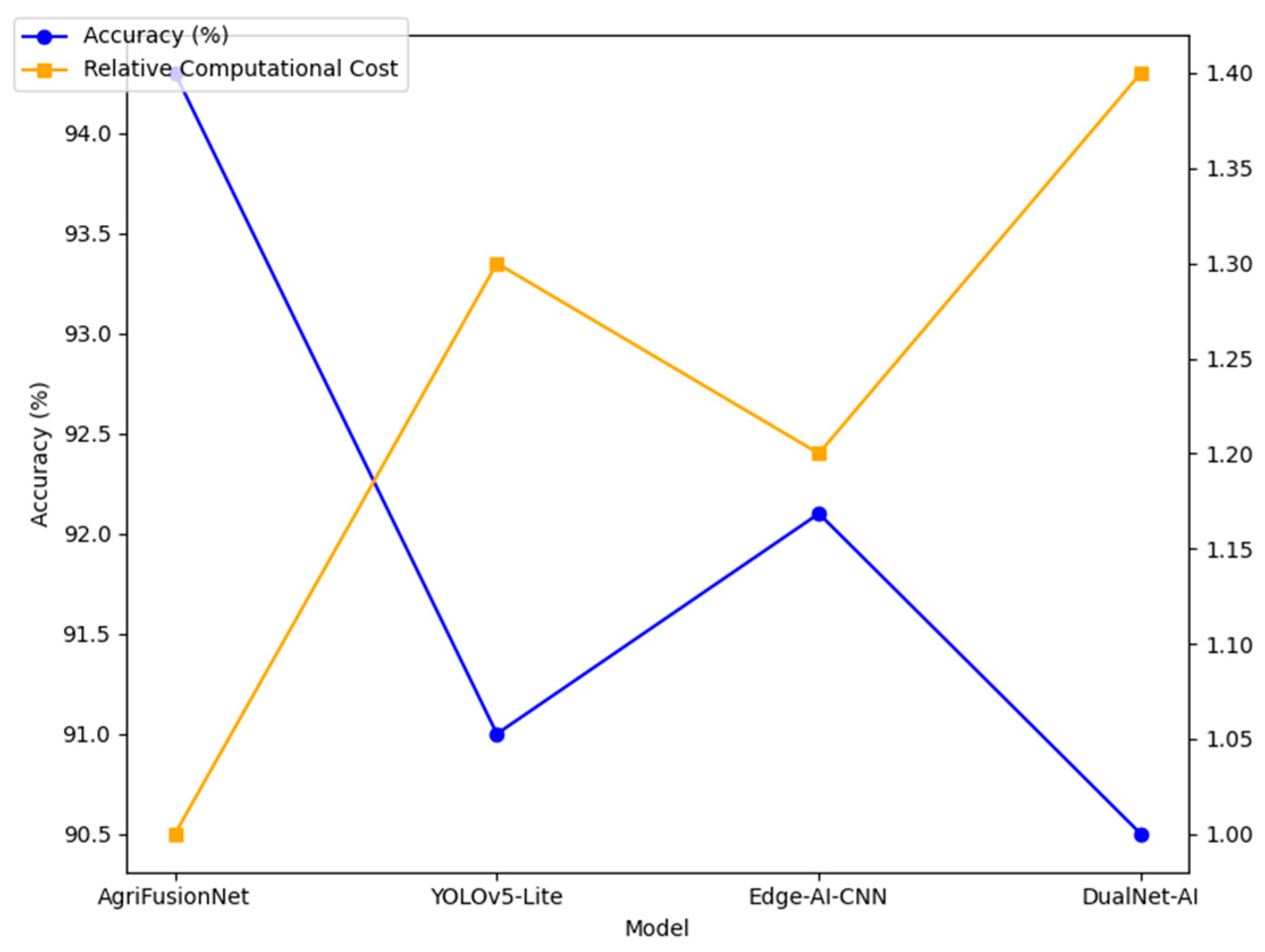

4.9. Comparison with State-of-the-Art Techniques

4.10. Ablation Study of Model Components

5. Discussion

6. Conclusions and Future Work

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Krishi Jagran. FAO Report Reveals Shocking Crop Losses: Up to 40% Due to Pests and Diseases Annually. 2023. Available online: https://krishijagran.com/agriculture-world/fao-report-reveals-shocking-crop-losses-up-to-40-due-to-pests-and-diseases-annually/ (accessed on 9 September 2024).

2. Chimate, Y.; Patil, S.; Prathapan, K.; Patil, J.; Khot, J. Optimized sequential model for superior classification of plant disease. Sci. Rep. 2025, 15, 3700.

3. Patil, R.R.; Kumar, S. Rice-fusion: A multimodality data fusion framework for rice disease diagnosis. IEEE Access 2022, 10, 5207–5222.

4. Narimani, M.; Pourreza, A.; Moghimi, A.; Mesgaran, M.; Farajpoor, P.; Jafarbiglu, H. Drone-based multispectral imaging and deep learning for timely detection of branched broomrape in tomato farms. In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IX; SPIE: Pune, India, 2024; Volume 13053, pp. 16–25.

5. Singh, M.; Vermaa, A.; Kumar, V. Geospatial technologies for the management of pest and disease in crops. In Precision Agriculture; Academic Press: Cambridge, MA, USA, 2023; pp. 37–54.

6. Dhanaraju, M.; Chenniappan, P.; Ramalingam, K.; Pazhanivelan, S.; Kaliaperumal, R. Smart farming: Internet of Things (IoT)-based sustainable agriculture. Agriculture 2022, 12, 1745.

7. Banerjee, D.; Kukreja, V.; Hariharan, S.; Jain, V. Enhancing mango fruit disease severity assessment with CNN and SVM-based classification. In Proceedings of the 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), Lonavla, India, 7–9 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

8. Zhang, Y.; Lv, C. TinySegformer: A lightweight visual segmentation model for real-time agricultural pest detection. Comput. Electron. Agric. 2024, 218, 108740.

9. Rajabpour, A.; Yarahmadi, F. Remote Sensing, Geographic Information System (GIS), and Machine Learning in the Pest Status Monitoring. In Decision System in Agricultural Pest Management; Springer Nature: Singapore, 2024; pp. 247–353.

10. Zhang, N.; Wu, H.; Zhu, H.; Deng, Y.; Han, X. Tomato disease classification and identification method based on multimodal fusion deep learning. Agriculture 2022, 12, 2014.

11. Li, H.; Gu, Z.; He, D.; Wang, X.; Huang, J.; Mo, Y.; Li, P.; Huang, Z.; Wu, F. A lightweight improved YOLOv5s model and its deployment for detecting pitaya fruits in daytime and nighttime light-supplement environments. Comput. Electron. Agric. 2024, 220, 108914.

12. Chen, M.; Chen, Z.; Luo, L.; Tang, Y.; Cheng, J.; Wei, H.; Wang, J. Dynamic visual servo control methods for continuous operation of a fruit harvesting robot working throughout an orchard. Comput. Electron. Agric. 2024, 219, 108774.

13. Meng, F.; Li, J.; Zhang, Y.; Qi, S.; Tang, Y. Transforming unmanned pineapple picking with spatio-temporal convolutional neural networks. Comput. Electron. Agric. 2023, 214, 108298.

14. Albahli, S.; Masood, M. Efficient attention-based CNN network (EANet) for multi-class maize crop disease classification. Front. Plant Sci. 2022, 13, 1003152.

15. Zhang, J.; Shen, D.; Chen, D.; Ming, D.; Ren, D.; Diao, Z. ISMSFuse: Multi-modal fusing recognition algorithm for rice bacterial blight disease adaptable in edge computing scenarios. Comput. Electron. Agric. 2024, 223, 109089.

16. Yong, C.; Macalisang, J.; Hernandez, A.A. Multi-stage Transfer Learning for Corn Leaf Disease Classification. In Proceedings of the 2023 IEEE International Conference on Automatic Control and Intelligent Systems (I2CACIS), Shah Alam, Malaysia, 17 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 231–234.

17. Nguyen, C.; Sagan, V.; Maimaitiyiming, M.; Maimaitijiang, M.; Bhadra, S.; Kwasniewski, M.T. Early detection of plant viral disease using hyperspectral imaging and deep learning. Sensors 2021, 21, 742.

18. Van Phong, T.; Pham, B.T.; Trinh, P.T.; Ly, H.-B.; Vu, Q.H.; Ho, L.S.; Van Le, H.; Phong, L.H.; Avand, M.; Prakash, I. Groundwater potential mapping using GIS-based hybrid artificial intelligence methods. Groundwater 2021, 59, 745–760.

19. Liu, X.; Liu, Z.; Hu, H.; Chen, Z.; Wang, K.; Wang, K.; Lian, S. A Multimodal Benchmark Dataset and Model for Crop Disease Diagnosis. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 157–170.

20. Berger, K.; Machwitz, M.; Kycko, M.; Kefauver, S.C.; Van Wittenberghe, S.; Gerhards, M.; Verrelst, J.; Atzberger, C.; van der Tol, C.; Damm, A.; et al. Multi-sensor spectral synergies for crop stress detection and monitoring in the optical domain: A review. Remote Sens. Environ. 2022, 280, 113198.

21. Shrotriya, A.; Sharma, A.K.; Bairwa, A.K.; Manoj, R. Hybrid Ensemble Learning with CNN and RNN for Multimodal Cotton Plant Disease Detection. IEEE Access 2024, 12, 198028–198045.

22. Fan, X.; Luo, P.; Mu, Y.; Zhou, R.; Tjahjadi, T.; Ren, Y. Leaf image based plant disease identification using transfer learning and feature fusion. Comput. Electron. Agric. 2022, 196, 106892.

23. Xu, Y.; Zhang, X.; Li, H.; Zheng, H.; Zhang, J.; Olsen, M.S.; Varshney, R.K.; Prasanna, B.M.; Qian, Q. Smart breeding driven by big data, artificial intelligence, and integrated genomic-enviromic prediction. Mol. Plant 2022, 15, 1664–1695.

24. Albattah, W.; Nawaz, M.; Javed, A.; Masood, M.; Albahli, S. A novel deep learning method for detection and classification of plant diseases. Complex Intell. Syst. 2022, 8, 507–524.

25. Prince, S.J.D. Understanding Deep Learning; MIT Press: Cambridge, MA, USA, 2023.

26. Hughes, D.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv 2015, arXiv:1511.08060.

27. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS; NASA Special Publication: Washington, DC, USA, 1974; Volume 351, p. 309.

28. Jacquemoud, S.; Ustin, S.L. Leaf optical properties: A state of the art. In Proceedings of the 8th International Symposium of Physical Measurements & Signatures in Remote Sensing, Aussois, France, 8–12 January 2001; CNES: Aussois, France, 2001; pp. 223–332.

29. Krishnan, R.S.; Julie, E.G. Computer aided detection of leaf disease in agriculture using convolution neural network based squeeze and excitation network. Automatika Časopis Za Automatiku, Mjerenje, Elektroniku, Računarstvo I Komunikacije 2023, 64, 1038–1053.

30. Sunkari, S.; Sangam, A.; Suchetha, M.; Raman, R.; Rajalakshmi, R.; Tamilselvi, S. A refined ResNet18 architecture with Swish activation function for Diabetic Retinopathy classification. Biomed. Signal Process. Control 2024, 88, 105630.

31. Parez, S.; Dilshad, N.; Alghamdi, N.S.; Alanazi, T.M.; Lee, J.W. Visual intelligence in precision agriculture: Exploring plant disease detection via efficient vision transformers. Sensors 2023, 23, 6949.

32. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

33. Khaki, S.; Wang, L.; Archontoulis, S.V. A CNN-RNN framework for crop yield prediction. Front. Plant Sci. 2020, 10, 1750.

| Author(s) and Year | Method | Dataset | Key Findings | Limitations | Performance |
|---|---|---|---|---|---|
| Narimani et al. (2024) [4] | Drone-based multispectral DL | Real-world multispectral | High accuracy in disease detection | High cost and complexity of multispectral imaging | 98.5% detection accuracy |
| Dhanaraju et al. (2022) [6] | IoT-enabled smart agriculture | Custom IoT dataset | Improved monitoring through sensor fusion | Limited scalability in large-scale farms | 95% monitoring efficiency |
| Chimate et al. (2025) [2] | CNN with data augmentation | PlantVillage | Enhanced model robustness with augmented data | Struggles with real-world variations | 96.2% accuracy |
| Singh et al. (2023) [5] | Geospatial analytics with ML | Geospatial datasets | Effective spatial disease analysis | Limited real-time applicability | 93% spatial accuracy |
| Patil et al. (2022) [3] | Multimodal fusion with DL | Real-world field images | Comprehensive analysis using multiple modalities | Lack of standardized protocols | 97% diagnostic precision |
| Zhang et al. (2024) [8] | Lightweight DL for edge devices | IoT-enabled drones | Low-cost, real-time monitoring | Limited processing power on edge devices | 90% real-time efficiency |
| Nguyen et al. (2021) [17] | Temporal DL models | Custom temporal dataset | Accurate disease progression predictions | High computational demands | 92% predictive accuracy |
| Phong et al. (2021) [18] | Hybrid CNN–GIS integration | Regional datasets | Targeted interventions based on spatial data | Limited integration with real-time systems | 94% intervention accuracy |
| Banerjee et al. (2023) [7] | SVM with custom features | Small-scale datasets | Moderate success in controlled environments | Poor generalizability to diverse conditions | 89% classification accuracy |
| Berger et al. (2022) [20] | DL with spectral imaging | Hyperspectral datasets | High accuracy in stress detection | High equipment costs and complex processing | 97.5% stress detection |
| Shrotriya et al. (2024) [21] | DL ensemble methods | Large-scale datasets | Improved accuracy with ensemble techniques | Increased computational overhead | 98% ensemble performance |
| Fan et al. (2022) [22] | Transfer learning for plant diseases | PlantVillage + field | Reduced training times and improved accuracy | Struggles with unseen environmental conditions | 94.5% accuracy |

| Component | Description | Volume | Diversity |
|---|---|---|---|
| PlantVillage Dataset | Labeled RGB images of plant leaves in controlled conditions, augmented for variability. | 54,000+ images | Covers 20+ plant species and 30+ diseases with various augmentation techniques. |
| Drone-Captured Images | High-resolution RGB and multispectral images collected from agricultural fields using DJI drones. | 25,000+ images | Includes different seasons, lighting, and environmental conditions. |
| Environmental Data | IoT sensor data (e.g., temperature, humidity, soil moisture) synchronized with image collection. | 1.2 million readings | Recorded over 6 months across diverse geographies and conditions. |
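The table states that sensor readings were synchronized with image collection but does not give the matching rule. One common choice, assumed here, is to attach to each image the most recent reading from the same farm, provided it is not older than a tolerance. A minimal sketch; the log layout, the field names, the function name `nearest_prior_reading`, and the 30-minute tolerance are all hypothetical:

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from typing import Optional


def nearest_prior_reading(
    log: dict[str, list[tuple[datetime, dict[str, float]]]],
    farm_id: str,
    captured_at: datetime,
    max_age: timedelta = timedelta(minutes=30),
) -> Optional[dict[str, float]]:
    """Return the latest reading taken at or before `captured_at` on the same
    farm, or None if no reading exists within `max_age` of the capture."""
    series = log.get(farm_id, [])
    times = [t for t, _ in series]          # assumes `series` is sorted by time
    i = bisect_right(times, captured_at)    # first index with time > captured_at
    if i == 0:
        return None                         # no reading at or before the capture
    taken_at, reading = series[i - 1]
    if captured_at - taken_at > max_age:
        return None                         # nearest prior reading is too stale
    return reading


# Illustrative log: the values are the Farm01 row for 1 January 2024 from the
# sample sensor table that follows; the 09:00 time of day is hypothetical.
log = {
    "Farm01": [
        (datetime(2024, 1, 1, 9, 0),
         {"temperature_c": 28.5, "humidity_pct": 65.2, "soil_moisture_pct": 32.1}),
    ]
}
print(nearest_prior_reading(log, "Farm01", datetime(2024, 1, 1, 9, 10)))
```

When matching thousands of images, keeping the `times` list precomputed per farm avoids rebuilding it on every lookup.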

| Date | Farm | Temperature (°C) | Humidity (%) | Soil Moisture (%) |
|---|---|---|---|---|
| 1 January 2024 | Farm01 | 28.5 | 65.2 | 32.1 |
| 1 January 2024 | Farm02 | 30.1 | 60.5 | 30.7 |
| 1 January 2024 | Farm03 | 27.4 | 68.3 | 34.2 |
| 1 January 2024 | Farm04 | 29.3 | 63.8 | 31.5 |
| 1 February 2024 | Farm01 | 26.8 | 66.0 | 33.0 |
| 1 February 2024 | Farm02 | 29.0 | 61.7 | 31.2 |
| 1 February 2024 | Farm03 | 28.3 | 67.5 | 32.8 |
| 1 February 2024 | Farm04 | 27.9 | 64.9 | 30.9 |
| 1 March 2024 | Farm01 | 31.2 | 59.4 | 28.7 |
| 1 March 2024 | Farm02 | 32.5 | 57.6 | 27.3 |
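Sensor readings arrive on different scales (degrees Celsius versus percentages), so they are usually standardized before being concatenated with image features. The preprocessing details are not given here; the sketch below assumes per-feature z-scoring and fits it on the ten sample rows above purely for illustration. In practice the statistics would be fitted on the training split only and reused unchanged for validation and test data. Function names are hypothetical:

```python
from statistics import fmean, pstdev

# The ten sample rows from the table above:
# (temperature °C, humidity %, soil moisture %).
ROWS = [
    (28.5, 65.2, 32.1), (30.1, 60.5, 30.7), (27.4, 68.3, 34.2),
    (29.3, 63.8, 31.5), (26.8, 66.0, 33.0), (29.0, 61.7, 31.2),
    (28.3, 67.5, 32.8), (27.9, 64.9, 30.9), (31.2, 59.4, 28.7),
    (32.5, 57.6, 27.3),
]
FEATURES = ("temperature_c", "humidity_pct", "soil_moisture_pct")


def fit_standardizer(rows):
    """Per-feature (mean, population std), computed column by column."""
    return [(fmean(col), pstdev(col)) for col in zip(*rows)]


def standardize(row, stats):
    """Map each feature value x to (x - mean) / std."""
    return tuple((x - mu) / sd for x, (mu, sd) in zip(row, stats))


stats = fit_standardizer(ROWS)
scaled = [standardize(r, stats) for r in ROWS]
for name, (mu, sd) in zip(FEATURES, stats):
    print(f"{name}: mean={mu:.2f}, std={sd:.2f}")
```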

| Layer Type | Input Size | Output Size | Components | Activation |
|---|---|---|---|---|
| Input Layer | 224 × 224 × 3 | 224 × 224 × 3 | RGB/Multispectral | - |
| Conv2D + BN | 224 × 224 × 3 | 112 × 112 × 32 | 3 × 3 Conv, BatchNorm | Swish |
| Fused-MBConv ×3 | 112 × 112 × 32 | 56 × 56 × 64 | SE, 3 × 3 DWConv | Swish |
| MBConv ×4 | 56 × 56 × 64 | 28 × 28 × 128 | Expansion, SE, 5 × 5 DWConv | Swish |
| Fusion Layer | Mixed dims | 1 × 1 × 256 | Dense concat (sensor + image) | ReLU |
| Fully Connected | 1 × 1 × 256 | 1 × 1 × 20 | Output logits | Softmax |
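The table above shows the image branch ending at 28 × 28 × 128, then a fusion layer that concatenates sensor and image features into 256 ReLU units, followed by a 20-way softmax. How the feature map is reduced before concatenation is not stated; the sketch below assumes global average pooling to a 128-dimensional vector and a sensor vector of three standardized readings (temperature, humidity, soil moisture). Weights are random and untrained, and `LateFusionHead` and its parameters are hypothetical names used only for illustration:

```python
import math
import random


def global_avg_pool(fmap):
    """H x W x C nested lists -> list of C channel means."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    sums = [0.0] * c
    for row in fmap:
        for pixel in row:
            for k, v in enumerate(pixel):
                sums[k] += v
    return [s / (h * w) for s in sums]


def dense(x, weights, bias):
    """Fully connected layer; `weights` is a list of output rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)                          # subtract the max for numerical stability
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]


def init_layer(n_in, n_out, rng):
    """Uniform(-1/sqrt(n_in), 1/sqrt(n_in)) weights and zero biases."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


class LateFusionHead:
    """Pooled image features + sensor vector -> 256 (ReLU) -> classes (softmax)."""

    def __init__(self, img_channels=128, n_sensor=3, hidden=256, n_classes=20, seed=0):
        rng = random.Random(seed)
        self.fusion = init_layer(img_channels + n_sensor, hidden, rng)
        self.classifier = init_layer(hidden, n_classes, rng)

    def __call__(self, fmap, sensor):
        z = global_avg_pool(fmap) + list(sensor)    # concatenate the two modalities
        h = relu(dense(z, *self.fusion))            # 256-unit fusion layer
        return softmax(dense(h, *self.classifier))  # class probabilities


# A constant 28 x 28 x 128 map stands in for the last MBConv stage output.
fmap = [[[0.1] * 128 for _ in range(28)] for _ in range(28)]
probs = LateFusionHead()(fmap, sensor=(0.0, 0.0, 0.0))
print(len(probs), round(sum(probs), 6))
```

Pooling before concatenation keeps the fusion layer small (131 × 256 = 33,536 weights); concatenating the flattened 28 × 28 × 128 map instead would need about 25.7 million weights for that layer alone.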

| Model | Accuracy (%) | Inference Time (ms) | Parameters (millions) |
|---|---|---|---|
| AgriFusionNet | 94.3 | 67 | 12 |
| Vision Transformer | 91.5 | 83 | 85 |
| InceptionV4 | 90.8 | 84 | 43 |
| MobileNetV3 | 91.8 | 72 | 14 |
| ShuffleNet | 90.5 | 69 | 10 |
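Reported latencies depend strongly on hardware, batch size, numeric precision, and warm-up, so inference times are comparable only when measured under the same protocol. A minimal framework-agnostic timing harness, assuming single-input calls; `measure_latency_ms` and `dummy_model` are hypothetical names, and the dummy workload stands in for a real forward pass:

```python
import statistics
import time


def measure_latency_ms(fn, x, warmup=10, runs=100):
    """Return (median, 95th percentile) wall-clock latency of fn(x) in ms."""
    for _ in range(warmup):              # warm caches and any lazy initialization
        fn(x)
    samples = []
    for _ in range(runs):
        t0 = time.perf_counter()
        fn(x)
        samples.append((time.perf_counter() - t0) * 1000.0)
    p95 = statistics.quantiles(samples, n=20)[-1]   # 19 cut points; last is the 95th percentile
    return statistics.median(samples), p95


def dummy_model(n):
    """Stand-in workload; replace with a real single-image forward pass."""
    return sum(i * i for i in range(n))


median_ms, p95_ms = measure_latency_ms(dummy_model, 10_000)
print(f"median {median_ms:.3f} ms, p95 {p95_ms:.3f} ms")
```

For GPU inference, kernels launch asynchronously, so the device must be synchronized (for example with `torch.cuda.synchronize()` in PyTorch) before each timer read; otherwise the measurement covers only the kernel launch.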

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Inference Time (ms) |
|---|---|---|---|---|---|
| AgriFusionNet | 94.3 | 94.1 | 93.9 | 94.0 | 28.5 |
| MobileNetV3 | 91.8 | 91.4 | 91.2 | 91.3 | 32.1 |
| ShuffleNet | 90.5 | 90.2 | 89.9 | 90.0 | 30.9 |
| Vision Transformer | 91.5 | 91.3 | 91.0 | 91.1 | 45.2 |
| InceptionV4 | 90.8 | 90.6 | 90.3 | 90.4 | 39.6 |
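The averaging scheme used for precision, recall, and F1 across the 20 classes is not stated here. For the AgriFusionNet row, the harmonic mean of the reported precision and recall, 2 × 94.1 × 93.9 / (94.1 + 93.9) ≈ 94.0, matches the reported F1. A minimal sketch of accuracy with macro-averaged (unweighted per-class mean) metrics, one common convention assumed here; the function name and toy labels are hypothetical:

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1      # predicted class p, but the prediction was wrong
            fn[t] += 1      # true class t was missed
    precision, recall, f1 = [], [], []
    for c in labels:
        pc = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rc = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        precision.append(pc)
        recall.append(rc)
        f1.append(2 * pc * rc / (pc + rc) if pc + rc else 0.0)
    n = len(labels)
    accuracy = sum(tp.values()) / len(y_true)
    return accuracy, sum(precision) / n, sum(recall) / n, sum(f1) / n


# Toy example with three hypothetical classes.
y_true = ["healthy", "healthy", "rust", "rust", "blight", "blight"]
y_pred = ["healthy", "rust", "rust", "rust", "blight", "healthy"]
acc, prec, rec, f1 = macro_metrics(y_true, y_pred)
print(f"acc={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
```

Macro averaging weights every disease class equally, so rare classes influence the score as much as common ones. scikit-learn's `precision_recall_fscore_support(y_true, y_pred, average="macro")` computes the same per-class averages.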

| Model | Accuracy (%) | Inference Time (ms) |
|---|---|---|
| AlexNet | 84.5 | 33.2 |
| ResNet50 | 88.7 | 31.5 |
| MobileNetV2 | 90.1 | 29.4 |
| EfficientNetV2-B4 | 92.6 | 30.2 |

| Method | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| AgriFusionNet | 94.3 | 94.1 | 93.9 | 94.0 |
| SVM | 82.5 | 81.9 | 82.2 | 82.0 |
| KNN | 79.6 | 78.8 | 79.1 | 78.9 |
| Random Forest | 85.1 | 84.7 | 84.9 | 84.8 |
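Absolute accuracy gaps understate differences near the top of the scale; expressing them as relative error reduction (the fraction of a baseline's errors that the new model removes) is a common complement. The worked computation below uses only the accuracy values in the table above; it is an illustrative reading, not an analysis reported by the author:

```python
# Accuracy (%) values copied from the table above.
ACCURACY = {"AgriFusionNet": 94.3, "SVM": 82.5, "KNN": 79.6, "Random Forest": 85.1}


def relative_error_reduction(acc_new, acc_ref):
    """Fraction of the reference model's errors removed by the new model."""
    err_new, err_ref = 100.0 - acc_new, 100.0 - acc_ref
    return (err_ref - err_new) / err_ref


for name in ("SVM", "KNN", "Random Forest"):
    r = relative_error_reduction(ACCURACY["AgriFusionNet"], ACCURACY[name])
    print(f"vs {name}: error {100 - ACCURACY[name]:.1f}% -> "
          f"{100 - ACCURACY['AgriFusionNet']:.1f}% ({100 * r:.1f}% fewer errors)")
```

Relative to SVM, KNN, and Random Forest, AgriFusionNet's 5.7% error rate corresponds to roughly 67%, 72%, and 62% fewer errors, respectively.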

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Inference Time (ms) |
|---|---|---|---|---|---|
| AgriFusionNet | 94.3 | 94.1 | 93.9 | 94.0 | 28.5 |
| YOLOv5s (light) | 90.7 | 90.5 | 90.3 | 90.4 | 35.1 |
| EdgeCNN | 89.5 | 89.2 | 89.0 | 89.1 | 33.8 |
| EfficientNet-lite0 | 92.3 | 92.0 | 91.8 | 91.9 | 31.6 |

| Model Variant | Accuracy (%) | Inference Time (ms) |
|---|---|---|
| Full AgriFusionNet | 94.3 | 28.5 |
| w/o Swish Activation | 92.0 | 28.3 |
| w/o Fused-MBConv Blocks | 91.2 | 26.1 |
| RGB-only (No Multimodal Fusion) | 89.4 | 27.5 |
| RGB + Multispectral (No Sensors) | 91.0 | 27.9 |
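Reading the ablation table as differences from the full model makes the component contributions easier to compare. The arithmetic below uses only the table's values. Drops from single-component removals are not, in general, additive, and since no run-to-run variance is reported alongside these figures, the smaller gaps should be read with some caution:

```python
# Accuracy (%) values copied from the ablation table above.
FULL = 94.3
VARIANTS = {
    "w/o Swish Activation": 92.0,
    "w/o Fused-MBConv Blocks": 91.2,
    "RGB-only (No Multimodal Fusion)": 89.4,
    "RGB + Multispectral (No Sensors)": 91.0,
}

# Drop in accuracy, in percentage points, when each component is removed.
drops = {name: round(FULL - acc, 1) for name, acc in VARIANTS.items()}
for name, d in drops.items():
    print(f"{name}: -{d:.1f} pts")

# Step-wise gains along RGB -> RGB + multispectral -> RGB + multispectral + sensors.
gain_multispectral = round(
    VARIANTS["RGB + Multispectral (No Sensors)"] - VARIANTS["RGB-only (No Multimodal Fusion)"], 1
)
gain_sensors = round(FULL - VARIANTS["RGB + Multispectral (No Sensors)"], 1)
print(f"+multispectral: {gain_multispectral:+.1f} pts, +sensors: {gain_sensors:+.1f} pts")
```

The modality rows split the 4.9-point gap between the RGB-only variant and the full model into 1.6 points from adding multispectral imagery and 3.3 points from adding sensor data.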

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Albahli, S. AgriFusionNet: A Lightweight Deep Learning Model for Multisource Plant Disease Diagnosis. Agriculture 2025, 15, 1523. https://doi.org/10.3390/agriculture15141523

Albahli S. AgriFusionNet: A Lightweight Deep Learning Model for Multisource Plant Disease Diagnosis. Agriculture. 2025; 15(14):1523. https://doi.org/10.3390/agriculture15141523

Chicago/Turabian Style: Albahli, Saleh. 2025. "AgriFusionNet: A Lightweight Deep Learning Model for Multisource Plant Disease Diagnosis" Agriculture 15, no. 14: 1523. https://doi.org/10.3390/agriculture15141523

APA Style: Albahli, S. (2025). AgriFusionNet: A Lightweight Deep Learning Model for Multisource Plant Disease Diagnosis. Agriculture, 15(14), 1523. https://doi.org/10.3390/agriculture15141523