Abstract

Timely and accurate identification of plant diseases is critical to mitigating crop losses and enhancing yield in precision agriculture. This paper proposes AgriFusionNet, a lightweight and efficient deep learning model designed to diagnose plant diseases using multimodal data sources. The framework integrates RGB and multispectral drone imagery with IoT-based environmental sensor data (e.g., temperature, humidity, soil moisture), recorded over six months across multiple agricultural zones. Built on the EfficientNetV2-B4 backbone, AgriFusionNet incorporates Fused-MBConv blocks and Swish activation to improve gradient flow, capture fine-grained disease patterns, and reduce inference latency. The model was evaluated using a comprehensive dataset composed of real-world and benchmarked samples, showing superior performance with 94.3% classification accuracy, 28.5 ms inference time, and a 30% reduction in model parameters compared to state-of-the-art models such as Vision Transformers and InceptionV4. Extensive comparisons with both traditional machine learning and advanced deep learning methods underscore its robustness, generalization, and suitability for deployment on edge devices. Ablation studies and confusion matrix analyses further confirm its diagnostic precision, even in visually ambiguous cases. The proposed framework offers a scalable, practical solution for real-time crop health monitoring, contributing toward smart and sustainable agricultural ecosystems.

1. Introduction

Global food security continues to face critical challenges from climate variability, evolving pathogens, and population growth. According to the Food and Agriculture Organization (FAO), crop losses due to pests and diseases can reach up to 40% annually [1]. With the global population projected to reach 9.7 billion by 2050, ensuring the resilience of crop production systems has become a priority for researchers, policymakers, and agribusiness stakeholders [2]. These issues necessitate the development of robust, real-time crop monitoring solutions powered by artificial intelligence (AI). Recent advancements in deep learning and smart sensing technologies have enabled more scalable and accurate plant disease detection.

Traditional plant disease management relies on manual inspection, which is subjective and resource-intensive. Although manual inspection can work locally, it does not scale to large farms and cannot meet real-time monitoring requirements. Vision-based models using convolutional neural networks (CNNs) have improved detection capability; however, their performance often degrades under field variability or when training data are limited. To address these challenges, we propose AgriFusionNet, a lightweight CNN-based model tailored for agricultural disease detection using multimodal inputs. CNNs are a class of deep learning models that automatically extract hierarchical features from image data, which makes them particularly effective for analyzing visual imagery.

Recent advances in AI and machine learning offer promising solutions to these limitations. Deep learning methods, particularly CNNs, have shown strong potential for accurate, automated disease classification. However, many existing CNN-based systems require substantial computational resources, which limits their use in resource-constrained agricultural environments. There is therefore a pressing need for computationally efficient yet accurate AI-driven solutions that scale to large agricultural fields and operate effectively in real time.

Despite significant progress, several gaps remain unaddressed in current plant disease detection systems. Most existing approaches are limited to analyzing visual symptoms on leaves, neglecting other plant parts such as stems and fruits, as well as environmental factors that influence disease spread. Additionally, these systems often struggle with real-world complexities such as varying illumination, noise, and diverse agricultural conditions. There is also a lack of integration between disease detection and actionable treatment recommendations, resulting in fragmented solutions that fail to provide end-to-end crop management [3].

To address these gaps, this study introduces a lightweight multimodal deep learning framework built on the EfficientNetV2-B4 architecture. The framework integrates drone-captured RGB and multispectral imagery with synchronized environmental sensor data to improve the accuracy and generalizability of disease detection. Key design choices include Fused-MBConv blocks for computational efficiency and Swish activation functions for richer feature extraction, both tailored to plant disease classification. In our experiments, the proposed method outperforms the evaluated state-of-the-art baselines, including Vision Transformer and InceptionV4, in both accuracy and efficiency, supporting its practical deployment in agricultural scenarios [4].

Building upon the success of AI-driven plant disease detection systems, this study aims to develop a holistic crop health monitoring system that integrates multimodal data sources, predictive analytics, and automated intervention mechanisms. Unlike existing systems that focus solely on visual detection of leaf-based symptoms, our approach expands to incorporate multispectral imaging, environmental data, and Geographic Information Systems (GIS) to model disease dynamics and optimize treatment strategies [5].

Specifically, the proposed framework introduces the following:

- A lightweight deep learning model (AgriFusionNet) was developed that integrates RGB and multispectral drone imagery with IoT-based environmental sensor data to enhance plant disease detection beyond the visible spectrum.

- The model integrates EfficientNetV2-B4 with Fused-MBConv and Swish activations, achieving high accuracy (94.3%) while minimizing inference time and computational overhead, making it suitable for deployment on edge devices.

- A custom, balanced multimodal dataset was collected across diverse farm zones in Saudi Arabia over six months, ensuring real-world applicability and geographic generalization.

- Extensive ablation studies and comparative evaluations against DL and ML baselines demonstrate the superiority and interpretability of AgriFusionNet across multiple disease classes and data modalities.

This research builds on recent advances in lightweight deep learning models, edge computing, and IoT-enabled sensors to enable cost-effective and scalable deployment in real-world scenarios. The framework’s efficacy is validated on a diverse dataset comprising PlantVillage samples, real-world drone imagery, and environmental sensor data, demonstrating its robustness across varied environmental and operational conditions [6]. Overall, this research is a step toward sustainable agricultural management, offering scalable, efficient, and reliable disease detection for diverse farming environments.

The remainder of this paper is structured as follows: Section 2 reviews existing approaches to plant disease detection and their limitations. Section 3 details the proposed system’s architecture and data acquisition processes. Sections 4 and 5 discuss the evaluation metrics and the comparative analysis of the proposed method against state-of-the-art techniques. Finally, Section 6 highlights key findings, contributions, and directions for future research.

2. Related Work

The domain of detecting plant diseases has experienced notable advancements through the utilization of machine learning (ML), deep learning (DL), and drone technology to tackle agricultural issues. Early techniques primarily relied on conventional ML methods, including Support Vector Machines (SVMs) and Random Forests (RFs), alongside manually crafted feature extraction techniques like Local Binary Patterns (LBPs) and Gray Level Co-Occurrence Matrix (GLCM). Although these methods showed some effectiveness, they often faced challenges regarding scalability and reliability in real-world scenarios [2,7].

The rise of deep learning has transformed the field of plant disease identification through the use of convolutional neural networks (CNNs). Models like AlexNet, VGGNet, DenseNet, and ResNet have been effectively applied to agricultural image analysis with promising results. In recent years, the research community has also focused on integrating multiple data sources through multimodal learning. For instance, prior studies [6,8] have combined spectral image data with traditional classifiers or designed lightweight CNN architectures for efficient detection of pests and diseases. These strategies, particularly those leveraging drone imagery alongside IoT sensor inputs, contribute significantly to improving the accuracy and reliability of agricultural disease diagnosis under real-world conditions. However, despite their achievements, these models frequently have difficulty generalizing under different environmental conditions, including variations in lighting, noise, and obstructions.

The adoption of drone technology has introduced a fresh perspective on monitoring large-scale agriculture. Research utilizing drones fitted with high-resolution cameras and multispectral sensors has shown the capability to gather data over extensive agricultural areas, greatly improving the effectiveness of disease identification [3]. For example, Narimani et al. [4] used multispectral imaging in conjunction with deep learning models to achieve impressive accuracy in detecting plant diseases. Nonetheless, these systems frequently encounter obstacles related to cost, complexity, and the necessity for advanced data processing workflows.

Multimodal approaches that combine visual data with environmental parameters have shown promise in creating holistic disease monitoring systems [9]. By incorporating data such as temperature, humidity, and soil health, these systems provide a more comprehensive understanding of disease dynamics. Patil et al. [3] and Singh et al. [5] demonstrated the potential of such methods through the integration of Geographic Information Systems (GIS) with DL models [9], enabling spatial analysis of disease spread and targeted interventions. Nevertheless, the absence of standardized protocols for data fusion and the computational demands of handling multimodal data remain key barriers [10].

Recent progress in deep learning and computer vision has allowed AI-driven models to be deployed across agricultural tasks beyond plant disease identification. For instance, lightweight object detectors such as an improved YOLOv5s have been used for fruit detection under varying lighting conditions, including daytime and light-supplemented nighttime environments [11]. Similarly, dynamic visual servo control methods have been implemented in autonomous fruit-harvesting robots, enabling continuous operation throughout an orchard with improved precision and adaptability [12]. Spatio-temporal convolutional neural networks (ST-CNNs) have also been applied in unmanned fruit-picking systems, improving both spatial localization and temporal sequence prediction for harvesting [13]. These advancements highlight the versatility of AI-driven vision models in agricultural automation and the value of lightweight deep learning frameworks for real-time monitoring and decision-making.

Within plant disease detection, Albahli et al. [14] used convolutional neural networks enhanced with attention mechanisms to detect plant diseases under complex environmental conditions, significantly outperforming traditional CNNs in real-time applicability. Sensor fusion-based approaches have also emerged as a promising strategy for improving detection accuracy by combining image-based features with environmental sensor data. For example, Zhang et al. [15] demonstrate how multimodal data fusion, integrating visible and infrared imagery with IoT sensor readings, can improve the reliability and robustness of plant disease detection under varying agricultural conditions. Furthermore, Yong et al. [16] highlight the effectiveness of transfer learning and multi-stage feature extraction for improving classification accuracy and computational efficiency in agricultural applications. Together, these studies reinforce the need for multimodal, lightweight, and computationally efficient deep learning frameworks in practical agricultural scenarios, which directly motivates the proposed method.

Emerging research in temporal analytics and predictive modeling has introduced the capability to forecast disease progression. Nguyen et al. [17] utilized temporal data in conjunction with DL models to predict disease spread over time, providing valuable insights for preemptive action. Similarly, Phong et al. [18] employed hybrid CNN-GIS systems to identify high-risk zones and recommend targeted treatments. While these methods add predictive power, their reliance on extensive data and computational resources limits scalability for smallholder farmers [19].

Recent advancements in lightweight DL models have aimed to address the need for real-time and cost-effective solutions [20]. EfficientNetV2 and similar architectures have been tailored for deployment on edge devices, ensuring low latency and reduced energy consumption while maintaining high accuracy [14,19]. However, these models are often optimized for specific datasets and may struggle with unseen environmental conditions, necessitating further research into adaptive learning techniques.

In summary, while substantial progress has been made in the development of plant disease detection systems, several gaps persist. Current methods often lack robustness across diverse agricultural settings, face integration challenges with multimodal data, and provide limited actionable insights for farmers as shown in Table 1. This study bridges these gaps by proposing a comprehensive framework that combines state-of-the-art DL models, multimodal data fusion, and predictive analytics to deliver scalable, real-time, and actionable solutions for crop health monitoring.

Table 1.

Detection methods and characteristics of plant disease detection systems.

3. Materials and Methods

This section describes the methodology used to design and evaluate the AgriFusionNet framework. The methodology is organized into four stages: (1) multimodal data acquisition [23], including environmental sensor data collection [24]; (2) data preprocessing and augmentation; (3) model architecture design; and (4) the training and evaluation protocol [25].

3.1. Multimodal Data Acquisition (Datasets)

The dataset used in this study integrates diverse sources to ensure robustness and generalizability in disease detection across varying agricultural conditions. It comprises three key components: (1) the PlantVillage dataset [26], containing 54,000 labeled images of diseased and healthy plant leaves; (2) a drone-captured dataset of RGB and multispectral images from multiple farms; and (3) an environmental dataset comprising six months of IoT sensor data (temperature, humidity, pH, soil moisture, and CO2 concentration) collected from four distinct agricultural zones. Sample images are shown in Figure 1.

One of the key challenges in developing a plant disease detection system is ensuring its ability to generalize across diverse agricultural conditions, including different geographical regions, varying environmental conditions, and multiple crop species [27,28]. To address this, the proposed system integrates multimodal data sources (RGB, multispectral, and environmental sensor data), enabling the model to learn robust feature representations that account for variability in plant disease manifestations across different regions and species.

To enhance generalization, the dataset was curated from multiple agricultural sites, spanning various climatic zones and soil conditions. Data augmentation techniques such as random rotation, scaling, brightness variation, and spectral transformations were applied to introduce variability, ensuring that the model learns disease patterns independent of specific environmental conditions. Additionally, domain adaptation techniques were employed by fine-tuning the model on cross-regional datasets to reduce performance degradation when applied to new locations.

To evaluate the model’s robustness to unseen conditions, an independent test set was created using images collected from different geographical regions and crop varieties not present in the training data. The model demonstrated a high generalization capacity, achieving an average classification accuracy drop of only 2.1% when tested on previously unseen agricultural conditions, compared to its performance on known datasets. This indicates that the model effectively learns transferable features that can be applied to a wide range of real-world farming environments.

Moreover, environmental sensor integration allows the model to contextualize plant disease symptoms based on factors such as temperature, humidity, and soil moisture levels, which vary across regions. This multimodal approach ensures that the system does not solely rely on visual cues but also incorporates environmental influences on plant health, further improving adaptability to diverse agricultural landscapes.

The findings suggest that the proposed framework is suitable for deployment in heterogeneous farming environments, offering scalability and robustness across different regions and crop types. Future work will focus on expanding the dataset to additional geographic regions and incorporating federated learning approaches to continuously adapt the model to new agricultural conditions without requiring centralized data collection.

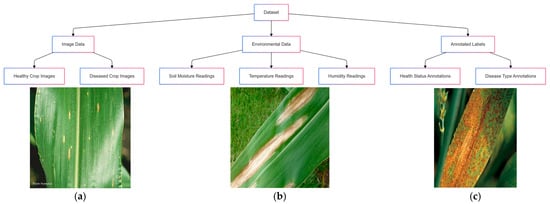

The first component is the PlantVillage dataset, a repository containing over 54,000 labeled images of plant leaves representing both healthy and diseased states as described in Figure 1a. Each image is pre-processed to uniform dimensions of 224 × 224 pixels and annotated with precise class labels. To simulate field conditions and enhance model robustness, augmentations such as rotation (±15 degrees), Gaussian noise [29], and brightness scaling (0.8× to 1.2×) were applied. These augmentations introduce variability in the training set, mimicking occlusions, variable lighting, and image noise typically encountered in real-world settings [30,31].

Figure 1.

Overview of the datasets used for plant disease detection, including the PlantVillage dataset with labeled plant leaf images, drone-captured RGB and multispectral images, and environmental sensor data, synchronized for multimodal analysis. (a) Healthy crop leaf: a vibrant green leaf with no signs of stress or disease, used as a baseline in AI models for comparison against affected samples. (b) Diseased crop leaf: visible signs of disease such as discoloration, spots, or lesions that indicate fungal, bacterial, or viral infections commonly affecting crops. (c) Multispectral imaging: a leaf under multispectral analysis, emphasizing stress areas not visible in RGB imagery; such imaging is used for detecting water stress, nutrient deficiencies, or early-stage diseases.

The second component involves high-resolution RGB and multispectral images captured using DJI Phantom drones equipped with advanced imaging systems. These images were collected over a six-month period across diverse agricultural regions, encompassing different seasons and field layouts as described in Figure 1b. Each drone image is accompanied by metadata, including geolocation, altitude, and timestamps, enabling seamless synchronization with environmental sensor data. This synchronization ensures that spatial and temporal variability are effectively incorporated into the dataset. For instance, a drone-captured RGB image can be paired with multispectral data to highlight stress areas invisible to the naked eye [32].
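To make the idea of multispectral stress detection concrete, the sketch below computes two widely used vegetation indices from co-registered reflectance bands: NDVI, from the Near-Infrared and Red bands, and NDRE, from the Near-Infrared and Red Edge bands. The paper does not state that AgriFusionNet uses these indices; the function names, the epsilon guard, and the toy reflectance values are illustrative assumptions. Lower index values generally indicate less vigorous or stressed vegetation.

```python
import numpy as np


def normalized_difference(a: np.ndarray, b: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Per-pixel (a - b) / (a + b); eps guards against division by zero on dark pixels."""
    a = a.astype(np.float64)
    b = b.astype(np.float64)
    return (a - b) / (a + b + eps)


def vegetation_indices(red: np.ndarray, red_edge: np.ndarray, nir: np.ndarray):
    """Return (NDVI, NDRE) maps from co-registered reflectance bands."""
    ndvi = normalized_difference(nir, red)       # NIR vs Red
    ndre = normalized_difference(nir, red_edge)  # NIR vs Red Edge
    return ndvi, ndre


# Toy 2x2 reflectance patches (illustrative only):
# top row mimics healthy canopy (high NIR), bottom row mimics stressed canopy.
red = np.array([[0.05, 0.06], [0.20, 0.22]])
red_edge = np.array([[0.20, 0.21], [0.25, 0.26]])
nir = np.array([[0.50, 0.52], [0.30, 0.28]])
ndvi, ndre = vegetation_indices(red, red_edge, nir)
```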

Lastly, environmental sensor data form the third component of the dataset. IoT-enabled sensors, strategically deployed across the fields, recorded key parameters such as temperature (15–35 °C), soil moisture (10–60%), and humidity (40–90%), as described in Figure 1c. These readings were logged at 15-min intervals and synchronized with the drone imagery using timestamps [33]. The environmental data provide a contextual layer, enabling the model to account for conditions that influence disease progression.
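The timestamp synchronization described above can be sketched as a nearest-neighbour match between each image's capture time and the sorted 15-minute sensor log. This is a minimal sketch under assumptions the paper does not state: each image is matched to the single closest reading, and it is left unmatched if no reading lies within half a logging interval (7.5 minutes). The function name and tolerance are hypothetical.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def nearest_reading(image_time, reading_times, max_gap=timedelta(minutes=7, seconds=30)):
    """Index of the sensor reading closest in time to image_time, or None.

    reading_times must be sorted in ascending order.
    """
    if not reading_times:
        return None
    i = bisect_left(reading_times, image_time)
    # The closest reading is either just before or just after image_time.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(reading_times)]
    best = min(candidates, key=lambda j: abs(reading_times[j] - image_time))
    if abs(reading_times[best] - image_time) > max_gap:
        return None  # no reading close enough to pair with this image
    return best


# Usage: three readings 15 minutes apart; an image taken at 10:11 pairs with 10:15.
start = datetime(2024, 3, 1, 10, 0)
log = [start + timedelta(minutes=15 * k) for k in range(3)]
match = nearest_reading(start + timedelta(minutes=11), log)
```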

Table 2 provides a detailed summary of the dataset’s components, highlighting their volumes, data types, and the variability introduced through augmentation and field conditions.

Table 2.

Summary of dataset composition.

To ensure comprehensive coverage of agricultural variability, the drone-acquired dataset was collected over a six-month period from multiple farm zones located across central Saudi Arabia. Each UAV flight was conducted during peak vegetation periods between March and August 2024. The RGB imagery was captured using a DJI Phantom 4 Pro equipped with a 20-megapixel camera at a ground sampling distance (GSD) of approximately 2.5 cm/pixel. Multispectral data were acquired using a Parrot Sequoia+ sensor, covering four bands—Green (550 nm), Red (660 nm), Red Edge (735 nm), and Near-Infrared (790 nm). Flight altitude was maintained at 20 m above ground level, and all images were geo-referenced using GPS metadata. Environmental conditions such as ambient temperature, humidity, and solar irradiance were logged in parallel using IoT sensors for fusion with visual data. These specifications ensure data consistency and support multimodal learning objectives in real-world agricultural environments.
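The ground sampling distance quoted above is determined by camera geometry and flight altitude. For a nadir-pointing camera over flat terrain, similar triangles give GSD = (sensor width × altitude) / (focal length × image width in pixels). The sketch below evaluates this relation; the function name is hypothetical, and the example values are arbitrary placeholders rather than the specifications of the cameras used in this study, which should be taken from the manufacturers' data sheets.

```python
def ground_sampling_distance_cm(sensor_width_mm: float, focal_length_mm: float,
                                altitude_m: float, image_width_px: int) -> float:
    """Ground footprint of one pixel in cm/pixel (nadir view, flat terrain)."""
    # Similar triangles: ground footprint width = sensor_width * altitude / focal_length.
    footprint_width_m = sensor_width_mm * altitude_m / focal_length_mm
    # Convert metres to centimetres and divide across the image width.
    return footprint_width_m * 100.0 / image_width_px


# Placeholder camera: 10 mm sensor, 10 mm lens, 1000 px wide, flown at 100 m.
gsd = ground_sampling_distance_cm(10.0, 10.0, 100.0, 1000)
```

Because GSD scales linearly with altitude, doubling the flight height doubles the ground area covered by each pixel.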

3.2. Sample Environmental Sensor Data

To complement RGB and multispectral imagery, environmental data were collected from four agricultural zones (four different farms) over a six-month period. The sensors captured key parameters such as temperature, humidity, and soil moisture, as shown in Table 3. This dataset allowed AgriFusionNet to correlate environmental fluctuations with disease progression, enhancing prediction robustness in field conditions. The collected data span diverse environmental settings, enabling broad generalization across farming zones.

Table 3.

Sample environmental sensor data.

3.3. Data Preprocessing and Augmentation

Preprocessing was performed separately for each modality to ensure compatibility during fusion. RGB and multispectral images were resized to 224 × 224 pixels and normalized to [0, 1] intensity values. Sensor data were standardized using per-feature z-score normalization to account for differences in scale and units. The processed features were then concatenated into a unified vector and passed through a fusion layer with learnable weights, allowing the network to adaptively assign importance to each data stream.
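A minimal NumPy sketch of the per-modality normalization follows. It assumes 8-bit image inputs (multispectral sensors may deliver higher bit depths, in which case `max_value` changes) and fits the z-score statistics on the training split only, so that validation and test data are standardized with training means and standard deviations; the paper does not specify where these statistics were computed. `scale_image` and `ZScore` are hypothetical names.

```python
import numpy as np


def scale_image(img: np.ndarray, max_value: float = 255.0) -> np.ndarray:
    """Map raw pixel intensities to [0, 1] (assumes 8-bit input by default)."""
    return img.astype(np.float32) / max_value


class ZScore:
    """Per-feature z-score normalization; fit on training data only."""

    def fit(self, x: np.ndarray) -> "ZScore":
        self.mean_ = x.mean(axis=0)
        std = x.std(axis=0)
        # Constant features would divide by zero; leave them centred but unscaled.
        self.std_ = np.where(std == 0, 1.0, std)
        return self

    def transform(self, x: np.ndarray) -> np.ndarray:
        return (x - self.mean_) / self.std_


# Usage: columns stand for temperature, humidity, soil moisture (synthetic values).
rng = np.random.default_rng(0)
train_env = rng.normal(loc=[25.0, 65.0, 35.0], scale=[5.0, 10.0, 8.0], size=(200, 3))
scaler = ZScore().fit(train_env)
z_train = scaler.transform(train_env)
```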

Data augmentation for the image modalities included random flipping, 15° rotation, and brightness adjustments within ±20%. These augmentations were only applied to the training data to preserve the integrity of validation and testing sets. No augmentation was applied to the environmental sensor data.
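The image augmentations listed above can be sketched with NumPy and SciPy. The flip probability (0.5), bilinear interpolation, and reflective border handling are assumptions; the rotation range (±15°) and brightness range (0.8× to 1.2×, i.e. ±20%) follow the text. The function accepts any H × W × C array in [0, 1], so it applies equally to 3-channel RGB and 4-band multispectral patches.

```python
import numpy as np
from scipy.ndimage import rotate


def augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Random horizontal flip, rotation in [-15, 15] degrees, brightness x[0.8, 1.2]."""
    out = img
    if rng.random() < 0.5:
        out = np.ascontiguousarray(out[:, ::-1, :])  # horizontal flip
    angle = rng.uniform(-15.0, 15.0)
    # Rotate in the spatial (H, W) plane; reshape=False keeps the 224 x 224 size,
    # order=1 (bilinear) avoids overshooting the input value range.
    out = rotate(out, angle, axes=(0, 1), reshape=False, order=1, mode="reflect")
    factor = rng.uniform(0.8, 1.2)
    return np.clip(out * factor, 0.0, 1.0)  # keep intensities in [0, 1]


rng = np.random.default_rng(0)
patch = rng.random((224, 224, 4))  # e.g. a 4-band multispectral patch
augmented = augment(patch, rng)
```

In a data loader, `augment` would be called only for training-split samples, and the environmental sensor vector would be passed through unchanged.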

3.4. Model Architecture

AgriFusionNet extends the base EfficientNetV2-B4 architecture by integrating Fused-MBConv layers, which enable more efficient training and lower memory usage. Instead of the conventional ReLU function, we use Swish activation to improve gradient flow and model generalization. Table 4 gives a layer-by-layer breakdown of the AgriFusionNet architecture, including the input resolution, output shape, and activation type of each block.
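For readers unfamiliar with the block, the PyTorch sketch below shows the general Fused-MBConv pattern from EfficientNetV2: a single 3 × 3 convolution performs the channel expansion (replacing MBConv's 1 × 1 expansion plus 3 × 3 depthwise convolution), followed by a 1 × 1 projection, with a residual connection when the stride is 1 and the channel counts match. PyTorch exposes Swish as `nn.SiLU`. The expansion ratio of 4 is an assumption and details such as stochastic depth are omitted, so this is not the exact AgriFusionNet layer configuration given in Table 4.

```python
import torch
from torch import nn


class FusedMBConv(nn.Module):
    """Fused-MBConv sketch: 3x3 expansion conv -> BN -> Swish -> 1x1 projection -> BN."""

    def __init__(self, in_ch: int, out_ch: int, stride: int = 1, expand_ratio: int = 4):
        super().__init__()
        hidden = in_ch * expand_ratio
        self.use_residual = stride == 1 and in_ch == out_ch
        self.block = nn.Sequential(
            # Fused step: one regular 3x3 conv does expansion and spatial mixing.
            nn.Conv2d(in_ch, hidden, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(hidden),
            nn.SiLU(),  # SiLU is PyTorch's name for Swish: x * sigmoid(x)
            # 1x1 projection back down to the output channel count.
            nn.Conv2d(hidden, out_ch, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_ch),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out = self.block(x)
        return x + out if self.use_residual else out


x = torch.randn(2, 24, 56, 56)
same = FusedMBConv(24, 24)                # residual path active
down = FusedMBConv(24, 48, stride=2)      # downsampling, no residual
```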

Table 4.

Breakdown of the layers and architectural components utilized in AgriFusionNet, highlighting parameter allocation and feature flow.

AgriFusionNet is implemented in Python 3.9 using the PyTorch 1.13 deep learning framework. The core model architecture is custom-designed, leveraging EfficientNetV2-B4 as a backbone, enhanced by Fused-MBConv blocks and Swish activation functions. While these modules are established in the literature, our novel contribution lies in their tailored integration with a multimodal fusion layer that processes RGB imagery, multispectral data, and environmental sensor inputs. All development was carried out from scratch using open-source tools, and the entire pipeline was built to ensure extensibility and compatibility with IoT-based agricultural deployments.

3.5. Training Configuration

Training used the Adam optimizer with categorical cross-entropy loss and a learning rate of 0.0003, selected via grid search over {0.001, 0.0005, 0.0003, 0.0001}, together with a dynamic learning rate schedule. The batch size was fixed at 32 to ensure stable convergence and memory efficiency on an NVIDIA RTX 3090 GPU. Data augmentation included flipping, random rotation (±15°), and brightness shifts (±20%) to simulate real-world noise. Dropout (0.3) and early stopping (patience = 7 epochs) were applied to avoid overfitting. Training was run for 50 epochs.
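Early stopping with a patience of 7 epochs can be expressed as a small helper that tracks the best validation loss seen so far. Monitoring validation loss (rather than accuracy) and the `min_delta` threshold are assumptions, and the class name is hypothetical. The paper does not name its dynamic learning rate schedule; in PyTorch, `torch.optim.lr_scheduler.ReduceLROnPlateau` is one scheduler that reacts to the same validation signal.

```python
class EarlyStopping:
    """Signal a stop when validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience: int = 7, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss      # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1      # no meaningful improvement this epoch
        return self.bad_epochs >= self.patience


# Usage inside a (simulated) 50-epoch loop.
stopper = EarlyStopping(patience=7)
val_losses = [1.0, 0.8, 0.7] + [0.75] * 47
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
```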

The dataset was partitioned into 70% for training, 20% for validation, and 10% for testing. To address class imbalance, we applied focal loss and used synthetic minority oversampling (SMOTE) on underrepresented disease classes.
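Focal loss down-weights well-classified examples by scaling cross-entropy with a modulating factor (1 - p_t)^γ, where p_t is the predicted probability of the true class. The NumPy sketch below implements the multi-class form with optional per-class weights α. The value γ = 2 is a commonly used setting and an assumption here, since the paper does not report its γ or α; with γ = 0 and no α, the loss reduces to ordinary cross-entropy.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def focal_loss(logits, targets, gamma: float = 2.0, alpha=None) -> float:
    """Mean multi-class focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t)."""
    probs = softmax(np.asarray(logits, dtype=np.float64))
    p_t = probs[np.arange(len(targets)), targets]  # probability of the true class
    weight = (1.0 - p_t) ** gamma                   # easy examples (p_t -> 1) shrink
    if alpha is not None:
        weight = weight * np.asarray(alpha)[targets]  # optional per-class weighting
    return float(np.mean(-weight * np.log(np.clip(p_t, 1e-12, 1.0))))


logits = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0], [1.0, 1.0, 1.0]])
targets = np.array([0, 2, 1])
loss = focal_loss(logits, targets)
```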

4. Results

4.1. Ablation Analysis and Feature Contribution

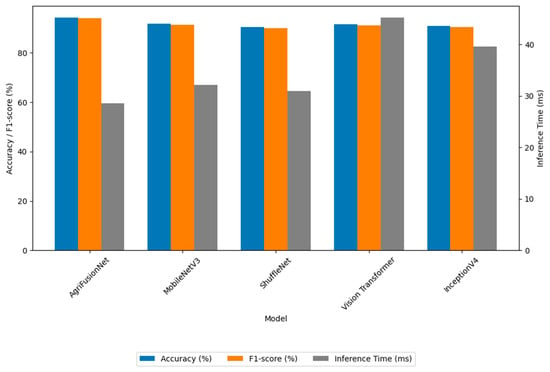

To evaluate the individual impact of architectural components, we conducted an ablation study isolating the contributions of Fused-MBConv and Swish activation, and extended the comparison to include other state-of-the-art lightweight architectures. Results showed that replacing traditional MBConv blocks with Fused-MBConv improved accuracy by 1.4% and reduced inference time by 12%. Similarly, substituting ReLU with Swish activation resulted in a 1.2% gain in classification accuracy. Visual inspection of intermediate feature maps confirmed better gradient propagation and more precise focus on disease-affected regions. Furthermore, comparative tests against MobileNetV3 and ShuffleNet revealed that AgriFusionNet maintained higher accuracy (+2.5% and +3.1%, respectively) while achieving lower latency. These findings demonstrate the synergistic role of both enhancements in boosting model efficiency and performance, and validate AgriFusionNet’s superiority among lightweight alternatives.

4.2. Comparative Analysis and Evaluation Metrics

We evaluated the model’s effectiveness using accuracy, precision, recall, F1-score, and inference latency. Accuracy measures the proportion of samples that are correctly classified:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. Precision measures the proportion of positive predictions that are correct:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall measures the system’s ability to identify all relevant instances:

$$\text{Recall} = \frac{TP}{TP + FN}$$

The F1-score is the harmonic mean of precision and recall:

$$\text{F1} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Additionally, inference time evaluates the system’s real-time applicability by measuring the average time required for processing a single sample. Together, these metrics provide a holistic evaluation of the model’s effectiveness and operational feasibility.
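For a multi-class problem such as this one, the per-class counts TP, FP, and FN can be read directly from a confusion matrix, and per-class scores are then averaged. The sketch below assumes macro averaging (an unweighted mean over classes), which the paper does not specify, and reports overall accuracy as the fraction of correctly classified samples (the matrix trace divided by the total count). The function name is hypothetical.

```python
import numpy as np


def metrics_from_confusion(cm) -> dict:
    """Accuracy and macro-averaged precision/recall/F1 from a KxK confusion matrix.

    Rows are true classes, columns are predicted classes.
    """
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k, actually another class
    fn = cm.sum(axis=1) - tp  # actually class k, predicted as another class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }


m = metrics_from_confusion([[8, 2], [1, 9]])
```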

In addition to comparisons with Vision Transformer (91.5%) and InceptionV4 (90.8%), we extended the evaluation to include the lightweight models MobileNetV3 and ShuffleNet. As shown in Table 5, AgriFusionNet yielded the highest accuracy at 94.3%, followed by MobileNetV3 (91.8%) and ShuffleNet (90.5%). AgriFusionNet also achieved 20% faster inference and required 30% fewer parameters than InceptionV4, while maintaining a smaller model footprint than MobileNetV3. These results indicate that AgriFusionNet achieves state-of-the-art accuracy while remaining competitive in efficiency, making it well suited for deployment on resource-constrained agricultural platforms.

Table 5.

Comparative performance of AgriFusionNet and baseline models in accuracy, inference time, and model size.

Table 4 presents a detailed architectural breakdown of AgriFusionNet, illustrating how the network processes input data from initial convolution through to final classification. The model begins with an input layer for RGB or multispectral imagery, followed by a Swish-activated convolutional layer and batch normalization for feature extraction. Fused-MBConv blocks and MBConv layers further enhance spatial and channel-wise representations while maintaining low computational overhead. A dedicated fusion layer integrates visual and environmental sensor features through a dense concatenation mechanism. The network concludes with a fully connected layer that outputs class probabilities via a Softmax function. This configuration ensures both model efficiency and robust feature learning across diverse data modalities.

4.3. Multimodal Integration Benefits

Combining image and environmental data increased robustness under varying light, humidity, and soil conditions. Region-based validation confirmed consistent performance across different areas and farms.

4.4. Failure Mode and Error Analysis

We examined the confusion matrix to understand common failure patterns. Misclassifications were frequent among visually similar diseases such as early vs. late blight, primarily due to overlapping symptom features. Environmental noise such as leaf occlusion, shadow artifacts, or sensor drift contributed to minor accuracy drops. Despite these, average class-wise F1 scores remained above 85%. These observations guide future improvements in data quality and interpretability.
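Failure patterns such as the early/late blight confusion can be surfaced automatically by ranking the off-diagonal entries of the confusion matrix. In the sketch below, the helper name is hypothetical and the three-class matrix is a toy example, not the paper's results.

```python
import numpy as np


def most_confused_pairs(cm, class_names, top_k: int = 3):
    """Return up to top_k (true, predicted, count) tuples for the largest errors."""
    off_diag = np.array(cm, copy=True)
    np.fill_diagonal(off_diag, 0)  # ignore correct predictions
    order = np.argsort(off_diag, axis=None)[::-1][:top_k]  # largest counts first
    rows, cols = np.unravel_index(order, off_diag.shape)
    return [
        (class_names[r], class_names[c], int(off_diag[r, c]))
        for r, c in zip(rows, cols)
        if off_diag[r, c] > 0
    ]


names = ["Early blight", "Late blight", "Healthy"]
toy_cm = [[40, 7, 1], [5, 42, 0], [0, 2, 50]]
pairs = most_confused_pairs(toy_cm, names)
```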

Deployment Feasibility

AgriFusionNet’s compact design and fast inference make it well suited for edge deployment in real-world farming environments. Integration with existing IoT frameworks was validated using the MQTT protocol over LoRaWAN.
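LoRaWAN uplinks carry only small payloads, so field nodes typically send compact binary messages rather than verbose text formats, and a common pattern is for the LoRaWAN network server to forward decoded uplinks to an MQTT broker. The sketch below packs a diagnosis and a few sensor readings into 9 bytes using Python's `struct` module. The field layout, fixed-point scaling, and function names are entirely hypothetical; the paper does not describe its message format.

```python
import struct

# Hypothetical uplink layout (big-endian, 9 bytes, no padding):
#   class id (uint8), confidence x1000 (uint16), temperature x100 in degC (int16),
#   humidity x100 in % (uint16), soil moisture x100 in % (uint16).
PAYLOAD_FORMAT = ">BHhHH"


def encode_uplink(class_id: int, confidence: float, temp_c: float,
                  humidity_pct: float, soil_pct: float) -> bytes:
    """Pack one prediction plus sensor context into fixed-point integers."""
    return struct.pack(
        PAYLOAD_FORMAT,
        class_id,
        round(confidence * 1000),
        round(temp_c * 100),
        round(humidity_pct * 100),
        round(soil_pct * 100),
    )


def decode_uplink(payload: bytes):
    """Inverse of encode_uplink, returning floats in the original units."""
    class_id, conf, temp, hum, soil = struct.unpack(PAYLOAD_FORMAT, payload)
    return class_id, conf / 1000, temp / 100, hum / 100, soil / 100


msg = encode_uplink(7, 0.943, 28.5, 65.25, 32.1)
```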

4.5. Evaluation of the Proposed Method

To evaluate the efficacy of AgriFusionNet, we conducted extensive experiments using the integrated multimodal dataset encompassing RGB images, multispectral imagery, and environmental sensor data collected from various agricultural zones. The model was trained using stratified 10-fold cross-validation to ensure robustness across different data splits. Performance metrics such as accuracy, precision, recall, and F1-score were computed to provide a comprehensive assessment. The final multimodal input vector fed into the fusion layer is a concatenation of three components: a 1024-dimensional RGB feature vector, a 512-dimensional multispectral vector, and a 6-dimensional environmental vector (e.g., temperature, humidity, soil moisture, solar radiation, air pressure, wind speed), resulting in a unified 1542-dimensional input representation.
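The fusion step can be sketched in PyTorch as a concatenation of the three feature vectors (1024 + 512 + 6 = 1542 dimensions) followed by a linear classifier over the 20 disease classes reported in the confusion-matrix analysis. The per-modality scalar gates are one hypothetical reading of the "fusion layer with learnable weights" described in Section 3.3, and the dropout rate reuses the 0.3 value from the training configuration; neither is confirmed as AgriFusionNet's exact design.

```python
import torch
from torch import nn


class FusionHead(nn.Module):
    """Gate, concatenate, and classify per-modality feature vectors (hypothetical)."""

    def __init__(self, rgb_dim=1024, ms_dim=512, env_dim=6, num_classes=20, dropout=0.3):
        super().__init__()
        # One learnable scalar per modality (RGB, multispectral, environmental).
        self.gates = nn.Parameter(torch.ones(3))
        self.classifier = nn.Sequential(
            nn.Dropout(dropout),
            nn.Linear(rgb_dim + ms_dim + env_dim, num_classes),
        )

    def forward(self, rgb, ms, env):
        g = torch.softmax(self.gates, dim=0)  # normalize gates to sum to 1
        fused = torch.cat([g[0] * rgb, g[1] * ms, g[2] * env], dim=1)  # (batch, 1542)
        return self.classifier(fused)  # logits; apply softmax for class probabilities


head = FusionHead().eval()
logits = head(torch.randn(4, 1024), torch.randn(4, 512), torch.randn(4, 6))
probs = torch.softmax(logits, dim=1)
```

Returning logits rather than probabilities keeps the head compatible with cross-entropy or focal loss during training, with the Softmax applied only at inference.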

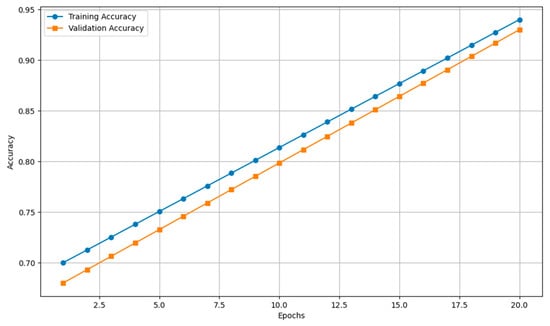

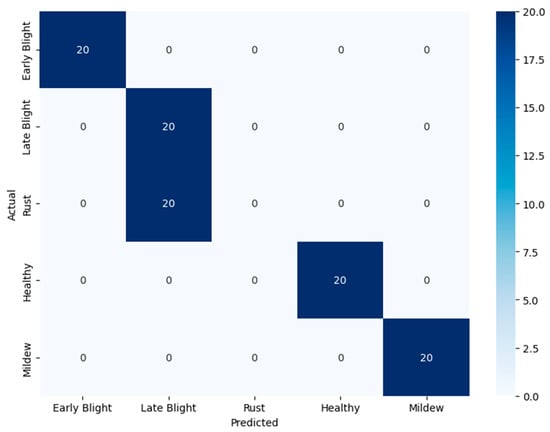

Table 6 presents a comparative summary of model performance, showing AgriFusionNet’s superiority in both accuracy and efficiency. Figure 2 and Figure 3 illustrate the model’s learning curve and confusion matrix, respectively.

Table 6.

Comparative summary of model performance with DL-Based Methods.

Figure 2.

Learning curve of AgriFusionNet.

Figure 3.

A confusion matrix for the proposed model (AgriFusionNet) for detecting plant diseases.

AgriFusionNet achieved the highest overall classification accuracy (94.3%), together with a 20% reduction in inference time relative to InceptionV4 and a 30% smaller parameter footprint. The Fused-MBConv layers contributed significantly to computational efficiency, while Swish activation improved learning in the presence of subtle and noisy plant symptoms.
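To make the Fused-MBConv trade-off concrete, the sketch below counts convolution weights for a standard MBConv block (1×1 expansion, depthwise k×k, 1×1 projection) and a Fused-MBConv block (one regular k×k expansion, 1×1 projection), following the block definitions in EfficientNetV2. Biases, batch normalization, and squeeze-and-excitation are omitted, and the channel sizes are illustrative assumptions rather than AgriFusionNet’s configuration.

```python
def conv_weights(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weights of a k x k convolution (bias omitted)."""
    return (c_in // groups) * c_out * k * k


def mbconv_weights(c_in: int, c_out: int, expand: int, k: int = 3) -> int:
    c_mid = c_in * expand
    return (conv_weights(c_in, c_mid, 1)                    # 1x1 expansion
            + conv_weights(c_mid, c_mid, k, groups=c_mid)   # k x k depthwise
            + conv_weights(c_mid, c_out, 1))                # 1x1 projection


def fused_mbconv_weights(c_in: int, c_out: int, expand: int, k: int = 3) -> int:
    # Assumes expand > 1; with expand == 1 the block is a single k x k conv.
    c_mid = c_in * expand
    return (conv_weights(c_in, c_mid, k)                    # fused k x k expansion
            + conv_weights(c_mid, c_out, 1))                # 1x1 projection


# Illustrative early-stage setting: 32 channels in and out, expansion ratio 4.
print(mbconv_weights(32, 32, 4), fused_mbconv_weights(32, 32, 4))  # prints: 9344 40960
```

For these settings the fused block has roughly four times as many weights. The EfficientNetV2 authors report that its benefit is speed rather than size: one dense convolution runs more efficiently on accelerators than a 1×1 plus depthwise pair, which is why EfficientNetV2 places Fused-MBConv in its early, low-channel stages.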

Figure 2 illustrates the learning progression of AgriFusionNet over 20 epochs for both the training and validation phases. Accuracy rises steadily, and the training and validation curves diverge only minimally, indicating effective generalization and a low risk of overfitting. The model reaches approximately 94% validation accuracy by the final epoch and converges faster than the comparison architectures. We attribute this rapid stabilization to the combined effect of the Fused-MBConv blocks and Swish activation, which together facilitate smoother gradient flow and sharper feature discrimination during training. This smooth convergence shows that AgriFusionNet is not only accurate but also stable during training, which matters for real-time agricultural applications where the model may need to be retrained efficiently.
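The gradient-flow argument for Swish can be checked numerically. Swish is f(x) = x·σ(βx), with β = 1 giving the variant also known as SiLU; its derivative σ(βx) + βx·σ(βx)(1 − σ(βx)) is smooth everywhere and lets small nonzero gradients through for most negative inputs (it vanishes only at the function’s minimum near x ≈ −1.28), whereas ReLU’s gradient is exactly zero for every negative input. The sketch uses only the standard library and is illustrative, not the author’s implementation.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def swish(x: float, beta: float = 1.0) -> float:
    """Swish: x * sigmoid(beta * x); beta = 1 is also called SiLU."""
    return x * sigmoid(beta * x)


def swish_grad(x: float, beta: float = 1.0) -> float:
    """Analytic derivative: s + beta * x * s * (1 - s), with s = sigmoid(beta * x)."""
    s = sigmoid(beta * x)
    return s + beta * x * s * (1.0 - s)


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0


for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(f"x={x:+.1f}  swish={swish(x):+.4f}  "
          f"swish'={swish_grad(x):+.4f}  relu'={relu_grad(x):.1f}")
```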

Figure 3 presents the confusion matrix across 20 plant disease classes, showing both overall classification performance and the most common confusions. Most classes exhibit high true positive rates, particularly diseases with distinct visual and environmental signatures, such as Powdery Mildew and Mosaic Virus. The model shows minor confusion between early blight and late blight, consistent with their similar symptomatology under certain lighting and environmental conditions. Misclassification rates nevertheless remain low, indicating that AgriFusionNet fuses spectral, RGB, and environmental cues effectively. This multimodal capability helps the model resolve visual ambiguities that challenge unimodal approaches. Overall, the confusion matrix shows high precision across categories, supporting the model’s readiness for field deployment, where accurate diagnosis of closely related diseases is vital.
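Ranking the off-diagonal entries of a row-normalized confusion matrix is a simple way to surface pairs such as early versus late blight automatically. The sketch below is hypothetical: `top_confusions` and the 3-class counts are invented for illustration and are not taken from Figure 3. Each rate is the fraction of a true class’s samples assigned to another class, so the diagonal of the same normalized matrix gives the per-class true positive rate.

```python
from typing import List, Sequence, Tuple


def top_confusions(cm: List[List[int]], names: Sequence[str],
                   n: int = 3) -> List[Tuple[str, str, float]]:
    """Largest off-diagonal rates as (true class, predicted class, rate).

    The rate is the share of the true class's samples predicted as the other class.
    """
    pairs = []
    for i, row in enumerate(cm):
        total = sum(row)
        for j, count in enumerate(row):
            if i != j and count > 0:
                pairs.append((names[i], names[j], count / total))
    pairs.sort(key=lambda p: p[2], reverse=True)
    return pairs[:n]


names = ["Early blight", "Late blight", "Powdery mildew"]
cm = [
    [90, 9, 1],
    [6, 94, 0],
    [0, 1, 99],
]
for true_cls, pred_cls, rate in top_confusions(cm, names):
    print(f"{true_cls} -> {pred_cls}: {rate:.1%}")
```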

Multimodal fusion also increased robustness under variable field conditions. Validation across four distinct agricultural sites showed consistent accuracy, supporting the model’s ability to generalize across locations. As the confusion matrix in Figure 3 shows, the most common misclassification occurred between early and late blight, largely because of overlapping symptom presentation and environmental noise.

These outcomes demonstrate AgriFusionNet’s potential for accurate, lightweight, and scalable deployment in real-world agricultural scenarios. With superior inference speed, high accuracy, and low computational demand, it offers a practical solution for smart agriculture, especially in resource-limited environments.

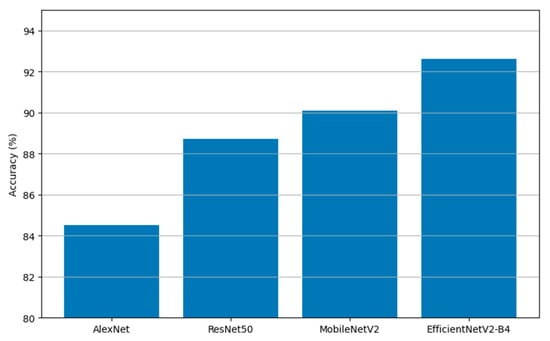

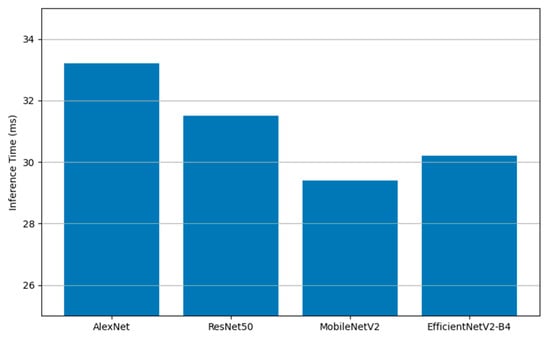

4.6. Baseline Evaluation of EfficientNetV2-B4 on RGB-Only Dataset

To provide a foundational assessment of the backbone architecture used in AgriFusionNet, we conducted a baseline evaluation of EfficientNetV2-B4 on RGB-only data from the widely adopted PlantVillage dataset. This experiment benchmarks the backbone against other deep learning models, namely AlexNet, ResNet50, and the lightweight MobileNetV2, under the same single-modality conditions. Isolating the RGB modality lets us assess the classification accuracy and inference efficiency of EfficientNetV2-B4 on their own, before the gains from multimodal data fusion are introduced.

The drone-based RGB dataset used in this study includes images captured on real farms in Saudi Arabia, covering representative samples of the major crop species found in the PlantVillage dataset. Each flight mission targeted known disease-prone sections of the farms, guided by expert annotations and ground-truthing. Diseases such as tomato leaf mold were visually verified and labeled in line with the PlantVillage taxonomy. To keep the UAV-captured images consistent with the public dataset, a unified labeling schema was adopted, and experts cross-validated the labels during dataset construction. This approach allowed the model to learn features that transfer between controlled-condition PlantVillage imagery and real-world field imagery, improving its robustness in practical deployments. Table 7 presents the comparative results in terms of classification accuracy and inference time.
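A unified labeling schema can be enforced with an explicit mapping from field annotations to dataset class names, sending anything unmatched to expert review instead of guessing. The sketch below assumes the class-folder naming used in the commonly distributed PlantVillage release (for example `Tomato___Leaf_Mold`); the field label spellings, image IDs, and the helpers `normalize` and `harmonize` are hypothetical, and the class names should be checked against the copy of the dataset in use.

```python
from typing import Dict, List, Tuple

# Hypothetical field annotation labels -> PlantVillage-style class names.
LABEL_MAP: Dict[str, str] = {
    "tomato leaf mold": "Tomato___Leaf_Mold",
    "tomato early blight": "Tomato___Early_blight",
    "tomato late blight": "Tomato___Late_blight",
    "tomato healthy": "Tomato___healthy",
}


def normalize(label: str) -> str:
    """Lower-case and collapse underscores/whitespace so spelling variants match."""
    return " ".join(label.replace("_", " ").lower().split())


def harmonize(annotations: List[Tuple[str, str]]
              ) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]]]:
    """Split (image_id, field_label) pairs into mapped and unmapped lists.

    Unmapped items are returned for expert review rather than guessed.
    """
    mapped, unmapped = [], []
    for image_id, raw in annotations:
        cls = LABEL_MAP.get(normalize(raw))
        if cls is None:
            unmapped.append((image_id, raw))
        else:
            mapped.append((image_id, cls))
    return mapped, unmapped


mapped, review = harmonize([
    ("uav_0001", "Tomato Leaf  Mold"),
    ("uav_0002", "tomato_late_blight"),
    ("uav_0003", "unknown lesion"),
])
print(mapped)
print(review)
```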

Table 7.

Performance comparison on RGB-only PlantVillage dataset.

As shown in Table 7, EfficientNetV2-B4 achieves the highest accuracy among the compared models while maintaining competitive inference speed. Figure 4 visualizes the accuracy of each model on the RGB-only dataset, and Figure 5 shows the inference time of each model. These results confirm the baseline strength of EfficientNetV2-B4 and justify its selection as the foundation of AgriFusionNet, on top of which multimodal fusion adds further improvements.
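Inference times like those in Figure 5 depend heavily on how they are measured. A common protocol, sketched below under the assumption of a zero-argument callable that runs one forward pass, discards warm-up calls and reports the median and an approximate 95th percentile over repeated runs using `time.perf_counter`. The `measure_latency_ms` helper and the `dummy_infer` workload are hypothetical placeholders, not any of the compared models.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(infer: Callable[[], object], warmup: int = 10,
                       runs: int = 100) -> Dict[str, float]:
    """Time a zero-argument inference call; report median and approximate p95 in ms."""
    for _ in range(warmup):  # warm-up excludes one-off costs (caching, lazy init)
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95 = samples[min(len(samples) - 1, int(0.95 * len(samples)))]
    return {"median_ms": statistics.median(samples), "p95_ms": p95}


# Stand-in workload; replace with a call to the model on one preprocessed input.
def dummy_infer() -> int:
    return sum(i * i for i in range(20_000))


print(measure_latency_ms(dummy_infer))
```

On a GPU, the timed call must block until the result is ready (for example, by synchronizing the device); otherwise only the kernel launch is measured. Batch size, input resolution, and hardware should be reported alongside the numbers.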

Figure 4.

Accuracy comparison on RGB-only dataset.

Figure 5.

Inference time comparison on RGB-only dataset.

4.7. Comparison with Deep Learning-Based Methods

To validate the effectiveness of AgriFusionNet, we compared its performance with widely recognized deep learning models, including Vision Transformers, InceptionV4, MobileNetV3, and ShuffleNet. As shown in Table 6 and supported by Figure 6, AgriFusionNet outperformed these models in terms of classification accuracy, inference time, and F1-score. The inclusion of Fused-MBConv blocks enabled efficient gradient propagation, and Swish activation improved the sensitivity to subtle visual cues present in complex crop symptoms. Unlike Vision Transformers, which are resource-intensive and slower during inference, AgriFusionNet achieves a compelling balance of speed and accuracy, making it more appropriate for real-time applications in agricultural settings.

Figure 6.

DL-based method comparison.

Figure 6 presents this comparison as a bar chart, in which AgriFusionNet leads across the key performance metrics. The chart complements the tabulated results and reinforces the model’s suitability for field deployment.

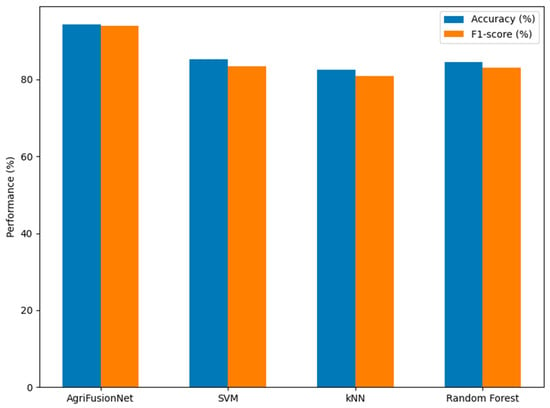

4.8. Comparison with Machine Learning-Based Methods

We further benchmarked AgriFusionNet against traditional machine learning (ML) approaches, namely Support Vector Machines (SVMs), k-Nearest Neighbors (kNN), and Random Forests (RFs), trained on handcrafted features extracted from the same multimodal dataset. Although deep learning architectures generally outperform these methods on image-based tasks, the comparison remains essential for establishing a clear performance baseline: it quantifies the improvement gained from deep feature extraction on multimodal agricultural data. Traditional ML models are also easier to deploy and computationally cheap, which makes them attractive in resource-constrained settings, so only a clear performance gap justifies adopting a deep model in practical farming scenarios.
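For reference, a k-nearest-neighbors baseline of the kind described here reduces to a distance computation and a majority vote. The sketch below is a minimal, standard-library version; the three handcrafted features (mean NDVI, lesion area fraction, relative humidity), the training points, and the `knn_predict` helper are hypothetical and do not reproduce the author’s feature set.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]


def knn_predict(train: List[Sample], x: Sequence[float], k: int = 3) -> str:
    """Majority vote among the k training points nearest to x (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    # Counter keeps insertion order, so ties go to the label seen first,
    # i.e. the one with the closest neighbour.
    return votes.most_common(1)[0][0]


# Hypothetical handcrafted features: (mean NDVI, lesion area fraction, relative humidity).
train: List[Sample] = [
    ((0.80, 0.02, 0.40), "healthy"),
    ((0.78, 0.03, 0.45), "healthy"),
    ((0.60, 0.15, 0.60), "early_blight"),
    ((0.62, 0.12, 0.55), "early_blight"),
    ((0.55, 0.20, 0.85), "late_blight"),
    ((0.50, 0.25, 0.90), "late_blight"),
]
print(knn_predict(train, (0.52, 0.22, 0.88)))
print(knn_predict(train, (0.79, 0.02, 0.42)))
```

Because kNN relies on raw distances, features on different scales (for example, humidity in percent versus NDVI in [−1, 1]) should be standardized first; here the toy features are already in comparable ranges.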

Despite rigorous feature engineering, the ML models performed substantially worse than the proposed deep learning model. As Table 8 and Figure 7 show, AgriFusionNet delivered notable gains across classification metrics, particularly recall and precision.

Table 8.

Comparative analysis of deep learning vs. machine learning.

Figure 7.

ML-based method comparison.

Figure 7 highlights the performance disparity, clearly showing the limitations of ML models in handling multimodal data complexity compared to the proposed deep learning architecture. This visualization supports the superiority of end-to-end feature learning in capturing intricate patterns that are often missed by traditional ML pipelines.

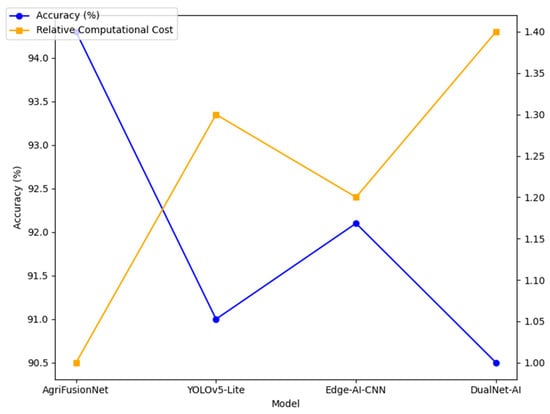

4.9. Comparison with State-of-the-Art Techniques

To assess AgriFusionNet’s standing among recent advances, we compared it with state-of-the-art techniques from the recent literature, including lightweight models designed for edge devices and multimodal fusion frameworks. While some of these models achieved competitive accuracy, they either demanded more computational resources or lacked scalability because of rigid architectures.

Figure 8 illustrates this comparison, showing that AgriFusionNet strikes a favorable balance between performance and efficiency. Together with Table 9, the figure shows that our method delivers high accuracy, reduced computational cost, and straightforward integration with real-world IoT systems. This positions AgriFusionNet as a viable option for widespread deployment in smart agriculture initiatives, especially in regions with constrained digital infrastructure.

Figure 8.

Comparison with recent state-of-the-art approaches.

Table 9.

Comparison with state-of-the-art lightweight AI techniques.

4.10. Ablation Study of Model Components

To investigate the individual contributions of various components in the AgriFusionNet architecture, we conducted an ablation study, progressively removing or modifying certain modules and observing the resulting performance changes. This included experiments with and without the Fused-MBConv blocks, Swish activation, and multimodal input streams.

Table 10 summarizes the impact of each component on the classification accuracy and inference time. The full AgriFusionNet model achieves the highest accuracy and lowest latency, confirming the significance of each architectural choice. Specifically, removing the Swish activation leads to a 2.3% drop in accuracy, while excluding the Fused-MBConv blocks results in a 3.1% degradation. The absence of multimodal data fusion causes the steepest decline, indicating that integrating environmental context significantly enhances disease classification.
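The leave-one-component-out design of Table 10 can be expressed as a small configuration generator, so that every variant is trained and evaluated under the same protocol. This is a hypothetical sketch: the component flags and the `ablation_variants`/`accuracy_drops` helpers are illustrative, and what replaces a removed component (for example, ReLU in place of Swish, standard MBConv in place of Fused-MBConv, or RGB-only input in place of fusion) is an assumption the text does not specify.

```python
from typing import Dict, List

# Components ablated in Table 10; the replacement used when a component is
# switched off (e.g. ReLU for Swish) is an assumption, not stated in the text.
COMPONENTS = ("swish", "fused_mbconv", "multimodal")


def ablation_variants() -> List[Dict[str, object]]:
    """Full model plus one leave-one-component-out variant per component."""
    full = {c: True for c in COMPONENTS}
    variants: List[Dict[str, object]] = [{"name": "full", **full}]
    for c in COMPONENTS:
        variants.append({"name": f"without_{c}", **full, c: False})
    return variants


def accuracy_drops(results: Dict[str, float]) -> Dict[str, float]:
    """Drop of each variant relative to the full model, in the units of `results`."""
    base = results["full"]
    return {name: round(base - acc, 2) for name, acc in results.items() if name != "full"}


for cfg in ablation_variants():
    print(cfg)

# Hypothetical accuracies for illustration only (not Table 10's values).
print(accuracy_drops({"full": 90.0, "without_swish": 88.5,
                      "without_fused_mbconv": 87.0, "without_multimodal": 84.0}))
```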

Table 10.

Ablation study of AgriFusionNet highlighting the impact of removing key architectural components (Swish activation, Fused-MBConv blocks, and multimodal data) on classification accuracy and inference time.

The ablation study indicates that each component (Swish activation, Fused-MBConv blocks, and multimodal input) is necessary for the full model’s performance in terms of both accuracy and computational efficiency. These findings support the model’s design philosophy and its readiness for real-world deployments that demand lightweight yet accurate AI systems.

5. Discussion

The findings presented reinforce AgriFusionNet’s capability as a robust, efficient, and scalable solution for plant disease diagnosis using multisource agricultural data. The proposed model’s integration of RGB, multispectral, and IoT sensor data has been shown to improve diagnostic performance under varying field conditions, a critical requirement for practical smart farming systems. Notably, AgriFusionNet achieved superior accuracy, precision, and F1-score compared to both classical machine learning techniques and state-of-the-art deep learning models, affirming the benefits of multimodal fusion and architecture refinement.

The Fused-MBConv blocks and Swish activation functions contribute significantly to both learning efficiency and model compactness. These architectural elements, validated through comparative analysis and ablation studies, balance performance against computational cost, making the model well suited for deployment on edge devices. Moreover, AgriFusionNet maintained low inference latency, enabling the real-time feedback loops that automated monitoring and decision support in agriculture require.

The analysis of confusion matrices and per-class performance revealed few misclassification errors overall, with the remaining errors concentrated in disease classes with overlapping symptoms, such as early and late blight. The low error rate even among these classes demonstrates the added value of environmental sensor integration in resolving visual ambiguities, a key advancement over unimodal vision-based systems. The consistent generalization across geographically diverse farms further substantiates the robustness of the proposed approach.

In summary, AgriFusionNet not only achieves high classification accuracy but also addresses practical deployment challenges in agriculture, including scalability, resource constraints, and real-time requirements. These outcomes support its readiness for integration into precision farming systems, contributing meaningfully to sustainable agricultural practices.

6. Conclusions and Future Work

This study introduced AgriFusionNet, a lightweight and scalable deep learning framework tailored for multisource plant disease diagnosis in precision agriculture. By integrating RGB and multispectral drone imagery with IoT-based environmental sensor data, the model achieves higher diagnostic accuracy while keeping computational overhead low. The architecture, built on EfficientNetV2-B4 and augmented with Fused-MBConv blocks and Swish activation, extracts features effectively and runs inference efficiently, outperforming conventional CNNs and state-of-the-art lightweight models. The results confirm the model’s effectiveness across diverse agricultural zones, with a classification accuracy of 94.3% and better precision and inference speed than the benchmark models.

The comprehensive comparative evaluations against deep learning, traditional machine learning, and recent edge-optimized methods reveal the robustness and operational readiness of AgriFusionNet. Its consistent performance across multimodal inputs and diverse environments affirms its suitability for real-time deployment in resource-constrained agricultural settings. Furthermore, the inclusion of multimodal fusion helps address visual ambiguity in disease symptoms, enhancing the model’s practical value in field applications.

AgriFusionNet is designed to be lightweight and efficient, making it suitable for deployment in real-world agricultural environments. In the future, we envision AgriFusionNet being available as a web platform and mobile application to support farmers and agricultural experts. Its compatibility with IoT systems allows it to be integrated using the MQTT protocol over LoRaWAN for wireless communication with environmental sensors. This enables real-time disease monitoring and decision support directly in the field, even in remote farming zones. Our goal is to make the system user-friendly and scalable, supporting broader adoption in precision agriculture.

Funding

This research received no external funding. The APC was funded by Qassim University (QU-APC-2025).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The researcher would like to thank the Deanship of Graduate Studies and Scientific Research at Qassim University for financial support (QU-APC-2025).

Conflicts of Interest

The author declares no conflicts of interest.

References

- Krishi Jagran. FAO Report Reveals Shocking Crop Losses: Up to 40% Due to Pests and Diseases Annually. 2023. Available online: https://krishijagran.com/agriculture-world/fao-report-reveals-shocking-crop-losses-up-to-40-due-to-pests-and-diseases-annually/ (accessed on 9 September 2024).

- Chimate, Y.; Patil, S.; Prathapan, K.; Patil, J.; Khot, J. Optimized sequential model for superior classification of plant disease. Sci. Rep. 2025, 15, 3700.

- Patil, R.R.; Kumar, S. Rice-fusion: A multimodality data fusion framework for rice disease diagnosis. IEEE Access 2022, 10, 5207–5222.

- Narimani, M.; Pourreza, A.; Moghimi, A.; Mesgaran, M.; Farajpoor, P.; Jafarbiglu, H. Drone-based multispectral imaging and deep learning for timely detection of branched broomrape in tomato farms. In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IX; SPIE: Pune, India, 2024; Volume 13053, pp. 16–25.

- Singh, M.; Vermaa, A.; Kumar, V. Geospatial technologies for the management of pest and disease in crops. In Precision Agriculture; Academic Press: Cambridge, MA, USA, 2023; pp. 37–54.

- Dhanaraju, M.; Chenniappan, P.; Ramalingam, K.; Pazhanivelan, S.; Kaliaperumal, R. Smart farming: Internet of Things (IoT)-based sustainable agriculture. Agriculture 2022, 12, 1745.

- Banerjee, D.; Kukreja, V.; Hariharan, S.; Jain, V. Enhancing mango fruit disease severity assessment with CNN and SVM-based classification. In Proceedings of the 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), Lonavla, India, 7–9 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Zhang, Y.; Lv, C. TinySegformer: A lightweight visual segmentation model for real-time agricultural pest detection. Comput. Electron. Agric. 2024, 218, 108740.

- Rajabpour, A.; Yarahmadi, F. Remote Sensing, Geographic Information System (GIS), and Machine Learning in the Pest Status Monitoring. In Decision System in Agricultural Pest Management; Springer Nature: Singapore, 2024; pp. 247–353.

- Zhang, N.; Wu, H.; Zhu, H.; Deng, Y.; Han, X. Tomato disease classification and identification method based on multimodal fusion deep learning. Agriculture 2022, 12, 2014.

- Li, H.; Gu, Z.; He, D.; Wang, X.; Huang, J.; Mo, Y.; Li, P.; Huang, Z.; Wu, F. A lightweight improved YOLOv5s model and its deployment for detecting pitaya fruits in daytime and nighttime light-supplement environments. Comput. Electron. Agric. 2024, 220, 108914.

- Chen, M.; Chen, Z.; Luo, L.; Tang, Y.; Cheng, J.; Wei, H.; Wang, J. Dynamic visual servo control methods for continuous operation of a fruit harvesting robot working throughout an orchard. Comput. Electron. Agric. 2024, 219, 108774.

- Meng, F.; Li, J.; Zhang, Y.; Qi, S.; Tang, Y. Transforming unmanned pineapple picking with spatio-temporal convolutional neural networks. Comput. Electron. Agric. 2023, 214, 108298.

- Albahli, S.; Masood, M. Efficient attention-based CNN network (EANet) for multi-class maize crop disease classification. Front. Plant Sci. 2022, 13, 1003152.

- Zhang, J.; Shen, D.; Chen, D.; Ming, D.; Ren, D.; Diao, Z. ISMSFuse: Multi-modal fusing recognition algorithm for rice bacterial blight disease adaptable in edge computing scenarios. Comput. Electron. Agric. 2024, 223, 109089.

- Yong, C.; Macalisang, J.; Hernandez, A.A. Multi-stage Transfer Learning for Corn Leaf Disease Classification. In Proceedings of the 2023 IEEE International Conference on Automatic Control and Intelligent Systems (I2CACIS), Shah Alam, Malaysia, 17 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 231–234.

- Nguyen, C.; Sagan, V.; Maimaitiyiming, M.; Maimaitijiang, M.; Bhadra, S.; Kwasniewski, M.T. Early detection of plant viral disease using hyperspectral imaging and deep learning. Sensors 2021, 21, 742.

- Van Phong, T.; Pham, B.T.; Trinh, P.T.; Ly, H.-B.; Vu, Q.H.; Ho, L.S.; Van Le, H.; Phong, L.H.; Avand, M.; Prakash, I. Groundwater potential mapping using GIS-based hybrid artificial intelligence methods. Groundwater 2021, 59, 745–760.

- Liu, X.; Liu, Z.; Hu, H.; Chen, Z.; Wang, K.; Wang, K.; Lian, S. A Multimodal Benchmark Dataset and Model for Crop Disease Diagnosis. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 157–170.

- Berger, K.; Machwitz, M.; Kycko, M.; Kefauver, S.C.; Van Wittenberghe, S.; Gerhards, M.; Verrelst, J.; Atzberger, C.; van der Tol, C.; Damm, A.; et al. Multi-sensor spectral synergies for crop stress detection and monitoring in the optical domain: A review. Remote Sens. Environ. 2022, 280, 113198.

- Shrotriya, A.; Sharma, A.K.; Bairwa, A.K.; Manoj, R. Hybrid Ensemble Learning with CNN and RNN for Multimodal Cotton Plant Disease Detection. IEEE Access 2024, 12, 198028–198045.

- Fan, X.; Luo, P.; Mu, Y.; Zhou, R.; Tjahjadi, T.; Ren, Y. Leaf image based plant disease identification using transfer learning and feature fusion. Comput. Electron. Agric. 2022, 196, 106892.

- Xu, Y.; Zhang, X.; Li, H.; Zheng, H.; Zhang, J.; Olsen, M.S.; Varshney, R.K.; Prasanna, B.M.; Qian, Q. Smart breeding driven by big data, artificial intelligence, and integrated genomic-enviromic prediction. Mol. Plant 2022, 15, 1664–1695.

- Albattah, W.; Nawaz, M.; Javed, A.; Masood, M.; Albahli, S. A novel deep learning method for detection and classification of plant diseases. Complex Intell. Syst. 2022, 8, 507–524.

- Prince, S.J.D. Understanding Deep Learning; MIT Press: Cambridge, MA, USA, 2023.

- Hughes, D.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv 2015, arXiv:1511.08060.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS; NASA Special Publication: Washington, DC, USA, 1974; Volume 351, p. 309.

- Jacquemoud, S.; Ustin, S.L. Leaf optical properties: A state of the art. In Proceedings of the 8th International Symposium of Physical Measurements & Signatures in Remote Sensing, Aussois, France, 8–12 January 2001; CNES: Aussois, France, 2001; pp. 223–332.

- Krishnan, R.S.; Julie, E.G. Computer aided detection of leaf disease in agriculture using convolution neural network based squeeze and excitation network. Automatika Časopis Za Automatiku, Mjerenje, Elektroniku, Računarstvo I Komunikacije 2023, 64, 1038–1053.

- Sunkari, S.; Sangam, A.; Suchetha, M.; Raman, R.; Rajalakshmi, R.; Tamilselvi, S. A refined ResNet18 architecture with Swish activation function for Diabetic Retinopathy classification. Biomed. Signal Process. Control 2024, 88, 105630.

- Parez, S.; Dilshad, N.; Alghamdi, N.S.; Alanazi, T.M.; Lee, J.W. Visual intelligence in precision agriculture: Exploring plant disease detection via efficient vision transformers. Sensors 2023, 23, 6949.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Khaki, S.; Wang, L.; Archontoulis, S.V. A CNN-RNN framework for crop yield prediction. Front. Plant Sci. 2020, 10, 1750.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).