Sugarcane Feed Volume Detection in Stacked Scenarios Based on Improved YOLO-ASM

Abstract

1. Introduction

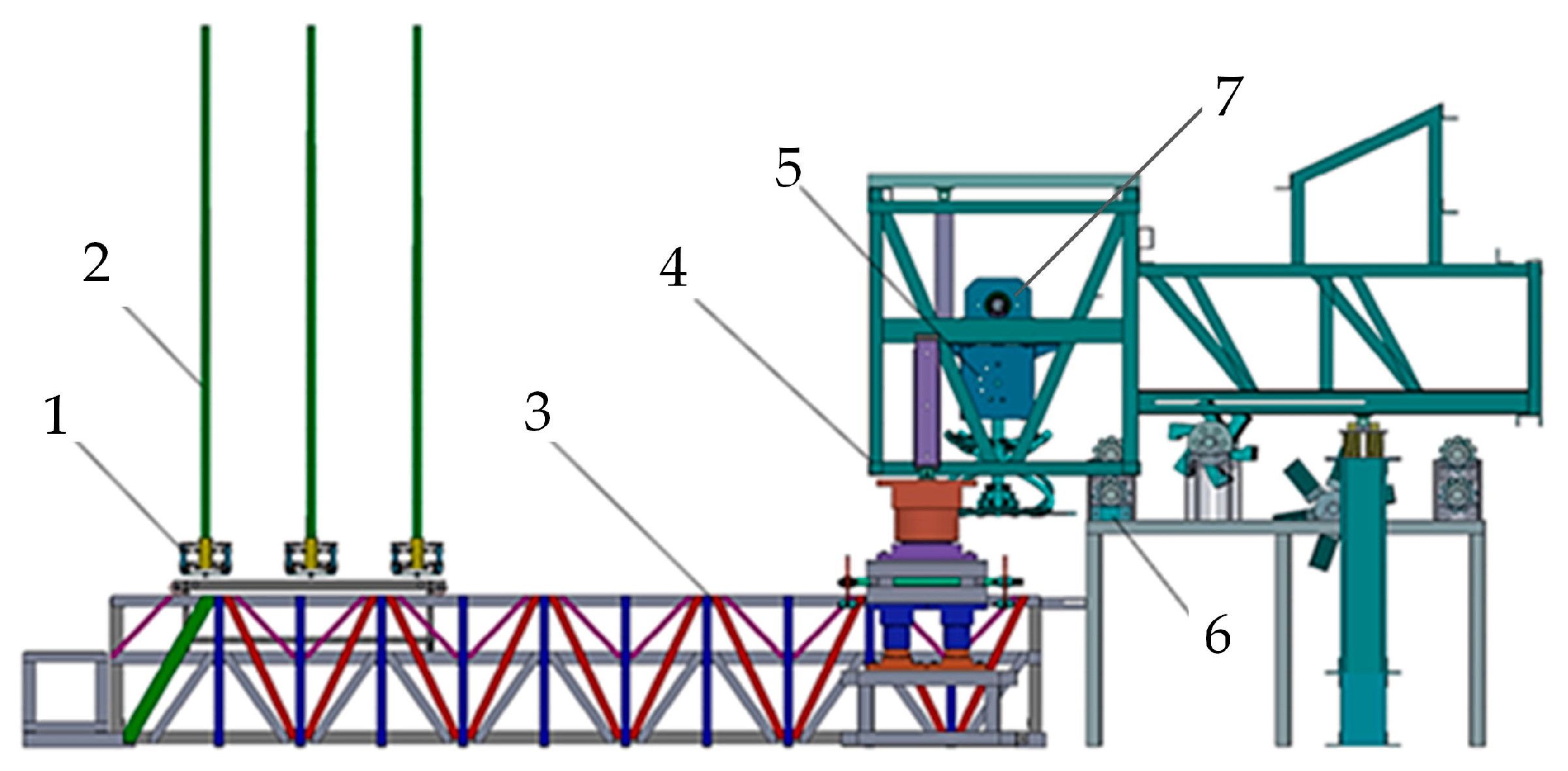

2. Materials and Methods

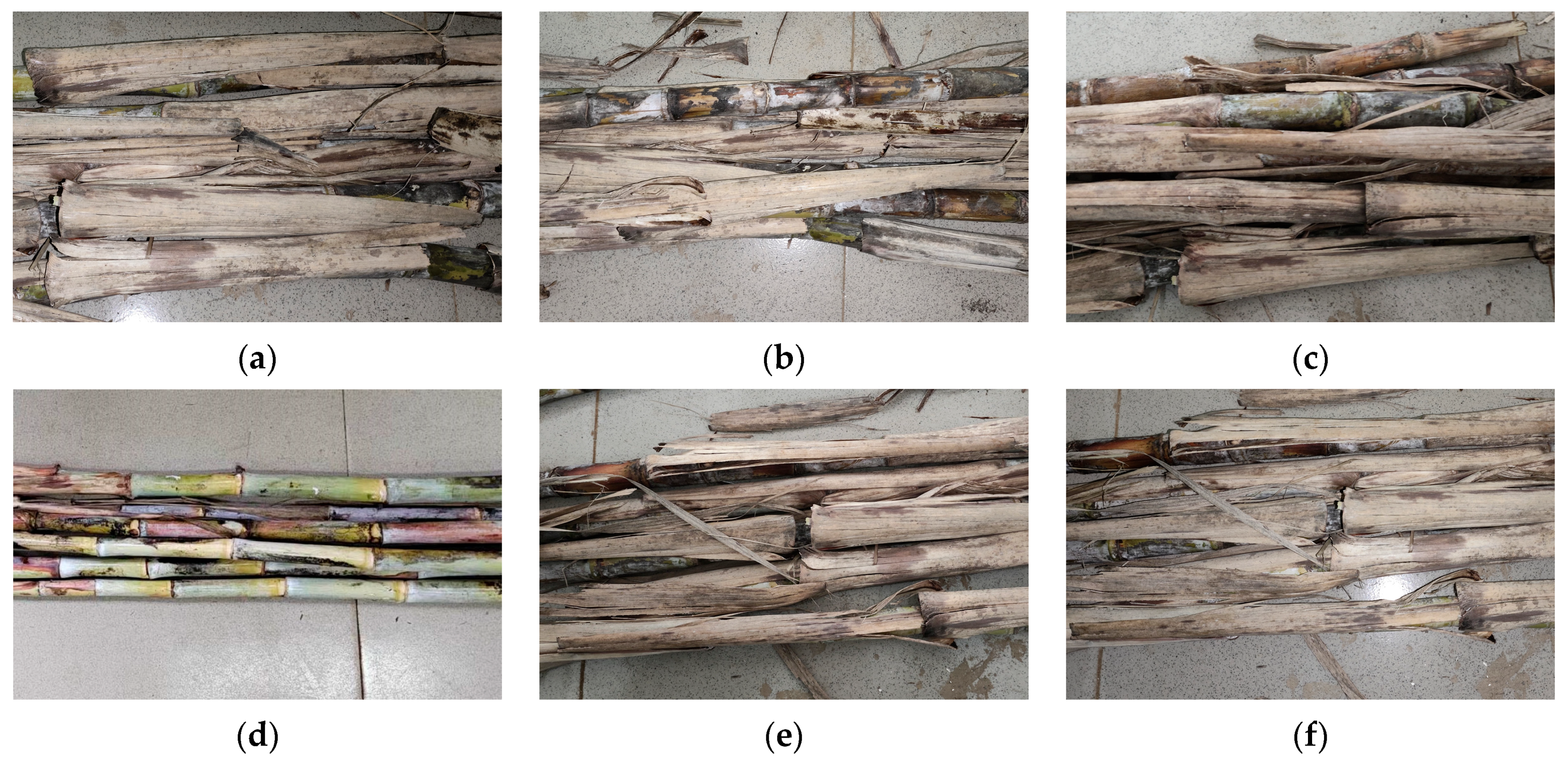

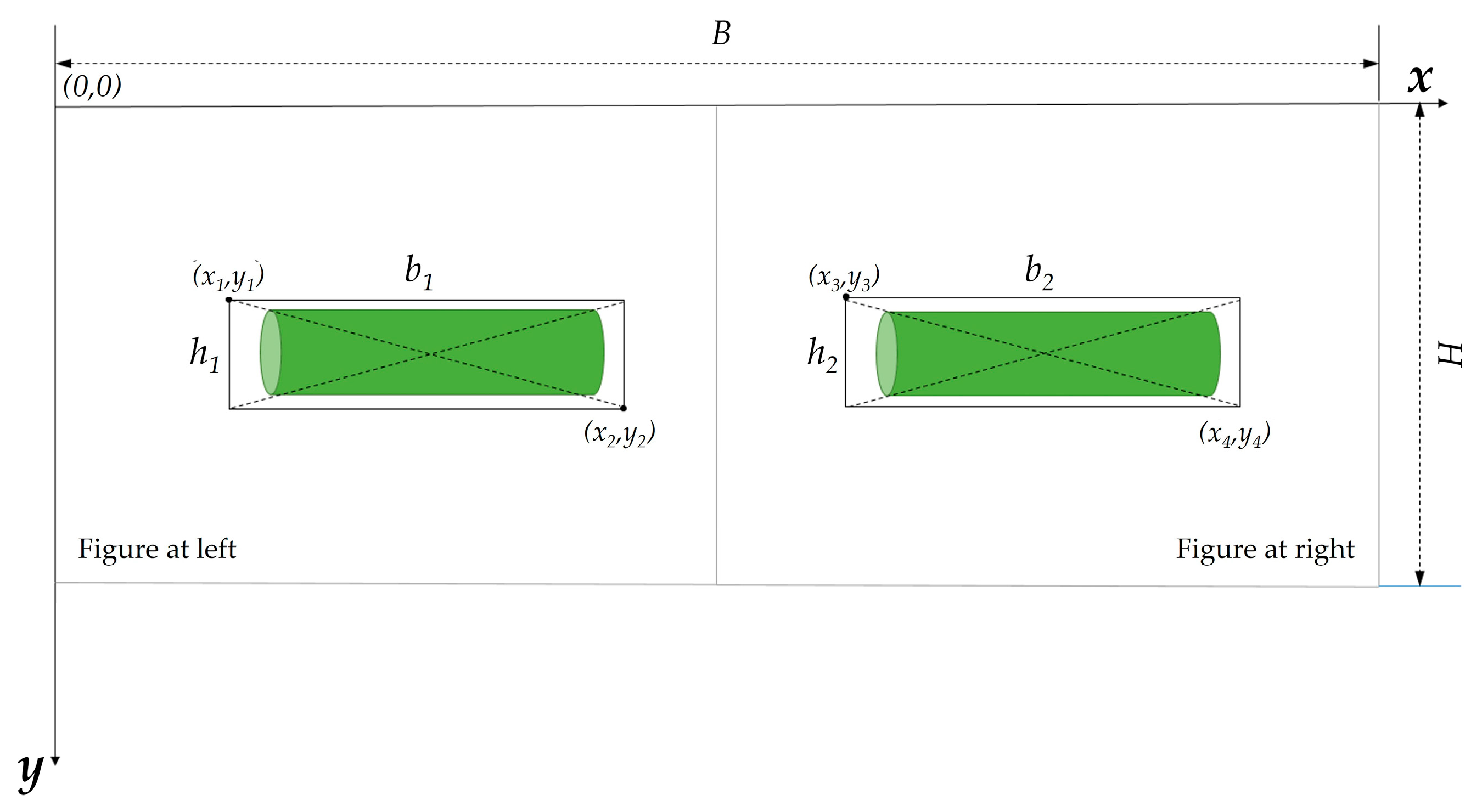

2.1. Preparation and Processing of Dataset

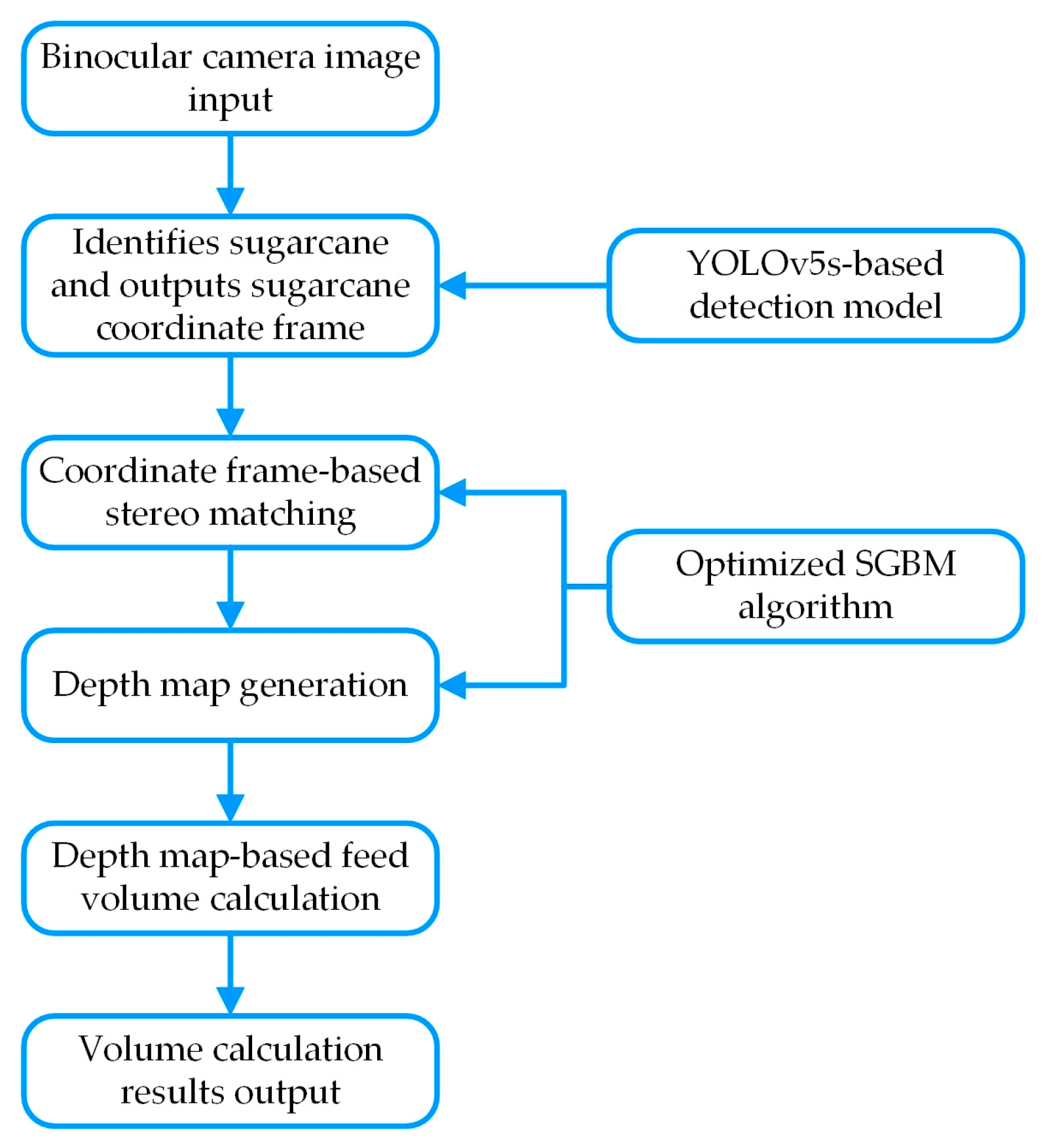

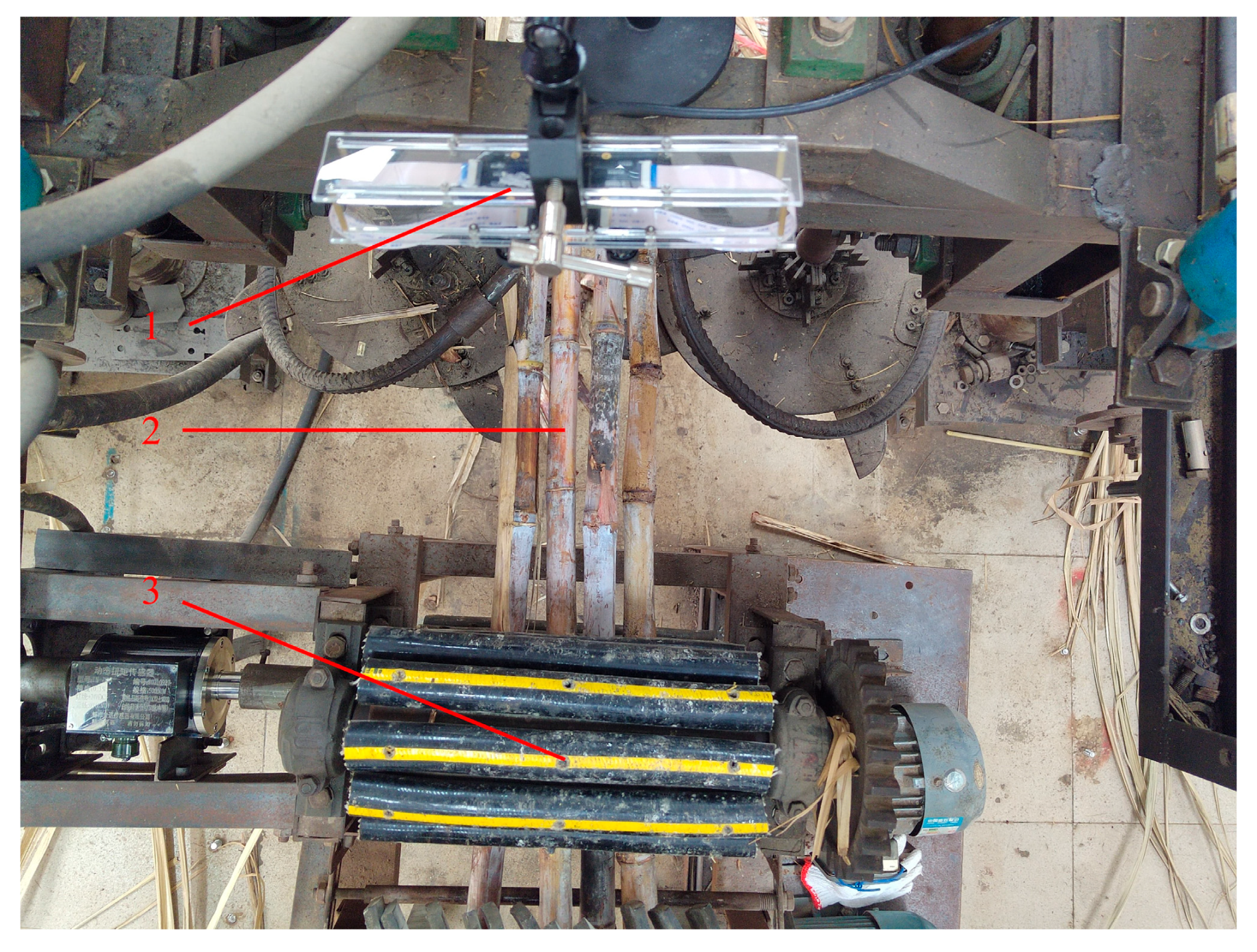

2.2. Sugarcane Feeding Volume Detection Scheme Design

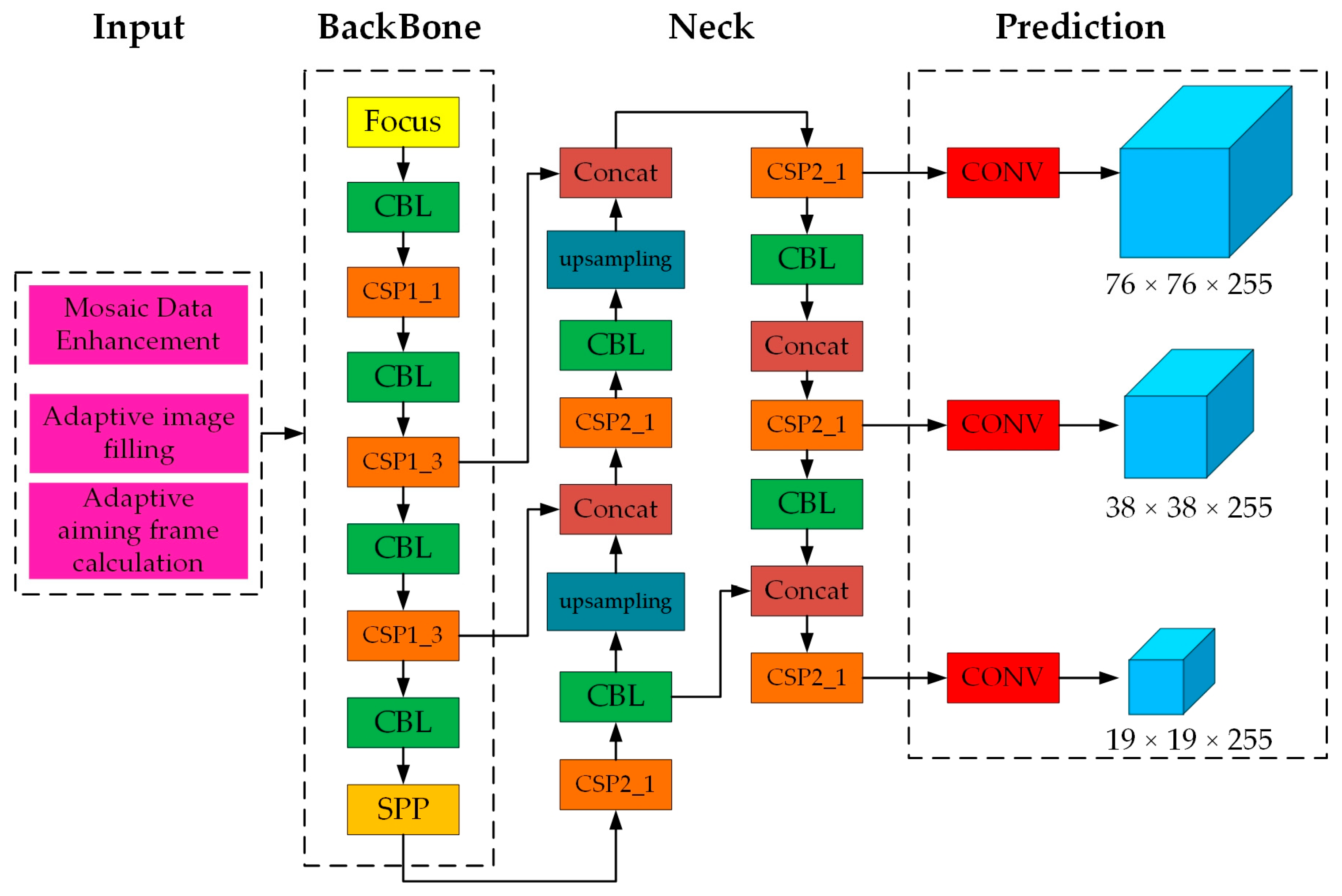

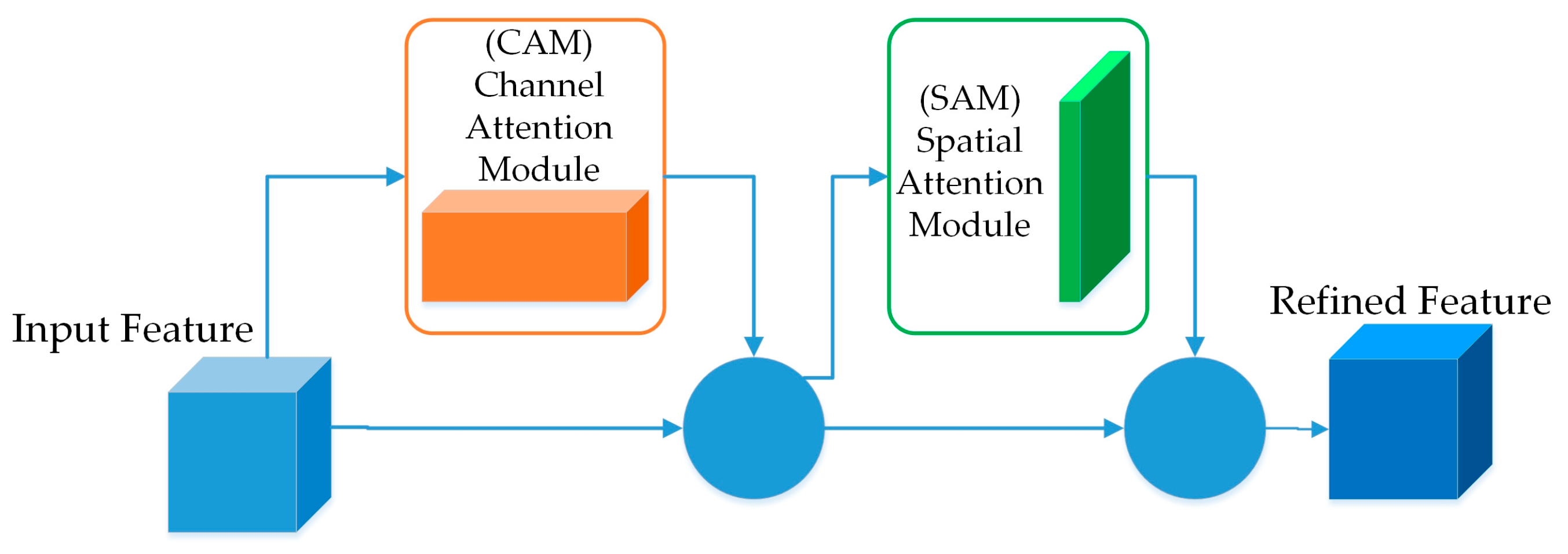

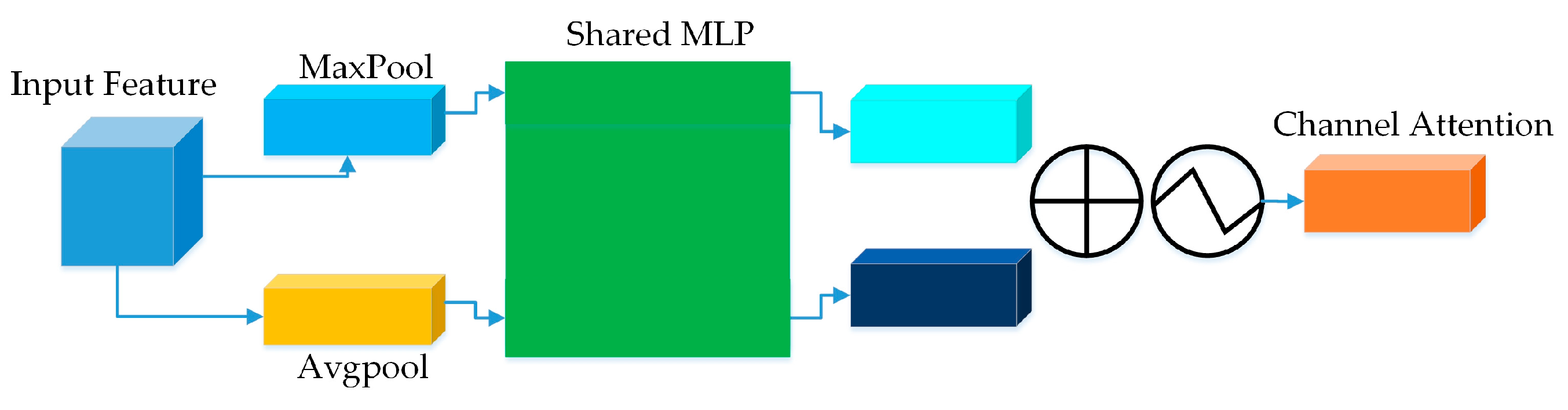

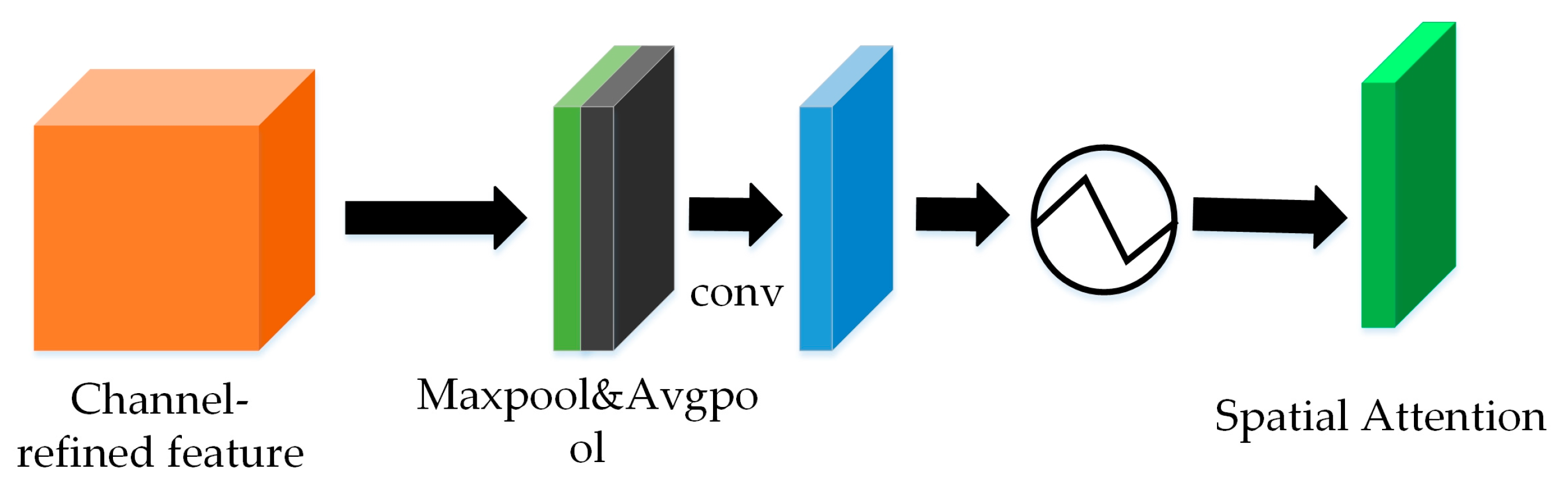

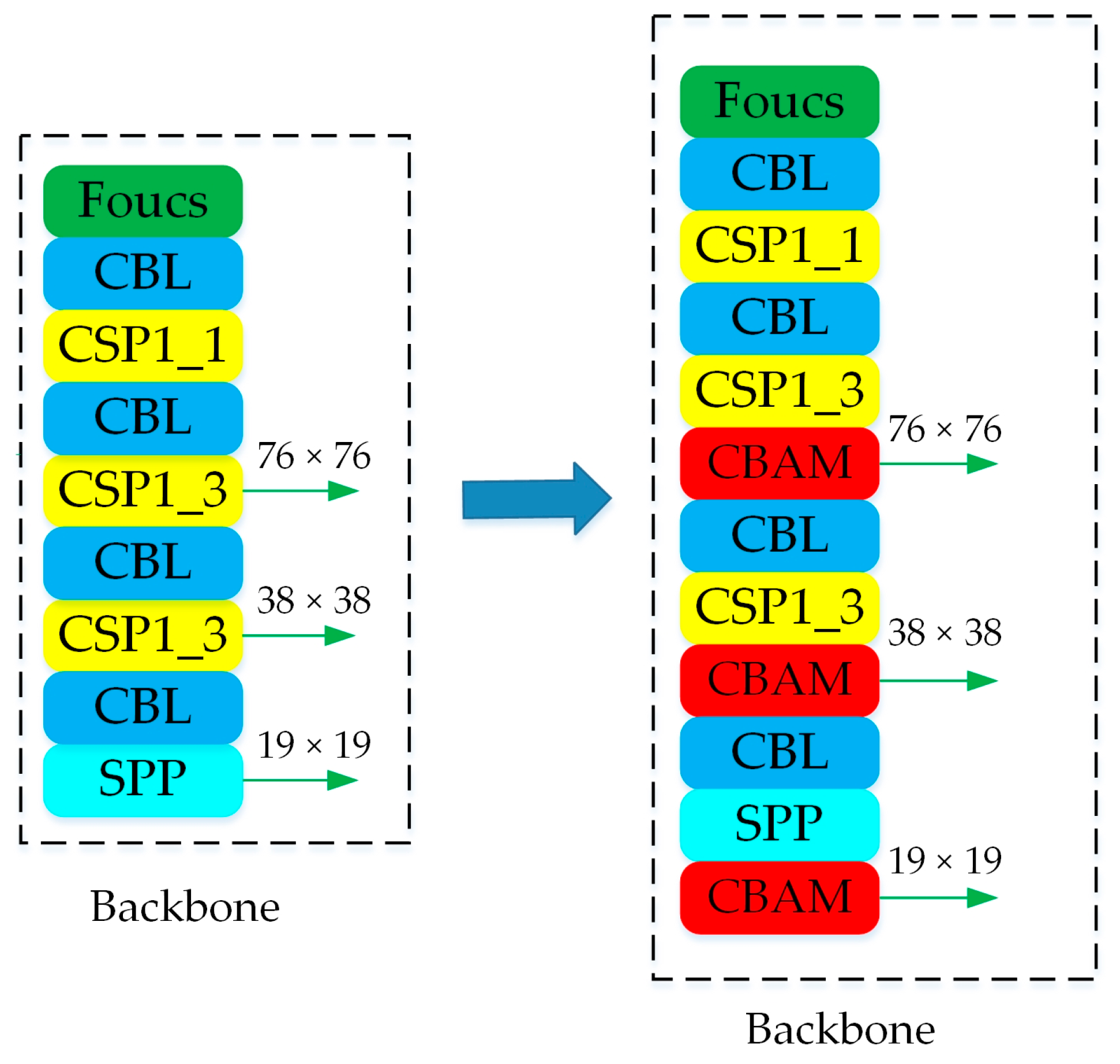

2.3. YOLO-ASM Algorithm

2.3.1. Sugarcane Target Recognition Algorithm Based on YOLOv5s

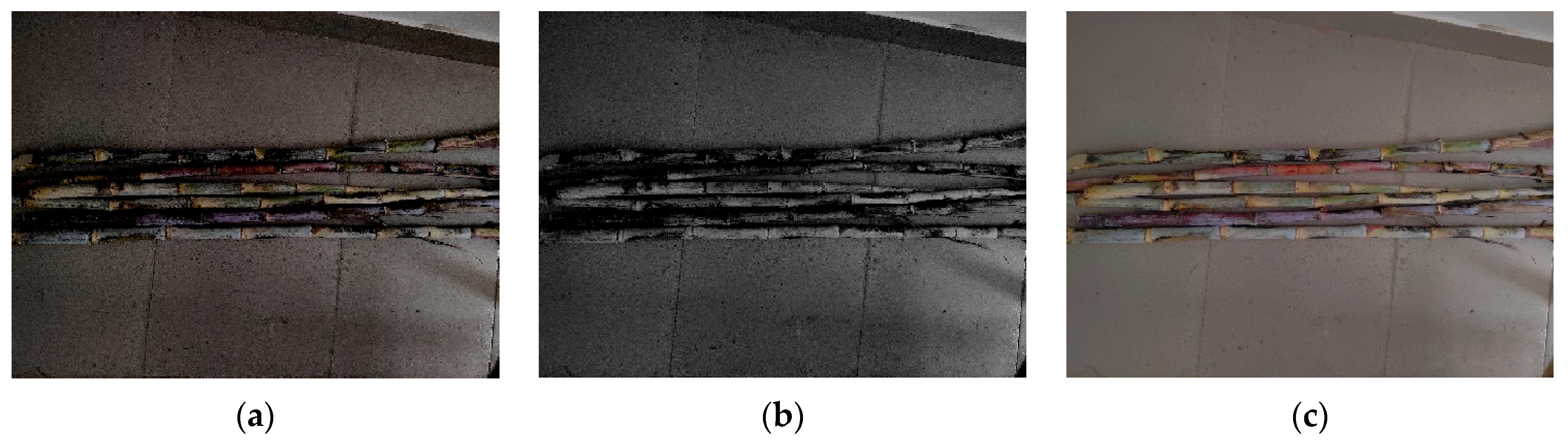

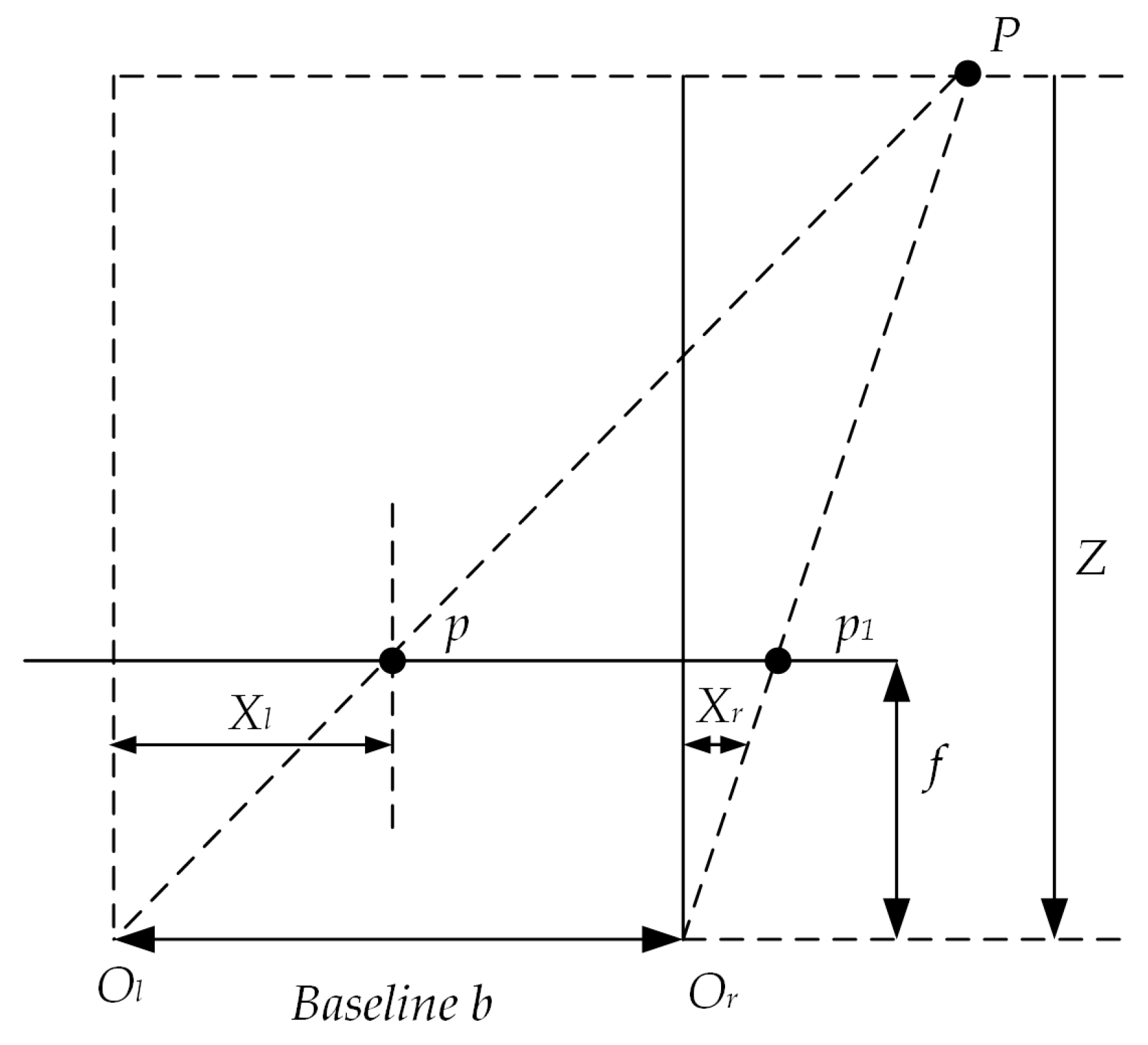

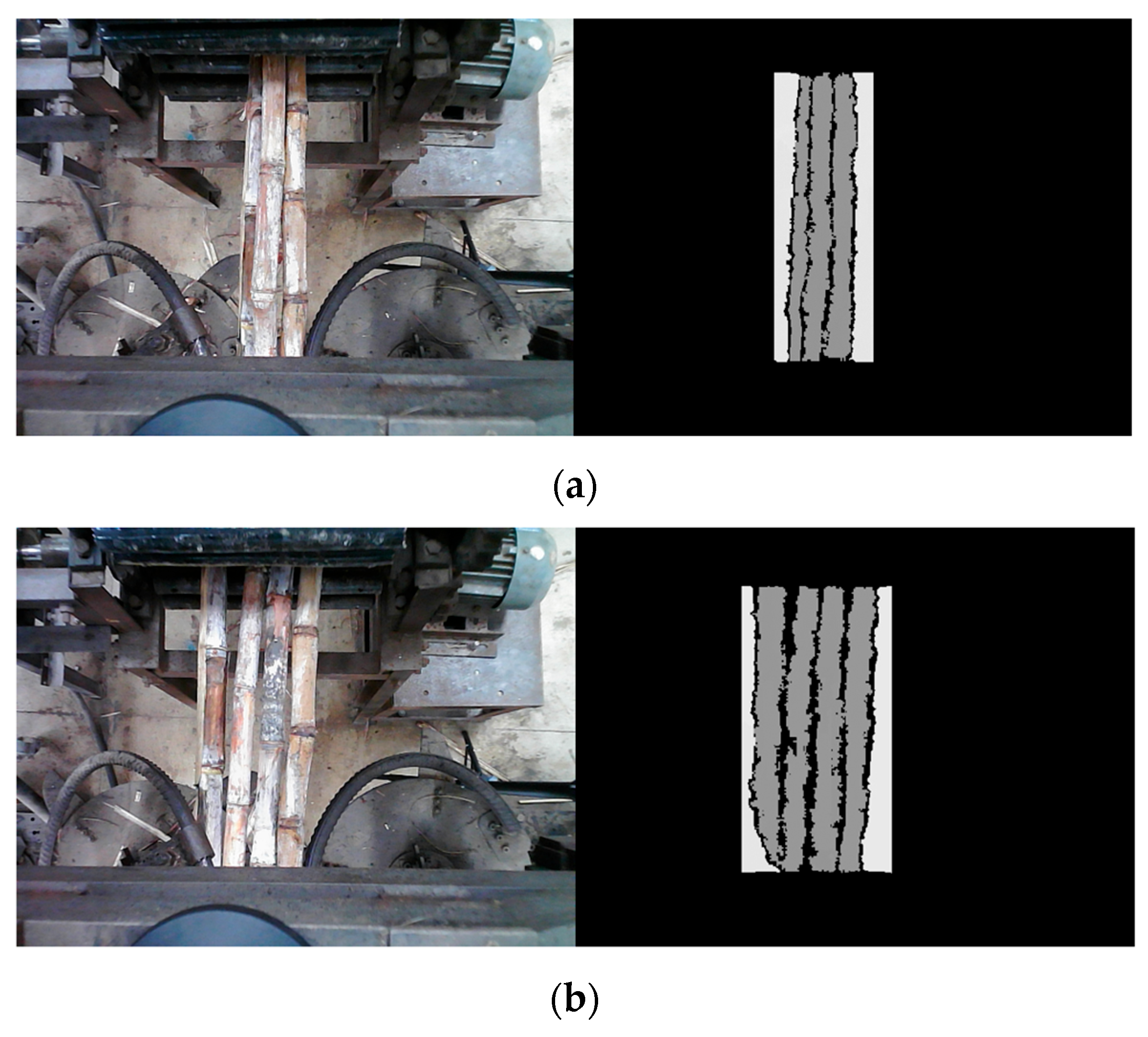

2.3.2. Opti-SGBM Algorithm Based on Double Cost Calculation

2.3.3. YOLO-ASM Algorithm Based on Improve-YOLOv5s and Opti-SGBM

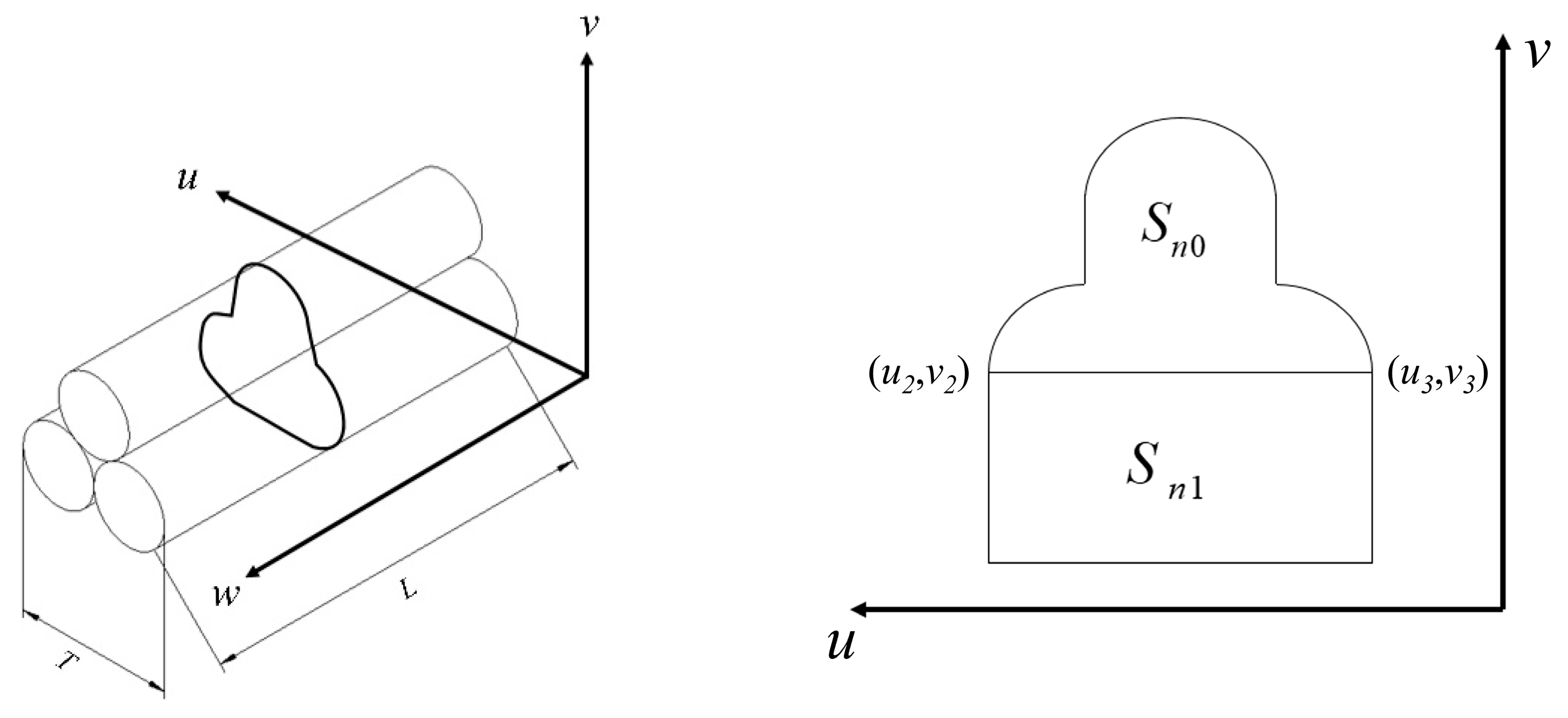
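
The sketch below shows one hedged way to couple a detection with a disparity map. It takes the median of the valid disparities inside a predicted bounding box and converts it to depth with the rectified-stereo relation Z = fB/d. It then back-projects the box width to metres with the pinhole model. The function name, the (x1, y1, x2, y2) pixel box convention, and the minimum-disparity threshold are hypothetical, and the authors' YOLO-ASM fusion may differ.

```python
import numpy as np


def box_depth_and_width(disparity, box, focal_px, baseline_m, min_disp=0.5):
    """Return (depth_m, width_m) for one detection box, or None if no valid disparity."""
    x1, y1, x2, y2 = box
    patch = disparity[y1:y2, x1:x2]
    valid = patch[patch > min_disp]  # discard unmatched / near-zero disparities
    if valid.size == 0:
        return None
    d = float(np.median(valid))  # median is robust to background pixels in the box
    depth = focal_px * baseline_m / d  # triangulation: Z = f * B / d
    width = (x2 - x1) * depth / focal_px  # pinhole back-projection of the box width
    return depth, width
```

Using the median rather than the mean keeps a few background or mismatched pixels inside the box from pulling the depth estimate.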

2.3.4. Theoretical Analysis of Sugarcane Feeding Volume Calculation
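
A minimal geometric model shows how detection and disparity could combine into a volume estimate. It assumes that each stalk is approximated as a cylinder and that the stereo pair is rectified. Let f be the focal length in pixels, B the baseline, and n the number of detected stalks. For stalk i, let d_i be its disparity, w_i its apparent width in pixels, and L_i its length. Triangulation and the pinhole model then give the depth Z_i and diameter D_i, and the focal length cancels in the diameter:

```latex
\begin{aligned}
Z_i &= \frac{f B}{d_i}, \qquad
D_i = \frac{w_i Z_i}{f} = \frac{w_i B}{d_i},\\
V &= \sum_{i=1}^{n} \frac{\pi}{4} D_i^{2} L_i
   = \frac{\pi B^{2}}{4} \sum_{i=1}^{n} \frac{w_i^{2} L_i}{d_i^{2}}.
\end{aligned}
```

Because V scales with 1/d_i², to first order a relative disparity error ε produces a relative volume error of about 2ε under this model.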

3. Results

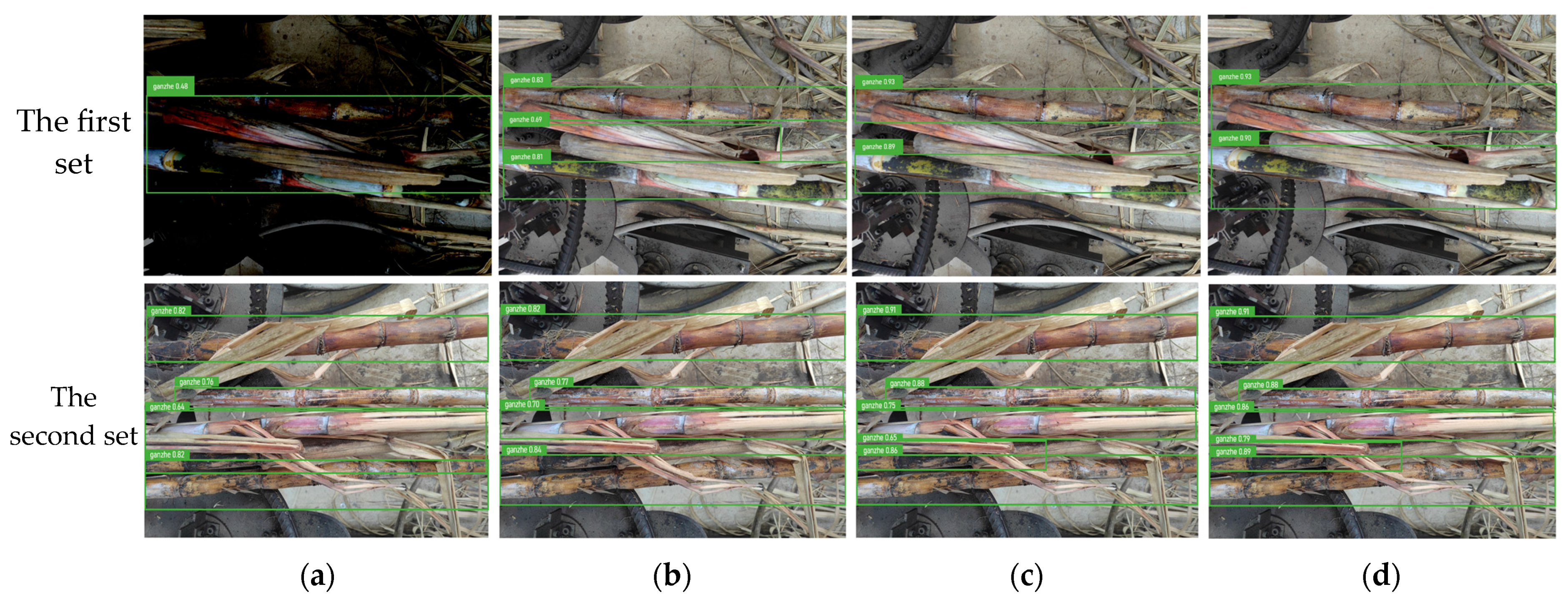

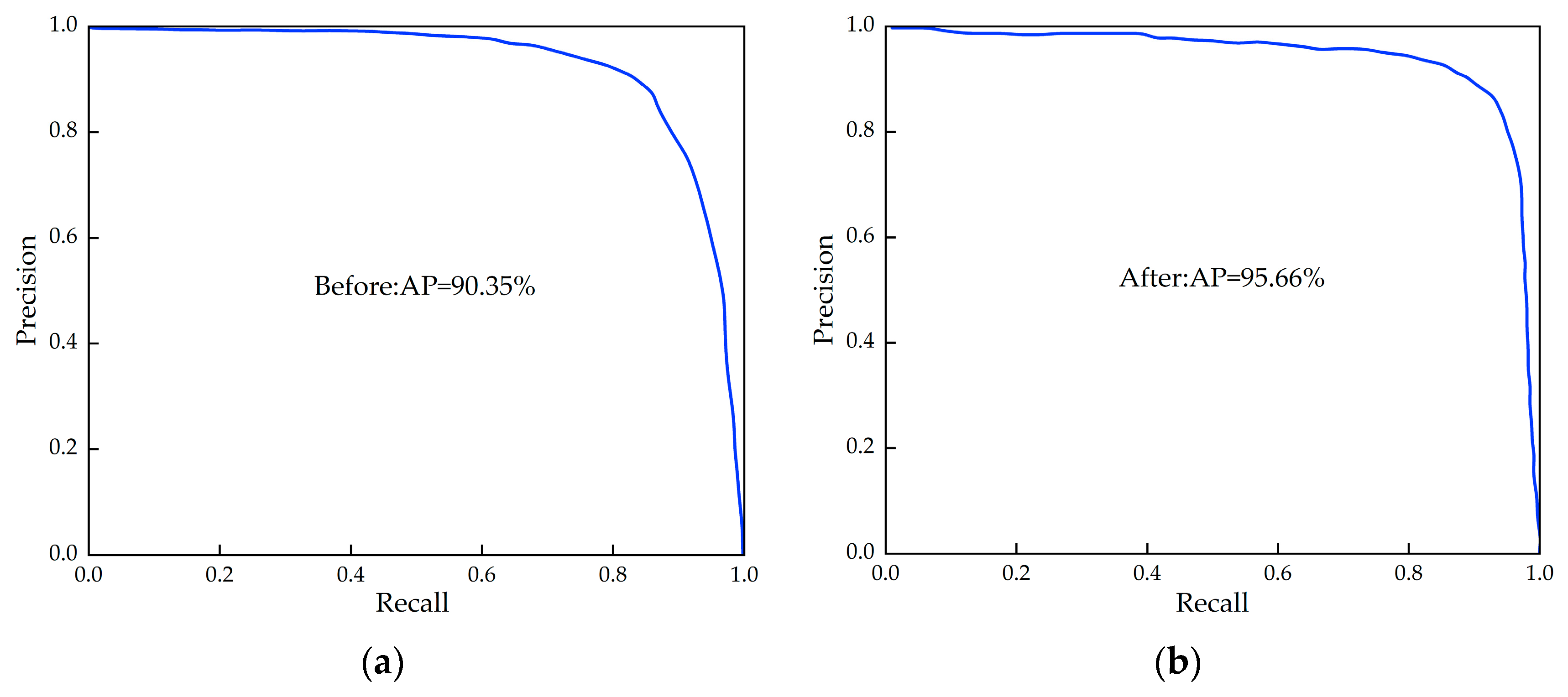

3.1. Improve-YOLOv5s Performance Experiments

3.1.1. Algorithm Performance Comparison

3.1.2. Ablation Studies

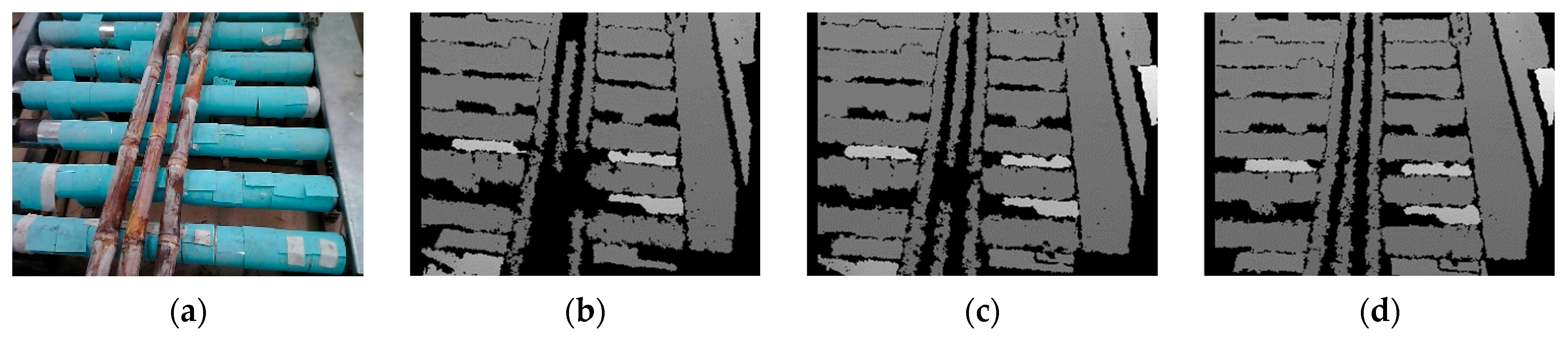

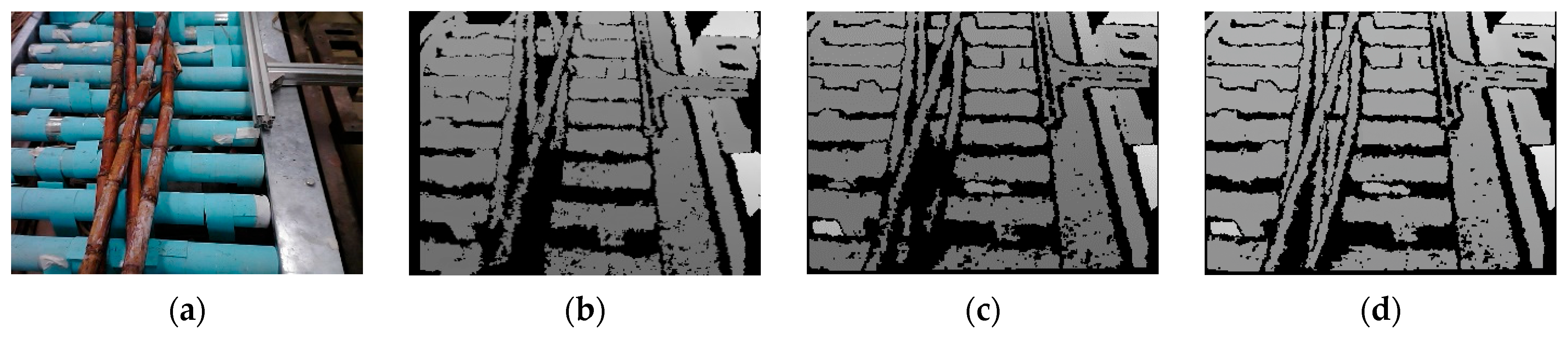

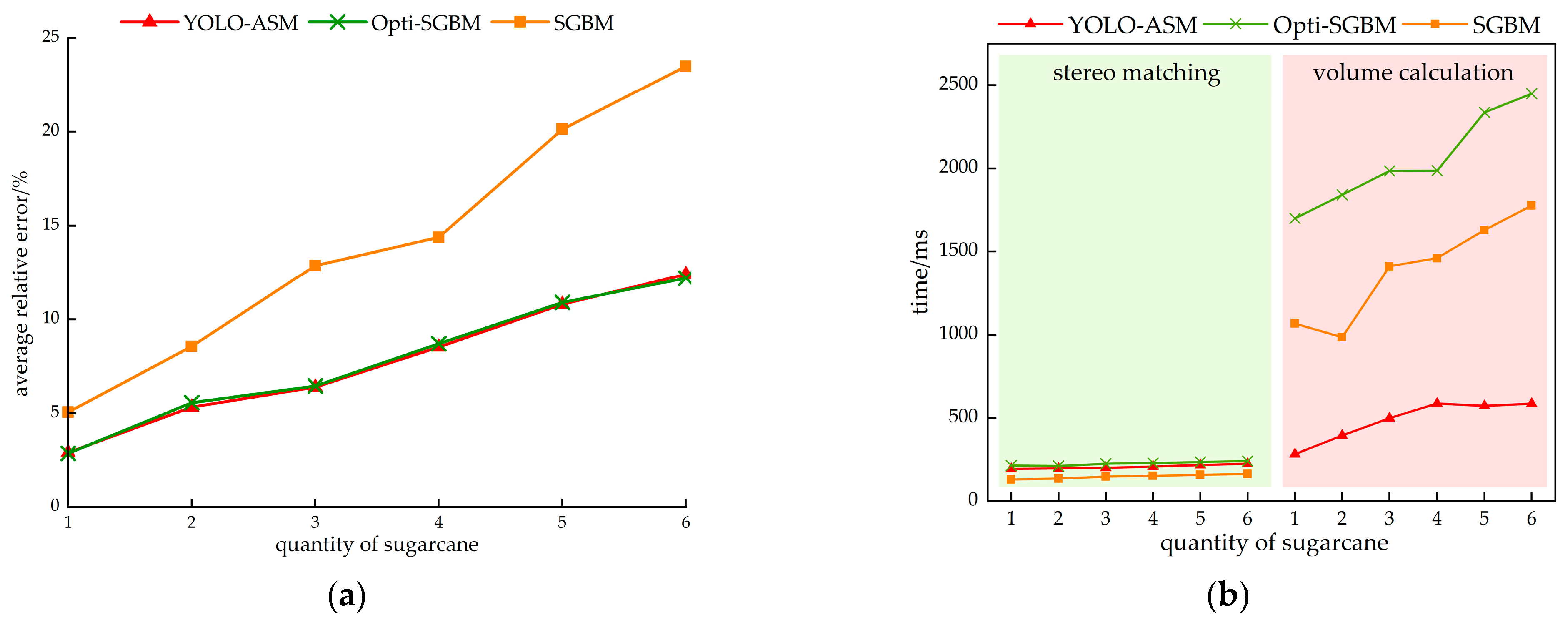

3.2. SGBM Algorithm Comparison Results

3.3. Sugarcane Feed Volume Calculation with YOLO-ASM Algorithm

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Fusion Position | Mean Average Precision (%) | Precision (%) | Recall (%) | Frames Per Second |
|---|---|---|---|---|
| CSP1_1 | 90.35 | 92.00 | 88.55 | 72 |
| CSP1_3 | 94.50 | 95.80 | 93.10 | 76 |
| CSP2_1 | 91.60 | 88.50 | 86.80 | 77 |

| Model | Average Precision (%) | Precision (%) | Recall (%) | Frames Per Second |
|---|---|---|---|---|
| SSD300 | 78.19 | 86.40 | 88.10 | 85 |
| Faster R-CNN | 82.36 | 86.90 | 89.00 | 90 |
| YOLOv3 | 88.90 | 93.10 | 90.70 | 102 |
| YOLOv5l | 95.80 | 96.30 | 93.90 | 55 |
| YOLOv5s | 90.35 | 92.00 | 88.55 | 82 |
| Improve-YOLOv5s | 95.66 | 93.80 | 92.30 | 105 |
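
The comparison table lists precision and recall separately. Their harmonic mean, F1 = 2PR/(P + R), gives a single summary of the two. The sketch below derives F1 from the reported values only. F1 itself is not reported in the source, and the names used here are illustrative.

```python
# Precision and recall (%) as reported in the model comparison table.
reported = {
    "SSD300": (86.40, 88.10),
    "Faster R-CNN": (86.90, 89.00),
    "YOLOv3": (93.10, 90.70),
    "YOLOv5l": (96.30, 93.90),
    "YOLOv5s": (92.00, 88.55),
    "Improve-YOLOv5s": (93.80, 92.30),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, in the same units (%)."""
    return 2 * precision * recall / (precision + recall)


f1 = {name: round(f1_score(p, r), 2) for name, (p, r) in reported.items()}

if __name__ == "__main__":
    for name, value in sorted(f1.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:16s} F1 = {value:.2f}")
```

On this derived summary, Improve-YOLOv5s (F1 ≈ 93.04) ranks second to YOLOv5l (≈ 95.08). It also runs at nearly twice the frame rate of YOLOv5l (105 vs. 55 FPS).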

| Number of Sugarcane Stalks | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Volume relative error, adequate lighting (%) | 2.61 | 4.94 | 5.78 | 7.74 | 10.19 | 11.36 |
| Volume relative error, low lighting (%) | 3.21 | 5.82 | 6.66 | 8.97 | 11.41 | 13.43 |
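
The sketch below assumes the conventional definition of volume relative error, δ = |V_measured − V_reference| / V_reference × 100%, which the table does not state explicitly. It restates that definition and computes, from the reported values only, the difference between the two lighting conditions at each stalk count. The function and variable names are illustrative.

```python
def relative_error_pct(measured: float, reference: float) -> float:
    """Conventional relative error in percent (assumed definition)."""
    if reference == 0:
        raise ValueError("reference volume must be non-zero")
    return abs(measured - reference) / reference * 100.0


stalks = [1, 2, 3, 4, 5, 6]
adequate = [2.61, 4.94, 5.78, 7.74, 10.19, 11.36]  # reported, adequate lighting (%)
low = [3.21, 5.82, 6.66, 8.97, 11.41, 13.43]  # reported, low lighting (%)

# Difference between the two lighting conditions, in percentage points.
lighting_gap = [round(lo - ad, 2) for ad, lo in zip(adequate, low)]

if __name__ == "__main__":
    for n, gap in zip(stalks, lighting_gap):
        print(f"{n} stalk(s): +{gap:.2f} points under low lighting")
```

Both rows increase monotonically with stalk count. The lighting gap stays between 0.60 and 1.23 points for one to five stalks and reaches 2.07 points at six.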

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lai, X.; Fu, G. Sugarcane Feed Volume Detection in Stacked Scenarios Based on Improved YOLO-ASM. Agriculture 2025, 15, 1428. https://doi.org/10.3390/agriculture15131428
