An Improved YOLOP Lane-Line Detection Utilizing Feature Shift Aggregation for Intelligent Agricultural Machinery

Abstract

1. Introduction

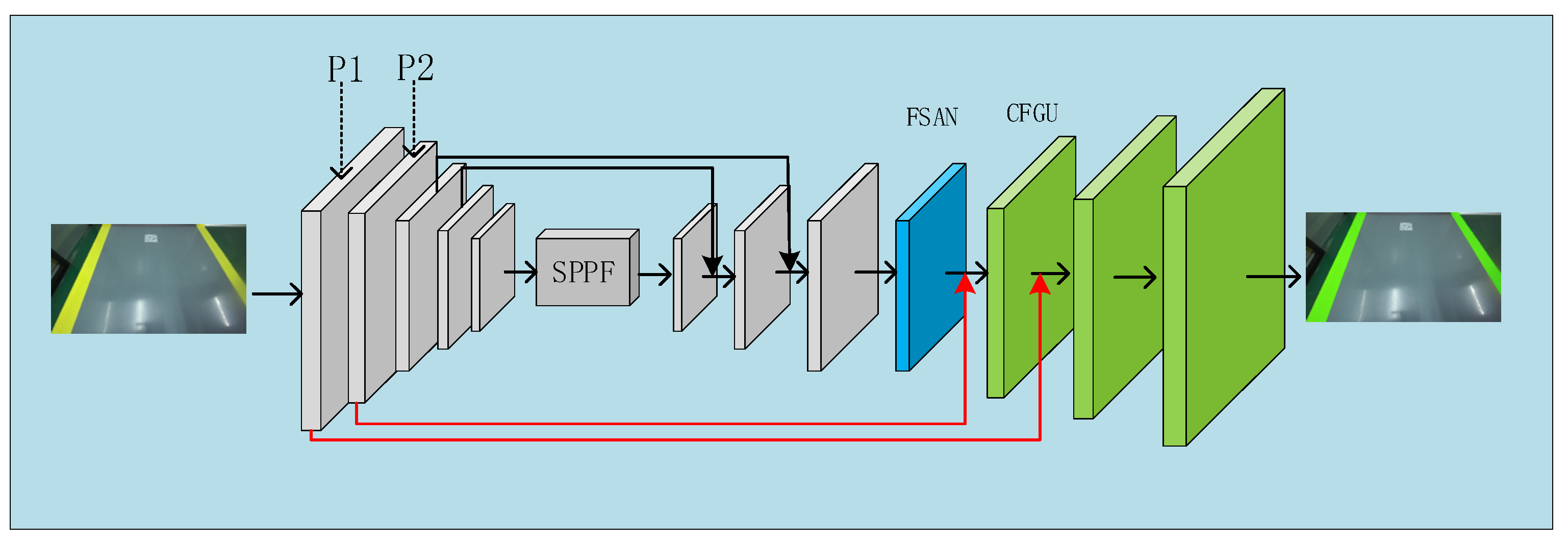

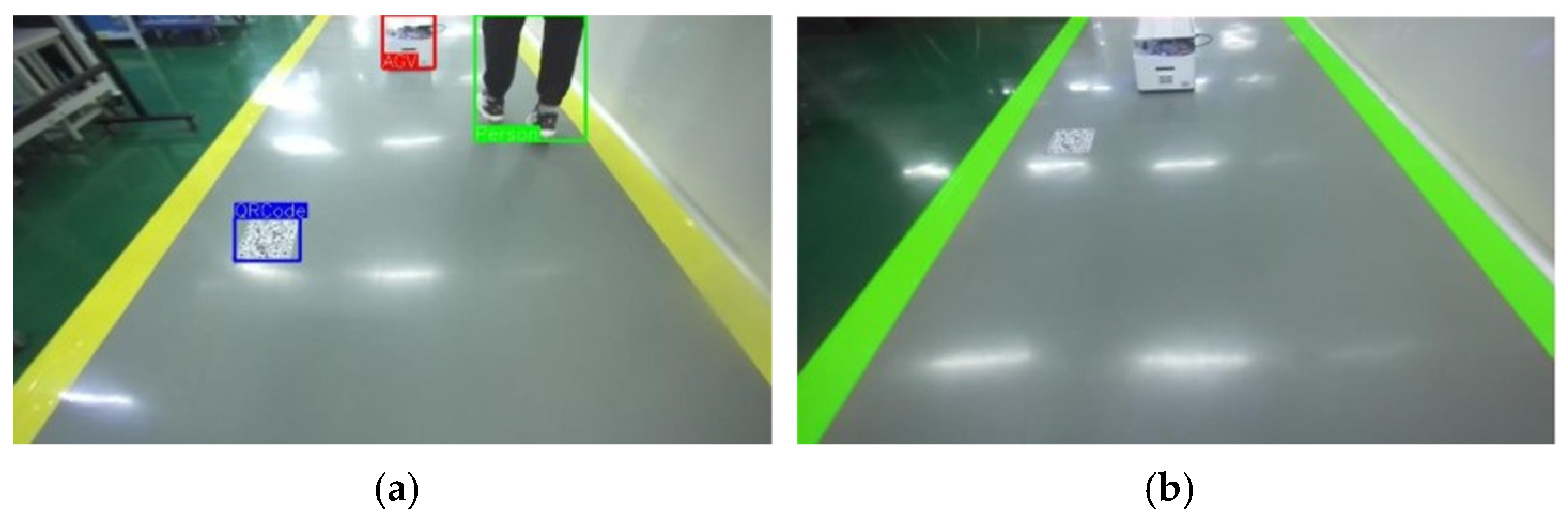

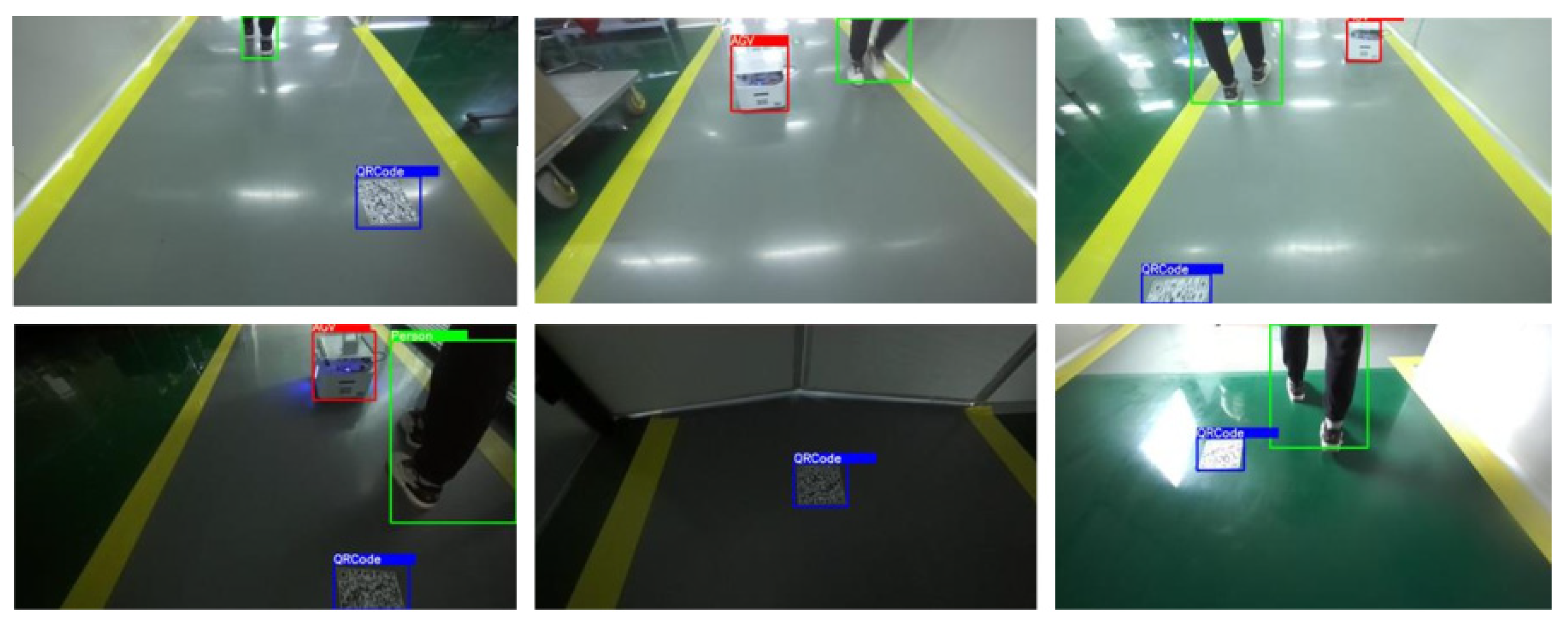

- Network Framework Design: We propose a multi-task joint detection algorithm (MTNet) tailored for embedded devices with limited computational resources, enabling simultaneous lane-line segmentation and the detection of pedestrians, Automated Guided Vehicles (AGVs), and QR codes. The network architecture comprises a shared feature encoder and two decoders for detection and segmentation;

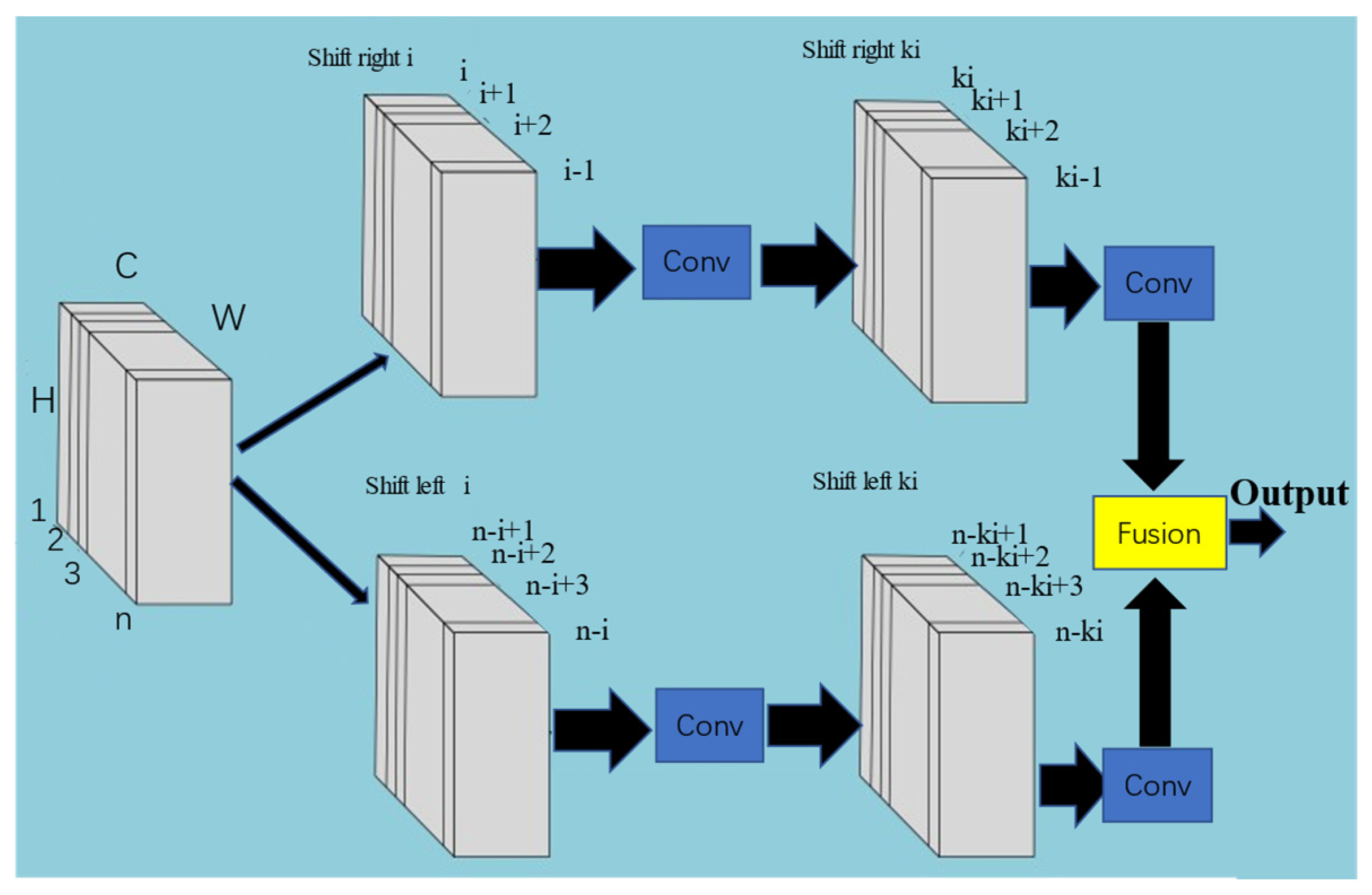

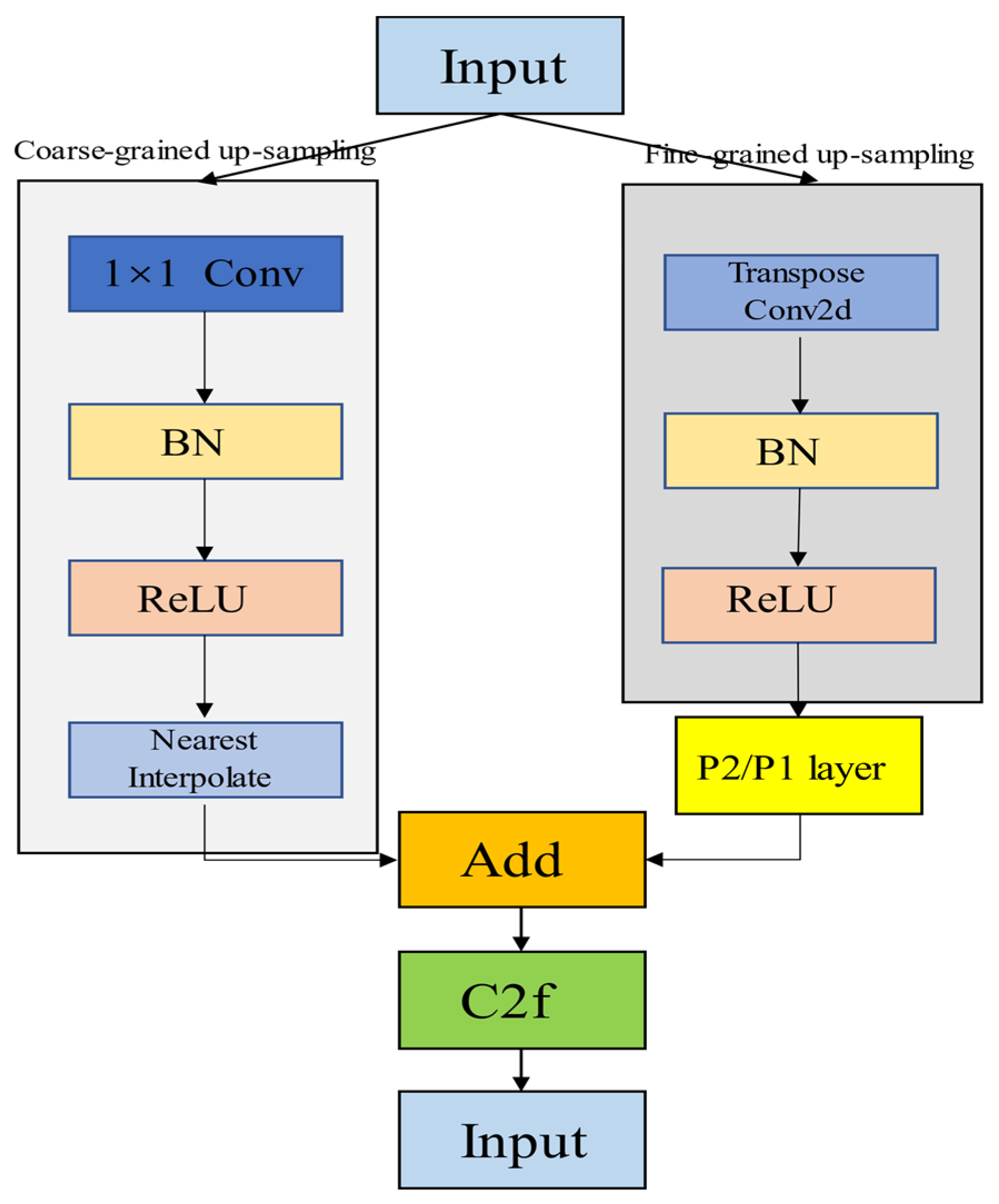

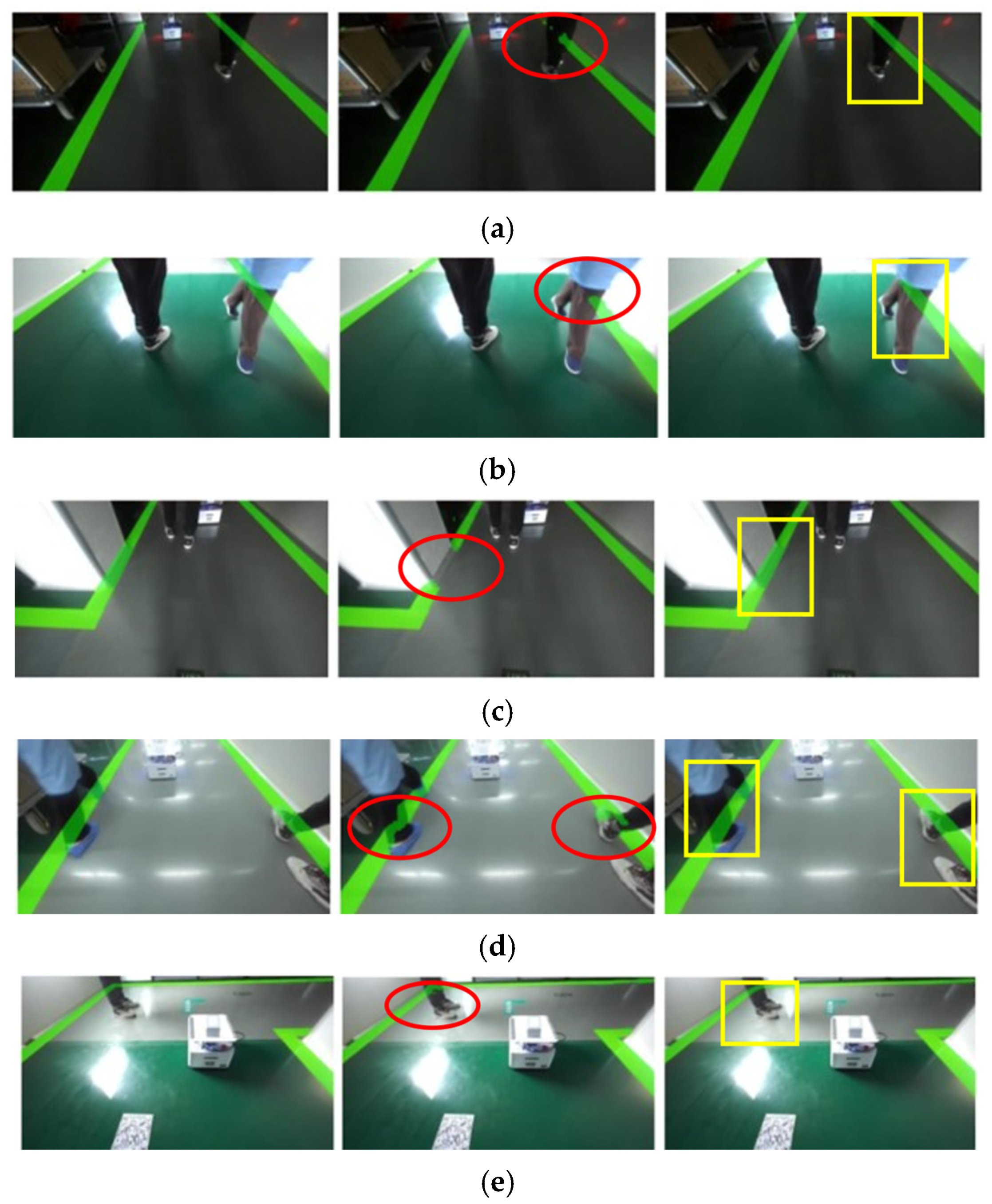

- Optimization Techniques: We introduce the Feature Shift Aggregation Network (FSAN) and Coarse and Fine Grain Size Combined Up-sampling (CFGU) to optimize the lane-line segmentation head of the MTNet model. These enhancements enable the model to infer lane lines even in complex scenarios, such as occluded, missing, or blurred lines, while preserving detailed texture and structural information in dim and reflective environments;

- Model Evaluation: We trained and tested the MTNet model and compared it experimentally with several network architectures. Ablation experiments were designed to validate the effectiveness of the MTNet model in lane-line detection.
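The shared-encoder, two-decoder layout named in the first contribution can be illustrated with a small structural sketch. This is not the MTNet implementation: the class name `MultiTaskNet`, the three `toy_*` functions, and the thresholds are hypothetical stand-ins, chosen only to show that the encoder runs once per frame and both heads consume the same features.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Image = List[List[float]]      # toy grayscale image, values in [0, 1]
Features = List[List[float]]   # toy shared feature map


@dataclass
class MultiTaskNet:
    """Shared encoder followed by a detection head and a segmentation head."""
    encoder: Callable[[Image], Features]
    detect_head: Callable[[Features], List[Tuple[int, int]]]
    segment_head: Callable[[Features], List[List[int]]]

    def forward(self, image: Image):
        features = self.encoder(image)            # computed once per frame
        detections = self.detect_head(features)   # e.g. pedestrians, AGVs, QR codes
        lane_mask = self.segment_head(features)   # per-pixel lane / background
        return detections, lane_mask


call_counter: Dict[str, int] = {"encoder": 0}


def toy_encoder(image: Image) -> Features:
    """Hypothetical stand-in: counts calls and copies the pixels unchanged."""
    call_counter["encoder"] += 1
    return [row[:] for row in image]


def toy_detect_head(features: Features) -> List[Tuple[int, int]]:
    """Hypothetical stand-in: reports (row, col) of very bright cells."""
    return [(r, c) for r, row in enumerate(features)
            for c, v in enumerate(row) if v > 0.9]


def toy_segment_head(features: Features) -> List[List[int]]:
    """Hypothetical stand-in: marks moderately bright cells as lane pixels."""
    return [[1 if v > 0.5 else 0 for v in row] for row in features]


net = MultiTaskNet(toy_encoder, toy_detect_head, toy_segment_head)
detections, lane_mask = net.forward([[0.1, 0.6], [0.95, 0.2]])
```

Because both heads read the same feature map, the cost of the encoder is paid once regardless of how many task heads are attached, which is the usual motivation for this layout on resource-limited devices.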

2. Related Work

2.1. Multi-Task Learning

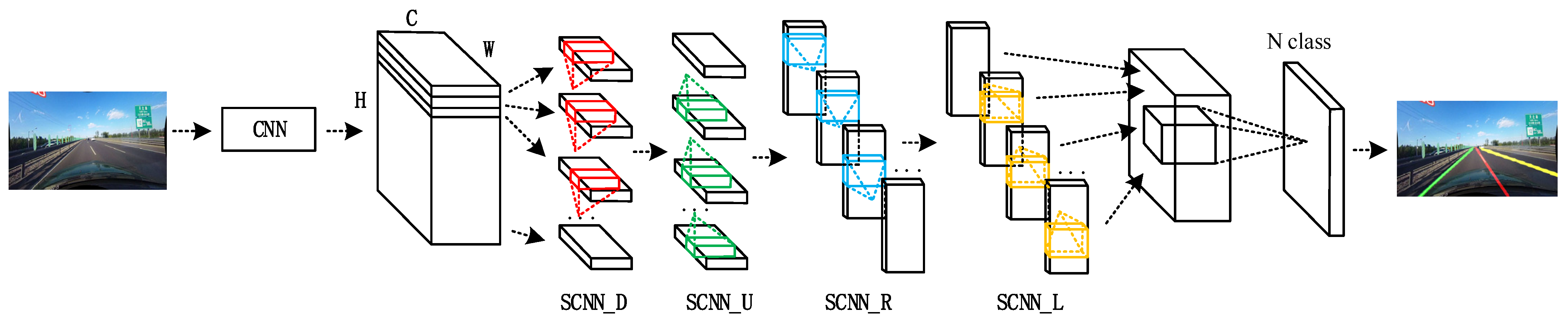

2.2. Lane-Line Detection

3. Methods

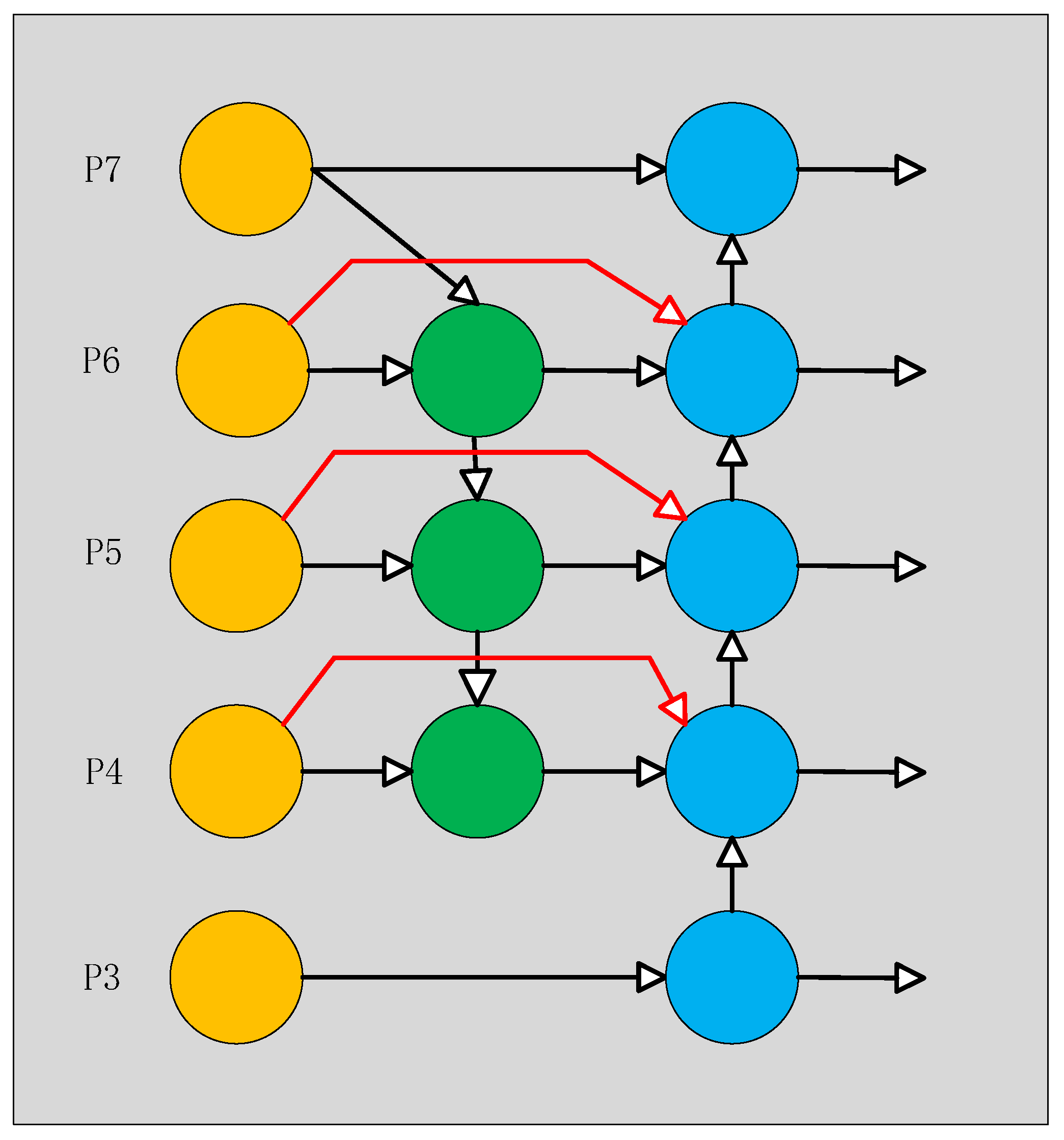

3.1. Encoder Design

- Enhanced Feature Fusion: BiFPN introduces a bidirectional feature propagation mechanism that passes features both from higher layers to lower layers and from lower layers to higher layers. This overcomes the unidirectional (top-down) feature fusion of FPN, allowing features at every layer to interact and improving the integrity of feature fusion;

- Reduced Information Loss: By incorporating a two-way propagation mechanism, BiFPN minimizes information loss during feature fusion, ensuring that both high-level abstract features and low-level detailed features are effectively utilized;

- Dynamic Weighted Fusion: BiFPN employs learnable weight parameters to prioritize features from different layers. This dynamic weighting allows the network to adjust the importance of features based on task requirements, enabling adaptive learning of the optimal feature combinations and enhancing the network’s adaptability;

- Balancing Efficiency and Accuracy: BiFPN maintains relatively low computational complexity while ensuring high accuracy by iteratively applying top-down and bottom-up fusion mechanisms.
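The dynamic weighted fusion described in the list above can be sketched as follows. This minimal example assumes the "fast normalized fusion" form from the original BiFPN formulation, in which each learnable weight is clamped to be non-negative and divided by the sum of all weights; the exact variant used in MTNet is not shown in this section. The function name `fast_normalized_fusion`, the single-channel nested-list feature maps, and the `eps` value are illustrative assumptions.

```python
from typing import List, Sequence

FeatureMap = List[List[float]]  # H x W, a single channel for brevity


def fast_normalized_fusion(
    features: Sequence[FeatureMap],
    weights: Sequence[float],
    eps: float = 1e-4,
) -> FeatureMap:
    """Fuse same-sized feature maps as sum_i (w_i / (eps + sum_j w_j)) * F_i."""
    if not features or len(features) != len(weights):
        raise ValueError("need one weight per feature map")

    # ReLU keeps every weight non-negative so the normalization stays stable.
    clipped = [max(0.0, w) for w in weights]
    denom = sum(clipped) + eps
    normalized = [w / denom for w in clipped]

    height, width = len(features[0]), len(features[0][0])
    return [
        [
            sum(n * fmap[r][c] for n, fmap in zip(normalized, features))
            for c in range(width)
        ]
        for r in range(height)
    ]


# Two 2x2 maps: with equal weights the result is close to their average.
low_level = [[1.0, 1.0], [1.0, 1.0]]
high_level = [[3.0, 3.0], [3.0, 3.0]]
fused_equal = fast_normalized_fusion([low_level, high_level], [1.0, 1.0])

# A negative weight is clamped to zero, so only the second map contributes.
fused_clamped = fast_normalized_fusion([low_level, high_level], [-1.0, 2.0])
```

In a trained network the weights would be learned parameters, and the inputs would first be resized to a common resolution; both steps are omitted here.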

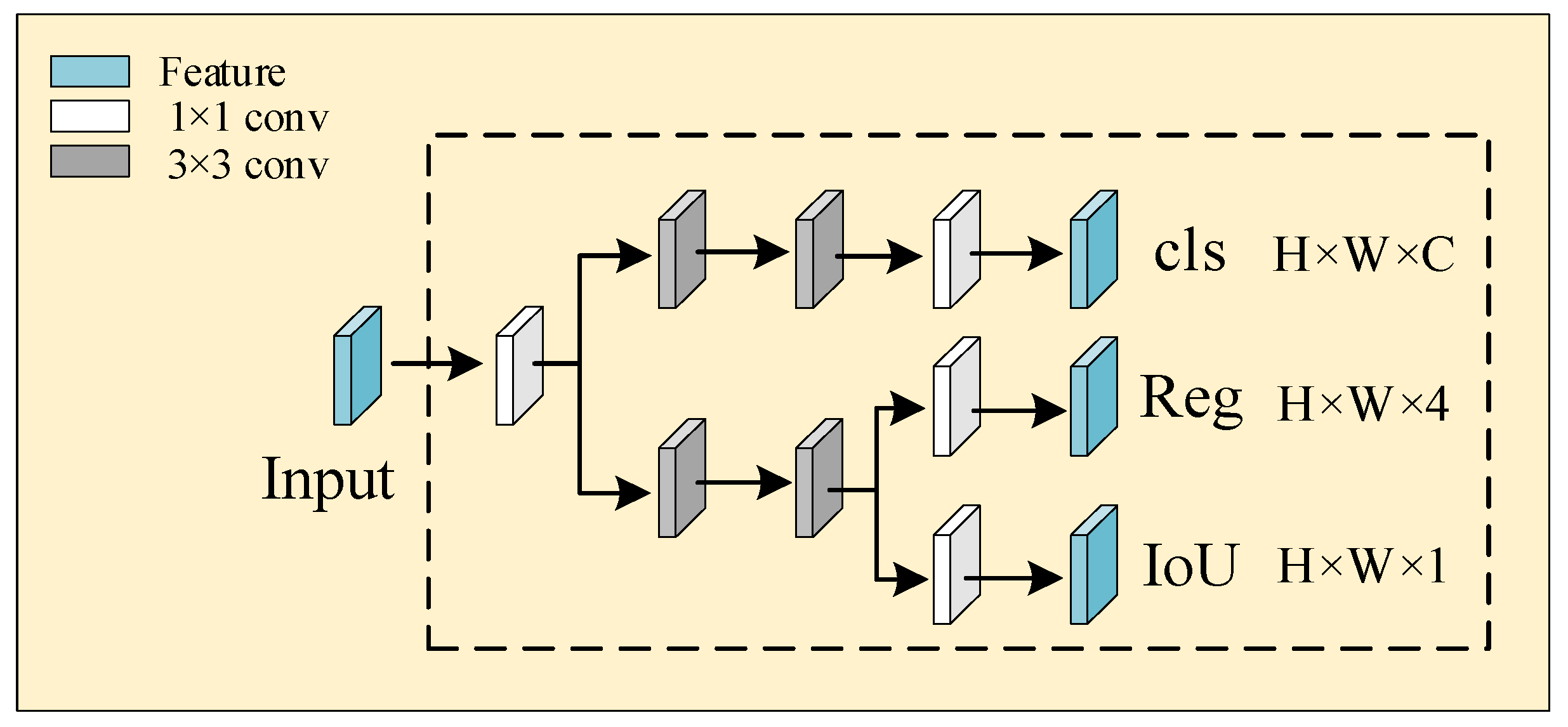

3.2. Detection-Decoder Design

3.3. Split-Decoder Design

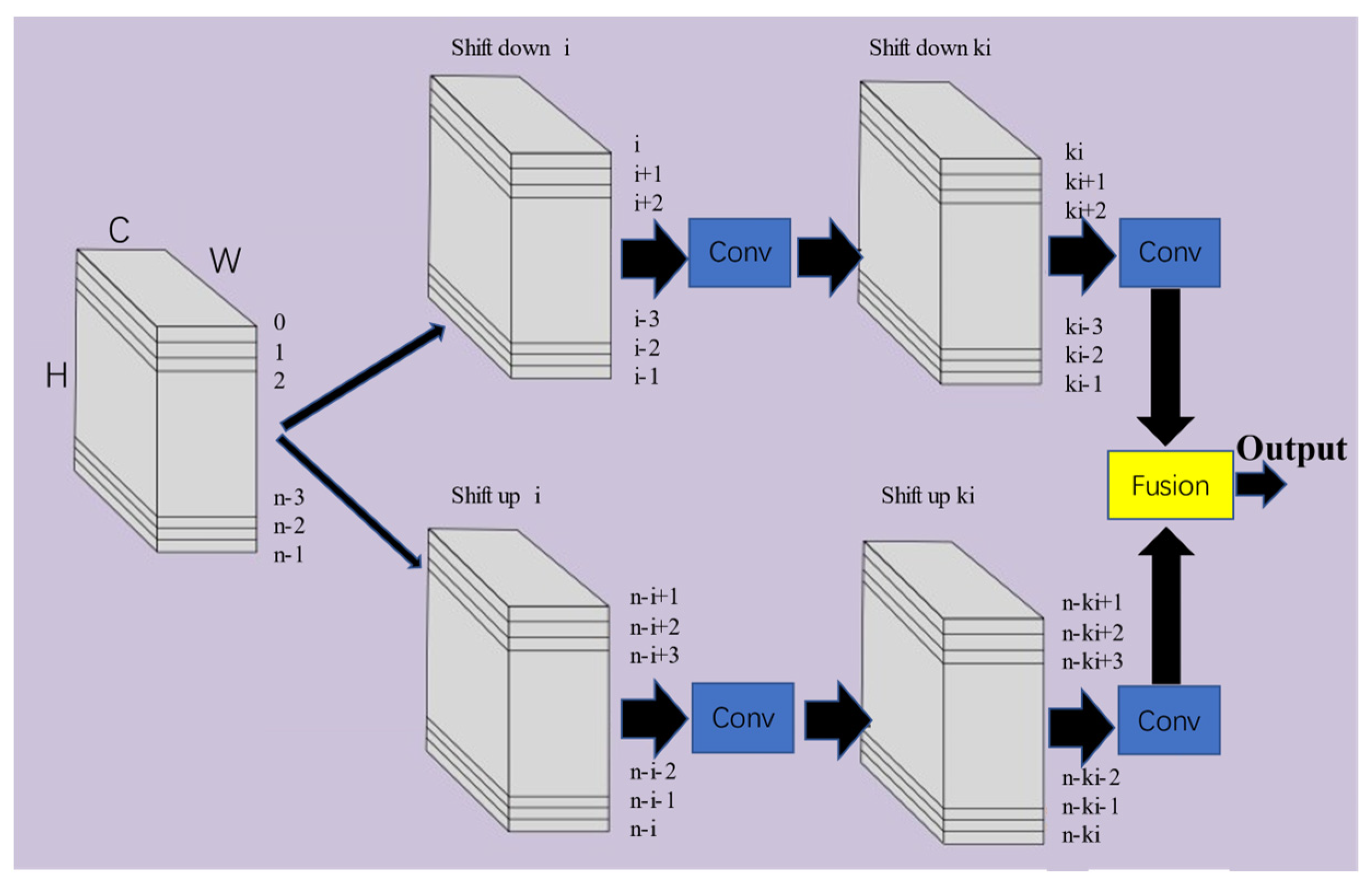

3.3.1. Feature Shift Aggregation Network (FSAN)

3.3.2. Coarse-Fine Granularity Combined Up-Sample (CFGU)

4. Experiments

4.1. Training Details

4.1.1. Experimental Platforms

4.1.2. Loss Function

- (1) Detect loss

- (2) Split loss

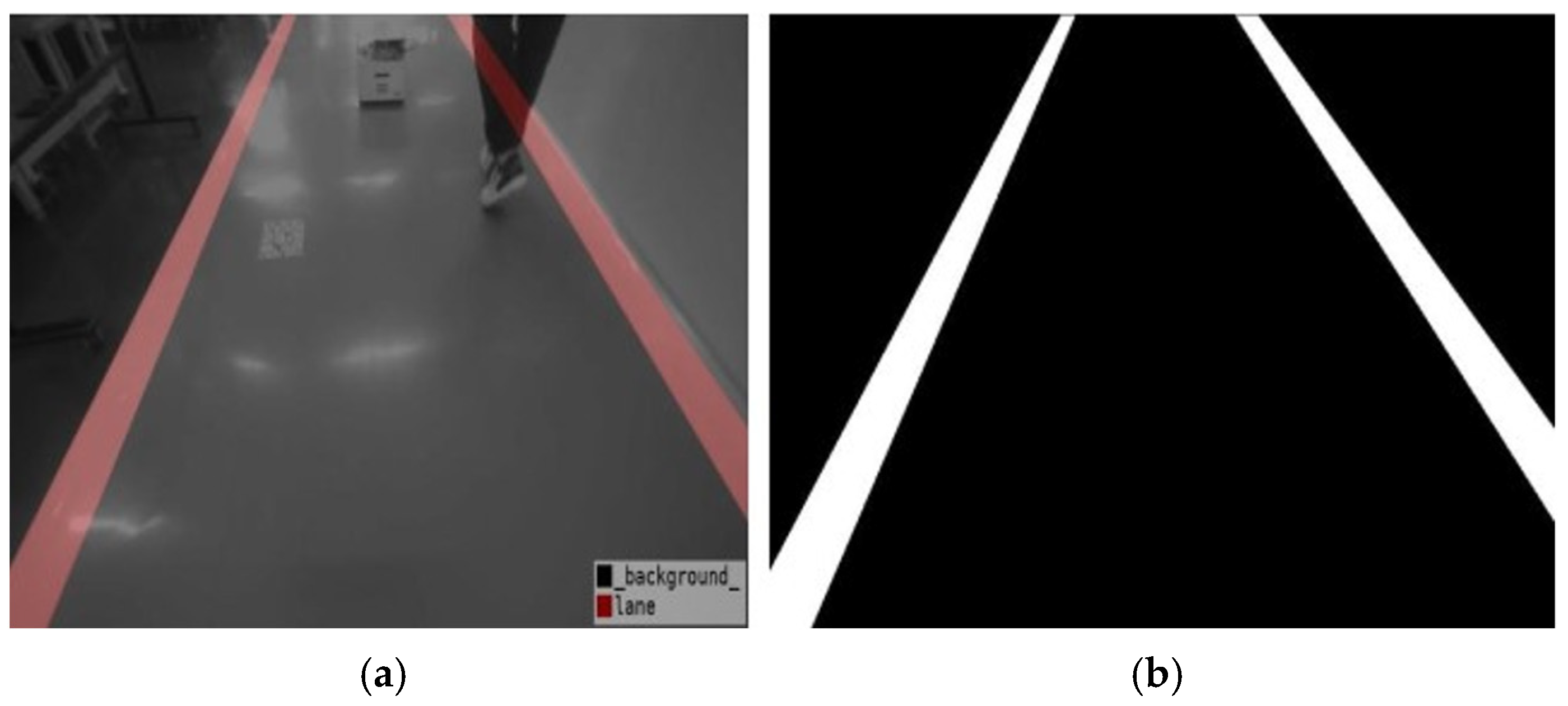

4.1.3. Dataset

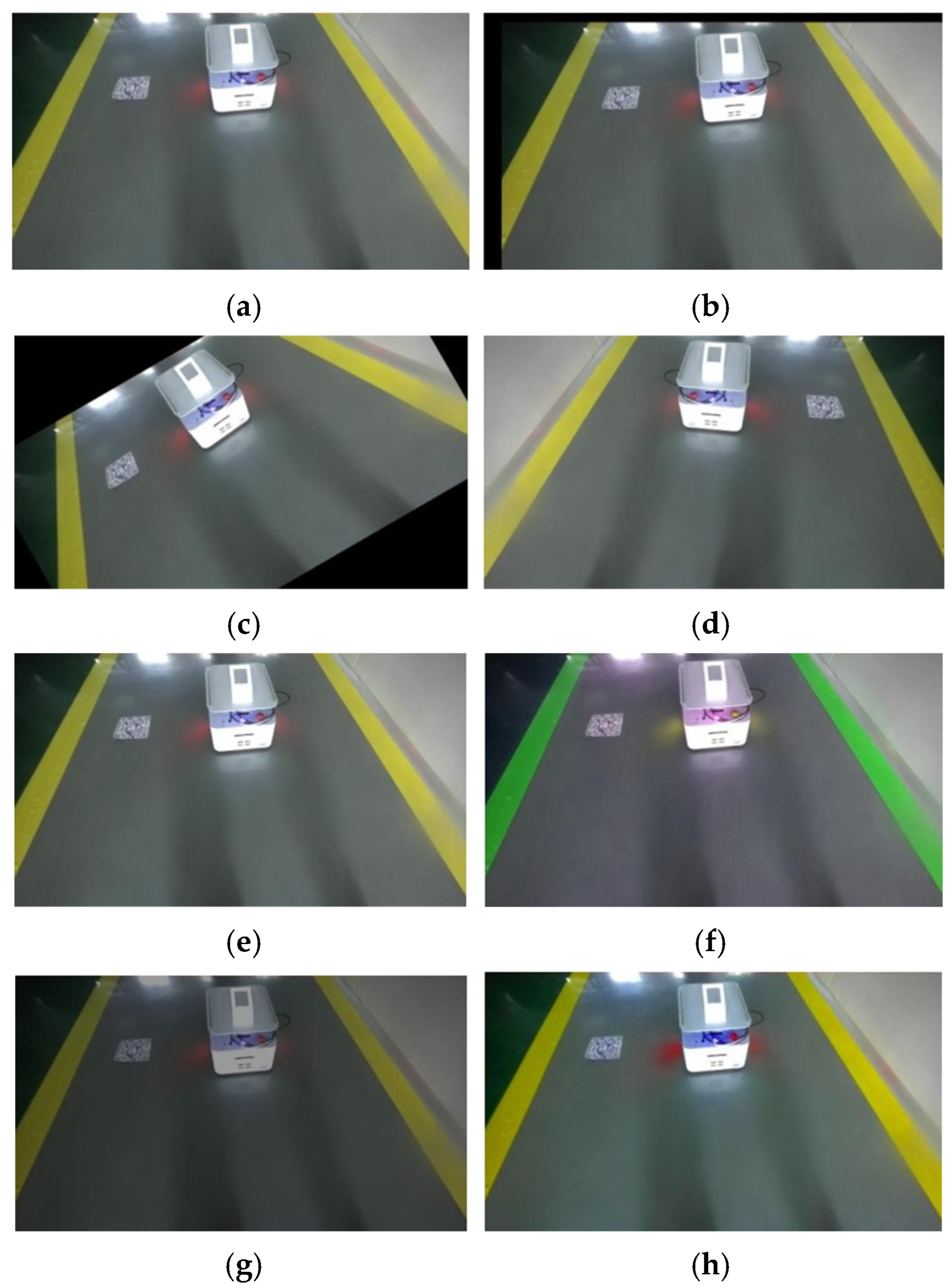

4.1.4. Data Enhancements

4.1.5. Performance-Evaluation Indicators

4.2. Comparisons and Analyses

4.3. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Batch Size | 32 |
| Epochs | 300 |
| Initial Learning Rate | 0.001 |
| Weight Decay | 0.0005 |
| Box Loss Gain | 0.05 |
| Classification Loss Gain | 0.5 |
| Lane Segmentation Loss Gain | 0.2 |
| Lane IoU Loss Gain | 0.2 |
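To show how the four loss gains in the table above might enter training, the sketch below combines per-task loss values into one scalar. It assumes the total loss is a plain weighted sum of exactly these four terms; the paper's loss equations are not reproduced in this section and may include further terms. The names `LOSS_GAINS` and `total_loss` and the sample loss values are hypothetical.

```python
from typing import Dict

# Gains copied from the hyperparameter table; the key names are illustrative.
LOSS_GAINS: Dict[str, float] = {
    "box": 0.05,
    "cls": 0.5,
    "lane_seg": 0.2,
    "lane_iou": 0.2,
}


def total_loss(components: Dict[str, float]) -> float:
    """Weighted sum of the per-task losses (assumed form, see lead-in)."""
    missing = set(LOSS_GAINS) - set(components)
    if missing:
        raise KeyError(f"missing loss components: {sorted(missing)}")
    return sum(LOSS_GAINS[name] * components[name] for name in LOSS_GAINS)


# Made-up loss values for one training step, for illustration only.
step_losses = {"box": 2.0, "cls": 1.0, "lane_seg": 0.5, "lane_iou": 0.5}
combined = total_loss(step_losses)  # 0.1 + 0.5 + 0.1 + 0.1, about 0.8
```

Under this assumption, the small box gain keeps the box-regression term from dominating the classification and lane terms when its raw magnitude is larger.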

| Method | Recall (%) | mAP50 (%) |
|---|---|---|
| Faster R-CNN | 80.6 | 80.2 |
| YOLOv5s | 94.3 | 93.6 |
| YOLOP | 96.2 | 91.5 |
| MTNet | 99.0 | 97.9 |
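The mAP50 column in the table above counts a predicted box as correct only when its intersection over union (IoU) with a ground-truth box is at least 0.5. The sketch below shows that matching test for a single pair of boxes. The `(x1, y1, x2, y2)` corner format and the function names are assumptions; the remaining steps of a full mAP computation (confidence sorting, precision-recall integration, averaging over classes) are left out.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed corner format


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def matches_at_iou50(prediction: Box, ground_truth: Box) -> bool:
    """True when the prediction would count as a hit under the 0.5 threshold."""
    return box_iou(prediction, ground_truth) >= 0.5


ground_truth = (0.0, 0.0, 4.0, 4.0)
good_prediction = (0.0, 0.0, 4.0, 3.0)   # IoU = 12 / 16 = 0.75
poor_prediction = (2.0, 0.0, 6.0, 4.0)   # IoU = 8 / 24, about 0.33
```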

| Method | Accuracy (%) | IoU (%) | Speed (ms/Frame) |
|---|---|---|---|
| ENet | 80.6 | 71.9 | 4.5 |
| SCNN | 85.2 | 74.5 | 17.6 |
| ENet-SAD | 87.4 | 76.3 | 7.0 |
| YOLOP | 95.7 | 90.0 | 8.7 |
| MTNet | 99.1 | 94.2 | 4.2 |
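For readers unfamiliar with the Accuracy and IoU columns in the table above, the sketch below computes both for a binary lane mask. It assumes the standard definitions (pixel accuracy over all pixels, IoU over the lane class only); the paper's exact formulas are not given in this section and may differ, for example by balancing accuracy across classes. The function name and the tiny masks are illustrative.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = lane pixel, 0 = background


def segmentation_metrics(pred: Mask, target: Mask) -> Tuple[float, float]:
    """Return (pixel accuracy, lane-class IoU) for two same-sized binary masks."""
    tp = fp = fn = tn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p == 1 and t == 1:
                tp += 1
            elif p == 1 and t == 0:
                fp += 1
            elif p == 0 and t == 1:
                fn += 1
            else:
                tn += 1

    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("masks must contain at least one pixel")

    accuracy = (tp + tn) / total
    union = tp + fp + fn
    # With no lane pixels in either mask, IoU is reported as 0.0 by convention here.
    iou = tp / union if union else 0.0
    return accuracy, iou


predicted = [[1, 1, 0], [0, 0, 0]]
labelled = [[1, 0, 0], [0, 1, 0]]
accuracy, iou = segmentation_metrics(predicted, labelled)  # 4/6 and 1/3
```

Lane pixels are usually a small fraction of the image, so pixel accuracy can be high even when IoU is modest, which is why the two columns are reported side by side.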

| Detecting Branch | Split Branch | Recall (%) | mAP50 (%) | Accuracy (%) | IoU (%) |
|---|---|---|---|---|---|
| √ | × | 98.5 | 97.6 | - | - |
| × | √ | - | - | 99.1 | 94.2 |
| √ | √ | 99.0 | 97.9 | 99.3 | 94.8 |

| Darknet53 | FSAN | CFGU | Accuracy (%) | IoU (%) |
|---|---|---|---|---|
| × | × | × | 95.7 | 90.0 |
| √ | × | × | 96.3 | 91.1 |
| × | √ | × | 98.5 | 93.6 |
| × | × | √ | 97.4 | 92.7 |
| √ | √ | × | 98.8 | 93.8 |
| × | √ | √ | 98.7 | 94.0 |
| √ | × | √ | 97.8 | 92.8 |
| √ | √ | √ | 99.1 | 94.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, C.; Chen, X.; Jiao, Z.; Song, S.; Ma, Z. An Improved YOLOP Lane-Line Detection Utilizing Feature Shift Aggregation for Intelligent Agricultural Machinery. Agriculture 2025, 15, 1361. https://doi.org/10.3390/agriculture15131361
