Reinforcement Learning-Driven Digital Twin for Zero-Delay Communication in Smart Greenhouse Robotics

Abstract

1. Introduction

- The simplest is the Digital Model, a static representation with no direct connection to the physical world. It is a replica that is not synchronized in real time, and it is used for simulation and default analysis;

- The Digital Shadow, by contrast, is a Digital Model that receives data from the physical world over a one-way link: the physical world updates the model, but not vice versa. It can be used for monitoring and data analysis;

- Finally, the Digital Twin is an interactive model of the physical world with a bidirectional data exchange. The model receives data in real time from the physical world, and it can send commands to the real world, modifying it.

- Real2Digital, which updates the DT with real-world data in real time;

- Digital2Real, which executes commands from the DT to the physical system;

- Digital2Digital, which simulates tasks in the DT to optimize performance and algorithms before deployment.
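The distinction drawn earlier in this section between Digital Model, Digital Shadow, and Digital Twin comes down to which automatic data flows exist between the physical and virtual sides. The following is a minimal sketch of that classification; the names `IntegrationLevel` and `classify` are illustrative and do not come from the paper.

```python
from enum import Enum


class IntegrationLevel(Enum):
    DIGITAL_MODEL = "Digital Model"
    DIGITAL_SHADOW = "Digital Shadow"
    DIGITAL_TWIN = "Digital Twin"


def classify(physical_to_digital: bool, digital_to_physical: bool) -> IntegrationLevel:
    """Classify a virtual replica by the automatic data flows it supports."""
    if physical_to_digital and digital_to_physical:
        # Bidirectional exchange: the model is updated and can act on the real system.
        return IntegrationLevel.DIGITAL_TWIN
    if physical_to_digital:
        # One-way link: the physical world updates the model, not vice versa.
        return IntegrationLevel.DIGITAL_SHADOW
    if digital_to_physical:
        # This combination is not described in the text, so it is rejected here.
        raise ValueError("a digital-to-physical-only link is not covered by the taxonomy")
    # No live connection at all: a static replica.
    return IntegrationLevel.DIGITAL_MODEL
```

For example, `classify(True, False)` yields the Digital Shadow level, since data flows only from the physical world into the model.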

2. Related Work

2.1. Wearable Glove-Based Teleoperation Systems

2.2. IoT and Smart Agriculture Monitoring

2.3. Digital Twin Applications in Robotics and Human Interaction

2.4. Limitations of Vision-Based Gesture Recognition

2.5. Digital Twins Combined with Reinforcement Learning

2.6. Positioning of Our Work

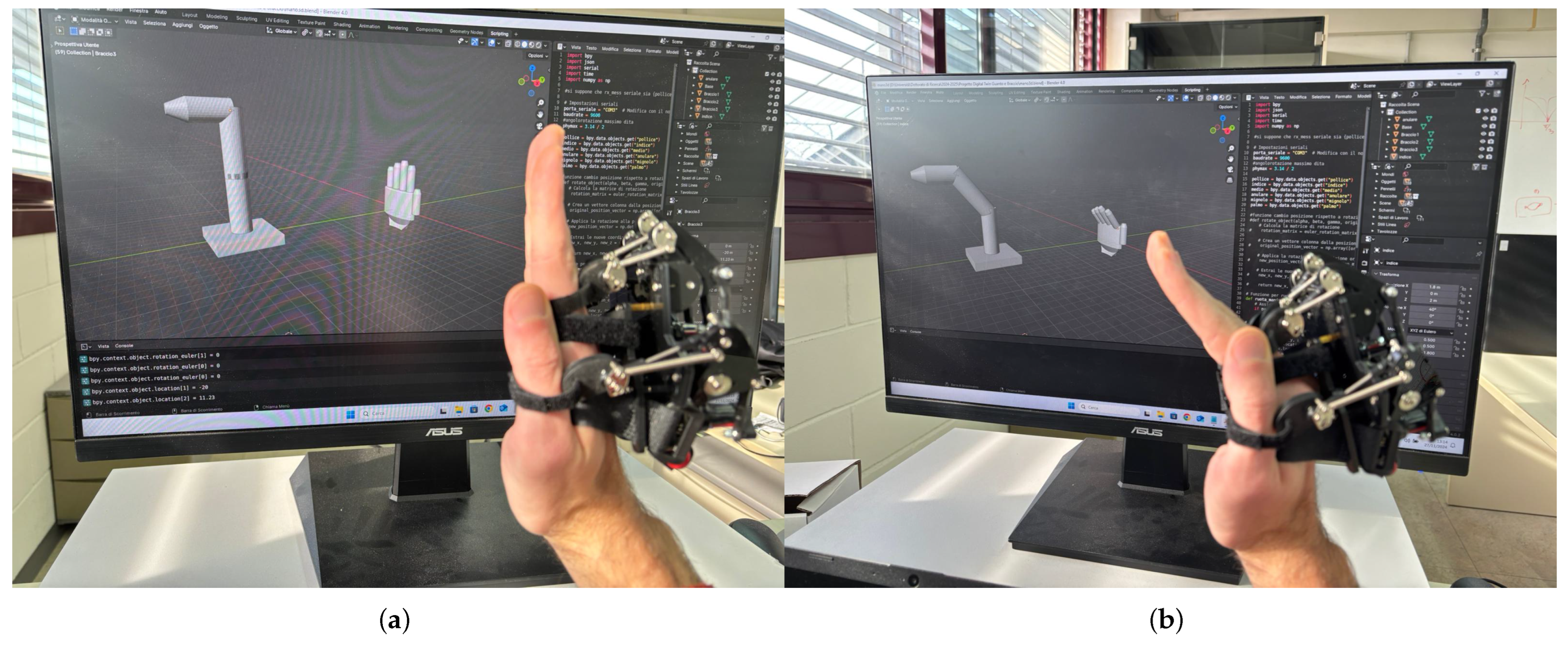

3. Materials and Methods

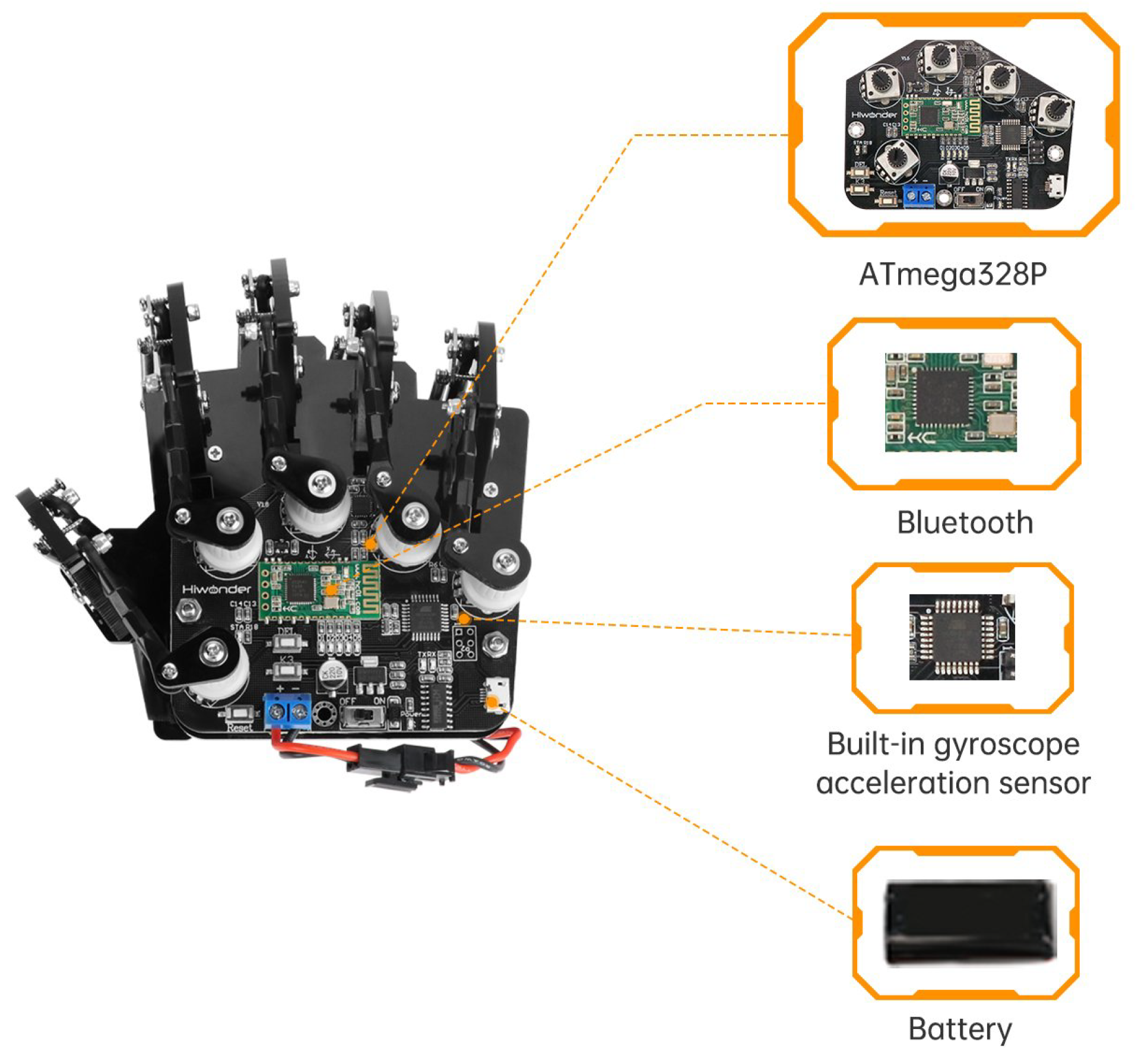

3.1. Sensor-Equipped Wearable Glove

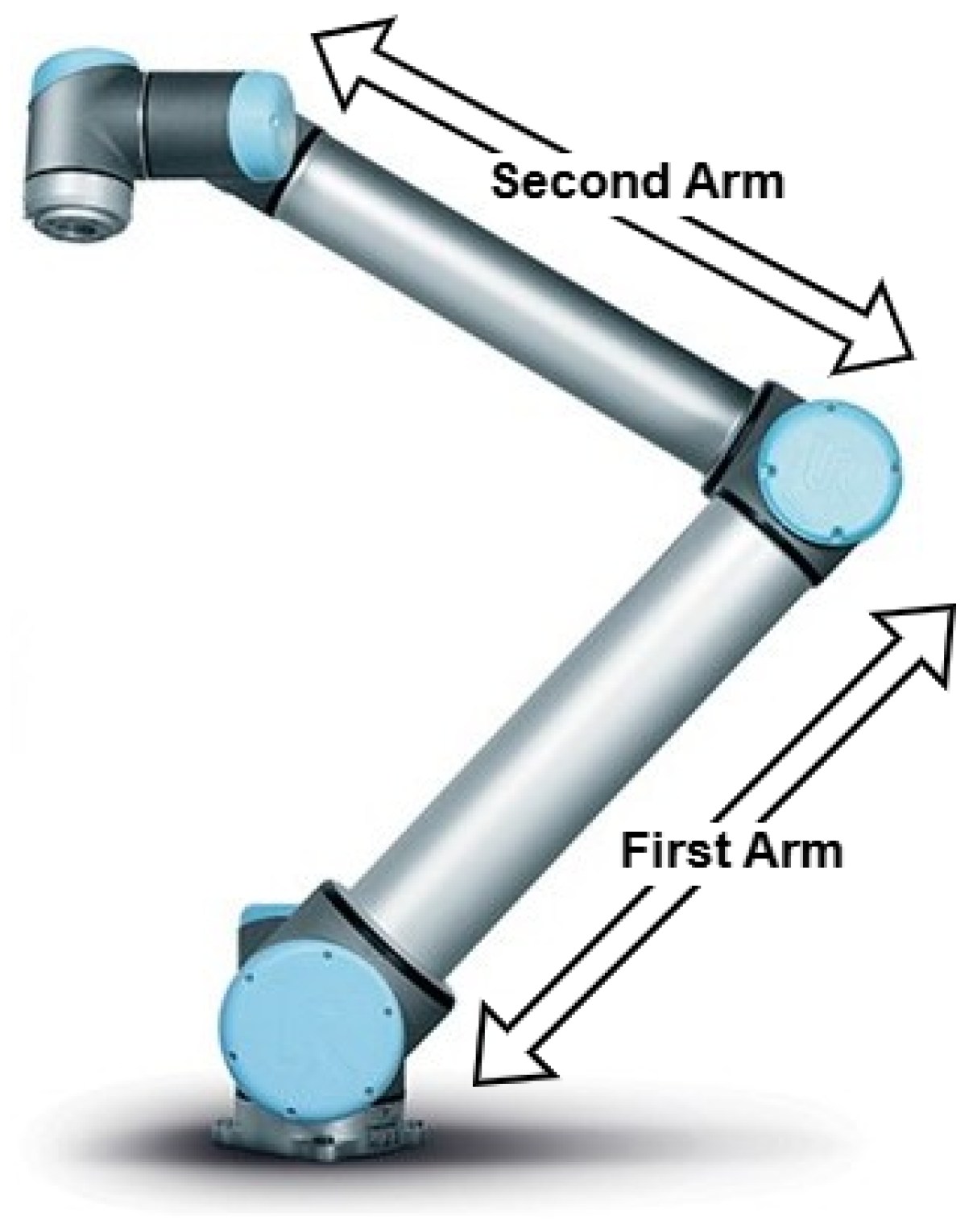

3.2. 6-Degree-of-Freedom Robotic Arm

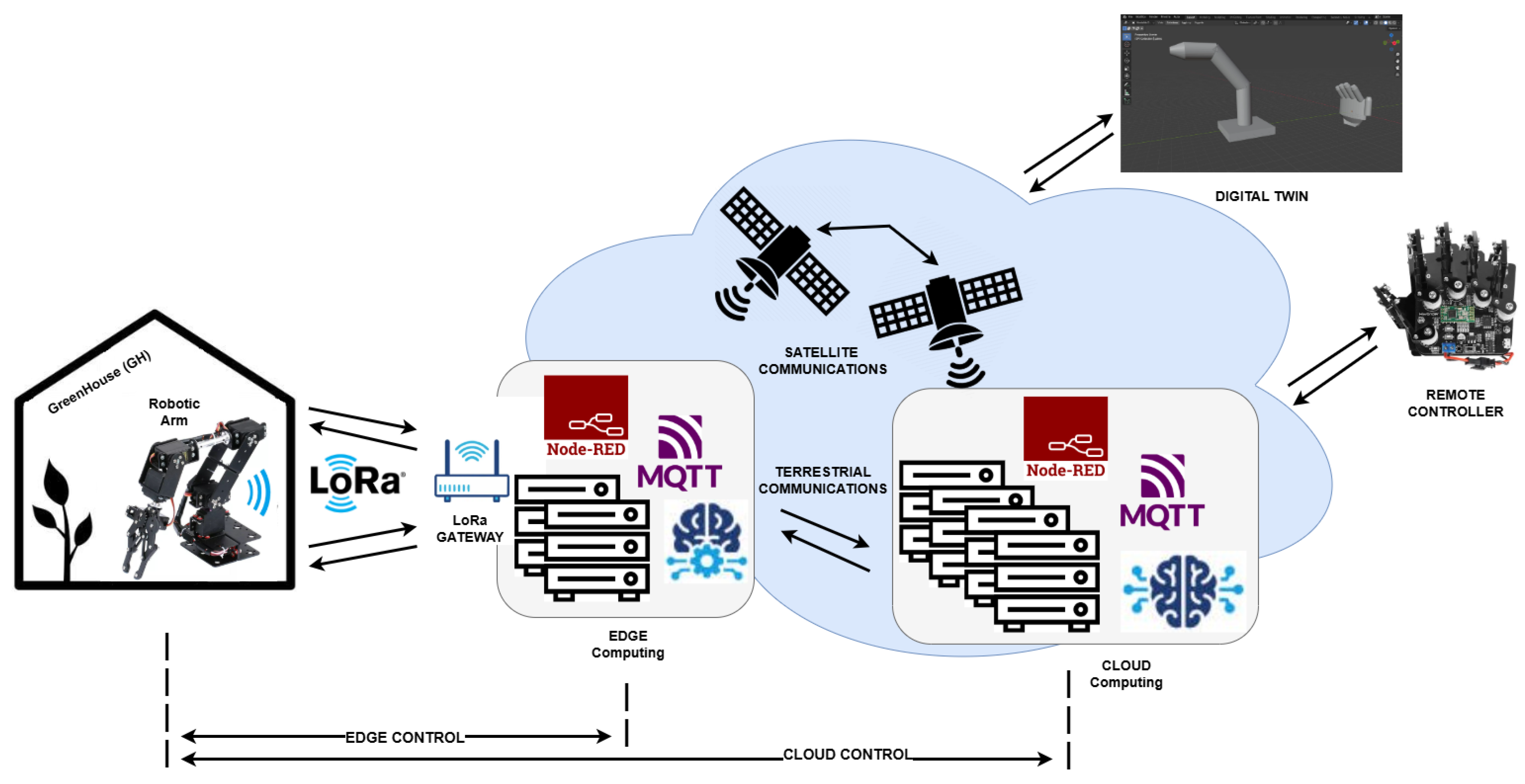

3.3. Smart Greenhouse System Architecture

3.4. Training Configurations of the RL Model

- Actor-critic networks: 3-layer MLPs (256-128 units)

- Exploration: Gaussian noise

- Training parameters:

  - Replay buffer: samples

  - Batch size: 128

  - Learning rate: 0.001

  - Training steps: 50,000
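The configuration listed above can be collected in one place, as in the sketch below. This is an illustration under stated assumptions rather than the authors' code: the replay buffer size is not recoverable from the text, so it is a required argument with no default, and `noise_std`, the clipping range, and the names `TrainingConfig` and `explore` are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class TrainingConfig:
    replay_buffer_size: int                      # not given in the text; caller must supply it
    hidden_units: Tuple[int, int] = (256, 128)   # hidden widths of the actor and critic MLPs
    batch_size: int = 128
    learning_rate: float = 1e-3
    training_steps: int = 50_000
    noise_std: float = 0.1                       # assumption: the text gives no noise scale


def explore(action: Sequence[float], cfg: TrainingConfig, rng: random.Random,
            low: float = -1.0, high: float = 1.0) -> List[float]:
    """Add zero-mean Gaussian noise to each action component, then clip to [low, high]."""
    return [min(high, max(low, a + rng.gauss(0.0, cfg.noise_std))) for a in action]
```

Passing an explicit `random.Random` instance keeps exploration reproducible across runs, which helps when comparing training configurations.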

4. Digital Twin Framework and Hand Movement Prediction Model

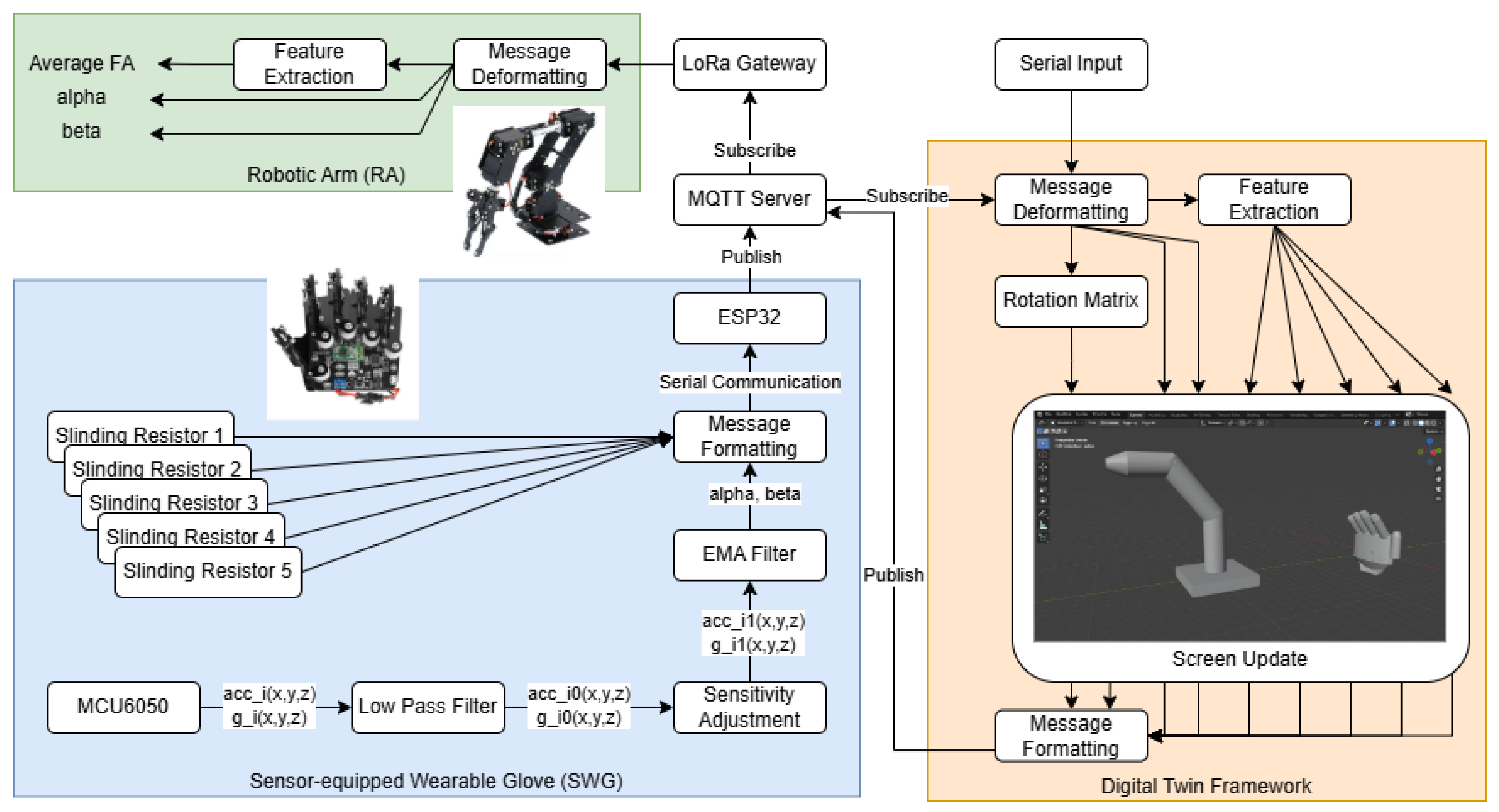

4.1. Digital Twin Framework

- Real2Digital: The SWG and RA operate in the physical world, transmitting data to the DT to maintain synchronization between the 3D models and real-world actions. Communication is handled via the MQTT protocol, which enables the transmission of commands and status updates from the physical environment to the digital realm.

- Digital2Real: In this mode, interaction occurs in the opposite direction, from the DT to the physical system. Specifically, manipulations performed directly on the 3D model of the hand or RA are transmitted as control commands to the RA in real time. In our implementation, human interaction with the DT is facilitated through a serial interface, which differentiates control messages from those originating from field devices.

- Digital2Digital: This mode is particularly useful for testing and analyzing new algorithms and commands in a virtual environment without affecting the physical world. Here, mode-specific identifiers are used to indicate the operational mode. Through the serial interface, commands can be sent to directly manipulate the 3D model of the hand or RA, allowing visualization of the robotic arm’s potential response without requiring actual physical movement.
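The three modes above differ in where a message comes from and whether it is allowed to reach the physical robotic arm. The sketch below shows one way such routing could be expressed. The identifier strings (`"R2D"`, `"D2R"`, `"D2D"`) and the names `Mode`, `Source`, `Routing`, and `route` are hypothetical; the paper states only that mode-specific identifiers exist, that field data arrives over MQTT, and that human interaction uses a serial interface.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    REAL2DIGITAL = "R2D"      # hypothetical identifier strings
    DIGITAL2REAL = "D2R"
    DIGITAL2DIGITAL = "D2D"


class Source(Enum):
    FIELD_MQTT = "mqtt"       # data from the SWG or RA, received over MQTT
    SERIAL = "serial"         # a human interacting with the DT


@dataclass(frozen=True)
class Routing:
    update_model: bool        # refresh the 3D model of the hand or RA
    command_robot: bool       # forward a control command to the physical RA


def route(mode: Mode, source: Source) -> Routing:
    """Decide what a message may affect, given the active mode and its origin."""
    if mode is Mode.REAL2DIGITAL and source is Source.FIELD_MQTT:
        return Routing(update_model=True, command_robot=False)
    if mode is Mode.DIGITAL2REAL and source is Source.SERIAL:
        return Routing(update_model=True, command_robot=True)
    if mode is Mode.DIGITAL2DIGITAL and source is Source.SERIAL:
        # Simulation only: the physical arm must not move.
        return Routing(update_model=True, command_robot=False)
    # Combinations not described in the text are ignored in this sketch.
    return Routing(update_model=False, command_robot=False)
```

Keeping the decision in a single pure function makes the safety property of Digital2Digital (no physical movement) easy to check in isolation.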

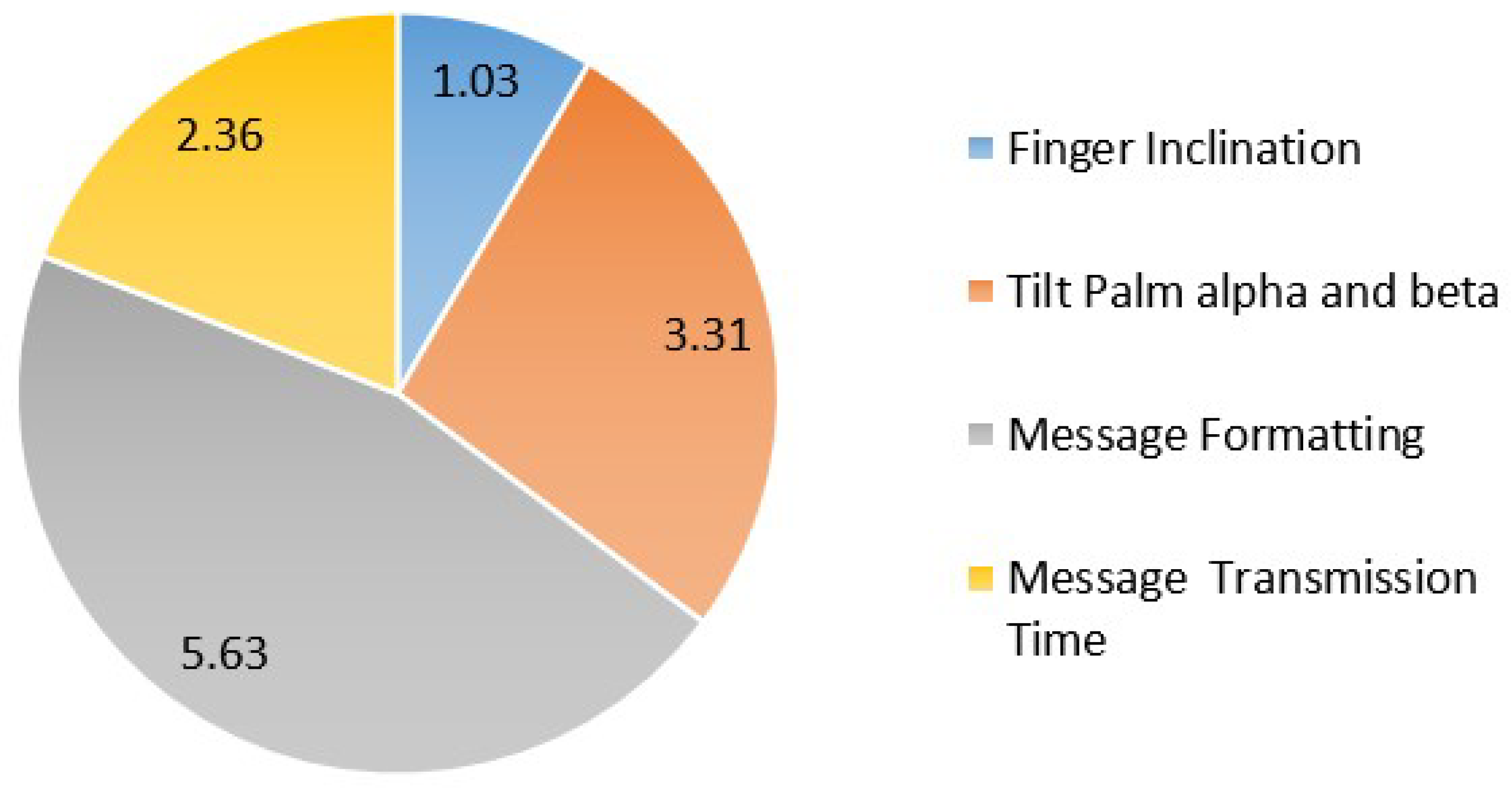

4.2. SWG Computation

Algorithm 1 Computation of Palm Tilt Angles and

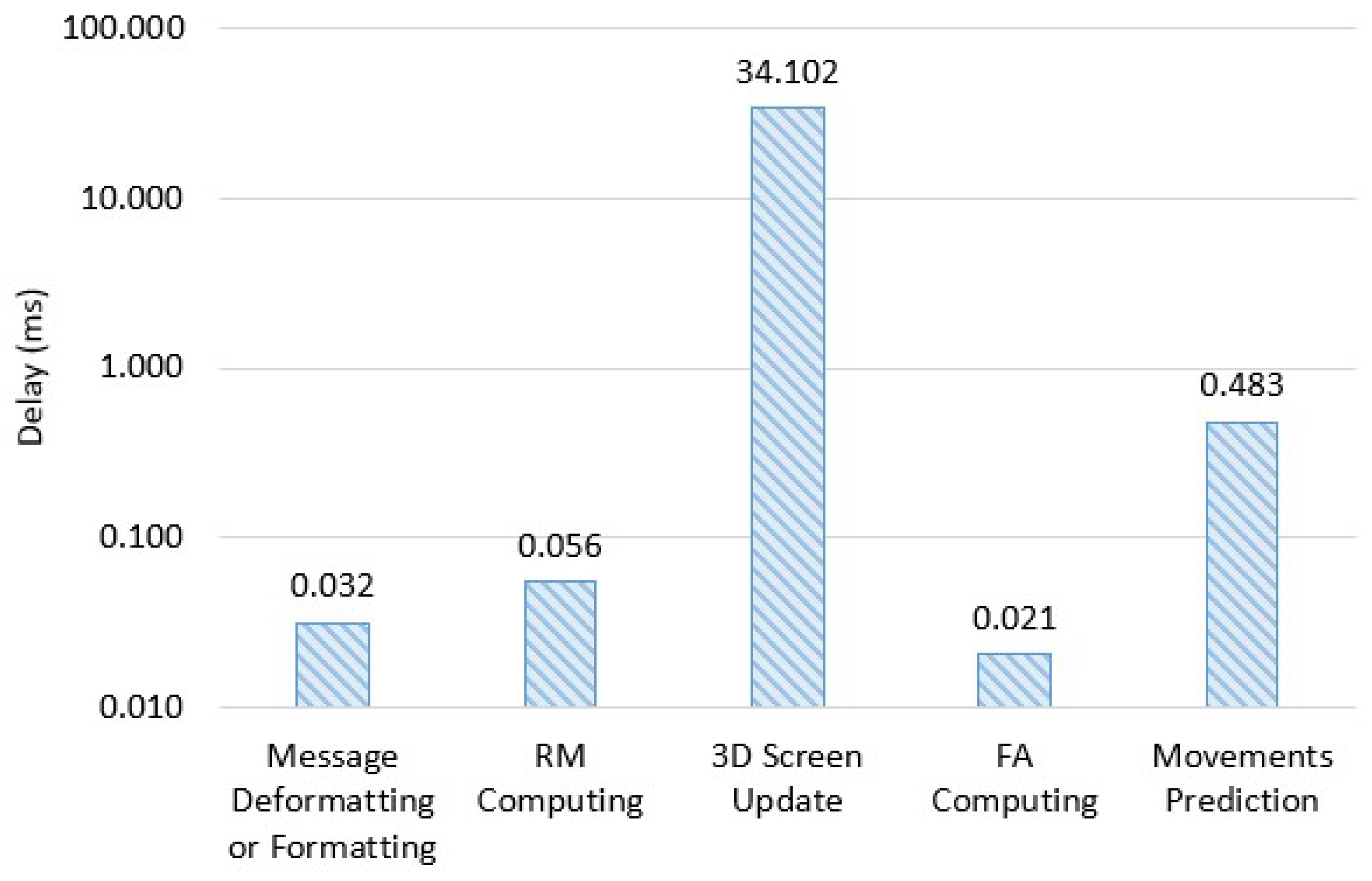

4.3. RA and DT Computation

4.4. Hand Movements Prediction with Reinforcement Learning

- the observable state of the system (state),

- the actions that the agent can perform,

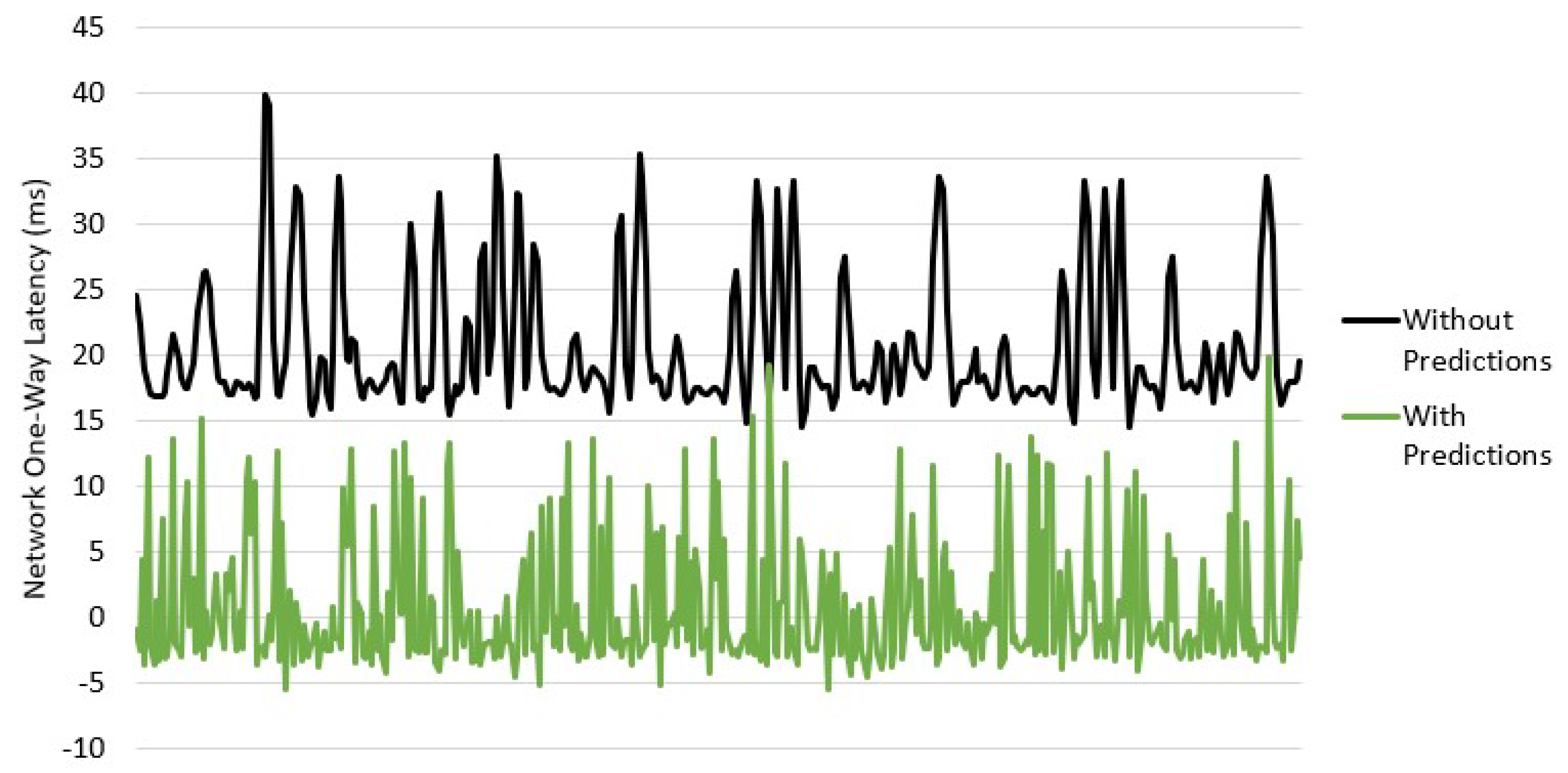

- a reward function that quantifies the goodness of each action over time,

- and a policy, which is the decision strategy that the agent learns and optimizes during the interaction with the environment.
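The four components listed above (state, action, reward, policy) can be made concrete with a small sketch. Everything specific here is an assumption for illustration: the state is taken to be seven values (five fingers plus PalmX and PalmY, matching the channels in the MSE table), the action is taken to be the predicted next state, the reward is the negative mean squared error, and `persistence_policy` is a placeholder standing in for the learned policy.

```python
from typing import Callable, List, Sequence

State = List[float]     # assumed: 5 finger values + PalmX + PalmY
Action = List[float]    # assumed: the predicted next state
Policy = Callable[[State], Action]


def reward(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Negative mean squared error: zero for a perfect prediction, lower otherwise."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return -sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def persistence_policy(state: State) -> Action:
    """Placeholder policy: predict that the hand does not move."""
    return list(state)


def rollout(policy: Policy, trajectory: Sequence[State]) -> float:
    """Apply the policy along a recorded trajectory and return the summed reward."""
    total = 0.0
    for current, nxt in zip(trajectory, trajectory[1:]):
        total += reward(policy(current), nxt)
    return total
```

A learned policy would replace `persistence_policy`; the other pieces show only how a prediction is scored against what the hand actually did next.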

- Break the temporal correlations between sequential samples.

- Provide a diverse set of experiences, which stabilizes the training process.
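Both effects above come from storing past transitions and drawing training batches from them uniformly at random. The following is a minimal replay buffer sketch using only the standard library; the class name, the transition layout, and the fixed seed are illustrative choices, not details taken from the paper.

```python
import random
from collections import deque
from typing import Deque, List, Tuple

# (state, action, reward, next_state)
Transition = Tuple[list, list, float, list]


class ReplayBuffer:
    def __init__(self, capacity: int, seed: int = 0) -> None:
        # A bounded deque discards the oldest transition once capacity is reached.
        self._items: Deque[Transition] = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, transition: Transition) -> None:
        self._items.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform sampling without replacement breaks the temporal ordering
        # of consecutive transitions within a batch.
        return self._rng.sample(list(self._items), batch_size)

    def __len__(self) -> int:
        return len(self._items)
```

Sampling should start only once the buffer holds at least `batch_size` transitions, since `random.Random.sample` raises `ValueError` when asked for more items than are available.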

5. Experimental Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Delay (ms) | Edge Computing | Cloud Computing |
|---|---|---|
| SWG Total Delay | 12.33 | 12.33 |
| Network Latency | 20.74 | 70.68 |
| Digital Twin Total Delay | 34.69 | 34.69 |
| Total Delay | 67.76 | 117.70 |
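The totals in the delay table are the sum of the three components in each column, and the two deployments differ only in network latency. The short check below makes that arithmetic explicit; the dictionary keys and the function name are illustrative.

```python
# Component delays in milliseconds, copied from the table.
DELAYS_MS = {
    "edge": {"swg": 12.33, "network": 20.74, "digital_twin": 34.69},
    "cloud": {"swg": 12.33, "network": 70.68, "digital_twin": 34.69},
}


def total_delay_ms(deployment: str) -> float:
    """Sum the component delays for one deployment, rounded to two decimals."""
    return round(sum(DELAYS_MS[deployment].values()), 2)


edge_total = total_delay_ms("edge")
cloud_total = total_delay_ms("cloud")

# The gap between the deployments equals the difference in network latency.
saving_ms = round(cloud_total - edge_total, 2)
network_gap_ms = round(
    DELAYS_MS["cloud"]["network"] - DELAYS_MS["edge"]["network"], 2
)
```

With the table's values, the edge and cloud sums match the reported 67.76 ms and 117.70 ms, and the 49.94 ms gap between them is entirely network latency.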

| Metric | MSE Value |
|---|---|
| Overall MSE | 0.014206 |
| Thumb | 0.017617 |
| Index Finger | 0.015549 |
| Middle Finger | 0.009180 |
| Ring Finger | 0.010753 |
| Little Finger | 0.013323 |
| PalmX | 0.017937 |
| PalmY | 0.015083 |
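The overall value in the MSE table coincides, to six decimals, with the unweighted mean of the seven per-channel values. That is an observation from the numbers rather than a definition stated in the text; the sketch below reproduces it and also picks out the best and worst predicted channels.

```python
from statistics import fmean

# Per-channel MSE values copied from the table.
PER_CHANNEL_MSE = {
    "Thumb": 0.017617,
    "Index Finger": 0.015549,
    "Middle Finger": 0.009180,
    "Ring Finger": 0.010753,
    "Little Finger": 0.013323,
    "PalmX": 0.017937,
    "PalmY": 0.015083,
}

# Unweighted mean across the seven channels.
overall_mse = fmean(PER_CHANNEL_MSE.values())

# Channels with the lowest and highest prediction error.
best_channel = min(PER_CHANNEL_MSE, key=PER_CHANNEL_MSE.get)
worst_channel = max(PER_CHANNEL_MSE, key=PER_CHANNEL_MSE.get)
```

On these values the middle finger has the lowest error and PalmX the highest, with the thumb close behind.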

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bua, C.; Borgianni, L.; Adami, D.; Giordano, S. Reinforcement Learning-Driven Digital Twin for Zero-Delay Communication in Smart Greenhouse Robotics. Agriculture 2025, 15, 1290. https://doi.org/10.3390/agriculture15121290