Abstract

Dragon fruit detection in natural environments remains limited by insufficient accuracy and difficult deployment, primarily because of variable lighting and occlusion by branches. To improve detection accuracy and satisfy the deployment constraints of edge devices, we propose YOLOv10n-CGD, a lightweight and efficient dragon fruit detection method designed for robotic harvesting. The method builds on YOLOv10 and integrates Gated Convolution (gConv) into the C2f module, forming a C2f-gConv structure that reduces model parameters and computational complexity. In addition, a Global Attention Mechanism (GAM) is inserted between the backbone and the feature fusion layers to enrich semantic representations and improve the detection of occluded fruits. Furthermore, the neck network integrates a dynamic upsampling operator (DySample) to improve the spatial restoration of high-level semantic features. Experimental results show that YOLOv10n-CGD improves detection performance while reducing model size from 5.8 MB to 4.5 MB, a 22.4% decrease. The mAP improves from 95.1% to 98.1%, with precision and recall reaching 97.1% and 95.7%, respectively, and the improvements are statistically significant (p < 0.05). Moreover, detection speeds of 44.9 FPS and 17.2 FPS are achieved on the Jetson AGX Orin and Jetson Nano, respectively, demonstrating real-time capability and suitability for deployment. In summary, YOLOv10n-CGD enables high-precision, real-time dragon fruit detection while preserving model compactness, offering technical support for future robotic harvesting systems and smart agricultural terminals.

1. Introduction

As a tropical fruit, dragon fruit has gained widespread popularity due to its distinctive appearance and rich nutritional content. In recent years, dragon fruit cultivation in China has expanded rapidly, accompanied by a continuous rise in consumer demand [1]. Currently, dragon fruit harvesting depends predominantly on manual labor, which is labor-intensive, costly, and time-consuming. Robotic harvesting can replace this manual labor and therefore has significant value and broad prospects in agricultural production [2]. Fruit detection is a critical technology for picking robots [3]; achieving accurate recognition of dragon fruits in natural orchard environments is thus essential for advancing automated harvesting.

With advances in computing, deep learning-based object detection methods have been widely adopted in agriculture [4,5,6]. Compared with traditional detection algorithms, deep learning employs deeper neural networks capable of extracting both low-level and high-level features, offering greater adaptability and robustness in fruit recognition tasks [7]. Gui et al. [8] applied the YOLO-Tea detection algorithm to tea buds in unstructured environments; by incorporating a Multi-scale Convolutional Block Attention Module (MCBAM) and a multi-scale prediction layer, they significantly improved the detection of small tea buds in dense scenes. Ji et al. [9] proposed an improved YOLOv4-based apple recognition method that uses a lightweight EfficientNet-B0 network for feature extraction combined with the Path Aggregation Network (PANet), achieving an average accuracy of 93.42%. Liu et al. [10] applied K-means clustering within the YOLOv7 framework and, by embedding the Coordinate Attention (CA) module into the backbone network, refining the loss function, and adding a P2 downsampling module, recognized unripe yellow peaches with an accuracy of 79.3%. Gai et al. [11] proposed TL-YOLOv8, a blueberry recognition algorithm that integrates an improved Multidimensional Coordinated Attention (MPCA) module into the backbone network, achieving an accuracy of 84.6% for blueberry recognition in orchards. Jiang et al. [12] proposed a young apple detection algorithm that combines the YOLOv4 network with visual attention mechanisms; the improved model achieved a detection accuracy of 85.8%.
Despite notable advances in detection accuracy, many existing methods entail high computational complexity and exhibit limited robustness in challenging conditions such as occlusion, low light, and cluttered backgrounds, which restricts their deployment on embedded platforms.

Numerous researchers have also explored dragon fruit recognition. Zhou et al. [13] incorporated RepGhost and a decoupled head into YOLOv7, along with multiple Efficient Channel Attention (ECA) modules placed across the network to enhance feature extraction and prediction accuracy. Nan et al. [14] enhanced the backbone by designing the WFE-C4 module and used a BiFPN-structured head network for multi-scale feature fusion, achieving an average detection accuracy of 86.0%. Qiu et al. [15] categorized dragon fruit into four maturity stages (bud, immature, semi-ripe, and ripe) and developed a classification network based on an improved YOLOv8n, which achieved an accuracy of 85.2%. Li et al. [16] categorized and counted dragon fruit flowers, immature fruits, and ripe fruits, proposing a rapid identification and counting method that achieved an average accuracy of 95.0% in testing. Wang et al. [17] proposed a real-time detection and tracking method for dragon fruits in orchard environments; by integrating a convolutional attention module, a Transformer self-attention mechanism, and the DeepSORT multi-target tracking algorithm, they developed the YOLOv5s-DFT model, achieving a recognition accuracy of 87.22%. Huang et al. [18] introduced an improved YOLOv8-G algorithm for detecting dragon fruit stem diseases, achieving an average detection accuracy of 83.1%. While these studies offer diverse approaches to fruit detection and tracking, there remains room to improve both real-time performance and detection accuracy.

Although considerable research has been conducted on dragon fruit detection, key challenges persist, particularly in achieving real-time performance under complex field conditions. They can be summarized as follows: (1) existing models often have large parameter counts and high computational costs, hindering deployment on resource-limited agricultural robots; (2) complex orchard environments, characterized by cluttered backgrounds, low illumination, and fruit occlusion, severely impair detection accuracy, leading to missed and false detections; and (3) the inference speed of current models remains insufficient for the rapid response required in field operations, limiting real-time performance and reducing picking efficiency.

To tackle these challenges, we propose YOLOv10n-CGD, a lightweight and efficient dragon fruit detection method built on an improved YOLOv10 architecture. The proposed method reduces computational overhead and increases detection speed while also improving accuracy, thereby improving deployment efficiency and robustness on embedded platforms to meet the real-time demands of robotic harvesting.

The main contributions of this paper are as follows:

(1) Gated Convolution (gConv) is integrated into the C2f module to form the C2f-gConv module, which reduces both parameter count and computational cost without compromising detection performance.

(2) A Global Attention Mechanism (GAM) is inserted between the backbone and the feature fusion layers to improve the model’s focus on occluded targets and boost detection performance in complex environments.

(3) The DySample dynamic upsampling operator is applied in the neck to adaptively enhance feature representation while preserving the model’s lightweight structure.

(4) The improved model is deployed and tested on embedded devices, achieving faster detection speeds than the baseline and demonstrating strong potential for deployment on field harvesting robots.

2. Materials and Methods

2.1. Dataset Construction

2.1.1. Data Sources

The experimental dataset of dragon fruit images was captured between September and mid-October 2024 at Lile Agricultural Park in Nanjing, Jiangsu Province, and the Heqing Dragon Fruit Picking Base in Shanghai, China. Images were acquired between 08:00 and 18:00 using an Intel RealSense D435i camera (Intel Corporation, Santa Clara, CA, USA) at a resolution of 1280 × 720 pixels and saved in JPG format. The images were collected in natural field environments, with the camera positioned 0.3–1.2 m from the dragon fruit. To enhance dataset diversity and improve the model’s generalization ability, images were captured from multiple angles under varied conditions, including uniform lighting, backlighting, and partial occlusion by branches. A total of 2135 dragon fruit images were collected; representative samples are shown in Figure 1.

Figure 1.

Examples of the collected dragon fruit images.

2.1.2. Data Augmentation and Dataset Preparation

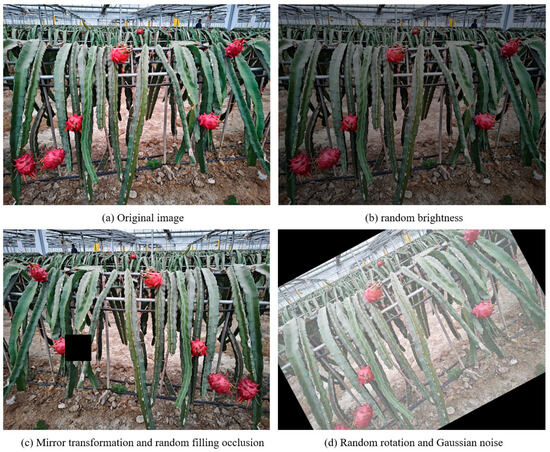

In this experiment, the Labeling tool was used to annotate the regions containing dragon fruit in the original images with rectangular bounding boxes. The dragon fruit label was designated “df”, and annotations were saved as YOLO-format text files. To mitigate the risk of overfitting caused by the limited dataset size and to enhance the network’s robustness and generalization, data augmentation was applied: random brightness adjustment to simulate variations in lighting, mirror transformations and randomized occlusion filling to replicate branch-induced occlusion, and random rotations and Gaussian noise to mimic camera-induced blur, as illustrated in Figure 2. Augmentation expanded the dataset to four times its original size, for a total of 8540 dragon fruit images (2135 × 4), which were then partitioned into training, validation, and test sets in a 7:2:1 ratio. The resulting dataset split is shown in Table 1.
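To make the annotation format and the 7:2:1 partition concrete, the following Python sketch converts a pixel-space bounding box into a YOLO-format label line (class index, normalized center x and y, width, height) and splits a list of image names by ratio. The function names, the class index 0 for “df”, the example box, and the seeded shuffle are illustrative assumptions, not the authors’ tooling.

```python
import random


def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    YOLO-format text files store one object per line as
    'class x_center y_center width height', all normalized to [0, 1].
    """
    x_min, y_min, x_max, y_max = box
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def split_dataset(names, ratios=(7, 2, 1), seed=0):
    """Shuffle names reproducibly and split them into train/val/test by ratio."""
    names = list(names)
    random.Random(seed).shuffle(names)
    total = sum(ratios)
    n_train = len(names) * ratios[0] // total
    n_val = len(names) * ratios[1] // total
    train = names[:n_train]
    val = names[n_train:n_train + n_val]
    test = names[n_train + n_val:]  # the remainder goes to the test split
    return train, val, test


if __name__ == "__main__":
    # Hypothetical annotation: class 0 ("df") in a 1280 x 720 image.
    print(to_yolo_line(0, (540, 260, 740, 460), 1280, 720))
    images = [f"img_{i:05d}.jpg" for i in range(8540)]
    train, val, test = split_dataset(images)
    print(len(train), len(val), len(test))  # 5978 1708 854
```

Since each original photo yields several augmented copies, one possible design choice (not stated in the text) is to split by source image before augmentation, so that copies of the same photo do not appear in both the training and test sets.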

Figure 2.

An example of image augmentation.

Table 1.

Dragon fruit dataset distribution table.

2.2. YOLOv10 Convolutional Neural Network

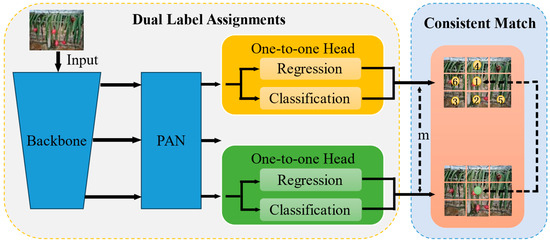

The YOLOv10 network, introduced by Tsinghua University in May 2024, is a real-time, end-to-end object detection model. It builds on the Ultralytics Python package (version 8.3.13) and addresses limitations of prior YOLO versions related to post-processing and model architecture. The primary innovation of YOLOv10 is its consistent dual assignment strategy for NMS-free training [19]. The head contains both a one-to-one and a one-to-many matching detection head, allowing multiple predictions per object during training. This design lets the backbone and neck benefit from the richer supervision provided by one-to-many assignment, thereby improving learning accuracy. During inference, only the one-to-one head is used, producing a single best prediction per object without non-maximum suppression (NMS), which significantly reduces latency and improves efficiency for end-to-end deployment [20]. Figure 3 illustrates the schematic structure of consistent dual assignment for NMS-free training.

Figure 3.

Consistent dual assignments for NMS-free training. Note: m denotes the matching metric, which is used to quantitatively assess the level of agreement between predictions and instances.

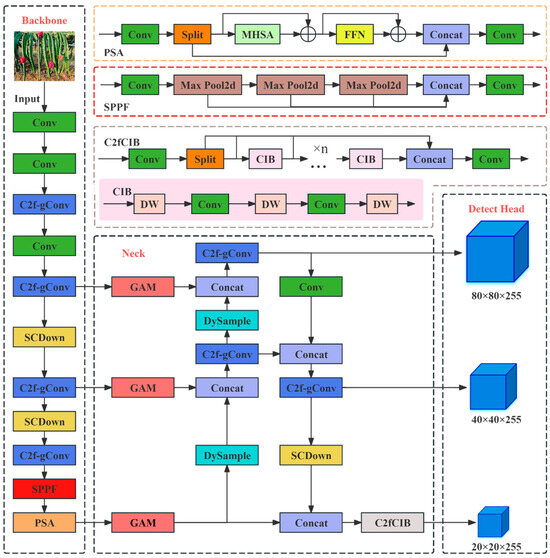
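The following pure-Python sketch illustrates how one metric can drive both heads for a single ground-truth box. It assumes the matching metric takes the form m = s · p^α · IoU^β, where p is the classification score and s is a spatial prior equal to 1 when the prediction’s anchor point lies inside the box; the values α = 0.5, β = 6.0, and top-k = 10 are assumed for illustration. Real assigners operate on tensors for all boxes and images at once.

```python
def matching_metric(score, iou, inside, alpha=0.5, beta=6.0):
    """m = s * p**alpha * IoU**beta, where s = 1 if the prediction's anchor
    point lies inside the ground-truth box and 0 otherwise."""
    s = 1.0 if inside else 0.0
    return s * (score ** alpha) * (iou ** beta)


def dual_assign(candidates, topk=10, alpha=0.5, beta=6.0):
    """Assign predictions to one ground-truth box with both heads.

    candidates: list of (score, iou, inside) tuples, one per prediction.
    Both heads rank predictions with the same metric (consistent matching):
    the one-to-many head keeps the top-k, the one-to-one head keeps the top-1.
    """
    metrics = [matching_metric(p, u, s, alpha, beta) for p, u, s in candidates]
    order = sorted(range(len(metrics)), key=lambda i: metrics[i], reverse=True)
    one_to_many = [i for i in order[:topk] if metrics[i] > 0]
    one_to_one = one_to_many[0] if one_to_many else None
    return one_to_many, one_to_one


if __name__ == "__main__":
    preds = [(0.90, 0.80, True), (0.60, 0.95, True), (0.95, 0.50, False), (0.30, 0.70, True)]
    print(dual_assign(preds, topk=2))  # ([1, 0], 1)
```

The high-confidence prediction at index 2 is never assigned because its anchor lies outside the box, and the one-to-one choice coincides with the best one-to-many choice. This agreement is what allows the one-to-one head to be used alone, without NMS, at inference.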

Additionally, YOLOv10 incorporates holistic optimizations for both efficiency and accuracy. Compared with YOLOv8, the SCDown (spatial-channel decoupled downsampling) module replaces the CBL module in the P4 and P5 layers, and a PSA (partial self-attention) module is integrated to retain more image information at low computational overhead. For the neck layers with smaller feature map scales, the C2f module is replaced by the C2fCIB module, in which the Bottleneck of C2f is substituted with a CIB (compact inverted block). This modification reduces computational redundancy and further simplifies the model.
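To show why decoupling helps, the sketch below counts convolution weights for a single stride-2 k × k convolution versus an SCDown-style block that first applies a 1 × 1 point-wise convolution to change the channel count and then a k × k depth-wise stride-2 convolution to halve the resolution. Bias and BatchNorm parameters are ignored, and the channel widths (128 in, 256 out) are illustrative assumptions rather than values from YOLOv10n.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Number of weights in a k x k convolution (bias and BatchNorm ignored)."""
    return (c_in // groups) * c_out * k * k


def strided_conv_params(c_in, c_out, k=3):
    # Baseline downsampling: one k x k stride-2 conv that mixes channels
    # and halves the spatial resolution at the same time.
    return conv_params(c_in, c_out, k)


def scdown_params(c_in, c_out, k=3):
    # Decoupled downsampling: a 1 x 1 point-wise conv adjusts the channels,
    # then a k x k depth-wise stride-2 conv (groups = c_out) halves H and W.
    return conv_params(c_in, c_out, 1) + conv_params(c_out, c_out, k, groups=c_out)


if __name__ == "__main__":
    c_in, c_out = 128, 256  # illustrative channel widths
    print(strided_conv_params(c_in, c_out), scdown_params(c_in, c_out))  # 294912 35072
```

In this example the decoupled block needs roughly one-eighth of the weights, because the expensive channel mixing is done with a 1 × 1 kernel while the spatial k × k kernel acts on each channel separately.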

As the smallest network in the YOLOv10 series, YOLOv10n more readily satisfies lightweight requirements, making it the basis for improvements in this study.

2.3. YOLOv10n Network Improvements

To address low detection accuracy, slow detection speed, and high parameter counts in dragon fruit detection under natural conditions, this study proposes a lightweight and fast dragon fruit detection method for harvesting robots based on YOLOv10, built on three key optimizations that improve detection performance at reduced computational cost. First, the gConv operation is integrated into the C2f module to construct the C2f-gConv module, reducing computational redundancy during convolution and making the model lighter. Second, the GAM attention mechanism is incorporated into the neck network to enhance its feature extraction and fusion capabilities, enabling the model to better focus on dragon fruit features obscured by branches and thereby improving detection accuracy. Finally, the DySample dynamic upsampler is employed to further improve detection accuracy; it strengthens global feature extraction during upsampling while keeping the parameter count essentially unchanged. The improved model is designated YOLOv10n-CGD, and its structure is illustrated in Figure 4.

Figure 4.

YOLOv10n-CGD model structure. Note: Conv denotes convolution; C2f-gConv denotes the C2f module that introduces gated convolution; GAM denotes the global attention module; DySample denotes the dynamic upsampling module; SCDown denotes spatial-channel decoupled downsampling; PSA denotes the partial self-attention module; DW denotes depth-wise convolution.

To ensure practical deployability, YOLOv10n-CGD is specifically designed for embedded platforms used in agricultural harvesting robots, including mainstream low-power edge devices such as the Jetson Nano and Jetson AGX Orin. Based on empirical deployment tests and official hardware specifications, we formulated the following lightweight design constraints: (1) the model size must remain under 5 MB to accommodate the storage and loading constraints of embedded systems; (2) runtime memory usage must not exceed 1 GB to maintain system stability during concurrent execution of control modules; and (3) single-frame inference latency must remain below 200 ms to satisfy the real-time control requirement of a minimum 5 Hz picking frequency.
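The three constraints can be checked mechanically once size, throughput, and memory have been measured. The sketch below is a hypothetical helper, not part of the authors’ pipeline; it treats 1000 / FPS as the average per-frame latency, which is an approximation if the measured FPS excludes pre- or post-processing.

```python
def latency_ms(fps):
    """Average per-frame time (ms) implied by a measured throughput in FPS."""
    return 1000.0 / fps


def check_constraints(model_mb, fps, memory_gb,
                      max_mb=5.0, max_memory_gb=1.0, min_rate_hz=5.0):
    """Evaluate the three lightweight design constraints.

    A 5 Hz minimum picking frequency corresponds to a 1000 / 5 = 200 ms
    per-frame latency budget.
    """
    budget_ms = 1000.0 / min_rate_hz
    checks = {
        "size": model_mb < max_mb,
        "memory": memory_gb <= max_memory_gb,
        "latency": latency_ms(fps) < budget_ms,
    }
    return all(checks.values()), checks


if __name__ == "__main__":
    # Model size and Jetson Nano FPS are taken from the text; memory_gb is a
    # hypothetical placeholder because runtime memory is not reported here.
    ok, detail = check_constraints(model_mb=4.5, fps=17.2, memory_gb=0.8)
    print(ok, detail, round(latency_ms(17.2), 1))
```

At the reported 17.2 FPS on the Jetson Nano, the implied latency is about 58 ms per frame, well inside the 200 ms budget.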

2.3.1. C2f-gConv Module

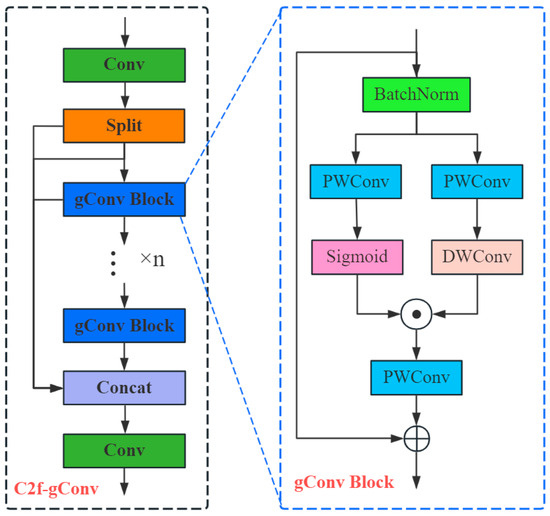

The C2f module in YOLOv10n contains multiple Bottleneck structures; although they extract rich image features, they introduce significant redundancy in channel information. In contrast, Gated Convolution (gConv) from gUNet [21] reduces parameter count and computational load by dividing input feature maps into groups: each group is convolved independently and the results are then aggregated, improving computational efficiency and model speed.

This study constructs the C2f-gConv module by replacing the Bottleneck structure in the main gradient-flow branch of C2f with a gConv Block, implemented by inheriting from the C2f class. This modification enhances feature extraction from input images while significantly reducing computational redundancy. The structure of the C2f-gConv module is illustrated in Figure 5.

Figure 5.

C2f-gConv module structure diagram. Note: PWConv denotes point-wise convolution; DWConv denotes depth-wise convolution; BatchNorm denotes batch normalization; Concat denotes the feature concatenation module; ⊕ denotes the stacking operation; ⊙ denotes the Sigmoid activation operation.

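Let $X \in \mathbb{R}^{C \times H \times W}$ be the input feature. The gConv Block first normalizes it with BatchNorm, $\hat{X} = \mathrm{BN}(X)$, and then processes the normalized feature $\hat{X}$ through two parallel paths: a convolution path, consisting of a point-wise convolution followed by a depth-wise convolution, and a gating path, consisting of a point-wise convolution followed by the Sigmoid function $\sigma$:

$$V = \mathrm{DWConv}\big(\mathrm{PWConv}_v(\hat{X})\big), \qquad G = \sigma\big(\mathrm{PWConv}_g(\hat{X})\big) \tag{1}$$

The gating signal $G$ then adjusts the convolved feature $V$ element-wise to achieve feature fusion:

$$F = V \odot G \tag{2}$$

Finally, a point-wise convolution compresses and adjusts the feature dimensions of the fused feature $F$, and the result is summed with the input feature $X$ to give the final extracted features:

$$Y = X + \mathrm{PWConv}_p(F) \tag{3}$$

where ⊙ denotes element-wise multiplication.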
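The gConv Block steps described above (BatchNorm, a point-wise plus depth-wise convolution path, a sigmoid gating path, element-wise gating, point-wise projection, and a residual sum) can be traced in a minimal pure-Python sketch. It is not the gUNet or authors’ implementation: it assumes a 3 × 3 depth-wise kernel with zero padding, normalization with batch size 1 and no learned affine parameters, no biases, and random illustrative weights.

```python
import math
import random

# Feature maps are nested lists indexed as x[channel][row][col].


def batch_norm(x, eps=1e-5):
    """Per-channel normalization over H x W (batch size 1, no learned affine)."""
    out = []
    for ch in x:
        vals = [v for row in ch for v in row]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        out.append([[(v - mean) / math.sqrt(var + eps) for v in row] for row in ch])
    return out


def pointwise(x, w):
    """1 x 1 convolution: w[o][i] weights input channel i for output channel o."""
    h, wd = len(x[0]), len(x[0][0])
    return [[[sum(w[o][i] * x[i][r][c] for i in range(len(x)))
              for c in range(wd)] for r in range(h)] for o in range(len(w))]


def depthwise3x3(x, k):
    """3 x 3 depth-wise convolution with zero padding; k[ch] is one 3 x 3 kernel."""
    h, wd = len(x[0]), len(x[0][0])
    return [[[sum(k[ch][dr + 1][dc + 1] * x[ch][r + dr][c + dc]
                  for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                  if 0 <= r + dr < h and 0 <= c + dc < wd)
              for c in range(wd)] for r in range(h)] for ch in range(len(x))]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gconv_block(x, w_v, k_dw, w_g, w_p):
    """Y = X + PWConv_p(DWConv(PWConv_v(BN(X))) * sigmoid(PWConv_g(BN(X))))."""
    x_hat = batch_norm(x)
    value = depthwise3x3(pointwise(x_hat, w_v), k_dw)        # convolution path
    gate = pointwise(x_hat, w_g)                             # gating path
    fused = [[[v * sigmoid(g) for v, g in zip(vr, gr)] for vr, gr in zip(vc, gc)]
             for vc, gc in zip(value, gate)]                 # element-wise gating
    proj = pointwise(fused, w_p)                             # point-wise projection
    return [[[a + b for a, b in zip(xr, pr)] for xr, pr in zip(xc, pc)]
            for xc, pc in zip(x, proj)]                      # residual sum


def rand_matrix(n, rng):
    """Hypothetical random n x n point-wise weights for the demo."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    C, H, W = 2, 4, 4
    x = [[[rng.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
    k_dw = [[[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(3)] for _ in range(C)]
    y = gconv_block(x, rand_matrix(C, rng), k_dw, rand_matrix(C, rng), rand_matrix(C, rng))
    print(len(y), len(y[0]), len(y[0][0]))  # 2 4 4
```

The output has the same shape as the input, which is what allows the block to replace a Bottleneck inside C2f. If the projection weights are all zero, the residual path returns the input unchanged.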

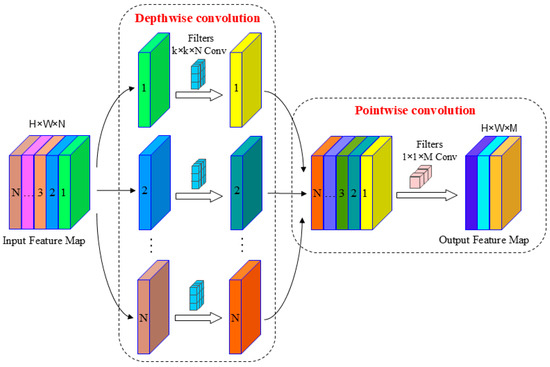

In gConv, a lightweight depthwise separable convolution (DWConv) replaces the standard convolution (Conv) with the same kernel size. The structure of DWConv is shown in Figure 6. Unlike standard convolution, in which every filter spans all input channels, DWConv processes each channel independently, enabling more efficient feature extraction and fusion. DWConv comprises two components: depthwise convolution and pointwise convolution. Depthwise convolution applies a separate kernel to each channel, so its computational cost depends only on the spatial dimensions and the number of input channels. Pointwise convolution then uses 1 × 1 kernels to merge information across all channels, computing weighted sums of the depthwise feature maps to produce the final output feature maps. This approach captures local features within each channel, enables inter-channel information flow, substantially reduces the number of parameters and the computational burden, and yields a lightweight module design.

Figure 6.

DWConv module structure diagram. H and W denote the height and width of the feature map; N and M denote the numbers of input and output channels, respectively.

The ratio of the computational cost of DWConv to that of Conv, denoted $r$, is derived by comparing the two under the condition that the input/output feature map dimensions and the convolution kernel size remain unchanged, as shown in Equation (4). The numerator comprises two terms: the cost of the depthwise step, in which each filter acts on a single input channel, and the cost of the pointwise step, in which each 1 × 1 filter spans all input channels. The denominator is the cost of standard convolution, in which every filter spans all input channels. Here, $D_F$ denotes the height or width of the feature map, $D_F^2$ its spatial size, $D_K$ the height or width of the convolution kernel, $D_K^2$ its spatial size, $N$ the number of input channels, and $M$ the number of output channels.

$$r = \frac{D_K^2 \cdot N \cdot D_F^2 + N \cdot M \cdot D_F^2}{D_K^2 \cdot N \cdot M \cdot D_F^2} = \frac{1}{M} + \frac{1}{D_K^2} \tag{4}$$

The computational cost of DWConv is therefore reduced to $\frac{1}{M} + \frac{1}{D_K^2}$ times that of Conv, where the term $\frac{1}{D_K^2}$ comes from the pointwise convolution and the term $\frac{1}{M}$ from the depthwise convolution. For example, with a 3 × 3 kernel and $M = 64$, $r = 1/64 + 1/9 \approx 0.127$, roughly an eightfold reduction. DWConv thus substantially alleviates the computational burden of the network. Consequently, enhancing the C2f module with gConv built on DWConv further reduces computational redundancy and memory access while keeping model performance stable, making it well suited for deployment on resource-constrained devices.
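The cost ratio in Equation (4) can be checked numerically by counting multiply-accumulate operations directly. This sketch assumes stride 1 and “same” padding, so the output is $D_F \times D_F$; the layer sizes are illustrative. The ratio depends only on $M$ and $D_K$, not on $N$ or $D_F$.

```python
def conv_macs(d_f, d_k, n_in, m_out):
    """Multiply-accumulates of a standard D_K x D_K convolution producing a
    D_F x D_F output (stride 1, 'same' padding)."""
    return d_k * d_k * n_in * m_out * d_f * d_f


def dwconv_macs(d_f, d_k, n_in, m_out):
    """Depth-wise D_K x D_K conv on each input channel plus a 1 x 1 point-wise conv."""
    depthwise = d_k * d_k * n_in * d_f * d_f   # one filter per input channel
    pointwise = n_in * m_out * d_f * d_f       # 1 x 1 filters across all channels
    return depthwise + pointwise


def cost_ratio(d_f, d_k, n_in, m_out):
    """Ratio r of DWConv cost to Conv cost; analytically 1/M + 1/D_K^2."""
    return dwconv_macs(d_f, d_k, n_in, m_out) / conv_macs(d_f, d_k, n_in, m_out)


if __name__ == "__main__":
    r = cost_ratio(d_f=40, d_k=3, n_in=64, m_out=64)
    print(round(r, 4), round(1 / r, 2))  # 0.1267 7.89
```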

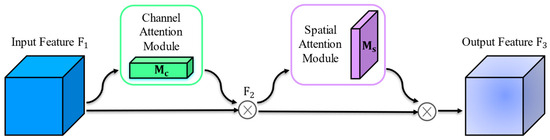

2.3.2. GAM Attention Mechanism

To improve the model’s perception of red dragon fruit features and sharpen its focus on details obscured by branches, this study incorporates the Global Attention Mechanism (GAM) between the backbone network and the feature fusion layer, improving the recognition accuracy of the lightweight network. GAM [22] is a global attention mechanism that builds on the Convolutional Block Attention Module (CBAM); it reduces information loss across dimensions and strengthens global feature interactions, thereby boosting overall model performance. The global attention mechanism enables the model to capture critical image features more effectively, facilitating precise and rapid identification of dragon fruit against complex backgrounds. Figure 7 illustrates the structure of GAM.

Figure 7.

GAM module structure diagram. Note: $F_1$ denotes the input feature; $F_2$ denotes the feature output by the channel attention sub-module; $F_3$ denotes the feature output by the spatial attention sub-module; ⊗ denotes element-wise multiplication.

GAM integrates channel attention and spatial attention sub-modules. The channel attention sub-module applies a three-dimensional permutation to the input, preserving its spatial information, and uses a multilayer perceptron (MLP) to amplify cross-dimensional channel–spatial dependencies, thereby capturing richer nonlinear and combinatorial features. The spatial attention sub-module employs two 7 × 7 convolutional layers to fuse spatial information effectively. By refining the feature map first along the channel dimension and then along the spatial dimension, GAM reinforces key information within the feature map, providing stronger feature representations for the YOLOv10n model and improving its detection accuracy. The GAM process can be expressed by Equations (5) and (6).

$$F_2 = M_c(F_1) \otimes F_1 \tag{5}$$

$$F_3 = M_s(F_2) \otimes F_2 \tag{6}$$

where $F_1$ is the input feature map, $M_c$ and $M_s$ are the channel and spatial attention maps, respectively, and ⊗ denotes element-wise multiplication.
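The two-stage refinement of GAM can be traced in a small pure-Python sketch. It follows the structure described above: a per-position MLP over the channel vector, which is equivalent to permuting to (H, W, C) and applying linear layers, then two 7 × 7 convolutions. For brevity it omits biases and the BatchNorm layers that implementations typically place in the spatial branch. The reduction ratio and weights are caller-supplied assumptions.

```python
import math

# Feature maps are nested lists indexed as x[channel][row][col].


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(x, w1, w2):
    """M_c: permute to (H, W, C), apply an MLP (C -> C/r -> C, ReLU between)
    to each position's channel vector, permute back, then apply a sigmoid."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    att = [[[0.0] * w for _ in range(h)] for _ in range(c)]
    for r in range(h):
        for q in range(w):
            vec = [x[k][r][q] for k in range(c)]
            hidden = [max(0.0, sum(a * b for a, b in zip(row, vec))) for row in w1]
            for k in range(c):
                att[k][r][q] = sigmoid(sum(a * b for a, b in zip(w2[k], hidden)))
    return att


def conv2d_same(x, weight):
    """Convolution with zero padding that keeps H x W; weight[o][i] is a k x k kernel."""
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0][0])
    p = k // 2
    return [[[sum(weight[o][i][a][b] * x[i][r + a - p][q + b - p]
                  for i in range(c_in) for a in range(k) for b in range(k)
                  if 0 <= r + a - p < h and 0 <= q + b - p < w)
              for q in range(w)] for r in range(h)] for o in range(len(weight))]


def spatial_attention(x, k1, k2):
    """M_s: two 7 x 7 convolutions (C -> C/r -> C) with ReLU between, then a sigmoid."""
    mid = [[[max(0.0, v) for v in row] for row in ch] for ch in conv2d_same(x, k1)]
    return [[[sigmoid(v) for v in row] for row in ch] for ch in conv2d_same(mid, k2)]


def multiply(a, b):
    """Element-wise product of two equally shaped feature maps."""
    return [[[u * v for u, v in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(a, b)]


def gam(f1, w1, w2, k1, k2):
    f2 = multiply(channel_attention(f1, w1, w2), f1)   # F2 = Mc(F1) (x) F1
    return multiply(spatial_attention(f2, k1, k2), f2)  # F3 = Ms(F2) (x) F2
```

As a quick sanity check, with all weights set to zero every attention value equals sigmoid(0) = 0.5, so the output is exactly one quarter of the input. With trained weights, the two maps instead scale each position and channel according to its learned importance.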

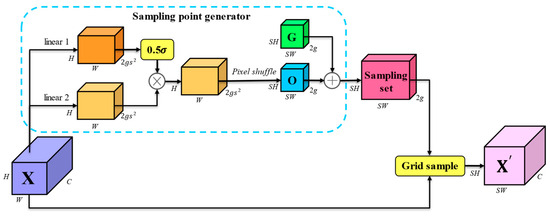

2.3.3. DySample Upsampling

YOLOv10n uses nearest-neighbor interpolation for feature upsampling to improve detection across targets of varying scales. However, this approach enlarges the feature map by copying the values of nearest neighbors, relying on very few surrounding pixels for each prediction. This often produces pixel discontinuities and obscures subtle variations in the captured images. Furthermore, the method demands significant computational resources, compromising the model’s lightweight design for dragon fruit detection. To address these issues, the ultra-lightweight and efficient dynamic upsampler DySample [23] is adopted to replace the original interpolation-based upsampling module in YOLOv10n. DySample enhances the network’s feature extraction through point-based sampling, significantly improving dragon fruit detection performance. Moreover, it avoids complex dynamic convolution, reducing computational overhead and improving overall model efficiency. The architecture of the DySample upsampling module is depicted in Figure 8.

Figure 8.

DySample module structure diagram. Note: X denotes the input feature; X′ denotes the output upsampled feature; σ denotes the sigmoid function; O denotes the dynamic offset; G denotes the original grid.

DySample adjusts its sampling offsets dynamically based on the content of the input feature map, enabling adaptation to diverse feature distributions and data scenarios. To address the challenges of dragon fruit detection in natural environments, we adopt a dynamic range factor to increase the flexibility of the dynamic offsets and mitigate offset overlap. Given an input feature map $X \in \mathbb{R}^{C \times H \times W}$ and an upsampling factor $s$, the dynamic range factor is obtained by applying a linear layer ($\mathrm{linear}_1$) to $X$ followed by a sigmoid function (the constant 0.5 restricts the factor to [0, 0.5]), and it is multiplied by the offset produced by a second linear layer ($\mathrm{linear}_2$). After channel-to-spatial rearrangement (pixel shuffle), this yields the final dynamic offset $O$. The offset is added to the original sampling grid $G$ to form the dynamic sampling set $S$, and the input features are resampled at $S$ with the grid_sample function, producing an upsampled feature map $X' \in \mathbb{R}^{C \times sH \times sW}$. The overall process is described as follows:

$$O = 0.5\,\sigma\big(\mathrm{linear}_1(X)\big) \cdot \mathrm{linear}_2(X) \tag{7}$$

$$S = G + O \tag{8}$$

$$X' = \mathrm{grid\_sample}(X, S) \tag{9}$$

The DySample upsampling method dynamically adjusts the generation and placement of sampling points based on the characteristics of each input feature map. This approach effectively restores low-resolution feature maps to high-resolution representations, enabling more precise localization of small targets, which is particularly important for recognizing small fruits in long-range scenes. Furthermore, compared with traditional interpolation-based upsampling, DySample not only improves detection accuracy but also reduces computational overhead and parameter complexity, consistent with the lightweight design objectives of the model.
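The sampling idea can be illustrated with a minimal pure-Python sketch of point-based upsampling. For each input pixel, two per-pixel linear layers produce a range factor and raw offsets for every sub-pixel. Their product shifts each point of the regular output grid, and the input is then sampled bilinearly at the shifted point. Several simplifications are assumptions here: a single offset group, offsets in input-pixel units, pixel-centre grid alignment, and border clamping. A tensor implementation would use PyTorch’s `torch.nn.functional.pixel_shuffle` and `torch.nn.functional.grid_sample` instead of explicit loops.

```python
import math

# Feature maps are nested lists indexed as x[channel][row][col].


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def bilinear(ch, y, x):
    """Sample one channel at fractional (y, x), clamping coordinates to the border."""
    h, w = len(ch), len(ch[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = ch[y0][x0] * (1 - dx) + ch[y0][x1] * dx
    bottom = ch[y1][x0] * (1 - dx) + ch[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def linear(x, weight, r, q):
    """Per-pixel linear layer (a 1 x 1 conv) applied to the channel vector at (r, q)."""
    return [sum(wi * x[i][r][q] for i, wi in enumerate(row)) for row in weight]


def dysample(x, w_range, w_offset, s=2):
    """Upsample x by s with O = 0.5 * sigmoid(linear1(X)) * linear2(X), S = G + O.

    w_range and w_offset each map C channels to 2 * s * s outputs, i.e. one
    (dy, dx) pair per sub-pixel; indexing them per sub-pixel plays the role
    of the pixel-shuffle rearrangement. Offsets are in input-pixel units.
    """
    c, h, w = len(x), len(x[0]), len(x[0][0])
    out = [[[0.0] * (w * s) for _ in range(h * s)] for _ in range(c)]
    for r in range(h):
        for q in range(w):
            rng = [0.5 * sigmoid(v) for v in linear(x, w_range, r, q)]  # range factor
            raw = linear(x, w_offset, r, q)
            for a in range(s):
                for b in range(s):
                    i = 2 * (a * s + b)
                    oy, ox = rng[i] * raw[i], rng[i + 1] * raw[i + 1]  # offset O
                    # Original grid G: centre of output pixel mapped to input coordinates.
                    gy = (r * s + a + 0.5) / s - 0.5
                    gx = (q * s + b + 0.5) / s - 0.5
                    for k in range(c):  # resample every channel at S = G + O
                        out[k][r * s + a][q * s + b] = bilinear(x[k], gy + oy, gx + ox)
    return out
```

With all `w_offset` weights at zero the offsets vanish and the sketch reduces to ordinary bilinear upsampling. The learned offsets then move individual sampling points away from that regular grid, which is how content-dependent detail, such as fruit boundaries, can be recovered.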

2.4. Model Training and Testing

2.4.1. Test Environment and Parameter Settings

The experiments were conducted on Windows 10 with an NVIDIA GeForce RTX 3070 Ti GPU, an AMD Ryzen 7 5800X 8-core CPU, and 16 GB of memory. The software environment comprised Python 3.9, PyTorch 1.10, and CUDA 12.1. The training hyperparameters are listed in Table 2.

Table 2.

Hyperparameters during training.

2.4.2. Evaluation Indicators

In this study, precision (P), recall (R), and mean average precision (mAP) are used to evaluate detection accuracy and are calculated with Equations (10)–(12). Model complexity is evaluated by the number of parameters, computational load (GFLOPs), and model weight size, while frames per second (FPS) is used to measure real-time detection performance.

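$$P = \frac{TP}{TP + FP} \tag{10}$$

$$R = \frac{TP}{TP + FN} \tag{11}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i, \qquad AP_i = \int_0^1 P_i(R)\,dR \tag{12}$$

where TP is the number of correctly predicted positive samples, FP the number of incorrectly predicted positive samples, FN the number of incorrectly predicted negative samples (missed targets), $AP_i$ the average precision of category $i$, and $N$ the number of categories ($N = 1$ here, since the only category is “df”).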
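To show how precision, recall, and AP follow from ranked detections, here is a pure-Python sketch for a single class. It assumes detections have already been matched to ground truth (True for a TP, False for an FP) and sorted by descending confidence. It uses all-point interpolation of the P-R curve. Training frameworks may use a different interpolation scheme (for example, a 101-point one), so their values can differ slightly.

```python
def precision_recall_ap(tp_flags, num_gt):
    """Precision, recall, and all-point-interpolated AP for one class.

    tp_flags: detections sorted by descending confidence, True if the detection
    matches a previously unmatched ground-truth box (TP), False otherwise (FP).
    num_gt: number of ground-truth boxes, so FN = num_gt - TP.
    """
    tp = fp = 0
    precisions, recalls = [], []
    for hit in tp_flags:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))   # Equation (10)
        recalls.append(tp / num_gt)          # Equation (11): TP / (TP + FN)
    # Pad the curve, then make precision non-increasing as recall grows.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the interpolated P-R curve as a sum of rectangular strips.
    ap = sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
    return precisions[-1], recalls[-1], ap


def mean_ap(per_class_ap):
    """mAP: average of the per-class AP values over the N categories."""
    return sum(per_class_ap) / len(per_class_ap)
```

For example, `precision_recall_ap([True, True, False, True, False], num_gt=4)` returns `(0.6, 0.75, 0.6875)`. With a single category, mAP equals that class’s AP.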

3. Results and Analysis

3.1. Lightweight Network Design

To verify the effectiveness of the lightweight network used in this study, the YOLOv10n backbone was modified with C2f-gConv and, alternatively, with the mainstream lightweight feature extraction backbones MobileNetV4 [24], StarNet [25], FasterNet [26], and EfficientViT [27], and the resulting models were compared on the dragon fruit dataset, as shown in Table 3.

Table 3.

Comparison of lightweight feature extraction backbone networks.

As shown in Table 3, although StarNet has the fewest parameters and floating-point operations, its precision, recall, and mAP decline by 3.4, 1.5, and 1.6 percentage points, respectively, compared with YOLOv10n. MobileNetV4 and EfficientViT, despite their larger parameter counts and floating-point operations, do not significantly improve the model’s precision, recall, or mAP. FasterNet improves precision, recall, and mAP by 0.9, 0.8, and 1.5 percentage points relative to YOLOv10n; however, it requires substantially more parameters and floating-point operations, resulting in an overly large model. By contrast, the C2f-gConv model, at only 4.5 MB, can be deployed readily on mobile platforms, and it improves precision, recall, and mAP by 1.2, 0.7, and 1.7 percentage points compared with YOLOv10n, enhancing dragon fruit detection accuracy while reducing parameters and computational complexity. Adopting C2f-gConv is therefore a practical and effective way to obtain a lightweight model.

3.2. Comparison of Detection Performance of Different Attention Mechanisms

To verify the effect of the GAM attention mechanism on the YOLOv10n model, the CBAM [28], EMA [29], and ECA [30] attention mechanisms were added at the same position and compared with GAM, as shown in Table 4.

Table 4.

Results of comparison of different attention mechanisms.

As shown in Table 4, YOLOv10n+GAM achieves the highest precision and mAP among the attention variants, with only marginally lower recall than YOLOv10n+CBAM. Compared with YOLOv10n, YOLOv10n+GAM improves precision, recall, and mAP by 2.8, 1.9, and 1.9 percentage points, respectively, at the cost of a slight reduction in detection speed. Compared with YOLOv10n+EMA, it improves precision, recall, and mAP by 3.1, 2.7, and 2.6 percentage points, respectively, and compared with YOLOv10n+ECA, by 1.4, 1.4, and 0.8 percentage points. In summary, incorporating GAM significantly enhances the model’s feature extraction, improving its ability to recognize dragon fruits partially obscured by branches and thus its overall detection accuracy.

3.3. Ablation Experiment

This study extends the original YOLOv10n model by incorporating the C2f-gConv module, a Global Attention Mechanism, and a DySample upsampling operator to improve object detection performance under complex environmental conditions. To evaluate the individual contribution of each module to overall performance, systematic ablation experiments were conducted. Each configuration was trained independently five times using distinct random seeds, with all other training settings held constant. The mAP was used as the primary evaluation metric, and paired t-tests were conducted to assess the statistical significance of performance differences between the baseline and enhanced models. The results of the ablation experiments are summarized in Table 5.
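The paired t-test on five seeded runs can be sketched with the Python standard library alone. `scipy.stats.ttest_rel` returns the statistic together with an exact p-value. This stdlib-only version instead compares |t| with the tabulated two-sided critical value of Student’s t at α = 0.05 with 4 degrees of freedom (about 2.776). Pairing runs by seed index is an assumption about how the runs were matched, and the function names are hypothetical.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic for matched runs (same seed index in a and b)."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = statistics.mean(diffs)
    sd = statistics.stdev(diffs)          # sample standard deviation (n - 1)
    return mean / (sd / math.sqrt(n)), n - 1


# Two-sided critical value of Student's t at alpha = 0.05 with 4 degrees of
# freedom (five paired runs); |t| above it corresponds to p < 0.05.
T_CRIT_DF4 = 2.776


def significant(a, b, t_crit=T_CRIT_DF4):
    """Return (is_significant, t, degrees_of_freedom) for paired samples a, b."""
    t, df = paired_t(a, b)
    return abs(t) > t_crit, t, df
```

In use, `a` would hold the five per-seed mAP values of an enhanced configuration and `b` those of the baseline. The critical value must be changed if the number of runs differs from five.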

Table 5.

Ablation experiment results.

As shown in Table 5, integrating gConv into the C2f module of YOLOv10n markedly reduced both model parameters and computational overhead, while mAP increased by an average of 1.3 percentage points. This improvement is attributed to gConv combining the benefits of standard convolution and depthwise separable convolution, which strengthens inter-channel interactions and improves feature modeling efficiency at low computational cost. In orchard environments, small distant targets often become indistinct in deeper feature maps; gConv’s enhanced perceptual capability helps preserve such information, mitigating feature degradation. Adding GAM slightly increased model size but notably improved precision, recall, and mAP, by 3.4, 1.5, and 2.6 percentage points on average, respectively. These gains are primarily due to GAM’s global modeling through spatial–channel fusion, which directs the network’s attention to semantically rich regions such as fruit edges and crowns, improving the detection of partially occluded fruits and reducing branch interference. Finally, replacing the conventional upsampling module with DySample further improved precision, recall, and mAP, by 3.8, 2.7, and 3.0 percentage points on average, respectively. This is attributed to DySample’s ability to reconstruct object boundary details more accurately through learned, content-dependent sampling offsets, which benefits the detection of irregularly shaped dragon fruits. Furthermore, all improved variants showed statistically significant mAP gains (p < 0.05) relative to the baseline YOLOv10n, confirming the effectiveness and robustness of the proposed modifications.

With gConv, GAM, and DySample integrated simultaneously, the model’s parameter count was reduced to 1.7 M and its computational load to 4.8 GFLOPs. The model size was compressed to 4.5 MB, while the detection frame rate rose to 125.6 FPS with an mAP of 98.1%. These results show that high detection precision is retained while real-time performance and computational efficiency are further improved. Although all pairwise combinations of the three enhancements improved the network to varying extents, none matched the overall detection performance of YOLOv10n-CGD. The GAM + DySample configuration yielded a marginally higher mAP (98.3%), but at a significantly greater parameter count, model size, and computational complexity than YOLOv10n-CGD, and YOLOv10n-CGD outperformed it in both precision and recall, reflecting superior robustness and generalization capacity. Considering accuracy, inference speed, model compactness, and operational stability together, YOLOv10n-CGD delivers the most balanced performance in complex field environments and is therefore well suited to the dual demands of rapid detection and efficient deployment in practical agricultural applications.

3.4. Comparison Test of Different Target Detection Models

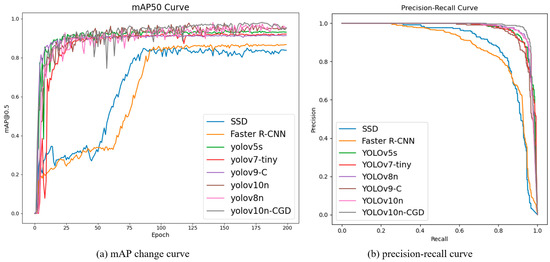

The improved YOLOv10n-CGD model was compared with mainstream object detection models: SSD, Faster R-CNN, YOLOv5s, YOLOv7-tiny, YOLOv8n, YOLOv9-C, and the baseline YOLOv10n. The YOLO-series models were trained with the parameter settings listed in Table 2. SSD and Faster R-CNN used their default official training configurations because of framework constraints. To keep the comparison as fair as possible, all models were trained for the same number of epochs and evaluated on the same dataset. The results are summarized in Table 6.

Table 6.

Comparison of test results for different detection models.

As shown in Table 6, YOLOv10n-CGD achieves a precision, recall, and mAP of 97.1%, 95.7%, and 98.1%, respectively, the highest among the compared models. Its mAP exceeds that of SSD, Faster R-CNN, YOLOv5s, YOLOv7-tiny, YOLOv8n, YOLOv9-C, and YOLOv10n by 14.2, 12.9, 4.9, 5.4, 2.5, 6.7, and 3.0 percentage points, respectively. In terms of speed, YOLOv10n-CGD runs at 125.6 FPS, a clear advantage over the other models. Its weight file is only 4.5 MB, the smallest of all compared models, which makes it well suited to mobile deployment. Overall, YOLOv10n-CGD outperforms the other mainstream networks in model size, detection accuracy, and speed, enabling fast and accurate dragon fruit detection in natural environments.

Figure 9 presents the mAP curves recorded during training and the P-R curves of each model. Figure 9a shows that the mAP of YOLOv10n-CGD stabilizes and surpasses that of the other detection models at around epoch 125, with no apparent signs of overfitting or underfitting. As illustrated in Figure 9b, the area under the P-R curve of YOLOv10n-CGD is closest to 1, indicating the best detection performance among the compared models.
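
The "area under the P-R curve" used to compare models can be computed as sketched below. The sketch computes all-point interpolated average precision for a single class. It assumes detections have already been matched to ground truth (for example at IoU ≥ 0.5), so each detection is flagged as a true or false positive; mAP is the mean of this value over classes. The evaluation code behind Table 6 and Figure 9 may use a different interpolation scheme (for example, 101-point sampling), so values need not match exactly. The function name is hypothetical.

```python
from typing import List, Sequence


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection.
    is_tp: whether each detection was matched to a ground-truth box upstream.
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall: List[float] = []
    precision: List[float] = []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    # Sentinels so the curve starts at recall 0 and ends at recall 1.
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas wherever recall changes.
    return sum(
        (mrec[k + 1] - mrec[k]) * mpre[k + 1]
        for k in range(len(mrec) - 1)
        if mrec[k + 1] != mrec[k]
    )
```

A detector that finds every object before producing any false positive reaches AP = 1, which corresponds to a P-R curve hugging the top-right corner.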

Figure 9.

Training curves of different object detection models: (a) mAP curves; (b) P-R curves.

3.5. Visualization Results of Different Target Detection Models

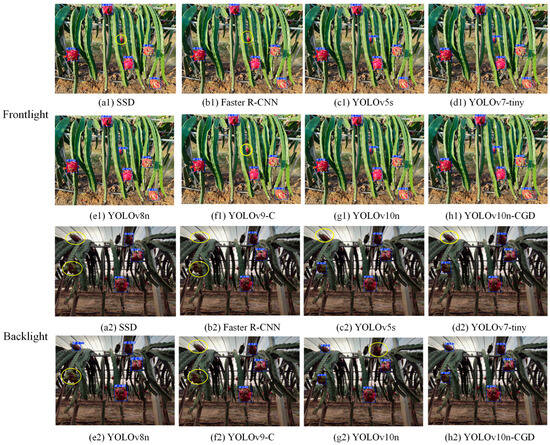

To further validate the detection performance of YOLOv10n-CGD on dragon fruit, the detection networks from Section 3.4 were compared under different lighting and occlusion conditions. Figure 10 shows the detection results of each algorithm under different lighting conditions. Under front lighting, SSD, Faster R-CNN, and YOLOv9-C fail to detect small, distant fruits. The other networks detect the dragon fruits, but with slightly lower accuracy than YOLOv10n-CGD. Under backlighting, the low brightness reduces the color contrast between the dragon fruits and the background. As a result, Faster R-CNN, YOLOv5s, YOLOv8n, and YOLOv10n show varying degrees of missed detections and false positives. In contrast, YOLOv10n-CGD remains accurate under both lighting conditions.

Figure 10.

Recognition results of different models in front-lit and backlit environments. Note: the blue boxes indicate correctly identified fruits; the yellow circles indicate missed or unrecognized fruits.

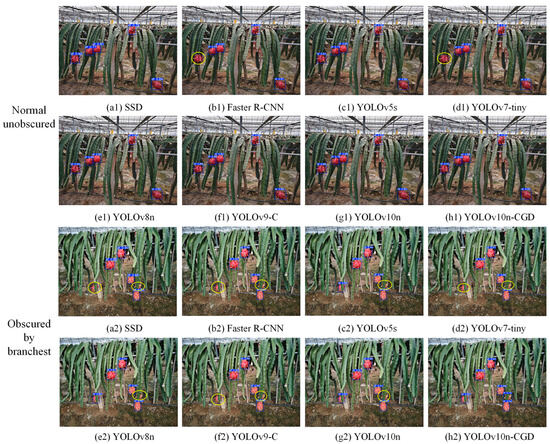

Figure 11 shows the detection results of each network when fruits are partially occluded by branches. As shown in Figure 11(a2,b2,d2,f2), SSD, Faster R-CNN, YOLOv7-tiny, and YOLOv9-C each miss two fruits. YOLOv5s, YOLOv8n, and YOLOv10n each miss one fruit (Figure 11(c2,e2,g2)). In contrast, YOLOv10n-CGD performs best under occlusion and maintains consistent accuracy across the tested scenes.

Figure 11.

Recognition results of different models with and without occlusion. Note: the blue boxes indicate correctly identified fruits; the yellow circles indicate missed or unrecognized fruits.

3.6. Robustness Evaluation of the Model in Complex Environments

To comprehensively assess the robustness of the proposed YOLOv10n-CGD model in real-world complex environments, we constructed test subsets under varying lighting conditions and occlusion scenarios and conducted comparative experiments to verify its adaptability for practical applications.

3.6.1. Robustness Under Varying Lighting Conditions

To evaluate the model’s robustness under complex lighting, the test images were manually annotated and categorized according to the lighting direction during image acquisition. Two representative subsets were created, a front-light subset and a backlight subset, each containing 100 images for performance evaluation.

Both the baseline YOLOv10n and the improved YOLOv10n-CGD were evaluated on the two subsets using three standard metrics: precision, recall, and mAP. To ensure a reliable comparison, each model was trained and tested five times with different random seeds and evaluated on both subsets. A paired t-test was then applied to the mAP results to assess the statistical significance of the differences. The results are presented in Table 7.
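
With five seeds per model, the paired t-test reduces to a t statistic on the per-seed mAP differences with 4 degrees of freedom. The sketch below assumes the runs are paired by seed. Instead of computing an exact p-value, it compares |t| with the tabulated two-sided critical values for df = 4: 2.776 at α = 0.05 and 4.604 at α = 0.01. If SciPy is available, `scipy.stats.ttest_rel` returns the exact p-value. The mAP values in the usage lines are invented for illustration and are not taken from Table 7. The function names are hypothetical.

```python
import math
import statistics
from typing import Sequence

# Two-sided critical values of Student's t for df = 4 (five paired runs).
T_CRIT_DF4 = {0.05: 2.776, 0.01: 4.604}


def paired_t_statistic(baseline: Sequence[float], improved: Sequence[float]) -> float:
    """t statistic of the paired differences (improved - baseline)."""
    if len(baseline) != len(improved) or len(baseline) < 2:
        raise ValueError("need two equally long sequences with at least two pairs")
    diffs = [b - a for a, b in zip(baseline, improved)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


def significant_df4(t: float, alpha: float = 0.05) -> bool:
    """Two-sided decision for exactly five pairs using the tabulated critical value."""
    return abs(t) > T_CRIT_DF4[alpha]


if __name__ == "__main__":
    # Hypothetical mAP (%) for five seeds; NOT the values behind Table 7.
    base = [92.0, 92.5, 91.8, 92.3, 92.1]
    cgd = [94.8, 95.1, 94.6, 95.0, 94.7]
    t = paired_t_statistic(base, cgd)
    print(f"t = {t:.1f}, significant at 0.01: {significant_df4(t, 0.01)}")
```

Pairing by seed removes the run-to-run variation that both models share. This is why a consistent gain of a few points can be significant even with only five runs.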

Table 7.

Comparison of model performance under different lighting conditions.

As shown in Table 7, under front-light conditions, YOLOv10n-CGD achieved an average improvement of 2.7 percentage points in mAP compared to the baseline model. Under backlight conditions, the improvement was even more pronounced, reaching 3.5 percentage points. In both scenarios, the improvements were statistically significant (p < 0.05), indicating that the proposed enhancements effectively improve the model’s feature extraction capability and robustness against illumination variability in complex environments.

3.6.2. Performance Evaluation on the Occlusion Subset

To assess the robustness of the improved model under complex occlusion, an occlusion subset was constructed from the test dataset. It consists of images in which dragon fruit targets are partially obscured by foreground branches. An image was considered severely occluded, and included in the subset, if manual assessment showed that branches covered more than 30% of a fruit’s bounding-box area. In other words, the ratio of the occluded area to the target box area had to exceed 0.3. In total, 100 representative occluded images were selected for performance evaluation.
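
The 30% criterion normalizes the occluded area by the fruit’s own bounding box. This is not the same quantity as IoU, which also counts the occluder’s area in its denominator. As a result, a long branch crossing a fruit can cover a large share of the fruit while producing a small IoU. The sketch below contrasts the two measures, assuming both fruit and occluder are approximated by axis-aligned boxes (the paper’s assessment was manual). All names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def intersection(a: Box, b: Box) -> float:
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)


def occlusion_ratio(fruit: Box, occluder: Box) -> float:
    """Share of the fruit box covered by the occluder (normalized by fruit area only)."""
    fruit_area = area(fruit)
    if fruit_area == 0:
        raise ValueError("fruit box has zero area")
    return intersection(fruit, occluder) / fruit_area


def iou(a: Box, b: Box) -> float:
    """Intersection over union: the occluder's area also enters the denominator."""
    inter = intersection(a, b)
    return inter / (area(a) + area(b) - inter)
```

Consider a 10 × 10 fruit box crossed along its top edge by a 30 × 4 branch box. The occlusion ratio is 0.4, above the 0.3 threshold, while the IoU is only 40/180 ≈ 0.22. The two measures coincide only when the occluder lies entirely inside the fruit box.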

On this subset, we evaluated and compared the detection performance of the baseline YOLOv10n model and the improved YOLOv10n-CGD model. To verify the statistical significance of the observed performance differences, a paired t-test was conducted on the key metric, mAP. The evaluation results are summarized in Table 8.

Table 8.

Performance comparison of models under the occlusion subset.

As shown in Table 8, YOLOv10n-CGD outperformed the baseline on all evaluated metrics, with average improvements of 2.8, 3.8, and 3.9 percentage points in precision, recall, and mAP, respectively. The mAP improvement was statistically significant (p < 0.01), indicating that the gain is unlikely to be due to random variation. These results suggest that the proposed modifications, in particular the GAM attention mechanism, make the model more responsive to partially occluded targets. They also substantially reduce the performance degradation caused by branch occlusion and improve robustness in real-world orchard environments.

3.7. Model Deployment on Mobile Devices and System Integration Analysis

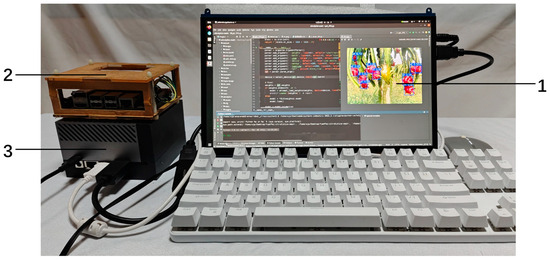

This test had two aims: to validate the deployment performance of YOLOv10n-CGD on embedded mobile devices, and to evaluate how each enhancement module affects efficiency. The detection frame rates of the various models were measured on two edge computing devices, the Jetson AGX Orin and the Jetson Nano. The test platform is shown in Figure 12, and the frame rate results are listed in Table 9.
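
A frame-rate measurement of the kind reported in Table 9 typically times sequential single-frame inference after a few warm-up runs. The sketch below assumes batch size 1 and a hypothetical `infer` callable that processes one frame end to end. The text does not state whether the reported figures include pre-processing and post-processing, so the harness leaves that choice to the caller. When `infer` wraps a PyTorch model on a CUDA device such as a Jetson, it should call `torch.cuda.synchronize()` before returning. GPU kernels run asynchronously, and without synchronization the timer would stop early.

```python
import time
from typing import Callable, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def measure_fps(
    infer: Callable[[Frame], object], frames: Sequence[Frame], warmup: int = 10
) -> Tuple[float, float]:
    """Return (frames per second, mean latency in ms) for sequential inference."""
    if not frames:
        raise ValueError("frames must not be empty")
    # Warm-up runs (lazy initialization, caches, clock ramp-up) are not timed.
    for i in range(min(warmup, len(frames))):
        infer(frames[i])
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed, 1000.0 * elapsed / len(frames)
```

Reporting FPS and mean latency from the same timed loop keeps the two numbers consistent, since latency is simply the inverse of throughput for sequential processing.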

Figure 12.

Embedded device test setup. Note: 1, test interface; 2, Jetson Nano device; 3, Jetson AGX Orin device.

Table 9.

Detection frame rates of different models deployed on Jetson devices.

Table 9 shows that the C2f-gConv module contributes most to the increase in detection frame rate. It raises the frame rate by 14.1 FPS on the Jetson AGX Orin and 6.5 FPS on the Jetson Nano, reflecting its role in improving operational efficiency and reducing computational resource consumption. The DySample module has a relatively minor effect on inference speed. The GAM attention mechanism, although beneficial for accuracy, slightly lowers the frame rate because of its additional computational overhead. The integrated YOLOv10n-CGD model reaches 44.9 FPS on the Jetson AGX Orin and 17.2 FPS on the Jetson Nano, improvements of 12.2 FPS and 5.6 FPS, respectively, over the baseline YOLOv10n. These gains are smaller than those of C2f-gConv alone, so the speed cost of the accuracy-oriented modules is more than offset by the efficiency of C2f-gConv, and the combined design remains faster than the baseline in embedded deployment. The deployed model also runs stably on both devices, with memory usage well within the hardware limits. On the Jetson Nano, the average single-frame inference latency derived from the frame rate (1000/17.2) is approximately 58.1 ms, well below the 200 ms threshold required for real-time robotic control. These findings show that YOLOv10n-CGD maintains high detection performance on edge devices while offering real-time processing capability and stable deployment. This provides technical support for efficient perception and robotic harvesting systems for dragon fruit in practical agricultural applications.
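
The latency figure quoted above follows from t = 1000 / FPS. The short script below applies that relation to the frame rates and gains stated in the text and also recovers the implied baseline YOLOv10n rates. It only re-derives numbers already given, and it assumes per-frame latency equals the inverse of the sustained frame rate (no pipelining).

```python
def latency_ms(fps: float) -> float:
    """Mean per-frame latency implied by a sustained frame rate."""
    return 1000.0 / fps


# Values stated in the discussion of Table 9.
cgd_fps = {"Jetson AGX Orin": 44.9, "Jetson Nano": 17.2}
gain_over_baseline = {"Jetson AGX Orin": 12.2, "Jetson Nano": 5.6}

for device, fps in cgd_fps.items():
    base = fps - gain_over_baseline[device]  # implied YOLOv10n frame rate
    print(
        f"{device}: CGD {fps:.1f} FPS ({latency_ms(fps):.1f} ms), "
        f"baseline {base:.1f} FPS ({latency_ms(base):.1f} ms)"
    )
```

This gives roughly 22.3 ms and 58.1 ms for YOLOv10n-CGD on the Jetson AGX Orin and Jetson Nano. The implied baseline rates are about 32.7 FPS (30.6 ms) and 11.6 FPS (86.2 ms).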

Although YOLOv10n-CGD performs well on embedded mobile devices, practical deployment in agricultural scenarios requires integration with additional functional modules, including 3D localization and robotic arm control. In a complete automated harvesting pipeline, the detection model first outputs 2D bounding boxes. These are fused with depth images using the camera calibration parameters to estimate the 3D coordinates of each target fruit. The coordinates then serve as inputs to the robotic arm control module for path planning and grasp execution. The harvesting system adopts a modular architecture that supports closed-loop processing on edge computing platforms, covering image acquisition, target detection, 3D localization, and transmission of control commands. The system is designed to detect multiple fruits simultaneously and plan sequential harvesting actions, addressing the real-time and coordination requirements of automated agricultural operations. YOLOv10n-CGD is therefore well positioned to serve as the perception component of intelligent harvesting systems for high-value crops such as dragon fruit.
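
The 2D-to-3D step described above is usually a pinhole back-projection. For a pixel (u, v) with depth Z and calibrated intrinsics fx, fy, cx, cy, the camera-frame point is X = (u − cx)Z/fx, Y = (v − cy)Z/fy. The sketch below rests on several assumptions: the depth image is already registered to the colour image, the box centre is the target pixel, and depth is the median valid value in the central half of the box, which reduces the influence of background and branch pixels. That heuristic, the function name, and the `Intrinsics` type are illustrative, not the authors’ implementation. The hand-eye transform into the robot base frame is not shown.

```python
import statistics
from typing import List, NamedTuple, Optional, Tuple


class Intrinsics(NamedTuple):
    fx: float  # focal length in pixels along x
    fy: float  # focal length in pixels along y
    cx: float  # principal point x (pixels)
    cy: float  # principal point y (pixels)


def box_to_camera_xyz(
    box: Tuple[float, float, float, float],
    depth: List[List[float]],
    k: Intrinsics,
    shrink: float = 0.5,
) -> Optional[Tuple[float, float, float]]:
    """Back-project a detection box centre to camera coordinates (pinhole model).

    depth[row][col] holds metric depth aligned to the colour image; 0 marks an
    invalid pixel. Returns None if the sampled region has no valid depth.
    """
    x1, y1, x2, y2 = box
    u, v = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    half_w, half_h = shrink * (x2 - x1) / 2.0, shrink * (y2 - y1) / 2.0
    rows, cols = len(depth), len(depth[0])
    # Central region of the box, clamped to the image and at least one pixel wide.
    c0 = max(0, min(int(u - half_w), cols - 1))
    c1 = max(c0 + 1, min(int(u + half_w), cols))
    r0 = max(0, min(int(v - half_h), rows - 1))
    r1 = max(r0 + 1, min(int(v + half_h), rows))
    samples = [depth[r][c] for r in range(r0, r1) for c in range(c0, c1) if depth[r][c] > 0]
    if not samples:
        return None
    z = statistics.median(samples)
    # Pinhole back-projection: X = (u - cx) Z / fx, Y = (v - cy) Z / fy.
    return ((u - k.cx) * z / k.fx, (v - k.cy) * z / k.fy, z)
```

Using the median rather than the mean keeps a few branch or background pixels inside the box from pulling the estimated depth away from the fruit surface.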

4. Conclusions

This study proposes a lightweight and fast dragon fruit detection method for harvesting robots operating in natural environments. The conclusions drawn from the ablation experiments and the comparisons with other object detection models are as follows:

(1) The proposed YOLOv10n-CGD model achieves a mean precision, mean recall, and mAP of 97.3%, 95.8%, and 98.2%, respectively. These values exceed those of the original YOLOv10n model by 4.1, 2.9, and 3.1 percentage points.

(2) The improved model maintains superior detection performance under varying lighting and occlusion conditions. Compared with SSD, Faster R-CNN, YOLOv5s, YOLOv8n, and other algorithms, it offers clear advantages in model size, detection accuracy, and speed, enabling efficient and accurate dragon fruit detection in natural environments.

(3) The improved model reduces the weight file size by 22.4% (from 5.8 MB to 4.5 MB). It achieves detection frame rates of 44.9 FPS and 17.2 FPS on the Jetson AGX Orin and Jetson Nano edge computing devices, respectively. Its deployment performance surpasses that of the YOLOv10n baseline, meeting the requirements of lightweight embedded deployment.

The proposed YOLOv10n-CGD model achieves high-precision and real-time detection of dragon fruit in complex orchard environments and demonstrates strong potential for deployment on embedded mobile platforms. However, the model still faces several limitations. The dataset used in this study was collected from specific regions and time periods, lacking diversity in terms of seasons, orchard conditions, and fruit varieties, which limits the generalization capability of the model. Moreover, since all images were acquired using the same type of camera, the model may have implicitly learned sensor-specific imaging characteristics, potentially impacting its performance when deployed across different devices.

To address these limitations, future research will focus on collecting multi-temporal and multi-regional datasets using various camera types in orchards across different geographic locations. We will also explore domain adaptation strategies—such as unsupervised feature alignment—to mitigate performance degradation under cross-orchard and cross-device scenarios, thereby enhancing the model’s cross-domain robustness. In addition, we plan to incorporate active learning techniques to iteratively select and annotate challenging samples, further improving the model’s performance in scenarios with severe occlusion or suboptimal lighting conditions. Finally, efforts will be made to realize end-to-end deployment of the model on agricultural robotic platforms and conduct extensive field validation to support real-world applications in intelligent harvesting systems.

Author Contributions

Conceptualization, J.W. and C.F.; methodology, F.Y.; software, F.Y.; validation, J.W., W.D. and C.F.; formal analysis, H.Z.; investigation, W.D., S.M. and S.C.; resources, J.W. and W.D.; data curation, C.F.; writing—original draft preparation, F.Y.; writing—review and editing, F.Y.; visualization, F.Y.; supervision, S.M. and S.C.; project administration, W.D., S.M. and H.Z.; funding acquisition, H.Z. and J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by three sources: the Emergency Science and Technology Project of Research on High-Efficiency Management Operational Equipment for Plantations (2024YFD2200702); the Key R&D Program of Jiangsu Modern Agricultural Machinery Equipment and Technology Promotion Project (NJ2021-18); and the National Key R&D Program of the 14th Five-Year Plan (2022YFD2202105-1).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author.

Acknowledgments

In addition to the funding received, we thank Lile Agriculture, Nanjing City, Jiangsu Province, for providing the fruit data.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Shah, K.; Chen, J.; Chen, J.; Qin, Y. Pitaya nutrition, biology, and biotechnology: A review. Int. J. Mol. Sci. 2023, 24, 13986.

- Zhou, H.; Wang, X.; Au, W.; Kang, H.; Chen, C. Intelligent robots for fruit harvesting: Recent developments and future challenges. Precis. Agric. 2022, 23, 1856–1907.

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Automation in agriculture by machine and deep learning techniques: A review of recent developments. Precis. Agric. 2021, 22, 2053–2091.

- Tu, S.; Huang, Y.; Liang, Y.; Liu, H.; Cai, Y.; Lei, H. A passion fruit counting method based on the lightweight YOLOv5s and improved DeepSORT. Precis. Agric. 2024, 25, 1731–1750.

- Hou, C.; Xu, J.; Tang, Y.; Zhuang, J.; Tan, Z.; Chen, W.; Wei, S.; Huang, H.; Fang, M. Detection and localization of citrus picking points based on binocular vision. Precis. Agric. 2024, 25, 2321–2355.

- Nan, Y.; Zhang, H.; Zeng, Y.; Zheng, J.; Ge, Y. Faster and accurate green pepper detection using NSGA-II-based pruned YOLOv5l in the field environment. Comput. Electron. Agric. 2023, 205, 107563.

- Bhatti, U.A.; Tang, H.; Wu, G.; Marjan, S.; Hussain, A. Deep learning with graph convolutional networks: An overview and latest applications in computational intelligence. Int. J. Intell. Syst. 2023, 2023, 8342104.

- Gui, J.; Wu, D.; Xu, H.; Chen, J.; Tong, J. Tea bud detection based on multi-scale convolutional block attention module. J. Food Process Eng. 2024, 47, e14556.

- Ji, W.; Gao, X.; Xu, B.; Pan, Y.; Zhang, Z.; Zhao, D. Apple target recognition method in complex environment based on improved YOLOv4. J. Food Process Eng. 2021, 44, e13866.

- Liu, P.; Yin, H. YOLOv7-Peach: An algorithm for immature small yellow peaches detection in complex natural environments. Sensors 2023, 23, 5096.

- Gai, R.; Liu, Y.; Xu, G. TL-YOLOv8: A blueberry fruit detection algorithm based on improved YOLOv8 and transfer learning. IEEE Access 2024, 12, 86378–86390.

- Jiang, M.; Song, L.; Wang, Y.; Li, Z.; Song, H. Fusion of the YOLOv4 network model and visual attention mechanism to detect low-quality young apples in a complex environment. Precis. Agric. 2022, 23, 559–577.

- Zhou, J.; Zhang, Y.; Wang, J. RDE-YOLOv7: An improved model based on YOLOv7 for better performance in detecting dragon fruits. Agronomy 2023, 13, 1042.

- Nan, Y.; Zhang, H.; Zeng, Y.; Zheng, J.; Ge, Y. Intelligent detection of Multi-Class pitaya fruits in target picking row based on WGB-YOLO network. Comput. Electron. Agric. 2023, 208, 107780.

- Qiu, Z.; Huang, Z.; Mo, D.; Tian, X.; Tian, X. GSE-YOLO: A Lightweight and High-Precision Model for Identifying the Ripeness of Pitaya (Dragon Fruit) Based on the YOLOv8n Improvement. Horticulturae 2024, 10, 852.

- Li, X.; Wang, X.; Ong, P.; Yi, Z.; Ding, L.; Han, C. Fast Recognition and Counting Method of Dragon Fruit Flowers and Fruits Based on Video Stream. Sensors 2023, 23, 8444.

- Wang, C.; Wang, C.; Wang, L.; Li, Y.; Lan, Y. Real-Time Tracking Based on Improved YOLOv5 Detection in Orchard Environment for Dragon Fruit. J. ASABE 2023, 66, 1109–1124.

- Huang, L.; Chen, M.; Peng, Z. YOLOv8-G: An Improved YOLOv8 Model for Major Disease Detection in Dragon Fruit Stems. Sensors 2024, 24, 5034.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Guan, S.; Lin, Y.; Lin, G.; Su, P.; Huang, S.; Meng, X.; Liu, P.; Yan, J. Real-time detection and counting of wheat spikes based on improved YOLOv10. Agronomy 2024, 14, 1936.

- Song, Y.; Zhou, Y.; Qian, H.; Du, X. Rethinking performance gains in image dehazing networks. arXiv 2022, arXiv:2209.11448.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to upsample by learning to sample. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 6027–6037.

- Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B.; et al. MobileNetV4: Universal Models for the Mobile Ecosystem. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2025; pp. 78–96.

- Ngiam, J.; Caine, B.; Han, W.; Yang, B.; Chai, Y.; Sun, P.; Zhou, Y.; Yi, X.; Alsharif, O.; Nguyen, P.; et al. StarNet: Targeted computation for object detection in point clouds. arXiv 2019, arXiv:1908.11069.

- Chen, J.; Kao, S.H.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 12021–12031.

- Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. EfficientViT: Memory efficient vision transformer with cascaded group attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 14420–14430.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE Press: Piscataway, NJ, USA, 2023; pp. 1–5.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).