A Lightweight Citrus Object Detection Method in Complex Environments

Abstract

1. Introduction

- (1)

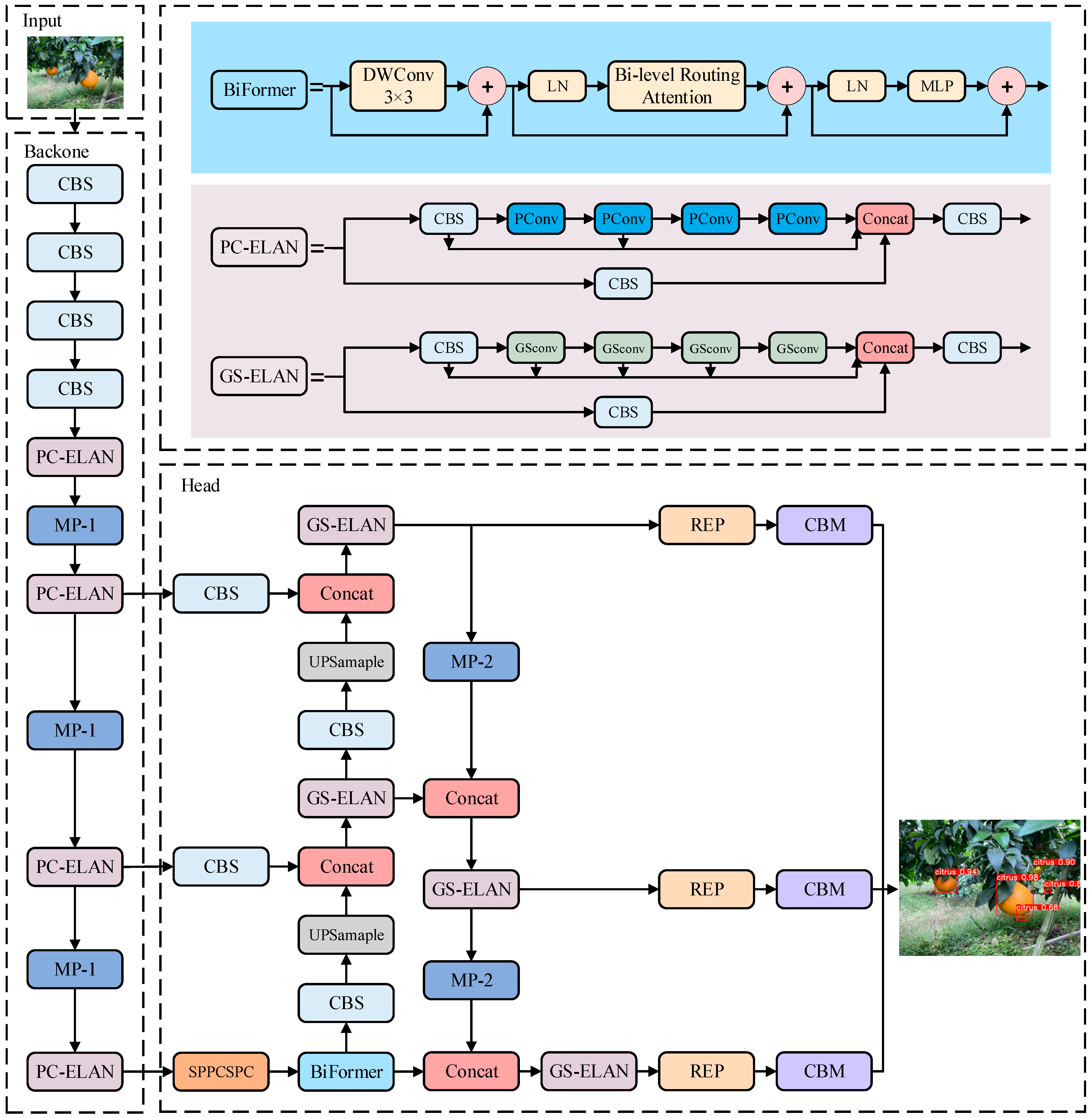

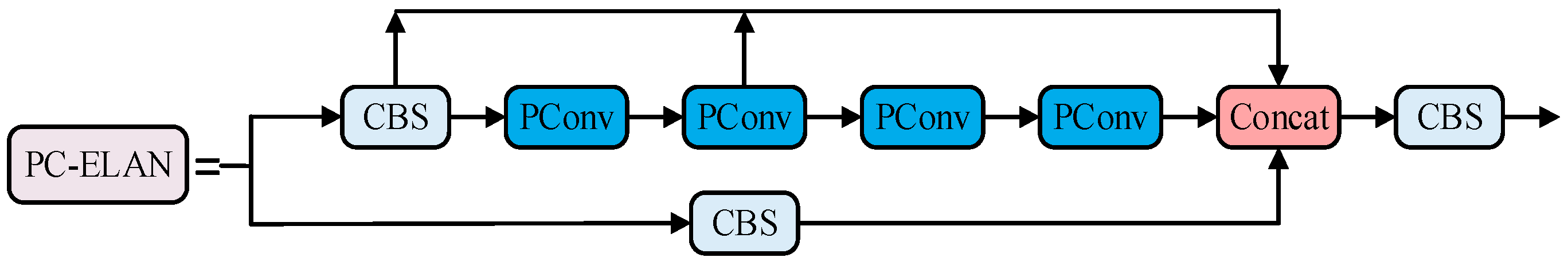

- To address the issues of large model size, high computational complexity, and difficulties in deployment on mobile devices, some CBS modules are replaced with PConv modules to construct the PC-ELAN module. This modification reduces the demand for computational resources and improves inference efficiency on mobile devices.

- (2)

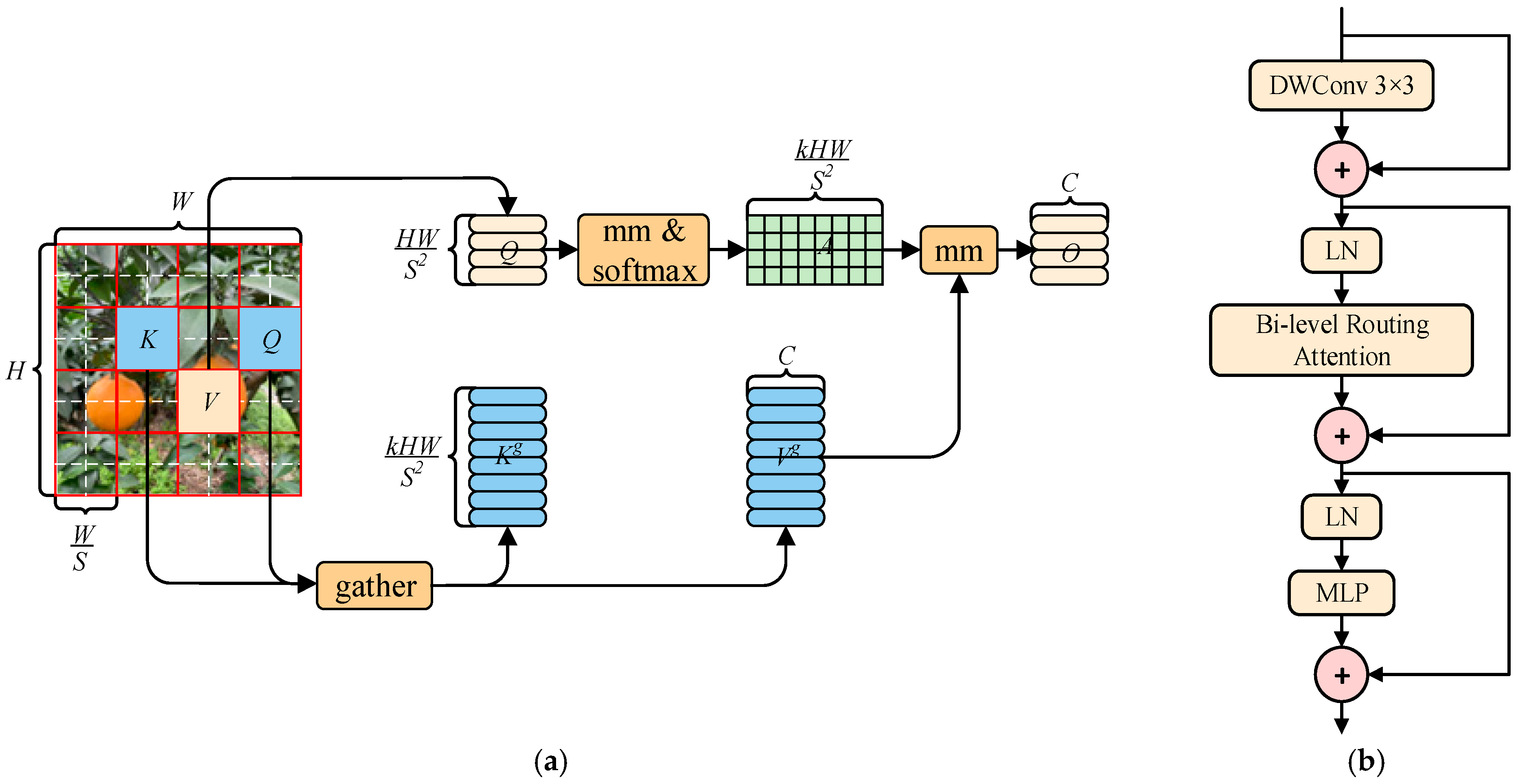

- The BiFormer attention mechanism is embedded to achieve more flexible calculation allocation and feature perception and to enhance the sensitivity of perception to key data of citrus fruits.

- (3)

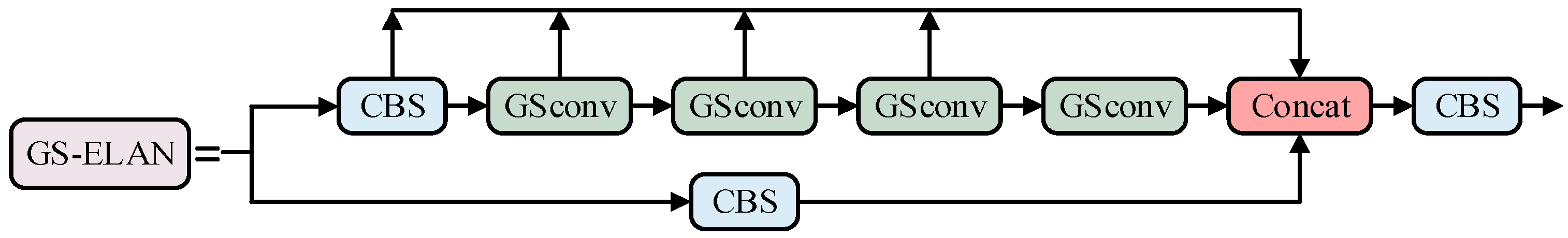

- The GS-ELAN module is constructed using GSConv to provide richer target information and enhance the network’s nonlinear capabilities.

- (4)

- The MDPIoU loss function is utilized to address the problem of distorted detection boxes caused by large sample differences, while also speeding up model convergence.

2. Materials and Methods

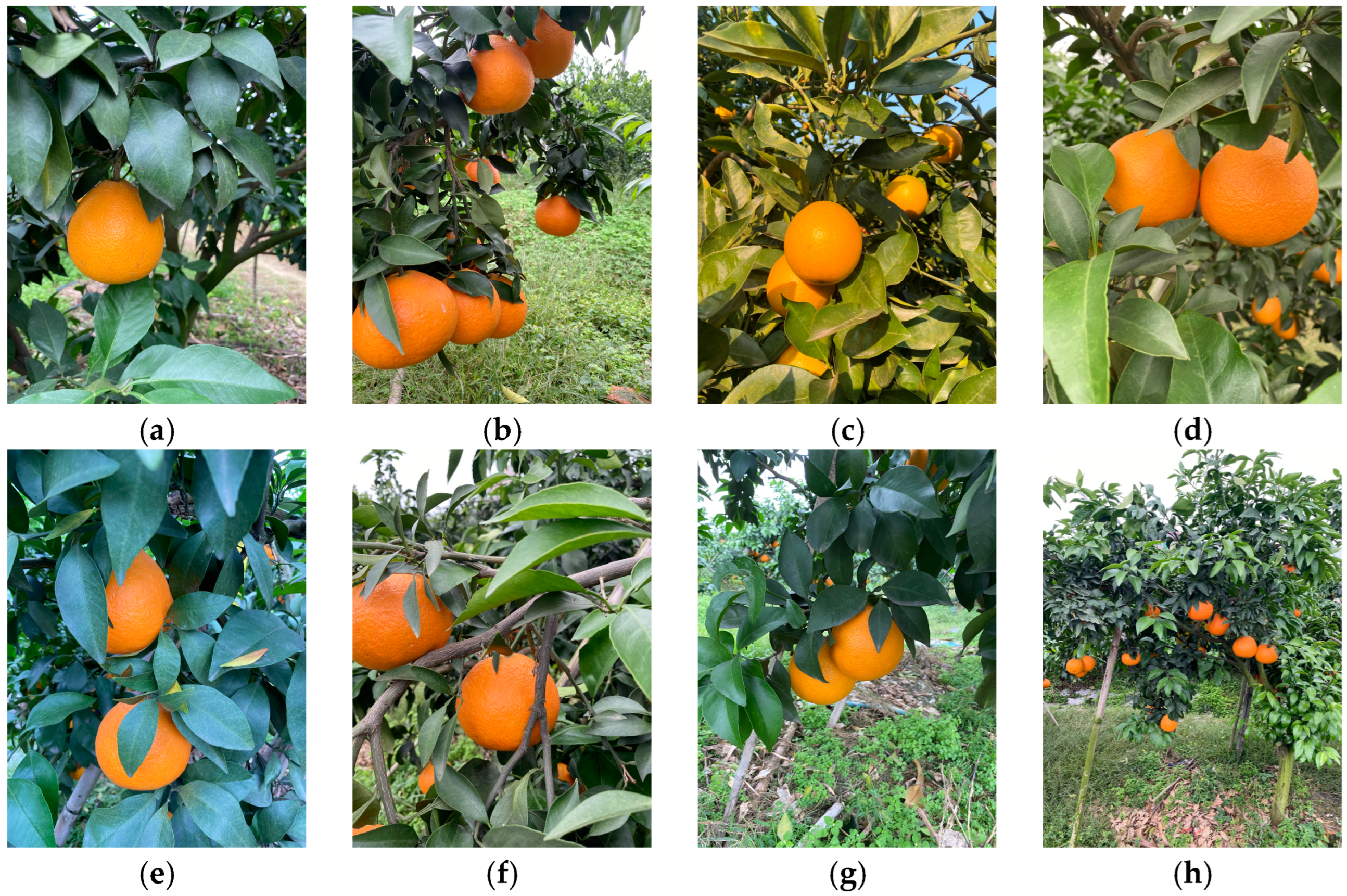

2.1. Collecting Datasets

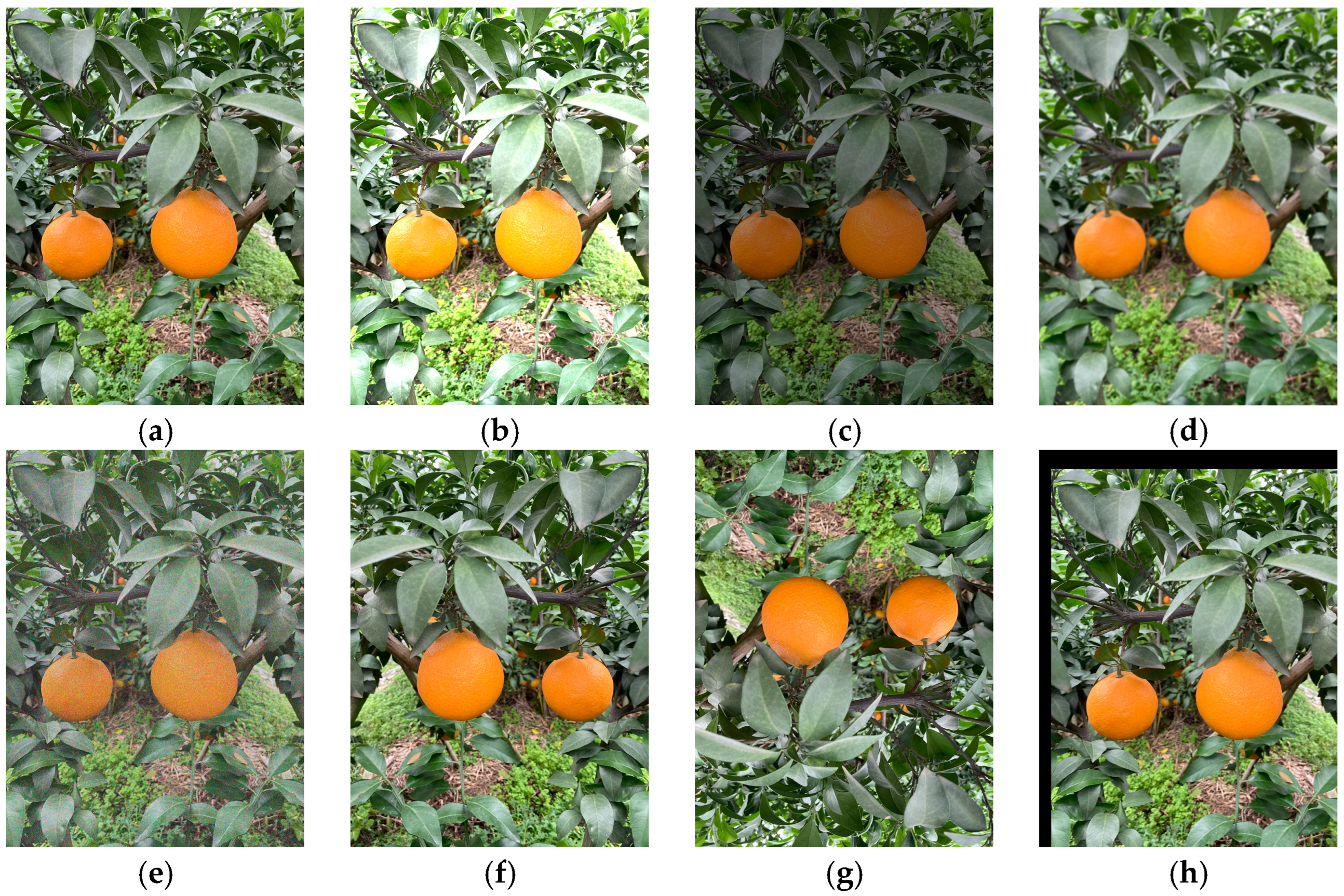

2.2. Dataset Production

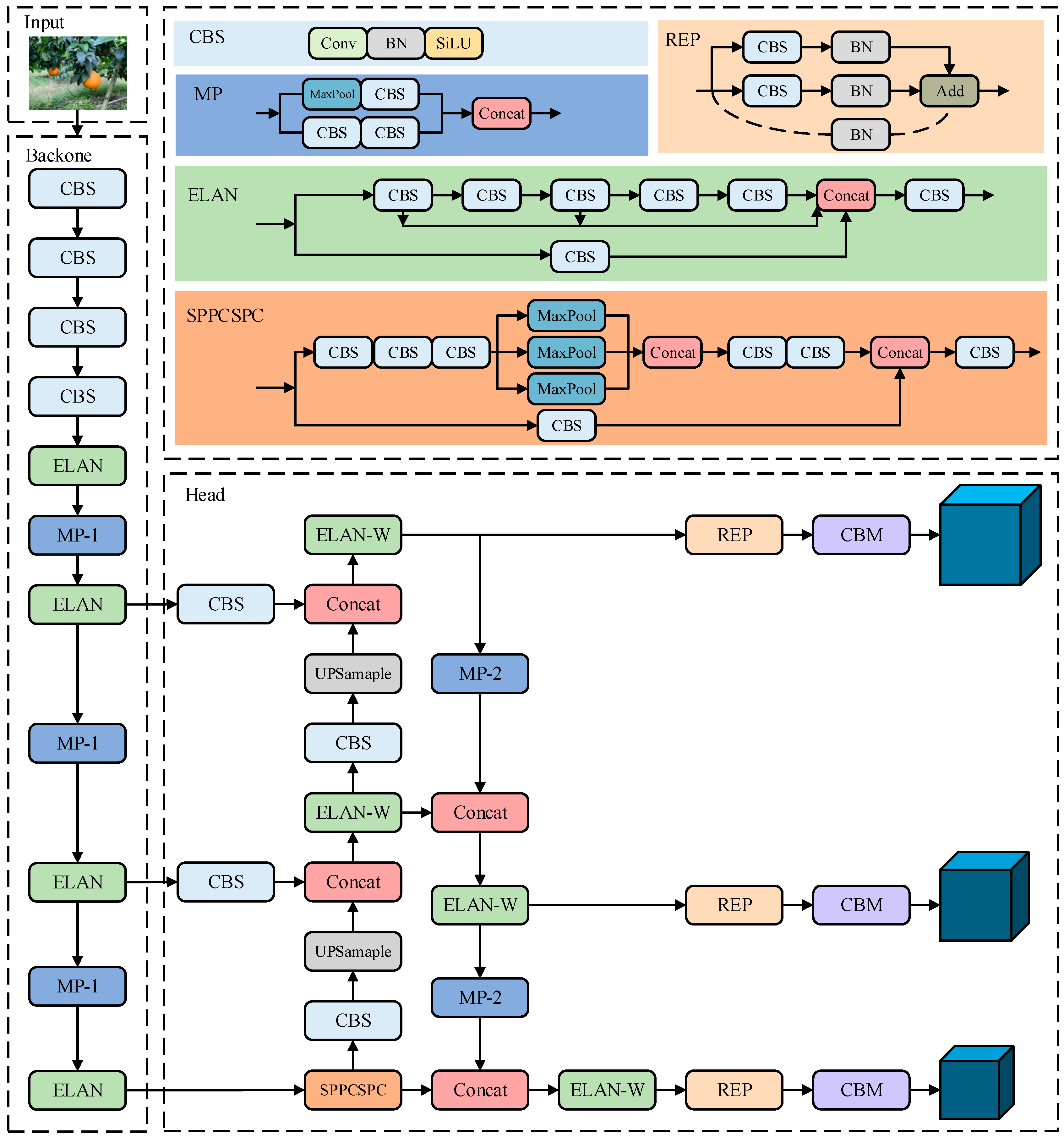

2.3. YOLOv7 Model

2.4. YOLO-PBGM Algorithm

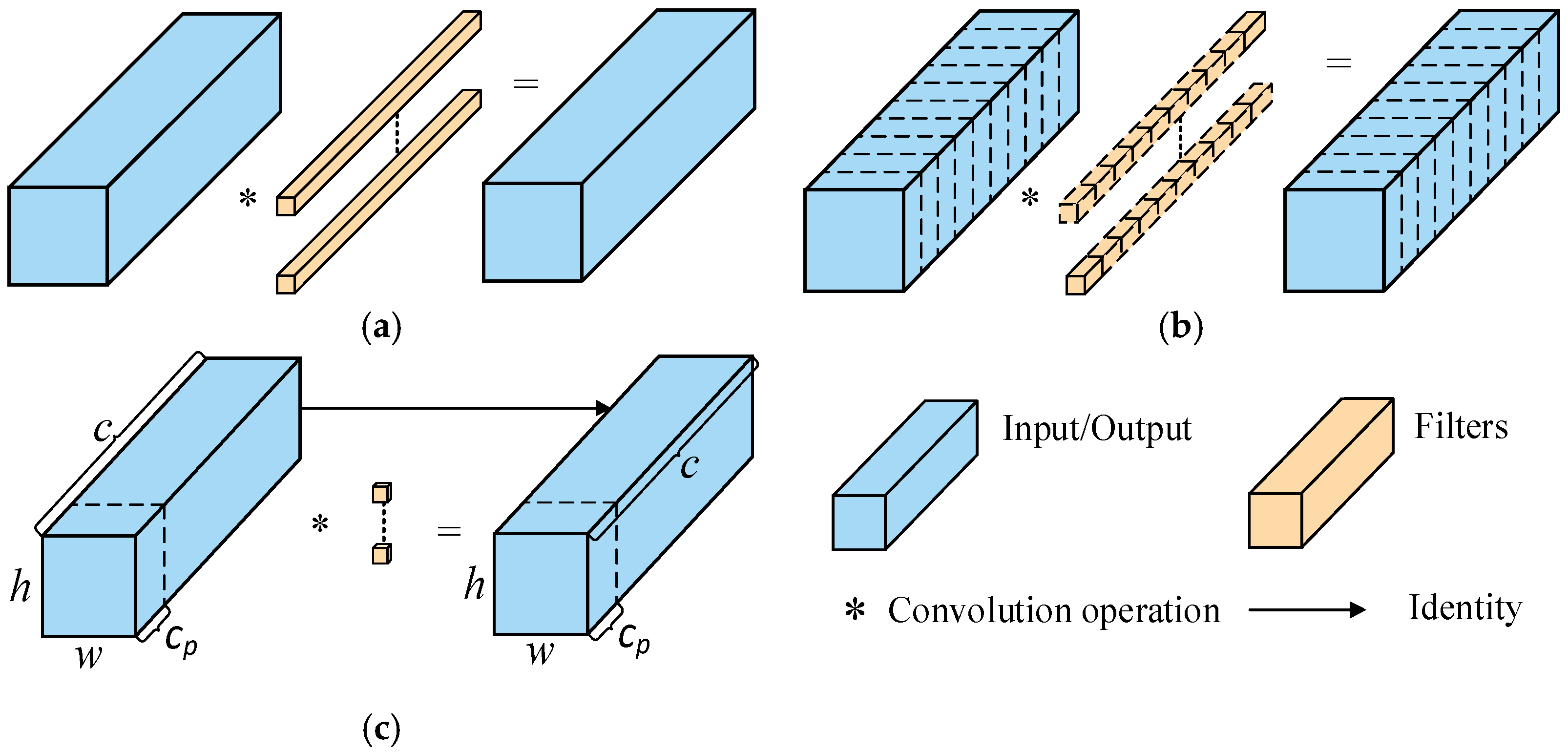

2.4.1. PConv
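
The introduction states that replacing some CBS modules with PConv lowers the computational cost. A rough sketch of why this happens is given below, assuming the formulation in the PConv paper by Chen et al. cited in this article: a regular convolution is applied to only a fraction of the channels and the rest pass through untouched. The function names, the 80 × 80 × 256 feature map, and the 1/4 channel ratio are illustrative assumptions, not values reported for PC-ELAN.

```python
def conv_macs(h, w, k, c_in, c_out):
    """Multiply-accumulate count of a dense k x k convolution
    (stride 1, 'same' padding, bias ignored)."""
    return h * w * k * k * c_in * c_out


def pconv_macs(h, w, k, channels, ratio=0.25):
    """Multiply-accumulate count of a partial convolution.

    Only c_p = channels * ratio channels are convolved; the remaining
    channels are copied through, so they add no arithmetic cost.
    The default ratio of 1/4 is an assumption for illustration.
    """
    c_p = int(channels * ratio)
    return conv_macs(h, w, k, c_p, c_p)


# Hypothetical feature map: 80 x 80 spatial size, 256 channels, 3 x 3 kernel.
regular = conv_macs(80, 80, 3, 256, 256)
partial = pconv_macs(80, 80, 3, 256)

print(f"regular conv: {regular:,} MACs")
print(f"PConv:        {partial:,} MACs")
print(f"ratio:        {partial / regular}")  # 0.0625, i.e. 1/16
```

With a channel ratio of 1/4, the convolved slice has 1/4 of the input channels and 1/4 of the output channels, so the cost falls to 1/16 of the dense layer. The saving for a whole network is smaller, because only some modules are replaced.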

2.4.2. BiFormer Attention Mechanism

2.4.3. GSConv Convolution

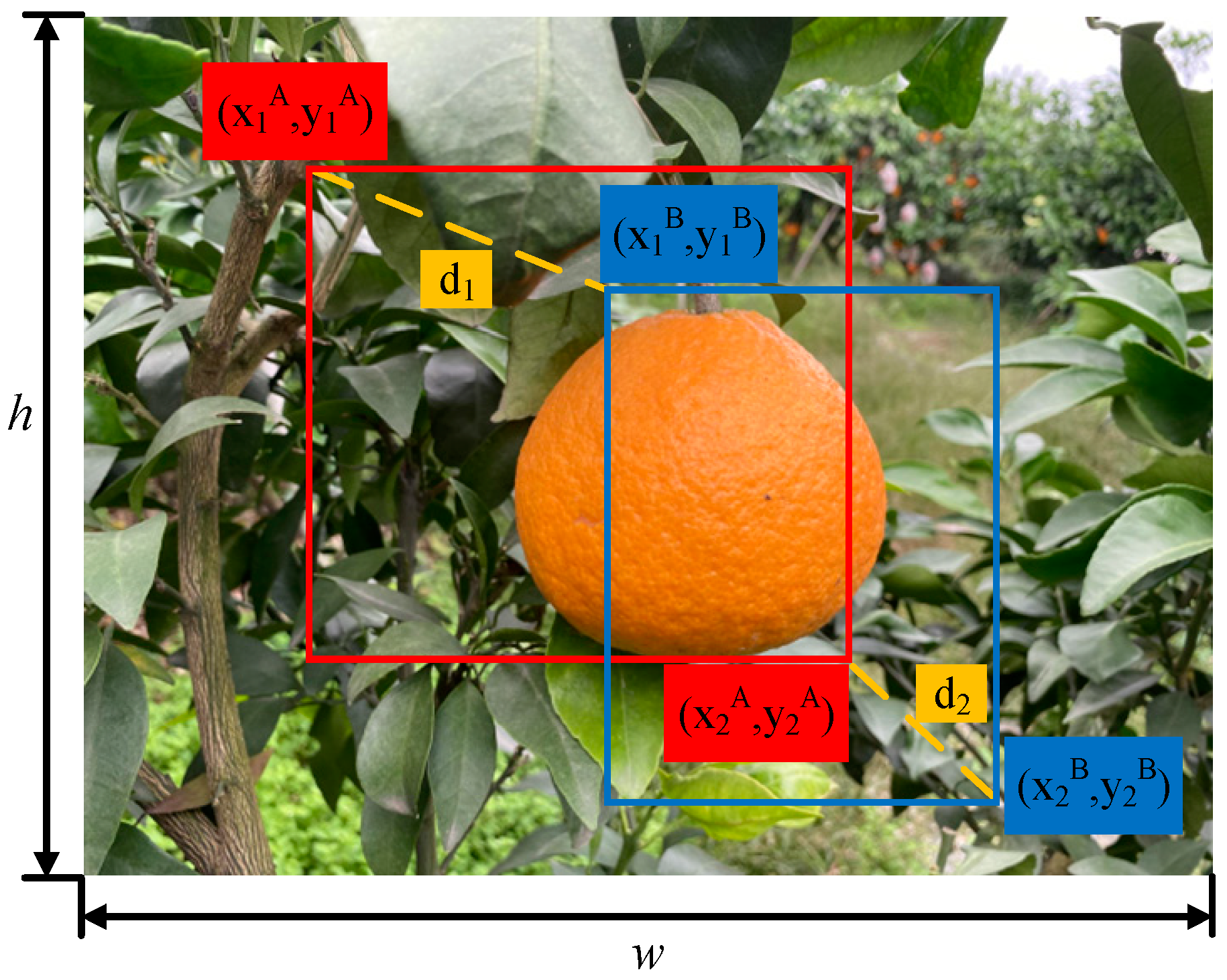

2.4.4. MPDIoU Loss Function
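
A minimal sketch of the MPDIoU loss in plain Python, following the definition in the MPDIoU reference by Ma and Xu cited in this article: the IoU minus the squared distances between the two boxes' top-left corners and bottom-right corners, each normalised by the squared diagonal of the input image. The function name and the (x1, y1, x2, y2) box layout are assumptions for illustration; the training code used for YOLO-PBGM is not shown in this article.

```python
def mpdiou_loss(pred, target, img_w, img_h):
    """MPDIoU loss for one pair of axis-aligned boxes.

    Boxes are (x1, y1, x2, y2) in pixels with x1 < x2 and y1 < y2.
    img_w and img_h are the width and height of the network input.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection over union of the two boxes.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    union = (px2 - px1) * (py2 - py1) + (tx2 - tx1) * (ty2 - ty1) - inter
    iou = inter / union if union > 0 else 0.0

    # Squared distances between matching corners.
    d1_sq = (px1 - tx1) ** 2 + (py1 - ty1) ** 2  # top-left corners
    d2_sq = (px2 - tx2) ** 2 + (py2 - ty2) ** 2  # bottom-right corners

    # Normalise by the squared diagonal of the input image.
    norm = img_w ** 2 + img_h ** 2

    mpdiou = iou - d1_sq / norm - d2_sq / norm
    return 1.0 - mpdiou


# Usage with the 640 x 640 input size listed in the training parameters.
print(mpdiou_loss((0, 0, 10, 10), (0, 0, 10, 10), 640, 640))  # identical boxes
print(mpdiou_loss((0, 0, 10, 10), (5, 5, 15, 15), 640, 640))  # partial overlap
print(mpdiou_loss((0, 0, 10, 10), (100, 100, 110, 110), 640, 640))  # disjoint
```

Unlike plain IoU, the corner-distance terms stay informative when the boxes do not overlap, so disjoint boxes still receive a loss that shrinks as the prediction moves toward the target.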

3. Results

3.1. Experimental Platform

3.2. Evaluation Index

3.3. Attention Mechanism Comparison Experiment

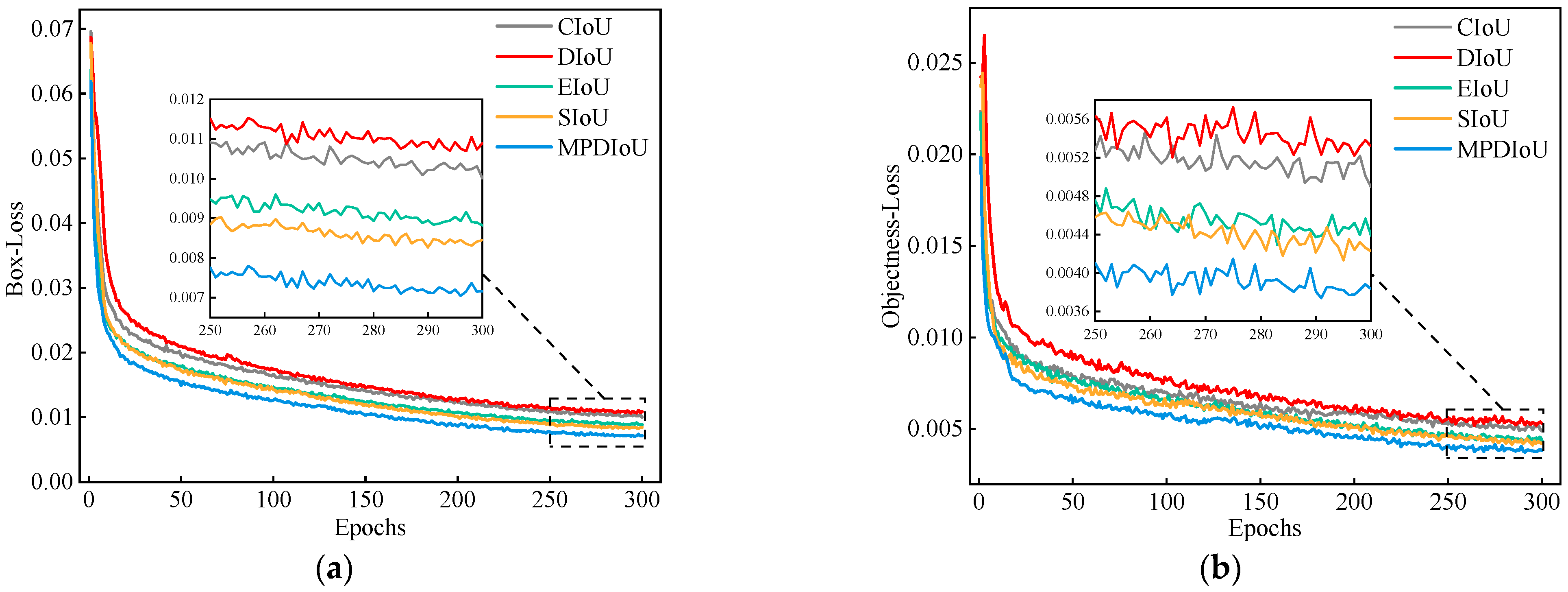

3.4. Comparative Experiment of Loss Functions

3.5. Ablation Experiment

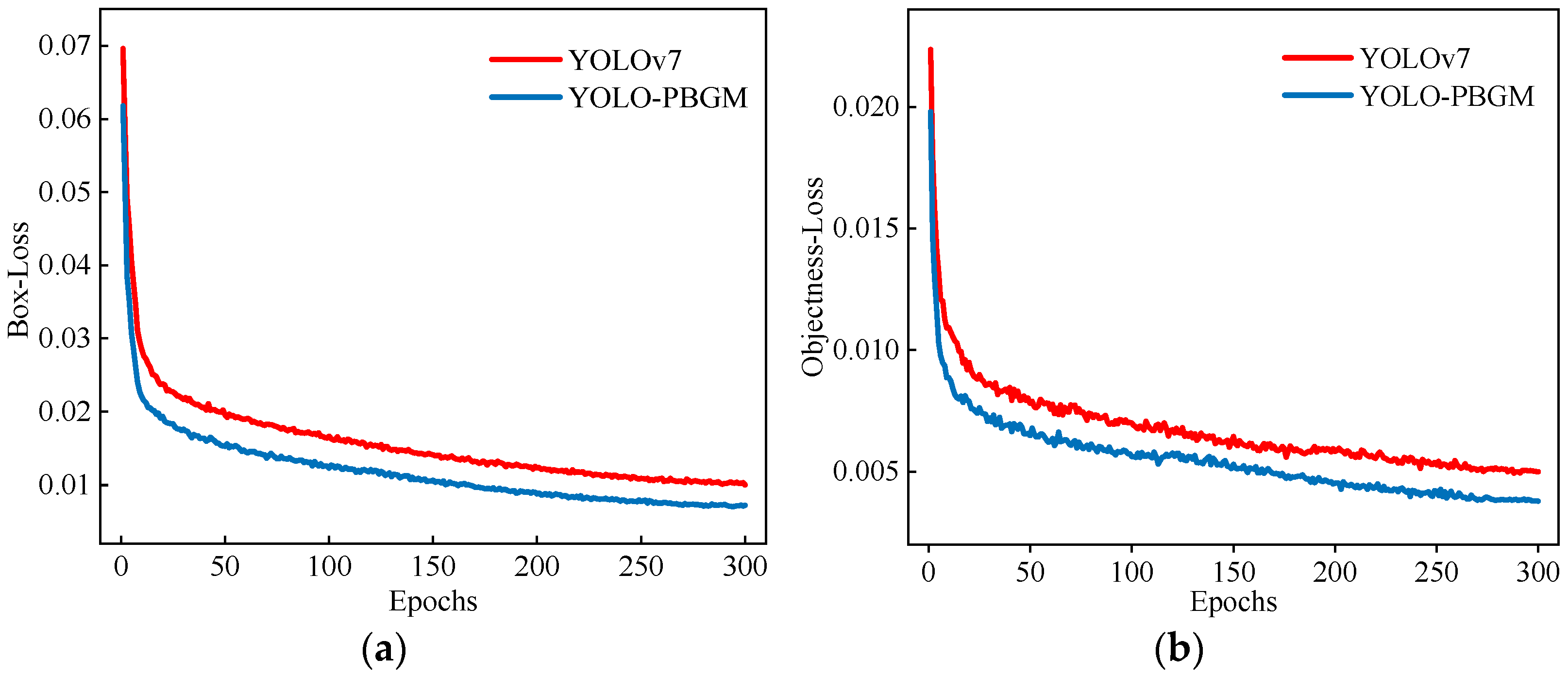

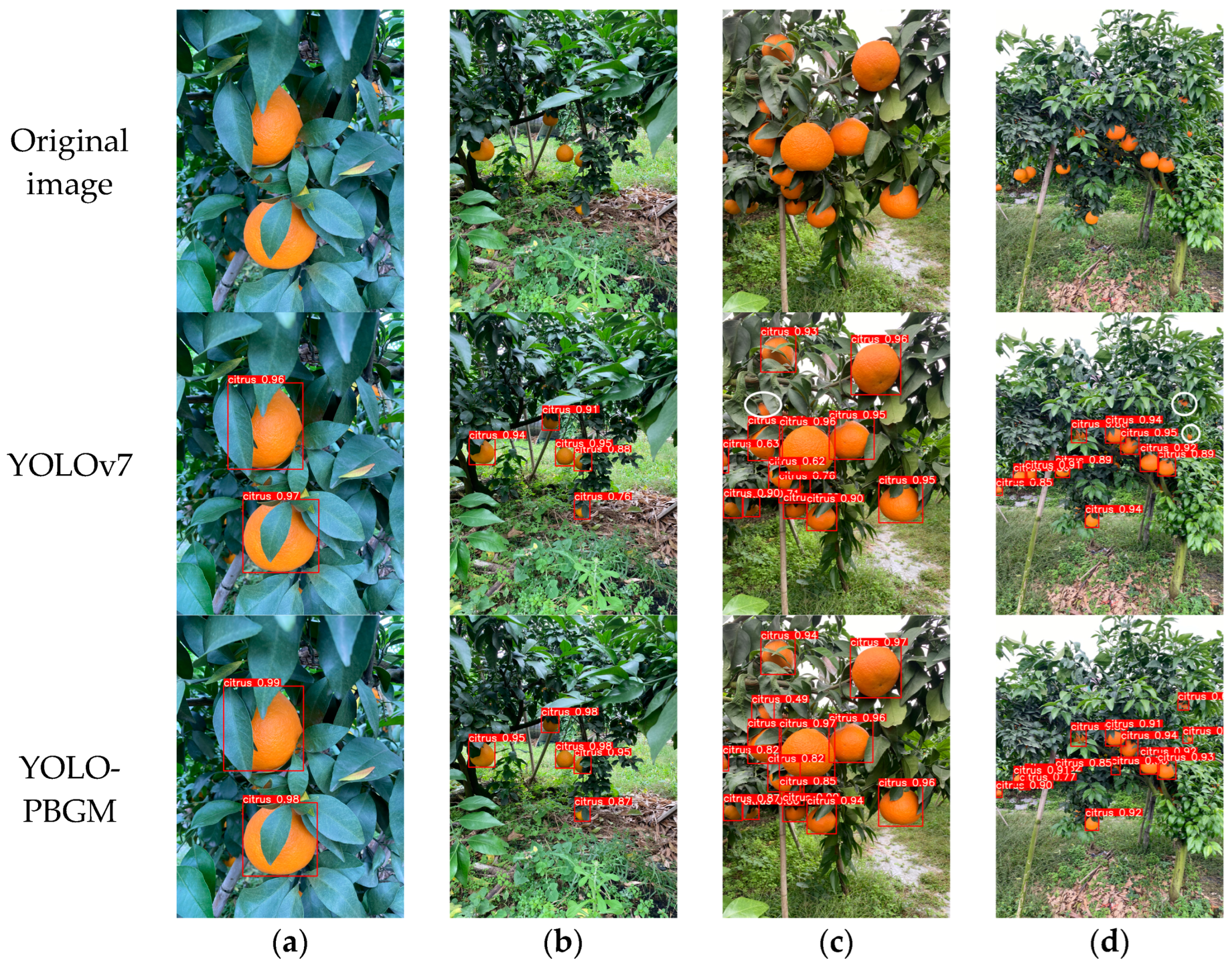

3.6. Improved YOLO-PBGM Comparison Experiment

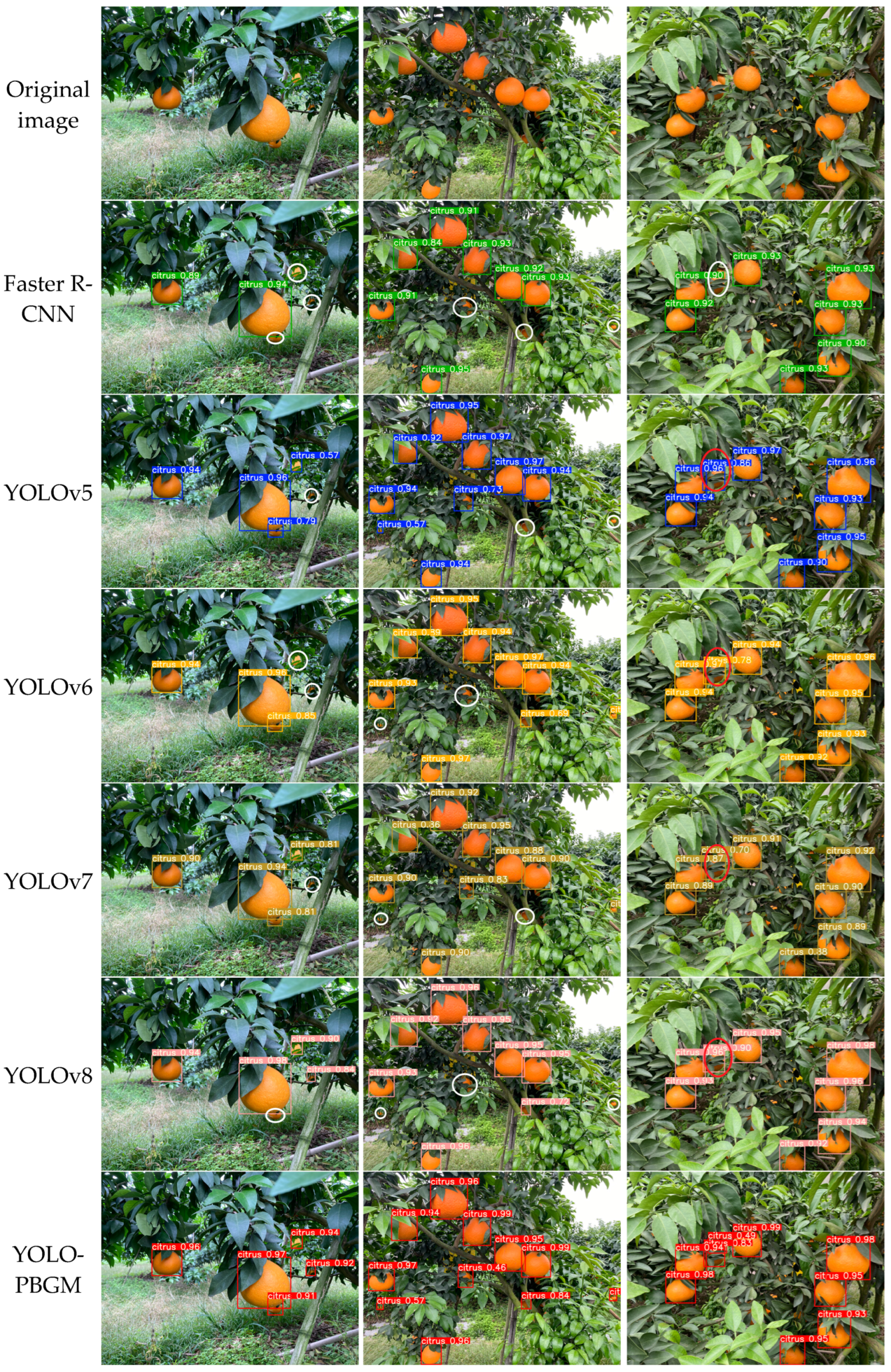

3.7. Model Comparison

3.7.1. Detection Performance Analysis with Benchmark Model

3.7.2. Comparison of Different Citrus Detection Models

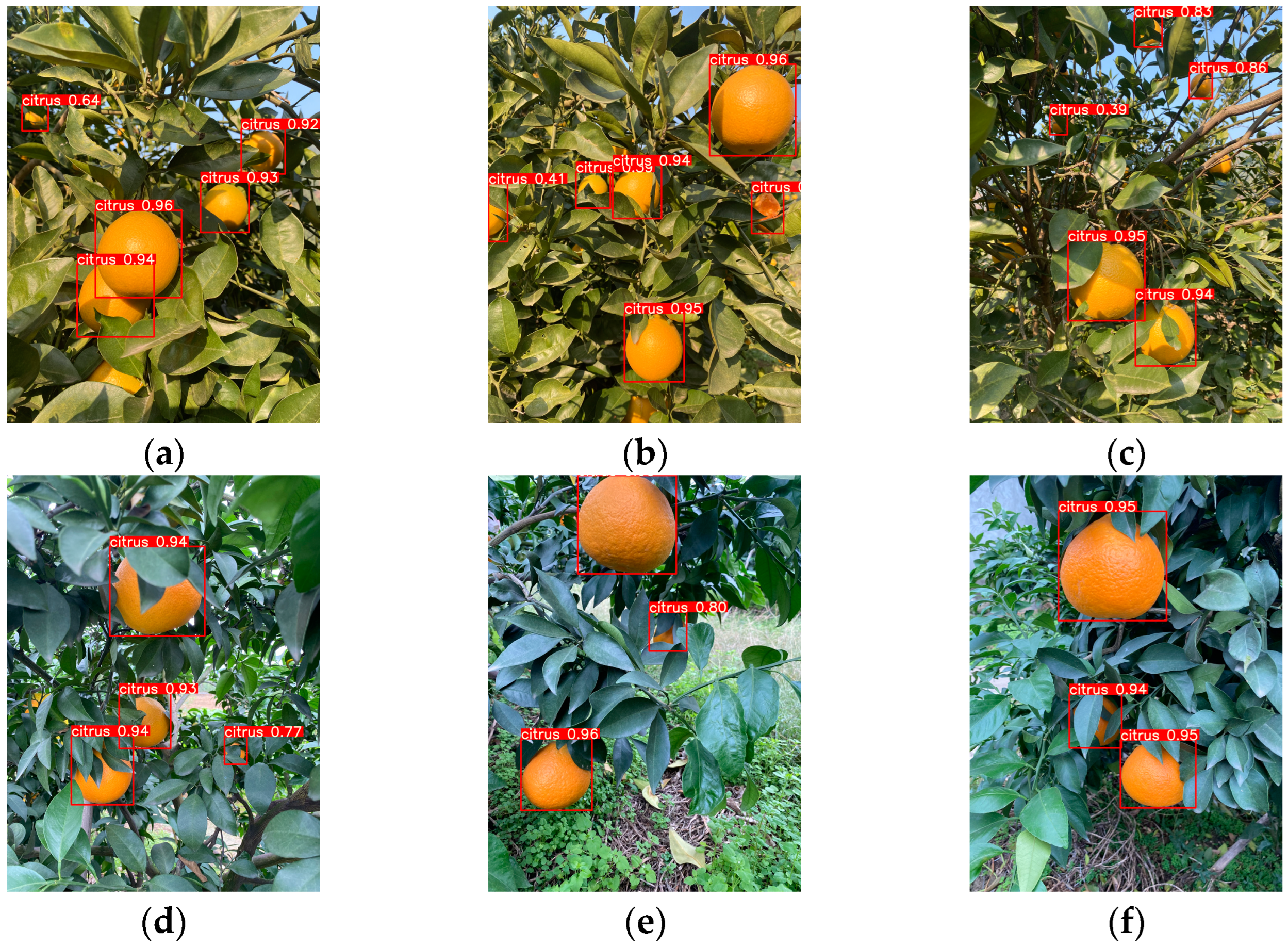

4. Discussion

4.1. Detection of Different Lighting Conditions

4.2. Detection of Different Occlusion Conditions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Hayat, F.; Li, J.; Iqbal, S.; Peng, Y.; Hong, L.; Balal, R.M.; Khan, M.N.; Nawaz, M.A.; Khan, U.; Farhan, M.A. A mini review of citrus rootstocks and their role in high-density orchards. Plants 2022, 11, 2876.

- Singerman, A.; Rogers, M.E. The economic challenges of dealing with citrus greening: The case of Florida. J. Integr. Pest Manag. 2020, 11, 3.

- Wang, S.; Xie, W.; Yan, X. Effects of future climate change on citrus quality and yield in China. Sustainability 2022, 14, 9366.

- Huang, Z.; Li, Z.; Yao, L.; Yuan, Y.; Hong, Z.; Huang, S.; Wang, Y.; Ye, J.; Zhang, L.; Ding, J. Geographical distribution and potential distribution prediction of thirteen species of Citrus L. in China. Environ. Sci. Pollut. Res. 2024, 31, 6558–6571.

- Liang, Y.; Jiang, W.; Liu, Y.; Wu, Z.; Zheng, R. Picking-Point Localization Algorithm for Citrus Fruits Based on Improved YOLOv8 Model. Agriculture 2025, 15, 237.

- Xiao, X.; Wang, Y.N.; Jiang, Y.M. End-Effectors Developed for Citrus and Other Spherical Crops. Appl. Sci. 2022, 12, 7945.

- Chen, Z.Q.; Lei, X.H.; Yuan, Q.C.; Qi, Y.N.; Ma, Z.B.; Qian, S.C.; Lyu, X. Key Technologies for Autonomous Fruit- and Vegetable-Picking Robots: A Review. Agronomy 2024, 14, 2233.

- Lu, J.; Lee, W.S.; Gan, H.; Hu, X. Immature citrus fruit detection based on local binary pattern feature and hierarchical contour analysis. Biosyst. Eng. 2018, 171, 78–90.

- Wu, G.; Li, B.; Zhu, Q.; Huang, M.; Guo, Y. Using color and 3D geometry features to segment fruit point cloud and improve fruit recognition accuracy. Comput. Electron. Agric. 2020, 174, 105475.

- Dubey, S.R.; Jalal, A.S. Apple disease classification using color, texture and shape features from images. Signal Image Video Process. 2016, 10, 819–826.

- Farooque, A.A.; Hussain, N.; Schumann, A.W.; Abbas, F.; Afzaal, H.; McKenzie-Gopsill, A.; Esau, T.; Zaman, Q.; Wang, X. Field evaluation of a deep learning-based smart variable-rate sprayer for targeted application of agrochemicals. Smart Agric. Technol. 2023, 3, 100073.

- Wu, H.R.; Li, X.X.; Sun, F.C.; Huang, L.M.; Yang, T.; Bian, Y.C.; Lv, Q.R. An Improved Product Defect Detection Method Combining Centroid Distance and Textural Information. Electronics 2024, 13, 3798.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Mai, X.; Zhang, H.; Jia, X.; Meng, M.Q.-H. Faster R-CNN with classifier fusion for automatic detection of small fruits. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1555–1569.

- Fu, L.; Majeed, Y.; Zhang, X.; Karkee, M.; Zhang, Q. Faster R–CNN–based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosyst. Eng. 2020, 197, 245–256.

- Lu, J.; Yang, R.; Yu, C.; Lin, J.; Chen, W.; Wu, H.; Chen, X.; Lan, Y.; Wang, W. Citrus green fruit detection via improved feature network extraction. Front. Plant Sci. 2022, 13, 946154.

- Hussain, M. YOLOv5, YOLOv8 and YOLOv10: The go-to detectors for real-time vision. arXiv 2024, arXiv:2407.02988.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Part I 14, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Gu, B.; Wen, C.J.; Liu, X.Z.; Hou, Y.J.; Hu, Y.H.; Su, H.Q. Improved YOLOv7-Tiny Complex Environment Citrus Detection Based on Lightweighting. Agronomy 2023, 13, 2667.

- Li, G.J.; Huang, X.J.; Ai, J.Y.; Yi, Z.R.; Xie, W. Lemon-YOLO: An efficient object detection method for lemons in the natural environment. IET Image Process. 2021, 15, 1998–2009.

- Ou, J.J.; Zhang, R.H.; Li, X.M.; Lin, G.C. Research and Explainable Analysis of a Real-Time Passion Fruit Detection Model Based on FSOne-YOLOv7. Agronomy 2023, 13, 1993.

- Xu, L.J.; Wang, Y.H.; Shi, X.S.; Tang, Z.L.; Chen, X.Y.; Wang, Y.C.; Zou, Z.Y.; Huang, P.; Liu, B.; Yang, N.; et al. Real-time and accurate detection of citrus in complex scenes based on HPL-YOLOv4. Comput. Electron. Agric. 2023, 205, 107590.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Sun, F.; Lv, Q.; Bian, Y.; He, R.; Lv, D.; Gao, L.; Wu, H.; Li, X. Grape Target Detection Method in Orchard Environment Based on Improved YOLOv7. Agronomy 2025, 15, 42.

- Ma, C.J.; Fu, Y.Y.; Wang, D.Y.; Guo, R.; Zhao, X.Y.; Fang, J. YOLO-UAV: Object Detection Method of Unmanned Aerial Vehicle Imagery Based on Efficient Multi-Scale Feature Fusion. IEEE Access 2023, 11, 126857–126878.

- Chen, J.; Kao, S.-h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- Tang, Z.X.; Zhang, W.; Li, J.L.; Liu, R.; Xu, Y.S.; Chen, S.Y.; Fang, Z.Y.; Zhao, F.C.L. LTSCD-YOLO: A Lightweight Algorithm for Detecting Typical Satellite Components Based on Improved YOLOv8. Remote Sens. 2024, 16, 3101.

- Zhu, L.; Wang, X.; Ke, Z.; Zhang, W.; Lau, R.W. BiFormer: Vision transformer with bi-level routing attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10323–10333.

- Sun, Y.; Li, Y.; Li, S.; Duan, Z.H.; Ning, H.A.; Zhang, Y.H. PBA-YOLOv7: An Object Detection Method Based on an Improved YOLOv7 Network. Appl. Sci. 2023, 13, 10436.

- Qi, Y.; He, Y.; Qi, X.; Zhang, Y.; Yang, G. Dynamic snake convolution based on topological geometric constraints for tubular structure segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 6070–6079.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- Ma, S.; Xu, Y. MPDIoU: A loss for efficient and accurate bounding box regression. arXiv 2023, arXiv:2307.07662.

- Cheng, D.G.; Zhao, Z.Q.; Feng, J. Rice Diseases Identification Method Based on Improved YOLOv7-Tiny. Agriculture 2024, 14, 709.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

- Zhang, Y.-F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

- Malta, A.; Mendes, M.; Farinha, T. Augmented reality maintenance assistant using YOLOv5. Appl. Sci. 2021, 11, 4758.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Zheng, Z.; Xiong, J.; Lin, H.; Han, Y.; Sun, B.; Xie, Z.; Yang, Z.; Wang, C. A method of green citrus detection in natural environments using a deep convolutional neural network. Front. Plant Sci. 2021, 12, 705737.

- Lin, Y.; Huang, Z.; Liang, Y.; Liu, Y.; Jiang, W. AG-YOLO: A rapid citrus fruit detection algorithm with global context fusion. Agriculture 2024, 14, 114.

- Deng, F.; Chen, J.; Fu, L.; Zhong, J.; Qiaoi, W.; Luo, J.; Li, J.; Li, N. Real-time citrus variety detection in orchards based on complex scenarios of improved YOLOv7. Front. Plant Sci. 2024, 15, 1381694.

- Liao, Y.; Li, L.; Xiao, H.; Xu, F.; Shan, B.; Yin, H. YOLO-MECD: Citrus Detection Algorithm Based on YOLOv11. Agronomy 2025, 15, 687.

| Parameter Name | Parameter Value |
|---|---|
| Image size | 640 × 640 |
| Batch size | 16 |
| Multi-threaded | 16 |
| Momentum | 0.937 |
| Initial learning rate | 0.01 |
| Optimizer | SGD |
| Epochs | 300 |

| Model | P (%) | R (%) | mAP@0.5 (%) | Params (M) | GFLOPs | FPS (f·s⁻¹) |
|---|---|---|---|---|---|---|
| Baseline | 98.2 | 93.0 | 92.7 | 37.20 | 105.1 | 60.24 |
| CBAM | 98.4 | 94.0 | 93.5 | 37.36 | 105.4 | 64.52 |
| ECA | 97.1 | 94.0 | 93.2 | 37.59 | 106.4 | 60.61 |
| SE | 96.8 | 97.0 | 95.3 | 37.39 | 105.3 | 67.11 |
| SimAM | 96.4 | 97.0 | 95.5 | 37.31 | 105.1 | 61.35 |
| BiFormer | 98.5 | 97.0 | 95.9 | 37.28 | 105.1 | 64.96 |

| IoU Loss | P (%) | R (%) | mAP@0.5 (%) | Params (M) | GFLOPs | FPS (f·s⁻¹) |
|---|---|---|---|---|---|---|
| CIoU | 98.2 | 93.0 | 92.7 | 37.20 | 105.1 | 60.24 |
| DIoU | 98.1 | 95.0 | 93.7 | 37.21 | 105.1 | 58.82 |
| GIoU | 98.6 | 94.0 | 92.8 | 37.20 | 105.2 | 54.05 |
| SIoU | 98.2 | 94.0 | 92.9 | 37.20 | 105.1 | 61.73 |
| MPDIoU | 98.5 | 97.0 | 94.5 | 37.20 | 105.1 | 64.10 |

| Baseline | PConv | BiFormer | GSConv | MPDIoU | mAP@0.5 (%) | Params (M) | GFLOPs | FPS (f·s⁻¹) |
|---|---|---|---|---|---|---|---|---|
| YOLOv7 | × | × | × | × | 92.7 | 37.20 | 105.1 | 60.24 |
| YOLOv7 | √ | × | × | × | 93.7 | 32.69 | 84.8 | 70.92 |
| YOLOv7 | × | √ | × | × | 95.9 | 37.28 | 105.1 | 64.96 |
| YOLOv7 | × | × | √ | × | 94.0 | 34.92 | 100.5 | 66.23 |
| YOLOv7 | × | × | × | √ | 94.5 | 37.20 | 105.1 | 64.10 |
| YOLOv7 | √ | √ | × | × | 95.1 | 33.74 | 84.8 | 74.46 |
| YOLOv7 | √ | √ | √ | × | 95.3 | 31.47 | 80.2 | 75.19 |
| YOLOv7 | √ | √ | √ | √ | 96.2 | 31.47 | 80.2 | 77.51 |

| Model | P (%) | R (%) | mAP@0.5 (%) | Params (M) | GFLOPs | FPS (f·s⁻¹) |
|---|---|---|---|---|---|---|
| YOLOv7 | 98.2 | 93.0 | 92.7 | 37.20 | 105.1 | 60.24 |
| YOLO-PBGM | 98.5 | 97.0 | 96.2 | 31.47 | 80.2 | 77.51 |
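
The gap between YOLOv7 and YOLO-PBGM is easier to read as relative changes. The short calculation below uses only the figures in the table above; the dictionary keys and the helper name `relative_change` are illustrative choices.

```python
# Values copied from the YOLOv7 vs. YOLO-PBGM comparison table.
yolov7 = {"P": 98.2, "R": 93.0, "mAP@0.5": 92.7,
          "Params (M)": 37.20, "GFLOPs": 105.1, "FPS": 60.24}
yolo_pbgm = {"P": 98.5, "R": 97.0, "mAP@0.5": 96.2,
             "Params (M)": 31.47, "GFLOPs": 80.2, "FPS": 77.51}


def relative_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# Accuracy metrics are already percentages, so report the
# absolute difference in percentage points.
for key in ("P", "R", "mAP@0.5"):
    print(f"{key}: {yolo_pbgm[key] - yolov7[key]:+.1f} points")

# Cost and speed metrics are reported as relative changes.
for key in ("Params (M)", "GFLOPs", "FPS"):
    print(f"{key}: {relative_change(yolov7[key], yolo_pbgm[key]):+.1f}%")
# Params (M): -15.4%
# GFLOPs: -23.7%
# FPS: +28.7%
```

On these figures, YOLO-PBGM gains 3.5 points of mAP@0.5 and 4.0 points of recall while using about 15% fewer parameters and about 24% fewer GFLOPs, and runs about 29% faster.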

| Model | P (%) | R (%) | mAP@0.5 (%) | Params (M) | GFLOPs | FPS (f·s⁻¹) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 87.9 | 88.6 | 89.1 | 136.75 | 368.3 | 21.36 |
| YOLOv5 | 94.7 | 92.5 | 91.8 | 46.14 | 108.2 | 54.05 |
| YOLOv6 | 93.8 | 91.7 | 91.5 | 34.87 | 85.3 | 53.62 |
| YOLOv7 | 98.2 | 93.0 | 92.7 | 37.20 | 105.1 | 60.24 |
| YOLOv8 | 97.6 | 93.2 | 93.4 | 43.63 | 165.4 | 63.58 |
| YOLO-PBGM | 98.5 | 97.0 | 96.2 | 31.47 | 80.2 | 77.51 |

| Model | P (%) | R (%) | mAP@0.5 (%) |
|---|---|---|---|
| YOLO-BP [47] | 86.0 | 91.0 | 91.6 |
| YOLO-DCA [22] | 94.1 | 91.6 | 95.0 |
| AG-YOLO [48] | 90.6 | 73.4 | 83.2 |
| YOLOv7-BiGS [49] | 91.0 | 87.3 | 93.7 |
| YOLO-MECD [50] | 84.4 | 73.3 | 81.6 |
| YOLO-PBGM | 98.5 | 97.0 | 96.2 |

| Model | Light | P (%) | R (%) | mAP@0.5 (%) | FPS (f·s⁻¹) |
|---|---|---|---|---|---|
| YOLO-PBGM | Sunny | 97.6 | 96.5 | 96.2 | 76.52 |
| YOLO-PBGM | Overcast | 97.2 | 95.8 | 95.7 | 75.26 |

| Model | Type | P (%) | R (%) | mAP@0.5 (%) | FPS (f·s⁻¹) |
|---|---|---|---|---|---|
| YOLO-PBGM | Unobstructed | 98.9 | 99.1 | 99.6 | 79.35 |
| YOLO-PBGM | Slightly obscured | 98.2 | 97.6 | 97.4 | 76.18 |
| YOLO-PBGM | Severely obstructed | 96.4 | 95.3 | 92.2 | 73.49 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lv, Q.; Sun, F.; Bian, Y.; Wu, H.; Li, X.; Li, X.; Zhou, J. A Lightweight Citrus Object Detection Method in Complex Environments. Agriculture 2025, 15, 1046. https://doi.org/10.3390/agriculture15101046
