An OCRNet-Based Method for Assessing Apple Watercore in Cold and Cool Regions of Yunnan Province

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Preparation

2.2. Data Annotation

2.3. Experimental Environment

2.4. Experimental Method

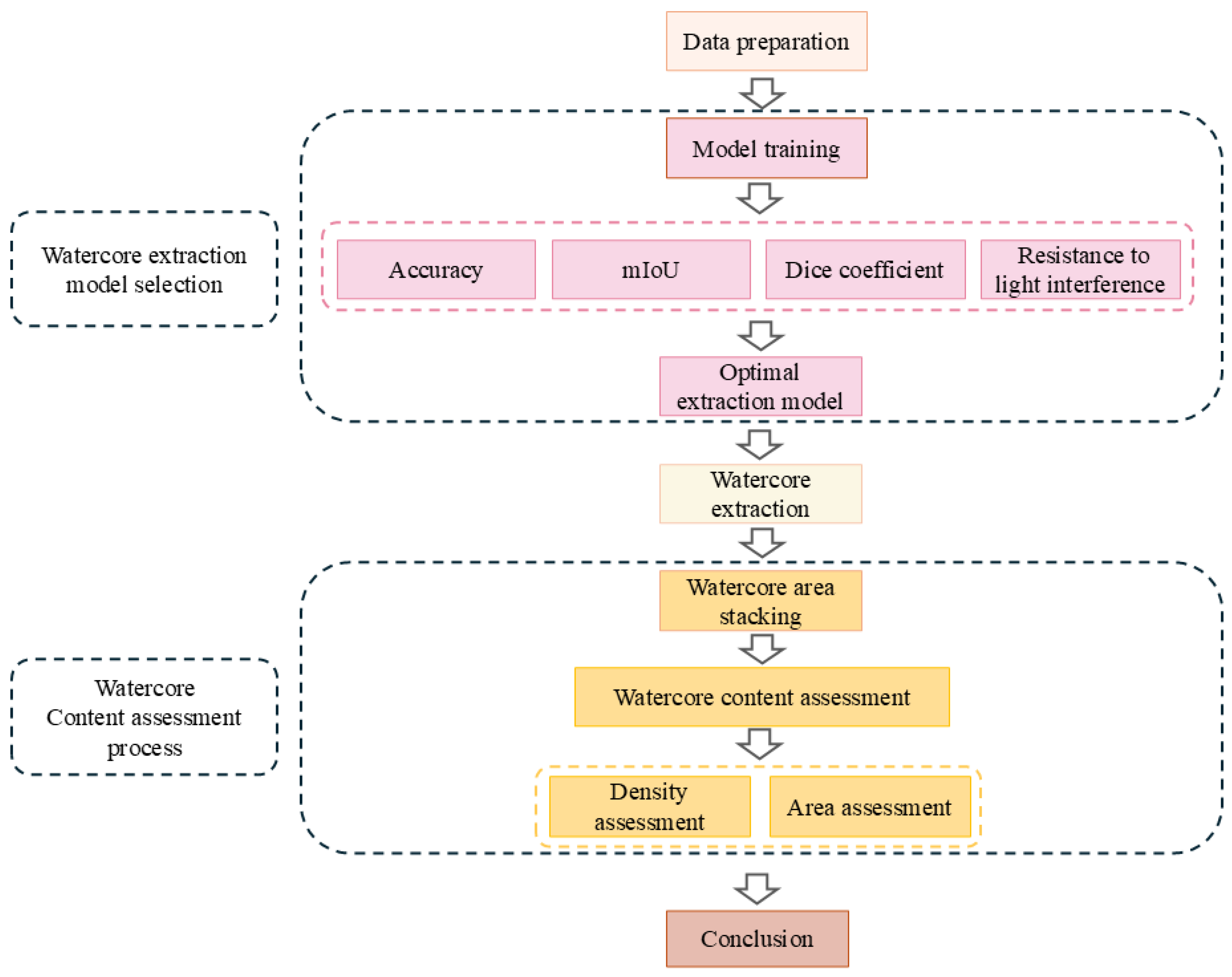

2.4.1. Selection of Watercore Extraction Model

2.4.2. Evaluation of Watercore Content

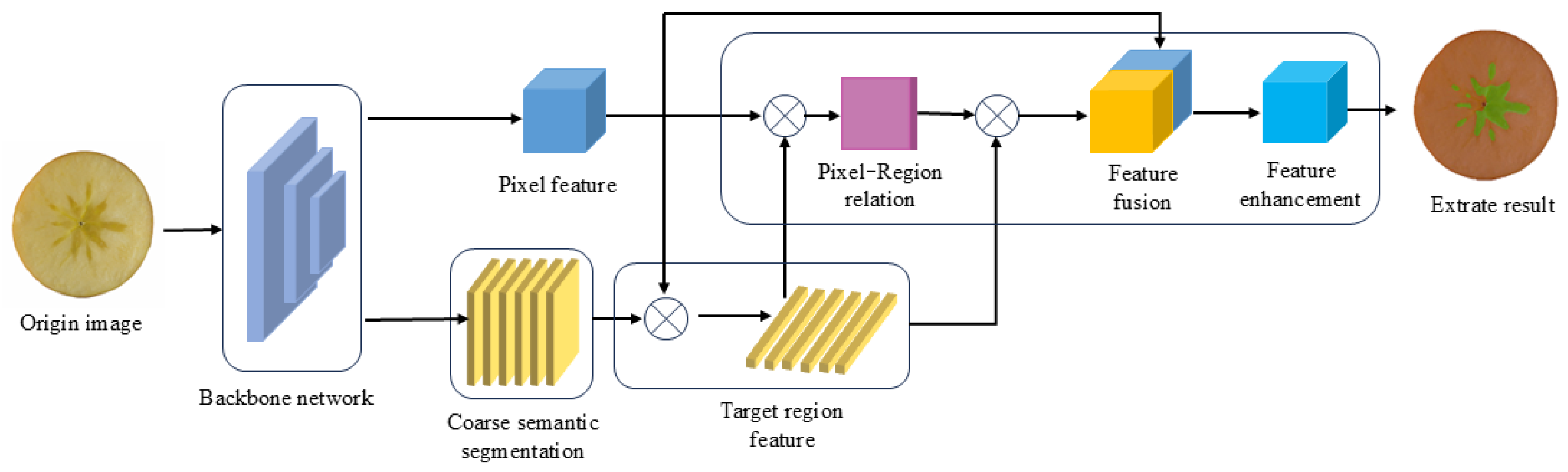

2.5. OCRNet Model

2.6. Model Evaluation Indicators

3. Results and Analysis

3.1. Watercore Extraction Model Selection

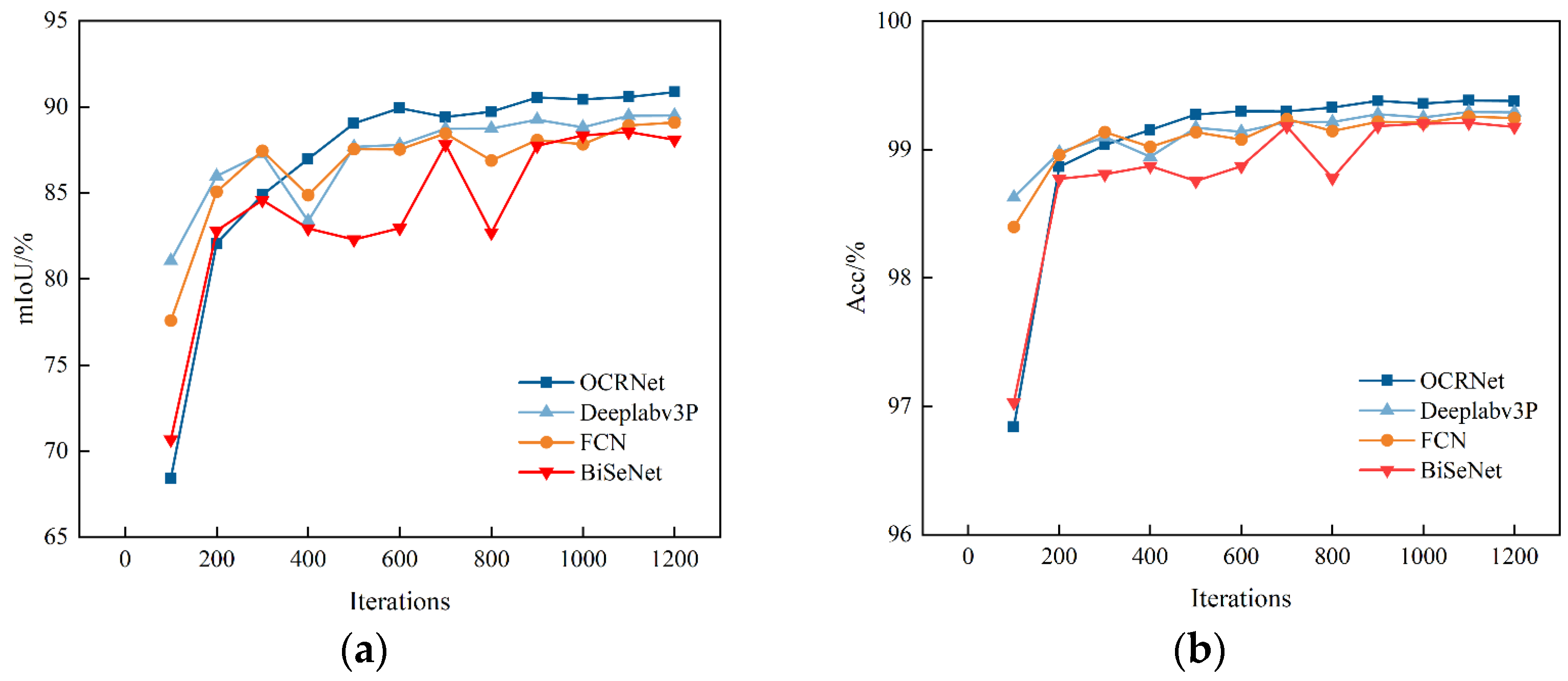

3.1.1. Comparison of Models Under the Data_1 Dataset

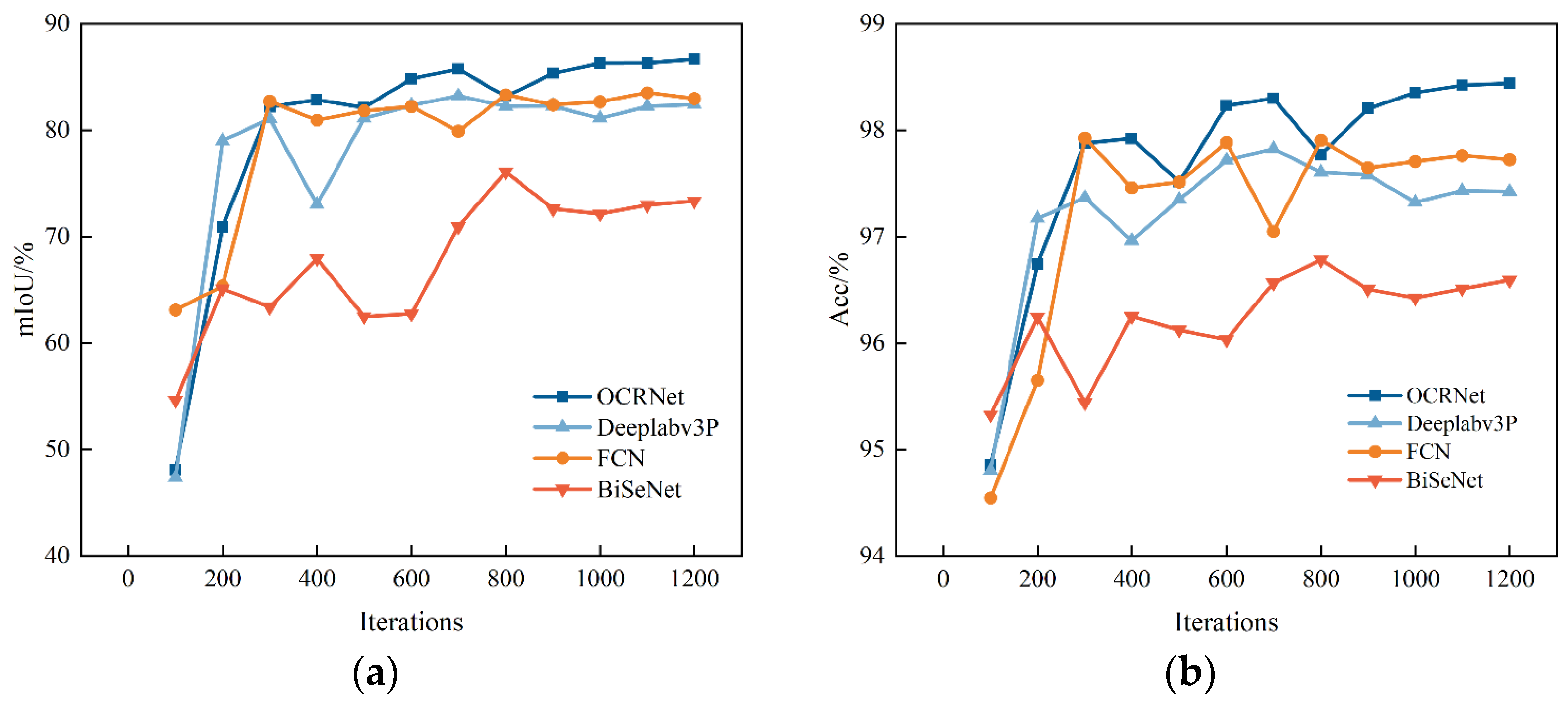

3.1.2. Comparison of Models Under the Data_2 Dataset

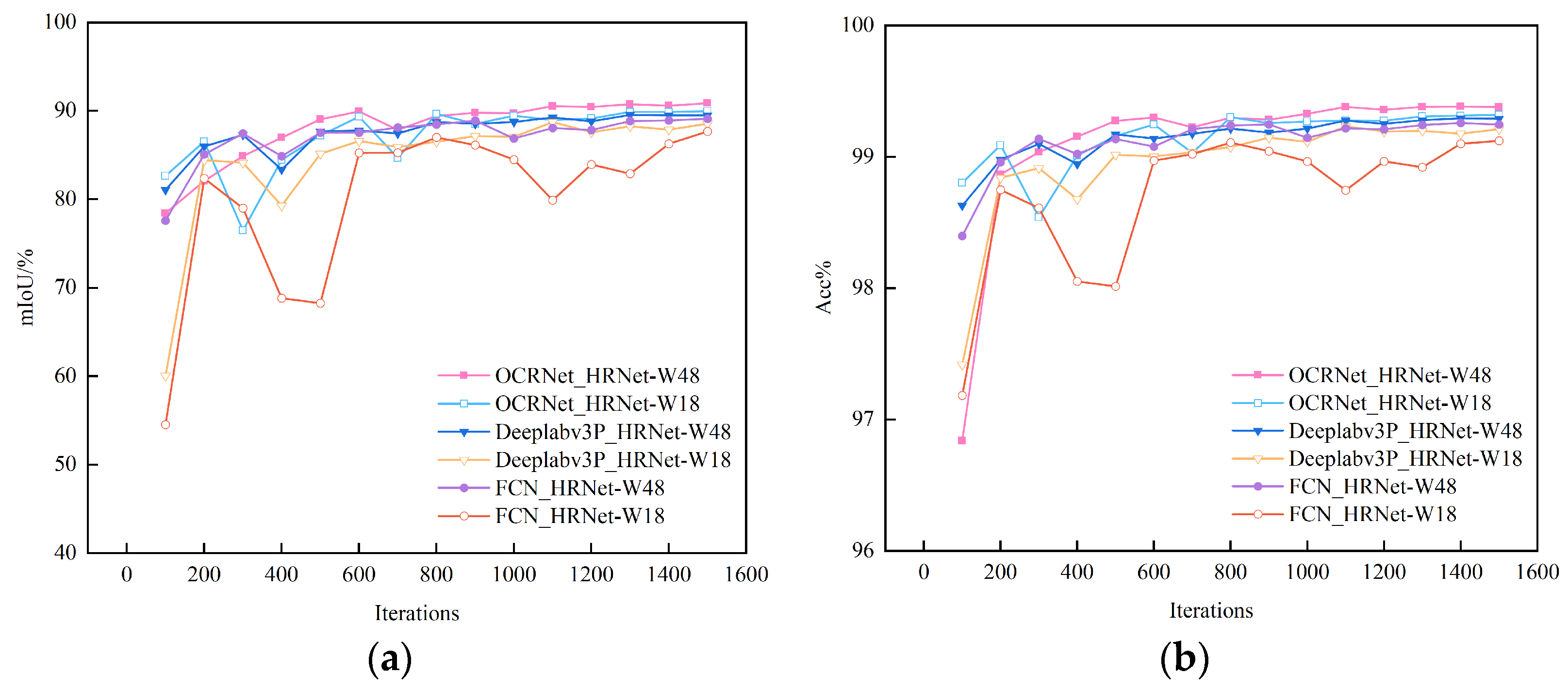

3.1.3. Comparison of Models Under Different Backbone Networks

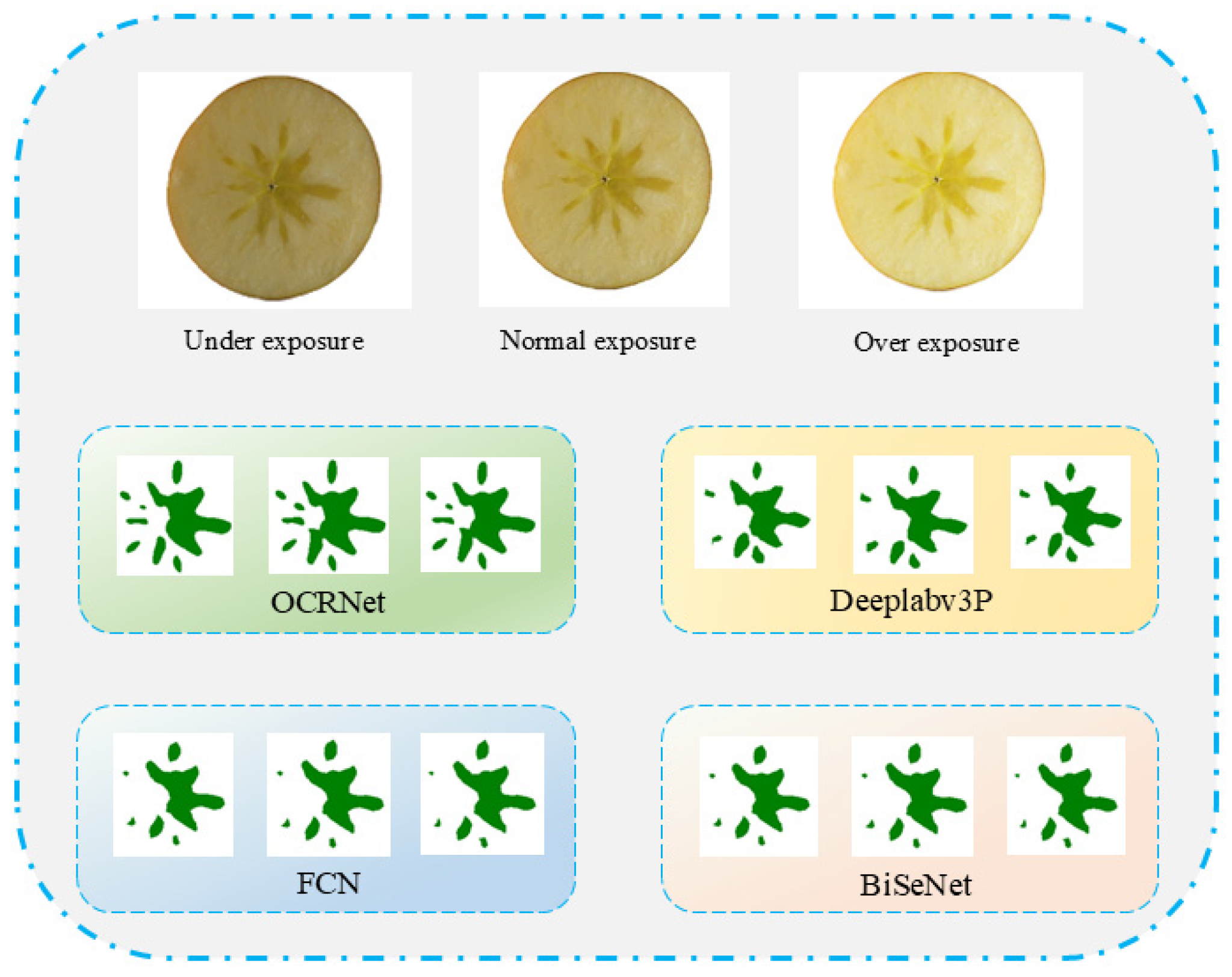

3.1.4. Comparison of Extraction Results from Different Models

3.2. Evaluation of Apple Watercore Content in Different Regions

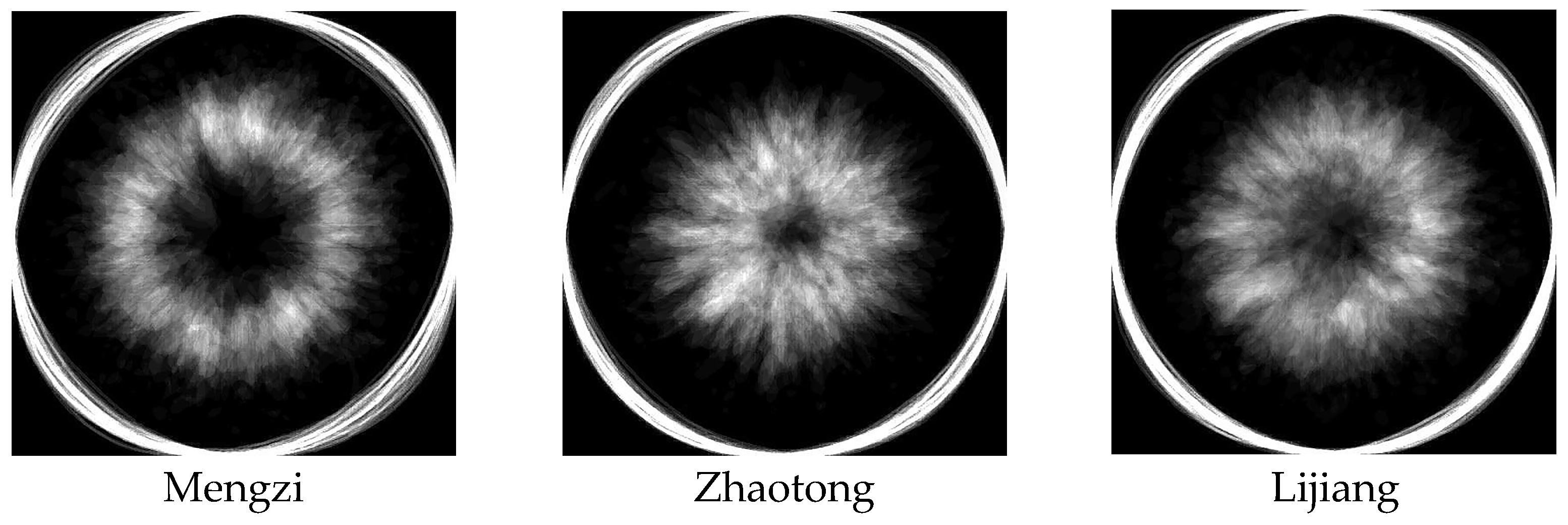

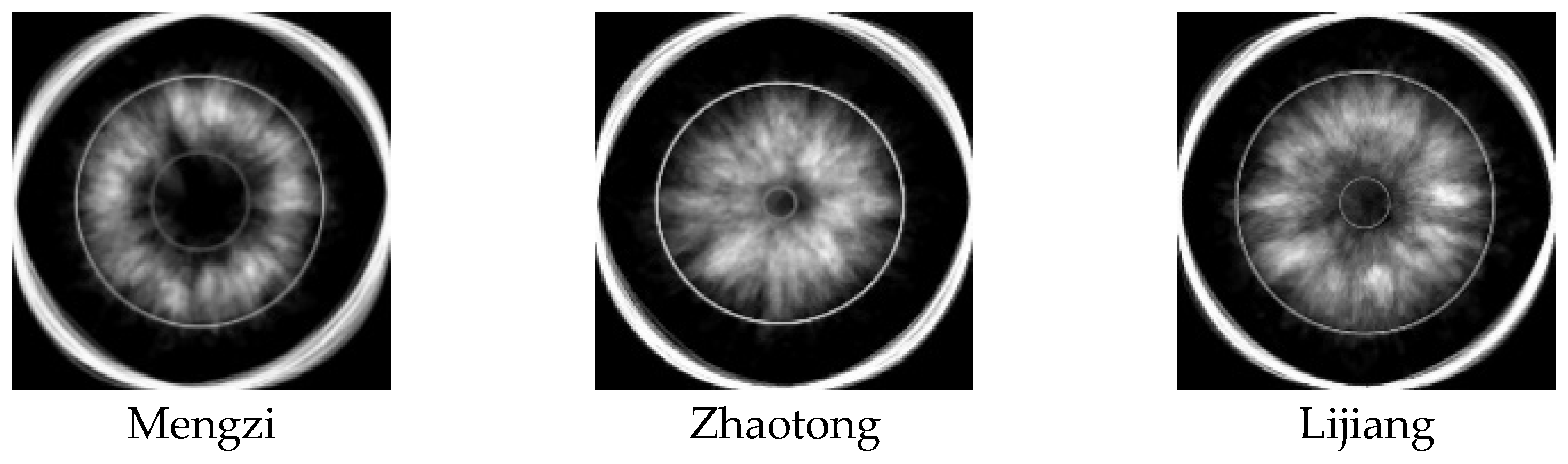

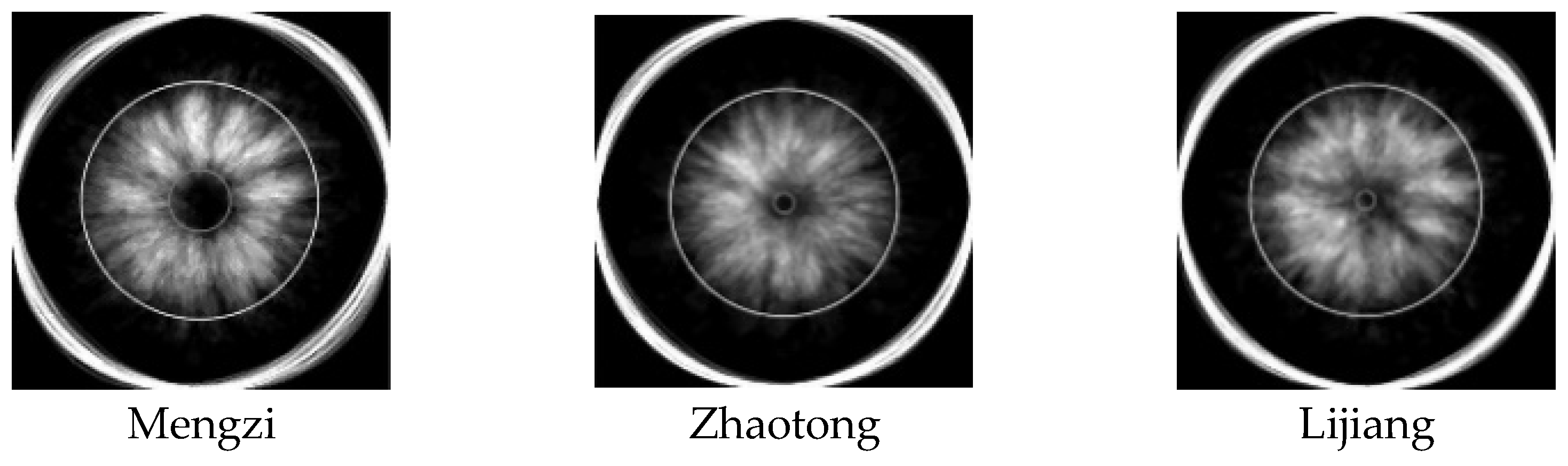

3.2.1. Watercore Region Stacking Results

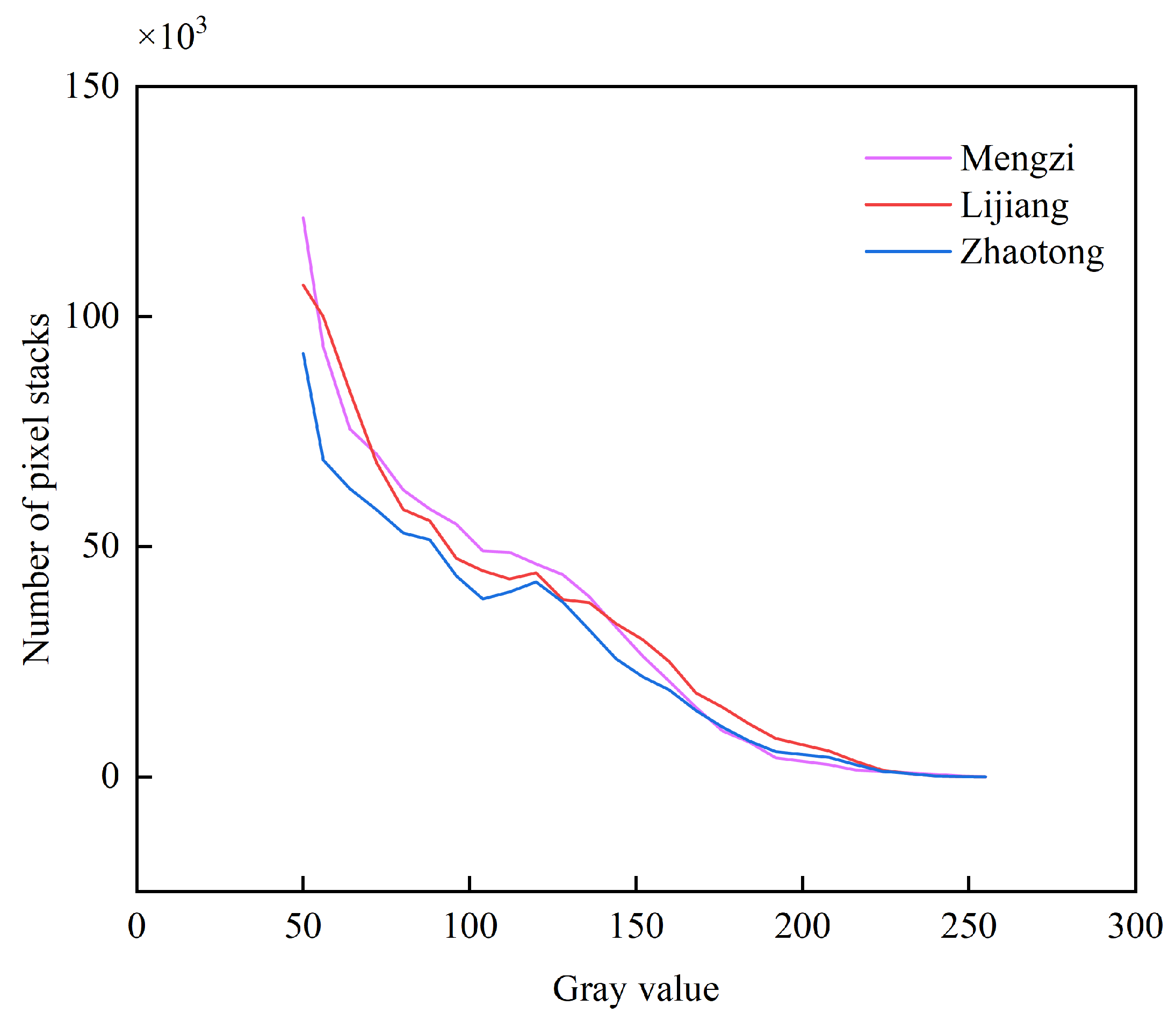

3.2.2. Density Assessment of Watercore Fitting Regions

3.2.3. Assessment of the Area of the Watercore Fitting Region

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Configuration / Version |
|---|---|
| CPU | Intel(R) Core(TM) i7-12650H |
| Memory | 16 GB |
| GPU | RTX 4060 |
| GPU memory | 8 GB |
| Programming language | Python 3.9 |
| CUDA | 11.7 |
| cuDNN | 8.4 |
| paddlepaddle-gpu | 2.5.1 |
| PaddleSeg | 2.8.0 |

| Model | Optimizer type | Momentum | Weight decay | LR scheduler type | Learning rate | Loss function |
|---|---|---|---|---|---|---|
| OCRNet | SGD | 0.9 | 4.0 × 10⁻⁵ | PolynomialDecay | 0.01 | CrossEntropyLoss |
| EMANet | | | | | | |
| DNLNet | | | | | | |
| BiSeNet | | | | | | |
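To make the learning-rate setting in the table above concrete, the following is a minimal sketch of a polynomial decay schedule starting from the listed base rate of 0.01. The table does not state the decay exponent, the final learning rate, or the number of training iterations, so `power=0.9`, `end_lr=0.0`, and `decay_steps=10000` are assumptions chosen only for illustration, and `polynomial_decay` is a hypothetical helper rather than the PaddleSeg implementation.

```python
def polynomial_decay(base_lr, step, decay_steps, end_lr=0.0, power=0.9):
    """Return the learning rate at a given step under polynomial decay.

    lr = (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr

    power, end_lr, and decay_steps are illustrative assumptions; only
    base_lr = 0.01 comes from the hyperparameter table.
    """
    if decay_steps <= 0:
        raise ValueError("decay_steps must be positive")
    # Clamp so the rate stays at end_lr once decay_steps is reached.
    step = min(max(step, 0), decay_steps)
    return (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr


if __name__ == "__main__":
    # Print the schedule at a few points of a hypothetical 10000-step run.
    for s in (0, 2500, 5000, 7500, 10000):
        print(s, polynomial_decay(0.01, s, decay_steps=10000))
```

With these assumed values the rate starts at 0.01 and falls smoothly to 0 at the final step. A larger `power` front-loads the decay, while `power=1.0` gives a straight line.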

| Model | mIoU/% | Acc/% | Dice Coefficient/% |
|---|---|---|---|
| OCRNet | 90.14 | 99.33 | 94.57 |
| Deeplabv3P | 88.48 | 99.20 | 93.48 |
| FCN | 87.68 | 99.16 | 93.04 |
| BiSeNet | 86.45 | 99.10 | 92.24 |
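The three indicators reported in the table above (mIoU, Acc, and Dice coefficient) can all be derived from a pixel-level confusion matrix. The sketch below shows one common way to compute them, assuming that Acc is overall pixel accuracy and that mIoU and Dice are averaged over classes; the paper's exact definitions are not reproduced here, and the function names `confusion_matrix` and `segmentation_metrics` are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Count pixels: rows are ground-truth classes, columns are predictions."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm):
    """Return class-averaged IoU and Dice plus overall pixel accuracy."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(cm[i][i] for i in range(n))
    ious, dices = [], []
    for i in range(n):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                        # class i pixels that were missed
        fp = sum(cm[j][i] for j in range(n)) - tp   # pixels wrongly labelled as i
        if tp + fp + fn == 0:
            continue  # class absent from both label and prediction
        ious.append(tp / (tp + fp + fn))
        dices.append(2 * tp / (2 * tp + fp + fn))
    return {
        "mIoU": sum(ious) / len(ious),
        "Acc": correct / total,
        "Dice": sum(dices) / len(dices),
    }


if __name__ == "__main__":
    # Toy example with flattened masks: 0 = background, 1 = watercore.
    y_true = [0, 0, 0, 0, 1, 1, 1, 1]
    y_pred = [0, 0, 0, 1, 1, 1, 1, 0]
    print(segmentation_metrics(confusion_matrix(y_true, y_pred, 2)))
```

In the toy example each class has three correct pixels, one false positive, and one false negative, so the per-class IoU is 3/5 and the per-class Dice is 6/8. For a single class, Dice = 2·IoU / (1 + IoU), which is why Dice is always at least as large as IoU.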

| Model | mIoU/% | Acc/% | Dice Coefficient/% |
|---|---|---|---|
| OCRNet | 86.35 | 98.35 | 92.22 |
| Deeplabv3P | 83.22 | 97.83 | 90.15 |
| FCN | 83.34 | 97.93 | 90.23 |
| BiSeNet | 76.11 | 96.78 | 84.87 |

| Model | mIoU/% | Acc/% | Dice Coefficient/% |
|---|---|---|---|
| OCRNet_HRNet-W48 | 89.94 | 99.37 | 94.35 |
| OCRNet_HRNet-W18 | 89.45 | 99.32 | 94.26 |
| Deeplabv3P_HRNet-W48 | 88.71 | 99.29 | 93.69 |
| Deeplabv3P_HRNet-W18 | 88.53 | 99.21 | 93.52 |
| FCN_HRNet-W48 | 87.68 | 99.25 | 93.30 |
| FCN_HRNet-W18 | 85.89 | 99.12 | 93.04 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, Y.; Tan, Y.; Zhang, W.; Yin, Z.; Zhao, C.; Guo, P.; Wu, H.; Hu, D. An OCRNet-Based Method for Assessing Apple Watercore in Cold and Cool Regions of Yunnan Province. Agriculture 2025, 15, 1040. https://doi.org/10.3390/agriculture15101040
