Abstract

In complex orchard environments, rapidly and accurately identifying pomegranate fruits at various growth stages remains a significant challenge. We therefore propose YOLO-MSNet, a lightweight pomegranate fruit detection model built on YOLOv11. First, the C3k2_UIB module is designed by integrating the Universal Inverted Bottleneck (UIB) structure into the C3k2 module, and the convolutional modules in the backbone are replaced by AKConv units, which reduces the overall complexity of the model. Second, a novel parallel cascaded attention module, PSSAM, is designed to improve the model’s ability to resolve small fruit details against complex orchard backgrounds. Third, a Dynamic Adaptive Bidirectional Feature Pyramid Network (DA-BiFPN) is designed that employs adaptive sampling strategies to optimize multi-scale feature fusion. The C3k2_UIB module complements this by reinforcing feature interactions and information aggregation across scales, thereby enhancing the model’s perception of multi-scale objects. In addition, integrating VFLoss and ShapeIoU refines the model’s ability to distinguish between overlapping and differently sized targets. Finally, comparative evaluations against state-of-the-art models on a publicly available pomegranate fruit dataset show that, relative to the YOLOv11n baseline, YOLO-MSNet achieves a 1.7% increase in mAP50, a 21.5% reduction in parameter count, and a 21.8% decrease in model size. Further comparisons with mainstream YOLO models confirm that YOLO-MSNet achieves higher detection accuracy despite being significantly lighter, making it well suited to deployment on resource-constrained edge devices for fruit detection in complex orchard environments.

1. Introduction

Pomegranate (Punica granatum L.) is an economically significant diploid crop (2n = 16) of the Lythraceae family which is cultivated on roughly 550,000 ha worldwide and yields about 6.5 million tons annually [1]. Beyond its economic value, pomegranate cultivation bolsters nutritional security, stabilizes rural livelihoods, and enhances climate resilience in water-limited regions. The fruit passes through distinct growth stages. By monitoring these growth stages in real time, farmers can optimize their fertilization, irrigation, and harvesting schedules. Additionally, continuous phenological data feed pest and disease control models and improve yield forecasts, supporting more precise farm management. Consequently, developing a robust, all-weather fruit detection system for real-time fruit monitoring has become central to modern precision agriculture.

In fruit detection tasks, traditional methods primarily rely on the identification of color and textural features. For instance, Bai et al. [2] accurately classified tomatoes by extracting and combining shape, texture, and color attributes. Yu et al. [3] employed a random forest model based on color and texture to identify lychees. Similarly, Wan et al. [4] analyzed various pineapple characteristics to identify the pineapple crown. However, these classical methods degrade under variable lighting conditions, occlusions, and overlapping foliage, limiting their accuracy and robustness in real-world agricultural settings [5].

Compared to conventional machine learning approaches, deep learning-based object detection has dramatically increased detection accuracy in agricultural scenarios, effectively addressing the limitations inherent in traditional detection methodologies [6]. Two-stage detectors, such as the R-CNN family [7], first generate region proposals and then apply a classification head alongside a bounding box regressor to accurately locate and identify objects. However, these approaches often result in slow inference, hindering their deployment in resource-constrained edge devices for fruit detection [8]. In contrast, single-stage detection algorithms eliminate the separate region proposal step, resulting in substantially faster detection speeds. The YOLO (You Only Look Once) family has emerged as a leading paradigm, as it uses a single convolutional network to predict object classes and bounding boxes in one unified pass [9]. Its end-to-end real-time design makes it particularly well suited for intelligent fruit-picking systems. Reference [10] introduced a YOLOv4-tiny detection framework optimized for Camellia oleifera fruits, delivering precise feature extraction and achieving a detection accuracy of 92.1%. Nan et al. [11] introduced WGB-YOLO, an enhanced YOLOv3-based architecture that counters lighting variability and branch occlusions in dragon fruit detection, achieving 86.0% accuracy in orchard conditions.

In addition, Badeka et al. [12] created an improved grape detection algorithm by integrating lightweight network architectures and attention mechanisms into YOLOv7, significantly reducing the model’s parameters without compromising its detection performance. Wu et al. [13] proposed a lightweight D-YOLO model for strawberry disease detection. The model integrates multiple attention mechanisms, achieving up to a 90.5% mAP on a maize disease test set while significantly reducing computational requirements. Liang et al. [14] proposed CPPL, a novel two-stage model for citrus picking-point localization. The model employs KD-YOLOP for rapid fruit detection and leverages RG-YOLO-seg for efficient branch segmentation. CPPL combines knowledge distillation, model pruning, and multi-scale feature fusion to deliver precise, real-time localization of citrus picking points, surpassing existing methods in terms of both detection speed and robustness. Liang et al. [15] also introduced YOLO-SBWL, a real-time framework for pre-sowing corn seed sorting. It combines a parameter-free attention module with a weighted bidirectional feature pyramid to classify seed varieties and detect surface defects simultaneously. The model attains a 97.21% mAP while markedly reducing both its computational cost and model footprint. Ilyas et al. proposed an encoder–decoder adaptive convolutional network comprising three modules for classifying three types of strawberry and a fourth for overgrown or diseased fruit. By dynamically adjusting its receptive fields and feature pathways, the model detects fruits at multiple scales with high accuracy, although it prioritizes category separation over instance-level differentiation.

In summary, deep learning techniques have markedly improved fruit detection for apples, grapes, and strawberries. Yet specialized detection algorithms for pomegranates remain relatively scarce. In complex orchard environments, lightweight pomegranate detection must overcome three key hurdles. First, high-accuracy models demand extensive training time and computing power, hindering their rapid deployment in resource-limited devices. Second, the detection of pomegranate fruits during their early stages, such as budding and flowering, is made difficult by their tiny features (<15 pixels), occlusions, and overlapping foliage, which complicate the process of distinguishing between similar fruits. Third, the class imbalance in horticultural datasets further degrades model performance. To tackle these issues, we introduce a YOLOv11n-based lightweight model customized for all pomegranate growth stages and embed it within a practical deployment framework. The main contributions of this article are as follows:

1. We propose a lightweight detection model named YOLO-MSNet to achieve the real-time and efficient detection of pomegranate fruits throughout their various growth stages. Inspired by the Universal Inverted Bottleneck (UIB) design, the C3k2 module was improved to create the new C3k2_UIB module, and the traditional convolutional modules in the backbone were replaced with AKConv units, whose kernels can take an arbitrary number of parameters and arbitrary sampling shapes. This design improves the model’s ability to detect targets of different sizes while reducing its parameter count and complexity, which helps it run well on mobile and edge-computing devices.

2. We design an innovative parallel-cascaded attention module, known as the Parallel Channel Squeeze and Spatial Attention Module (PSSAM), specifically for aggregating multi-scale features while simultaneously reducing model parameters. PSSAM notably strengthens the robustness of feature extraction, particularly in challenging scenarios involving fruit occlusion, complex environmental backgrounds, and variations in fruit scales.

3. We present a dynamically adaptive collaborative architecture (DA-BiFPN). This architecture employs the Dynamic Upsampling (Dy-sample) technique, intelligently adjusting sampling strategies according to the input feature characteristics as an effective alternative to traditional bilinear interpolation. Additionally, the integration of C3k2_UIB modules significantly strengthens cross-layer feature interactions and fusion, thereby enhancing the extraction and representation of essential features.

The structure of this paper is organized as follows: The Introduction provides a background for this research and reviews the current state of the relevant literature. The Materials and Methods presents the theoretical foundations supporting this research. The proposed YOLO-MSNet is elaborated in detail, including its architecture and underlying principles. The Experimental Results and Analysis presents the evaluation metrics and experimental configurations used and comparative analyses with alternative models. Finally, the Conclusion summarizes our key findings and outlines potential directions for subsequent research.

2. Materials and Methods

2.1. The Principle of YOLO v11n

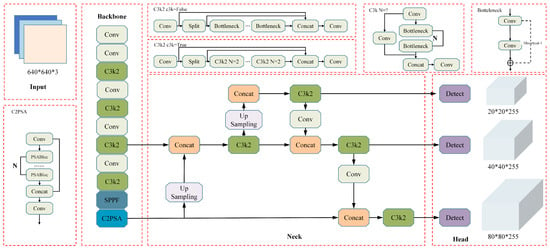

YOLOv11 is an object detection algorithm released by Ultralytics in September 2024 [16]. Compared to its predecessors, it achieves substantial improvements in both accuracy and inference speed. Its network architecture, depicted in Figure 1, consists of a backbone, a neck, and a detection head. The backbone is an optimized CSPDarknet53 network that hierarchically extracts multi-scale representations through a sequence of five downsampling stages (denoted as P1–P5). This design facilitates progressive feature abstraction while preserving fine-grained spatial information across different resolution levels. The original C2f modules are replaced by refined C3k2 modules to reinforce feature learning. The backbone also uses CBS modules, which combine convolution, batch normalization, and the SiLU activation function, to enhance feature representation. A Spatial Pyramid Pooling Fast (SPPF) module then pools the feature maps at fixed scales, boosting feature diversity, and a C2PSA module with Pyramid Slice Attention further augments the model’s extraction capacity. The neck employs a PAN-FPN design with an added bottom-up pathway to merge shallow positional cues and deep semantic features, overcoming the localization gaps in conventional FPN structures. The detection head features a decoupled design, with separate branches dedicated to object classification and bounding box regression. Classification uses Binary Cross-Entropy (BCE) loss, while regression combines Distribution Focal Loss (DFL) with Complete IoU (CIoU) loss. Two depthwise convolution (DWConv) layers further reduce the computational overhead and parameter count, boosting efficiency without sacrificing accuracy.

Figure 1.

Network structure of detection model based on YOLO v11.

In summary, YOLOv11 achieves remarkable improvements in real-time object detection performance through refined network architectures and enhanced fusion mechanisms, underscoring its profound potential across diverse computer vision applications.

2.2. AKConv

In the task of pomegranate fruit detection, complex backgrounds, multi-stage fruit morphologies, and overlapping occlusions between fruits and foliage impose stringent demands on feature extraction. YOLOv11’s standard modules lead to substantial parameter redundancy and computational overhead and lack the sensitivity to extract fine-grained local features. Consequently, they underperform in extracting the multi-scale details of pomegranate fruits in complex environments.

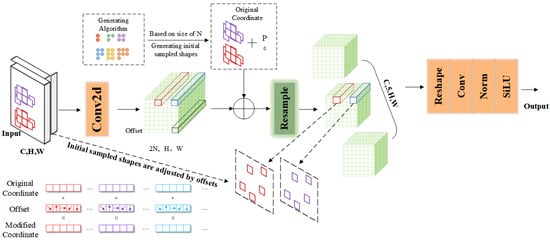

To address these shortcomings, this study introduces Adaptive Kernel Convolution (AKConv) [17] as a replacement for traditional convolution modules. As depicted in Figure 2, AKConv establishes an initial convolutional sampling pattern and applies a Conv2D layer to the input. Learned offsets then adaptively reshape the sampling grid, after which the feature map is resampled and reshaped to produce the final output. This mechanism gives AKConv kernels with an arbitrary number of parameters and arbitrary geometric form, removing the restriction to the fixed local windows and static sampling used in traditional convolutions. As a result, it captures the local features of pomegranate fruits across varied environments more effectively while reducing both model complexity and computational overhead.

Figure 2.

AKConv structure diagram.
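The sampling idea can be illustrated with a minimal Python sketch. It lays out an arbitrary number of kernel sample points on a near-square grid, shifts them by per-point offsets, and counts kernel weights to show why fewer sample points mean fewer parameters. The function names, the grid layout rule, and the channel sizes are illustrative assumptions rather than details taken from this paper; in the actual module the offsets are learned, not supplied by hand.

```python
import math


def initial_sampling_coords(num_points):
    """Lay out `num_points` sample positions row by row on a near-square grid."""
    base = round(math.sqrt(num_points))      # points per full row
    full_rows = num_points // base
    remainder = num_points % base
    coords = [(r, c) for r in range(full_rows) for c in range(base)]
    # A partial last row holds the points that do not fill a complete row.
    coords += [(full_rows, c) for c in range(remainder)]
    return coords


def shifted_coords(coords, offsets):
    """Apply one (d_row, d_col) offset to each sample position."""
    return [(r + dr, c + dc) for (r, c), (dr, dc) in zip(coords, offsets)]


def kernel_weight_count(num_points, c_in, c_out):
    """Bias-free weight count of a kernel with `num_points` sample points."""
    return num_points * c_in * c_out
```

With five sample points instead of the nine of a 3 × 3 kernel, the kernel weight count drops to 5/9 of the original for the same channel widths.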

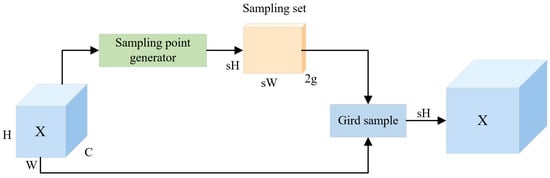

2.3. Dy-Sample

When feature maps are enlarged, traditional upsampling methods such as bilinear interpolation and transposed convolution often lose high-frequency details and texture information, which makes small or densely grouped pomegranate fruits harder to detect. To overcome this limitation, we introduce a dynamic upsampler termed Dy-sample [18], which is illustrated in Figure 3. Dy-sample relies on point-wise resampling, avoiding the computationally expensive dynamic convolutions and supplementary subnetworks otherwise required to generate dynamic kernels. This approach improves the model’s processing speed and versatility while keeping its computational cost low.

Figure 3.

Dy-sample structure diagram.
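Point-wise resampling can be sketched in a few lines of Python. The sketch below upsamples a single-channel feature map by sampling it bilinearly at a regular grid position plus a per-pixel offset. It is a simplified illustration under stated assumptions: the offsets are passed in directly (in a real upsampler they would be predicted from the features), and the names `bilinear` and `dynamic_upsample` are hypothetical.

```python
def bilinear(feat, y, x):
    """Bilinearly sample a 2D list `feat` at a fractional position, clamped to the border."""
    h, w = len(feat), len(feat[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feat[y0][x0] * (1 - dx) + feat[y0][x1] * dx
    bottom = feat[y1][x0] * (1 - dx) + feat[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def dynamic_upsample(feat, offsets, scale=2):
    """Upsample by `scale`; each output pixel samples at its base position plus an offset.

    `offsets[i][j]` is a (dy, dx) pair in input-pixel units for output pixel (i, j).
    """
    h, w = len(feat), len(feat[0])
    out = []
    for i in range(h * scale):
        row = []
        for j in range(w * scale):
            # Centre of the output pixel expressed in input coordinates.
            base_y = (i + 0.5) / scale - 0.5
            base_x = (j + 0.5) / scale - 0.5
            dy, dx = offsets[i][j]
            row.append(bilinear(feat, base_y + dy, base_x + dx))
        out.append(row)
    return out
```

With all offsets set to zero the function reduces to plain bilinear upsampling; nonzero offsets let each output pixel look at a different location, which is the property a content-aware upsampler exploits.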

2.4. Loss Function Design

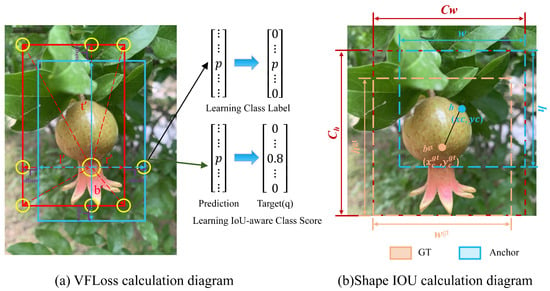

YOLOv11 employs BCE for classification, yet this loss does not align classification confidence with bounding box accuracy. Consequently, detections with a high IoU may be mistakenly removed by non-maximum suppression if their classification scores are insufficient. As depicted in Figure 4a, the Varifocal Loss (VFLoss [19]) introduces an asymmetric weighting scheme: nine candidate anchors are arranged equidistantly around each ground-truth box, with the central anchor labeled as positive and the other eight as negative. Traditional classification loss merely predicts whether a box belongs to class p. In contrast, the IoU-aware branch of VFLoss introduces a scalar that quantifies the quality of the overlap between each anchor and its ground-truth box. This mechanism directs the model’s attention to hard-to-classify positives, mitigating class imbalance and improving the effectiveness of subsequent non-maximum suppression. The loss is defined as follows:

Figure 4.

Loss function calculation diagram.

$$\mathrm{VFL}(p, q) = \begin{cases} -q\,\bigl(q \log p + (1 - q)\log(1 - p)\bigr), & q > 0, \\ -\alpha\, p^{\gamma} \log(1 - p), & q = 0. \end{cases} \tag{1}$$

Here, $p$ represents the predicted classification score for the bounding box, $q$ is set to the actual IoU for positive samples and to zero for negative samples, and $\alpha$ and $\gamma$ denote adjustable coefficients. VFLoss’s IoU-aware classification score fuses object confidence with localization accuracy, enabling the model to prioritize difficult positive samples and thereby boost its detection performance.
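As a concrete illustration of the asymmetric weighting, the following Python sketch evaluates the varifocal loss for a single prediction. The default values `alpha=0.75` and `gamma=2.0` are assumptions borrowed from common practice, since the text does not state the values used, and the function name is illustrative.

```python
import math


def varifocal_loss(p, q, alpha=0.75, gamma=2.0, eps=1e-12):
    """Varifocal loss for one prediction.

    p: predicted IoU-aware classification score in (0, 1).
    q: target score, the IoU with the ground truth for positives and 0 for negatives.
    """
    p = min(max(p, eps), 1.0 - eps)  # keep the logarithms finite
    if q > 0:
        # Positive sample: binary cross-entropy against q, weighted by q itself.
        return -q * (q * math.log(p) + (1.0 - q) * math.log(1.0 - p))
    # Negative sample: down-weighted by alpha * p**gamma, so easy negatives contribute little.
    return -alpha * (p ** gamma) * math.log(1.0 - p)
```

For a positive sample with `q = 0.9`, a prediction of `p = 0.3` is penalized more than `p = 0.9`; for a negative sample, a confident false positive (`p = 0.9`) costs far more than a low score (`p = 0.1`).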

Although YOLOv11’s CIoU accounts for the center point and diagonal distances between the ground-truth box and an anchor, it overlooks intrinsic anchor attributes, such as shape and orientation, and their impact on detection. To remedy this, we introduce the ShapeIoU loss, which incorporates each box’s own shape and size information into the IoU loss, thus improving bounding box regression accuracy. Its mathematical form appears in Equation (2).

Here, $L$ denotes the overall loss; IoU is the intersection over union term (Equation (2)); $L_d$ is the shape distance loss (Equation (4)); and the remaining term is the shape value loss (Equation (6)).

Here, $B$ and $B^{gt}$ represent the predicted and ground-truth box regions, respectively.

The coefficients $ww$ and $hh$ weight the horizontal and vertical components, while $w^{gt}$ and $h^{gt}$ are the ground-truth box’s width and height. The scale factors tied to the dataset’s object scale distribution further refine the loss [20].

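Here, $(x_c^{gt}, y_c^{gt})$ and $(x_c, y_c)$ denote the centers of the ground-truth and predicted boxes, and $c$ is the diagonal length of the smallest box enclosing both of them, which normalizes the squared center offsets.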

As depicted in Figure 4b, the ShapeIoU loss splits the distance penalty into separate x and y components and applies distinct horizontal and vertical weights to each part. This design resolves the ambiguity in CIoU’s distance definition, enabling the model to more effectively capture feature correlations across different pomegranate growth stages and to converge swiftly to the optimum, thereby accelerating training and elevating its detection performance and precision.
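The split of the distance penalty into weighted x and y components can be made concrete with a short Python sketch of a Shape-IoU-style loss. It follows the commonly published form of the loss [20] as an assumption: the normalizer `c` is taken as the diagonal of the smallest box enclosing both boxes, the shape term is weighted by 0.5, and the defaults `scale=0.0` and `theta=4.0` are illustrative choices, not values reported in this paper.

```python
import math


def shape_iou_loss(pred, gt, scale=0.0, theta=4.0, eps=1e-9):
    """Shape-IoU-style loss for boxes given as (x_center, y_center, width, height)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt
    px1, px2, py1, py2 = px - pw / 2, px + pw / 2, py - ph / 2, py + ph / 2
    gx1, gx2, gy1, gy2 = gx - gw / 2, gx + gw / 2, gy - gh / 2, gy + gh / 2

    # Plain IoU of the two boxes.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Shape weights derived from the ground-truth width and height.
    ww = 2 * gw ** scale / (gw ** scale + gh ** scale)
    hh = 2 * gh ** scale / (gw ** scale + gh ** scale)

    # Squared diagonal of the smallest enclosing box (assumed normalizer c^2).
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2 + eps

    # Distance penalty with separate weights for the x and y components.
    distance = hh * (px - gx) ** 2 / c2 + ww * (py - gy) ** 2 / c2

    # Shape penalty on relative width and height mismatch.
    omega_w = hh * abs(pw - gw) / max(pw, gw)
    omega_h = ww * abs(ph - gh) / max(ph, gh)
    shape = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + distance + 0.5 * shape
```

Identical boxes give a loss of approximately zero, and a pure horizontal shift contributes only through the IoU term and the x component of the distance penalty.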

3. The Proposed YOLO-MSNet

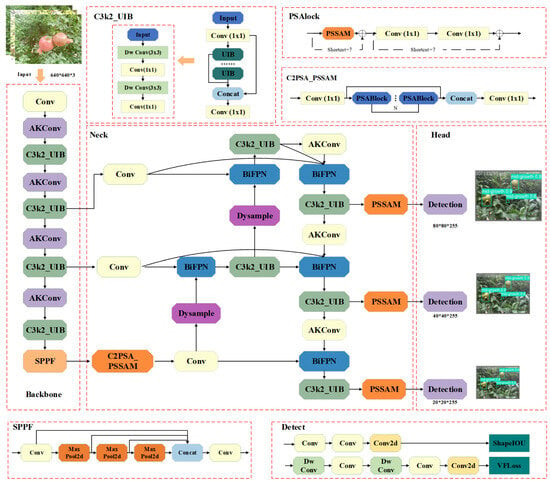

To overcome parameter redundancy and slow inference speeds in stage-wise pomegranate detection, this study proposes an enhanced YOLOv11-based model, called YOLO-MSNet, whose refined architecture is illustrated in Figure 5.

Figure 5.

Network structure diagram of detection model called YOLO-MSNet.

The principal enhancements we made to the model are as follows: (1) using AKConv modules to replace conventional convolutions within the backbone and further optimizing the C3k2 module through integration with the Universal Inverted Bottleneck (UIB) structure, significantly reducing model complexity; (2) introducing a novel module, PSSAM, to improve the C2PSA component and strategically adding an attention mechanism at the interface between the neck and head networks; (3) proposing an optimized Dynamic Adaptive Bidirectional Feature Pyramid Network (DA-BiFPN) architecture for the neck region, effectively enhancing the model’s feature recognition and fusion capabilities for multi-scale and overlapping fruits, thereby substantially boosting its detection precision.

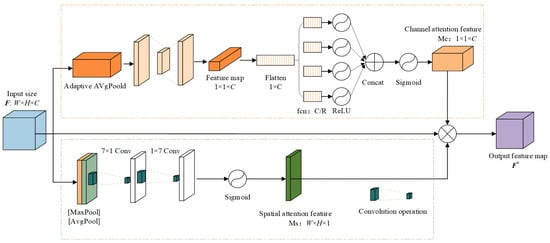

3.1. PSSAM

Pomegranate fruits exhibit considerable variations in their morphological features, texture, and coloration across their developmental stages. Detection is further complicated by intricate orchard backgrounds, leaf–fruit occlusions, and overlapping objects, all of which heighten the complexity of accurate object perception. Traditional convolutional neural networks (CNNs) typically apply uniform processing to entire regions, failing to capture nuanced local details or to respond adaptively to these environmental factors, which compromises their detection accuracy. Attention mechanisms, however, can concentrate on the critical regions specific to each growth stage, enhancing target features even amidst complex, dynamically changing conditions.

To enhance the model’s capacity to capture intricate local details and integrate channel information, we designed an attention module, the PSSAM, which is depicted in Figure 6. Inspired by the concept of parallel cascading, PSSAM’s channel attention branch employs a multi-path strategy [21]: following adaptive average pooling, several parallel fully connected layers execute squeeze-and-excitation operations, and subsequent feature scaling sharpens representation granularity while integrating global context. Concurrently, the spatial branch concatenates the average and max pooling outputs and employs decomposed convolutions (7 × 1 and 1 × 7) to extract horizontal and vertical local spatial features, with a subsequent Sigmoid activation generating spatial attention weights. Finally, the channel and spatial attention outputs are fused using a preset ratio (for instance, 0.7:0.3) to achieve fine-grained modulation of the input features.

Figure 6.

PSSAM network structure diagram.

By employing PSSAM, the network not only makes use of multi-scale details but also substantially strengthens its perception of local details, thus effectively enhancing its detection accuracy while maintaining its lightweight nature.
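The data flow of the two branches and their fusion can be sketched in plain Python on a small C × H × W feature map. This is only a structural sketch under loud assumptions: the learned parts (the parallel fully connected branches and the 7 × 1 and 1 × 7 convolutions) are replaced by parameter-free placeholders, the way the two attention outputs are combined with the 0.7:0.3 ratio is one plausible reading of the text, and all function names are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_weights(x):
    """Global average pooling per channel, then a sigmoid.

    Placeholder for the parallel squeeze-and-excitation branches, which are learned.
    """
    return [sigmoid(sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))) for ch in x]


def spatial_weights(x):
    """Channel-wise max and mean maps (the 2 x H x W statistics), then a sigmoid.

    Placeholder for the learned 7x1 and 1x7 convolutions: the two maps are simply averaged.
    """
    c, h, w = len(x), len(x[0]), len(x[0][0])
    max_map = [[max(x[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    mean_map = [[sum(x[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    return [[sigmoid((max_map[i][j] + mean_map[i][j]) / 2) for j in range(w)] for i in range(h)]


def pssam_sketch(x, ratio=(0.7, 0.3)):
    """Modulate x with a weighted mix of channel and spatial attention (assumed fusion rule)."""
    a, b = ratio
    cw, sw = channel_weights(x), spatial_weights(x)
    return [
        [[x[k][i][j] * (a * cw[k] + b * sw[i][j]) for j in range(len(x[0][0]))]
         for i in range(len(x[0]))]
        for k in range(len(x))
    ]
```

The output has the same shape as the input, so a module of this kind can be dropped between existing layers without changing the surrounding tensor dimensions.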

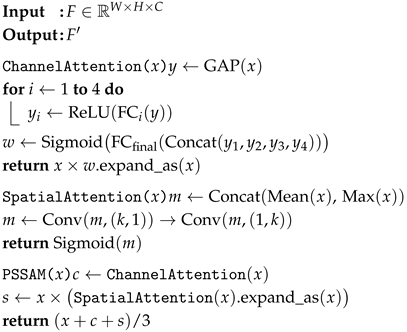

The pseudo-code of the algorithm is shown as Algorithm 1.

Algorithm 1: Pseudo-code of the PSSAM algorithm.

The enhanced functionality of PSSAM stems from its parallel channel attention and spatial attention mechanisms, and its overall formula is as follows:

Regarding the channel attention mechanism, the feature maps undergo global average pooling to generate a channel-wise descriptor vector. This vector is then compressed by a dense layer; once the outputs of the multiple branches are merged, it is restored to its original dimensions via a second dense layer. Channel-wise scaling factors are then applied and added to the input to yield the final channel attention weights, as shown in Equation (8).

In Equation (8), the squeeze operator denotes the compression operation applied after aggregation; the excitation operator denotes the step in which the descriptor is reconstructed via fully connected layers and subjected to channel-wise scaling; and the remaining operator encapsulates input-modifying procedures such as batch normalization and dropout.

For the spatial attention mechanism, we first apply both max and average pooling across the channels of the feature map to produce a 2 × W × H representation of its spatial statistics. Next, we use factorized convolutions (7 × 1 and 1 × 7) to extract horizontal and vertical contexts. The spatial attention weights are then generated via the following Sigmoid activation function:

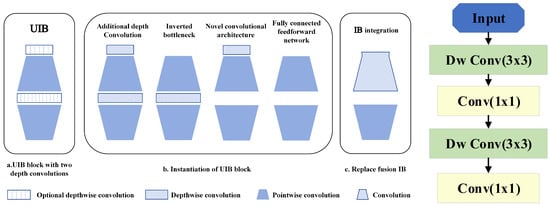

3.2. C3k2_UIB

In the context of pomegranate fruit detection, the original C3k2 module in YOLOv11 exhibits several limitations, including its high computational complexity, redundant feature representations, and insufficient ability to capture localized features. These shortcomings hinder the accurate extraction of multi-scale fruit characteristics, particularly under complex and unstructured environmental conditions. To mitigate these issues, this study introduces the Universal Inverted Bottleneck (UIB) module, derived from MobileNetV4, to enhance the C3k2 architecture, resulting in the proposed C3k2_UIB module. This refined structure integrates two optional depthwise convolutions, which together improve the module’s flexibility and efficiency in feature extraction. Specifically, the first depthwise convolution, located prior to the expansion layer, facilitates the early-stage processing of input features, thereby improving the capture of low-level spatial details. The second depthwise convolution, inserted between the expansion and projection layers, further augments the network’s capacity to extract high-level semantic information. Together, these enhancements strengthen the model’s ability to recognize pomegranate fruit features under visually complex conditions. The architectural details of the C3k2_UIB module are depicted in Figure 7.

Figure 7.

C3k2_UIB structure diagram.
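To see why the two optional depthwise convolutions are cheap, the weight count of a UIB-style block can be tallied directly. The sketch below is a back-of-the-envelope calculation, not the authors’ implementation: it ignores biases and normalization layers, and the expansion ratio of 4, the 3 × 3 depthwise kernel, and the 64-channel example are assumed values.

```python
def conv_weights(c_in, c_out, k, groups=1):
    """Bias-free weight count of a k x k convolution with the given number of groups."""
    return (c_in // groups) * c_out * k * k


def uib_weights(channels, expand_ratio=4, k=3, start_dw=True, middle_dw=True):
    """Weight count of: [optional DW] -> 1x1 expansion -> [optional DW] -> 1x1 projection."""
    hidden = channels * expand_ratio
    total = 0
    if start_dw:
        # Depthwise convolution before expansion: one k x k filter per input channel.
        total += conv_weights(channels, channels, k, groups=channels)
    total += conv_weights(channels, hidden, 1)       # pointwise expansion
    if middle_dw:
        # Depthwise convolution between expansion and projection.
        total += conv_weights(hidden, hidden, k, groups=hidden)
    total += conv_weights(hidden, channels, 1)       # pointwise projection
    return total
```

For 64 channels, the block with both depthwise layers has 35,648 weights under these assumptions, of which the two depthwise layers contribute only 2,880; a single standard 3 × 3 convolution with 64 input and output channels already needs 36,864.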

3.3. The Improved BiFPN

Pomegranate fruits undergo complex morphological shifts during their growth, evolving from tight, small clusters in the initial stages of development to larger, more dispersed forms at maturity. Consequently, capturing both global semantic context and intricate local details simultaneously along multiple feature extraction pathways becomes essential to meet the demands of multi-scale target detection. YOLO v11’s PAN-FPN relies on bilinear upsampling with a fixed interpolation weight (β = 0.5), preventing the adaptive scaling of feature maps. Consequently, edges become blurred and fine fruit details are lost in complex scenes. Moreover, the traditional stacked convolution strategy in the original C3k2 module frequently introduces redundant feature information, negatively impacting training efficiency and detection accuracy.

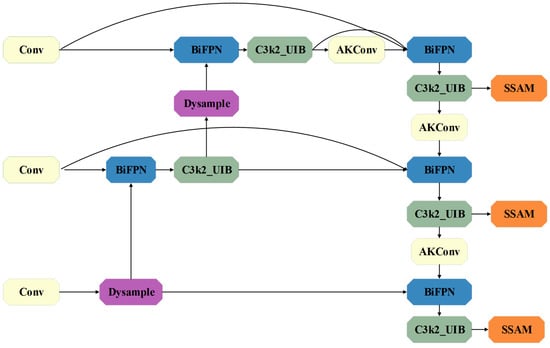

To address the aforementioned limitations, this study proposes an enhanced Dynamic Adaptive Bidirectional Feature Pyramid Network (DA-BiFPN) module, which is illustrated in Figure 8. The redesigned architecture substitutes the original neck component with a BiFPN structure, enabling efficient bidirectional feature fusion through simultaneous top-down and bottom-up information propagation. Furthermore, we incorporate a Dy-sample module that leverages point-wise resampling to replace costly dynamic convolutions and eliminate auxiliary subnetworks for adaptive kernel generation. This integration not only improves computational efficiency but also effectively suppresses redundant information during multi-scale feature fusion. As a result, the proposed DA-BiFPN module markedly enhances the quality of feature representation and contributes to improved detection accuracy, particularly in scenarios involving complex object distributions and scale variations.

Figure 8.

DA-BiFPN network structure diagram.

These enhancements enable DA-BiFPN to retain BiFPN’s effective multi-scale bidirectional feature fusion while overcoming the inherent drawbacks of traditional upsampling and feature extraction techniques. Consequently, it significantly elevates both the precision and real-time performance of pomegranate fruit detection across all developmental stages.
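Bidirectional fusion in a BiFPN-style neck is usually implemented with learnable, normalized weights per input. The text does not state which fusion variant DA-BiFPN uses, so the following Python sketch shows the widely used fast normalized fusion as an assumption; feature maps are flattened to lists of equal length for brevity, and the function name is illustrative.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse equally sized feature vectors with non-negative, normalized weights.

    features: list of feature vectors (flattened maps of identical length).
    weights: one learnable scalar per input feature.
    """
    # ReLU keeps each weight non-negative so the normalization stays well defined.
    w = [max(0.0, v) for v in weights]
    norm = sum(w) + eps
    length = len(features[0])
    return [sum(wi * f[k] for wi, f in zip(w, features)) / norm for k in range(length)]
```

Because the weights are normalized by their sum instead of a softmax, the fusion stays inexpensive while still letting the network learn how much each scale should contribute.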

3.4. Experimental Environment Description

3.4.1. Datasets

The dataset used in this study comprises publicly accessible images that depict the various developmental stages of pomegranate fruits and have been sourced from online repositories. Representative images from the dataset are presented in Figure 9. This comprehensive collection consists of 5857 meticulously annotated images categorized into five distinct growth stages: budding, flowering, early fruiting, intermediate fruiting, and maturity. The images were partitioned, based on a ratio of 7:1.5:1.5, into training, validation, and testing subsets, respectively. Specifically, 4100 images were allocated to the training set, 585 images were designated for validation, and an additional 585 images were reserved for testing [22].

Figure 9.

Images of pomegranate fruits at different growth stages.

To diversify our dataset, we collected an additional 445 images (1280 × 720 px) of pomegranates: fully ripened fruits photographed under standardized indoor lighting and specimens from successive growth stages in Huili orchards, Sichuan. Every image was precisely annotated in LabelMe with bounding boxes and matching label files. Representative samples of the augmented dataset appear in Figure 10.

Figure 10.

Images from supplementary validation dataset of pomegranate fruits at different growth stages.

To bolster the model’s generalization and guard against overfitting, we augmented the dataset by randomly applying flips, blurring transformations, adaptive brightness adjustments, exposure adjustments, rotational crops, noise perturbations, and black occlusion blocks, expanding the collection to 8000 images. Examples of these augmented images are shown in Figure 11.

Figure 11.

Images after image enhancement.

These images were allocated to the training, validation, and testing sets in a 7:1.5:1.5 ratio, with the supplemental dataset incorporated into the validation set. Consequently, 5600 images were designated for training, 1200 for validation, and 1200 + 445 for testing.
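The split sizes follow directly from the 7:1.5:1.5 ratio applied to the 8000 augmented images. A small Python check, with an illustrative helper name, reproduces the counts:

```python
def split_counts(total, ratios=(7, 1.5, 1.5)):
    """Return (train, validation, test) image counts for the given ratio."""
    unit = total / sum(ratios)
    return tuple(round(unit * r) for r in ratios)


train, val, test = split_counts(8000)
test_with_supplement = test + 445  # the 445 additionally collected images
```

This yields 5600 training, 1200 validation, and 1200 test images, and 1645 test images once the supplementary set is added.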

3.4.2. Experimental Configuration

The experiments were conducted on a Windows 10 (64-bit) operating system. The neural network model was built with Python 3.9 and the PyTorch 2.0.1 framework in the PyCharm 2023.3 (Community Edition) development environment. An NVIDIA GeForce RTX 4080 GPU with 16 GB of memory, accelerated by CUDA 11.8, was employed to ensure computational efficiency. All experiments were performed under identical environmental configurations.

3.4.3. Setting the Experimental Parameters

To ensure the model fully captures features and to prevent potential cross-domain biases arising from pre-trained weights, this study employed a random initialization strategy during training. The input images were uniformly resized to 640 × 640 pixels, and the training procedure spanned a total of 200 epochs, each consisting of 512 iterations, with a batch size of eight images per iteration. For optimization, the AdamW optimizer was used with an initial learning rate of 0.02 and a learning rate decay factor (lrf) of 0.1. We applied a cosine annealing schedule (cos_lr = True) for a smooth learning rate decay, which permits finer parameter tuning in later training phases. To ensure robust results, we report all evaluation metrics as the mean of ten independent runs.
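The learning rate trajectory implied by these settings can be sketched as follows. The sketch assumes that the final learning rate equals the initial rate multiplied by lrf, which is the convention in Ultralytics-style configurations, and it ignores any warm-up phase; the function name is illustrative.

```python
import math


def cosine_lr(epoch, epochs=200, lr0=0.02, lrf=0.1):
    """Cosine-annealed learning rate decaying from lr0 to lr0 * lrf over `epochs`."""
    final = lr0 * lrf
    return final + 0.5 * (lr0 - final) * (1 + math.cos(math.pi * epoch / epochs))
```

Under these assumptions the rate starts at 0.02, passes through 0.011 at epoch 100, and ends at 0.002 at epoch 200, so most of the fine adjustment happens in the flat tail of the cosine curve.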

3.4.4. Evaluation Metrics and Visualization Techniques

The evaluation metrics selected for this study were precision (P), recall (R), parameters (Params), mean average precision (mAP), floating-point operations (FLOPs), and inference time (inf). The mAP metric, combining both precision and recall, is widely recognized as a comprehensive indicator in performance assessments. Specifically, mAP_0.5 measures the mean average precision across all classes at an IoU threshold of 0.5, whereas mAP_0.5:0.95 averages the AP over IoU thresholds from 0.5 to 0.95. In object detection tasks, higher mAP values signify a superior detection performance. For binary classification tasks, precision (P) and recall (R) are typically computed using true positives (TPs), false positives (FPs), true negatives (TNs), and false negatives (FNs), as expressed in the formulas below:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

“Params” denotes the total number of parameters within the network, serving as a measure of the model’s scale and complexity. “GFLOPs” represents the number of floating-point operations, in billions, required for one forward pass, reflecting the computational complexity of the model. “Inference time” quantifies the time the model needs to process one image; its reciprocal gives the number of images processed per second.
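The metric definitions above translate into a few lines of Python. The helper names are illustrative, and the 0.05 step between IoU thresholds for mAP_0.5:0.95 is the standard COCO-style convention, assumed here because the text gives only the range.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Fraction of actual positives that were detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def map_iou_thresholds():
    """IoU thresholds averaged over for mAP_0.5:0.95 (assumed step of 0.05)."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean_average_precision(ap_per_class):
    """mAP as the mean of the per-class average precision values."""
    return sum(ap_per_class) / len(ap_per_class)


def images_per_second(inference_time_ms):
    """Throughput implied by a per-image inference time in milliseconds."""
    return 1000.0 / inference_time_ms
```

For example, 8 true positives with 2 false positives give a precision of 0.8, and the same 8 true positives with 8 missed objects give a recall of 0.5.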

4. Experimental Results and Analysis

4.1. Model Training Results

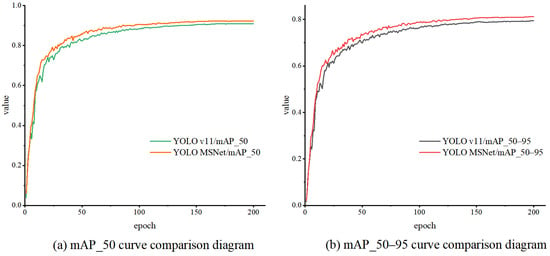

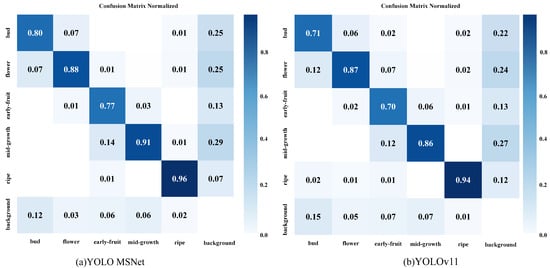

After training, both the proposed YOLO-MSNet and the original YOLOv11n models were evaluated on the same validation dataset. Their performance curves for precision, recall, and mean average precision (mAP) are illustrated in Figure 12. These evaluation metrics, which measure the effectiveness of each model in detecting pomegranate fruits across various growth stages, are detailed in Section 3.4.4. Figure 12 shows precision–recall curves that highlight YOLO-MSNet’s clear advantage over YOLOv11n: at the same recall, YOLO-MSNet delivers a substantially higher precision, while at the same precision, it yields a significantly greater recall. These comparisons show that the enhanced YOLO-MSNet model achieves notable improvements in precision, recall, and overall mAP on the publicly available pomegranate image dataset.

Figure 12.

Comparison of evaluation metric curves for YOLOv11n and YOLO-MSNet.

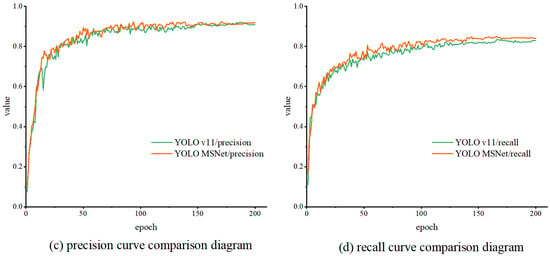

Figure 13 presents confusion matrices that illustrate the detection performance of both the baseline YOLOv11n model and the refined YOLO-MSNet. These matrices offer a clear and intuitive comparison of the classification capabilities of each model across the various growth stages of pomegranate fruits, with each column representing predicted classes and each row denoting actual classes. Within the dataset, the deep red of the mature fruit contrasts strongly with the dark green foliage, which makes detection easy. In contrast, early-fruit pomegranates (those in their green phase) blend into the leaves and branches, lowering confidence scores. To remedy this, we integrated the PSSAM and DA-BiFPN modules into the baseline architecture, sharpening the network’s perception of subtle color and texture cues. The confusion matrices reveal that, under challenging conditions, the mAP of early fruit detection climbed from 72% to 82%, while the mAP of detecting buds rose by 6%.

Figure 13.

YOLO-MSNet and YOLOv11 confusion matrices.
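The per-class reading of a confusion matrix can be sketched as follows, assuming the orientation described above (rows are actual classes, columns are predicted classes). The class names are taken from the growth stages mentioned in the text, but the counts are hypothetical and serve only to illustrate the calculation; they are not the values shown in Figure 13.

```python
def per_class_recall(matrix, class_names):
    """Fraction of each actual class (row) that was predicted correctly."""
    recalls = {}
    for i, name in enumerate(class_names):
        row_total = sum(matrix[i])
        recalls[name] = matrix[i][i] / row_total if row_total else 0.0
    return recalls


def per_class_precision(matrix, class_names):
    """Fraction of each predicted class (column) that was actually correct."""
    precisions = {}
    for j, name in enumerate(class_names):
        col_total = sum(row[j] for row in matrix)
        precisions[name] = matrix[j][j] / col_total if col_total else 0.0
    return precisions


# Hypothetical counts: rows = actual class, columns = predicted class.
class_names = ["bud", "early fruit", "mature fruit"]
counts = [
    [45, 5, 0],
    [12, 80, 8],
    [0, 4, 96],
]

recall = per_class_recall(counts, class_names)
precision = per_class_precision(counts, class_names)
for name in class_names:
    print(f"{name}: recall={recall[name]:.3f}, precision={precision[name]:.3f}")
```

In a row-normalized matrix, the diagonal entries correspond to these per-class recall values, which is why low-contrast classes such as green-phase fruits show up as weaker diagonal cells.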

4.2. Model Test Results and Analysis

In this section, we evaluate the practicality and performance of YOLO-MSNet in pomegranate detection across different growth stages by comparing it to 15 mainstream models trained using identical datasets and experimental settings. The results of this comparison are shown in Table 1.

Table 1.

Comparative experimental results of different models on pomegranate dataset.

According to the analysis presented in Table 1, the YOLO-MSNet proposed in this study exhibits a superior performance across the various evaluation metrics when compared with mainstream models. Compared to YOLOv11n, YOLO-MSNet improves precision by 0.8%, recall by 3.5%, mAP50 by 1.7%, and mAP50-95 by 0.6%, all while preserving its inference speed. It also reduces the parameter count by 21.5% and the model size by 21.8%. These improvements indicate that YOLO-MSNet strikes a favorable balance between detection accuracy, computational efficiency, and model compactness, which makes it well suited to real-time pomegranate detection in complex orchard settings.

Although YOLO-MSNet performs slightly worse in terms of its inference speed and parameter count compared to lightweight detectors such as YOLOv8n and its enhanced variants, it delivers a striking leap in accuracy. Against high-precision models like YOLOv9t and YOLOv8n, YOLO-MSNet delivers a superior detection accuracy while minimizing its parameter count and model size. When compared to other pomegranate-detection-specific methods, especially YOLO-Granada, it matches their precision but reduces its weight size by 44.4%, parameter count by 47%, and GFLOPs by 16.3%, while speeding up inference by 60.7%. These gains highlight its clear advantage for lightweight deployments.

In summary, our experimental results underscore the effectiveness of YOLO-MSNet in balancing detection accuracy, computational efficiency, and model compactness. These attributes render it highly suitable for real-time deployment in resource-constrained environments, such as edge devices or compact agricultural robots, and particularly advantageous for the accurate detection of pomegranate fruits across complex orchard scenarios.
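The figures quoted in this section mix two kinds of percentages. As a small worked example, the accuracy gains appear to be absolute differences in percentage points (the mAP values of 90.2% and 91.9% reported in the Conclusions differ by 1.7 points), whereas the parameter and size reductions are relative changes. The sketch below makes the distinction explicit; the function names are illustrative, and the parameter count of 1000 units is a hypothetical placeholder rather than a value from Table 1.

```python
def absolute_gain_points(baseline_pct, improved_pct):
    """Difference between two percentages, expressed in percentage points."""
    return improved_pct - baseline_pct


def relative_change_pct(baseline, new):
    """Change of `new` relative to `baseline`, expressed in percent."""
    return (new - baseline) / baseline * 100.0


# mAP values as reported in the Conclusions (YOLOv11n vs. YOLO-MSNet).
map_baseline, map_improved = 90.2, 91.9
gain_points = absolute_gain_points(map_baseline, map_improved)
gain_relative = relative_change_pct(map_baseline, map_improved)

# Hypothetical parameter budget, used only to illustrate a 21.5% reduction.
hypothetical_params_before = 1000.0
hypothetical_params_after = hypothetical_params_before * (1 - 0.215)
param_change = relative_change_pct(hypothetical_params_before,
                                   hypothetical_params_after)

print(f"mAP gain: {gain_points:.1f} points ({gain_relative:.2f}% relative)")
print(f"parameter change: {param_change:.1f}%")
```

Read this way, a 1.7-point gain on a 90.2% baseline is a relative improvement of just under 1.9%, while the 21.5% parameter reduction leaves 78.5% of the original parameters.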

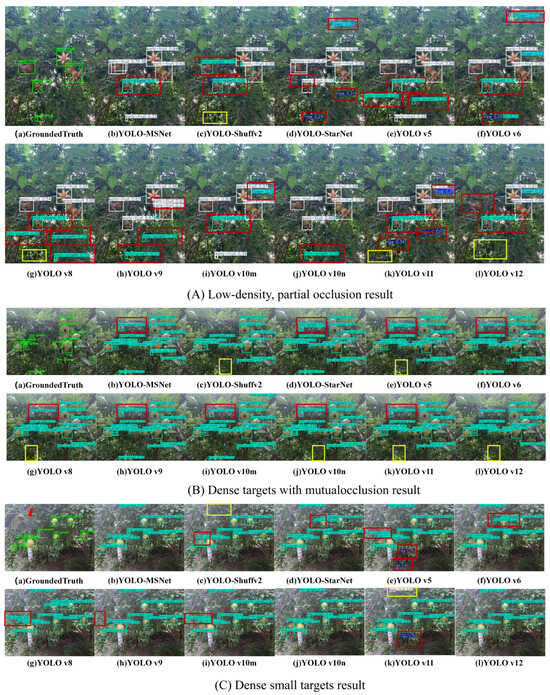

To intuitively illustrate the detection efficacy of the nine models evaluated, images encompassing various pomegranate growth stages, densities, occlusions, and overlaps were randomly selected from the training set as test samples, and their corresponding detection outcomes are presented in Figure 14.

Figure 14.

Pomegranate fruit detection.

In Figure 14A, under sparse layouts and with partial occlusions, most detectors (all except YOLO-MSNet, YOLOv11, and YOLOv10n) suffer from numerous false positives and missed detections. In densely packed scenes with small overlapping fruits and foliage, their detection accuracy drops sharply due to severe interference. Moreover, due to the low contrast between early-stage fruits (green-phase fruits) and the surrounding leaves and branches in the dataset, downscaling images exacerbates confusion among similarly colored vegetation. However, YOLO-MSNet consistently maintains its high-confidence detections, as illustrated in Figure 14B,C. These observations indicate that YOLO-MSNet can reliably identify and classify pomegranate fruits throughout their growth stages, benefiting from the enhanced feature extraction capabilities offered by its PSSAM and DA-BiFPN modules. Although minor instances of missed or false detections may still occur under certain challenging conditions, the model consistently achieves high accuracy in unobstructed or mildly obstructed scenarios. Where fruit characteristics are clearly distinguishable, misclassifications and missed detections are virtually absent, whereas severe occlusions and irregular plant growth patterns occasionally result in incomplete feature representations, which can lead to errors or omissions.

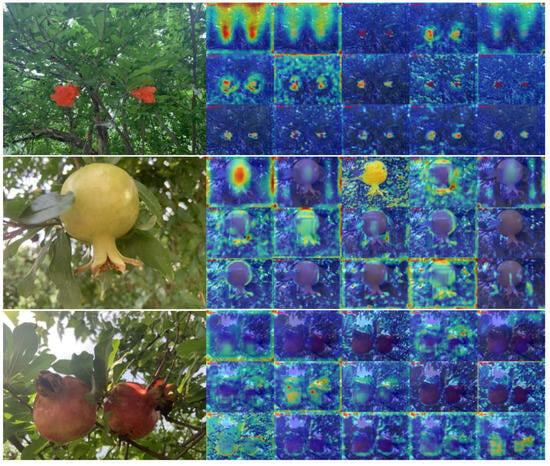

By examining the heatmaps in Figure 15, we can see that YOLO-MSNet’s feature maps, which operate at different depths, exhibit unique activation patterns for pomegranates across their growth stages. Areas contributing significantly to model detection are indicated in red, while regions with minimal contribution are depicted in blue. These visualizations demonstrate YOLO-MSNet’s exceptional ability to focus on the morphological characteristics of the fruits with precision, effectively suppressing background interference and adeptly addressing fruit detection challenges within complex orchard environments. This illustrates that the integration of modules such as PSSAM and DA-BiFPN enables the network to acquire discriminative, multi-scale features that are tailored to intricate orchard settings, effectively capturing key fruit details while avoiding excessive activation in irrelevant background regions (e.g., foliage). Consequently, YOLO-MSNet achieves a harmonious balance between accuracy and real-time performance, characterized by its fewer parameters and improved inference speed.

Figure 15.

Heat maps.

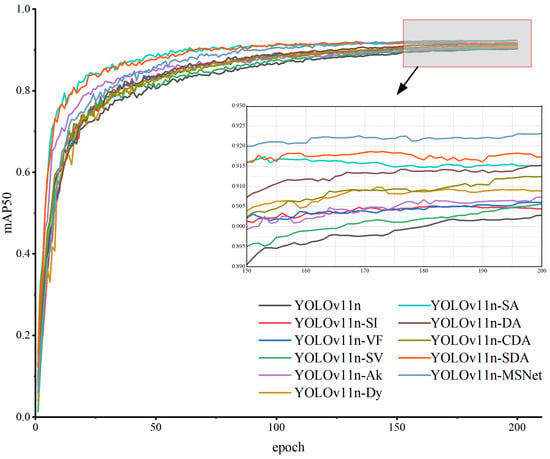

To substantiate the performance of the proposed YOLO-MSNet model, we conducted ablation studies on the same dataset, comparing the effects of the loss functions (ShapeIoU and VFLoss), the convolutional modules (Dy-sample and AKConv), and the attention mechanisms (PSSAM and C3k2_UIB) within the BiFPN framework; the results are presented in Table 2 and Figure 16.

Table 2.

Results from the ablation experiments performed on the pomegranate dataset.

Figure 16.

Comparison of ablation experiment results.

From the charts and confusion matrices above, it is clear that the introduction of ShapeIoU prevents the conventional IoU from erroneously suppressing bounding boxes when their aspect ratios differ, thereby reducing missed detections in both densely clustered fruits and slender targets. VFLoss, in turn, accentuates learning on low-confidence yet high-quality samples, reclaiming high-IoU true positives overlooked by standard classification losses and markedly diminishing false negatives.

Upon incorporating the PSSAM module, the baseline model exhibited reductions of 19.2%, 1.5%, and 16.3% in its parameter count, GFLOPs, and weight size, respectively, alongside an increase of 1.2% in its mAP50. These improvements likely stem from PSSAM’s ability to strengthen feature correlations across distinct channels and effectively alleviate feature degradation. Its parallel attention mechanisms, combining both channel and spatial attention, enable the network to capture target features with greater precision and suppress background interference. The DA-BiFPN architecture further boosts accuracy, raising the mAP from 90.3% to 91.6% while reducing the model’s parameters by 0.4 million and its weight size by 0.3 million. BiFPN achieves superior efficiency in feature extraction and accelerates inference by adaptively integrating multi-scale features, thereby allowing the network to better discern the importance of the input features. Incorporating the C3k2_UIB module into the model yielded additional modest reductions in parameter count and GFLOPs, highlighting its value in model simplification via a mobile-optimized inverted bottleneck design.

Compared to YOLOv11n, YOLO-MSNet achieved improvements of 0.9%, 3.5%, 1.7%, and 2.4% in precision, recall, mAP50, and mAP50-95, respectively, while simultaneously reducing the total number of parameters by 21.5% and the weight size by 21.8%. These outcomes demonstrate that YOLO-MSNet effectively combines high detection accuracy with reduced computational demands and model complexity, making it particularly suitable for real-time object detection tasks within challenging orchard environments.
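The effect attributed to VFLoss above can be illustrated with a minimal per-sample sketch of the Varifocal Loss as it is commonly formulated: positive samples are weighted by their IoU-aware target score q, and negatives are down-weighted by a focal term. The default values alpha = 0.75 and gamma = 2.0 are the commonly used settings and are an assumption here, since the hyperparameters used in this study are not stated in this section; the function name is likewise illustrative.

```python
import math


def varifocal_loss(p, q, alpha=0.75, gamma=2.0, eps=1e-9):
    """Varifocal loss for one prediction.

    p: predicted IoU-aware classification score in (0, 1).
    q: target score, i.e. the IoU with the ground truth for a positive
       sample and 0 for a negative sample.
    """
    p = min(max(p, eps), 1.0 - eps)  # keep the logarithms finite
    if q > 0.0:
        # Positive sample: binary cross-entropy weighted by the target q.
        return -q * (q * math.log(p) + (1.0 - q) * math.log(1.0 - p))
    # Negative sample: focal down-weighting by p ** gamma.
    return -alpha * (p ** gamma) * math.log(1.0 - p)


# A well-localized box (q = 0.9) that the classifier scores too low
# is penalized more than the same box scored confidently.
low_conf_high_iou = varifocal_loss(p=0.2, q=0.9)
high_conf_high_iou = varifocal_loss(p=0.8, q=0.9)

# Easy negatives contribute very little; confident false alarms dominate.
easy_negative = varifocal_loss(p=0.1, q=0.0)
hard_negative = varifocal_loss(p=0.9, q=0.0)

print(f"positives: {low_conf_high_iou:.4f} vs {high_conf_high_iou:.4f}")
print(f"negatives: {easy_negative:.4f} vs {hard_negative:.4f}")
```

The asymmetry is the point of the design: high-IoU boxes with low confidence receive a large training signal, which is consistent with the reduction in false negatives described above.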

In summary, whether in comparison to contemporary mainstream algorithms or across various internal module evaluations, YOLO-MSNet consistently excels across multiple evaluation metrics. The model effectively balances high accuracy with enhanced computational efficiency and reduced complexity, thus presenting an ideal solution for the real-time detection of fruits in complex orchard scenarios.

5. Conclusions

In this study, a lightweight and efficient detection algorithm, YOLO-MSNet, was proposed to facilitate the deployment of pomegranate growth-stage detection on mobile, CPU-based, and other resource-constrained platforms. Built upon the YOLOv11 architecture, YOLO-MSNet uses AKConv modules in place of traditional convolutions within its backbone and integrates the Universal Inverted Bottleneck (UIB) structure into its framework to optimize the C3k2 module. These modifications result in a substantial reduction in model parameters and size while improving inference efficiency. We further boost the model’s accuracy by adding PSSAM, which uses squeeze-and-excitation to refine features in challenging visual scenes. Experiments show that YOLO-MSNet reaches a 91.9% mAP50, up from 90.2% for YOLOv11n, while cutting its parameters and model size by 21.5% and 21.8%, respectively. This confirms YOLO-MSNet’s balance of high accuracy and low computational costs, which is ideal for its real-time use on budget, GPU-free hardware.

Our work advances intelligent agriculture by showing that high-precision detectors can run effectively under real-world constraints. The design methodology adopted in YOLO-MSNet offers a valuable reference for the future development of lightweight neural networks tailored to agricultural and edge-computing applications.

Future research will focus on further enhancing the model’s robustness and adaptability to challenging conditions, such as variable lighting conditions, occlusions, and background clutter. The exploration of advanced network architectures, attention mechanisms, and optimization strategies will be instrumental to this process. Moreover, integrating YOLO-MSNet into mobile applications can improve its accessibility for agricultural practitioners. Beyond agriculture, the lightweight and high-precision nature of the proposed model is also promising for applications in fields such as security surveillance and autonomous systems.

Author Contributions

Conceptualization, L.X. and B.L.; methodology, L.X.; validation, X.F. and Z.L. (Zhe Lu); formal analysis, Z.L. (Zelong Li); data curation, B.J.; writing—original draft preparation, L.X.; writing—review and editing, S.J.; visualization, Z.L. (Zelong Li); project administration and funding acquisition, B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (No. 32472000), the Key Research and Development Program of Heilongjiang Province (No. 2024ZXDXA14), and the Fundamental Research Funds for the Central Universities (No. 3072024LJ0404).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the Institute of Applied Electronics, China Academy of Engineering Physics, for supporting this research project.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhao, J.; Du, C.; Li, Y.; Mudhsh, M.; Guo, D.; Fan, Y.; Wu, X.; Wang, X.; Almodfer, R. YOLO-Granada: A lightweight attentioned Yolo for pomegranates fruit detection. Sci. Rep. 2024, 14, 16848.

- Bai, Y.; Mao, S.; Zhou, J.; Zhang, B. Clustered tomato detection and picking point location using machine learning-aided image analysis for automatic robotic harvesting. Precis. Agric. 2023, 24, 727–743.

- Yu, L.; Xiong, J.; Fang, X.; Yang, Z.; Chen, Y.; Lin, X.; Chen, S. A litchi fruit recognition method in a natural environment using RGB-D images. Biosyst. Eng. 2021, 204, 50–63.

- Syazwani, R.W.N.; Asraf, H.M.; Amin, M.A.M.S.; Dalila, K.N. Automated image identification, detection and fruit counting of top-view pineapple crown using machine learning. Alex. Eng. J. 2022, 61, 1265–1276.

- Fan, Y.; Cai, Y.; Yang, H. A detection algorithm based on improved YOLOv5 for coarse-fine variety fruits. J. Food Meas. Charact. 2024, 18, 1338–1354.

- Zeng, T.; Li, S.; Song, Q.; Zhong, F.; Wei, X. Lightweight tomato real-time detection method based on improved YOLO and mobile deployment. Comput. Electron. Agric. 2023, 205, 107625.

- Chu, P.; Li, Z.; Lammers, K.; Lu, R.; Liu, X. Deep learning-based apple detection using a suppression mask R-CNN. Pattern Recognit. Lett. 2021, 147, 206–211.

- Li, J.; Zhu, Z.; Liu, H.; Su, Y.; Deng, L. Strawberry R-CNN: Recognition and counting model of strawberry based on improved faster R-CNN. Ecol. Inform. 2023, 77, 102210.

- Macías-Macías, M.; Sánchez-Santamaria, H.; Garcia Orellana, C.J.; González-Velasco, H.M.; Gallardo-Caballero, R.; García-Manso, A. Mask R-CNN for quality control of table olives. Multimed. Tools Appl. 2023, 82, 21657–21671.

- Tang, Y.; Zhou, H.; Wang, H.; Zhang, Y. Fruit detection and positioning technology for a Camellia oleifera C. Abel orchard based on improved YOLOv4-tiny model and binocular stereo vision. Expert Syst. Appl. 2023, 211, 118573.

- Nan, Y.; Zhang, H.; Zeng, Y.; Zheng, J.; Ge, Y. Intelligent detection of Multi-Class pitaya fruits in target picking row based on WGB-YOLO network. Comput. Electron. Agric. 2023, 208, 107780.

- Badeka, E.; Karapatzak, E.; Karampatea, A.; Bouloumpasi, E.; Kalathas, I.; Lytridis, C.; Tziolas, E.; Tsakalidou, V.N.; Kaburlasos, V.G. A deep learning approach for precision viticulture, assessing grape maturity via YOLOv7. Sensors 2023, 23, 8126.

- Wu, E.; Ma, R.; Dong, D.; Zhao, X. D-YOLO: A Lightweight Model for Strawberry Health Detection. Agriculture 2025, 15, 570.

- Liang, Y.; Jiang, W.; Liu, Y.; Wu, Z.; Zheng, R. Picking-Point Localization Algorithm for Citrus Fruits Based on Improved YOLOv8 Model. Agriculture 2025, 15, 237.

- Che, Y.; Bai, H.; Sun, L.; Fang, Y.; Guo, X.; Yin, S. Real-Time Detection of Varieties and Defects in Moving Corn Seeds Based on YOLO-SBWL. Agriculture 2025, 15, 685.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Zhang, X.; Song, Y.; Song, T.; Yang, D.; Ye, Y.; Zhou, J.; Zhang, L. LDConv: Linear deformable convolution for improving convolutional neural networks. Image Vis. Comput. 2024, 149, 105190.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to upsample by learning to sample. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 6027–6037.

- Jiao, L.; Abdullah, M.I. DP-YOLO: Enhancing Pedestrian Detection in Crowd Scenes with Deformable Convolution and Varifocal Loss. In Proceedings of the 2024 3rd International Conference on Cyber Security, Artificial Intelligence and Digital Economy, Nanjing, China, 1–3 March 2024; pp. 226–231.

- Zhou, L.; Jin, S.; Wang, J.; Zhang, H.; Shi, M.; Zhou, H. 3D Positioning of Camellia oleifera Fruit-grabbing Points for Robotic Harvesting. Robot. Mach. Learn. Dly. News 2024, 246, 110–121.

- Narayanan, M. SENetV2: Aggregated dense layer for channelwise and global representations. arXiv 2023, arXiv:2311.10807.

- Fan, X.; Sun, T.; Chai, X.; Zhou, J. YOLO-WDNet: A lightweight and accurate model for weeds detection in cotton field. Comput. Electron. Agric. 2024, 225, 109317.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).