1. Introduction

The Chinese saying “pigs and grain secure the world” reflects how closely the swine industry is tied to people’s daily lives and health. Given the continuing growth of the global population and the rising demand for meat, developing efficient pig farming techniques is especially crucial [1,2,3,4]. Although China is one of the world’s major pork producers, its production still lags in efficiency and in the application of technology. The Chinese swine industry currently faces multiple challenges, including shortages of feed resources, high labor costs, significant biosecurity risks, and low production efficiency. There is therefore an urgent need for a rapid, accurate, and non-invasive method for estimating pig body data. Such a method would not only enhance production efficiency but also strengthen biosecurity and improve pig health, thereby supporting the modernization of the pig farming industry [5,6].

Over the past two decades, the global livestock industry has made notable progress in modern data collection methods and advanced Internet of Things (IoT) technologies. However, challenges remain, particularly limited capabilities for intrinsic data analysis and ongoing concerns about animal welfare [6,7,8,9,10,11]. Dynamically perceiving pig body measurements is therefore of great importance. Estimating livestock body dimensions with computer vision, that is, analyzing animal images to calculate their size, not only automates the measurement but also greatly reduces disturbance to the animals, substantially improving the efficiency of livestock management. With the rapid development of computer technology, 3D perception, modeling, and reconstruction techniques have been widely applied in industry and agriculture. Advances in these technologies have driven the development of research equipment and deepened research on 3D reconstruction [12,13,14,15]. 3D reconstruction primarily aims to overcome the difficulty of recovering 3D information from 2D images, which capture only flat projections of a scene. Some studies have built more accurate 3D models of animals by analyzing multiple images. X-ray 3D reconstruction has been used to inspect industrial and agricultural products, while ultrasound tomography and image-based methods have been applied to the 3D reconstruction of typical parts [16,17]. Three-dimensional reconstruction has a wide range of applications in agriculture and has achieved relatively good research results [18,19,20,21].

In precision pig farming, the physical characteristics of pigs are important references for genetic breeding, feed conversion, and body condition analysis [22,23]. However, traditional methods still rely on manual tape measurements, which are inefficient and often stress the pigs. As technology has advanced, image recognition and 3D reconstruction have been widely applied to perceive physical characteristics. The development of depth cameras such as the Kinect DK and RealSense has led to extensive research on pig weight estimation, physical characteristic perception, and body condition analysis. Nonetheless, field deployments still suffer from shortcomings such as long processing times and high hardware requirements [24,25,26,27].

In summary, 3D imaging is an efficient and precise method for acquiring pig body characteristics in the pig farming industry. Compared with traditional contact measurement, it enables non-invasive monitoring and significantly reduces stress on the animals. By analyzing 3D images of pigs, we constructed 3D pig models and integrated them into a body measurement system, providing technical and system support for production sites. Moreover, pig farming currently involves heavy workloads and low efficiency, and pig testing has relied heavily on traditional, single-purpose methods developed under idealized laboratory conditions. Developing new image-vision methods for sensing and modeling pig physical characteristics therefore offers a new perspective for industrial implementation. The main contributions of this paper are: (1) constructing a model for perceiving 3D body size information of pigs; (2) reconstructing 3D models using depth cameras; and (3) building a system for sensing pig body size information.

2. Materials and Methods

2.1. Environment and Animals

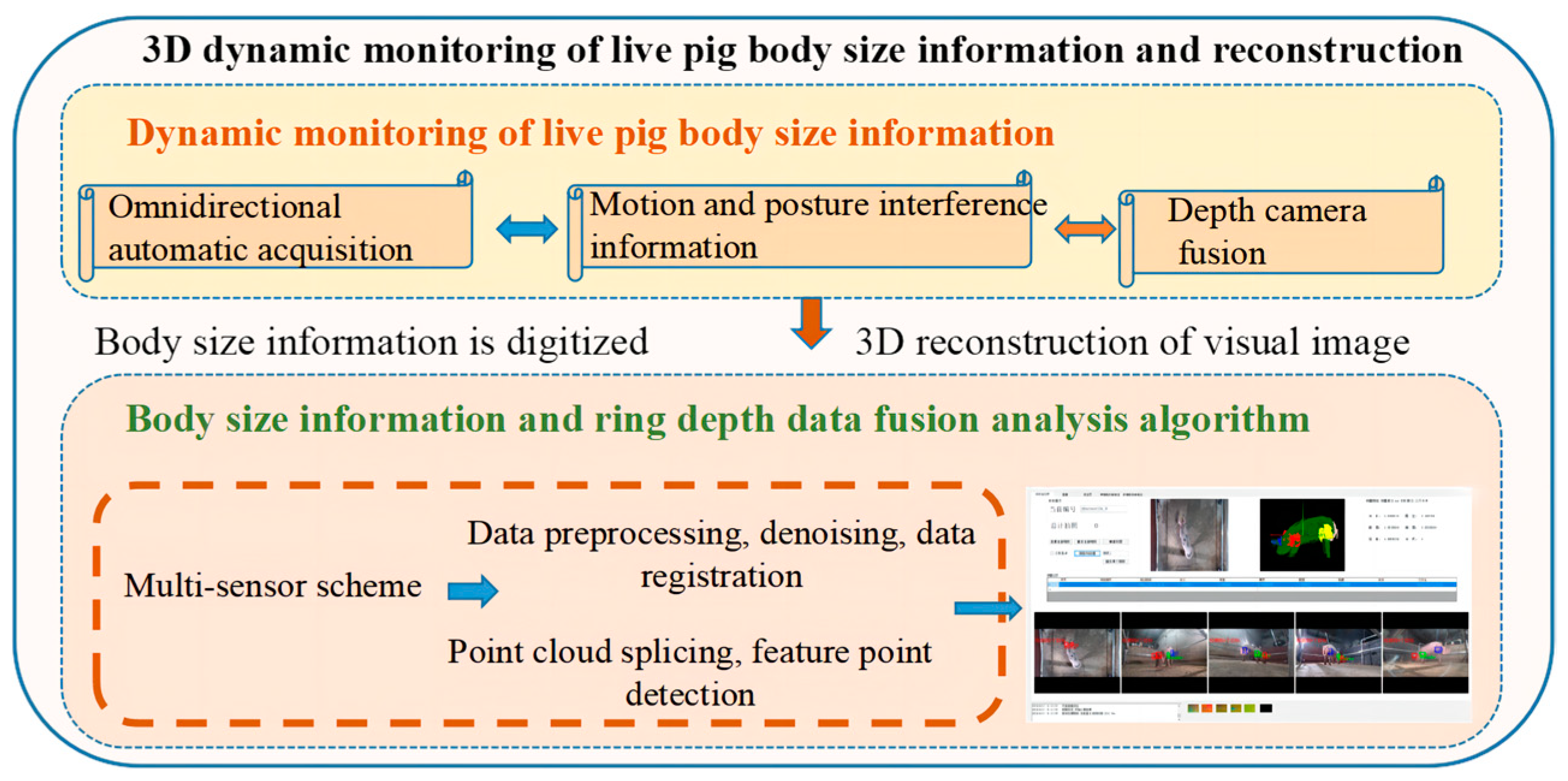

This study was developed and built in the laboratory, and on-site verification was conducted at a farm in Tianjin, China. The subjects were ten pigs with body weights ranging from 52.5 to 121.5 kg, chosen because they had well-developed physiques, proportionate builds, and uniform body colors. This weight range was selected primarily to optimize the model’s learning effectiveness and to minimize the impact of varying body lengths on its performance. The 3D point cloud collection frame measured 2 m × 3 m, with one depth camera at each of the four corners and another at the center of the top, 2 m above the ground. A total of 96,210 point cloud data points were collected. The collected data were first screened manually, mainly to eliminate images with obstructions or poor imaging quality, and 9000 depth images from five perspectives were ultimately retained. The experiment was divided into two parts: static model pigs in the laboratory and live pigs in pigsties. The body measurements of each measured pig, including body length, chest girth, abdominal girth, back height, and hip width, were also taken with a tailor’s tape. Data labeling was performed using the LabelMe software (version 5.4.1), which generated the corresponding JSON files. The overall technical roadmap of this study is shown in Figure 1.

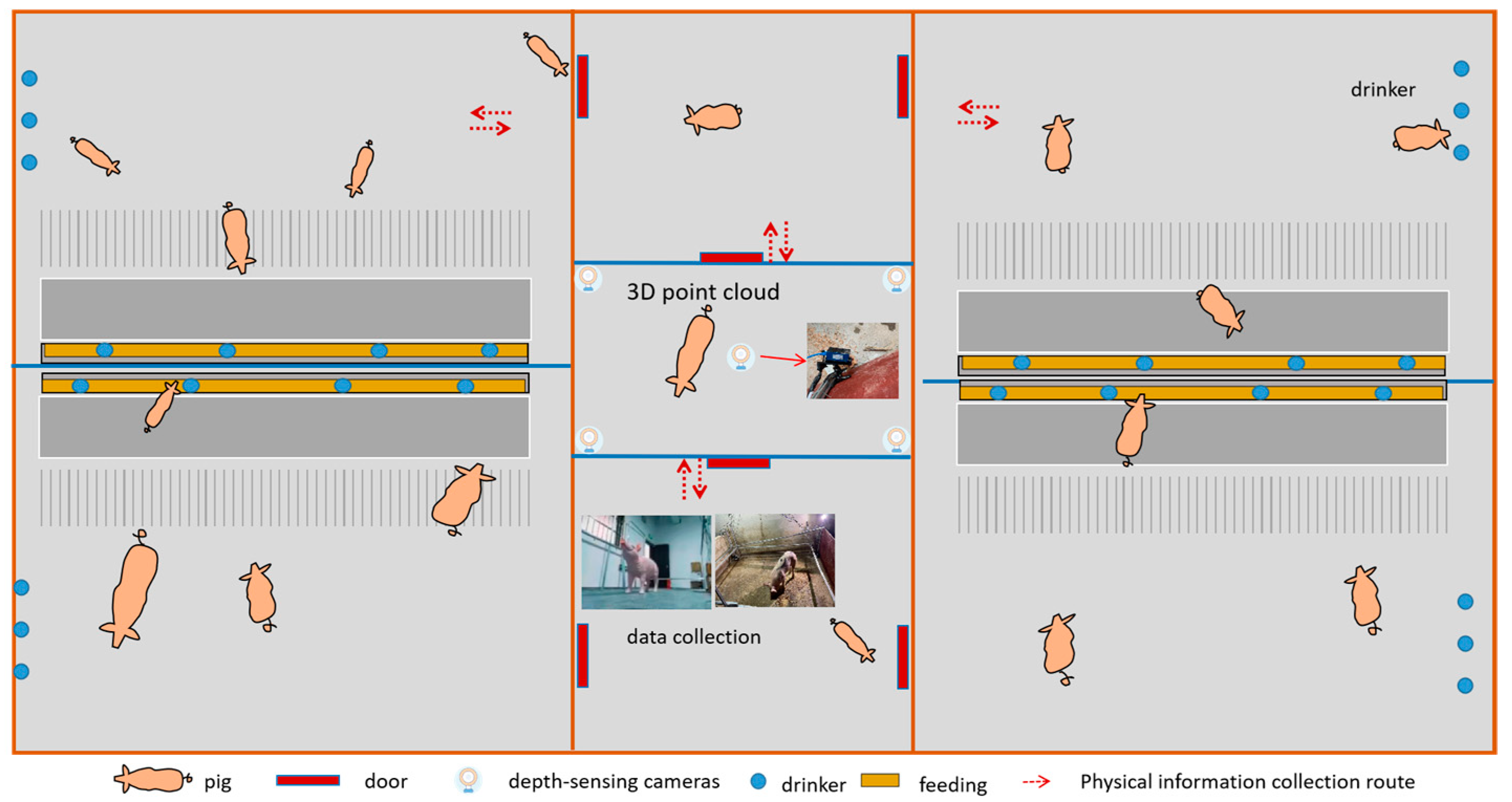

In this experiment, a CWYC electronic weighing scale was used. The physical measurements of the pigs mainly included chest girth and hip width, among others. The image acquisition equipment was an LXPS-M4422-79E. The computer had an Intel(R) Core(TM) i7-4210M CPU @ 2.60 GHz and 16 GB of RAM (Figure 2).

2.2. Data Analysis and Model Establishment

The acquired images were first preprocessed in Python, including feature detection and registration, and then further processed by the model. The data were then analyzed with convolutional neural networks to provide a reference for identifying changes in pig weight at different ages (in days).

For camera calibration, we used a large calibration board to unify the coordinate systems of all cameras. The large board contained several smaller calibration boards whose relative positions were known, and each camera captured at least one small board. The small boards allowed each camera’s intrinsic and extrinsic parameters to be calibrated precisely, aligning each camera’s coordinates with the coordinate system of the small board it observed. Because the relative positions of the small boards are known, all cameras can then be expressed in a single common coordinate system.
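The chaining of calibrations described above can be sketched as follows, assuming each camera's calibration yields a 4 × 4 extrinsic matrix mapping small-board coordinates into that camera's frame, and that the pose of each small board on the large board is known. The function names (`make_transform`, `camera_to_global`, `apply_transform`) are hypothetical and not taken from the authors' code.

```python
import numpy as np

def make_transform(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Pack a 3x3 rotation and a 3-vector translation into a 4x4 homogeneous matrix."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def camera_to_global(T_cam_from_board: np.ndarray,
                     T_global_from_board: np.ndarray) -> np.ndarray:
    """Chain a camera's calibrated extrinsics with the known board layout.

    T_cam_from_board:    maps small-board coordinates into camera coordinates
                         (the extrinsic result of calibrating this camera).
    T_global_from_board: known pose of that small board on the large board.
    Returns the transform mapping camera coordinates into the common frame.
    """
    return T_global_from_board @ np.linalg.inv(T_cam_from_board)

def apply_transform(T: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    return (homo @ T.T)[:, :3]
```

In use, each of the five cameras would get its own camera-to-global matrix, after which every camera's point cloud can be expressed in the large board's frame before fine registration.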

We performed data labeling with LabelMe (v5.4.1), marking body parts such as the ears, shoulders, hips, and tail with bounding boxes and saving the annotation files. When labeling is completed, the files are saved in the default path, and a JSON file with the same name as the image is generated automatically.
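As an illustration of how the generated annotation files can be consumed, the sketch below reads a LabelMe JSON file and reduces each shape to an axis-aligned box, grouped by label. It relies only on the `shapes`, `label`, and `points` fields of the LabelMe format; the label strings and the helper name `load_boxes` are hypothetical.

```python
import json
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels

def load_boxes(labelme_json: str) -> Dict[str, List[Box]]:
    """Group LabelMe shapes by label, reducing each shape to its bounding box.

    Works for 'rectangle' shapes (two corner points) and for polygons alike.
    """
    data = json.loads(labelme_json)
    boxes: Dict[str, List[Box]] = {}
    for shape in data.get("shapes", []):
        xs = [p[0] for p in shape["points"]]
        ys = [p[1] for p in shape["points"]]
        boxes.setdefault(shape["label"], []).append((min(xs), min(ys), max(xs), max(ys)))
    return boxes

# Example (hypothetical file name):
# boxes = load_boxes(open("pig_0001.json", encoding="utf-8").read())
```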

2.3. Point Cloud Data Acquisition and Processing

Point Cloud Data Acquisition

In this study, point cloud data were acquired with depth cameras, which allow rapid, high-resolution collection of large amounts of 3D coordinate data from the surface of the measured object. Point cloud acquisition can be completed within 0.2 s, and a high-performance industrial computer then computes the results in 1 s. A time-of-flight (ToF) camera is a type of depth camera that measures the distance between an object and the camera from the flight time of emitted light, thereby generating a depth image. Combined with RGB (red, green, blue) images, ToF cameras provide RGB-D (red, green, blue, depth) data, which are used for 3D reconstruction. During depth camera capture, point cloud acquisition can be affected by object occlusion and uneven lighting, which leave scanning blind spots and thus holes in the data. In addition, because the point clouds are collected by five cameras, they contain noise that does not meet the requirements for model reliability, so preprocessing steps such as denoising and simplification are necessary [28].
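To make the measurement principle concrete, the sketch below converts a round-trip time into a distance (d = c·Δt/2, since the light travels to the object and back) and back-projects a depth image into a point cloud with a pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy` are assumptions standing in for the camera's calibrated values, and many commercial ToF cameras infer Δt from the phase shift of modulated light rather than timing it directly. Zero-depth pixels, which correspond to the holes mentioned above, are dropped.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def tof_distance(round_trip_time_s: float) -> float:
    """Distance from a ToF round-trip time: the light travels there and back."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0

def depth_to_points(depth: np.ndarray, fx: float, fy: float,
                    cx: float, cy: float) -> np.ndarray:
    """Back-project an (H, W) depth image in metres to an (N, 3) point cloud.

    Pixels with depth 0 (no return, e.g. occlusion holes) are discarded.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column / row indices
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]
```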

2.4. Point Cloud Preprocessing

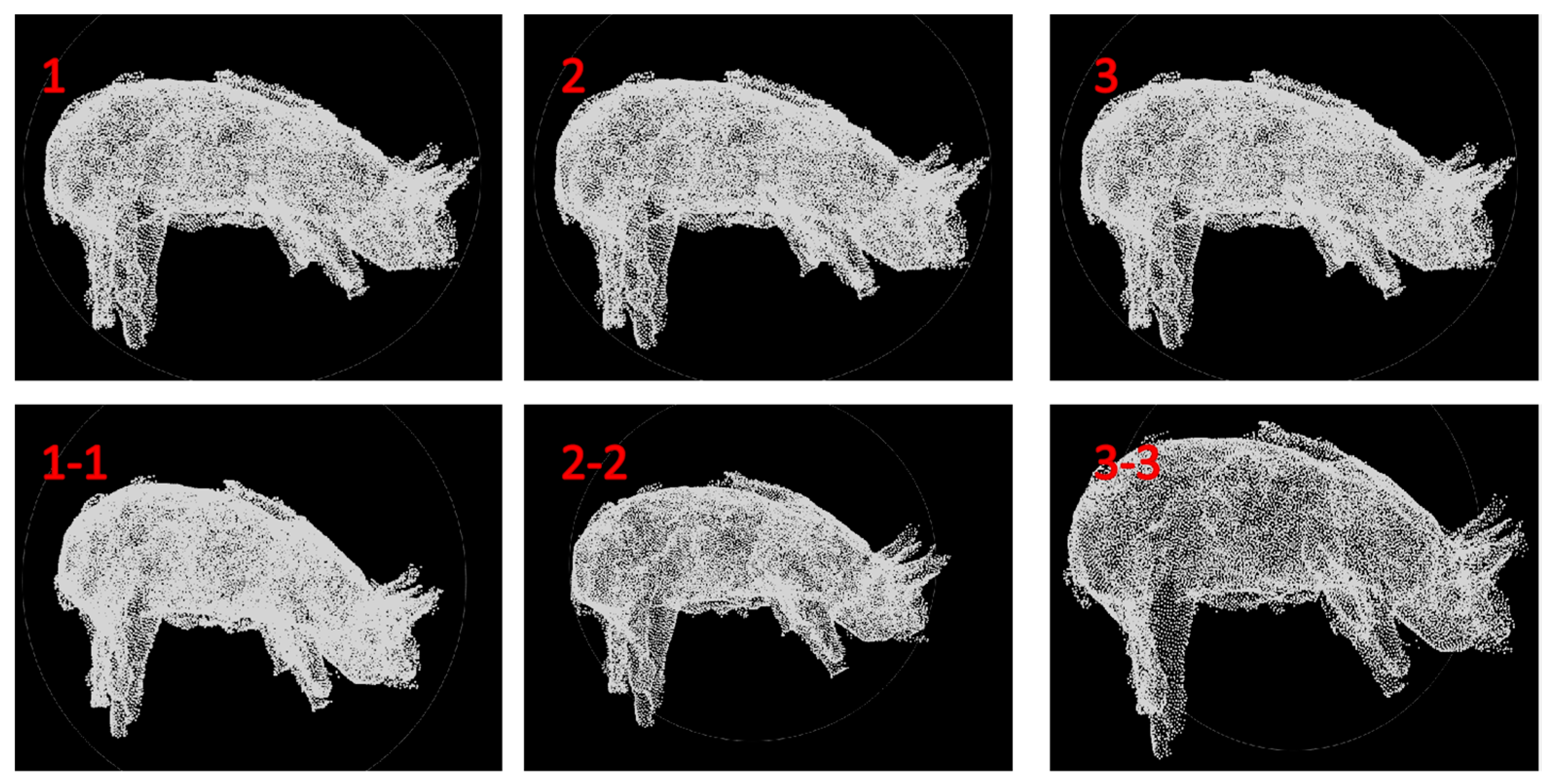

The collected data may contain obstacles such as railings, baffles, and walls. Preprocessing therefore focuses on removing noise from and simplifying the point cloud, and on aligning point clouds captured from different angles into a single coordinate system. This provides a solid data foundation for subsequent surface construction and generation of 3D solid models. When depth cameras capture the targets, the point cloud data inevitably contain noise caused by the surrounding environment, human disturbance, and the characteristics of the pigs themselves. This noise prevents accurate representation of the spatial position of the scanned object, so the noisy points must be removed. The main approach is to apply Gaussian, mean, and median filtering to the ordered point cloud data. Gaussian noise is noise whose probability density function follows a Gaussian distribution; this noise model (also known as normal noise) is used because it is mathematically easy to handle in both the spatial and frequency domains. Gaussian filtering smooths the data with a weighted average whose weights follow a Gaussian function of the distance from the center pixel. Mean filtering performs simple smoothing by computing the average grayscale value within a neighborhood of each pixel and storing it as the grayscale value of the center pixel in the output image. Median filtering replaces the center pixel with the median value of a rectangular neighborhood around it. The preprocessing results are shown in Figure 3 (panels 1 and 1-1, Gaussian filtering; panels 2 and 2-2, mean filtering; panels 3 and 3-3, median filtering).
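The three filters can be sketched on an ordered (organized) depth image, where each pixel stores a depth value, using only NumPy. The window size `k` (odd) and `sigma` are illustrative defaults, not values reported in this study, and border pixels are handled by edge replication as an assumption.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(img: np.ndarray, k: int) -> np.ndarray:
    """All k x k neighbourhoods of img (edge-replicated), shape (H, W, k, k)."""
    r = k // 2
    padded = np.pad(img, r, mode="edge")
    return sliding_window_view(padded, (k, k))

def gaussian_kernel(k: int, sigma: float) -> np.ndarray:
    """Normalised 2-D Gaussian weights for a k x k window."""
    ax = np.arange(k) - k // 2
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()

def gaussian_filter(img: np.ndarray, k: int = 3, sigma: float = 1.0) -> np.ndarray:
    """Weighted average with Gaussian weights centred on each pixel."""
    return (_windows(img, k) * gaussian_kernel(k, sigma)).sum(axis=(-2, -1))

def mean_filter(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Unweighted average of each k x k neighbourhood."""
    return _windows(img, k).mean(axis=(-2, -1))

def median_filter(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Median of each k x k neighbourhood."""
    return np.median(_windows(img, k), axis=(-2, -1))
```

A single depth spike (a typical outlier pixel) is removed entirely by the median filter but only attenuated and spread out by the mean and Gaussian filters, which is why median filtering is often preferred for impulse noise.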

2.5. Data Model

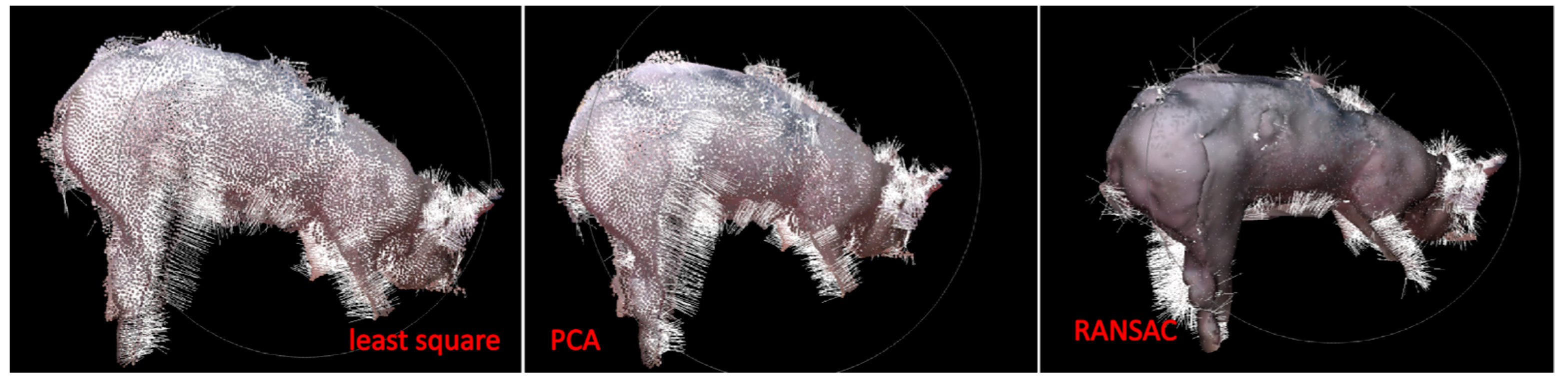

Estimating point cloud normals is an important step in point cloud processing, providing a foundation for subsequent tasks such as reconstruction, feature extraction, and registration. Common normal estimation methods include the least squares method, principal component analysis (PCA), and random sample consensus (RANSAC) [29,30]. The least squares method estimates the normal of a local plane by minimizing the sum of squared residuals between the points and the fitted plane; it is intuitive and simple but may lose precision at sharp features. PCA finds the direction of minimal variance in a local neighborhood; it suits surfaces that are approximately planar and is robust to noise. RANSAC improves accuracy by iteratively excluding outliers; although computationally intensive, it handles noise and outliers effectively. This study adopts the least squares method for normal estimation because it is intuitive and applicable to most point cloud processing scenarios, although care is needed at sharp features. Combining these methods can effectively improve point cloud processing quality and efficiency, providing reliable support for 3D vision tasks.
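A minimal sketch of least-squares normal estimation, assuming each local patch can be written as a height field z = ax + by + c, so that the fitted plane's normal is proportional to (a, b, -1). Neighbourhoods are found by brute-force k-nearest-neighbour search, which is only practical for small clouds; `k` and the function names are illustrative assumptions. This explicit-z parameterization breaks down where the surface is nearly parallel to the z axis, which is one reason PCA-based fitting is often preferred.

```python
import numpy as np

def lstsq_normal(neighbors: np.ndarray) -> np.ndarray:
    """Fit z = a*x + b*y + c to a (k, 3) neighbourhood and return the unit plane normal."""
    A = np.column_stack([neighbors[:, 0], neighbors[:, 1], np.ones(len(neighbors))])
    (a, b, _c), *_ = np.linalg.lstsq(A, neighbors[:, 2], rcond=None)
    n = np.array([a, b, -1.0])  # gradient of a*x + b*y - z + c = 0
    return n / np.linalg.norm(n)

def estimate_normals(points: np.ndarray, k: int = 10) -> np.ndarray:
    """Least-squares normal for every point from its k nearest neighbours.

    Brute-force distance matrix: O(N^2) memory, for illustration only.
    """
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    knn = np.argsort(d2, axis=1)[:, :k]  # includes the point itself
    return np.array([lstsq_normal(points[idx]) for idx in knn])
```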

2.6. Detection of Feature Points in Swine Point Clouds

Feature points are identified with the Mask R-CNN convolutional neural network, which detects features in the color images; these are then mapped into point cloud space using the calibration relationship between the color images and the depth point clouds. Mask R-CNN is an instance segmentation algorithm built as a multitask network capable of object detection, object instance segmentation, and object keypoint detection.
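The mapping from a 2D detection to the point cloud can be sketched by projecting every depth-frame point into the color image and keeping those that land on the predicted mask. This assumes a pinhole color camera with intrinsic matrix `K` and depth-to-color extrinsics `R`, `t` obtained from calibration; the function name `points_in_mask` is hypothetical, and occlusion handling (several points projecting to the same pixel) is ignored.

```python
import numpy as np

def points_in_mask(points: np.ndarray, K: np.ndarray, R: np.ndarray,
                   t: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Return the (M, 3) subset of depth-frame points whose projection into the
    color image falls on a True pixel of the (H, W) boolean mask."""
    cam = points @ R.T + t                      # depth frame -> color-camera frame
    in_front = cam[:, 2] > 0
    uvw = cam @ K.T                             # homogeneous pixel coordinates
    z = np.where(in_front, uvw[:, 2], 1.0)      # avoid dividing by non-positive depth
    u = np.round(uvw[:, 0] / z).astype(int)
    v = np.round(uvw[:, 1] / z).astype(int)
    h, w = mask.shape
    inside = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    keep = np.zeros(len(points), dtype=bool)
    keep[inside] = mask[v[inside], u[inside]]   # look up the mask at (row v, column u)
    return points[keep]
```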

The overall structure of the algorithm is based on the Faster R-CNN framework, with a fully convolutional segmentation branch added in parallel with the existing classification and bounding box regression branches, turning the original two tasks (classification + regression) into three (classification + regression + segmentation). Mask R-CNN follows the same two-stage approach as Faster R-CNN and uses a fully convolutional network (FCN) to perform semantic segmentation on each proposal box.

Mask R-CNN is an efficient instance segmentation framework that integrates object detection, instance segmentation, and keypoint detection in a two-stage processing flow to precisely identify and segment individual instances in images. In the first stage, a region proposal network (RPN) scans the input image and proposes regions that may contain objects. In the second stage, the network classifies the objects in these proposals, performs bounding box regression, and generates binary masks with a fully convolutional branch that separates object pixels from background pixels. This multitask design not only improves processing efficiency but also improves overall performance by decoupling and refining each subtask (Figure 4).

The model consists mainly of the following components. It first preprocesses the input image and then extracts feature maps with a pretrained backbone network. Each position on the feature map generates multiple candidate regions (anchors), which the RPN classifies as foreground or background and refines by bounding box regression, retaining the higher-quality proposals. Next, RoI Align precisely aligns the feature maps with the candidate regions, ensuring accurate feature extraction. Finally, the retained proposals are classified, refined by bounding box regression, and used to generate masks, yielding each object’s category, location, and pixel-level segmentation.
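The core idea of RoI Align, sampling the feature map by bilinear interpolation at non-quantized positions instead of rounding box coordinates to the grid, can be sketched for a single-channel feature map. For simplicity this sketch takes one sample at the center of each output bin; production implementations such as `torchvision.ops.roi_align` can average several samples per bin and also handle box scaling, channels, and batches. The function names here are illustrative.

```python
import numpy as np

def bilinear(feat: np.ndarray, y: float, x: float) -> float:
    """Bilinearly interpolate a 2-D feature map at real-valued (y, x)."""
    h, w = feat.shape
    y = min(max(y, 0.0), h - 1.0)               # clamp to the valid range
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    return ((1 - dy) * (1 - dx) * feat[y0, x0] + (1 - dy) * dx * feat[y0, x1]
            + dy * (1 - dx) * feat[y1, x0] + dy * dx * feat[y1, x1])

def roi_align(feat: np.ndarray, box, out_size: int = 2) -> np.ndarray:
    """Pool box = (x1, y1, x2, y2), in feature-map coordinates and NOT rounded,
    into an out_size x out_size grid, sampling each bin at its center."""
    x1, y1, x2, y2 = box
    bin_w = (x2 - x1) / out_size
    bin_h = (y2 - y1) / out_size
    out = np.empty((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            cy = y1 + (i + 0.5) * bin_h
            cx = x1 + (j + 0.5) * bin_w
            out[i, j] = bilinear(feat, cy, cx)
    return out
```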

Mask R-CNN improves the detection and segmentation quality of targets by using a ResNet-FPN architecture for feature extraction and adding a mask prediction branch. The algorithm can process multiple targets in an image and provides a high-quality segmentation mask for each, supporting further analysis such as volume estimation or shape analysis. Its flexibility also allows extension to other tasks, such as keypoint detection, further broadening its range of applications. Owing to its strong feature extraction and its object detection and instance segmentation performance, Mask R-CNN is highly regarded and has broad application prospects in computer vision.

2.7. Pig Body Point Cloud Registration

In this study, the partial point clouds are incomplete and misaligned by rotation and translation, so they must be registered to obtain a complete point cloud. Building a comprehensive data model of the measured object requires an appropriate coordinate transformation that merges the point sets obtained from different perspectives into a unified coordinate system, forming a complete point cloud that supports further operations such as visualization; this process is point cloud registration. This study uses automatic point cloud registration, in which algorithms or statistical rules compute the misalignment between two point clouds so that they can be registered automatically. In essence, point clouds measured in different coordinate systems are transformed into one coordinate system to obtain an overall data model.

The key issue in automatic registration is determining the coordinate transformation parameters R (rotation matrix) and T (translation vector) that minimize the distance between the 3D data measured from two viewpoints after transformation. By implementation, registration algorithms can be classified as global or local. The Point Cloud Library (PCL) includes a dedicated registration module that implements the fundamental registration data structures and classic algorithms such as the Iterative Closest Point (ICP) algorithm, along with correspondence estimation and rejection of incorrect correspondences.
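The R, T estimation at the heart of ICP can be sketched as follows. Given matched points p_i and q_i, the rotation and translation minimizing Σ‖R p_i + T - q_i‖² have a closed-form SVD solution (the Kabsch method); ICP alternates this step with nearest-neighbour matching. This is a plain point-to-point variant with brute-force matching for small clouds, not PCL's implementation, and the function names and stopping tolerance are illustrative assumptions.

```python
import numpy as np

def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Kabsch/SVD: R, T minimising sum ||R @ src_i + T - dst_i||^2 for matched rows."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)                 # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # avoid reflections
    R = Vt.T @ D @ U.T
    T = cd - R @ cs
    return R, T

def icp(src: np.ndarray, dst: np.ndarray, iters: int = 30, tol: float = 1e-10):
    """Point-to-point ICP; returns accumulated R, T and the final mean residual."""
    R_total, T_total = np.eye(3), np.zeros(3)
    cur = src.copy()
    prev_err = np.inf
    for _ in range(iters):
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
        matched = dst[d2.argmin(axis=1)]          # nearest neighbour in dst
        R, T = best_rigid_transform(cur, matched)
        cur = cur @ R.T + T
        R_total, T_total = R @ R_total, R @ T_total + T
        err = np.sqrt(((cur - matched) ** 2).sum(axis=-1)).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, T_total, err
```

ICP only converges to the correct alignment when the initial misalignment is small, which is why a coarse alignment, such as one derived from camera calibration, is usually applied first.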

2.8. Three-Dimensional Reconstruction of Pig Body Point Clouds

Three-dimensional reconstruction from point cloud data involves voxel grid reconstruction and surface reconstruction (such as triangulation). The ultimate goal is visualization, typically using 3D visualization software or libraries (such as PCL, MeshLab, and Blender) to view and edit the reconstructed models, which are then used to measure the pig’s body dimensions. In a pigsty environment, specific software or libraries may be used to streamline these steps. For instance, PCL offers many tools and algorithms for point cloud processing, while ElasticFusion and BundleFusion are designed specifically for real-time 3D reconstruction.
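A voxel grid is commonly used to downsample point clouds before surface reconstruction, replacing all points that fall in the same cubic cell by their centroid (the behaviour of PCL's `VoxelGrid` filter). The sketch below reproduces that idea in NumPy; the voxel size is an illustrative parameter, not a value from this study.

```python
import numpy as np

def voxel_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points that fall in the same cubic voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)   # integer voxel index per point
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)          # some NumPy versions return a 2-D inverse here
    sums = np.zeros((len(counts), 3))
    np.add.at(sums, inverse, points)       # accumulate coordinates per voxel
    return sums / counts[:, None]
```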

3. Results and Discussion

We developed a non-contact measurement system that uses depth cameras to accurately capture pig body size data and is suitable for on-site pig farming. This stress-free method protects animal welfare while collecting accurate data. We improved data quality with preprocessing techniques such as Gaussian, mean, and median filtering, followed by normal estimation with the least squares method, principal component analysis (PCA), and random sample consensus (RANSAC), which improved the efficiency of point cloud processing. Our experiments show that the RANSAC method significantly speeds up 3D reconstruction and is particularly effective at producing smooth pig body surfaces.

3.1. Estimation of Point Cloud Normals

Point cloud normals are an essential geometric surface feature of 3D point clouds, and many downstream algorithms rely on them, making normal estimation a crucial step in point cloud processing. This study explores the least squares method, principal component analysis (PCA), and random sample consensus (RANSAC). The least squares method finds the best-fitting function for the data by minimizing the sum of squared errors, which makes it well suited to fitting. Following the PCA principle, the process searches for a direction n in which the projections of all neighboring points are most concentrated, that is, the direction that minimizes the variance of the projections; n is ultimately identified as the eigenvector corresponding to the smallest eigenvalue of the neighborhood covariance matrix (Figure 5).
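The PCA procedure can be written compactly: with neighbourhood centroid p̄ and covariance C = (1/k) Σ (p_i - p̄)(p_i - p̄)ᵀ, the variance of the projections onto a unit vector n is nᵀCn, which is minimized by the eigenvector of the smallest eigenvalue. The sketch below also orients the normal toward an assumed viewpoint (PCA alone cannot fix the sign) and reports the surface-variation ratio λ_min / (λ₁ + λ₂ + λ₃), a common flatness measure; both additions and the function name are illustrative.

```python
import numpy as np

def pca_normal(neighbors: np.ndarray, viewpoint=None):
    """Return (unit normal, surface variation) for a (k, 3) neighbourhood."""
    centroid = neighbors.mean(axis=0)
    centered = neighbors - centroid
    cov = centered.T @ centered / len(neighbors)   # 3x3 covariance matrix C
    eigvals, eigvecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    n = eigvecs[:, 0]                              # direction of minimal variance
    if viewpoint is not None and np.dot(n, viewpoint - centroid) < 0:
        n = -n                                     # flip to face the sensor
    variation = eigvals[0] / eigvals.sum()         # ~0 on flat patches
    return n, variation
```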

RANSAC, which is widely used for scene stitching, relies on feature matching (e.g., SIFT matching) to compute the transformation between each subsequent image and the previous one. Image mapping then overlays the next image onto the coordinate system of the previous image, and the transformed images are finally fused. The figure below shows the results of processing with these three methods.
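The hypothesize-and-verify principle behind RANSAC applies equally to the normal-estimation role described in Section 2.5: repeatedly fit a plane to three random points, count the points within a distance threshold, keep the plane with the largest consensus set, and refine it on those inliers. The threshold, iteration count, and function name below are illustrative assumptions, not the parameters used in this study.

```python
import numpy as np

def ransac_plane(points: np.ndarray, iters: int = 200, threshold: float = 0.005,
                 seed: int = 0):
    """Robustly fit a plane n . p + d = 0; returns (unit normal, d, inlier mask)."""
    rng = np.random.default_rng(seed)
    best_mask, best_count = None, -1
    for _ in range(iters):
        sample = points[rng.choice(len(points), 3, replace=False)]
        n = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(n)
        if norm < 1e-12:                     # collinear sample: no unique plane
            continue
        n /= norm
        d = -n @ sample[0]
        mask = np.abs(points @ n + d) < threshold   # point-to-plane distances
        if mask.sum() > best_count:
            best_mask, best_count = mask, mask.sum()
    if best_mask is None:
        raise ValueError("no non-degenerate sample found")
    # Refine on the consensus set: least-variance direction via SVD.
    inliers = points[best_mask]
    centroid = inliers.mean(axis=0)
    _, _, vt = np.linalg.svd(inliers - centroid)
    n = vt[-1]
    return n, -n @ centroid, best_mask
```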

3.2. Validation of the Point Cloud Feature Detection Algorithm

Feature point detection on the collected pig point cloud data gave satisfactory results: key body parts such as the head, ears, back, and buttocks were identified effectively, and the method also performed well on curved surfaces. The algorithm achieved good results on fine details and easily overlooked feature points, providing a basis for subsequent model reconstruction and showing considerable potential for on-site applications (Figure 6).

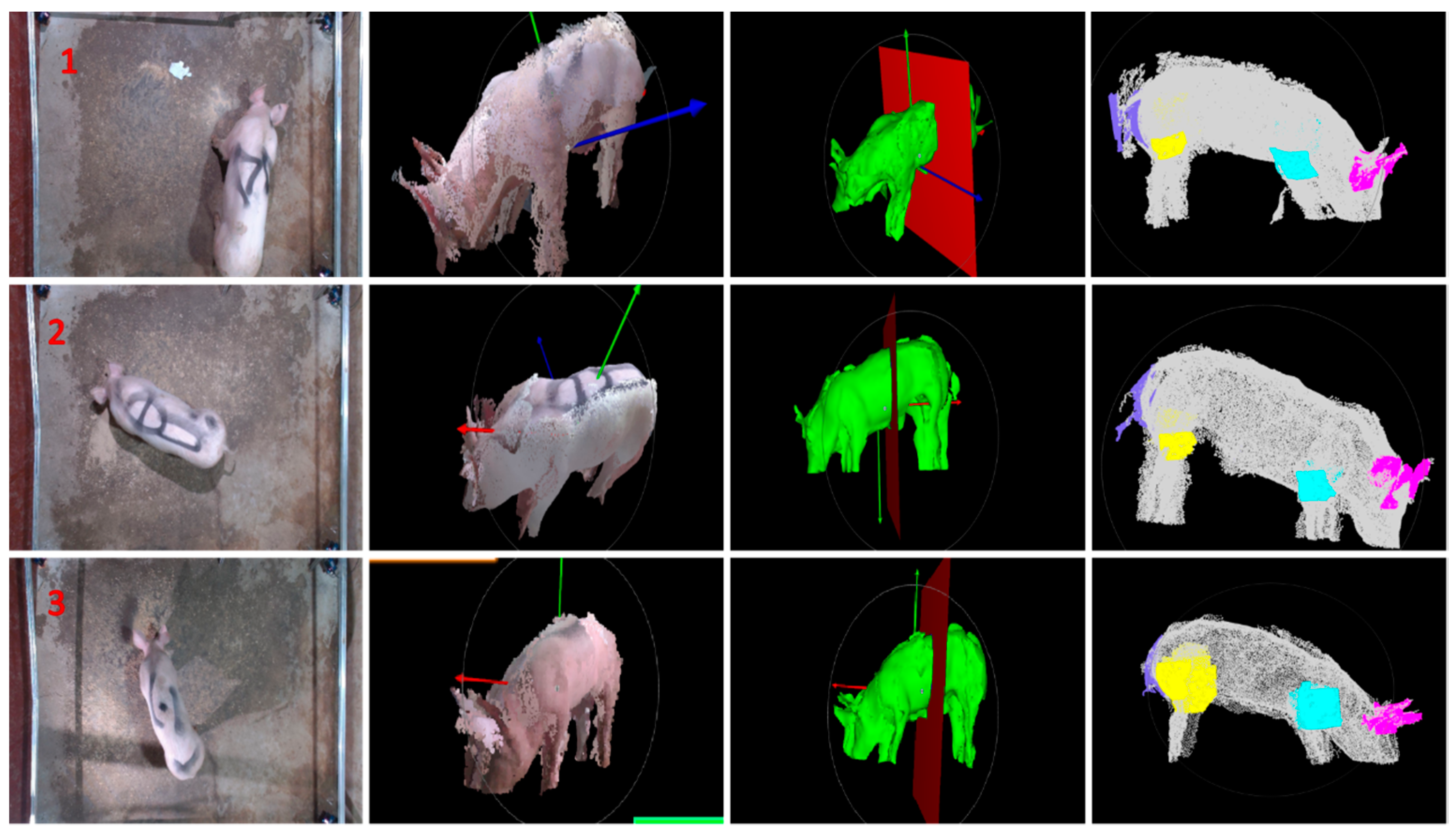

3.3. Validation and Result Analysis of Static Pigs

In the preliminary phase, we constructed and analyzed the model under laboratory conditions. After acquiring the point cloud data, we performed preprocessing, feature point detection, and 3D reconstruction (Figure 7). Comparing the manual tape measurements with the model estimates, the relative errors for body length, chest girth, abdominal girth, and back height were 0.2%, 1.73%, 4.48%, and 3.28%, respectively.
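The relative errors above compare model estimates against tape measurements. A minimal sketch, assuming the usual definition |estimated - manual| / manual × 100% (the paper does not state its formula explicitly); the function names and the example values are hypothetical and are not data from this study.

```python
def relative_error(estimated: float, manual: float) -> float:
    """Relative error in percent, using the manual tape measurement as the reference."""
    if manual == 0:
        raise ValueError("reference measurement must be non-zero")
    return abs(estimated - manual) / manual * 100.0

def mean_relative_error(pairs) -> float:
    """Average relative error (%) over (estimated, manual) pairs, e.g. several pigs."""
    errors = [relative_error(est, ref) for est, ref in pairs]
    return sum(errors) / len(errors)

# Hypothetical body-length values in cm (not measurements from this study).
print(round(relative_error(101.2, 100.0), 2))  # 1.2
```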

3.4. Pig Body Point Cloud Registration Experiment and Result Analysis

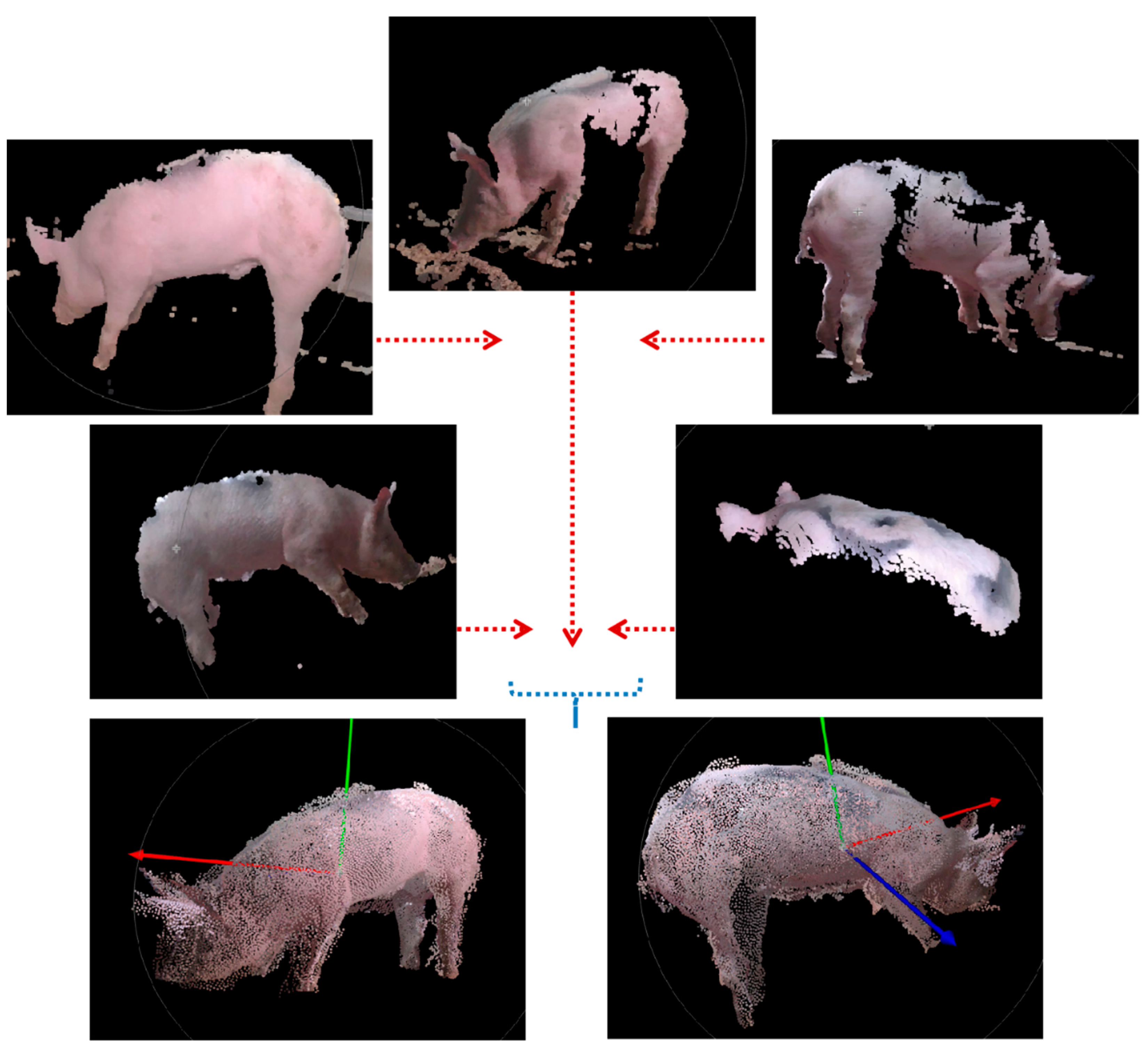

For the pig point cloud data, we fused the data from the five perspectives into one coordinate system, obtaining comprehensive and complete point clouds. Feature point detection significantly improved efficiency. The experimental results demonstrate the feasibility and effectiveness of this method and provide a model foundation for subsequent on-site applications (Figure 8).

3.5. Pig Body Point Cloud Reconstruction Experiment and Result Analysis

The 3D reconstruction method proposed in this study achieves point cloud reconstruction in complex pigpen environments. Experimental results show that it effectively removes noise from the point clouds and produces good reconstructions. The method is simple, easy to implement, and fast. The reconstructed surfaces from the experiments are shown in Figure 9.

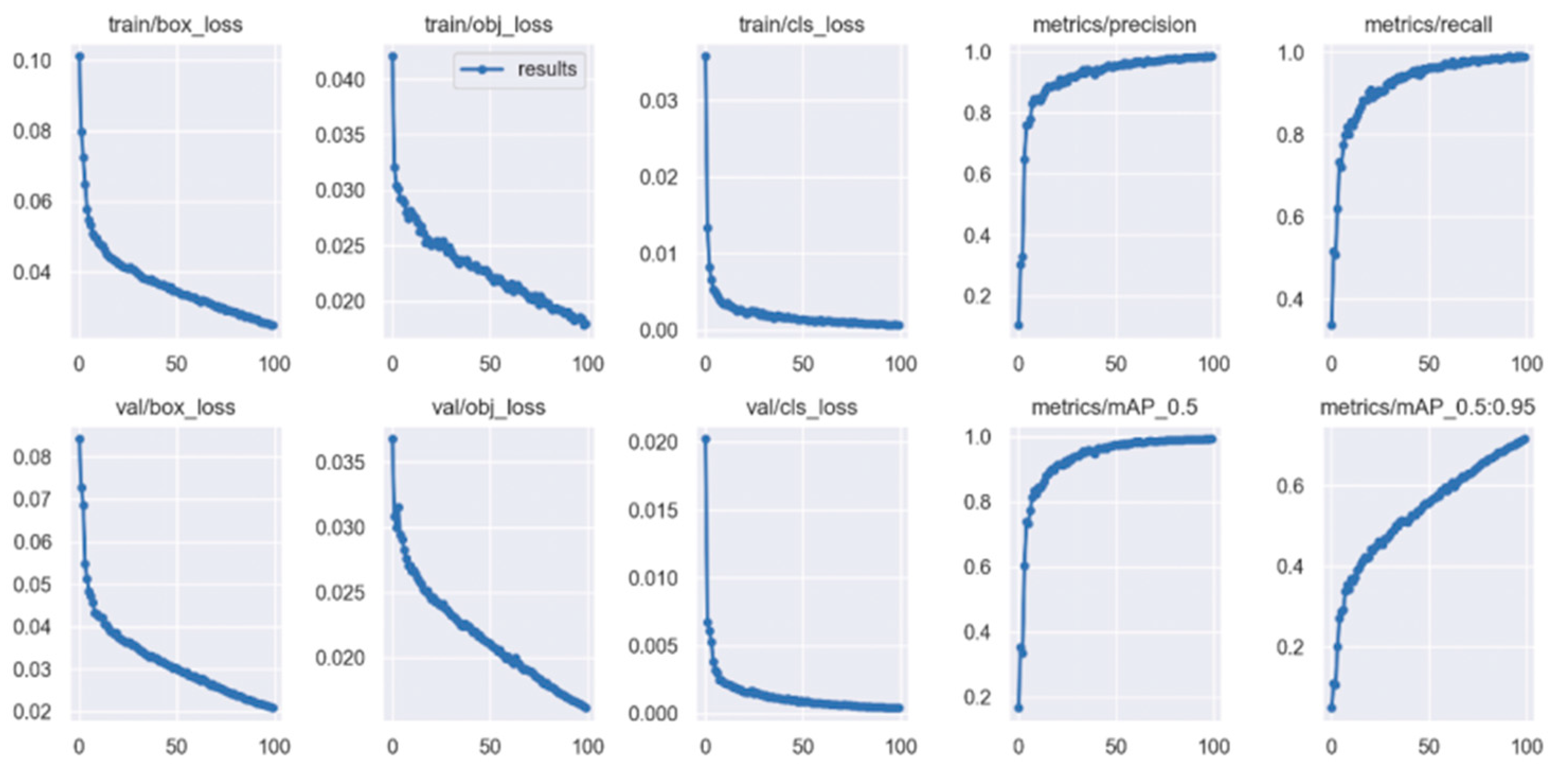

3.6. Parameter Estimation Analysis of Physiological Data

Pig body measurements are important indicators of growth and development, providing insights into physical condition, feed intake, and genetic performance. Researchers have made significant contributions to this field by analyzing head-to-tail point cloud data of pigs [31], while others have focused on estimating weight from back point cloud data [32]. The advantage of this paper lies in its comprehensive 3D reconstruction and analysis of all body parts, which enables a thorough data analysis. The parameters measured include chest girth and hip width, among others. Chest girth is the circumference at the widest point behind the front legs, and hip width is the horizontal width at the widest part of the hips. For instance, in the measurement and model parameter estimation of one pig, the relative errors of chest girth and hip width were 3.55% and 2.83%, respectively. In this study, feature point regions are identified and segmented in depth images and then mapped onto the 3D point clouds for training. The training curve is shown in Figure 10.
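The paper does not detail how chest girth and hip width are read off the reconstructed model, so the following is only one plausible sketch. It assumes the fused cloud has been aligned so that x runs head to tail, y is lateral, and z is vertical, and that the slice position (behind the front legs, or at the hips) is already known, for example from the detected feature points. Girth is approximated by the perimeter of the convex hull of a thin cross-sectional slab, which mimics a tape wrapped around the body, and width by the slab's lateral extent. All function names and the slab thickness are hypothetical.

```python
import numpy as np

def convex_hull(pts2d: np.ndarray) -> np.ndarray:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(map(tuple, pts2d))
    if len(pts) <= 2:
        return np.array(pts)

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return np.array(lower[:-1] + upper[:-1])

def _slab(points: np.ndarray, axis_pos: float, half_thickness: float) -> np.ndarray:
    """Points whose x coordinate lies within half_thickness of axis_pos."""
    return points[np.abs(points[:, 0] - axis_pos) <= half_thickness]

def girth_from_slice(points: np.ndarray, axis_pos: float,
                     half_thickness: float = 0.01) -> float:
    """Approximate girth: perimeter of the slab's convex hull in the y-z plane."""
    hull = convex_hull(_slab(points, axis_pos, half_thickness)[:, 1:])
    closed = np.vstack([hull, hull[:1]])          # close the polygon
    return float(np.linalg.norm(np.diff(closed, axis=0), axis=1).sum())

def width_from_slice(points: np.ndarray, axis_pos: float,
                     half_thickness: float = 0.01) -> float:
    """Approximate width: lateral (y) extent of the same slab."""
    y = _slab(points, axis_pos, half_thickness)[:, 1]
    return float(y.max() - y.min())
```

A convex hull bridges gaps in the slice, which helps where the underside is occluded from the cameras, but it will overestimate girth if stray points from railings or the floor remain in the slab.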

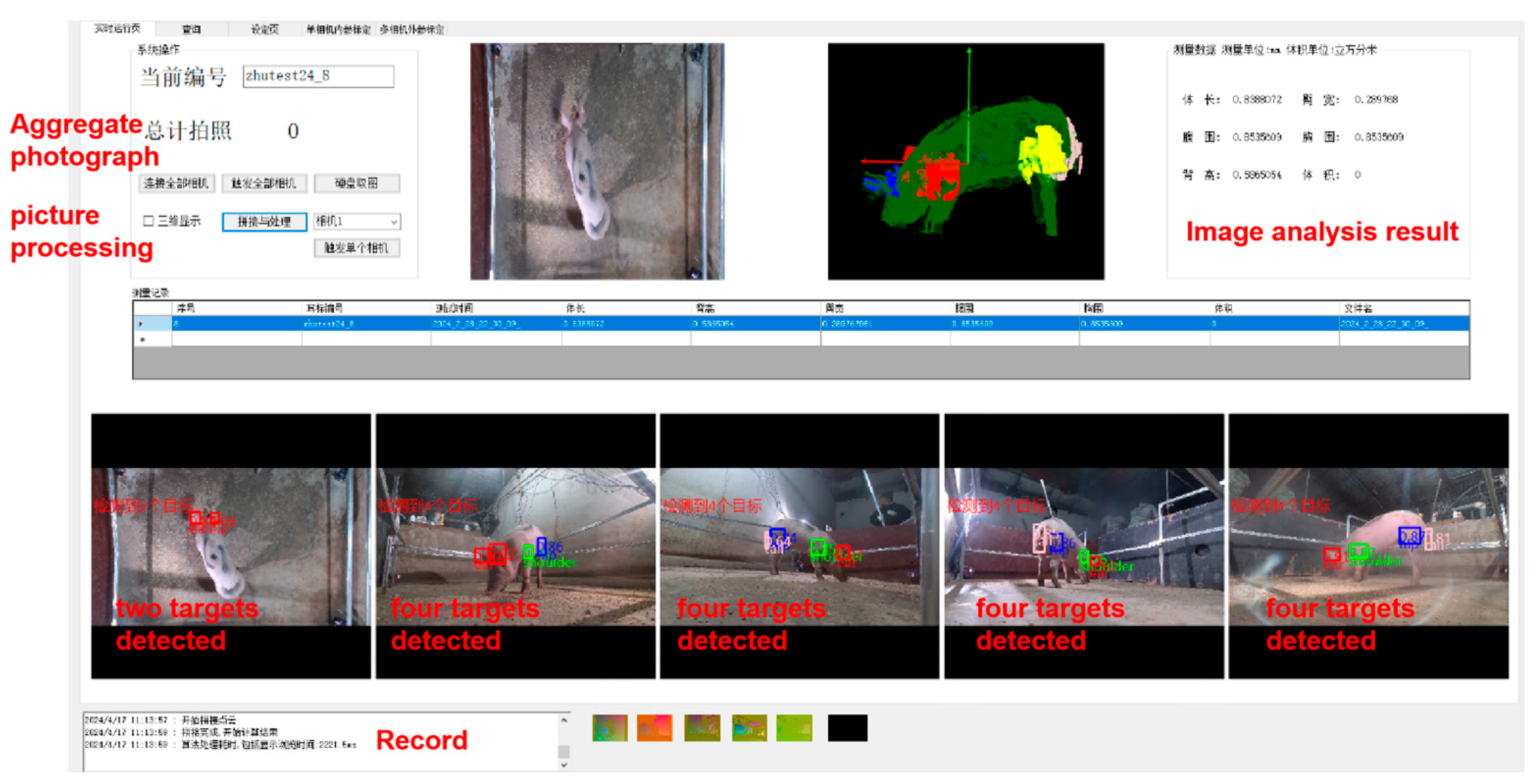

3.7. Discussions and Perspectives

Additionally, we developed an image processing and detection result display system with a concise, intuitive human–machine interface. It consists mainly of a communication interaction module, a camera interaction module, an image processing module, a system control module, and a human–machine interface module. The system displays real-time recognition results for feature points in images, marking them with rectangular boxes. It also processes the images captured by the five cameras, performs point cloud reconstruction, and generates and displays 3D models (Figure 11).

This paper integrates a 3D reconstruction model to perceive the physiological data of pigs, providing significant support for the development of precision livestock farming.