Abstract

With the development of artificial intelligence, intelligent agriculture has become a trend, and intelligent monitoring of agricultural activities is an important part of it. However, because quality and cost are difficult to balance, the economic benefits of agricultural activities have not reached the expected level. Farm supervision requires intensive human effort and may not produce satisfactory results. To achieve intelligent monitoring of agricultural activities and improve economic benefits, this paper proposes a solution that combines unmanned aerial vehicles (UAVs) with deep learning models. The proposed solution aims to detect and classify objects using UAVs in the agricultural industry, thereby enabling autonomous agriculture without human intervention. To this end, a highly reliable target detection and tracking system is developed using UAVs, with deep learning methods used to solve the target detection and tracking problem. The model uses data collected from DJI Phantom 4 unmanned aerial vehicles to detect, track, and classify different types of targets. The performance evaluation of the proposed method shows promising results. By combining UAV technology and deep learning models, this paper provides a cost-effective solution for intelligent monitoring of agricultural activities. The proposed method offers the potential to improve the economic benefits of farming while reducing the need for intensive human effort.

1. Introduction

Farming is an important industry worldwide [1]. As shown in Figure 1, the global smart agriculture market size was estimated at USD 18.12 billion in 2021, and it is expected to hit USD 43.37 billion by 2030 with a registered CAGR of 10.2% from 2022 to 2030 [2]. Hence, it is imperative to ensure the safety of farms. Farm surveillance requires intensive human efforts as it is difficult to monitor the entire farm. Farm thefts can seriously affect the farm owners both financially and psychologically. A study on agricultural crimes reveals that 33.4% of farms were robbed in 2018, and the majority of the thefts are due to trespassing. Having a real-time automated farm surveillance system can help farm owners to take action immediately. It reduces manual efforts, saves them time, and secures farm properties [3,4,5,6,7,8,9,10].

Figure 1.

Smart agriculture market size, 2021 to 2030 [2].

In the 21st century, technology is advancing rapidly, and the Internet of Things (IoT) is changing the world and improving the living environment. Drone technology has sparked a lot of interest in a variety of industries due to its numerous advantages. According to reports in Digital Journal, the commercial drone market is expected to grow by 57% from 2021 to 2028 [11,12,13,14,15,16,17,18,19,20]. Drones are increasingly used in the agriculture sector to monitor the health of crops and disperse fertilizers. Using drones, it is possible to track and detect objects for autonomous farming without human intervention. They can be used to monitor a farm in a far more efficient and cost-effective manner [21,22,23,24,25,26,27,28,29,30,31,32].

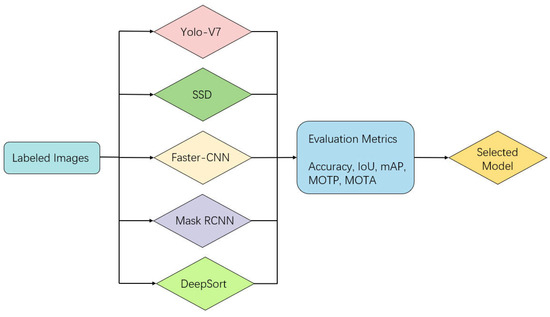

Our focus is to use drones to create a data-driven agricultural monitoring system, and to develop and evaluate a reliable UAV-based target detection and tracking method that can work under any type of weather conditions. In addition, a portal for monitoring farms will be developed to view the results and enable farm owners to make data-oriented decisions. A study of the latest UAV-based target detection and tracking methods [33] found that they have some significant shortcomings, such as not being able to detect or track multiple targets and small targets. This article evaluates the YOLOv7 [34], Faster RCNN [9], SSD [13,14,35,36], and Mask RCNN [14] models, which should be able to address these issues. The next step is to evaluate the models on different evaluation indicators, such as accuracy, mAP [7], IoU, F1 score, precision, and recall. Based on these results, the best model will be selected and further fine-tuned through intensive model training and hyperparameter adjustment to produce more accurate results.

The main contributions of developing a powerful farm monitoring system to our society are as follows: 1. Transformation from traditional to digital surveillance; 2. Easy, effective monitoring of the farm for farm owners at low maintenance and cost; 3. A method that will be helpful for other researchers working on drone-based surveillance systems; 4. Enhanced security and protection by leveraging technology and machines, thus minimizing human effort.

The rest of this paper is organized as follows. The related work is summarized in Section 2. Section 2.3 shows a detailed description of training and testing data preparation. Section 2.4 describes the methodology for each selected model and the proposed ensemble models. In Section 2.5, the results and case study are provided. Section 5 discusses the results of this research in the light of other similar studies. The Data Availability Statement explains the reasons for the non-disclosure of the data.

2. Materials and Methods

2.1. Machine Learning Model Based on UAV Data

A lot of research has been conducted to develop intelligent agricultural monitoring systems. Tian et al. proposed a dual-network target detection (DNOD) method [37]. In [38], features are used as a group of objects, and SSD-500 and YOLOv3 deep learning models are used for detection. In [39], the author uses the SSD and MobileNets algorithms. Huang et al. proposed the MOT algorithm to solve the problem of undetected small targets and class imbalance [40]. In order to track targets in crowded spaces, Dike proposed a deep quaternion network (DQN) [9]. Lu et al. (2018) selected YOLOv2 and YOLOv3 models for experiments [41]. In [42], an object detection classification method was used to classify individual points from 3D point cloud data. Ref. [43] proposed using two different models: DSOD for target detection and long short-term memory (LSTM) for target tracking. Pan et al. (2019) used visual data captured by drones to track multiple objects [44]. In [45], the authors proposed a new DAFSiamRPN tracker, based on SiamRPN, for drone-based object detection and tracking; it addresses the problem that UAVs are readily influenced during object tracking by diverse surroundings, such as full occlusion and variation of illumination. Xu et al. (2018) proposed an improved YOLO model that uses a small UAV to collect data at low altitudes and significantly improves the ability to identify small targets [46]. By improving the RetinaNet model, Tong et al. (2019) raised the average detection accuracy above 90% [47]. Ref. [48] proposed and compared two different target detection and tracking models: YOLOv7 and YOLOv5. Table 1 below compares previous studies carried out for smart farming surveillance.

Table 1.

Comparison of literature review.

2.2. Drone Technology Comparison and Solution Survey

Smart farming surveillance requires two types of functions. One is object detection, and the other is tracking the objects in the surveillance videos. In order to carry out these functions, there are various deep learning models that can be used.

Table 2 below compares the advantages and limitations of various models developed in the past for target detection. This comparison is based on evaluation indicators, real-time detection of objects, and the complexity of the model. Although some models, such as Faster R-CNN and YOLOv7, provide higher accuracy, some models cannot detect small targets.

Table 2.

Comparison of models.

As part of our technology survey, we also researched various deep learning models that focus on object tracking in a surveillance video. There are various advantages and disadvantages of each of these models.

A detailed comparison is shown in Table 3 below.

Table 3.

Comparison of object tracking models.

For this paper, we conducted research on drones and their specifications. The important parameters for us while selecting a drone are cost, flight time, control range, camera, speed, and obstacle avoidance. Table 4 lists the drones that will be able to meet the functional requirements of our paper. Traditional UAVs do not support auxiliary technology (sprinkling water and spraying pesticides), the cost of monitoring and management is high, the scale is limited, and remote management is limited. Among these, we selected the DJI Phantom 4 drone to collect the data since it has an inbuilt flight path and more flight time.

Table 4.

Comparison of UAV technology.

2.3. Data Engineering

2.3.1. Data Process

To build the drone-based surveillance system, we required data collected from drones to detect and track objects for actionable insights and information. Having a variety of farm data captured from the drone is crucial to building a robust and generalized model. In our paper, we collected the data using a DJI Phantom 4 Pro V2.0 drone from a local farm field. A total of 1140 images and 10 videos, amounting to 4 GB of data, were captured from different angles and heights. These drone-based data contain a variety of objects on the farm, such as sheep, cows, horses, trucks, cars, and humans. In addition, we leveraged publicly available datasets captured using drones, namely the VisDrone (V. 2020), Roboflow Universe, and sequoia-rgb-images databases, which total 20 GB. In order to acquire accurate and compelling insights, it is critical to properly analyze these raw data before feeding them into the model for training. We stored the collected raw data in Google Cloud, which is connected to Google Colab for further analysis.

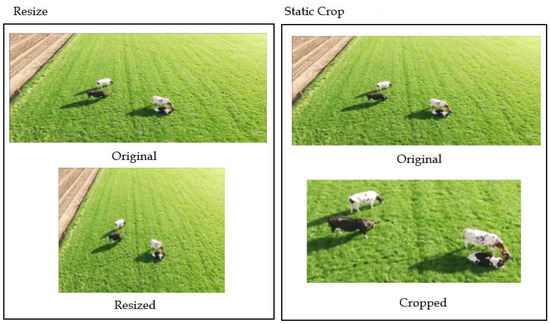

Next, we pre-processed the raw dataset, resizing the images to 640 × 640. The raw data that did not have annotations were labeled using the Roboflow Universe application. After pre-processing, we applied data transformation techniques such as image data augmentation to increase the amount of data, so that the model is exposed to more aspects of the data and thus generalizes better.

The next step was data preparation, where we prepared the data for training the deep learning models. For this purpose, we split the data into training, validation, and testing datasets in an 80:10:10 ratio, respectively. The raw dataset consisted of 19,476 images, and after transformation, the number of images increased to 58,428. Our training dataset consists of 46,742 images, whereas the validation and test datasets each consist of 5843 images.
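The 80:10:10 split and the image counts quoted above can be checked with a few lines of integer arithmetic. The sketch below is illustrative only: the function names, the rounding rule, and the fixed shuffle seed are assumptions, not the authors' code.

```python
import random


def split_counts(n_images, train_pct=80, val_pct=10):
    """Return (train, val, test) sizes using integer percent arithmetic."""
    n_train = (n_images * train_pct + 50) // 100  # round to nearest image
    n_val = (n_images * val_pct + 50) // 100
    n_test = n_images - n_train - n_val           # remainder goes to test
    return n_train, n_val, n_test


def split_items(items, seed=0):
    """Shuffle a copy of items and cut it into train/val/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)         # assumed seed, for repeatability
    n_train, n_val, _ = split_counts(len(shuffled))
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


print(split_counts(58428))  # (46742, 5843, 5843)
```

With 58,428 augmented images this reproduces the 46,742 / 5843 / 5843 partition reported in the text.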

2.3.2. Data Collection

We collected data from different data sources, including VisDrone and Roboflow. Table 5 below contains the datasets that were used for training, validation, and testing the models.

Table 5.

Dataset collection.

VisDrone: The first one is the VisDrone dataset, which is a large drone-based dataset. It contains a total of 288 videos comprising 261,908 frames, together with 10,209 images. It covers multiple objects, including pedestrians, vehicles, bicycles, and tricycles, in urban and country environments under different lighting and weather conditions. The frames are labeled with bounding boxes of the targets. The VisDrone2019-DET dataset contains training, validation, and testing datasets, where the training dataset contains 6174 images and respective annotations, and the total size is 1.79 GB. Moreover, the VisDrone2019-VID dataset contains videos, totaling 11.16 GB of training, testing, and validation data. Below, Figure 2a shows a sample of the images in our dataset. The targeted classes are cows, sheep, horses, humans, trucks, and cars. These targeted classes yield 5218 relevant images from the VisDrone dataset for our model training.

Figure 2.

(a) Data sample from all the datasets. (b) DJI Phantom 4 V2.0 data sample.

Roboflow: This is an open-source data repository used for computer vision projects. There are a variety of open datasets available to download as per our needs. For this paper, we downloaded and annotated horse, cow, sheep, human, truck, and car datasets. In total, of all the classes, we collected and annotated 13,118 images. Later, these data were integrated with other data for model training purposes. Below are the samples of the raw dataset as shown in Figure 2a.

Additionally, we flew the DJI Phantom 4 Pro V2.0 drone over local farm fields to collect data. A total of 1140 images and 10 videos were captured from different angles and heights, covering different objects for model training, such as humans, cows, horses, cars, and trucks. The recorded videos and images are 4K high-resolution shots captured with an optimized f/2.8 wide-angle lens. In terms of image quality, the images and videos are sharp and vivid with appropriate color accuracy. In the future, we are planning to collect more data with this drone using a flight path around the fence of the fields. We are also planning to collect data on different types of fields, such as cattle farms, vineyard farms, agricultural farms, and dairy farms. These data will be stored on Google Drive. Later, these data will be integrated with other data for model training purposes. Samples of the raw dataset are shown in Figure 2b.

2.3.3. Data Pre-Processing

Multimedia processing and other related operations do not affect the total flight time of the drone. Our dataset includes different image sizes; hence, we resized our images to 512 × 512, and images below this size were padded to 512 × 512. Resizing the images reduces processing time since larger-resolution images take longer to process.
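The resize-then-pad step can be reduced to simple geometry. The sketch below assumes that the aspect ratio is preserved, that small images are never enlarged, and that padding is added on the right and bottom; none of these choices is stated in the text, and the function name is hypothetical.

```python
def letterbox_geometry(width, height, target=512):
    """Return (new_w, new_h, pad_right, pad_bottom) for a target x target canvas."""
    # Shrink large images so the longer side fits; never enlarge small ones.
    scale = min(1.0, target / max(width, height))
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    # Whatever space is left on the canvas is filled with padding.
    return new_w, new_h, target - new_w, target - new_h


print(letterbox_geometry(400, 300))    # (400, 300, 112, 212): padded only
print(letterbox_geometry(1024, 768))   # (512, 384, 0, 128): shrunk, then padded
```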

The data we collected with the DJI drone do not have annotations; hence, we labeled some of these images using an image annotation tool called Plainsight. We used RGB images for our model, so grayscale images were excluded from the dataset. Low-quality images were removed as we encountered them. Below, Figure 3 shows the samples for which we created the bounding boxes. The bounding boxes were created for sheep, trucks, and cows.

Figure 3.

Data pre-processing sample.

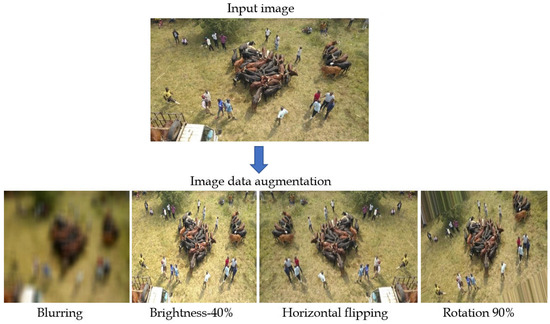

2.3.4. Data Transformation

Image data augmentation is the main data transformation approach we used for this paper. It is a technique used to increase the size of a dataset and bring variability into it by producing modified versions of the images/videos in the dataset. Because our dataset may contain images taken under a narrow range of settings, a model trained on it may fall short in other situations; the augmented data help to deal with such situations. As a result, the model is exposed to more aspects of the data and thus generalizes better. The types of augmentation we selected and applied are shown in Figure 4. These techniques were selected because their characteristics fit aerial data, such as data from drones, which are relevant in many computer vision tasks.
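As an illustration of how an augmentation must transform both the image and its annotations, the sketch below applies a horizontal flip and a brightness change to a tiny grayscale image stored as nested lists. These two augmentations are assumed examples chosen for simplicity; the text does not list the techniques actually applied, and all names are hypothetical.

```python
def hflip_image(pixels):
    """Mirror a row-major image (list of rows) left to right."""
    return [list(reversed(row)) for row in pixels]


def hflip_box(box, image_width):
    """Mirror an (x_min, y_min, x_max, y_max) box inside an image of given width."""
    x_min, y_min, x_max, y_max = box
    # The left edge becomes the right edge, so the two x values swap roles.
    return (image_width - x_max, y_min, image_width - x_min, y_max)


def adjust_brightness(pixels, factor):
    """Scale 8-bit intensities by factor and clip them to the range 0..255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in pixels]


image = [[1, 2, 3],
         [4, 5, 6]]
print(hflip_image(image))                   # [[3, 2, 1], [6, 5, 4]]
print(hflip_box((10, 20, 30, 40), 100))     # (70, 20, 90, 40)
print(adjust_brightness([[100, 200]], 1.5)) # [[150, 255]]
```

Geometric augmentations such as flips change the bounding boxes, whereas photometric ones such as brightness leave them untouched.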

Figure 4.

Data transformation sample.

2.3.5. Data Preparation

After data pre-processing and transformation, the datasets were ready to be divided into three categories: training, testing, and validation. Images were chosen at random, with 80% of the data allocated to the training dataset, 10% to the validation dataset, and the remaining 10% to the test dataset. To achieve better performance, the different object groups detected by the model, such as livestock, humans, and farming machines/equipment, were trained separately. Thus, each classification and detection deep learning model was prepared with corresponding training, validation, and test datasets. The objects can be classified into the following classes: cow, horse, sheep, human, truck, and car.

Images of each object, as shown below in Figure 5, were used as part of the training dataset for training the model to detect these objects from drone-based video data.

Figure 5.

Data preparation sample.

2.3.6. Data Statistics

We collected the data from the drone as well as a publicly available dataset. From the two sources, we collected 19,476 images/videos as a raw dataset. Also, transformation procedures such as augmentation of data were used to enhance the quality of our dataset and expand the variety of the data.

After completing this phase, we had built an extensive farm database with 19,476 images/videos containing objects that belong to one of the six classes. With the prepared dataset, we split the images into training, validation, and testing datasets in an 80:10:10 ratio, consistent with the split described in the data preparation step. Below, Table 6 summarizes the data statistics, which are also visualized with the help of a bar graph.

Table 6.

Data statistics count.

2.4. Machine Learning Models

2.4.1. Model Proposals

Our paper’s main objective is to build a real-time agricultural surveillance system using drones by detecting, classifying, and tracking the expected objects in real time along the specified route. To achieve this, the models that we considered are YOLOv7, SSD, Mask R-CNN, and Faster R-CNN for object detection and YOLOv7+DeepSort for object tracking. Multiple research papers published in the past indicate that the deep learning models described below can be used for our paper.

- 1.

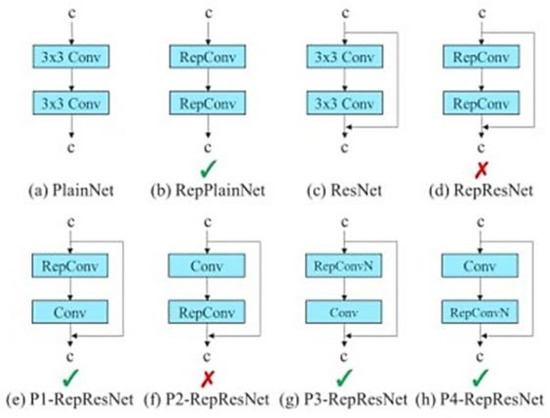

- Improved YOLOv7:

YOLO stands for “You Only Look Once”. It is a framework for real-time object detection that combines the classification of objects and the prediction of bounding boxes into a single end-to-end differentiable network that can recognize specific objects in drone-captured recordings, live feeds, and images. It was created and is maintained under the Darknet framework. As a part of this paper, we utilize YOLOv7, the newest addition to the YOLO family, which is the most advanced version and outperforms other detectors in terms of speed and accuracy.

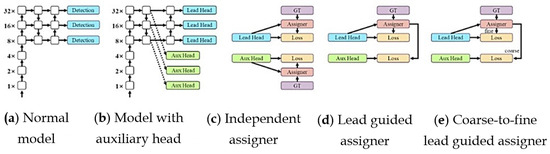

The other changes introduced in YOLOv7, as part of its trainable bag of freebies, are planned re-parameterized convolution and a coarse-for-auxiliary, fine-for-lead-head label assigner. In the first technique, shown in Figure 6, a layer with concatenation or residual connections, whose RepConv must not have an identity connection, is substituted by a layer called RepConvN, which does not have any identity connection. The second technique obtains the training labels of the auxiliary head and the lead head simultaneously. This label assigner is optimized using ground-truth labels and lead head predictions, as shown in Figure 7.

Figure 6.

Planned re-parameterized model.

Figure 7.

Coarse for auxiliary and fine for lead head label assigner.

With the above changes, YOLOv7 reduces the parameters by around 40% and the computation by around 50% relative to other cutting-edge real-time object detectors, while also increasing detection accuracy and speed. These capabilities support the use of complex datasets and a multi-label technique, which gives the flexibility to utilize specific classes and multiple bounding boxes for a particular object. This greatly improves the ability to recognize small objects with higher accuracy, and the model can identify and label the various objects at hand, such as farm machinery, humans, cattle, and other objects present on farm fields.

Model Inputs and Outputs: This model takes drone-captured RGB images/videos of size 640 × 640 and processes them. The expected output from the model is to detect the targeted objects with good accuracy.

Transfer Learning in YOLOv7: As part of transfer learning, we trained the baseline model on custom data and also modified the hyperparameters such as batch size, number of epochs, and weights and set the image size to 640 to increase the performance of the model.

- 2. Improved Mask RCNN:

Mask RCNN is a well-known deep learning model used in computer vision for object detection and classification. It is an extension of the Faster RCNN model. Like Faster RCNN, it is made up of convolution layers, a region proposal network, and a fully connected network. The only difference between the two is that the Mask RCNN model has an additional layer to predict image segmentation. Mask RCNN predicts a mask for every object along with its bounding box and colors the objects differently. The step-by-step working of the model is as follows: First, it extracts feature maps from the images using a ConvNet. Next, these feature maps are forwarded to the region proposal network (RPN), which predicts the bounding boxes. The next layer is the RoI pooling layer, which rescales all the bounding boxes to the same size. Finally, the fully connected layer predicts the class label and bounding boxes. In addition, Mask RCNN generates the segmentation mask with the help of the region of interest and the intersection over union (IoU). If the IoU is more than 0.5, the region is considered a good region of interest. The model then predicts the segmentation mask for each object in the image. Since this model has the additional capability to generate segmentation masks, it is very accurate and used in many applications.

Model Inputs and Outputs: This model takes drone-captured RGB images/videos of size 1024 × 1024 × 3 and processes them. The expected output from the model is to detect the targeted objects with good accuracy.

Transfer Learning in Mask RCNN: As part of transfer learning, we trained the baseline model on custom data and also modified the hyperparameters such as batch size, number of epochs, and weights and set the image size to 1024 × 1024 × 3 to increase the performance of the model.

- 3.

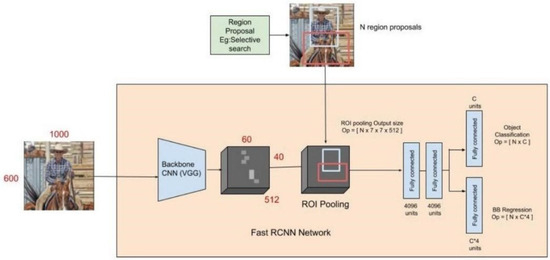

- Improved Faster RCNN:

Faster RCNN is one of the robust algorithms for real-time object detection, and its performance is 250 times better than Fast RCNN. It consists of two modules, namely, the region proposal network (RPN) and Fast RCNN. The RPN is used to generate bounding boxes, whereas Fast RCNN helps in classifying the objects.

At first, when an image is fed to the convolutional neural network, it generates feature maps that act as an input to the region proposal network (RPN). The RPN helps in determining the regions that most likely contain an object. Anchors are vital in Faster RCNN. Nine anchors of different scales and aspect ratios are used for generating region proposals. The RPN in Faster RCNN is more efficient than the selective search used in Fast RCNN. An anchor is considered to contain an object if its IoU with a ground-truth box is greater than 0.7.
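The nine anchors can be sketched as three scales crossed with three aspect ratios. The scales (128, 256, 512) and ratios (0.5, 1, 2) below are the defaults from the original Faster R-CNN paper and are assumptions here, since this paper does not state its anchor settings; the function name is hypothetical.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return nine (x_min, y_min, x_max, y_max) anchors centred on (cx, cy)."""
    anchors = []
    for scale in scales:
        for ratio in ratios:              # ratio = height / width
            # Keep the area close to scale**2 while changing the shape.
            w = scale / math.sqrt(ratio)
            h = scale * math.sqrt(ratio)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = make_anchors(400, 400)
print(len(anchors))   # 9
print(anchors[1])     # (336.0, 336.0, 464.0, 464.0): the square 128 x 128 anchor
```

In the RPN, this set is generated at every feature-map location, and each anchor is then labeled by its IoU with the ground-truth boxes.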

The second module is the Fast RCNN model. Here, the region proposal is generated by sending the feature vector of the RPN to the SoftMax and Regressor. The region proposals are then sent to the ROI pooling layer, which is then connected to the fully connected layer and helps in classifying the objects. For our paper, we customized the backbone to ResNet-50 and used images of input size 800 × 800.

Model Inputs and Outputs: This model takes drone-captured RGB images/videos and processes them. The expected output from the model is to detect the targeted objects with good accuracy.

Transfer Learning in Faster RCNN: As part of transfer learning, we trained the baseline model on custom data and also modified the hyperparameters such as batch size, number of epochs, and weights and resized the images. The Faster RCNN architecture is shown in Figure 8.

Figure 8.

Improved Faster RCNN architecture.

- 4. Improved SSD (Single-Shot Multi-Box Detector):

The approach of this model is based on a feed-forward CNN. It comprises two parts: feature map extraction and convolution filters. The SSD does not use a dedicated region proposal network. The input to the SSD model should contain images with ground-truth bounding boxes, which are boxes around the objects to be detected in that image. Images containing items such as humans, livestock, equipment, and other farm objects are sent into the SSD. In the beginning, the SSD uses VGG16 as a base network to extract feature maps. After VGG16, it uses six convolution layers. These layers decrease in size progressively and detect objects at multiple scales. Each convolution layer uses multiple default bounding boxes of different sizes and aspect ratios across the entire image.

Multiple boxes are used to obtain the highest overlap with the ground-truth boxes. In the end, the SSD uses non-max suppression to eliminate duplicate predictions and, based on confidence scores, keeps the box that best overlaps the object. To achieve the highest accuracy, predictions at multiple scales are produced and separated by aspect ratio. The loss is calculated as the mismatch between the predicted bounding box and the ground-truth box. Hard negative mining is performed in the SSD because a large number of negative matches are present. So, instead of using all the negatives to calculate the loss, the SSD algorithm selects the negatives with the highest loss and maintains a fixed ratio between negatives and positives.
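Hard negative mining can be sketched as a selection over per-box losses. The cap of three negatives per positive follows the original SSD paper and is an assumption here, as are the function and argument names; this is an illustration, not the training code used in this work.

```python
def hard_negative_mining(losses, is_positive, neg_pos_ratio=3):
    """Return indices of the default boxes that contribute to the confidence loss."""
    positives = [i for i, pos in enumerate(is_positive) if pos]
    negatives = [i for i, pos in enumerate(is_positive) if not pos]
    # Keep only the hardest negatives: those with the highest loss.
    negatives.sort(key=lambda i: losses[i], reverse=True)
    kept_negatives = negatives[:neg_pos_ratio * len(positives)]
    return sorted(positives + kept_negatives)


losses = [0.1, 0.9, 0.5, 0.3, 0.8, 0.2, 0.7]
is_positive = [True, False, False, False, False, False, False]
print(hard_negative_mining(losses, is_positive))  # [0, 1, 4, 6]
```

With one positive box, only the three negatives with the largest losses (indices 1, 4, and 6) are kept, so easy background boxes do not dominate the loss.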

Transfer Learning in SSD: As part of transfer learning, we trained the baseline model on custom data and also modified the hyperparameters such as batch size, number of epochs, and weights and set the image size to 480 to increase the performance of the model.

- 5.

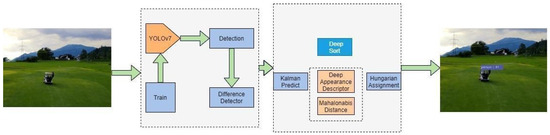

- Object Tracking Algorithm YOLOv7+ DeepSort:

DeepSort is one of the most powerful and popular algorithms for object tracking. It is an extension of the SORT algorithm. SORT ignores the appearance of the target and deletes targets that have no match in consecutive frames; because of this, the ID assigned to a particular target changes frequently. The most crucial component of the algorithm is the Kalman filter. DeepSort extracts appearance information with the help of ReID, which decreases ID switching. It uses the squared Mahalanobis distance metric, and in the last stage of matching it performs IoU matching on the detected targets. It also helps in tracking missing tracks. In our system, the YOLOv7 algorithm is used for object detection, and DeepSort is used for object tracking. The DeepSort architecture is shown in Figure 9.
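The role of the squared Mahalanobis distance can be illustrated as follows: the distance between a new detection and a track's Kalman-predicted state is compared against a chi-square threshold, and matches beyond the threshold are ruled out. The sketch below is restricted to a 2-D position for readability, whereas the actual tracker works on a higher-dimensional measurement; the function names are hypothetical.

```python
def squared_mahalanobis_2d(measurement, mean, cov):
    """Squared Mahalanobis distance between a 2-D measurement and a Gaussian."""
    dx = measurement[0] - mean[0]
    dy = measurement[1] - mean[1]
    (a, b), (c, d) = cov
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    # Inverse of the 2 x 2 covariance, applied as diff^T * cov^-1 * diff.
    inv = ((d / det, -b / det), (-c / det, a / det))
    return (dx * (inv[0][0] * dx + inv[0][1] * dy)
            + dy * (inv[1][0] * dx + inv[1][1] * dy))


# 95% quantile of the chi-square distribution with 2 degrees of freedom.
CHI2_95_2DOF = 5.9915


def is_plausible_match(measurement, mean, cov, gate=CHI2_95_2DOF):
    """True if the detection lies inside the track's 95% gating region."""
    return squared_mahalanobis_2d(measurement, mean, cov) <= gate


cov = ((4.0, 0.0), (0.0, 1.0))
print(squared_mahalanobis_2d((2.0, 1.0), (0.0, 0.0), cov))  # 2.0
print(is_plausible_match((6.0, 0.0), (0.0, 0.0), cov))      # False
```

Because the distance is scaled by the predicted covariance, a track with an uncertain position accepts detections from a wider area than a confidently predicted one.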

Figure 9.

Improved DeepSort architecture.

Model Inputs and Outputs: This model takes drone-captured RGB images/videos of size 1024 × 1024 × 3 and processes them. The expected output from the model is to track the targeted objects with good accuracy.

2.4.2. Model Comparison and Justification

When it comes to performance, each model has its own set of advantages and disadvantages. In order to obtain the best performance from a model, we need to fine-tune it with a variety of input data. Below are the models we chose for object detection and object tracking. We compare the target problem, features, strengths, and limitations of these models in the following section.

For computer vision tasks, YOLOv7 is the most precise and fastest real-time object detection model. It is also referred to as Trainable Bag-of-Freebies and establishes a new standard for real-time object detectors. This most recent YOLO version features unique “compound scaling” and “extend” techniques that efficiently use computation parameters. The network architecture of YOLOv7 is faster and more resilient, offering a more efficient feature integration approach, accurate object detection performance, a stable loss function, improved label assignment, and model training efficiency. It outperforms both convolutional and transformer-based object detectors.

Mask RCNN is a state-of-the-art region-based convolutional neural network algorithm for image segmentation and instance segmentation. The model architecture is similar to Faster RCNN. The backbone of this model is ResNet-50 or ResNet-101. In addition, the Mask RCNN model has an additional layer to predict the segmentation mask. This additional layer improves the accuracy of predicting the objects in the image.

Faster RCNN is one of the most advanced deep learning models for object detection and is a multi-stage method. It has two stages: RPN and Fast RCNN. RPN is used for determining the bounding boxes, whereas Fast RCNN classifies the object. The advantage of this model is that it can work well on tiny objects and has a faster runtime. The disadvantage is that it is a complex model.

The SSD (single-shot multi-box detector) is a well-known algorithm for real-time object detection. Its main purpose is to detect large and small objects with high precision. A major highlight of the SSD model is its use of different default boxes. The approach of the SSD is to produce multiple bounding boxes with different aspect ratios and assign a confidence score to each of the boxes. The box with maximum confidence that has maximum overlap with the object class is selected. Finally, non-maximum suppression is applied to select the final detections.
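Non-maximum suppression can be sketched as a greedy loop: take the highest-scoring box, discard every remaining box that overlaps it too much, and repeat. The IoU threshold of 0.5 and the function names are assumptions for illustration, not settings reported in this paper.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept, ordered from highest to lowest score."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not overlap an already kept box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is treated as a duplicate and dropped, while the distant third box survives.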

Liu et al. [13] used the SSD, YOLO, and Faster RCNN models to compare the performance on the Pascal VOC dataset, and SSD performs better as compared to other models with 80.0 percent MAP as an evaluation metric to detect the objects. By introducing small convolutional filters for default boxes, this model can be trained fully for good accuracy.

Accuracy improves as more layers are included, with some connections being skipped. As a limitation, only the high-resolution layers in the SSD can detect small objects. Features of different depths can identify small and large objects at the same time, which is critical for resolving variations in object scales. Table 7 compares the results of the selected models.

Table 7.

Comparison of the results of selected models.

2.4.3. Model Evaluation Methods

To evaluate the performance of the object detection models (YOLOv7, R-CNN, Faster R-CNN, Mask R-CNN, and SSD), different evaluation metrics are used. The metrics that will be utilized to assess model performance are described below. We will use the training dataset to fit the models. To compare the performance of the models, we will use the validation dataset. The final metrics evaluation will be performed on the testing data.

For measuring the performance of the object detection models, we will use the indicators mAP, FPS, accuracy, and intersection over union (IoU).

For object tracking, we will use the metrics multiple object tracking precision (MOTP) and multiple object tracking accuracy (MOTA) to evaluate.

Intersection over Union (IoU): This measures the amount of overlap between a predicted bounding box and the ground-truth box, as the area of their intersection divided by the area of their union. The IoU value increases as the overlapped region grows.
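As a minimal sketch, IoU for two axis-aligned boxes can be computed as below. The (x_min, y_min, x_max, y_max) box format and the function name are assumptions made for illustration.

```python
def intersection_over_union(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


print(intersection_over_union((0, 0, 2, 2), (0, 0, 2, 2)))  # 1.0 (identical boxes)
print(intersection_over_union((0, 0, 2, 2), (5, 5, 6, 6)))  # 0.0 (no overlap)
```

Two 2 × 2 boxes offset by one unit in each direction share an area of 1 out of a union of 7, giving an IoU of 1/7.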

MAP (Mean Average Precision): The average precision (AP) is defined as the area beneath the precision–recall curve. The MAP is determined by summing each class’s average precision and dividing by the total number of classes. Both false positives and false negatives are taken into account by MAP. As a result, MAP is well suited to detection applications.
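The two steps, area under the precision–recall curve and averaging over classes, can be sketched as follows. The trapezoidal rule is a simplification assumed here; benchmark toolkits typically use an interpolated precision curve instead. The function names and the example AP values are hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under a precision-recall curve via the trapezoidal rule.

    recalls must be sorted in ascending order and aligned with precisions.
    """
    area = 0.0
    for i in range(1, len(recalls)):
        width = recalls[i] - recalls[i - 1]
        area += width * (precisions[i] + precisions[i - 1]) / 2
    return area


def mean_average_precision(ap_per_class):
    """Average the per-class AP values (a dict mapping class name to AP)."""
    return sum(ap_per_class.values()) / len(ap_per_class)


print(average_precision([0.0, 0.5, 1.0], [1.0, 1.0, 0.5]))  # 0.875
print(mean_average_precision({"cow": 0.75, "sheep": 0.25}))  # 0.5
```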

FPS: The frames-per-second rate of the object detection model determines how quickly it processes videos and images to obtain the desired detection result, and we can calculate the FPS using the below equation
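A common definition of FPS, assumed here, divides the number of processed frames by the elapsed wall-clock time. The sketch below shows both the ratio itself and a simple way to time an arbitrary per-frame function; `process_frame` stands in for a detector call and is hypothetical.

```python
import time


def frames_per_second(num_frames, elapsed_seconds):
    """FPS as the number of frames divided by the processing time in seconds."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return num_frames / elapsed_seconds


def measure_fps(process_frame, frames):
    """Time process_frame over all frames and return the achieved FPS."""
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading from the timer on very short runs.
    return len(frames) / elapsed if elapsed > 0 else float("inf")


print(frames_per_second(120, 4.0))  # 30.0
```

For a fair comparison between detectors, the timed function should include the same pre-processing and post-processing steps for every model.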

2.4.4. Model Validation and Evaluation Results

Improved YOLOv7: In order to train our model, we leveraged the NVIDIA Tesla K80 GPU, and the custom dataset was labeled using the Roboflow labeling tool. The data were then augmented and transformed to increase the dataset diversity. We used the number of images for each category of classification and split the dataset into training, validation, and testing data sets.

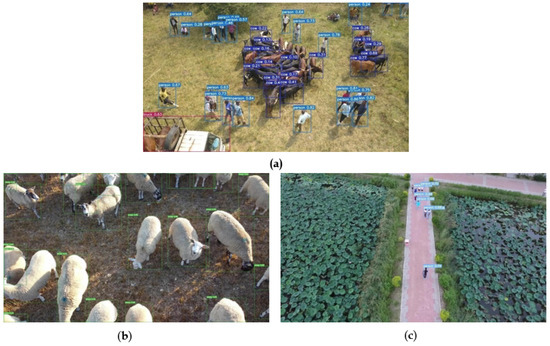

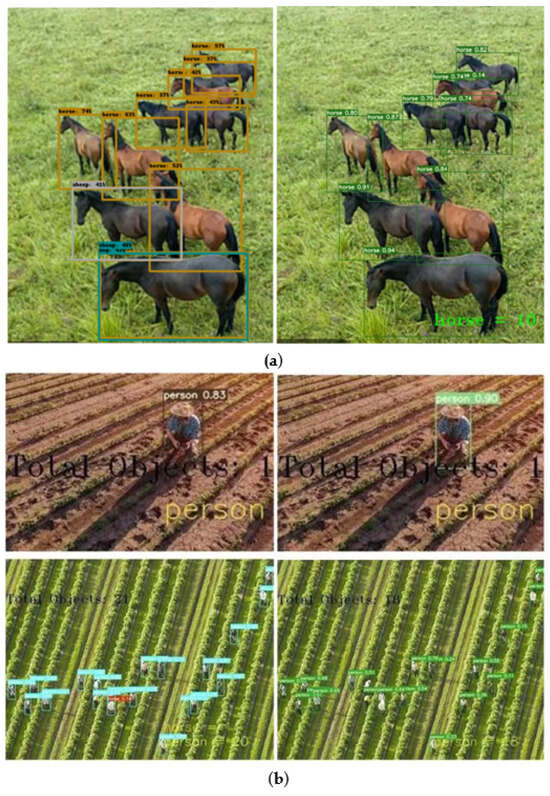

We customized the pre-trained YOLOv7 model for this work by modifying the hyperparameters: the batch size was set to 16, and the default image size of 640 was used. A data YAML file was used to train the model on the drone-captured images of the three classes: person, sheep, and cow. We also set the number of epochs to 50 to increase performance. Once the model was trained, we called the detect.py script to detect objects in the test data with a confidence threshold of 0.1, which generated predictions for each frame, including bounding boxes with class labels. YOLOv7 cow and person detection is shown in Figure 10a, sheep detection in Figure 10b, and person detection in Figure 10c.

Figure 10.

(a) YOLOv7 cow and person detection. (b) YOLOv7 sheep detection. (c) YOLOv7 person detection.
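The YOLOv7 training setup described above (three classes, batch size 16, image size 640, 50 epochs, confidence threshold 0.1) can be sketched as a small helper that renders the data YAML file and assembles the command lines. The dataset paths, the weight file names, and the exact flag names are assumptions based on the public YOLOv7 repository and should be checked against the version in use.

```python
CLASSES = ["person", "sheep", "cow"]


def render_data_yaml(root, classes):
    """Render a YOLO-style data file; the folder layout is hypothetical."""
    names = ", ".join(f"'{name}'" for name in classes)
    return (
        f"train: {root}/train/images\n"
        f"val: {root}/valid/images\n"
        f"test: {root}/test/images\n"
        f"nc: {len(classes)}\n"
        f"names: [{names}]\n"
    )


def train_args(data_yaml, epochs=50, batch_size=16, img_size=640):
    """Argument list for the training script (flag names assumed)."""
    return [
        "python", "train.py",
        "--data", data_yaml,
        "--weights", "yolov7.pt",  # assumed pre-trained checkpoint name
        "--epochs", str(epochs),
        "--batch-size", str(batch_size),
        "--img-size", str(img_size), str(img_size),
    ]


def detect_args(weights, source, img_size=640, conf=0.1):
    """Argument list for the detection script (flag names assumed)."""
    return [
        "python", "detect.py",
        "--weights", weights,
        "--source", source,
        "--img-size", str(img_size),
        "--conf-thres", str(conf),
    ]
```

The returned lists can be passed to `subprocess.run` from the repository root once the paths have been adapted.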

Improved Faster RCNN: We customized the baseline pre-trained Faster RCNN model according to our needs and trained it on the drone-captured images of the various classes. We performed transfer learning with a batch size of 10 and images resized to 512 × 512, because image size affects both training time and memory consumption, and set the number of epochs to 100. We observed that Faster RCNN has difficulty predicting grouped objects. Faster RCNN person and cow detection is shown in Figure 11a, sheep detection in Figure 11b, and person detection in Figure 11c.

Figure 11.

(a) Faster RCNN person and cow detection. (b) Faster RCNN sheep detection. (c) Faster RCNN person detection.

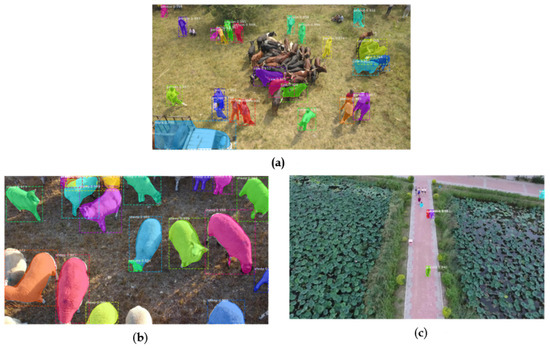

Improved Mask RCNN: Mask RCNN person and cow detection is shown in Figure 12a. Mask RCNN sheep detection is shown in Figure 12b. Mask RCNN person detection is shown in Figure 12c.

Figure 12.

(a) Mask RCNN person and cow detection. (b) Mask RCNN sheep detection. (c) Mask RCNN person detection.

Improved SSD: To attain maximum accuracy, the baseline SSD model was improved by changing the hyperparameters: the batch size was set to 10 and the image size to 480. The model was trained with several open-source datasets, such as COCO, VisDrone, and the aerial agricultural dataset. To improve speed, the number of epochs was set to 50. After training, the model was used to recognize objects in the test data. It produces predictions for each frame that contain bounding boxes and labels for the objects. SSD cow detection is shown in Figure 13.

Figure 13.

SSD cow detection.

The summary of model performance is shown in Table 8.

Table 8.

Summary of model performance.

2.5. Smart Surveillance System for Livestock Farms: Design, Implementation, Results

2.5.1. System Development and Design

System Development

To develop the real-time farm surveillance system, multiple cloud-based platforms are utilized. Our dataset consists of images and videos in RGB format, which are stored in Google Cloud. Google Cloud offers advanced sharing and security capabilities and is cost-effective compared to other available cloud storage services. The stored data can be easily accessed by other platforms such as Google Colab.

We then use Google Colab Pro for all pre-processing, transformation, and development of the deep learning models for detection and tracking. Colab enables users to create free Jupyter notebooks entirely in the cloud; no configuration is required, and notebooks can be shared easily among team members. We can scale our model in production with Google Colab because it is compatible with commonly used machine learning libraries and can be used to save models with minimal software engineering preparation.

The Python libraries TensorFlow, Keras, PyTorch, and OpenCV will be primarily used to develop and execute the deep learning models. All of these libraries are open source. TensorFlow is designed for graph-based computation; with GPU support, it enables fast, parallel execution and is popular for creating neural networks. Keras is an open-source library built on top of TensorFlow and is more user-friendly than TensorFlow.

To accelerate the model runtime, we will use an NVIDIA T4 GPU on Colab (GCP). The developed models will be saved in cloud storage. We will then build a web application using Python Flask and deploy the deep learning models. Flask is a Python-based micro web framework that provides the core components required to build an application. Its biggest advantages are a built-in development server and a robust debugger. It is easy to get started with, and project templates provide a good starting point for building web applications. Flask can be run on Colab, with our trained models deployed as the backend computation for the application.

The last step is to send the frames collected by the drones to the backend server for farm owners to view and analyze. The processed frames show the detected objects and their tracks, and the system notifies the owner if abnormal actions are detected.

The evaluation method for each model selected for our segmentation task is shown in Figure 14. All images from prelabeled image datasets, as well as labeled images generated with Roboflow, will be fed into YOLOv7, Faster RCNN, SSD, and Mask RCNN independently. The IoU (Intersection over Union), mAP, MOTP, and MOTA metrics will be evaluated for model comparison. The model that achieves the best results will be chosen and used for the paper’s main image segmentation task.

Figure 14.

Model selection technique diagram.

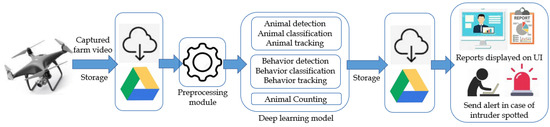

Figure 15 shows the system architecture and explains the complete end-to-end process of classifying objects with a drone. The drone traverses a set flight path and hovers over different objects while capturing images and videos; in this case, the input is images and videos from a farm. These data are pre-processed and transformed, and are also used, along with different variations, as training input so that the model works in different conditions.

Figure 15.

The workflow of the system.

This is done iteratively as a feedback loop to make the algorithm and system more efficient and intelligent, and the model detects and tracks different objects in real time. For example, when the drone flies over a herd of cattle, it can label and identify the animals as well as track their movements, giving farm owners insights and information to run their operations.

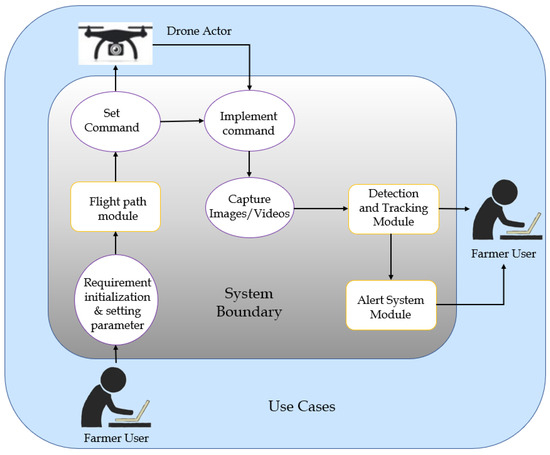

System Boundary and Use Cases

This solution can potentially be leveraged in farm management, farm livestock count, and monitoring intruders. The users of this system would be owners and security workers who work on farms and conduct surveillance.

Figure 16 introduces the system boundary, use cases, and actors. Security workers or farm owners can be user actors. The drone is a separate actor of this system. Actor actions are drawn as purple ovals, whereas system modules, which are the computer vision subsystems, are drawn as orange rectangles with rounded corners. Data flow from one source to another in the direction of the arrows: they are fed to the object detection and tracking model and returned to the user actor through the user interface, which displays the identification results.

Figure 16.

Use cases of the system and interfaces of user actors.

One of the potential use cases could be security officers flying a drone over the farm by setting up a flight path, monitoring the different areas of the property, and taking pictures/videos. They can see the live view in the monitoring screen and view the objects that are being identified by the deep learning model, reviewing the alerts shown to them and alerting the respective workers as needed. For example, if a herd of animals travels too far in an area where they are not supposed to, they can alert the workers to get them back to their designated place.
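The out-of-bounds alert in this use case can be sketched as a simple zone check on the tracked detections. The sketch assumes a rectangular designated zone and detections given as `(track_id, class_name, box)` tuples in the same image coordinates; both are illustrative assumptions, and a deployed system would more likely map detections to ground coordinates first.

```python
def box_center(box):
    """Center point of an axis-aligned box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2


def out_of_zone_alerts(detections, zone):
    """Return one alert message per detection whose center lies outside the zone."""
    zx1, zy1, zx2, zy2 = zone
    alerts = []
    for track_id, class_name, box in detections:
        cx, cy = box_center(box)
        inside = zx1 <= cx <= zx2 and zy1 <= cy <= zy2
        if not inside:
            alerts.append(f"{class_name} #{track_id} left the designated zone")
    return alerts
```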

System High-Level Data Analytics Requirements

In this paper, we will build an intelligent drone-based surveillance system, driven by deep learning models, to assist object detection, classification, and recognition for actionable information and insights. This system uses a variety of datasets to build a generalized and robust model, including human, animal, farming equipment, tree, house, and fence image/video data captured using drones in different weather conditions, lighting, positions, and situations. Data elicitation, analysis, specification, transformation, and validation and verification are the five aspects of preparing the data analytics system.

The deep learning model layers will only analyze and train on farm-related data during the elicitation process. The drone-captured input images/videos, on the other hand, will be used solely to generate farm object detection and classification results. During the analysis stage, we assessed the system’s performance using a variety of performance metrics. For the model, we used accuracy, F1 score, recall, confidence score, prediction probability, and the classification of a specific label. For the system, we recorded processing time and error rate. An error is noted when the output does not match the expected outcome, and the runtime is measured from the beginning of the detection procedure until the result is displayed.

Data requirements and data usage are included in the requirements specification. We will collect the training data and process it to cover different sizes, formats, resolutions, and angles. The drone captures the input data for the system, which is transformed and processed using Python library packages. Different lighting variations are used to train and develop robust models. Regulations and restrictions on the usage of data vary depending on the circumstances. To adhere to the guidelines while analyzing, storing, and distributing the data, farm owner consent is required. To ensure that training data and real data match, requirements validation should be performed continuously. To maintain the system’s performance, runtime data must be tracked and analyzed. As a result, deep learning systems must be continually updated to incorporate the most recent data.

2.5.2. System Design

System Architecture and Infrastructure

Below, Figure 17 shows the architecture of the farm surveillance system. The scenario is described as follows: the user will initiate a drone operation and fly the drone in a farm area according to the flight path. The video will be recorded in the drone system. The video will then be uploaded to the Google Drive location. The video then will be given as an input to the deep learning model. The module will then detect and classify the objects in the video with bounding boxes and confidence scores along with the labels. The video will then be stored again on the Google Drive location. In the end, the summary of results will be sent back to the frontend system and will be displayed on UI. In case any intruder is observed, the alert will be sent to the user.

Figure 17.

The architecture of the farm surveillance system.

System Supporting Platforms and Cloud Environment

For this paper, we propose to design a system for farm surveillance using drones. Model development involves object detection, classification, and tracking. It is performed using deep learning models and Python-based libraries such as Keras, TensorFlow, scikit-learn, PyTorch, Matplotlib, NumPy, and pandas. The frontend application is developed using Python libraries. The training dataset, along with the custom drone-collected dataset, is integrated and stored in Google Drive. The integration of all the modules (backend, storage, and frontend) is performed on Google Colab. The integrated modules communicate with each other over WiFi.

2.5.3. System Development

AI and Machine Learning Model Development

To solve the object detection and tracking problem, various deep learning models are used. The high-performing model is then selected by evaluating the performance metrics and minimal losses. The system is designed to detect various objects in a farmland environment and track them. The detection of objects in a given image or a video is seen as a classification problem that identifies the object into defined classes. Images and videos taken from a drone of the selected objects are used for training the model. Along with the drone-acquired images and videos, various open-source datasets are taken as part of the training dataset.

The machine learning models proposed are YOLOv7, SSD, Faster RCNN, and Mask RCNN for object detection and DeepSort for object tracking. These models also accomplish the task of remotely tracking the objects. Out of these models, YOLOv7 not only yielded the highest accuracy but was also able to detect tiny objects correctly, which is why it works well on aerial images. The performance of the improved version of the model increased in comparison to the pre-trained model after the number of layers, epochs, and batch size were modified along with the hyperparameters and confidence threshold values. The YOLOv7 model was trained with labeled classes and further tested on a custom dataset.

Implement Designed System

In the designed system, the user captures the data using the DJI Phantom 4 drone, known for taking high-quality images and videos. The development machine has an Intel Core i5 8250 CPU with 8 GB of RAM, which supports the computation required for processing and developing the deep learning models. The GPU used is the NVIDIA Tesla K80; a more capable GPU setup would help with further improvements to the models. The data, in the form of videos and images, are stored in cloud storage, from where they are processed by performing augmentations. The processed data are then fed to the object detection and classification component, where the objects are detected and classified into their respective classes using the YOLOv7 model. This module is supported by deep learning libraries such as PyTorch and TensorFlow.

Another functional component is the remote tracking of these classified objects, which is also supported by the YOLOv7 model. An improved design is used while training the model; its functions are then tested with the test dataset, and the performance of the developed models is assessed. The final part of the development is to save the source code of the models in a cloud storage location so that the models are accessible and reusable on different machines.

Input and Output Requirements, Supporting Systems and Solution APIs

Input Datasets—Drone-captured images and videos along with publicly available datasets for training the model. The input datasets should consist of cows, sheep, horses, humans, farming equipment, and trucks as objects.

Expected Outputs—The user interface will return the summary of the video to the user. This summary will contain the objects that were detected along with their counts. Also, it will provide alerts to inform the user about the anomalies detected in the data that was provided.

Supporting System—DJI Phantom 4 drone.

3. Analysis of Model Execution and Evaluation Results

We leveraged pre-trained models such as YOLOv7 and Faster RCNN and applied transfer learning to improve object detection and classification performance. For each task and feature, such as classification, tracking, counting, behavior analysis, and intrusion detection, these models were trained with a custom dataset for each class, such as animals, humans, and farming machinery (vehicles). For each feature, we analyzed the results by comparing previous and current accuracy. Based on the comparison, we selected the best-performing model, which is YOLOv7-DeepSort.

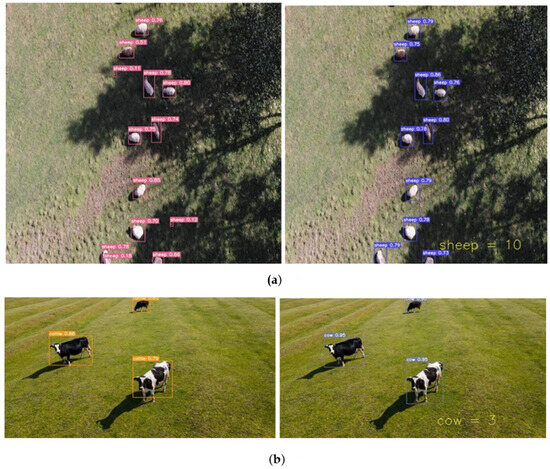

Regarding livestock detection and tracking, the objects considered under livestock are sheep, horses, and cows. For the detection of livestock, we used custom-trained models such as YOLOv7 and Faster RCNN. For tracking the objects, we used YOLOv7-DeepSort. These models were further fine-tuned by adding more training data and changing the model parameters. We then compared the performance of models on different evaluation metrics on a confidence threshold greater than 0.6 and an IoU greater than 0.7.

According to the training results for sheep, the YOLOv7 model provided better predictions, with an mAP of 85.80%, than the Faster RCNN model, which had an mAP of 66.54%. When both models were fine-tuned by increasing the number of epochs to 350, the YOLOv7 mAP increased to 97.5%; the Faster RCNN results did not change significantly, with an mAP of 71.57%.

Figure 18a shows the YOLOv7 test results on a sheep video before and after hyperparameter tuning, with a confidence score for each bounding box. We observed that after training the model for more epochs, the object detector predicts classes with higher confidence scores, and the mAP, recall, and precision scores improve.

Figure 18.

(a) Output results of Faster RCNN vs. YOLOv7 on a sheep video. (b) Output results of Faster RCNN vs. YOLOv7 on a cow video.

For cow detection, YOLOv7 outperformed Faster RCNN. YOLOv7 achieved an mAP@0.5 of 97.9%, whereas the Faster RCNN model achieved an mAP@0.5 of 66.5%. Before fine-tuning, YOLOv7 had an mAP@0.5 of 75.38%, whereas Faster RCNN had an mAP@0.5 of 60.2%. Figure 18b shows the YOLOv7 test results on a cow video before and after hyperparameter tuning. The confidence scores improved considerably.

For horse detection, the YOLOv7 model performs better than Faster RCNN at detecting the horses in the input video. As seen in the output images, the confidence score of the horse detection is 0.74 for Faster RCNN and 0.94 for YOLOv7. With an improved mAP of 94%, the fine-tuned YOLOv7 outperformed both its previous results and the Faster RCNN model. Figure 19a shows the output images of horse detection in the video for both Faster RCNN and YOLOv7.

Figure 19.

(a) Output results of Faster RCNN vs. YOLOv7 on a horse video. (b) Output results of Faster RCNN vs. YOLOv7 on a person video.

Regarding human detection and tracking, humans present on the farms are considered. For the detection of humans, we used the custom-trained models YOLOv7 and Faster RCNN. For tracking humans, we used YOLOv7+DeepSort. These models were fine-tuned using a greater number of epochs and a variety of human images. We then compared the performance of Faster RCNN and YOLOv7+DeepSort using mAP as the evaluation criterion at a confidence score greater than 0.5. The training results for humans are better for the YOLOv7+DeepSort model than for the Faster RCNN model: the YOLOv7 model provided better predictions, with an mAP of 92.06%, than the Faster RCNN model, which had an mAP of 66.04%. After increasing the training epochs, the mAP score for YOLOv7 also improved slightly. Figure 19b shows the predictions for the human class.

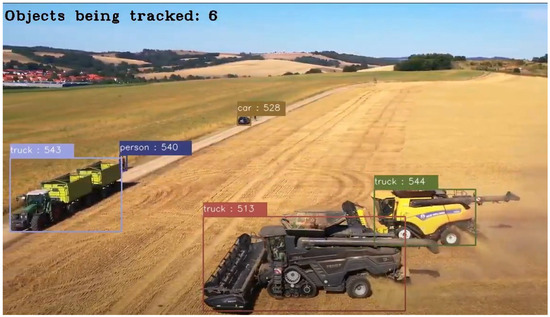

Regarding detection and tracking of farm equipment, trucks in the farm areas are treated as one type of farm equipment. For the detection of trucks, we initially used YOLOv7. For tracking these trucks, we used the YOLOv7 and DeepSort models. These models were fine-tuned using more epochs and a variety of truck images. The pre-trained model detected three bounding boxes, which is inaccurate because only two trucks are present. However, after fine-tuning the hyperparameters and training with more data, the improved model accurately shows two bounding boxes with a classification confidence value of 0.97 and an improved mAP of 94.3%, as shown in Figure 20 and Figure 21.

Figure 20.

Output results of Faster RCNN vs. YOLOv7 on a truck video.

Figure 21.

Output results of Faster RCNN vs. YOLOv7 on a truck, car and person video.

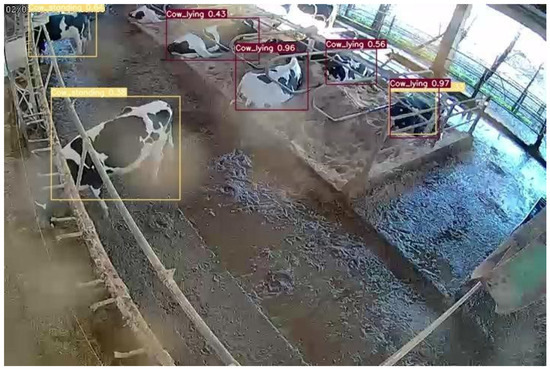

Regarding the detection of livestock behavior, the classes targeted are standing and lying, as shown in Figure 22. Custom-trained YOLOv7 and Faster RCNN models were used for this task, and YOLOv7 performed better than Faster RCNN. YOLOv7 achieved an mAP@0.5 of 92.45%, whereas the Faster RCNN model achieved an mAP@0.5 of 67.34%. Before fine-tuning, YOLOv7 had an mAP@0.5 of 87.6%, whereas Faster RCNN had an mAP@0.5 of 68.51%.

Figure 22.

Output results of livestock behavioral detection.

Based on Table 9, YOLOv7-DeepSort outperforms the Faster RCNN model in terms of accuracy and speed. As a result, the YOLOv7-DeepSort model is the final approach used to build the surveillance system for object detection and tracking. We further fine-tuned this model over multiple iterations and trained it with a variety of datasets to increase performance. Table 10 presents the final model execution and evaluation metric results.

Table 9.

Model comparison.

Table 10.

Evaluation results.

4. Discussion

To develop a UAV-based farm monitoring system for farmers, object detection and object tracking modules have been integrated and deployed. The system counts the number of objects detected in each class and detects the behavior of each livestock object. A user interface displays the results, with interactive functions so that the output can be shown according to the selected class.

4.1. Achievements

The selected models yielded better performance in the identification of various classes. The details are as follows:

- Augmentation of the image and video data—Enables the user to take a closer look at the objects present on the farm for surveillance purposes;

- Livestock Detection—Classes like cow, sheep, and horse have been identified along with their behavior such as standing, sitting, and sleeping;

- In the output, livestock has been classified from an aerial view with appropriate class labels and confidence score;

- Object Tracking—The farm objects detected in the video are being tracked in each frame;

- Object counting results of various classes displayed in the video frames;

- Detection of other farm objects like buildings and farm equipment like trucks;

- Livestock behavior classification—Behavior of livestock is detected such as whether the livestock is standing or sleeping.

The livestock detection module detects different kinds of livestock, in our case cows, sheep, and horses. These objects are then tracked and counted. This functionality is implemented by integrating two models, YOLOv7 and DeepSort. In this pipeline, an input video is fed into the livestock detection module, which uses the trained classification weights to produce bounding boxes in every frame. The bounding boxes are then passed to the DeepSort model to track the objects and maintain a count of each. In the end, the output video shown on the UI provides the detection of the different species being tracked along with the count of each species. The user interface provides an option for the user to select the object class that they want to see and classify.
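The counting step of this pipeline can be sketched as follows, assuming the tracker yields, for every frame, a list of `(track_id, class_name)` pairs. This output format and the optional `selected_class` filter (mirroring the class selection in the UI) are assumptions for illustration, not the interface of the actual DeepSort integration.

```python
from collections import defaultdict


def count_tracked_objects(frames, selected_class=None):
    """Count distinct track IDs per class over all frames of a video."""
    ids_per_class = defaultdict(set)
    for detections in frames:
        for track_id, class_name in detections:
            if selected_class is None or class_name == selected_class:
                ids_per_class[class_name].add(track_id)
    # An object seen in many frames is counted once, via its track ID.
    return {name: len(ids) for name, ids in ids_per_class.items()}
```

Counting by track ID rather than by detection avoids counting the same animal again in every frame in which it appears.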

4.2. Constraints

Images taken at a higher altitude produce incorrect output due to low resolution and smaller objects leading to inaccurate dimensions of the objects. While training the model, it was observed that the model detects sheep as cows for high-altitude and low-resolution images when the color is the same for both. Hence high-altitude images are discarded. The restrictions are as follows:

- Optimal drone height: While training the model, it was observed that videos taken at heights of more than 200–250 ft did not capture the objects clearly, leading to inaccurate results. However, when videos were taken at 100–150 ft altitude, the objects were seen clearly and the model yielded better performance. Therefore, it was necessary to define the optimal drone height for collecting the data.

- A large dataset with high-resolution images is required for training the model on farming equipment. Because of different angles and light settings, the appearance of a truck can vary. To handle these scenarios, we performed augmentations in which brightness was increased to improve the classifier results under different conditions.

- Training a deep learning model with a huge dataset is extremely time-consuming and challenging, even with a premium Google Colab Pro account.
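The brightness augmentation mentioned in the constraints above can be illustrated with a small sketch that scales 8-bit pixel values and clips them to the valid range. The function operates on a raw byte buffer to stay dependency-free; the scaling factor is an assumed parameter, and in practice an image library or the augmentation options of the labeling tool would normally be used instead.

```python
def adjust_brightness(pixels: bytes, factor: float) -> bytes:
    """Scale 8-bit pixel values by factor and clip the result to 0..255."""
    if factor < 0:
        raise ValueError("factor must be non-negative")
    return bytes(min(255, round(value * factor)) for value in pixels)


def brightness_variants(pixels: bytes, factors=(0.75, 1.25, 1.5)):
    """Produce several brightness variants of one image buffer."""
    return [adjust_brightness(pixels, factor) for factor in factors]
```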

4.3. System Quality Evaluation of Model Functions and Performance

We used Google Colab Pro to train the improved models with a custom dataset for each class, and used the same environment for testing and deploying the models to build the smart surveillance system. Each model predicts classes with probability scores, which differ between models. The bounding box around each recognized object displays these values together with the class name. Each model’s runtime performance measures how long it takes to execute and produce results. Table 11 shows the average runtime in seconds for each feature and the time in minutes taken to execute each training iteration.

Table 11.

Average run-time performance comparison.

4.4. System Visualization

For the farm surveillance system we built, the user has to provide the necessary inputs in order to process the video/image. These inputs are given through the website’s user interface, and this triggers the developed models in the backend, which process the video and show the results to users on user interface. There are two steps involved in this process, which are mentioned below.

- Step 1: Upload the drone-captured video and select the desired options.

The user will upload the drone-captured video and select the desired category to detect/track from a dropdown menu. Different categories, such as all categories, cows, sheep, buildings, trees, and farming equipment, were included in the dropdown. When the user uploads the video and selects the desired category, the backend-developed model is triggered and starts processing the video.

- Step 2: The processed input video with detection and tracking is shown on the UI.

When the backend model completes processing the video, it shows the output to the user on the UI with object detection and tracking. The output contains the original video and the processed video, which gives a clear picture of the objects detected and tracked. It also gives a summary of the objects detected and alerts the user if any anomalies are detected. The images below show the user interface of the smart surveillance system.

5. Conclusions

5.1. Summary

We established a farm monitoring system to help farmers monitor their farms. The monitoring system detects the type, number, and behavior of farm animals, as well as humans and trucks. It assigns a unique ID to each detected object in order to track it. In addition, an interactive website was built. To detect and track objects, we implemented the advanced algorithms YOLOv7 and DeepSort. The monitoring system can help detect objects even in the case of insufficient light. It is cost-effective and can provide more security for the farm.

5.2. Prospect and Future Work

Farm surveillance requires intensive human effort and is extremely expensive for farm owners. Thefts of equipment or livestock and unexpected visitors can cause great financial loss and prove traumatic to farm owners. With the help of this smart farm surveillance solution, farmers can overcome the stress of physically monitoring the farm. Farm owners can be notified of all object movements within the farm, which enables them to make data-driven decisions. This solution is cost-effective and does not require large funds. It provides more safety and security to the owners, and by using drones, the monitoring can be performed without interrupting the ongoing activities of the farm. In the future, more images and classes can be used to further train the model, optimize the system, and realize real-time tracking and detection of objects. The system can also be integrated with mobile applications to send alerts to farmers.

Author Contributions

Methodology, N.P. and J.W.; Software, H.Y.; Validation, C.K.B.; Investigation, S.M.; Data curation, S.K.; Writing—original draft, J.G.; Writing—review & editing, Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

Project supported by the Shanxi Province Research Foundation for Base Research, China (Grant No. 202303021221002).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The datasets generated and/or analyzed during the current study are not publicly available because the data were obtained from private farms with a privacy agreement but are available from the corresponding author.

Conflicts of Interest

The authors declared that they have no conflicts of interest to this work. We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

References

- Bosquet, B.; Mucientes, M.; Brea, V.M. STDnet-ST: Spatio-temporal ConvNet for small object detection. Pattern Recognit. 2021, 116, 107929. [Google Scholar] [CrossRef]

- Zinke-Wehlmann, C.; Charvát, K. Introduction of smart agriculture. In Big Data in Bioeconomy; Springer International Publishing: Cham, Switzerland, 2021; pp. 187–190. [Google Scholar]

- Boruah, P. RetinaNet: The Beauty of Focal Loss—Towards Data Science.Medium. Available online: https://towardsdatascience.com/retinanet-the-beauty-of-focal-loss-e9ab132f2981 (accessed on 6 January 2022).

- Meng, X.; Chen, Y.; Xin, L. Adaptive decision-level fusion and complementary mining for visual object tracking with deeper networks. J. Electron. Imaging 2020, 4, 29. [Google Scholar] [CrossRef]

- Canuma, P. Data Science Project Management [The New Guide For ML Teams]. Neptune.Ai. Available online: https://neptune.ai/blog/data-science-project-management (accessed on 31 January 2022).

- Chen, C.; Zhang, Y.; Lv, Q.; Wei, S.; Wang, X.; Sun, X.; Dong, J. Rrnet: A hybrid detector for object detection in drone-captured images. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019. [Google Scholar]

- Li, C.; Yang, T.; Zhu, S.; Chen, C.; Guan, S. Density map guided object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 190–191. [Google Scholar]

- Commercial Drone Market Size, Share & Trends Report. Global-Commercial-Drones-Market. Historical Range: 2021–2028. 2021. Available online: https://www.grandviewresearch.com/industry-analysis/global-commercial-drones-market (accessed on 11 March 2024).

- Dike, H.U.; Zhou, Y. A robust quadruplet and faster region-based CNN for UAV video-based multiple object tracking in crowded environment. Electronics 2021, 10, 795. [Google Scholar] [CrossRef]

- Discover a Wide Range of Drone Datasets, senseFly. 2021. Available online: https://www.sensefly.com/education/datasets/?dataset=1502&industries%5B%5D=2 (accessed on 11 March 2024).

- Robicquet, A.; Sadeghian, A.; Alahi, A.; Savarese, S. Learning social etiquette: Human trajectory understanding in crowded scenes. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part VIII 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 549–565.

- Li, W.; Mu, J.; Liu, G. Multiple object tracking with motion and appearance cues. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019; pp. 1–9.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Li, M.; Zhang, Z.; Lei, L.; Wang, X.; Guo, X. Agricultural greenhouses detection in high-resolution satellite images based on convolutional neural networks: Comparison of Faster R-CNN, YOLO v3 and SSD. Sensors 2020, 20, 4938.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with Siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8971–8980.

- Mandal, M.; Kumar, L.K.; Vipparthi, S.K. MOR-UAV: A benchmark dataset and baselines for moving object recognition in UAV videos. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2626–2635.

- Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small object detection on unmanned aerial vehicle perspective. Sensors 2020, 20, 2238.

- Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 445–461.

- Mevadata. Multiview Extended Video. 2021. Available online: https://mevadata.org/ (accessed on 11 March 2024).

- Imamura, S.; Zin, T.T.; Kobayashi, I.; Horii, Y. Automatic evaluation of cow’s body-condition-score using 3D camera. In Proceedings of the 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE), Nagoya, Japan, 24–27 October 2017; pp. 1–2.

- Zhu, P.; Sun, Y.; Wen, L.; Feng, Y.; Hu, Q. Drone based RGBT vehicle detection and counting: A challenge. arXiv 2020, arXiv:2003.02437.

- Perera, A.G.; Law, Y.W.; Chahl, J. Drone-action: An outdoor recorded drone video dataset for action recognition. Drones 2019, 3, 82.

- Papers with Code UAVDT Dataset. UAVDT Benchmark Dataset. 2018. Available online: https://paperswithcode.com/dataset/uavdt (accessed on 11 March 2024).

- Zhang, R.; Shao, Z.; Huang, X.; Wang, J.; Li, D. Object detection in UAV images via global density fused convolutional network. Remote Sens. 2020, 12, 3140.

- Sun, S.; Akhtar, N.; Song, H.; Mian, A.; Shah, M. Deep affinity network for multiple object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 104–119.

- Staaker. Best Surveillance Drones of 2022: Top Brands Reviewed. Staaker.Com. Available online: https://staaker.com/best-surveillance-drones/ (accessed on 13 November 2021).

- Klauser, F. Surveillance farm: Towards a research agenda on big data agriculture. Surveill. Soc. 2018, 16, 370–378.

- Walter, A.; Finger, R.; Huber, R.; Buchmann, N. Smart farming is key to developing sustainable agriculture. Proc. Natl. Acad. Sci. USA 2017, 114, 6148–6150.

- Triantafyllou, A.; Tsouros, D.C.; Sarigiannidis, P.; Bibi, S. An architecture model for smart farming. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini, Greece, 29–31 May 2019; pp. 385–392.

- Mascarello, L.N.; Quagliotti, F.; Ristorto, G. A feasibility study of an harmless tiltrotor for smart farming applications. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 1631–1639.

- Koshy, S.S.; Sunnam, V.S.; Rajgarhia, P.; Chinnusamy, K.; Ravulapalli, D.P.; Chunduri, S. Application of the internet of things (IoT) for smart farming: A case study on groundnut and castor pest and disease forewarning. CSI Trans. ICT 2018, 6, 311–318.

- Zin, T.T.; Seint, P.T.; Tin, P.; Horii, Y.; Kobayashi, I. Body condition score estimation based on regression analysis using a 3D camera. Sensors 2020, 20, 3705.

- Mukherjee, A.; Misra, S.; Sukrutha, A.; Raghuwanshi, N.S. Distributed aerial processing for IoT-based edge UAV swarms in smart farming. Comput. Netw. 2020, 167, 107038.

- Wang, C.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Hui, J. SSD Object Detection: Single Shot MultiBox Detector for Real-Time Processing. Date of Application: 10 October 2021. 2018. Available online: https://jonathan-hui.medium.com/ssd-object-detection-single-shot-multibox-detector-for-real-time-processing-9bd8deac0e06 (accessed on 11 March 2024).

- Noe, S.M.; Zin, T.T.; Tin, P.; Kobayashi, I. Automatic detection and tracking of mounting behavior in cattle using a deep learning-based instance segmentation model. Int. J. Innov. Comput. Inf. Control 2022, 18, 211–220.

- Tian, G.; Liu, J.; Yang, W. A dual neural network for object detection in UAV images. Neurocomputing 2021, 443, 292–301.

- Aburasain, R.; Edirisinghe, E.A.; Albatay, A. Drone-based cattle detection using deep neural networks. In Intelligent Systems and Applications: Proceedings of the 2020 Intelligent Systems Conference (IntelliSys) Volume 1; Springer: Berlin/Heidelberg, Germany, 2021; pp. 598–611.

- Chandan, G.; Jain, A.; Jain, H. Real time object detection and tracking using Deep Learning and OpenCV. In Proceedings of the 2018 International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 11–12 July 2018; pp. 1305–1308.

- Huang, W.; Zhou, X.; Dong, M.; Xu, H. Multiple objects tracking in the UAV system based on hierarchical deep high-resolution network. Multimed. Tools Appl. 2021, 80, 13911–13929.

- Lu, J.; Yan, W.Q.; Nguyen, M. Human behaviour recognition using deep learning. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

- Kragh, M.; Jørgensen, R.N.; Pedersen, H. Object detection and terrain classification in agricultural fields using 3D lidar data. In Proceedings of the Computer Vision Systems: 10th International Conference, ICVS 2015, Copenhagen, Denmark, 6–9 July 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 188–197.

- Micheal, A.A.; Vani, K.; Sanjeevi, S.; Lin, C.H. Object detection and tracking with UAV data using deep learning. J. Indian Soc. Remote. Sens. 2021, 49, 463–469.

- Pan, S.; Tong, Z.; Zhao, Y.; Zhao, Z.; Su, F.; Zhuang, B. Multi-object tracking hierarchically in visual data taken from drones. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Yang, S.; Chen, H.; Xu, F.; Li, Y.; Yuan, J. High-performance UAVs visual tracking based on Siamese network. Vis. Comput. 2022, 38, 2107–2123.

- Xu, Z.; Shi, H.; Li, N.; Xiang, C.; Zhou, H. Vehicle detection under UAV based on optimal dense YOLO method. In Proceedings of the 2018 5th International Conference on Systems and Informatics (ICSAI), Nanjing, China, 10–12 November 2018; pp. 407–411.

- Tong, Z.; Jieyu, L.; Zhiqiang, D. UAV target detection based on RetinaNet. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 3342–3346.

- Yadav, V.K.; Yadav, P.; Sharma, S. An Efficient YOLOv7 and Deep Sort are Used in a Deep Learning Model for Tracking Vehicle and Detection. J. Xi’an Shiyou Univ. Nat. Sci. Ed. 2022, 18, 759–763.

- Yang, F.; Zhang, X.; Liu, B. Video object tracking based on YOLOv7 and DeepSORT. arXiv 2022, arXiv:2207.12202.

- Bondi, E.; Jain, R.; Aggrawal, P.; Anand, S.; Hannaford, R.; Kapoor, A.; Piavis, J.; Shah, S.; Joppa, L.; Dilkina, B.; et al. BIRDSAI: A dataset for detection and tracking in aerial thermal infrared videos. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1747–1756.

- Zhang, H.; Wang, G.; Lei, Z.; Hwang, J.N. Eye in the sky: Drone-based object tracking and 3D localization. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 899–907.

- GitHub—VisDrone/VisDrone-Dataset: The Dataset for Drone-Based Detection and Tracking Is Released, Including Both Image/Video, and Annotations. VisDrone2020 Challenge. 2020. Available online: https://github.com/VisDrone/VisDrone-Dataset (accessed on 11 March 2024).

- Zuo, M.; Zhu, X.; Chen, Y.; Yu, J. Survey of Object Tracking Algorithm Based on Siamese Network. J. Phys. Conf. Ser. 2022, 2203, 012035.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).