3D Reconstruction of Wheat Plants by Integrating Point Cloud Data and Virtual Design Optimization

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design

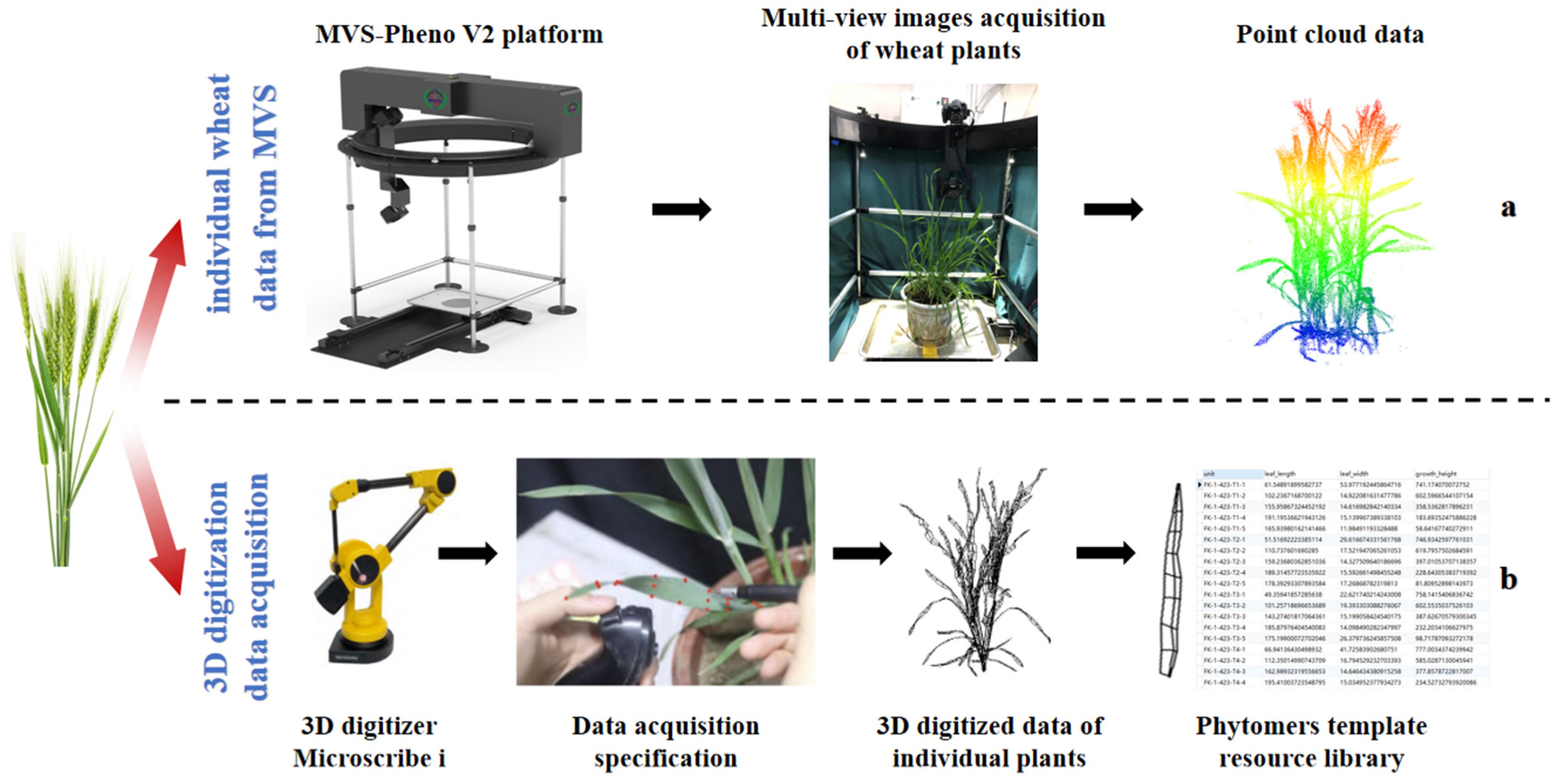

2.2. Data Acquisition

2.2.1. Acquisition of Multi-View Image Data for Plants

2.2.2. Acquisition of 3D Digital Data for Plant Sub-Organs

2.3. Data Processing

2.4. Initial Single-Stem 3D Model Construction

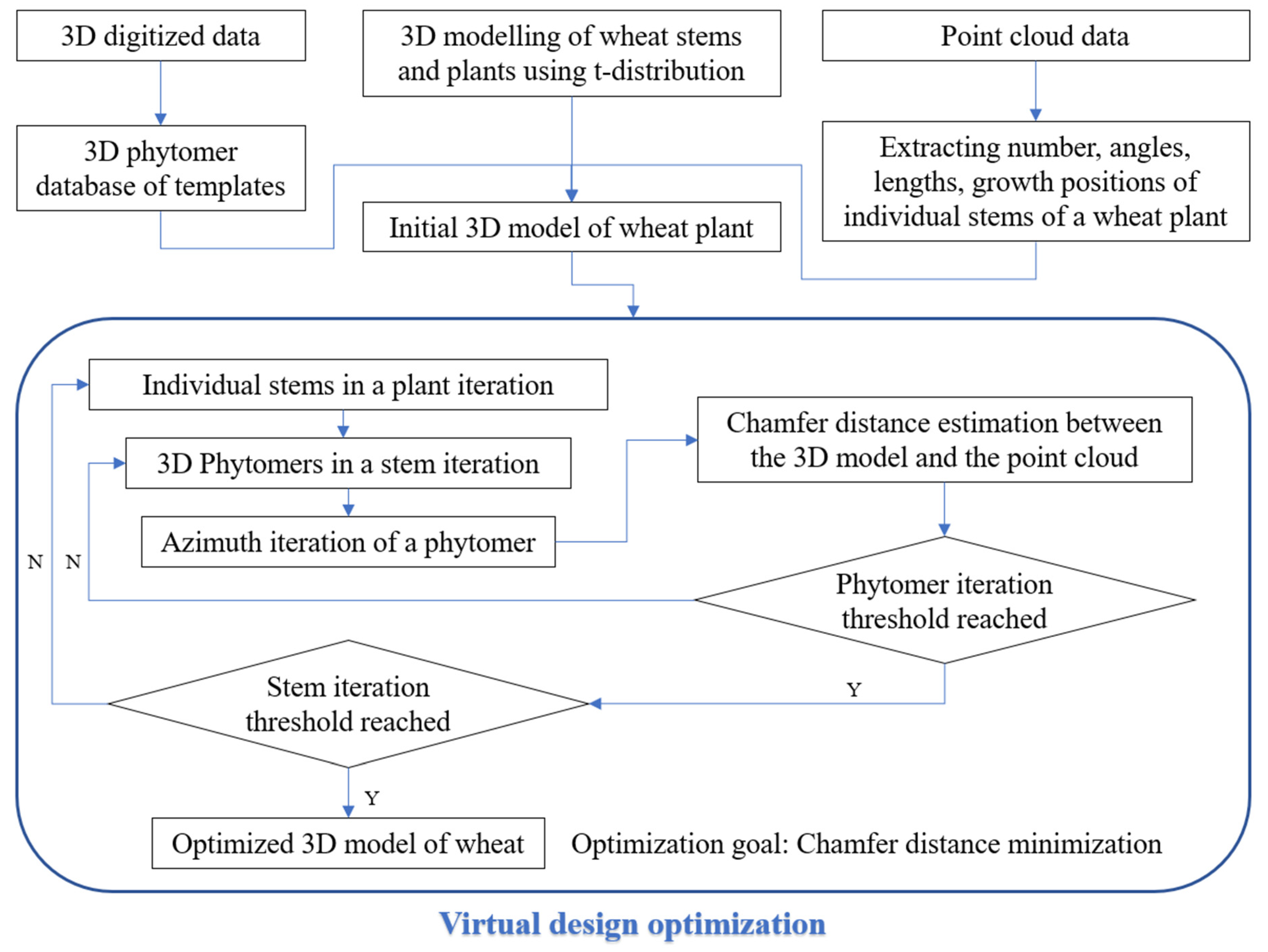

2.5. 3D Reconstruction of Wheat Plants by Virtual Design Optimization

2.5.1. Initial Growth Position Extraction of Single Stems

2.5.2. Virtual Design and 3D Reconstruction of Wheat Plants

Algorithm 1. Virtual design to adjust the angle of phytomers

Procedure: VIRTUALDESIGN(P, W)
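A minimal, hypothetical sketch of how a procedure such as VIRTUALDESIGN(P, W) might adjust phytomer angles. It assumes that P is the plant point cloud and W is the set of phytomers of the initial model. It also assumes that each phytomer is rotated about a vertical axis through its base over a fixed angular grid, whose spacing plays the role of the iteration step in the running-efficiency table, and that the angle with the best fit to P is kept. The function names, the azimuth-only search, and the mean nearest-neighbour score are illustrative assumptions, not the authors' procedure.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
# Hypothetical phytomer representation: (base point, points of the organ).
Phytomer = Tuple[Point, List[Point]]


def rotate_about_z(points: List[Point], base: Point, angle_deg: float) -> List[Point]:
    """Rotate points counter-clockwise about a vertical axis through `base`."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    bx, by, _ = base
    rotated = []
    for x, y, z in points:
        dx, dy = x - bx, y - by
        rotated.append((bx + c * dx - s * dy, by + s * dx + c * dy, z))
    return rotated


def mean_nn_distance(model: List[Point], cloud: List[Point]) -> float:
    """Mean distance from each model point to its nearest cloud point (brute force)."""
    return sum(min(math.dist(m, p) for p in cloud) for m in model) / len(model)


def virtual_design(cloud: List[Point], phytomers: List[Phytomer],
                   step_deg: float = 10.0) -> List[float]:
    """Hypothetical stand-in for VIRTUALDESIGN(P, W): for each phytomer, try every
    azimuth on a grid of `step_deg` and return the best-fitting angle (degrees)."""
    n_steps = int(round(360.0 / step_deg))
    best_angles = []
    for base, pts in phytomers:
        best = min(
            (k * step_deg for k in range(n_steps)),
            key=lambda ang: mean_nn_distance(rotate_about_z(pts, base, ang), cloud),
        )
        best_angles.append(best)
    return best_angles
```

For example, a cloud sampled along the +y axis and a phytomer whose points lie along +x from the origin should be turned by 90°. Brute-force nearest-neighbour search keeps the sketch dependency-free. For clouds of several thousand points, a spatial index such as a k-d tree would be the usual choice, and the number of candidate angles, and therefore the running time, scales inversely with the step.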

2.6. Evaluating Running Efficiency

2.7. Validation Methods

3. Results

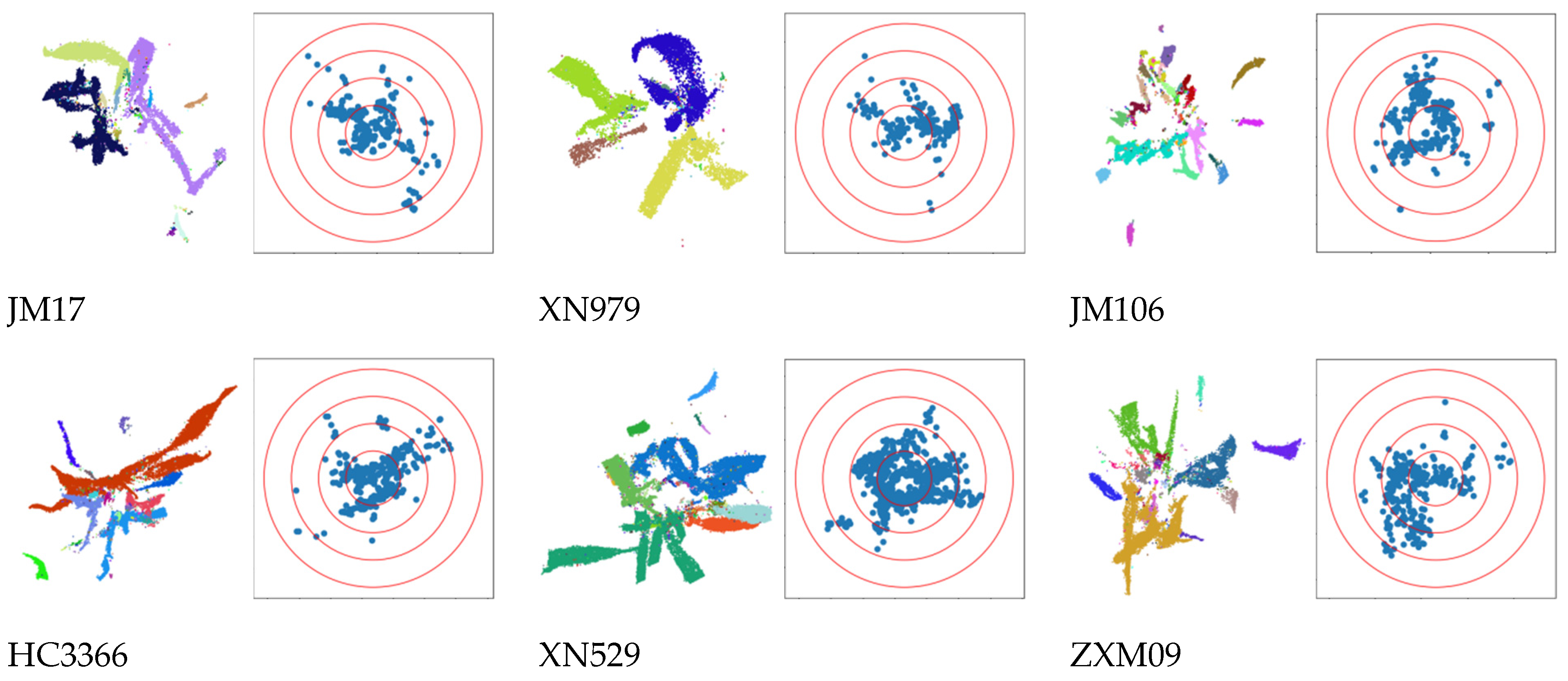

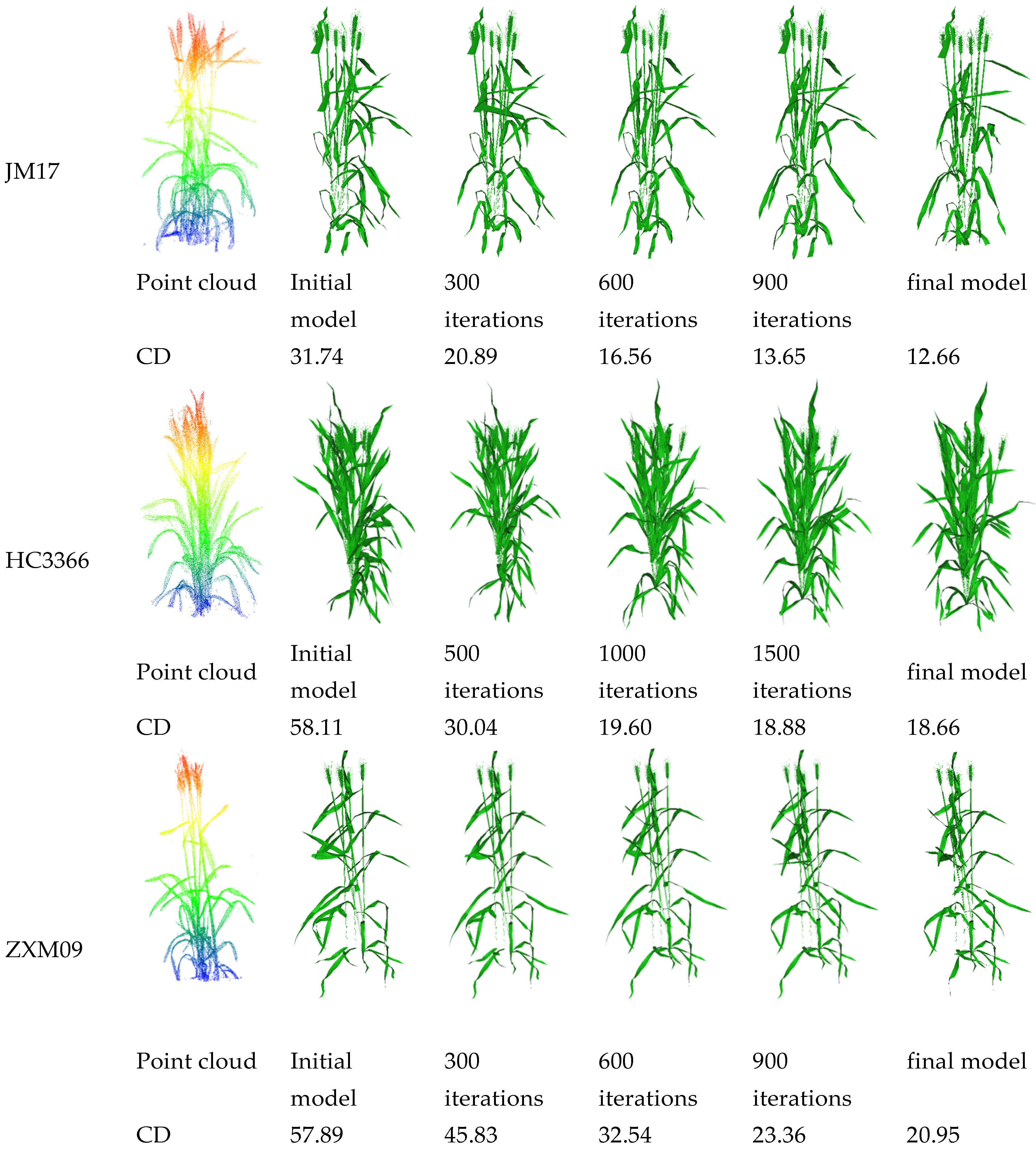

3.1. Visualization Results of 3D Reconstructed Plants

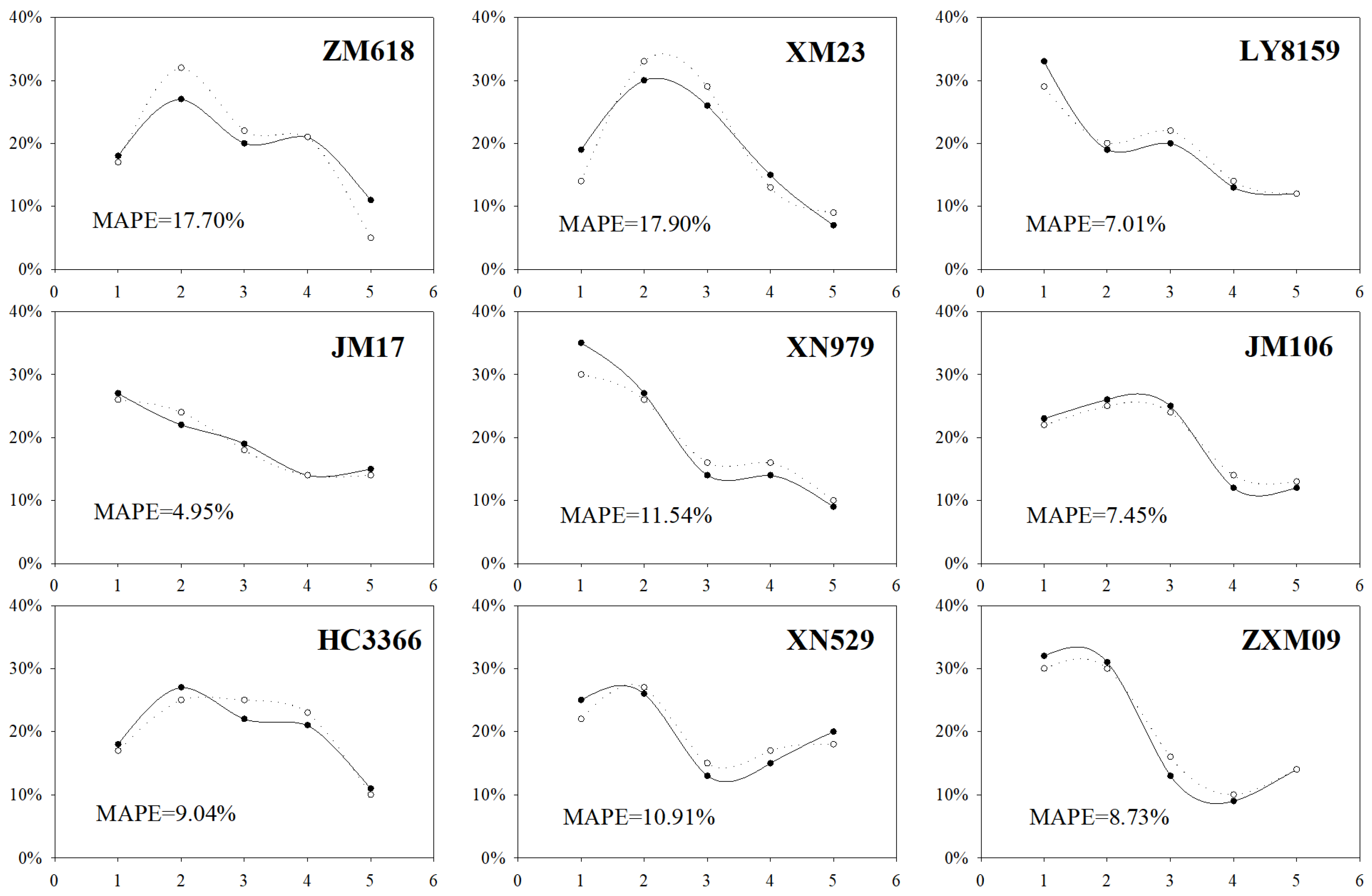

3.2. Verification Results

3.2.1. Comparative Validation of Plant Phenotypes

3.2.2. Comparison of Spatial Distribution of Reconstruction Results

3.2.3. Hausdorff Distance between Plant Point Cloud and Reconstructed 3D Models

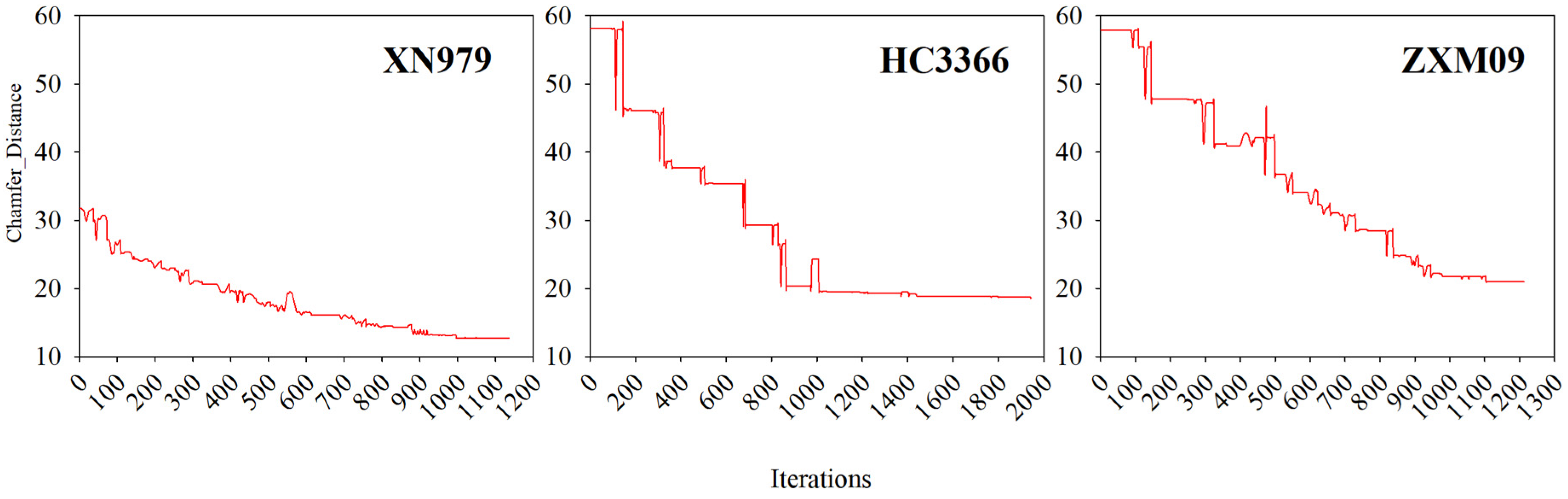
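The Hausdorff distance between two point sets A and B is H(A, B) = max{h(A, B), h(B, A)}, where the directed distance h(A, B) is the largest distance from a point of A to its nearest point in B. It therefore reports the worst local deviation between the plant point cloud and the reconstructed model. How the reconstructed models were sampled into points, and whether a symmetric, one-sided, or averaged variant was reported, is an assumption left open here. The brute-force sketch below computes the classical symmetric form on point samples.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def directed_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """h(A, B): max over points of A of the distance to the nearest point of B."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance H(A, B) = max(h(A, B), h(B, A))."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))
```

The directed distance is asymmetric: a single stray point in the cloud that the model misses raises h(cloud, model) but not h(model, cloud). This is why the symmetric maximum is taken. For large clouds, SciPy's `scipy.spatial.distance.directed_hausdorff` computes the directed distance more efficiently than this quadratic loop.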

3.3. Iteration Process

3.4. Running Efficiency

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Number | Variety Name | Abbreviation | Plant Height (cm) | Plant Architecture |
|---|---|---|---|---|
| 1 | ZhengMai 618 | ZM618 | 70 | loose |
| 2 | XingMai 23 | XM23 | 73.7 | compact |
| 3 | LinYou 8159 | LY8159 | 93.3 | compact |
| 4 | JiMai 17 | JM17 | 79.7 | semi-compact |
| 5 | XiNong 979 | XN979 | 74.7 | semi-compact |
| 6 | JiMai 106 | JM106 | 59 | loose |
| 7 | HuaCheng 3366 | HC3366 | 80.3 | compact |
| 8 | XiNong 529 | XN529 | 82.3 | compact |
| 9 | ZhongXinMai 09 | ZXM09 | 75.7 | semi-compact |

| Variety | Number of Points after Uniform Down-Sampling | Number of Single Stems | Number of Phytomers | Time Cost Using 5° Iteration Step (s) | Time Cost Using 10° Iteration Step (s) | Time Cost Using 20° Iteration Step (s) |
|---|---|---|---|---|---|---|
| XN979 | 3556 | 7 | 31 | 1003.27 | 498.34 | 239.33 |
| HC3366 | 4045 | 11 | 54 | 2191.31 | 1088.02 | 598.15 |
| ZXM09 | 3603 | 7 | 32 | 1123.59 | 553.24 | 270.12 |
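The running times above can be checked with a few lines of arithmetic. For any fixed angular search range, the number of candidate angles is inversely proportional to the iteration step, so doubling the step would be expected to roughly halve the time. The sketch below transcribes the table values and computes the speed-up per doubling of the step and the time per phytomer. The dictionary and function names are illustrative, not from the source.

```python
# Running times (s) transcribed from the running-efficiency table above,
# keyed by variety and then by iteration step in degrees.
RUNTIMES_S = {
    "XN979": {5: 1003.27, 10: 498.34, 20: 239.33},
    "HC3366": {5: 2191.31, 10: 1088.02, 20: 598.15},
    "ZXM09": {5: 1123.59, 10: 553.24, 20: 270.12},
}
# Number of phytomers per plant, from the same table.
PHYTOMERS = {"XN979": 31, "HC3366": 54, "ZXM09": 32}


def speedup(variety: str, fine_step: int, coarse_step: int) -> float:
    """How many times faster the coarse iteration step ran than the fine one."""
    t = RUNTIMES_S[variety]
    return t[fine_step] / t[coarse_step]


def seconds_per_phytomer(variety: str, step: int) -> float:
    """Running time normalised by the number of phytomers in the plant."""
    return RUNTIMES_S[variety][step] / PHYTOMERS[variety]


if __name__ == "__main__":
    for v in RUNTIMES_S:
        print(f"{v}: 5->10 deg x{speedup(v, 5, 10):.2f}, "
              f"10->20 deg x{speedup(v, 10, 20):.2f}, "
              f"{seconds_per_phytomer(v, 10):.1f} s/phytomer at 10 deg")
```

Each doubling of the step cuts the time by a factor of between about 1.8 and 2.1. At the 10° step the cost is roughly 16 to 20 s per phytomer. This is consistent with, though not proof of, a running time that grows approximately linearly with both the number of candidate angles and the number of phytomers.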

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gu, W.; Wen, W.; Wu, S.; Zheng, C.; Lu, X.; Chang, W.; Xiao, P.; Guo, X. 3D Reconstruction of Wheat Plants by Integrating Point Cloud Data and Virtual Design Optimization. Agriculture 2024, 14, 391. https://doi.org/10.3390/agriculture14030391
