Segmentation of Wheat Lodging Areas from UAV Imagery Using an Ultra-Lightweight Network

Abstract

1. Introduction

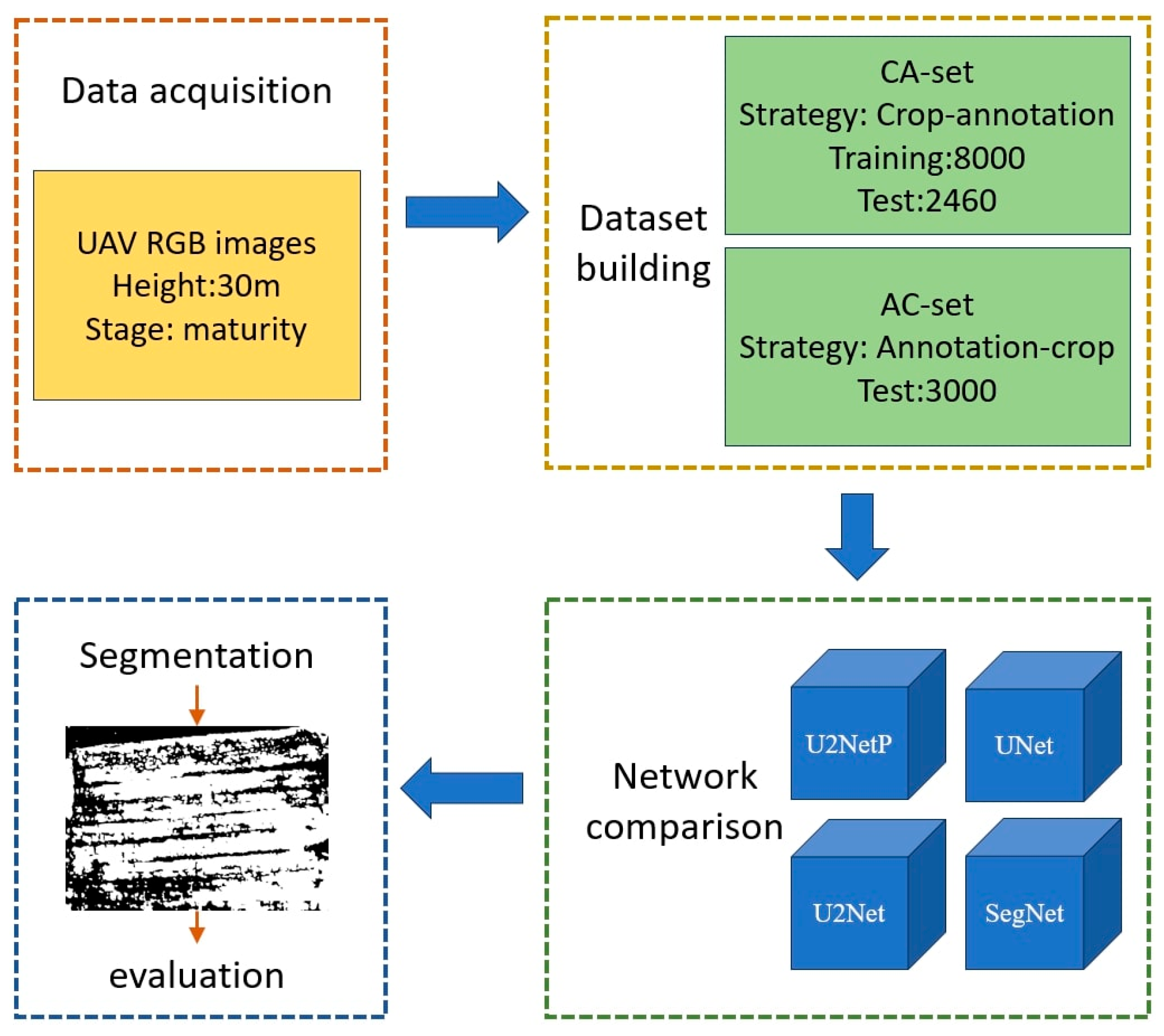

2. Materials and Methods

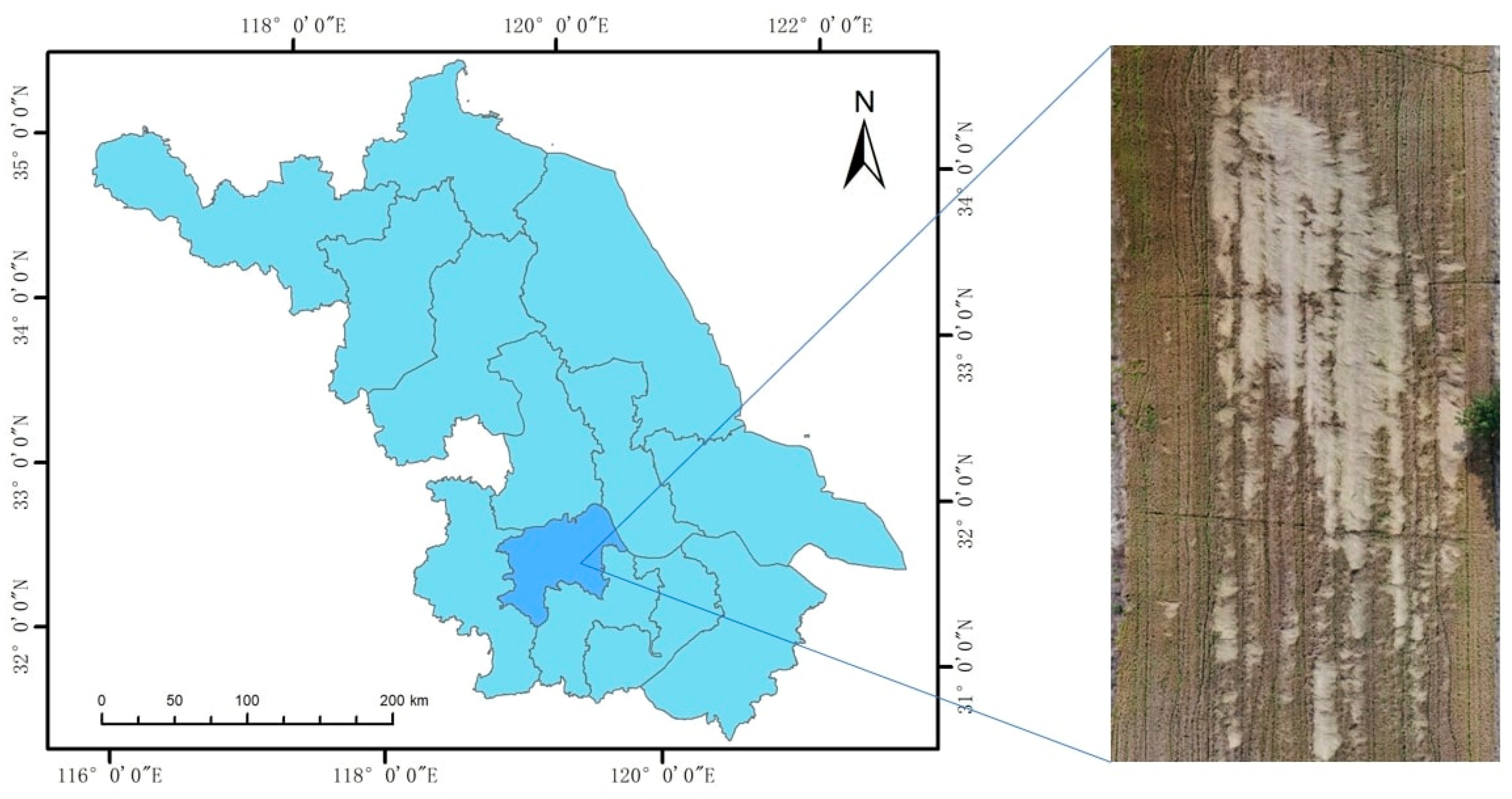

2.1. Study Site

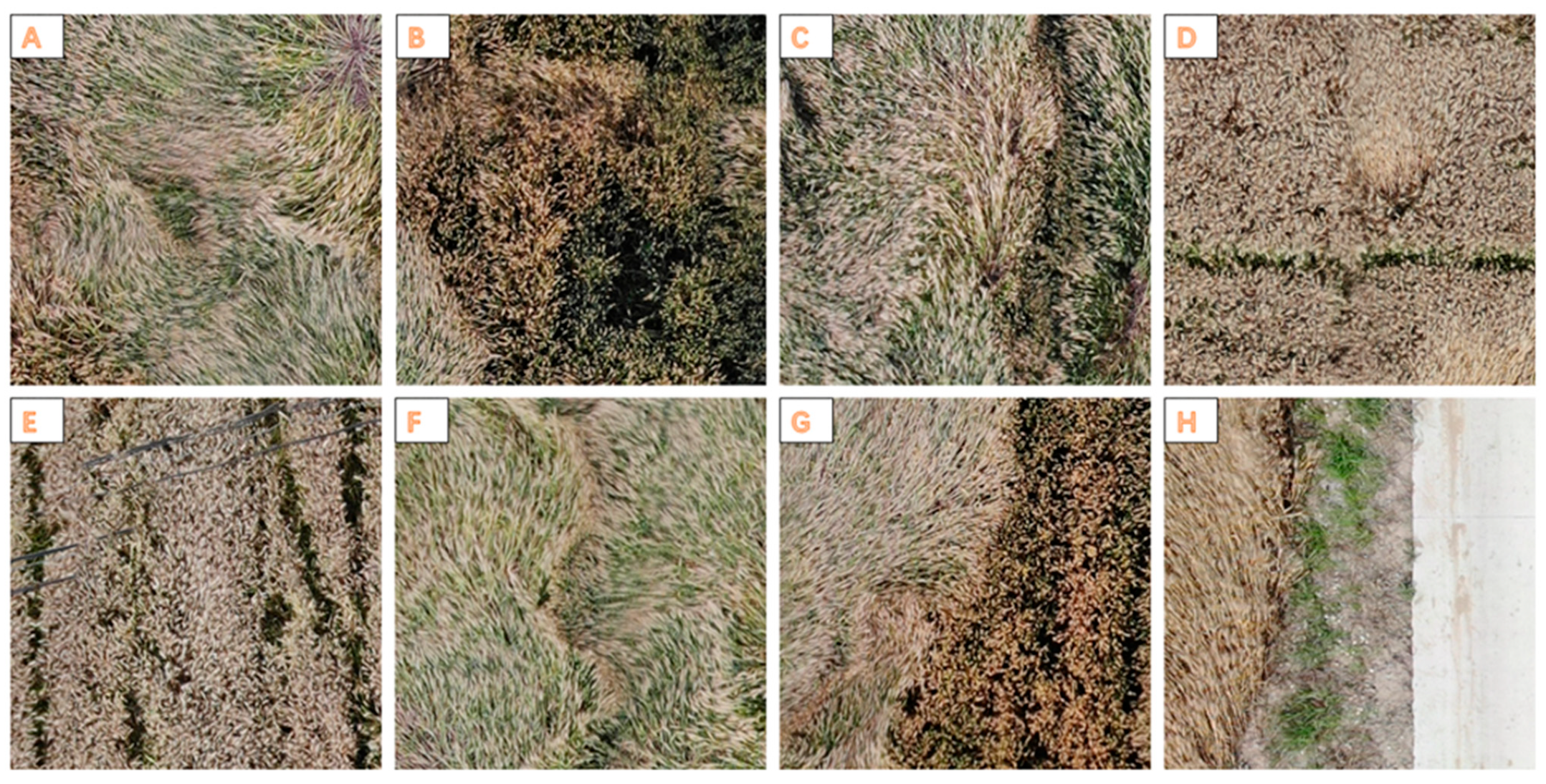

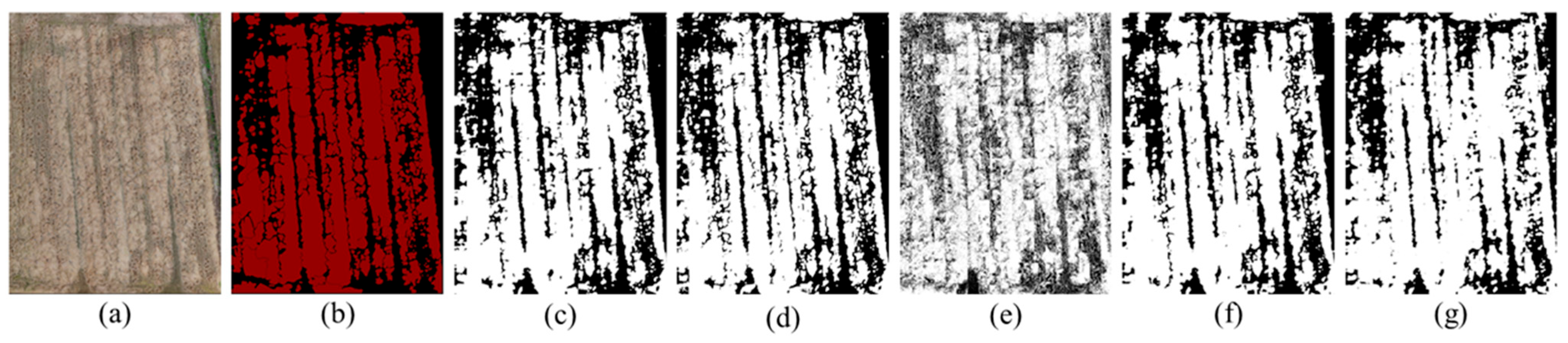

2.2. Data Collection and Preprocessing

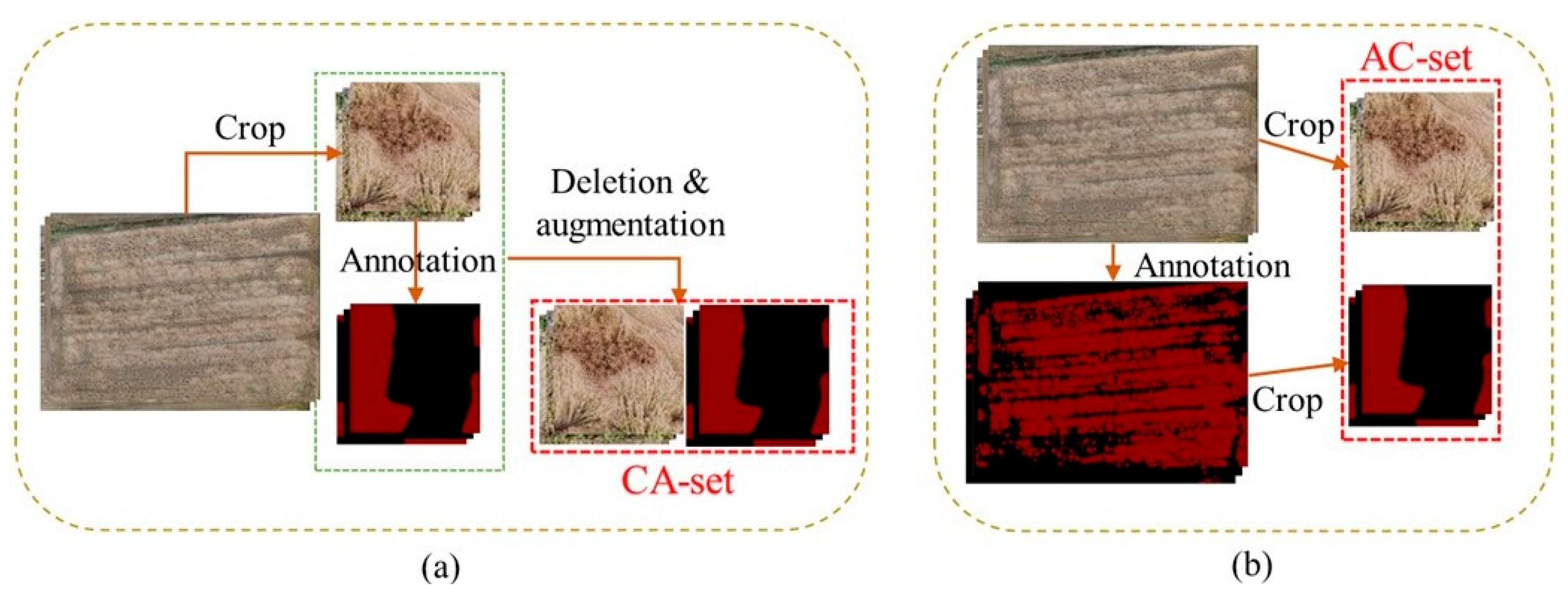

2.3. Dataset Construction and Annotation

3. Methodology

3.1. Technical Workflow

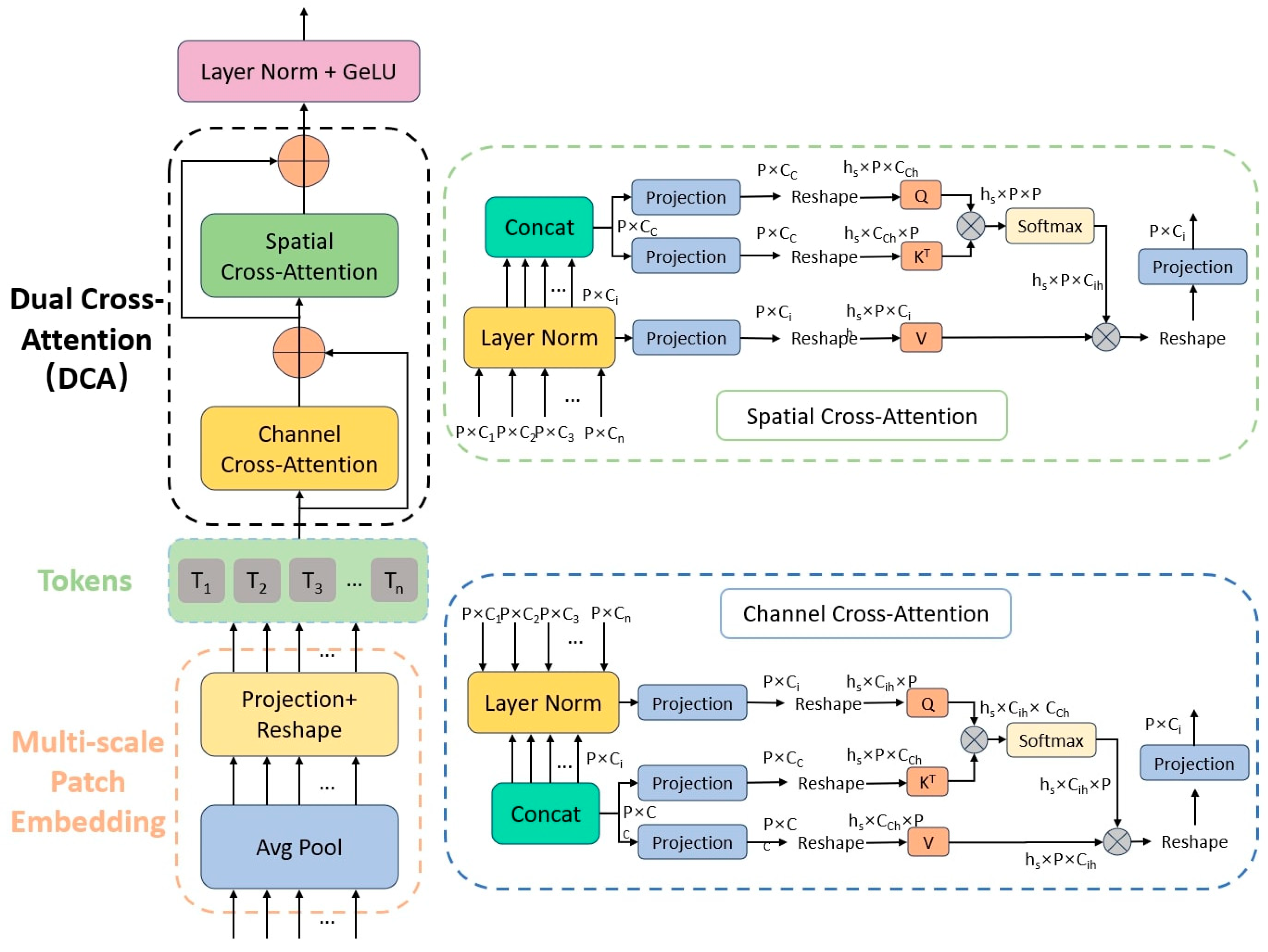

3.2. DCA Module

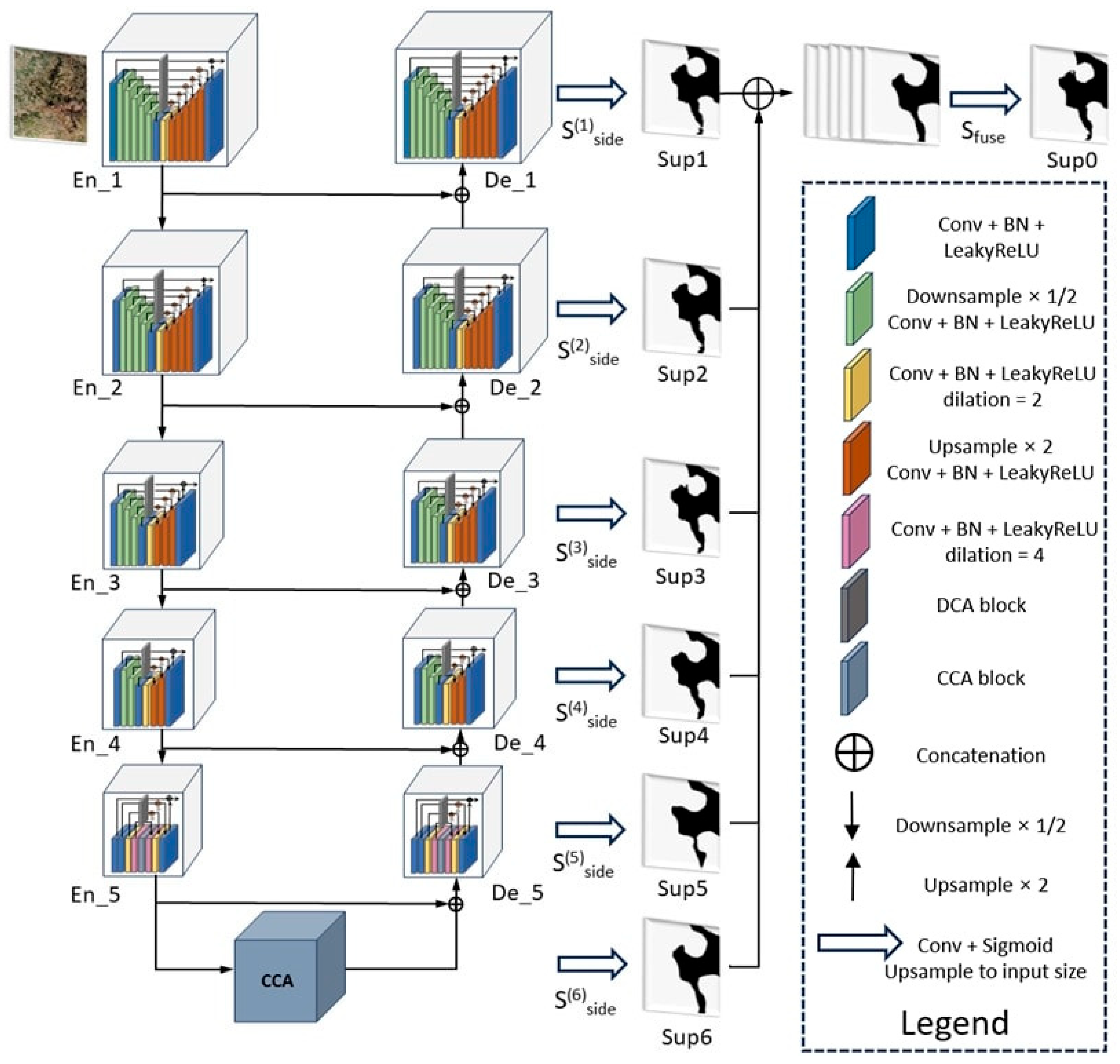

3.3. Structure of L-U2NetP

- CCA [39] replaced the large-dilation-rate convolution blocks and the bottommost blocks of U2NetP. At very low computational and memory cost, the CCA module models the correlations between neighborhood features across the full image. Compared with the original input, the CCA-enhanced feature map selectively aggregates contextual features through the attention feature map while obtaining a larger contextual receptive field.

- As shown by the dark gray modules in Figure 6, the DCA module was added to the connections between the encoder and decoder of each small-scale U-structure, providing a simple and effective way to enhance the skip connections in the structure.

- All ReLU activation functions in U2Net were replaced by LeakyReLU [40] in L-U2NetP. ReLU is often used as an activation function to obtain a sparse network, but the dead ReLU problem has been observed with ReLU when the input data are normalized [41]. LeakyReLU mitigates the dead ReLU problem by applying a very small linear slope to negative inputs instead of setting them to zero.
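The difference between the two activations in the last item can be illustrated with a minimal NumPy sketch. The negative slope of 0.01 and the function names are assumptions for illustration; the slope used in L-U2NetP is not stated in this section.

```python
import numpy as np

def relu(x):
    # Standard ReLU: negative inputs are clamped to zero.
    return np.maximum(x, 0.0)

def relu_grad(x):
    # Gradient is zero for non-positive inputs, so such units stop updating.
    return (x > 0).astype(float)

def leaky_relu(x, negative_slope=0.01):
    # negative_slope=0.01 is an assumed value, not taken from the paper.
    return np.where(x > 0, x, negative_slope * x)

def leaky_relu_grad(x, negative_slope=0.01):
    # A small non-zero gradient survives for non-positive inputs.
    return np.where(x > 0, 1.0, negative_slope)

x = np.array([-2.0, -0.5, 0.0, 1.5])
print("ReLU output:       ", relu(x))
print("ReLU gradient:     ", relu_grad(x))
print("LeakyReLU output:  ", leaky_relu(x))
print("LeakyReLU gradient:", leaky_relu_grad(x))
```

With ReLU, every non-positive input yields a zero gradient, which is how a unit can become permanently inactive. With LeakyReLU the gradient for those inputs equals the small slope, so the unit can still recover during training.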

3.4. Model Training

3.5. Evaluation Metrics

4. Results

4.1. Performance of Different Models on CA Set

4.2. Comparison of Robustness of Different Models in Real-Time Detection Simulation

4.3. Comparison of Generalization Ability of Different Models on AC Set

5. Discussion

5.1. Comparison of the Proposed Method with Previous Crop Lodging Segmentation Methods Based on RGB Images

5.2. Necessity of Model Robustness Testing in Real-Time Detection Simulation

5.3. Necessity of Using Crop-Annotation Strategy for Crop Lodging Extraction

5.4. Future Challenges

6. Conclusions

- L-U2NetP achieved segmentation accuracies of 95.45% and 89.72% on the simple and difficult sets, respectively, with the same enhancement as the training set, outperforming all the other comparative networks.

- In the real-time detection simulation test, L-U2NetP achieved accuracies of 85.96% and 83.58% on the simple and difficult sets, respectively, under changing light conditions. Under aerial foreign object obstruction, the accuracies were 94.13% and 85.82% on the simple and difficult sets, respectively. When motion blur was present, the accuracies were 89.64% and 75.16% on the simple and difficult sets, respectively. L-U2NetP outperformed the other models on the majority of the evaluation metrics and showed strong robustness in these simulations.

- Compared with the U2NetP network, L-U2NetP improved accuracy by 7.31 percentage points on the test set obtained by the Annotation-crop strategy. The results indicated that L-U2NetP can effectively extract lodging areas and that the novel strategy was reliable.
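The 7.31 figure in the last item appears to be the absolute difference between the overall ("All") accuracies of L-U2NetP (91.67%) and U2NetP (84.36%) in the AC set results table. A short sketch of that arithmetic follows; the function names are hypothetical, and the relative gain is shown only to contrast it with the absolute difference.

```python
def percentage_point_gain(new_acc, baseline_acc):
    # Absolute difference between two accuracies given in percent.
    return round(new_acc - baseline_acc, 2)

def relative_gain_percent(new_acc, baseline_acc):
    # Gain expressed relative to the baseline accuracy, in percent.
    return round((new_acc - baseline_acc) / baseline_acc * 100, 2)

# Overall accuracies on the AC set, taken from the results table.
l_u2netp_all = 91.67
u2netp_all = 84.36

abs_gain = percentage_point_gain(l_u2netp_all, u2netp_all)
rel_gain = relative_gain_percent(l_u2netp_all, u2netp_all)
print(f"absolute gain: {abs_gain} percentage points")
print(f"relative gain: {rel_gain} % of the U2NetP accuracy")
```

The absolute gain matches the reported 7.31, which suggests the figure is a difference in percentage points rather than a relative improvement.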

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Brune, P.F.; Baumgarten, A.; McKay, S.J.; Technow, F.; Podhiny, J. A biomechanical model for maize root lodging. Plant Soil 2018, 422, 397–408.

2. Bing, L.; Jingang, L.; Yupan, Z.; Yugi, W.; Zhen, J. Epidemiological Analysis and Management Strategies of Fusarium Head Blight of Wheat. Curr. Biotechnol. 2021, 11, 647.

3. Martínez-Peña, R.; Vergara-Díaz, O.; Schlereth, A.; Höhne, M.; Morcuende, R.; Nieto-Taladriz, M.T.; Araus, J.L.; Aparicio, N.; Vicente, R. Analysis of durum wheat photosynthetic organs during grain filling reveals the ear as a water stress-tolerant organ and the peduncle as the largest pool of primary metabolites. Planta 2023, 257, 81.

4. Islam, M.S.; Peng, S.; Visperas, R.M.; Ereful, N.; Bhuiya, M.S.U.; Julfiquar, A. Lodging-related morphological traits of hybrid rice in a tropical irrigated ecosystem. Field Crops Res. 2007, 101, 240–248.

5. Dong, H.; Luo, Y.; Li, W.; Wang, Y.; Zhang, Q.; Chen, J.; Jin, M.; Li, Y.; Wang, Z. Effects of Different Spring Nitrogen Topdressing Modes on Lodging Resistance and Lignin Accumulation of Winter Wheat. Sci. Agric. Sin. 2020, 53, 4399–4414.

6. Wang, D.; Ding, W.H.; Feng, S.W.; Hu, T.Z.; Li, G.; Li, X.H.; Yang, Y.Y.; Ru, Z.G. Stem characteristics of different wheat varieties and its relationship with lodging-resistance. Chin. J. Appl. Ecol. 2015, 27, 1496–1502.

7. Del Pozo, A.; Matus, I.; Ruf, K.; Castillo, D.; Méndez-Espinoza, A.M.; Serret, M.D. Genetic advance of durum wheat under high yielding conditions: The case of Chile. Agronomy 2019, 9, 454.

8. Zhu, W.; Feng, Z.; Dai, S.; Zhang, P.; Ji, W.; Wang, A.; Wei, X.-H. Multi-Feature Fusion Detection of Wheat Lodging Information Based on UAV Multispectral Images. Spectrosc. Spectr. Anal. 2022, 44, 197–206.

9. Yang, H.; Chen, E.; Li, Z.; Zhao, C.; Yang, G.; Pignatti, S.; Casa, R.; Zhao, L. Wheat lodging monitoring using polarimetric index from RADARSAT-2 data. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 157–166.

10. Zhang, G.; Yan, H.; Zhang, D.; Zhang, H.; Cheng, T.; Hu, G.; Shen, S.; Xu, H. Enhancing model performance in detecting lodging areas in wheat fields using UAV RGB Imagery: Considering spatial and temporal variations. Comput. Electron. Agric. 2023, 214, 108297.

11. Liu, T.; Li, R.; Zhong, X.; Jiang, M.; Jin, X.; Zhou, P.; Liu, S.; Sun, C.; Guo, W. Estimates of rice lodging using indices derived from UAV visible and thermal infrared images. Agric. For. Meteorol. 2018, 252, 144–154.

12. Mardanisamani, S.; Maleki, F.; Hosseinzadeh Kassani, S.; Rajapaksa, S.; Duddu, H.; Wang, M.; Shirtliffe, S.; Ryu, S.; Josuttes, A.; Zhang, T. Crop lodging prediction from UAV-acquired images of wheat and canola using a DCNN augmented with handcrafted texture features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

13. Chauhan, S.; Darvishzadeh, R.; van Delden, S.H.; Boschetti, M.; Nelson, A. Mapping of wheat lodging susceptibility with synthetic aperture radar data. Remote Sens. Environ. 2021, 259, 112427.

14. Chauhan, S.; Darvishzadeh, R.; Lu, Y.; Boschetti, M.; Nelson, A. Understanding wheat lodging using multi-temporal Sentinel-1 and Sentinel-2 data. Remote Sens. Environ. 2020, 243, 111804.

15. Hufkens, K.; Melaas, E.K.; Mann, M.L.; Foster, T.; Ceballos, F.; Robles, M.; Kramer, B. Monitoring crop phenology using a smartphone based near-surface remote sensing approach. Agric. For. Meteorol. 2019, 265, 327–337.

16. Gerten, D.; Wiese, M. Microcomputer-assisted video image analysis of lodging in winter wheat. Photogramm. Eng. Remote Sens. 1987, 53, 83–88.

17. Chauhan, S.; Darvishzadeh, R.; Boschetti, M.; Pepe, M.; Nelson, A. Remote sensing-based crop lodging assessment: Current status and perspectives. ISPRS J. Photogramm. Remote Sens. 2019, 151, 124–140.

18. Bah, M.D.; Hafiane, A.; Canals, R. Weeds detection in UAV imagery using SLIC and the hough transform. In Proceedings of the 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017; pp. 1–6.

19. Jung, J.; Maeda, M.; Chang, A.; Landivar, J.; Yeom, J.; McGinty, J. Unmanned aerial system assisted framework for the selection of high yielding cotton genotypes. Comput. Electron. Agric. 2018, 152, 74–81.

20. Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A deep learning-based approach for automated yellow rust disease detection from high-resolution hyperspectral UAV images. Remote Sens. 2019, 11, 1554.

21. Vélez, S.; Vacas, R.; Martín, H.; Ruano-Rosa, D.; Álvarez, S. A novel technique using planar area and ground shadows calculated from UAV RGB imagery to estimate pistachio tree (Pistacia vera L.) canopy volume. Remote Sens. 2022, 14, 6006.

22. Matese, A.; Di Gennaro, S.F. Beyond the traditional NDVI index as a key factor to mainstream the use of UAV in precision viticulture. Sci. Rep. 2021, 11, 2721.

23. Li, M.; Shamshiri, R.R.; Schirrmann, M.; Weltzien, C.; Shafian, S.; Laursen, M.S. UAV oblique imagery with an adaptive micro-terrain model for estimation of leaf area index and height of maize canopy from 3D point clouds. Remote Sens. 2022, 14, 585.

24. Tian, M.; Ban, S.; Yuan, T.; Ji, Y.; Ma, C.; Li, L. Assessing rice lodging using UAV visible and multispectral image. Int. J. Remote Sens. 2021, 42, 8840–8857.

25. Chu, T.; Starek, M.J.; Brewer, M.J.; Masiane, T.; Murray, S.C. UAS imaging for automated crop lodging detection: A case study over an experimental maize field. In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping II; SPIE: Bellingham, WA, USA, 2017; pp. 88–94.

26. Yu, J.; Cheng, T.; Cai, N.; Lin, F.; Zhou, X.-G.; Du, S.; Zhang, D.; Zhang, G.; Liang, D. Wheat lodging extraction using Improved_Unet network. Front. Plant Sci. 2022, 13, 1009835.

27. Zhang, D.; Ding, Y.; Chen, P.; Zhang, X.; Pan, Z.; Liang, D. Automatic extraction of wheat lodging area based on transfer learning method and deeplabv3+ network. Comput. Electron. Agric. 2020, 179, 105845.

28. Yu, J.; Cheng, T.; Cai, N.; Zhou, X.-G.; Diao, Z.; Wang, T.; Du, S.; Liang, D.; Zhang, D. Wheat Lodging Segmentation Based on Lstm_PSPNet Deep Learning Network. Drones 2023, 7, 143.

29. Yang, B.; Zhu, Y.; Zhou, S. Accurate wheat lodging extraction from multi-channel UAV images using a lightweight network model. Sensors 2021, 21, 6826.

30. Shen, H.; Su, X.; Zhao, Q. Extraction of lodging area of wheat varieties by unmanned aerial vehicle remote sensing based on deep learning. Trans. Chin. Soc. Agric. Mach. 2022, 53, 252–260.

31. Yang, W.; Luo, W.; Mao, J.; Fang, Y.; Bei, J. Substation meter detection and recognition method based on lightweight deep learning model. In Proceedings of the International Symposium on Artificial Intelligence and Robotics 2022, Shanghai, China, 21–23 October 2022; pp. 199–207.

32. Li, W.; Zhan, W.; Han, T.; Wang, P.; Liu, H.; Xiong, M.; Hong, S. Research and Application of U2-NetP Network Incorporating Coordinate Attention for Ship Draft Reading in Complex Situations. J. Signal Process. Syst. 2023, 95, 177–195.

33. Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: A database and web-based tool for image annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

34. Tao, F.; Yao, H.; Hruska, Z.; Kincaid, R.; Rajasekaran, K. Near-infrared hyperspectral imaging for evaluation of aflatoxin contamination in corn kernels. Biosyst. Eng. 2022, 221, 181–194.

35. Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

36. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

37. Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

38. Ates, G.C.; Mohan, P.; Celik, E. Dual Cross-Attention for Medical Image Segmentation. arXiv 2023, arXiv:17696.

39. Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. Ccnet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 603–612.

40. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; p. 3.

41. Baloch, D.; Abdullah, S.; Qaiser, A.; Ahmed, S.; Nasim, F.; Kanwal, M. Speech Enhancement using Fully Convolutional UNET and Gated Convolutional Neural Network. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 831–836.

42. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

43. Zhao, S.; Peng, Y.; Liu, J.; Wu, S. Tomato Leaf Disease Diagnosis Based on Improved Convolution Neural Network by Attention Module. Agriculture 2021, 11, 651.

44. Zhao, X.; Yuan, Y.; Song, M.; Ding, Y.; Lin, F.; Liang, D.; Zhang, D. Use of unmanned aerial vehicle imagery and deep learning unet to extract rice lodging. Sensors 2019, 19, 3859.

45. Zhao, J.; Li, Z.; Lei, Y.; Huang, L. Application of UAV RGB Images and Improved PSPNet Network to the Identification of Wheat Lodging Areas. Agronomy 2023, 13, 1309.

46. He, Y.; Zhang, X.; Zhang, Z.; Fang, H.J.C. Automated detection of boundary line in paddy field using MobileV2-UNet and RANSAC. Comput. Electron. Agric. 2022, 194, 106697.

47. Yoon, H.-S.; Park, S.-W.; Yoo, J.-H. Real-time hair segmentation using mobile-unet. Electronics 2021, 10, 99.

48. Zhang, Z.; Flores, P.; Igathinathane, C.; Naik, D.L.; Kiran, R.; Ransom, J.K. Wheat lodging detection from UAS imagery using machine learning algorithms. Remote Sens. 2020, 12, 1838.

49. Jianing, L.; Zhao, Z.; Xiaohang, L.; Yunxia, L.; Zhaoyu, R.; Jiangfan, Y.; Man, Z.; Paulo, F.; Zhexiong, H.; Can, H.; et al. Wheat Lodging Types Detection Based on UAV Image Using Improved EfficientNetV2. Smart Agric. 2023, 5, 62–74.

50. Burdziakowski, P.; Bobkowska, K. UAV Photogrammetry under Poor Lighting Conditions—Accuracy Considerations. Sensors 2021, 21, 3531.

51. Chen, X.; Peng, D.; Gu, Y. Real-time object detection for UAV images based on improved YOLOv5s. Opto-Electron. Eng. 2022, 49, 210372-1–210372-13.

52. Nasrullah, A.R. Systematic Analysis of Unmanned Aerial Vehicle (UAV) Derived Product Quality. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2016.

53. Zhang, G.; He, F.; Yan, H.; Xu, H.; Pan, Z.; Yang, X.; Zhang, D.; Li, W. Methodology of wheat lodging annotation based on semi-automatic image segmentation algorithm. Int. J. Precis. Agric. Aviat. 2022, 5, 47–53.

54. Liu, H.Y.; Yang, G.J.; Zhu, H.C. The extraction of wheat lodging area in UAV’s image used spectral and texture features. Appl. Mech. Mater. 2014, 651, 2390–2393.

55. Yu, N.; Hua, K.A.; Cheng, H. A Multi-Directional Search technique for image annotation propagation. J. Vis. Commun. Image Represent. 2012, 23, 237–244.

56. Bhagat, P.; Choudhary, P. Image annotation: Then and now. Image Vis. Comput. 2018, 80, 1–23.

57. Xiao, Y.; Dong, Y.; Huang, W.; Liu, L.; Ma, H. Wheat Fusarium Head Blight Detection Using UAV-Based Spectral and Texture Features in Optimal Window Size. Remote Sens. 2021, 13, 2437.

| Dataset | Total | Train Set | Test Set | Test Set |
|---|---|---|---|---|
| CA set | 10,460 | 8000 | Normal set1: 880 (Difficult), 760 (Simple) | Disturb set: 660 (Difficult), 570 (Simple) |
| AC set | 3000 | / | Normal set2: 3000 | Normal set3: 500 (Difficult), 500 (Simple) |

| Parameter | Value |
|---|---|
| Batch size | 8 |
| Learning rate | 0.001 |
| Weight decay | 0.0001 |
| Input shape | 512 × 512 |
| Num classes | 2 |
| Epoch | 80 |
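As a reading aid, the training settings in the table above can be collected into a small configuration object, together with the iteration count they imply. This is a sketch under stated assumptions: `TrainConfig` and `iterations_per_epoch` are hypothetical names, the optimizer is not specified here, and the calculation assumes that each epoch passes once over all 8000 CA training images listed in the dataset table.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    # Values copied from the hyperparameter table; field names are illustrative.
    batch_size: int = 8
    learning_rate: float = 0.001
    weight_decay: float = 0.0001
    input_shape: tuple = (512, 512)
    num_classes: int = 2
    epochs: int = 80

def iterations_per_epoch(num_train_images, batch_size):
    # Assumes the last partial batch (if any) is kept rather than dropped.
    return math.ceil(num_train_images / batch_size)

cfg = TrainConfig()
num_train_images = 8000  # CA set training images from the dataset table
steps = iterations_per_epoch(num_train_images, cfg.batch_size)
total_steps = steps * cfg.epochs
print(f"{steps} iterations per epoch, {total_steps} iterations in total")
```

Under these assumptions, training runs for 1000 iterations per epoch and 80,000 iterations overall.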

| Model | Simple Set Acc | Simple Set F1 | Simple Set IoU | Difficult Set Acc | Difficult Set F1 | Difficult Set IoU | Parameters (Million) |
|---|---|---|---|---|---|---|---|
| L-U2NetP | 95.45% | 93.11% | 89.15% | 89.72% | 79.95% | 70.24% | 1.10 |
| U2NetP | 94.80% | 91.97% | 87.89% | 87.83% | 78.90% | 69.23% | 1.13 |
| UNet | 75.95% | 73.01% | 63.57% | 66.18% | 49.02% | 36.41% | 29.44 |
| U2Net | 94.61% | 91.69% | 87.62% | 88.24% | 78.06% | 68.07% | 44.01 |
| SegNet | 94.17% | 91.16% | 86.33% | 88.57% | 76.27% | 65.65% | 31.03 |
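The Acc, F1, and IoU columns are standard pixel-level segmentation metrics. The sketch below computes them from a confusion matrix for a single binary mask, treating lodging as the positive class. The function name and the toy masks are hypothetical, and how the paper averages these metrics over classes or images is not stated in this excerpt, so this code is not expected to reproduce the table values.

```python
import numpy as np

def binary_segmentation_metrics(pred, target):
    """Return (accuracy, F1, IoU) for binary masks, positive class = 1."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)

    tp = int(np.sum(pred & target))    # lodging predicted as lodging
    tn = int(np.sum(~pred & ~target))  # background predicted as background
    fp = int(np.sum(pred & ~target))   # background predicted as lodging
    fn = int(np.sum(~pred & target))   # lodging predicted as background

    acc = (tp + tn) / (tp + tn + fp + fn)
    # Guard against empty masks, where F1 and IoU are otherwise undefined.
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) > 0 else 0.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) > 0 else 0.0
    return acc, f1, iou

# Toy 2 x 3 masks, purely illustrative.
pred_mask = [[1, 1, 0],
             [0, 1, 0]]
true_mask = [[1, 0, 0],
             [0, 1, 1]]

acc, f1, iou = binary_segmentation_metrics(pred_mask, true_mask)
print(f"Acc={acc:.4f}  F1={f1:.4f}  IoU={iou:.4f}")
```

For the toy masks there are two true positives, two true negatives, one false positive, and one false negative, giving an accuracy and F1 of 4/6 and an IoU of 2/4.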

| Model | Simple Set Acc | Simple Set F1 | Simple Set IoU | Difficult Set Acc | Difficult Set F1 | Difficult Set IoU |
|---|---|---|---|---|---|---|
| L-U2NetP | 85.96% | 83.02% | 77.97% | 83.58% | 69.42% | 59.44% |
| U2NetP | 73.84% | 69.78% | 64.36% | 71.96% | 55.70% | 46.91% |
| UNet | 73.56% | 71.70% | 62.49% | 63.08% | 49.58% | 37.02% |
| U2Net | 87.55% | 86.30% | 81.50% | 77.89% | 64.72% | 55.27% |
| SegNet | 85.49% | 82.38% | 76.03% | 81.92% | 69.21% | 58.69% |

| Model | Simple Set Acc | Simple Set F1 | Simple Set IoU | Difficult Set Acc | Difficult Set F1 | Difficult Set IoU |
|---|---|---|---|---|---|---|
| L-U2NetP | 94.13% | 89.61% | 84.55% | 85.82% | 72.93% | 61.60% |
| U2NetP | 93.42% | 88.48% | 83.46% | 84.74% | 73.82% | 62.71% |
| UNet | 72.48% | 71.77% | 60.53% | 64.40% | 49.63% | 36.05% |
| U2Net | 93.31% | 89.70% | 84.71% | 85.55% | 74.33% | 63.27% |
| SegNet | 91.16% | 87.70% | 81.63% | 83.56% | 72.26% | 60.54% |

| Model | Simple Set Acc | Simple Set F1 | Simple Set IoU | Difficult Set Acc | Difficult Set F1 | Difficult Set IoU |
|---|---|---|---|---|---|---|
| L-U2NetP | 89.64% | 88.38% | 83.72% | 75.16% | 68.89% | 58.24% |
| U2NetP | 91.73% | 89.63% | 84.67% | 82.57% | 73.05% | 61.99% |
| UNet | 76.70% | 76.37% | 68.47% | 60.41% | 50.92% | 38.83% |
| U2Net | 85.49% | 85.02% | 79.04% | 67.97% | 63.42% | 52.28% |
| SegNet | 78.69% | 74.75% | 65.99% | 75.87% | 56.48% | 44.68% |

| Models | All | Simple | Difficult |
|---|---|---|---|
| L-U2NetP | 91.67% | 97.30% | 90.63% |
| U2NetP | 84.36% | 85.53% | 81.35% |
| UNet | 61.89% | 50.68% | 63.49% |
| U2Net | 87.93% | 94.49% | 85.89% |
| SegNet | 90.98% | 97.34% | 90.53% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feng, G.; Wang, C.; Wang, A.; Gao, Y.; Zhou, Y.; Huang, S.; Luo, B. Segmentation of Wheat Lodging Areas from UAV Imagery Using an Ultra-Lightweight Network. Agriculture 2024, 14, 244. https://doi.org/10.3390/agriculture14020244
