Abstract

The advent of artificial intelligence (AI) in animal husbandry, particularly in pig interaction recognition (PIR), offers a transformative approach to enhancing animal welfare, promoting sustainability, and bolstering climate resilience. This innovative methodology not only mitigates labor costs but also significantly reduces stress levels among domestic pigs, thereby diminishing the necessity for constant human intervention. However, the raw PIR datasets often encompass irrelevant porcine features, which pose a challenge for the accurate interpretation and application of these datasets in real-world scenarios. The majority of these datasets are derived from sequential pig imagery captured from video recordings, and an unregulated shuffling of data often leads to an overlap of data samples between training and testing groups, resulting in skewed experimental evaluations. To circumvent these obstacles, we introduced a groundbreaking solution—the Semi-Shuffle-Pig Detector (SSPD) for PIR datasets. This novel approach ensures a less biased experimental output by maintaining the distinctiveness of testing data samples from the training datasets and systematically discarding superfluous information from raw images. Our optimized method significantly enhances the true performance of classification, providing unbiased experimental evaluations. Remarkably, our approach has led to a substantial improvement in the isolation after feeding (IAF) metric by 20.2% and achieved higher accuracy in segregating IAF and paired after feeding (PAF) classifications exceeding 92%. This methodology, therefore, ensures the preservation of pertinent data within the PIR system and eliminates potential biases in experimental evaluations. As a result, it enhances the accuracy and reliability of real-world PIR applications, contributing to improved animal welfare management, elevated food safety standards, and a more sustainable and climate-resilient livestock industry.

1. Introduction

Pork occupies a significant niche in the global diet, with countless swine bred annually as per the United States Department of Agriculture (USDA). An omnipresent research topic in the meat industry is pig welfare, which often comes with the onus of a considerable human workforce due to the exhaustive nature of monitoring welfare conditions [1]. However, the prevalent demand for enhanced pork productivity and quality underscores the need for a shift in traditional practices [2,3].

Our paramount goal revolved around amplifying the accuracy of interaction recognition in pigs—a pursuit carrying significant implications for animal welfare through early stress detection and preventive measures. In response to this pressing need, the advent of the pig interaction recognition (PIR) system, leveraging cutting-edge deep learning algorithms and neural networks, heralds a transformative era in animal husbandry. The PIR system serves as a viable and scalable alternative for the farming industry, capable of detecting pig interactions without the need for human observers [4]. Its integration in livestock management could significantly reduce workforce expenses and time, while outperforming human efficiency in terms of accuracy.

Breakthroughs in deep neural networks, such as Visual Geometry Group (VGG) [5], Inception [6], ResNet [7], MobileNet [8], and Xception [9], have spurred dramatic advancements in sectors like healthcare and traffic infrastructure [10,11]. Now, these formidable convolution neural networks (CNNs) are set to revolutionize automated agricultural machinery, overcoming the pitfalls of traditional methods, such as laborious tasks and subjective judgments [12]. Prior research on facial emotion recognition accentuates the essential role of substantial, well-preprocessed datasets for optimized performance, even with the most advanced architectures [13,14,15].

However, the journey to a robust PIR system requires a hefty investment of time and effort to collate high-quality data samples. Collaboration between deep learning scientists and agricultural research institutes often lies at the core of this data collection process. To sculpt a reliable and efficient PIR system, both an exhaustive dataset and a top-tier deep neural network are crucial. The acquisition of a PIR dataset is an indispensable first step, followed by its meticulous management for training avant-garde neural networks, thus elevating the PIR system’s performance and accuracy. Furthermore, most datasets are extremely unbalanced. Obtaining a balanced PIR dataset is rare, and removing some data samples from the major group of classification could waste the potential trainable data samples.

A thorough dataset analysis requires eliminating superfluous video frames, background pixels, and unrelated subjects to maximize neural network performance. Sequential images pose a particular challenge: because animals in confined spaces move little, consecutive frames can be nearly identical. Randomly shuffling such a dataset between training and testing groups is therefore a pitfall, leading to skewed experimental results and unreliable classification in real-world scenarios. Addressing these issues clarifies the potential impact of deep neural networks on large-scale livestock management, marking a shift towards more efficient and reliable agricultural solutions [13].

Pig datasets obtained from animal experimental facilities, industrial pig farming facilities, and academic research institutions often comprise unprocessed video clips with features irrelevant to pig interactions. Moreover, given the pigs’ limited mobility, these samples typically do not present the pig in an unoccupied space. Our contribution lies in the partitioning and preprocessing of a PIR dataset whose images are generated sequentially from video clips. Partitioning is especially challenging for sequential data, and our semi-shuffle method addresses it. Shuffling sequential images and dividing them into training and testing groups can train and test the PIR model, but the pre-trained PIR model might falter in real-life scenarios because near-identical samples are shared between the training and testing datasets. This paper underscores the need for a test partition that the model has not yet encountered, better simulating real-world deployment. Cross-validation resamples the data into training and testing sets [16] and is a useful tool for checking whether the reported accuracy misrepresents practical performance. However, randomly shuffling time-sequential images can still yield almost perfect performance, because near-identical images end up in both the training and testing groups and bias the interpretation. According to White et al. [17], traditional k-fold cross-validation may misrepresent practical accuracy because its shuffling can cause overlap between training and testing data. The SSPD-PIR method provides an alternative designed specifically for time-sequential images and offers a more realistic evaluation of the model.

To mitigate this issue, we proposed the Semi-Shuffling and Pig Detector (SSPD)-PIR dataset, intended to produce less biased experimental results. Our pioneering contributions to these preprocessing techniques are as follows:

- The SSPD-PIR addresses sequentially generated images from video clips and prevents testing sample sharing with the PIR model during training, thereby enhancing objective evaluation;

- The SSPD-PIR discards a significant number of irrelevant pixels before training the Xception model, allowing focus on the pig region in an image and training of the PIR model with only pertinent information;

- The Xception architecture, known for its lightweight design and competitive results, can be trained with the SSPD-PIR dataset, potentially outperforming existing simple-CNN architectures [13].

Implementing the SSPD-PIR resulted in improved accuracy in the isolation-after-feeding class and revealed the true F1-score, indicating the need for further dataset classification management. Collectively, our proposed preprocessing techniques, coupled with the Xception architecture, pave the path for a more accurate and reliable PIR model in real-world applications.

The rest of this paper is organized as follows. Section 2 compares other pig-related approaches. Section 3 explains our proposed preprocessing techniques using semi-shuffling techniques and a pig detector before training the Xception architecture. Section 4 presents the experimental results. Section 5 presents a discussion. Finally, Section 6 summarizes our proposed methods.

2. Related Work

A notable body of research within the realm of pig welfare underscores the confluence of various aspects such as pig weight, pig tag number identification, symptoms of stress, and social status. Such contributions offer a vantage point into the wellbeing of pigs, serving as a cornerstone in improving their living conditions. Critical engagement with these research findings propels the discourse on pig welfare, enabling a better understanding of the interactive states of these animals, an underappreciated yet pivotal area of study.

Recent work elucidates the nuanced interplay of social dynamics among pigs, spotlighting the quintessential role of comprehending these interactions in gauging overall pig welfare [18]. Discomfort and stress are immediate by-products of surgical procedures, notably the castration of male pigs, demanding immediate attention for welfare improvement [19,20]. With a pivot towards technology, implantable telemetric devices have emerged as instrumental tools for monitoring pig interactions by tracking heart rate, blood pressure, and receptor variation [21]. The increasing attention animal and agricultural scientists have devoted to understanding animal interactive expressions continues to gain momentum. The research conducted by Lezama Garcia et al. [22] underscores the relationship between facial expressions, combined poses, and the welfare of domestic animals such as pigs. Close inspection of animal facial expressions and body poses can furnish invaluable insights into their interactive states, thereby enhancing post-surgical treatment and food safety standards. Building on this premise, innovative technologies like convolutional neural networks (CNNs) and machine learning algorithms have been employed to detect pig curvature and estimate pig weight and emotional valence [23]. Additionally, advanced architectures such as the ResNet 50 neural network and CNN-LSTM have been applied to detect and track pigs, measure the valence of interactive situations in pigs through vocal frequency, and sequentially monitor and detect aggressive behaviors in pigs [4]. Neethirajan [24] highlighted that postures of eyes, ears, and snout could help determine negative interactions in pigs using YOLO architectures combined with RCNN. Briefer et al. [25] employed high and low frequencies to identify negative and positive valence in pigs, using a ResNet 50 neural network, which includes CNNs. Hansen et al. 
[26] showed that frontal images display the eyelid region, and heat maps provide a deep convolutional neural network to determine if pig stress levels are increasing, thereby degrading overall pig welfare.

Hakansson et al. [27] utilized CNN-LSTM to sequentially monitor and detect aggressive pig behaviors, such as biting, allowing farmers to intervene before the situation worsens. Imfeld-Mueller et al. [28] used high vocal frequency to measure the valence of an interactive situation for pigs. Capuani et al. [29] applied a CNN with an extreme randomized tree (ERT) classifier to discern positive and negative interactions in swine vocalization through machine learning. Wang et al. [30] employed a lightweight CNN-based model for early warning in sow oestrus sound monitoring. Ocepek et al. [31] used the YOLOv4 model, while Xu et al. [32] applied ResNet 50 for pig face recognition. Ocepek et al. also applied Mask R-CNN to remove irrelevant segments from pig images. However, the pre-trained Mask R-CNN does not include a pig class, and the authors trained with only 533 “pig-like” images and tested with 50 “pig-like” samples. Their approach is therefore less reliable for extracting pig regions than MegaDetector V3, which is trained with millions of samples. Removing irrelevant pixels is the key to standardizing our PIR dataset, so that the trained model focuses primarily on the relevant information. Extraneous pixels account for more than 80% of an image. Without MegaDetector, this large share of extraneous pixels would cause the model to focus mainly on extraneous information and would ultimately misrepresent its performance. Our method removes most of these pixels before the training procedure; removing the remainder completely might improve the accuracy further, but the small portion that is left does not affect the model performance significantly.

Concurrent advancements, such as the application of the YOLOv4 model and Mask R-CNN, have revolutionized pig face recognition and image processing. Notwithstanding, the pre-trained Mask R-CNN, when tested under certain conditions, failed to include pig classification, thereby rendering the approach unreliable for extracting pig segmentation. Removing the remaining extraneous information completely may improve the accuracy further. However, our methods significantly reduce a huge portion of extraneous pixels before entering the model’s training process. Ahn et al. [33] exploited the tiny YOLOv4 model to view pigs from a top-view camera. Low et al. [34] tested CNN-LSTM with a ResNet50 back-boned model for full-body pig detection from a top-view camera. Colaco et al. [35,36] used the PIR dataset containing thermal images, instead of colored or gray-scaled images, and trained their proposed depth-wise separable designed architecture. These studies demonstrate the wide array of techniques employed in pig interaction recognition, emphasizing the importance of understanding and monitoring pig welfare.

By harnessing an array of techniques in pig interaction recognition, we witness the burgeoning importance of understanding and monitoring pig welfare. In recent years, researchers have charted impressive progress in improving pig welfare conditions. Central to these strides are in-depth studies focusing on pig facial expressions, body poses, social relationships, and feeding intervals. Our proposed recognition system seeks to continue this trend, aiming to improve pig welfare through accurate recognition and monitoring of pig interactions. This endeavor draws on diverse methodologies and architectures gleaned from the extant literature. Key among these considerations is the impact of feeding intervals and the manipulation behavior of pen mates on pig behavior [4,18,22,23,24,25,27,31,35,36]. Restrictive feeding can lead to increased aggression in pigs, resulting in antagonistic social behavior when interacting with other pigs. To develop solutions and animal welfare monitoring platforms that address aggression and tail biting, it is essential to understand the impact of feeding intervals and manipulation behavior of pen mates. Abnormal behavior in pigs may be attributed to the redirection of the pig’s exploitative behavior, such as the ability to interact with pen mates when grouped or kept in isolation. By comprehending the effects of feeding intervals and access to socializing conditions on pig behavior, we proposed four specific treatments: isolation after feeding (IAF), isolation before feeding (IBF), paired after feeding (PAF), and paired before feeding (PBF). These treatments are designed to spotlight how different feeding and socializing conditions shape pigs’ interactive behavior. By unraveling the intricacies of pig behavior under varied conditions, we aimed to enable researchers and farmers to cultivate optimal environments that uphold pig welfare and mitigate negative interactive experiences. 
This pursuit underscores the importance of continued research and engagement with innovative technologies in the sphere of pig welfare.

3. SSPD-PIR System

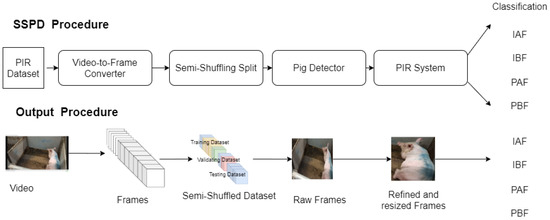

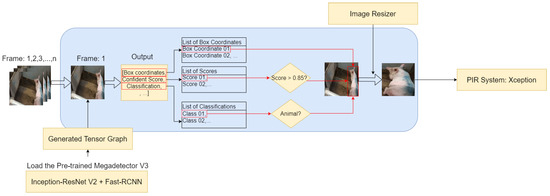

As shown in Figure 1, the PIR dataset contains a collection of images or video clips used for recognizing and categorizing pig interactions. The SSPD-PIR consists of a video-to-frame converter, a semi-shuffling split, a pig detector, and a PIR system for interaction classification. The video-to-frame converter converts all given video clips into images at a rate of 10 frames per second. Semi-shuffling shuffles the training and validating groups together, but the testing group is never mixed with either of them. The pig detector finds the pig in the image and removes the irrelevant regions. The PIR system contains a deep neural network that classifies the pig’s interactions after training. With these components, the SSPD-PIR produces an appropriate evaluation from the images captured sequentially from video clips.

Figure 1.

Proposed PIR approach.
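The video-to-frame step can be illustrated with a short sketch that picks which frames of a clip to keep so that the output approximates 10 frames per second. This is only an illustration under stated assumptions: the function name `sampled_frame_indices` is hypothetical, and the clip is assumed to have a known, constant source frame rate, which the text does not specify.

```python
def sampled_frame_indices(total_frames, source_fps, target_fps=10):
    """Return the indices of the source frames to keep.

    Assumption (not from the paper): the clip has a constant source frame
    rate and frames are simply skipped to approximate target_fps.
    """
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if target_fps >= source_fps:
        # Nothing to drop: the clip is already at or below the target rate.
        return list(range(total_frames))

    step = source_fps / target_fps  # source frames per kept frame
    indices = []
    next_keep = 0.0
    for index in range(total_frames):
        if index >= next_keep:
            indices.append(index)
            next_keep += step
    return indices


if __name__ == "__main__":
    # One second of a 30 fps clip reduced to 10 frames.
    print(sampled_frame_indices(30, source_fps=30, target_fps=10))
```

Lowering `target_fps` to 1 gives the sparser sampling used in the later experiments, where neighboring frames become less alike.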

3.1. PIR Dataset

The video clips of the PIR dataset were recorded by the Department of Animal Sciences, Wageningen University and Research, Netherlands. The PIR dataset was generated by recording video clips of pigs in various environments and situations. These classifications can indirectly interpret the pig’s interactions as the most positive and negative interactive states. To illustrate, IBF is considered the most stressed interaction state, while PAF is the most positive interaction. Each classification is related to pig interaction indirectly, as these labels are usually determined based on a combination of factors, including pig behaviors, physiological markers, and sometimes environmental conditions. The PIR dataset is used to train machine learning models, such as the Xception model, to recognize and classify pig interactions based on visual input.

The PIR dataset consists of IAF, IBF, PAF, and PBF. The IAF has 153 video clips containing two images, and the IBF has 59 clips. The PAF has 94 video clips, including one pig photo, and the PBF includes 24 video clips. All the video clips display the pig’s behavior from 45 degrees of side and top view. Each clip’s length ranges from 1 s to 8 min. The video’s resolution size varies from 620 pixels to 1920 pixels. Neethirajan’s team previously labeled the four classifications. The isolation and pair are visually distinguishable, but after and before feeding, the classifications are visually indistinguishable. The total size of the video clips is 332 GB, while our computer hardware capacity is 1 TB.

Figure 2 shows how similar the images generated from video clips are to their neighboring images. If an image is similar to a neighboring image, the structural similarity index measure (SSIM) exceeds 0.8, that is, 80% similarity. When two images are different, the SSIM value in the example is 0.1442, or 14.42%. Almost all neighboring images in the video clips are similar and yield an SSIM value above 0.8. SSIM could be used to remove an image when it is too similar to its neighbor. However, because our PIR dataset consists of sequential images, SSIM-based filtering would remove the vast majority of trainable images. Additionally, even after applying SSIM, the fully-shuffling method still mixes training and testing data samples, so the model still generates biased experimental results. Therefore, semi-shuffling the PIR dataset is necessary in order to test with images that are completely different from the training samples.

Figure 2.

SSIM algorithm evaluates the similarity of the neighboring pig’s images from PIR dataset.
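The effect of SSIM-based filtering on sequential frames can be sketched as follows. This is a simplified illustration, not the implementation used in this work: it computes a single global SSIM over the whole grayscale image instead of the usual sliding-window average, and the helper names `global_ssim` and `drop_near_duplicates` are hypothetical. The constants follow the standard SSIM definition with an assumed 8-bit dynamic range.

```python
def global_ssim(img_a, img_b, dynamic_range=255.0):
    """Single-window SSIM between two equally sized grayscale images.

    Images are lists of rows of pixel intensities. Real SSIM tools
    average this statistic over local windows; this global version is a
    simplification for illustration only.
    """
    a = [float(p) for row in img_a for p in row]
    b = [float(p) for row in img_b for p in row]
    if not a or len(a) != len(b):
        raise ValueError("images must be non-empty and the same size")

    n = len(a)
    mu_a = sum(a) / n
    mu_b = sum(b) / n
    var_a = sum((p - mu_a) * (p - mu_a) for p in a) / n
    var_b = sum((q - mu_b) * (q - mu_b) for q in b) / n
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(a, b)) / n

    # Standard stabilizing constants of the SSIM definition.
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a * mu_a + mu_b * mu_b + c1) * (var_a + var_b + c2)
    return numerator / denominator


def drop_near_duplicates(frames, threshold=0.8):
    """Keep a frame only if its SSIM to the last kept frame is below threshold."""
    kept = []
    for index, frame in enumerate(frames):
        if not kept or global_ssim(frames[kept[-1]], frame) < threshold:
            kept.append(index)
    return kept


if __name__ == "__main__":
    still = [[0, 50], [100, 200]]      # toy 2x2 "frame"
    moved = [[200, 100], [50, 0]]      # clearly different frame
    print(drop_near_duplicates([still, still, still, moved]))
```

With a pig that barely moves, most consecutive frames exceed the 0.8 threshold, so a filter of this kind discards most of the sequence, which is the loss of trainable images described above.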

3.2. SSPD-PIR: Semi-Shuffling System

Shuffling and splitting data samples into training and validating groups is common practice unless the obtained dataset is already divided into train and test groups. The shuffling method randomly mixes the images before splitting them into training and testing data groups according to the split ratio and the initial random seed. As our initial dataset does not explicitly separate the training and testing datasets, we first split it at a 90 to 10 ratio. A 90 to 10 split can inflate the accuracy because too few testing samples are evaluated. Raising the testing share to 30% provides more testing samples and a less biased assessment of how well the model generalizes over the PIR dataset. Thus, after generating experimental results with the 90 to 10 ratio, we also split the data 70 to 30 to examine the robustness of the pre-trained PIR model.

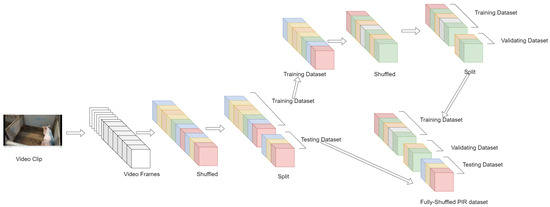

As shown in Figure 3, the fully-shuffled technique randomly mixes the data samples across the training, validating, and testing datasets. However, sharing training and validating data samples with the testing group can produce biased results. Since a pig often does not move in a pen, the images generated when a video clip is converted into sequential frames are almost identical to their neighbors. Some images in the testing dataset are therefore almost identical to samples in the training and validating groups, and fully shuffling all three groups produces a biased experimental evaluation. The pre-trained PIR model is already familiar with the testing images, which are effectively part of the training data, and performs well on them. In contrast, the model’s performance can degrade on data samples that are completely different from the training dataset.

Figure 3.

Fully-shuffling system splits training, validating, and testing data groups.

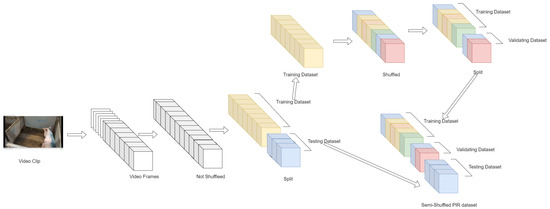

To prevent the testing data samples from being shared with the PIR model, the semi-shuffling method in Figure 4 first divides the data into training and testing groups without mixing them. The data samples in the training group are then split into training and validation groups with random shuffling. Thus, the semi-shuffling method produces an unbiased experimental result from testing, as the pre-trained PIR model is unfamiliar with the images in the testing dataset.

Figure 4.

Semi-shuffling system splits training, validating, and testing data groups.
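The semi-shuffling split can be sketched in a few lines. This is a minimal sketch under assumptions, not the exact procedure used in this work: the function name `semi_shuffle_split` is hypothetical, the input is assumed to be a time-ordered list of frames, and the testing group is assumed to be the unshuffled tail of that sequence. In practice the hold-out could equally be made per video clip.

```python
import random


def semi_shuffle_split(frames, test_ratio=0.3, val_ratio=0.1, seed=0):
    """Split time-ordered frames into train, validation, and test groups.

    The test group is cut off first, without shuffling, so none of its
    frames can land in training. Only the remaining frames are shuffled
    and divided into training and validation groups.
    """
    frames = list(frames)
    n_test = int(round(len(frames) * test_ratio))
    cut = len(frames) - n_test

    train_val = frames[:cut]
    test = frames[cut:]              # contiguous block, never shuffled in

    rng = random.Random(seed)        # fixed seed for a repeatable split
    rng.shuffle(train_val)

    n_val = int(round(len(train_val) * val_ratio))
    val = train_val[:n_val]
    train = train_val[n_val:]
    return train, val, test


if __name__ == "__main__":
    ordered_frames = list(range(100))          # stand-ins for frame files
    train, val, test = semi_shuffle_split(ordered_frames)
    print(len(train), len(val), len(test))
    print("test frames:", test[0], "to", test[-1])
```

A fully-shuffled split would instead shuffle all frames before cutting off the test group, which is what lets near-identical neighboring frames fall on both sides of the split.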

3.3. SSPD-PIR: Pig Detector

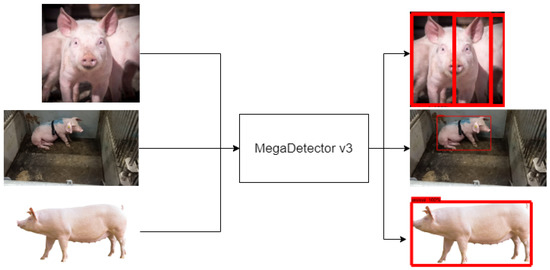

As shown in Figure 7, the pig detector searches for and locates pigs before removing the pixels irrelevant to the pig. The pig detector is built on MegaDetector V3, an animal-detecting model trained with Caltech camera traps (CCT) [37], SnapShot Serengeti [38], and Idaho camera traps [39]. MegaDetector [40] is based on the Inception-ResNet V2 and faster region-based convolutional neural network (Faster R-CNN) architectures. Caltech camera traps contains more than 243,100 images, SnapShot Serengeti more than 2.65 million, and Idaho camera traps more than 1.5 million. The combined size of these datasets supports the reliability of the MegaDetector model.

However, as shown in Figure 5, the original detector only draws a bounding box around the pig in an image rather than extracting the pig from the image. The outputs show red bounding boxes around the pig and the animal confidence percentage. While investigating MegaDetector V3’s implementation, we observed that the tensor graph is generated by loading the model from a local file path. The default confidence threshold is 85%. MegaDetector V3 has only an animal class. Thus, when MegaDetector V3 sees a pig in an image, it perceives the pig as an animal.

Figure 5.

MegaDetector V3 can locate and draw the bounding box on pig regions in an image, but this Megadetector cannot discard the insignificant background region without the modification.

The outputs of MegaDetector V3 consist of a classification, bounding box coordinates, and a confidence score. As shown in Figure 6, instead of displaying bounding boxes, confidence percentages, and the animal classification, we modified MegaDetector V3 to intercept the bounding box, confidence, and classification before the bounding box is drawn. Once the video frames enter our pig detector, it loads the tensor graph, which contains the trained parameters of the pre-trained MegaDetector V3 model. The model produces bounding box coordinates, confidence scores, and class tags, and the detector collects them for each input image. Finally, our pig detector crops and extracts the pig regions from the image after locating the pigs. In this way, we modified the original MegaDetector V3 and used its outputs for our data preprocessor, as shown in Figure 7.

Figure 6.

The structural design of Megadetector V3 demonstrates how we modified the megadetector V3 to create our pig detector.

Figure 7.

Megadetector V3 can mostly discard the unimportant background region after we modified the original Megadetector V3 system.
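The cropping step that turns detector outputs into pig-only images can be sketched as below. This is an illustrative sketch, not the modified MegaDetector code: the function name `crop_detections` and the detection format (a dictionary with a normalized `box` given as `(ymin, xmin, ymax, xmax)` and a `score`) are assumptions made for the example, and the image is a plain list of pixel rows. Only the 85% confidence threshold is taken from the text.

```python
def crop_detections(image, detections, threshold=0.85):
    """Crop every detection whose confidence reaches the threshold.

    image:      list of pixel rows (any pixel type).
    detections: list of dicts with a normalized "box" given as
                (ymin, xmin, ymax, xmax) and a "score" in [0, 1].
                This format is an assumption for the sketch.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    crops = []
    for det in detections:
        if det["score"] < threshold:
            continue                  # low confidence: not treated as a pig
        ymin, xmin, ymax, xmax = det["box"]
        # Convert normalized coordinates to pixel indices, clamped to the image.
        top = max(0, int(round(ymin * height)))
        left = max(0, int(round(xmin * width)))
        bottom = min(height, int(round(ymax * height)))
        right = min(width, int(round(xmax * width)))
        if bottom > top and right > left:
            crops.append([row[left:right] for row in image[top:bottom]])
    return crops


if __name__ == "__main__":
    # 4x4 toy image whose pixel values encode their position.
    image = [[r * 4 + c for c in range(4)] for r in range(4)]
    detections = [
        {"box": (0.25, 0.25, 0.75, 0.75), "score": 0.90},  # kept
        {"box": (0.0, 0.0, 1.0, 1.0), "score": 0.50},      # discarded
    ]
    print(crop_detections(image, detections))
```

Everything outside the retained boxes is dropped, so the background pixels never reach the classifier.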

3.4. SSPD-PIR: PIR Model

After the pig detector removes the most undesirable pixels from input images, the automated recognition classifies IAF, IBF, PAF, and PBF from preprocessed pig images. Xception architecture [9], which stands for the extreme Inception architecture, is a deep CNN architecture that was proposed by François Chollet, the creator of the Keras library, in 2017. Xception is based on the principles of the Inception architecture. Still, it modifies the structure of the Inception modules based on the hypothesis that cross-channel correlations and spatial correlations can be mapped entirely separately. This idea is incorporated into Xception by using depthwise separable convolutions instead of standard convolutions, which allows for a more efficient use of model parameters. In simpler terms, Xception treats each channel in an input separately before mixing up outputs in the next layer, leading to more efficient learning. Therefore, we applied Xception as our proposed architecture to classify the pig’s interactions. From our previous research [13], the Xception architecture shows more enhanced accuracy than VGG [5], Inception [6], and MobileNet [8]. ResNet shows improved accuracy over Xception architecture, but the Xception architecture requires fewer parameters than ResNet [7]. Our loss function to train the Xception’s classifications is cross-entropy loss [41], and it is widely used for categorizing interactions from pig images. The cross-entropy loss function is expressed as

$$L(P, \hat{P}) = -\sum_{i \in C} P(x_i) \log \hat{P}(x_i)$$

where $L$ is the error distance, $P$ is the probability of the true label, and $\hat{P}$ is the probability from the PIR model’s prediction. The desired output $P$ is generally 0 or 1, while $\hat{P}$ is a real number ranging between 0 and 1. $i \in C$ means that $i$ is one of the classifications in the entire set $C$. Once the input image $x$ of class $i$ enters the PIR model, the model predicts the image and produces $\hat{P}(x_i)$. The summation gives the overall error distance across the classifications based on the input’s true label $P$ and predicted label $\hat{P}$.
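A small worked example may help make the cross-entropy loss concrete for the four PIR classes. This is a sketch of the standard categorical cross-entropy, assuming a one-hot true label; the function name `cross_entropy`, the clipping constant `eps`, and the predicted probabilities are illustrative and not taken from the experiments.

```python
import math

CLASSES = ("IAF", "IBF", "PAF", "PBF")


def cross_entropy(true_probs, predicted_probs, eps=1e-12):
    """Categorical cross-entropy: -sum_i P_i * log(P_hat_i).

    eps clips predictions away from zero so the logarithm stays finite;
    it is a numerical convenience, not part of the definition.
    """
    return -sum(
        p * math.log(max(q, eps))
        for p, q in zip(true_probs, predicted_probs)
    )


if __name__ == "__main__":
    true_label = [1.0, 0.0, 0.0, 0.0]          # the image belongs to IAF
    confident = [0.7, 0.1, 0.1, 0.1]           # made-up model outputs
    confused = [0.25, 0.25, 0.25, 0.25]
    print("confident:", round(cross_entropy(true_label, confident), 4))
    print("confused: ", round(cross_entropy(true_label, confused), 4))
```

Because the true label is one-hot, only the predicted probability of the correct class contributes, so the loss shrinks toward zero as that probability approaches 1.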

4. Experimental Analysis

This section shows how poorly managed data samples can score almost perfectly on a test without reflecting practical performance, and how the results differ between raw and preprocessed data samples. The frames extracted from the video clips were resized to several resolutions over the course of the experiments. We initially trained and tested with the smallest images to reduce the computation required by the massive PIR dataset. The F1-score reached 99% even though the images were too small to recognize the pig interaction. We then enlarged the images to 224 pixels in width and height and raised the sampling rate to 10 frames per second, presuming that the larger images carry richer information. Yet the F1-score was 98%, slightly lower than the result obtained with the smaller images.

Experimental Conditions

Our hardware comprised an Intel® Core(TM) i5-10600K CPU @ 4.10 GHz (12 CPUs) with 32 GB of RAM (Intel, Santa Clara, CA, USA) and an NVIDIA GeForce RTX 2070 Super GPU (Santa Clara, CA, USA). We set the number of epochs to 150 and the batch size to 64 due to our hardware’s capacity limitation. The optimization was Adam [42] with a learning rate of , and the activation function used was the rectified linear unit (ReLU) [43]. The L2-regularization [44] was , and the epsilon of the Adam optimizer was . The patience was 50: the training session stopped when the validating loss did not show any improvement over 50 iterations.
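The stopping rule described above can be sketched independently of any deep learning framework. This is a minimal sketch under assumptions: the function name `early_stopping_epoch` is hypothetical, the validation loss is assumed to be checked once per epoch, and the loss values in the example are made up.

```python
def early_stopping_epoch(val_losses, max_epochs=150, patience=50):
    """Return (last_epoch_run, best_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs, or when max_epochs is
    reached. Epochs are counted from zero.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    last_epoch = -1
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        last_epoch = epoch
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break                 # no improvement for `patience` epochs
    return last_epoch, best_epoch


if __name__ == "__main__":
    # Made-up curve: improves for two epochs, then plateaus.
    losses = [1.0, 0.5] + [0.6] * 200
    print(early_stopping_epoch(losses))
```

With a patience of 50 and a limit of 150 epochs, a run whose validation loss stalls early ends well before the epoch limit.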

Wang et al. [45] suggested using the F1-score to evaluate the highly unbalanced dataset distribution that affects the conventional model’s classification test. Some model evaluations could be considered crucial for even a small number of incorrect classifications. The equations are described as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \cdot P \cdot R}{P + R}$$

where $P$, $TP$, $FP$, $R$, $FN$, and $F1$ are precision, true positive, false positive, recall, false negative, and F1-score, respectively. Precision is the number of true positives divided by the sum of true positives and false positives. Recall is the number of true positives divided by the sum of true positives and false negatives.
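Precision, recall, and F1-score can be computed per class directly from a confusion matrix, as the following sketch shows. The function name `per_class_metrics`, the row and column convention (rows are true classes, columns are predicted classes), and the toy matrix are assumptions for illustration; the numbers are not experimental results, and how the per-class scores are averaged into a single F1-score is not specified here.

```python
def per_class_metrics(confusion, labels):
    """Compute precision, recall, and F1 for each class.

    confusion[r][c] counts samples of true class r predicted as class c
    (an assumed convention for this sketch).
    """
    size = len(labels)
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fp = sum(confusion[r][k] for r in range(size) if r != k)
        fn = sum(confusion[k][c] for c in range(size) if c != k)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (
            2 * precision * recall / (precision + recall)
            if precision + recall
            else 0.0
        )
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


if __name__ == "__main__":
    labels = ("IAF", "PAF")
    toy_confusion = [
        [8, 2],   # 8 IAF samples correct, 2 mistaken for PAF
        [1, 9],   # 1 PAF sample mistaken for IAF, 9 correct
    ]
    for label, scores in per_class_metrics(toy_confusion, labels).items():
        print(label, {name: round(value, 4) for name, value in scores.items()})
```

On an unbalanced dataset a class with few samples can have a low F1-score even when overall accuracy is high, which is why the F1-score is reported.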

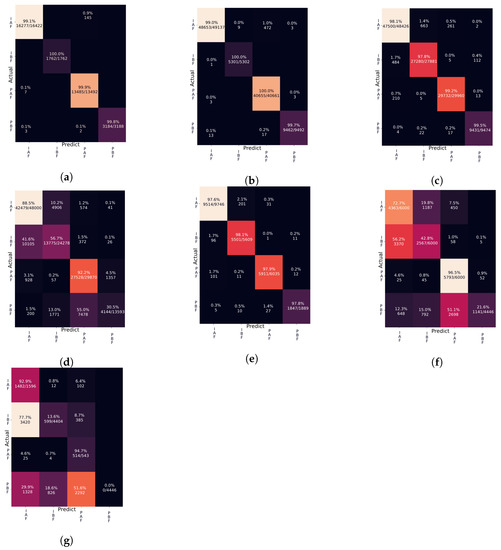

Figure 8 displays the performance of the results after training the simple CNN or Xception architectures. The numbers after the percentage number of each tile mean how the pre-trained architecture correctly identifies testing samples. To illustrate, from Figure 8a, when one of the tiles displays (16,277/16,422) after the percentage number, the pre-trained architecture correctly identified 16,277 out of 16,422 testing samples. The percentage shows how the PIR pre-trained model can accurately classify one single classification without mistakenly classifying the wrong pig interactions. Table 1 summarizes the experimental results from Figure 8a–g.

Figure 8.

The confusion matrix results of different approaches with PIR dataset. (a) Raw image based approach 90:10. (b) Raw image based approach 70:30. (c) Raw image with expanded numbers of dataset (Xception). (d) Raw image with expanded of semi-shuffled dataset (Xception). (e) Fully-shuffled raw images with 256 pixels sized and 1 FPS. (f) Semi-shuffled raw images with 256 pixels sized and 1 FPS. (g) Semi-shuffled pig detected images with 256 pixels sized and 1 FPS.

Table 1.

The summary of experimental results from Figure 8.

As seen in Figure 8a, the simple CNN architecture was trained and tested with the unprocessed dataset without cropping. We initially downsized all the images, as the original image size was too large to train with the vast PIR dataset. We also wished to capture the small size of the pig’s face in an image and inspect the result. The training, validating, and testing datasets were fully shuffled, and training and testing were carried out with unprocessed pig photos. The split ratio of training and testing was 90 to 10. As a result, the F1-score from Table 1 was 99.55%. In Figure 8b, the simple CNN architecture was trained and tested with the unprocessed, resized pig photos, and the split ratio of training and testing was 70 to 30. All training, validating, and testing data groups were fully mixed, and the F1-score from Table 1 was 99.5021%. In Figure 8c, we raised the sampling rate to 10 frames per second to expand the number of samples in the PIR dataset, and the input width and height of the image were expanded to 224 pixels. We changed from the simple CNN architecture to the Xception architecture to improve the accuracy further. The PIR data samples were still mixed across the training, validating, and testing datasets, so the Xception model could become familiar with testing samples that resemble the training samples. The F1-score was 98.45% (Table 1), no higher than before despite the larger PIR dataset and the stronger architecture.

We initially trained and tested with small, downsized images to reduce unnecessary computation, given the massive size of the whole PIR dataset. We observed that the F1-score reached 99% even though each data sample was too small to recognize the pig interaction. Then, we expanded the images to 224 pixels and raised the sampling rate to 10 frames per second to collect more pig interaction images. Despite the larger images, the F1-score was 98%, lower than in our previous experimental tests with the smaller images. The larger images carry richer information than the smaller ones and can potentially improve PIR performance.

Figure 8d shows the results after mixing the samples only within the training and validating datasets. The testing data, in contrast, were split off and not randomly mixed with pig images from the training or validating data groups. We trained the Xception architecture with this semi-shuffled PIR dataset. As a result, the F1-score in Table 1 dropped significantly to 74.21%. We therefore found that semi-shuffling the PIR dataset strongly affects the measured performance of the Xception architecture, since the testing images were completely different from those in the training and validating groups. As shown in Figure 8e, we increased the image size to 256 pixels but reduced the number of captured frames to 1 frame per second, so that neighboring images would be less similar. The F1-score was still 97.83%, as shown in Figure 8e and Table 1. As shown in Figure 8f, we stopped sharing images from the testing dataset, so the Xception architecture could not become familiar with the new data samples in the testing dataset. After applying semi-shuffling to the PIR dataset, the F1-score dropped significantly to 59.56%, as shown in Table 1.
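The difference between the fully shuffled and semi-shuffled settings compared above can be sketched as follows. This is an illustration under stated assumptions rather than the exact procedure used in our experiments: frames are represented as hypothetical `(video_id, frame_index)` pairs, and the testing group is held out by whole video clip, which is one simple way to keep testing samples distinct from training samples.

```python
import random


def fully_shuffled_split(frames, test_fraction, seed=0):
    """Shuffle all frames together, then cut off a test portion."""
    rng = random.Random(seed)
    shuffled = list(frames)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def semi_shuffled_split(frames, test_videos, seed=0):
    """Hold out whole videos for testing; shuffle only the train/val frames."""
    test = [f for f in frames if f[0] in test_videos]
    train_val = [f for f in frames if f[0] not in test_videos]
    random.Random(seed).shuffle(train_val)
    return train_val, test


def shared_videos(train, test):
    """Video ids that contribute frames to both groups (a leakage indicator)."""
    return {v for v, _ in train} & {v for v, _ in test}


# Hypothetical dataset: 5 video clips with 20 sequential frames each.
frames = [(video, idx) for video in range(5) for idx in range(20)]

full_train, full_test = fully_shuffled_split(frames, test_fraction=0.25)
semi_train, semi_test = semi_shuffled_split(frames, test_videos={4})

print("fully shuffled, shared videos:", shared_videos(full_train, full_test))
print("semi-shuffled, shared videos:", shared_videos(semi_train, semi_test))
```

With full shuffling, near-identical neighboring frames from the same clip end up on both sides of the split, which is the overlap that inflates the scores in Figure 8a–c,e. With the semi-shuffled split, no clip contributes to both groups.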

As shown in Figure 8g, we applied semi-shuffling to the PIR dataset and enabled the pig detector before training the Xception architecture. The F1-score in Table 1 shows the worst performance, 15.0215%. Yet our Xception model classified more samples correctly than it did with semi-shuffling alone, without the pig detector. On the other hand, the IBF and PBF results were unsatisfactory, and the PAF results showed a minimal decrease. Some images showing a single pig belong to the PAF or PBF classes, which confuses the model. The pig detector may not perform perfectly, but it can refine the pig photos faster than manual preprocessing. Although the F1-score degrades by 84.5%, we can unveil the true performance and generate unbiased experimental evaluations, and the IAF shows a 20.2% improvement between Figure 8f and Figure 8g. As shown in Figure 8g, the IAF and PAF accuracies were 92.9% and 94.7%, respectively. Thus, we can claim that the model clearly distinguishes IAF from PAF. PAF and PBF images contain two pigs, while IAF and IBF images contain only one. In other words, our model learns to perceive whether an image contains one pig or two. The classification of before and after feeding can be improved with further research. The low accuracy of Xception on the IBF class arises because the after-feeding and before-feeding classes are hardly distinguishable to the human eye.

5. Discussion

In our study, we found that the sequential shuffling of training and testing data derived from video clips may obstruct an accurate evaluation. Our experimental results elucidate the link between the performance of emotion classification and the refinement process within our Semi-Shuffle Pig Detector—Pig Interaction Recognition (SSPD-PIR) system. While the classification of isolated and paired instances becomes more discernible, the delineation between ‘after’ and ‘before’ feeding remains nebulous throughout our SSPD-PIR system’s process. We recognize that the classification of isolation after feeding (IAF), isolation before feeding (IBF), paired after feeding (PAF), and paired before feeding (PBF) does not provide a direct measure of pig emotions. However, in the existing classifications, PAF represents the most positive emotion, while IBF indicates the most negative. To advance real-time pig welfare, further research into the reliability of pig feeding conditions is indispensable. We agree that our model is learning to differentiate between pairs and individual animals. Still, it is important to note that this is only a part of the process. The ultimate goal was not solely to classify images but to leverage this differentiation as a stepping stone towards detecting interactive differences.

Concerning the practical applicability of our classifier, our primary goal was to enhance the precision of interaction recognition in pigs. These advances have substantial implications for animal welfare, facilitating the identification and mitigation of stress and distress without time constraints. Moreover, the pig husbandry industry requires production efficiency, also without time limitations. As global pork demand increases, the conventional approach of hiring additional farmworkers may not suffice to meet production goals. Hence, our research underscores the necessity of innovative solutions like ours for sustainable and humane growth.

5.1. Enhancing Objectivity: The Role of Semi-Shuffling in Reducing Bias in Experimental Outcomes

The semi-shuffling technique is a method used in machine learning and statistics to reduce bias in the results. The technique is particularly useful in situations where the data may have some inherent order or structure that could potentially introduce bias into the analysis.

Data Shuffling: In a typical machine learning process, data are often shuffled before they are split into training and test sets. This is carried out to ensure that any potential order in the data does not influence the model. For example, if you are working with a time series dataset and you do not shuffle the data, your model might simply learn to predict the future based on the past, which is not what you want if you are trying to identify underlying patterns or relationships in the data.

Semi-Shuffling: However, in some cases, completely shuffling the data might not be ideal. For example, if you are working with time series data, completely shuffling the data would destroy the temporal relationships in the data, which could be important for your analysis. This is where the semi-shuffling technique comes in. Instead of completely shuffling the data, you only shuffle it within certain windows or blocks. This allows you to maintain the overall structure of the data while still introducing some randomness to reduce bias.
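The window-based variant described above can be sketched in a few lines. This is a minimal illustration, assuming a fixed `block_size` (a hypothetical parameter) and integer frame indices standing in for real samples: items are shuffled only inside each block, so the order of the blocks themselves is preserved.

```python
import random


def block_shuffle(items, block_size, seed=0):
    """Shuffle items only within consecutive blocks of `block_size`."""
    rng = random.Random(seed)
    out = []
    for start in range(0, len(items), block_size):
        block = list(items[start:start + block_size])
        rng.shuffle(block)  # randomness is confined to this block
        out.extend(block)
    return out


frames = list(range(12))                      # stand-in for 12 sequential frames
shuffled = block_shuffle(frames, block_size=4)
print(shuffled)
```

Every element stays inside its original block, so the coarse temporal structure survives while the local order is randomized.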

The semi-shuffling technique contributes to less biased results by ensuring that the model is not overly influenced by any potential order or structure in the data. By introducing some randomness into the data, the technique helps to ensure that the model is learning to identify true underlying patterns or relationships, rather than simply memorizing the order of the data.

It is important to note that, while the semi-shuffling technique can help to reduce bias, it is not a silver bullet. It is just one tool in a larger toolbox of techniques for reducing bias in machine learning and statistical analysis. Other techniques might include things like cross-validation, regularization, and feature selection, among others.

5.2. SSPD-PIR Method’s Promising Impact on Pig Well-Being and the Challenges of Its Real-World Implementation

The SSPD-PIR method is poised to significantly enhance pig welfare in real-world settings by providing a more nuanced understanding of pig interactions. In leveraging deep learning technology, the SSPD-PIR can reliably detect and interpret pig interactions, thereby allowing for timely identification of distress or discomfort in pigs. This ability not only aids in improving the animals’ well-being but also aids farmers in optimizing their livestock management practices, leading to increased productivity and improved animal welfare standards.

Implementing the SSPD-PIR method could serve as a revolutionary tool in early disease detection and stress management, improving the pigs’ quality of life. An accurate understanding of animal interaction could inform and influence farmers’ decisions regarding feeding times, living conditions, and social interactions among the animals, thereby enabling more humane and considerate treatment.

However, several challenges may arise during the implementation of the SSPD-PIR method in field settings. Firstly, the quality of data inputs is paramount for the successful application of this method. The video footage must be clear and free of environmental noise to ensure accurate recognition of pig interactions. Achieving this quality consistently in diverse farm settings might prove challenging due to varying lighting conditions, possible obstruction of camera view, or pig movements.

Secondly, the system’s effective integration into current farm management practices may be another hurdle. Farmers and livestock managers would need training to understand the data generated by the SSPD-PIR method and apply it to their day-to-day decision-making. They may need to adapt to new technology, which can take time and resources.

Lastly, there are technical considerations tied to the processing power required to run sophisticated algorithms, the requirement of stable internet connectivity for cloud-based analysis, and the potential for high initial setup costs. Addressing these challenges would be critical to making this advanced technology widely accessible and effective in enhancing pig welfare across diverse farming operations. Future research could focus on optimizing the SSPD-PIR system for more seamless integration into existing farming operations and making it more accessible for different scales of farming enterprises.

5.3. Deciphering Relevance: Criteria for Eliminating Extraneous Elements in Video Data Analysis

The criteria used for determining irrelevant video frames, extraneous background pixels, and unrelated subjects in our study were based on multiple factors, primarily focused on the relevancy to pig interaction recognition.

Irrelevant Video Frames: Frames were deemed irrelevant if they did not contain any information useful for understanding pig interactions. For instance, frames where pigs were not visible, or their interactive indicators, such as facial expressions or body postures, were obstructed or not discernible were classified as irrelevant. Similarly, frames in which pigs were asleep or inactive might not contribute much towards interaction recognition and thus could be categorized as irrelevant.

Extraneous Background Pixels: Pixels were deemed extraneous if they belonged to the background or objects within the environment that did not contribute to pig interaction recognition. This includes elements such as the pig pen structure, feeding apparatus, or any other non-pig related objects in the frame. The goal here was to focus the machine learning algorithm’s attention on the pigs and their interactions, minimizing noise or distraction from irrelevant elements in the environment.

Unrelated Subjects: Any object or entity within the frame that was not the pig whose interactions were being monitored was considered an unrelated subject. This could include other animals, farm personnel, or any moving or stationary object in the background that could potentially distract from or interfere with the accurate recognition of pig interactions.

The criteria for determining irrelevance in each of these cases were defined based on the specific task at hand, i.e., interaction recognition in pigs, and the unique attributes of the data, including the specific conditions of the farm environment, the pigs’ behavior, and the quality and angle of the video footage. These parameters can be fine-tuned and adapted as needed to suit different situations or requirements.
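As a rough illustration of how these criteria could be applied, the sketch below drops frames in which no pig was detected and crops the remaining frames to the detected region. The data layout is an assumption made for this example: each image is a plain list of pixel rows, and each detection is a hypothetical `(top, left, bottom, right)` box with exclusive bottom and right edges. The pig detector itself is not shown.

```python
def crop_to_box(image, box):
    """Keep only the pixels inside box = (top, left, bottom, right)."""
    top, left, bottom, right = box
    height = len(image)
    width = len(image[0]) if height else 0
    # Clamp the box to the image so out-of-range detections do not fail.
    top, bottom = max(0, top), min(height, bottom)
    left, right = max(0, left), min(width, right)
    return [row[left:right] for row in image[top:bottom]]


def refine_frames(frames_with_boxes):
    """Drop frames without a detection; crop the others to their box."""
    refined = []
    for image, box in frames_with_boxes:
        if box is None:          # irrelevant frame: no pig visible
            continue
        refined.append(crop_to_box(image, box))
    return refined


# Hypothetical 4x6 "image" whose pixel value encodes (row, column).
image = [[r * 10 + c for c in range(6)] for r in range(4)]
refined = refine_frames([(image, (1, 2, 3, 5)), (image, None)])
print(refined)
```

Only the first frame survives, reduced to the region inside its box; the background pixels outside the box and the frame without a detection are discarded.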

5.4. Outshining Competition: Benchmarking the Xception Architecture’s Competitive Edge in Lightweight Design

The Xception architecture, renowned for its lightweight design, has proven to be highly competitive in various applications. By leveraging depth-wise separable convolutions, Xception promotes efficiency and reduces computational complexity, enabling faster, more efficient training and deployment even on less powerful hardware.

When compared to other architectures, Xception’s unique advantages come to the fore. For example, traditional architectures like VGG and AlexNet, while powerful, are comparatively heavier, requiring more computational resources and often leading to longer training times. On the other hand, newer architectures like ResNet and Inception, while they do address some of these challenges, may not match the efficiency and compactness offered by Xception.

Furthermore, Xception’s design fundamentally differs from other models, allowing it to capture more complex patterns. While most architectures use standard convolutions, Xception replaces them with depth-wise separable convolutions, which allows it to capture spatial and channel-wise information separately. This distinction enables Xception to model more complex interactions with fewer parameters, enhancing its performance on a wide range of tasks.
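The parameter saving from depth-wise separable convolutions can be checked with simple arithmetic. A standard convolution with a k × k kernel, C_in input channels, and C_out output channels has k·k·C_in·C_out weights, whereas the separable version has k·k·C_in weights for the depth-wise step plus C_in·C_out for the 1 × 1 point-wise step. The sketch below ignores bias terms, and the layer sizes are illustrative values rather than figures taken from the Xception model.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Weights in a depth-wise k x k step plus a 1 x 1 point-wise step."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Illustrative layer: 3 x 3 kernel, 128 input channels, 256 output channels.
standard = standard_conv_params(3, 128, 256)
separable = separable_conv_params(3, 128, 256)
print(standard, separable, round(standard / separable, 2))
```

For this layer the separable form needs 33,920 weights instead of 294,912, roughly an 8.7-fold reduction.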

However, it is also important to mention that the choice of architecture depends on the specific task and the available data. For tasks involving more complex data or demanding higher accuracy, more powerful, albeit resource-intensive models like EfficientNet or Vision Transformer may be more appropriate. Conversely, for tasks requiring real-time performance or deployment on edge devices, lightweight models like MobileNet or Xception would be more suitable.

Looking ahead, future research could explore hybrid models that combine the strengths of various architectures or investigate more efficient architectures using techniques like neural architecture search. In the realm of animal interaction recognition, specifically, there is much potential for experimenting with novel architectures that can capture temporal patterns in behavior, which is a crucial aspect of interaction.

5.5. Translating Theory into Practice: Unveiling the Real-World Impact and Challenges of Novel Methods in Pig Welfare Enhancement

This research is poised to fundamentally reshape the landscape of livestock welfare, specifically regarding pigs, by employing the innovative Semi-Shuffle-Pig Detector (SSPD) method within the pig interaction recognition (PIR) system. The practical implications are far-reaching and multidimensional.

Foremost, the enhanced accuracy in recognizing and interpreting pig interactions, enabled by the SSPD-PIR method, lays a solid foundation for improved pig welfare. By more accurately interpreting pig interactions, farmers and animal welfare specialists will be better able to identify signs of distress, pain, or discomfort in pigs. This early detection and intervention can prevent chronic stress and its related health issues, leading to better physical health and quality of life for the animals.

In addition, by understanding the interactive states of pigs, we can create more harmonious living environments that cater to their interactive and social needs, thus reducing the likelihood of aggressive behavior and fostering healthier social dynamics. This not only improves the animals’ overall welfare but also potentially increases productivity within the industry, as stress and poor health can negatively impact growth and reproduction.

Implementing the SSPD-PIR method does, however, present potential challenges. One such challenge is the need for substantial investment in technology, infrastructure, and training. As the SSPD-PIR method employs advanced machine learning algorithms and neural networks, it requires both the hardware capable of running these systems and personnel with the necessary technical knowledge to operate and maintain them.

Furthermore, as with any AI-based system, there may be ethical considerations and regulatory requirements to be met. Transparency in how data are collected, stored, and utilized will be essential, and safeguards should be in place to ensure privacy and ethical use of the technology.

Lastly, while the SSPD-PIR system has shown promise in research settings, real-world applications often present unforeseen challenges, such as environmental variations, diverse pig behaviors, and operational difficulties in larger scale farms. Continuous refinement and optimization of the SSPD-PIR method will be crucial in navigating these potential issues and ensuring its successful implementation in the field. While challenges are inherent in any new technology deployment, the potential benefits to pig welfare, agricultural efficiency, and productivity offered by the SSPD-PIR method merit careful consideration and exploration.

6. Conclusions

In closing, our research underscores the substantial impact of incorporating semi-shuffling and pig detection in enhancing the performance of pig interaction recognition (PIR) systems, bringing the true classification capabilities into sharp relief. A cornerstone of building a resilient PIR system is the meticulous examination of each data sample by researchers specializing in pig behavior, ensuring that pig images are free of extraneous content and labeling inaccuracies. Utilizing suboptimal strategies in handling the PIR dataset can precipitate skewed outcomes, with falsely ideal performances often deceiving researchers. Despite the observed dip in performance between after-feeding and before-feeding conditions, our system showcased substantial advancements in classifying the isolation after feeding (IAF) and paired after feeding (PAF) categories. These insights shed light on the areas that necessitate further exploration and meticulous management.

Looking ahead, the adoption of these methodologies for other livestock species, such as cattle and poultry, presents an intriguing avenue for future research. It may necessitate the tailoring of our current techniques to account for unique behaviors and interactive cues specific to different animal species. Furthermore, advancements in machine learning and artificial intelligence can be leveraged to refine the PIR system’s accuracy, offering potential areas of exploration in the broader field of animal interaction recognition. The cardinal aim of our experimental findings is to unveil misrepresented evaluations, thereby underscoring the indispensability of accurate preprocessing of pig-related datasets. In this way, we hope to facilitate a shift in understanding, from misconceptions to a more nuanced view of the realities in animal interaction recognition research.

Author Contributions

Writing—original draft, J.H.K., A.P., S.J.C., S.N. and D.S.H.; Writing—review & editing, S.N. and D.S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education under Grant 2021R1A6A1A03043144.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).