Navigation Line Extraction Method for Broad-Leaved Plants in the Multi-Period Environments of the High-Ridge Cultivation Mode

Abstract

1. Introduction

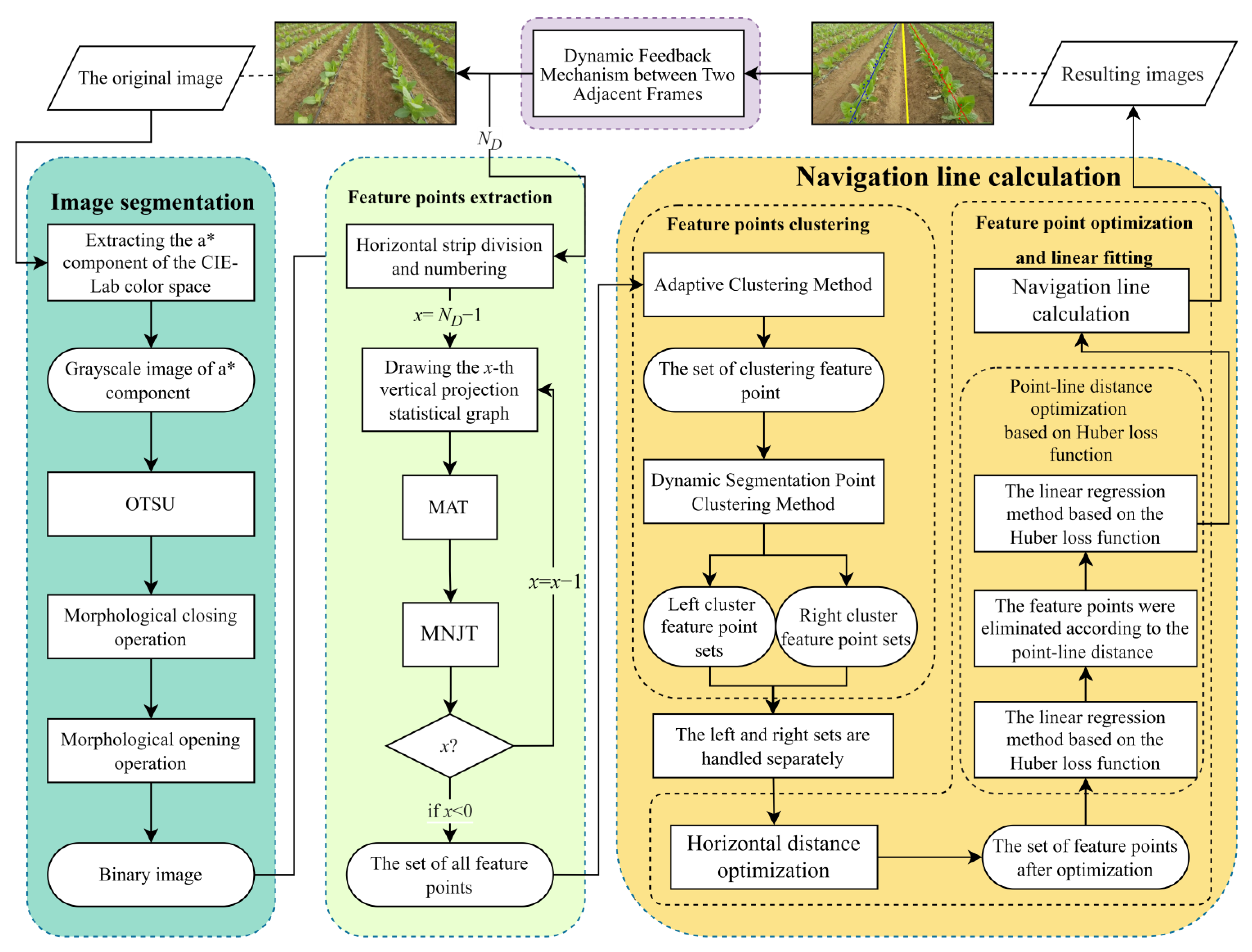

2. Materials and Methods

2.1. Image Acquisition

2.2. Image Segmentation

2.2.1. Image Grey Processing and Binarisation

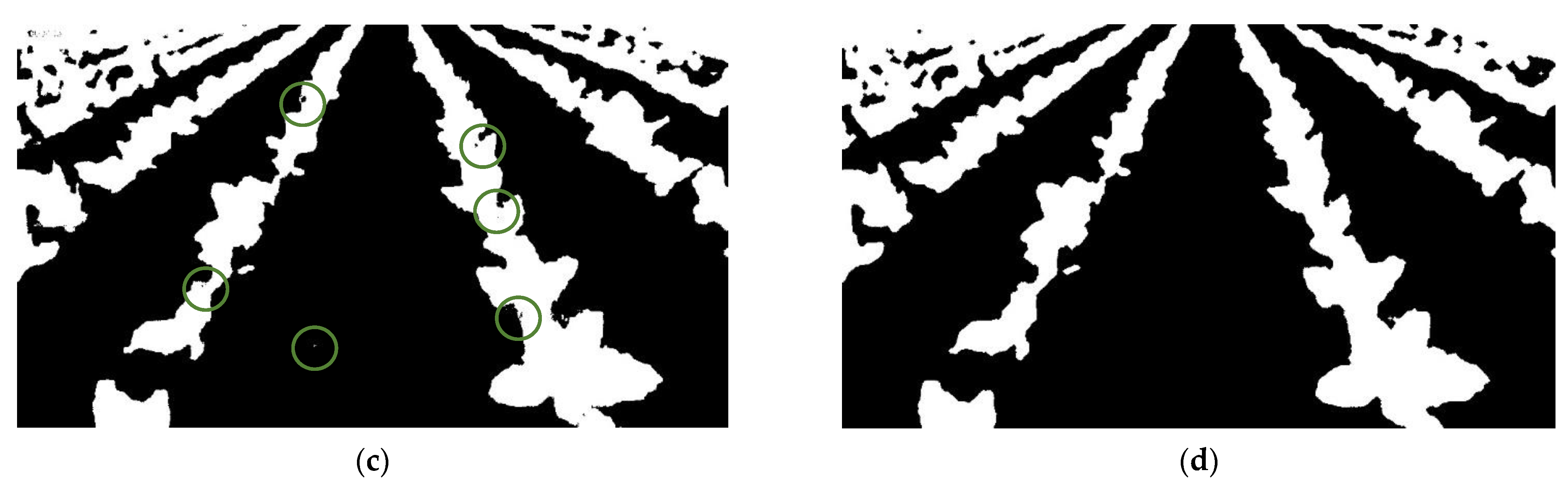

2.2.2. Morphological Operations

2.3. Feature Points Extraction

- (1)

- The number of horizontal strips ND for the current frame is determined by the dynamic feedback mechanism between two adjacent frames. The image is divided from bottom to top into ND horizontal strips of equal height, and the value of ND is computed at the end of processing the previous frame. The rule of this mechanism is described in Section 2.5.

- (2)

- The top region is removed and the horizontal strip height D is calculated from the number of horizontal strips ND. Because the crop rows near the top of the image are crowded together and cannot accurately represent the crop row locations, all pixels in the top region of the image are set to 0. These zeroed points are denoted as P(i, j), where i = 0, 1, 2, ..., W and j = 0, 1, 2, ..., 100. The horizontal strip height D is calculated according to Equation (2).

- (3)

- The ND horizontal strips are marked out in the binary image and numbered from bottom to top as segment 0, segment 1, segment 2, ..., segment ND − 1. In the image coordinate system u − v shown in Figure 3, the x-th horizontal strip has upper boundary UppB = H − (x + 1)D, lower boundary LowB = H − xD, left boundary LefB = 0, and right boundary RigB = W. A strip from the original image is shown in Figure 3a, and the strip from the binary image is shown in Figure 3b.

- (4)

- The vertical projection curve of the x-th horizontal strip, denoted as the x-th vertical projection curve, is calculated. The x-th horizontal strip is itself a binary image, and f(i, j) represents the value of point (i, j) in it. Starting from x = ND − 1, the process finds the points with f(i, j) = 255 in the x-th horizontal strip and accumulates them column by column along the u axis to obtain the vertical projection curve shown in Figure 3c. Let p(i) be the number of pixels with a value of 255 in the i-th column of the binary horizontal strip. p(i) is calculated according to Equation (3), where i = 0, 1, 2, …, u and x = ND − 1, …, 2, 1, 0.

- (5)

- The average projected value avgp(x) of p(i) over all columns of the x-th vertical projection curve is calculated. Larger, higher peak areas of the curve are identified as crop characteristic areas, and each crop characteristic area is reduced to a single point, the crop feature point. avgp(x) is calculated as follows:

- (6)

- PS and ths (defined below) are obtained, a threshold m(x) is calculated according to Equation (5), and the ordinate value Yx of each feature point in the x-th horizontal strip is then calculated according to Equation (6). The image area threshold ths is determined through repeated experiments, and PS is the ratio of the number of pixels with a grayscale value of 255 in the binary horizontal strip to the total number of pixels in that strip. In the x-th vertical projection curve, a horizontal line with ordinate m(x) is drawn across the curve, as shown in Figure 3c. In the seedling stage, the crop leaves appear small in the image and there are relatively few weeds, so an appropriate m(x) effectively prevents multimodal peaks from distorting the feature points. When the crop is mature, a larger m(x) filters out disturbances caused by crop leaves and weeds. Together, these steps form a method for determining the ordinate values of feature points based on an area threshold (hereafter ‘MAT’). m(x) is calculated as follows:

- (7)

- p(i) is compared with m(x), and the x-th vertical projection curve is simplified according to Equation (7), yielding the thresholded (simplified) vertical projection curve shown in Figure 3d.

- (8)

- The abscissa value Xx of each feature point in the x-th horizontal strip is calculated. Each run of consecutive columns with B(i) = 1 is extracted, and the abscissa of the corresponding feature point is the mean of the u coordinates in that run. The noise judgment threshold thn serves as the minimum run width and filters out interference from weeds, branches, and leaves; its value is determined experimentally. If the number of elements in a run is smaller than thn, the run is judged to be noise and discarded. The abscissa of the feature point is calculated as follows:

- (9)

- The feature point set of the x-th horizontal strip, SET_FP = {(p_mid0, Yx), (p_mid1, Yx), (p_mid2, Yx), …, (p_mid(Q−1), Yx)}, is output, where p_midq is the abscissa of the q-th feature point and Q is the number of feature points in the x-th horizontal strip.

- (10)

- x is then decremented (x = x − 1), and steps (4)–(9) are repeated until x < 0, at which point the feature point extraction module stops.
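The feature point extraction steps (1)–(10) can be sketched in Python as follows. This is a minimal, hypothetical illustration, not the authors' implementation. Several equations are not reproduced in this text, so the sketch makes explicit assumptions: Equation (2) is assumed to divide the rows below the zeroed top region (rows 0–100) evenly, giving D = (H − 101) // ND; Equation (4) is assumed to be the mean of p(i) over all columns; Equation (7) is assumed to set B(i) = 1 where p(i) > m(x); and Yx is assumed to be the vertical centre of the strip. Because Equation (5) is unavailable, m(x) is supplied by the caller through a hypothetical `threshold` callable that receives avgp(x) and PS. The function names are illustrative.

```python
from typing import Callable, Dict, List, Tuple

Image = List[List[int]]  # binary image, img[v][u] with values 0 or 255


def runs_of_ones(b: List[int]) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) column ranges of consecutive 1s in b."""
    runs: List[Tuple[int, int]] = []
    start = None
    for u, val in enumerate(b):
        if val == 1 and start is None:
            start = u
        elif val == 0 and start is not None:
            runs.append((start, u - 1))
            start = None
    if start is not None:
        runs.append((start, len(b) - 1))
    return runs


def extract_feature_points(
    img: Image,
    nd: int,
    thn: int,
    threshold: Callable[[float, float], float],
    top_rows: int = 101,
) -> Dict[int, List[Tuple[float, int]]]:
    """Return {strip index x: [(Xx, Yx), ...]} for strips x = nd-1 .. 0."""
    h, w = len(img), len(img[0])
    img = [row[:] for row in img]  # work on a copy of the input
    # Step (2): zero the top region (rows 0..100 in the text).
    for j in range(min(top_rows, h)):
        img[j] = [0] * w
    d = (h - top_rows) // nd  # ASSUMED form of Eq. (2)
    if d <= 0:
        raise ValueError("image too short for nd strips")
    result: Dict[int, List[Tuple[float, int]]] = {}
    for x in range(nd - 1, -1, -1):  # steps (4)-(10), top strip first
        upp_b, low_b = h - (x + 1) * d, h - x * d  # strip rows [upp_b, low_b)
        # Step (4): p(i) = number of 255-valued pixels in column i.
        p = [sum(1 for j in range(upp_b, low_b) if img[j][i] == 255)
             for i in range(w)]
        avgp = sum(p) / w  # step (5), ASSUMED Eq. (4): mean over columns
        ps = sum(p) / (w * d)  # PS: white-pixel area ratio of the strip
        m = threshold(avgp, ps)  # caller-supplied stand-in for Eq. (5)
        b = [1 if pi > m else 0 for pi in p]  # step (7), ASSUMED Eq. (7)
        y = (upp_b + low_b) // 2  # ASSUMED Yx: vertical centre of the strip
        # Steps (8)-(9): drop runs narrower than thn; Xx = mean column of run.
        result[x] = [((s + e) / 2, y) for s, e in runs_of_ones(b)
                     if e - s + 1 >= thn]
    return result
```

For example, `threshold=lambda avgp, ps: avgp` keeps every column whose projection exceeds the strip average; the MAT threshold of Equation (5) would additionally use PS and ths.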

2.4. Navigation Line Calculation

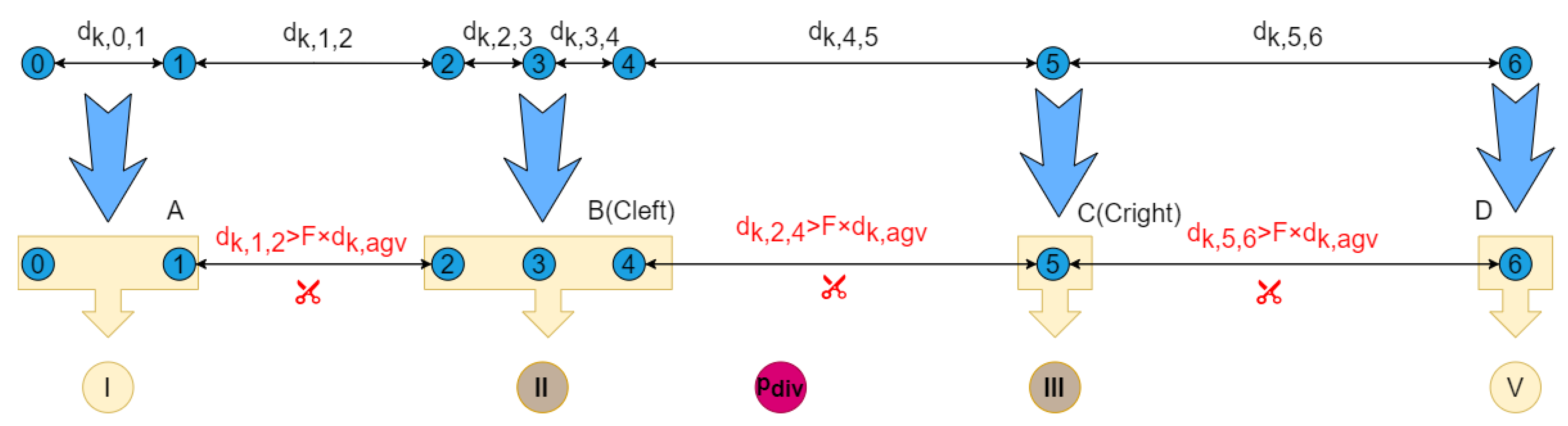

2.4.1. Feature Points Clustering

- (1)

- In the clustering process, the horizontal strips from the feature point extraction step are traversed one at a time from bottom to top, and within each strip the feature points are traversed from left to right. The number of feature points in the K-th horizontal strip is QK, and PK,m (m = 0, 1, 2, …, nK) denotes the m-th feature point in the K-th horizontal strip. The distance between the m-th and (m + 1)-th feature points in the K-th horizontal strip is denoted dK,m,(m + 1), where m = 0, 1, 2, …, nK − 1, and the average distance between all adjacent feature points in the same strip is denoted dK,avg, as shown in Equation (9). As shown in Figure 4, a clustering threshold thdiv is compared with the distance dK,m,(m + 1) between each pair of adjacent feature points. For the feature points in the K-th horizontal strip, whenever dK,m,(m + 1) > thdiv, the value of m is recorded, and the recorded m values divide the feature points into several feature point sets. thdiv and dK,avg are calculated as follows, where F is a coefficient used to calculate thdiv:

- (2)

- A segmentation midpoint pdiv is established. Using pdiv, the adjacent feature point sets on its left and right sides (sets B and C in Figure 4, respectively) are obtained; the left set is denoted Cleft and the right set Cright. For the 0-th horizontal strip, pdiv,0 is W/2 rounded to an integer. From the first horizontal strip to the last, the pdiv of the current horizontal strip is set to the mean of the set formed from the pdiv values of all previous horizontal strips, and the split midpoint of the K-th horizontal strip is denoted pdiv,K. The calculation of Equation (11) is as follows:
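The splitting rule of step (1) and the selection of Cleft and Cright in step (2) can be illustrated with the sketch below. It assumes thdiv = F · dK,avg (the text names F as the coefficient used to calculate thdiv, but the exact equation is not reproduced here), measures the distance between adjacent feature points of one strip as the difference of their abscissae (they share the ordinate Yx), and locates each feature point set by the mean abscissa of its points. pdiv is passed in directly; the rule for updating it from previous strips is not implemented. The function names are hypothetical.

```python
from typing import List, Optional, Tuple


def split_clusters(xs: List[float], f: float) -> List[List[float]]:
    """Split the sorted abscissae of one strip wherever a gap exceeds thdiv."""
    if len(xs) < 2:
        return [list(xs)] if xs else []
    gaps = [xs[m + 1] - xs[m] for m in range(len(xs) - 1)]  # d_{K,m,(m+1)}
    d_avg = sum(gaps) / len(gaps)  # d_{K,avg}
    th_div = f * d_avg  # ASSUMED form: thdiv = F * d_{K,avg}
    clusters: List[List[float]] = []
    current = [xs[0]]
    for m, gap in enumerate(gaps):
        if gap > th_div:  # record m: a new set starts at point m + 1
            clusters.append(current)
            current = []
        current.append(xs[m + 1])
    clusters.append(current)
    return clusters


def _centre(c: List[float]) -> float:
    """Mean abscissa of a feature point set (ASSUMED position measure)."""
    return sum(c) / len(c)


def adjacent_sets(
    clusters: List[List[float]], p_div: float
) -> Tuple[Optional[List[float]], Optional[List[float]]]:
    """Return (Cleft, Cright): the sets nearest to p_div on each side."""
    left = [c for c in clusters if _centre(c) < p_div]
    right = [c for c in clusters if _centre(c) >= p_div]
    c_left = max(left, key=_centre) if left else None
    c_right = min(right, key=_centre) if right else None
    return c_left, c_right
```

With abscissae [10, 12, 14, 40, 42, 80, 83] and F = 1.0, the average gap is about 12.2, so the gaps of 26 and 38 split the strip into three sets; with pdiv = 50, the sets centred at 41 and 81.5 become Cleft and Cright.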

2.4.2. Feature Point Optimisation and Linear Fitting

- (1)

- Horizontal distance optimisation

- (2)

- Point-line distance optimisation based on the Huber loss function
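Only the headings of these two optimisation steps survive in this version of the text. As a generic illustration of fitting a crop row line under the Huber loss, the sketch below fits x = a·y + b (abscissa as a function of ordinate, which suits near-vertical crop rows) by iteratively reweighted least squares. It is not the authors' HUBERP procedure: it uses horizontal residuals rather than point-line distances (the perpendicular distance equals the horizontal residual scaled by 1/√(1 + a²)), and the Huber parameter `delta = 2.0` pixels, the iteration limit, and the function names are assumptions.

```python
from typing import List, Optional, Tuple


def huber_weight(r: float, delta: float) -> float:
    """IRLS weight of the Huber loss: 1 inside delta, delta/|r| outside."""
    a = abs(r)
    return 1.0 if a <= delta else delta / a


def fit_line_huber(
    points: List[Tuple[float, float]],
    delta: float = 2.0,
    iters: int = 50,
    tol: float = 1e-9,
) -> Tuple[float, float]:
    """Fit x = a*y + b to (x, y) image points under the Huber loss."""
    w = [1.0] * len(points)  # first pass is ordinary least squares
    prev: Optional[Tuple[float, float]] = None
    a = b = 0.0
    for _ in range(iters):
        # Weighted normal equations for x = a*y + b.
        sw = sum(w)
        sy = sum(wi * py for wi, (px, py) in zip(w, points))
        sx = sum(wi * px for wi, (px, py) in zip(w, points))
        syy = sum(wi * py * py for wi, (px, py) in zip(w, points))
        sxy = sum(wi * px * py for wi, (px, py) in zip(w, points))
        den = sw * syy - sy * sy
        if den == 0:
            raise ValueError("points must span more than one image row")
        a = (sw * sxy - sy * sx) / den
        b = (sx - a * sy) / sw
        if prev is not None and abs(a - prev[0]) < tol and abs(b - prev[1]) < tol:
            break
        prev = (a, b)
        # Reweight: points far from the current line get less influence.
        w = [huber_weight(px - (a * py + b), delta) for px, py in points]
    return a, b
```

With a very large `delta`, every weight stays at 1 and the fit reduces to ordinary least squares, which gives a convenient baseline for comparing how strongly a single outlying feature point pulls the line.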

2.4.3. Calculation of Navigation Lines Based on Fitted Straight Lines

2.5. Dynamic Feedback Mechanism between Two Adjacent Frames

- (1)

- The algorithm initialises a variable NUMBER, representing the number of horizontal strips, to 30 for the first frame.

- (2)

- After the image processing described above, the numbers of left feature points (num_left) and right feature points (num_right) in the current frame are obtained.

- (3)

- The mean of num_left and num_right is computed and denoted as avg_feature.

- (4)

- The number of horizontal strips into which the next frame is to be divided (num_next) is calculated using the feedback mechanism in Equation (20). In each round, NUMBER is set to num_next, and processing of the next frame begins, dividing it into NUMBER horizontal strips. This loop continues until a termination command is issued.
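The frame-to-frame loop of steps (1)–(4) can be sketched as a small stateful object. Equation (20) is not reproduced in this text, so the update rule is supplied by the caller as a hypothetical `rule(avg_feature, number)` function; the class name and this signature are illustrative assumptions. Only the initial value NUMBER = 30 and the averaging of num_left and num_right come from the text.

```python
from typing import Callable


class StripCountFeedback:
    """Carries the strip count NUMBER from one frame to the next."""

    def __init__(self, rule: Callable[[float, int], int], initial: int = 30):
        self.rule = rule  # HYPOTHETICAL stand-in for Eq. (20)
        self.number = initial  # NUMBER = 30 for the first frame

    def update(self, num_left: int, num_right: int) -> int:
        """Compute num_next from this frame's feature counts and store it."""
        avg_feature = (num_left + num_right) / 2  # step (3)
        num_next = self.rule(avg_feature, self.number)  # step (4)
        self.number = num_next  # NUMBER = num_next for the next frame
        return self.number
```

In a processing loop, each frame would be divided into `feedback.number` strips, after which `feedback.update(num_left, num_right)` sets the strip count for the following frame.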

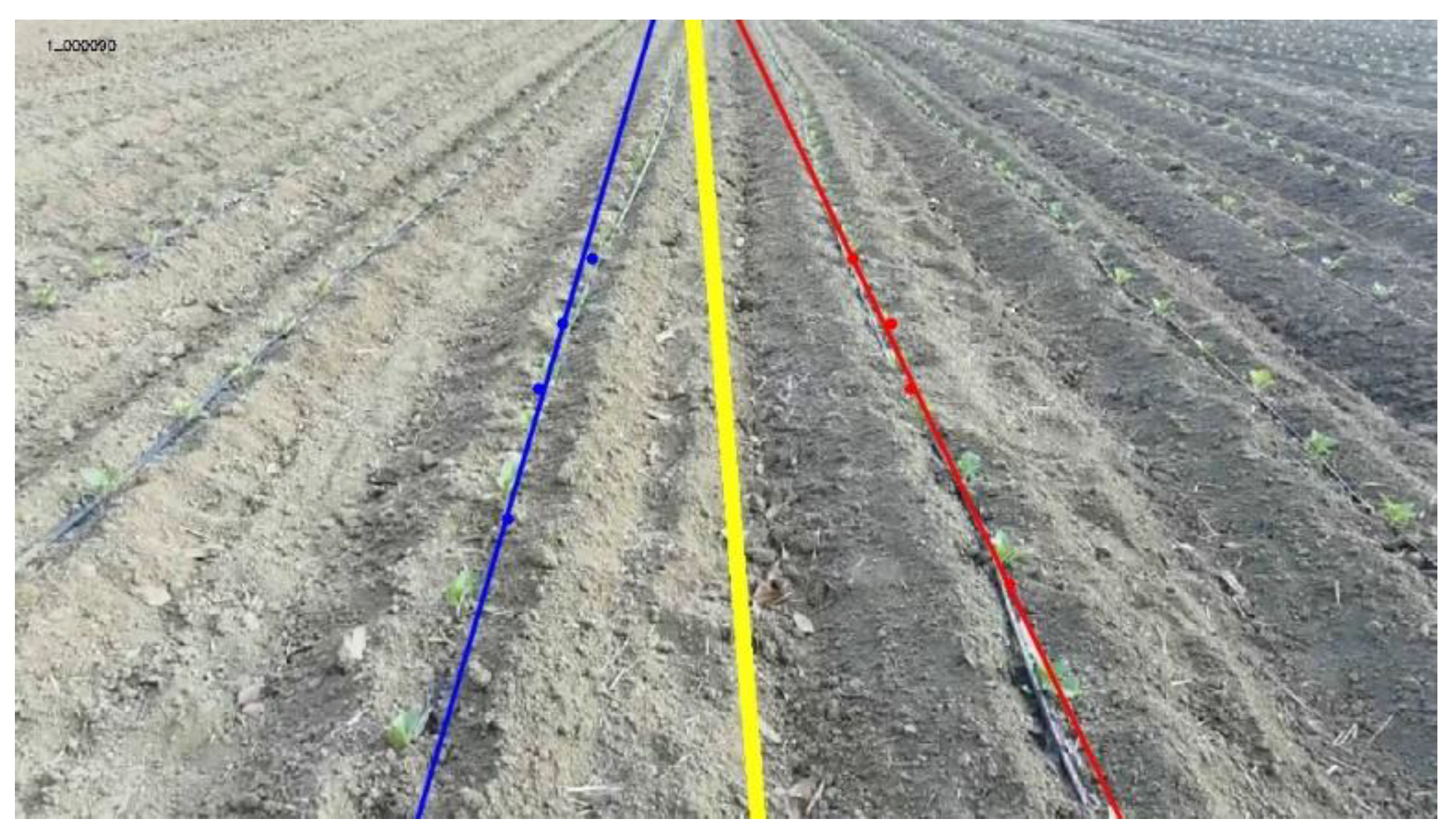

3. Results and Discussion

3.1. Image Preprocessing

3.2. Accuracy Verification Test

- (1)

- Sample A has a small leaf area. Individual plants are clearly distinguishable, and there are no shadows. There are almost no weeds, and the plants have few leaves.

- (2)

- Sample B has a slightly larger leaf area with a small amount of shadow, and the leaves of adjacent plants are connected to some extent. Small weeds are present.

- (3)

- Sample C has a large leaf area with many shadows, and the leaves are connected to some extent both between plants and between rows. A large area of weeds is present.

- (4)

- Sample D has a slightly larger leaf area with many shadows, and the leaves of adjacent plants are connected to some extent. Small weeds are present.

- (5)

- Qualitatively, comparing the crop row extraction results across the three crop growth periods and two lighting conditions shows that HUBERP adapts well to these conditions. All of the fitted straight lines detected in this study, shown in Figure 11, lie along the crop rows. The straight lines obtained by HUBERP (solid lines) deviate little from the manually marked crop row lines (white dashed lines), and the HUBERP results are broadly consistent with expectations. In contrast, only some of the straight lines (solid lines) obtained by LSMC fit the manually marked crop row lines (white dashed lines) well. Therefore, HUBERP meets the requirements for crop row line identification in the selected environments across different growth periods and lighting conditions, whereas LSMC does not meet the requirements for crop row identification in the early growth stage.

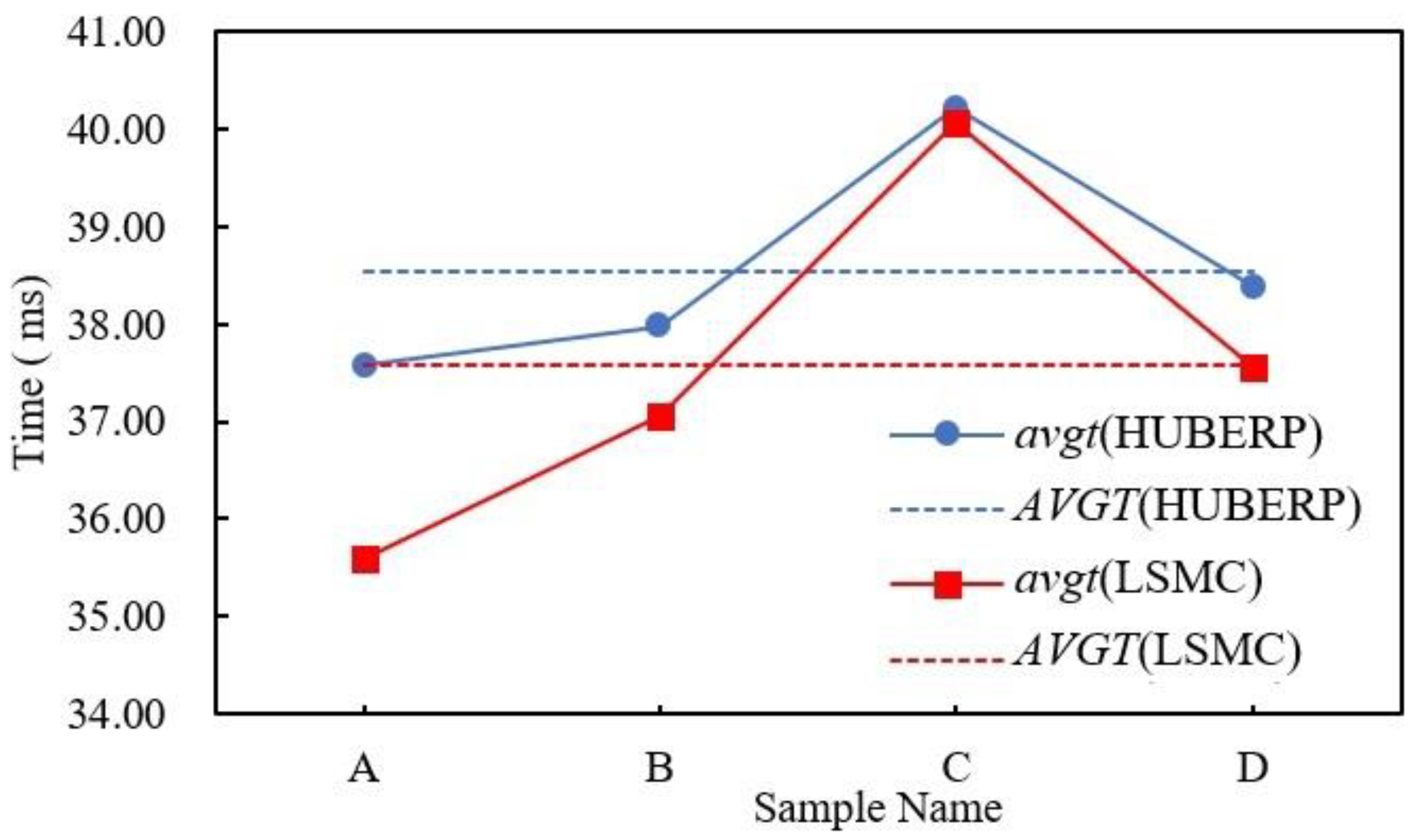

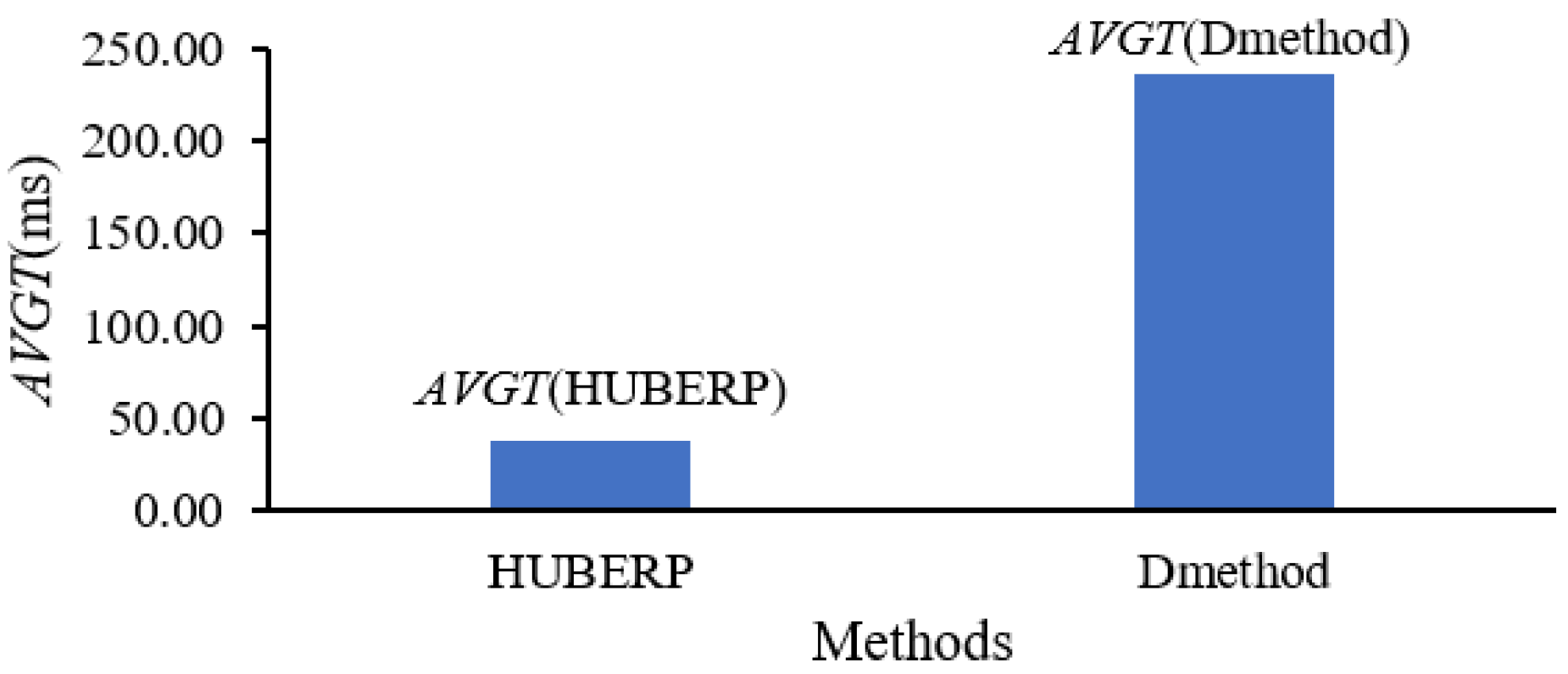

3.3. Timeliness Verification Test

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

References


| Sample Name | Day After Crop Transplanting | Lighting Conditions | Graphic |
|---|---|---|---|
| A | 3 | Cloudy | Figure 1a |
| B | 18 | Cloudy | Figure 1b |
| C | 33 | Cloudy | Figure 1c |
| D | 19 | Sunny | Figure 1d |

| Samples | thn | ths = 25% | ths = 30% | ths = 35% | ths = 40% | ths = 45% | ths = 50% | ths = 55% |
|---|---|---|---|---|---|---|---|---|
| A | 1 | 0.2086 | 0.2086 | 0.3194 | 0.3899 | 0.3081 | 0.4198 | 0.9800 |
|  | 2 | 0.1827 | 0.1728 | 0.2927 | 0.3621 | 0.3049 | 0.4091 | 0.8591 |
|  | 3 | 0.1302 | 0.1172 | 0.2410 | 0.3361 | 0.2982 | 0.4053 | 0.5156 |
|  | 4 | 0.1060 | 0.0146 | 0.2170 | 0.3183 | 0.2266 | 0.3803 | 0.6399 |
|  | 5 | 0.1339 | 0.1355 | 0.2448 | 0.3578 | 0.2687 | 0.4583 | 0.7911 |
|  | 6 | 0.2589 | 0.2348 | 0.3699 | 0.4110 | 0.3604 | 0.4855 | 0.9188 |
|  | 7 | 0.2854 | 0.3345 | 0.3960 | 0.4992 | 0.3843 | 0.5125 | 1.1292 |
| B | 1 | 0.0353 | 0.029 | 0.0211 | 0.0343 | 0.0303 | 0.0288 | 0.0308 |
|  | 2 | 0.0225 | 0.0215 | 0.0274 | 0.0292 | 0.0294 | 0.0363 | 0.0383 |
|  | 3 | 0.0242 | 0.0232 | 0.0263 | 0.0213 | 0.0331 | 0.0287 | 0.0307 |
|  | 4 | 0.0218 | 0.02 | 0.0247 | 0.0342 | 0.0201 | 0.0199 | 0.0219 |
|  | 5 | 0.0211 | 0.0201 | 0.0191 | 0.0199 | 0.0218 | 0.0259 | 0.0279 |
|  | 6 | 0.0243 | 0.0233 | 0.0255 | 0.0335 | 0.0254 | 0.0284 | 0.0304 |
|  | 7 | 0.0288 | 0.0278 | 0.0291 | 0.036 | 0.0324 | 0.0345 | 0.0365 |
| C | 1 | 0.0581 | 0.0535 | 0.0603 | 0.0593 | 0.0507 | 0.0557 | 0.0686 |
|  | 2 | 0.044 | 0.0521 | 0.0565 | 0.0555 | 0.0464 | 0.0495 | 0.0579 |
|  | 3 | 0.0424 | 0.0406 | 0.0501 | 0.0491 | 0.0432 | 0.0391 | 0.051 |
|  | 4 | 0.0366 | 0.0393 | 0.0391 | 0.0381 | 0.0269 | 0.0272 | 0.0399 |
|  | 5 | 0.0399 | 0.0315 | 0.037 | 0.036 | 0.0416 | 0.0328 | 0.0377 |
|  | 6 | 0.0305 | 0.0304 | 0.0248 | 0.0238 | 0.0285 | 0.0277 | 0.0281 |
|  | 7 | 0.0298 | 0.0274 | 0.0333 | 0.0323 | 0.0346 | 0.0355 | 0.0416 |
| D | 1 | 0.0303 | 0.0262 | 0.0232 | 0.0372 | 0.0358 | 0.0272 | 0.0325 |
|  | 2 | 0.0251 | 0.0169 | 0.0206 | 0.0259 | 0.0286 | 0.0311 | 0.0316 |
|  | 3 | 0.0227 | 0.0219 | 0.0179 | 0.0221 | 0.0242 | 0.0254 | 0.0304 |
|  | 4 | 0.0246 | 0.0177 | 0.0213 | 0.0263 | 0.0259 | 0.022 | 0.0307 |
|  | 5 | 0.0157 | 0.0186 | 0.0145 | 0.0239 | 0.0238 | 0.0194 | 0.0284 |
|  | 6 | 0.0314 | 0.0266 | 0.0207 | 0.0297 | 0.0248 | 0.0178 | 0.0299 |
|  | 7 | 0.0366 | 0.0345 | 0.0332 | 0.0285 | 0.0343 | 0.0268 | 0.0396 |

| Samples | ΔF | F = 0.7 | F = 0.8 | F = 0.9 | F = 1 | F = 1.1 | F = 1.2 | F = 1.3 |
|---|---|---|---|---|---|---|---|---|
| A | 0.01 | 0.5310 | 0.3916 | 0.3328 | 0.1407 | 0.0254 | 0.1417 | 0.2657 |
|  | 0.02 | 0.4623 | 0.3273 | 0.2757 | 0.1354 | 0.0169 | 0.1668 | 0.2045 |
|  | 0.03 | 0.3535 | 0.3191 | 0.2032 | 0.1763 | 0.0117 | 0.1963 | 0.2322 |
|  | 0.04 | 0.2920 | 0.2572 | 0.1830 | 0.1279 | 0.0090 | 0.1625 | 0.2347 |
|  | 0.05 | 0.3225 | 0.2660 | 0.1958 | 0.1541 | 0.0183 | 0.1916 | 0.2421 |
|  | 0.06 | 0.4811 | 0.3042 | 0.2043 | 0.1855 | 0.0558 | 0.1319 | 0.2891 |
|  | 0.07 | 0.5204 | 0.3447 | 0.2470 | 0.1385 | 0.1259 | 0.1423 | 0.2157 |
| B | 0.01 | 0.0234 | 0.0210 | 0.0331 | 0.0203 | 0.0301 | 0.0276 | 0.0252 |
|  | 0.02 | 0.0193 | 0.0167 | 0.0245 | 0.019 | 0.0221 | 0.0253 | 0.0246 |
|  | 0.03 | 0.0174 | 0.0154 | 0.0174 | 0.0163 | 0.0153 | 0.0232 | 0.0196 |
|  | 0.04 | 0.0162 | 0.0153 | 0.0147 | 0.0186 | 0.0219 | 0.0174 | 0.0158 |
|  | 0.05 | 0.0141 | 0.0146 | 0.0145 | 0.0138 | 0.0144 | 0.0141 | 0.014 |
|  | 0.06 | 0.0238 | 0.0236 | 0.0187 | 0.0179 | 0.0233 | 0.0215 | 0.0173 |
|  | 0.07 | 0.0308 | 0.0272 | 0.0193 | 0.0276 | 0.0255 | 0.0306 | 0.022 |
| C | 0.01 | 0.0368 | 0.042 | 0.0434 | 0.0429 | 0.05 | 0.0389 | 0.0401 |
|  | 0.02 | 0.0294 | 0.0352 | 0.0357 | 0.0345 | 0.0408 | 0.0342 | 0.0339 |
|  | 0.03 | 0.0256 | 0.0334 | 0.0317 | 0.0249 | 0.0334 | 0.033 | 0.0258 |
|  | 0.04 | 0.0333 | 0.0318 | 0.0339 | 0.0301 | 0.0278 | 0.0255 | 0.0321 |
|  | 0.05 | 0.0243 | 0.0239 | 0.0243 | 0.0245 | 0.0239 | 0.0230 | 0.0242 |
|  | 0.06 | 0.0252 | 0.0315 | 0.0273 | 0.0258 | 0.0302 | 0.0273 | 0.0302 |
|  | 0.07 | 0.0282 | 0.0401 | 0.0292 | 0.0314 | 0.0367 | 0.0305 | 0.0347 |
| D | 0.01 | 0.0124 | 0.0104 | 0.0074 | 0.0143 | 0.0096 | 0.0077 | 0.015 |
|  | 0.02 | 0.0055 | 0.0052 | 0.0046 | 0.0094 | 0.005 | 0.0034 | 0.0055 |
|  | 0.03 | 0.002 | 0.0019 | 0.0012 | 0.0018 | 0.0011 | 0.0016 | 0.002 |
|  | 0.04 | 0.0071 | 0.0076 | 0.0062 | 0.0066 | 0.0027 | 0.008 | 0.0077 |
|  | 0.05 | 0.0099 | 0.0142 | 0.0117 | 0.0139 | 0.0114 | 0.0092 | 0.0114 |
|  | 0.06 | 0.0166 | 0.018 | 0.0161 | 0.0216 | 0.0182 | 0.0103 | 0.0156 |
|  | 0.07 | 0.0202 | 0.0234 | 0.0216 | 0.0241 | 0.0192 | 0.0171 | 0.0196 |

| Sample | Sample A | Sample B | Sample C | Sample D |
|---|---|---|---|---|
| Average num_next | 40.45 | 36.15 | 34.25 | 37.45 |
| Crop size | Small | Medium | Large | Medium |
| Lighting conditions | Cloudy | Cloudy | Cloudy | Sunny |

| Lighting Conditions | Number of Connected Noise Areas (Average) | Number of Connected Noise Areas (Maximum) | Number of Connected Noise Areas (Minimum) | Total Area of Connected Noise Areas (Average) | Total Area of Connected Noise Areas (Maximum) | Total Area of Connected Noise Areas (Minimum) |
|---|---|---|---|---|---|---|
| Cloudy environment | 10 | 25 | 2 | 67 | 153 | 2 |
| Sunny environment | 48 | 71 | 29 | 293 | 496 | 113 |

| Method | Sample Name | rateREC (%), angComp (°) | rateREC (%), angComp_abs (°) | avgANG (°), angComp (°) | avgANG (°), angComp_abs (°) | Df1 | Df2 |
|---|---|---|---|---|---|---|---|
| HUBERP | A | 100 | 100 | 0.20 | 1.92 | −2.72 | −9.79 |
| HUBERP | B | 100 | 100 | −0.35 | 1.49 | 0.05 | 0.12 |
| HUBERP | C | 90 | 90 | 2.96 | 4.81 | 1.44 | 0.06 |
| HUBERP | D | 100 | 100 | 0.24 | 0.88 | 0.10 | −0.09 |
| LSMC | A | 50 | 35 | −2.92 | 11.71 | / | / |
| LSMC | B | 100 | 100 | −0.30 | 1.37 | / | / |
| LSMC | C | 85 | 85 | −1.52 | 4.75 | / | / |
| LSMC | D | 100 | 100 | −0.14 | 0.97 | / | / |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, X.; Zhang, X.; Zhao, R.; Chen, Y.; Liu, X. Navigation Line Extraction Method for Broad-Leaved Plants in the Multi-Period Environments of the High-Ridge Cultivation Mode. Agriculture 2023, 13, 1496. https://doi.org/10.3390/agriculture13081496
