A Systematic Review on Automatic Insect Detection Using Deep Learning

Abstract

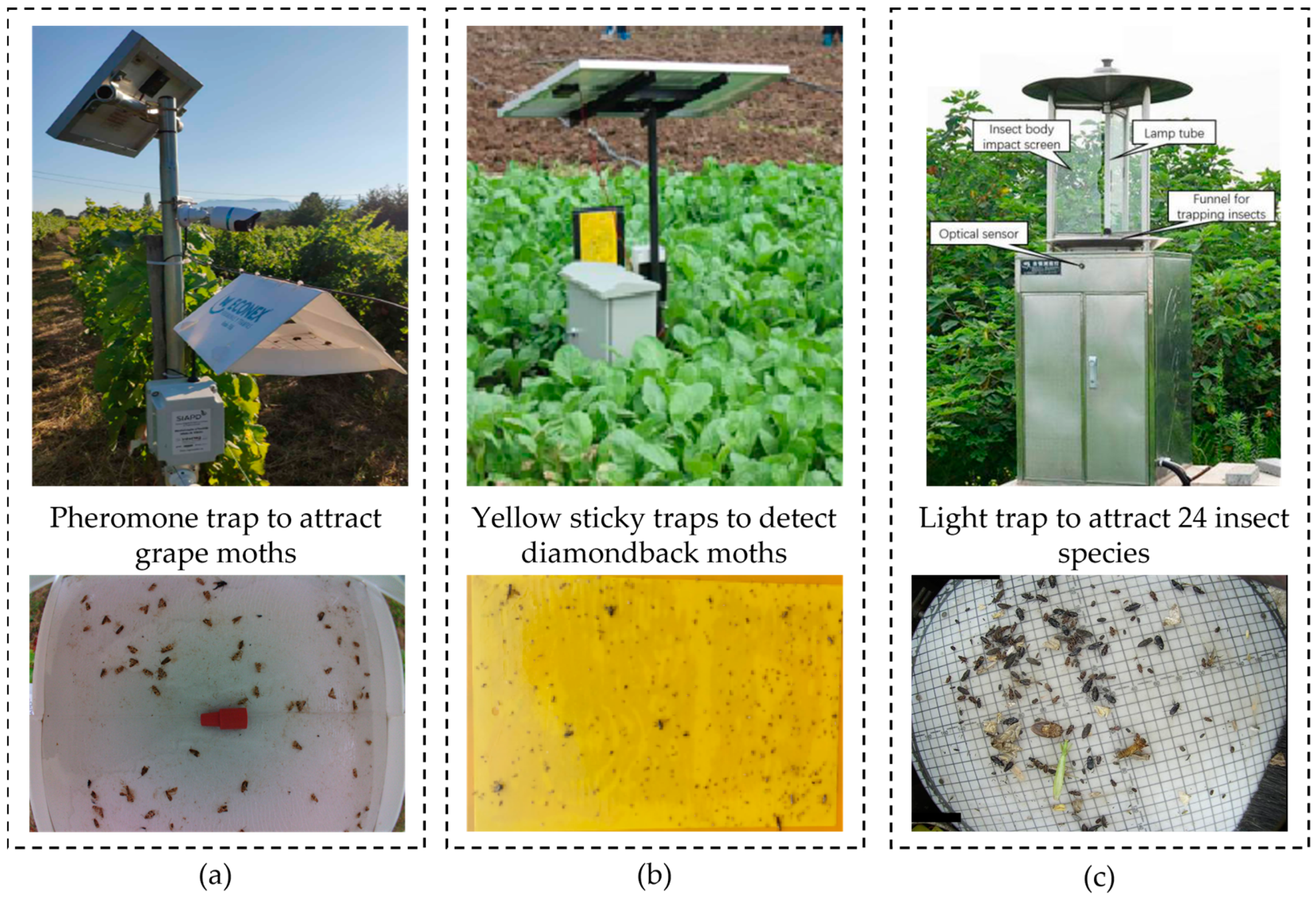

1. Introduction

- The integration of deep learning techniques for automatic insect detection in traps;

- A systematic review and analysis of recent research on deep learning methods for insect detection;

- An investigation of the effectiveness of deep learning in addressing the challenges of traditional insect detection methods;

- A comparison of deep learning methods for insect classification and detection;

- The identification of key research gaps and opportunities for future work in this area.

- Insect infestations can cause significant crop losses and economic damage in agricultural production;

- Traditional methods of insect detection and control can be time-consuming, labour-intensive, and potentially harmful to the environment and human health;

- Deep learning techniques have the potential to improve the efficiency and effectiveness of insect detection, leading to more sustainable and profitable farming practices;

- A systematic review of recent research on deep learning methods for insect detection can provide valuable insights and guidance for future research and development in this field;

- The results of this study can help inform and improve the use of deep learning techniques for insect detection in practical applications.

2. Theoretical Background

3. Materials and Methods

3.1. Research Questions

- (RQ1) What are the methods that obtain better mean average precision (mAP) for the task of insect detection?

- (RQ2) What dataset variables have the most significant influence on detection?

- (RQ3) What are the main challenges of and recommendations for automatically detecting insects?
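Since RQ1 compares methods by mAP, it may help to recall how the metric is commonly computed. The following is a minimal sketch, assuming a PASCAL VOC-style protocol (all-point interpolation, IoU threshold of 0.5, boxes given as `(x1, y1, x2, y2)`); the reviewed papers may use other thresholds or protocols, and all function names here are illustrative rather than taken from any of them.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr=0.5):
    """AP for a single class.

    detections:   list of (image_id, score, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    # Each ground-truth box may be matched by at most one detection.
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp, fp = 0, 0
    precisions, recalls = [], []
    # Process detections from highest to lowest confidence.
    for img, _score, box in sorted(detections, key=lambda d: -d[1]):
        best_iou, best_j = 0.0, -1
        for j, gt_box in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True
            tp += 1
        else:
            fp += 1  # low overlap, or a duplicate hit on an already matched box
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # Make precision monotonically non-increasing (the precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Integrate precision over recall.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(detections_by_class, ground_truth_by_class, iou_thr=0.5):
    """mAP: the mean of the per-class AP values."""
    classes = list(ground_truth_by_class)
    if not classes:
        return 0.0
    total = sum(
        average_precision(detections_by_class.get(c, []), ground_truth_by_class[c], iou_thr)
        for c in classes
    )
    return total / len(classes)
```

Because AP is averaged per class, a detector that performs poorly on a rare insect class is penalised as much as one that performs poorly on a common class, which is one reason mAP values are hard to compare across datasets with different numbers of classes.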

3.2. Inclusion Criteria

3.3. Search Strategy

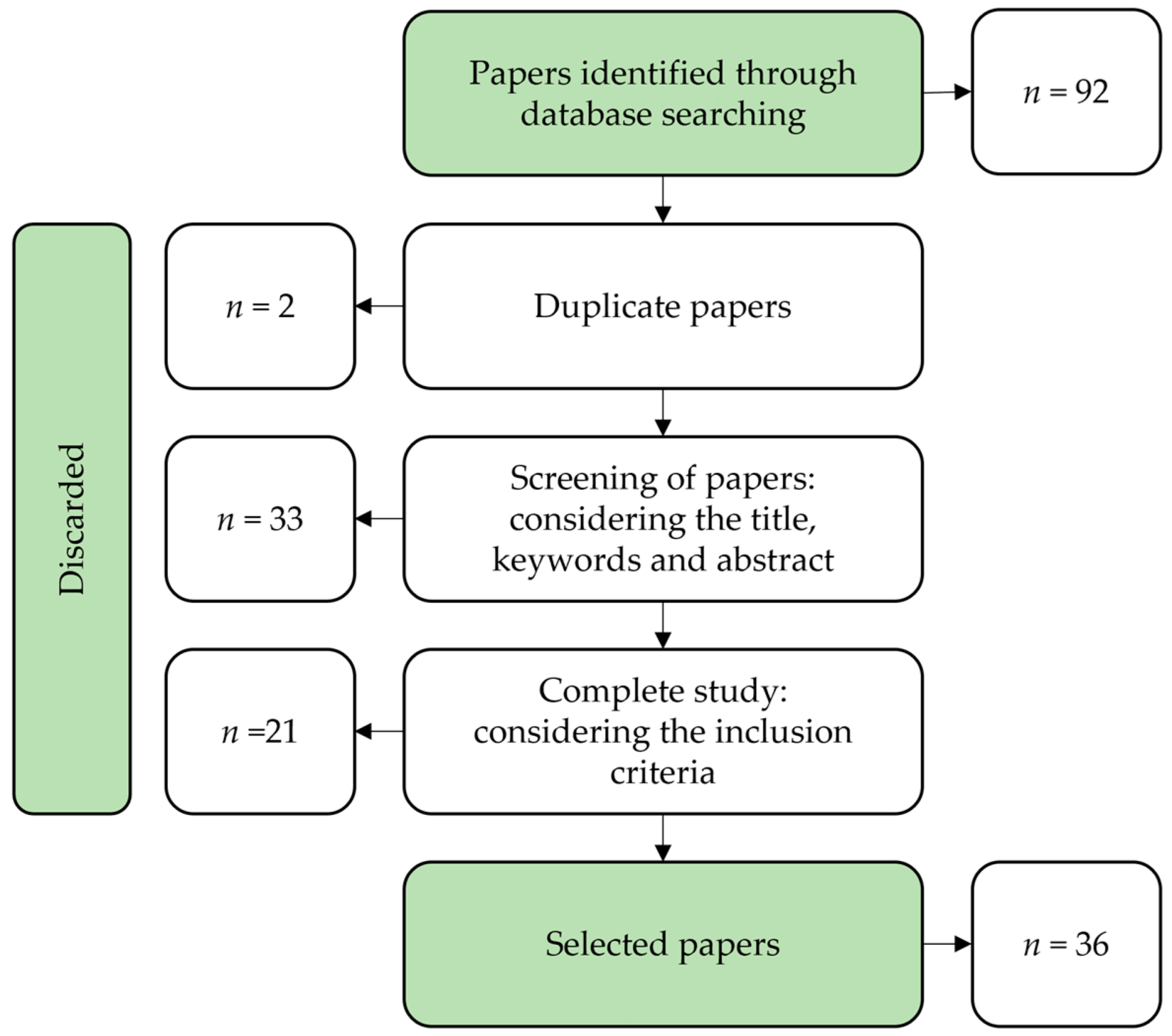

3.4. Selection of the Papers and Extraction of Study Characteristics

4. Results

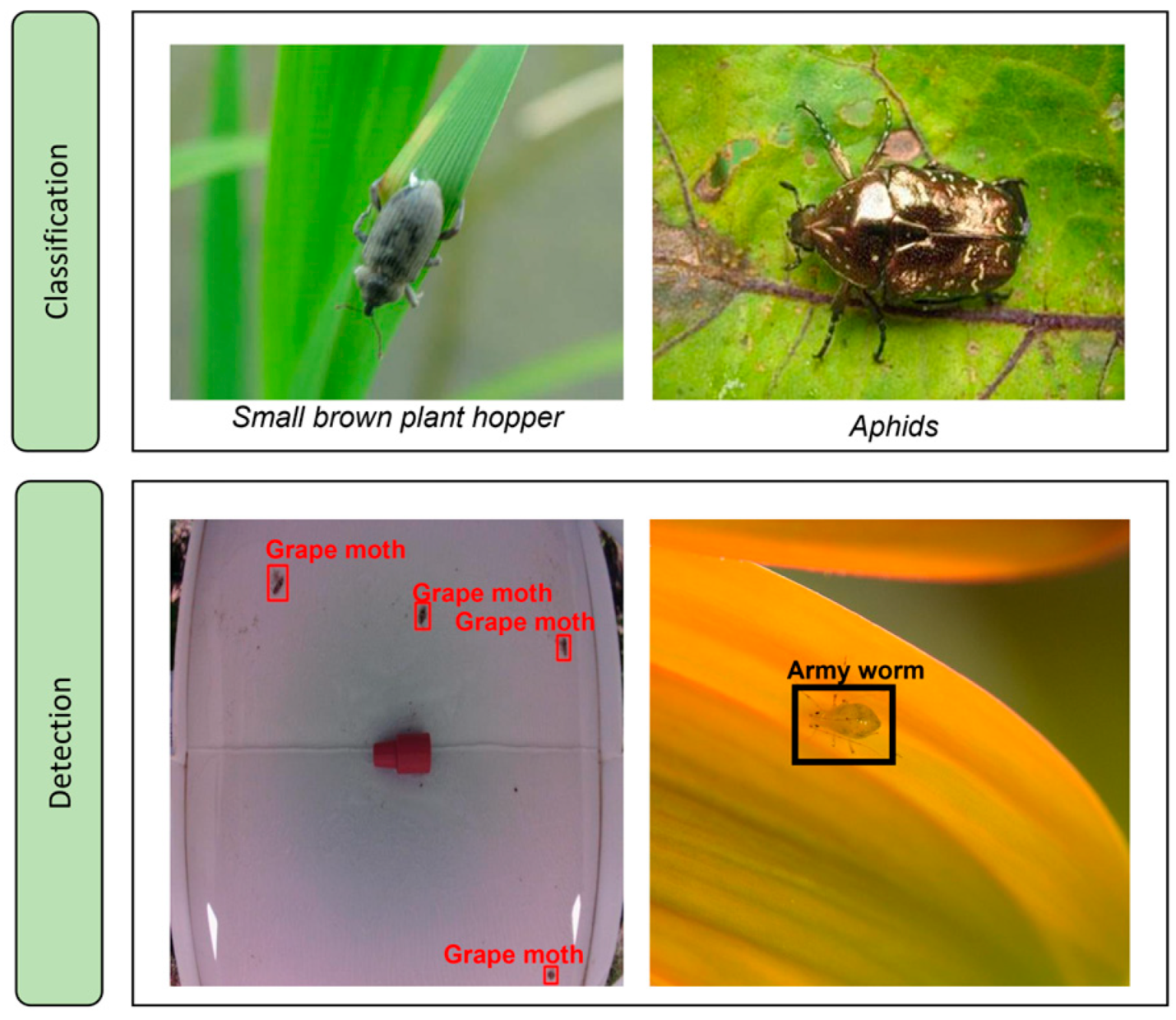

4.1. Classification of Insects with DL

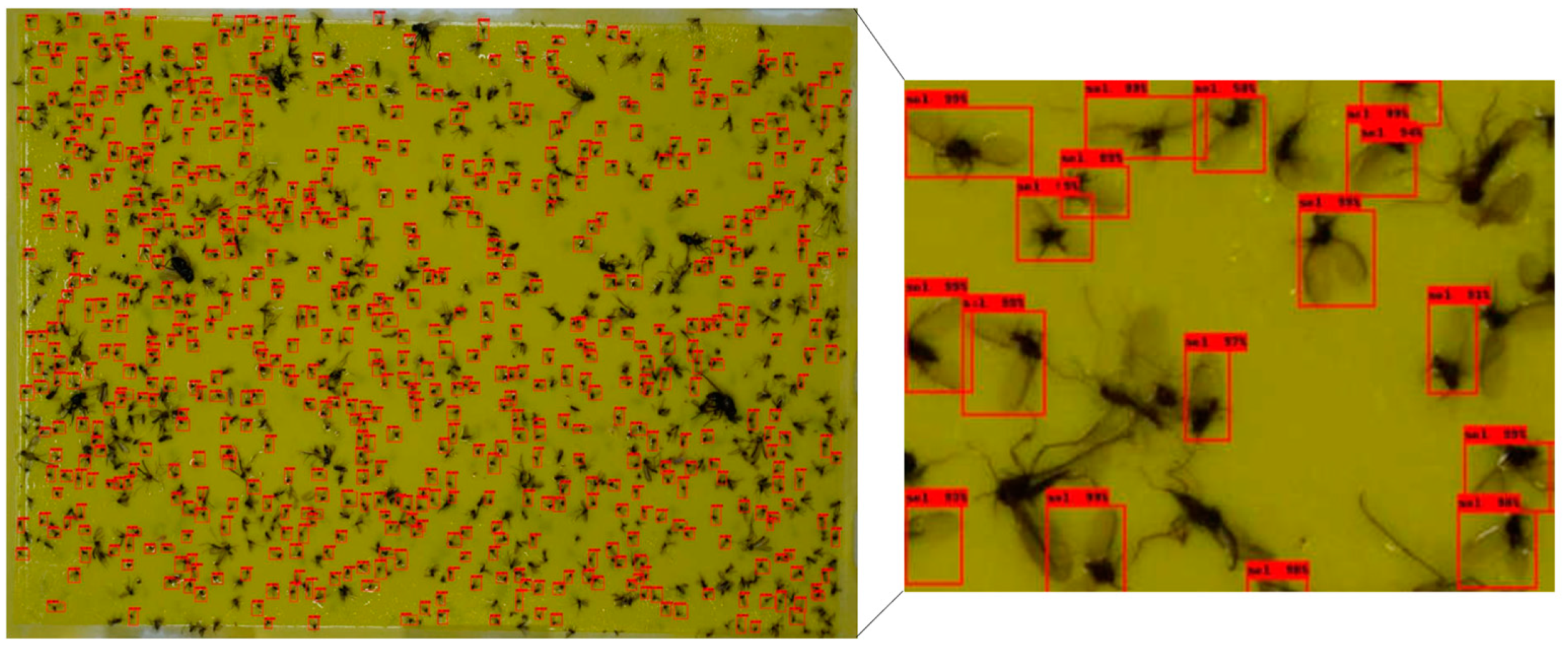

4.2. Detection of Insects with DL

4.2.1. Standard Detectors

4.2.2. Combined/Adapted Methodologies

4.2.3. Challenges and Recommendations in Insect Detection

- 1. Datasets

- 2. Methods of insect detection

5. Discussion

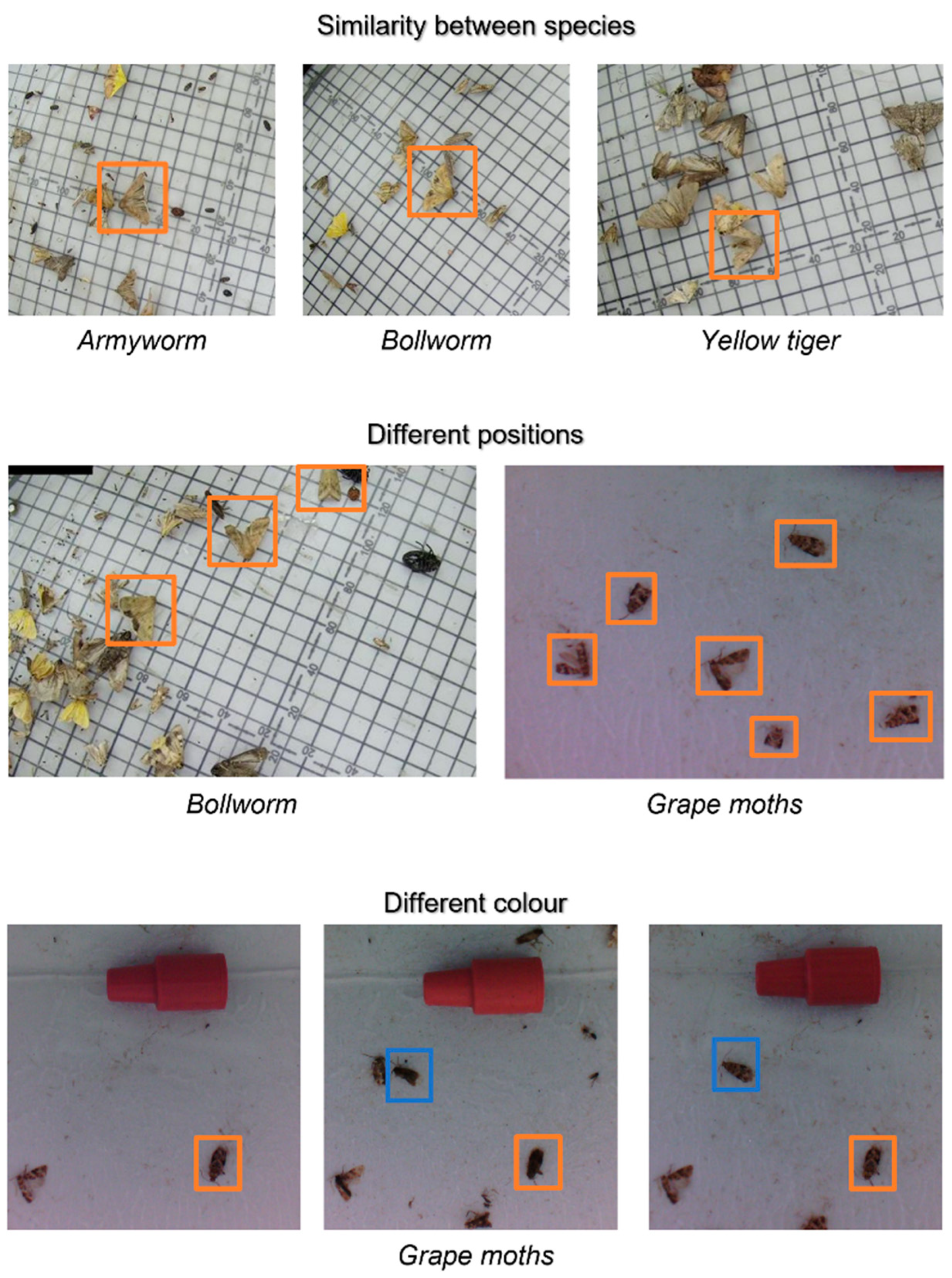

- Insects are frequently poorly visible in dataset images.

- Images captured in the field using SPM systems.

- Insect classes are unbalanced in dataset images.

- Complete annotated insect datasets.

- Multi-scale resource learning.

- Context-based detection.

- GAN-based detection.
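One option for the class-imbalance challenge listed above is class-balanced re-weighting by the effective number of samples, as in the class-balanced loss of Cui et al. cited in the reference list. The following is a minimal sketch of the weighting step only, assuming the weight for a class with n samples is (1 - beta) / (1 - beta^n); the function name, the default `beta`, and the example class counts are hypothetical and not taken from any reviewed dataset.

```python
def class_balanced_weights(counts, beta=0.999):
    """Per-class loss weights from the effective number of samples.

    counts: dict mapping class name -> number of training samples (> 0)
    beta:   hyperparameter in [0, 1); values closer to 1 weight rare classes more
    Returns weights rescaled so that they sum to the number of classes.
    """
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")
    if any(n <= 0 for n in counts.values()):
        raise ValueError("every class needs at least one sample")
    # Inverse of the effective number of samples, (1 - beta^n) / (1 - beta).
    raw = {c: (1.0 - beta) / (1.0 - beta ** n) for c, n in counts.items()}
    scale = len(raw) / sum(raw.values())
    return {c: w * scale for c, w in raw.items()}


# Hypothetical, illustrative class counts for a trap-image dataset.
example_counts = {"whitefly": 5000, "thrips": 800, "aphid": 60}
example_weights = class_balanced_weights(example_counts)
```

The resulting weights would typically multiply the per-class term of the classification loss, so that rare classes contribute more to the gradient than their raw frequency would allow.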

6. Conclusions

- (RQ1) What are the methods that obtain better mAP for the task of insect detection?

- (RQ2) What dataset variables have the most significant influence on detection?

- (RQ3) What are the main challenges of and recommendations for automatically detecting insects?

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| AP | average precision |
| CNN | convolutional neural network |
| DL | deep learning |
| IoT | Internet of Things |
| IPM | integrated pest management |
| mAP | mean average precision |
| R-CNN | Region-Based Convolutional Neural Network |
| RPN | Region Proposal Network |
| SPM | smart pest monitoring |
| SSD | Single Shot MultiBox Detector |
| YOLO | You Only Look Once |

References

- Food and Agriculture Organization of the United Nations. The State of Food and Agriculture; Food and Agriculture Organization of the United Nations: Rome, Italy, 2014. [Google Scholar]

- Pereira, V.J.; da Cunha, J.P.A.R.; de Morais, T.P.; Ribeiro-Oliveira, J.P.; de Morais, J.B. Physical-chemical properties of pesticides: Concepts, applications, and interactions with the environment. Biosci. J. 2016, 32, 627–641. [Google Scholar] [CrossRef]

- Sarker, I.H. Deep learning: A comprehensive overview on techniques, taxonomy, applications and research directions. SN Comput. Sci. 2021, 2, 420. [Google Scholar] [CrossRef]

- Li, W.; Zheng, T.; Yang, Z.; Li, M.; Sun, C.; Yang, X. Classification and detection of insects from field images using deep learning for smart pest management: A systematic review. Ecol. Inform. 2021, 66, 101460. [Google Scholar] [CrossRef]

- Lima, M.C.F.; de Almeida Leandro, M.E.D.; Valero, C.; Coronel, L.C.P.; Bazzo, C.O.G. Automatic detection and monitoring of insect pests—A review. Agriculture 2020, 10, 161. [Google Scholar] [CrossRef]

- Rustia, D.J.A.; Chao, J.J.; Chiu, L.Y.; Wu, Y.F.; Chung, J.Y.; Hsu, J.C.; Lin, T.T. Automatic greenhouse insect pest detection and recognition based on a cascaded deep learning classification method. J. Appl. Entomol. 2021, 145, 206–222. [Google Scholar] [CrossRef]

- Preti, M.; Verheggen, F.; Angeli, S. Insect pest monitoring with camera-equipped traps: Strengths and limitations. J. Pest. Sci. 2021, 94, 203–217. [Google Scholar] [CrossRef]

- Henderson, P.A.; Southwood, T. Ecological Methods, 3rd ed.; Oxford: Oxford, UK, 2000; ISBN 978-0-632-05477-0. [Google Scholar]

- Nanni, L.; Manfè, A.; Maguolo, G.; Lumini, A.; Brahnam, S. High performing ensemble of convolutional neural networks for insect pest image detection. Ecol. Inform. 2022, 67, 101515. [Google Scholar] [CrossRef]

- Ramalingam, B.; Mohan, R.E.; Pookkuttath, S.; Gómez, B.F.; Sairam Borusu, C.S.C.; Teng, T.W.; Tamilselvam, Y.K. Remote insects trap monitoring system using deep learning framework and IoT. Sensors 2020, 20, 5280. [Google Scholar] [CrossRef]

- Chollet, F. Deep Learning with Python; Manning Publications: Shelter Island, NY, USA, 2017. [Google Scholar]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Abbas, M.; Ramzan, M.; Hussain, N.; Ghaffar, A.; Hussain, K.; Abbas, S.; Raza, A. Role of light traps in attracting, killing and biodiversity studies of insect pests in Thal. Pak. J. Agric. Res. 2019, 32, 684–690. [Google Scholar] [CrossRef]

- Trematerra, P.; Colacci, M. Recent advances in management by pheromones of Thaumetopoea Moths in urban parks and woodland recreational areas. Insects 2019, 10, 395. [Google Scholar] [CrossRef]

- Gilbert, A.J.; Bingham, R.R.; Nicolas, M.A.; Clark, R.A. Insect Trapping Guide, 13th ed.; Gilbert, A.J., Hoffman, K.M., Cannon, C.J., Cook, C.H., Chan, J.K., Eds.; CDFA: Sacramento, CA, USA, 2013. [Google Scholar]

- Mendes, J.; Peres, E.; Neves Dos Santos, F.; Silva, N.; Silva, R.; Sousa, J.J.; Cortez, I.; Morais, R. VineInspector: The vineyard assistant. Agriculture 2022, 12, 730. [Google Scholar] [CrossRef]

- Ennouri, K.; Smaoui, S.; Gharbi, Y.; Cheffi, M.; ben Braiek, O.; Ennouri, M.; Triki, M.A. Usage of artificial intelligence and remote sensing as efficient devices to increase agricultural system yields. J. Food Qual. 2021, 2021, 6242288. [Google Scholar] [CrossRef]

- Martineau, M.; Conte, D.; Raveaux, R.; Arnault, I.; Munier, D.; Venturini, G. A Survey on Image-Based Insect Classification. Pattern Recognit. 2017, 65, 273–284. [Google Scholar] [CrossRef]

- Gutierrez, A.; Ansuategi, A.; Susperregi, L.; Tubío, C.; Rankić, I.; Lenža, L. A benchmarking of learning strategies for pest detection and identification on tomato plants for autonomous scouting robots using internal databases. J. Sens. 2019, 2019, 5219471. [Google Scholar] [CrossRef]

- Saranya, K.; Dharini, P.; Monisha, S. IoT based pest controlling system for smart agriculture. In Proceedings of the 2019 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 15–16 November 2019; ISBN 9781728112619. [Google Scholar]

- Rustia, D.J.A.; Lin, T.T. An IoT-based wireless imaging and sensor node system for remote greenhouse pest monitoring. Chem. Eng. Trans. 2017, 58, 601–606. [Google Scholar] [CrossRef]

- Morais, R.; Silva, N.; Mendes, J.; Adão, T.; Pádua, L.; López-Riquelme, J.A.; Pavón-Pulido, N.; Sousa, J.J.; Peres, E. MySense: A comprehensive data management environment to improve precision agriculture practices. Comput. Electron. Agric. 2019, 162, 882–894. [Google Scholar] [CrossRef]

- Guo, Q.; Wang, C.; Xiao, D.; Huang, Q. An enhanced insect pest counter based on saliency map and improved non-maximum suppression. Insects 2021, 12, 705. [Google Scholar] [CrossRef] [PubMed]

- Wang, Q.J.; Zhang, S.Y.; Dong, S.F.; Zhang, G.C.; Yang, J.; Li, R.; Wang, H.Q. Pest24: A large-scale very small object data set of agricultural pests for multi-target detection. Comput. Electron. Agric. 2020, 175, 105715. [Google Scholar] [CrossRef]

- He, Y.; Zhou, Z.; Tian, L.; Liu, Y.; Luo, X. Brown rice planthopper (Nilaparvata lugens stal) detection based on deep learning. Precis. Agric. 2020, 21, 1385–1402. [Google Scholar] [CrossRef]

- Kasinathan, T.; Singaraju, D.; Uyyala, S.R. Insect classification and detection in field crops using modern machine learning techniques. Inf. Process. Agric. 2021, 8, 446–457. [Google Scholar] [CrossRef]

- Qiao, M.; Lim, J.; Ji, C.W.; Chung, B.K.; Kim, H.Y.; Uhm, K.B.; Myung, C.S.; Cho, J.; Chon, T.S. Density estimation of Bemisia tabaci (Hemiptera: Aleyrodidae) in a greenhouse using sticky traps in conjunction with an image processing system. J. Asia Pac. Entomol. 2008, 11, 25–29. [Google Scholar] [CrossRef]

- Xia, C.; Chon, T.S.; Ren, Z.; Lee, J.M. Automatic identification and counting of small size pests in greenhouse conditions with low computational cost. Ecol. Inform. 2015, 29, 139–146. [Google Scholar] [CrossRef]

- Xie, C.; Zhang, J.; Li, R.; Li, J.; Hong, P.; Xia, J.; Chen, P. Automatic classification for field crop insects via multiple-task sparse representation and multiple-kernel learning. Comput. Electron. Agric. 2015, 119, 123–132. [Google Scholar] [CrossRef]

- More, S.; Nighot, M. AgroSearch: A web based search tool for pomegranate diseases and pests detection using image processing. In Proceedings of the ACM International Conference Proceeding Series; Association for Computing Machinery: Laval, France, 2016; Volume 04-05-March-2016. [Google Scholar]

- Ebrahimi, M.A.; Khoshtaghaza, M.H.; Minaei, S.; Jamshidi, B. Vision-based pest detection based on SVM classification method. Comput. Electron. Agric. 2017, 137, 52–58. [Google Scholar] [CrossRef]

- He, Y.; Zeng, H.; Fan, Y.; Ji, S.; Wu, J. Application of deep learning in integrated pest management: A real-time system for detection and diagnosis of oilseed rape pests. Mob. Inf. Syst. 2019, 2019, 4570808. [Google Scholar] [CrossRef]

- Chen, Q.; Wang, P.; Cheng, A.; Wang, W.; Zhang, Y.; Cheng, J. Robust one-stage object detection with location-aware classifiers. Pattern Recognit. 2020, 105, 107334. [Google Scholar] [CrossRef]

- Xiao, Y.; Tian, Z.; Yu, J.; Zhang, Y.; Liu, S.; Du, S.; Lan, X. A review of object detection based on deep learning. Multimed. Tools Appl. 2020, 79, 23729–23791. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Proceedings, Part I 14; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158. [Google Scholar] [CrossRef]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015. [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 386–397. [Google Scholar] [CrossRef] [PubMed]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Wu, X.; Zhan, C.; Lai, Y.K.; Cheng, M.M.; Yang, J. IP102: A Large-Scale Benchmark Dataset for Insect Pest Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, IEEE Computer Society, Long Beach, CA, USA, 15–19 June 2019; pp. 8779–8788. [Google Scholar]

- Cheng, X.; Zhang, Y.; Chen, Y.; Wu, Y.; Yue, Y. Pest identification via deep residual learning in complex background. Comput. Electron. Agric. 2017, 141, 351–356. [Google Scholar] [CrossRef]

- Thenmozhi, K.; Srinivasulu Reddy, U. Crop pest classification based on deep convolutional neural network and transfer learning. Comput. Electron. Agric. 2019, 164, 104906. [Google Scholar] [CrossRef]

- Li, Y.; Wang, H.; Dang, L.M.; Sadeghi-Niaraki, A.; Moon, H. Crop pest recognition in natural scenes using convolutional neural networks. Comput. Electron. Agric. 2020, 169, 105174. [Google Scholar] [CrossRef]

- Tetila, E.C.; Machado, B.B.; Astolfi, G.; de Souza Belete, N.A.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836. [Google Scholar] [CrossRef]

- Pattnaik, G.; Shrivastava, V.K.; Parvathi, K. Transfer learning-based framework for classification of pest in tomato plants. Appl. Artif. Intell. 2020, 34, 981–993. [Google Scholar] [CrossRef]

- Rahman, C.R.; Arko, P.S.; Ali, M.E.; Iqbal Khan, M.A.; Apon, S.H.; Nowrin, F.; Wasif, A. Identification and recognition of rice diseases and pests using convolutional neural networks. Biosyst. Eng. 2020, 194, 112–120. [Google Scholar] [CrossRef]

- Wang, J.; Li, Y.; Feng, H.; Ren, L.; Du, X.; Wu, J. Common pests image recognition based on deep convolutional neural network. Comput. Electron. Agric. 2020, 179, 105834. [Google Scholar] [CrossRef]

- Alves, A.N.; Souza, W.S.R.; Borges, D.L. Cotton pests classification in field-based images using deep residual networks. Comput. Electron. Agric. 2020, 174, 105488. [Google Scholar] [CrossRef]

- Karar, M.E.; Alsunaydi, F.; Albusaymi, S.; Alotaibi, S. A new mobile application of agricultural pests recognition using deep learning in cloud computing system. Alex. Eng. J. 2021, 60, 4423–4432. [Google Scholar] [CrossRef]

- Malathi, V.; Gopinath, M.P. Classification of pest detection in paddy crop based on transfer learning approach. Acta Agric. Scand. B Soil Plant Sci. 2021, 71, 552–559. [Google Scholar] [CrossRef]

- Chen, C.J.; Huang, Y.Y.; Li, Y.S.; Chen, Y.C.; Chang, C.Y.; Huang, Y.M. Identification of fruit tree pests with deep learning on embedded drone to achieve accurate pesticide spraying. IEEE Access 2021, 9, 21986–21997. [Google Scholar] [CrossRef]

- Zhong, Y.; Gao, J.; Lei, Q.; Zhou, Y. A vision-based counting and recognition system for flying insects in intelligent agriculture. Sensors 2018, 18, 1489. [Google Scholar] [CrossRef]

- Nieuwenhuizen, A.; Hemming, J.; Suh, H. Detection and Classification of Insects on Stick-Traps in a Tomato Crop Using Faster R-CNN. In Proceedings of the Netherlands Conference on Computer Vision, Eindhoven, The Netherlands, 26–27 September 2018; pp. 1–4. [Google Scholar]

- Sun, Y.; Liu, X.; Yuan, M.; Ren, L.; Wang, J.; Chen, Z. Automatic in-trap pest detection using deep learning for pheromone-based Dendroctonus valens monitoring. Biosyst. Eng. 2018, 176, 140–150. [Google Scholar] [CrossRef]

- Shi, Z.; Dang, H.; Liu, Z.; Zhou, X. Detection and identification of stored-grain insects using deep learning: A more effective neural network. IEEE Access 2020, 8, 163703–163714. [Google Scholar] [CrossRef]

- Chen, C.J.; Huang, Y.Y.; Li, Y.S.; Chang, C.Y.; Huang, Y.M. An AIoT based smart agricultural system for pests detection. IEEE Access 2020, 8, 180750–180761. [Google Scholar] [CrossRef]

- Hong, S.J.; Nam, I.; Kim, S.Y.; Kim, E.; Lee, C.H.; Ahn, S.; Park, I.K.; Kim, G. Automatic pest counting from pheromone trap images using deep learning object detectors for Matsucoccus thunbergianae monitoring. Insects 2021, 12, 342. [Google Scholar] [CrossRef] [PubMed]

- Wang, R.; Liu, L.; Xie, C.; Yang, P.; Li, R.; Zhou, M. Agripest: A Large-scale domain-specific benchmark dataset for practical agricultural pest detection in the wild. Sensors 2021, 21, 1601. [Google Scholar] [CrossRef]

- Yun, W.; Kumar, J.P.; Lee, S.; Kim, D.S.; Cho, B.K. Deep learning-based system development for black pine bast scale detection. Sci. Rep. 2022, 12, 606. [Google Scholar] [CrossRef]

- Butera, L.; Ferrante, A.; Jermini, M.; Prevostini, M.; Alippi, C. Precise agriculture: Effective deep learning strategies to detect pest insects. IEEE/CAA J. Autom. Sin. 2022, 9, 246–258. [Google Scholar] [CrossRef]

- Ding, W.; Taylor, G. Automatic moth detection from trap images for pest management. Comput. Electron. Agric. 2016, 123, 17–28. [Google Scholar] [CrossRef]

- Liu, L.; Wang, R.; Xie, C.; Yang, P.; Wang, F.; Sudirman, S.; Liu, W. PestNet: An end-to-end deep learning approach for large-scale multi-class pest detection and classification. IEEE Access 2019, 7, 45301–45312. [Google Scholar] [CrossRef]

- Martins, V.A.M.; Freitas, L.C.; de Aguiar, M.S.; de Brisolara, L.B.; Ferreira, P.R. Deep learning applied to the identification of fruit fly in intelligent traps. In Proceedings of the Brazilian Symposium on Computing System Engineering, SBESC, Natal, Brazil, 19–22 November 2019; Volume 2019-November. [Google Scholar]

- Li, R.; Jia, X.; Hu, M.; Zhou, M.; Li, D.; Liu, W.; Wang, R.; Zhang, J.; Xie, C.; Liu, L.; et al. An effective data augmentation strategy for CNN-based pest localization and recognition in the field. IEEE Access 2019, 7, 160274–160283. [Google Scholar] [CrossRef]

- Li, W.; Chen, P.; Wang, B.; Xie, C. Automatic localization and count of agricultural crop pests based on an improved deep learning pipeline. Sci. Rep. 2019, 9, 7024. [Google Scholar] [CrossRef]

- Tetila, E.C.; Machado, B.B.; Menezes, G.V.; de Souza Belete, N.A.; Astolfi, G.; Pistori, H. A deep-learning approach for automatic counting of soybean insect pests. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1837–1841. [Google Scholar] [CrossRef]

- Liu, L.; Xie, C.; Wang, R.; Yang, P.; Sudirman, S.; Zhang, J.; Li, R.; Wang, F. Deep learning based automatic multiclass wild pest monitoring approach using hybrid global and local activated features. IEEE Trans. Industr. Inform. 2021, 17, 7589–7598. [Google Scholar] [CrossRef]

- Wang, R.; Jiao, L.; Xie, C.; Chen, P.; Du, J.; Li, R. S-RPN: Sampling-balanced region proposal network for small crop pest detection. Comput. Electron. Agric. 2021, 187, 106290. [Google Scholar] [CrossRef]

- Li, W.; Wang, D.; Li, M.; Gao, Y.; Wu, J.; Yang, X. Field detection of tiny pests from sticky trap images using deep learning in agricultural greenhouse. Comput. Electron. Agric. 2021, 183, 106048. [Google Scholar] [CrossRef]

- Tang, Z.; Chen, Z.; Qi, F.; Zhang, L.; Chen, S. Pest-YOLO: Deep Image Mining and Multi-Feature Fusion for Real-Time Agriculture Pest Detection. In Proceedings of the 2021 IEEE International Conference on Data Mining (ICDM), Auckland, New Zealand, 7–10 December 2021; pp. 1348–1353. [Google Scholar]

- Beltrão, F. Aplicação de Redes Neurais Artificais Profundas na Deteção de Placas Pare. Bachelor’s Thesis, Universidade Tecnológica Federal do Paraná, Curitiba, Brazil, 2019. [Google Scholar]

- Rodrigues, D.A. Deep Learning e Redes Neurais Convolucionais: Reconhecimento Automático de Caracteres em Placas de Licenciamento Automotivo. Bachelor’s Thesis, Universidade Tecnológica Federal do Paraná, Curitiba, Brazil, 2018. [Google Scholar]

- Zhang, Z.-Q. Animal biodiversity: An introduction to higher-level classification and taxonomic richness. Zootaxa 2011, 3148, 7–12. [Google Scholar] [CrossRef]

- Valan, M.; Makonyi, K.; Maki, A.; Vondráček, D.; Ronquist, F. Automated taxonomic identification of insects with expert-level accuracy using effective feature transfer from convolutional networks. Syst. Biol. 2019, 68, 876–895. [Google Scholar] [CrossRef]

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part V 13; Springer: Berlin/Heidelberg, Germany, 2014. [Google Scholar]

- Pan, S.J.; Yang, Q. A Survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS-improving object detection with one line of code. arXiv 2017, arXiv:1704.04503. [Google Scholar] [CrossRef]

- Stork, N.E. Biodiversity: World of insects. Nature 2007, 448, 657–658. [Google Scholar] [CrossRef]

- Wosner, O.; Farjon, G.; Bar-Hillel, A. Object detection in agricultural contexts: A multiple resolution benchmark and comparison to human. Comput. Electron. Agric. 2021, 189, 106404. [Google Scholar] [CrossRef]

- Ouali, Y.; Hudelot, C.; Tami, M. An overview of deep semi-supervised learning. arXiv 2020, arXiv:2006.05278. [Google Scholar]

- Sohn, K.; Zhang, Z.; Li, C.-L.; Zhang, H.; Lee, C.-Y.; Pfister, T. A simple semi-supervised learning framework for object detection. arXiv 2020, arXiv:2005.04757. [Google Scholar]

- Amarathunga, D.C.; Grundy, J.; Parry, H.; Dorin, A. Methods of insect image capture and classification: A systematic literature review. Smart Agric. Technol. 2021, 1, 100023. [Google Scholar] [CrossRef]

- Ganganwar, V. An overview of classification algorithms for imbalanced datasets. Int. J. Emerg. Technol. Adv. Eng. 2012, 2, 42–47. [Google Scholar]

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48. [Google Scholar] [CrossRef]

- Cui, Y.; Jia, M.; Lin, T.-Y.; Song, Y.; Belongie, S. Class-balanced loss based on effective number of samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019. [Google Scholar]

- Tong, K.; Wu, Y.; Zhou, F. Recent advances in small object detection based on deep learning: A review. Image Vis. Comput. 2020, 97, 103910. [Google Scholar] [CrossRef]

- Zhu, Y.; Zhao, C.; Wang, J.; Zhao, X.; Wu, Y.; Lu, H. CoupleNet: Coupling global structure with local parts for object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2017; Volume 2017-October, pp. 4146–4154. [Google Scholar]

- Li, Z.; Chen, Y.; Yu, G.; Deng, Y. R-FCN++: Towards accurate region-based fully convolutional networks for object detection. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, AAAI, New Orleans, LA, USA, 2–7 February 2018; pp. 7073–7080. [Google Scholar]

- Song, Z.; Chen, Q.; Huang, Z.; Hua, Y.; Yan, S. Contextualizing object detection and classification. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; IEEE Computer Society: Washington, DC, USA, 2011; pp. 1585–1592. [Google Scholar]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

| Paper | Year | Task | Method | Disadvantages |
|---|---|---|---|---|
| [27] | 2008 | Counting whiteflies | Low pass filter, binarisation, and other image processing operations | Methods developed for the resolution of only the proposed task. May be adaptable to other scenarios |
| [28] | 2015 | Detection of whiteflies, aphids, and thrips | Identification with a watershed algorithm to segment insects from the background | |
| [29] | 2015 | Counting whiteflies | A k-means grouping is applied in each image converted into a colour space | |
| [30] | 2016 | Classification of 24 insect species | Multiple task sparse representation and multiple kernel learning techniques | |
| [21] | 2017 | Classification of Thysanoptera | Support vector machine and other image processing operations | |
| [31] | 2017 | Classification of pests in pomegranate | Support vector machine and other image processing operations | |

| Paper | Year | Number of Classes | Dataset Size | Methods | Results (Accuracy) |
|---|---|---|---|---|---|
| [43] | 2017 | 10 | 550 | ResNet101 | 98.7% |
| [44] | 2019 | 40 | 4263 | CNN proposed by authors | 96.8% |
| [44] | 2019 | 24 | 1397 | CNN proposed by authors | 97.5% |
| [44] | 2019 | 40 | 4500 | CNN proposed by authors | 95.9% |
| [45] | 2020 | 10 | 5629 | GoogLeNet (fine-tuning) | 94.6% |
| [46] | 2020 | 2 | 5000 | ResNet50 (fine-tuning) | 93.8% |
| [47] | 2020 | 10 | 859 | DenseNet169 (transfer learning) | 88.8% |
| [48] | 2020 | 8 | 1426 | VGG16 (fine-tuning) | 97.1% |
| [49] | 2020 | 20 | 4909 | CPAFNet: created by authors | 92.6% |
| [50] | 2020 | 15 | 100 | ResNet34 | 97.8% |
| [26] | 2021 | 24 | 1387 | CNN proposed by authors | 90.0% |
| [51] | 2021 | 5 | 500 | Faster R-CNN | 99.0% |
| [52] | 2021 | 10 | 3549 | ResNet50 (fine-tuning) | 95.0% |
| [53] | 2021 | 1 | 700 | YOLOv3 | 95.3% |

| Paper | Year | Image Scenario | Number of Classes | Dataset Size | Method | Results (mAP) | Inference Time (s) |
|---|---|---|---|---|---|---|---|
| [54] | 2018 | In traps | 7 | 10,000 | YOLO | 92.5% | 0.167 |
| [55] | 2018 | In traps | 3 | 1350 | Faster R-CNN | 87.4% | n.a. |
| [56] | 2018 | In traps | 6 | 2183 | RetinaNet | 74.6% | 0.448 |
| [32] | 2019 | On plants | 12 | 3022 | SSD | 77.1% | 0.100 |
| [24] | 2020 | In traps | 24 | 25,378 | YOLOv3 | 58.8% | n.a. |
| [57] | 2020 | In traps | 8 | 1716 | R-FCN | 83.4% | 0.124 |
| [10] | 2020 | In traps | 14 | 1000 | Faster R-CNN | 88.8% | 0.032 |
| [58] | 2020 | On plants | 1 | 687 | YOLOv3 | 90.0% | n.a. |
| [25] | 2020 | On plants | 1 | 4600 | Faster R-CNN | 94.6% | 0.360 |
| [59] | 2021 | In traps | 1 | 50 | Faster R-CNN | 85.6% | 0.078 |
| [60] | 2021 | On plants | 14 | 49,700 | Cascade R-CNN | 70.8% | n.a. |
| [61] | 2022 | In traps | 1 | 4134 | YOLOv5 | 94.7% | n.a. |
| [62] | 2022 | On plants | 3 | 4541 | Faster R-CNN | 92.7% | 0.016 |

| Paper | Year | Image Scenario | Number of Classes | Dataset Size | Method | Results (mAP) | Inference Time (s) |
|---|---|---|---|---|---|---|---|
| [63] | 2016 | In traps | 1 | 177 | CNN with sliding window | 93.1% | n.a. |
| [64] | 2019 | In traps | 16 | 88,670 | PestNet: created by authors | 75.5% | 0.441 |
| [65] | 2019 | In traps | 3 | 662 | Segmentation + CNN | 92.4% | 0.145 |
| [66] | 2019 | On plants | 4 | 4400 | Multi-scale CNN + RPN | 81.4% | n.a. |
| [67] | 2019 | On plants | 1 | 85 | CNN + RPN | 88.5% | n.a. |
| [68] | 2020 | On plants | 1 | 2300 | Segmentation + CNN | 92.0% | n.a. |
| [69] | 2021 | In traps | 16 | 88,600 | Modified Faster R-CNN | 83.6% | n.a. |
| [70] | 2021 | In traps | 21 | 24,412 | S-RPN | 78.7% | 0.045 |
| [6] | 2021 | In traps | 4 | 5173 | YOLOv3 + CNN | 91.0% | 2.380 |
| [71] | 2021 | In traps | 2 | 1400 | Modified Faster R-CNN | 95.2% | n.a. |
| [72] | 2022 | In traps | 24 | 28,000 | Modified YOLOv4 | 71.6% | 0.013 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Teixeira, A.C.; Ribeiro, J.; Morais, R.; Sousa, J.J.; Cunha, A. A Systematic Review on Automatic Insect Detection Using Deep Learning. Agriculture 2023, 13, 713. https://doi.org/10.3390/agriculture13030713
