Robot Path Planning Navigation for Dense Planting Red Jujube Orchards Based on the Joint Improved A* and DWA Algorithms under Laser SLAM

Abstract

1. Introduction

2. Materials and Methods

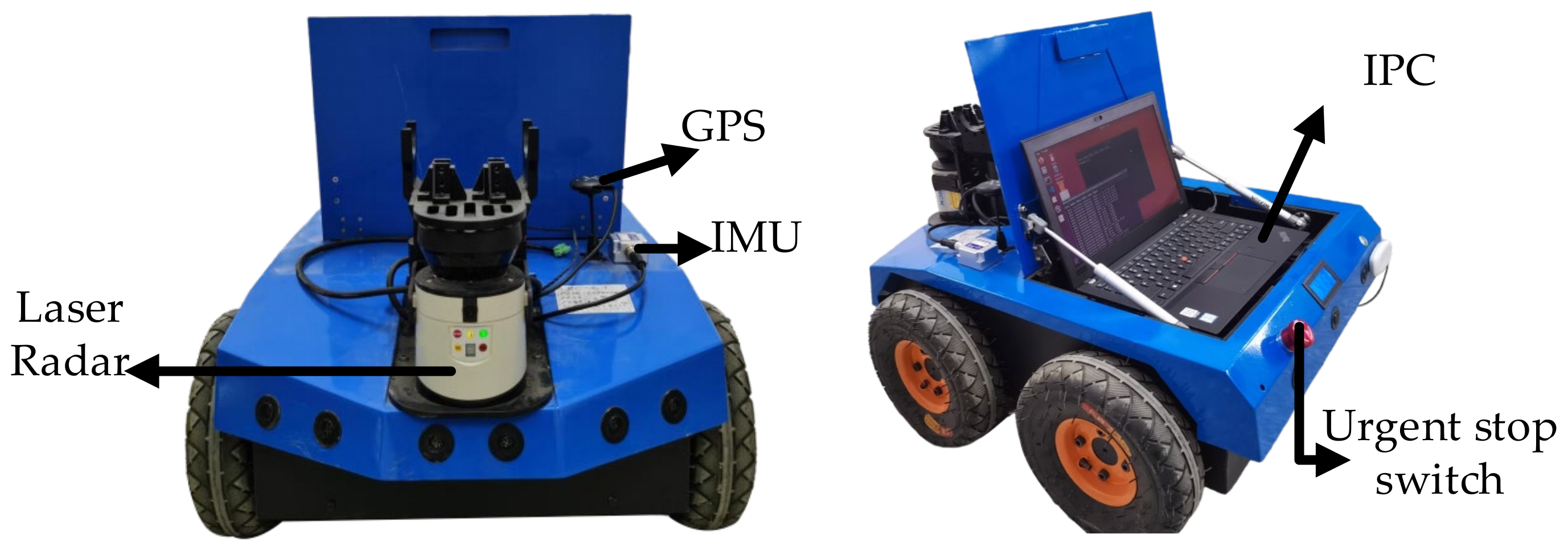

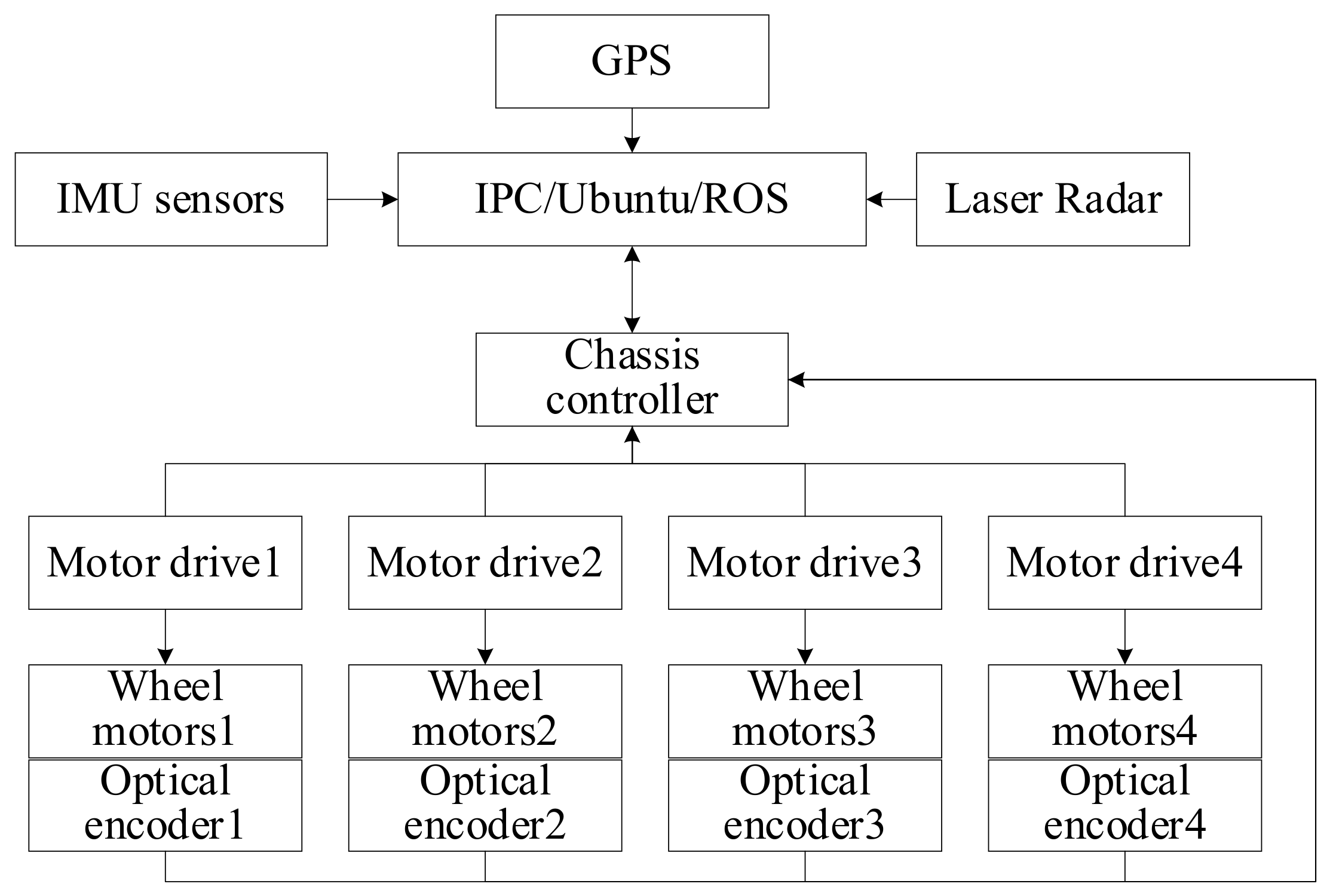

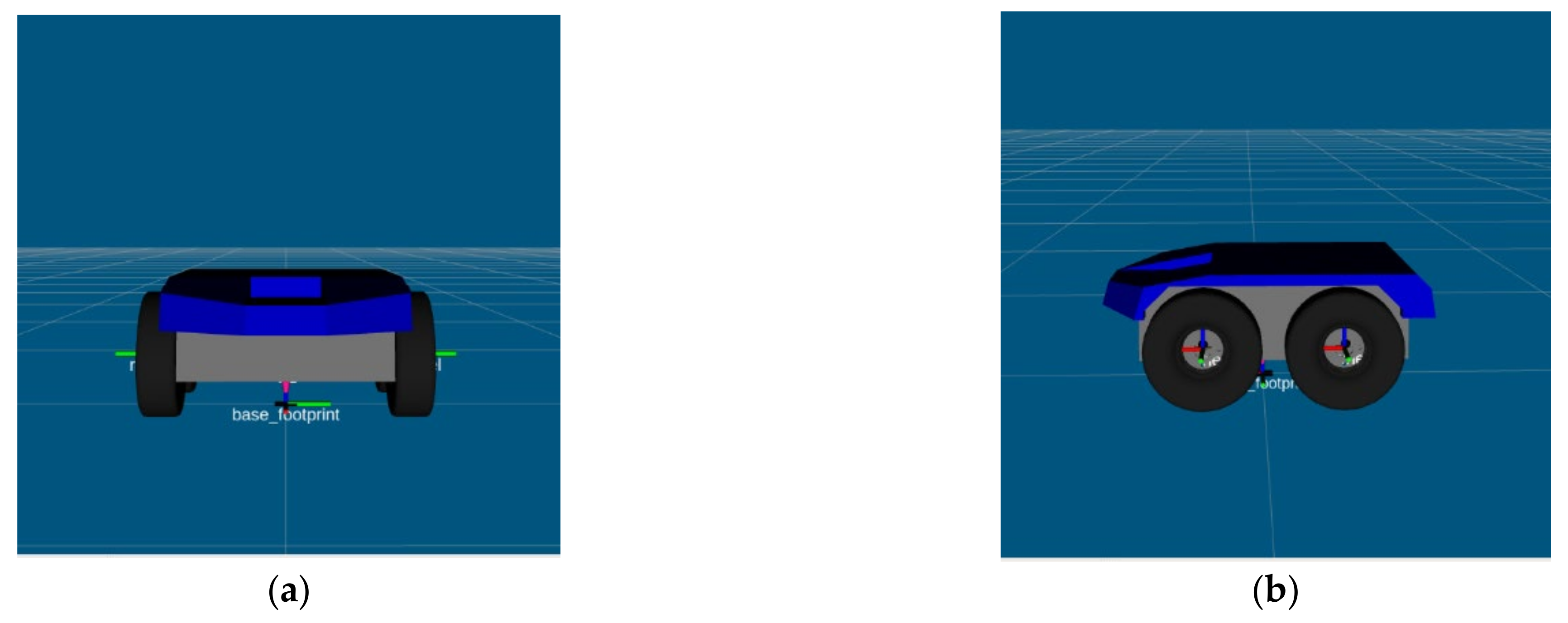

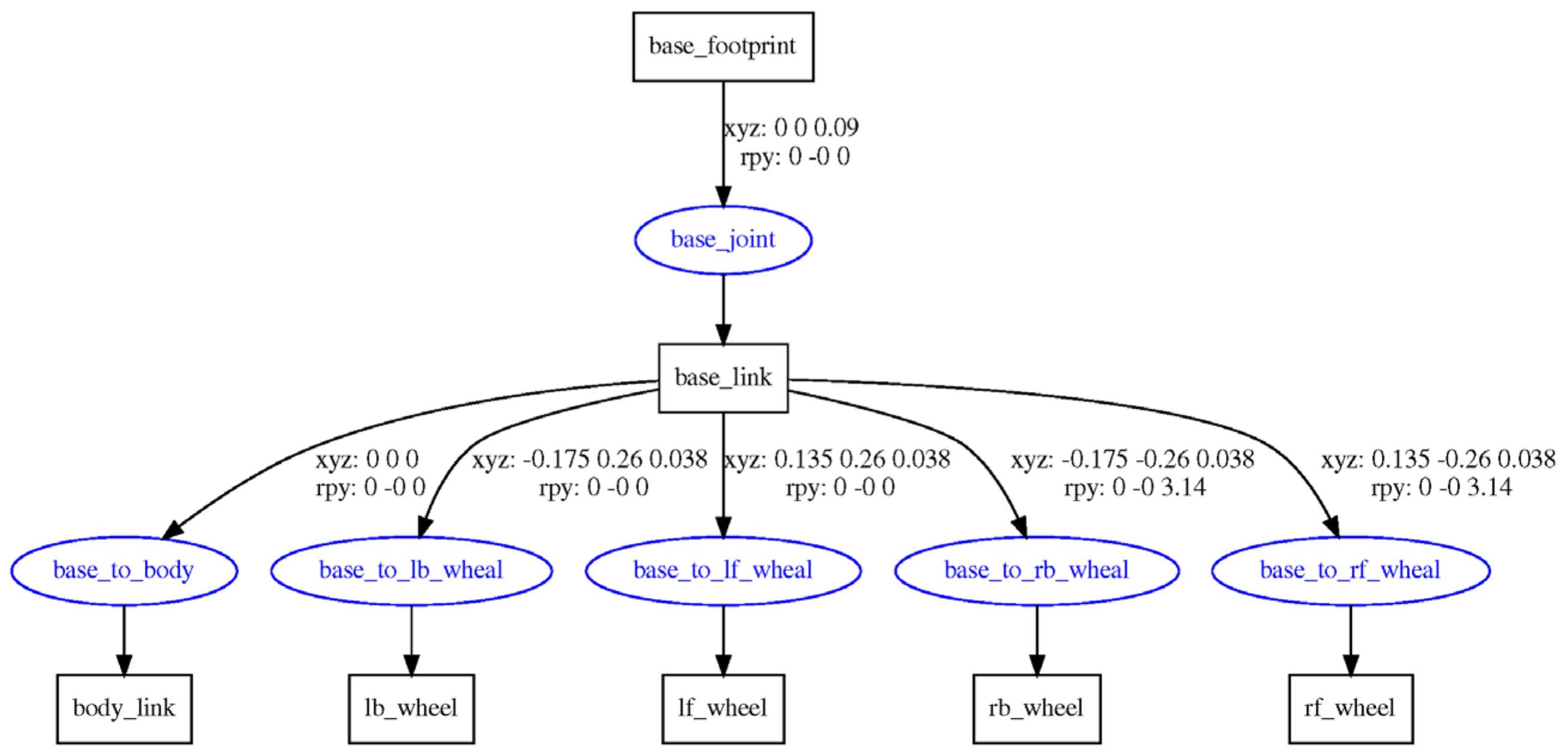

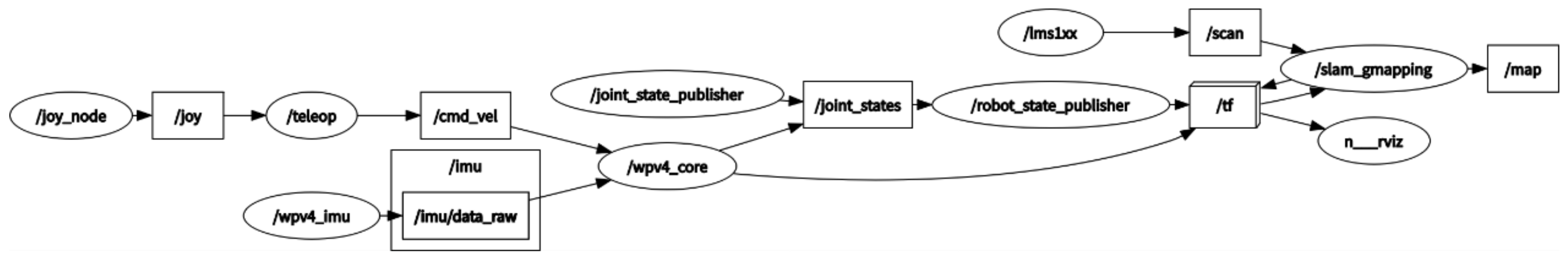

2.1. Robot Platform

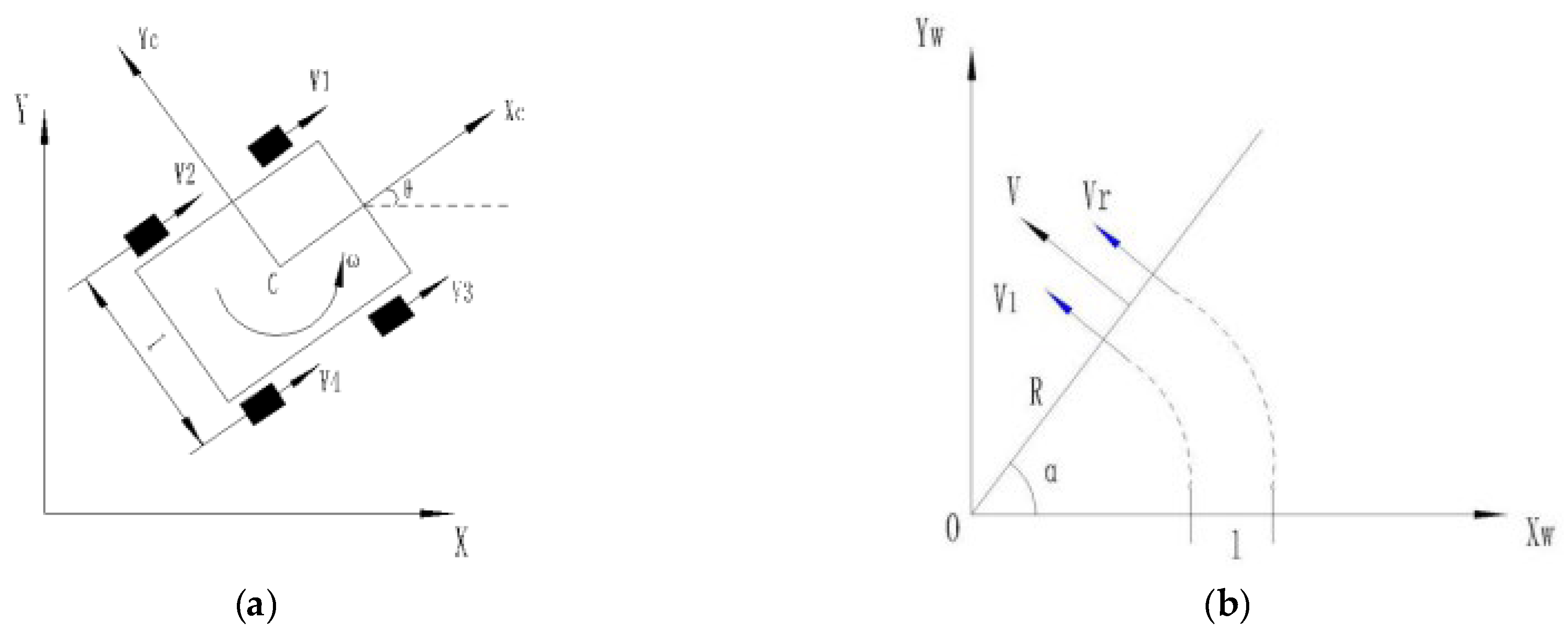

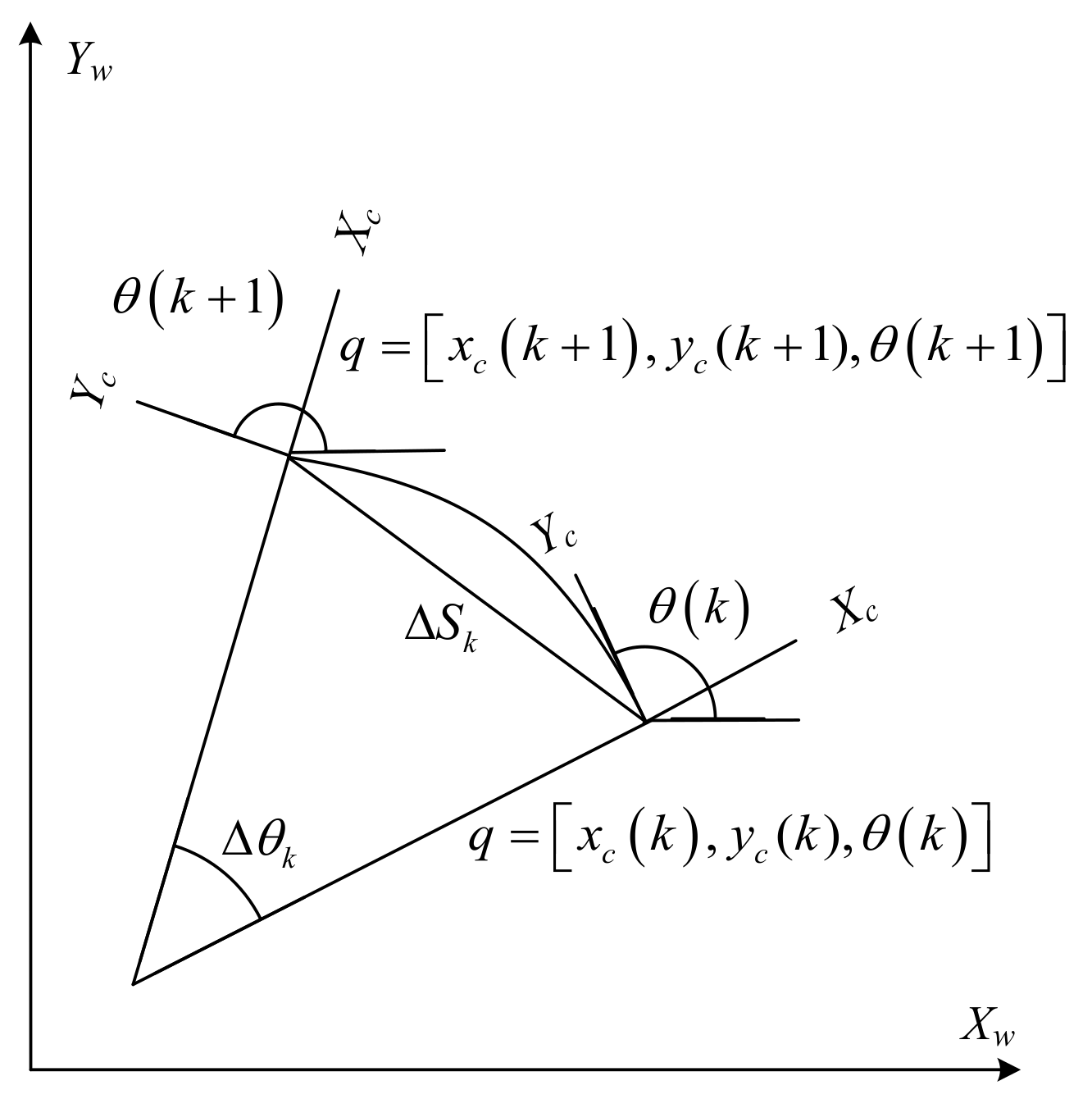

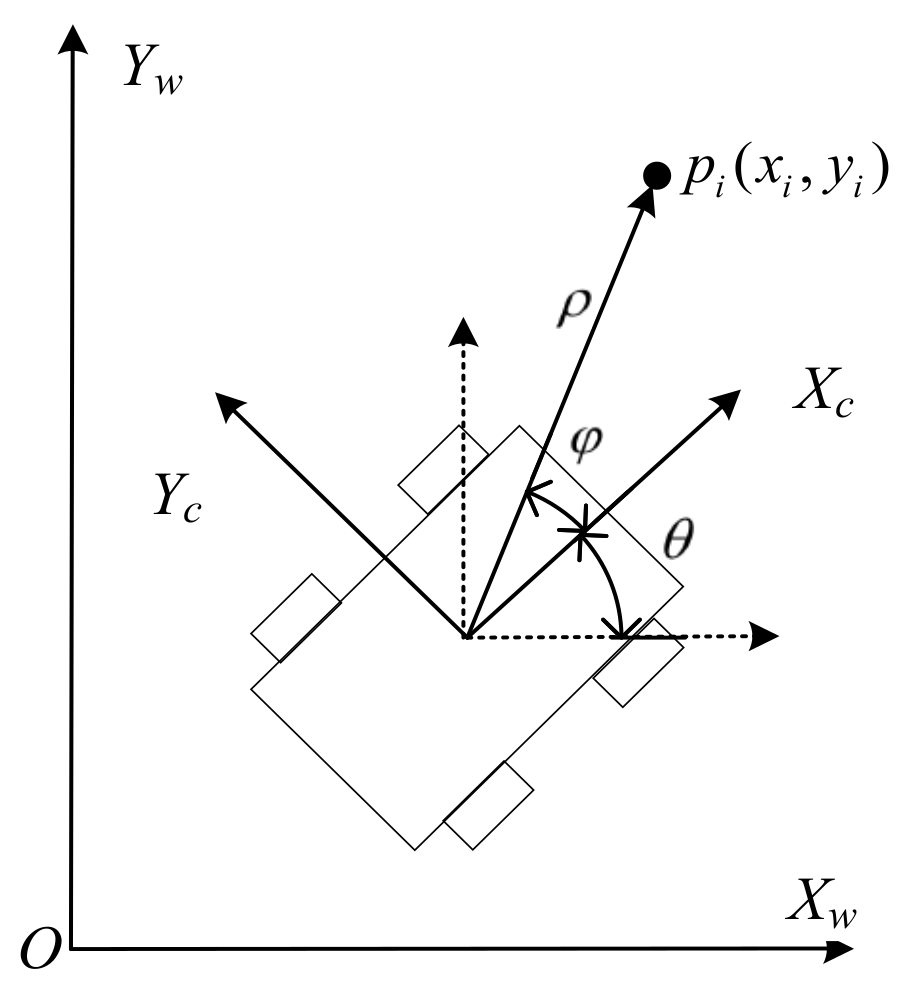

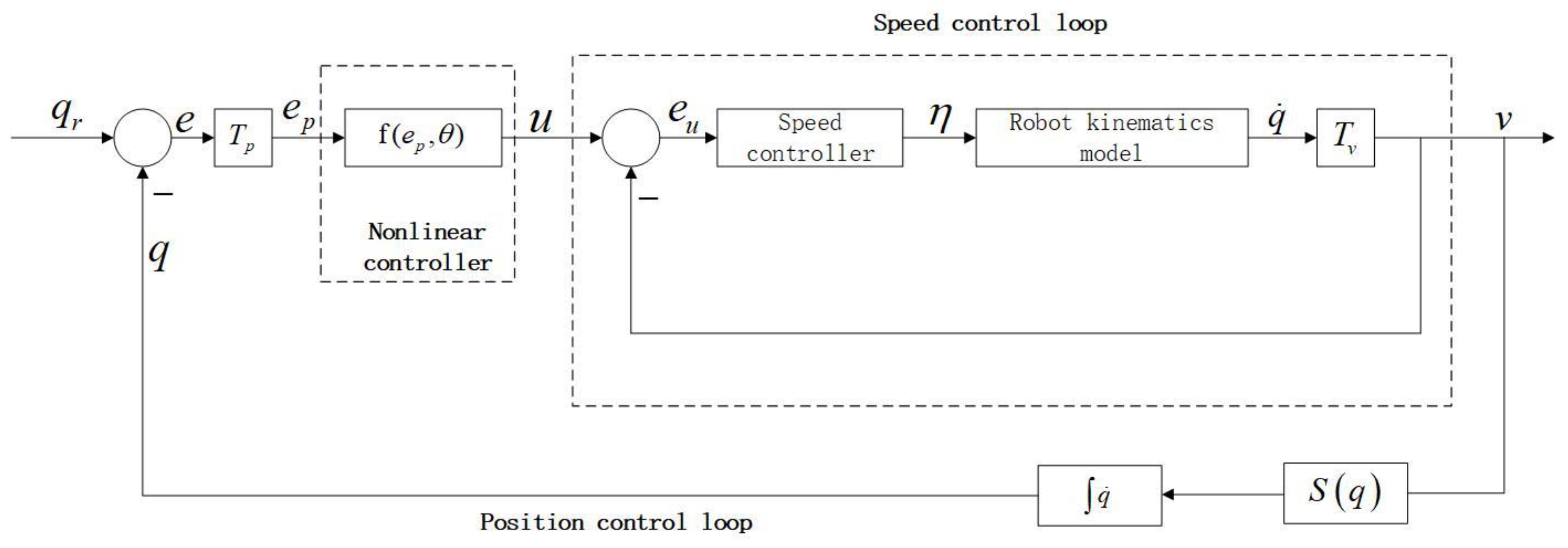

2.2. Kinematic Modeling of the Orchard Mobile Robot

2.3. Robotic Physical Simulation Model of a Densely Planted Jujube Orchard

2.4. SLAM and AMCL Localisation Algorithms

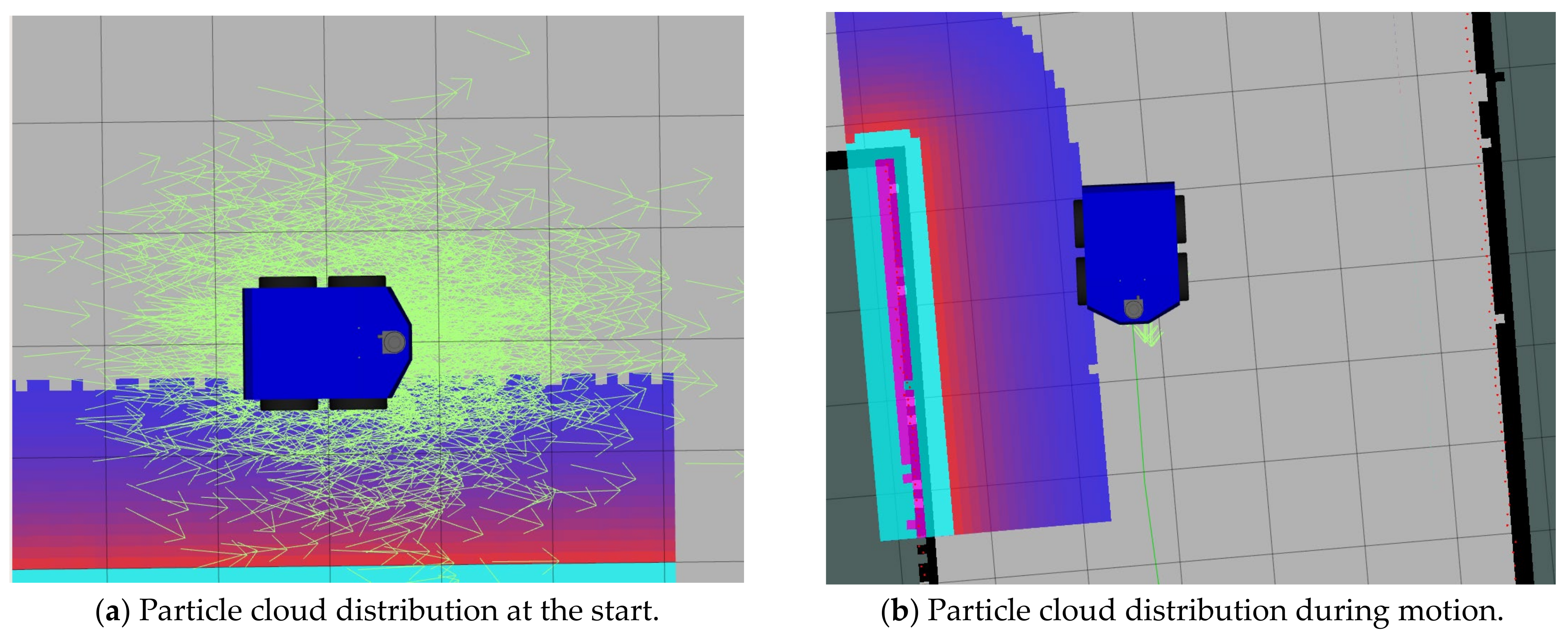

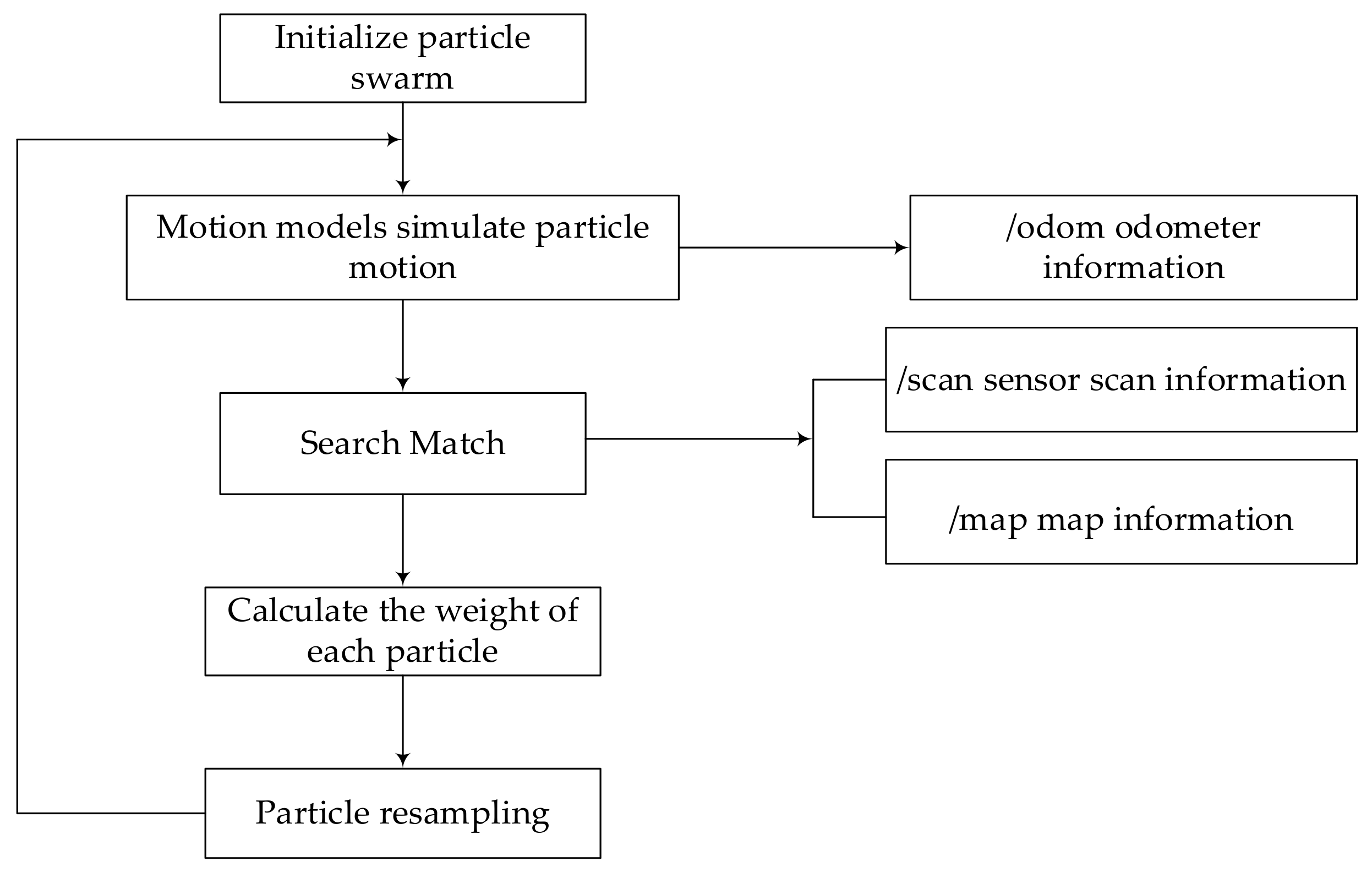

- Prediction phase: When the robot starts moving, a set number of particles (each representing one pose sample) is initialised over the map of the unknown environment and spread evenly across it. The weighted sum of these particles approximates the posterior probability density; the pose of each particle is then computed from the robot's current LiDAR data, and the particles are stored.

- Calibration (correction) phase: As observations arrive in succession, an importance weight is assigned to each particle. This weight is the probability of obtaining the current observation if the robot were at the pose predicted by that particle. All particles are evaluated in this way, so the more likely a particle is to produce the observation, the higher its weight.

- Decision phase: As the robot continues to advance, particles consistent with the real situation receive increasingly high weights: the LiDAR scan is compared with the observation predicted by each particle, and particles whose predictions differ significantly from the actual scan are discarded.

- Resampling stage: The particles are redrawn in proportion to their weights. This step is important because only a limited number of particles is available to approximate a continuous distribution; resampling concentrates them in high-probability regions instead of leaving many particles with negligible weight. In the next filtering round, the resampled particle set is fed into the state transition equation to obtain new predicted particles.

- Filtering stage: The system keeps iterating this cycle with the resampled particles until most of them cluster in the region closest to the true pose; the robot's location in the unknown environment is then obtained from the density of the particle cloud.

- Map estimation: For each sampled particle, the corresponding map estimate is computed from that particle's sampled trajectory and the observations.
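The phases above can be illustrated with a minimal one-dimensional Monte Carlo localisation loop in Python. This is a sketch under stated assumptions, not the AMCL or SLAM implementation used on the robot: the single range sensor facing a wall at `wall_x`, the Gaussian motion and sensor models, the noise values, the function names and the use of systematic resampling are all illustrative choices, and the map-estimation step is omitted.

```python
import math
import random


def predict(particles, control, motion_noise, rng):
    """Prediction: push every particle through a noisy motion (state transition) model."""
    return [p + control + rng.gauss(0.0, motion_noise) for p in particles]


def weigh(particles, measurement, wall_x, sensor_noise):
    """Correction: importance weight = likelihood of the range reading at each particle's pose."""
    weights = []
    for p in particles:
        expected = wall_x - p  # range this particle predicts to the wall ahead
        err = measurement - expected
        weights.append(math.exp(-0.5 * (err / sensor_noise) ** 2))
    total = sum(weights)
    if total == 0.0:  # every particle disagrees with the scan: fall back to uniform weights
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]


def systematic_resample(particles, weights, rng):
    """Resampling: draw N particles in proportion to weight using one random offset."""
    n = len(particles)
    step = 1.0 / n
    u = rng.uniform(0.0, step)
    i, cumulative = 0, weights[0]
    resampled = []
    for _ in range(n):
        # advance to the particle whose cumulative-weight interval contains u
        while u >= cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
        u += step
    return resampled


def estimate(particles):
    """Filtering output: here the pose estimate is simply the mean of the particle cloud."""
    return sum(particles) / len(particles)


def run_demo(seed=0, n=500, steps=15):
    """Hypothetical scenario: robot drives 0.3 m per step towards a wall at x = 10 m."""
    rng = random.Random(seed)
    wall_x, true_x, move = 10.0, 1.0, 0.3
    particles = [rng.uniform(0.0, wall_x) for _ in range(n)]  # pose unknown: uniform spread
    for _ in range(steps):
        true_x += move
        particles = predict(particles, move, 0.05, rng)
        z = wall_x - true_x + rng.gauss(0.0, 0.1)  # simulated noisy range reading
        w = weigh(particles, z, wall_x, 0.1)
        particles = systematic_resample(particles, w, rng)
    return estimate(particles), true_x


if __name__ == "__main__":
    est, truth = run_demo()
    print(f"estimate={est:.2f} m, true={truth:.2f} m")
```

Systematic resampling is used here because it needs only one random number per round and keeps the number of copies of each particle close to N times its weight; other resampling schemes would fill the same slot in the loop.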

2.5. Global and Real-Time Local Path Planning

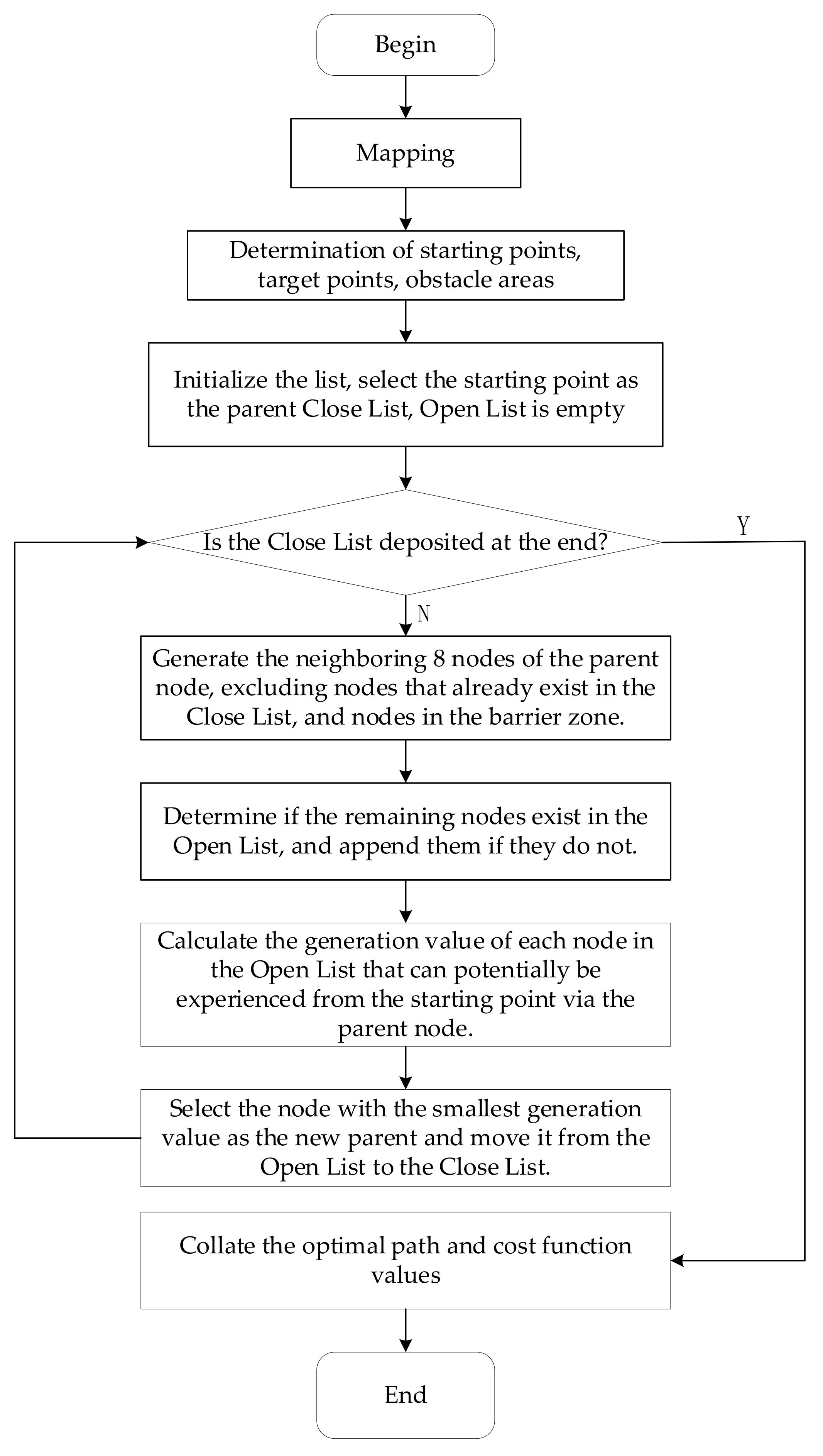

2.5.1. A* Algorithm

2.5.2. DWA Algorithm

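- (1) Velocity limits. The set of linear and angular velocities permitted by the robot's minimum and maximum velocities is expressed in Equation (16):

  $$V_m = \left\{ (v,\omega) \mid v \in [v_{\min}, v_{\max}],\ \omega \in [\omega_{\min}, \omega_{\max}] \right\} \tag{16}$$

  where $v_{\max}$ and $v_{\min}$ denote the maximum and minimum linear velocities, and $\omega_{\min}$ and $\omega_{\max}$ denote the minimum and maximum angular velocities, respectively.

- (2) Acceleration and deceleration limits. Because the robot's motion is governed by its motor control, its acceleration and deceleration are bounded, so only velocities reachable within one control period $\Delta t$ are admissible. This set is expressed in Equation (17):

  $$V_d = \left\{ (v,\omega) \mid v \in [v_c - \dot{v}_b \Delta t,\ v_c + \dot{v}_a \Delta t],\ \omega \in [\omega_c - \dot{\omega}_b \Delta t,\ \omega_c + \dot{\omega}_a \Delta t] \right\} \tag{17}$$

  where $v_c$ and $\omega_c$ are the robot's current linear and angular velocities, $\dot{v}_a$ and $\dot{\omega}_a$ are the maximum linear and angular accelerations, and $\dot{v}_b$ and $\dot{\omega}_b$ are the maximum linear and angular decelerations, respectively.

- (3) Braking distance constraint. To ensure reliable and safe operation, the robot must be able to stop before it collides with an obstacle. The set of velocities that satisfy this under the maximum deceleration is expressed in Equation (18):

  $$V_a = \left\{ (v,\omega) \mid v \le \sqrt{2\,\mathrm{dist}(v,\omega)\,\dot{v}_b},\ \omega \le \sqrt{2\,\mathrm{dist}(v,\omega)\,\dot{\omega}_b} \right\} \tag{18}$$

  where $\mathrm{dist}(v,\omega)$ is the shortest distance between the trajectory generated by $(v,\omega)$ and an obstacle.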
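A minimal sketch of how the three constraints combine into the dynamic window is given below, assuming (as in the standard DWA formulation) that the search space is the intersection of the velocity-limit set of Equation (16), the acceleration-limited set of Equation (17) and the braking set of Equation (18). The braking test follows from uniform deceleration: a robot moving at speed $v$ with deceleration $a$ stops within $v^2/(2a)$, so requiring this to be no greater than dist gives $v \le \sqrt{2\,\mathrm{dist}\,a}$. The `Limits` class, the sampling resolution and the `dist_fn` callback (which in practice would come from forward-simulating each trajectory against the cost map) are hypothetical, and trajectory scoring with the evaluation function is not included.

```python
import math
from dataclasses import dataclass


@dataclass
class Limits:
    """Hypothetical robot limits; decelerations are stored as positive magnitudes."""
    v_min: float
    v_max: float
    w_min: float
    w_max: float
    acc_v: float  # maximum linear acceleration
    dec_v: float  # maximum linear deceleration
    acc_w: float  # maximum angular acceleration
    dec_w: float  # maximum angular deceleration


def dynamic_window(v_c, w_c, lim, dt):
    """Intersect the velocity limits (Eq. 16) with the velocities reachable in dt (Eq. 17)."""
    v_lo = max(lim.v_min, v_c - lim.dec_v * dt)
    v_hi = min(lim.v_max, v_c + lim.acc_v * dt)
    w_lo = max(lim.w_min, w_c - lim.dec_w * dt)
    w_hi = min(lim.w_max, w_c + lim.acc_w * dt)
    return v_lo, v_hi, w_lo, w_hi


def can_brake(v, w, dist, lim):
    """Braking constraint (Eq. 18). |w| is used so that turns in both directions are
    checked, which is an assumption of this sketch."""
    dist = max(dist, 0.0)  # guard against negative clearances from the callback
    return v <= math.sqrt(2.0 * dist * lim.dec_v) and abs(w) <= math.sqrt(2.0 * dist * lim.dec_w)


def admissible_velocities(v_c, w_c, lim, dt, dist_fn, n_v=5, n_w=5):
    """Sample the window on an n_v x n_w grid and keep pairs that can still brake in time."""
    v_lo, v_hi, w_lo, w_hi = dynamic_window(v_c, w_c, lim, dt)
    pairs = []
    for i in range(n_v):
        v = v_lo + (v_hi - v_lo) * i / (n_v - 1)
        for j in range(n_w):
            w = w_lo + (w_hi - w_lo) * j / (n_w - 1)
            if can_brake(v, w, dist_fn(v, w), lim):
                pairs.append((v, w))
    return pairs


if __name__ == "__main__":
    lim = Limits(0.0, 1.0, -1.0, 1.0, 0.5, 0.5, 1.0, 1.0)
    # Hypothetical clearance: a constant 2 m to the nearest obstacle on every trajectory.
    print(admissible_velocities(0.2, 0.0, lim, 0.1, lambda v, w: 2.0))
```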

2.6. Algorithm Improvement and Fusion

2.6.1. Improved A* Algorithm

2.6.2. Introduction of Environmental Information into Evaluation Functions

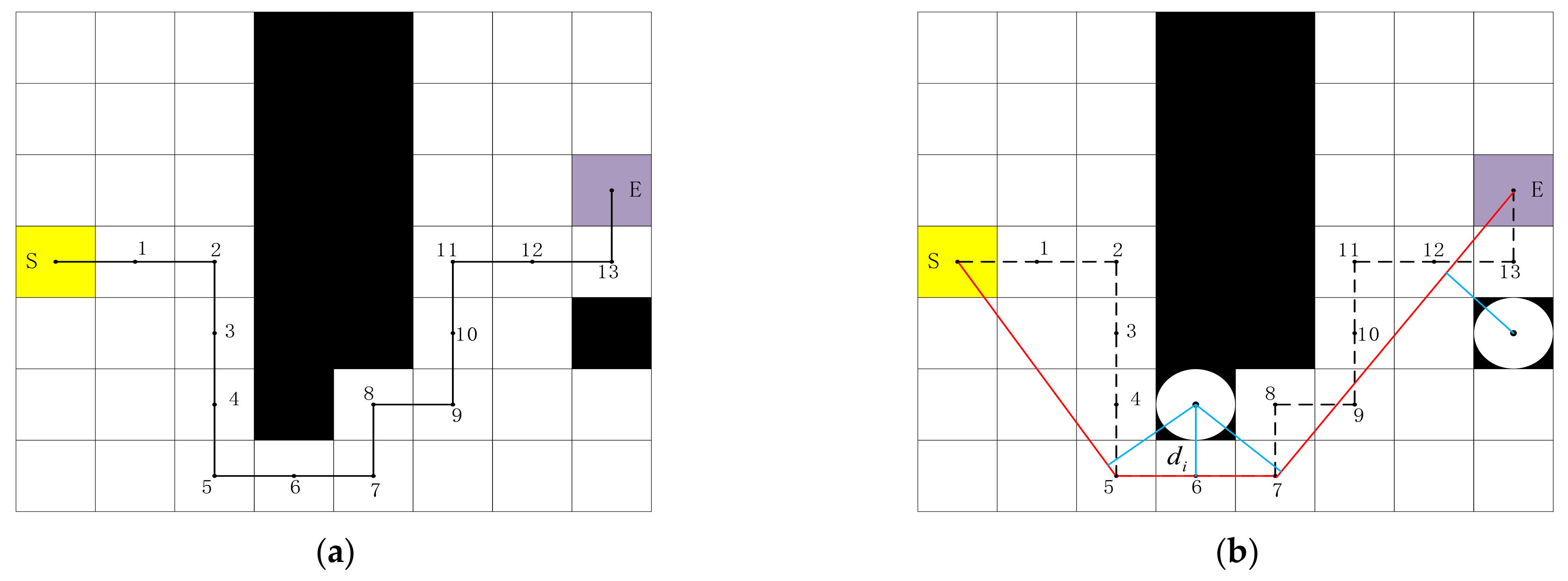

2.6.3. Route Smoothing Optimization

- (1) Traverse all nodes, delete the redundant intermediate nodes on each straight path segment, and keep the start point, the inflection points and the end point. After the intermediate nodes are deleted, the remaining nodes are S, 2, 5, 7, 8, 9, 11, 13, and E.

- (2) Starting from the start point, connect each retained node to the subsequent nodes to form candidate straight paths, and compare the distance $d$ between each candidate path and the obstacle grid cells with the safety distance $D$. If $d < D$, the candidate path is deleted; if $d \ge D$, it is kept and the inflection points between its two end nodes are deleted. After the unnecessary inflection points are removed, nodes S, 5, 7, and E remain.

- (3) Extract the remaining nodes, output the optimized path, and terminate the algorithm.
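Steps (1) to (3) can be read as a two-pass filter over the A* node list. The Python sketch below implements one such reading, assuming an 8-connected grid of `(row, col)` cells, obstacles given as occupied cell centres, and a greedy rule that jumps from each kept node to the farthest later node whose straight connection stays at least D from every obstacle; the function names and this greedy ordering are illustrative assumptions rather than the authors' code.

```python
import math


def remove_collinear(path):
    """Step (1): keep the start, the end and every inflection point of a grid path.
    Assumes consecutive cells are 8-connected neighbours, so equal steps mean a straight run."""
    if len(path) <= 2:
        return list(path)
    kept = [path[0]]
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        step_in = (cur[0] - prev[0], cur[1] - prev[1])
        step_out = (nxt[0] - cur[0], nxt[1] - cur[1])
        if step_in != step_out:  # direction changes here: cur is an inflection point
            kept.append(cur)
    kept.append(path[-1])
    return kept


def point_segment_distance(p, a, b):
    """Shortest Euclidean distance from point p to segment ab."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def segment_is_safe(a, b, obstacles, safe_dist):
    """A shortcut is kept only if every obstacle cell centre is at least D away (d >= D)."""
    return all(point_segment_distance(o, a, b) >= safe_dist for o in obstacles)


def prune_turns(nodes, obstacles, safe_dist):
    """Step (2): from each kept node, jump to the farthest later node with a safe straight link."""
    result = [nodes[0]]
    i = 0
    while i < len(nodes) - 1:
        j = len(nodes) - 1
        while j > i + 1 and not segment_is_safe(nodes[i], nodes[j], obstacles, safe_dist):
            j -= 1  # shortcut passes too close to an obstacle (d < D): try a nearer node
        result.append(nodes[j])  # j == i + 1 keeps the original A* segment
        i = j
    return result


def smooth_path(path, obstacles, safe_dist):
    """Step (3): return the remaining nodes as the optimized path."""
    return prune_turns(remove_collinear(path), obstacles, safe_dist)


if __name__ == "__main__":
    l_path = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]
    print(smooth_path(l_path, obstacles=[(1, 1)], safe_dist=0.5))
```

On the L-shaped example the obstacle at (1, 1) blocks the diagonal shortcut, so the corner (0, 2) is kept; with no obstacles the path collapses to a single straight segment from (0, 0) to (2, 2).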

2.6.4. Evaluation Function Optimization of DWA Algorithm

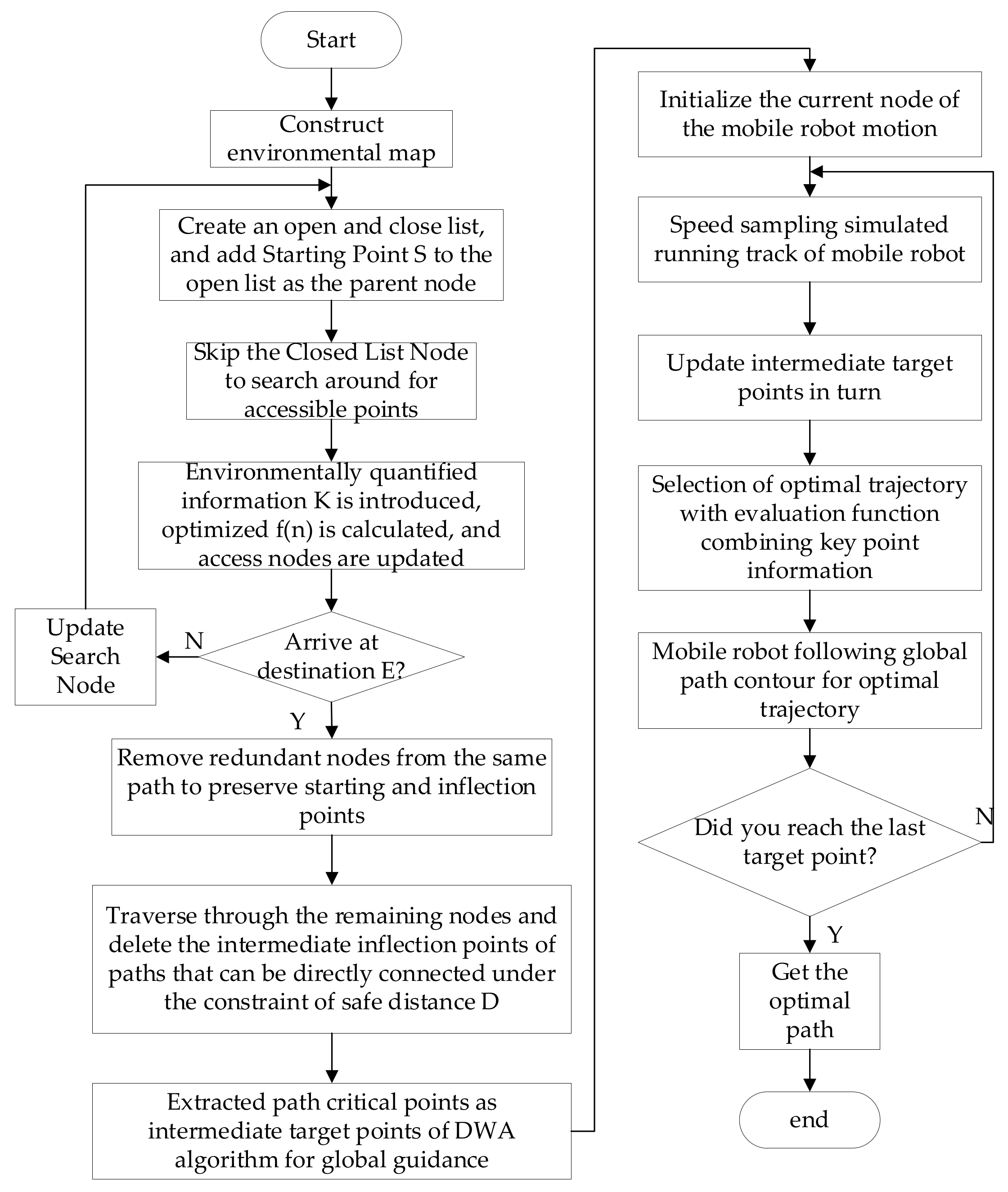

2.6.5. Hybrid Algorithm

2.7. Experiment

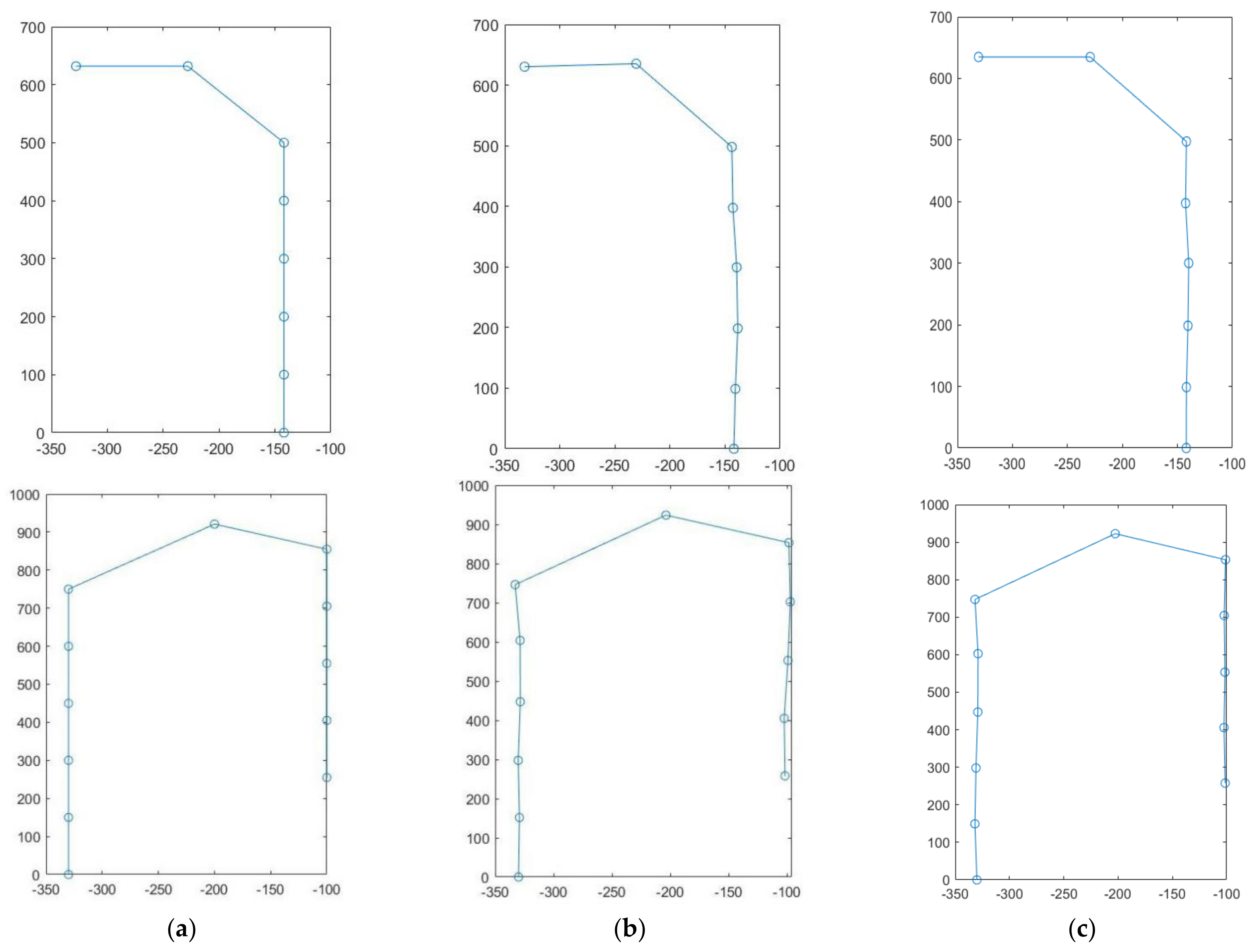

2.7.1. Linear Path Positioning Navigation

2.7.2. Path-Specific Navigation

3. Results

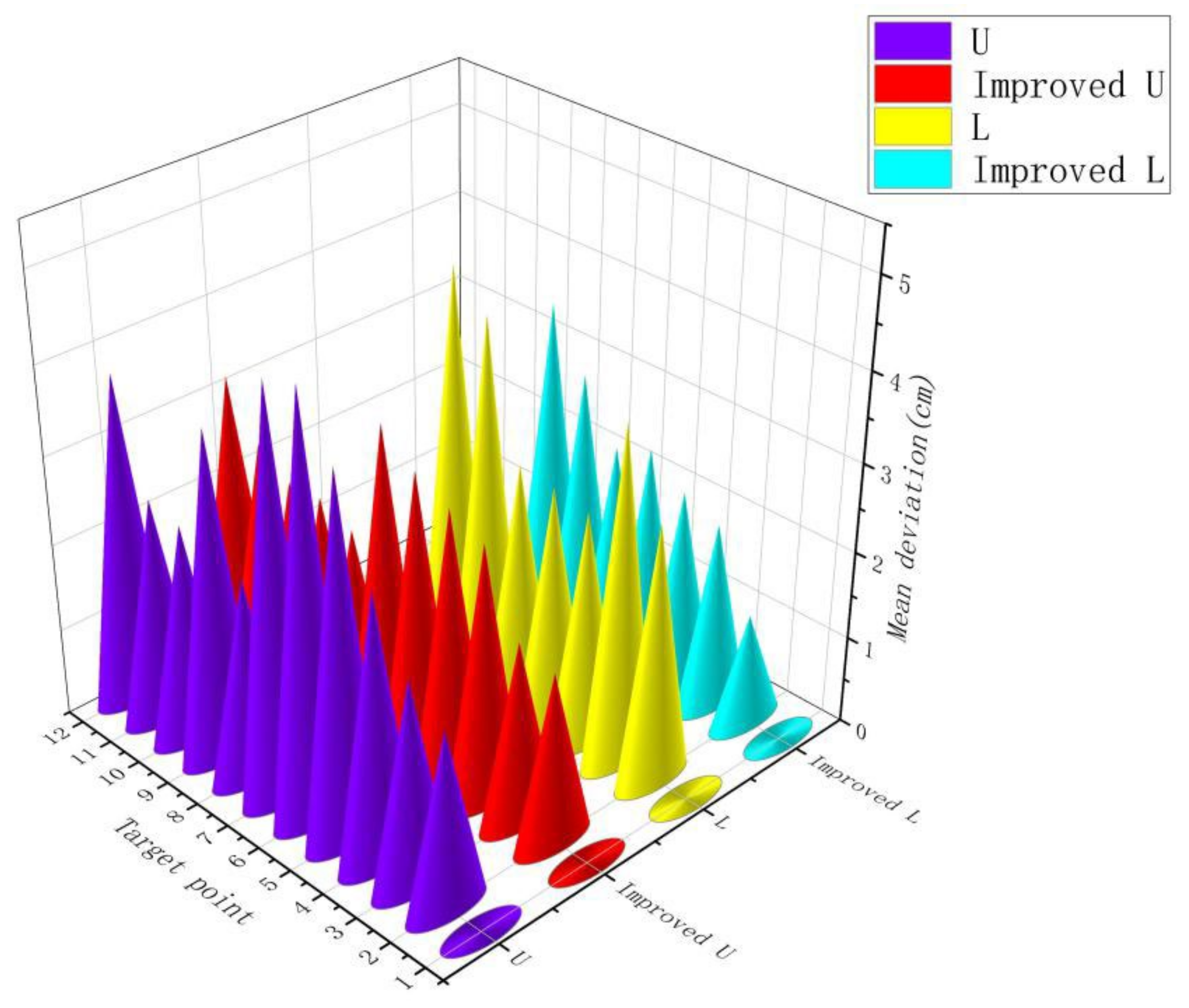

3.1. Experimental Results of Linear Path Positioning and Navigation

3.2. Experimental Results of Specific Path Positioning and Navigation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Number | Target Points | Actual Coordinates | Deviation |
|---|---|---|---|
| 1 | (−330.00, 0.00) | (−330.00, 0.00) | 0.00 |
| 2 | (−330.00, 100.00) | (−329.51, 101.60) | 1.67 |
| 3 | (−330.00, 200.00) | (−328.65, 201.51) | 2.03 |
| 4 | (−330.00, 300.00) | (−328.22, 298.64) | 2.24 |
| 5 | (−330.00, 400.00) | (−328.35, 397.95) | 2.63 |
| 6 | (−330.00, 500.00) | (−328.65, 497.67) | 2.69 |
| 7 | (−330.00, 600.00) | (−330.49, 602.86) | 2.90 |
| 8 | (−330.00, 700.00) | (−328.21, 702.37) | 2.97 |
| 9 | (−330.00, 800.00) | (−328.67, 802.79) | 3.09 |
| 10 | (−330.00, 900.00) | (−331.45, 902.86) | 3.21 |
| 11 | (−330.00, 1000.00) | (−331.49, 1002.97) | 3.32 |

| Number | Target Points | Actual Coordinates | Deviation |
|---|---|---|---|
| 1 | (−142.00, 0.00) | (−142.00, 0.00) | 0.00 |
| 2 | (−142.00, 100.00) | (−140.66, 98.76) | 2.93 |
| 3 | (−142.00, 200.00) | (−138.45, 198.58) | 3.82 |
| 4 | (−142.00, 300.00) | (−139.45, 299.33) | 2.64 |
| 5 | (−142.00, 400.00) | (−142.86, 397.43) | 2.71 |
| 6 | (−142.00, 500.00) | (−143.85, 497.95) | 2.76 |
| 7 | (−228.00, 632.00) | (−230.70, 635.26) | 4.23 |
| 8 | (−328.00, 632.00) | (−331.96, 630.35) | 4.60 |

| Number | Target Points | Actual Coordinates | Deviation |
|---|---|---|---|
| 1 | (−330.00, 0.00) | (−330.00, 0.00) | 0.00 |
| 2 | (−330.00, 150.00) | (−329.23, 151.86) | 2.01 |
| 3 | (−330.00, 300.00) | (−330.25, 297.69) | 2.32 |
| 4 | (−330.00, 450.00) | (−328.47, 447.36) | 3.05 |
| 5 | (−330.00, 600.00) | (−328.65, 603.87) | 4.10 |
| 6 | (−330.00, 750.00) | (−332.88, 746.23) | 4.74 |
| 7 | (−200.00, 921.00) | (−203.84, 923.55) | 4.60 |
| 8 | (−100.00, 855.00) | (−98.50, 853.27) | 2.29 |
| 9 | (−100.00, 705.00) | (−97.35, 702.39) | 3.72 |
| 10 | (−100.00, 555.00) | (−99.33, 552.64) | 2.45 |
| 11 | (−100.00, 405.00) | (−102.54, 404.98) | 2.54 |
| 12 | (−100.00, 255.00) | (−101.87, 258.25) | 3.75 |

| Number | Target Points | Actual Coordinates | Deviation |
|---|---|---|---|
| 1 | (−142.00, 0.00) | (−142.00, 0.00) | 0.00 |
| 2 | (−142.00, 100.00) | (−141.86, 98.76) | 1.25 |
| 3 | (−142.00, 200.00) | (−140.45, 198.58) | 2.10 |
| 4 | (−142.00, 300.00) | (−139.75, 300.33) | 2.27 |
| 5 | (−142.00, 400.00) | (−142.63, 397.51) | 2.57 |
| 6 | (−142.00, 500.00) | (−141.85, 497.61) | 2.39 |
| 7 | (−228.00, 632.00) | (−229.33, 634.73) | 3.04 |
| 8 | (−328.00, 632.00) | (−330.85, 634.83) | 3.67 |

| Number | Target Points | Actual Coordinates | Deviation |
|---|---|---|---|
| 1 | (−330.00, 0.00) | (−330.00, 0.00) | 0.00 |
| 2 | (−330.00, 150.00) | (−331.82, 149.37) | 1.93 |
| 3 | (−330.00, 300.00) | (−330.86, 298.15) | 2.04 |
| 4 | (−330.00, 450.00) | (−329.14, 447.22) | 2.91 |
| 5 | (−330.00, 600.00) | (−328.94, 602.89) | 3.08 |
| 6 | (−330.00, 750.00) | (−331.76, 747.23) | 3.28 |
| 7 | (−200.00, 921.00) | (−202.65, 922.44) | 3.60 |
| 8 | (−100.00, 855.00) | (−101.15, 853.11) | 2.21 |
| 9 | (−100.00, 705.00) | (−102.32, 704.48) | 2.38 |
| 10 | (−100.00, 555.00) | (−101.66, 553.33) | 2.35 |
| 11 | (−100.00, 405.00) | (−102.49, 405.73) | 2.59 |
| 12 | (−100.00, 255.00) | (−101.45, 257.83) | 3.18 |
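The Deviation column is consistent with the Euclidean distance between each target point and the corresponding actual coordinates; recomputing it this way reproduces every entry of the first table to two decimal places. The sketch below performs that check and summarises a run; the helper names and the decision to exclude the start point (whose deviation is zero by construction) from the summary are illustrative assumptions.

```python
import math

# Target and actual coordinates copied from the first table (linear path run).
TABLE_1 = [
    ((-330.00, 0.00), (-330.00, 0.00)),
    ((-330.00, 100.00), (-329.51, 101.60)),
    ((-330.00, 200.00), (-328.65, 201.51)),
    ((-330.00, 300.00), (-328.22, 298.64)),
    ((-330.00, 400.00), (-328.35, 397.95)),
    ((-330.00, 500.00), (-328.65, 497.67)),
    ((-330.00, 600.00), (-330.49, 602.86)),
    ((-330.00, 700.00), (-328.21, 702.37)),
    ((-330.00, 800.00), (-328.67, 802.79)),
    ((-330.00, 900.00), (-331.45, 902.86)),
    ((-330.00, 1000.00), (-331.49, 1002.97)),
]


def deviation(target, actual):
    """Euclidean distance between a target point and the position actually reached."""
    return math.hypot(actual[0] - target[0], actual[1] - target[1])


def summarize(rows):
    """Mean and maximum deviation, excluding row 1 (the start point)."""
    devs = [deviation(t, a) for t, a in rows[1:]]
    return sum(devs) / len(devs), max(devs)


if __name__ == "__main__":
    for k, (t, a) in enumerate(TABLE_1, start=1):
        print(k, f"{deviation(t, a):.2f}")
    mean_dev, max_dev = summarize(TABLE_1)
    print(f"mean={mean_dev:.2f}, max={max_dev:.2f}")
```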

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Li, J.; Zhou, W.; Yao, Q.; Nie, J.; Qi, X. Robot Path Planning Navigation for Dense Planting Red Jujube Orchards Based on the Joint Improved A* and DWA Algorithms under Laser SLAM. Agriculture 2022, 12, 1445. https://doi.org/10.3390/agriculture12091445


Chicago/Turabian style: Li, Yufeng, Jingbin Li, Wenhao Zhou, Qingwang Yao, Jing Nie, and Xiaochen Qi. 2022. "Robot Path Planning Navigation for Dense Planting Red Jujube Orchards Based on the Joint Improved A* and DWA Algorithms under Laser SLAM" Agriculture 12, no. 9: 1445. https://doi.org/10.3390/agriculture12091445
